PRE2024 3 Group17

Mental Health Support AI Chatbot for TU/e Students

| Name | Student number | Email |
|---|---|---|
| Bridget Ariese | 1670115 | b.ariese@student.tue.nl |
| Sophie de Swart | 2047470 | s.a.m.d.swart@student.tue.nl |
| Mila van Bokhoven | 1754238 | m.m.v.bokhoven1@student.tue.nl |
| Marie Bellemakers | 1739530 | m.a.a.bellemakers@student.tue.nl |
| Maarten van der Loo | 1639439 | m.g.a.v.d.loo@student.tue.nl |
| Bram van der Heijden | 1448137 | b.v.d.heijden1@student.tue.nl |

Introduction

Mental health is a topic that affects many people all over the world, and students are one group for whom it is becoming increasingly important. The number of students in need of psychological help and support is growing, but the help available is not growing along with it. Psychologists, both on and off campus, are overbooked and have long waiting lists. And these are only some of the problems that students face once they have already looked for and accepted help. Mental health, even though important, is a topic that people feel embarrassed talking about, and many find it hard to admit they are struggling. Going to a professional for help is then a big step, and it can be hard to even know where to start.

This project aims to help solve some of these problems using something almost every student has access to: the internet. Focusing on TU/e students, we propose creating an additional feature within ChatGPT designed to support mental health. This tool will serve as an accessible starting point for students on their mental health journey, providing a safe, non-judgmental environment to express their feelings and receive guidance. By offering immediate, empathetic responses and practical advice, it aims to lower the barrier to seeking help and empower students to take proactive steps toward well-being. While it is not intended to replace professional counseling, it can serve as an initial support system: helping students gain insight into their mental health challenges, bridging long waiting periods, and guiding them toward appropriate resources when necessary.

Approach

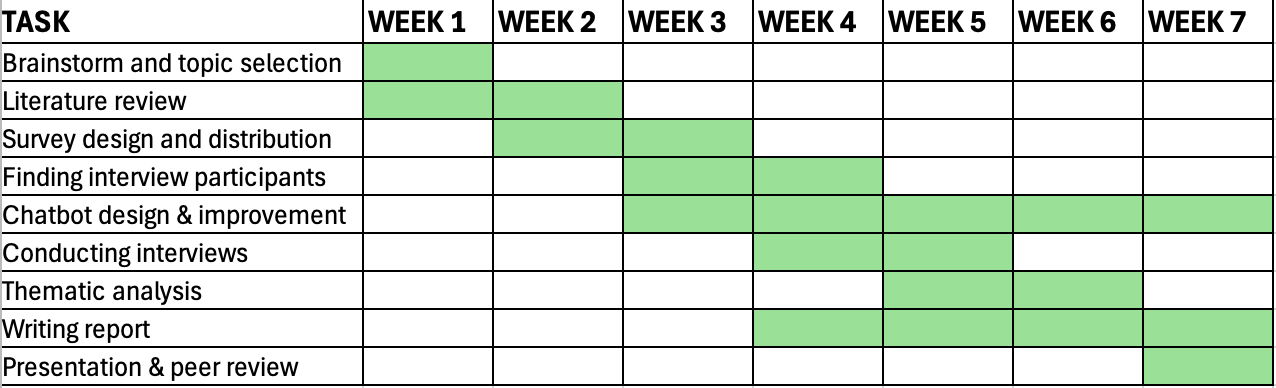

Our research follows a structured, multi-phase process to develop a chatbot that helps students with mental health support. We place a strong emphasis on extensive research both before and after development to make the chatbot as effective and as tailored to user needs as possible. By continuously refining our approach based on our findings, we aim to keep improving the chatbot's effectiveness and user satisfaction. We start by identifying the problem and researching the needs of students, then develop the chatbot, and finally test and improve it based on feedback.

1. Foundational Phase

The research begins with a foundational phase, where we clearly define the problem, decide on our approach and deliverables, and identify our target group. This includes:

- Defining the topic: We brainstorm and decide on what topic and problem we want to focus on during our project.

- Deciding on approach and deliverables: We determine our approach, milestones and deliverables.

- Identifying the target group: We define our target group, user requirements, and two user cases.

This phase ensures that we build something meaningful and relevant for students.

2. Research Phase

To understand how best to develop the chatbot, we conduct three types of research:

- Literature Review: Looking at existing research on AI chatbots for mental health, common challenges, and best practices.

- User Survey: A short Microsoft Forms survey, with around 50 responses, to understand students' experiences, concerns, and preferences regarding mental health problems and support.

- GPT Training Research: We look into how we can train a GPT for optimal results.

By combining academic knowledge with real user feedback, we ensure that our chatbot is both evidence-based and aligned with student needs.

3. Development Phase

Using what we learned from our research, we start developing the chatbot. This includes:

- Training the GPT Model: We train the chatbot with self-written instructions to make sure it responds in a helpful and empathetic way.

- Applying Psychological Techniques: We integrate psychological strategies so the chatbot can acknowledge emotions and guide students toward self-help or professional support.

- Iterative Testing: We create early versions of the chatbot, test them, and improve them to ensure good response quality and ease of use.

This phase requires balancing AI technology with human-like emotional understanding to make the chatbot as supportive and functional as possible.

4. Evaluation Phase

To test how well the chatbot works, we conduct a detailed, three-fold evaluation:

- User Interviews: We conduct 12 interviews with TU/e students who review chatbot conversations and give feedback on usability, response accuracy, and emotional engagement.

- Comparison with an Untrained GPT: We test the same prompts on a standard GPT model as a baseline to measure improvements.

- Expert Feedback: A student psychologist and an academic advisor review the chatbot and provide recommendations for improvements.

This phase ensures we gather insights from both users and professionals to fine-tune the chatbot for the best possible experience.

5. Finalization Phase

In the final phase, we document our findings and complete the chatbot development. This includes:

- Final Report: The report documents our entire process, including all intermediate steps with explanation and justification, as well as theoretical background information, conclusions, discussion, and implications of the findings for future research.

- Presentation: We will present our findings in a final presentation.

- GPT Handbook: We will write a handbook containing all the instructions used to train the chatbot, including general guidelines, disclaimers, referrals, crisis support, and psychological strategies.

By doing thorough research at every stage and continuously optimizing the chatbot, we ensure it is well-researched, user-friendly, and effective in supporting students with mental health challenges.

Problem statement

In this project we will research mental health challenges, focusing specifically on stress and loneliness, and explore how Artificial Intelligence (AI) could assist people with such problems. Mental health concerns are on the rise, with stress and loneliness being particularly prevalent in today's society. Factors such as the rapid rise of social media and the increasing use of technology in everyday life contribute to higher levels of emotional distress. Loneliness in particular is increasing due to both societal changes and technological advancements. First, social media and technology are replacing real-life interaction with superficial contact that does not fulfill deep emotional needs. Second, the shift to remote working and online learning means fewer face-to-face interactions, leading to weaker social bonds.

Seeking professional help can be a difficult step due to stigma, accessibility issues and financial constraints. Long waiting times for psychologists make it even harder to access professional help. Both the stigma and the rise in stress and loneliness are especially apparent among adolescents and young adults. With the ever-increasing amount of study material the education system expects students to master, study-related stress is a growing problem.

Many students struggling with mental health challenges such as loneliness and stress feel that their issues are not ‘serious enough’ to seek professional support, even though they might need it. And even when their problems are serious enough to consult a professional, they often have to wait a long time before actually receiving therapy, as waiting lists have grown considerably over the past years. AI technologies might help bridge this gap by offering accessible mental health support that does not carry the same stigma as conventional therapy. Their main benefit is that AI support can be accessed at any time, anywhere.

This study will conduct a literature review on the use of Large Language Models (LLMs) in supporting students and young adults with the common, relatively minor mental health issues of loneliness and stress. It will start by reviewing the stigma, needs and expectations of users with regard to artificial intelligence for mental health, as well as the current state of the art and its limitations. Based on this information, a framework for a mental health LLM will be constructed in the form of a custom GPT. Finally, a user study will be conducted to analyse the effectiveness of this proposed framework.

Users

User Requirements

In this project, we focused on creating a chatbot that meets the real needs of students. To be genuinely helpful, the chatbot should be able to guide students towards professional help when needed, offer practical tips to deal with stress and loneliness, and communicate in a way that feels warm and human rather than robotic. Key user requirements:

- Referral to professional help to increase accessibility

- Coping strategies for stress and loneliness

- Empathetic and natural communication

Personas and User Cases

Our target users are students struggling with mental health challenges, specifically loneliness and stress. We focus on three groups: users who feel their problems are not 'serious' enough to see a therapist, users who have to wait a long time for a therapist and need something to bridge the wait, and users who struggle to seek help and need an easier alternative.

Joshua has just started his second year of Applied Physics. Last year was stressful for him because he had to obtain his BSA (binding study advice), and now that this pressure has decreased, he wants to enjoy student life more. But he doesn’t know where to start. His classmates have all formed groups and friendships, and he is starting to feel lonely. It's hard for him to step out of his comfort zone and join an association alone. His insecurities make him feel even more alone, as if he has nowhere to go, which makes him isolate himself further and deepens his low mood.

He knows this is not what he wants, and he wants to find something that helps. It's hard for him to admit this to anyone, or even to put it into words. Therapy would be a big step, and it would take too long to even get an appointment with a therapist. He needs a solution that doesn’t feel like a big step and is easily accessible.

Olivia, a 21-year-old Sustainable Innovation student, has been very busy with her bachelor end project for the past few months. The project has often been stressful and has left her feeling overwhelmed. She has always struggled with planning and with asking for help, and both have contributed heavily to her stress during this project.

It is currently 13:00, and she has already been working on her project for four hours today, taking only a 15-minute break to grab some lunch. Olivia has to work tonight, so she has a lot of tasks she wants to finish before dinner. Without realizing it, she has been powering through her stress, working relentlessly on all kinds of things without a clear structure in mind, and has become quite overwhelmed.

With her busy schedule and strict budget, she has never really considered seeing a therapist. Olivia did not grow up in an environment where stress and mental health problems were discussed openly and respectfully, and she has always struggled to ask for help with them. However, last week she found an online help tool and has used it a few times to calm down when things get too intense. Now it is open on her screen: an online AI therapist. This 'therAIpist' made it easier for Olivia to accept that she needed help and to look for it. She finds it increasingly easy to put her problems into words, and the stress of talking to someone about them has decreased. The tool gives Olivia a way to talk about her problems and get advice.

When she has finished explaining her problems, the AI tool applauds Olivia for taking care of herself and asks whether she might also benefit from talking to a human therapist. Olivia realizes this would really help her and decides to seek help from a professional institution. While she waits for an appointment, she can keep using the AI tool whenever she needs help quickly and discreetly.

State-of-the-art

There are examples of existing mental health chatbots, and research has been done on these chatbots as well as on other AI-driven therapeutic technologies.

Woebot and Wysa are two existing AI chatbots that are designed to give mental health support by using therapeutic approaches like Cognitive Behavioral Therapy (CBT), mindfulness, and Dialectical Behavioral Therapy (DBT). These chatbots are available 24/7 and let users undergo self-guided therapy sessions.

Woebot invites users to monitor and manage their mood using tools such as mood tracking, progress reflection, gratitude journaling, and mindfulness practice. Woebot starts a conversation by asking the user how they’re feeling and, based on what the user shares, Woebot suggests tools and content to help them identify and manage their thoughts and emotions and offers techniques they can try to feel better. Wysa employs CBT, mindfulness techniques, and DBT strategies to help users navigate stress, anxiety, and depression. It has received positive feedback for fostering a trusting environment and providing real-time emotional support (Eltahawy et al., 2023). Current research indicates chatbots like these can help reduce symptoms of anxiety and depression, but are not yet proven to be more effective than traditional methods like journaling, or as effective as human-led therapy (Eltahawy et al., 2023).

The promise of AI therapy in general is underscored in several articles. These highlight the level of professionalism found in AI-driven therapy conversations, indicate the potential help that could be offered, and discuss how to address university students in particular. Other articles investigate AI-driven therapy in more physical forms. The article "Potential Applications of Social Robots in Robot-Assisted Interventions for Social Anxiety", for example, shows how social robots can act as coaches or therapy assistants and help users engage in social situations in a controlled environment. The study "Humanoid Robot Intervention vs. Treatment as Usual for Loneliness in Long-Term Care Homes" examines the effectiveness of humanoid robots in reducing loneliness among residents of care homes, and found that the AI-driven robot helped reduce feelings of isolation and improve the users' mood.

Challenges

Several challenges for AI therapy chatbots are often mentioned in current research on the subject. The first is that AI chatbots lack the emotional intelligence of humans; AI can simulate empathy but does not have the true emotional depth that humans have, which may make AI chatbots less effective at handling complex emotions (Kuhail et al., 2024). A second often-mentioned challenge is that the use of AI in mental healthcare raises privacy (user data security) and ethical concerns (Li et al., 2024). Another challenge is the risk that users become over-reliant on AI chatbots instead of seeking human help when needed (Eltahawy et al., 2023). Lastly, AI chatbots have limited adaptability; they cannot offer fully personalized therapy the way a human therapist can.

Research

Initial Survey

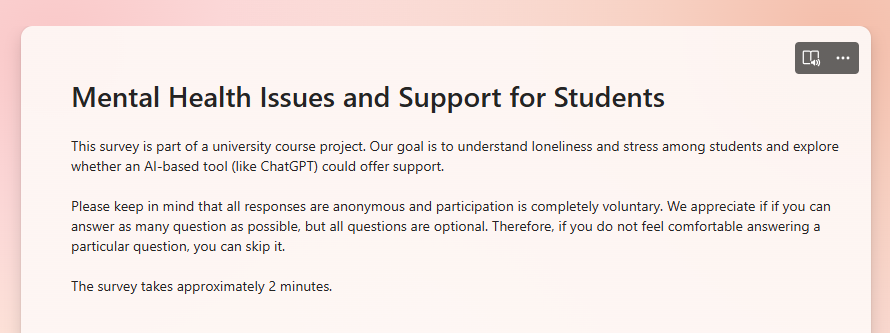

Purpose and Methodology

To gain a deeper understanding of student attitudes toward AI mental health support, we conducted a survey focusing on stress and loneliness among students. The objective was to explore how students currently manage these challenges, their willingness to use AI-based support, and the key barriers that might prevent them from engaging with such tools.

The survey specifically targeted students who experience mental health struggles but do not perceive their issues as severe enough to seek professional therapy, those facing long waiting times for professional support, and individuals who find it difficult to ask for help. By gathering insights from this demographic, we aimed to identify pain points, assess trust levels in AI-driven psychological tools, and determine how a chatbot could be designed to effectively address students’ needs while complementing existing mental health services.

Results

The survey was completed by 50 respondents, of whom 40% were female, with nearly all participants falling within the 18-23 age range. The responses provided valuable insights into the prevalence of stress and loneliness among students, as well as their attitudes toward AI-driven mental health support.

Stress and Loneliness

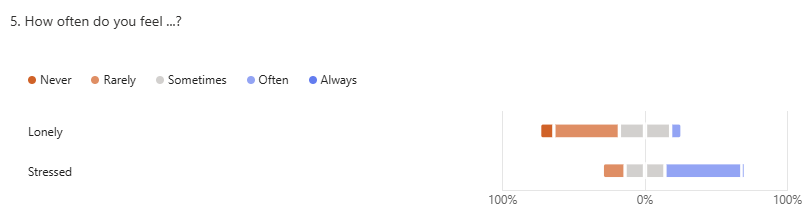

The results indicate that stress is a more frequent issue than loneliness among students. While 36% of respondents reported sometimes feeling lonely and 8% often, stress was considerably more common: 28% sometimes feel stressed, 54% often, and 2% always. In total, 84% of respondents experience stress at least sometimes, compared with 44% for loneliness.
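The gap between stress and loneliness is easiest to see when the answer categories are summed. The sketch below assumes the percentages are shares of all 50 respondents (every reported percentage maps to a whole number of students out of 50, which is consistent with that) and that the remaining respondents chose answer options not listed here, such as rarely or never.

```python
# Reported survey percentages; assumed to be shares of all 50 respondents.
N_RESPONDENTS = 50

loneliness = {"sometimes": 36, "often": 8}
stress = {"sometimes": 28, "often": 54, "always": 2}


def at_least_sometimes(distribution: dict[str, int]) -> int:
    """Percentage of respondents who reported the feeling sometimes or more often."""
    return sum(distribution.values())


def to_count(percentage: int) -> int:
    """Convert a percentage of all respondents back into a number of students."""
    return percentage * N_RESPONDENTS // 100


lonely_pct = at_least_sometimes(loneliness)
stressed_pct = at_least_sometimes(stress)
print(f"Loneliness at least sometimes: {lonely_pct}% ({to_count(lonely_pct)} students)")
print(f"Stress at least sometimes: {stressed_pct}% ({to_count(stressed_pct)} students)")
```

This prints 44% (22 students) for loneliness and 84% (42 students) for stress.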

When asked about the primary causes of stress, students most frequently cited:

- Exams and deadlines

- Balancing university with other responsibilities

- High academic workload

For loneliness, the key contributing factors included:

- Spending excessive time studying

- Feeling disconnected from classmates or university life

To cope with these feelings, students employed various strategies. The most common methods included exercising, reaching out to friends and family, and engaging in entertainment activities such as watching movies, gaming, or reading.

Trust and Willingness to use AI Chatbot

One of the most striking findings from the survey is the low level of trust in AI for mental health support. When asked to rate their trust in an AI chatbot’s ability to provide reliable psychological advice on a scale of 0 to 10, the average trust score was 3.88, with a median of 4. This suggests that, while some students recognize potential benefits, a significant portion remains skeptical about whether AI can truly understand and assist with personal struggles.

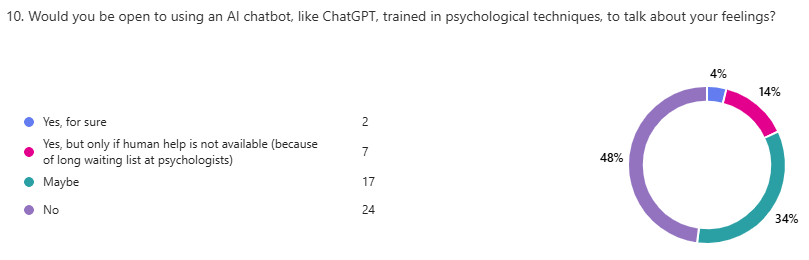

In terms of willingness to engage with an AI chatbot, the responses were mixed:

- 24 students (49%) stated they would not use an AI chatbot

- 16 students (33%) were unsure, selecting “Maybe”

- 7 students (14%) said they would only consider using it if human help was unavailable (e.g., due to long waiting times)

- Only 2 students (4%) expressed strong enthusiasm for the idea

Although nearly half of the respondents rejected the idea, the other half (25 of 49) expressed some level of openness to using an AI tool under the right conditions.
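The percentages in the willingness list can be recomputed from the raw counts. The four counts add up to 49 rather than 50, so the sketch assumes the percentages were computed over the 49 students who answered this question; it also treats every answer other than "no" as some degree of openness.

```python
# Answers to the question whether students would use an AI chatbot for mental health support.
willingness = {
    "no": 24,
    "maybe": 16,
    "only if human help is unavailable": 7,
    "yes, enthusiastically": 2,
}

answered = sum(willingness.values())  # 49, one fewer than the 50 survey respondents
shares = {answer: round(100 * count / answered) for answer, count in willingness.items()}

# Everyone who did not answer "no" shows at least some openness to the idea.
open_count = answered - willingness["no"]
open_share = round(100 * open_count / answered)

print(shares)
print(f"Open to some degree: {open_count}/{answered} = {open_share}%")
```

The rounded shares reproduce the reported 49%, 33%, 14% and 4%, and the open group comes to 25 of 49 students, roughly 51%.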

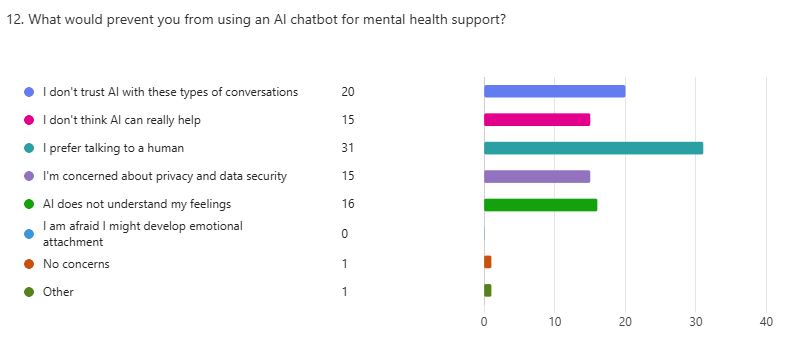

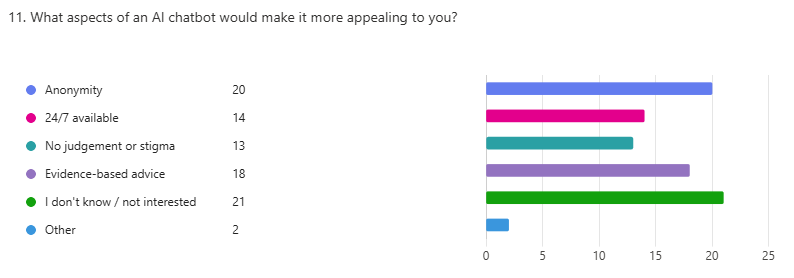

Concerns and Appeals

The survey revealed several key concerns that deter students from using an AI chatbot for mental health support. The most frequently mentioned barriers were:

- A strong preference for human interaction – 30 respondents stated they would rather talk to a human than an AI.

- Distrust in AI’s ability to provide meaningful support – 20 students were skeptical about AI’s capability in handling sensitive mental health conversations, fearing the responses would be impersonal or inadequate.

- Doubt that AI can truly understand emotions – 15 respondents felt that AI lacks the emotional depth needed for meaningful interaction.

- Uncertainty about AI’s effectiveness – 15 respondents questioned whether AI could actually provide real help for mental health concerns.

Despite these concerns, students identified several features that could make an AI chatbot more attractive for mental health support:

- Anonymity – 20 students highlighted the importance of privacy, indicating they would be more willing to use a chatbot if they could remain anonymous.

- Evidence-based advice – 17 respondents expressed interest in a chatbot that provides guidance based on scientifically validated psychological techniques.

- 24/7 availability – 14 students valued the ability to access support at any time, particularly in moments of distress.

The Role of Universities in Mental Health Support

A noteworthy finding from the survey is that more than 40% of respondents had either sought professional help for stress or loneliness or had wanted to but did not actively pursue it. This suggests that many students recognize their struggles but face barriers in seeking support.

Furthermore, when asked whether universities should provide more accessible mental health support for students, responses indicated significant demand for such initiatives:

- 60% of respondents agreed that more accessible support should be available.

- 32% were unsure.

- Only 8% felt that additional mental health support was unnecessary.

These findings highlight the need for universities to explore alternative mental health support options, including AI-based tools, to address gaps in accessibility and availability.

Discussion and Implications for AI Chatbot Design

The survey results underscore the challenges and opportunities in designing an AI chatbot for mental health support. The most pressing issue is the low trust in AI-generated psychological advice. Many students remain skeptical of AI’s ability to provide meaningful guidance, and the chatbot must actively work to establish credibility. One way to address this is by ensuring that all responses are based on scientifically validated psychological techniques. By referencing established methods such as Cognitive Behavioral Therapy (CBT) and mindfulness-based strategies, the chatbot can reinforce that its recommendations are grounded in evidence rather than generic advice. Including explanations or citations for psychological principles could further increase trust.

Another critical aspect is ensuring that the chatbot’s tone and conversational style feel natural and empathetic. The most common concern among respondents was the preference for human interaction, meaning the chatbot must be designed to acknowledge users’ emotions and offer responses that feel supportive rather than robotic. While AI cannot replace human therapists, it can be trained to respond with warmth and understanding, using conversational techniques that mimic human empathy. A key design feature should be adaptive responses based on sentiment analysis, allowing the chatbot to adjust its tone depending on the user’s emotional state.

Given that privacy concerns were a recurring theme, transparency in data handling will be essential. Before engaging with the chatbot, users should be explicitly informed that their conversations are anonymous and that no identifiable data will be stored. This reassurance could help mitigate fears surrounding data security and encourage more students to engage with the tool.

The survey also highlights the need for different chatbot functionalities to cater to varying student needs. Some students primarily need self-help strategies to manage stress and loneliness independently, while others require a referral system to guide them toward professional help. Another group of students, particularly those on mental health waiting lists, need interim support until they can see a therapist. To address these different needs, the chatbot should be designed with three core functions:

1. Providing psychological support and coping strategies

   - The chatbot will offer evidence-based techniques for managing stress and loneliness.

   - It will emphasize anonymity and create a non-judgmental space for users to express their concerns.

2. Referring students to professional help and university support services

   - Users who prefer human interaction will be directed to mental health professionals at TU/e.

   - The chatbot will provide information on how to access university support resources.

3. Supporting students on waiting lists for professional help

   - While students wait for therapy, TU/e's mental health professionals can refer them to the chatbot, which will offer interim guidance to help them cope in the meantime.

   - The tool will clarify that it is not a substitute for therapy but can provide immediate relief strategies.

To ensure the chatbot meets these objectives, further prototype testing will be necessary. A small-scale user trial will be conducted to gather qualitative feedback on conversational flow, response accuracy, and overall effectiveness. Additionally, the chatbot’s ability to detect and adapt to different emotional states will be evaluated to refine its responsiveness.

The findings from this survey highlight both the limitations and possibilities of AI-driven mental health support. While trust remains a significant barrier, the potential for accessible, anonymous, and always-available support should not be underestimated. By designing a chatbot that prioritizes credibility, privacy, and adaptability, we can create a tool that helps students manage stress and loneliness while complementing existing mental health services. As we move forward, user feedback and iterative development will be crucial in shaping a system that students find genuinely useful.

Product Development: Our own GPT

Typically, developing a chatbot requires extensive training on datasets, refining models, and implementing natural language processing (NLP) techniques. This involves large-scale data collection, training, and updating of the natural language understanding (NLU) component. It is also possible to run AI models within one's own systems, but this was not feasible within the time and budget limits of this course.

Therefore, we chose OpenAI's ‘Create your own GPT’ functionality, which abstracts away much of this technical work. Instead of training a model from scratch, this tool lets users customize an already trained GPT-4 model through instructions, behavioral settings and uploaded knowledge files. You can give the GPT a name, logo, description and example conversation starters. Functionally, you can upload the knowledge it has access to and give instructions on how it should behave. Finally, there are optional capabilities such as web search, image generation with DALL-E, and a code interpreter for data analysis. Once these are set up, the custom GPT can be created and shared via a link. For our purpose, DALL-E and the code interpreter were not used.

Setting up our GPT involved the following tasks:

- Behavior and design: knowing how to guide conversations effectively and ensuring responses match what our users want. This goes together with prompt engineering: determining how the GPT should respond.

- Providing our GPT with real TU/e referral data.

- Testing example inputs and scenarios.

The Handbook

To guide the chatbot’s development, we created a tailored handbook that serves as the foundation for its behavior and conversation flow. The handbook provides clear instructions on how the chatbot should communicate, diagnose user needs, and apply psychological techniques in a way that is suitable for TU/e students.

It consists of several essential parts. First, it includes general communication guidelines to ensure the chatbot always responds in an empathetic, supportive, and non-judgmental manner. Second, it describes a diagnostic flow that allows the chatbot to determine whether a student needs coping strategies, professional help, or interim support while waiting for therapy. The handbook also integrates several psychological frameworks: Cognitive Behavioral Therapy (CBT), Positive Psychotherapy (PPT), Mindfulness-Based Stress Reduction (MBSR), and Acceptance and Commitment Therapy (ACT). For each, we provided techniques, conversational templates, and example interventions adapted for chatbot use. This ensures the advice given is both scientifically grounded and practically applicable.

A link to the handbook can be found in the Appendix.

Referral utility

In addition to the behavioral guidelines handbook, we provided the GPT with a text file of TU/e-specific support services. This file serves as a knowledge base that allows the chatbot to make accurate and appropriate referrals when users need professional help. The resources were extracted from the TU/e website and include contact information, descriptions of services, and distinctions between types of support such as stress, emotional well-being and personal counseling. This made clear to the GPT which resource serves which purpose.
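The exact layout of the referral file is not described here. Purely as an illustration, the sketch below assumes a simple pipe-separated format with one service per line, tagged with one of the referral categories named in the Diagnostics section (mental health, safety, academic support, social connection). The service names and contacts are hypothetical placeholders, not the actual TU/e data, and in the real GPT the file is read by the model itself rather than by code like this.

```python
from dataclasses import dataclass


@dataclass
class Referral:
    category: str
    service: str
    purpose: str
    contact: str


# Hypothetical excerpt: the real entries were taken from the TU/e website.
KNOWLEDGE_FILE = """\
# category | service | purpose | contact
mental health | Example service A | talks about stress or emotional well-being | <see TU/e website>
academic support | Example service B | study planning and study-related stress | <see TU/e website>
social connection | Example service C | meeting other students | <see TU/e website>
safety | Example service D | feeling unsafe on or around campus | <see TU/e website>
"""


def parse_referrals(text: str) -> list[Referral]:
    """Turn the pipe-separated knowledge file into Referral records."""
    referrals = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and the header comment
            continue
        category, service, purpose, contact = (part.strip() for part in line.split("|"))
        referrals.append(Referral(category, service, purpose, contact))
    return referrals


def referrals_for(category: str, referrals: list[Referral]) -> list[Referral]:
    """Return every service tagged with the requested support category."""
    wanted = category.strip().lower()
    return [r for r in referrals if r.category == wanted]


services = parse_referrals(KNOWLEDGE_FILE)
for r in referrals_for("Academic support", services):
    print(f"{r.service}: {r.purpose} ({r.contact})")
```

Tagging each entry with an explicit category is what lets a lookup (or the GPT) tell apart services that would otherwise look similar.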

Training

After the initial setup of instructions and knowledge, we manually tested the GPT extensively through simulated user interactions. This allowed us to evaluate whether the chatbot's responses matched our ethical and functional goals. Based on these tests, we reformulated prompts from the handbook and updated the GPT's instructions to better match our target audience and desired behavior.

This manual training loop was needed to make the GPT respond more naturally and sensitively and to ensure it did not fall back on generic or overly scripted responses. Emphasis was placed on user-centered thinking: guiding conversations in a way that was validating, respectful and helpful without being overly directive or diagnostic.
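The testing itself was done by hand. One way to make such a loop repeatable is to write each simulated conversation down as a scenario with phrases a good reply must contain or avoid. The sketch below is a hypothetical keyword checklist in that spirit; the scenarios, phrases and the `review` helper are illustrative assumptions, and keyword matching can only flag obvious problems, not judge empathy.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """One simulated user message plus what a good reply should (not) contain."""
    prompt: str
    must_mention: list[str] = field(default_factory=list)
    must_avoid: list[str] = field(default_factory=list)


def review(scenario: Scenario, response: str) -> list[str]:
    """Return the problems found in a GPT reply; an empty list means it passed."""
    text = response.lower()
    problems = [f"missing: {p}" for p in scenario.must_mention if p.lower() not in text]
    problems += [f"contains: {p}" for p in scenario.must_avoid if p.lower() in text]
    return problems


# Hypothetical scenarios; a crisis reply must always point to 113.
crisis = Scenario(
    prompt="I don't see the point of anything anymore.",
    must_mention=["113"],
    must_avoid=["as an ai language model"],
)
exam_stress = Scenario(
    prompt="My exams start next week and I can't stop panicking.",
    must_mention=["stress"],
    must_avoid=["you have an anxiety disorder", "as an ai language model"],
)

reply = "That sounds like a lot of stress. Shall we break your revision into smaller steps?"
print(review(exam_stress, reply))  # []
print(review(crisis, reply))  # ['missing: 113']
```

The same reply passes the exam-stress scenario but fails the crisis scenario, which is exactly the kind of mismatch a tester would then fix in the GPT's instructions.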

Ethical safeguards

In the instructions of the GPT a disclaimer was also added to prevent unintended use of the chatbot, which goes as follows:

'It is important to inform you that this GPT is a project in development and not a fully-fledged therapy alternative. It is only meant to serve as a tool to help ease stress and/or loneliness; it is not meant to treat or diagnose more severe mental problems such as depression or trauma. If you are (or think you might be) experiencing such a condition, you are strongly advised to seek professional (human) help. This GPT will guide you to the available resources for help at and around the TU/e; please do not hesitate to make use of these resources.'

Furthermore, the instructions state that when a topic strays too far from the chatbot's mental health support function, the chatbot should say so and explain what it is designed for.

Psychological background of the GPT

For an artificial intelligence to help and assist young adults with their mental health, it needs a psychological framework. This framework can be divided into several functions:

- Confidentiality: the chatbot should refrain from exposing personal data to others without the user's explicit consent.

- Understanding and inquiring: the chatbot should be able to understand the problems the user is describing and be able to inquire further on what is troubling the user.

- Concluding: after the chatbot has gathered enough information on the problems the user has, it should be able to conclude where the problem lies.

- Assessing: when the chatbot encounters a severe mental health problem, it should be able to point to help at the university or help people move up waiting lists, so that they get in touch with an actual professional faster.

- Referencing: once a solid conclusion about the problem has been reached, the chatbot should refer the user to the appropriate (human) contact at the university.

- Generate advice: if the user does not want to get in touch with a human, it should generate advice based upon the problem and psychological theories the chatbot has been supplied with.

This pattern requires a solid psychological background; every step requires at least a basic understanding of how people think and feel. Several psychological theories are worth analysing for this purpose.

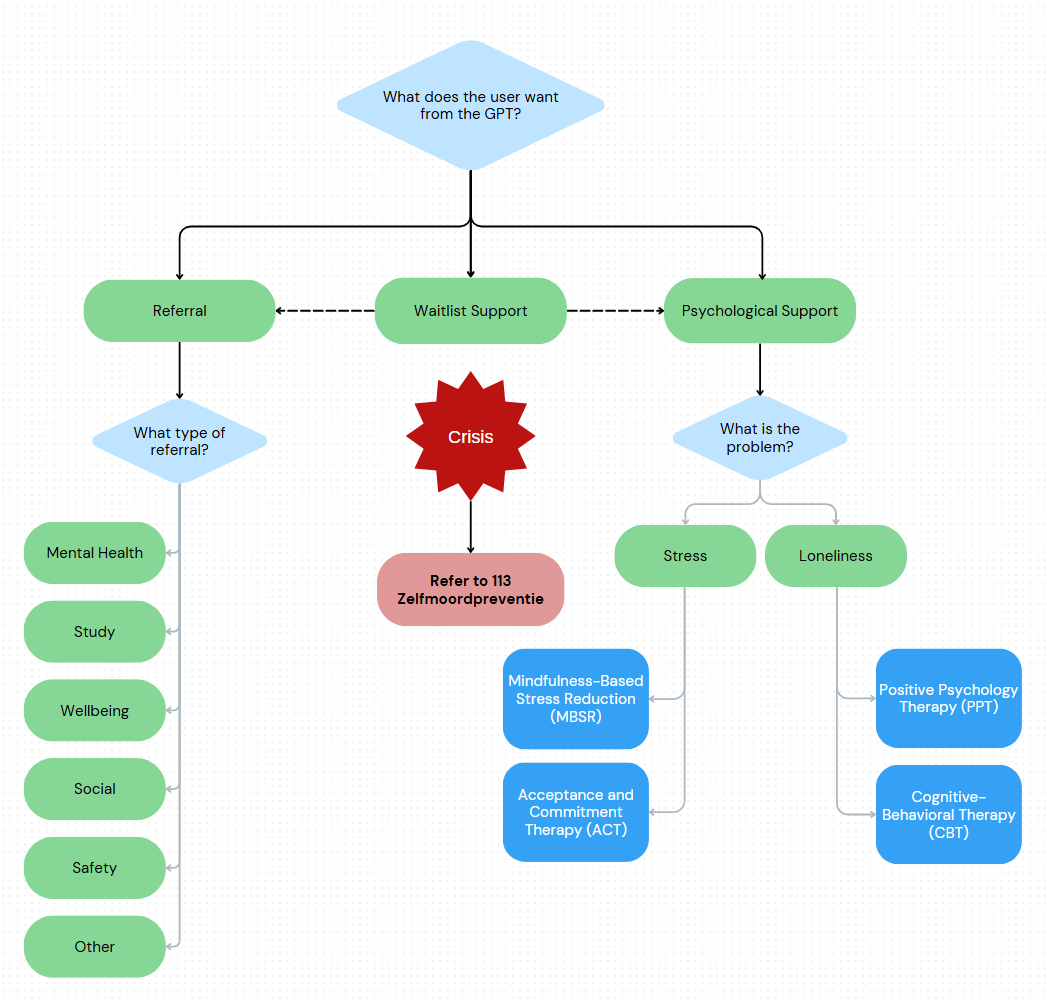

Diagnostics

To ensure that the GPT effectively supports students seeking mental health assistance, we designed a structured diagnostics process that allows it to determine the user's needs and provide appropriate guidance. The process consists of two key diagnostic questions:

- What does the user want from the GPT? The chatbot identifies whether the user is looking for psychological support, a referral to professional help, or support while on a waitlist.

- What is the user's specific issue? If the user is seeking psychological support or waitlist support, the GPT determines whether their primary concern is stress, loneliness, both, or an unspecified issue. If the user is requesting a referral, the GPT clarifies what type of support they need, such as mental health services, safety assistance, academic support, or social connection.

How the GPT Uses the Diagnostics Process

The chatbot engages in a natural, non-intrusive conversation to assess the user's situation. It asks guiding questions to classify their needs and ensures that it provides the most relevant and effective response.

- If a referral is needed, the GPT directs the user to the correct TU/e service, offering additional resources where applicable.

- If the user needs psychological support, the GPT provides evidence-based coping strategies tailored to their concerns.

- If the user is on a waitlist for professional care, the GPT offers self-help techniques while checking whether they need an alternative referral.

- If a crisis situation is detected, the GPT immediately refers the user to 113 Zelfmoordpreventie and encourages them to seek professional human help.
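The two diagnostic questions and the four routing rules above amount to a small decision procedure. The Python sketch below makes that logic explicit under stated assumptions: it is a hypothetical illustration, not how the GPT is built (the GPT follows natural-language instructions), crisis detection is reduced to a boolean flag, and the returned strings are placeholders rather than the chatbot's actual replies. The names `Goal`, `Issue`, `REFERRAL_TYPES` and `route` are invented for this example.

```python
from enum import Enum
from typing import Optional


class Goal(Enum):
    """First diagnostic question: what does the user want from the GPT?"""
    SUPPORT = "psychological support"
    REFERRAL = "referral to professional help"
    WAITLIST = "support while on a waitlist"


class Issue(Enum):
    """Second diagnostic question for support/waitlist users: the primary concern."""
    STRESS = "stress"
    LONELINESS = "loneliness"
    BOTH = "stress and loneliness"
    UNSPECIFIED = "an unspecified issue"


# Second diagnostic question for referral users: the type of support needed.
REFERRAL_TYPES = {
    "mental health services",
    "safety assistance",
    "academic support",
    "social connection",
}


def route(goal: Goal,
          issue: Optional[Issue] = None,
          referral_type: Optional[str] = None,
          crisis: bool = False) -> str:
    """Return a placeholder description of the GPT's next action.

    Hypothetical sketch: the real GPT follows natural-language instructions,
    and crisis detection is reduced here to a boolean flag.
    """
    # A detected crisis overrides every other branch.
    if crisis:
        return "Refer to 113 Zelfmoordpreventie and encourage professional human help"

    if goal is Goal.REFERRAL:
        # Keep asking until the type of support is clear.
        if referral_type not in REFERRAL_TYPES:
            return "Ask which type of support is needed"
        return f"Direct to the matching TU/e service for {referral_type}"

    # Support and waitlist users default to an unspecified issue.
    concern = issue if issue is not None else Issue.UNSPECIFIED
    if goal is Goal.SUPPORT:
        return f"Offer evidence-based coping strategies for {concern.value}"

    # Remaining case: Goal.WAITLIST.
    return (f"Offer self-help techniques for {concern.value} "
            "and check whether an alternative referral is needed")


if __name__ == "__main__":
    print(route(Goal.SUPPORT, Issue.STRESS))
    print(route(Goal.REFERRAL, referral_type="academic support"))
    print(route(Goal.WAITLIST, crisis=True))
```

Checking for a crisis before looking at the user's stated goal mirrors the priority in the list above: safety is handled first, and only then is the user routed to support, a referral, or waitlist help.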

Techniques

Cognitive-Behavioral Therapy (CBT)

CBT focuses mainly on negative thinking patterns: people suffering from mental health issues learn new ways of coping with those patterns to improve their mental health. Treatment following CBT can include teaching patients to recognize their own negative thinking patterns, encouraging them to understand the behaviour and motivation of others, or helping them develop greater confidence in their own abilities.

Advantages of using CBT in a chatbot:

- Firstly, CBT is a rather analytical and general form of therapy. For instance, negative thinking patterns can be recognized by analyzing the general word usage in the messages sent to the chatbot.

- Secondly, CBT relies primarily on consistent effort from the patients themselves, who learn to recognize and cope with their own thinking patterns.

- Thirdly, CBT gives rather short-term and general mental health assistance, which means the chatbot does not need to save long-term data. This decreases the possibility of leaking sensitive data, and it allows the chatbot to give immediate advice after a conversation.

Disadvantages of using CBT in a chatbot:

- Firstly, CBT requires the student to make a consistent effort in challenging themselves to keep analyzing their thought patterns and helping themselves change them.

- Secondly, CBT is less suited to tackling more severe and deep-rooted mental health issues. However, such situations should not be handled by the chatbot directly, but rather be referred to the correct professional help within the university.

When looking at CBT in chatbots, it can be concluded that, since CBT is very structured and relatively safe to use on patients, a chatbot can make use of CBT to aid patients.

Acceptance and Commitment Therapy (ACT)

ACT is rather similar to CBT; however, where CBT focuses more on changing the patient's thought patterns, ACT focuses on helping the patient accept their emotional state and move forward from there. Treatments using ACT can include encouraging the patient not to dwell on past mistakes but rather to live in the moment, and encouraging the patient to take steps towards their goals despite possible discomfort.

Advantages of using ACT in a chatbot:

- Firstly, ACT helps patients accept their feelings, which makes it a very general therapy that helps with many different mental health issues.

- Secondly, since ACT can be used for many different mental health issues, it provides considerable long-term help for the patient.

Disadvantages of using ACT in a chatbot:

- Firstly, ACT is not as structured as CBT and requires a lot of conversation to understand the patient's emotions and thoughts. Furthermore, the chatbot would need much more conversation to help the patient come to terms with how they are feeling and accept those feelings.

- Secondly, accepting emotions can be very difficult for people seeking relief and, in some cases, might push people to resign themselves to suffering in a poor mental health state.

- Thirdly, ACT focuses on acceptance and not on symptom reduction. The patient will therefore not see results in the short term.

When looking at ACT in chatbots, it can be concluded that chatbots in therapy should generally not use ACT, due to the technique's complexity and the risk of pushing a student down the wrong path. However, parts of ACT could be of use to a chatbot. For example, for a student struggling with exam stress, a chatbot could use ACT to help the student come to terms with the normality of exam stress and cope with it.

Positive Psychology Therapy (PPT)

PPT is an approach to therapy that focuses on increasing well-being and general happiness rather than treating mental illness. This is done by focusing on building positive emotions, engagement, relationships, meaning and achievements. Treatments using PPT can include encouraging the patient to write down what he or she is grateful for daily, encouraging the patient to engage in acts of kindness to boost their happiness or to visualize the patient's ideal self to increase motivation.

Advantages of using PPT in chatbots:

- Firstly, PPT boosts the patient's well-being and happiness, which aids patients in the short term.

- Secondly, PPT can also be very helpful to people who do not struggle with mental health issues, since the technique helps a lot with personal growth.

- Thirdly, PPT can help build long-term resilience to mental health issues by teaching coping strategies and improving a patient's self-image.

Disadvantages of using PPT in chatbots:

- Firstly, PPT does not address deep psychological issues, but as mentioned for CBT, this is not an issue in this case because when the chatbot encounters deep psychological issues it should refer to a human professional rather than provide advice on its own.

- Secondly, PPT is less structured than regular therapy, thus the chatbot will be required to make more conversation in order to help a patient with their self-image.

- Thirdly, PPT requires the patient to make consistent effort in helping themselves.

In conclusion, PPT can be a very useful tool for chatbots, since it is very safe to apply and helps a lot with personal growth, which is especially useful for students. PPT can require more conversation, which is more challenging for a chatbot. However, general PPT strategies for improving the patient's self-image can be applied broadly. Therefore, it is beneficial to include PPT in a chatbot.

Mindfulness-Based Stress Reduction (MBSR)

MBSR is a therapeutic approach that uses mindfulness meditation to help with mental or physical health. MBSR teaches patients to be more aware of their thoughts, emotions and senses, with the goal of increasing awareness of the present moment. Treatments using MBSR can include meditation with different focuses, either on thoughts and emotions or on mindfulness more generally. This can result in decreased stress and anxiety and improved focus and concentration.

Advantages of using MBSR in chatbots:

- Firstly, MBSR works very well for reducing stress, anxiety and depression.

- Secondly, MBSR encourages the patient to have a better relationship with their emotions, teaching the patient to accept their emotions rather than suppress or avoid them.

- Thirdly, MBSR can significantly help with sleeping, especially when having stress. This can provide numerous benefits, including improved concentration.

- Fourthly, MBSR teaches the patient exercises to decrease stress on their own.

Disadvantages of using MBSR in chatbots:

- Firstly, MBSR requires high commitment on the patient's part to participate in these meditation exercises and do them consistently.

- Secondly, MBSR does not provide many short-term benefits, as dealing with mental health issues through meditation takes practice in order to be effective.

- Thirdly, MBSR does not work by itself for severe mental health issues. Though it will be of some aid, MBSR on its own will not fully resolve the issue.

In conclusion, using MBSR in a chatbot is recommended. It is a relatively safe therapy technique focused on long-term improvements in stress, anxiety, depression and concentration, which is highly applicable to the target group of the chatbot in this study.

A link to our GPT can be found in the Appendix.

Research ethics & concerns

While chatbots have become increasingly sophisticated and useful, they are not without challenges. Issues such as accuracy, ethical concerns, and user trust continue to shape their development and adoption. The following section explores some of the key concerns surrounding chatbots and their implications.

Privacy is a concern that comes with chatbots in general, but it grows when personal information is involved, as in therapy, and as was previously discussed in the analysis of the survey. Users may be hesitant to use therapy chatbots because they fear data breaches, or the data simply being used for anything other than its intended purpose, such as being sold for marketing. Any licensed therapist has to sign and adhere to a confidentiality agreement, which states that the therapist will not share patients' sensitive information except where appropriate. For AI this is more difficult: data has to be saved and collected in some form for the chatbot to become smarter and learn more about the patient. Privacy covers multiple concerns, including identity disclosure. Here the most important requirement is that none of the collected data can be traced back to the patient in any way, which corresponds to the notion of anonymity. Beyond anonymity, there are also concerns of attribute disclosure and membership disclosure: if sensitive information becomes available to or is found by others, it can be linked to patients even when anonymized, and used to make further assumptions about them. Because the chatbot for this project is built by creating and training a GPT, further privacy concerns arise, since using it feeds data on personal topics and experiences into ChatGPT. While building a chatbot from the ground up to fully avoid these concerns is unfortunately out of scope for this course, actions can be taken to mitigate them. One such measure is to ensure that private data such as names and addresses are neither fed into the tool (by warning users) nor asked for by the tool (by training the GPT).
Another measure, taken to protect research participants, is to ensure that testers use the chatbot on an account provided by the research team rather than on their personal account (Walsh et al., 2018).

Privacy is unfortunately not the only concern that arises with the use of AI therapy tools. The advancement of sophisticated language models has resulted in chatbots that are not only functional but also remarkably persuasive. This creates a situation where the distinction between human and machine blurs, and users can easily forget they are interacting with an algorithm. This is the core of the deception problem. The discussion surrounding deception in technology has been going on for a while and has many dimensions. What precisely constitutes deception? Is it simply concealing the true nature of an entity, or is there more to it? Is intent relevant? If a chatbot is designed to assist but inadvertently misleads, does that still qualify as deception? Is deception always negative? In certain contexts, such as specific forms of entertainment, a degree of deception might be considered acceptable. The risk of deception is particularly present among vulnerable user groups, such as the elderly, children, or, in the case of the therapy chatbot, individuals with mental health conditions. These groups may be less critical and more susceptible to the persuasive language of chatbots. The use of chatbots in therapy is a scenario where deception can have serious consequences. While developers' intentions are positive, crucial considerations must be kept in mind. The first is the risk of false empathy: chatbots can simulate empathetic responses, but they lack a genuine understanding of human emotions, which can foster a false sense of security and trust in patients. The second is the danger of over-reliance: vulnerable users may become overly dependent on a chatbot for emotional support, potentially leading to isolation from human interaction. There is also the potential for misdiagnosis or incorrect advice: chatbots can provide inaccurate diagnoses or inappropriate advice, with serious implications for patient health.
To mitigate these risks, it is essential that users are consistently reminded that they are interacting with an algorithm. This can be done through clear identification, regular reminders and user education. And while the therapy chat-tool is supposed to feel more empathetic and more human than a regular chat-tool, it should not lead users to believe that they are talking to a human rather than a system. A disclaimer should be added, along with referrals to human help.

While deception and privacy issues mostly concern the safety of the user, other concerns arise even before one uses the tool. A qualitative study on the emergence of trust toward diagnostic chatbots states that trust in such systems has to be taught, learned and thought about; it does not simply arise as it does in interactions between humans. Even before the initial contact, trust in the system plays an important role. Both the literature and our own survey revealed pre-existing attitudes toward chatbots. Subjective norms, perceived risk, and beliefs about the system's structural environment may influence the willingness to use the system and the subsequent trust-building process. Trust is more likely to arise when using the chat tool is socially legitimized, and when users believe the system is embedded in a safe and well-structured environment. People tend to trust other people, so what matters for trust in the system is legitimation, not just socially but also from professionals: having the system recommended by professionals increases the likelihood that users trust it is okay to use. Trust in chatbots is also more likely to develop when they are embedded in well-established and regulated systems. Users feel more secure interacting with AI tools that operate within a structured environment where oversight and accountability are clear, which in the case of this research is the online TU/e environment. The presence of legal, ethical, and professional frameworks reassures users that the system is reliable and safe. Without such legitimacy, users may hesitate to trust the chatbot, regardless of its actual capabilities.

While deception is a concern, the chat-tool must be sufficiently ‘real’ for deception to occur. Although chatbots can mimic empathetic responses, they lack the capacity for genuine human empathy. This distinction is crucial in therapy, where authentic emotional connection forms the foundation of healing. Clinical empathy, practiced by healthcare professionals such as therapists, involves using empathy to imagine and understand a patient's experience during a specific moment or period of their life. This could range from the emotional impact of receiving a serious diagnosis to the challenges of recovery. This real-time empathy, where clinicians resonate with a patient's emotional shifts and imagine the situation from their perspective, leads to more meaningful and effective medical care (Montemayor et al., 2021). The human ability to create deep, empathetic bonds, to truly understand and share in another's emotional experience, remains beyond the reach of AI systems. The difference lies in the nature of the response: AI operates on learned patterns and algorithms, while a human therapist, even within ethical and professional guidelines, uses intuition, lived experience, and an understanding of human complexity. Though AI can process and apply moral frameworks, it cannot navigate the moral dilemmas faced by clients with the same level of judgment as a human. Furthermore, human therapists can adapt in ways that AI cannot replicate: they can adjust their approach, respond to shifts in a client's emotional state, and engage in spontaneous, intuitive interactions. Moreover, a large part of human interaction, especially in therapy, happens beyond words. Non-verbal cues such as facial expressions, body language, eye contact, and subtle shifts in tone all contribute to the depth of communication. Human therapists can read these cues to perceive a client’s emotions more accurately, even when words fail.
They can also use their own non-verbal expressions to convey understanding and support. AI, on the other hand, operates purely through text or pre-recorded speech, making it incapable of engaging in this essential, unspoken layer of human connection. Without the ability to interpret or express non-verbal communication, AI lacks a crucial dimension of therapeutic interaction, reinforcing the value of human presence in mental health care. These human qualities are essential for creating a safe and supportive therapeutic environment, and they represent the value of human connection in the domain of mental health.

Even when these other concerns are mitigated and their effect is minimal, something can still go wrong. This is where responsibility and liability come into play, which is a significant ethical concern in AI therapy. For example, if a patient using the chatbot does something morally or legally wrong that was suggested by the chatbot, who is in the wrong? A human with full agency over their actions is typically responsible for those actions. However, the patient has put their trust in an online tool, and this tool could potentially give them ‘wrong’ information, which complicates matters. Whether the chatbot itself can be held responsible depends on whether it can be considered an agent; given that AI operates on programmed algorithms and data, it lacks genuine autonomy, making it impossible to hold it morally responsible in the same way as a human. Consequently, a substantial portion of the responsibility shifts to the developers, who are accountable for the AI's programming, the data it is trained on, and the potential repercussions of its advice. Furthermore, when therapists incorporate AI as a tool, they also bear responsibilities, including understanding the AI's limitations and applying their professional judgment. The legal framework surrounding AI liability is still developing and needs clear guidelines and regulations to safeguard both patients and developers.
AI therapy thus introduces a complex web of responsibility, in which patient accountability is nuanced by the roles of developers and therapists, and the legal system strives to keep pace with rapid technological advancements. What we want to highlight is that there is essentially always a human who is responsible and in control, and who is therefore morally responsible and liable for any actions.

Product Evaluation

User interviews

Interview guide

Conducting interviews was a vital aspect of our research, as interviews facilitate the collection of qualitative data, which is important for understanding the subjective experiences of users and captures in-depth insights into their thoughts. Presenting participants with the first prototype of our chatbot allowed them to engage with it firsthand, which gave us feedback on usability, credibility and trustworthiness. Furthermore, the interviews provided an opportunity to gather broader user opinions on the chatbot’s interface, tone and structure. The interviews were conducted to achieve two primary objectives: first, to explore users’ subjective experiences with the chatbot; second, to assess users’ subjective opinions about the chatbot’s interface, conversational tone and overall structure.

The interview guide (which can be found in the appendix) was developed to provide the interviewers with structured yet flexible questions. Since multiple interviewers were involved in conducting the interviews, it was crucial to have somewhat structured questions to ensure consistency across the collected data, which is why we opted for a semi-structured guide. While the guide provided structured questions, it also left room for participants to elaborate on their thoughts and experiences. The intention was to keep the interviews as natural as possible, hence almost all questions were open-ended.

The interview questions were developed based on key themes that emerged from the analysis of the initial survey. The following topics formed the core structure of the interviews:

- Chatbot experience and usability

- Empathy and conversational style

- Functionality and user needs

- Trust and credibility

- Privacy and data security

- Acceptability and future use

The first topic was addressed after the user had interacted with the chatbot/read the manuscript and covered their first impressions of the chatbot. Participants were asked what they found easy and difficult, whether they felt comfortable sharing personal feelings and thoughts with the chatbot, and whether they encountered any issues or frustrations while using the chatbot/reading the manuscript. The second topic revolved around empathy and the chatbot’s conversational style. We asked participants whether they felt the chatbot’s responses were empathetic, how the chatbot acknowledged their emotions and whether this was done effectively, how natural the chatbot’s responses seemed, and whether they would change anything about the interaction if possible. Next, participants were asked about the chatbot’s main features, which features might be missing or how they could be improved, which of the three functions they found most useful, and whether they would add any additional functionalities. Participants were then asked about trust and credibility, including privacy and data security concerns. The last topic revolved around acceptability and future use: we asked about potential barriers that could prevent them from using the chatbot and whether they thought it would be a valuable tool for TU/e students.

All interviews followed the same structured format to ensure consistency across sessions. First, an introduction was given in which the research project was briefly introduced, the purpose of the interview was explained, and it was once again highlighted to participants that their participation is completely voluntary. Basic demographic questions were asked to understand the participants’ context. Next, participants were given instructions on how to interact with the chatbot/read the manuscript. After interacting with the chatbot/reading the manuscript, the core topics were discussed in a flexible, conversational manner, allowing participants to share their thoughts freely. At the end of the interview, participants were asked whether they had any additional insights and were then thanked for their time and contribution.

Each question in the interview guide was carefully designed to align with the overarching research question, and we tried to give each main topic an equal amount of time and depth. By structuring the questions in this manner, we ensured that the collected data directly contributed to answering our research objectives.

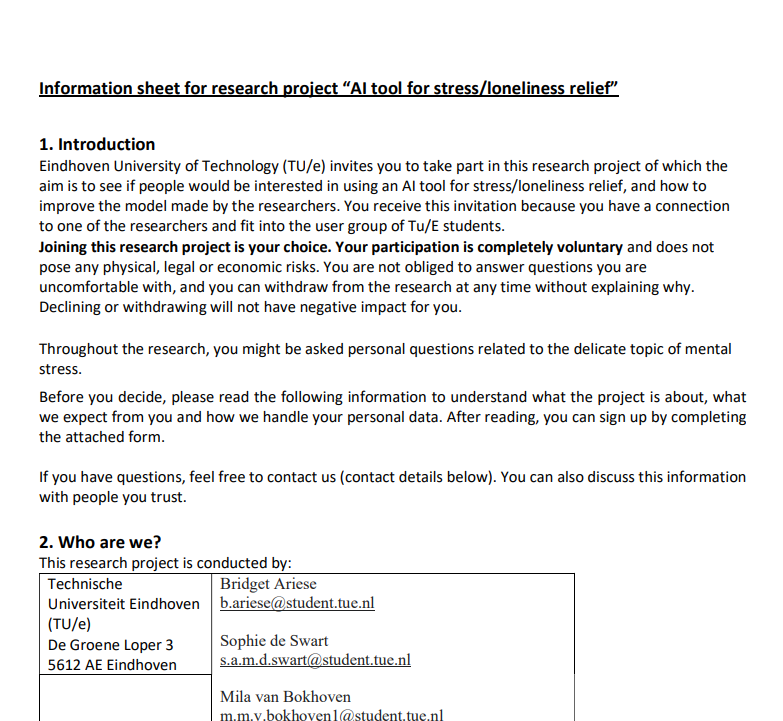

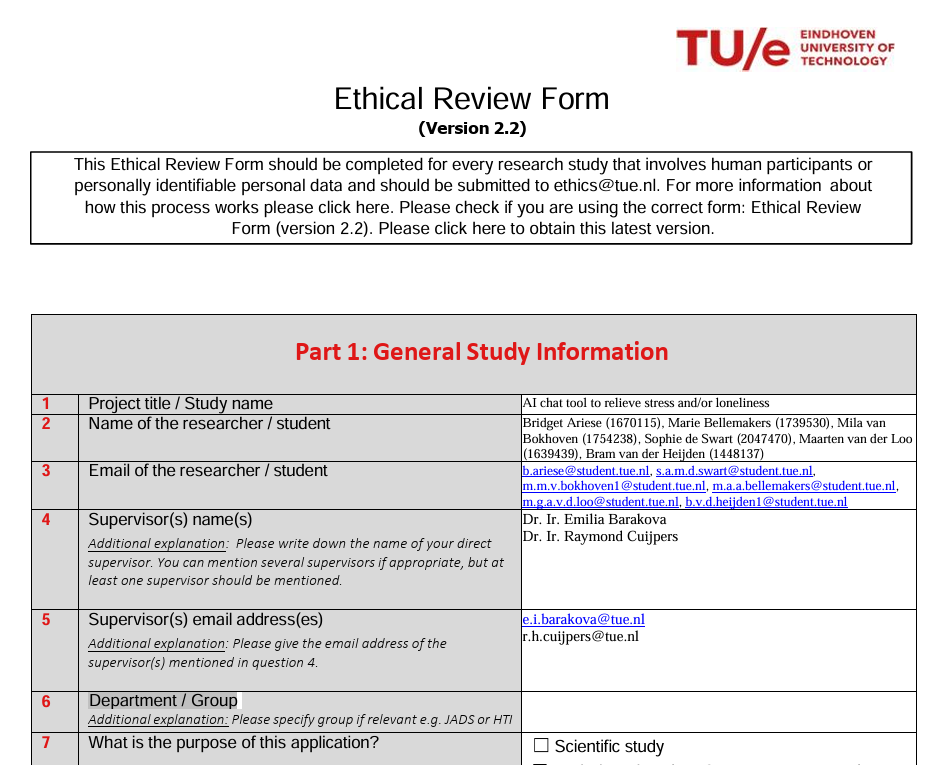

Measures taken to maintain ethical integrity included:

- Informed consent – participants were provided with consent forms before the interview, outlining the purpose of the study, their rights and privacy information. By providing the informed consent form in advance of the interview, we allowed participants to read it in their own time and at their own pace.

- Anonymity – all responses were anonymized to ensure confidentiality. After the research project, all participant data will be removed from personal devices.

- Voluntary participation – participants were reminded before and during the interview that they could withdraw from the study at any point without any repercussions.

Conducting the interviews

We employed convenience sampling due to its ease of access and feasibility within the study’s time frame. While this approach allowed us to gather perspectives quickly, it may limit the diversity of our findings.

See the demographics below:

| Age | Gender | Education |
|---|---|---|
| 21 | Male | Biomedical technologies |
| 19 | Female | Psychology and Technology |
| 22 | Female | Bachelor biomedical engineering |
| 21 | Female | (Double) Master biomedical engineering and artificial intelligence engineering systems |
| 21 | Male | Psychology and technology |
| 21 | Female | Data science |
| 22 | Male | Biomedical technologies |
| 25 | Male | (Master) applied mathematics |
| 22 | Female | (Master) Architecture |
| 20 | Male | Computer Science & Engineering |
| 18 | Male | Electrical engineering |
| 20 | Female | Industrial Design |

Each interviewer sampled two participants and conducted two interviews, resulting in a total of 12 interviews. The interviews were conducted either online or in person. All interviews were recorded for research purposes, and the recordings were deleted once transcription was finished.

Interview Analysis

Approach

After transcribing the interviews, we used thematic analysis to analyze the interview data. This qualitative method makes it possible to identify, analyze and interpret patterns within qualitative data. Thematic analysis is especially well suited to this study, as it enables us to capture users’ experiences, perceptions and opinions about the chatbot in a structured yet flexible way.

Our analysis followed Braun & Clarke’s (2006) six-step framework for thematic analysis. The first step involves familiarizing oneself with the data: all interviews were transcribed, and all transcripts were read multiple times to become familiar with the content. Next, the data were systematically coded by identifying significant phrases and patterns, with codes assigned to relevant portions of text related to the main topics covered during the interviews. The codes were then reviewed and grouped into potential themes based on recurring patterns, by examining the relationships between different codes to determine broader themes. These themes were then refined, defined and named, with each theme clearly defined to highlight its significance in relation to the research question. Subthemes could also be identified to provide additional depth. Finally, the findings were contextualized within the research objectives.

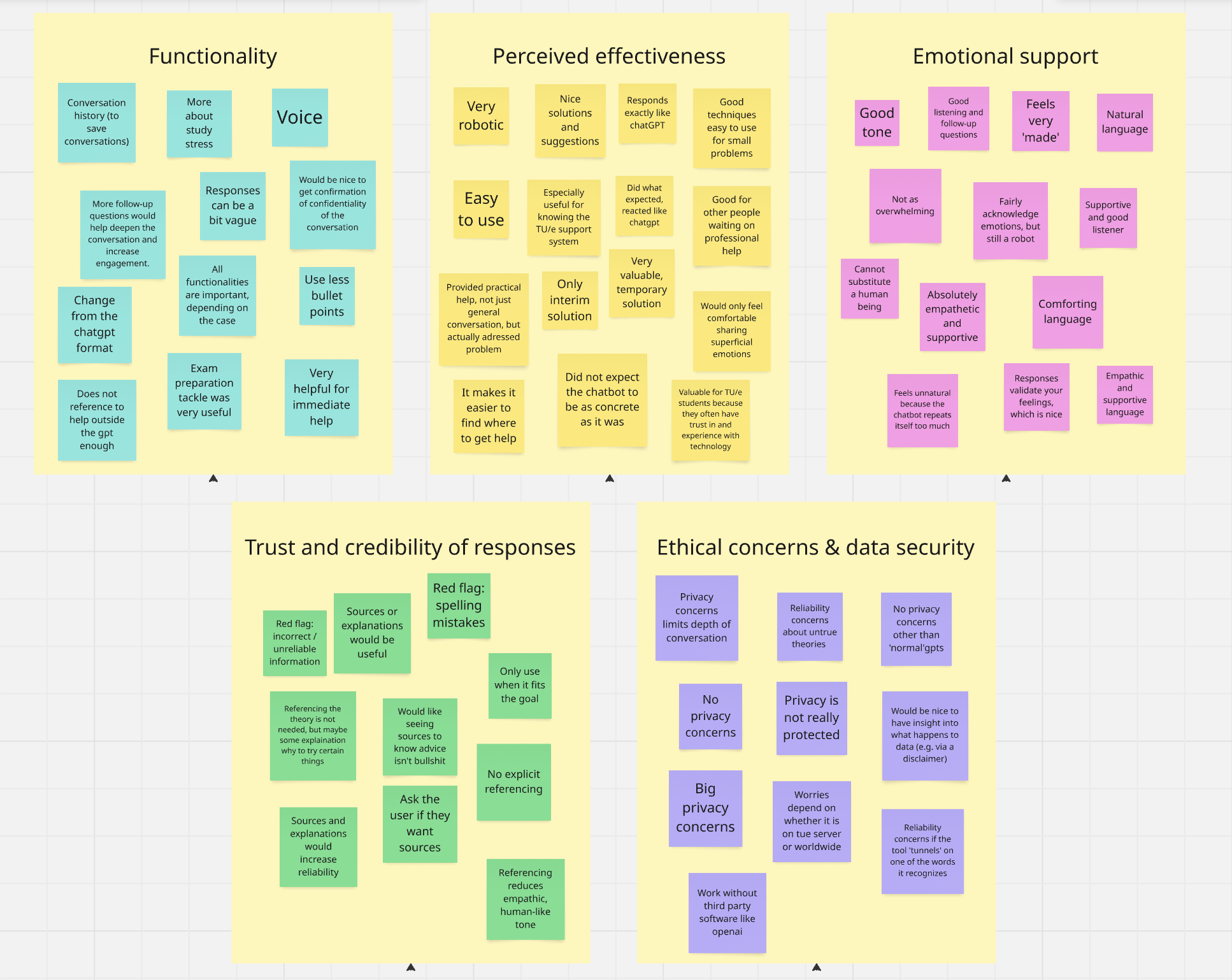

Thematic Analysis

Through thematic analysis, we identified five recurring themes. Overall, our interviews revealed that few participants had prior experience with online mental health resources such as websites, apps or forums. Additionally, participants had no experience with the TU/e resource system.

The first theme is functionality, which covers current useful features as well as areas for improvement. Most participants were positive about all three functionalities, appreciating the structured responses and ease of use. However, they noted that conversations did feel ‘robotic’, and highlighted that asking more follow-up questions would deepen the conversation and create a more dynamic and engaging dialogue. Additionally, recalling previous sessions could make conversations feel more continuous and supportive. Another area for improvement was the chatbot’s reliance on bullet-point responses, which contributed to its impersonal nature; reducing this reliance could create a more human-like conversation.

Perceived effectiveness is the second theme that emerged from the interviews, focusing on how well the chatbot met users’ needs. Participants generally found the AI useful for practical support, such as exam preparation and stress management strategies. Many were pleasantly surprised by the chatbot’s ability to generate detailed, actionable plans with step-by-step instructions that are easy to follow. However, when it came to providing deeper emotional support, participants found the chatbot lacking. While it could acknowledge emotions, it did not fully engage in empathetic dialogue. As a result, participants viewed the chatbot as a helpful supplementary tool but not as a substitute for human mental health professionals. Its ease of use was appreciated, but its lack of human warmth remains a drawback.

The next theme revolves around emotional support. While some participants found the chatbot supportive, others thought it felt robotic. The chatbot was able to recognize and validate users’ emotions, but its engagement with deeper emotional issues was limited. While the chatbot generally used natural and comforting language, some participants noticed inconsistencies in its tone, which reduced its perceived warmth and overall effectiveness. These inconsistencies made interactions feel less genuine, highlighting the need for improved emotional intelligence and conversational flow. Despite these limitations, the chatbot was still perceived as a supportive tool, showing support through listening and responding in an empathetic way.

The fourth theme identified was trust and credibility of responses. Participants emphasized the need for greater transparency in how the chatbot generated responses. Many expressed a desire for the chatbot to reference psychological theories or scientific research to enhance its credibility. The balance between scientific accuracy and natural conversation was seen as crucial: while explanations could strengthen trust, they should be communicated in an accessible and engaging manner. Some participants also questioned whether the chatbot’s advice was evidence-based, underlining the need for clearer sourcing of information.

The final theme covers ethical concerns and data security, which emerged as significant issues. Participants expressed strong concerns regarding data privacy, particularly the potential for their information to be stored or shared. These concerns were a barrier to engagement, as users were hesitant to share deeper emotional thoughts for fear of insufficient anonymity. The reliance on third-party platforms, such as OpenAI, heightened these concerns. Participants suggested that minimizing dependence on external AI providers and ensuring robust data protection measures could alleviate some of these concerns and increase trust in the chatbot.

In summary, our findings suggest that while the chatbot serves as a valuable and accessible tool for mental health support, it remains an interim solution rather than a replacement for professional help. Participants appreciated its practical assistance but found its emotional engagement limited. Improvements in conversational flow, personalization and response transparency could increase the chatbot’s effectiveness. Additionally, addressing privacy concerns through stronger data security measures would further increase user trust. With these improvements, the chatbot could become a more reliable and empathetic support system for students seeking mental health assistance.

Differences in our model vs. ChatGPT

This section compares the outputs from the regular ChatGPT to our adjusted model. We tested this by inputting the same user scripts into the standard ChatGPT.

One of the main advantages of our model over the regular model is that we trained it specifically for TU/e students. This means it can directly suggest professional help within the university, which is easily accessible to students. The original ChatGPT could also refer users to specific professionals within the university, but only after much more specific prompting and questioning from the user. This is not ideal, especially since many students are not aware of all the resources the university has to offer. When the chatbot suggests seeking help within the university, students are made aware of this resource network. Another key advantage of our model is that it provides more effective suggestions for stress-related issues. The suggestions the improved model gave, based on researched and proven techniques, were more relevant to the user’s problem, less obvious (increasing the chance that the user had not tried them yet), and better explained in terms of their effectiveness. These improvements resulted from the additional training we did and the integration of research-backed therapies and techniques. Lastly, since our model was designed specifically for stress- and loneliness-related problems, it could make certain assumptions before the conversation even started, which allowed it to identify the user's exact problem more quickly. It was also able to give more suggestions specifically related to these problems.

However, both models asked the same number of follow-up questions, meaning that they both needed the same amount of information before offering a possible solution, advice or a further question. Even though we tried to train our model to ask more questions before giving advice, this remains an area for improvement. This is due to the nature of ChatGPT, which tends to start answering straight away instead of first listening, as a human would.

Expert interviews

To further evaluate the project, we wanted to gain insights from professionals and help providers at the TU/e. We conducted in-depth interviews with academic advisor Monique Jansen-Vullers and student psychologist Ms. Neele.

TU/e Academic advisor

For the interview with academic advisor Monique Jansen-Vullers, we presented our four sample chatbot interactions, as we had in the user interviews. She provided valuable, experience-based insights that helped us better align our concept with the real needs and context of students at the TU/e. When asked which of the three chatbot functions she considered most important, Monique emphasized the referral function. She noted that TU/e offers a wealth of mental health and wellness resources, but that they are often underutilized due to poor visibility.

"I think TU/e offers a lot more than people realize... making it accessible is the hard part."

Monique recognized the potential of such a chatbot to add value to TU/e's online ecosystem. However, she cautioned that implementation is key: the tool must function reliably and reduce friction for struggling students.

"If you can make the existing offerings clearer, it would already be a big win."

Her comments emphasize the value the chat-tool could provide through its referral function at the TU/e, but she also questioned where students would find it. Findability is thus a major concern: Monique emphasized that building a tool is one thing, but making sure students can find and use it effectively is just as important. She suggested that deciding where the tool should be placed is one of the first steps toward implementation.

Although Monique saw a lot of potential in the referral function, she found that, while the chatbot's language was generally natural, its responses were too direct and lacked the kind of empathetic listening that students need. She recommended a more organic flow of conversation, especially at the beginning of an interaction. She pointed out that the tool currently asks the user to choose a category for their ‘problem’, whereas it would be more valuable for the tool to ask for the user’s story and interpret the problem from that story. She recommended the “LSD” method (Listening, Summarizing, and Redirecting) that counselors use: starting with attentive listening before steering toward support options. This would prevent students from feeling forced into predetermined paths and allow for more authentic conversations.

Monique responded positively to the use of psychological methods such as CBT, Positive Psychology and MBSR. However, she encouraged including references and links to additional sources so that students could research the theory behind the advice if they wanted to. Monique was also very curious about how a professional psychologist would assess this approach.

Monique also noted that the current sample chats felt too general. She stressed that, to really capture students' interest, the scenarios should reflect specific student challenges, such as BSA stress, difficult courses, housing, or the experiences of international students.

Besides the general use of the chat-tool, some of the questions we asked the professionals, and in the general interviews, concerned its safety.

According to Monique, a major “red flag” for safe and reliable use would be the chatbot mishandling or failing to recognize urgent cases: "A lonely student who hasn’t left the house for weeks? That needs to be picked up on. Otherwise, you risk missing something serious."

She recommended linking to external services like "moetiknaardedokter.nl", as well as emergency lines like 113 or 112, wherever appropriate.

Apart from user safety in urgent cases, Monique personally had few concerns about privacy. She did acknowledge that TU/e does not currently use chat systems for a reason, and that such a tool should be subject to proper data management and ethical review.

The major insights Monique provided concerned referral and findability, personalization with an emphasis on student life, and safety in urgent cases. Overall, Monique said “I think it’s a very fun and promising idea”, but she added that in its current state it is not yet ready to be used.

TU/e Student Psychologist

To get a professional's opinion on the chatbot, particularly on the quality of its advice, we also had a conversation with Aryan Neele, a student psychologist at the TU/e. We wanted the conversation to flow naturally, so we prepared some discussion points and questions beforehand but kept the structure loose, so as not to limit her feedback to areas we had already thought of.

To start, we informed Ms. Neele about our project: the problem we identified, our chatbot, its primary purposes, and the material used to train the chatbot so far.

She immediately identified privacy as an important ethical matter. We mitigate this in part through the disclaimer that the chatbot provides, but Ms. Neele mentioned that, despite such a disclaimer, people may still enter sensitive or private information, possibly without realizing it, so a disclaimer alone is not enough. Users should be informed as clearly as possible about what happens to their data and should give their consent before using the chatbot.

Another point of discussion raised by Ms. Neele was that user scenarios may not always point to one problem as clearly as we think. When introducing our chatbot, we explained that it is intended for students with less severe problems (stress and loneliness) and not for students with severe problems such as depression. However, Ms. Neele explained that people are often not exactly aware of what problem or condition they have: they simply do not feel good and look for help, but may not be able to articulate what their ‘problem’ is. This could make it difficult for the chatbot to determine what kind of help the user needs, and in particular whether the user should be referred to professional help, because ‘simple’ keyword detection would not work well here.

A third initial concern raised by Ms. Neele was that a chatbot simply cannot reply as spontaneously as a human, because all its responses need to be preprogrammed to a certain extent. This could make its replies, and thus the help a user receives, less apt and less responsive.

After these first remarks, we showed Ms. Neele the chatbot and typed in an example of a student struggling with exam stress and fear of failure. We then had a free-flowing discussion about this example and about important points to take into consideration or improve. The key points from this discussion were the following:

Overall, the chatbot responded to the user’s inputs better than Ms. Neele had expected. She underscored that it was very good that the chatbot normalizes the user’s feelings and problems; this keeps the threshold for the conversation low and shows interest in the user. However, Ms. Neele explained that in a chat like this you miss part of the information, as you cannot read a user’s situation from their facial expressions or physical reactions.

Furthermore, she explained that it is very important to keep asking questions to find out where a user’s problem or situation stems from. This can be difficult if users are not aware of why they behave the way they do (e.g. procrastination or panic). In that case, giving a user CBT-based exercises, as the chatbot did in the example, could already be a step too far and could turn people off. Ms. Neele therefore highlighted the importance of keeping a human referral. For example, the chatbot could tell users that it is not able to understand them well enough, or that it notices they are no longer responding, and refer them to the appropriate professional help.

A key point of feedback given by Ms. Neele was to properly explain exercises to the user: what is the user experiencing, how does that work in the brain, what are the exercises you can do, why do these exercises help, what is the expected effect of the exercises, and when can this effect be expected. This helps keep the user engaged, makes them understand their situation better, and makes them more willing to do the exercises.

Another note from Ms. Neele was to ensure that, if applicable, exercises are called attention exercises, and not meditation exercises.

After this discussion, we still had a little time to ask some questions. The first question we asked Ms. Neele was whether she thought the target audience we selected for our research was apt. She found this difficult to answer. On the one hand, she believed the chatbot could be of use for anyone who is a bit stuck in their head and struggling with what they are feeling. On the other hand, she indicated that loneliness may not be a suitable issue to target: lonely people are seeking more contact with other humans, which a chatbot cannot offer. For that case, she advised helping people find this contact by giving them clear information about ways to get more social contact (e.g. associations).

The second (and last) question we got to ask was whether Ms. Neele found the chatbot valuable for TU/e students. She replied that she did not in its current form, once again highlighting privacy as an obstacle. She could see the chatbot being valuable in the future, especially for people who are just a bit stuck for a moment, but not for heavier problems (here she once more underscored that it is essential to think carefully about how to make this distinction). She also mentioned ‘gezonde boel’, a self-help website the TU/e used to have, as something to look into, and noted that the chatbot format is nicer because it is more interactive.

Discussion