PRE2018 1 Group3 1203310

Introduction:

With the rapid advance of technology, the world is changing quickly. Many everyday tasks are becoming automated, and some of them are being taken over by robots. Here we look at one part of automated grocery shopping: a robotic store clerk that can do the same things a human clerk does. One of these tasks is navigating around the store, something a human clerk rarely thinks about. Anyone designing a robotic counterpart, however, has to overcome several obstacles, including obstacles in the literal sense, such as store shelves, customers and shopping carts. Some of these cases are worth studying for their effect on the task of filling shelves. Here we focus on how a robot interacts with customers who are pushing a shopping cart, because a shopping cart has a noticeable influence on the behavior of an average consumer.

Problem statement:

Ever wondered how customers move about in a store? Are you aware of your own behavior when moving about, and of how much you take your environment into consideration? These questions come up whenever we talk about navigating a store, so anyone designing a robot to do the same activities should take them into account. The main differences between a robot and a human should be highlighted, so that we know what the robot is capable of and which of its strengths we can take advantage of.

When navigating around a store you are bound to encounter obstacles in your way. Most humans know how to move about in these settings, mostly because of prior experience and common knowledge. They are also flexible in adapting to different situations: for example, when another customer makes eye contact, they almost instantly act accordingly. A robot has to be programmed explicitly to process this type of cue and respond in the best possible way. Even though a human has the upper hand here, there are things a robot can do that a human can't. Mainly, we mean the sensory input a robot can use, which is not limited to the five senses a human possesses.

The obstacles an agent could encounter can be divided into a few groups:

- Immobile
  - Boxes
  - Loose products
- Mobile/movable
  - Carts
  - Baskets
- Human customers
  - Regular customers
  - Customers with baskets
  - Customers with shopping carts
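The grouping above can be written down as a small data structure that the robot's software could query. This is a minimal sketch. The string labels and both helper functions are hypothetical and not part of the original design. It only encodes the three groups from the list and the obvious consequence that anything not immobile can change position.

```python
from enum import Enum


class ObstacleGroup(Enum):
    """The three top-level obstacle groups from the classification above."""
    IMMOBILE = "immobile"
    MOVABLE = "mobile/movable"
    HUMAN = "human customer"


# Hypothetical string labels for each obstacle type, grouped as in the list.
OBSTACLE_GROUPS = {
    "box": ObstacleGroup.IMMOBILE,
    "loose_product": ObstacleGroup.IMMOBILE,
    "cart": ObstacleGroup.MOVABLE,
    "basket": ObstacleGroup.MOVABLE,
    "customer": ObstacleGroup.HUMAN,
    "customer_with_basket": ObstacleGroup.HUMAN,
    "customer_with_cart": ObstacleGroup.HUMAN,
}


def may_change_position(label: str) -> bool:
    """Anything that is not immobile can change position between two frames,
    so its position should be re-checked before the robot commits to a path."""
    return OBSTACLE_GROUPS[label] is not ObstacleGroup.IMMOBILE


def moves_by_itself(label: str) -> bool:
    """Carts and baskets only move when a customer moves them."""
    return OBSTACLE_GROUPS[label] is ObstacleGroup.HUMAN
```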

In this case we focus mainly on the behavior of customers with shopping carts and on how an agent could interact with them. There are a couple of reasons why this is particularly interesting. For one, customers appear to behave irregularly, but there are aspects we could take into consideration to predict their actions in advance and eventually act on these predictions. Some of these are the effect of pushing a cart compared to moving about without one, and which aisles are popular, especially among customers with carts. Besides that, there are phenomena such as cart abandonment, the way children behave, and the layout of a supermarket, to name a few.

The effects of a shopping cart on the movement of a customer

Retailers facilitate shopping for their patrons by providing carts. In particular, shoppers use these carts to temporarily store products before paying for them at the checkout counters. Customers also use carts for general convenience; some prefer to put personal belongings in their carts rather than carry them around. Yet shopping carts hinder movement in the store by reducing walking speed and the flexibility of walking direction. Whether a shopper parks a cart therefore depends on weighing the advantages of doing so against the disadvantages. Unfortunately, these drivers are not directly observable. Plausible candidates are the number of shoppers present in the store at a given time and the crowding they are perceived to cause, a fear of pickpockets, and the characteristics of the store and the time of the visit. Crowding can work in opposite directions. On the one hand, with many shoppers present, individual space becomes limited, so parking a shopping cart somewhere in the store may help a shopper regain mobility. On the other hand, leaving a cart unattended may be risky in crowded areas, especially when personal belongings are stored in it. Furthermore, other shoppers' parked carts can be perceived negatively if they restrict movement or access to racks of merchandise. Worse, they may keep customers from buying because of the butt-brush effect. This is a retail phenomenon in which shoppers who dislike being touched, brushed or bumped into stop making purchases at that spot to avoid the inconvenience. How strongly these effects play out also depends on factors such as the time pressure customers face.

The most basic hypothesis is that patrons who spend more time in a store or walk longer distances are likely to purchase more. This is probably because their shopping demand depends on their needs and personal circumstances. Typically, customers who stay longer in a store walk longer distances within it, look at the merchandise more thoroughly, and are less goal-oriented. Along the same lines, we assume that shoppers who stay in the store longer park their carts more often. This pattern may be even stronger among customers who need more time for shopping than the typical shopper. They stay longer because they enjoy browsing the assortment or the different departments, try a greater variety of products, need more time to navigate the store, and so on, so they probably park their carts more frequently. The situation may be different, however, when personal belongings are stored in the cart. In that case shoppers may refrain from leaving their carts unattended. Shoppers are also more likely to make a purchase when their hands are free. Shoppers who enter a store without a cart or basket tend to select additional products only as long as their hands can hold them. Temporarily parking a cart combines the convenience of unrestricted movement with the ability to free one's hands when they are full. Thus, we expect a positive relationship between how often a shopper parks their cart and the number of purchases they make.

To sum up, these are the main factors we identified that have a considerable effect on customer behavior:

- 1. Mobility

- 2. Personal belongings that are present in a cart

- 3. The number of shoppers around

- 4. The number of parked carts

- 5. The (extended) time a consumer spends in a store because of the use of a shopping cart

Assumptions that can be made when considering these aspects:

- 1. A customer moving around with a shopping cart has limited mobility, as mentioned earlier. We can therefore assume that the customer does not wander much and will mainly move to the aisle of the product they need. Once there, they leave the cart to get the product or to look around for it.

- 2. When there are personal belongings in the cart, the customer will most likely not leave it. They will rather take it wherever they go despite the loss of mobility.

- 3. If there are a lot of shoppers around, shoppers will not stay long because of the pressure of the "butt-brush" effect.

- 4. If there are many parked carts present, there is a good chance that a considerable number of shelves/racks are not accessible.

- 5. The general use of a shopping cart causes a user to spend more time shopping and roaming around.

Valuable information for the robot to consider implementing in its programming:

- 1. If a customer has just come into an aisle with a shopping cart and then stops, there is a high chance that the customer will leave the cart to look for a desired product.

- 2. If, in the same situation, the customer has personal belongings in the cart, the probability that they leave it is much lower. If they do leave it, they will most likely stay close enough to keep the cart in view.

- 3. When there are many customers (with or without carts) in a single aisle, most of them will move quickly to get the product they want and avoid the crowding.
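The three rules above can be turned into simple estimates that a planner could consume. This is only a sketch. The `CartCustomer` fields are assumed to come from the ceiling camera. All probabilities, the crowd threshold and the halving of dwell time are hypothetical placeholders that only preserve the ordering implied by the rules. Real values would have to be estimated from observations in a store.

```python
from dataclasses import dataclass


@dataclass
class CartCustomer:
    """What the ceiling camera might report about a customer with a cart."""
    just_entered_aisle: bool
    stopped: bool
    has_personal_belongings: bool


# Hypothetical probabilities: they only encode the ordering implied by the
# rules above and would have to be estimated from observations in a store.
P_LEAVE_AFTER_STOP = 0.8        # rule 1
P_LEAVE_WITH_BELONGINGS = 0.2   # rule 2
P_LEAVE_OTHERWISE = 0.1


def p_leaves_cart(c: CartCustomer) -> float:
    """Estimated probability that the customer walks away from the cart."""
    if not (c.just_entered_aisle and c.stopped):
        return P_LEAVE_OTHERWISE
    if c.has_personal_belongings:
        return P_LEAVE_WITH_BELONGINGS
    return P_LEAVE_AFTER_STOP


def expected_dwell_s(base_dwell_s: float, customers_in_aisle: int,
                     crowd_threshold: int = 4) -> float:
    """Rule 3: in a crowded aisle customers hurry. The threshold and the
    halving of the dwell time are arbitrary placeholders."""
    if customers_in_aisle >= crowd_threshold:
        return base_dwell_s / 2
    return base_dwell_s
```

A planner could, for example, treat a stopped cart with a high `p_leaves_cart` value as an obstacle that will stay in place for a while.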

All of this information can be fed into cost functions and algorithms so that the robot acts as efficiently as possible (as far as we know). But one thing has to be addressed first: how is the robot going to identify a customer, a shopping cart, personal belongings and so on? And how can we use the robot's abilities to our advantage? This brings us to the technology that can make this possible.
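One common way to combine such information with a cost function is grid-based path planning. The sketch below assumes a simple occupancy grid of the aisle and uses A* search. It is not an algorithm the report specifies. Cells near customers or carts get a penalty instead of being blocked, so the robot prefers to keep its distance but can still pass if there is no other way. The `radius` and `weight` values are hypothetical tuning parameters.

```python
import heapq
import math


def penalties_around(obstacles, radius=1, weight=5.0):
    """Extra cost for every cell within `radius` (Chebyshev distance) of a
    customer or cart, so the robot keeps its distance without treating the
    cell as a wall. `radius` and `weight` are hypothetical tuning values."""
    pen = {}
    for r, c in obstacles:
        for dr in range(-radius, radius + 1):
            for dc in range(-radius, radius + 1):
                cell = (r + dr, c + dc)
                pen[cell] = pen.get(cell, 0.0) + weight
    return pen


def plan_path(grid, start, goal, penalties):
    """A* on a 4-connected grid (0 = free floor, 1 = shelf). Each step costs 1
    plus any penalty on the cell entered; Manhattan distance is an admissible
    heuristic because every step costs at least 1."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best_g = {start: 0.0}
    came_from = {start: None}
    frontier = [(h(start), 0.0, start)]
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # outdated queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1.0 + penalties.get(nxt, 0.0)
                if ng < best_g.get(nxt, math.inf):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable
```

For example, consider a customer standing at cell (2, 3) in an empty 5 × 7 grid. A robot going from (2, 0) to (2, 6) then takes a detour through the outer rows instead of brushing past the customer.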

The Technology

Tracking and recognizing human customers and shopping carts comes with some constraints. First, the restrictive legal situation in Europe rules out methods such as cell phone tracking. Second, budget considerations limit the use of sophisticated tracking technologies (e.g., radio frequency identification [RFID] chips in shopping carts). What we are looking for is a solution that is ethically acceptable and fits a moderate budget. In this section we discuss which technology we use.

Sensory input

For the robot to know that an obstacle is present, it has to be able to detect it. There are several ways to do this; the one chosen here is ceiling cameras. Why? To know where customers and their carts are, the robot needs to know what is present in the whole aisle, including what is behind other people, carts, shelves or the robot itself. Sensors or cameras mounted on the robot would limit the crucial information the robot can gather from its surroundings. A ceiling camera is ideal here because nothing is in the way in a top-down view. It also makes the location of every entity in an aisle easier to determine. For optimal coverage we use a camera with a 180-degree fish-eye lens, which can capture an image of the whole aisle. Figure 1 shows the image the camera produces in an aisle.

Object Recognition

If the robot is to avoid colliding with customers or other objects such as carts, it has to be able to recognize these entities and distinguish between them. First, the robot/camera has to pick up on the presence of an object within its vicinity. This task is delegated to the camera. From its top-down view it can detect everything, whereas the robot cannot see whether anything is hidden behind an object or person. When the camera detects a presence, it sends the information to the robot so that the robot can act accordingly.
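The report does not say how the camera passes its detections to the robot. A minimal sketch, assuming a JSON message per video frame, could look like the one below. The `Detection` fields, the camera ID and the message layout are all hypothetical. The transport (network socket, message queue, etc.) is left open.

```python
import json
from dataclasses import dataclass, asdict
from typing import List, Tuple


@dataclass
class Detection:
    label: str      # detector class name, e.g. "person"
    score: float    # detector confidence between 0 and 1
    x_px: float     # box centre in the top-down camera image
    y_px: float


def encode_frame(camera_id: str, timestamp: float, detections: List[Detection]) -> str:
    """Camera side: serialize one frame's detections for sending to the robot."""
    return json.dumps({
        "camera": camera_id,
        "t": timestamp,
        "detections": [asdict(d) for d in detections],
    })


def decode_frame(payload: str) -> Tuple[str, float, List[Detection]]:
    """Robot side: turn the received message back into Detection objects."""
    msg = json.loads(payload)
    return msg["camera"], msg["t"], [Detection(**d) for d in msg["detections"]]
```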

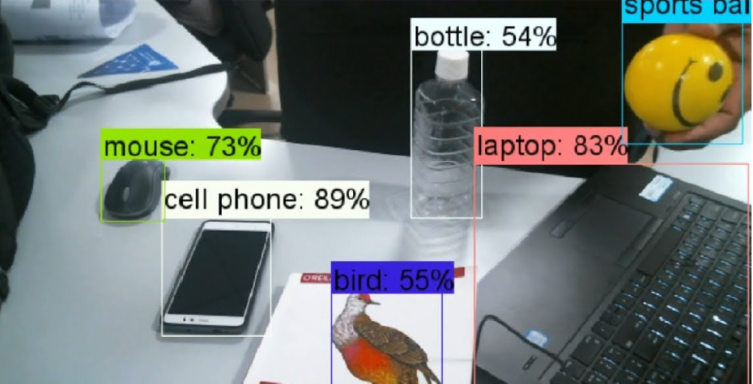

One way to do this is with the TensorFlow Object Detection API. TensorFlow was developed by the Google Brain team, which has extensive experience in researching and developing AI. The object detection models use a trained neural network to locate objects in an image and assign each one to a category. The result is shown on an image or video footage by drawing a coloured box around each detected object, labeled with its category and the confidence that it belongs to that category. Figure 2 shows an example of the interface at work. TensorFlow is an open-source software library for deep learning, so anyone can build on it for their own purposes. The code provided out of the box is a basic implementation that recognizes the object classes in its training dataset. These range from a person to a simple book or even some animals.
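The detection models in the TensorFlow Object Detection API model zoo return three arrays per image: `detection_boxes` (normalized `[ymin, xmin, ymax, xmax]`), `detection_classes` and `detection_scores`. The sketch below shows how the camera software might turn that output into labeled pixel boxes. It takes the arrays for a single image as plain Python sequences, so it does not depend on TensorFlow itself. The 0.5 score threshold is an arbitrary choice. The label map is a small subset of the 91-entry COCO map (1 = person, 62 = chair, 84 = book), limited to the classes this report uses.

```python
# Small subset of the COCO label map used by the TF Object Detection API
# model zoo (IDs from the 91-entry COCO label map).
COCO_LABELS = {1: "person", 62: "chair", 84: "book"}


def to_pixel_detections(boxes, classes, scores, width, height, min_score=0.5):
    """Keep confident detections of the classes we care about and convert the
    normalized [ymin, xmin, ymax, xmax] boxes to pixel (x0, y0, x1, y1) boxes."""
    results = []
    for (ymin, xmin, ymax, xmax), cls, score in zip(boxes, classes, scores):
        if score < min_score:
            continue
        label = COCO_LABELS.get(int(cls))
        if label is None:
            continue  # class not relevant for the store robot
        results.append({
            "label": label,
            "score": float(score),
            "box": (xmin * width, ymin * height, xmax * width, ymax * height),
        })
    return results
```

In a TensorFlow 2 pipeline, the inputs would typically be the first batch entry of each output tensor converted to NumPy.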

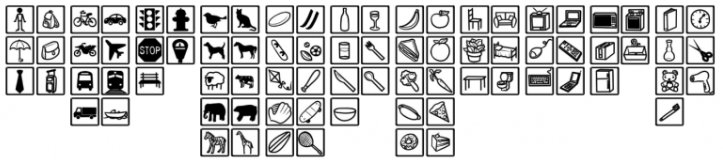

The dataset the provided models are trained on is called COCO, short for Common Objects in Context. In principle we can adapt the models to recognize the objects that matter in our case. In practice, however, adding new object classes is hard, because it is time-consuming and takes a lot of resources. We would have to teach the neural network to recognize new objects by training it. This requires many good-quality images showing the objects from different angles; otherwise the system could fall victim to overfitting, which would undermine its chance of success. Unfortunately, the dataset doesn't include things like shopping carts or cardboard boxes (which is rather unusual), but at least it recognizes humans. For the sake of demonstration, we can use other objects from the dataset as substitutes. For example, a chair could stand in for a shopping cart and a book for a cardboard box. Combining multiple frameworks is also very difficult, as we are not proficient in Python and do not have the time and resources to understand and rewrite the code accordingly. Our tracking software would therefore have only basic functionality, which we hope to connect to depth sensing/distance measurement. Tracking prevents the same object from being counted twice, and it supports path updating and the recognition of moving objects. For the sake of the project, we simply assume that it is possible to add shopping carts, aisles, etc. to the dataset.
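The text does not name a tracking method. The sketch below uses greedy nearest-centroid matching, one of the simplest options. A detection in a new frame keeps the ID of the closest existing track with the same role, provided that track is within `max_dist` pixels. Otherwise the detection gets a new ID, which prevents one cart from being counted twice. The `STAND_INS` mapping follows the demonstration substitutes proposed above (chair for cart, book for box). The role names and the `max_dist` value are hypothetical. A production tracker would more likely use optimal assignment and motion prediction.

```python
import math
from itertools import count

# Demonstration stand-ins: COCO has no shopping cart or cardboard box class.
STAND_INS = {"chair": "shopping cart", "book": "cardboard box", "person": "customer"}


class CentroidTracker:
    """Greedy nearest-centroid tracker that keeps IDs stable across frames."""

    def __init__(self, max_dist=50.0):
        self.max_dist = max_dist   # pixels; hypothetical matching radius
        self.tracks = {}           # track id -> (role, (x, y))
        self._ids = count()

    def update(self, detections):
        """detections: list of (coco_label, (x, y)) centroids for one frame.
        Returns {track_id: (role, (x, y))} for this frame."""
        unmatched = dict(self.tracks)
        current = {}
        for label, (x, y) in detections:
            role = STAND_INS.get(label, label)
            best_id, best_d = None, self.max_dist
            for tid, (trole, (tx, ty)) in unmatched.items():
                d = math.hypot(x - tx, y - ty)
                if trole == role and d <= best_d:
                    best_id, best_d = tid, d
            if best_id is None:
                best_id = next(self._ids)   # nothing close enough: new object
            else:
                del unmatched[best_id]      # each track matches at most once
            current[best_id] = (role, (x, y))
        self.tracks = current  # tracks not seen in this frame are dropped
        return current
```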

Locating position

Once objects can be recognized and distinguished, we also need to determine their positions; otherwise the information gathered so far is of little use. We can use the camera itself as the main reference point. The camera can detect the positions of several objects, including the robot. The robot therefore does not need to keep track of its own position, because the camera does that work for it. The same holds for the customers and their shopping carts.
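If the camera reports every position in one shared floor frame, the robot only has to convert a target's position into its own frame to decide how to react. The sketch below assumes that the camera also provides the robot's heading, and that angles are measured counter-clockwise in radians. It returns the forward and leftward offsets, the straight-line range, and the bearing (positive means to the robot's left).

```python
import math


def relative_to_robot(robot_xy, robot_heading_rad, target_xy):
    """Express a target position (floor coordinates reported by the ceiling
    camera) in the robot's own frame."""
    dx = target_xy[0] - robot_xy[0]
    dy = target_xy[1] - robot_xy[1]
    cos_h, sin_h = math.cos(robot_heading_rad), math.sin(robot_heading_rad)
    forward = cos_h * dx + sin_h * dy     # along the robot's heading
    left = -sin_h * dx + cos_h * dy       # perpendicular, to the robot's left
    return forward, left, math.hypot(dx, dy), math.atan2(left, forward)
```

For example, a robot at the origin facing along +y (heading π/2) sees a customer at (0, 2) straight ahead at 2 m. It sees a cart at (1, 0) directly to its right.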

Calculating size and distance

We can calculate sizes and distances between the camera and objects, and between the objects themselves. There are several ways to do this, but to keep things short we use a single monocular lens. To still calculate depth and distance without stereo vision, we take advantage of prior knowledge of the environment. One thing that never changes in a store is the size of the shelves. Because we know these sizes, we can compare them to the number of pixels a shelf takes up in the image. This gives a scale that we can apply to all other objects, such as a cart, or to the distance between a customer and the robot. The main tool for this is the well-known Pythagorean theorem (a^2 + b^2 = c^2). Figure 4 shows an example of applying the theorem with a camera to determine location, size and distance.
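The arithmetic can be sketched as follows. First, derive a metres-per-pixel scale from a shelf of known length. Then apply the Pythagorean theorem to pixel offsets. The second use of the theorem treats the camera's mount height and a floor distance as the two legs of a right triangle. This is one plausible reading of the camera example in Figure 4. The sketch assumes that the image has already been corrected for fisheye distortion, and that the scale measured on the shelf also holds for the floor plane. The shelf length and camera height in the example are hypothetical numbers.

```python
import math


def metres_per_pixel(shelf_length_m, shelf_length_px):
    """Scale from a shelf of known length and the pixels it spans."""
    return shelf_length_m / shelf_length_px


def floor_distance_m(p1_px, p2_px, scale):
    """Pythagoras on the pixel offsets, converted to metres."""
    dx = p2_px[0] - p1_px[0]
    dy = p2_px[1] - p1_px[1]
    return math.sqrt(dx ** 2 + dy ** 2) * scale


def camera_to_object_m(camera_height_m, floor_distance):
    """Straight-line distance from the ceiling camera to an object on the
    floor: mount height and floor distance form the legs of a right triangle."""
    return math.sqrt(camera_height_m ** 2 + floor_distance ** 2)
```

For example, a 4 m shelf spanning 400 px gives 0.01 m per pixel. A robot at pixel (100, 100) and a customer at (400, 500) are then 500 px, or 5 m, apart. A camera mounted 3 m up is 5 m away from an object lying 4 m across the floor from the point directly below it.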

Fish-eye distortion

A fisheye lens can capture an extremely wide view, which makes it useful for capturing everything that happens in an aisle. However, fisheye images have geometric distortion that becomes more severe towards the boundaries of the image. Depending on their position, foreground objects or people can be severely distorted, making the fisheye image look unrealistic. It is therefore necessary to correct the geometric distortion to restore natural, realistic shapes. We describe a concept for distortion correction in which virtual 3D coordinates of foreground objects are estimated first and then re-projected to obtain distortion-free images. The first step is internal camera calibration, which estimates internal parameters of the camera such as the focal length and the principal point. We then estimate the 3D coordinates of an object from its image coordinates. Next, we apply a perspective projection to re-project these 3D coordinates onto the image plane, correcting the fisheye distortion of the foreground image. Using real indoor and outdoor images, we find the 3D coordinates of the foreground and reconstruct a distortion-free result image with the perspective projection method.
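The re-projection idea can be illustrated for a single pixel. This sketch assumes an equidistant fisheye model (image radius r = f·θ, where θ is the angle from the optical axis). That is a common idealization; the report does not specify a lens model. The principal point `(cx, cy)` and the focal lengths are the kind of values internal calibration would provide. The pixel is back-projected to a virtual 3D point on its viewing ray, and that point is then projected with an ordinary pinhole (perspective) camera. Rays at or beyond 90° from the axis, which a 180-degree lens does capture at its rim, have no finite position in a perspective image. The sketch therefore rejects them.

```python
import math


def fisheye_to_perspective(u, v, cx, cy, f_fish, f_persp):
    """Map one pixel of an equidistant fisheye image (r = f_fish * theta, an
    assumed lens model) to its position in a pinhole image with focal length
    f_persp and the same principal point (cx, cy)."""
    du, dv = u - cx, v - cy
    r = math.hypot(du, dv)
    if r == 0:
        return cx, cy                       # the optical axis maps to itself
    theta = r / f_fish                      # angle between ray and optical axis
    if theta >= math.pi / 2:
        raise ValueError("ray at or beyond 90 degrees has no perspective image")
    phi = math.atan2(dv, du)                # direction around the axis
    # Virtual 3D point on the viewing ray, at unit distance from the camera.
    x = math.sin(theta) * math.cos(phi)
    y = math.sin(theta) * math.sin(phi)
    z = math.cos(theta)
    # Perspective projection of that point back onto the image plane.
    return cx + f_persp * x / z, cy + f_persp * y / z
```

For example, with `f_fish = f_persp = 300`, a pixel 300·π/4 ≈ 235.6 px to the right of the centre lies 45° off-axis. It moves outward to 300 px from the centre in the corrected image, which shows how the fisheye compresses the image edges.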

Conclusion & Possible improvements

Unfortunately, due to a lack of resources and time constraints, there was no room to actually implement the technical system or to develop algorithms that model consumer behavior in combination with shopping carts. There is therefore a lot of room for improvement. Besides that, many case studies could be done with this setup. The main technical methods could also serve other purposes, such as surveillance or logging customer behavior.