Mobile Robot Control 2023 The Iron Giant

Group members:

| Name | student ID |

|---|---|

| Tobias Berg | 1607359 |

| Guido Wolfs | 1439537 |

| Tim de Keijzer | 1422987 |

| Marijn van Noije | 1436546 |

| Tim van Meijel | 1415352 |

| Xander de Rijk | 1364618 |

| Stern Eichperger | 1281232 |

Midterm presentation

The midterm presentation of The Iron Giant: File:Midterm-presentation-The-Iron-Giant.pdf

The feedback and questions received regarding the midterm presentation of The Iron Giant are as follows:

Feedback point 1:

The current state diagram does not include a recovery state to resolve a deadlock situation. If a passage suddenly becomes blocked and remains blocked, the robot could potentially end up in a deadlock. This could occur, for example, if a person obstructs a pathway between obstacles and does not move away.

Solution: To address this issue, an additional recovery loop should be added for handling suddenly blocked pathways. In this loop, the obstructing obstacle is added to the map, and an alternative new path is calculated using the A* algorithm.

Question 1:

- How does the robot transition into the pose recovery state? What parameter or condition is used?

Solution/answer: A condition based on the standard deviation of the particle spread should be implemented. If the deviation is too large, indicating a significant spread of particles and therefore an uncertain estimation, the robot has lost knowledge of its position in the world and needs to recover it.

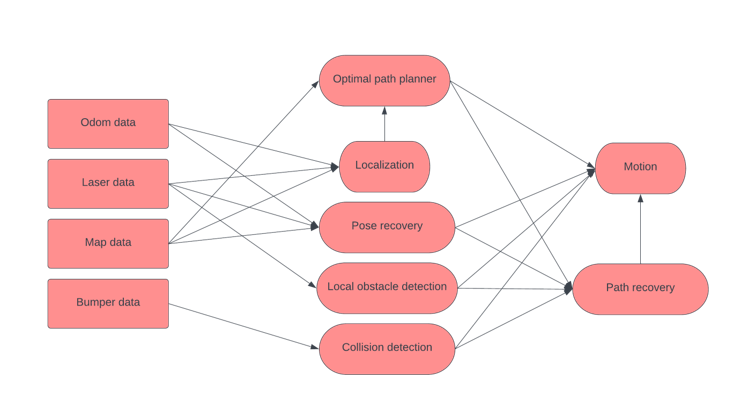

The pose recovery is based on odometry, map and laser data. This is shown in Figure 1.

Question 2:

- Why was the "happy flow" defined in this manner? Won't the robot always encounter disturbances and dynamic objects that cause it to loop through parts of both the happy and unhappy flows? In such cases, the loop may not necessarily be considered an unhappy flow.

Solution/answer: It is true that the definition of the "happy flow" was somewhat strictly defined. Indeed, it is true that certain segments of the "unhappy flow" may occur within the expected states the robot will pass through during the challenge. This does not pose a problem and does not represent an unhappy flow.

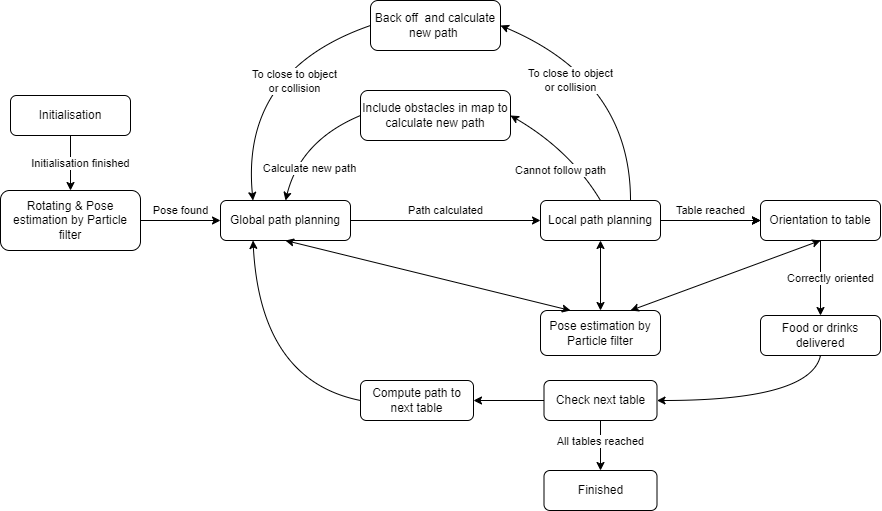

We updated the state diagram according to the feedback and questions as shown in Figure 2.

Final Challenge

Introduction

In this project, the goal is to successfully drive the Hero robot to a table in a restaurant, using a predefined map, with unknown static and dynamic obstacles. To apply the developed code to the Hero robot, the C++ programming language is used. The goal is to enable the Hero robot to navigate and locate itself within the environment. Localization and navigation are two aspects of autonomous robots that will be focused on.

For a robot to drive autonomously, it is important the robot knows its current location and orientation. This requires localization of the robot. This localization method must be based on a predefined map, but should also compensate for partially imperfect situations (living in the real world). The key idea of localization is to have a map of the environment and sensor measurements, which is used in a technique called "particle filtering" to get a better localization of the robot pose. This particle filtering method uses a probabilistic approximation of particles, each representing a possible hypothesis for the robot's position.

Next, the robot must be able to navigate itself to the desired position by following a certain path. To plan the optimal path of the Hero robot, the A* path planning algorithm is used for the robot to effectively reach its destination. This is the so-called global navigation the robot uses. In addition, the local navigation allows the robot to effectively navigate through the map and avoid obstacles in real-time. To account for the unknown and dynamic objects in the final challenge, the so-called artificial potential field method is used.

To complete the final challenge, localization and navigation techniques must be combined. By developing the software for the final challenge, the robot will identify its location on the map, plan an optimal path and complete the task by moving precisely to the designated table. This wiki page thoroughly describes how the Group The Iron Giant used and expanded these algorithms and explains how they are implemented. In addition, it discusses how these algorithms interact and their performance and robustness are determined. An evaluation is given at the end, with a conclusion, discussion and future steps.

Problem statement

The goal of this course is to enable the Hero robot to deliver orders to multiple tables. The robot needs to be compatible with an arbitrary order in which the tables must be reached as the final order is only determined on the day of the restaurant challenge itself. To accomplish this task, a number of challenges come into play. First, the robot must be able to locate itself after being placed in an unknown orientation and position within a certain starting area. To complete the tasks the robot needs to navigate throughout the "restaurant", to do so properly, at any point in time it is required for the robot to know its position and rotation in order for it to come up with logical follow-up steps to reach its goal. This localization task must be robust because if it fails, the robot can no longer work correctly. The localization method should not use too much computing power, because when it drives, it must continuously update itself. This navigation and localization each work according to their own respective coordinate frames, to combine the data of the two and successfully act on them, coordinate transformations between the two need to be found.

Path planning is another important challenge. The robot must be able to determine its path to reach the tables during the challenge. A global path planning algorithm must determine a path based on a predefined map. However, the robot must be aware of both possible unexpected static and dynamic objects. Therefore, the robot must also have a local planner that detects and responds to these unexpected objects. A major challenge will be to find a way for the global planner and the local planner to work together to find the most optimal path to a table, taking into account the map and the unexpected objects present.

Localization and navigation are already two major challenges during this project, but even more challenges are present even after the successful implementation of both methods. First of which, the fact that the robot must not touch or hit a wall. Some safety algorithms must be implemented to prevent this. In addition, the code must be prevented from forming a loop in which the robot gets stuck. This means a physical loop, for example that a robot can get into a certain position from which it can never get out, but also a digital loop, a loop somewhere in the code that the robot gets stuck in and doing so can not continue its operation.

The restaurant also has doors that can be opened by asking for them. When doors are implemented, new difficulties may arise. The path-planning algorithm must know that it can use a door to plan a path. In addition, the robot must use a voice command to open the door. This causes the robot's localization to change as well, as it passes through a door that localization might recognize as a wall. A way must be found to solve this or the robot will lose its position. In addition, an algorithm must be implemented to address what needs to happen if the door is not opened after a command.

Strategy description

To design a robust and efficient robot capable of delivering orders to tables based on a map with unknown static or dynamic obstacles, an approach is determined that provides localization, effective global path planning and obstacle avoidance in local path planning. This approach has been based one the state diagram, data flow diagram and desires to spec diagram created for the midterm and the feedback received on them.

A particle filter will be used for localization. This algorithm will be used to estimate the robot's position within the restaurant map. By resampling the particles, the particle algorithm converges to the actual position of the robot. To know the robot's position within the map, a coordinate frame transformation must be applied to express the robot's position in the coordinates of the map frame. The computation time of the particle filter must be carefully monitored to not lag behind the actual position by finding a good ratio between the number of particles and sub-sampling the map.

Once the robot has located itself, it computes the optimal global path using the A* algorithm. This algorithm finds the optimal path from the initial position to the target table. This algorithm uses a heuristic function to estimate the cost to reach a goal. To navigate around unknown obstacles on the map, the robot uses the artificial potential field method. The concept assigns attractive potentials to targets determined by A* and assigns repulsive potentials around the obstacles. Both repulsive and attractive forces result in a resulting force and direct the robot to a collision-free path.

To deal with the unknown obstacles, the robot's laser data is used. These sensors continuously scan the environment and detect unknown obstacles around the robot. To ensure the robustness of the robot, some tuning parameters are included to counteract measurement noise, noise in the localization and possible inaccuracies in the map display.

Algorithms used

In this chapter a breakdown of the three base algorithms on which the entire code has been build is provided. First the particle filter is explained which has been used for localization of the robot. Then the used A* global path planning algorithm is explained. Finally the Artificial Potential Field method which has been used for local path planning will be elaborated upon.

Particle Filter

The presence of wheel slip and noisy data causes imperfections in the odometry data, therefore it is not possible to fully rely on the odometry data to get an accurate enough estimation of the robot’s pose. In order to get a better estimation of the pose of the robot while it is going to the tables in the restaurant, it is important to implement a localization algorithm that estimates the pose of the robot on the map. To this end the Particle Filter technique is applied. A particle filter is a commonly used probabilistic filtering technique in robotics that estimates a robot’s pose based on sensor measurements while navigating in an (to a certain extent known) environment. The main idea of the particle filter is to estimate the robot's position based on the received sensor data. By matching this local data of objects with the predefined map, it is possible to estimate the robot's position.

The particle filter made in the previous group assignments forms the bases of the algorithm that was eventually implemented. In this algorithm, each particle describes a hypothesis of the current pose of the robot. At first, the particles are distributed with a Gaussian distribution around the robot, resulting in a broad range of the potential pose of the robot. Based on the lidar and odometry data of the robot, the particles are resampled with weights based on the likelihood of their pose. This is done by a filter that approximates the probability distribution of the robot’s pose. A “Recursive State Estimation Beam-based model” is implemented that computes the likelihood of each measurement given a prediction. This model accounts for measurement errors including, measurement noise, unexpected objects, errors due to failures to detect objects and random unrecognized noise. Finally, the particles will be resampled so that high likelihoods are represented heavily in the new particle set. A multinomial resampling method is used. This method selects particles based on their weights, emphasizing particles with a higher likelihood of representing the true pose. This results in a final estimation of the robot’s pose.

For the restaurant challenge, the particle filter is updated during each iteration in the main “while loop”. Updating the filter during each cycle ensures smaller differences in robot poses, making it easier for the particle filter to update and predict a more accurate pose when the robot moves. In Figure 3, it can be seen how the particle filter constantly updates during the movement of the robot in simulation. To increase the computation speed of the particle filter, the number of particles was slightly reduced together with a higher subsample value of the laser data. These values were adjusted and tested directly on the simulation and on the real robot to ensure that there is a good balance between computational speed and performance.

At the beginning of the restaurant challenge, the initial pose of the robot is unknown. Therefore, it was important to ensure that the particle filter finds the robot's position correctly. Since it was known that the robot starts in a certain area, the particle filter is adjusted such that while initializing (at the start), it only estimates its pose within this starting area. In addition, the robot will move left and right so that the particle filter can recognize objects and their orientation during robot motion at the beginning of the challenge, as shown in Figure 4. This way, it is ensured that no matter what initial orientation the robot has at the beginning of the restaurant challenge, the localization algorithm is always able to find the correct pose of the robot.

It is also important that the particle filter is robust to objects that do not match the map. Therefore, the "Measurement Model" parameters are also tuned to the real robot, such as the “hit probability”. Despite these robustness changes, it appeared during testing, that the particle filter was completely lost in case of significant changes in the map. An example of this is the opening of a door. In this case, the laser data does not match the map data, so the robot pose could no longer be tracked properly. A solution to this would be to update the map of the particle filter. This problem is further elaborated in “discussions” and “future steps”.

A* algorithm

For the global path planner, the A* algorithm (as shown in Figure 5) is used with some cost adjustments. Initially, the particle filter determines the input in the map frame in meters. This is converted to pixels for the input of the global planner. Goals are predefined in pixel positions and are also given as input for the planner. For robustness, the node list is first calculated, and then the closest node for those pairs of pixels is used as entry and exit nodes.

For calculating the node list, the image of the map is read with grey scaling. Since pixels can be either black (0), grey (128) or white (255), these values serve as thresholds for squeezing values to binary values. Another input of the global planner is a list of which doors the robot is allowed to use. Each door has a cluster of nodes, which are then used for updating the map after thresholding depending if they serve as a wall or not. Similarly, with the use of the particle filter and the laser data (within a range of 1 meter), the objects are converted from meters to pixels. A min and max function is applied to ensure all laser point locations are within the map and are then added to the obstacle map, separate from the map with walls and “closed” doors. For added robustness, a margin of 15 pixels (0.1875 meter) is applied for which an open node needs to be away from walls and obstacles. Each node is defined on a grid from the map with a defined step size of pixels, in this case, 10 pixels (0.125 meter) per step size.

With the remaining node list, a list of connections for each node is made in the directions, up, down, left, right and diagonal.

Before running A* the position and distances to each goal are computed in the map frame. An additional cost function is defined for every node near a wall or obstacle. If it is within a range of 20 pixels (0.25 meter) the node has a high cost of 100, whereas within 30 pixels (0.375 meter) it has a low cost of 2.

For A* with every step, the node with the lowest cost-to-come is used. For this node, it will add all connected nodes and applies the lowest cost-to-go. The cost-to-go is determined by the step size from one node to the next (meaning going to a diagonal node has a slightly higher cost) with the addition of the added cost of being near a wall or obstacle for the node-to-go, shown in Figure 6. After A* has reached the final node, it will create a path in the map frame in meters. Since the distance between nodes is small, the new path is every third step of the full path. This is then sent to the local path planner.

Every time A* calculates it will use the current robot position, the goal location, what doors it can go through and a list of objects. In this case the list of objects is the current laser data that is within 1 meter distance. The laser data positions are transformed to the map frame and converted to pixels on the map. This is shown in Figure 6, where unknown objects are visible in simulation. When A* can't find a path, the next time A* will run it will not use laser data, as shown in the last 3 paths of Figure 7.

For visualization, after each time it has found a path towards a goal it will make an image plotting both the walls, doors, path and obstacles in respective colors black, grey, red and blue. Each time the global path planner is run a new image is made, not overwriting the previous one. Due to some technical issues with rewriting images, the first three paths in Figure 7 are computed in simulation, whereas the latter three are from the final challenge. In the final challenge A* is calculated two more times between tables 0 and 3, but these didn't save either. In the latter three, no objects are taken into account, because it could not initially plan a path with obstacles. Furthermore, paths through doors are disabled by default for this run.

Similar to the A* algorithm, the Dijkstra algorithm without costs is used to determine which nodes in the closed node list are that of a single door. By putting the algorithm in a for loop of the nodes that are a door, it is possible to cluster all node positions per existing door. The reason for doing this is because it makes it robust to path through one door but not the other. The node list is determined similarly to the previous one by applying a threshold on the image and finding the nodes of the door. It is sufficient to only look in the directions up, down, left and right for the node connections. This information is also used as input for the global path planner.

Artificial potential field algorithm and collision avoidance

Local navigation utilizes an artificial potential field algorithm which was used before during the preliminary assignments. The artificial potential field algorithm is based on the principle of artificial repulsion from obstacles and artificial attraction towards a goal. These repulsions and attractions are then combined to generate an artificial resulting force which indicates the motion direction of the robot. This method aims to draw the robot towards a certain goal while avoiding obstacles on its path towards that goal.

For the implementation of the artificial goals, the path goals calculated by the A* algorithm are utilized to set the direction of the artificial attraction force. The magnitude of the force is kept constant over the range towards the goal. As the robot progresses, it visits each path goal one after another, updating the artificial attractive force to the next goal upon reaching its local path goal. The artificial repulsive forces of the obstacles are based on the detection of these obstacles by the laser sensor. Laser beams detected within a certain boundary are converted to artificial force vectors pointing towards the robot, resulting in repulsive forces. The magnitude of the forces is based on the detected length of the laser beams. The forces are scaled by the distance of the detected obstacle to the robot with a non-linear increasing force for a decreasing distance. Additionally, the repulsive forces are split up into two laser beam angle ranges, a range representing the front part of the robot and a range representing the sides of the robot. The repulsive force vectors in front of the robot are assigned a lower weight compared to those on the sides.

The algorithm is divided into two similar stages with different parameters. The normal local planner and the final goal planner. The final goal planner triggers when the robot is very close to the final goal in front of the table. In this stage, the repulsive forces and maximum speed are reduced so that the robot can get closer to the table without being pushed away because the table is detected as an obstacle.

The implementation of the artificial potential field algorithm has several advantages. First of all, the laser beam detection allows for the navigation around dynamic objects and in dynamic environments where obstacles may move or appear unexpected. Furthermore, the algorithm is computationally efficient allowing real-time navigation. Splitting up the repulsive forces in a front and side region allows the robot to navigate around corners and obstacles with a larger margin. A disadvantage of this method is the swaying of the robot when trying to move through small corridors or between objects that create a small passage. Another drawback of the artificial potential field algorithm is the problem of getting stuck in local minima like corners. This resulted in the need of additional methods to navigate out of these local minima and get back to a viable path.

Additions to the algorithm are used to deal with these shortcomings of the potential field algorithm and to increase safety by avoiding collisions in all cases. Two stages of critical collision prevention are added to the algorithm. The first stage is the emergency laser range detection. This part of the algorithm checks for any laser length being inside a certain range to close to the robot. Because the laser sensor is positioned on the front side of the robot, the laser beam angle range is split up into two laser beam angle ranges, a range representing the front part of the robot and a range representing the sides of the robot. These ranges have a slightly different threshold which results in a detection range around the robot which is more or less constant around the robot. When a laser beam range within the threshold is detected, the robot stops, gives a sound signal telling everyone to watch out because it is going to back up and subsequently backs up slightly, getting away from the obstacle that was too close to the robot. The second collision detection stage is triggered by the bumpers. The result is the same as the other stage: the robot is stopping, gives a warning and slightly backs up or slightly moves forward, depending on the front or back bumper trigger. An example of this is shown in Figure 8.

Furthermore, additions are implemented to avoid dead- and life-locks. The first addition checks the angle of the robot towards the next goal and acts on the magnitude of this angle. If the angle is sufficiently small meaning the robot is facing towards the goal, the robot moves forward with a relatively high speed and possibly a small angler speed to adjust for small angles. If the angle is larger, the forward velocity is reduced and the angler velocity is increased to get the robot to make sharper turns. When the angle is above a certain threshold and is too large, the robot turns around its axis with no forward velocity until it faces the goal again. These additions make the robot stop and turn towards its goal before the robot gets in a local minimum most of the time. Additionally the turning to goal can also be triggered by a timer to prevent life-locks even more. An example of the turning behavior is shown in Figure 9. When the occasion arises that the robot is moving away from its goal too much but is still facing the goal sufficiently another addition is triggered. The robot then stops, recalculated a path and turns towards its new following goal to find its path towards the table goal again. The last addition created to prevent life locks is a trigger when the next goal is not reached within a certain time frame. This addition triggers the same sequence of stopping, recalculating and turning towards the next goal.

Door handling algorithm

The restaurant environment contains one (it could have potentially been more) door with which the robot can interact. While Hero is not capable of physically opening the doors by itself, it may request assistance from a human actor to do so. The ability to open doors increases the number of possible routes Hero can take to reach a goal, thereby either increasing the likelihood of a valid path being found or enabling the robot to find a shorter path. For this reason, it is beneficial to integrate a door-handling algorithm into the control scheme.

The door handling algorithm starts by evaluating the next ‘N’ A* nodes to determine if any of the nodes lie within the extended door regions. Here ‘N’ refers to the number of A* nodes up and including the next goal, as goal points are obtained by subsampling the A* node list. The extended door regions consist of the predefined door regions of the map expanded by an adjustable margin region to ensure that the robot will maintain an adequate distance from the door. If any of the next ‘N’ nodes lie within the extended door region, the last node before this region is set as the goal point. Upon reaching the goal, Hero stops and turns towards the center point of the corresponding extended door region. By using laser measurements from the laser parallel to the driving direction, it is determined through a threshold whether the door is open. If the door is closed, the robot asks for a human actor to open it. After having measured that the door has been opened, using again the threshold on the laser measurement of the laser parallel to the driving direction, Hero thanks the human operator and resumes its path.

The implementation of the door handling algorithm has a major challenge. After opening a door, the map used for constructing the particle filter becomes invalid. This behaviour is shown in Figure 10. In both simulation and real life, the particle filter was no longer able to relate the sensor measurements in the changed environment to the expected measurements based on the particle state, and subsequently, the pose of the robot was quickly lost. This issue can be resolved by reconstructing the particle filter on the updated map using the last estimated position of the robot before the door was opened in the prior estimate for the reconstructed particle filter.

Due to limited testing time, the implementation of the door handling algorithm with particle filter updates could not be experimentally verified on the hero robot and was therefore pushed to a separate branch for the final challenge.

Software Architecture

First, individual components were designed for the final challenge. However, it is important to combine them in a structured way, a schematic overview is shown in Figure 11. It was decided to create one main file in which all the individual components are implemented. The different components are called by using functions. This way, the main file remains clean. In the main file, a while loop is used to repeatedly run the desired functions. To determine which parts of the code have to be run, a ‘switch’ is implemented, using ‘switch-cases’ for different states of the robot. By using booleans and ‘if’-statements, the next state can be chosen. In this way, a state machine is created. Obviously, the states involve a lot of safety-related conditions. First, the initialisation starts by running the particle filter (state A) while the robot turns left and right. By doing this, the environment around the robot can be better perceived and the particle filter gives a better approximation of the initial pose.

Secondly, when initialization is complete, the code proceeds to state B. In state B, the code calls the A* algorithm to calculate the optimal path. When state B is reached after state A, the A* algorithm will determine the optimal path starting from the robot's initial position determined by the particle filter. State B can also be reached from other states, this means, for example, that the robot has detected an object and cannot continue its path through its previously defined path. In that case, the A* algorithm is recalculated from its current position, taking into account the object it has detected via laser data. If the robot needs to reach several tables in succession, it first calculates the optimal path to the first table. Once the robot has reached that table, it will return to state B and run the A* algorithm to the next table.

Next, when the global path has been determined in state B, the local path planner will take over to reach the desired table in state C. The local path planner makes use of the intermediate steps which have been determined by the A* algorithm while taking into account objects. These objects have a repulsive force on the robot which reduces the chance of collisions. The intermediate goals have an attractive force on the robot. This means the robot has a preferred direction it wants to drive in, which will lead to the final goal which is the table.

During this state, the particle filter is run iteratively with the local planner. This means the pose of the robot remains accurate.

When an object comes too close to the robot, it will trigger a safety mechanism which will make sure the robot does not collide. The robot will then go back to state B and plan a path around the object by taking into account the found objects in the laser data, as mentioned before.

When the robot is close to its final goal, thus close to the table, the speed of the robot will reduce. This makes sure the approach to the table is smooth and safe. The script then switches to state E, which ensures that the robot orientates itself to the direction of the table. The food and drinks can now be picked up from the robot.

When all tables are reached, the main code switches to state D. In this state, the while loop breaks and the code stops running.

In the end this has created the state diagram as shown in Figure 11 for the final challenge. This diagram is quite similar to the originally created diagram, with as the biggest exception the emittance of pose recovery. This state was also used in the data flow diagram originally but has been emitted there for the same reason now as well. In the tightly packed restaurant challenge environment the conditions for this state were never triggered and thus implementation of it was emitted. Some states have been separated into separate states as this was helpful with building up a coherent while loop in the main code.

Performance

During the final challenge, the performance of the software that implements the previously mentioned control schemes was evaluated on the Hero robot. This took place in a restaurant setup where orders were delivered to specifically labelled tables in a predefined sequence. The restaurant setup is a dynamic environment with unknown static and dynamic obstacles such as boxes and human actors. Due to unresolved issues with the particle filter when the door handling algorithm is implemented, two separate software versions, one with the door handling algorithm and another without it, were tested.

The first trial involved testing the software version that did not include the door-handling algorithm. Upon initialization in the starting area, the particle filter was rapidly able to accurately estimate the robot's pose. A feasible path was promptly calculated by the A* algorithm following initialization. Hero navigated to the first table without any issues, although there were occasional brief standstills at each A* goal point, which resulted in slightly less fluid movement. The approach to the table was performed smoothly, and the Hero Robot correctly oriented itself towards the table. The event that indicates that the table is reached was triggered and Hero announced its arrival vocally. It then continued its route to the next table, which was table zero. Hero was able to safely navigate with a reasonable sideways distance from a static obstacle placed at a corner before table zero. As Hero approached the goal point of table Zero, it grazed the table leg which triggered the front bumper. However, Hero voiced that its rear bumper was triggered instead and would proceed with retreating in the forward direction. It appears that only the bumper speeches have been reversed, as the front bumper event was indeed triggered and Hero moved backwards. Shortly after, Hero reached the goal point and oriented itself towards table Zero and then continued its path to table three. A human actor was present in the aisle between tables Zero and Three. Hero detected the human actor and carefully navigated towards its goal in an assertive manner while ensuring it didn't collide with the human actor. After passing the human actor, table three was successfully reached. However, table three was the final table that Hero was able to reach during this trial, as there was a static object blocking the path towards table Four. The route to table four would require navigating through the door, which this software version did not include, thus no viable path to table four was found. Hero entered a soft lock state where it alternated between attempting to navigate around the obstacle and calculating a new path using the A* algorithm. Despite the soft lock, Hero managed to maintain an adequate distance from walls and obstacles while displacing sporadically.

A recording of the first trial can be viewed here: https://www.youtube.com/watch?v=8132jG1iocc

In the second trial, the software version that included the door-handling algorithm was tested. From previous simulations, it was known that the accuracy of the pose estimation by the particle filter would likely degrade significantly after the door was opened due to a significant discrepancy between the environment and the particle filter map. Hence, it was decided that Hero would follow an alternative path to table four only, in order to demonstrate the capabilities of the software with the door handling algorithm. Similar to the first trial, the particle filter rapidly estimated the robot's pose accurately upon initialization in the starting area and calculated a path towards table Four. The door handling algorithm was able to detect that the calculated path crosses a door and set the first node outside the extended door region as the door goal point. This door goal point was slightly within the minimum object distance measured by the laser, triggering a retreat event. After the retreat, the A* algorithm recalculated the path, shifting the door goal point to a location outside the minimum object distance, which more or less matched the current position. Hero oriented itself towards the midpoint of the door by turning for the offset angle between the forward-facing vector of the robot frame and the vector from the robot towards the midpoint of the door measured in the clockwise direction only, which causes the Hero robot to rotate in an unnecessarily long direction whenever Hero is oriented such that this offset angle is larger than pi. After Hero was properly oriented, it detected that the door was indeed closed, after which it voiced a request for a human operator to open it. Upon detecting that the door had been opened, Hero voiced its gratitude and resumed its path. As anticipated from the simulation, the particle filter promptly lost Hero's pose. This resulted in Hero entering a soft lock state; it kept driving near table four, but the table was not reached.

A recording of the second trial can be viewed here: https://www.youtube.com/watch?v=RXwWlNGVlNo

Robustness

The robustness of the software is evaluated by intensive testing. During testing, the environment was changed continuously by adding/removing unknown objects. This way, the local planner was optimized such that it could safely avoid all obstacles. Furthermore, the particle filter was tuned such that it was well-balanced between being fast and being reliable in an environment with unknown objects.

To improve robustness, several conditions are built in for specific cases. These conditions will initialize the corresponding sequences. For example, when the robot initializes, it will turn for a few seconds to look around and locate itself. This means the robot can be placed anywhere in the predefined area, in all possible orientations. Therefore, the performance is not dependent on the starting position.

The A* algorithm also accounts for unknown objects by using its laser data to create obstacles in the map. This way, the algorithm creates a path around the obstacles. This is shown in Figure 6. The algorithm will try to find a path around obstacles, however, when no path is found, the robot will ask to remove objects and wait. It will then calculate a new path based on the map without the objects. Recalculating the global path, in general, will be done when the robot moves away from the path when it has not made any progress for a certain amount of time, when it cannot reach a goal or when it has reached a goal. When encountering these situations, it is important to recalculate the global path to make sure the path is up to date, and a path actually exists. For example, a path can only be created if it has a width of at least 30 cm.

By using counters and timers it is made sure the robot does not perform the same task in a loop. This reduces the chance of a live-lock and increases robustness.

During testing, it was found that the software was performing well in the conditions in which it was tested. This is also shown during the final challenge, in which the robot successfully performed the tasks. It can be concluded the software of the robot is robust against changes in the environment.

When making changes during the experiments, safety was always kept in mind. This ensures for a robot that is at least safe from the start. When the safety features were working correctly, the other parameters were tested. These safety features for example involve an emergency bumper stop procedure and a predefined laser range in which the robot knows it is too close to an object and will stop. This makes the software more robust against for example dynamic objects.

Conclusion

Considering the performance in the final challenge that ended up winning the competition the easy conclusion is that the robot has performed well. The full challenge has not been completed, due to the emittance of the door opening algorithms in the final challenge code, yet this was a deliberate design choice, choosing for proper operation. The performance that the robot showed at the challenge was now fully in accordance with the expected behaviour. For this reason, despite not successfully reaching the final table, the robot's performance was deemed satisfactory considering the time and testing constraints.

It can be concluded based on the many unexpected and strategically placed disturbances in combination with the uncertain initial pose that the proposed robot operation algorithm is robust to the extent that it can operate in a restaurant environment in a safe manner for itself, as well as its surroundings.

Discussion and Future steps

Overall, it can be concluded that the performance of the robot in real life matched well with expectations based on simulation and previous test results. However, there is still room for improvement.

The first future step to be taken is to debug the minor bugs that are still present in the code. As mentioned before, the bumper collision detection can get triggered suddenly and incorrectly. To solve this problem it is recommended to first revise the code that causes the used condition bool to be true while the bumpers are not actually triggered. A potential solution would be an extra check with laser data that verifies if the bumper is correctly triggered. Furthermore, the threshold of the emergency laser range detection should be increased to detect dynamic objects sooner and be more responsive to them. It is important to be cautious with this because responding to them too soon results in the robot being unable to enter small gaps and corridors.

Besides the current bugs, one of the first obvious future steps would be to implement the option to pass through a door. The majority of the code for passing doors is implemented but it still has some issues, mainly with the particle filter. The current problem is that the map needs to be updated after opening the door. When the robot goes through the door, there is suddenly an open space where the door used to be while the particle filter still thinks there is a wall because of the predefined map. Therefore, the particle filter occasionally does not find the correct robot position when the robot passes through the doorway. The first step in tackling this problem will be to ensure that the particle filter can handle opening the door by updating its map after the door is opened. By updating the map of the particle filter, the robot would be able to finish the complete challenge with the current code. An extra condition/state can then also be introduced which verifies whether the door over time has been closed again which would then require the robot to switch back to the map with the door closed.

Another future step could be to make the robot move more smoothly. Due to the turning towards the goal addition in the potential field algorithm, the robot sometimes moves a bit shakily and can be very slow. Optimizing the parameters for the angle thresholds and forward/angular velocity will probably go a long way. In addition, more intermediate speeds could be implemented to ensure a more gradual speed reduction and increase.

Lastly, the robot is fairly intrusive toward people because it likes to move toward its goal. A distinction could be made between moving objects (humans) and static objects. With moving objects, the robot could then wait more, be more passive and possibly ask the person to move aside for him. This would make autonomous operation in a real-life restaurant environment safer and more pleasant for the guests.