Implementation MSD16

Tasks

The tasks which are implemented are:

- Detect Ball Out Of Bound (BOOP)

- Detect Collision

The skills needed to achieve these tasks are explained in the section Skills.

Skills

Detection skills

For the B.O.O.P. detection, it is necessary to know where the ball is and where the outer field lines are. To detect a collision, the system needs to be able to detect players. Since we decided to use agents with cameras in the system, detecting balls, lines, and players requires some image processing. The TURTLE already has good image-processing software on its internal computer. This software is the product of years of development and has already been tested thoroughly, which is why we use it as is, without alteration. Preferably, we would also use this software to process the images from the drone. However, understanding years' worth of code in order to make it usable for the drone camera (AI-ball) would take much more time than developing our own code. For this project, we therefore decided to use MATLAB's Image Processing Toolbox to process the drone images. The images coming from the AI-ball are in the RGB color space. For detecting the field, the lines, objects and (yellow or orange) balls, it is more convenient to first convert the image to the YCbCr color space, which separates brightness (Y) from color (Cb, Cr) so that color thresholds are less sensitive to lighting changes.
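The color-space conversion can be illustrated with a small per-pixel sketch. It is written in Python rather than MATLAB and uses the full-range ITU-R BT.601 (JPEG) coefficients; MATLAB's rgb2ycbcr scales its output to a narrower range, so the numbers differ from what the project code would produce. The function looks_like_ball and its thresholds are hypothetical and would have to be tuned on real AI-ball images.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (ITU-R BT.601 / JPEG)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def looks_like_ball(r, g, b, cb_max=110.0, cr_min=140.0):
    """Hypothetical chroma test for a yellow or orange ball.

    The thresholds are illustrative assumptions, not values from the project.
    Only Cb and Cr are used, so the test ignores brightness.
    """
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return cb < cb_max and cr > cr_min
```

Because the test uses only the two chroma channels, a bright and a dim patch of the same color fall on the same side of the thresholds, which is the main reason for leaving RGB before thresholding.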

Detect lines

Detect balls

Detect objects

Refereeing

B.O.O.P.

Collision detection

Locating skills

Locate agents

Locate objects

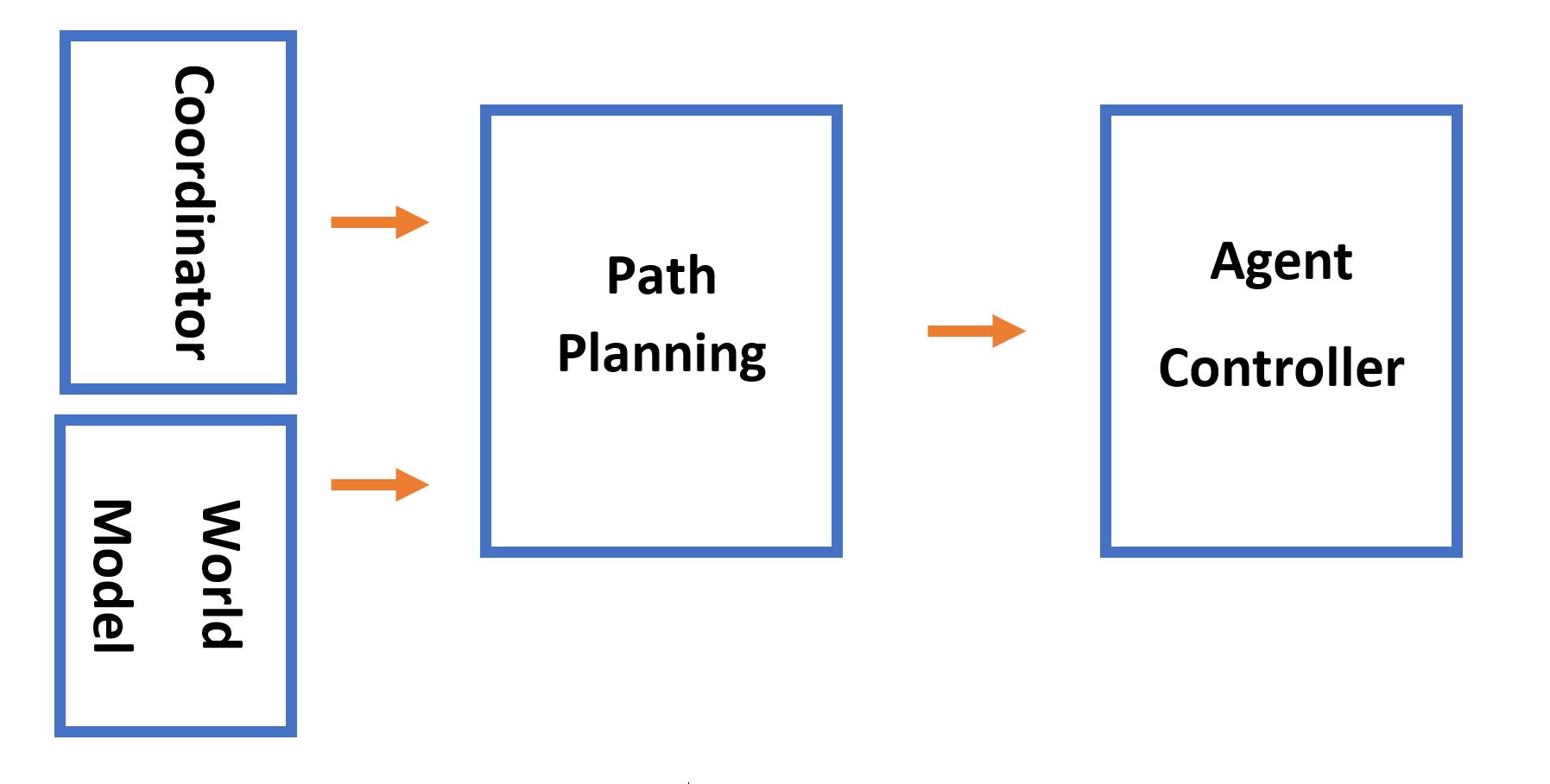

Path planning

The path planning block is mainly responsible for generating an optimal path for the agents and sending it as a desired position to their controllers. In the system architecture, the coordinator block decides which skill each agent needs to perform. For instance, this block sends "detect ball" to agent A (drone) and "locate player" to agent B as tasks. The Path-Planning block then requests from the World Model the latest information about the position and velocity of the target object as well as the position and velocity of the agents. Using this information, the Path-Planning block generates a reference point for the agent controller. As shown in Fig.1, it is assumed that the world model is able to provide the position and velocity of objects like the ball whether or not they have been updated by an agent camera. In the latter case, the particle filter gives an estimate of the ball position and velocity based on the dynamics of the ball. Therefore, it is assumed that estimated information about an object assigned by the coordinator is always available.

Two issues are addressed in the Path-Planning block. The first is avoiding collisions between drones when multiple drones are used. The second is generating an optimal path as the reference input for the drone controller.

Reference generator

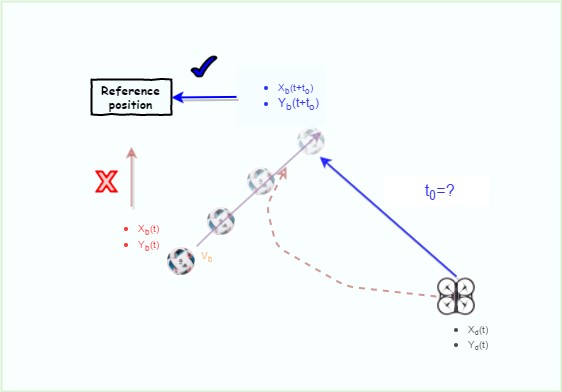

As discussed earlier, the coordinator assigns an agent the task of locating an object in the field. Subsequently, the world model provides the path planner with the latest position and velocity of that object. The Path-Planning block could simply take the position of the ball and send it to the agent controller as a reference input. This is a good choice when the agent and the object are relatively close to each other. However, when they are far apart, the velocity vector of the object can be taken into account to obtain a more efficient path.

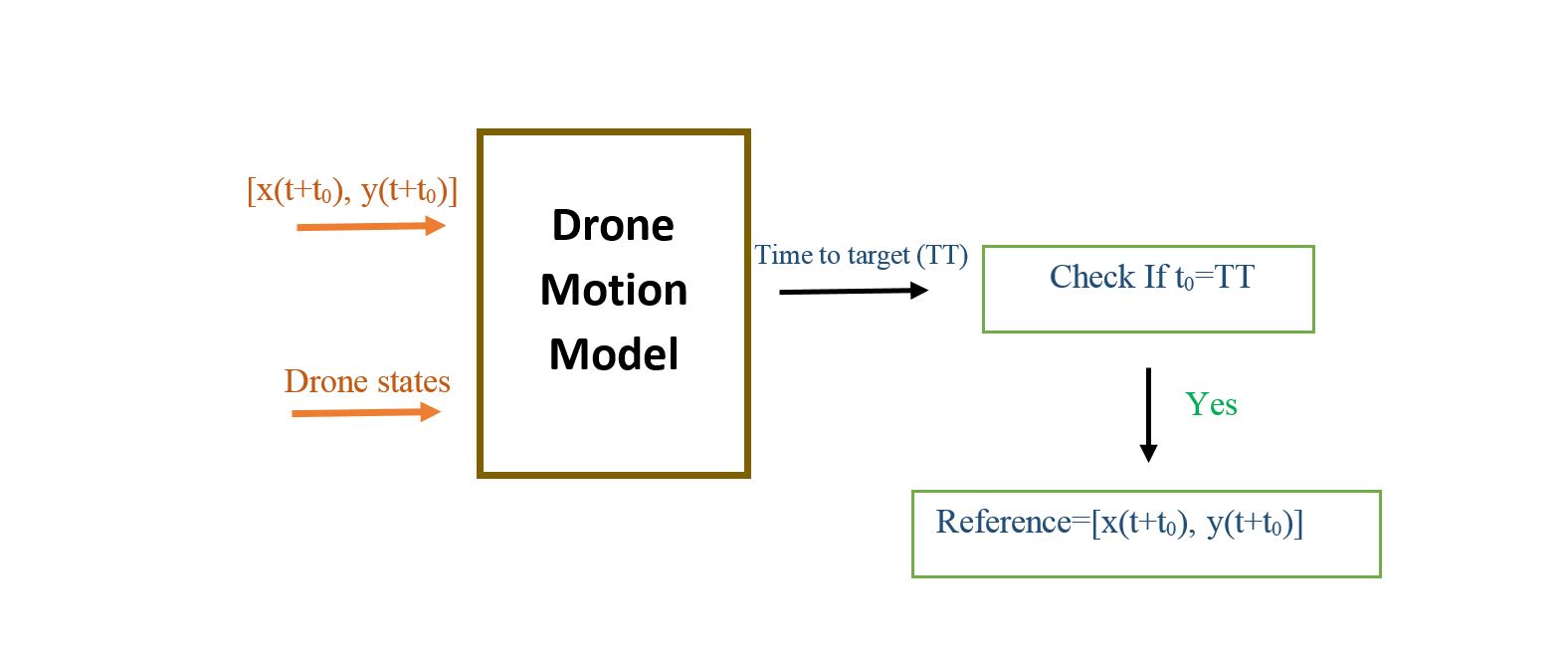

As shown in Fig.2, when the drone and the ball are far apart, the drone should track a position ahead of the object so that it meets the object where their velocity vectors intersect. Using the current ball position as the reference input would result in a curved trajectory (red line). However, if the estimated position of the ball some time ahead is sent as the reference, the trajectory is less curved and shorter (blue line). This approach results in better tracking performance, but it requires more computational effort. The problem that arises is choosing the optimal time ahead t0 that should be used for the desired reference. To solve it, we require a model of the drone motion, including the controller, to calculate the time it takes to reach a certain point given the initial condition of the drone. Then, in the search algorithm, the time to target (TT) of the drone is calculated for each time step ahead of the ball (see Fig.3). The target position is simply the predicted ball position at that time ahead. The reference position is then the position that satisfies the equation t0=TT. Hence, the reference position is [x(t+t0), y(t+t0)] instead of [x(t), y(t)]. It should be noted that this approach is not very effective when the drone and the object are close to each other. Furthermore, the same strategy can be applied to the ground agent, which moves in only one direction. For the ground robot, the reference value should be determined only in the moving direction of the turtle. Hence, only the X component (the turtle's moving direction) of the position and velocity of the object of interest must be taken into account.
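The search for t0 can be sketched as follows. This is only an illustration under strong assumptions: the ball is predicted with a constant-velocity model, and the drone model with controller is replaced by a stand-in in which the drone flies straight at a constant speed v_max. The function names, the step size dt and the search horizon are all hypothetical. The loop returns the first look-ahead time at which the drone can reach the predicted ball position in time, which approximates the condition t0=TT on a discrete grid.

```python
import math


def predict(pos, vel, t):
    """Constant-velocity prediction of the ball position t seconds ahead."""
    return (pos[0] + vel[0] * t, pos[1] + vel[1] * t)


def time_to_target(drone_pos, target, v_max):
    """Stand-in drone model (assumption): straight flight at constant speed v_max."""
    distance = math.hypot(target[0] - drone_pos[0], target[1] - drone_pos[1])
    return distance / v_max


def reference_point(drone_pos, ball_pos, ball_vel, v_max, dt=0.1, horizon=5.0):
    """Return (t0, reference) for the first look-ahead time t0 with TT <= t0."""
    steps = int(round(horizon / dt))
    for k in range(steps + 1):
        t0 = k * dt
        target = predict(ball_pos, ball_vel, t0)
        if time_to_target(drone_pos, target, v_max) <= t0:
            return t0, target
    # No intercept inside the horizon: fall back to the current ball position.
    return None, ball_pos
```

For the ground robot, the same loop could be run on the X components only, since the turtle moves in one direction.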

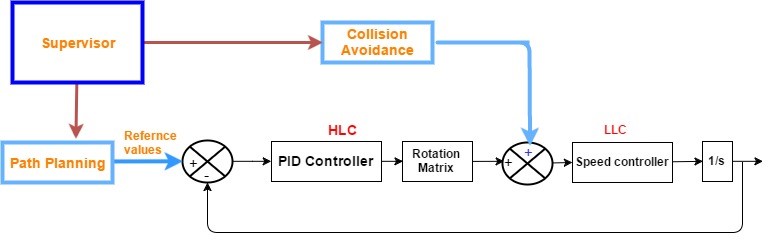

Collision avoidance

When drones are flying above the field, the path planning should create paths for the agents in a way that avoids collisions between them. This is done in the collision avoidance block, which has a higher priority than the optimal path planning that is calculated based on the objective of the drones (see Fig.4). The Collision Avoidance block is triggered when the states of the drones meet certain criteria that indicate an imminent collision between them. The supervisory control then switches to the collision avoidance mode to keep the drones from getting closer. This is achieved by sending a relatively strong velocity command to the LLC of each drone, in a direction that maintains a safe distance. This velocity command must be perpendicular to the velocity vector of the drone, and it is stopped once the drones are in safe positions. In this project, since we are dealing with only one drone, collision avoidance is not implemented. However, it could be an area of interest for others who continue this project.
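Since collision avoidance was not implemented in the project, the following is only a sketch of how the described rule could look. It assumes a simple distance threshold as the trigger criterion; the names avoidance_velocity, d_safe and speed are hypothetical. When triggered, it returns a velocity command perpendicular to the drone's own velocity, on the side that points away from the other drone.

```python
import math


def avoidance_velocity(own_pos, own_vel, other_pos, d_safe=1.0, speed=0.5):
    """Velocity command for one drone; (0, 0) means avoidance is not active."""
    dx = own_pos[0] - other_pos[0]
    dy = own_pos[1] - other_pos[1]
    dist = math.hypot(dx, dy)
    if dist >= d_safe:
        return (0.0, 0.0)  # safe distance: leave control to the path planner
    v_norm = math.hypot(own_vel[0], own_vel[1])
    if v_norm == 0.0:
        # Hovering drone has no velocity direction: move straight away instead.
        if dist == 0.0:
            return (speed, 0.0)  # arbitrary direction when positions coincide
        return (speed * dx / dist, speed * dy / dist)
    # Unit vector perpendicular to the own velocity (rotated by +90 degrees).
    px, py = -own_vel[1] / v_norm, own_vel[0] / v_norm
    # Pick the perpendicular side that points away from the other drone.
    if px * dx + py * dy < 0.0:
        px, py = -px, -py
    return (speed * px, speed * py)
```

A supervisory layer would call this for each drone and forward a non-zero result to the LLC instead of the path planner's reference.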

World Model

Kalman filter

Particle filter

Estimator

Hardware

Drone

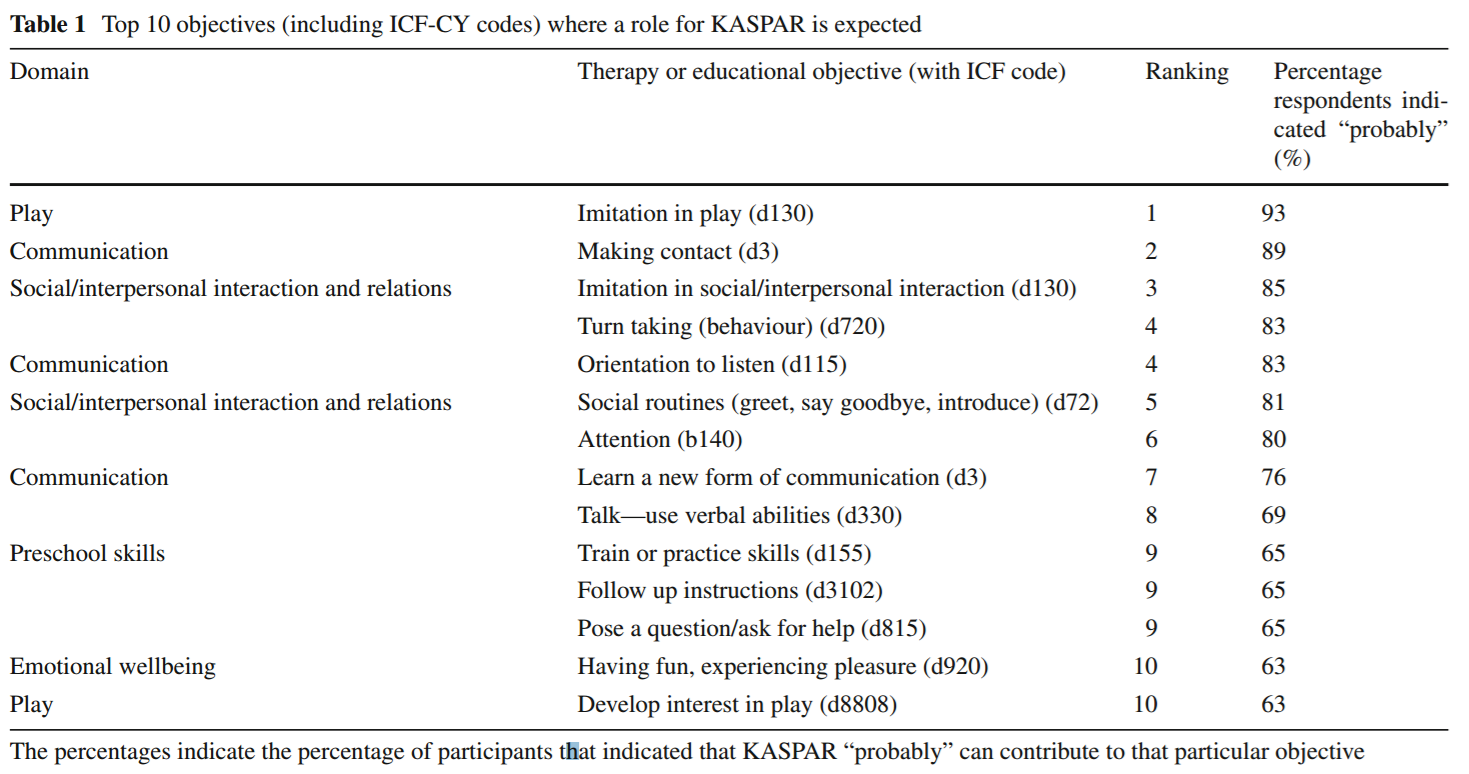

In the autonomous referee project, the commercially available Parrot AR.Drone 2.0 Elite Edition is used for the refereeing tasks. The built-in properties of the drone, as given on the manufacturer’s website, are listed in Table 1. Note that only the properties relevant to this project are covered; the internal properties of the drone are excluded.

The drone is designed as a consumer product. It can be controlled via a mobile phone using its free software (for both Android and iOS) and streams HD video to the mobile phone. The drone has a front camera whose capabilities are given in Table 1. It has its own built-in computer, controller, driver electronics, etc. Since it is a consumer product, its design, body and controller are very robust. Therefore, in this project, it was decided to use the drone's own structure, control electronics and software for positioning the drone. Moreover, designing a controller for a drone is complicated and out of the scope of this project.

Experiments, Measurements, Modifications

Swiveled Camera

As mentioned before, the drone has its own camera, and this camera is used to capture images. The camera is mounted at the front of the drone. For refereeing, however, it should look downward. Therefore, it will be disassembled and connected to a swivel so that it can be tilted down by 90 degrees. This will require some changes to the structure. When this modification is finished, it will be documented here.

Software Restrictions on Image Processing

Using a commercial and non-modifiable drone in this manner brings some difficulties. Since the source code of the drone is not open, it is very hard to access some data on the drone, including the camera images. The image processing is done in MATLAB. However, taking snapshots from the drone camera directly in MATLAB is not possible with the drone's built-in software. Therefore, an indirect way is required, which costs processing time. The best capture rate obtained with the current capturing algorithm is 0.4 Hz at the standard 360p resolution (640x360). Although the camera can capture images at a higher resolution, this resolution is used to reduce the required processing time.

FOV Measurement

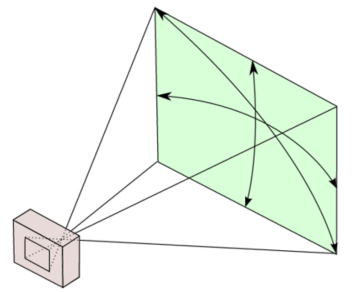

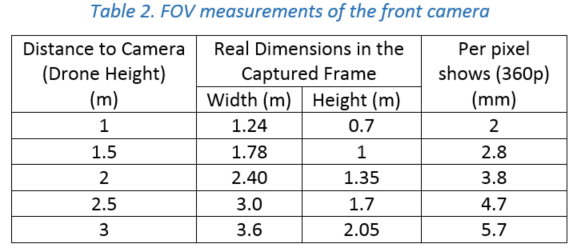

One of the most important properties of a vision system is the field of view (FOV) angle. The definition of the field of view angle can be seen in the figure. The captured images have an aspect ratio of 16:9. Using this fact and a number of measurements, the FOV was found to be close to 70°, although the camera is specified as having a 92° diagonal FOV. The measurements and the results obtained are summarized in Table 2. Here, the corresponding distance per pixel is calculated at the standard resolution (640x360).
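The relation between a diagonal FOV, the 16:9 aspect ratio and the distance per pixel can be sketched as below. The sketch assumes an ideal pinhole camera without lens distortion that looks straight down, which a wide-angle consumer camera only approximates; this may be one reason why a measured FOV differs from the specified one. The function names and the example height are hypothetical, and the values in Table 2 come from the measurements, not from this calculation.

```python
import math


def fov_from_diagonal(diag_fov_deg, aspect_w=16, aspect_h=9):
    """Split a diagonal FOV into (horizontal, vertical) FOV in degrees."""
    diag = math.hypot(aspect_w, aspect_h)
    t = math.tan(math.radians(diag_fov_deg) / 2.0)
    horizontal = 2.0 * math.degrees(math.atan(t * aspect_w / diag))
    vertical = 2.0 * math.degrees(math.atan(t * aspect_h / diag))
    return horizontal, vertical


def metres_per_pixel(height_m, fov_deg, pixels):
    """Ground distance covered by one pixel along the axis the FOV refers to."""
    footprint = 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint / pixels


# Example with a hypothetical flying height of 2 m and a 70 degree FOV
# spread over the 640 pixel image width.
example = metres_per_pixel(2.0, 70.0, 640)
```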

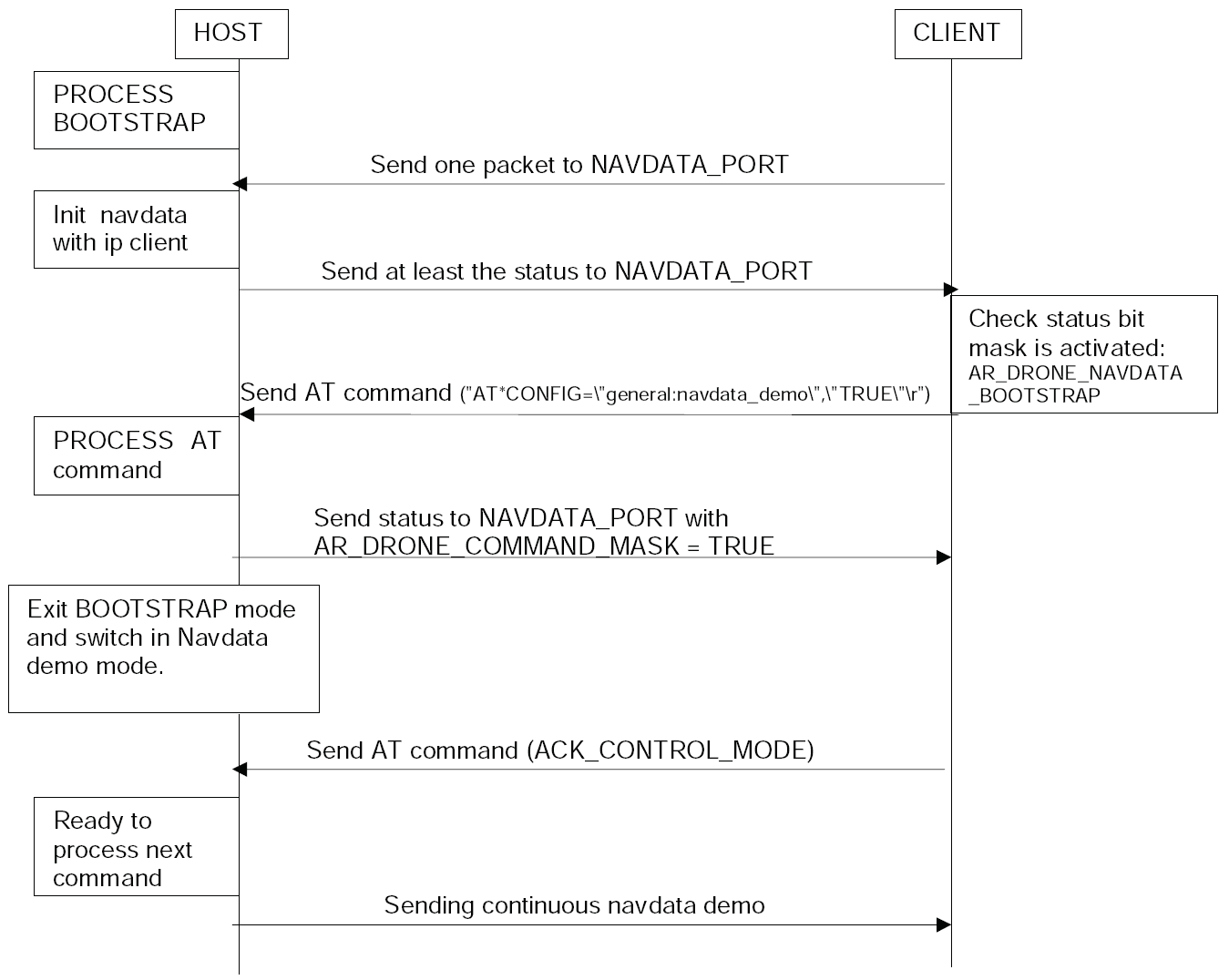

Initialization

The following properties have to be initialized to be able to use the drone. For the particular drone that is used during this project, these properties have the values indicated by <value>:

- SSID <ardrone2>

- Remote host <192.168.1.1>

- Control

  - Local port <5556>

- Navdata

  - Local port <5554>

  - Timeout <1 ms>

  - Input buffer size <500 bytes>

  - Byte order <little-endian>

Note that for all properties to initialize the UDP objects that are not mentioned here, MATLAB's default values are used.

After initializing the UDP objects, the Navdata stream must be initiated by following the steps in the picture below.

Finally, a reference for the horizontal plane has to be set for the drone's internal control system by sending the command FTRIM. [1]
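The project creates these UDP objects in MATLAB; the Python sketch below only mirrors the listed properties to show how they fit together. The remote ports (the same numbers as the local ports) and the AT*FTRIM string format are taken from Parrot's publicly documented AT-command protocol and are assumptions with respect to this project's code. The steps that start the Navdata stream are not included. The function names are hypothetical, and open_drone_sockets should only be called when the computer is connected to the drone's Wi-Fi network.

```python
import socket

DRONE_IP = "192.168.1.1"  # remote host
CONTROL_PORT = 5556       # control (AT command) port
NAVDATA_PORT = 5554       # navdata port
NAVDATA_BUFFER = 500      # input buffer size in bytes
NAVDATA_TIMEOUT = 0.001   # 1 ms, expressed in seconds


def open_drone_sockets():
    """Create the control and navdata UDP sockets bound to their local ports."""
    control = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    control.bind(("", CONTROL_PORT))
    navdata = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    navdata.bind(("", NAVDATA_PORT))
    navdata.settimeout(NAVDATA_TIMEOUT)
    return control, navdata


def ftrim_command(seq):
    """AT command that sets the horizontal-plane reference (flat trim)."""
    return "AT*FTRIM={}\r".format(seq)


def send_command(control, command):
    """Send one AT command string to the drone's control port."""
    control.sendto(command.encode("ascii"), (DRONE_IP, CONTROL_PORT))


def read_navdata(navdata):
    """Read at most one navdata packet; returns None if nothing arrived in time."""
    try:
        return navdata.recv(NAVDATA_BUFFER)
    except socket.timeout:
        return None
```

The sequence number seq has to increase with every command sent, so a caller would typically keep a counter next to the control socket.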

Wrapper

As seen in the initialization, the drone can be seen as a block which expects a UDP packet containing a string as input and which gives an array of 500 bytes as output. To make communicating with this block easier, a wrapper function is written that ensures that both the input and the output are doubles. To be more precise, the input is a vector of four values between -1 and 1, where the first two represent the tilt in the front (x) and left (y) direction, respectively. The third value is the speed in the vertical (z) direction and the fourth is the angular speed (psi) around the z-axis. The output of the block is as follows:

- Battery percentage [%]

- Rotation around x (roll) [°]

- Rotation around y (pitch) [°]

- Rotation around z (yaw) [°]

- Velocity in x [m/s]

- Velocity in y [m/s]

- Position in z (altitude) [m]
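The input side of such a wrapper can be sketched as follows. The project's wrapper is a MATLAB function that is not shown here, so this Python version is an assumption based on Parrot's documented AT*PCMD command, in which each floating-point argument is sent as the integer that has the same 32-bit pattern. The mapping of the front and left tilt to the pitch and roll arguments, including the signs, is also an assumption, and the function names are hypothetical.

```python
import struct


def clamp(value, low=-1.0, high=1.0):
    """Limit a command value to the range the drone accepts."""
    return max(low, min(high, float(value)))


def float_to_int(value):
    """Reinterpret the bits of a 32-bit float as a signed 32-bit integer."""
    return struct.unpack("<i", struct.pack("<f", value))[0]


def pcmd_command(seq, u):
    """Build an AT*PCMD string from u = [front tilt, left tilt, vertical speed, yaw rate]."""
    x, y, z, psi = (clamp(v) for v in u)
    # Assumed sign convention: negative pitch tilts the nose down (forward)
    # and negative roll tilts the drone to the left.
    roll = 0.0 - y
    pitch = 0.0 - x
    args = [float_to_int(v) for v in (roll, pitch, z, psi)]
    return "AT*PCMD={},1,{},{},{},{}\r".format(seq, *args)
```

Clamping inside the wrapper keeps an out-of-range controller output from producing a command outside the interval from -1 to 1.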

Top-Camera

The topcam is a camera that is fixed above the playing field. This camera is used to estimate the location and orientation of the drone. This estimate is used as feedback for the drone to position itself at a desired location. The topcam can stream images to the laptop at a frame rate of 30 Hz, but searching the image for the drone (i.e. image processing) might be slower. This is not a problem, since the positioning of the drone is far from perfect and is not critical either. As long as the target of interest (ball, players) is within the field of view of the drone, the positioning is acceptable.

Ai-Ball

TechUnited TURTLE

Originally, the Turtle is constructed and programmed to be a football playing robot. The details on the mechanical design and the software developed for the robots can be found here and here respectively.

For this project, it is used as a referee. The software that has been developed at TechUnited did not need any further extension, since part of the extensive code could be used to fulfill the role of the referee. This is explained in the section Software/Communication Protocol Implementation of this wiki-page.

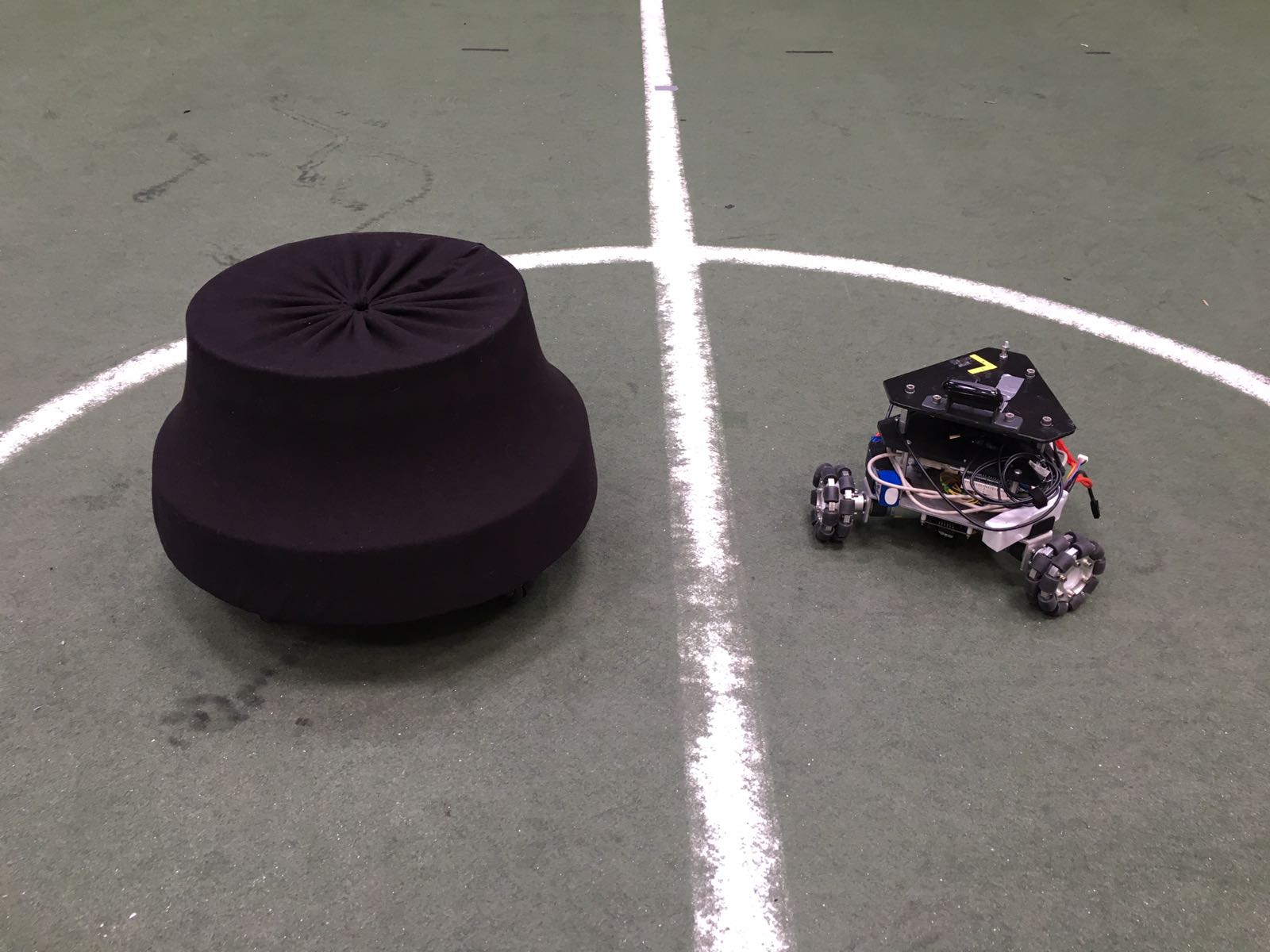

Player

The robots that are used as football players are shown in the picture. On the right side of the picture, the robot is shown as it was delivered at the start of the project. This robot contains a Raspberry Pi, an Arduino and three motors (including encoders/controllers) to control three omni-wheels independently. To the left of this robot, a copy with a cover is shown. This cover must prevent the robots from being damaged when they collide. Since one of the goals of the project is to detect collisions, the robots must be able to collide more than once.

To control the robot, Arduino code and a Python script to run on the Raspberry Pi are provided. The Python script receives strings via UDP over Wi-Fi, processes these strings, and sends commands to the Arduino via USB. To control the robot with a Windows device, MATLAB functions are implemented. Moreover, an Android application is developed to be able to control the robot with a smartphone. All the code can be found on GitHub.[2]

Supervisory Blocks

Integration

Hardware Inter-connections

The Kinect and the omni-vision camera on the TechUnited Turtle allow the robot to take images of the on-going game. With image processing algorithms, useful information can be extracted from these images and a mapping of the game-state, i.e.

1. the location of the Turtle,

2. the location of the ball,

3. the location of players

and other entities present on the field, can be computed. These locations are expressed with respect to the global coordinate system fixed at the geometric center of the pitch. At TechUnited, this mapping is stored (in the memory of the Turtle) and maintained (updated regularly) in a real-time database (RTDb), which is called the WorldMap. The details can be obtained from the software page of TechUnited. In a RoboCup match, the participating robots maintain this database locally. Therefore, the Turtle that is used for the referee system has a locally stored global map of the environment. This information needed to be extracted from the Turtle and fused with the other algorithms and software that were developed for the drone. These algorithms and software were created in MATLAB and Simulink, while the TechUnited software is written in C and runs on Ubuntu. The player robots from TechUnited communicate with each other via the UDP communication protocol; this is executed by the (wireless) comm block shown in the figure that follows.

References

<references/