Embedded Motion Control 2018 Group 4: Difference between revisions

| Line 231: | Line 231: | ||

The split and merge algorithm is used to determine the location of the corners and doors in a room. The algorithm is implemented in the following way. | The split and merge algorithm is used to determine the location of the corners and doors in a room. The algorithm is implemented in the following way. | ||

1. Preprocessing the data(input: LRF,odometry) | 1. Preprocessing the data(input: LRF, odometry, bias) | ||

* remove | * remove points that are not of interest. We just use the points within 180 degree of each scan. | ||

* push_back() them into a vector, both the laser and odometry data | * push_back() them into a vector, both the laser and odometry data | ||

* convert the laser points from polar coordinates to xy-coordinates and push_back() into vector | * convert the laser points from polar coordinates to xy-coordinates, make modification according to the bias from localization and push_back() into vector | ||

2. Merge multiple scans (optional) | 2. Merge multiple scans (optional) | ||

| Line 240: | Line 240: | ||

3. Find section(input: processed_laser) | 3. Find section(input: processed_laser) | ||

* Check if the distance between two points is larger than the threshold | * Check if the distance between every two points is larger than the threshold | ||

** If | ** If true, store the indexes A and B of these two points in a vector | ||

* | * in first section, the initial point is where laser scan begin; the end point is A from above | ||

* | * in later sections, the initial point is always the point with smaller index from above step, and end point is the one with larger index | ||

* check the | * check the number of in each section, if the number (section length) less than threshold | ||

** true, declare they are outlier, remove both initial and end point from index vector | ** if true, declare they are outlier, remove both initial and end point from index vector | ||

* output: each section's initial point, end point's index (of whole data after merge()) | * output: each section's initial point, end point's index (of whole data after merge()) | ||

4. Feature detection | 4. Feature detection | ||

* in each section, fit the line crossing initial point and end point using these equations: | |||

* in each section, fit the line crossing | |||

<math> ax+by+c=0 </math>, | <math> ax+by+c=0 </math>, | ||

<math> a=(y_{1}-y_{2})/(x_{1}-x_{2}) </math>, | <math> a=(y_{1}-y_{2})/(x_{1}-x_{2}) </math>, | ||

| Line 258: | Line 257: | ||

<math> abs(ax_{0}+by_{0}+c)/ \sqrt(a^2+b^2) </math> | <math> abs(ax_{0}+by_{0}+c)/ \sqrt(a^2+b^2) </math> | ||

* find the maximum distance to the wall, and check if this is smaller than the wall threshold | * find the maximum distance to the wall, and check if this is smaller than the wall threshold | ||

** if | ** if true, declare it's a wall between two points, go to the next step; | ||

** if | ** if false, declare it's a corner, replace the initial point with the found corner, go back to step 1 in this function and write down the max distance points index. | ||

* connect two points that are declared as wall | * connect two points that are declared as wall | ||

* connect each corner point with increasing index within current section | * connect each corner point with increasing index within current section | ||

* connect current sections initial point with the minimal index corner point, and connect the maximum index corner point with the end point | * connect current sections initial point with the minimal index corner point, and connect the maximum index corner point with the end point | ||

* output: a 2D vector, every | * output: a 2D vector, every contains all the feature point within that section | ||

5. Merge map (update world model) | 5. convert from index to point structure | ||

* extract index from input, assign their corresponding coordinates to point structure, | |||

6. Merge map (update world model) | |||

* define a 2D vector, that is empty initially | * define a 2D vector, that is empty initially | ||

* push_back() every row found in step 4 | * push_back() every row found in step 4 | ||

Revision as of 15:12, 12 June 2018

Group members

| TU/e Number | Name | |

|---|---|---|

| 1032743 | Shuyang (S.) An | s.an@student.tue.nl |

| 0890579 | Leontine (L.I.M.) Aarnoudse | l.i.m.aarnoudse@student.tue.nl |

| 0892629 | Sander (A.J.M.) Arts | a.j.m.arts@student.tue.nl |

| 1286560 | Pranjal (P.) Biswas | p.biswas@student.tue.nl |

| 0774811 | Koen (K.J.A.) Scheres | k.j.a.scheres@student.tue.nl |

| 0859466 | Erik (M.E.W.) Vos De Wael | m.e.w.vos.de.wael@student.tue.nl |

Files

File:EMC Group4 Initial Design.pdf

Initial Design

Goal

The main goal of the project is to enable PICO to autonomously map a given room and subsequently determine and execute a trajectory to leave this room. For the initial Escape Room Competition, the predefined task is completed once the finish line at the end of the corridor is crossed. For the Hospital Room Competition, the goal is to map all rooms situated in the complex, after which the robot returns to a given position from where it must be able to identify a placed object in order to position itself next to it. However, both competitions include several constraints:

- The robot must complete the task without bumping into any walls.

- The individual tasks must be completed within five minutes.

- The robot should not stand still for more than 30 seconds during the execution of each of the tasks.

Plan

Escape Room Competition

1. Initially, PICO should make a 360° turn once, to gather information about the surrounding environment.

2. It should act accordingly depending upon the initial data that is gathered. The following three scenarios are possible:

- A wall is detected, then it should go to the nearest wall and start following it.

- A door is detected, then it should go to the door, cross the finish line and stop.

- Nothing is found, then it should move forward until it detects either a wall or a door.

3. While following a wall, the following two scenarios are possible:

- Another wall is detected, then it should start following the next wall.

- A door is found, then it should go through the door and cross the finish line.

Hospital Competition

1. Initially, PICO should make a 360° turn once, to gather information on the surrounding environment.

2. It should start following the wall along the corridor, until a new door is found.

3. Then, it should enter the room through the new identified door, and start following the walls of the room. While following the walls of the room, it should identify all the corners of the room from the data gathered, and then exit the room. Also, while performing this task it is possible that a new door is detected inside the room, then it should enter that room and follow the same protocol as mentioned before.

4. Once PICO is in the corridor again, it should start searching for new doors, and explore each of the room similarly as mentioned above.

5. While performing these tasks, PICO simultaneously needs to create a map of the whole hospital. Once the whole hospital is mapped, it should park backwards to the wall behind the starting position.

6. After that depending on the hint given, PICO should find the object in the hospital and stop moving close to it.

Requirements

In order to follow the plan and reach the goal that was defined earlier, the system should meet certain requirements. From these requirements, the functions can be determined. The requirements are given by:

- The software should be easy to set-up and upload to the PICO.

- The software should not get stuck in deadlock or in an infinite loop.

- PICO should be able to run calculations autonomously.

- PICO should be able to make decisions based on these calculations.

- Walls, corners as well as doors should be detectable. This means that the data provided by the sensors must be read and interpreted to differentiate between these items.

- Based on the sensor data, a world model should be constructed. Hence, this model should store information that functions can refer to.

- PICO should be able to plan a path based on the strategy and autonomous decision-making.

- PICO should be able to follow a determined path closely

- PICO should be able to drive in a forward, backward, left and right motion.

- PICO should be able to turn with a predefined number of degrees (-90°, 90°, 180° and 360°).

- PICO should also be able to turn with a variable angle.

- The speed of each of the wheels should be monitored.

- The total velocity and direction of PICO should be monitored.

- The distance moved in any direction by PICO should be monitored.

- Detect when and for how long PICO is standing still.

- PICO should be able to either drive the entire rear wheel across the finish line or to stop moving close to the designated object for respective competition types.

Functions

In the table below, the different type of functions are listed. A distinction is made between the difficulty of the function, i.e. low-level, mid-level and high-level functions. In addition a distinction is made between functions that depend on input for the robot and functions that determine the output.

| Low-level | Mid-level | High-level | |

| Input | Read sensor data |

|

|

| Output |

|

Avoid collision |

|

Components and specifications

The hardware of the PICO robot can be classified into four categories, which can be seen in the table below.

| Component | Specifications |

| Platform |

|

| Sensors |

|

| Actuator | Omni-wheels |

| Controller | Intel i7 |

The escape room competition features the following environment specifications:

- PICO starts at a random position inside a rectangular room, which features one corridor leading to an exit.

- The corridor is oriented perpendicular to the wall and ranges in width from 0:5 to 1:5 meters.

- The finish line is located more than 3 down the corridor.

Most notably, the differences between the escape room competition and the hospital room competition include:

- PICO will start in the hallway of the hospital.

- The hospital features three to six separate rooms.

Interfaces

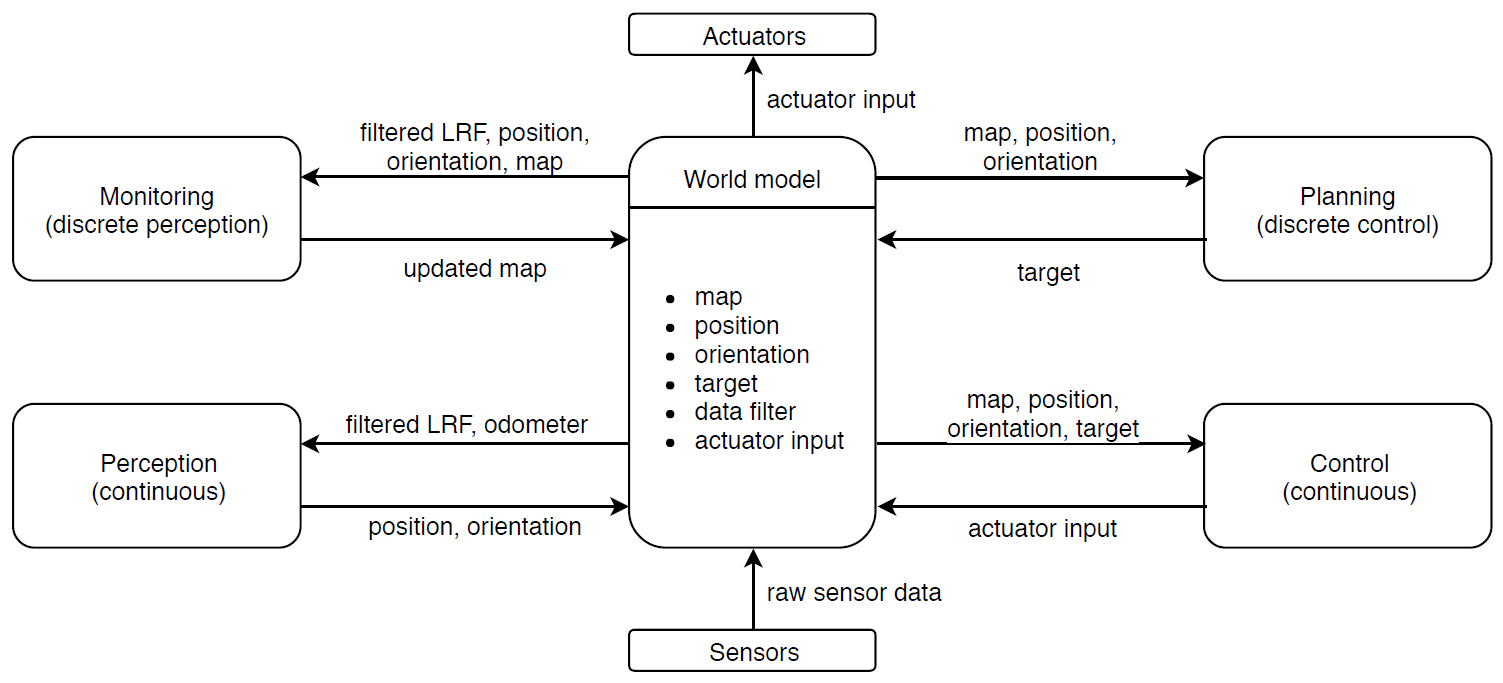

The interfaces for the initial design and the information exchanged between each interface are depicted in Figure 1.

Final Design

For the final design, we are still working based on the interfaces used for the initial design. The plan that was mentioned before has been updated based on new information, and consists of the following steps. These are explained further in the section on the planning and discrete control.

Hospital Competition

1. PICO will be oriented facing the corridor. Initially, it should scan what's in front of it and see whether a door is visible.

2. If a door is found initially, PICO should position itself before the door. Otherwise, it should start following the wall along the corridor, until a new door is found and position itself in front of it again.

3. Then, it should enter the room through the new identified door, and stop one meter into the room. Then it should scan, turn 180 degrees and scan again. It will determine all the corners of the room from the data gathered, and then exit the room. The information about the room, e.g. the location of the door and the corners, will be saved in the world model. While performing this task it is possible that a new door is detected inside the room, then it should enter that room and follow the same protocol as mentioned before. Information regarding whether a door has been entered before will be saved in the world model.

4. Once PICO is in the corridor again, it should start searching for new doors, and explore each of the room similarly as mentioned above.

5. While performing these tasks, PICO simultaneously needs to create a map of the whole hospital. Once the whole hospital is mapped and the end of the corridor is reached, it should park backwards to the wall behind the starting position.

6. For the second part of the challenge, PICO should determine what room requires the most doors to reach (which is the high level hint). This information can be found based on the information in the world model.

7. Then, the consecutive door locations are used as setpoints for PICO to follow towards the right room.

8. When the room is reached, PICO will scan it's surroundings and try to find a series of data points deviating from the know location of the walls. This will indicate the location of the object, so that a setpoint can be generated.

9. PICO will move to the setpoint next to the object and stand still, stating that he has found the object.

World Model and Registry

The center of the interface figure that was shown before is actually divided into two parts: the world map and the registry. The registry contains all current data, for example the position and orientation of PICO, the sensor data, a filtered version of the sensor data, the actuator inputs and the current target and setpoints. This is all relevant information that should be accessible for the functions, but should not be part of the actual world model.

The world model itself consists of different classes that each have their own properties. There are rooms and corridors, which are numbered and contain a collection of doors and corners. The doors have a state (used and unused), coordinates and a destination. The corners have coordinates as well. Using the coordinates of the doors and corners, the lines that form the walls of each of the rooms can be mapped. This information can then be used to determine the location of the object in the final challenge. Since the destination of each of the doors is known, it is possible to determine which room needs the most doors to reach and thus use the high-level hint. The known coordinates of the doors can then be used to generate setpoints to actually reach that room.

Perception

The perception part of the interface will receive filtered LRF data and odometry data, so that it can determine the position and orientation of PICO based on that data. The LRF data has been averaged over several scans, outliers have been removed and one out of every five data points is selected, resulting in 'filtered' LRF data. The odometry data is assumed to be inaccurate, since the omniwheels of PICO tend to slip often. Therefore a way is found to improve the position estimation using the LRF data as well.

Using the split and merge algorithm in the monitoring part of the interface, markers are defined. These markers are the corner points found by the algorithm. Once PICO is in a room and has made an initial scan, the location of the corner points is known and it can be used to improve the estimation of new positions of PICO in that same room. The split and merge algorithm is slower than the odometry updates, but it can still be run within 5ms. Therefore, it can be used regularly to improve the odometry data. The difference between the previous and current positions based on odometry is compared to the difference in the location of PICO relative to the markers. These two position estimates are then averaged, and since the LRF data is assumed to be more accurate this is weighted more.

One disadvantage of this approach is that it is required to have markers defined in a room, before the odometry data can be improved. Therefore everything will be relative to the odometry-based position estimation that is made when the room is first entered. This leaves room for errors. It is assumed that for the monitoring and mapping, the perception will be accurate enough to get a rough mapping of the location of the corners and doors. This will be enough to generate setpoints and paths, and to recognize the object in the final part of the challenge. Also, the control will include an algorithm that monitors the distance to the wall and prevents PICO from driving into a wall.

Monitoring - discrete perception

Feature Detection

Split and Merge

The split and merge algorithm is used to determine the location of the corners and doors in a room. The algorithm is implemented in the following way.

1. Preprocessing the data(input: LRF, odometry, bias)

- remove points that are not of interest. We just use the points within 180 degree of each scan.

- push_back() them into a vector, both the laser and odometry data

- convert the laser points from polar coordinates to xy-coordinates, make modification according to the bias from localization and push_back() into vector

2. Merge multiple scans (optional)

- Merge the overlapping parts from the front scan with back scan, and connect the two.

3. Find section(input: processed_laser)

- Check if the distance between every two points is larger than the threshold

- If true, store the indexes A and B of these two points in a vector

- in first section, the initial point is where laser scan begin; the end point is A from above

- in later sections, the initial point is always the point with smaller index from above step, and end point is the one with larger index

- check the number of in each section, if the number (section length) less than threshold

- if true, declare they are outlier, remove both initial and end point from index vector

- output: each section's initial point, end point's index (of whole data after merge())

4. Feature detection

- in each section, fit the line crossing initial point and end point using these equations:

[math]\displaystyle{ ax+by+c=0 }[/math], [math]\displaystyle{ a=(y_{1}-y_{2})/(x_{1}-x_{2}) }[/math], [math]\displaystyle{ b=-1 }[/math],[math]\displaystyle{ c=(x_{1}y_{2}-x_{2}y_{1})/(x_{1}-x_{2}) }[/math]

- calculate the distance from this line to each point within this section using:

[math]\displaystyle{ abs(ax_{0}+by_{0}+c)/ \sqrt(a^2+b^2) }[/math]

- find the maximum distance to the wall, and check if this is smaller than the wall threshold

- if true, declare it's a wall between two points, go to the next step;

- if false, declare it's a corner, replace the initial point with the found corner, go back to step 1 in this function and write down the max distance points index.

- connect two points that are declared as wall

- connect each corner point with increasing index within current section

- connect current sections initial point with the minimal index corner point, and connect the maximum index corner point with the end point

- output: a 2D vector, every contains all the feature point within that section

5. convert from index to point structure

- extract index from input, assign their corresponding coordinates to point structure,

6. Merge map (update world model)

- define a 2D vector, that is empty initially

- push_back() every row found in step 4

- In the end, each section has two rows. One contains the x-coordinate of all the corner points within that section, and the other contains the y-coordinates

Test Result

Static

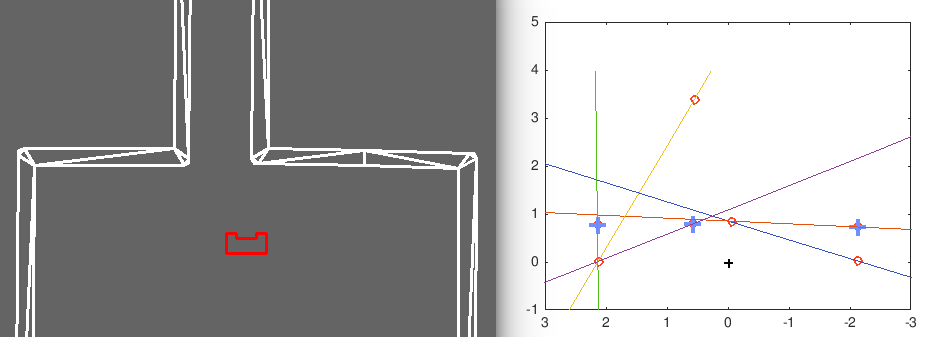

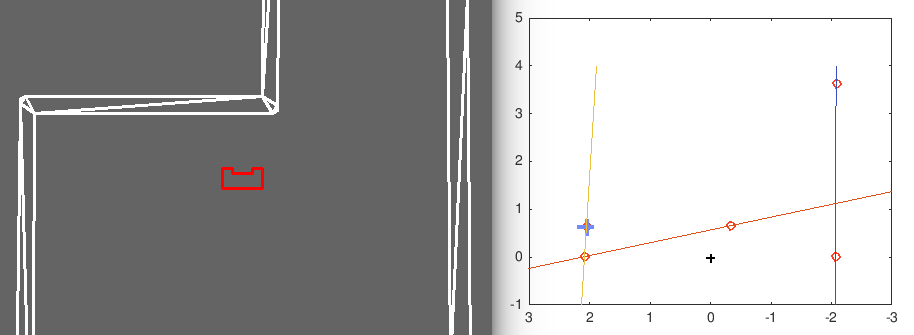

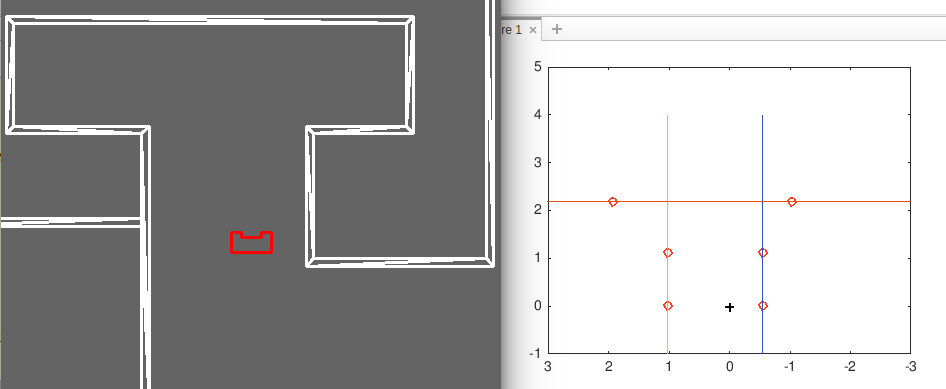

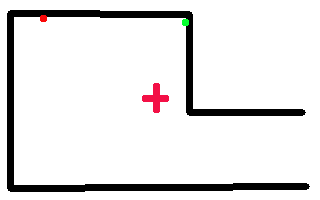

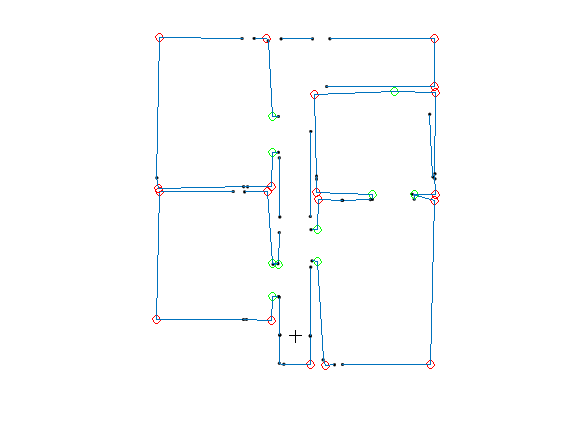

These are some results of the implementation of the split and merge algorithm in simulation. On the left, the simulation environment is visible. On the right, the corner points that are found can be seen, as well as the lines that are fitted through these points.

Dynamic

The working of the split and merge algorithm is illustrated further in the simulation gifs that can be found below. Since the corresponding simulator gif is too large to play fluently, it can be found using this link: http://cstwiki.wtb.tue.nl/images/Split-Merge-Simulator.gif

Limitations

There are several problems with the split and merge algorithm. These are explained below, with the suggested (and applied) solutions.

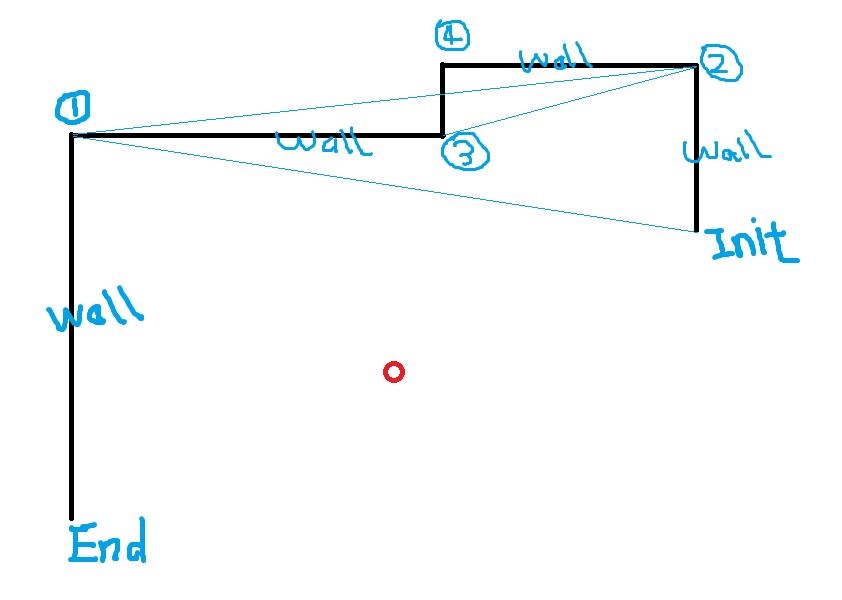

1. Wrong corner point

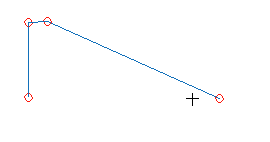

From simulations with different room setting, it is observed that split and merge works fine when PICO is not positioned straight in front of a wall. However, if PICO's x-direction is perpendicular to the wall, it's easy to find the wrong corner.

This is caused by the method that is used to calculate the distance of points within a certain section to the initial end-line, which is explained in step 4 above. When the wall is (nearly) parallel to PICO's y-axis, there might be several points with a similar maximum distance, and one of these points could happen to be a non-existing corner, which is indicated by the red point in the figure below.

Solution

- Always calculate the distance of all the points, from the original initial point to the end point, to the line. Do not only calculate the updated initial point, i.e. the found corner point. This should fix the problem, but the corner index may not be monotonic.

- When a corner is found, check its neighbouring points. If they could form a right angle, then it's concluded this is exactly the corner point.

- When a wall parallel to PICO's y-direction is detected, rotate over a small angle and scan once more.

2. Missing feature (corner) point

This happens when a corner point that has a large index, far away from the inital point, is detected first. Then the initial point is updated with the found corner, and the possbible corner with a small index that's closer to the initial point is missed. This is illustrated in the figure above.

Solution:

Rather than only connecting the found corner with the end point, it is also connected with the initial point (or the previous corner point). This is again illustrated in the figure below.

3. Evaluation of the quality of the detected feature

It is hard to determine wheter a detected feature (a corner, wall or door) is indeed what it seems to be, or if the point was an outlier of some kind.

4. Overlapping feature

The robot scans several times during it's tasks, and some sections or features are overlapping. These previous features should be updated, or connected to a larger information set.

Update Mapping

Strategy

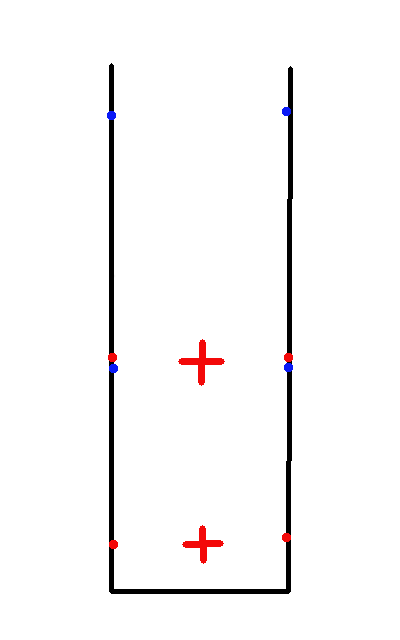

The robot may scan several times during the challenge and in order to deal with the information from LFR, we have to maintain a map.

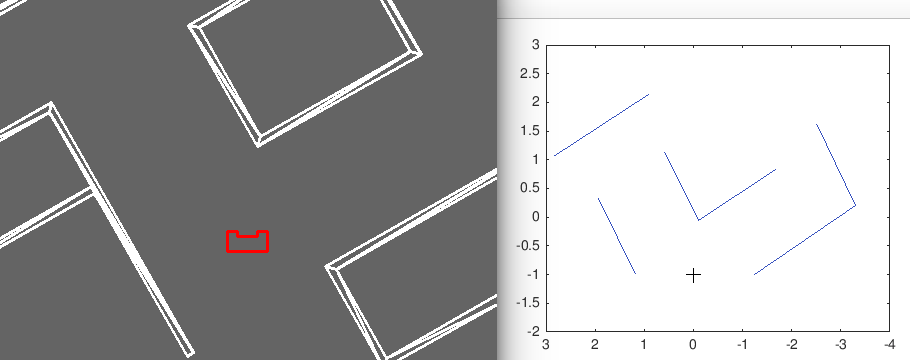

At first, we assumed that robot will always go to the middle point of an open space and do the scanning. The initial and end points of the new scanning are always quite close to the "open" points from the previous scans, or from the un-updated map, which is shown in the left figure below. The red points are found at first scanning, and the blue ones are found at next scanning. The distance of each blue point to the previous map (in this case, the red points) is calculated and the closest points are removed and their sections are merged.

However, this is not always the case, since we cannot guarantee, or might not even always require, that the robot goes to the middle point to scan. The right figure below illustrates this case.

Mapping resutls

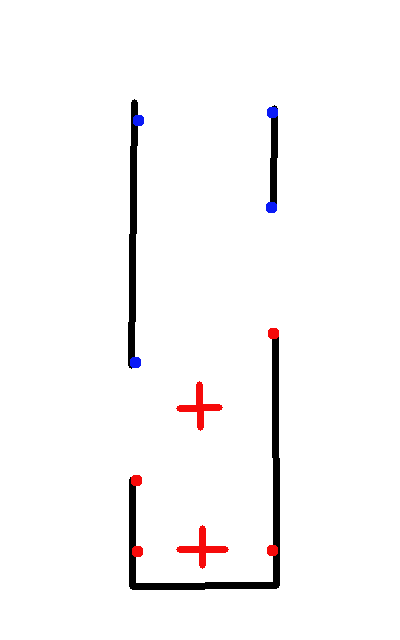

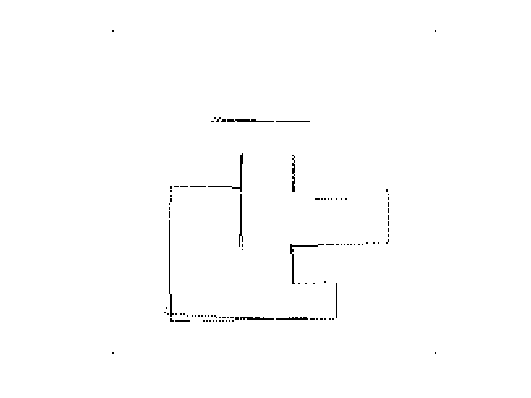

Figure below shows some mapping results using split & merge in the simulator.

Occupancy Mapping

It is possible to use a 2D array to store the map. Bresenham's line algorithm is helpful when assigning values to the free cells and the cells with obstacles could be determined by LRF data and coordinates conversion.

Mapping Results

Though we decided not to use occupancy map because it's more difficult to extract useful information and do the path planning, some tests were implement to see the influence of setting parameters. Figures below shows two different resolution of map.

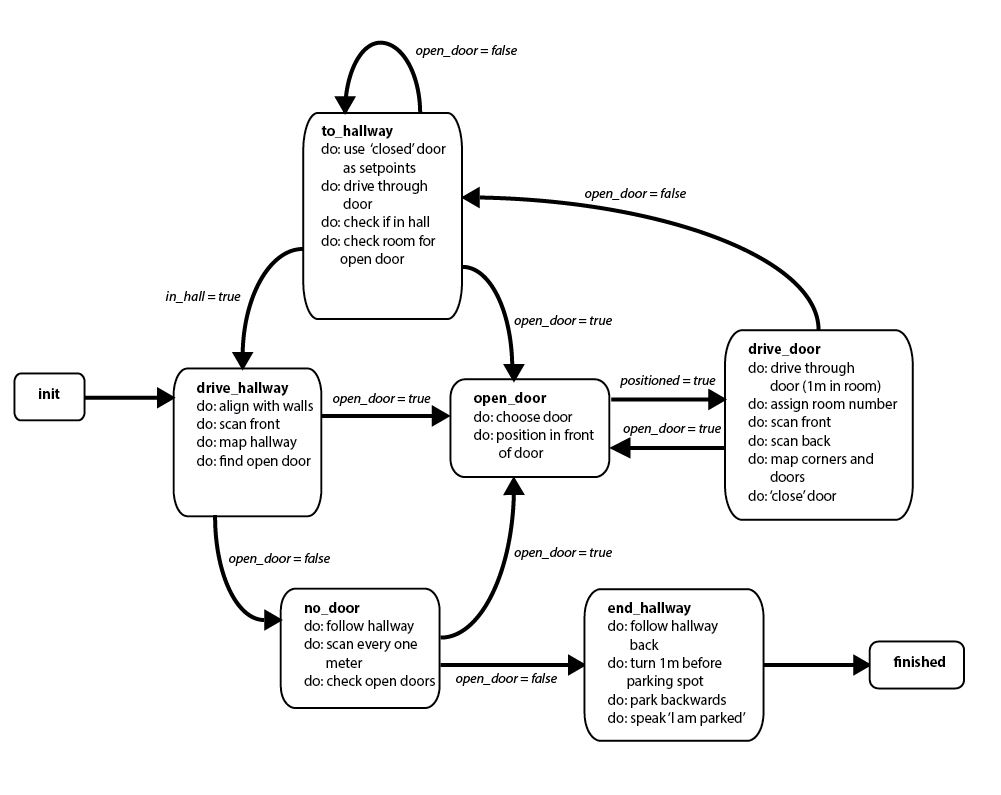

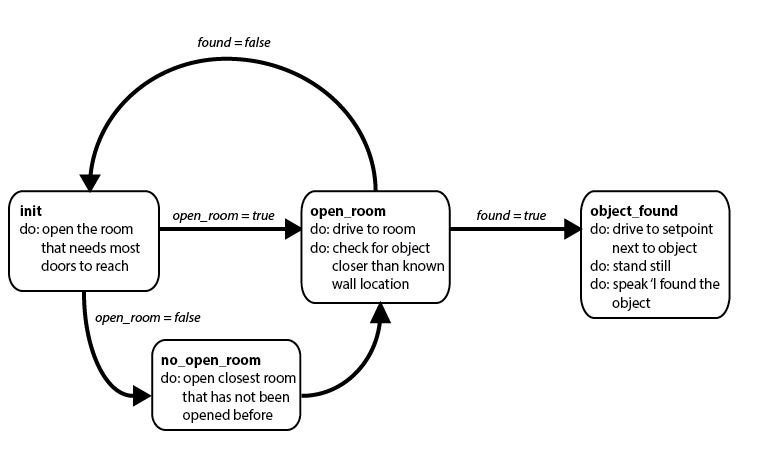

Planning - Discrete Control

For the hospital challenge, discrete (state) control will be required. Therefore, these following two state machines have been designed for the two parts of the challenge: mapping the hospital and finding the object. The first state machine works based on 'open' and 'closed' doors. Any door that is found that has not been entered yet, is saved as an 'open' door. When Pico starts this challenge in the hallway, he will scan what is in front of him. This might shown an open door, if so, Pico will enter a room through this door. If Pico cannot identify an open door, he will drive one meter further into the hallway and scan again. When Pico has entered a room, he will assign a number to this room and save the coordinates of the corners and doors of the room in the world model. The door through which he has entered will be closed, and he will check the room for open doors. If an open door is found, Pico will enter the next room through this door and repeat the proces. If not, Pico will return through the closed door and eventually get back to the hallway to continue his journey through the hospital.

When the entire hospital has been mapped and Pico has stated that he is parked in the correct spot, the next state machine will start running. The principle of open and closed doors will now be applied to rooms. Pico will open the room that needs the most doors to reach, and go towards that room. When he has reached the room, he will scan it and check if there is an object , which will present itself as a series of points closer to Pico than the wall that would be expected at that sensor angle. If no object is found, the closest room that has not been opened yet will be opened and Pico will continue his search there. When the object is found, Pico will stand next to it and tell us all that he has accomplished his task.

Control

Conclusions and discussion

Interesting code snippets

Notes

Rosbag

- Check bag info: rosbag info example.bag

- Split rosbag: while playing large rosbag file, open a new terminal, rosbag record (topic) -a --split --duration=8

- Extract rosbag data:

- In linux, rostopic echo -b example.bag -p /exampletopic > data.txt, or data.csv;

- In matlab, bag = rosbag(filename), bagInfo = rosbag('info',filename), rosbag info filename