AutoRef Honors2019 vision: Difference between revisions

| Line 9: | Line 9: | ||

= Ball tracking = | = Ball tracking = | ||

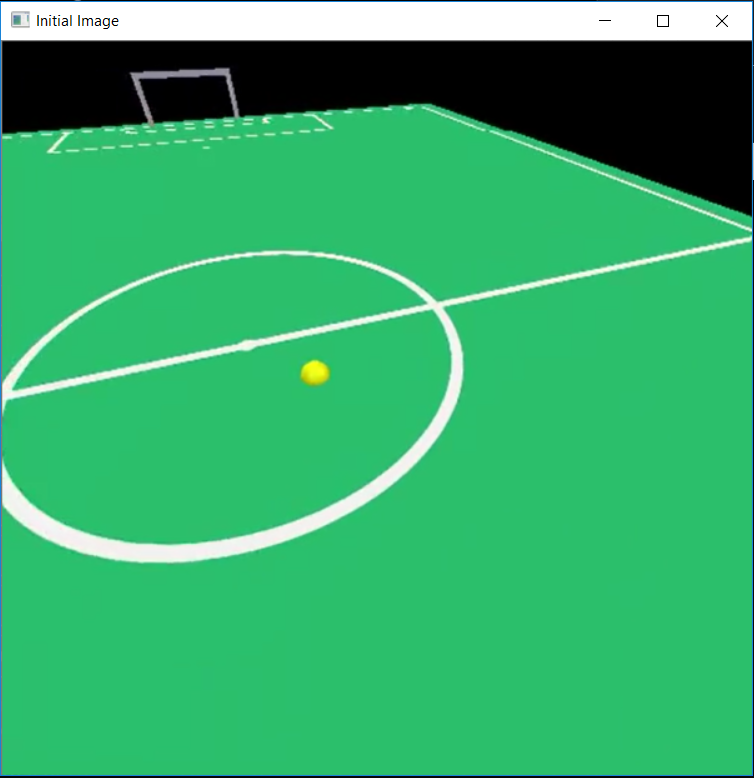

To track the ball from the drone camera a python code was found and adapted. | To track the ball from the drone camera a python code was found online and adapted to work for us. The code we found tracks a ball using a threshold in HSV color space, this threshold represents a range of colors that the ball can show on camera due to different lighting. | ||

The first thing the code will do is grab a frame from the video and make it into a standard size and apply a blur filter to create a less detailed image. Then all of the image that is not the color of the ball is made black, and more filters are applied to remove small parts in the image with the same color as the ball. Now a few functions of the cv2 package are used to encircle the largest piece of the image with the color of the ball. Then the center and radius of this circle are identified. | The first thing the code will do is grab a frame from the video and make it into a standard size and apply a blur filter to create a less detailed image. Then all of the image that is not the color of the ball is made black, and more filters are applied to remove small parts in the image with the same color as the ball. Now a few functions of the cv2 package are used to encircle the largest piece of the image with the color of the ball. Then the center and radius of this circle are identified. | ||

A problem we ran into was that the ball was sometimes detected in an incorrect position due to a color flickering, we then added a feature to only look for the ball in the same region of the image where the ball was detected in the previous frame. | A problem we ran into was that the ball was sometimes detected in an incorrect position due to a color flickering, we then added a feature to only look for the ball in the same region of the image where the ball was detected in the previous frame. | ||

= Line detection = | = Line detection = | ||

Revision as of 14:29, 24 May 2020

AutoRef Honors 2019 - Vision

Ball tracking

To track the ball from the drone camera, we found Python code online and adapted it to our setup. The code tracks a ball by thresholding in HSV color space. The threshold is a range of colors that the ball can show on camera under different lighting conditions.
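To illustrate what the HSV threshold does, here is a minimal sketch in plain Python. It uses the standard library's `colorsys` module instead of cv2 so that it runs without OpenCV; with OpenCV the same step is usually done with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)` followed by `cv2.inRange`. The bounds `LOWER` and `UPPER` are hypothetical values for an orange ball, written in OpenCV's 8-bit HSV convention (hue 0 to 179, saturation and value 0 to 255), and would have to be tuned to the real ball and lighting.

```python
import colorsys

# Hypothetical HSV bounds for an orange ball in OpenCV's 8-bit convention
# (H: 0-179, S: 0-255, V: 0-255). These are assumptions; tune them on real footage.
LOWER = (5, 120, 120)
UPPER = (25, 255, 255)


def rgb_to_cv_hsv(rgb):
    """Convert an 8-bit (r, g, b) pixel to HSV scaled like OpenCV's 8-bit output."""
    r, g, b = (c / 255 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # colorsys returns h in [0, 1); OpenCV stores hue as degrees / 2.
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def in_hsv_range(hsv, lower=LOWER, upper=UPPER):
    """True if every channel lies within its [lower, upper] bound."""
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))


def ball_mask(image):
    """Binary mask (list of rows): 1 where a pixel has the ball's color, else 0."""
    return [[1 if in_hsv_range(rgb_to_cv_hsv(px)) else 0 for px in row] for row in image]


# Usage: a bright orange pixel, a green (field) pixel and a dark orange pixel.
frame = [[(255, 128, 0), (0, 200, 0), (60, 30, 0)]]
print(ball_mask(frame))  # [[1, 0, 0]]
```

The dark orange pixel has the right hue but a value (brightness) below the lower bound, which is why the range covers all three channels rather than hue alone.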

The code first grabs a frame from the video, resizes it to a standard size and applies a blur filter to reduce the level of detail. It then blacks out every part of the image that is not the color of the ball, and applies further filters to remove small regions that happen to share the ball's color. Next, a few functions from the cv2 package are used to enclose the largest remaining region with the ball's color in a circle, and the center and radius of this circle are determined.

One problem we ran into was that color flickering sometimes caused the ball to be detected in the wrong position. To counter this, we added a feature that only searches for the ball in the region of the image where it was detected in the previous frame.
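The steps after thresholding can also be sketched in plain Python. In cv2 this part is typically done with `cv2.erode`/`cv2.dilate` to remove small specks, `cv2.findContours` to find the colored regions and `cv2.minEnclosingCircle` to fit the circle. The sketch below instead keeps only the largest 4-connected region of the mask (which also discards small specks) and approximates the circle by the region's centroid and the distance to its farthest pixel, which encloses the region but is not always the minimal circle. The optional search window mimics the fix for color flickering. The function names, the `margin` parameter and its default of 20 pixels are hypothetical.

```python
import math
from collections import deque


def largest_blob(mask, window=None):
    """Pixels (x, y) of the largest 4-connected region of 1s in `mask`.

    `window` = (x0, y0, x1, y1), with x1/y1 exclusive, limits the search to part
    of the image; it is clipped to the image borders.
    """
    h, w = len(mask), len(mask[0])
    x0, y0, x1, y1 = window if window is not None else (0, 0, w, h)
    x0, y0, x1, y1 = max(x0, 0), max(y0, 0), min(x1, w), min(y1, h)
    seen, best = set(), []
    for y in range(y0, y1):
        for x in range(x0, x1):
            if not mask[y][x] or (x, y) in seen:
                continue
            # Breadth-first flood fill of one connected region inside the window.
            blob, queue = [], deque([(x, y)])
            seen.add((x, y))
            while queue:
                cx, cy = queue.popleft()
                blob.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (x0 <= nx < x1 and y0 <= ny < y1
                            and mask[ny][nx] and (nx, ny) not in seen):
                        seen.add((nx, ny))
                        queue.append((nx, ny))
            if len(blob) > len(best):
                best = blob
    return best


def enclosing_circle(blob):
    """Centroid of the blob and the distance to its farthest pixel."""
    cx = sum(x for x, _ in blob) / len(blob)
    cy = sum(y for _, y in blob) / len(blob)
    radius = max(math.hypot(x - cx, y - cy) for x, y in blob)
    return (cx, cy), radius


def track(mask, previous=None, margin=20):
    """Return ((x, y), r) of the ball, or None if no ball-colored pixel is found.

    If `previous` = ((x, y), r) from the last frame is given, only a square window
    around that detection is searched (hypothetical `margin` in pixels).
    """
    window = None
    if previous is not None:
        (px, py), pr = previous
        half = int(pr) + margin
        window = (int(px) - half, int(py) - half, int(px) + half + 1, int(py) + half + 1)
    blob = largest_blob(mask, window)
    return enclosing_circle(blob) if blob else None


# Usage: a 3x3 ball and a larger 4x4 patch caused by color flickering.
mask = [[0] * 10 for _ in range(10)]
for y in range(10):
    for x in range(10):
        if (1 <= x <= 3 and 1 <= y <= 3) or (6 <= x <= 9 and 6 <= y <= 9):
            mask[y][x] = 1
print(track(mask))                                    # whole frame: picks the flicker patch
print(track(mask, previous=((2, 2), 1.5), margin=2))  # window: stays on the ball
```

Without the window the larger flicker patch wins; with the previous detection as a starting point, the patch lies outside the searched region and the ball is kept.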

Line detection

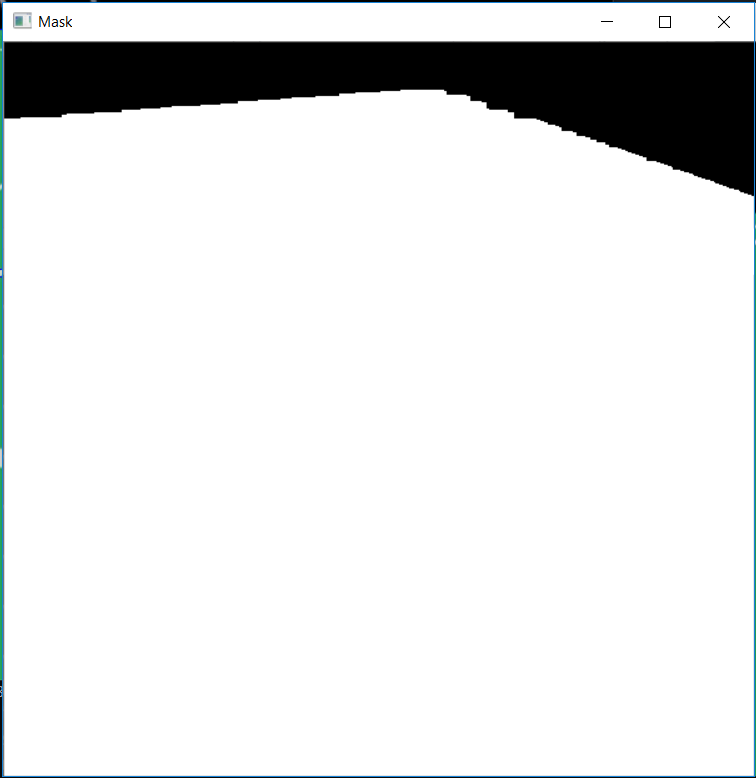

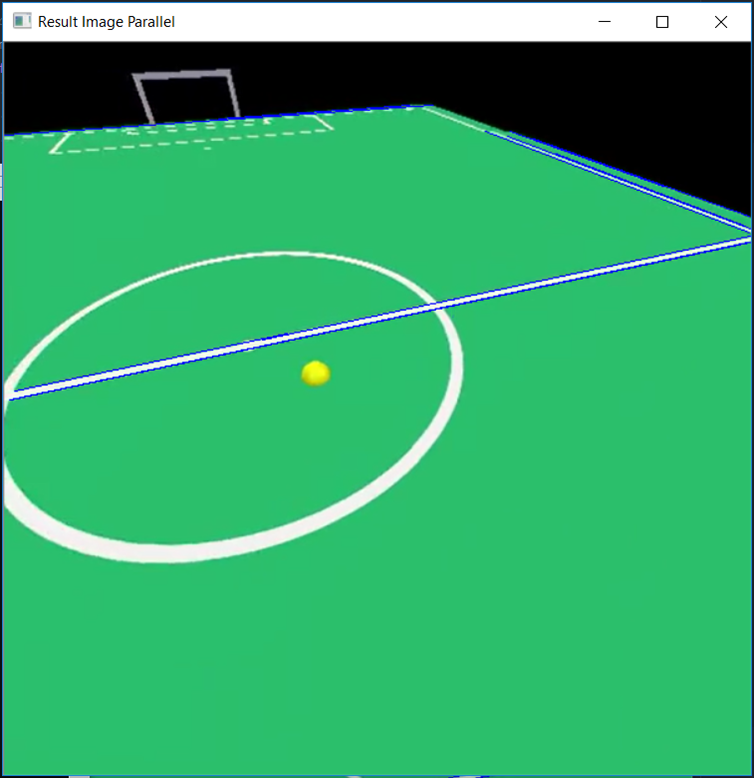

Unlike the ball tracking, the line detection was developed for the simulation and still needs to be adapted to work on the actual drone. The main function used to detect lines, or more precisely line segments, is HoughLinesP from the aforementioned cv2 package, which finds straight line segments in an image based on sharp changes in color (edges). To keep only the line segments we are interested in, we applied several filters and selections, each of which is described below using an example image.

The first filter is color-based, so that lines that are not on the field are not considered.
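A minimal sketch of such a field filter, assuming the field is the region spanned by green pixels: everything outside the bounding box of the field-colored pixels is set to black, so that edges off the field never reach the line detector. The green test in `is_field_green` and its thresholds are hypothetical. On a real image the field would more likely be found with an HSV threshold like the one used for the ball, and a filled contour or convex hull would follow a tilted field more closely than a bounding box.

```python
BLACK = (0, 0, 0)


def is_field_green(rgb):
    """Hypothetical field-color test: green clearly dominates red and blue."""
    r, g, b = rgb
    return g > 100 and g > r + 40 and g > b + 40


def mask_to_field(image):
    """Black out every pixel outside the bounding box of the field-colored pixels."""
    coords = [(x, y) for y, row in enumerate(image)
              for x, px in enumerate(row) if is_field_green(px)]
    if not coords:
        # No field visible: pass nothing on to the line detector.
        return [[BLACK for _ in row] for row in image]
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    return [[px if x0 <= x <= x1 and y0 <= y <= y1 else BLACK
             for x, px in enumerate(row)]
            for y, row in enumerate(image)]


# Usage: white surroundings, a green field and one white line pixel on the field.
W, G = (255, 255, 255), (30, 160, 40)
image = [
    [W, W, W, W, W],
    [W, G, G, G, W],
    [W, G, W, G, W],
    [W, G, G, G, W],
    [W, W, W, W, W],
]
filtered = mask_to_field(image)
print(filtered[2])  # the white pixel in the middle survives, the border pixels do not
```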

Next, we use HoughLinesP to obtain the line segments to work with.
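HoughLinesP is the probabilistic variant of the Hough transform: it works on a binary edge image (often produced with `cv2.Canny`) and returns each segment by its endpoints `x1, y1, x2, y2`. The voting idea behind it is easier to see in the standard Hough transform, sketched below in plain Python as an illustration rather than a reimplementation of HoughLinesP. Every edge point votes for all lines rho = x*cos(theta) + y*sin(theta) that pass through it, and lines supported by many points collect many votes. The function names and the 1 pixel / 1 degree bin sizes are assumptions.

```python
import math
from collections import Counter


def hough_accumulator(points, n_theta=180):
    """Vote in (rho, theta index) space; theta = pi * t / n_theta, rho rounded to 1 px."""
    votes = Counter()
    for x, y in points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, t)] += 1
    return votes


def detect_lines(points, threshold, n_theta=180):
    """Lines as (rho in px, theta in degrees) with at least `threshold` votes."""
    votes = hough_accumulator(points, n_theta)
    return sorted((rho, t * 180 / n_theta)
                  for (rho, t), n in votes.items() if n >= threshold)


# Usage: edge points of a horizontal line y = 5 and a vertical line x = 12.
edges = [(x, 5) for x in range(10)] + [(12, y) for y in range(10, 18)]
acc = hough_accumulator(edges)
print(acc[(5, 90)], acc[(12, 0)])  # 10 8
```

Neighbouring bins also collect many votes, which is one reason why a raw Hough result contains several nearly identical lines; the probabilistic variant additionally splits lines into segments using its minimum length and maximum gap parameters.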

Ideally, each white line is detected as exactly two segments, one along each edge of the painted line. This implies that every detected segment should have a corresponding parallel segment. Using this assumption, we filter out all segments that do not have such a parallel partner.
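A minimal sketch of this selection, assuming segments in the `(x1, y1, x2, y2)` endpoint format that HoughLinesP returns: a segment is kept only if at least one other segment has nearly the same direction. The 5 degree tolerance `max_angle` is a hypothetical value. A stricter version could also require the partner to lie roughly one line width away, which this sketch does not check.

```python
import math


def angle(seg):
    """Direction of a segment in degrees, folded into [0, 180) so orientation is ignored."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180


def angle_diff(a, b):
    """Smallest difference between two undirected angles, in degrees."""
    d = abs(a - b) % 180
    return min(d, 180 - d)


def keep_parallel_pairs(segments, max_angle=5.0):
    """Keep segments that have at least one other segment within `max_angle` degrees."""
    return [s for i, s in enumerate(segments)
            if any(i != j and angle_diff(angle(s), angle(t)) <= max_angle
                   for j, t in enumerate(segments))]


# Usage: the two edges of one white line, plus a lone vertical segment.
segments = [(0, 0, 100, 0), (0, 5, 100, 6), (50, -50, 50, 50)]
print(keep_parallel_pairs(segments))  # [(0, 0, 100, 0), (0, 5, 100, 6)]
```

Folding angles into [0, 180) makes a segment and the same segment with swapped endpoints count as parallel, which matters because the endpoint order returned by a line detector is arbitrary.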

Next, we noticed that there are often multiple segments close to each other where we only want to detect one line. To handle this, we compute the distance between line segments and merge the segments that lie close to each other.
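The merging step could look like the sketch below, again assuming `(x1, y1, x2, y2)` segments. The distance used here, the mean perpendicular distance from one segment's endpoints to the line through the other, is one possible choice and not necessarily the measure used in our code; the extra angle check (so that crossing lines are not merged) and both thresholds are assumptions. Two close segments are replaced by the segment spanning their two farthest-apart endpoints, and this repeats until no close pair is left.

```python
import math
from itertools import combinations


def angle(seg):
    """Direction of a segment in degrees, folded into [0, 180)."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180


def angle_diff(a, b):
    """Smallest difference between two undirected angles, in degrees."""
    d = abs(a - b) % 180
    return min(d, 180 - d)


def point_line_distance(px, py, seg):
    """Perpendicular distance from (px, py) to the infinite line through `seg`."""
    x1, y1, x2, y2 = seg
    length = math.hypot(x2 - x1, y2 - y1)
    if length == 0:
        return math.hypot(px - x1, py - y1)  # degenerate segment: plain point distance
    return abs((x2 - x1) * (y1 - py) - (x1 - px) * (y2 - y1)) / length


def segment_distance(a, b):
    """Mean distance of b's endpoints to the line through a."""
    return (point_line_distance(b[0], b[1], a) + point_line_distance(b[2], b[3], a)) / 2


def merge_pair(a, b):
    """Segment between the two farthest-apart endpoints of a and b."""
    points = [(a[0], a[1]), (a[2], a[3]), (b[0], b[1]), (b[2], b[3])]
    p, q = max(combinations(points, 2), key=lambda pq: math.dist(*pq))
    return (*p, *q)


def merge_close(segments, max_dist=4.0, max_angle=5.0):
    """Repeatedly merge pairs of nearly parallel segments that lie close together."""
    merged = list(segments)
    changed = True
    while changed:
        changed = False
        for i, j in combinations(range(len(merged)), 2):
            a, b = merged[i], merged[j]
            if angle_diff(angle(a), angle(b)) <= max_angle and segment_distance(a, b) <= max_dist:
                merged[i] = merge_pair(a, b)
                del merged[j]  # j > i, so index i is still valid
                changed = True
                break  # indices changed, so start scanning again
    return merged


# Usage: two overlapping detections of one line, plus a separate line 50 px away.
segments = [(0, 0, 100, 0), (10, 2, 120, 2), (0, 50, 100, 50)]
print(merge_close(segments))  # [(0, 0, 120, 2), (0, 50, 100, 50)]
```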