Autonomous Referee System


Revision as of 14:43, 22 March 2017

An objective referee for robot soccer

- 1. Main

- 2. Project

- 2.1 Background

- 2.2 System Objectives

- 2.3 Project Scope

- 3. System Architecture

- 3.1 Paradigm

- 3.2 Layered Approach

- 3.3 System Layer

- 3.4 Approach Layer

- 3.5 Implementation Layer

- 4. Implementation

- 4.1 Tasks

- 4.2 Skills

- 4.3 World Model

- 4.4 Hardware

- 4.5 Supervisory Block

- 4.6 Integration

- 6. Manuals

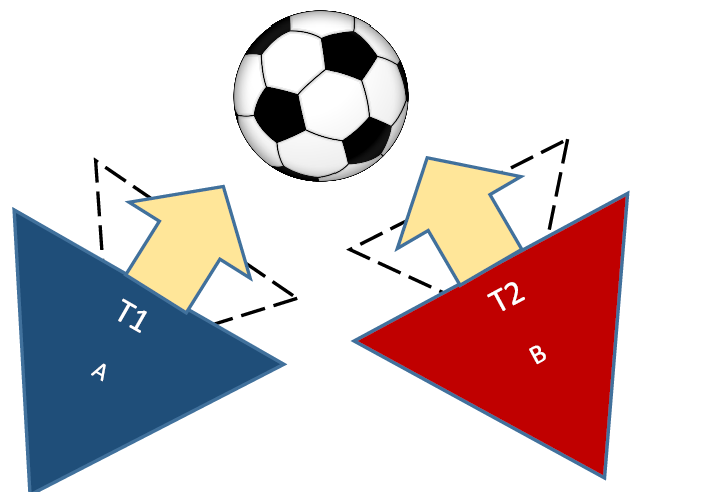

Building on the project carried out by the first generation of Mechatronics System Design trainees, this project aims to extend the previously developed system architecture and to prove the concept by implementing a robot-soccer match refereed by a drone and a ground robot. Working together, these two robots are expected to evaluate two rules:

1) the ball going out of the pitch, i.e. the outside rule, and

2) a collision between players, i.e. a situation in which a free kick must be awarded against the offending team.

This page describes the architecture, the hardware used, and the software developed for the implementation.

Team

This project was carried out for the second module of the 2016 MSD PDEng program. The team consisted of the following members:

- Tim Verdonschot

- Tuncay Uğurlu Ölçer

- Sa Wang

- Joep Wolken

- Farzad Mobini

- Jordy Senden

- Akarsh Sinha

Ground Robot

Requirements for Ground Robot

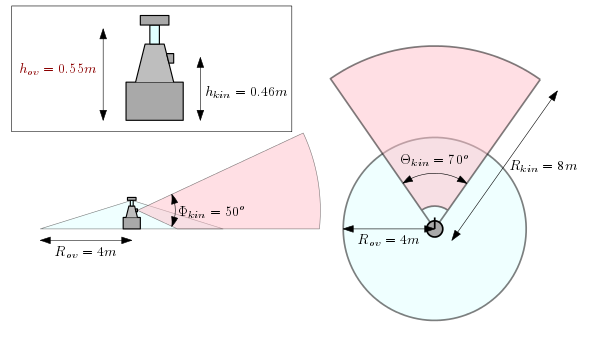

- Motion:

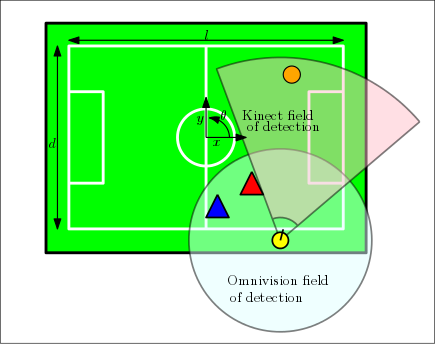

- The GR should be able to keep the ball in sight of its Kinect camera. If the ball is lost, GR should try to find it again with the Kinect.

- Since the ball is best tracked with the Kinect, the omni-vision camera can be used to keep track of the players.

- To accommodate the ball and player tracking, the GR needs to be able to drive next to the field at: x=-w/2+Δw, y=[-l/2,0], θ=[0,-π] during gameplay.

- Vision:

- Position self with respect to field lines

- Detect ball

- Estimate global ball position and velocity

- Detect objects (players) in field

- Estimate global position and velocity of objects

- Determine which team the player belongs to

- Communication:

- Send to laptop:

- Ball position + velocity estimate

- Player position + velocity estimate

- Player team/label

- Own position + velocity

- Own side/home goal

- Own detection of B.O.O.P. or Collision (maybe)

- Receive from laptop:

- Reference position

- Detection flag

- Extra:

- Get ball after B.O.O.P.

- Communicate with second Ground Robot
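The motion requirement can be turned into a reference pose for the GR. The sketch below is a minimal illustration, assuming a field-centred frame in metres, that w and l are the field width and length, and that the GR simply follows the ball's y-coordinate along the sideline while turning towards it. The function name and the clamping strategy are hypothetical, not the team's implementation.

```python
import math


def clamp(value: float, lo: float, hi: float) -> float:
    """Limit value to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))


def gr_reference_pose(ball_x: float, ball_y: float,
                      w: float, l: float, dw: float) -> tuple[float, float, float]:
    """Hypothetical GR reference pose (x, y, theta) alongside the field.

    Assumes a field-centred frame; w and l are taken to be the field width
    and length, and dw the offset from the sideline.
    """
    # Fixed lateral position next to the field: x = -w/2 + dw.
    x = -w / 2 + dw
    # Follow the ball along the sideline, restricted to y in [-l/2, 0].
    y = clamp(ball_y, -l / 2, 0.0)
    # Turn towards the ball, restricted to theta in [-pi, 0].
    theta = clamp(math.atan2(ball_y - y, ball_x - x), -math.pi, 0.0)
    return x, y, theta


if __name__ == "__main__":
    print(gr_reference_pose(ball_x=1.0, ball_y=-2.0, w=6.0, l=8.0, dw=0.5))
```

Clamping keeps the reference inside the allowed region even when the ball is in the other half, so the low-level controller never receives an unreachable target.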
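The communication requirements imply two message types between the GR and the laptop. The dataclasses below are a hypothetical sketch of such messages serialised as JSON; the field names, units and the JSON encoding are assumptions, since the transport and message format are not specified here.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TrackedObject:
    """Position (m) and velocity (m/s) estimate of the ball or a player."""
    x: float
    y: float
    vx: float
    vy: float
    label: str = ""  # e.g. team name for players; empty for the ball


@dataclass
class GroundRobotReport:
    """Hypothetical GR -> laptop message."""
    ball: TrackedObject
    players: list[TrackedObject] = field(default_factory=list)
    own_pose: tuple[float, float, float] = (0.0, 0.0, 0.0)      # x, y, theta
    own_velocity: tuple[float, float, float] = (0.0, 0.0, 0.0)
    home_goal: str = ""               # own side / home goal
    boop_detected: bool = False       # optional own B.O.O.P. detection
    collision_detected: bool = False  # optional own collision detection


@dataclass
class LaptopCommand:
    """Hypothetical laptop -> GR message."""
    reference_pose: tuple[float, float, float]
    detection_flag: bool


def encode(msg) -> bytes:
    """Serialise a message dataclass to UTF-8 JSON."""
    return json.dumps(asdict(msg)).encode("utf-8")


def decode_command(data: bytes) -> LaptopCommand:
    """Parse a LaptopCommand from UTF-8 JSON (JSON lists become tuples)."""
    d = json.loads(data.decode("utf-8"))
    return LaptopCommand(tuple(d["reference_pose"]), bool(d["detection_flag"]))
```

JSON keeps the messages readable while debugging; a binary format could replace it later without changing the dataclasses.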

Drone

- Parrot AR.Drone 2.0 Elite Edition

- 19 min flight time (extended battery)

- 720p camera (used at 360p)

- ~70° diagonal FOV (measured)

- Aspect ratio 16:9

Drone control

- Has its own software and controller

- Can be flown from MATLAB using the arrow keys

- Position-command control and the input data format are still to be implemented

- x, y, θ position feedback via the top camera and/or UWBS

- The z position (altitude) will be constant and chosen according to the FOV
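Because the altitude is to be chosen from the FOV, it helps to split the measured ~70° diagonal FOV into horizontal and vertical angles using the 16:9 aspect ratio. The sketch below assumes an ideal rectilinear (pinhole) camera looking perpendicular at the field; this is an assumption, as it ignores lens distortion and camera tilt.

```python
import math


def split_diagonal_fov(diag_fov_deg: float, aspect_w: float = 16.0,
                       aspect_h: float = 9.0) -> tuple[float, float]:
    """Return (horizontal, vertical) FOV in degrees for a pinhole camera."""
    diag = math.hypot(aspect_w, aspect_h)
    t = math.tan(math.radians(diag_fov_deg) / 2)
    h_fov = 2 * math.degrees(math.atan(t * aspect_w / diag))
    v_fov = 2 * math.degrees(math.atan(t * aspect_h / diag))
    return h_fov, v_fov


def footprint(z: float, diag_fov_deg: float = 70.0) -> tuple[float, float]:
    """Width and height (m) of the area seen from distance z (m)."""
    h_fov, v_fov = split_diagonal_fov(diag_fov_deg)
    return (2 * z * math.tan(math.radians(h_fov) / 2),
            2 * z * math.tan(math.radians(v_fov) / 2))


def altitude_for(area_w: float, area_h: float, diag_fov_deg: float = 70.0) -> float:
    """Lowest altitude (m) at which an area_w x area_h region fits in view."""
    w1, h1 = footprint(1.0, diag_fov_deg)  # footprint per metre of altitude
    return max(area_w / w1, area_h / h1)
```

Under these assumptions, 70° diagonal corresponds to roughly 63° horizontal by 38° vertical, so the visible area grows linearly with altitude.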

Positioning

The Positioning System block is responsible for generating the reference positions of the drone and the ground robot referee based on information about the players and the ball. The low-level controllers of both systems use this reference position as the desired state to track.

Currently:

- Ground referee (Turtle) focuses on ball

- Drone focuses on collision/players
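The focus split leaves open how the drone's reference is derived from the player estimates. A hypothetical sketch: place the drone above the midpoint of the closest pair of opposing players, on the assumption that this is where a collision is most likely, and fall back to a given point (e.g. the ball position) when a team has no detected players. The heuristic, the names and the 2-D (x, y) output are assumptions; z stays constant as stated for drone control.

```python
import math
from itertools import product

Point = tuple[float, float]  # (x, y) in metres


def drone_reference(team_a: list[Point], team_b: list[Point],
                    fallback: Point) -> Point:
    """Hypothetical drone (x, y) reference above the most likely collision.

    Picks the closest pair of opposing players and returns their midpoint;
    returns `fallback` if either team list is empty.
    """
    if not team_a or not team_b:
        return fallback
    a, b = min(product(team_a, team_b),
               key=lambda pair: math.dist(pair[0], pair[1]))
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)
```

With only a few players per team, checking every opposing pair is cheap enough to run on every update.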

Detection

The fault detection block should:

- Receive images and estimates of state-related parameters from the drone and the ground robot.

- Based on this information, evaluate which, if any, of the two rules (BOOP and collision) are violated.

- Communicate the final verdict to the respective referees.

- Collaboration with the ground ref:

  - Receive estimated:

    - Ball position and velocity

    - Player position and velocity

    - Position of the line/ball boundary

  - Transmit decision flag regarding BOOP

- Collaboration with the drone ref:

  - Receive estimated:

    - Player position and velocity

    - Ball position and velocity

  - Transmit decision flag regarding collision

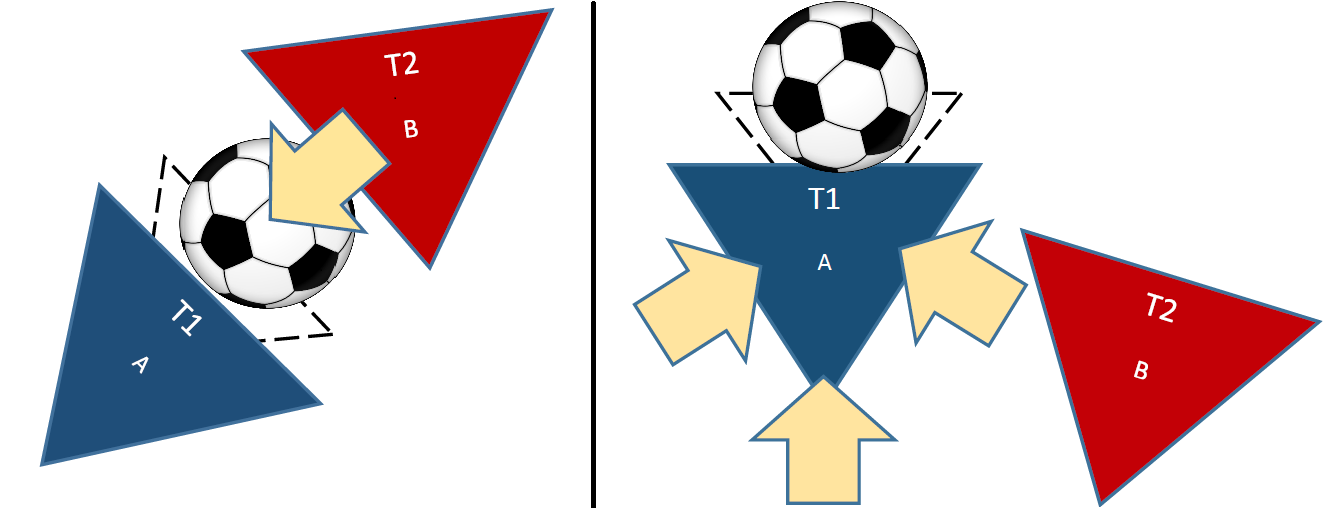
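The BOOP decision flag can be computed from the estimated ball position. The sketch below assumes a field-centred frame in metres with the touchlines at x = ±w/2 and the goal lines at y = ±l/2, and, as in the FIFA Laws of the Game, that the ball is out only once it has wholly crossed a line. The default ball radius (roughly a size-5 ball), the margin parameter and the function name are illustrative assumptions.

```python
def ball_out_of_pitch(ball_x: float, ball_y: float, w: float, l: float,
                      ball_radius: float = 0.11, margin: float = 0.0) -> bool:
    """Return True if the ball centre is more than one radius beyond a line.

    Assumes touchlines at x = +/- w/2 and goal lines at y = +/- l/2 (line
    width ignored). `margin` (m) absorbs estimation error before raising
    a BOOP flag.
    """
    limit_x = w / 2 + ball_radius + margin
    limit_y = l / 2 + ball_radius + margin
    return abs(ball_x) > limit_x or abs(ball_y) > limit_y
```

A positive margin trades a slightly later BOOP call for fewer false alarms caused by noisy position estimates.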

Definition of fault/foul

The definition of a foul (fault or offence) is based on the RoboCup MSL Rule Book [1]. Simple physical contact does not constitute an offence; the speed and impact of the contact determine whether it is a foul. Foul detection is formulated for two cases:

- Case 1: one of the robots (A) is in possession of the ball

  - A foul is committed in this case if robot B impedes the progress of its opponent A by:

    - Colliding with A after charging at it with velocity v

    - Pushing A with an (instantaneous) force ≥ F

    - Continuing to push for a time ≥ t seconds

    - Knocking the ball off A by a sudden (instantaneous) application of force ≥ F

  - Possible ways of measuring these:

    - Velocity

      - Visual odometry (image-based object velocity estimation)

    - Application of (instantaneous) force

      - Use visual odometry to calculate velocity/acceleration, including time data

      - Estimate the force accordingly

    - Continuous push (B is pushing A)

      - Detect an instantaneous application of force F

      - Detect whether B changes its direction of movement within t seconds

    - Knocking off the ball (visual data only)

      - Detect the collision

      - Detect the ball and player A after the collision

- Case 2: none of the robots is in possession of the ball

  - A foul is committed in this case if either robot A or robot B impedes the progress of its opponent by:

    - Colliding with a larger momentum (say, pB ≥ pA)

    - Continuing with that momentum for a time ≥ t seconds (dp/dt = 0 for t seconds after impact)

  - Possible ways of measuring these:

    - Momentum

      - Use visual odometry to estimate velocity (and elapsed time)

      - Estimate the momentum accordingly

    - Continuous application of momentum

      - Detect whether the offender changes its direction of movement within t seconds
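The Case 1 measurements all start from tracked positions with timestamps. As a minimal sketch, assuming positions come from visual tracking at known times and that each robot's mass is known, velocity, acceleration and force can be estimated with finite differences (v ≈ Δx/Δt, a ≈ Δv/Δt, F = m·a). A push is then flagged when the peak force reaches F or when B does not reverse direction within t seconds of impact. Thresholds, masses and function names are hypothetical; raw finite differences amplify noise, so real tracking data would need filtering first.

```python
import math

Sample = tuple[float, float, float]  # (time in s, x in m, y in m)


def finite_difference(samples: list[Sample]) -> list[Sample]:
    """Differentiate (t, x, y) samples into (t_mid, dx/dt, dy/dt)."""
    out = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        out.append(((t0 + t1) / 2, (x1 - x0) / dt, (y1 - y0) / dt))
    return out


def peak_force(samples: list[Sample], mass: float) -> float:
    """Largest force magnitude m * |a|, with a from a second finite difference."""
    accel = finite_difference(finite_difference(samples))
    return max((mass * math.hypot(ax, ay) for _, ax, ay in accel), default=0.0)


def keeps_pushing(samples: list[Sample], t_impact: float, t_min: float) -> bool:
    """True if the robot does not reverse direction during t_min s after impact.

    A reversal is a negative (or zero) dot product between a later velocity
    and the first velocity estimate after impact, so stopping also counts.
    """
    after = [v for v in finite_difference(samples) if v[0] >= t_impact]
    if not after or after[-1][0] - after[0][0] < t_min:
        return False  # too little data after impact to decide
    t_start, vx0, vy0 = after[0]
    return all(vx * vx0 + vy * vy0 > 0
               for t, vx, vy in after if t - t_start <= t_min)


def case1_foul(samples_b: list[Sample], mass_b: float, t_impact: float,
               f_max: float, t_max: float) -> bool:
    """Hypothetical Case 1 check: robot B reaches force F or keeps pushing."""
    return (peak_force(samples_b, mass_b) >= f_max
            or keeps_pushing(samples_b, t_impact, t_max))
```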
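For Case 2, the momentum criterion can be sketched in the same spirit: compare the momenta p = m·|v| just before impact and flag the robot with the larger momentum only if it keeps moving in the same direction during the t seconds after impact, which is the direction-change test listed under the measurement methods. This is a hypothetical illustration: masses and velocity inputs are assumed to be available, and treating equal momenta as "no clear offender" is a choice of this sketch.

```python
import math
from typing import Optional

Vec = tuple[float, float]  # (vx, vy) in m/s


def momentum(mass: float, velocity: Vec) -> float:
    """Magnitude of linear momentum, p = m * |v| (kg m/s)."""
    return mass * math.hypot(*velocity)


def case2_offender(mass_a: float, vel_a: Vec, mass_b: float, vel_b: Vec,
                   vel_after: dict[str, list[Vec]]) -> Optional[str]:
    """Return "A" or "B" for the offending robot, or None if no foul is found.

    vel_a, vel_b: velocities just before impact.
    vel_after: per-robot velocities sampled during the t seconds after impact.
    The robot with the larger momentum is flagged only if none of its later
    velocities points against its pre-impact direction (dot product <= 0).
    """
    p_a, p_b = momentum(mass_a, vel_a), momentum(mass_b, vel_b)
    if math.isclose(p_a, p_b):
        return None  # equal momenta: no clear offender
    name, v0 = ("A", vel_a) if p_a > p_b else ("B", vel_b)
    later = vel_after.get(name, [])
    keeps_going = bool(later) and all(
        v[0] * v0[0] + v[1] * v0[1] > 0 for v in later)
    return name if keeps_going else None
```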

Image processing

Capturing images

Objective: capture images from the (front) camera of the drone.

Method:

- MATLAB

  - FFmpeg

  - ipcam

  - gigecam

  - hebicam

- C/C++/Java/Python

  - OpenCV

… No method has been chosen yet. ipcam, gigecam and hebicam were tested and do not work with the drone's camera. FFmpeg was also tested and does work, but capturing a single image takes 2.2 s, which is far too slow. Therefore, it may be better to use software written in C/C++ instead of MATLAB.
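Since capture latency decides between the candidate methods, it helps to time each one the same way. The sketch below times successive `read()` calls on any object following the interface of OpenCV's `cv2.VideoCapture`, whose `read()` returns an `(ok, frame)` pair. Opening the drone stream is shown only in a comment: the address `tcp://192.168.1.1:5555` (the AR.Drone 2.0 default IP and video port) is an assumption, not something reported on this page.

```python
import time
from statistics import mean


def time_capture(cap, n_frames: int = 30) -> dict:
    """Time up to n_frames successive reads from a VideoCapture-like object.

    `cap.read()` must return (ok, frame), as cv2.VideoCapture.read() does.
    Stops early at the first failed read.
    """
    durations = []
    for _ in range(n_frames):
        start = time.perf_counter()
        ok, _frame = cap.read()
        if not ok:
            break
        durations.append(time.perf_counter() - start)
    avg = mean(durations) if durations else float("nan")
    fps = 1.0 / avg if durations and avg > 0 else 0.0
    return {"frames": len(durations), "mean_s": avg, "fps": fps}


# Hypothetical use with OpenCV (requires the opencv-python package):
#   import cv2
#   cap = cv2.VideoCapture("tcp://192.168.1.1:5555")  # assumed stream URL
#   if cap.isOpened():
#       print(time_capture(cap))
#   cap.release()
```

A per-frame time well below the 2.2 s measured for FFmpeg from MATLAB would indicate that the capture path is no longer the bottleneck.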

Processing images

Objective: estimate the player (and possibly the ball) positions from the captured images.

Method: detect the ball position (if the ball is in the image) based on its orange/yellow colour, and detect the player positions based on their shape and/or colour (to be determined).
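The colour-based ball detection can be sketched as an HSV threshold followed by a centroid. To stay self-contained, the version below uses only the standard library (`colorsys`) on an image given as rows of 8-bit `(r, g, b)` tuples; with OpenCV the same steps map onto `cv2.cvtColor`, `cv2.inRange` and `cv2.moments`. The hue, saturation and value thresholds for an orange/yellow ball are assumptions that would need tuning on real drone images.

```python
import colorsys

Pixel = tuple[int, int, int]  # 8-bit (r, g, b)


def is_ball_colour(px: Pixel, hue_range: tuple[float, float] = (0.03, 0.18),
                   min_sat: float = 0.5, min_val: float = 0.4) -> bool:
    """True if a pixel falls in the assumed orange/yellow HSV band."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in px))
    return hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val


def find_ball(image: list[list[Pixel]], min_pixels: int = 5):
    """Return the (row, col) centroid of ball-coloured pixels, or None.

    None is returned when fewer than min_pixels match (ball not in image).
    """
    hits = [(r, c) for r, row in enumerate(image)
            for c, px in enumerate(row) if is_ball_colour(px)]
    if len(hits) < min_pixels:
        return None
    return (sum(r for r, _ in hits) / len(hits),
            sum(c for _, c in hits) / len(hits))
```

The `min_pixels` threshold is a simple guard against isolated mis-coloured pixels being reported as the ball.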

Top Camera

The topcam is a camera fixed above the playing field. It is used to estimate the location and orientation of the drone, and this estimate serves as feedback so that the drone can move to a desired position.

The topcam can stream images to the laptop at 30 Hz, but searching each image for the drone (i.e. the image processing) may be slower. This is not a problem: the drone's own positioning is far from perfect and is not critical either. As long as the target of interest (ball, players) is within the drone's field of view, the positioning is acceptable.
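One hypothetical way to obtain the drone's location and orientation from the topcam is to put two distinguishable markers on the drone (front and rear), detect their pixel positions, and convert them to field coordinates. The sketch assumes an overhead camera with a uniform scale (metres per pixel) and a known pixel position of the field centre, i.e. no lens distortion or perspective correction; the marker scheme, parameter names and axis conventions are assumptions, not the team's method.

```python
import math


def pixel_to_field(u: float, v: float, centre_uv: tuple[float, float],
                   metres_per_pixel: float) -> tuple[float, float]:
    """Map image pixel (u right, v down) to field (x right, y up) in metres."""
    return ((u - centre_uv[0]) * metres_per_pixel,
            -(v - centre_uv[1]) * metres_per_pixel)


def drone_pose(front_uv: tuple[float, float], rear_uv: tuple[float, float],
               centre_uv: tuple[float, float],
               metres_per_pixel: float) -> tuple[float, float, float]:
    """Estimate drone (x, y, theta) from front and rear marker pixels.

    Position is the midpoint of the markers; theta is the heading from the
    rear marker to the front marker, in radians in [-pi, pi].
    """
    fx, fy = pixel_to_field(*front_uv, centre_uv, metres_per_pixel)
    rx, ry = pixel_to_field(*rear_uv, centre_uv, metres_per_pixel)
    return ((fx + rx) / 2, (fy + ry) / 2, math.atan2(fy - ry, fx - rx))
```

Because only two marker positions are needed per frame, the pose computation itself is negligible next to the marker search in the image.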