Mobile Robot Control 2020 Group 6: Difference between revisions


<div style="width:calc(100vw - 200px);background-color:#EFEFEF;padding-bottom:40px;position:absolute;top:0;left:0;"> | <div style="width:calc(100vw - 200px);background-color:#EFEFEF;padding-bottom:40px;position:absolute;top:0;left:0;"> | ||

<div style="font-size: 13px; max-width: 1000px; word-wrap: break-word; color: #333; font-weight: 400; margin-left: auto; margin-right: auto; padding: 70px; background-color: white; padding-top: 25px;"> | <div style="font-size: 13px; max-width: 1000px; word-wrap: break-word; color: #333; font-weight: 400; margin-left: auto; margin-right: auto; padding: 70px; background-color: white; padding-top: 25px;"> | ||

=Group 6=

Welcome to the Wiki page of group six of the course Mobile Robot Control 2020! On this Wiki, we introduce the challenges we faced when trying to control an autonomous robot and the solutions we came up with to tackle them. This page can be used as a reference when designing your own robot software, or as an idea on how to approach this course from an organisational perspective. The goal of this course is to design software that allows the autonomous robot PICO first to escape a room and, eventually, to navigate a hospital setting in the presence of unknown disturbances. The shape of the escape room is known to be rectangular, but its size is completely unknown to the robot, as are the initial position and orientation with respect to the exit. For the hospital challenge, a rough floor plan is provided beforehand, but the rooms and corridors are filled with static objects. In order to mimic people in the hospital, unpredictable dynamic objects are also present. In a practical setting, software that tackles these issues would allow a robot similar to PICO to assist nurses and doctors in a hospital by performing small tasks, such as transporting medicine and tools, while being safe to work around at all times. This means that the software we were to design had to be robust, even in the presence of unknown, possibly moving objects; efficient, to avoid clutter in the hospital; and user-friendly, to make sure that people can understand and predict the robot and its actions.

This page is roughly split into two parts: the first covers the relatively simple code required to traverse the escape room, and the second describes the more elaborate code for the Hospital Challenge. Within each part, the general code structure is presented first, after which a strategy is proposed in the form of a Finite State Machine. Then, with the foundations of the code laid, each component is explained in more depth. In short, for the Escape Room Challenge, we decided to detect walls and edges directly from laser range finder (LRF) data, from which a distinction could be made between walls of the room and walls of the exit corridor. Subsequently, a target point was calculated along one of the observed walls and the robot was steered towards this target point. For the Hospital Challenge, we used a feature-based Monte Carlo particle filter working with convex and concave edges to localise the robot in the hospital. With the known shape of the hospital and the robot's position, a computationally light, optimal A* algorithm based on a network of efficient, pre-defined waypoints was designed for navigation between the rooms. Finally, a potential-field-based collision avoidance algorithm was implemented to ensure safety for the robot and its surroundings. At the end of each part, the performance during the live demonstrations is shown and commented upon, accompanied by recommendations and improvements.

=Table of Contents=

__TOC__

=Group Members=


Pim Scheers, 0906764

<br>

=Design Document=

At the start of the project, all customer specifications and preferences were rewritten in our own words, in order to have a comprehensible and concise overview of the important functions our software needed to have. The most important requirements are, as mentioned before, usefulness, reliability and ease of use, which are explained in more detail in the Design Document. This document also includes research into the bare functionality of PICO, from which the inputs and outputs of our software were derived. In short, PICO measures the distance to surrounding objects at 1000 points using an LRF, and monitors the movement of its omniwheels. PICO is actuated through three signals, namely a longitudinal, a lateral and a rotational velocity, all three of which can be actuated independently through those omniwheels.

Regarding the overall software structure, there are relatively few differences between both challenges. In both cases, the environment needs to be interpreted, decisions have to be made on what to do, and these actions have to be performed. Based on this insight, an information architecture was developed for the Escape Room Challenge, which was extended and adapted for the Hospital Challenge. This information architecture contains the general software structure, the most important components and their key functionalities. It was used as a basis for the software design and task division.

The Design Document, which describes the design requirements, specification, components, functions and interfaces, can be found [[:File:4SC020_Design_Document_Group_6.pdf|here]].

=Escape Room Challenge=

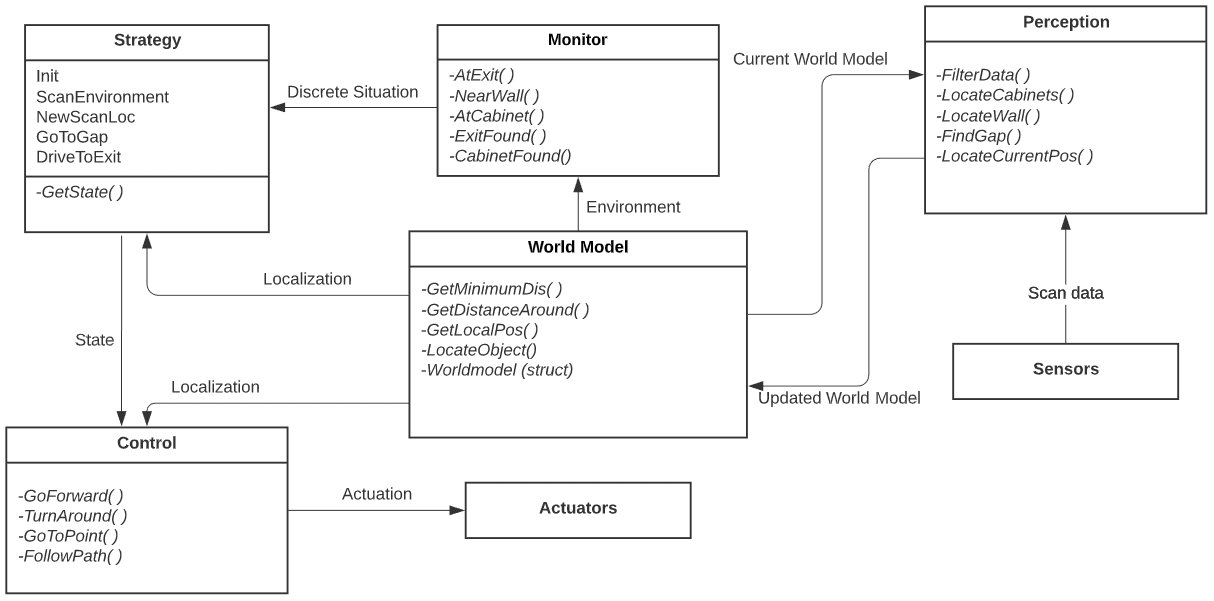

The Escape Room Challenge required PICO to escape a room with limited prior knowledge of the environment. The information architecture of the embedded software was designed simultaneously with the design document, the main components being ''PERCEPTION'', ''WORLD MODEL'', ''MONITOR & STRATEGY'' and ''CONTROL''. ''PERCEPTION'' is tasked with the interpretation of the sensor signals, the ''WORLD MODEL'' stores all data which need to be memorised, and ''MONITOR'' assesses the information in the world model to allow ''STRATEGY'' to take the right decisions. Finally, ''CONTROL'' calculates the outputs of the software system to have the robot perform the tasks desired by ''STRATEGY''.

== Information architecture ==

[[File:InterfacesV2.PNG|thumb|center|1000px|Information architecture of the software during the escape room challenge]]

== Monitor and strategy ==

The goal of ''MONITOR'' is to map the current situation into discrete states using information of the environment.

For the escape room, four different conditions are monitored, namely whether a wall, a gap, a corner or an exit wall has been found by ''PERCEPTION''.

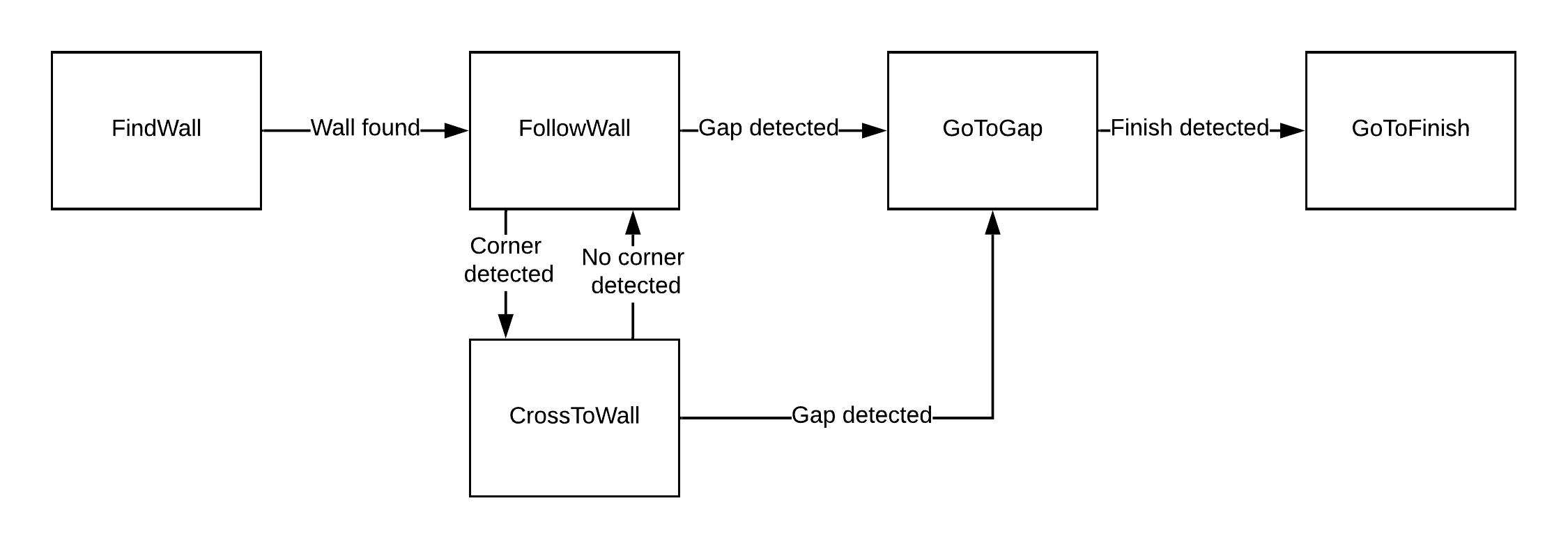

''STRATEGY'' controls a Finite State Machine, shown in the figure below, that is used to determine which action ''CONTROL'' should take. The discrete states from ''MONITOR'' are used as the guards of this finite state machine. When the state machine is in the state FindWall, ''CONTROL'' gets the objective to move until a wall is detected. In the state FollowWall, ''CONTROL'' follows the wall which is closest to the robot. From FollowWall, the state machine can either go to GoToGap, when a gap is detected, or to CrossToWall. In CrossToWall, the objective for ''CONTROL'' is to follow the wall that is perpendicular to the wall it is currently following. This way the corner is cut off. When the gap is detected, PICO goes directly to this gap, and when it recognizes the finish it drives to the finish.

[[File:FSM escaperoomchallenge.png|thumb|center|900px|Finite State Machine used in the escape room challenge.]]
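The transitions described above could be sketched as a single function that maps the current state and the monitored conditions to the next state. This is a minimal sketch and not the code that ran on PICO: only the state names come from the text, while <code>MonitorFlags</code>, <code>nextState</code> and the exit condition of CrossToWall are assumptions made for illustration.

```cpp
// Hypothetical names; only the five state names are taken from the text.
enum class State { FindWall, FollowWall, CrossToWall, GoToGap, GoToFinish };

// The four discrete conditions that MONITOR derives from PERCEPTION.
struct MonitorFlags {
    bool wall_found = false;
    bool gap_found = false;
    bool corner_found = false;
    bool exit_wall_found = false;
};

// Returns the state for the next time step.
State nextState(State s, const MonitorFlags& m) {
    switch (s) {
        case State::FindWall:
            return m.wall_found ? State::FollowWall : State::FindWall;
        case State::FollowWall:
            if (m.gap_found) return State::GoToGap;  // a gap takes priority
            if (m.corner_found) return State::CrossToWall;
            return State::FollowWall;
        case State::CrossToWall:
            if (m.gap_found) return State::GoToGap;
            // Assumption: resume wall following once the corner is out of view.
            return m.corner_found ? State::CrossToWall : State::FollowWall;
        case State::GoToGap:
            return m.exit_wall_found ? State::GoToFinish : State::GoToGap;
        case State::GoToFinish:
            return State::GoToFinish;
    }
    return s;  // not reached; keeps compilers quiet
}
```

Keeping the guards in a plain struct mirrors the split between ''MONITOR'', which fills in the flags, and ''STRATEGY'', which only evaluates them.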

| | |||

| | |||

== Perception ==

The objective of the Escape Room Challenge is finding and driving out of the exit. To achieve this, the robot should recognize the exit and find its location, which is the main objective of ''PERCEPTION''. For this challenge, the features of the room are stored in the ''WORLD MODEL'' in coordinates local to PICO. First of all, unusable data points of the LRF sensor are filtered out. The unusable data points are laser beams which do not lie within the valid range of the LRF (between 0.2 and 10 meters). Line detection and edge detection functions have been implemented in order to detect the walls of the room in local coordinates. This way, at each time step, PICO can observe the begin point, the end point and the nearest point of each wall. The exit is found by observing a gap between line segments. When this is the case, the exit walls are added to the ''WORLD MODEL'' separately. PICO constantly processes the data in order to recognize an exit.
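The range filter mentioned above could look roughly as follows. This is a sketch under assumptions: the function and type names (<code>filterScan</code>, <code>ScanPoint</code>) and the parameters <code>angle_min</code> and <code>angle_inc</code> are hypothetical and stand in for whatever the laser interface provides; only the 0.2 m and 10 m limits are taken from the text.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x; double y; };

// One usable LRF return: beam index plus position in PICO's local frame.
struct ScanPoint { std::size_t index; Vec2 pos; };

// Keep only beams whose range lies inside [range_min, range_max] and convert
// them to local Cartesian coordinates. angle_min is the angle of beam 0 and
// angle_inc the angle between neighbouring beams (both assumed inputs).
std::vector<ScanPoint> filterScan(const std::vector<double>& ranges,
                                  double angle_min, double angle_inc,
                                  double range_min = 0.2,
                                  double range_max = 10.0) {
    std::vector<ScanPoint> out;
    out.reserve(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double r = ranges[i];
        if (!(r >= range_min && r <= range_max)) continue;  // also drops NaN
        const double a = angle_min + static_cast<double>(i) * angle_inc;
        out.push_back({i, {r * std::cos(a), r * std::sin(a)}});
    }
    return out;
}
```

The original beam index is kept alongside each point so that later steps can still tell which beams were neighbours in the raw scan.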

A more detailed description of the line, edge and gap (exit) detection can be seen below:

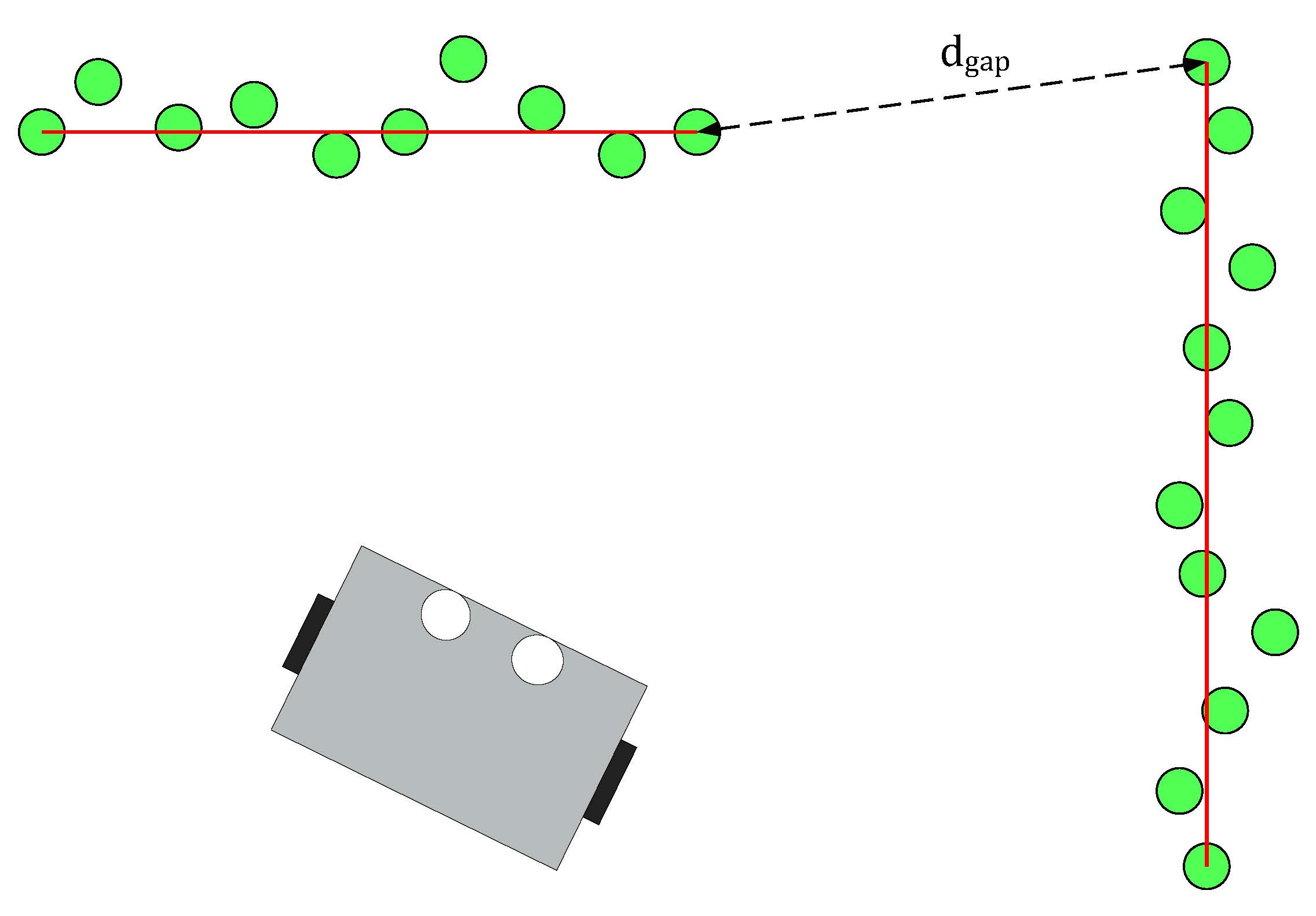

* ''Line detection'': the LRF data consist of 1000 points, each with a range value, which is the absolute distance to PICO. The line detection function loops over the data and calculates the absolute distance between two neighboring data points. When this distance exceeds the value d<sub>gap</sub>, the line segment is split at that point.

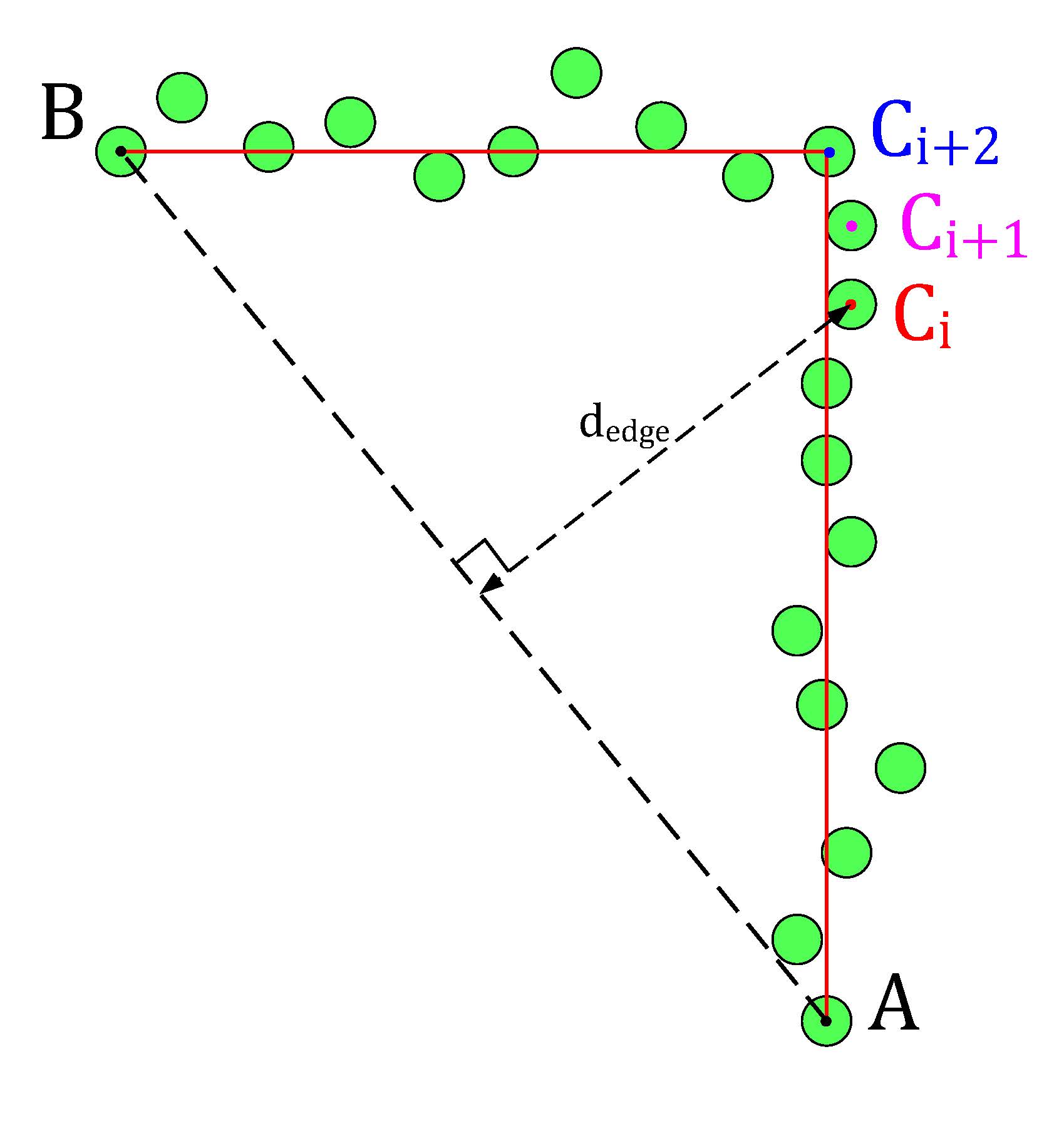

* ''Edge detection'' [3]: the line detection algorithm only detects whether neighboring data points lie far apart. The edge detection function detects whether the line segments (which result from the line detection) contain edges. The basic idea of the implemented algorithm can be seen in the figure below. The line segment in that figure has a starting data point A and an end point B. A virtual line, AB, is then drawn from point A to B. Finally, the distance d<sub>edge</sub> from each data point C<sub>i</sub> inside the segment to the virtual line AB is calculated. The data point with the largest value of d<sub>edge</sub> can be considered an edge.
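The two detection steps above can be sketched as follows. This is an illustration rather than the implementation used on PICO: the names <code>splitSegments</code>, <code>findEdge</code> and <code>Segment</code> are hypothetical, and the minimum distance <code>d_edge_min</code> is an added assumption so that measurement noise on a straight wall is not reported as an edge.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x; double y; };
struct Segment { std::size_t first; std::size_t last; };  // inclusive indices

double dist(const Vec2& a, const Vec2& b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Line detection: split the ordered scan wherever two neighbouring points
// are more than d_gap apart.
std::vector<Segment> splitSegments(const std::vector<Vec2>& pts, double d_gap) {
    std::vector<Segment> segs;
    if (pts.empty()) return segs;
    std::size_t start = 0;
    for (std::size_t i = 1; i < pts.size(); ++i) {
        if (dist(pts[i - 1], pts[i]) > d_gap) {
            segs.push_back({start, i - 1});
            start = i;
        }
    }
    segs.push_back({start, pts.size() - 1});
    return segs;
}

// Perpendicular distance from point c to the virtual line through a and b.
double distToLine(const Vec2& a, const Vec2& b, const Vec2& c) {
    const double len = dist(a, b);
    if (len == 0.0) return dist(a, c);
    return std::fabs((b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x)) / len;
}

// Edge detection: find the interior point C_i farthest from the line AB.
// Returns false if no point is farther away than d_edge_min (assumed threshold).
bool findEdge(const std::vector<Vec2>& pts, const Segment& seg,
              double d_edge_min, std::size_t& edge_index) {
    double best = d_edge_min;
    bool found = false;
    for (std::size_t i = seg.first + 1; i < seg.last; ++i) {
        const double d = distToLine(pts[seg.first], pts[seg.last], pts[i]);
        if (d > best) { best = d; edge_index = i; found = true; }
    }
    return found;
}
```

A segment that contains an edge can then be split at <code>edge_index</code> and checked again, so that a segment with several corners is resolved step by step.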


With the ability to observe and locate walls, gaps can be easily detected. The basic idea of this gap detection algorithm is that the robot looks for large distances between subsequent lines. The threshold for this distance can be tuned in order to set the minimum gap size. The ''WORLD MODEL'' not only stores the local coordinates of gaps, but also the exit walls. The function '''gapDetect''' in the class ''PERCEPTION'' is responsible for storing both the gaps and the exit walls in the ''WORLD MODEL''. The visualization beneath shows the localization of a gap in a room. The bright red circle represents the set-point towards which PICO will drive. This set-point includes a small adjustable margin which prevents collisions with nearby walls.
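A gap search of this kind could be sketched as below. The sketch is not the actual '''gapDetect''' function: the function name <code>detectGaps</code> and the parameters are hypothetical, and the way the margin is applied (moving both gap end points inwards along the gap) is an assumption, since the text only states that a margin is added.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x; double y; };
struct Line { Vec2 begin; Vec2 end; };

struct Gap {
    Vec2 coords[2];   // raw gap end points
    Vec2 mcoords[2];  // end points moved inwards by the margin (assumed meaning)
    double size;      // distance between the raw end points
};

// Compare the end of each line with the start of the next one and report
// every opening that is at least min_gap wide.
std::vector<Gap> detectGaps(const std::vector<Line>& lines,
                            double min_gap, double margin) {
    std::vector<Gap> gaps;
    for (std::size_t i = 0; i + 1 < lines.size(); ++i) {
        const Vec2 a = lines[i].end;
        const Vec2 b = lines[i + 1].begin;
        const double size = std::hypot(b.x - a.x, b.y - a.y);
        if (size < min_gap || size == 0.0) continue;  // too small to be an exit
        const Vec2 u = {(b.x - a.x) / size, (b.y - a.y) / size};  // unit vector a -> b
        Gap g;
        g.coords[0] = a;
        g.coords[1] = b;
        g.mcoords[0] = {a.x + margin * u.x, a.y + margin * u.y};
        g.mcoords[1] = {b.x - margin * u.x, b.y - margin * u.y};
        g.size = size;
        gaps.push_back(g);
    }
    return gaps;
}
```

Raising <code>min_gap</code> makes the detector ignore small openings between line segments, at the cost of missing narrow exits.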

<!--


* Adjustable parameter GAP_MARGIN, which, as previously mentioned, adds a margin to the gap set-point.

With these features, a rather robust ''PERCEPTION'' component has been developed. The resulting performance can be seen in the recording below. The detected lines and gap have been visualized. Small gaps and short lines which are present in this custom map are ignored.

[[File:2020_6_Percept_IgnoreGaps.gif|center|frame|1200px|Ignoring small gaps and short lines]]

<!--

Besides detecting the exit, the robot should also know where to drive to when one is found. First, this should be the position just before the beginning of the exit. Hereafter, the robot should drive at least a distance of 3 meters in the direction of this exit. To determine these locations, three situations are distinguished. The robot can detect the exit on its right side, it can detect the exit on its left side and it can look directly into the exit. The first two situations are distinguished by the order in which the robot looks into the exit, i.e. if the difference the LRF detects is increasing or decreasing in subsequent beams. If the two walls before and after the big step difference in two subsequent beams are parallel, the robot is in the third situation. From each situation, the position in front of the exit is calculated geometrically. This local position is updated in the ''WORLD MODEL''.

-->

== World Model ==

The ''WORLD MODEL'' in the Escape Room Challenge stored the following features:

* ''segments'': this member variable in the world model class contains every line segment in a vector. A ''Line'' struct has been added which stores the index and the coordinates of the begin and end position of a segment. The coordinates are stored in a Vec2 struct.

* ''gaps'': this member variable in the world model class contains the perceived gaps in a vector. A ''Gap'' struct has been implemented which stores the gap coordinates (Coords), the gap coordinates including a margin (MCoords) and the gap size.

* ''exit_walls'': this member variable contains the 2 exit walls in a vector. These walls are stored as the aforementioned ''Line'' struct.

Keep in mind that these features are renewed constantly during the operation of PICO.

== Control ==

In ''CONTROL'', a main functionality is to drive towards a target. Therefore the function "GoToPoint()" was created. This function allows PICO to drive towards a point in its local coordinates. The input is a vector which defines the point in local coordinates. Reference velocities are sent to the base in order to drive towards this point. Updating this point frequently ensures that the robot has very limited drift, as the reference, and thus the trajectory, is adjusted continuously. When the angle towards the point is too large, the robot does not drive and only turns; the angle at which this happens is a tunable parameter.
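The behaviour of such a function can be sketched as follows. This is a minimal sketch and not the actual "GoToPoint()": the signature, the <code>Velocity</code> struct, the proportional rotation with unit gain and the axis convention (x forward, y to the left) are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cmath>

struct Vec2 { double x; double y; };
struct Velocity { double vx; double vy; double omega; };

// Reference velocities towards a target given in PICO's local frame.
// turn_only_angle is the tunable angle above which PICO only rotates.
Velocity goToPoint(const Vec2& target, double max_speed, double max_rot,
                   double turn_only_angle) {
    const double angle = std::atan2(target.y, target.x);
    Velocity v = {0.0, 0.0, 0.0};
    // Rotate towards the target, saturated at max_rot (unit gain assumed).
    v.omega = std::max(-max_rot, std::min(max_rot, angle));
    if (std::fabs(angle) > turn_only_angle) return v;  // turn in place first
    const double d = std::hypot(target.x, target.y);
    if (d > 0.0) {
        // Translate at max_speed in the direction of the target.
        v.vx = max_speed * target.x / d;
        v.vy = max_speed * target.y / d;
    }
    return v;
}
```

Because the target is expressed in local coordinates and recomputed every time step, no global pose estimate is needed for this controller.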

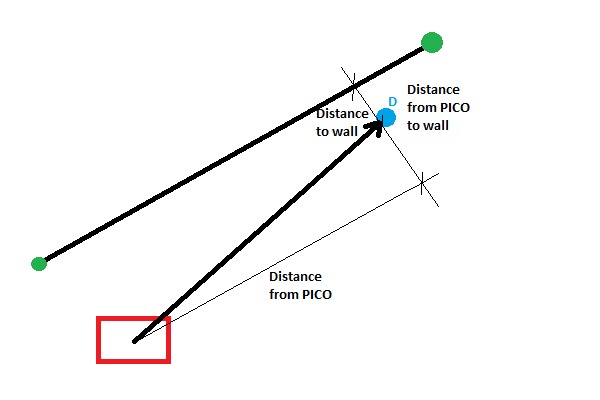

For our strategy, it is necessary that PICO can follow a wall, hence a “FollowWall( )” function was created. The “FollowWall( )” function creates an input point (vector) for the “GoToPoint( )” function. To create this point, two parameters are used: one for the distance from the wall to the destination point, and one for the distance from PICO to the destination point. Both are tunable parameters. With the use of some vector calculations, this point is created in local coordinates. The benefit of this method is that drift is eliminated, since the point is updated each time step. In addition, PICO approaches the wall in a smooth curve, and the shape of this curve is easily tuned by altering the parameters.

The following figure presents this approach.

[[File:picowallfollow.jpg|thumb|center|900px|Follow wall algorithm.]]
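One possible form of the vector calculation is sketched below. It is a reconstruction under assumptions and may differ from the actual “FollowWall( )”: the function name and arguments are hypothetical, and the wall is assumed to be described by its nearest point to PICO and a unit vector along the wall in the driving direction.

```cpp
#include <cmath>

struct Vec2 { double x; double y; };

// nearest:   closest wall point in PICO's local frame (PICO is at the origin).
// dir:       unit vector along the wall in the desired driving direction.
// wall_dist: desired distance between the wall and the target point.
// look_dist: desired distance between PICO and the target point.
Vec2 followWallTarget(const Vec2& nearest, const Vec2& dir,
                      double wall_dist, double look_dist) {
    const double r = std::hypot(nearest.x, nearest.y);
    if (r == 0.0) return {0.0, 0.0};  // degenerate case: PICO is on the wall
    // Foot point: the nearest wall point moved wall_dist back towards PICO.
    const double scale = 1.0 - wall_dist / r;
    const Vec2 foot = {nearest.x * scale, nearest.y * scale};
    const double f = std::hypot(foot.x, foot.y);
    // Slide along the wall until the target is look_dist away from PICO.
    const double s = look_dist > f ? std::sqrt(look_dist * look_dist - f * f) : 0.0;
    return {foot.x + s * dir.x, foot.y + s * dir.y};
}
```

For example, with a wall 1 m to the right, <code>wall_dist</code> = 0.4 and <code>look_dist</code> = 1.0, the target lies 0.8 m ahead and 0.6 m to the right, so PICO closes in on the wall gradually instead of turning straight towards it.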

== Challenge ==

On May 13th the Escape Room Challenge was held, where the task was to exit a simple rectangular room through its one exit without any prior information about the room. We had prepared two branches of code, so that we would have a backup. With the software described in the previous sections, the first attempt showed behavior which was very close to the video below. Unfortunately, when the robot turned its back towards the wall it should be following, it got stuck in a loop which it could not escape. From the terminal we could read that the robot remained in a single state, called FollowWall. However, its reference direction changed constantly.

[[File:ER_fail_4-2020-05-18_10.34.30.gif|thumb|center|1400px|Performance during the escape room challenge]]

The code for the second attempt, which omitted the states '''GoToGap''' and '''GoToFinish''', made use of two states only, namely '''FindWall''' and '''FollowWall'''. This meant that the issue we faced in the first attempt was still present in the new code, hence exactly the same behavior was observed.


With this new observation about the reason the robot got stuck, which could essentially be reduced to the fact that the wall to be followed dropped below the threshold, the first debugging step was to lower this threshold. Reducing it from 50 to 20 points allowed the robot to turn clockwise far enough, so that the portion of the wall towards the exit came closest and hence could be followed. This meant that the robot was able to drive towards the exit and out of the escape room without any other issues, as can be seen in the video below. All in all, it turned out that the validation we had performed before the actual challenge missed this specific situation, in which the robot was in a corner and had to turn more than 90 degrees towards the exit. As a result, we did not tune the threshold on the minimum number of points in a wall well enough, which was actually the only change required to have the robot finish the escape room.

[[File:ER_succ_4-2020-05-18_10.36.58.gif|thumb|center|1400px|Performance during the escape room challenge after the corrections.]]

=Hospital Challenge=

With the Escape Room Challenge completed, the next part of the Wiki page considers the software designed for the Hospital Challenge. Here, the adaptations, new components and new functions within those components are put forward and explained. Additionally, a lot of testing has been performed to validate the reliability of the designed software and apply improvements wherever necessary.

==Information Architecture==

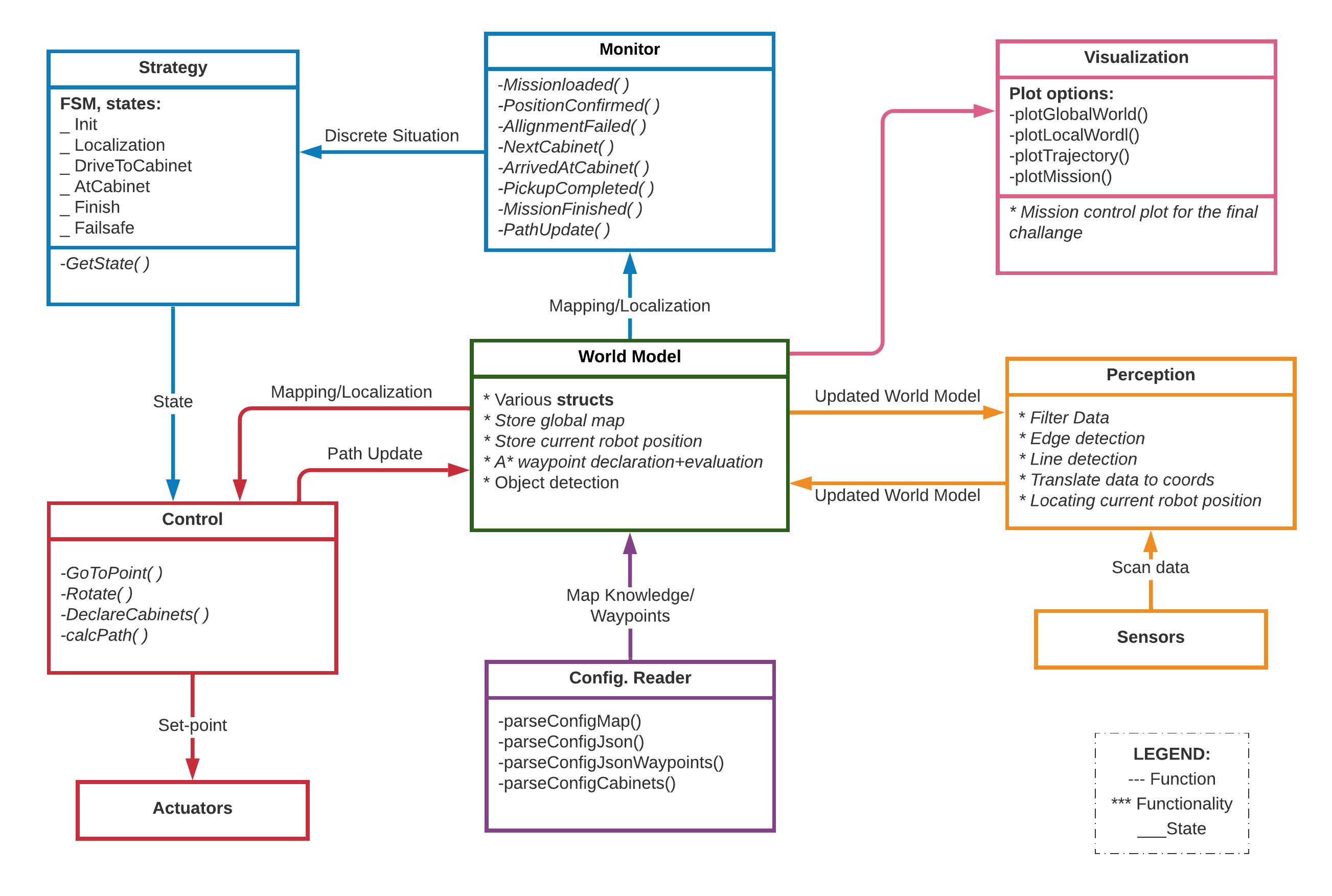

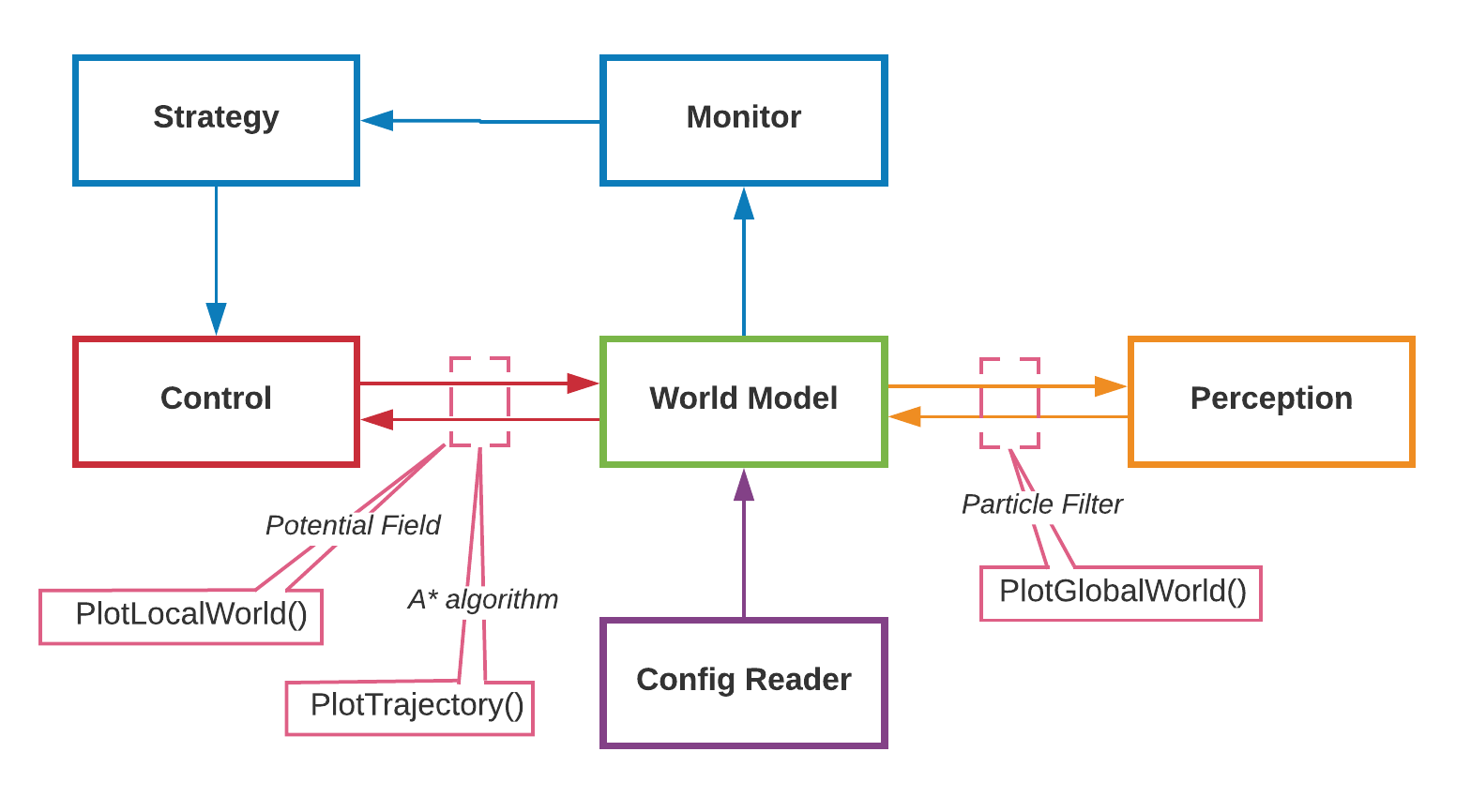

In order to finish the Hospital Challenge, we first created an information architecture. The basic structure is closely related to that of the Escape Room Challenge. The architecture is set up in a logical manner: the robot is first located in ''PERCEPTION'', this data is then stored in the world model, from which the strategy is determined, and the robot is actuated through a control structure. The architecture contains the following components:

*'''Config. Reader''': Parses JSON files which contain the points, walls and cabinets present within the map; also parses JSON files containing each waypoint and its neighbors.

*'''Monitor''': Monitors the discrete state of the robot.

*'''Strategy''': Determines the supervisory actions necessary to achieve the targets.

*'''Perception''': Localizes PICO in the global map using a Monte Carlo particle filter; detects unknown objects in the global map.

*'''World Model''': Stores the global map and local map; stores the path list and waypoints link matrix.

*'''Visualization''': Contains plot functions meant for debugging several components; contains the mission control visualization used for the final challenge.

*'''Control''': Actuates the robot to reach the current target specified by Strategy. Consists of global and local path planning methods.

The following figure shows the functions, functionalities and states within each component.

[[File:2020 group6 info-architec.png|thumb|center|1000px|Improved information architecture which has been used for the hospital challenge.]]


In comparison to the Escape Room Challenge, the information architecture has not changed much. The largest difference is the addition of 2 components: the Visualization and the Config. Reader. The Visualization became crucial in the debugging phase of several functionalities and components within the software architecture, while the Config. Reader helped to improve the A* path planning and to load several maps for testing. In the following sub-chapters, the main functionalities of each component are explained and discussed.

==Monitor==

As for the Escape Room Challenge, the goal of ''MONITOR'' for the Hospital Challenge is to map the current situation into discrete states using information of the environment.

For the hospital, ten different conditions are monitored.

First of all, ''MONITOR'' analyses whether a mission is loaded by inspecting the mission array. The mission is loaded if this array is not empty.

For the localization, three conditions are monitored. The first condition is whether the position is confirmed, and the second condition is whether enough edges have been used for the localization. At least three edges should be used, as this is the minimum needed to constrain the three degrees of freedom. The third condition is how many iterations PICO has made in finding its position. If the number of iterations is above the threshold, it may not be possible to align in the current position.

When PICO is driving to the next cabinet, four conditions are monitored by ''MONITOR''. One of the conditions is whether the path should be updated. For this, it is monitored whether an object is detected, and if so, whether the object obstructs the path.

The path consists of several waypoints, and it is checked whether a waypoint is reached. When a waypoint is reached, a timer is set as well. This gives PICO five seconds to get to the next waypoint. If PICO is not able to reach the next waypoint within these five seconds, the path is most likely blocked. The last waypoint of a path is a cabinet. Whether a cabinet is reached is the last condition that is monitored while driving to the next cabinet.

When PICO is at a cabinet, two conditions are monitored by ''MONITOR''. The first condition is whether the pickup is completed, and the second condition is whether it was the last cabinet of the mission. In the latter case, the mission is finished.
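The five-second waypoint timer described above could be sketched as follows. The class name <code>WaypointTimer</code> and its interface are hypothetical and only illustrate the idea; the current time is passed in explicitly so that the check can be tested without waiting.

```cpp
#include <chrono>

using Clock = std::chrono::steady_clock;

// Restarted whenever a waypoint is reached; reports a timeout when the next
// waypoint has not been reached within the limit.
class WaypointTimer {
public:
    explicit WaypointTimer(std::chrono::seconds limit) : limit_(limit) {}

    // Call when a waypoint is reached.
    void restart(Clock::time_point now) { start_ = now; }

    // True if more than the limit has passed since the last restart.
    bool expired(Clock::time_point now) const { return now - start_ > limit_; }

private:
    std::chrono::seconds limit_;
    Clock::time_point start_{};
};
```

In a control loop, <code>restart(Clock::now())</code> would be called on reaching a waypoint and <code>expired(Clock::now())</code> would be evaluated every cycle as one of the guards for ''STRATEGY''. A monotonic clock is used so that changes to the system time cannot trigger a false timeout.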

==Strategy==

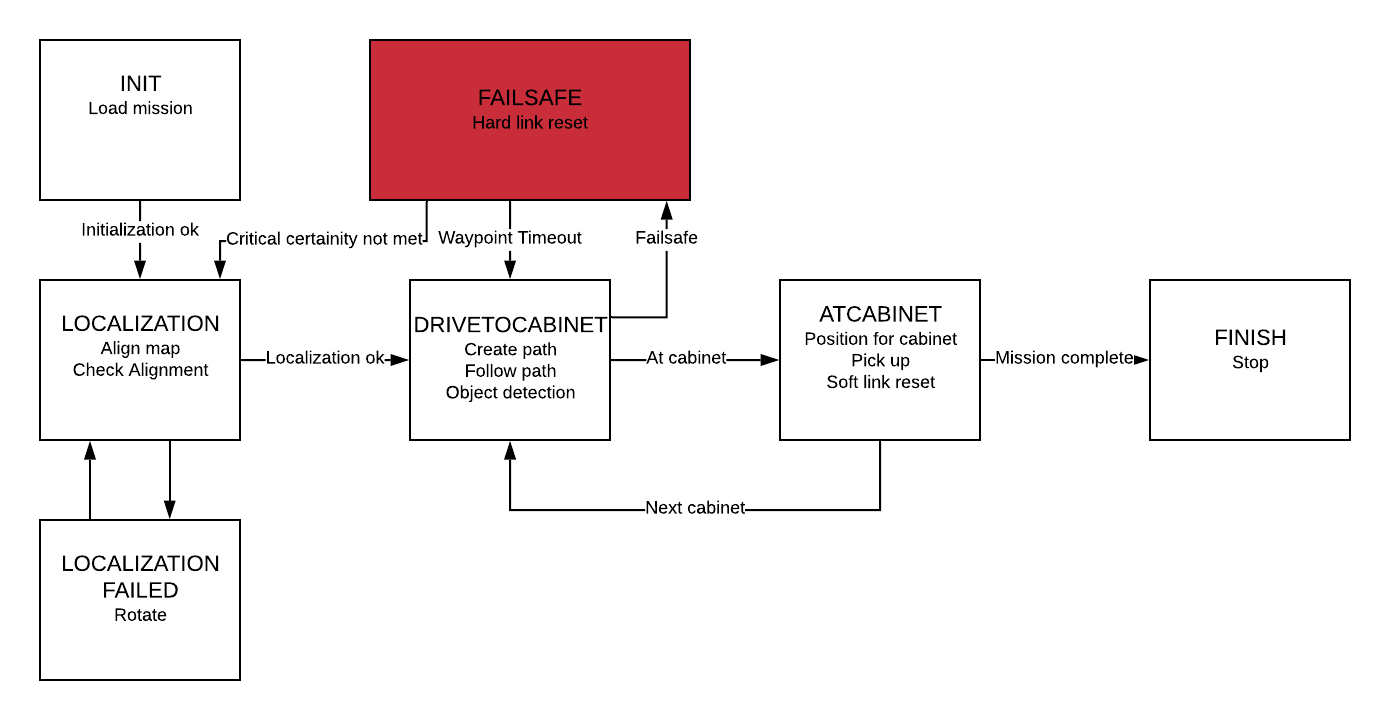

The Hospital Challenge is tackled with the following Finite State Machine (FSM), which is implemented in ''STRATEGY''. The guards are implemented in ''MONITOR''.

[[File:MRC hospital challenge FSM-main.png|1000px]]

'''Init'''

The first state of PICO is the Init state. This is the initialization, in which the mission is loaded. When the mission is loaded, the initialization is finished and PICO goes into the Localization state.

'''Localization'''

When PICO is in the Localization state, the localization is performed. When PICO confirms that it knows its position, it is checked whether the conditions for correct localization are met. If this is not the case, or when the localization fails, PICO goes to the LocalizationFailed state.

'''LocalizationFailed'''

In this state PICO makes a small rotation, after which it goes back into the Localization state.

'''DriveToCabinet'''

When the localization is confirmed, PICO goes to the DriveToCabinet state. In this state, a path is calculated and followed. While PICO is following the path, it keeps scanning the environment in order to confirm its position and to detect objects. If PICO does not reach its next waypoint before the timer ends, or when the position uncertainty gets too high, it goes into the FailSafe state.

'''FailSafe'''

In the FailSafe state, the link matrix is reset. Depending on the reason why the FailSafe state was entered, PICO goes back into either Localization or DriveToCabinet.

'''AtCabinet'''

When PICO is close to the cabinet it is heading to in the DriveToCabinet state, it goes into the AtCabinet state. In this state PICO aligns with the heading of the cabinet and performs the pickup. A snapshot of the LRF data is made, and in case the mission is completed the Finish state is reached. If the mission is not completed, the link matrix gets a soft reset. This means that only the links that have had a small increase in weight are reset. After this, PICO goes back to the DriveToCabinet state.

'''Finish'''

The Finish state is the final state of PICO.

This FSM was used as guidance throughout the development of the functions.
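The state descriptions above can be condensed into one transition function. The sketch below is illustrative only: the state names are taken from the text, but the <code>Guards</code> struct, its field names and the function <code>step</code> are hypothetical. In particular, the mapping from the reason for entering FailSafe to its successor state is an assumption, as the text does not specify it.

```cpp
enum class State { Init, Localization, LocalizationFailed, DriveToCabinet,
                   FailSafe, AtCabinet, Finish };

// Conditions evaluated by MONITOR (hypothetical field names).
struct Guards {
    bool mission_loaded = false;
    bool position_confirmed = false;
    bool enough_edges = false;         // at least three edges were used
    bool too_many_iterations = false;
    bool waypoint_timeout = false;
    bool uncertainty_high = false;
    bool cabinet_reached = false;
    bool pickup_done = false;
    bool last_cabinet = false;
};

// Returns the state for the next time step.
State step(State s, const Guards& g) {
    switch (s) {
        case State::Init:
            return g.mission_loaded ? State::Localization : State::Init;
        case State::Localization:
            if (g.too_many_iterations) return State::LocalizationFailed;
            if (!g.position_confirmed) return State::Localization;
            return g.enough_edges ? State::DriveToCabinet
                                  : State::LocalizationFailed;
        case State::LocalizationFailed:
            return State::Localization;  // entered again after a small rotation
        case State::DriveToCabinet:
            if (g.cabinet_reached) return State::AtCabinet;
            if (g.waypoint_timeout || g.uncertainty_high) return State::FailSafe;
            return State::DriveToCabinet;
        case State::FailSafe:
            // Assumption: a lost position leads to relocalization, while a
            // blocked path only leads to replanning.
            return g.uncertainty_high ? State::Localization
                                      : State::DriveToCabinet;
        case State::AtCabinet:
            if (!g.pickup_done) return State::AtCabinet;
            return g.last_cabinet ? State::Finish : State::DriveToCabinet;
        case State::Finish:
            return State::Finish;
    }
    return s;  // not reached; keeps compilers quiet
}
```

Side effects such as resetting the link matrix are left out on purpose, so that the function only expresses which guard leads to which state.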

==Perception== | ==Perception== | ||

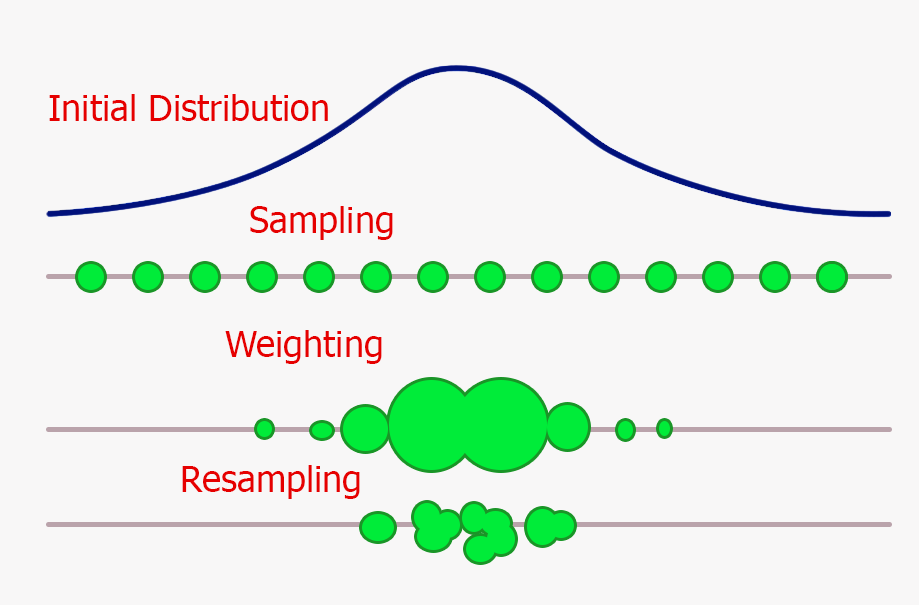

The most important task which | The most important task which ''PERCEPTION'' has to complete is the localization of PICO. This is chosen to be done by using a 'Monte Carlo particle filter'. This is chosen over other techniques as it is not limited to parametric distributions and is relatively simple to implement. Also, it outperforms other non-parametric filters such as the histogram filter [Jemmott et al., 2009]. | ||

One of the main challenges of the particle filter is the so called ray casting problem, i.e. determining what each particle with random position and orientation on the map should see with its LRF. Due to the complexity of this problem and the limited time of this project, it was chosen to solve this problem using a feature-based sensor model. This approach tries to extract a small number of features from high dimensional senor measurements. An advantage of this approach is the enormous reduction of computational complexity [Thrun et al., 2006]. Also, a converging algorithm is used in case particle filter algorithm initially fails to find the right position of PICO, which makes this global localisation algorithm extremely robust. Both the particle filter algorithm and the converging algorithm contain features that makes the localisation robust against unknown objects. In addition, an object detect algorithm is implemented that converts unknown corners to known corners, making the localisation increasingly more accurate during the challenge. The position of PICO is updated with odometry data in combination with these algorithms to account for uncertainties such as drift. | |||

In this section, first an overview on the implementation of the localization algorithm is given. The explanation of this algorithm is then divided in two parts, the particle filter algorithm and the converging algorithm. Hereafter it is shown how the localization algorithm is used in combination with the odometry data. Then a more elaborate explanation is given on certain key steps of the localization algorithm, i.e. the probability function, the resampling function and the uncertainty function. Lastly, it is shown how unknown objects can be detected and used for a more accurate localization. | |||

===Implementation=== | ===Implementation=== | ||

At the beginning of the Hospital Challenge, the position and orientation of PICO are unknown. Based on its LRF data, PICO should be able to find its global position and orientation on the provided map. As the map is known, a Monte Carlo particle filter can be used, which is a particle filter algorithm used for robot localization. This algorithm can be summarized by the following pseudo code: | |||

for all NUMBEROFPARTICLES do | |||

1. Generate particle with random position and orientation | |||

2. Translate the global position of the (known) corners to the local coordinate frame of the particle | |||

3. Filter out the corners not within the LRF range of the particle | |||

4. Calculate probability by comparing the seen corners of the particle with the corners PICO sees | |||

end | |||

5. Weight the probabilities of all the particles so that they sum up to one

6. Resample the particles according to weight | |||

7. Calculate the average of the remaining particles | |||

8. Calculate the uncertainty by comparing the corners PICO sees with the corners the computed average position should see | |||

9. Update the range where particles are generated according to this uncertainty | |||
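Steps (1) to (3) of the pseudo code can be sketched as follows. This is a minimal illustration, not the group's implementation: the function names, the uniform sampling, and the values of `max_range` and `half_fov` are assumptions, and occlusion by walls is ignored.

```python
import math
import random

def sample_particle(x_range, y_range, rng=random):
    """Step 1: draw a pose (x, y, theta) uniformly from the given ranges."""
    x = rng.uniform(*x_range)
    y = rng.uniform(*y_range)
    theta = rng.uniform(-math.pi, math.pi)
    return x, y, theta

def to_local(corner, pose):
    """Step 2: express a global corner (x, y) in the frame of the pose."""
    px, py, theta = pose
    dx, dy = corner[0] - px, corner[1] - py
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the global offset by -theta to obtain local coordinates.
    return (c * dx + s * dy, -s * dx + c * dy)

def visible_corners(corners, pose, max_range=10.0, half_fov=2.0):
    """Step 3: keep corners inside the assumed LRF range and field of view."""
    seen = []
    for corner in corners:
        lx, ly = to_local(corner, pose)
        dist = math.hypot(lx, ly)
        bearing = math.atan2(ly, lx)
        if dist <= max_range and abs(bearing) <= half_fov:
            seen.append((lx, ly))
    return seen
```

A particle facing a corner two metres ahead would see it at local coordinates of roughly (2, 0), while a corner directly behind it is filtered out by the field-of-view check.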

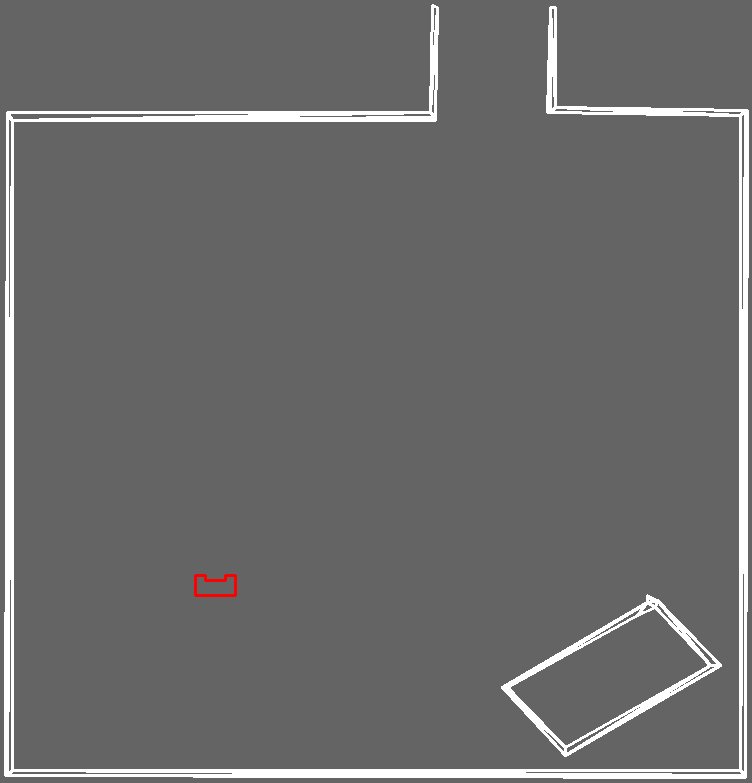

In order to validate the localisation of PICO, a simple test map is made. To also test robustness against unknown objects and object detection, the same test map is made with an object in the lower right corner.

<center> | |||

<gallery mode=packed heights=360px widths=440px> | |||

File:PF_testmap.png|Test map for localization. | |||

File:PF_testmap_object.png|Test map for localization, with object. | |||

</gallery> | |||

</center> | |||

===General PF algorithm=== | ===General PF algorithm=== | ||

[[File:PF_resample_resize.gif|right|frame|300 px|Resampling particles with the highest probability.]] | |||

In step (1), particles are created using a random generator. The initial range where these particles are generated is given by user input, i.e. the starting room of the hospital. Then in step (2), all the features in the map, whose global positions are known, are translated to the local coordinate frame of the generated particle. As an edge (or corner) detection function was already implemented during the Escape Room Challenge, it was decided to use these corners as features. An alternative would be to use the walls as features. However, the difficulty with walls is that they are often only partly visible, while the corners are either fully visible or not at all. In addition, a distinction is made between convex and concave corners, which gives PICO more information to find its global position. This required updating the edge detection function used in the Escape Room Challenge to make this distinction. In step (3), the features the particle would not be able to see if it had the same LRF as PICO are filtered out. This is needed to make the comparison with what PICO sees with its LRF. As PICO is to be positioned within three degrees of freedom, at least three visible corners are needed for proper localization. In step (4), this comparison is done with a probability function that returns the probability that the sampled particle is at the same position and orientation as PICO. After all the particles have been assigned a probability, these probabilities are weighted in step (5) so that their total sum equals one. These weighted probabilities then represent a discrete probability distribution. From this distribution, the particles are resampled in step (6). After a few resamples, PICO's position and orientation are determined in step (7) as the average of the remaining particles. 
The number of particles generated and the number of resamples can be changed in the configuration and are fine-tuned by considering the trade-off between computational load and accuracy of the computed pose.

On the right, a visualization of the localization using the particle filter is shown. It shows how after a few resamples, the correct position of PICO is found. The yellow and white circles represent the convex and concave corners present in this map. The green and blue circles represent the convex and concave corners observed by PICO. The red circles represent the resampled particles. | |||

===Converging algorithm=== | ===Converging algorithm=== | ||

[[File:PF_correction_resize2.gif|right|frame|300 px|Converging to the right position when initially incorrect.]] | |||

Step (7) concludes the standard Monte Carlo particle filter. However, to cope with errors in localization, a converging algorithm is added. The implementation of this algorithm starts in step (8) with obtaining a measure of uncertainty on the position determined in step (7). First, the local positions of the visible corners are translated to the global coordinate frame using the global position of PICO obtained after step (7). Then, the global positions of these convex and concave corners are compared to their actual (known) global locations on the map, by computing the distance between them. This quantity is then used as an uncertainty measure, as this value would be very small if all the seen corners are placed at the right location. Then in step (9), this uncertainty measure is multiplied by a weight and used to update the range of particles generated around PICO's position when the PF algorithm reruns. This ensures that the global position of PICO always converges to the right position. This weight can be changed in the configuration and is fine-tuned by considering how aggressively the PF should react to uncertainty. When a high value is chosen, the computed position converges more quickly to the right position. However, this would make the localisation less robust against corners from unknown obstacles.

On the right, a visualization is shown of a situation where PICO initially assesses its position at a wrong location. However, due to the uncertainty evaluated after the particle filter, it corrects itself and converges to the right position. This uncertainty is visualized as the yellow circle around PICO.

<br /> | |||

<br /> | |||

===Updating using odometry data=== | ===Updating using odometry data=== | ||

[[File:PF_driving_resize2.gif|right|frame|300 px|Updating location using odometry data.]]

When driving, the position and orientation of PICO are updated with the odometry data. When PICO drifts, the previously described uncertainty measure will increase as the distance between the visible edges and the known edges increases. As a result, the range around PICO where particles are generated increases, from which the right position can again be recovered. As PICO sometimes only drifts in a certain direction, the particle range around PICO is shaped as an ellipse in order to focus more on the direction of larger drift.

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

<br /> | |||

===Probability function=== | ===Probability function=== | ||

A key part of determining whether a particle represents the correct position and orientation of PICO is implementing an efficient probability function. This function should compare the information generated for a particle with the information of PICO. The more these values match, the higher the probability of that particle will be. Because a feature-based sensor model is used, the information that is compared in this probability function is feature information. The features that are used are the corners of the walls. These corners contain information about their location and type, i.e. convex or concave. This information is compared using two nested for loops: a loop over all the corners PICO observes and a loop over all the corners that the particle should observe. This means that all the observed corners of the particle are compared to all the observed corners of PICO. This is done as PICO cannot know which particular corner it sees. Then, in this loop, all the corners are compared using a probability distribution. Several distributions were tested. The first guess was a Gaussian distribution. However, when PICO sees an unknown corner, this distribution could give a particle that has on average less distance between all the corners a higher probability than a particle at the right position whose probability is lowered by that unknown corner. Therefore, in order to give a bigger penalty to a few small distances compared to one large distance (i.e. an unknown corner), a reverse exponential distribution is used. The inputs for this distribution are the x-location, y-location, orientation and distance of the corner. A general probability is then created by taking the product of these individual probabilities. When a convex and a concave corner are compared, a big penalty is given, resulting in a small probability for the considered particle. This prevents high probabilities for corners that in reality do not coincide.
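One possible reading of this comparison is sketched below. The names `pair_likelihood` and `particle_score`, the decay `scale`, the `mismatch_penalty` and the choice to sum the pair scores over the nested loops are all assumptions made for illustration; the text does not specify how the pair scores are combined, and the orientation input is left out for brevity.

```python
import math

def pair_likelihood(observed, expected, scale=0.3, mismatch_penalty=1e-3):
    """Likelihood that two corners (x, y, is_convex) are the same corner."""
    ox, oy, o_convex = observed
    ex, ey, e_convex = expected
    # Decaying exponential per compared quantity; the product is the pair score.
    p = math.exp(-abs(ox - ex) / scale) * math.exp(-abs(oy - ey) / scale)
    p *= math.exp(-abs(math.hypot(ox, oy) - math.hypot(ex, ey)) / scale)
    if o_convex != e_convex:
        p *= mismatch_penalty  # convex compared with concave: strongly penalised
    return p

def particle_score(observed_corners, expected_corners):
    """Compare every observed corner with every expected corner."""
    total = 0.0
    for obs in observed_corners:
        for expected in expected_corners:
            total += pair_likelihood(obs, expected)
    return total
```

With this sketch, a particle whose expected corners lie close to the observed ones scores higher than a particle whose corners are far away, and a single far-away unknown corner contributes almost nothing to either score.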

===Resampling function=== | ===Resampling function=== | ||

[[File:PF_resampling.png|right|thumb|upright=1.5|Resampling, graphic representation.]] | |||

One of the main reasons the particle filter is computationally efficient is the resampling step. It is possible to implement this filter without this step, but this would require a very large number of particles to obtain similar accuracy. After assigning a probability to each randomly generated particle, all probabilities are weighted so that the total probability equals one. Then, the already generated particles are resampled according to this weight. If, for example, one very accurate particle gets a weighted probability of 50%, this means that during resampling this particle will be chosen for approximately half of the total number of particles. After resampling, the probability of each particle is recalculated according to the number of times it has been chosen. The particles that have not been chosen are removed. The probabilities of all the particles are again weighted so that the total sum equals one. This resampling step can be done one or several times for each run of the PF algorithm. In [1], a code snippet of the implementation of this resampling algorithm is presented.
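Independently of the snippet referenced in [1], the resampling step described above can be illustrated with a short sketch. The function name `resample` and the use of `random.choices` for the weighted draw are assumptions; the group's implementation may draw the particles differently.

```python
import random
from collections import Counter

def resample(particles, probabilities, rng=random):
    """Draw len(particles) samples by weight and merge duplicates."""
    total = sum(probabilities)
    weights = [p / total for p in probabilities]  # normalise to sum to one
    n = len(particles)
    drawn = rng.choices(range(n), weights=weights, k=n)
    counts = Counter(drawn)  # how often each particle index was chosen
    # Particles that were never drawn are dropped; the rest get a weight
    # proportional to the number of times they were chosen.
    survivors = [particles[i] for i in counts]
    new_weights = [counts[i] / n for i in counts]
    return survivors, new_weights
```

Because the new weights are the draw counts divided by the number of draws, they again sum to one without a separate normalisation pass.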

<br /> | |||

<br /> | |||

<br /> | |||

===Uncertainty function=== | |||

The last key feature of the localisation algorithm that deserves some extra elaboration is the uncertainty function. As explained before, this function calculates an uncertainty measure that is used to ensure that PICO always converges to the right position. This is done by first computing the distance between a corner that PICO should see at the position obtained from the particle filter algorithm and a corner that is actually observed. Then, by looping over all the corners PICO should see, the smallest distance to an observed corner is found for each of them. The average of these values is a measure of uncertainty. The smaller these deviations are, the more accurate the calculated position of PICO is. To be robust against unknown objects, any observed corners that do not coincide with known corners are neglected in the calculations. This is determined by taking the standard deviation of all the calculated distances and excluding the corners whose deviation is too large, based on a certain confidence interval. As the uncertainty measure is only reliable when three or more corners are observed, a constant value is taken when fewer than three corners are observed. This constant value should be neither too small nor too large. A value that is too small could prevent PICO from recovering its position when it again sees three or more corners and could otherwise relocalise. A value that is too large could make PICO relocalise in a much larger area than necessary, which risks an inaccurate localisation. Also, as PICO can still rely on its odometry data, this value can be taken relatively small. Below, a visualization is shown of an accurate localization while an unknown corner is visible.

<center> | |||

<gallery mode=packed heights=300px widths=400px> | |||

File:PF_object_resize.gif|Localisation with unknown corner of obstacle. Initialization. | |||

File:PF_object_driving_resize.gif|Localisation with unknown corner of obstacle. Driving. | |||

</gallery> | |||

</center> | |||
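The uncertainty measure described in this section can be sketched as follows. The function name, the `default` constant used when fewer than three corners are observed, and the `n_sigma` cut-off that stands in for the confidence interval are assumptions chosen for illustration.

```python
import math
import statistics

def uncertainty(expected, observed, default=0.5, n_sigma=1.0):
    """Average nearest-corner distance, ignoring outliers.

    expected: global (x, y) corners PICO should see at the estimated pose.
    observed: global (x, y) corners reconstructed from the LRF data.
    """
    if len(observed) < 3 or not expected:
        return default  # measure is unreliable with fewer than three corners
    # For every expected corner, the distance to the closest observed corner.
    dists = [min(math.dist(e, o) for o in observed) for e in expected]
    mean = statistics.fmean(dists)
    std = statistics.pstdev(dists)
    # Drop distances that deviate too much from the rest.
    kept = [d for d in dists if d <= mean + n_sigma * std]
    return statistics.fmean(kept)
```

In this sketch, three corners that each deviate by about 0.1 m together with one corner that deviates by several metres yield an uncertainty of about 0.1 m, because the large deviation is rejected as an outlier.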

===Object detection=== | |||

Besides the need to be robust against unknown objects, these objects can also be used to our advantage. This requires an object detection functionality that turns previously unknown corners into known corners. By increasing the number of known corners and reducing the number of unknown corners, localisation becomes increasingly accurate during the challenge. This function only works when PICO is certain enough of its position, i.e. when the uncertainty measure is small. When this condition is fulfilled, the corners that have a large deviation are added to an object array. Besides a sufficiently small uncertainty, the unknown corner should be observed multiple times. This is done to make sure PICO does not add objects that are not there, as this would work to our disadvantage. It also prevents PICO from adding dynamic objects. Below, a visualization is shown of an unknown corner in the bottom right of the room; after a while this corner is added to the global map. In the second visualization, PICO uses this corner in the localisation algorithm, resulting in a more accurate localisation.

<center> | |||

<gallery mode=packed heights=300px widths=400px> | |||

File:PF_objectdetect_resize.gif|Unknown corner detected as an object. | |||

File:PF_objectdetect_driving_resize.gif|New added corner used for better localisation. | |||

</gallery> | |||

</center> | |||
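The promotion of unknown corners can be sketched as a small bookkeeping class. The class name `CornerPromoter` and the thresholds `max_uncertainty`, `match_radius` and `min_hits` are hypothetical; they only illustrate the two conditions named above (a small uncertainty and repeated observation).

```python
import math

class CornerPromoter:
    """Counts sightings of unknown corners and promotes stable ones."""

    def __init__(self, max_uncertainty=0.1, match_radius=0.2, min_hits=5):
        self.max_uncertainty = max_uncertainty
        self.match_radius = match_radius
        self.min_hits = min_hits
        self.candidates = []  # entries of the form [x, y, hits]
        self.promoted = []    # corners added to the known map

    def update(self, unknown_corners, uncertainty):
        """Process one scan; return the corners promoted by this scan."""
        if uncertainty > self.max_uncertainty:
            return []  # pose not trusted: do not learn from this scan
        newly = []
        for cx, cy in unknown_corners:
            for cand in self.candidates:
                if math.hypot(cx - cand[0], cy - cand[1]) <= self.match_radius:
                    cand[2] += 1  # seen again near an earlier sighting
                    break
            else:
                cand = [cx, cy, 1]
                self.candidates.append(cand)
            if cand[2] == self.min_hits:
                self.promoted.append((cand[0], cand[1]))
                newly.append((cand[0], cand[1]))
        return newly
```

A corner that shows up only once or twice, for example from a passing dynamic object, never reaches `min_hits` and is therefore not added to the map.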

==World Model==

The ''WORLD MODEL'' stores all of the information of the surroundings of PICO. The world model contains the following information: | |||

* Local world model

# ''line segments'': the begin and end coordinates of each line segment are stored.

# ''gaps'': all of the gap coordinates that are large enough are stored (only used in the escape room challenge).

# ''concave/convex edges'': the coordinates of each edge are stored. A struct edge has been added, which also stores whether the edge is concave.

# ''raw LRF and odometry data'': each timestep the LRF and odometry data that is made available through PICO's sensors is updated in the World Model as well. This way the other components of the information architecture can retrieve the LRF and odometry data through the World Model.

* Global world model | |||

# ''line segments'': again the begin and end coordinates of each line segment, but now in global coordinates. | |||

# ''concave/convex edges'': again the coordinates of each edge are stored, now in global coordinates.

# ''current and previous position of PICO'' | |||

* Trajectories | |||

# ''waypoints and cabinet locations'': the waypoints, their neighbors and the cabinets are stored during initialization.

# ''link matrix'': the cost of each link (= path from one waypoint to another) is stored in a matrix.

* Mission information | |||

# ''runtime and refresh rate'' | |||

# ''current mission'' | |||

The information stored in the world model is made available for all the other components to use. As can be seen in the information architecture, the World Model acts as the core where all information about PICO's environment is stored and updated continuously. During initialization, the config. reader stores the waypoints and map knowledge in the ''WORLD MODEL''. This is done using two separate JSON input files: one for the waypoints [4] and one for the map knowledge. The config. reader component is a new component added after the escape room challenge. This component can parse several types of files. For the Hospital challenge, two parsers have been used:

# ''parseConfigJson()'': parses through the supplied JSON file of the hospital map. | |||

# ''parseConfigJsonWaypoints()'': parses through the waypoint coordinates and neighbors [5]. | |||

==Control== | ==Control== | ||

The ''CONTROL'' algorithm is tasked with creating a path towards the cabinet and, using the current strategy, driving towards this cabinet. To create a global path for navigation, waypoints in combination with an A* algorithm are used. These waypoints are chosen in a strategic and computationally efficient way, allowing objects to be avoided but leaving out redundant positions. By giving the links between these points a weighting and strategically updating these weightings, the global path stays up-to-date. Additionally, in order to avoid hitting objects or doors, or cutting corners, local, sensor-based path following is used with a potential field algorithm. This acts as a safety layer for PICO to avoid collisions. This section first elaborates on the choices made in the global path planning and then on the local path following.

===Global path planning=== | ===Global path planning=== | ||

In order for the robot to navigate its way through a roughly known area, it benefits from all prior information about the shape, size and dependencies of the different rooms and corridors. One common way of shaping this information in a tangible and concise manner is by gridding the entire space into smaller areas. The choices made for defining areas on the map are explained below in the sections on waypoints and links. Consequently, these areas contain information about the presence of nearby walls or objects, allowing for some path to be calculated from any position to any of the predefined targets. The processing of this information is explained in the section on detecting intersections and the path calculation algorithm is explained in the A* section. Following this path is then a task for a low-level controller, which compares the position of the robot to the desired position on the path and asymptotically steers the error to zero.

====Waypoints==== | ====Waypoints==== | ||

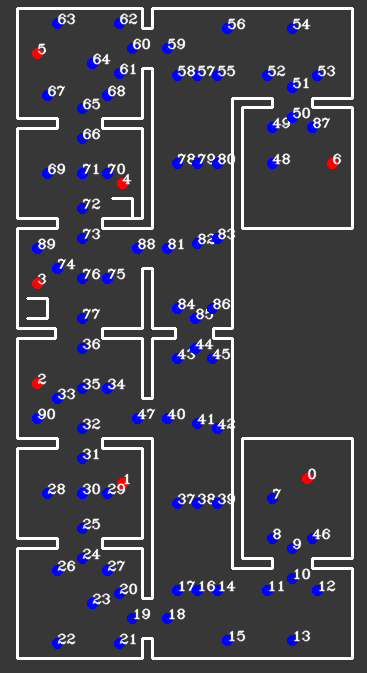

[[File:Waypoints.png|right|thumb|upright=1.5|Waypoints on map.]] | |||

As we know the map and the targets, the choice has been made to process all available data in a strategic and computationally efficient manner. Instead of gridding the entire area into equally sized squares or hexagons, a network of waypoints was introduced to capture all the relevant areas to which the robot could have to move to succeed in going from cabinet to cabinet. These waypoints, which are shown in the figure below, could be thought of as only those grid areas that are required to traverse the rooms and corridors, instead of all of them. It would not make sense to consider grid areas in a corner that the robot is either unable to ever reach, or that is very unlikely to be on the optimal path. Instead, waypoints are defined before and after doors, in the middle of rooms and corridors and near cabinets. Because unknown objects may be encountered while driving through the hospital, multiple waypoints are placed around each room, allowing for multiple routes to be taken between them. Moreover, as can be seen in the large vertical corridor in the figure below, multiple waypoints are placed next to each other in corridors for redundancy. For both rooms and corridors, the presence of multiple feasible options should be guaranteed, even in the presence of large, possibly dynamic objects. A total of 90 waypoints have been chosen to accurately and efficiently grid the entire hospital layout.

Seven of the waypoints have a special purpose, namely that they represent the positions in front of the cabinets. Specifically, they indicate the position centered in front of the cabinet. These waypoints representing cabinets are accompanied by a file containing information on which waypoints represent which cabinets, and which heading is required to face the cabinet.

====Links==== | ====Links==== | ||

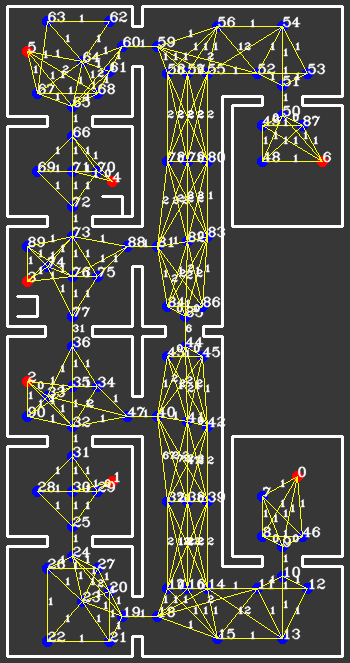

[[File:Links.png|right|thumb|upright=1.5|Links with corresponding weighting.]]

Between all waypoints, links can now be introduced, containing information about feasibility and some measure of the cost to follow that link. These links can be thought of as the roads on a real-life road-map, where the waypoints are intersections and crossroads. Then, an ordinary satellite navigation system is capable of calculating the optimal path from its current position to some desired destination, using only these links and their associated costs. In fact, by adapting the costs of these links online, or in the case of a real-life system using external communication, a highly flexible dynamic map of all relevant travelling options is maintained. This map can then be used to synthesize an optimal path, with optimality in a user-defined sense. In the case of our robot, we have specified in the input file all possible links that originate from each waypoint. Generally, this means that at each waypoint, approximately 3-8 options are available to continue a path, reducing the computational requirements of the optimal path synthesis. Moreover, a simple function was designed to calculate a relevant measure of cost of a link, which we decided to be the Euclidean distance between the two waypoints the link connects. Travel time was also considered instead of distance, however under the mild assumption of an equal velocity over all links, there seemed to be little benefit. This function is used as the initialization of the network of links, as well as the hard reset of all links by the failsafe. The benefit of only considering the pre-defined options for links is that no checks have to be performed by the robot to prevent links from passing through walls. | |||

The resulting network of waypoints and links is visualized in the figure above, where the links are indicated by the yellow lines. On top of each link, the cost is shown in white, rounded to an integer. As mentioned above, this cost is the only quantity that is optimized when determining a path from a starting point to a cabinet. Therefore, this cost is the most logical way to dissuade the robot from following certain links. In the situation, for instance, where the robot finds a closed door ahead, it should ‘break’ the link passing through that door by increasing its cost severely. Instead of doing this the first time a door is observed, the cost of the link through the door is increased rapidly over 10-20 iterations, to prevent noisy measurements from breaking too many links. In each iteration where the cost of a link is increased, it is considered whether the current path has become infeasible, after which a new optimal path is calculated. The rapid increase in link costs in the presence of a door and in the presence of a dynamic object is shown in the video below, where special attention should be given to the white numbers accompanying the links.

[[FILE:HO_WIKI_door.gif|Right|frame|300 px|Incremental cost of links in the presence of a closed door and dynamical object.]] | |||
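The gradual ‘breaking’ of a link can be sketched as a multiplicative cost increase per iteration in which the link is seen to be blocked. The class name `Link`, the `factor` of 1.5 and the method names are assumptions; only the initial cost (the Euclidean length) and the idea of a rapid increase over several iterations come from the text.

```python
import math

class Link:
    """A link between two waypoints with an adjustable cost."""

    def __init__(self, start, end):
        self.length = math.dist(start, end)  # Euclidean length of the link
        self.cost = self.length              # initial cost equals the length

    def penalise(self, factor=1.5):
        """Call once per iteration in which an object blocks the link."""
        self.cost *= factor

    def hard_reset(self):
        """Forget all penalties and restore the original cost."""
        self.cost = self.length
```

With these hypothetical numbers, a single noisy detection raises the cost of a 2 m link to 3, while fifteen consecutive detections raise it to more than 800, which effectively removes the link from any optimal path.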

====Detecting intersections==== | ====Detecting intersections==== | ||

Unlike the situation where the network of links is initialized, where use could be made of the pre-defined set of allowed links, a detection step is required to find intersections between links and newly found objects. Consider the door of the example mentioned in the previous paragraph, where the door is defined as a line segment between two points. Since we know the exact start and end point of the links as well, we should be able to calculate whether or not the two line segments intersect. A first approach, based on linear polynomials of the form y = ax+b, falls short in situations where nearly vertical lines are identified, or when the lines do in fact intersect, whereas the line segments do not. Instead, a method was developed based on the definition of a line segment as p_(i,start)+u_i*(p_(i,end)-p_(i,start)), with u_i a number between 0 and 1. Then, two line segments i and j can be equated and a solution for u_i and u_j can be found. Barring some exceptional cases where the line segments are for instance collinear, it can be concluded that an intersection between both line segments only occurs when both 0<=u_i<=1 and 0<=u_j<=1. In our software, this approach was implemented by the code snippet [2]. 
Then, this function was called, comparing each link to each known line segment of an object. As described in the object detection chapter of this Wiki, the objects are stored as a set of line segments in global coordinates in the ''WORLD MODEL'', ready to be used for detecting intersections.
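Independently of the snippet referenced in [2], the parametric test can be illustrated as follows. The function name and the tolerance `eps` are assumptions, and parallel or collinear segments are simply reported as non-intersecting, which is one way of handling the exceptional cases mentioned above.

```python
def segments_intersect(p_start, p_end, q_start, q_end, eps=1e-12):
    """Return True if segment p and segment q cross each other.

    Solves p_start + u_i * r = q_start + u_j * s for u_i and u_j,
    with r and s the direction vectors of the two segments.
    """
    rx, ry = p_end[0] - p_start[0], p_end[1] - p_start[1]
    sx, sy = q_end[0] - q_start[0], q_end[1] - q_start[1]
    denom = rx * sy - ry * sx  # 2D cross product r x s
    if abs(denom) < eps:
        return False  # parallel or collinear: treated as no intersection
    qpx, qpy = q_start[0] - p_start[0], q_start[1] - p_start[1]
    u_i = (qpx * sy - qpy * sx) / denom
    u_j = (qpx * ry - qpy * rx) / denom
    return 0.0 <= u_i <= 1.0 and 0.0 <= u_j <= 1.0
```

Because no slope is ever computed, a vertical door segment such as (2, -1) to (2, 1) is handled in the same way as any other segment.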

Intersections between links and objects are not the only relevant case where intersections should be identified to avoid collisions or the robot getting stuck. Identifying a path using only waypoints and links is generally not sufficient, as the robot is not always exactly on a waypoint when a new path is calculated. Therefore, ‘temporary links’ may be introduced, originating from the robot's current position and ending in all waypoints, of which the feasibility is not known a priori. Consequently, the same intersection algorithm can be used to assess feasibility for these temporary links, comparing them to known objects around the robot. In order to ensure that the robot does not want to drive along a link which is too narrow for PICO to follow, a ‘corridor’ of PICO's width is used for this intersection detection instead. Two additional temporary links are placed on both sides of the direct line segment between the robot and each waypoint, and these are also checked for collisions. An example of a situation where this ‘corridor’ is required to avoid a deadlock is shown in the video below.

[[FILE:HO_WIKI_corridor.gif|right|frame|300 px|Path calculation with 'corridor' intersection detection to avoid impossible path.]] | |||

====A* algorithm==== | ====A* algorithm==== | ||

With this definition and implementation of the waypoints and links, all relevant information for a global path planning algorithm is available. Two simple options come to mind, both with the guarantee that an optimal solution is found, namely Dijkstra's algorithm and the A* algorithm. They are very similar in the sense that they iteratively extend the most promising path with a new link, until the path to the destination has become the most promising. The difference between the two algorithms lies in the assessment of ‘most promising’. For Dijkstra's algorithm, the only considered measure of promise is the cost accumulated from the initial position of the robot. This results in an equal spread into all directions of the currently considered paths, which is computationally inefficient. However, Dijkstra's algorithm is always guaranteed to yield an optimal solution, if it exists. The A* algorithm, on the other hand, not only considers this accumulated cost, but also takes into account the cost from the currently considered waypoint to the destination. This yields a much quicker convergence to the optimal solution, especially in the presence of hundreds or even thousands of links. The exact cost from each waypoint to each destination, however, requires an optimization procedure of its own, quickly losing all benefit over Dijkstra's approach. However, it turns out that even a relatively inaccurate guess of the cost from a waypoint to the destination, often referred to as a heuristic, greatly benefits computational speed. 
In our application, this estimate is simply chosen to be the Euclidean distance, not taking into account walls or other objects. This could have been extended by incorporating some penalty in case the straight line does intersect a wall, but this did not seem like a large improvement and could have been detrimental to the stability of the optimization procedure. The advantage of using the Euclidean distance as the heuristic is that its value is always equal to or lower than the actually achieved cost, which is the requirement (admissibility) for the A* algorithm to converge to the optimal solution. Note that this heuristic only needs to be calculated once, since all waypoints and destinations are known beforehand and the Euclidean distance never changes.
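A compact sketch of A* over waypoints and links with the Euclidean distance as heuristic is given below. The data layout (a dictionary of waypoint coordinates and a dictionary of outgoing links with costs) and the function name `a_star` are assumptions for illustration and do not reflect the link matrix used in the actual software.

```python
import heapq
import math

def a_star(waypoints, links, start, goal):
    """Return (path, cost) from start to goal, or (None, inf) if unreachable.

    waypoints: id -> (x, y); links: id -> {neighbor_id: link_cost}.
    """
    def heuristic(node):
        # Straight-line distance: never overestimates when link costs
        # are at least as large as the link lengths.
        return math.dist(waypoints[node], waypoints[goal])

    # Heap entries: (accumulated cost + heuristic, accumulated cost, node, path).
    open_heap = [(heuristic(start), 0.0, start, [start])]
    best_cost = {start: 0.0}
    while open_heap:
        _, cost, node, path = heapq.heappop(open_heap)
        if node == goal:
            return path, cost
        if cost > best_cost.get(node, math.inf):
            continue  # a cheaper route to this node was already expanded
        for neighbor, link_cost in links.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < best_cost.get(neighbor, math.inf):
                best_cost[neighbor] = new_cost
                heapq.heappush(
                    open_heap,
                    (new_cost + heuristic(neighbor), new_cost, neighbor, path + [neighbor]),
                )
    return None, math.inf
```

Raising the cost of a blocked link only makes the true cost larger, so the Euclidean heuristic remains a lower bound and the returned path stays optimal with respect to the updated costs.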

[[FILE:HO_WIKI_newpath.gif|right|frame|300 px|Soft link reset as a result of new cabinet target.]]

With the choice for A* as the solver and the availability of the waypoints, links and heuristic, the actual implementation of the global path planning is rather simple. The first iteration calculates the most promising path from the robot to any waypoint, taking collisions and PICO’s width into account. Next, in each new iteration, the current most promising path is extended in the most promising direction, while preventing waypoints from being visited twice. This iterative procedure only ends when the current most promising path has arrived at the destination cabinet, after which the route is saved into the ''WORLD MODEL''. Because the path remains optimal from any point on the path, as stated by the principle of optimality, it does not need to be re-calculated at each time instant. Instead, a new path is only calculated if one of three situations arises: a cabinet being reached and a new cabinet awaiting, the aforementioned detection of an object on the current path, or the robot entering a failsafe.

Whenever the robot reaches a new cabinet and a new path should be calculated, a soft reset is applied to the cost of all links, meaning that the cost of all links which have been briefly blocked by an object is reset to its original value, the link length. This distinguishes between static objects and doors on the one hand, which have caused the links they intersect to far exceed the soft reset threshold, and noise and dynamic objects on the other hand, which have only been identified briefly and therefore had less effect on the cost of the links. This soft reset ensures that the next calculated path will also be optimal, and not affected by temporary or noisy measurements. When an object is detected on the path, no reset needs to take place, as we specifically want the robot to find a new route around the object.
Thirdly, when the failsafe is entered and a new path must be calculated, either a hard reset, forgetting all doors and objects, or a soft reset is performed, depending on the cause of the failsafe. An example of a soft reset caused by the robot arriving at a cabinet and proceeding to drive to the next one is shown to the right.
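The difference between the two resets can be sketched as follows. This is an illustration under assumptions rather than the actual implementation: the <code>Link</code> struct, the function names and the way the threshold is passed in are all hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical link representation for illustration.
struct Link {
    double length;  // original cost of the link
    double cost;    // current cost: length plus accumulated penalties
};

// Soft reset: only links whose cost stayed below the threshold (noise,
// briefly seen dynamic objects) are restored to their length. Links that
// far exceed the threshold (static objects, closed doors) keep their cost.
void softReset(std::vector<Link>& links, double threshold) {
    assert(threshold >= 0.0);
    for (Link& link : links) {
        if (link.cost < threshold) link.cost = link.length;
    }
}

// Hard reset: forget all doors and objects by restoring every link.
void hardReset(std::vector<Link>& links) {
    for (Link& link : links) link.cost = link.length;
}
```

With a threshold of 100, for example, a link of length 1.0 whose cost was raised to 1.5 by a passing object is restored, while a link whose cost reached 500 because of a closed door stays blocked until a hard reset.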

====Low level control====

In order to closely follow the optimal path stored in the ''WORLD MODEL'', a separate function, GoToPoint(), was developed to drive the robot towards a point. Its functionality and approach are similar to the function presented for the escape room challenge. For the hospital challenge, however, the global coordinates are provided as input, and sensor-based path planning determines the base reference output values. This is described below, in the local path planning chapter.

===Local path planning - Potential field===

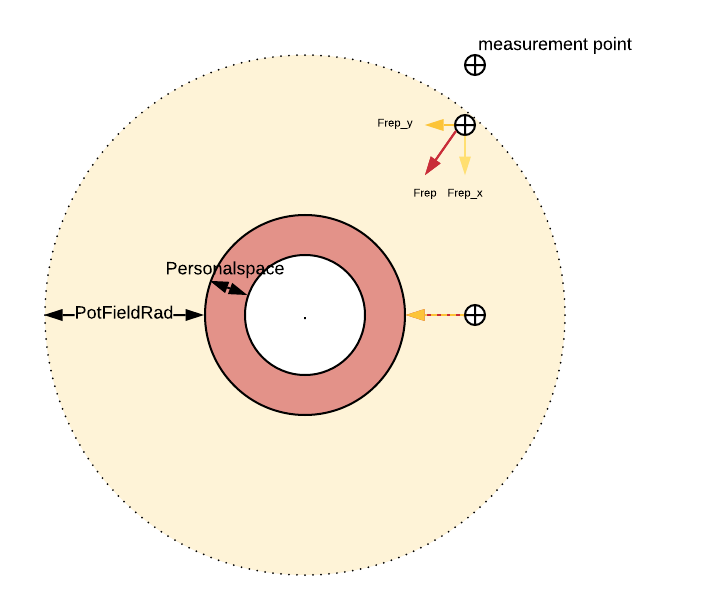

[[File:Blank Diagram(1).png|right|thumb|upright=1.5|Graphic representation of potential field]]

To prevent the robot from bumping into objects, walls or doors, for example in the case of inaccurate localization, a potential field algorithm is implemented. This is a sensor-based strategy that determines in which direction to move: away from walls or objects and towards the desired coordinates. The potential force is the sum of an attractive force, coming from the target location, and a repulsive force, coming from LRF data points around the robot.

Every LRF point inside the potential field radius (a circle around the origin of the robot, with a tunable radius set to 70 cm) is taken into account and creates a repulsive force vector. The repulsive force of a point scales inverse-quadratically with its distance from the robot. The repulsive and attractive forces are tuned in such a way that the robot will not come closer to a wall or corner than the 'personal space' (a circle around the origin of the robot, with a tunable radius set to 10 cm plus half the width of PICO). A schematic is shown in the accompanying figure.

Choosing the scaling of the repulsive force (e.g. quadratic or cubic), as well as the parameters for the potential field and 'personal space', was done during testing. The formula chosen is F<sub>repulsive</sub> = constant · (distance to LRF point − (robot radius + personal space))<sup>−2</sup>, with the constant equal to 2·10<sup>6</sup>. The repulsive force is then split into its components in the x and y directions, as seen in the diagram. The attractive field has a linear decay towards the goal. The combination of both fields is implemented with a cap on the forward and sideways speed equal to the maximum robot velocity, and a cap of 0.1 times the maximum speed for moving backwards. This combination of a quadratically scaling repulsive field and a linearly scaling attractive field gave the smoothest and most robust results.
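A minimal sketch of how such a field could be evaluated is given below. Several details are assumptions that the text does not specify: the struct and function names are hypothetical, the x-axis is taken to point forward in the robot frame, the attractive force is taken as a gain times the goal vector, and a small minimum clearance is added to avoid dividing by zero when a point lies inside the personal space.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 { double x, y; };

// Hypothetical parameter set; units must be consistent with the gains.
struct FieldParams {
    double fieldRadius;   // LRF points farther away than this are ignored
    double keepOut;       // robot radius plus personal space
    double kRepulsive;    // the 'constant' in the repulsive formula
    double kAttractive;   // gain of the linear attractive term (assumed)
    double minClearance;  // assumed guard against division by zero
};

// points: LRF hits in the robot frame; goal: target in the robot frame.
Vec2 potentialForce(const std::vector<Vec2>& points, const Vec2& goal,
                    const FieldParams& p) {
    assert(p.minClearance > 0.0);
    // Attractive part: grows linearly with the distance to the goal.
    Vec2 force{p.kAttractive * goal.x, p.kAttractive * goal.y};
    for (const Vec2& pt : points) {
        const double d = std::hypot(pt.x, pt.y);
        if (d > p.fieldRadius || d == 0.0) continue;
        const double clearance = std::max(d - p.keepOut, p.minClearance);
        const double magnitude = p.kRepulsive / (clearance * clearance);
        // Split into x and y components, pointing away from the LRF point.
        force.x -= magnitude * pt.x / d;
        force.y -= magnitude * pt.y / d;
    }
    return force;
}

// Caps: full speed forward and sideways, 0.1 times vMax backwards.
Vec2 clampVelocity(Vec2 v, double vMax) {
    v.x = std::max(-0.1 * vMax, std::min(v.x, vMax));
    v.y = std::max(-vMax, std::min(v.y, vMax));
    return v;
}
```

How the resulting force is converted into a velocity reference is not described in the text; in this sketch the force vector would simply be passed through <code>clampVelocity()</code> before being sent to the base.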

A known and common problem with the potential field algorithm is the possible presence of local minima. However, in combination with the global path planning with many waypoints and our strategy, local minima are not expected.

The GIF below shows the robot driving towards a local point straight ahead, without bumping into any walls.

[[File:potentialfield.gif|center|frame|300 px|Driving forward with an active potential field.]]

==Visualization==

The visualization is done with the help of the OpenCV library, which is available through ROS. The ''WORLD MODEL'' supplies the ''VISUALIZATION'' component with information, which is translated to OpenCV primitives (e.g. cv::Point, cv::circle, etc.). Three different ''VISUALIZATION'' functions meant for debugging have been developed: ''plotLocalWorld()'', ''plotGlobalWorld()'' and ''plotTrajectory()''. Each function helped to debug a specific part of the code. The figure below shows a simplified information architecture, indicating the part of the code that each plotting function was used to debug.

[[File:2020 group6-Visualization-debug.png|thumb|center|upright=0.9|600px|The locations within the code structure where the plotting functions are meant to help debugging.]]

* ''plotLocalWorld()'': plots the components in the local map: local walls, local edges and PICO's safe space.

[[File:2020_group6-vis-localworld.gif|frame|center]]

* ''plotGlobalWorld()'': plots the components in the global map. The particles with the highest probability are plotted in red, and the area in which the particles are placed is shown in yellow. The walls and edges observed via the LRF data are also shown, this time in global coordinates.

[[File:2020_group6-vis-globalworld.gif|frame|center]]

* ''plotTrajectory()'': plots the trajectory points and links. The weight of each link is also shown in order to test the link breaking mechanism (e.g. when doors are closed in the hospital). The chosen path is highlighted, and the waypoint to which PICO is currently navigating is highlighted in orange.

[[File:2020_group6-vis-trajectory.gif|frame|center]]

For the hospital challenge, an additional plotting function has been written, called ''plotMission()''. This visualization gives the user an overview of the mission, including:

* the cabinet to which PICO is driving,
* the speed of PICO in x, y and theta direction,
* the runtime and framerate,
* the list of cabinets to which PICO is driving or has driven,
* and a proud Mario.

[[File:2020_group6-vis-missioncontrol.gif|frame|center]]

==Validation==

First, all parts were created separately and confirmed to work. During this process, different visualizations were made and used to debug the individual components, as explained in the previous section. These visualizations were also used to debug the integrated code once all parts were implemented.

During implementation of all components, various problems were faced and resolved. This section sheds light on the most prevalent challenges.

With all components implemented, the code was not always able to run at the desired 20 Hz; sometimes it could not even reach 10 Hz. This caused problems in, for example, the potential field, which does not function properly when sensor data is only updated after a long time. Because the base reference values are held between updates, PICO could run into walls. By adding clock statements in between functions, we were able to identify bottlenecks and make the code somewhat more efficient and faster. To make sure PICO does not run into walls at a low loop rate, the PICO speed in the backup code was adjusted to the refresh rate (only in the backup code, because the challenge is a competition and hitting a wall in the visualization has no serious consequences).
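One way to adjust the speed to the refresh rate is to scale the speed limit with the ratio between the measured and the desired loop rate. The sketch below shows this idea only; the function name and the linear scaling rule are assumptions, since the text does not state how the adjustment was made in the backup code.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: lowers the speed limit when the loop runs slower
// than the nominal rate, so the distance driven between two sensor
// updates stays roughly constant. The linear rule is an assumption.
double scaledSpeedLimit(double vMax, double measuredHz, double nominalHz) {
    assert(nominalHz > 0.0);
    const double ratio = std::max(0.0, std::min(measuredHz / nominalHz, 1.0));
    return vMax * ratio;
}
```

With a nominal rate of 20 Hz and a measured rate of 10 Hz, the speed limit is halved; a measured rate above the nominal rate never raises the limit beyond the configured maximum.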

Implementing localization together with global path planning was one of the bigger challenges. When the localization was not accurate, many links would be broken, causing the robot to follow sub-optimal paths or even get stuck. Soft resets and hard resets of the weights in the link matrix were added, as well as a failsafe for localization and path planning.

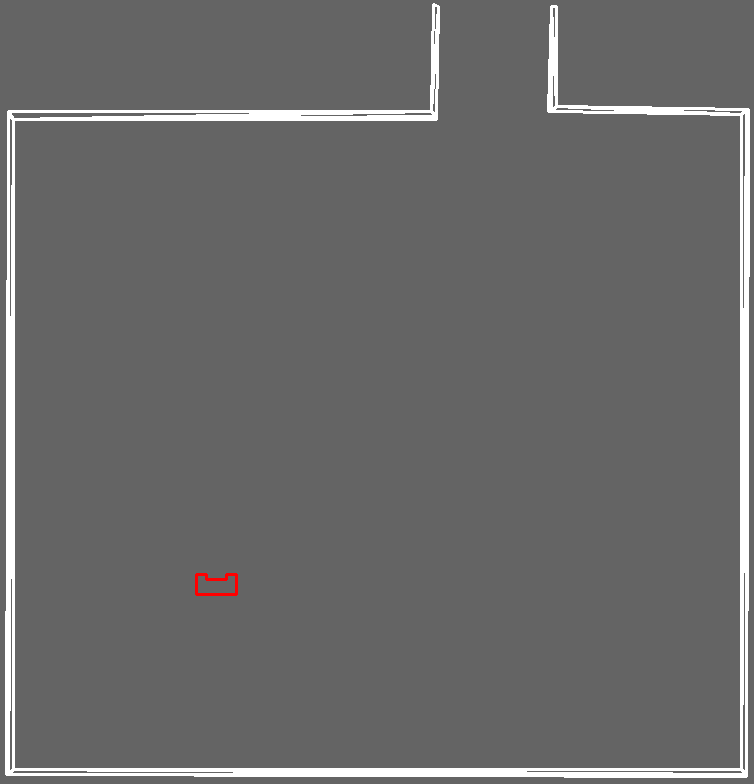

Different maps were created in order to test, alter and validate the code. The GIFs below show three of these maps and PICO successfully completing the challenge given.

[[File:testmap1.gif|center|frame|300 px|Testmap 1.]]

[[File:testmap2.gif|center|frame|300 px|Testmap 2.]]

[[File:testmap3.gif|center|frame|300 px|Testmap 3.]]

==Challenge==

During the final challenge, PICO did not finish. This was due to two small problems, one observed in the first run and the other in the second run.

The first problem was that PICO was close to the cabinet but did not enter the 'atCabinet' state. This was observed a few times during testing; however, the robot was almost always able to converge its localization and get out of the position in which it was temporarily stuck.

What happened is that the localization was slightly off. As a result, global planning wanted the robot to move slightly further towards the cabinet, while the local potential field prevented the robot from moving any further forward. There are several fixes to this problem:

* (Recommended) Adjusting the measure of certainty needed for localization around the cabinet. This could be very accurate and is not invasive on the code. It would be an addition to the strategy.
* Making the potential field less strict around the cabinet, as well as lowering the speed. This, however, could be risky.
* Adjusting the config value which says how far the robot should be in front of the cabinet. This is a very quick fix, but it loses the guarantee that the robot is located within the 0.4x0.4 square in front of the cabinet before performing the pickup procedure.
* Local positioning based on sensors when close to the cabinet. This could be a very precise and elegant solution, but would require some new functions.

The second problem had never occurred before the challenge. We observed PICO wanting to drive to a way-point that was directly behind a wall. It did not drive through the wall due to the potential field. At this point PICO was stuck.

There are multiple reasons why this happened and multiple options for fixing it.

1. PICO should have observed the wall long before it was close to it, and should have broken the link. PICO did not show this behavior because the link weight is increased by a fixed increment per iteration and therefore depends on the refresh rate. The refresh rate was very low, so PICO had not yet broken the link by the time it was driving towards the way-point behind the wall. This can be resolved by either a higher and stable refresh rate, or by adding weight based on time instead of iterations. (A very fast, but less elegant, way of resolving this is raising the increment parameter in the config file.) The GIF below shows that breaking this link is no problem at a higher frame rate.

[[File:breakinglink.gif|center|frame|300 px|Breaking a link after observing the door.]]