PRE2018 1 Group2: Difference between revisions

No edit summary |

|||

| (25 intermediate revisions by 3 users not shown) | |||

| Line 11: | Line 11: | ||

* [[0LAUK0 2018Q1 Group 2 - Design Plans Research]] | * [[0LAUK0 2018Q1 Group 2 - Design Plans Research]] | ||

* [[0LAUK0 2018Q1 Group 2 - Programming overview]] | * [[0LAUK0 2018Q1 Group 2 - Programming overview]] | ||

* [[0LAUK0 2018Q1 Group 2 - Behavior Experiment]] | |||

* [[0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing]] | * [[0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing]] | ||

* [[0LAUK0 2018Q1 Group 2 - Prototype User Testing]] | * [[0LAUK0 2018Q1 Group 2 - Prototype User Testing]] | ||

= Progress = | = Progress = | ||

| Line 24: | Line 24: | ||

* [https://drive.google.com/open?id=1W6kJX1ObIKA-OvwnHnnhjOuXd3LTkXWwjuxaI7nPdlc week 5 (08-10)] | * [https://drive.google.com/open?id=1W6kJX1ObIKA-OvwnHnnhjOuXd3LTkXWwjuxaI7nPdlc week 5 (08-10)] | ||

* [https://drive.google.com/open?id=1zibK-VCwIdIJRln_9P9O9krg5RX5BUKDhf4_LhIM93U week 6 (15-10)] | * [https://drive.google.com/open?id=1zibK-VCwIdIJRln_9P9O9krg5RX5BUKDhf4_LhIM93U week 6 (15-10)] | ||

=== Final Presentation === | |||

Our final presentation took place on the 25th of October, 2018. | |||

* [https://drive.google.com/open?id=1yc19F0enVYyGjpjj7pvUZCa8sWsSGNjN6UIPDAe2m3I group 2 final presentation (25-10)] | |||

=== Progression on milestones === | === Progression on milestones === | ||

| Line 100: | Line 104: | ||

=== User Requirements === | === User Requirements === | ||

User-based Requirements | '''User-based Requirements''' | ||

The aim of this AI is to prevent RSI, so in general the AI (table, chair, etc.) needs the requirement | The aim of this AI is to prevent RSI, so in general the AI (table, chair, etc.) needs the requirement | ||

that it is able to adjust itself in order to prevent RSI. This will be done by working with a user | that it is able to adjust itself in order to prevent RSI. This will be done by working with a user | ||

| Line 110: | Line 115: | ||

the interface there needs to be an option to steer the system manually. | the interface there needs to be an option to steer the system manually. | ||

Technical-based Requirements | '''Technical-based Requirements''' | ||

Considering technical requirements, all re-adjustable instruments need to have a little motor in | Considering technical requirements, all re-adjustable instruments need to have a little motor in | ||

order to readjust themselves in the first place. These motors need to be able to receive orders from | order to readjust themselves in the first place. These motors need to be able to receive orders from | ||

both the user interface and the AI system, in order to turn the right amount of degrees. | both the user interface and the AI system, in order to turn the right amount of degrees. | ||

'''Primary Requirements''' | |||

The requirements that our device must fulfill are listed below. Some secondary requirements can be found in the user testing section. | |||

* RSI/CANS is reduced. | |||

* The device is compatible with other system components. | |||

* The device is easy to use; it is user friendly. | |||

* The device doesn't distract or annoy the user. | |||

* The user will be encouraged by the system to take different postures from time to time. | |||

* There is no privacy violation. | |||

= Preparation = | = Preparation = | ||

| Line 155: | Line 170: | ||

= Design = | = Design = | ||

=== Introduction === | === Introduction === | ||

<div style="display: inline; width: 650px; float: right;"> | |||

[[File:infographic.png|600 px|thumb|Concept of Smart Flexplace System]] | |||

</div> | |||

In the [[0LAUK0 2018Q1 Group 2 - SotA Literature Study | literature study]] we found that more office workers suffered from issues pertaining to their neck and back than to their arms or hands. We made it clear in our deliverables section that we intend to build at least one prototype that brings one or more of our design plans to life. Given the finding described previously, the team decided that the prototype would focus on minimizing neck issues by tackling the RSI issue of a maladjusted computer monitor. | In the [[0LAUK0 2018Q1 Group 2 - SotA Literature Study | literature study]] we found that more office workers suffered from issues pertaining to their neck and back than to their arms or hands. We made it clear in our deliverables section that we intend to build at least one prototype that brings one or more of our design plans to life. Given the finding described previously, the team decided that the prototype would focus on minimizing neck issues by tackling the RSI issue of a maladjusted computer monitor. | ||

| Line 163: | Line 184: | ||

The team started out by doing research into actuators suitable for moving the monitor (strong enough to carry the weight of a monitor, silent enough not to annoy/cause hearing loss for the user). The team also looked into current face tracking technologies. While investigating current software libraries we came across the Open Source Computer Vision Library, OpenCV, which features over 2500 optimized algorithms for computer vision (OpenCV team, n.d.). | The team started out by doing research into actuators suitable for moving the monitor (strong enough to carry the weight of a monitor, silent enough not to annoy/cause hearing loss for the user). The team also looked into current face tracking technologies. While investigating current software libraries we came across the Open Source Computer Vision Library, OpenCV, which features over 2500 optimized algorithms for computer vision (OpenCV team, n.d.). | ||

For now a port of OpenCV ( | For now a port of OpenCV (Borenstein, 2013) for the Processing programming language (Processing Foundation, n.d.) seems highly interesting, as this software is readily available and in personal testing of the software we found that its demos worked straight away. However, testing stereoscopy will have to wait until we have the required camera equipment. | ||

Regarding the user experience, the team has to work out what the threshold will be for moving the monitor. We do not want the monitor to move with every movement of the user, as some of these movements have nothing to do with looking at the monitor (for instance, if the user looks down to read something from their paper notes, the monitor should not move in this situation). We also have to look into minimizing privacy concerns. Furthermore, we could measure the variation in user posture over time using logs of the face tracking software. | Regarding the user experience, the team has to work out what the threshold will be for moving the monitor. We do not want the monitor to move with every movement of the user, as some of these movements have nothing to do with looking at the monitor (for instance, if the user looks down to read something from their paper notes, the monitor should not move in this situation). We also have to look into minimizing privacy concerns. Furthermore, we could measure the variation in user posture over time using logs of the face tracking software. | ||

| Line 171: | Line 192: | ||

# The Processing Foundation. (n.d.). ''Processing''. Retrieved from [https://processing.org/] | # The Processing Foundation. (n.d.). ''Processing''. Retrieved from [https://processing.org/] | ||

# Borenstein, G. (2013). ''OpenCV for Processing'' [software library]. Retrieved from [https://github.com/atduskgreg/opencv-processing] | # Borenstein, G. (2013). ''OpenCV for Processing'' [software library]. Retrieved from [https://github.com/atduskgreg/opencv-processing] | ||

=== Literature study === | === Literature study === | ||

| Line 199: | Line 219: | ||

= Prototype = | = Prototype = | ||

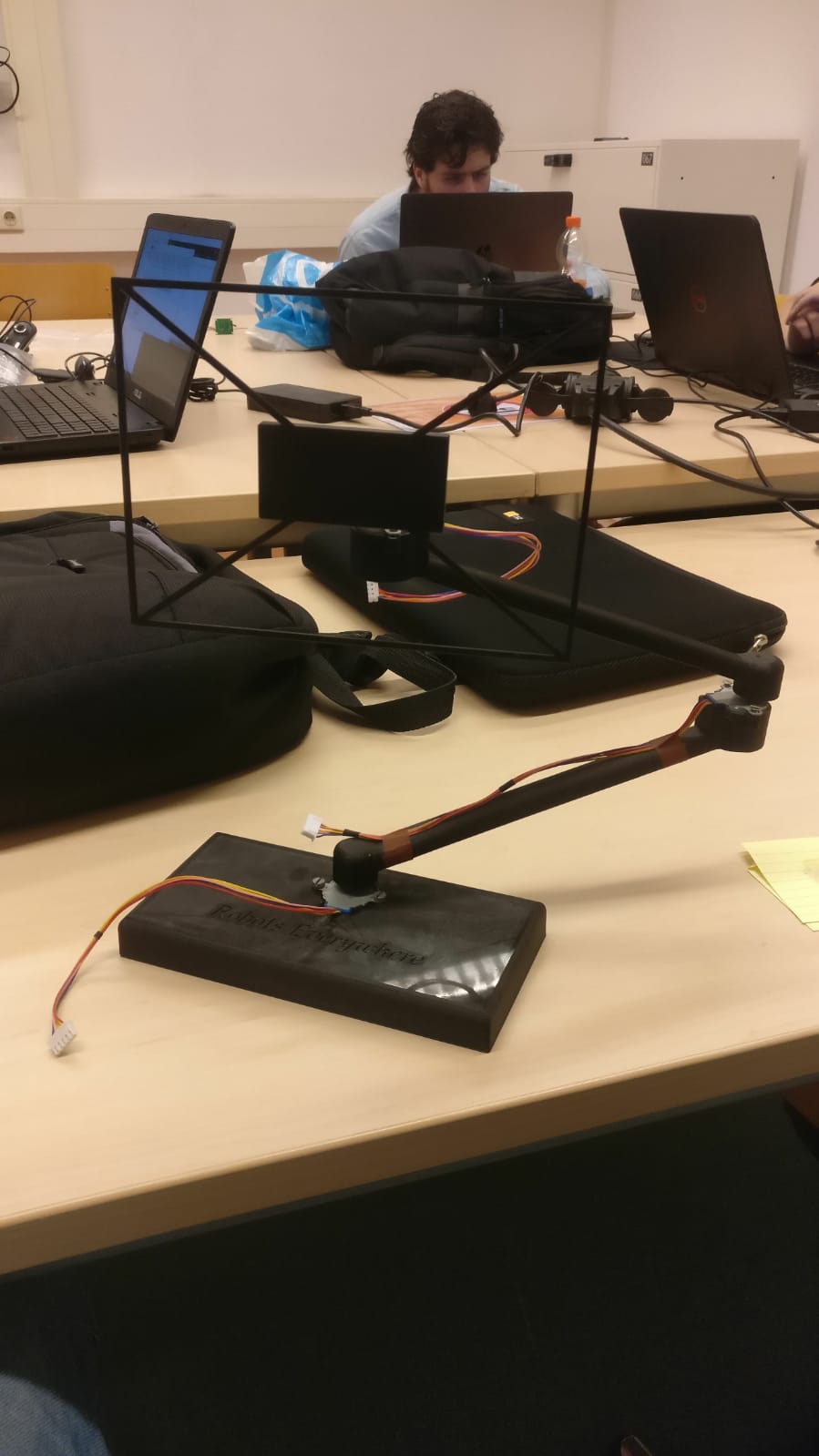

<div style="display: inline; width: 450px; float: right;"> | |||

[[File:prototype_mo.jpeg|400 px|thumb|Prototype of monitor arm]] | |||

</div> | |||

=== Introduction === | === Introduction === | ||

In tandem with | In tandem with conceptualization of the design, the team is also working on creating a functional prototype of an automated RSI preventing computer monitor stand. The goal is to create a prototype that comes as close as possible to our ideal design plan for such a prototype while taking into account budget and time limitations, given that we want to test the functionality of the system. The first test plan that the team devised focusses on whether the developed prototype meets the developed design plans. During week 5 the team will investigate how user satisfaction could be measured, and how a test plan for such research could be set up. | ||

=== Mechanical Design === | |||

When designing the prototype we came across a lot of ideas and concepts for a mechanical arm. This process is described on the [[0LAUK0 2018Q1 Group 2 - Prototype | Prototype page]]. Certain design choices and assumptions are explained as well as recommendations for components of the final monitor arm design. | |||

=== Software === | === Software === | ||

The team has continued to work with the software mentioned in the design section of this wiki. A switch was made from a dual camera, stereoscopic setup to a single camera + infrared sensor setup. The IR sensor is connected to the Arduino that will control movement of the monitor arm. The sensor is capable of measuring distance from 20 to 150 centimeters. | The team has continued to work with the software mentioned in the design section of this wiki. A switch was made from a dual camera, stereoscopic setup to a single camera + infrared sensor setup. The IR sensor is connected to the Arduino that will control movement of the monitor arm. The sensor is capable of measuring distance from 20 to 150 centimeters. | ||

All information regarding the software used in our prototype can be found on the [[0LAUK0 2018Q1 Group 2 - Programming overview]] page of our wiki. The following layout describes the main objects and functions of the program that will control the automated monitor. What is missing from this layout is the kinematic model, as this aspect will be developed when the 3D monitor arm is finished. | |||

=== Testing === | === Testing === | ||

We performed an initial behavior test using only the posture tracking software that we created in Processing using OpenCV. The goal of this experiment was to qualitatively inspect whether users really move around while working, such that our prototype would actually move around in a real-life setting. Full information on the experiment and its results can be viewed at [[0LAUK0 2018Q1 Group 2 - Behavior Experiment]]. | |||

Two types of research will be performed using the final prototype. First unit and integration tests will be executed to verify that the prototype is in line with our design requirements. This initial test can be found at [[0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing]]. | Two types of research will be performed using the final prototype. First unit and integration tests will be executed to verify that the prototype is in line with our design requirements. This initial test can be found at [[0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing]]. | ||

During the second test the user requirements will be assessed using the final prototype. Its main page can be found at [[0LAUK0 2018Q1 Group 2 - Prototype User Testing]]. | During the second test the user requirements will be assessed using the final prototype. Its main page can be found at [[0LAUK0 2018Q1 Group 2 - Prototype User Testing]]. | ||

= User Profiles = | |||

=== Input Parameters === | |||

Before the user can use the monitor, he/she first has to create an account. In this account, the user will be asked to fill in some physical properties (these are the input parameters). The following properties will be asked: | |||

* Name. | |||

* Date of birth. | |||

* Gender. | |||

* Height; in order for the monitor to determine at which height it should position itself. | |||

* Physical handicaps; the monitor could take these handicaps into account when moving and determining its position (how the monitor should exactly respond to every handicap should be further investigated). | |||

* Eye strength; in order for the monitor to determine the optimal distance and the optimal brightness. | |||

* Profile picture; in case more than one face is detected by the camera, the system could compare those faces to the profile picture of the user who has logged in. It will then focus on the face that is most similar to the profile picture. | |||

=== Motivation === | |||

By defining some so-called 'input parameters', the device has for every user some values to take as a reference point when determining an optimal position. This optimal position is based on the input parameters given. The given height of the user tells the monitor what the optimal height is, and the eye strength tells what the optimal distance to the user is. At the same time, these parameters can be used to detect an incorrect position (for instance, if the user is 2 meters tall but the monitor has to move 10 centimeters down, the user is almost certainly not sitting in a good posture). The profile picture can be used to 'memorise' the user's face. In case there are multiple faces spotted by the camera, the monitor will concentrate on the face that is most similar to the profile picture of the user logged in. Finally, some knowledge about physical handicaps of the user could be helpful, as the device could take these into account in determining the optimal position. How this would exactly affect the optimal position should be something for further investigation. | |||

= Recommendations = | |||

In order to make the device more user friendly, it would be a good idea to combine all systems. The idea is that the monitor, the chair and the table would eventually cooperate to create the optimal working experience for the user (see the picture of the Smart Flexplace System). Furthermore, after users have tested the device, additional investigation could establish whether it really makes a difference in reducing RSI and CANS complaints. If so, the device could become a success; if not, critical investigation and new adjustments would be needed to get the desired result. | |||

= Further Investigation = | = Further Investigation = | ||

| Line 220: | Line 267: | ||

* Some research could be done on finding an ‘undetectable’ speed; a moving speed of the monitor such that the user barely notices that the screen is moving. | * Some research could be done on finding an ‘undetectable’ speed; a moving speed of the monitor such that the user barely notices that the screen is moving. | ||

* Search for an optimal response time; it could be investigated how long the monitor should wait, when it knows that the movement of the user was not a spontaneous movement, before moving along with the user. What would be most convenient for the user? | * Search for an optimal response time; it could be investigated how long the monitor should wait, when it knows that the movement of the user was not a spontaneous movement, before moving along with the user. What would be most convenient for the user? | ||

* It could be investigated whether it is possible to implement the option to make the viewing | * It could be investigated whether it is possible to implement the option to make the viewing screen adjustable. Instead of moving towards or away from the user, the viewing screen could be projected smaller when the user is closer to the screen, and bigger when the user is further away from the screen. | ||

screen adjustable. Instead of moving towards or away from the user, the viewing screen | * As stated earlier, the final design could be manufactured according to the recommendations given in [http://cstwiki.wtb.tue.nl/index.php?title=0LAUK0_2018Q1_Group_2_-_Prototype#Movement Prototype]. With this final design experiments should be done to find out whether it really performs the way it should. | ||

could be projected smaller when the user is closer to the screen, and bigger when the user is | |||

further away from the screen. | |||

Latest revision as of 00:13, 30 October 2018

Project Robots Everywhere (Q1) - Group 2

Group 2 consists of:

- Hans Chia (0979848)

- Jared Mateo Eduardo (0962419)

- Roelof Mestriner (0945956)

- Mitchell Schijen (0906009)

Overview of all additional pages on our wiki

- 0LAUK0 2018Q1 Group 2 - SotA Literature Study

- 0LAUK0 2018Q1 Group 2 - Design Plans Research

- 0LAUK0 2018Q1 Group 2 - Programming overview

- 0LAUK0 2018Q1 Group 2 - Behavior Experiment

- 0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing

- 0LAUK0 2018Q1 Group 2 - Prototype User Testing

Progress

Weekly Presentations

At the start of each weekly meeting we will prepare a short presentation about our progress. After the weekly meetings the newest presentation will be added to this section of the wiki.

Final Presentation

Our final presentation took place on the 25th of October, 2018.

Progression on milestones

(list of completed milestones + comments about their completion + completion date)

2018-09-06

The team decided on a topic. During the kick-off meeting on Monday 2018-09-03 we brainstormed about several topics. During the first week we performed literature studies to inspect their originality and feasibility. We continued brainstorming and decided on a different topic, which you can read about in the remainder of this wiki page. The initial topics are listed below:

- Extending the Smart City concept by adding functionality to satellite navigation. When a driver enters a city, they are asked whether they want to reserve and drive to a free parking spot near their destination. If the driver agrees, their satnav will query the city network, which will then book a free parking spot near the driver's destination. The satnav will automatically change its destination to the chosen parking spot. We were under the impression that such a system would make driving in an unknown city less stressful, and increase the efficiency of city traffic. During an initial literature study we found that there were numerous implementations of this topic. Although each of these implementations differed from our own vision in some way, we eventually decided to look for a different topic.

- Creating a new Guitar Robot that builds upon the work done by PRE2017_4 Group 2. This topic appeared interesting to us as it included building an actual robot. We also had ideas for making a platform where disabled musicians can play each other’s songs, regardless of the specific modification that was done to their instrument. We were concerned about this topic as it had been done before and because there is only one person in our group who currently plays a musical instrument.

Week 2

During the first coaching session several new points of interest for the literature study were found. During week 2 we worked on expanding the literature study with more information about how many people have to deal with RSI, and how RSI can be prevented. The following sections were added to the literature study:

- how big of a problem is RSI

- RSI issues: upper extremity problems

- RSI prevention: how to properly set up a computer monitor

- RSI prevention: how to use breaks to combat RSI

These additions can be viewed on the 0LAUK0 2018Q1 Group 2 - SotA Literature Study page. The results of these additions to the literature study also provide key information about the creation of the design plans and the creation of a prototype, which will be the focus of week 3 of this project.

Week 3

During this week the group started working on milestone 4: creating design plans. Using the conclusions of the literature studies done in the past two weeks we decided to focus on the automated monitor arm for the first design plan and prototype. The team also tried an existing software library for computer vision that can aid in the creation of a prototype of the automated monitor arm.

Week 4

In order to generate room in our schedule for testing a prototype, this week focused on designing the prototype.

Mitchell worked on the facial tracking software. At the start of the week we obtained two identical webcams, which would be used for testing stereoscopy. However, I soon ran into two problems with this setup. In Processing one can specify a video stream from a camera using the name of the desired camera, the desired resolution and the desired framerate. If Processing can find a video mode that meets all of these parameters, a video capture can be initiated. However, it is not possible to specify a camera by the USB port that it uses to connect to the computer. As such both of the webcams registered in the programming environment as the same device. Combined the two cameras had over 70 video modes available, the first half of this list belonging to one camera, the second half belonging to the second camera. No matter which video modes were chosen, only one video capture was willing to start. I eventually found a work-around, where I had to change the display name of one of the webcams in my computer’s registry. This gave both cameras a distinct name, allowing both of them to be selected. However, this work-around had to be reapplied on almost every reboot of the computer, and it only worked for the specific USB port the camera was plugged in at the time.

The second problem entails that there is no documented method in the port of OpenCV to Processing to calibrate the two cameras. Using a mix of my computer’s integrated webcam and one external webcam or both external webcams made for a limited improvement in depth perception. In both cases I was presented with a highly distorted depth image.

Another issue that needs working on is performance. I am currently working on making the face detection multi-threaded, since the program hovers around five frames per second of output when face detection is performed. Implementing multi-threading immediately increased performance to 30 frames per second, but debugging is needed, as the program would lock up after a second or so. For now I will continue to test with a combination of my computer’s built-in webcam and one of the external webcams. During the next week we will have to swap one of the external cameras for one of a different make or model, such that both can be detected without the problems described above. I am also looking into the calibration of two cameras for stereoscopic vision, as calibration should be able to correct for the differences between different cameras.
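The multi-threading described above can be sketched roughly as follows. This is a minimal illustration in plain Java (the language underlying Processing), not the code of our prototype: the class name BackgroundDetector and its methods are hypothetical, and the detector is passed in as a generic function rather than an actual OpenCV call. The idea is that the draw loop only hands over the newest frame and reads the newest result, so it never waits for face detection to finish.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Function;

/** Hypothetical helper: runs a slow detector off the draw thread. */
final class BackgroundDetector<F, R> {
    private final AtomicReference<F> latestFrame = new AtomicReference<>();
    private final AtomicReference<R> latestResult = new AtomicReference<>();
    private final Function<F, R> detector;
    private volatile boolean running = false;
    private Thread worker;

    BackgroundDetector(Function<F, R> detector) {
        this.detector = detector;
    }

    /** Called from the draw loop: hand over the newest frame without blocking. */
    void submit(F frame) {
        latestFrame.set(frame);
    }

    /** Called from the draw loop: most recent result, or null if none yet. */
    R latest() {
        return latestResult.get();
    }

    /** One detection pass; returns false when no new frame was waiting. */
    boolean processOnce() {
        F frame = latestFrame.getAndSet(null); // take the newest frame, older ones are dropped
        if (frame == null) {
            return false;
        }
        latestResult.set(detector.apply(frame));
        return true;
    }

    /** Starts a daemon thread that keeps calling processOnce(). */
    void start() {
        running = true;
        worker = new Thread(() -> {
            while (running) {
                if (!processOnce()) {
                    try {
                        Thread.sleep(5); // idle briefly when there is nothing to do
                    } catch (InterruptedException e) {
                        return;
                    }
                }
            }
        });
        worker.setDaemon(true);
        worker.start();
    }

    void stop() {
        running = false;
        if (worker != null) {
            worker.interrupt();
        }
    }
}
```

In a Processing sketch, submit() and latest() would be called from draw(), while start() would be called once from setup(). Because the two threads only exchange data through atomic references, neither side holds a lock that the other needs; whether this avoids the lock-up we observed would still have to be verified.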

Hans worked on controlling the stepper motors that would be used in the prototype.

Roelof worked on the design of the monitor arm. A render of this design is available on our Google Drive project folder, and is visible here.

Jared worked on devising a test plan in order to assess whether the final prototype will function properly (see section prototype – testing). This first test plan details the unit, integration and user tests needed to evaluate if the prototype will function completely in line with the design plans.

Week 5

During this week the team focussed on creating a test plan for assessing the user requirements. We also further detailed user requirements and privacy concerns.

During this week the team also created designs of the monitor arm that can be 3D printed. Although these 3D printed components will not be able to support the weight of a monitor, they can be used to test the functionality of the monitor arm prototype.

Regarding the programming of the prototype, the calls to OpenCV were successfully migrated to a separate thread, allowing the program to run smoothly regardless of the update rate of the facial detection functions. We also switched to using an IR sensor mounted to the Arduino to measure depth. For week 6 we hope to finalize communication between Processing and the Arduino, and to find and incorporate the appropriate transforms for converting the output voltage of the IR sensor into distance in meters and for converting distance in pixels in the webcam feed into distance in meters.
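As an illustration of the two transforms mentioned above, the sketch below shows one possible shape they could take. It is written in plain Java and rests on assumptions that are not part of our prototype: the IR sensor response is approximated by a power-law fit whose constants (FIT_SCALE, FIT_EXPONENT) are made-up placeholders that would have to be fitted to measurements of the actual sensor, and the pixel conversion uses the standard pinhole camera relation with a focal length in pixels that would have to be obtained by calibrating the webcam. The clamp reflects the 20 to 150 centimeter range of the sensor.

```java
/** Hypothetical helper with the two sensor transforms. */
final class SensorTransforms {
    // Placeholder calibration constants (assumed, not measured).
    static final double FIT_SCALE = 0.60;      // distance in meters at 1 V
    static final double FIT_EXPONENT = -1.10;  // higher voltage means a closer object
    static final double MIN_RANGE_M = 0.20;    // sensor range: 20 cm
    static final double MAX_RANGE_M = 1.50;    // sensor range: 150 cm

    /** Converts the IR sensor output voltage to a distance in meters. */
    static double voltageToMeters(double volts) {
        if (volts <= 0.0) {
            return MAX_RANGE_M; // no usable reading: treat the user as far away
        }
        double distance = FIT_SCALE * Math.pow(volts, FIT_EXPONENT);
        return Math.max(MIN_RANGE_M, Math.min(MAX_RANGE_M, distance));
    }

    /**
     * Converts a length in pixels in the webcam image to meters, using the
     * pinhole relation: meters = pixels * depth / focal length (in pixels).
     */
    static double pixelsToMeters(double pixels, double depthMeters, double focalLengthPixels) {
        return pixels * depthMeters / focalLengthPixels;
    }
}
```

With these placeholder values, a face that is 100 pixels off-center at a measured depth of 0.6 meters and an assumed focal length of 600 pixels would be about 0.1 meters off-center.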

Week 6

During this week the team had a closer look at the requirements and both test plans, and implemented changes in both that are carried over to the wiki. The team brainstormed on more proactive behaviors that the system could have, and adapted the test plans such that they can be used to test these proactive behaviors. The literature search for finding appropriate constraints regarding movement speed of the monitor continues, but for now it seems that testing with the prototype will give some of the first benchmarks in this area of research.

3D printing of the components continued, and the program that will power the prototype was expanded upon. Posture tracking is now being worked on, with the program being able to figure out if the user has changed their posture. The program can now also track how long the user maintains their posture. We want to expand this such that the prototype can proactively communicate to the user that they have held the same posture for too long. An overview of how the program works is included in the prototype section of the wiki.
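The posture tracking described above could look roughly like the following sketch. It is a simplified illustration in plain Java rather than the program of the prototype: the class PostureTimer, its method names and both of its parameters are hypothetical. A posture counts as changed when the tracked face position moves further than a threshold away from the position at which the current posture started, and the timer reports how long the current posture has been held.

```java
/** Hypothetical helper: detects posture changes and times the current posture. */
final class PostureTimer {
    private final double moveThreshold; // movement that counts as a new posture (same unit as x and y)
    private final long maxHoldMillis;   // how long a posture may be held before a reminder
    private double refX;
    private double refY;
    private long postureStartMillis;
    private boolean hasReference = false;

    PostureTimer(double moveThreshold, long maxHoldMillis) {
        this.moveThreshold = moveThreshold;
        this.maxHoldMillis = maxHoldMillis;
    }

    /** Feeds one face position; returns true when the posture changed on this sample. */
    boolean update(double x, double y, long nowMillis) {
        if (!hasReference) {
            refX = x;
            refY = y;
            postureStartMillis = nowMillis;
            hasReference = true;
            return false;
        }
        if (Math.hypot(x - refX, y - refY) > moveThreshold) {
            refX = x; // the new position becomes the reference posture
            refY = y;
            postureStartMillis = nowMillis;
            return true;
        }
        return false;
    }

    /** Time in milliseconds that the current posture has been held. */
    long heldMillis(long nowMillis) {
        return hasReference ? nowMillis - postureStartMillis : 0L;
    }

    /** True when the posture has been held for at least the allowed time. */
    boolean shouldRemind(long nowMillis) {
        return heldMillis(nowMillis) >= maxHoldMillis;
    }
}
```

Comparing against the position at which the posture started, rather than against the previous sample, means that a slow drift eventually registers as a posture change as well. Suitable values for the threshold and the maximum hold time are not yet known and would have to come from the literature or from testing.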

Week 7

During this week the team continued work on the test plans. More components of the monitor arm were 3D printed, and the software is being expanded to be able to gather data on user movement such that we can use statistical software to map how much the user changes their posture while working with their computer. We hope to finish these functionalities at the start of week 8, and to perform an observation where a test subject's posture is tracked while they work on their own laptop. On the Arduino side of things, motor control has been expanded, now that larger sections of the prototype have become functional. Unfortunately some components were not 3D printed properly, which has caused a delay in finalizing the prototype.

Topic

Topic in a nutshell

Our project centers around designing an RSI robot: a smart desk that automatically adjusts itself to the posture of its user to improve comfort, increase productivity and prevent medical conditions that are part of Repetitive Strain Injury (RSI). The working name of this concept is the Smart Flexplace System (SFS).

Problem statement and objectives

Flexplaces are widely used by large companies nowadays. Often only a handful of directors have their own office, while the rest of the employees can work anywhere they want, improving their productivity and work attitude. The new Main Building at the TU/e, ATLAS, will also offer only flexplaces. The downside of flexplaces is that the workplace is not adjusted to the person working at it, which can lead to bad working conditions. Most users do not know exactly what the best posture is or when to take a short break from work, which can lead to Repetitive Strain Injury (RSI).

To solve this problem 0LAUK0 Group 2 would like to introduce the Smart Flexplace System – TU/e ATLAS 2019 project. In this project the working condition problems of the flexplaces in ATLAS will be solved by studying multiple fields of interest and creating a Smart Flexplace System (SFS) by combining software with adjustable office hardware. This system will be able to adjust automatically depending on the user's posture and profile.

User Description

The RSI preventive AI will be attached to tables, chairs and computers. The users of this AI will therefore be people who work with computers regularly (daily). This will be the case for people working in the ICT sector, as well as for students, project managers, etc. In this study the focus will be specifically on flexible working spaces. Everyone making use of these flexible working spaces can be considered to be the users.

User Requirements

User-based Requirements

The aim of this AI is to prevent RSI, so in general the AI-controlled furniture (table, chair, etc.) must be able to adjust itself in order to prevent RSI. This will be done by working with a user interface. The user can use his or her account to log on to the AI system. This account needs to contain information about the height, size and disabilities of the user, so that the system can set itself to the position that best prevents RSI. Secondly, every now and then the AI needs to readjust itself (this can be done by warning the user to sit differently, or by moving into another position itself). The user should also be able to adjust the chair, table or computer manually, so the interface needs an option to steer the system manually.

Technical-based Requirements

Considering technical requirements, all re-adjustable instruments need to have a little motor in order to readjust themselves in the first place. These motors need to be able to receive orders from both the user interface and the AI system, in order to turn the right amount of degrees.

Primary Requirements

The requirements that our device must fulfill are listed below. Some secondary requirements can be found in the user testing section.

- RSI/CANS is reduced.

- The device is compatible with other system components.

- The device is easy to use; it is user friendly.

- The device doesn't distract or annoy the user.

- The user will be encouraged by the system to take different postures from time to time.

- There is no privacy violation.

Preparation

Approach

As you may have read in the topic section of this wiki, our concept entails the research and design of a smart desk that reduces/prevents RSI. We will use a literature study to gain insights about topics relevant to our goal. We will use both results from the literature study as well as information from our contact person, an Arbo-coordinator at the TU/e, to better define user requirements. From this we will develop design plans that encompass the main components of our concept. We aim to validate those plans with our contact person, and we want to develop one or more of these design plans into a working prototype. After this prototype has been validated we will present our process, as well as the results of our research, design and prototyping in the final presentation of this course.

Milestones

List of milestones

We have defined several milestones that will guide the progression of our project.

- Choose a research topic.

- Research the State-of-the-Art regarding our topic by performing a literature study.

- Use our contact person at the TU/e to gather additional information regarding our case.

- Create design plans that describe the different aspects of our envisioned product.

- Validate our design plans with our contact person.

- Build a prototype that focusses on one or more of our design plans.

- Validate the prototype with our contact person.

- Produce a final presentation in which we will discuss our process, design plans and prototype(s).

Clarification of the milestones

The State-of-the-Art literature study may give us insights that would require us to modify our ultimate goal within this project.

One of our team members has managed to get in touch with an Arbo-coordinator at the TU/e. We use the new Atlas building as a case to focus on an application of our concept. We would also like to ask this contact person to help validate our design plans and any prototype that we are able to build.

The design plans will encompass topics such as the user interface, user profiles, the design of electronically adjustable desks and the design of face tracking monitors.

Deliverables

The following deliverables will be created by the group:

- Design plans that encompass the relevant topics of our concept.

- One or more prototypes that implement our design plans.

- A final presentation in which we will discuss the design plans and the prototype(s).

Planning

Our group's planning is available for inspection here

State-of-the-Art literature study

The State-of-the-Art literature study has its own page, which can be found at 0LAUK0 2018Q1 Group 2 - SotA Literature Study.

Design

Introduction

In the literature study we found that more office workers suffered from issues pertaining to their neck and back than to their arms or hands. We made it clear in our deliverables section that we intend to build at least one prototype that brings one or more of our design plans to life. Given this finding, the team decided that the prototype would focus on minimizing neck issues by tackling the RSI issue of a maladjusted computer monitor.

The prototype will be an automatically adjusting computer monitor stand. It will feature two cameras that will make use of stereoscopic video to measure the distance between the user and the monitor. It will also keep track of head movements that the user might make. These measurements are used to move the monitor when necessary. Moving the monitor is necessary when the user changes their posture in such a way that it is at odds with RSI prevention guidelines (for example, sitting too close to the monitor). Actuators in the base of the monitor will allow the monitor to move to an optimal, RSI-preventing position.

We found that to reduce or prevent RSI the user needs to stay in motion by changing posture. During a visit to Tijn Borghuis at the IPO building we were shown one of the height-adjustable desks that will be used in the Atlas building. Since these height-adjustable desks can work as both a sitting desk and a standing desk, it is safe to assume that the user will have varying posture throughout the working day when using such a desk. This reinforces our case for the integration of an automatically adjusting monitor (along with the fact that there are many manually adjustable monitor arms already on the market).

The team started out by doing research into actuators suitable for moving the monitor (strong enough to carry the weight of a monitor, silent enough not to annoy/cause hearing loss for the user). The team also looked into current face tracking technologies. While investigating current software libraries we came across the Open Source Computer Vision Library, OpenCV, which features over 2500 optimized algorithms for computer vision (OpenCV team, n.d.).

For now a port of OpenCV (Borenstein, 2013) for the Processing programming language (Processing Foundation, n.d.) seems highly interesting, as this software is readily available and in our own testing we found that its demos worked straight away. However, testing stereoscopy will have to wait until we have the required camera equipment.

Regarding the user experience, the team has to work out what the threshold will be for moving the monitor. We do not want the monitor to move with every movement of the user, as some of these movements have nothing to do with looking at the monitor (for instance, if the user looks down to read something from their paper notes, the monitor should not move in this situation). We also have to look into minimizing privacy concerns. Furthermore, we could measure the variation in user posture over time using logs of the face tracking software.
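One possible way to express such a movement threshold is a dead band combined with a dwell time: small offsets are ignored, and larger offsets only trigger a move once they have persisted for a while, so that a brief glance at paper notes does not move the monitor. The sketch below is only an illustration of that idea. The class name `MoveDecider` and the values of 5 cm and 3 seconds are assumptions made for the example, not values the team has decided on.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MoveDecider:
    """Decides whether the monitor should follow the user's head (illustrative only)."""

    dead_band_cm: float = 5.0  # assumed value: offsets up to this size are ignored
    dwell_s: float = 3.0       # assumed value: offset must persist this long
    # Time at which the offset first left the dead band, or None if inside it.
    _since: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, offset_cm: float, now_s: float) -> bool:
        """Feed one measurement; return True when the monitor should move."""
        if abs(offset_cm) <= self.dead_band_cm:
            # Back inside the dead band: treat earlier movement as a glance.
            self._since = None
            return False
        if self._since is None:
            self._since = now_s
        return now_s - self._since >= self.dwell_s
```

A short glance resets the timer, so only a sustained change of posture results in a move. Suitable values for both parameters would have to come from user testing.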

References

- OpenCV team. (n.d.). About. Retrieved from [1]

- The Processing Foundation. (n.d.). Processing. Retrieved from [2]

- Borenstein, G. (2013). OpenCV for Processing [software library]. Retrieved from [3]

Literature study

Our exact findings are available on the 0LAUK0 2018Q1 Group 2 - Design Plans Research page.

Privacy Concerns

The main goal of our system is to detect if the user has bad posture while working, and to make sure that working in an RSI-preventive fashion is as seamless and effortless as possible; every aspect of the user’s desk automatically adapts to their posture.

The main privacy concern of this system is that it might resemble the surveillance equipment described in 1984 (Orwell, 1949). Indeed, the user will have a camera and IR sensor looking in their direction for the entire duration of office work. As such it is pertinent to explain that our system is not capable of the atrocities that are often associated with video monitoring.

No capabilities to store and analyze data in the long term

The system uses a webcam to detect faces that are looking at the monitor. The system operates entirely in RAM, meaning that no permanent storage is used. The only time the system accesses the filesystem is during startup, when several configuration files are read, e.g. to choose the appropriate camera.

Limiting face detection

At a set interval the system will use the current frame of the webcam output to determine how many faces are looking at the monitor. The prototype will most likely use a simple image matching algorithm to determine which of the faces belongs to the user. We assume that the user will be sitting in an RSI-preventing posture in front of the monitor at startup. The system will store in memory the pixel matrix of the face detected closest to the center of its field of view. When more than one face is detected, the stored copy of the user’s face is used to determine which of the faces belongs to the user. Please note that the system first tries to find the correct face using depth cues; a small face most likely belongs to a person standing very far away (unless the user is a small child, but this would be detected during startup when the face is initially detected). The system could also predict the location of the user’s face, based on acceleration calculated from previous input frames. This last method would not require a copy of the pixel matrix of the user’s face, but it might be unable to always find the user’s face.
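The selection steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the prototype's code. Faces are assumed to be bounding boxes of the form (x, y, width, height). The function names and the `min_size_ratio` value of 0.6 are made up for the example. The image matching step is replaced here by a simpler stand-in: choosing the candidate closest to the last known position of the user's face.

```python
from math import hypot
from typing import List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height), origin at top-left


def center(face: Rect) -> Tuple[float, float]:
    """Return the center point of a bounding box."""
    x, y, w, h = face
    return (x + w / 2.0, y + h / 2.0)


def pick_initial_face(faces: List[Rect], frame_w: int, frame_h: int) -> Optional[Rect]:
    """At startup, take the face closest to the center of the frame."""
    if not faces:
        return None
    cx, cy = frame_w / 2.0, frame_h / 2.0
    return min(faces, key=lambda f: hypot(center(f)[0] - cx, center(f)[1] - cy))


def pick_user_face(faces: List[Rect], reference: Rect,
                   min_size_ratio: float = 0.6) -> Optional[Rect]:
    """Pick the user's face among several detections.

    Depth cue first: faces much narrower than the reference face are assumed
    to belong to people standing far away and are dropped. Among the rest,
    the face nearest to the reference position wins (stand-in for matching).
    """
    ref_w = reference[2]
    candidates = [f for f in faces if f[2] >= min_size_ratio * ref_w]
    if not candidates:
        return None
    rx, ry = center(reference)
    return min(candidates, key=lambda f: hypot(center(f)[0] - rx, center(f)[1] - ry))
```

In use, the result of `pick_initial_face` at startup would serve as the `reference` for later frames, and would be updated whenever the user's face is found again.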

Coping with cybercrime

An industry-ready version of this system needs to be able to deal with cybercrime. We feel that two methods should be employed to ensure the safety of the users. First, the system should run on a separate server that is not connected to the internet.

An initial concept of the system envisioned it running as a background program on the user’s computer, where the user would be free to opt out of using the system if they wanted to. However, if the user’s computer were targeted with malware, our system could be compromised. This is a point where we could still perform extra research. On the one hand, running the system on a server that is physically and digitally separated from possible malware on the user’s computer is beneficial. However, it also introduces a single point of failure (take out the server, and the entire system is taken down or compromised), and it would make it harder for the user to use the webcam already built into their monitor for video calls, such as conference calls. On the other hand, during testing we found that the face detection methods in OpenCV are already multithreaded and have no issue saturating the entire CPU. This means that, unless we can make the system work reliably with very few samples (one every couple of seconds), we run the risk of the user’s computer using more electricity and producing more heat (environmental concerns). On low-end systems this could drastically reduce productivity, and even on higher-end systems it could cause an unwarranted decrease in productivity.

Furthermore, the system should enforce a method of memory protection (sandboxing) that makes sure that no other program is capable of accessing and/or modifying the memory used by the system. In practice this means employing technology that is similar to the anti-cheat software used in certain video games. For more information, see https://en.wikipedia.org/wiki/Cheating_in_online_games#Anti-cheating_methods_and_limitations

References

- Orwell, G. (1949). Nineteen eighty-four. London: Secker and Warburg.

Prototype

Introduction

In tandem with conceptualization of the design, the team is also working on creating a functional prototype of an automated RSI preventing computer monitor stand. The goal is to create a prototype that comes as close as possible to our ideal design plan for such a prototype while taking into account budget and time limitations, given that we want to test the functionality of the system. The first test plan that the team devised focusses on whether the developed prototype meets the developed design plans. During week 5 the team will investigate how user satisfaction could be measured, and how a test plan for such research could be set up.

Mechanical Design

When designing the prototype we came across a lot of ideas and concepts for a mechanical arm. This process is described on the Prototype page. Certain design choices and assumptions are explained as well as recommendations for components of the final monitor arm design.

Software

The team has continued to work with the software mentioned in the design section of this wiki. A switch was made from a dual camera, stereoscopic setup to a single camera + infrared sensor setup. The IR sensor is connected to the Arduino that will control movement of the monitor arm. The sensor is capable of measuring distance from 20 to 150 centimeters.
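Because the sensor only measures reliably between 20 and 150 centimeters, readings outside that range are best treated as invalid rather than as real distances. The sketch below shows one way this could be handled: discard out-of-range readings and take the median of the remaining ones to suppress outliers. It is written in Python purely for illustration (the prototype itself uses an Arduino and Processing). The function name and the use of a median filter are assumptions, not a description of the prototype's code.

```python
from statistics import median
from typing import Iterable, Optional

MIN_CM = 20.0   # lower bound of the sensor range stated above
MAX_CM = 150.0  # upper bound of the sensor range stated above


def filtered_distance(readings_cm: Iterable[float]) -> Optional[float]:
    """Return the median of the in-range readings, or None if none are valid."""
    valid = [r for r in readings_cm if MIN_CM <= r <= MAX_CM]
    return median(valid) if valid else None
```

Returning `None` when no reading is valid lets the caller decide to leave the monitor where it is, for example when the user has left the desk.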

All information regarding the software used in our prototype can be found on the 0LAUK0 2018Q1 Group 2 - Programming overview page of our wiki. The following layout describes the main objects and functions of the program that will control the automated monitor. What is missing from this layout is the kinematic model, as this aspect will be developed when the 3D monitor arm is finished.

Testing

We performed an initial behavior test using only the posture tracking software that we created in Processing using OpenCV. The goal of this experiment was to qualitatively inspect whether users really move around, such that our prototype would actually have to move in a real-life setting. Full information on the experiment and its results can be viewed at 0LAUK0 2018Q1 Group 2 - Behavior Experiment.

Two types of research will be performed using the final prototype. First, unit and integration tests will be executed to verify that the prototype is in line with our design requirements. This initial test can be found at 0LAUK0 2018Q1 Group 2 - Prototype Functionality Testing.

During the second test the user requirements will be assessed using the final prototype. Its main page can be found at 0LAUK0 2018Q1 Group 2 - Prototype User Testing.

User Profiles

Input Parameters

Before the user can use the monitor, they first have to create an account. In this account, the user will be asked to fill in some physical properties (these are the input parameters). The following properties will be asked for:

- Name.

- Date of birth.

- Gender.

- Height (body length); in order for the monitor to determine at which height it should position itself.

- Physical handicaps; the monitor could take these handicaps into account when moving and determining its position (how the monitor should exactly respond to every handicap should be further investigated).

- Eye strength; in order for the monitor to determine the optimal distance and the optimal brightness.

- Profile picture; if the camera detects more than one face, the system could compare those faces to the profile picture of the user who has logged in. It will then focus on the face that is most similar to the profile picture.

Motivation

By defining these so-called 'input parameters', the device has reference values for every user when determining an optimal position. This optimal position is based on the input parameters given. The user's height (body length) tells the monitor what the optimal height is, and the eye strength tells it what the optimal distance to the user is. At the same time, these parameters can be used to detect an incorrect posture (for instance, if the user is 2 meters tall but the monitor has to move 10 centimeters down, the user most likely does not have a good posture). The profile picture can be used to 'memorise' the user's face. If the camera spots multiple faces, the monitor will focus on the face that is most similar to the profile picture of the user who is logged in. Finally, some knowledge about physical handicaps of the user could be helpful, as the device could take these into account when determining the optimal position. How this would exactly affect the optimal position requires further investigation.

Recommendations

In order to make the device more user-friendly, it would be a good idea to combine all systems. The idea is that the monitor, the chair and the table would all cooperate in the end, to create the optimal working experience for the user (see the picture of the Smart Flexplace System). Furthermore, after users have tested the device, it could be investigated whether it really makes a difference in reducing RSI and CANS complaints. If so, the device could become a success; if not, a critical review and new adjustments would be needed to get the desired result.

Further Investigation

Should our design become a real project, there are several things that could be investigated further:

- Some research could be done on finding an ‘undetectable’ speed; a moving speed of the monitor such that the user barely notices that the screen is moving.

- Search for an optimal response time; it could be investigated how long the monitor should wait, when it knows that the movement of the user was not a spontaneous movement, before moving along with the user. What would be most convenient for the user?

- It could be investigated whether it is possible to implement the option to make the viewing screen adjustable. Instead of moving towards or away from the user, the viewing screen could be projected smaller when the user is closer to the screen, and bigger when the user is further away from the screen.

- As stated earlier, the final design could be manufactured according to the recommendations given in Prototype. With this final design experiments should be done to find out whether it really performs the way it should.
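For the adjustable viewing screen idea in the list above, one plausible starting point is to keep the visual angle of the displayed content constant. Content of size s viewed from distance d subtends an angle of 2·atan(s / (2d)), so keeping that angle constant means scaling the size in proportion to the distance. The sketch below illustrates this; the function names and the example numbers are assumptions, and whether a constant visual angle is actually the most comfortable choice would be part of the investigation.

```python
from math import atan, degrees


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Angle (in degrees) subtended by content of the given size at the given distance."""
    return degrees(2 * atan(size_cm / (2 * distance_cm)))


def scaled_size_cm(ref_size_cm: float, ref_distance_cm: float,
                   distance_cm: float) -> float:
    """Size that keeps the visual angle equal to that of the reference setup."""
    if ref_distance_cm <= 0 or distance_cm <= 0:
        raise ValueError("distances must be positive")
    return ref_size_cm * distance_cm / ref_distance_cm
```

For example, content shown 30 cm wide at a viewing distance of 60 cm would be shown 45 cm wide at 90 cm, which leaves its visual angle unchanged.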