Autonomous Referee System


<div align="left">

<font size="4">'An objective referee for robot football'</font>

</div>

<div STYLE="float: left; width:80%">

</div><div style="width: 35%; float: right;"><center>{{:Content_MSD16_large}}</center></div>

__NOTOC__

A football referee can hardly ever make "the correct decision", at least not in the eyes of the thousands or sometimes millions of fans watching the game. When a decision benefits one team, there will always be complaints from the other side. It is often forgotten that the referee is only human. To make the game fairer, the use of technology to support the referee is increasing. Nowadays, several stadiums are already equipped with [https://en.wikipedia.org/wiki/Goal-line_technology goal-line technology] and referees can be assisted by a [http://quality.fifa.com/en/var/ Video Assistant Referee (VAR)]. If the use of technology keeps increasing, a human referee might one day become entirely obsolete. The proceedings of a match could be measured and evaluated by a system of sensors. With enough (correct) data, such a system would be able to recognize certain events and make decisions based on these events.

The aim of this project is to do just that: build a system that can evaluate a soccer match, detect events and make decisions accordingly. Building a functioning system that could actually replace the human referee would probably take a couple of years, which we do not have. This project therefore focuses on creating a high-level system architecture and giving a proof of concept by refereeing a robot-soccer match, where the refereeing is currently also still done by a human. The project builds upon the [[Robotic_Drone_Referee|Robotic Drone Referee]] project executed by the first generation of Mechatronics System Design trainees.

To navigate through this wiki, the internal navigation box on the right side of the page can be used.

<center>[[File:tumbnail_test_video.png|center|750px|link=https://www.youtube.com/embed/XyRR3rPQ4R0?autoplay=1]]</center>

=Team=

This project was carried out for the second module of the 2016 MSD PDEng program. The team consisted of the following members:

* Akarsh Sinha

* Farzad Mobini

* Joep Wolken

* Jordy Senden

* Sa Wang

* Tim Verdonschot

* Tuncay Uğurlu Ölçer

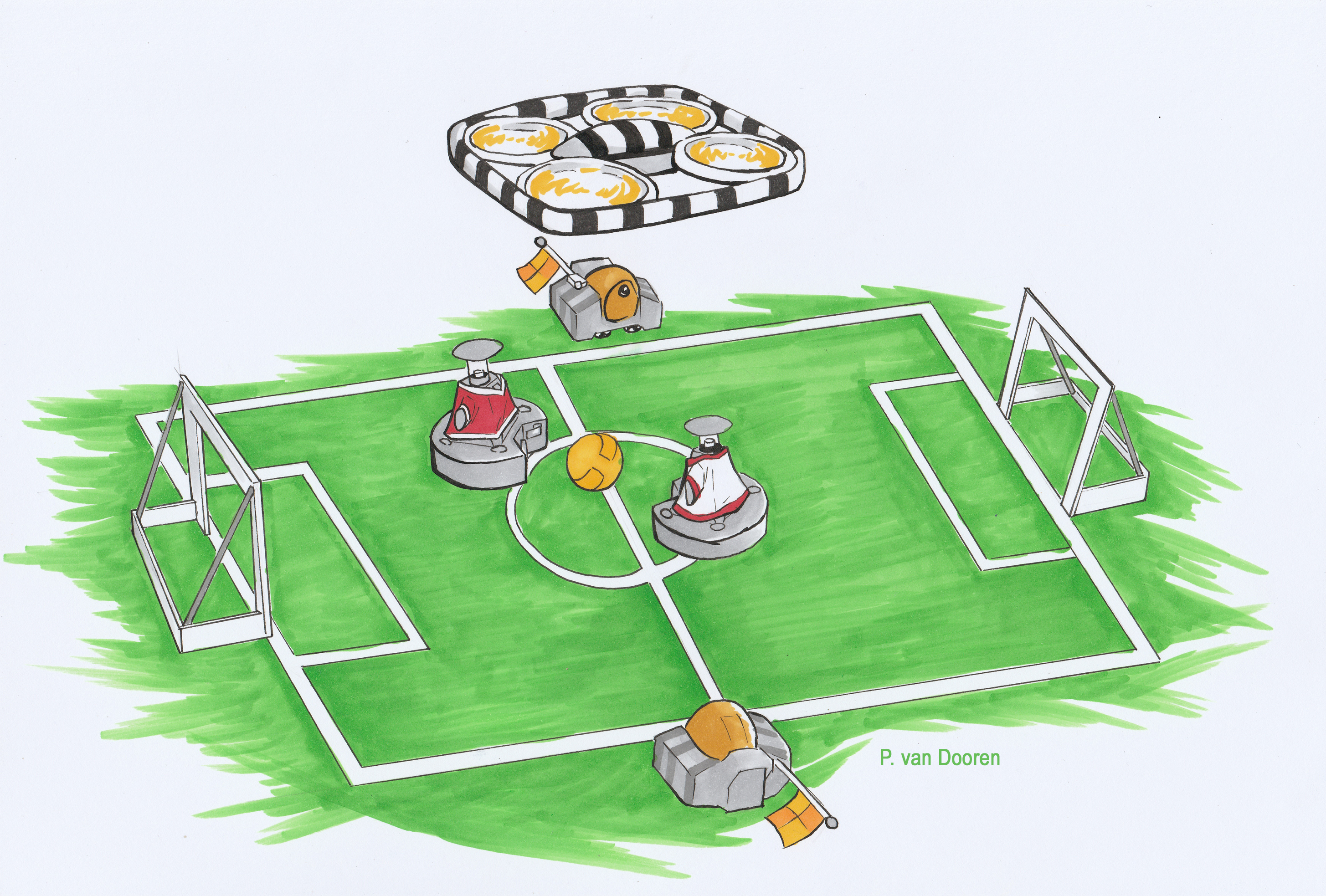

<center>[[File:Drone Ref.png|thumb|center|1000px|Illustration by Peter van Dooren, BSc student at Mechanical Engineering, TU Eindhoven, November 2016.]]</center> | |||

< | |||

[ | |||

=Acknowledgements=

A project like this is never done alone. We would like to express our gratitude to the following parties for their support and input to this project.

<center>[[File:logoAcknowledgements.png|center|1000px]]</center>

<!--

==Ground Robot==

** The GR should be able to keep the ball in sight of its Kinect camera. If the ball is lost, GR should try to find it again with the Kinect.

** Since the ball is best tracked with the Kinect, the omni-vision camera can be used to keep track of the players.

<br>

==Drone==

*Parrot AR.Drone 2.0 Elite Edition

*19 min. flight time (ext. battery)

*720p camera (but used as 360p)

*~70° diagonal FOV (measured)

*Image ratio 16:9

===Drone control===

*Has its own software & controller

*Possible to drive from MATLAB using arrow keys

*Driving via position commands and the format of the input data are still to be done

*x, y, θ position feedback via top cam and/or UWBS

*z position will be constant and chosen according to the FOV
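
The relation between the constant z position and the field of view can be sketched with a little trigonometry. The Python sketch below assumes an ideal pinhole camera pointing straight down at a flat field, which is an assumption and not something these notes state; the function names <code>camera_footprint</code> and <code>altitude_for_width</code> are hypothetical. It uses the measured ~70° diagonal FOV and the 16:9 image ratio listed above.

```python
import math


def camera_footprint(altitude_m, diagonal_fov_deg=70.0, aspect=(16, 9)):
    """Return (width_m, height_m) of the ground area seen by the camera.

    Assumes an ideal pinhole camera looking straight down (hypothetical setup).
    """
    aspect_w, aspect_h = aspect
    # Length on the ground that corresponds to the image diagonal.
    diagonal_m = 2.0 * altitude_m * math.tan(math.radians(diagonal_fov_deg) / 2.0)
    # Split the diagonal into width and height using the image ratio.
    norm = math.hypot(aspect_w, aspect_h)
    return diagonal_m * aspect_w / norm, diagonal_m * aspect_h / norm


def altitude_for_width(width_m, diagonal_fov_deg=70.0, aspect=(16, 9)):
    """Return the altitude at which the footprint is width_m wide."""
    # The footprint scales linearly with altitude, so scale from 1 m.
    width_at_1m, _ = camera_footprint(1.0, diagonal_fov_deg, aspect)
    return width_m / width_at_1m
```

Under these assumptions, a flight height of 2 m gives a footprint of roughly 2.4 m by 1.4 m. Lens distortion and camera tilt would change these numbers, so the result is only a first estimate for choosing z.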

==Positioning==

***#Detect if defaulter changes direction of movement within t seconds

</p>

==Image processing==

===Capturing images===

'''Objective''': Capturing images from the (front) camera of the drone.

'''Method''':

*MATLAB

** ffmpeg

** ipcam

** gigecam

** hebicam

* C/C++/Java/Python

** opencv

…

No method has been chosen yet. ipcam, gigecam and hebicam have been tested and do not work with the camera of the drone. FFmpeg has also been tested and does work, but capturing a single image takes 2.2 s, which is far too slow. It may therefore be better to use software written in C/C++ instead of MATLAB.

===Processing images===

'''Objective''': Estimating the player (and ball?) positions from the captured images.

'''Method''': Detect the ball position (if the ball is in the image) based on its (orange/yellow) color, and detect the player positions based on their shape/color (?).
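
The color-based ball detection described above can be illustrated with a minimal Python sketch that uses only the standard library. It is not the method chosen in the project: the function name <code>find_ball</code>, the hue range and the saturation/value thresholds are all assumptions picked to cover orange and yellow, and a real implementation would more likely use an image library such as OpenCV.

```python
import colorsys


def find_ball(pixels, hue_range=(0.05, 0.20), min_sat=0.5, min_val=0.4):
    """Return the (row, col) centroid of ball-colored pixels, or None.

    pixels is a list of rows; each row is a list of (r, g, b) tuples
    with channel values in 0..255. All thresholds are assumed values.
    """
    row_sum = col_sum = count = 0
    for r, row in enumerate(pixels):
        for c, (red, green, blue) in enumerate(row):
            # Convert to HSV so that color can be thresholded on hue.
            h, s, v = colorsys.rgb_to_hsv(red / 255, green / 255, blue / 255)
            if hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None  # ball is not in the image
    return row_sum / count, col_sum / count
```

Thresholding on hue instead of raw RGB values makes the detection less sensitive to brightness changes, which is why the sketch converts each pixel to HSV first. The centroid is in pixel coordinates and still has to be mapped to field coordinates.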

== Top Camera ==

The topcam is a camera that is fixed above the playing field. This camera is used to estimate the location and orientation of the drone. This estimate is used as feedback for the drone to position itself at a desired location.

The topcam can stream images to the laptop at a frame rate of 30 Hz, but searching the image for the drone (i.e. image processing) might be slower. This is not a problem, since the positioning of the drone itself is far from perfect and not critical either. As long as the target of interest (ball, players) is within the field of view of the drone, it is acceptable.
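
The acceptance criterion above (target within the drone's field of view) can be written as a simple geometric check on the x, y, θ estimate from the topcam. The Python sketch below is an illustration under stated assumptions: the camera footprint is taken to be a rectangle centred under the drone with its width along the drone heading, and the name <code>target_in_view</code> and the <code>margin</code> parameter are hypothetical.

```python
import math


def target_in_view(drone_x, drone_y, drone_theta, target_x, target_y,
                   footprint_w, footprint_h, margin=0.0):
    """Return True if the target lies inside the camera footprint.

    Positions are in field coordinates (metres), drone_theta in radians.
    The footprint is assumed to be centred under the drone, with its
    width axis aligned with the drone heading.
    """
    dx = target_x - drone_x
    dy = target_y - drone_y
    # Rotate the offset from the field frame into the drone frame.
    local_x = math.cos(drone_theta) * dx + math.sin(drone_theta) * dy
    local_y = -math.sin(drone_theta) * dx + math.cos(drone_theta) * dy
    return (abs(local_x) <= footprint_w / 2.0 - margin
            and abs(local_y) <= footprint_h / 2.0 - margin)
```

A positive <code>margin</code> shrinks the accepted area, which could leave some room for the imperfect drone positioning mentioned above.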

=References=

<references/>

-->

Latest revision as of 16:07, 24 October 2017


- 1. Main

- 2. Project

- 2.1 Background

- 2.2 System Objectives

- 2.3 Project Scope

- 3. System Architecture

- 3.1 Paradigm

- 3.2 Layered Approach

- 3.3 System Layer

- 3.4 Approach Layer

- 3.5 Implementation Layer

- 4. Implementation

- 4.1 Tasks

- 4.2 Skills

- 4.3 World Model

- 4.4 Hardware

- 4.5 Supervisory Block

- 4.6 Integration

- 6. Manuals
