Mobile Robot Control 2024 The Iron Giant



|1228489

|}

The final work is organized following a normal report structure, as can be seen in the table of contents. The localization exercises are improved based on the midterm feedback and placed before the introduction. The content in this report mostly reflects the new work after the midterm exercises and contains as little repetition as possible.

== Localization exercises improvement ==


The algorithm works quickly and accurately, such that the estimated position closely follows the robot. This is verified on the real setup, as shown in the video linked below.

'''[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/4_localization/localization_shown_on_real_bot.mp4?ref_type=heads Localization test on Hero]'''

Although it is somewhat difficult to see, Hero has the difficult task of localizing itself even though one of the larger walls is removed at the start. Hero's estimate jumps forward because it thinks it is already further along the hallway, but as soon as Hero actually reaches the end of the hallway, it finds its position again and moves through the remainder of the map without any problems. The final shot of the video clearly shows the laser data mapped over the RViz map, showing a close match.

== Introduction ==

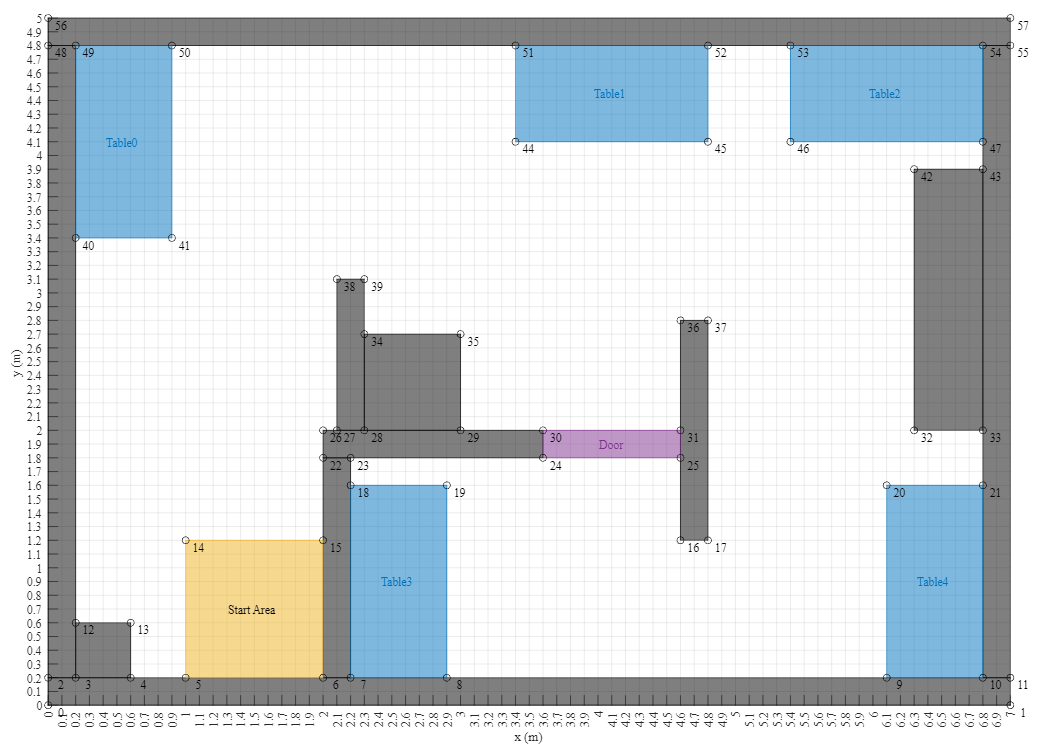

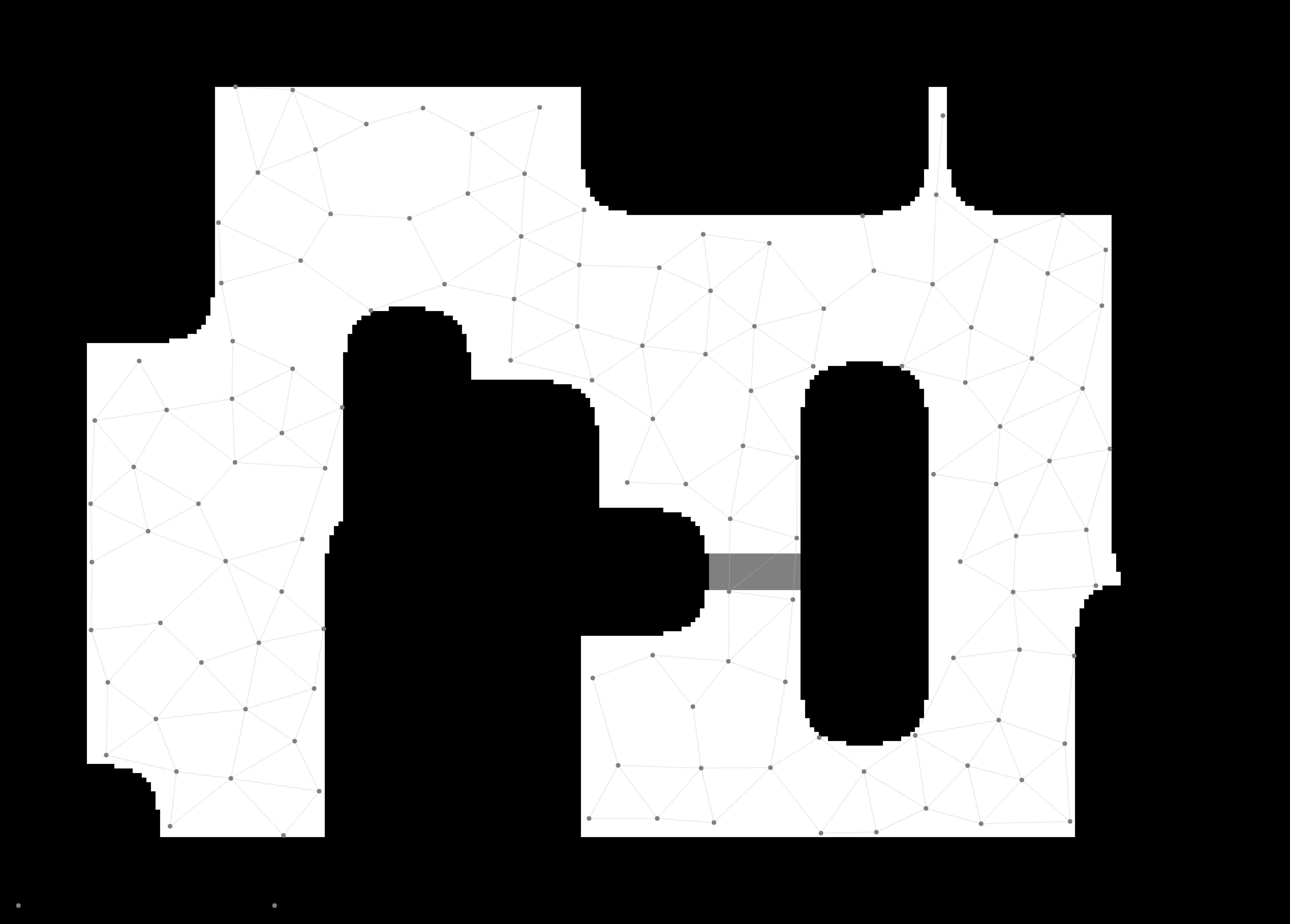

[[File:RestaurantMap2.png|thumb|395x395px]]

The Restaurant Challenge is a great way to showcase Hero's ability to navigate and interact autonomously in a dynamic, unpredictable setting. This challenge simulates a real-world scenario in which Hero has to efficiently deliver orders to various tables in a restaurant.

The layout of the restaurant map from the final challenge is shown in the figure to the right. The main goal of the Restaurant Challenge is to have Hero successfully deliver orders from the starting area to specific tables. The tables are assigned right before the challenge starts. Hero needs to navigate to each table, position itself correctly, and then signal its arrival so that customers know their order has arrived. It has to do this for each table in the predefined sequence, without going back to the starting area.

Several constraints are introduced to create a realistic and challenging environment for Hero. The restaurant has straight walls and tables that show up as solid rectangles in Hero’s LiDAR measurements. There are also static obstacles, such as chairs, and dynamic obstacles, such as people moving around, which test Hero's adaptability to dynamic changes. Hero has to navigate around these in real time, avoiding major collisions while ensuring smooth deliveries. In the final stages of the challenge, a corridor is even blocked, forcing Hero to find a different path through a door to the final table. The order in which Hero visits the tables is fixed at the start and guides its sequence of actions.

In this report, we discuss the components used by Hero during the Restaurant Challenge, detailing how they were chosen and why. We also explain the testing process for each component and how everything came together in the final challenge. These observations and arguments are based on video recordings from simulations, tests and the final challenge.

== Component selection for challenge ==

To complete the challenge successfully, several independent components need to work together to lead Hero to its goals. Firstly, the supplied table order should be converted to locations on the map, and at these locations the robot should dock at the table and give customers time to take their order. This ensures that the robot can navigate precisely to the intended delivery points. Next, static obstacles should be added to the map so that the robot knows which places to avoid. By incorporating static obstacles, the robot can generate a safe and efficient path, minimizing the risk of collisions and ensuring smooth operation. This step is crucial for the safety of both the robot and its surroundings.

Additionally, the robot should find a new route if the initial route is obstructed. Dynamic path planning allows the robot to adapt to changing conditions in real time, so that it can navigate around unexpected obstacles and continue its task without interruption. Furthermore, the robot should signal when a door is closed and continue on its way when the door is opened, or find a new route if the door stays closed. This ensures that the robot can handle common environmental changes, such as doors being opened or closed.

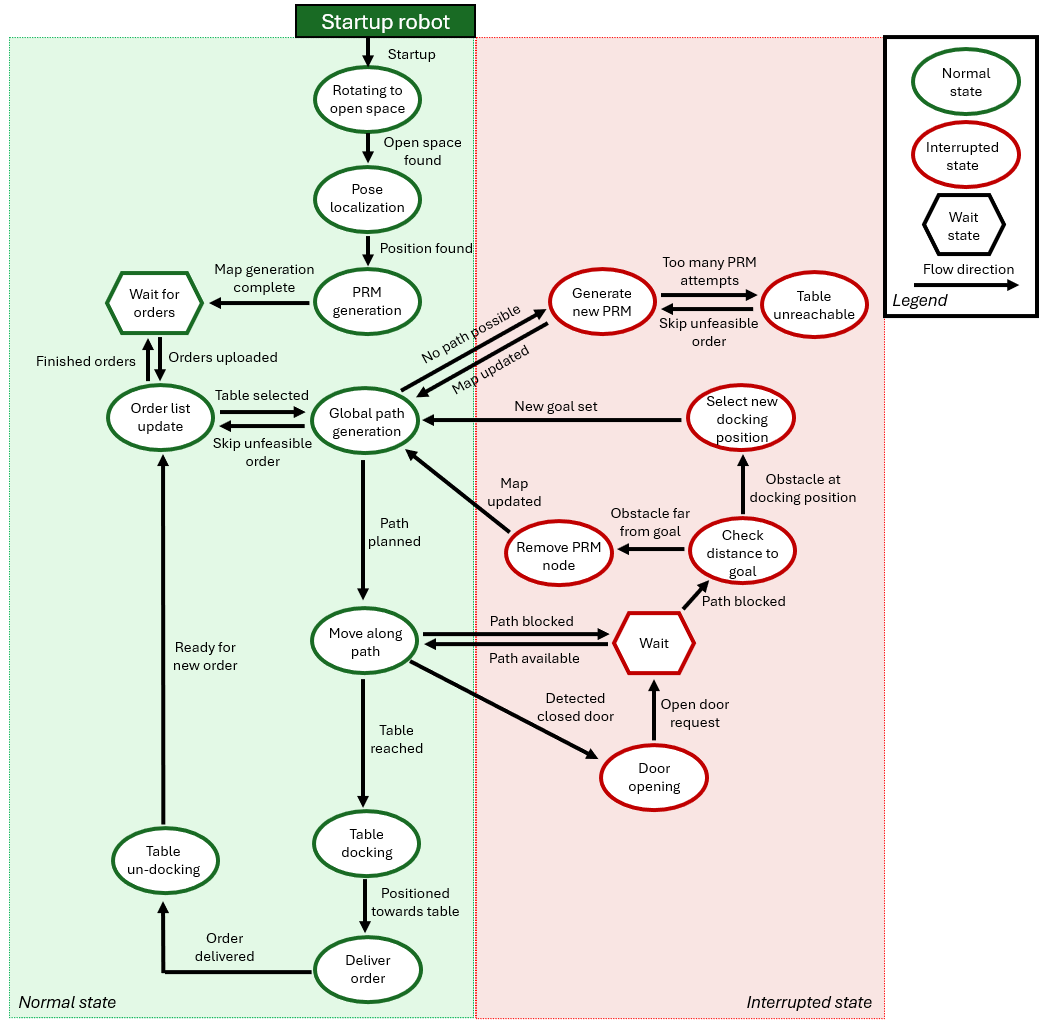

With this information, a state machine was made that incorporates all the components and their dependencies. The state machine manages the robot's behaviour and the transitions between different states, ensuring that all components work together seamlessly. Each state corresponds to a specific task or decision point, allowing the robot to handle complex scenarios in a structured and organized manner.

== State flow ==

The state flow for Hero is shown in the figure to the right of the state descriptions. The robot code implements this state flow as a state machine in C++. This allowed us to debug Hero's code very efficiently and to ensure that the separate components worked as intended. The components of the state flow are elaborated here; the specific inner workings of the functions and states are described further on in the wiki. The explanation of the state machine below follows the states exactly as they are written in the code, so it does not match the figure one-to-one. The state diagram gives a more organized overview of the state machine and the idea behind it, whereas the explanation below details the working of the code.

In each loop, the main code updates the laser and odometry data and updates the localization. It then enters the state machine, which goes to the specified state. In that state, the corresponding tasks are executed and either a new state is specified or the same state is kept for the next loop. Next, the <code>poseEstimate</code> of the robot is updated and the velocity is sent to the robot. The loop then ends, and the next loop starts once the cycle time has elapsed. The system runs at 5 Hz, meaning that the data are updated and the state machine is run every 0.2 seconds. The state machine and data flow are described in further detail below.
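The loop timing described above can be sketched as follows. This is a minimal illustration, not the team's code: the three callables passed to the hypothetical <code>runMainLoop</code> stand in for the real laser/odometry, state-machine and velocity interfaces, and <code>std::this_thread::sleep_until</code> is assumed as the way to wait for the remainder of each 0.2 s cycle.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// 5 Hz loop: one cycle every 1000 ms / 5 = 200 ms (0.2 s).
constexpr int kLoopHz = 5;
constexpr std::chrono::milliseconds kCyclePeriod{1000 / kLoopHz};

// Runs `cycles` iterations of the main loop. The callables are hypothetical
// placeholders for the real sensor, state-machine and actuator code.
template <typename UpdateSensors, typename StepStateMachine, typename SendVelocity>
void runMainLoop(int cycles, UpdateSensors updateSensors, StepStateMachine step,
                 SendVelocity send) {
    assert(cycles >= 0);
    auto next = std::chrono::steady_clock::now();
    for (int i = 0; i < cycles; ++i) {
        updateSensors();  // read laser + odometry, update localization
        step();           // run the current state; it selects the next state
        send();           // update the pose estimate and send the velocity
        next += kCyclePeriod;
        std::this_thread::sleep_until(next);  // next loop starts once 0.2 s has passed
    }
}
```

Scheduling against an absolute deadline (<code>next += kCyclePeriod</code>) rather than sleeping a fixed 200 ms keeps the loop at 5 Hz even when the work inside a cycle takes a varying amount of time.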

==== Robot startup ====

[[File:State flow FINAL BOT.png|thumb|729x729px|The state flow diagram, representing the state machine created to tackle the Mobile Robot Challenge. One can make a distinction between the normal and the interrupted states.]]The startup states are only called when Hero starts up and are not revisited.

'''1. Pose initialization:''' The first state initializes pose finding by rotating Hero away from the wall to orient it towards open space. It then moves to state '''2. Localization and rotation'''.

'''2. Localization and rotation:''' In the second state, Hero tries to localize itself for 10 timesteps while it keeps rotating to aid localization. Then, it moves to state '''3. PRM generation'''.

'''3. PRM generation:''' The final startup state initializes the PRM, generating the nodes and connections, after which the state machine moves to state '''4. Order update'''.

==== Normal states ====

When Hero does not encounter any hurdles, the sequence of normal (green) states is followed to deliver all plates. Naturally, dynamic changes in the environment call for adaptive behavior, indicated by the interrupted (red) states.

'''4. Order update:''' From state '''3. PRM generation''', we move to state '''4. Order update''', in which the robot updates the order list. In this state, it can also select another location at the same table. This only happens when the first docking point is found to be blocked in state '''6. Movement''' (move along path state). After state '''4. Order update''', we always go to state '''5. A* path generation''' (global path generation state).

'''5. A* path generation:''' In this state, the A* function is called to find a path based on the PRM and the order list. If no path can be found, we enter the first interrupted state, '''10. PRM reinitialization''' (generate new PRM state), which reinitializes the entire PRM. If a path is found, Hero can start moving along it and the state machine moves to state '''6. Movement'''.

'''6. Movement:''' State '''6. Movement''' is the movement state, in which most potential interruptions present themselves. Hero can encounter several situations in which it goes to an interrupted state. Ideally, the robot moves from node to node, following the path generated by the A* function in state '''5. A* path generation'''. The movement between nodes is governed by the local planner. When Hero has reached its final node, it goes to state '''7. Table docking''' to dock at the table and deliver the plate. However, if the path is blocked and the local planner cannot find a way around the obstacle (which, by design, is nearly always the case), Hero goes to state '''11. Door node check''' to check whether it is stuck due to a closed door. If no door is in the way and Hero is close to the final table (fewer than 2 setpoints from the final setpoint), Hero goes to state '''4. Order update''' to select a new position at the table to drop off its plate. Finally, if Hero gets stuck further away from the table, it goes to state '''12. Obstacle introduction''' (wait state) to introduce the blockage on the PRM map.
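The decisions made in the movement state can be summarized as a small transition function. This is a sketch of the text above, not the team's code: the enum reuses the state numbers from the diagram, and the <code>MovementStatus</code> fields (in particular <code>doorRuledOut</code>) are hypothetical flags that the real implementation would derive from the local planner, the A* path and the door check.

```cpp
#include <cassert>

// Subset of the states, numbered as in the state flow diagram.
enum class State { OrderUpdate = 4, Movement = 6, TableDocking = 7,
                   DoorNodeCheck = 11, ObstacleIntroduction = 12 };

// Hypothetical summary of what the movement state observes in one cycle.
struct MovementStatus {
    bool reachedFinalNode;  // last node of the A* path reached
    bool pathBlocked;       // local planner cannot get around the blockage
    bool doorRuledOut;      // door check found no closed door on the path
    int setpointsToGoal;    // setpoints left until the final setpoint
};

State nextFromMovement(const MovementStatus& s) {
    if (s.reachedFinalNode) return State::TableDocking;    // dock and deliver
    if (!s.pathBlocked) return State::Movement;            // keep following the path
    if (!s.doorRuledOut) return State::DoorNodeCheck;      // maybe a closed door
    if (s.setpointsToGoal < 2) return State::OrderUpdate;  // try another spot at the table
    return State::ObstacleIntroduction;                    // block nodes on the PRM
}
```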

'''7. Table docking:''' State '''7. Table docking''' is the docking state, in which Hero carefully approaches the table. When the robot is close enough and oriented towards the table, it goes to state '''8. Delivery idling''' (deliver order).

'''8. Delivery idling:''' In this state, Hero first waits 5 seconds to allow guests to take their plate before it goes to the next state. In the next state, state '''9. Table undocking''', Hero rotates away from the table to allow for better localization (when facing the table, Hero would sometimes think it was located inside the table).

'''9. Table undocking:''' In this state, the robot rotates in the opposite direction and goes back to state '''4. Order update''' once it has rotated far enough away from the table. This allows the robot to get a new table for the next order.

==== Interrupted states ====

'''10. PRM reinitialization:''' State '''10. PRM reinitialization''' is called when no path to the table can be found. In this case, the obstacles that were introduced in the map in state '''12. Obstacle introduction''' are removed by reinitializing the PRM from the original restaurant map, so that a new path can be generated without these obstacles. We then go back to state '''4. Order update'''.

'''11. Door node check:''' In this state, Hero checks whether the door has been opened earlier in the run and whether its target node from the PRM is located next to a door. If the blocked path is likely caused by a closed door, it moves to state '''13. Door open request'''.

'''12. Obstacle introduction:''' This state is entered when an unknown object is located on the path while the robot is not too close to a table or door. The target node, including all nodes within a certain radius of it, is removed. We then move back to state '''5. A* path generation''' to generate a new path.

'''13. Door open request:''' In this state, Hero asks for the door to be opened and goes to state '''14. Door opening idling'''.

'''14. Door opening idling:''' In state '''14. Door opening idling''', Hero stays idle for ten seconds to wait for the door to be opened and then moves on to state '''15. Door opened check'''.

'''15. Door opened check:''' After waiting in state '''14. Door opening idling''', the robot looks in a narrow cone in front of it and checks whether the door has been opened. If the door remains closed, the robot moves back to state '''6. Movement''' and, following the states from there, adds the door as an obstacle. If the door is opened, it moves to state '''16. Door map update'''.
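The narrow-cone check of state 15 can be sketched as below. This is a minimal sketch under stated assumptions: the scan is given as a range vector with a start angle and a constant angle increment (0 rad straight ahead), and the cone half-width <code>halfCone</code> and clearance distance <code>clearDist</code> are hypothetical tuning parameters, since the text does not give their values.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns true if every valid ray inside the cone |angle| <= halfCone reports a
// range of at least clearDist metres, i.e. nothing blocks the doorway any more.
// Returns false if the cone contains no valid ray at all.
bool doorOpened(const std::vector<double>& ranges, double angleMin, double angleInc,
                double halfCone, double clearDist) {
    assert(halfCone >= 0.0 && clearDist > 0.0);
    bool sawRay = false;
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        double angle = angleMin + static_cast<double>(i) * angleInc;  // 0 rad = straight ahead
        if (std::fabs(angle) > halfCone) continue;                    // outside the cone
        double r = ranges[i];
        if (std::isnan(r) || r <= 0.0) continue;  // invalid reading; +inf counts as free
        sawRay = true;
        if (r < clearDist) return false;          // door (or obstacle) still there
    }
    return sawRay;
}
```

Restricting the check to a narrow cone keeps walls beside the doorway from being mistaken for a closed door.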

'''16. Door map update:''' If the door has been opened, Hero updates the world model by removing the door from the world map, so that the localization is not lost. It then loops back to state '''6. Movement''' to move through the door.

== Data flow == | == Data flow == | ||

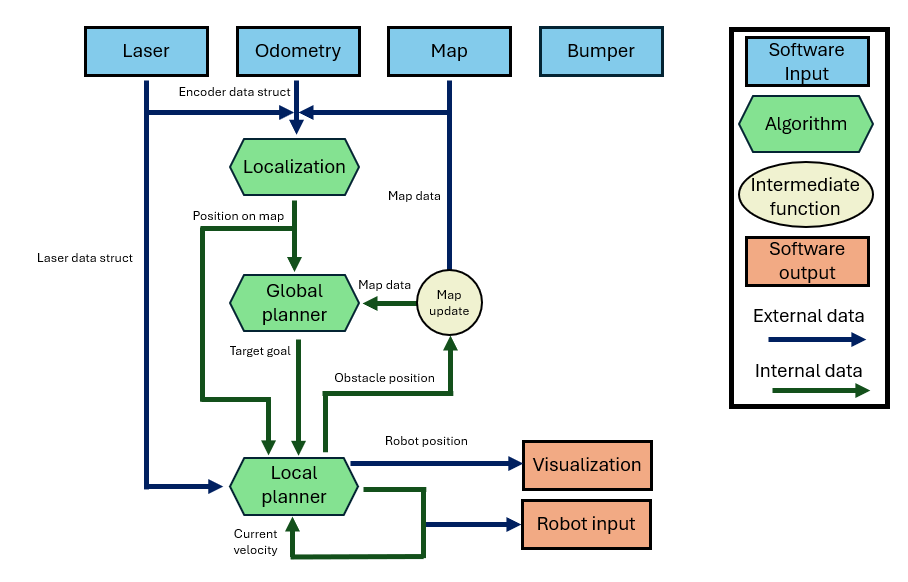

[[File:Data flow diagram The-iron giant.png|thumb|440x440px|Data flow]] | [[File:Data flow diagram The-iron giant.png|thumb|440x440px|Data flow]] | ||

The data flow is an important part of the code functionality. Therefore, a data flow diagram is created which clearly shows how inputs are connected to algorithms which output information to Hero. The data flow diagram is shown in the figure on the right. | |||

There are three main input originating from the robot and one input originating from the user. Important to notice is that the Bumper input is not connected. This component had simulation software bugs, which hindered the functionality of the sensor. Therefore, we decided to omit the bumper data from the task. The bumper sensor has the added value of measuring if Hero hits an obstacle which is not detected by the LiDAR sensor. This makes the sensor very valuable and therefore still displayed in the data flow. | |||

==== Laser data ==== | |||

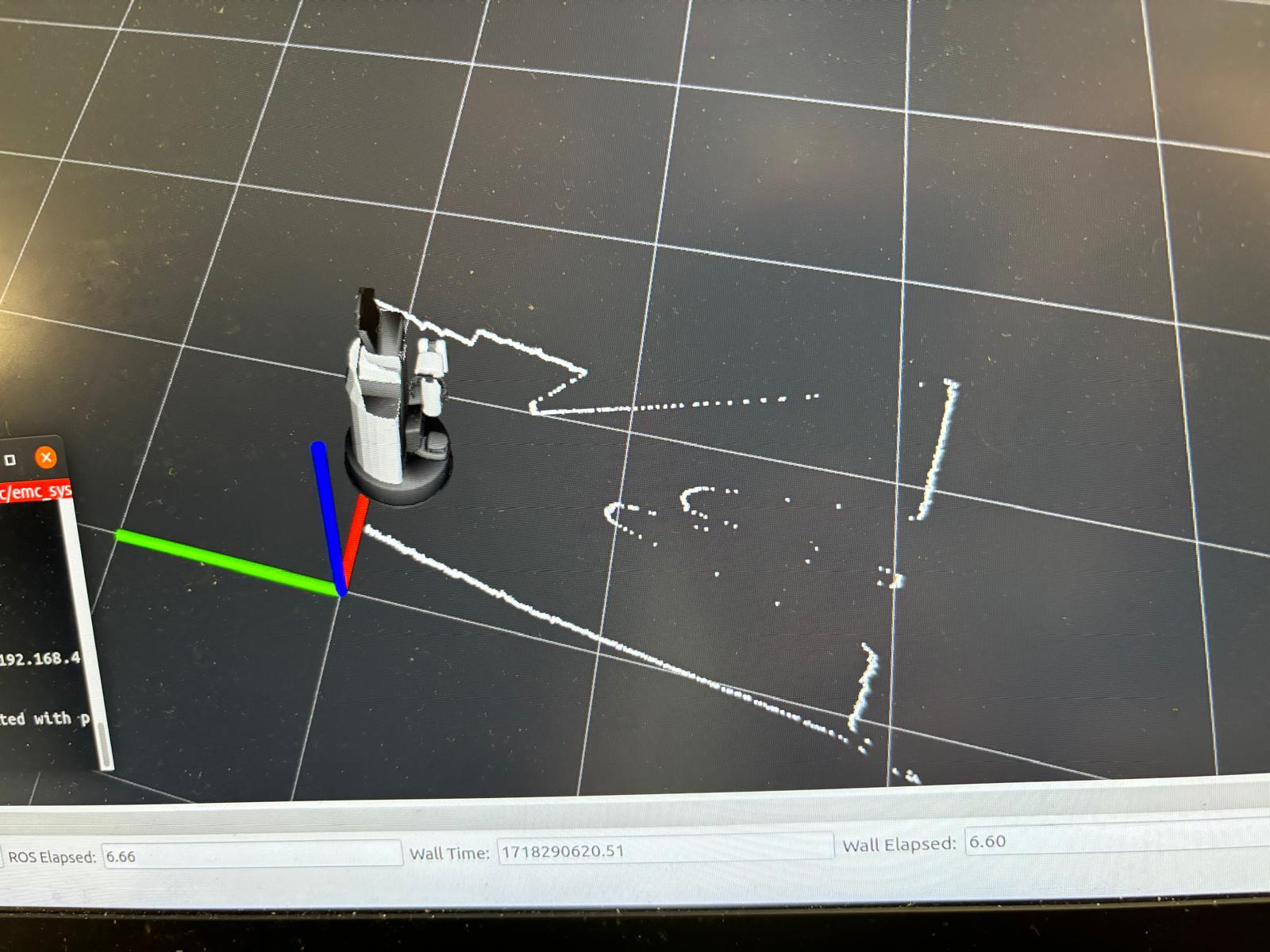

Our laser data returns a set of LiDAR scans from approximately [-110°,110°] with respect to the front of Hero. These laser scans show a number, which is the distance that specific ray has hit a target. The laser scans are used for two distinct purposes. The first one is vision; Hero should recognize (dynamic) objects that are not reported on its theoretical map. The robot should either avoid or stop when it is about to hit other objects. This is further described in the Local Planner. Secondly, the laser data is used to localize the robot. Due to imperfect odometry conversions between theoretical and real movement, for example due to slipping of the wheels, the robot's theoretical and real position will diverge over time. The localization is created to correct for these errors and prevent divergence. The laser data is used for this purpose, further illustrated in the localization section. | |||
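Both uses of the laser data typically start by turning each range into a point in Hero's frame using the angle of its ray. A minimal sketch, assuming the scan is described by a start angle, a constant angle increment and valid range limits (for Hero the angles span roughly -110° to 110°); <code>scanToPoints</code> and <code>Point</code> are hypothetical names, not the team's API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };  // robot frame: x forward, y to the left

// Converts one scan into Cartesian points. Ray i has angle angleMin + i * angleInc;
// readings outside [rangeMin, rangeMax] (including NaN) are dropped.
std::vector<Point> scanToPoints(const std::vector<double>& ranges, double angleMin,
                                double angleInc, double rangeMin, double rangeMax) {
    assert(rangeMin <= rangeMax);
    std::vector<Point> pts;
    pts.reserve(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        double r = ranges[i];
        if (!(r >= rangeMin && r <= rangeMax)) continue;  // invalid or out of range
        double a = angleMin + static_cast<double>(i) * angleInc;
        pts.push_back({r * std::cos(a), r * std::sin(a)});
    }
    return pts;
}
```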

==== Odometry data ====

The odometry data is provided by motion sensors on the wheels to estimate Hero's position over time. As the robot turns one or both of its wheels, its new location can be estimated. However, in real situations there is significant noise, especially due to the previously mentioned wheel slip, which makes the odometry unreliable over longer periods of time. To correct the robot's position, the localization updates its estimated location. Odometry data is a big factor in this estimation, as it is used to predict the initial guess of the robot's new location after each time step. Laser data, in combination with the map data, is then used to correct Hero's position such that the robot's virtual location does not diverge from its actual position over time.
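The prediction step described above needs the motion between two odometry readings, expressed in the robot's own frame, so that it can be applied to the current pose estimate. A minimal sketch, assuming odometry poses of the form (x, y, θ); <code>odomDelta</code>, <code>applyDelta</code> and <code>wrapAngle</code> are hypothetical helpers, and no noise model is included.

```cpp
#include <cassert>
#include <cmath>

struct Pose { double x, y, theta; };

// Wraps an angle to (-pi, pi].
double wrapAngle(double a) {
    const double pi = std::acos(-1.0);
    while (a > pi) a -= 2.0 * pi;
    while (a <= -pi) a += 2.0 * pi;
    return a;
}

// Motion between two odometry readings, expressed in the frame of `prev`.
Pose odomDelta(const Pose& prev, const Pose& curr) {
    double dx = curr.x - prev.x, dy = curr.y - prev.y;
    double c = std::cos(prev.theta), s = std::sin(prev.theta);
    return {c * dx + s * dy, -s * dx + c * dy, wrapAngle(curr.theta - prev.theta)};
}

// Applies a robot-frame motion to a pose estimate (the initial guess that the
// laser/map correction then refines).
Pose applyDelta(const Pose& p, const Pose& d) {
    double c = std::cos(p.theta), s = std::sin(p.theta);
    return {p.x + c * d.x - s * d.y, p.y + s * d.x + c * d.y, wrapAngle(p.theta + d.theta)};
}
```

Working with the robot-frame delta, rather than the raw odometry coordinates, makes the prediction independent of any drift between the odometry frame and the map frame.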

==== Map data ====

The maps are stored in Hero's memory. The map describes the environment in which Hero moves around. It is used, firstly, for localization: the laser data on objects and walls is compared with the map to estimate the robot's location on that map. This is combined with the odometry data to estimate Hero's position. Both the localization exercises and the localization section elaborate on this. Secondly, in our implementation for the robot challenge, Hero relies heavily on adding obstacles to the map that are not represented in the initially provided map. New stationary objects are added to the map permanently, such that no subsequent paths are planned through these objects. This is further described in the Global Planner section. While it is likely possible to complete the robot challenge with a single map, we also supplied Hero with a second map in which the door is replaced by an opening instead of a wall. This is a big improvement for the localization, as the robot can lose its position when driving through a door that is considered a wall in the world map, often losing its location permanently. The map is switched when the robot recognizes that the door has been opened, as described in the Localization section, which fully solves this issue.

==== Data output ====

The data flow has two outputs. The first is the velocity command sent to the robot. The robot has its own internal control algorithm that ensures that it drives at the provided velocity (if that velocity is feasible). The second is the estimated position sent to the laptop. Visualizing where the robot thinks it is allows fast debugging of localization, global path and sensor problems.

== Components ==

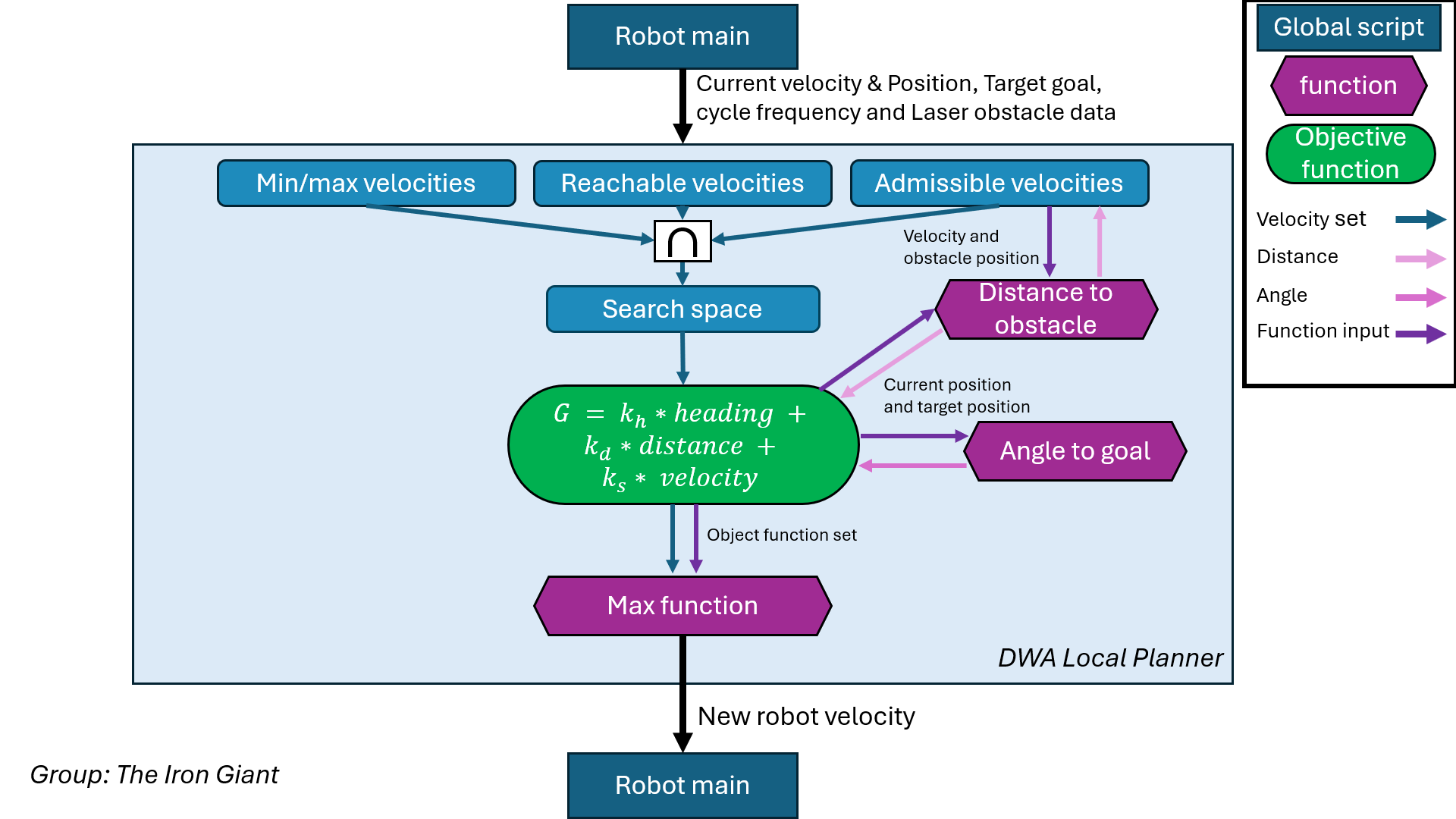

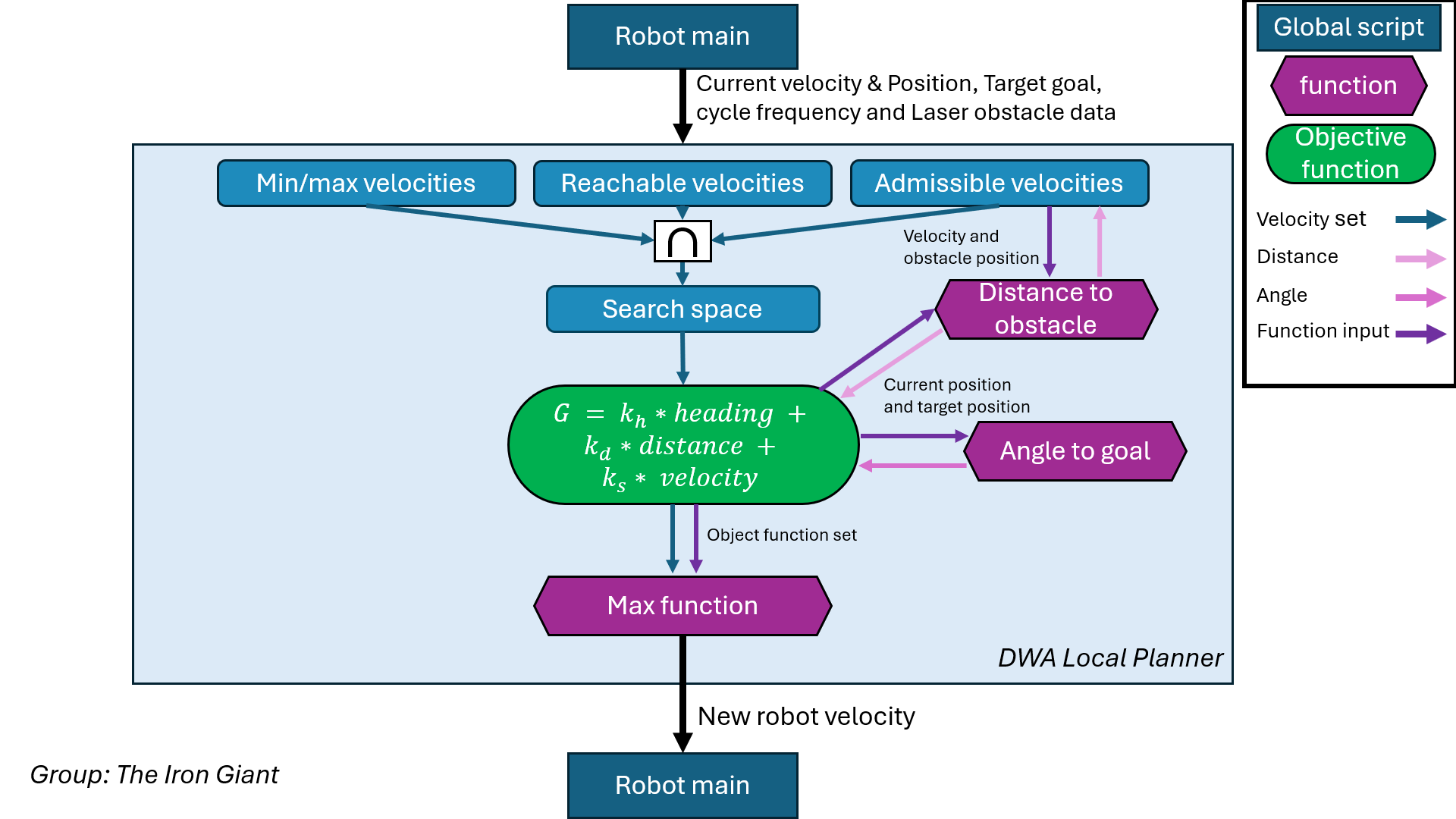

The tunable parameters of the DWA consist of the parameters in the objective function, the velocity and acceleration of the robot, and the stopping distance. The objective function parameters are tuned to aim more directly at the setpoints generated by the global planner and to be less influenced by the distance to obstacles. This slightly reduces the obstacle avoidance functionality of the DWA but improves its performance when many setpoints are close together. Since obstacle avoidance is now handled by a separate algorithm, this improves the overall performance of the robot. The velocity of the robot was slightly reduced, and the acceleration parameters were lowered while the deceleration parameters were kept the same, which resulted in smoother but still safe navigation.
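The effect of re-weighting the objective function can be illustrated with the classic DWA score, a weighted sum of a heading, a clearance and a velocity term. This is a sketch under stated assumptions: each candidate velocity pair is assumed to already carry normalized scores in [0, 1], the struct and function names are hypothetical, and the weights and scores in the test are illustrative values, not the tuned parameters.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Weights { double heading, clearance, velocity; };

// One admissible (v, w) pair with pre-computed, normalized scores in [0, 1].
struct Candidate {
    double v, w;            // linear [m/s] and angular [rad/s] velocity
    double headingScore;    // how directly it points at the setpoint
    double clearanceScore;  // distance to the nearest obstacle
    double velocityScore;   // preference for faster motion
};

double dwaObjective(const Candidate& c, const Weights& k) {
    return k.heading * c.headingScore + k.clearance * c.clearanceScore +
           k.velocity * c.velocityScore;
}

// Index of the candidate with the highest objective value.
std::size_t bestCandidate(const std::vector<Candidate>& cs, const Weights& k) {
    assert(!cs.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < cs.size(); ++i)
        if (dwaObjective(cs[i], k) > dwaObjective(cs[best], k)) best = i;
    return best;
}
```

With heading-heavy weights such as {0.8, 0.1, 0.1}, a candidate aiming at the setpoint wins over one that merely keeps more distance from obstacles; shifting the weight to clearance reverses that choice.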

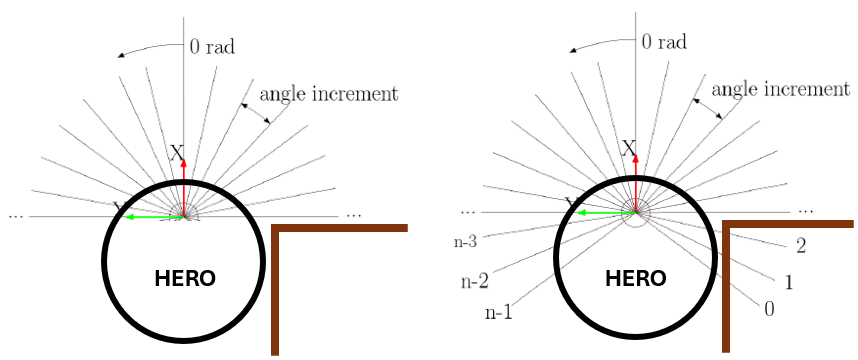

Increasing the vision range of the DWA algorithm was also an important change for the final challenge. Previously, the algorithm only took part of the range of laser measurements into account to improve its speed (shown in the DWA vision range figure on the right). This worked well on the Rossbots and was also tested on Hero with a single target setpoint. However, while testing the complete system, Hero started to hit wall edges when turning around a corner, because the DWA could not see these edges (shown in brown in the figure on the right). The main reason why the reduced sensor data did not work is the location of the LiDAR sensor: the Rossbots have their LiDAR sensor closer to the middle of the robot, whereas Hero has its LiDAR at the front of its base. Since the DWA only allows the robot to move forward, this problem would have been less of an issue if the LiDAR had been in the middle of the robot.

==== Local planner - Dynamic objects & Ankle detection ==== | |||

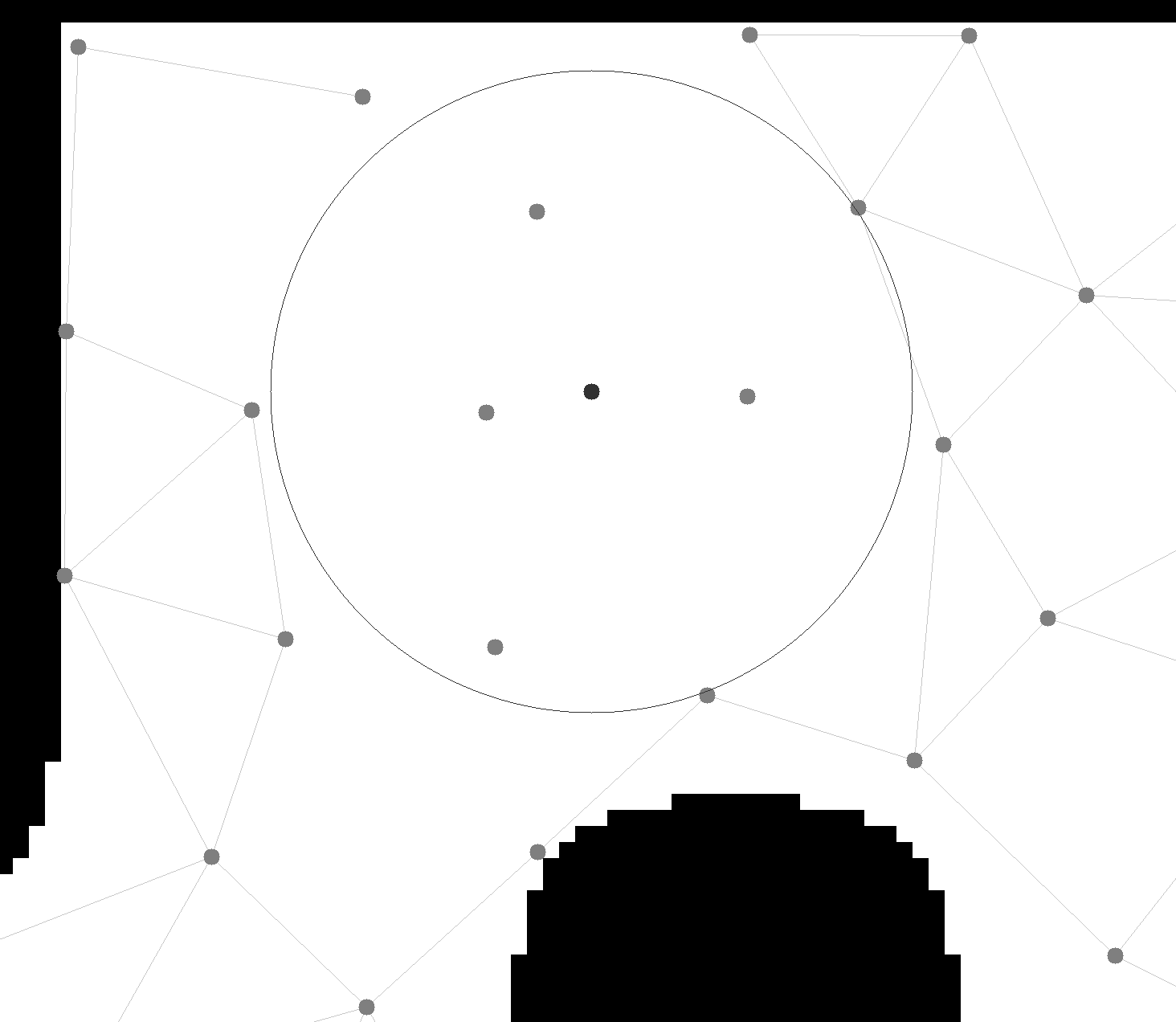

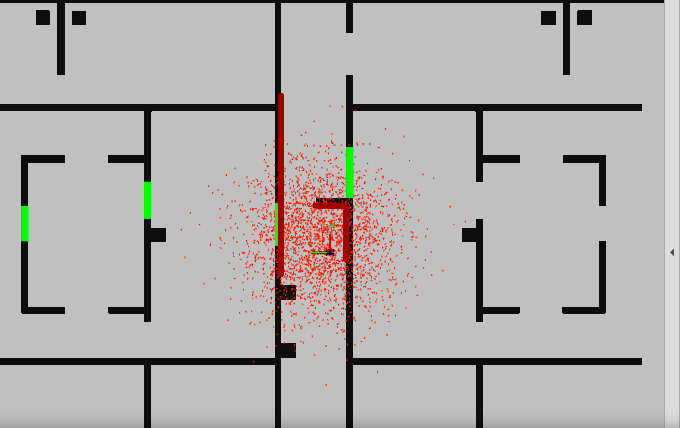

While moving from node to node along its path, Hero may encounter an obstacle. A restaurant is a dynamic environment with people moving and static objects being moved to locations the robot may not have expected it. We divide dynamic objects into three categories; humans, static objects and doors. Our DWA local planner is designed to adhere to the proposed path and it does not diverge from the path too much to avoid obstacles. We made this decision to make it easier to add static objects to our PRM map and plan routes around the objects. Although the local planner prevents the robot from crashing into objects or doors, the reaction of the robot is mainly governed by processes that have to do with the global planner. Therefore, the way Hero interacts with static objects and doors is described in the Global Planner section. However, we will describe how the robot was designed to deal with unpredictable moving humans in this paragraph, as this is closely tied to the local navigator. The main goal for detecting ankles is increasing the safety distance of the DWA to avoid hitting humans or their feet. While Hero can see ankles, it is unable to detect feet which lie below the LiDAR’s height.[[File:WhatsApp Image 2024-06-26 at 13.42.35 9a958f04.jpg|thumb|507x507px|An example of how the robot sees ankles, we can see two halve circles with 'tails' of LiDAR points trailing back from the sides of the ankles.]] | |||

We detect ankles, or in our case also chair legs, by the LiDAR's interaction with circular objects. Generally, when a circular object is detected by the LiDAR, we see a ‘V’ shape, with the point of the V pointing towards the robot and both tails of the ‘V’ can be seen as lines on both sides of the leg. As if a parabola is wrapping around the leg of a chair or human. This phenomenon can be seen in the figure to the right. Objects consisting of straight edges do not show such shapes on the LiDAR, only circular objects. We use this property to detect ankles. The behavior of these parabola is that there are several consequent laser data scans that approach the robot followed by several consequent laser data scans that move away from the robot. We introduce a condition that detects ankles if enough laser scans increase and decrease in succession between a minimum and maximum distance value within a certain vision bracket of the robot. These are only consecutive points in which the distance of LiDAR points changes by 1.5-20cm. And we review if consecutive points move away or move toward the robot. While walls that are slightly tilted from the robot do have the property of LiDAR points getting closer or further away from the robot with relatively large successive distance difference, we never see a wall that shows both. Only very pointy walls directed at the robot will cause these measurements, but these are not used in the restaurant setup. By limiting the view only to small brackets within the robot's vision, we avoid cases in which the robot detects ankles when the robot detects laser points moving away quickly at the very left side of its vision and approaching from the right side of its vision. Finally, only a limited vision slice of about 90 degrees in front of the robot is included in the scan and only points closer than 50 cm to the robot are processed. LiDAR points satisfying these requirements are added to a total. 
Looking at a small section of Hero's vision, if large distance differences '''towards''' and '''away''' from the robot between consecutive laser scans are measured, ankles are detected. The DWA safety distance would then increase by 10cm for 16 seconds. If Hero has another LiDAR reading instance for which ankles are detected, the safety distance time is reset to 16 seconds again. In the final challenge, our ankle detection was not completely tuned properly. It did not trigger every time it encountered an ankle. It should have been slightly more sensitive. | |||
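The detection rule and the safety-distance timer can be sketched as follows. This is a hedged reconstruction, not the team's code: it assumes scan angles measured from Hero's heading, and the bracket size (<code>window</code>) and the required number of approaching and receding steps (<code>minSteps</code>) are hypothetical values; only the 1.5 to 20 cm step band, the 50 cm range limit, the roughly 90° slice and the +10 cm for 16 s rule come from the text. The hold time is counted in cycles, assuming the fixed 5 Hz loop (16 s = 80 cycles).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct AnkleParams {
    double minStep = 0.015;    // m, smallest range change between neighbouring rays
    double maxStep = 0.20;     // m, largest range change between neighbouring rays
    double maxRange = 0.50;    // m, ignore points further away
    double halfSlice = 0.785;  // rad, about 45 deg on each side of the heading
    std::size_t window = 15;   // rays per vision bracket (hypothetical)
    int minSteps = 3;          // required steps towards AND away (hypothetical)
};

// True if some bracket holds enough "towards" and "away" steps: the V shape a
// round leg leaves in the scan. Brackets overlap by half a window.
bool anklesDetected(const std::vector<double>& ranges, double angleMin, double angleInc,
                    const AnkleParams& p = AnkleParams{}) {
    assert(p.window >= 2);
    for (std::size_t start = 0; start + p.window <= ranges.size(); start += p.window / 2) {
        int towards = 0, away = 0;
        for (std::size_t i = start + 1; i < start + p.window; ++i) {
            double angle = angleMin + static_cast<double>(i) * angleInc;
            if (std::fabs(angle) > p.halfSlice) continue;  // outside the front slice
            double r0 = ranges[i - 1], r1 = ranges[i];
            if (!(r0 > 0.0 && r0 <= p.maxRange && r1 > 0.0 && r1 <= p.maxRange))
                continue;                                  // too far or invalid (NaN)
            double d = r1 - r0;
            if (std::fabs(d) < p.minStep || std::fabs(d) > p.maxStep) continue;
            if (d < 0.0) ++towards; else ++away;
        }
        if (towards >= p.minSteps && away >= p.minSteps) return true;
    }
    return false;
}

// Safety-distance bump: +10 cm for 16 s, restarted by every new detection.
class SafetyMargin {
public:
    explicit SafetyMargin(double base) : base_(base) {}
    double update(bool anklesSeen) {  // call once per 0.2 s control cycle
        if (anklesSeen) remaining_ = kHoldCycles;
        else if (remaining_ > 0) --remaining_;
        return remaining_ > 0 ? base_ + kExtra : base_;
    }
private:
    static constexpr int kHoldCycles = 16 * 5;  // 16 s at 5 Hz
    static constexpr double kExtra = 0.10;      // m
    double base_;
    int remaining_ = 0;
};
```

Raising <code>minSteps</code> or narrowing the step band makes the detector less sensitive, which is the trade-off against false positives at table corners described below.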

In this example from the final challenge, Hero does recognize the ankles. This happened only once, even though Hero stopped for a human three times. Although it is hard to hear, Hero gives audio feedback to confirm the detection. As a result of the detection, the DWA's safety distance is increased for 16 seconds. During testing, Hero would sometimes still recognize ankles at, e.g., table corners. Therefore, the ankle detection sensitivity was reduced. Unfortunately, this resulted in some ankles not being detected.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/Ankle2.mp4 Ankle detection video final challenge]

=== Global planner ===

----

The global planner, as its name implies, is the component that plans the global path. The path is created using two sub-components: the Probabilistic Road Map (PRM), which generates a graph to plan a path over, and the A* algorithm, which uses this graph to find the optimal path towards the intended goal. Together, these components provide a path towards a goal within the known map that is optimal with respect to the generated graph.

==== Probabilistic roadmap (PRM) ==== | |||

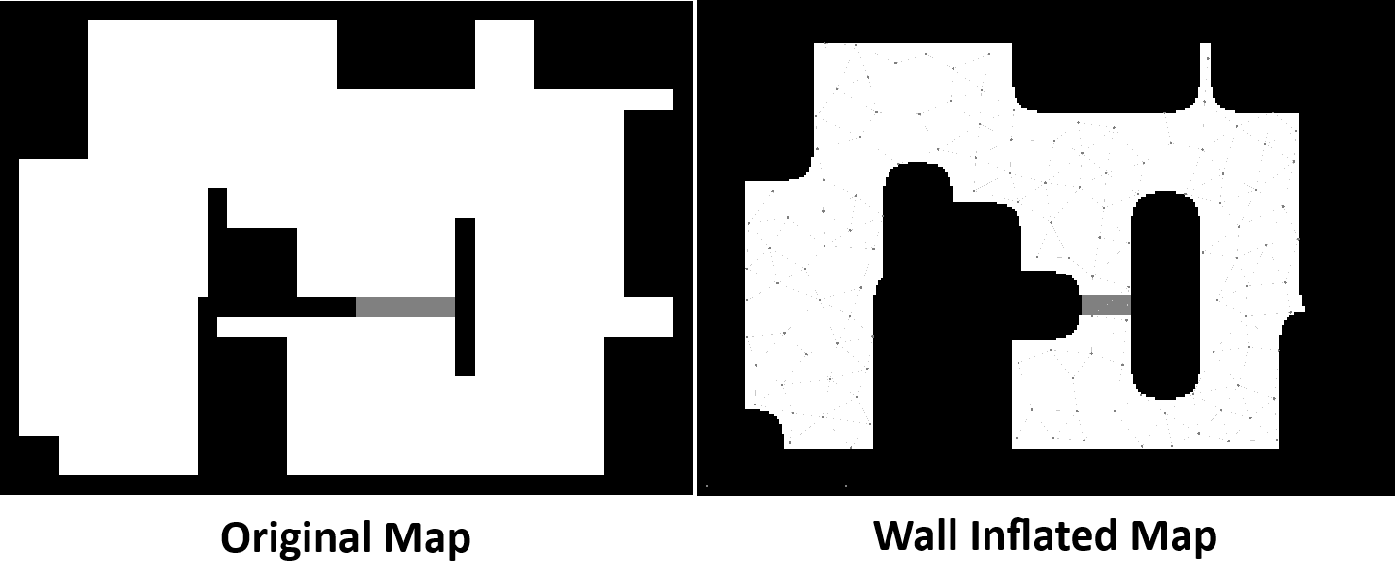

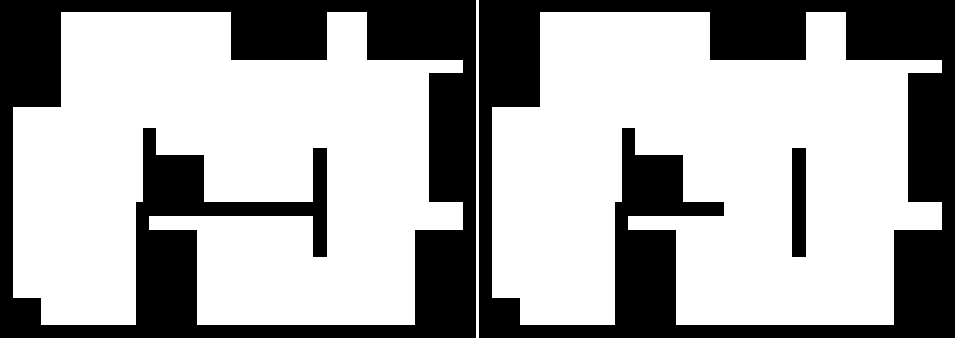

[[File:Wall inflation.png|thumb|574x574px|Left side: the original map. Right side: wall infalted map]] | |||

The PRM is a method designed to generate a graph structure for any provided map. The idea behind PRM is to be able to generate a graph for any map independent of any start or goal locations. The goal of the method is to generate a fully connected graph connecting the whole map where possible. Nodes are randomly generated and edges are collision-free straight-line connections between nodes. With enough nodes placed and their edges connected, the connected graph should provide navigation to all points within the map assuming any point is in some way reachable. | |||

For generating the PRM, the map and its real world scale is required. The scaling parameter keeps the graph generation parameters consistent for each map. Whereas the map is the region in which the node graph is generated. Thus those two are necessary parameters for graph generation. Having specified the input, the function of the PRM will now described. | |||

First, a singular preparation step is taken, namely inflating or artificially enlarging the walls for 25 centimeter within the map to prevent node generation too close to walls. This step is taken to preemptively ensure that Hero won't collide with known walls as it tries to reach a node. As Hero is around half a meter wide using 25 centimeter is large enough to prevent collision and small enough to keep node generation possible in smaller corridors. | |||
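Wall inflation amounts to a morphological dilation of the occupancy map. A minimal sketch, assuming the map is available as a boolean grid (<code>true</code> = wall) with a known resolution in metres per pixel; <code>inflateWalls</code> and the <code>Grid</code> type are hypothetical, and only the 0.25 m radius comes from the text.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Grid = std::vector<std::vector<bool>>;  // true = wall / occupied

// Grows every wall cell by radiusM metres (a disc-shaped dilation), using the
// map resolution in metres per pixel.
Grid inflateWalls(const Grid& map, double resolution, double radiusM = 0.25) {
    assert(resolution > 0.0);
    const int rows = static_cast<int>(map.size());
    const int cols = rows ? static_cast<int>(map[0].size()) : 0;
    const int r = static_cast<int>(std::ceil(radiusM / resolution));  // radius in pixels
    Grid out = map;
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            if (!map[y][x]) continue;
            for (int dy = -r; dy <= r; ++dy)
                for (int dx = -r; dx <= r; ++dx) {
                    if (dx * dx + dy * dy > r * r) continue;  // keep the disc shape
                    int ny = y + dy, nx = x + dx;
                    if (ny >= 0 && ny < rows && nx >= 0 && nx < cols) out[ny][nx] = true;
                }
        }
    return out;
}
```

Because nodes are only sampled in cells that remain free after this step, the inflation radius directly acts as the minimum clearance between Hero's planned nodes and the known walls.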

[[File:PRM challenge 2024.png|thumb|572x572px|Generated PRM of the challenge map 2024]]

After wall inflation, graph generation begins. Nodes are placed at random locations on the map until a specified number of nodes has been generated. However, there is a minimum node distance requirement: if a new node is placed too close to a previously placed node, it is discarded. Therefore, there is a limit to the number of nodes that can be placed. A limit on the total number of node placement attempts is introduced as a second stopping criterion, which guarantees termination in case the first criterion can never be met. Otherwise, the PRM would keep trying to pick locations for nodes while no free places are left. The number of nodes to be placed is set to 20000, and 20000 placement attempts are allowed. It is impossible to place 20000 nodes on the map, but this guarantees that enough placement attempts are made. Such a large number of attempts makes it very likely that the whole map is covered and connected.

To summarize, nodes are randomly generated within the map area and each node has to fulfill two simple requirements:

* Not be inside an inflated wall

* Not be too close to another node (30 cm)
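The sampling loop with its two stopping criteria can be sketched as follows. This is an illustration under stated assumptions, not the team's implementation: <code>isFree</code> is a hypothetical callback that queries the inflated map, samples are drawn uniformly over a rectangle of the given width and height, and the fixed seed only makes runs repeatable. The 20000 nodes, 20000 attempts and 30 cm minimum distance are the values from the text.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

struct Node { double x, y; };

// Places random nodes until maxNodes are placed or maxAttempts samples have
// been drawn (the second criterion guarantees termination). A sample is
// rejected if it lies in an inflated wall or within minDist of a placed node.
template <typename FreeFn>
std::vector<Node> sampleNodes(double width, double height, FreeFn isFree,
                              std::size_t maxNodes = 20000, int maxAttempts = 20000,
                              double minDist = 0.30, unsigned seed = 42) {
    assert(width > 0.0 && height > 0.0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> ux(0.0, width), uy(0.0, height);
    std::vector<Node> nodes;
    for (int a = 0; a < maxAttempts && nodes.size() < maxNodes; ++a) {
        Node c{ux(rng), uy(rng)};
        if (!isFree(c.x, c.y)) continue;  // inside an inflated wall
        bool tooClose = false;
        for (const Node& m : nodes) {
            double dx = c.x - m.x, dy = c.y - m.y;
            if (dx * dx + dy * dy < minDist * minDist) { tooClose = true; break; }
        }
        if (!tooClose) nodes.push_back(c);
    }
    return nodes;
}
```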

After all 20000 placement attempts have been made, each node is checked for connections to other nodes in order to create a connected graph.

To connect a node with another node, the following requirements have to be met:

* The nodes cannot be too far away from each other (50 cm)

* There can be no walls between the nodes, so that Hero can safely follow the resulting path.
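The two connection rules can be sketched as a pairwise check. A minimal sketch, assuming the wall test is done by sampling points along the straight segment every <code>step</code> metres and querying a hypothetical <code>isFree(x, y)</code> callback on the inflated map; the 0.5 m maximum distance is the value from the text, and <code>connectNodes</code> is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Node { double x, y; };

// Returns every pair (i, j), i < j, whose nodes are at most maxDist apart and
// whose straight segment only passes through free space.
template <typename FreeFn>
std::vector<std::pair<std::size_t, std::size_t>> connectNodes(
        const std::vector<Node>& nodes, FreeFn isFree, double maxDist = 0.50,
        double step = 0.02) {
    assert(step > 0.0);
    std::vector<std::pair<std::size_t, std::size_t>> edges;
    for (std::size_t i = 0; i < nodes.size(); ++i)
        for (std::size_t j = i + 1; j < nodes.size(); ++j) {
            double dx = nodes[j].x - nodes[i].x, dy = nodes[j].y - nodes[i].y;
            double len = std::hypot(dx, dy);
            if (len > maxDist) continue;  // too far apart
            int samples = static_cast<int>(std::ceil(len / step));
            bool clear = true;
            for (int k = 0; k <= samples && clear; ++k) {  // walk along the segment
                double t = samples ? static_cast<double>(k) / samples : 0.0;
                clear = isFree(nodes[i].x + t * dx, nodes[i].y + t * dy);
            }
            if (clear) edges.emplace_back(i, j);
        }
    return edges;
}
```

The pairwise loop is O(n²); for large maps, a spatial grid or k-d tree that only returns neighbours within 0.5 m would likely be faster.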

If we combine all of these requirements and steps, we find a graph full of connected nodes, as seen in the image to the right.

The minimal and maximal node distances are chosen such that a path can always be generated in a narrow environment such as a restaurant. The minimal distance ensures that connections can be made in narrow spaces, whereas the maximal distance is chosen such that nodes cannot easily be skipped: when a node is disconnected, no similar route that jumps over it can be taken. These small distances result in a robust graph for Hero to navigate over.

==== A* Algorithm ==== | |||

This section describes the implementation of the A* pathfinding algorithm. The goal of this algorithm is to find the shortest path from a start node to a goal node in the generated PRM. The process is divided into two main phases: the exploration phase, where nodes are expanded and evaluated, and the path reconstruction phase, where the optimal path is traced back from the goal to the start node. | |||

===== Exploration Phase ===== | |||

The exploration phase starts from Hero's position. In our case, the cost function for the algorithm is the distance. Our sole goal is minimizing the distance from Hero's current position to its goal position. The exploration phase continues until either the goal node is reached or there are no more nodes to explore: | |||

# '''Expanding the Open Node with the Lowest Cost Estimate (<code>f</code> Value):'''
#* The algorithm searches through the list of open nodes to identify the one with the lowest <code>f</code> value, where <code>f</code> is the sum of the cost to reach the node (<code>g</code>) and the heuristic estimate of the cost to reach the goal from the node (<code>h</code>).
#* If this node is the goal node, the goal is considered reached.
#* If no node with a valid <code>f</code> value is found, the search terminates, indicating that no path exists.
# '''Exploring Neighboring Nodes:'''
#* For the current node (the node with the lowest <code>f</code> value), the algorithm examines its neighbors.
#* For each neighbor, the algorithm checks if it has already been closed (i.e., fully explored). If not, it calculates a tentative cost to reach the neighbor via the current node.
#* If this tentative cost is lower than the previously known cost to reach the neighbor, the neighbor’s cost and parent information are updated. This indicates a more efficient path to the neighbor has been found.
#* If the neighbor was not previously in the list of open nodes, it is added to the list for further exploration.
# '''Updating Open and Closed Lists:'''
#* The current node is removed from the open list and added to the closed list to ensure it is not expanded again. This prevents the algorithm from revisiting nodes that have already been fully explored.

===== Path Reconstruction Phase =====

Once the goal is reached, the algorithm traces back the optimal path from the goal node to the start node:

# '''Tracing Back the Optimal Path:'''
#* Starting from the goal node, the algorithm follows the parent pointers of each node back to the start node.
#* The node IDs along this chain are collected in a list, which is then ordered from the start node to the goal node.
# '''Setting the Path:'''
#* The collected node IDs are stored, and a flag is set to indicate that a valid path has been found. This allows the path to be used for navigation.
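The two phases above can be condensed into a short Python sketch. It is a minimal illustration, not the team's implementation: it assumes the PRM is given as a list of (x, y) node coordinates plus an adjacency dict, uses Euclidean distance for both the edge cost <code>g</code> and the heuristic <code>h</code>, and, as a deliberate swap, keeps the open list in a binary heap (<code>heapq</code>) instead of linearly searching it for the lowest <code>f</code> value. The function name <code>a_star</code> is hypothetical.

```python
import heapq
import math


def a_star(nodes, adjacency, start, goal):
    """Return the list of node IDs from start to goal, or None if no path exists.

    nodes:     list of (x, y) coordinates, indexed by node ID
    adjacency: dict mapping a node ID to a list of neighbouring node IDs
    """
    def dist(a, b):
        (xa, ya), (xb, yb) = nodes[a], nodes[b]
        return math.hypot(xa - xb, ya - yb)

    g = {start: 0.0}                          # best known cost from start
    parent = {start: None}                    # parent pointers for reconstruction
    open_heap = [(dist(start, goal), start)]  # entries are (f = g + h, node ID)
    closed = set()

    # Exploration phase
    while open_heap:
        _, current = heapq.heappop(open_heap)  # open node with the lowest f
        if current in closed:
            continue                           # outdated heap entry, skip it
        if current == goal:
            break
        closed.add(current)                    # never expand this node again
        for neighbour in adjacency.get(current, []):
            if neighbour in closed:
                continue
            tentative = g[current] + dist(current, neighbour)
            if tentative < g.get(neighbour, math.inf):
                g[neighbour] = tentative       # cheaper route found
                parent[neighbour] = current
                heapq.heappush(open_heap, (tentative + dist(neighbour, goal), neighbour))
    else:
        return None                            # open list exhausted: no path

    # Path reconstruction phase: follow parent pointers back to the start
    path = []
    node = goal
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]                          # reorder from start to goal
```

For example, with nodes <code>[(0, 0), (1, 0), (2, 0), (1, 2)]</code> and adjacency <code>{0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [0, 2]}</code>, <code>a_star(nodes, adjacency, 0, 2)</code> returns <code>[0, 1, 2]</code>, the direct route, rather than the detour via node 3.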

=== Added components ===

----

==== Path blocking ====

[[File:PRM removed area.png|thumb|RemoveArea function applied around the lighter middle point, disconnecting all nodes within the circular range]]

This section describes how a blocked path is detected and how the route is then recalculated. A path is considered blocked when the LiDAR registers an object that denies access to a node on the path: if the next path node is not reached within a certain time frame (10 seconds in our code), that node is considered unreachable. When a path is registered as blocked, the robot disconnects the nodes around the next path node and recalculates its route. For a static object, these nodes remain disconnected permanently, so that from then on the robot plans around the object, effectively adding the unforeseen obstacle to the world map.

===== Disconnect nodes around point =====

Given a center point (x, y), which is the location of the next path node, all nodes within a circle of 30 cm around that point are disconnected. This is done by looping over all nodes and saving those within the range distance of the center point. The saved nodes are then disconnected in the PRM graph.

Disconnecting is done by removing the edges connected to a node while keeping the node itself. A function called removeNode removes every edge connected to the given node from the total edge list. The nodes themselves cannot be removed, as this would misalign the node indices and corrupt the whole PRM graph. This function is applied to all nodes within the given range of the center point.

Because these disconnections are made in the PRM itself, these nodes will never be reached by a newly calculated route unless a new PRM is generated. For this challenge, simply removing routes through objects is sufficient: as long as no PRM reinitialization is required, the objects are effectively added to the world map for the rest of the run.
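A minimal Python sketch of this disconnection step, assuming the PRM stores its edges as a flat list of (i, j) node-index pairs. <code>remove_node</code> mirrors the described removeNode function and <code>remove_area</code> the RemoveArea function from the figure, but both signatures are hypothetical. The node list itself is never modified, so all node indices stay valid.

```python
import math


def remove_node(edges, node):
    """Return the edge list without any edge touching `node`.

    The node itself is kept so that all node indices remain aligned.
    """
    return [(a, b) for (a, b) in edges if a != node and b != node]


def remove_area(nodes, edges, center, radius=0.3):
    """Disconnect every node within `radius` metres of `center`.

    nodes: list of (x, y) coordinates, indexed by node ID (left untouched)
    edges: list of (i, j) node-ID pairs
    Returns the reduced edge list and the IDs of the disconnected nodes.
    """
    cx, cy = center
    # Collect all nodes inside the circle around the center point
    in_range = [i for i, (x, y) in enumerate(nodes)
                if math.hypot(x - cx, y - cy) <= radius]
    # Remove the edges of each collected node
    for i in in_range:
        edges = remove_node(edges, i)
    return edges, in_range
```

Because only edges are removed, a subsequent A* query simply cannot reach the disconnected nodes until the PRM is regenerated.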

===== Route update =====

After disconnecting these nodes, the route is recalculated by calling the planner again. Since the nodes through the obstacle have been disconnected, the new optimal path avoids the obstacle. In extreme cases, Hero may disconnect all nodes leading to a certain table. In that case it regenerates the PRM, discarding all previous node removals. We implemented this as a fail-safe in case Hero would cut off nodes in such a way that it cornered itself with no path out. In the end, this was not necessary. On top of this fail-safe, the PRM can be regenerated only once per table: if the PRM would have to be regenerated a second time for the same order, that order is deemed infeasible and skipped. This ensures that the robot cannot get stuck in any part of the run, while still allowing versatile pathing and deliveries.
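The fail-safe logic can be summarized in a small Python sketch. It assumes a <code>plan</code> callable that runs A* on the current PRM and returns a path or <code>None</code>, and a <code>regenerate_prm</code> callable that builds a fresh PRM; the class and method names are hypothetical and only illustrate the once-per-order rule described above.

```python
class OrderReplanner:
    """Replan a route with at most one PRM regeneration per table order."""

    def __init__(self, plan, regenerate_prm):
        self.plan = plan                      # returns a list of node IDs or None
        self.regenerate_prm = regenerate_prm  # discards all previous node removals
        self.regenerated = False

    def start_new_order(self):
        # Each new table order gets its own single regeneration
        self.regenerated = False

    def replan(self):
        """Return a new path, or None if the current order must be skipped."""
        path = self.plan()
        if path is not None:
            return path
        if not self.regenerated:
            # First failure for this order: rebuild the PRM and try once more
            self.regenerated = True
            self.regenerate_prm()
            return self.plan()  # None here also means the order is infeasible
        # The PRM was already regenerated for this order: give up on it
        return None
```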

Node removal and path recalculation can be seen in the following videos:

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_simulationvideo/Avoid_chair_sim.webm?ref_type=heads obstacle avoidance and adding sim]

----

The simulation video clearly shows Hero's next intended path; it can be observed that Hero recalculates its path and drives past the obstacle.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/ObstacleAvoidance_Adding.mp4?ref_type=heads Obstacle avoidance and adding obstacle to map video final challenge]

The video of the real robot is less clear. The robot is stuck and cannot move through the chair: twice, nodes have to be removed and a new path calculated before it can move past the chair. The advantage of this technique is that the path will never cross the same chair again.

==== Door opening ====

When Hero sees an object in front of it, it stops and waits for the object to move, or adds the object to the map, as described in the Global Planner section. Before doing so, however, it checks whether the encountered object is actually a door. The image of the PRM at the start of the Global Planner section shows nodes located around the door, with edges between these nodes passing through the doorway; this is intentional. If the robot is halted by an obstacle, it checks, using functions provided with the PRM, whether either of the edges between its previous path node, its current node and its target node passes through a door. If so, Hero is already facing the door, since otherwise it would not have stopped.

If one of the checked path edges crosses the door, Hero asks for the door to be opened and waits 10 seconds. It then checks whether the door has been opened by counting how many laser points in a small angular section in front of the robot are measured within a range of 40 cm. If fewer than 50 LiDAR points meet these constraints, the door is considered open. These parameters were tested and proved very reliable. For localization, Hero then loads the map with an open door, as described further in the subsection Dynamic Door Handling of the Localization section. If the door is not opened, the robot removes the nodes around the door in the PRM and reroutes to find another way. As described earlier, if no path is possible, Hero regenerates the PRM without the added obstacles. If this also fails, i.e. the door is again left closed and no route is available, Hero discards the order and moves on to the next one.
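The door check can be sketched in Python as follows. The 0.4 m range and the 50-point limit come from the text above; the ±10° width of the "small angular section" and the scan representation (a list of ranges with <code>angle_min</code> and <code>angle_increment</code>, angle 0 pointing straight ahead) are assumptions, and <code>door_is_open</code> is a hypothetical name.

```python
import math


def door_is_open(ranges, angle_min, angle_increment,
                 half_width=math.radians(10.0),  # assumed width of the front section
                 max_range=0.4,                  # metres, from the text
                 max_hits=50):                   # point limit, from the text
    """Return True if fewer than `max_hits` beams in front of the robot hit
    something closer than `max_range`, i.e. the doorway appears to be clear."""
    hits = 0
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment  # beam angle, 0 = straight ahead
        if abs(angle) <= half_width and r < max_range:
            hits += 1
    return hits < max_hits
```

In the state machine, this check would run once after the 10-second wait: a <code>True</code> result would trigger loading the open-door map, while <code>False</code> would trigger the node removal around the door.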

These steps are clearly shown in both the simulation video and the video of the final challenge.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_simulationvideo/Open_door_sim.webm?ref_type=heads Door opening sim]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/DoorOpening.mp4?ref_type=heads Door opening video final challenge]

=== Localization ===

----The localization of Hero has three important components: the initialization of Hero's angle together with the initialization of the filter, the localization performance with obstacles, and the localization with door opening.

==== Initialization of angle ====

Hero can start at any angle within its starting box. The particle filter is given an initial guess of the angle and a standard deviation, both of which can be adjusted. Increasing the standard deviation of the angle to pi helps the robot find its angle, but makes it more likely that the estimate glitches to other locations. A simple solution was to provide Hero with a better initial guess: an initial guess accurate to within 45 degrees allowed the standard deviation of the angle to be kept much smaller than pi.

Hero is initialized by reading the laser measurements between -pi/2 and pi/2. Measurement points within this angular range with a range smaller than 1.2 meters are counted as wall hits. If the number of wall hits is below a predetermined threshold, Hero is facing the empty hallway; if the threshold is exceeded, Hero is facing a wall. Always rotating Hero in the same direction (counter-clockwise) until it no longer faces a wall ensures that Hero is approximately looking at the empty hallway. Setting the initial guess of the particle filter in the direction of the hallway and then slowly rotating Hero for a few more seconds results in a high initialization success rate (1 failure in approximately 40 attempts on the real setup, 0 failures in approximately 100 attempts in simulation). The initialization step can be observed in the simulation and in the final challenge, shown in the following links:
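A Python sketch of the wall-hit test used during initialization. The -pi/2 to pi/2 window and the 1.2 m range come from the text; the hit threshold of 100 and the scan representation (ranges with <code>angle_min</code> and <code>angle_increment</code>, angle 0 straight ahead) are assumptions, and the function names are hypothetical.

```python
import math


def count_wall_hits(ranges, angle_min, angle_increment, max_range=1.2):
    """Count beams between -pi/2 and pi/2 that measure closer than max_range."""
    hits = 0
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment  # 0 = straight ahead
        if -math.pi / 2 <= angle <= math.pi / 2 and r < max_range:
            hits += 1
    return hits


def facing_wall(ranges, angle_min, angle_increment, hit_threshold=100):
    """True while Hero faces a wall; it keeps rotating counter-clockwise
    until this returns False, i.e. it roughly faces the empty hallway."""
    return count_wall_hits(ranges, angle_min, angle_increment) > hit_threshold
```

Once <code>facing_wall</code> returns <code>False</code>, the current heading would serve as the initial angle guess for the particle filter, which is what allows a standard deviation well below pi.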

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_simulationvideo/Orientation_run_sim.webm?ref_type=heads Initialization in simulation]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/Initialize.mp4?ref_type=heads Initialization video final challenge]

==== Localization with unknown obstacles ====

The localization algorithm is sensitive to unmodeled obstacles. When there is a large, flat wall-like obstacle, the localization can quickly deviate from its actual position due to the significant disturbance. Similarly, if a wall is removed but not updated in the map, the robot will also quickly lose its accurate positioning. However, the algorithm is more robust against smaller obstacles, minor discrepancies between the real environment and the simulated map, and round geometries like bins and chair legs. This robustness ensures the localization is effective enough for the final challenge, where additional obstacles are introduced but no large walls are added or removed.

==== Dynamic Door Handling ====

[[File:Localization map comparison.png|thumb|Left is initial localization map, right is localization map with opened door]]

In the final restaurant challenge, there is a door on the map that can be opened at Hero's request. However, once the door is opened, there is a noticeable difference between the world model and what Hero observes with its sensors, causing the localization to become inaccurate. To restore accurate localization, the world map needs to be updated so that Hero can once again correctly perceive its environment.

The implementation of this update involves Hero moving towards the door. Once Hero reaches the door, it stops and requests the door to be opened. When the door is opened, Hero detects the change and updates the world model accordingly, after which it resumes its task with the updated information.

For this purpose, a second map without the door is introduced: the old map treats the door as a wall, whereas the new map treats the doorway as an open passage. For localization, Hero then loads the map with the door open instead of the initial map with the door closed. Initially, the robot often lost its location when moving through the door because it believed it had "warped" through it. Warping means the robot perceives an abrupt change in position without a corresponding physical movement, leading to a mismatch between its internal map and its actual surroundings. To prevent this, Hero enters a stationary state for 10 seconds upon reaching the door and does not update its localization during this time. Although a door that stays closed was not part of the challenge, this case was handled as well: if Hero notices after 10 seconds that the door is still closed, it does not update its map. Instead, it removes the nodes around the door, regards this path as blocked, and reroutes to find an alternative way.

=== Table order ===

----This section outlines the algorithm implemented to manage the table order and the locations at these tables in the restaurant. The robot follows a predefined list of tables, navigates to the specified locations, announces successful deliveries, and handles cases where tables or specific locations are unreachable. The process ensures systematic and efficient delivery, providing status updates and handling new orders as they are received.
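The order-handling loop described above can be sketched in Python as follows. The <code>try_deliver</code> callable (navigate to the table's docking points and dock, returning whether the delivery succeeded) and the <code>announce</code> callback are hypothetical stand-ins for the corresponding states of the state machine. A <code>deque</code> is assumed as the order list so that newly received orders can be appended while the loop runs.

```python
from collections import deque


def run_orders(queue, try_deliver, announce=print):
    """Serve tables in order; skip tables whose delivery fails.

    queue:       deque of table IDs; new orders may be appended while running
    try_deliver: callable(table) -> bool, True if the plate was delivered
    """
    delivered, skipped = [], []
    while queue:
        table = queue.popleft()
        if try_deliver(table):
            announce(f"Order delivered at table {table}")
            delivered.append(table)
        else:
            # All docking points unreachable, even after a PRM regeneration
            announce(f"Table {table} is unreachable, skipping this order")
            skipped.append(table)
    return delivered, skipped
```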


=== Table docking ===

Table docking is a process consisting of a few sequential steps, represented by several states in the state machine. The requirements for delivering a plate at a table were that the robot is close enough to the table, is oriented towards the table, and waits a few seconds to allow a customer to take the plate.

The robot approaches a table by following the path generated by the A* function, whose final node is a delivery point at the designated table. The docking process starts once the robot is within 20 cm of this node. In some cases, an object is placed next to the table, blocking the docking point. If the robot is blocked while it is close to a table, the blocking object is likely a chair, so the robot does not immediately add the object to the PRM map. Instead, it first tries to reach one of the other predetermined docking points at the table by generating a new path to that docking point. If all docking points are unreachable, a case that was implemented but never encountered, the table's order is deemed infeasible and skipped.

When a docking point is reachable, the robot arrives approximately 20 cm from the table. It then stops and rotates towards the table, using its current orientation and the desired orientation. Once it is oriented correctly, it asks the customer to take the plate and waits five seconds before the undocking process starts. During testing, the robot would sometimes believe it was located inside the table, which completely prevented it from moving due to localization issues; this problem is discussed further in the Testing section. For this reason, the robot rotates 180° away from the table before generating a new path. Once it has rotated, it returns to the state that drives to the next table on the order list.
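The geometric checks in the docking sequence can be sketched in Python. The 20 cm docking radius and the 180° turn come from the text; the 5° alignment tolerance, the proportional rotation gain, the first-free fallback order for docking points, and all function names are assumptions for illustration.

```python
import math


def wrap_angle(a):
    """Wrap an angle to the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def within_docking_range(position, docking_point, radius=0.2):
    """True once the robot is within 20 cm of the docking node."""
    return math.dist(position, docking_point) < radius


def next_docking_point(docking_points, unreachable):
    """Index of the first docking point not yet marked unreachable, or None
    if all are blocked (the order is then deemed infeasible)."""
    for i in range(len(docking_points)):
        if i not in unreachable:
            return i
    return None


def rotate_towards(theta, desired_theta, gain=1.0, tolerance=math.radians(5.0)):
    """Proportional turn command; returns (angular velocity, aligned flag)."""
    error = wrap_angle(desired_theta - theta)
    if abs(error) < tolerance:
        return 0.0, True
    return gain * error, False


def undock_heading(theta):
    """Heading that faces away from the table (a 180 degree turn)."""
    return wrap_angle(theta + math.pi)
```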

The docking sequence is shown both in the simulation environment and during the final challenge for the first table. The simulation also shows that Hero selects the next order as it generates a new path towards the next table.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_simulationvideo/Turn_away_from_table_sim.webm?ref_type=heads Table docking and turn sim]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/Docking_Turning.mp4?ref_type=heads Table docking and turning video final challenge]

== Testing ==

Once all the components were completed and integrated, thorough testing was necessary to ensure they functioned as expected. Key components tested included the local and global planners, obstacle detection, and localization, all of which were crucial for successfully completing the final challenge. This section discusses the evaluation of these components, detailing what worked well and identifying areas for improvement.

==== DWA ====

During testing in the earlier weeks, the DWA worked very well. At that time, the PRM consisted of fewer nodes with larger distances between them. However, the implementation of obstacle detection on the PRM map required more nodes with shorter edges: with few nodes, Hero would quickly cut off entire corridors or large areas of the map, making route generation infeasible. This created a conflict, as the DWA configured in the earlier weeks did not work well with the new PRM setup. The DWA uses higher speeds to dodge obstacles and move around corners, and once the PRM was altered we quickly identified some major issues, including cutting corners and getting stuck around corners. For example, the robot would hit the wall after driving through the door, or get stuck when trying to take a 90° corner. Some example videos from three days of testing in the final week before the final challenge are included.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/DWAhoekjesafsnijden.mp4 Cutting off corners]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/DWAvastzittenhoekje.mp4 Getting stuck when trying to pass a corner]

Another effect of changing the PRM configuration was that Hero would overshoot target nodes. Because the distance to the next node was reduced, Hero would often loop around a node until it slowed down near a wall, at which point it could 'catch up' and reach the node. This cost valuable time and made Hero's trajectory unclean and unreliable. Additionally, when multiple loops were made, Hero would sometimes add an obstacle at the location of the target node, because it took too long to reach it.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/DWAloopsomdoelnode.mp4 Looping around target node]

The encountered problems were addressed by reconfiguring the DWA to operate with more nodes at smaller intervals. This adjustment led to a partial loss of the DWA's obstacle avoidance benefits: the local planner now prioritizes closely following the designated path and stops the robot when an object enters its field of view. Consequently, we chose to rely more on the global planner than on the local planner. During the final challenge, Hero did get stuck at a corner, similar to the incident in the second video. However, thanks to our method of introducing obstacles, Hero was able to overcome this difficulty.

==== Global planner and obstacle introduction ====

A problem we encountered very quickly when testing our obstacle introduction scheme in the PRM was that no suitable path could be generated. Sometimes, when Hero removed nodes to add an obstacle, it blocked entire corridors, removing too many edges for a feasible A* path to exist. This was solved by introducing a fail-safe: when no feasible path can be generated to a target node, Hero discards all its introduced obstacles and regenerates its PRM. Often, this allowed Hero to reach its target node. However, we wanted to make sure that a permanently blocked table would not cause the robot to get stuck in a loop, so the PRM is reinitialized at most once per target table; if Hero still cannot reach the table, the table is skipped. A video from testing is included in which Hero removed nodes to get past a corner. Due to the removal of these nodes, no path to the table could be generated, ending Hero's run.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/Padafsnijdenendoodlopen.mp4 Adding obstacles, introducing infeasibility of pathing in corridors]

==== Localization ====

The localization also suffered from two severe, unforeseen bugs during testing in the final week, both of which became apparent with the setup of the final map. The first occurred when Hero docked at a table to deliver its order. Because of its LiDAR's field of view at the front of the robot, Hero would often think it was located inside the table, which disallowed all movement: the robot got completely stuck and the run was over. This was solved by adding an undocking step to the docking sequence, in which the robot rotates away from the table, after which the localization issue is resolved. The second issue, which in fact occurred for another group in the final challenge, was the loss of localization when Hero moves through a door. If Hero does not update its world map, it thinks it 'warped' through a wall when crossing the door. Very often, this corrupted Hero's localization, causing its position estimate to get stuck behind the door. We solved this by waiting a set time after asking for the door to be opened. Hero then checks the scans in front of the robot to see whether the door has been opened and, if so, changes the world map. This solved all localization issues when passing through the door.

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/vastintafellocalizatie.mp4 Stuck 'inside' of the table, disabling movement]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/captures/localizatieverliezen.mp4 Losing localization when crossing a door]

== Final challenge ==

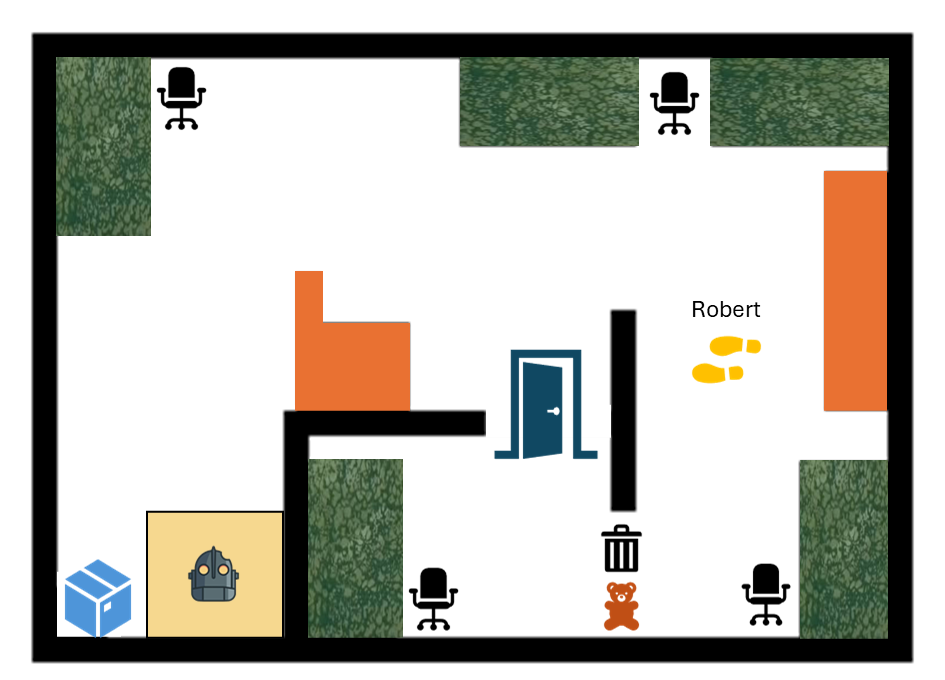

[[File:Final challenge overview v2.png|thumb|466x466px|Final obstacle course ''(Iron Giant icon retrieved from heatherarlawson)'']]

The final challenge was performed successfully on the restaurant map shown in the image on the right. Overall, the robot's behaviour aligned closely with our expectations. Initially, Hero began its localization by turning in a circle, as anticipated. The global planner and the A* algorithm then calculated the route to the first table. The path was not a straight line, because the PRM generates nodes randomly on the map rather than along a direct line. Upon reaching the table, Hero initiated its docking procedure, allowing guests to take their food.

Next, the route was updated for the following table in the sequence. As explained above, this route was again not a straight line. Along this path, the robot encountered a chair. The local planner detected the chair, causing the robot to stop and wait to determine whether it was a dynamic object. After a set period, the robot recognized the chair as a static object, removed the corresponding nodes from the map, and updated its route accordingly. This process occurred a second time before the robot successfully reached and docked at the next table.

Hero then recalculated the route to the next table. Along the way, it encountered a person walking through the hallway. Upon detecting an obstacle ahead, the robot stopped. Once the sensors confirmed the path was clear, the robot continued towards the table. Upon arrival, it noticed a chair obstructing the docking position. After a brief wait, the robot added the chair to the map by removing a node. Realizing the original docking position was unreachable, it selected an alternative docking position at the same table. This position was accessible, allowing the robot to dock successfully.

Finally, the route to the last table was calculated. On this route, a small hallway was blocked by a garbage can and a teddy bear, which can be seen in the image on the right. Again, the robot detected this with its local planner and removed the nodes. This resulted in a new route through the door, since all nodes through the initial hallway had been removed and no viable path through it remained. While following this new route, Hero detected a person's ankles and increased its safety distance to account for feet. However, it then got stuck at a corner close to the first chair that had been added to the map. Because the local planner detected this corner as an obstacle, nodes were removed again. As no viable path to the table remained, Hero reset the whole map, restoring all removed nodes. This is why Hero once again tried to go through the hallway where the garbage can was placed. There, it removed the nodes again and found approximately the same route through the door, but slightly further from the corner it had got stuck on. After successfully reaching the door, it requested the door to be opened. Once the door was opened, it continued its route and reached the last table, meaning it successfully served all tables and completed the challenge.

Videos of the final challenge from two different perspectives can be found at the following links:

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/Video_challenge.mp4?ref_type=heads Final challenge video angle 1]

[https://gitlab.tue.nl/mobile-robot-control/mrc-2024/the-iron-giant/-/blob/main/5_final_challenge/Final_Challenge_Videos/Video_challenge2.mp4?ref_type=heads Final challenge video angle 2]

== Conclusion & Recommendations ==

Hero was able to bring food to all tables during the final challenge without hitting any obstacle. It took Hero approximately 6 minutes to serve all tables, and Hero was able to pass through a door after asking for it to be opened. This shows the effectiveness of the chosen components as well as the robustness of the complete system. Using a state machine as the driving system resulted in a system that was easy to test and improve, in which each individual component could easily be tested by group members. Added features such as ankle/chair detection, table docking and door opening improved the safety of the system and extended its functionality as an order-serving robot. Overall, the implementation on Hero was a success. As in any project, improvements are always possible, and several recommendations are given to improve the software for the next generation of serving robots.

There are four main recommendations that could improve the robot's algorithm: smoother DWA driving along the global path, improved obstacle node removal, more robust ankle detection, and better overall code efficiency. First, the smoothness of driving, including driving around small obstacles with the local planner, can be improved. The path blocking algorithm removes nodes where obstacles are located. This works best with a dense graph, so that the removal of a single node does not make the path completely infeasible. The DWA, however, works best when driving towards a distant goal without too many intermediate goals, as this allows it to avoid small obstacles by itself without needing the path blocking algorithm. This in turn leads to faster, smoother and more desirable local navigation. The intermediate target goals passed from the global planner to the DWA could therefore be checked to remove setpoints that are not relevant for the specific path. This would keep the dense graph for node removal while letting the local planner do more of the obstacle avoidance work.

A second recommendation is to improve the method of node removal. Nodes are currently removed within a predetermined radius around the location of the next target goal. This mostly works well, but sometimes an infeasible path is not removed, while at other times a feasible path is removed. Identifying the actual location of the obstacle from laser data combined with positional data, and removing the nodes at the obstacle, could provide a more robust way of adding obstacles to the global map.

A third recommendation is to improve the ankle detection using either shape fitting or machine learning techniques. The currently implemented method identifies ankles in most cases, but also gave some false positives at sharp wall edges. The increased safety radius reduces the robot's freedom of movement and is therefore only desirable when there are actual chairs and ankles to avoid. The current recognition method is fast and works reasonably well, but could be improved by implementing more established shape recognition techniques.

The final recommendation is to optimize the code efficiency. The code currently runs at 5 Hz without problems, but a frequency of 10 Hz is desired to react properly to dynamic obstacles. The most limiting factors are the localization and the local planner. The local planner evaluates sets of velocities for reachability and admissibility, which takes significant computing power because every laser measurement point is checked. This could be improved by only considering, for each velocity, the laser angles relevant to that velocity. The resampling in the localization algorithm could also be revisited to reduce the amount of resampling and thus improve code efficiency. When implementing these efficiency improvements, the reliability of the localization and the global planner should be checked thoroughly.

Implementing these four recommendations should equip Hero with the necessary tools for safer and faster navigation in a restaurant environment.

= '''WEEKLY EXERCISES''' =

The simplified vector field histogram approach was initially implemented as follows.

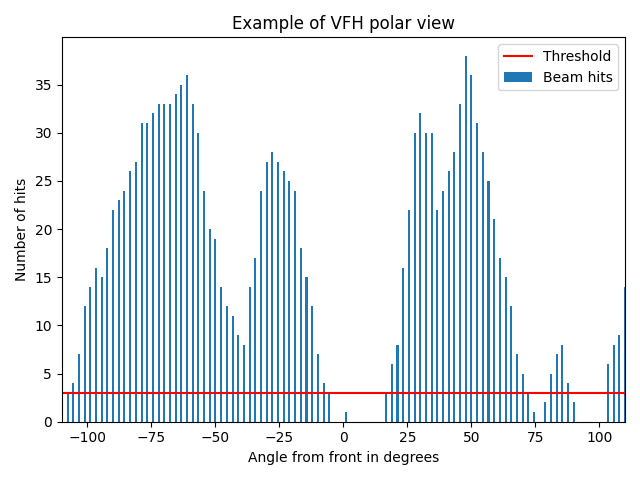

The robot starts out with a goal retrieved from the global navigation function, its laser data is received from the | The robot starts out with a goal retrieved from the global navigation function, its laser data is received from the LiDAR and its own position, which it keeps track of internally. A localization function will eventually be added to enhance its ability to track its own position. The laser data points are grouped together in evenly spaced brackets in polar angles from the robot. For the individual brackets, the algorithm checks how many LiDAR points' distance is smaller than a specified safety threshold distance. It then saves the number of laser data points that are closer than the safety distance for every bracket. This is indicated by the blue bars in the plot to the right. | ||

Next, it calculates the direction of the goal by computing the angle between its own position and the goal position. It then checks whether the direction towards the goal is unoccupied by inspecting the bracket corresponding to that angle. A bracket is unoccupied if the number of LiDAR hits within the safety distance is below the threshold value. Additionally, a number of neighboring brackets, set by a safety width hyperparameter, is checked as well. The goal of the safety width is to make sure the gap is wide enough for the robot to fit through.

If the direction towards the goal is occupied, the code checks the brackets to the left and to the right and saves the closest unoccupied angle on either side. It then picks whichever of the two deviates least from the goal direction and sets that angle as its new goal. In the example histogram, the forward direction to the goal is open. However, depending on the number of safety brackets, the behavior might change. We use 100 brackets, as shown in the example histogram, and in some cases about 5 safety brackets. In the example case, the robot would steer slightly in the positive angle direction (to the right) to avoid the first brackets left of x=0 that exceed the safety threshold.
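A sketch of the direction selection described above, with hypothetical function names. It takes the per-bracket counts of close LiDAR hits as input, clips the safety window at the edges of the scan (brackets outside the histogram are ignored), and breaks ties between an equally close left and right candidate in favour of the lower bracket index. The original code may resolve both of these cases differently.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// A window is free when every bracket within `safety_width` of `center` has
// fewer than `threshold` close hits. Brackets outside the histogram are skipped.
bool windowIsFree(const std::vector<int>& histogram, int center,
                  int safety_width, int threshold) {
    const int n = static_cast<int>(histogram.size());
    for (int b = center - safety_width; b <= center + safety_width; ++b) {
        if (b < 0 || b >= n) continue;
        if (histogram[b] >= threshold) return false;
    }
    return true;
}

// Maps an angle in [angle_min, angle_max] to one of n equally wide brackets.
int angleToBracket(double angle, double angle_min, double angle_max, int n) {
    assert(angle_max > angle_min && n > 0);
    const int b = static_cast<int>(std::floor((angle - angle_min) / (angle_max - angle_min) * n));
    return b < 0 ? 0 : (b >= n ? n - 1 : b);
}

// Searches outward from the goal bracket, one bracket per step on both sides,
// and returns the first free bracket found, or -1 if every direction is blocked.
int selectBracket(const std::vector<int>& histogram, int goal_bracket,
                  int safety_width, int threshold) {
    const int n = static_cast<int>(histogram.size());
    assert(goal_bracket >= 0 && goal_bracket < n);
    for (int offset = 0; offset < n; ++offset) {
        const int lower = goal_bracket - offset;
        const int upper = goal_bracket + offset;
        if (lower >= 0 && windowIsFree(histogram, lower, safety_width, threshold)) return lower;
        if (upper < n && windowIsFree(histogram, upper, safety_width, threshold)) return upper;
    }
    return -1;
}
```

The selected bracket index can be converted back into a steering angle by multiplying with the bracket width and adding the first scan angle.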

| Line 561: | Line 645: | ||

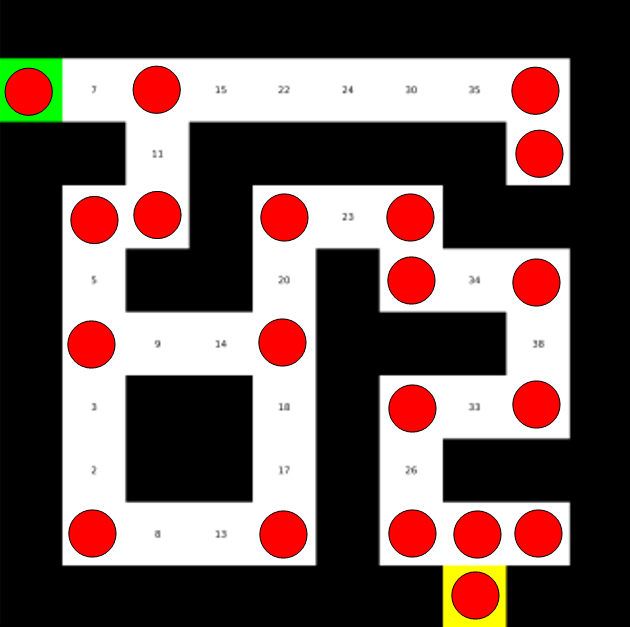

The goal of this project is to reach a certain destination. To reach it, the robot needs a plan to follow. This larger-scale plan is called the global plan and is made by the global planner. The global planner used throughout this project is '''A*''', which finds the optimal path through a graph. The graph on which the path is planned is created with a Probabilistic Road Map (PRM), which generates a randomly sampled graph for a given map. By combining these two functions, an optimal path can be generated for a given map.
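For reference, A* on a roadmap graph can be sketched in C++ as below. The node positions and adjacency lists stand in for the PRM output (their exact representation in the project is an assumption), edges are weighted by Euclidean length, and the straight-line distance to the goal serves as heuristic. Because this heuristic never overestimates and is consistent, the returned path is the shortest path through the graph; it is not necessarily the shortest path through the free space of the map.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

struct Node { double x, y; };  // PRM sample position [m]

// A* on an undirected roadmap. Returns node indices from start to goal,
// or an empty vector when the goal cannot be reached.
std::vector<int> aStar(const std::vector<Node>& nodes,
                       const std::vector<std::vector<int>>& adjacency,
                       int start, int goal) {
    assert(nodes.size() == adjacency.size());
    auto dist = [&](int a, int b) {
        return std::hypot(nodes[a].x - nodes[b].x, nodes[a].y - nodes[b].y);
    };
    const double inf = std::numeric_limits<double>::infinity();
    std::vector<double> cost(nodes.size(), inf);  // g: best known cost-to-come
    std::vector<int> parent(nodes.size(), -1);
    using Entry = std::pair<double, int>;         // (f = g + h, node)
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> open;

    cost[start] = 0.0;
    open.push({dist(start, goal), start});
    while (!open.empty()) {
        const Entry top = open.top();
        open.pop();
        const int current = top.second;
        if (current == goal) break;  // first pop of the goal is optimal
        if (top.first > cost[current] + dist(current, goal)) continue;  // stale entry
        for (int next : adjacency[current]) {
            const double candidate = cost[current] + dist(current, next);
            if (candidate < cost[next]) {
                cost[next] = candidate;
                parent[next] = current;
                open.push({candidate + dist(next, goal), next});
            }
        }
    }
    if (cost[goal] == inf) return {};
    std::vector<int> path;
    for (int v = goal; v != -1; v = parent[v]) path.push_back(v);
    std::reverse(path.begin(), path.end());
    return path;
}
```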

[[File:Efficient small maze nodes.png|thumb|221x221px|Efficiently placed nodes]]

Latest revision as of 15:36, 28 June 2024

FINAL REPORT The Iron Giant

Group members:

| Name | Student ID |
|---|---|
| Marten de Klein | 1415425 |
| Ruben van de Guchte | 1504584 |
| Vincent Hoffmann | 1897721 |
| Adis Husanović | 1461915 |
| Lysander Herrewijn | 1352261 |
| Timo van der Stokker | 1228489 |

The final work is organized following a normal report structure, as can be seen in the table of contents. The localization exercises are improved based on the midterm feedback and placed before the introduction. The content in this report mostly reflects the new work after the midterm exercises and contains as little repetition as possible.

Localization exercises improvement

Assignment 0.1 Explore the code framework

How is the code structured?

The code implements a particle filter using either uniformly or Gaussian distributed samples. It consists of multiple classes, which are data structures that also include functionality. These classes are linked to each other so that the functionality is divided into segments that can be tested individually. The base of the particle filter structure is the identically named ParticleFilterBase, which holds the whole particle filter structure and thus the particles. The ParticleFilter is the code running in the background of this base structure and actually performs the particle tasks such as initialization, updating and resampling. Both classes work with a class called Particle, which represents a single particle consisting of its location and weight. This class has functionality to update the position of the individual particle, compute its likelihood, set its weight and initialize it.

What is the difference between the ParticleFilter and ParticleFilterBase classes, and how are they related to each other?

The ParticleFilterBase class is like a basic framework for implementing particle filtering algorithms. It contains functions such as particle initialisation, propagation based on motion models, likelihood computation using sensor measurements, and weight management. Furthermore, the class ParticleFilterBase provides implementations that can be extended and customized for specific applications. It remains flexible by providing methods to set measurement models, adjust noise levels, and normalize particle weights, allowing it to be used effectively in different probabilistic estimation tasks.

On the other hand, the ParticleFilter class builds upon ParticleFilterBase. ParticleFilter extends the base functionality by adding features such as custom resampling strategies, more advanced methods to estimate the state of a system, and integration with specific types of sensors. By using ParticleFilterBase as its foundation, ParticleFilter can focus on the specific choice of filtering, enabling efficient reuse of core particle filter components while meeting the specific requirements of different scenarios or systems. This modular approach not only improves code reusability but also makes it easier to maintain and adjust particle filtering systems for different applications, ensuring robustness in probabilistic state estimation tasks.

How are the ParticleFilter and Particle class related to each other?

The ParticleFilter and Particle classes collaborate to implement the particle filtering algorithm. The ParticleFilter manages a collection of particles, arranging their initialization, propagation, likelihood computation, and resampling. In contrast, each particle represents a specific hypothesis or state estimate within the particle filter, containing methods for state propagation, likelihood computation, and state representation. Together, they perform the probabilistic state estimation, using the multiple hypotheses of the particles and probabilistic sampling to approximate and track the true state of a dynamic system based on sensor data and motion models.
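To make the resampling step concrete, below is a sketch of systematic (low-variance) resampling in C++. This is a standard technique chosen for illustration; the resampling scheme used in the course framework may differ. One random offset is drawn and N evenly spaced pointers walk through the normalized cumulative weights, so a particle with normalized weight w is copied roughly N * w times. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Systematic (low-variance) resampling. Weights need not be normalized.
// Returns, for each of the N new particles, the index of the old particle it
// is copied from.
template <class URNG>
std::vector<std::size_t> systematicResample(const std::vector<double>& weights, URNG&& rng) {
    const std::size_t n = weights.size();
    assert(n > 0);
    const double total = std::accumulate(weights.begin(), weights.end(), 0.0);
    assert(total > 0.0);

    const double step = 1.0 / static_cast<double>(n);
    std::uniform_real_distribution<double> offset(0.0, step);
    double pointer = offset(rng);            // single random draw in [0, 1/N)
    double cumulative = weights[0] / total;  // normalized cumulative weight
    std::size_t j = 0;

    std::vector<std::size_t> indices;
    indices.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        // Advance to the particle whose cumulative-weight interval contains
        // the pointer; using ">=" passes over zero-weight particles.
        while (pointer >= cumulative && j + 1 < n) {
            ++j;
            cumulative += weights[j] / total;
        }
        indices.push_back(j);
        pointer += step;
    }
    return indices;
}
```

After resampling, the copied particles would typically all receive the weight 1/N again.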

Both the ParticleFilterBase and Particle classes implement a propagation method. What is the difference between the methods?

The ParticleFilterBase::propagateSamples method ensures all particles in the filter move forward uniformly and in sync. It achieves this by applying motion updates and noise consistently to every particle, thereby keeping coordination in how they update their positions. This centralised approach guarantees that all particles stick to the same movement rules, effectively managing the overall motion coordination for the entire group.

On the other hand, the Particle::propagateSample method focuses on updating each particle individually. It adjusts a particle's position based on its current state and specific motion and noise parameters. Unlike ParticleFilterBase, which handles all particles collectively, Particle::propagateSample tailors the movement update for each particle according to its unique characteristics. This allows each particle to adjust its position independently, ensuring flexibility in how particles evolve over time.
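The difference between the two propagation methods can be illustrated with simplified, hypothetical stand-ins for the two classes; the real framework signatures are not reproduced here. The base class applies one odometry increment and one noise model to every particle, while each particle rotates that increment into its own heading and adds its own noise sample. Gaussian noise with fixed standard deviations is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <utility>
#include <vector>

struct Pose { double x, y, theta; };  // position [m] and heading [rad]

// Hypothetical, simplified stand-in for the framework's Particle class.
class Particle {
public:
    explicit Particle(Pose p) : pose_(p) {}

    // Moves this single particle: the odometry increment `delta` (expressed in
    // the robot frame) is perturbed with this particle's own noise samples and
    // rotated into the particle's heading before it is applied.
    template <class URNG>
    void propagateSample(const Pose& delta, double trans_std, double rot_std, URNG& rng) {
        assert(trans_std > 0.0 && rot_std > 0.0);  // normal_distribution needs stddev > 0
        std::normal_distribution<double> trans_noise(0.0, trans_std);
        std::normal_distribution<double> rot_noise(0.0, rot_std);
        const double dx = delta.x + trans_noise(rng);
        const double dy = delta.y + trans_noise(rng);
        const double c = std::cos(pose_.theta);
        const double s = std::sin(pose_.theta);
        pose_.x += c * dx - s * dy;
        pose_.y += s * dx + c * dy;
        pose_.theta += delta.theta + rot_noise(rng);
    }

    const Pose& pose() const { return pose_; }

private:
    Pose pose_;
};

// Hypothetical, simplified stand-in for ParticleFilterBase.
class ParticleFilterBase {
public:
    explicit ParticleFilterBase(std::vector<Particle> particles)
        : particles_(std::move(particles)) {}

    // Applies the same increment and noise model to every particle in one pass.
    template <class URNG>
    void propagateSamples(const Pose& delta, double trans_std, double rot_std, URNG& rng) {
        for (Particle& p : particles_) p.propagateSample(delta, trans_std, rot_std, rng);
    }

    const std::vector<Particle>& particles() const { return particles_; }

private:
    std::vector<Particle> particles_;
};
```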

Assignment 1.1 Initialize the Particle Filter

What are the advantages/disadvantages of using the first constructor, what are the advantages/disadvantages of the second one?

In which cases would we use either of them?

The first constructor places random particles around the robot with an equal radial probability (uniformly distributed). Each particle has an equal chance of being positioned anywhere around the robot, with completely random poses. This method is particularly useful when the robot’s initial position is unknown and there is no information about its location on the global map. By distributing particles randomly, the robot maximizes its likelihood of locating itself within the environment. The key advantages of this approach include wide coverage and flexibility, making it ideal for scenarios where the robot's starting position is completely unknown. However, the disadvantages are lower precision due to the wide spread of particles and higher computational demands, as more particles are needed to achieve accurate localization.

In contrast, when the robot’s position and orientation are known, the second constructor is more effective. This method initializes particles with a higher probability of being aligned with the robot's known state, placing more particles at the location of the robot and orienting them similarly (Gaussian distributed). This concentrated placement allows for more accurate corrections of the robot’s position and orientation as it moves forward. The advantages of this approach include higher precision and efficiency, as fewer particles are needed for accurate localization. However, it has the drawback of limited coverage, making it less effective if the initial guess of the robot’s position is incorrect. Additionally, it requires some prior knowledge of the robot’s initial position and orientation. This constructor is best used when the robot has a general idea of its starting location and direction.
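The two initialization strategies can be sketched as free functions in C++. These are not the framework's constructors: the uniform variant assumes the search region is given as a rectangle with headings drawn over [-pi, pi), and the Gaussian variant assumes independent standard deviations for position and heading around the initial guess. Both function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Pose { double x, y, theta; };

// Unknown start: positions uniform over a rectangle, headings uniform.
template <class URNG>
std::vector<Pose> initUniform(std::size_t n, double x_min, double x_max,
                              double y_min, double y_max, URNG& rng) {
    assert(x_min < x_max && y_min < y_max);
    const double pi = std::acos(-1.0);
    std::uniform_real_distribution<double> ux(x_min, x_max), uy(y_min, y_max), ut(-pi, pi);
    std::vector<Pose> particles(n);
    for (Pose& p : particles) p = {ux(rng), uy(rng), ut(rng)};
    return particles;
}

// Known initial guess: particles drawn from Gaussians centred on it.
template <class URNG>
std::vector<Pose> initGaussian(std::size_t n, const Pose& guess, double pos_std,
                               double theta_std, URNG& rng) {
    assert(pos_std > 0.0 && theta_std > 0.0);
    std::normal_distribution<double> nx(guess.x, pos_std), ny(guess.y, pos_std),
        nt(guess.theta, theta_std);
    std::vector<Pose> particles(n);
    for (Pose& p : particles) p = {nx(rng), ny(rng), nt(rng)};
    return particles;
}
```

The trade-off described above shows up directly: the uniform variant needs many particles to cover the whole rectangle, while the Gaussian variant concentrates all particles near the guess and cannot recover if that guess is far off.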

Assignment 1.2 Calculate the pose estimate

Interpret the resulting filter average. What does it resemble? Is the estimated robot pose correct? Why?

The filter calculates a weighted average across all particles to estimate the robot's state, including its position and orientation. Particles that align closely with sensor data receive higher weights, allowing the particle swarm to adapt to sensor readings. As the filter operates over time, the estimated pose gradually aligns more closely with the actual pose of the robot. However, some discrepancy in pose may persist due to the finite number of particles, which limits the filter's ability to fully represent the pose space. Iterating through the particle swarm with noise can enhance the accuracy of the estimated robot pose over successive iterations.

The resulting filter average, determined in ParticleFilterBase::get_average_state(), represents the estimated pose of the robot based on the weighted distribution of particles in the particle filter. This average pose represents the center of mass of the particle cloud, which approximates the most likely position of the robot according to the filter's current state. The alignment of the robot's pose and the average state of the particle cloud depends on several factors:

- Particle Distribution: If the particles are distributed in a way that accurately reflects the true probability distribution of the robot's pose, the estimated pose is likely to be correct or close to the actual robot position.

- Particle Weighting: The accuracy of the estimated pose depends on the weights assigned to the particles, which represent their likelihood of being at the robot's pose. Particles with a higher weight contribute more to the average pose calculation, so more probable poses are given more importance.

- Model Accuracy: The accuracy of the average state also depends on how accurate the motion model (used to propagate particles) and the measurement model (used to update the particle weights) are. If these models accurately reflect the robot's actual movement and sensor measurements, the estimated pose is more likely to be correct.
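A minimal sketch of such a weighted average in C++, assuming non-negative weights with a positive sum, which are normalized internally. Positions are averaged linearly, but the heading is averaged as a weighted circular mean (the direction of the weighted sum of unit vectors), because a plain average of angles fails at the ±pi wrap-around: headings of +179° and -179° would average to 0° instead of 180°. The framework's get_average_state() may handle the heading differently; the names below are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct WeightedPose { double x, y, theta, weight; };
struct Pose { double x, y, theta; };

// Weighted mean of the particle cloud with a circular mean for the heading.
Pose weightedAverage(const std::vector<WeightedPose>& particles) {
    double total = 0.0, x = 0.0, y = 0.0, c = 0.0, s = 0.0;
    for (const WeightedPose& p : particles) {
        total += p.weight;
        x += p.weight * p.x;
        y += p.weight * p.y;
        c += p.weight * std::cos(p.theta);  // weighted sum of unit vectors
        s += p.weight * std::sin(p.theta);
    }
    assert(total > 0.0);
    return {x / total, y / total, std::atan2(s, c)};
}
```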

Currently, there is a difference between the average state and the actual robot position. These differences come from several factors. Firstly, all particles have been assigned the same weight, which prevents the filter from properly reflecting the likelihood of different poses. In a correct setup, particles with higher weights should have more influence on the average pose calculation, accurately representing more probable robot poses.

Furthermore, the motion and measurement models essential for incorporating the robot's movement and sensor measurements have not yet been implemented in the script. These models are crucial for accurately updating particle positions and weights based on real-world data. Without them, the estimated state cannot accurately reflect the robot's actual movement and the measurements from its sensors.

Imagine a case in which the filter average is inadequate for determining the robot position.