AutoRef honors 2019: Difference between revisions

Revision as of 08:57, 7 May 2020

AutoRef Honors 2019/20

Introduction

- explain intentions

- since we cannot test hardware anymore we had to adapt and switch to simulation

Software Architecture

Because hardware testing is no longer possible under the current circumstances, we had to change how we carry out the project. To make sure the work done during this period remains usable afterwards, we built the communication of our system on the Robot Operating System (ROS). This way, many components of the system can stay unchanged when we go back and implement it on real hardware. Only the parts that model the quadcopter and the video stream of the camera have to be swapped for the real drone and camera.

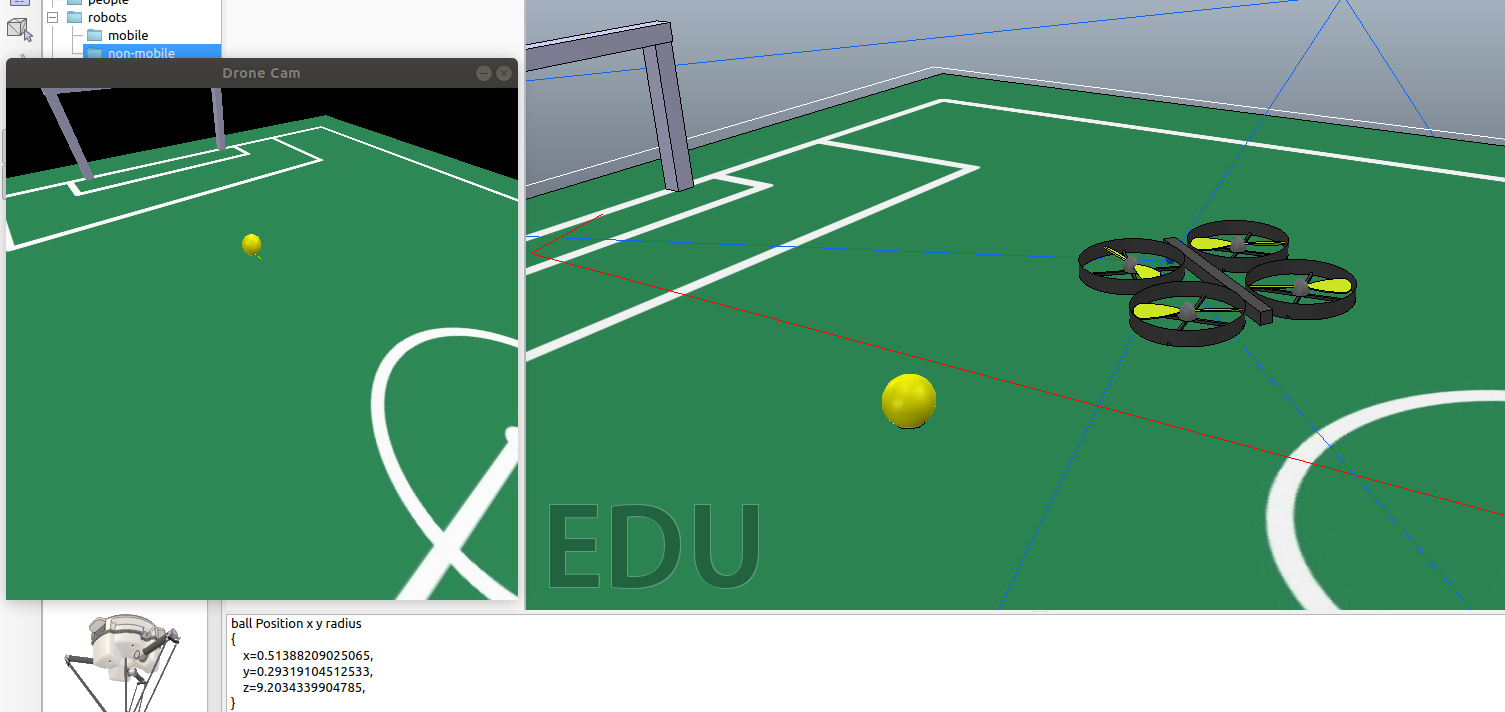

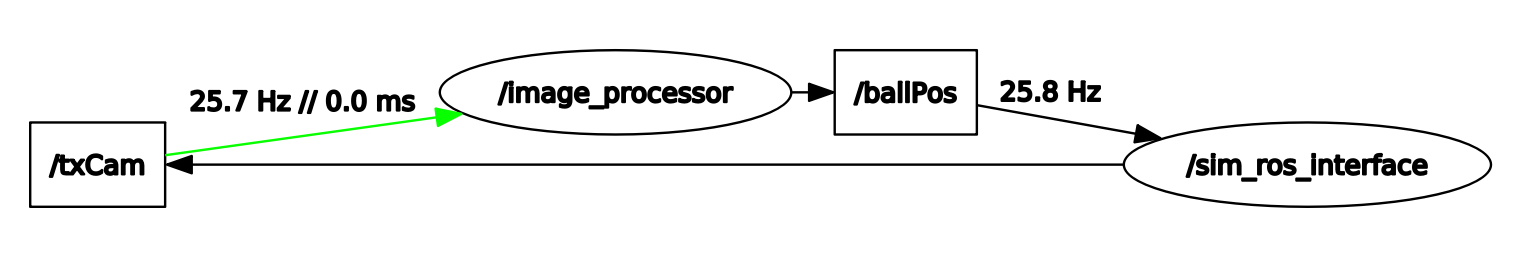

The simulation environment chosen is CoppeliaSim (formerly known as V-REP). It has an intuitive API and integrates well with ROS. In CoppeliaSim, each object (e.g. a drone or a camera) can have its own (child) script, which can communicate with ROS by subscribing and publishing to topics. The overall architecture can be displayed with the ROS command rqt_graph while the system is running. The output of this command is shown in the accompanying figure.

The following text briefly explains what everything in the figure means. More in-depth information can be found in the corresponding sections. ros_interface is the node created by the simulator itself; it serves as the communication path between the simulator scripts and the rest of the system. What cannot be seen in the figure are the individual object scripts within the simulator. A script that belongs to the camera object publishes the footage from the camera mounted on the drone to the topic /txCam. The image_processor node, which runs outside the simulator, subscribes to /txCam and thereby receives the images from the simulator. It extracts the relative ball position and size (in pixels) from each frame and stores them in an object (message type). This message is then published to the /ballPos topic. The simulator node (actually a script belonging to the flight controller object) subscribes to this topic, reads the position of the ball relative to the drone, and decides what to do with this information, i.e. how to move appropriately. This loop runs at approximately 24 Hz.
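The content of the /ballPos message can be pictured as a small record. The sketch below is only an illustration: the class name `BallPos`, its fields, the helper `make_ball_pos`, and the normalization to the 0..1 range are all assumptions (the normalized range is inferred from the control section, which compares ballPos.x to 0.5). The actual message definition used in the project may differ.

```python
from dataclasses import dataclass


@dataclass
class BallPos:
    """Hypothetical stand-in for the message sent on /ballPos."""
    x: float     # horizontal position, 0 = left edge, 1 = right edge
    y: float     # vertical position, 0 = top edge, 1 = bottom edge
    size: float  # ball radius as a fraction of the frame width


def make_ball_pos(cx_px, cy_px, radius_px, width_px, height_px):
    """Convert a pixel-space detection into a normalized BallPos."""
    if width_px <= 0 or height_px <= 0:
        raise ValueError("frame dimensions must be positive")
    return BallPos(
        x=cx_px / width_px,
        y=cy_px / height_px,
        size=radius_px / width_px,
    )


# Example: a ball detected right of center, in the upper half of the frame.
msg = make_ball_pos(cx_px=450, cy_px=120, radius_px=30,
                    width_px=600, height_px=480)
print(msg)
```

Normalizing in the image processor keeps the controller independent of the camera resolution, which should make it easier to swap the simulated camera for a real one.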

Vision

Ball tracking

To track the ball from the drone camera, an existing Python script was found and adapted. Tracking the ball requires a threshold in HSV color space. This threshold represents the range of colors that the ball can show on camera under different lighting conditions.
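To illustrate what such a threshold means, the sketch below tests single pixels against an HSV range using only the Python standard library. The threshold values and the function name `in_hsv_range` are hypothetical and would have to be tuned on real footage. Note also that `colorsys` scales all three components to 0..1, whereas OpenCV uses a different scaling for 8-bit images (hue 0..179, saturation and value 0..255), so the numbers are not directly transferable to the cv2-based code.

```python
import colorsys


def in_hsv_range(rgb, lower, upper):
    """Return True if an RGB pixel (0-255 per channel) lies inside an HSV box.

    lower and upper are (h, s, v) tuples with each component in 0..1,
    the convention used by the standard-library colorsys module.
    """
    r, g, b = (c / 255.0 for c in rgb)
    hsv = colorsys.rgb_to_hsv(r, g, b)
    return all(lo <= value <= hi for value, lo, hi in zip(hsv, lower, upper))


# Hypothetical threshold for a yellow ball; real values must be tuned.
YELLOW_LOWER = (0.11, 0.40, 0.40)
YELLOW_UPPER = (0.20, 1.00, 1.00)

bright_yellow = (255, 220, 0)   # ball in direct light
shaded_yellow = (150, 130, 20)  # ball in shadow
grass_green = (40, 160, 40)     # soccer field

print(in_hsv_range(bright_yellow, YELLOW_LOWER, YELLOW_UPPER))  # True
print(in_hsv_range(shaded_yellow, YELLOW_LOWER, YELLOW_UPPER))  # True
print(in_hsv_range(grass_green, YELLOW_LOWER, YELLOW_UPPER))    # False
```

The bright and shaded yellow pixels differ a lot in RGB but have nearly the same hue, which is why the range is expressed in HSV rather than RGB.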

The code first grabs a frame from the video, resizes it to a standard size, and applies a blur filter to produce a less detailed image. Every part of the image that does not have the color of the ball is then made black, and further filters are applied to remove small patches that share the ball's color. Next, a few functions of the cv2 package are used to encircle the largest remaining patch with the color of the ball, and the center and radius of this circle are determined. One problem we ran into was that the ball was sometimes detected at an incorrect position due to color flickering. We therefore added a feature that only looks for the ball in the region of the image where it was detected in the previous frame.
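The idea of restricting the search to the neighbourhood of the previous detection can be sketched as follows. This is not the project's code: the function names, the square window shape, the margin of 80 pixels, and the 600x450 frame size are assumptions chosen for illustration.

```python
def search_window(prev_center, margin, width, height):
    """Return (x0, y0, x1, y1) of the region to search in the next frame.

    prev_center is the (x, y) pixel position of the last detection, or None
    when the ball has not been seen yet, in which case the whole frame is used.
    """
    if prev_center is None:
        return (0, 0, width, height)
    cx, cy = prev_center
    # Clamp the window so it never extends past the frame borders.
    x0 = max(0, cx - margin)
    y0 = max(0, cy - margin)
    x1 = min(width, cx + margin)
    y1 = min(height, cy + margin)
    return (x0, y0, x1, y1)


def is_plausible(candidate, window):
    """Accept a detection only if its center falls inside the search window."""
    x, y = candidate
    x0, y0, x1, y1 = window
    return x0 <= x < x1 and y0 <= y < y1


window = search_window(prev_center=(300, 200), margin=80, width=600, height=450)
print(window)                            # (220, 120, 380, 280)
print(is_plausible((320, 230), window))  # True: close to the last position
print(is_plausible((50, 400), window))   # False: likely a flicker artifact
```

A practical version would probably also fall back to the full frame after the ball has been lost for a number of frames; otherwise the tracker could stay locked onto an empty region.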

More on the code can be found here: https://www.pyimagesearch.com/2015/09/14/ball-tracking-with-opencv/

Control of Drone in Simulator

The drone used in the simulation is CoppeliaSim's built-in model 'Quadcopter.ttm', which can be found under robots->mobile. This drone comes with a script that takes care of stabilization and drift. The object script takes the drone's absolute pose and the absolute pose of a 'target' and tries to make the drone follow the target using a PID control loop that acts on the four motors of the drone. In our actual hardware system we do not want to rely on an absolute pose system to follow an object, so we do not use the absolute pose of the drone for path planning in the simulation either. The absolute pose is, however, still used to stabilize the drone in simulation, because on the real drone an optical flow sensor takes care of this.

When the drone receives a message from the /ballPos topic (which contains the position of the ball relative to the drone), it reacts in the following simple way. When the ball is on the right-hand side of the screen, i.e. 1 > ballPos.x > 0.5, the left motors spin harder, and vice versa. When the ball is far away, i.e. 0.5 > ballPos.y > 0, the drone increases the thrust of the two rear motors, and vice versa. For now this is done with a P-controller. The task can obviously be improved with a more complex controller and a more elegant algorithm.
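The proportional rule described above can be sketched in a few lines. The actual controller lives in a CoppeliaSim object script and is not reproduced here; the function name `motor_offsets`, the gain `kp`, the motor names, and the choice to lower the thrust on the opposite side by the same amount are assumptions made for this illustration.

```python
def motor_offsets(ball_x, ball_y, kp=1.0):
    """Map a normalized ball position to thrust offsets for the four motors.

    ball_x and ball_y are in 0..1, with (0.5, 0.5) the image center.
    Returns a dict of offsets to add to each motor's base thrust.
    """
    roll_error = ball_x - 0.5    # > 0 when the ball is on the right
    pitch_error = 0.5 - ball_y   # > 0 when the ball is far away (upper half)

    # P-controller: the command is proportional to the error.
    roll = kp * roll_error
    pitch = kp * pitch_error

    # Ball on the right -> left motors spin harder.
    # Ball far away     -> rear motors spin harder.
    return {
        "front_left": roll - pitch,
        "front_right": -roll - pitch,
        "rear_left": roll + pitch,
        "rear_right": -roll + pitch,
    }


# Ball to the right and far away: the rear-left motor gets the largest boost.
print(motor_offsets(ball_x=0.75, ball_y=0.25))
```

With the ball exactly in the image center both errors are zero and all offsets vanish, so the drone simply hovers on its stabilization loop.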

Hardware

cf bolt flowdeck

Implementation

//

Tutorial Simulation

After following this tutorial, the reader should be able to run a basic simulation of a drone following a yellow object (ball) on a soccer field. The simulation environment chosen for this project is CoppeliaSim (formerly known as V-REP). It was chosen because of its intuitive API and the capabilities of its internal object scripts. More information about how CoppeliaSim deals with scripts can be found here: https://www.coppeliarobotics.com/helpFiles/en/scripts.htm. This tutorial assumes the reader is on a Linux machine, has installed ROS, and is familiar with its basic functionality. The next figure gives a sense of what is achieved after this tutorial (it can be clicked to show a video).

- Download and extract the downloadable zip file at the bottom of the page.

- Download CoppeliaSim (Edu): https://www.coppeliarobotics.com/downloads

- Install it and place the installation folder in a directory of your choice.

- Follow the ROS setup for the simulator: https://www.coppeliarobotics.com/helpFiles/en/ros1Tutorial.htm

- Start ROS by opening a terminal (Ctrl+Alt+T) and typing roscore

- Open CoppeliaSim by opening a terminal in its installation folder and typing ./coppeliaSim.sh

- In the simulator, choose File->Open scene... and locate the follow_rollpitch.ttt file from the extracted zip.

- Open the main script (the orange paper icon under 'Scene hierarchy'), find the line camp = sim.launchExecutable('/PATH/TO/FILE/camProcessor.py') and replace /PATH/TO/FILE with the path to the folder extracted from the zip.

- Everything is now set up. Press the play button to start the simulation.

- A window should pop up with the camera feed, and the quadcopter should start to follow the ball.

Downloads

- Complete simulation so far: File:Drone follow roomba v-rep.zip

- Empty robot soccer field for CoppeliaSim (V-REP): File:V-rep soccerfield.zip