System Architecture MSD16

No edit summary |

|||

| Line 46: | Line 46: | ||

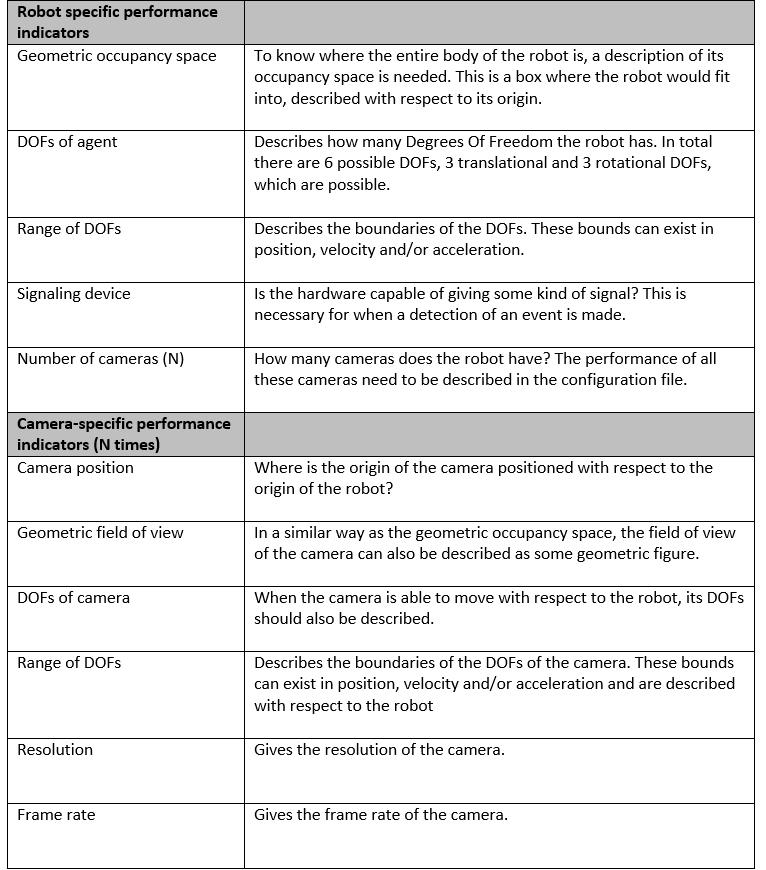

On the approach level of the system architecture it was decided to use moving robots with camera’s to perform the system tasks. Different robots might have different capabilities. This should not hinder the system from operating correctly. It should preferably even be possible to swap robots without losing system functionality. This is why the hardware is abstracted in the architecture. Each piece of hardware needs to know its own performance and share this information with the skill-selector. For the skill-selector to function properly, the performance of the hardware needs to be defined in a structured way. To do this, it is decided that every piece of hardware needs to have its own configuration file. In this file a wide range of quantifiable performance indices are stored. Because one robot can have multiple cameras, the performance can be split into a robot-specific part and one or more camera parts. | On the approach level of the system architecture it was decided to use moving robots with camera’s to perform the system tasks. Different robots might have different capabilities. This should not hinder the system from operating correctly. It should preferably even be possible to swap robots without losing system functionality. This is why the hardware is abstracted in the architecture. Each piece of hardware needs to know its own performance and share this information with the skill-selector. For the skill-selector to function properly, the performance of the hardware needs to be defined in a structured way. To do this, it is decided that every piece of hardware needs to have its own configuration file. In this file a wide range of quantifiable performance indices are stored. Because one robot can have multiple cameras, the performance can be split into a robot-specific part and one or more camera parts. | ||

<center>[[File:HCR.png|thumb|center|700px|hardware configuration]]</center> | <center>[[File:HCR.png|thumb|center|700px|hardware configuration]]</center> | ||

===Skill HCR=== | |||

Some skills might require some capability of the hardware (HCR) in order to perform as desired. To make it clear to the skill-matcher what these requirements are, each skill is prefixed with a HCR. This HCR should give bounds on the performance indicators in the configuration files. For instance; “to execute skill 1 a piece of hardware is required which can move in x and y direction with at least 5 m/s”. The skill-matcher can in turn look at each configuration file in order to determine what hardware can drive in x and y direction with at least this speed. As a simple first implementation, the HCR can be implemented as a structure. This structure will consist of a robot-capability structure and a camera-capability structure: | |||

'''Struct''' ''HCR_struct'' {RobotCap,CameraCap}<br> | |||

'''Struct''' ''RobotCap''{<br> | |||

DOF_matrix<br> | |||

Occupancy_bound_representation<br> | |||

Signal_device<br> | |||

Number_of_cams<br> | |||

}<br> | |||

''' | |||

Struct''' ''CameraCap''{<br> | |||

DOF_matrix<br> | |||

Resolution<br> | |||

Frame_rate<br> | |||

Detection_box_representation<br> | |||

}<br> | |||

Revision as of 19:25, 4 May 2017

Paradigm

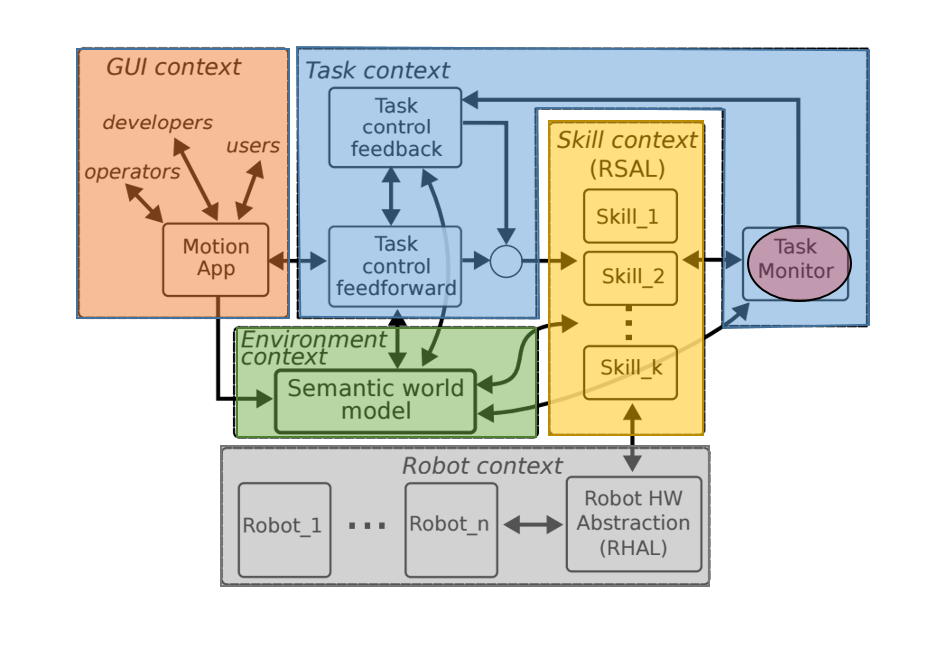

There are many paradigms available for developing the architecture of robotic systems. Several of them are described in Chapter 8 of the Springer Handbook of Robotics. In this project, the paradigm developed at KU Leuven in collaboration with TU Eindhoven was used. This paradigm is followed at TechUnited.

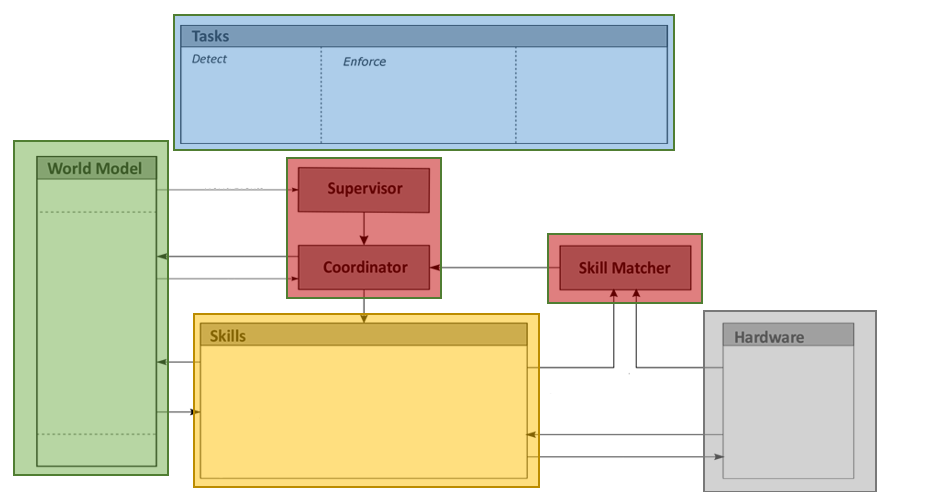

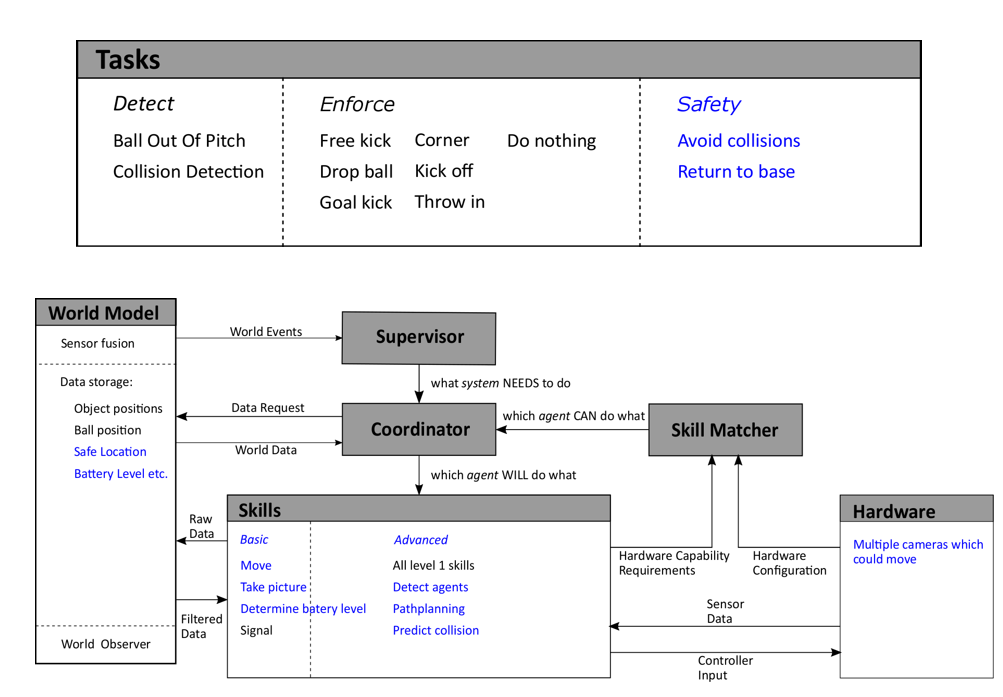

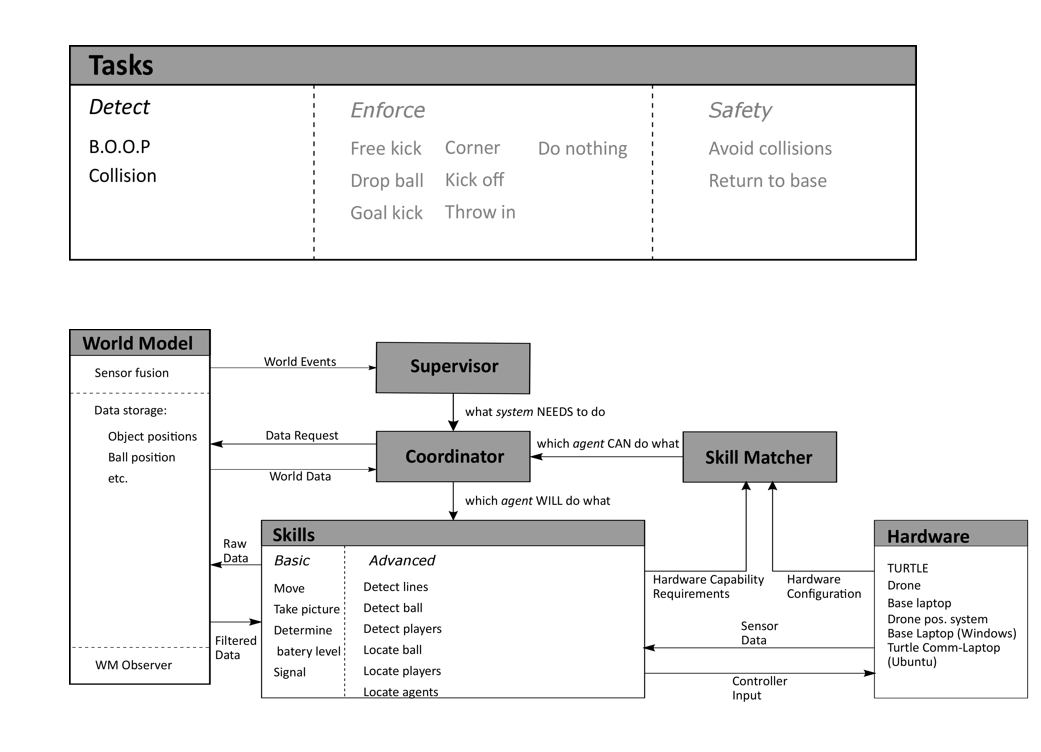

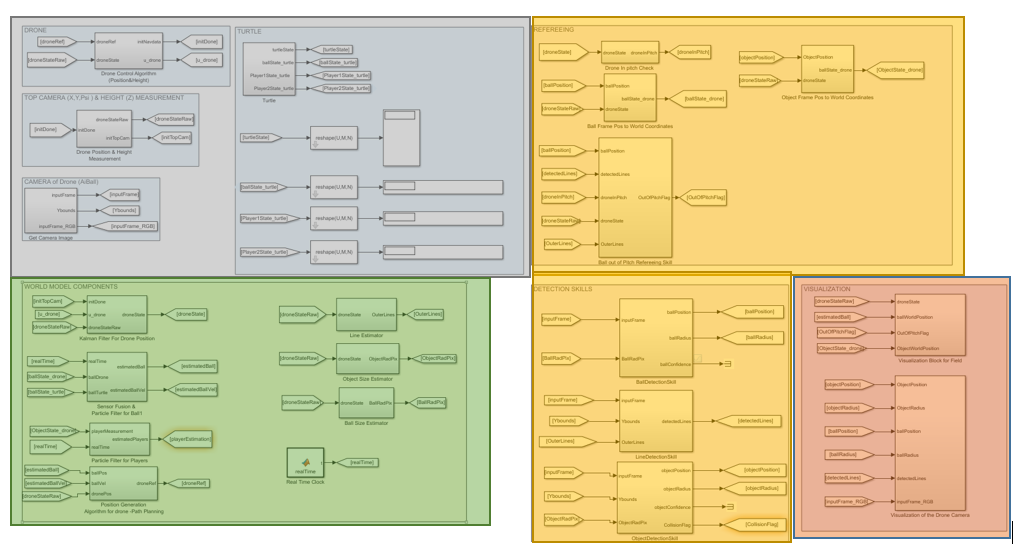

This paradigm defines Tasks (blue block) from the objectives set with respect to the context of the project, i.e. what needs to be done. The Skills (yellow block) then define the implementation, i.e. how these tasks will be completed. The Hardware (grey block), i.e. the robots, comprises the agents at the system architect's disposal that complete the tasks by executing the skills. The choice of certain agents over others is influenced by the system requirements, which in turn depend on a number of factors, especially the objectives and the context of the project. The agents gather information from the environment. This information is first processed (filtered, fused, etc.) and then stored in the world model (green block), where it can be accessed afterwards. Finally, the visualization or user interface (orange block) is a tool to observe how the system sees the environment.

Within the Task block, one sub-block is the Task Monitor (highlighted in red within the Task context block). This block was interpreted as a supervisor and coordinator that keeps an eye on the tasks and skills. Some tasks may be completed by a series of skills. In such a case it becomes important to schedule the skills, as they may have to be executed either sequentially or in parallel. The system may also need to track which skills have been completed and which need to be executed next. Task control feedback/feedforward was not considered in much detail in the system architecture developed for this project.
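The bookkeeping described above can be sketched in a few lines of Python. This is only an illustration and was not part of the project: the class name `TaskMonitor`, the idea of grouping skills into sequential stages whose members may run in parallel, and the skill names are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class TaskMonitor:
    """Tracks which skills of a task are done and which may run next.

    `stages` is an ordered list of stages (an assumed representation):
    skills within one stage may run in parallel, while the stages
    themselves are executed sequentially.
    """
    stages: list[list[str]]
    completed: set[str] = field(default_factory=set)

    def next_skills(self) -> list[str]:
        """Return the unfinished skills of the first incomplete stage."""
        for stage in self.stages:
            pending = [s for s in stage if s not in self.completed]
            if pending:
                return pending
        return []  # every stage is finished

    def mark_done(self, skill: str) -> None:
        """Record that a skill has been completed."""
        self.completed.add(skill)

    def task_finished(self) -> bool:
        """True once no stage has pending skills."""
        return not self.next_skills()


# Hypothetical task: two detection skills in parallel, then a decision skill.
monitor = TaskMonitor(stages=[["detect_ball", "detect_lines"], ["check_ball_out"]])
```

Calling `monitor.next_skills()` returns the skills that may be started now, and `monitor.mark_done(...)` advances the schedule once a skill reports completion.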

Layered Approach

The system architecture derived from the concepts of the paradigm described above can be seen below. Every block except the GUI context is highlighted in this architecture. The Coordinator block interacts with the Supervisor to determine which skills need to be executed, and is assisted by the Skill Matcher in picking the hardware agent best suited to execute the required skill. The concept behind the Skill Matcher is explained in a later section.

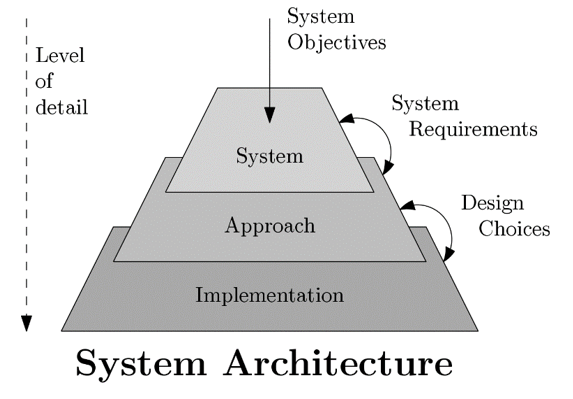

While developing the architecture, a layered approach was used. Design choices were made based on the objectives and the requirements/constraints they impose. As shown in the figure below, the level of detail increases with each subsequent layer, starting from the top.

System Layer

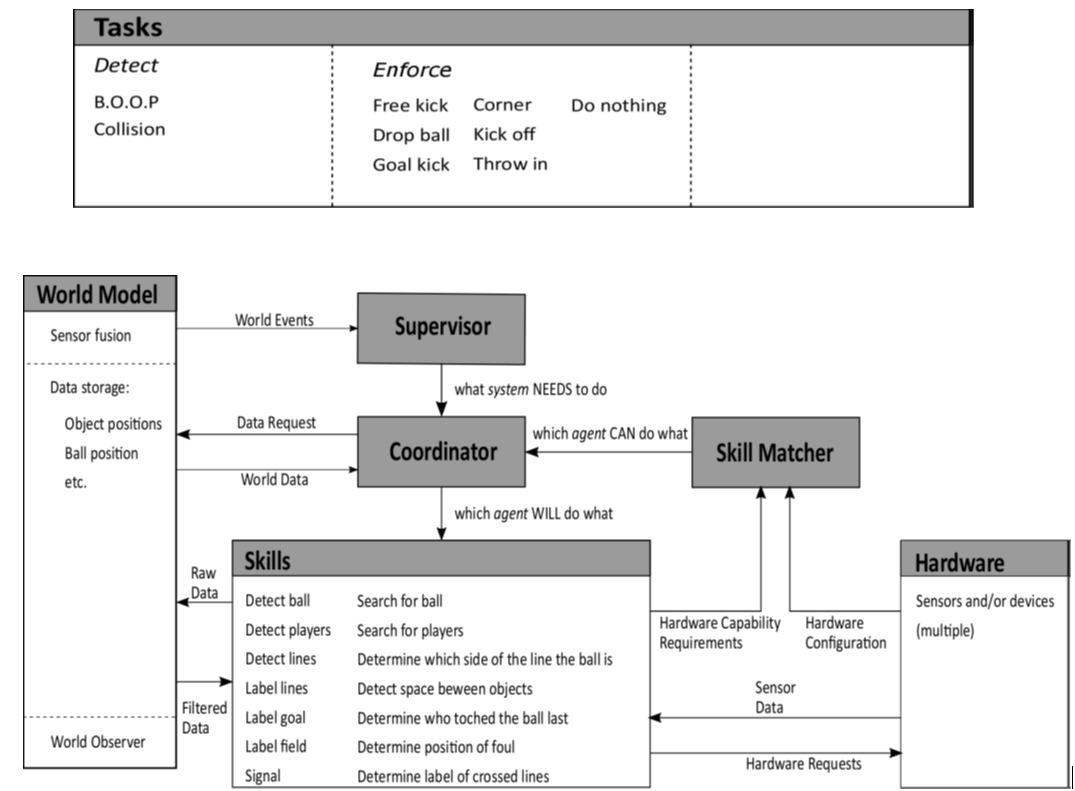

Detecting predefined events and enforcing the corresponding rules were the two main system objectives that could be taken into account for this architecture. Two events, namely

1. ball going out of pitch (B.O.O.P) and

2. collision between players

were considered to be in scope of this project. For detection and enforcement of these rules the Tasks and Skills blocks were filled as shown in the figure below.

Approach Layer

Further, by taking into account some of the system requirements, one can bring more tasks and skills into scope. For example, if one of the requirements is ‘consistency’, it can be defined as ‘the autonomous referee system should be able to capture the gameplay dynamically’. This requirement might be refined further and result in the decision ‘the hardware must have multiple cameras in the field which allow effective capture of the active gameplay’. This decision could in turn be sharpened into having ‘multiple movable cameras in the field which allow effective capture of the active gameplay’. The current layer in the architecture represents an evolution towards implementation. This is reflected in the narrowing down of the elements in the Hardware block from sensors and/or devices to multiple cameras that can move. Though this statement is still vague, it is more refined than its predecessor.

Implementation Layer

Referring back to the three layers in the architecture diagram: as the requirements were further refined, several design choices were made on the software and hardware. These choices were influenced not only by requirements but also by constraints. For example, the use of a drone and the TechUnited turtle was imposed on the project. These did not come into the picture in the previous layers. The current layer of the architecture is the Implementation Layer, as depicted in the figure.

At this level of the architecture, one can write the software. The major decisions made here concerned the software, such as the specific:

1. image processing algorithms (=skills),

2. sensor fusion algorithms (in the world model) and

3. communication protocols (communication between different hardware components).

This layer is also a realistic representation of the actual implementation the project team ended up with, i.e. only the detection-based tasks (and the corresponding skills).

Below is a screenshot of the Simulink implementation. It highlights that the software developed in this project corresponds closely to the paradigm used for the system architecture. The colors of the blocks here match those shown earlier in the description of the paradigm.

The Supervisor, Coordinator and Skill-Matcher were not part of the final implementation. Their concept is nevertheless presented here and can be worked out further and included in future implementations.

Skill-Matcher

For each defined skill, the skill-selector needs to determine which piece of hardware is able to perform it. Therefore the capabilities of each piece of hardware need to be known and registered somewhere in a predefined standard format. These hardware capabilities can be compared to the hardware capability requirements (HCR; required in the skill framework) of each skill. This matching between hardware and skill can be done by the skill-selector during initialization of the system. To do this in a smart way, the description of the hardware configuration and the HCR need to be in a similar format. One possible way of doing this is discussed below.

Hardware configuration files

On the approach level of the system architecture it was decided to use moving robots with cameras to perform the system tasks. Different robots might have different capabilities. This should not hinder the system from operating correctly. Preferably, it should even be possible to swap robots without losing system functionality. This is why the hardware is abstracted in the architecture. Each piece of hardware needs to know its own performance and share this information with the skill-selector. For the skill-selector to function properly, the performance of the hardware needs to be defined in a structured way. To this end, it was decided that every piece of hardware needs to have its own configuration file, in which a wide range of quantifiable performance indices is stored. Because one robot can have multiple cameras, the performance can be split into a robot-specific part and one or more camera parts.
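As an illustration of such a file, the sketch below stores one robot-specific part and a list of camera parts as JSON and loads it in Python. The file format, the key names (`name`, `robot`, `cameras`, `max_speed_xy`, and so on) and all values are assumptions made for this example; the project did not prescribe them.

```python
import json

# Hypothetical configuration file content for one robot; every value is illustrative.
CONFIG_TEXT = """
{
  "name": "robot_a",
  "robot": {
    "max_speed_xy": [3.0, 3.0],
    "signal_device": true
  },
  "cameras": [
    {"resolution": [1280, 720], "frame_rate": 30},
    {"resolution": [640, 480], "frame_rate": 60}
  ]
}
"""


def load_hardware_config(text: str) -> dict:
    """Parse a configuration file and check the robot/camera split."""
    config = json.loads(text)
    for key in ("name", "robot", "cameras"):
        if key not in config:
            raise ValueError(f"missing section: {key}")
    if not isinstance(config["cameras"], list):
        raise ValueError("'cameras' must be a list, one entry per camera")
    return config


config = load_hardware_config(CONFIG_TEXT)
print(config["name"], "has", len(config["cameras"]), "camera(s)")
```

Keeping the cameras in a list means a robot with a different number of cameras only changes the file, not the code that reads it.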

Skill HCR

Some skills require certain hardware capabilities (HCR) in order to perform as desired. To make these requirements clear to the skill-matcher, each skill is prefixed with an HCR. This HCR should give bounds on the performance indicators in the configuration files. For instance: “to execute skill 1, a piece of hardware is required which can move in the x and y directions with at least 5 m/s”. The skill-matcher can in turn look at each configuration file to determine which hardware can drive in the x and y directions with at least this speed. As a simple first implementation, the HCR can be implemented as a structure consisting of a robot-capability structure and a camera-capability structure:

Struct HCR_struct {RobotCap, CameraCap}

Struct RobotCap {
    DOF_matrix
    Occupancy_bound_representation
    Signal_device
    Number_of_cams
}

Struct CameraCap {
    DOF_matrix
    Resolution
    Frame_rate
    Detection_box_representation
}
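A minimal Python sketch of how a skill-matcher could compare an HCR against the hardware capabilities is given below. It simplifies the structures above: `max_speed_xy` stands in for the DOF matrix, only a few fields are checked, and the names `HCR`, `satisfies`, `match_skill` as well as all numeric values are assumptions chosen for illustration, not the project's implementation.

```python
from dataclasses import dataclass


@dataclass
class CameraCap:
    resolution: tuple[int, int]  # pixels (width, height)
    frame_rate: float            # frames per second


@dataclass
class RobotCap:
    max_speed_xy: tuple[float, float]  # m/s along x and y (stand-in for DOF_matrix)
    signal_device: bool
    cameras: list[CameraCap]


@dataclass
class HCR:
    """Lower bounds that a skill places on the hardware (assumed fields)."""
    min_speed_xy: tuple[float, float] = (0.0, 0.0)
    needs_signal_device: bool = False
    min_frame_rate: float = 0.0


def satisfies(hw: RobotCap, hcr: HCR) -> bool:
    """True if the hardware meets every bound in the HCR."""
    fast_enough = all(v >= m for v, m in zip(hw.max_speed_xy, hcr.min_speed_xy))
    signal_ok = hw.signal_device or not hcr.needs_signal_device
    # A frame-rate bound of zero means the skill does not need a camera.
    camera_ok = hcr.min_frame_rate <= 0 or any(
        c.frame_rate >= hcr.min_frame_rate for c in hw.cameras
    )
    return fast_enough and signal_ok and camera_ok


def match_skill(hcr: HCR, hardware: dict[str, RobotCap]) -> list[str]:
    """Return the names of all hardware able to run the skill."""
    return [name for name, hw in hardware.items() if satisfies(hw, hcr)]


# Hypothetical capabilities; the numbers are not measured values.
hardware = {
    "drone": RobotCap((6.0, 6.0), False, [CameraCap((1280, 720), 30.0)]),
    "turtle": RobotCap((3.0, 3.0), True, [CameraCap((640, 480), 60.0)]),
}
# "Skill 1" from the text: at least 5 m/s in the x and y directions.
skill_1 = HCR(min_speed_xy=(5.0, 5.0))
print(match_skill(skill_1, hardware))
```

Because the HCR and the hardware description use the same field layout, the comparison reduces to a field-by-field bound check, which is the reason for keeping both in a similar format.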