Embedded Motion Control 2019 Group 3

Group members

| Name | Student number |
|---|---|
| Collin Bouwens | 1392794 |
| Yves Elmensdorp | 1393944 |
| Kevin Jebbink | 0817997 |
| Mike Mostard | 1387332 |
| Job van der Velde | 0855969 |

Useful information

Planning

| Week 2 | Week 3 | Week 4 | Week 5 | Week 6 | Week 7 | Week 8 |
|---|---|---|---|---|---|---|
| Wed. 1 May: initial meeting: getting to know the requirements of the design document. | Mon. 6 May: design document handed in by 17:00. Responsibility: Collin and Mike. | Wed. 15 May: escape room competition. | | | Wed. 5 June: final design presentation. | Wed. 12 June: final competition. |
| | Tue. 7 May: first tests with the robot. Measurement plan and test code is to be made by Kevin and Job. | Tue. 14 May: implementing and testing the code for the Escape Room Challenge. | | | | |
| | Wed. 8 May: meeting: discussing the design document and the initial tests, as well as the software design made by Yves. Presentation of the initial design by Kevin during the lecture. | Wed. 15 May: developing the software design for the Final Challenge. | | | | |

Design document

This document describes the process of making the PICO robot succeed in the Escape Room Competition and the Final Competition. The PICO robot is a telepresence robot that is capable of driving around while monitoring its environment. In the Escape Room Competition, the robot is placed somewhere inside a rectangular room of unknown dimensions with one doorway that leads to the finish line. Once the robot crosses the finish line without bumping into walls, the assignment is completed. The Final Competition involves a dynamic hospital-like environment, where the robot is assigned to approach a number of cabinets based on a known map, while avoiding obstacles.

Components

The PICO robot is a modified version of the Jazz robot, which was originally developed by Gostai, now part of Aldebaran. The key components of the robot that are relevant to this project are the drivetrain and the laser rangefinder. The drivetrain is holonomic, as it consists of three omni-wheels that allow the robot to translate in any direction without necessarily rotating. This adds the benefit of being able to scan the environment in a fixed orientation while moving in any direction. The software framework allows the forward and sideways velocity to be set, as well as the horizontal angular velocity. The framework also approximates the position and angle relative to the starting position.

The laser rangefinder is a spatial measurement device that is capable of measuring the horizontal distance to any object within a fixed field of view. The software framework measures a finite number of equally distributed angles within the field of view and notifies when new measurement data is available. Using this data, walls and obstacles in the environment of the robot can be detected.

Lastly, the robot is fitted with loudspeakers and a WiFi connection according to the data sheet of the Jazz robot. This can be useful for interfacing during operation, as described in the 'Interfaces' section. Whether the PICO robot actually has these speakers and the WiFi connectivity remains to be determined.

Requirements

Different requirement sets have been made for the Escape Room Competition and the Final Competition. The requirements are based on the course descriptions of the competitions and the personal ambitions of the project members. The final software is finished once all the requirements are met.

The requirements for the Escape Room Competition are as follows:

- The entire software runs on one executable on the robot.

- The robot is to autonomously drive itself out of the escape room.

- The robot may not 'bump' into walls, where 'bumping' is judged by the tutors during the competition.

- The robot may not stand still for more than 30 seconds.

- The robot has five minutes to get out of the escape room.

- The software will communicate when it changes its state, why it changes its state and to what state it changes.

The requirements for the Final Competition are as follows:

- The entire software runs on one executable on the robot.

- The robot is to autonomously drive itself around in the dynamic hospital.

- The robot may not 'bump' into objects, where 'bumping' is judged by the tutors during the competition.

- The robot may not stand still for more than 30 seconds.

- The robot can visit a variable number of cabinets in the hospital.

- The software will communicate when it changes its state, why it changes its state and to what state it changes.

- The robot navigates based on a provided map of the hospital and data obtained by the laser rangefinder and the odometry data.

Functions

A list of functions the robot needs to fulfil has been made. Some of these functions are for both competitions, while some are for either the Escape Room or Final Competition. These functions are:

- In general:

- Recognising spatial features;

- Preventing collision;

- Conditioning the odometry data;

- Conditioning the rangefinder data;

- Communicating the state of the software.

- For the Escape Room Competition:

- Following walls;

- Detecting the end of the finish corridor.

- For the Final Competition:

- Moving to points on the map;

- Calculating current position on the map;

- Planning the trajectory to a point on the map;

- Approaching a cabinet based on its location on the map.

The key function in this project is recognising spatial features. The point of this function is to analyse the rangefinder data in order to detect walls, convex or concave corners, dead spots in the field of view, and gaps in the wall that could be a doorway. This plays a key role during the Escape Room Competition in order to detect the corridor with the finish line in it, and therefore has a priority during the realisation of the software. For this function to work reliably, it is essential that the rangefinder data is analysed for noise during the initial tests. If there is a significant amount of noise, the rangefinder data needs to be conditioned before it is fed into the spatial feature recognition function. As a safety measure, it is important to constantly monitor the spatial features in order to prevent collisions with unexpected obstacles.

Lastly, the trajectory planning function plays a major role during the Final Competition, as this determines the route that the robot needs to follow in order to get to a specified cabinet. This function needs to take obstacles into account, in case the preferred route is obstructed. This is possible, as the documentation about the Final Competition shows a map in which multiple routes lead to a certain cabinet. One of these routes can be blocked, in which case the robot needs to calculate a different route.

Specifications

The specifications describe important dimensions and limitations of the hardware components of the robot that will be used during the competitions. For each component, its specifications are given, together with the source of each specification.

The drivetrain of the robot can move the robot in the x and y directions and rotate the robot in the z direction. The maximum speed of the robot is limited to ±0.5 m/s translation and ±1.2 rad/s rotation. These values are from the Embedded Motion Control Wiki page. The centre of rotation of the drivetrain needs to be known in order to predict the translation of the robot after a rotation. This will be determined with a measurement.

The dimensions of the footprint of the robot need to be known in order to move the robot through corridors and doorways without collision. The footprint is 41 cm wide and 35 cm deep, according to the Jazz robot datasheet. A measurement will be made to check these dimensions.

The laser rangefinder will be used to detect and measure the distance to objects in the vicinity of the robot. The measurement distance range of the sensor is from 0.1 m to 10.0 m with a field of view of 229.2°. The range of the sensor is divided into 1000 parts. These values are determined with the PICO simulator and need to be verified with measurements on the real robot.
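As a rough illustration of these numbers, the angle of each beam follows from the field of view and the number of beams. The sketch below assumes that the 1000 parts are 1000 evenly spaced beams running from -114.6° to +114.6° and that index 0 is the first beam of the sweep; the helper name `beamAngle` and this indexing convention are assumptions that still need to be checked on the real robot.

```cpp
#include <cmath>
#include <cstddef>

// Values taken from the simulator; still to be verified on the real robot.
constexpr double kPi = 3.14159265358979323846;
constexpr double kFieldOfViewDeg = 229.2;
constexpr std::size_t kNumBeams = 1000;

// Hypothetical helper: angle (rad) of beam i, measured from the front of the robot.
// Assumes evenly spaced beams with beam 0 at -114.6 degrees.
double beamAngle(std::size_t i) {
    const double fov = kFieldOfViewDeg * kPi / 180.0;
    const double increment = fov / static_cast<double>(kNumBeams - 1);
    return -0.5 * fov + increment * static_cast<double>(i);
}
```

With these assumptions the spacing between two neighbouring beams is roughly 0.004 rad.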

Interfaces

The interfacing of the robot determines how the project members interact with the robot in order to set it up for the competitions. It also plays a role during operation, in the way that it interacts with the spectators of the competitions. On the development level there is an Ethernet connection available to the robot. This allows a computer to be hooked up to the robot in order to download the latest version of the software using git, by connecting to the Gitlab repository of the project group. This involves using the git pull command, which downloads all the content from the repository, including the executable that contains the robot software.

On the operation level it is important for the robot to communicate the status of the software. This is useful for debugging the software, as well as clarifying the behaviour during the competitions. This can be made possible with the loudspeaker, by recording voice lines that explain what the robot currently senses and what the next step is that it will perform. Not only is this functionally important, but it can also add a human touch to the behaviour of the robot. In case that the PICO robot has been altered to not have loudspeakers, it needs to be determined during testing if the WiFi interface can be utilised in order to print messages in a terminal on a computer that is connected to the robot.

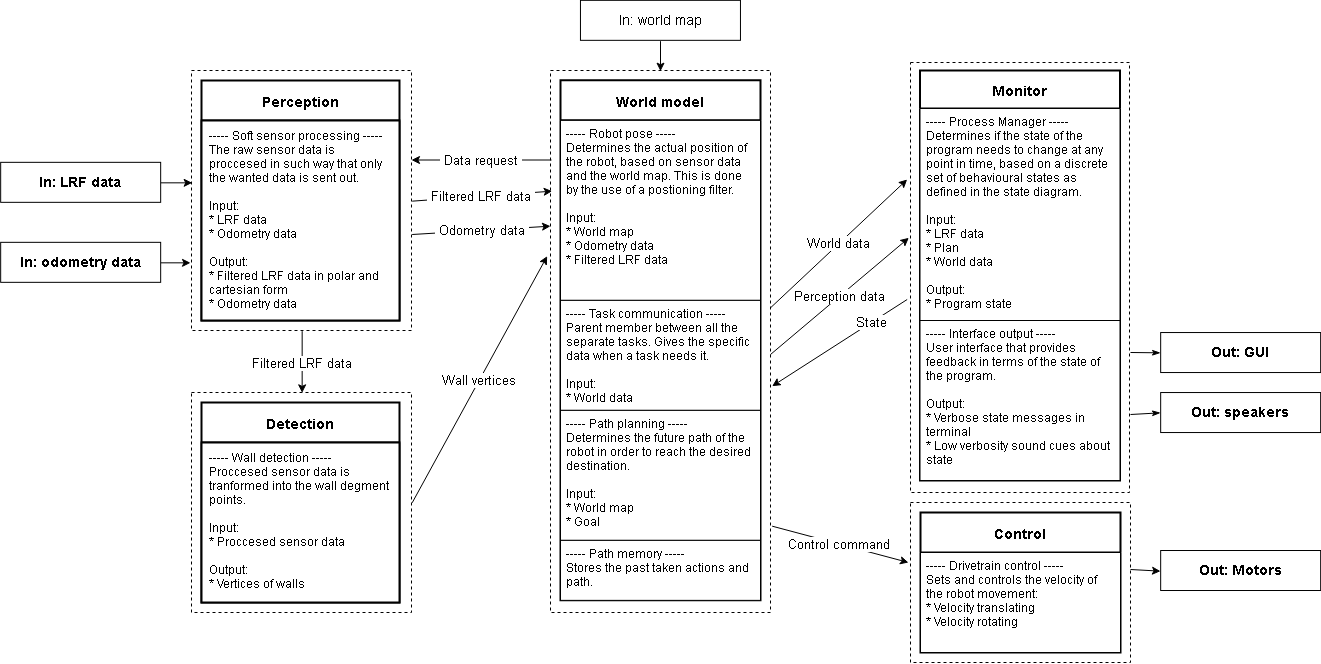

System architecture

Perception block

The purpose of the perception object is to condition the sensor data. This mainly involves filtering invalid points from the LRF measurements, such that these points cannot pollute the information that is fed into the feature detection algorithm. Such invalid points include points that are erroneously measured at the origin of the sensor, probably as a result of dust on the sensor.
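A minimal sketch of such a filter is given below. It assumes that a scan is available as a plain list of ranges and that the valid range limits are known; the names `PolarPoint` and `filterScan` are made up for this example and are not taken from the project code.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical container for one valid measurement.
struct PolarPoint {
    std::size_t index;  // position of the beam in the original scan
    double range;       // measured distance in metres
};

// Keep only the beams whose range lies inside [rangeMin, rangeMax].
// Points measured at the origin of the sensor (range close to 0) are dropped.
std::vector<PolarPoint> filterScan(const std::vector<double>& ranges,
                                   double rangeMin, double rangeMax) {
    std::vector<PolarPoint> valid;
    valid.reserve(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double r = ranges[i];
        // Comparisons with NaN are false, so NaN readings are dropped as well.
        if (r >= rangeMin && r <= rangeMax) {
            valid.push_back({i, r});
        }
    }
    return valid;
}
```

Keeping the original beam index allows the angle of each remaining point to be recovered later on.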

Monitor block

The monitor object, as the name implies, monitors the execution of the program. The state machine is run in this object. On every tick, it is checked whether the current state has fulfilled its exit conditions. (TODO: to be completed.)

World model block

Placeholder: Kevin's part on spatial recognition and related topics goes here.

Planner block

Control block

The control block contains actuator control and any output to the robot interface.

Drivetrain

The actuators are controlled such that the movement of the robot is smooth. This is achieved by implementing an S-curve for any velocity change. General information on S-curves can be found via the link under Useful Information.

Two functions have been constructed, 'Drive' for accelerating or decelerating to a certain speed in any direction, and 'Drive distance' for traveling a certain distance in any direction.
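To give an idea of what an S-curve velocity change looks like, the sketch below blends from one velocity to another with a cubic "smoothstep" profile, so that the acceleration is zero at the start and at the end of the change. This particular profile and the name `sCurveVelocity` are assumptions chosen for illustration; the profile used in 'Drive' may be different.

```cpp
#include <algorithm>

// Velocity at time t while changing from v0 to v1 within `duration` seconds.
// Uses a cubic smoothstep as a stand-in for the S-curve.
double sCurveVelocity(double v0, double v1, double t, double duration) {
    if (duration <= 0.0) {
        return v1;
    }
    // Normalised time, limited to the interval [0, 1].
    const double s = std::min(1.0, std::max(0.0, t / duration));
    // Blend factor that goes from 0 to 1 and is flat at both ends.
    const double blend = s * s * (3.0 - 2.0 * s);
    return v0 + (v1 - v0) * blend;
}
```

Called once per control tick with the elapsed time, this yields a setpoint that starts and ends without a jump in acceleration.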

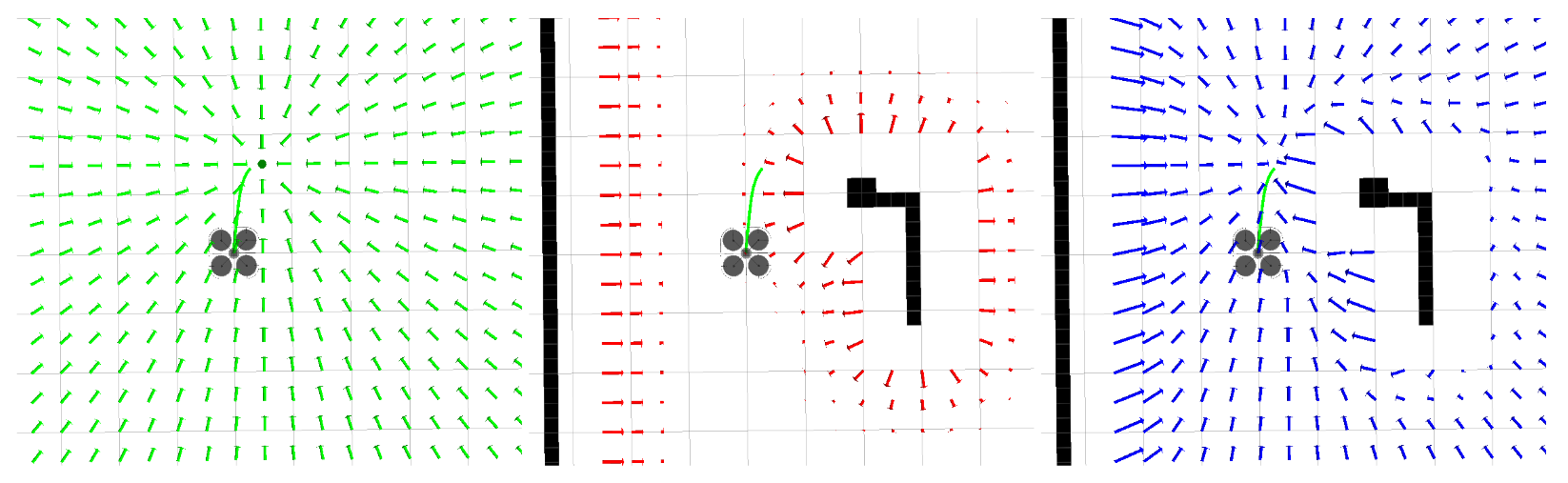

Drive has been further incorporated in a function that uses a potential field. This function steers the robot away from objects smoothly, so that it does not bump into them. See the figure below for a visual representation of the implementation of a potential field. The leftmost image shows the attraction field to the goal, the middle image shows the repulsion from obstacles and the rightmost image shows the combination of the two. Any wall or object is taken into account for this function.

Image obtained from: [[1]]

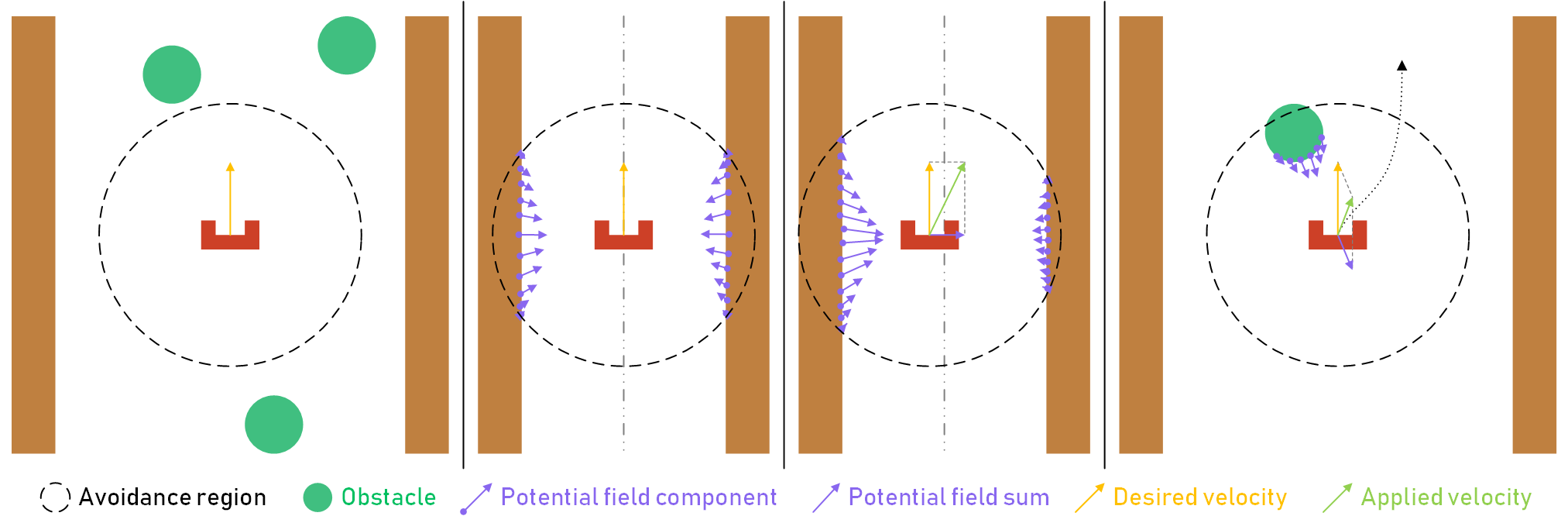

The potential field vector is calculated in real-time, as the robot is expected to run into dynamic obstacles in the final challenge. This also takes the imperfections in the physical environment into account. The way the potential field is obtained is visualised in the figure below.

The first image shows a situation in which the robot is far enough away from any walls or obstacles, and thus the potential field vector is zero, causing the robot to keep its (straight) trajectory. In the second image, the robot is driving through a narrow corridor. As a result of the symmetry of the environment, the potential field component vectors cancel each other out, causing the potential field sum vector to be zero. Once again, the robot keeps its trajectory. In the third image, however, the robot is closer to the left wall, causing the left potential field component vectors to outweigh the right ones. As such, the potential field sum vector points to the right, causing the robot to drive towards the middle of the corridor, until the sum vector reaches its steady state value when the robot is in the middle again. The fourth image depicts a situation where an obstacle, such as a random box or a walking person, enters the avoidance region around the robot. Once again, the potential field sum vector points away from the obstacle, causing the robot to drive around the obstacle as depicted by the dotted line.
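The four situations can be reproduced with a small sketch of the repulsive part of the field. It assumes that obstacle points are given in the robot frame (x forward, y to the left) and that each point inside the avoidance region pushes the robot away with a strength that grows as the point gets closer. The weighting `1/d - 1/radius`, the `gain` parameter and the names `Vec2` and `repulsion` are assumptions for illustration, not the implemented function.

```cpp
#include <cmath>
#include <vector>

struct Vec2 {
    double x;  // forward, in metres
    double y;  // to the left, in metres
};

// Sum of the repulsive component vectors of all obstacle points that lie
// inside the avoidance region with the given radius around the robot.
Vec2 repulsion(const std::vector<Vec2>& obstacles, double radius, double gain) {
    Vec2 sum{0.0, 0.0};
    for (const Vec2& p : obstacles) {
        const double d = std::hypot(p.x, p.y);
        if (d <= 0.0 || d >= radius) {
            continue;  // outside the avoidance region (or degenerate point)
        }
        // Assumed weighting: zero at the edge of the region, large close by.
        const double magnitude = gain * (1.0 / d - 1.0 / radius);
        sum.x -= magnitude * p.x / d;  // push away from the obstacle
        sum.y -= magnitude * p.y / d;
    }
    return sum;
}
```

With walls at equal distance on both sides the components cancel, and with the left wall closer the sum vector points to the right (negative y), matching the second and third image.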

Test Plan

The test plan describes the initial tests executed on the PICO robot to determine its functionality. The document also describes the initial setup required to couple the robot to a laptop.

Goal

The goal is to perform the initial setup of the robot and to determine the actual properties of the laser range finder, encoders and drive train. For the laser range finder, these properties consist of the range, angle, sensitivity and amount of noise. The most important property for the encoder is its accuracy.

The most important properties of the drivetrain are its accuracy, and its maximum translational and rotational acceleration for smooth movement.

Simulation results

According to the simulation, the range of the laser range finder is 10 cm to 10 m, and the field of view runs from -114.6 to +114.6 degrees as measured from the front of the robot. This field of view is divided into 1000 parts, and a scan is taken at a rate that can be set by the user.

Execution

Initial setup

The initial setup for connecting with the PICO robot is described on the following wiki page: [[2]]

Laser range finder

Two tests can be executed to determine the range, angle and accuracy of the laser range finder. First, the output values of the range finder can be saved in a file and compared to manually measured values. The second option is to program the robot to drive backward slowly while facing a wall. The program should stop the robot as soon as it no longer registers the wall, which gives the maximum range. The same can be done while driving forward to determine the minimum range. To determine the angle, the robot can be rotated.

Encoders

The values supplied by the encoders are automatically converted to distance in the x- and y-direction and a rotation a in radians. These can be compared to measured values in order to determine the accuracy.

Drive train

The maximum acceleration of the robot can be determined by finding the shortest time in which the maximum velocity of the robot can be reached in a smooth manner. The maximum translational velocity of the robot is set to 0.5 m/s and the maximum rotational velocity to 1.2 rad/s.

Results

Escape room challenge

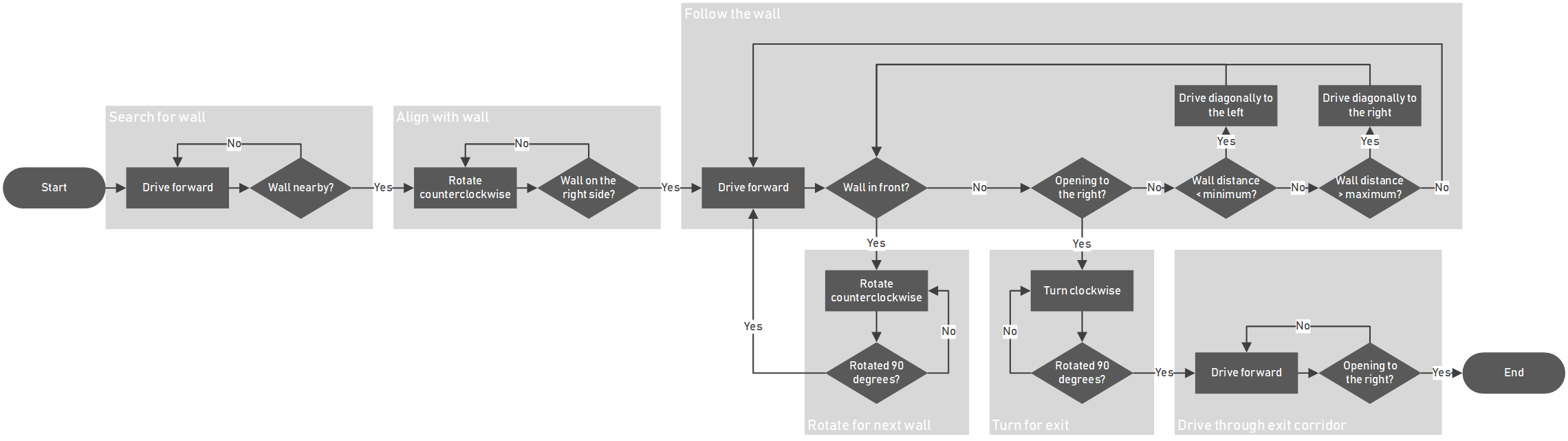

State chart

The state chart below depicts the wall following program that the robot is to execute during the escape room challenge. In a nutshell: the robot drives forward until a wall is detected, lines up with said wall to the right, and starts following it by forcing itself to stay between a minimum and a maximum distance to the wall. When something is detected in front, it is assumed that the next wall to follow is found, and thus the robot should rotate 90 degrees counterclockwise so it can start following the next wall. When a gap is detected to the right of the robot, it is assumed that the exit corridor has been found, and thus the robot should turn into the exit. Then the robot keeps following the right wall in the corridor until, once again, a gap is detected to the right of the robot. At this point, the robot should have crossed the finish line.
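The transitions described above can be written down as a small transition function. The sketch below is only an interpretation of the text: the state names, the `Observation` fields and the function `nextState` are hypothetical and do not have to match the states in the actual state chart.

```cpp
// Hypothetical state names for the wall follower.
enum class State {
    DriveForward,    // drive until a wall is detected
    AlignWithWall,   // line up with the wall on the right
    FollowWall,      // stay between the minimum and maximum wall distance
    TurnLeft,        // rotate 90 degrees counterclockwise
    TurnIntoExit,    // turn right into the exit corridor
    FollowCorridor,  // follow the right wall of the corridor
    Finished
};

// Hypothetical summary of what the robot currently observes.
struct Observation {
    bool wallInFront;
    bool gapOnRight;
    bool manoeuvreDone;  // the current alignment or turn has completed
};

State nextState(State s, const Observation& o) {
    switch (s) {
        case State::DriveForward:
            return o.wallInFront ? State::AlignWithWall : s;
        case State::AlignWithWall:
            return o.manoeuvreDone ? State::FollowWall : s;
        case State::FollowWall:
            if (o.gapOnRight) return State::TurnIntoExit;
            if (o.wallInFront) return State::TurnLeft;
            return s;
        case State::TurnLeft:
            return o.manoeuvreDone ? State::FollowWall : s;
        case State::TurnIntoExit:
            return o.manoeuvreDone ? State::FollowCorridor : s;
        case State::FollowCorridor:
            return o.gapOnRight ? State::Finished : s;
        case State::Finished:
            return s;
    }
    return s;
}
```

Keeping the transitions in one function makes it easy to print the old state, the new state and the reason for the change, as required for both competitions.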

Reflection

Due to a lack of time and more resources being put into the final challenge, the code for the escape room challenge had to be simplified. The original plan was to have the robot scan the environment, identify the exit, and when identified, drive towards the exit and drive to the finish line. In case the robot could not identify the exit, the robot would start following the wall instead, as a robust backup plan. The testing session before the challenge proved to be too short, and only the wall follower could be tested. Therefore, only the wall follower program was executed during the challenge.

As a precaution against bumping into the walls, we reduced the speed of the robot and increased the distance the robot would keep to the wall by modifying the config file in the software. Although our program did complete the challenge, we were the slowest group as a result of these modifications to the configuration. We felt, however, that these modifications were worth the slowdown, and the result proved the robustness of the simple approach our software took.

Hospital Competition

Approach

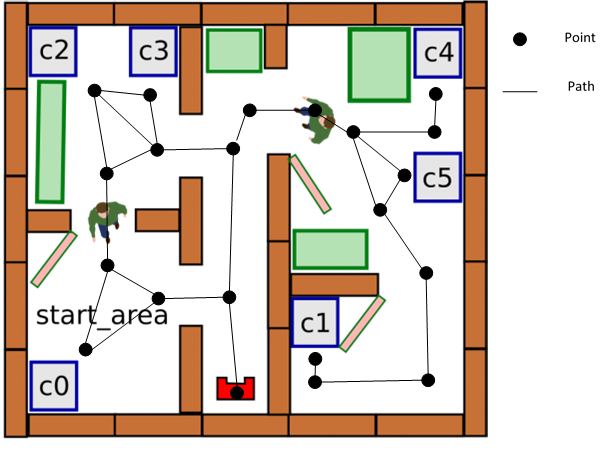

The general approach to the challenge is to create a point map of the map of the hospital. The figure below shows such a point map:

Points are placed at different locations on the map. These locations are: at cabinets, on junctions, in front of doorways and in rooms. In the placement of these points, it is important that each point can be approached from a different point in a straight line. The goal of these points is that the robot can navigate from one side of the hospital to the other by driving from point to point. The points that the robot can drive to in a straight line from a given point are its neighbouring points.

The placement of each point is defined by the distance and direction to its neighbouring points and by its surrounding spatial features. When the robot is on a point (A) and wants to drive to a different point (B), the robot can use the distance and direction from A to B to drive to where B approximately is. Then, using the spatial features surrounding point B, the robot can more accurately determine its location relative to B and drive to B. For the path between points, it can be defined whether this path goes through a doorway or hallway, or whether it goes through a room. This can help in deciding how the robot trajectory should be controlled while driving from point to point.

If the robot needs to drive from a start point to an end point which is not neighbouring, the software will create a route to that point. This route is a list of points which the robot needs to visit in order to get to the end point. To make sure the route is as efficient as possible, an algorithm is used which calculates the shortest route. The algorithm that is used is called "Dijkstra's algorithm". A similar algorithm is also used in car navigation systems to obtain the shortest route.
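A compact sketch of Dijkstra's algorithm on such a point map is shown below. It assumes that the points are numbered from 0 and that every path between neighbouring points has a known length and can be driven in both directions; the names `Edge`, `Graph`, `addPath` and `shortestRoute` are chosen for this example only.

```cpp
#include <algorithm>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

struct Edge {
    int to;         // index of the neighbouring point
    double length;  // length of the path to that point
};
using Graph = std::vector<std::vector<Edge>>;

// Register a path that can be driven in both directions.
void addPath(Graph& g, int a, int b, double length) {
    g[a].push_back({b, length});
    g[b].push_back({a, length});
}

// Returns the points to visit from start to goal, or an empty list
// when the goal cannot be reached.
std::vector<int> shortestRoute(const Graph& graph, int start, int goal) {
    const double inf = std::numeric_limits<double>::infinity();
    std::vector<double> dist(graph.size(), inf);
    std::vector<int> prev(graph.size(), -1);
    using Item = std::pair<double, int>;  // (distance so far, point)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> queue;

    dist[start] = 0.0;
    queue.push({0.0, start});
    while (!queue.empty()) {
        const Item top = queue.top();
        queue.pop();
        const double d = top.first;
        const int u = top.second;
        if (d > dist[u]) continue;  // outdated queue entry
        if (u == goal) break;       // shortest distance to the goal is final
        for (const Edge& e : graph[u]) {
            const double candidate = d + e.length;
            if (candidate < dist[e.to]) {
                dist[e.to] = candidate;
                prev[e.to] = u;
                queue.push({candidate, e.to});
            }
        }
    }

    std::vector<int> route;
    if (dist[goal] == inf) return route;
    for (int v = goal; v != -1; v = prev[v]) route.push_back(v);
    std::reverse(route.begin(), route.end());
    return route;
}
```

If a path turns out to be blocked during the challenge, one option would be to remove that edge from the graph and call `shortestRoute` again from the current point.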

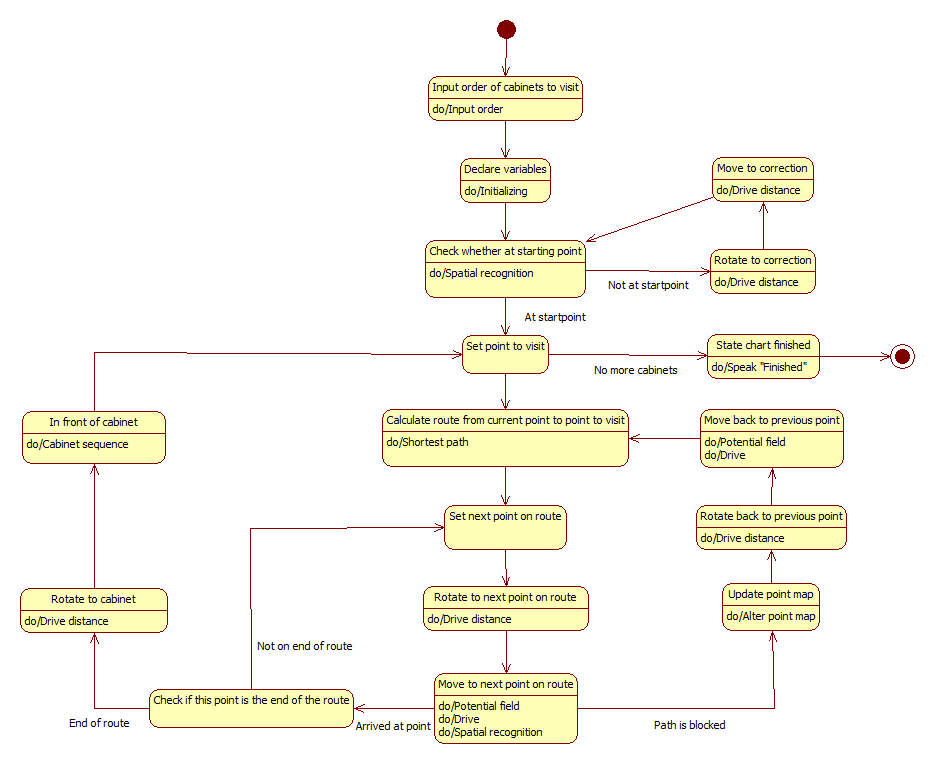

State Machine

The figure below shows the state machine for this challenge. The state chart will be a part of the "World model block" from the system architecture. This diagram will be used as a basis for the software written for the final challenge.

Per state, the functions which need to be performed are stated. These exclude functions, such as tracking the position of the robot on the map, which will always run in a separate thread. The state chart is designed such that all the requirements of the final challenge will be fulfilled.

Wall finding algorithm

To allow PICO to navigate safely, it must know where it is on the world map and what is around it. PICO is equipped with a LIDAR scanner that scans the environment using laser beams. This data is then processed to determine where all walls and objects are. There are many ways to process the data into useful information. A commonly used approach is the split and merge algorithm with the RANSAC algorithm as an extension. These methods are also used in this project. In this design, the following processing steps are performed:

1. Filter the measurement data.

2. Recognize and split global segments (recognizing multiple walls or objects).

3. Apply the split algorithm per segment:

    - 3.1 Determine the end points of the segment.

    - 3.2 Determine the straight line through these end points (ax + by + c = 0).

    - 3.3 For each data point between these end points, determine the perpendicular distance to the line (d = abs(a*x + b*y + c) / sqrt(a^2 + b^2)).

    - 3.4 Compare the largest of these distances with the distance limit value:

        - If it falls below the limit value, there are no more segments (parts) in the global segment.

        - If it falls above the limit value, the segment is split at this point and steps 3.1 to 3.4 are performed again for the parts to the left and right of this point.

4. Combine all segment points found using the RANSAC algorithm.
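The split step can be sketched as a short recursive function. The sketch assumes that the points of one global segment are stored in scan order and writes the line through the end points as a*x + b*y + c = 0; the names `Point`, `distanceToLine` and `split` are hypothetical, and the merging with RANSAC is not shown.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    double x;
    double y;
};

// Perpendicular distance from p to the line through the end points a and b.
double distanceToLine(const Point& p, const Point& a, const Point& b) {
    // Line through a and b written as la*x + lb*y + lc = 0.
    const double la = b.y - a.y;
    const double lb = a.x - b.x;
    const double lc = b.x * a.y - a.x * b.y;
    const double norm = std::sqrt(la * la + lb * lb);
    if (norm == 0.0) {
        return std::hypot(p.x - a.x, p.y - a.y);  // end points coincide
    }
    return std::abs(la * p.x + lb * p.y + lc) / norm;
}

// Collects, in scan order, the indices at which the segment between
// pts[first] and pts[last] has to be split.
void split(const std::vector<Point>& pts, std::size_t first, std::size_t last,
           double limit, std::vector<std::size_t>& breakpoints) {
    if (last <= first + 1) return;  // no points between the end points
    double worst = 0.0;
    std::size_t worstIndex = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        const double d = distanceToLine(pts[i], pts[first], pts[last]);
        if (d > worst) {
            worst = d;
            worstIndex = i;
        }
    }
    if (worst <= limit) return;  // all points are close enough to the line
    split(pts, first, worstIndex, limit, breakpoints);
    breakpoints.push_back(worstIndex);
    split(pts, worstIndex, last, limit, breakpoints);
}
```

For an L-shaped set of points, the only breakpoint returned is the point in the corner.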

Below is a visual representation of the split principle. The original image is used from the EMC course of 2017 group 10 [3]:

To be extended!!!!

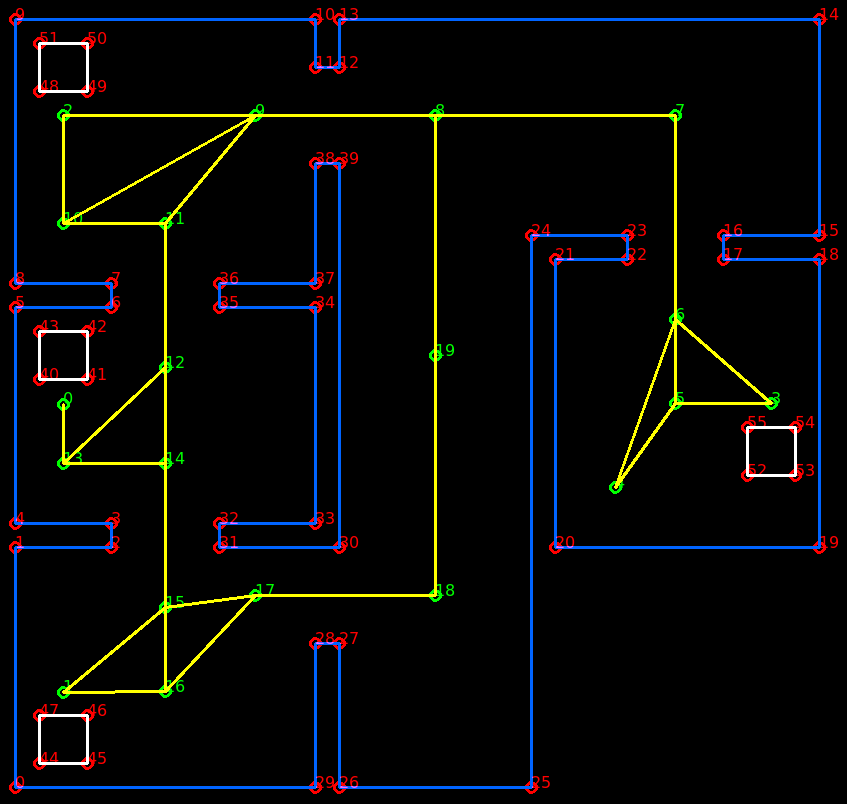

Path planning

The path points are determined partly automatically and partly by hand. The program loads the JSON map file at start-up. The code detects where all the cabinets are and which side of each cabinet is the front. Each cabinet path point is placed exactly in the middle of the virtual area that is specified in front of the cabinet. The remaining path points are entered by hand. A path point has three variables: the x and y coordinates and the direction. The direction only applies when the path point is in front of a cabinet; it specifies the orientation that PICO needs to have in order to face the cabinet. The direction is subtracted from the actual orientation of PICO, and the orientation is corrected afterwards if PICO is not aligned correctly.
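When two orientations are subtracted, the result has to be wrapped so that the robot takes the shortest turn. A minimal sketch is given below, assuming both angles are in radians; the function name `headingError` and the sign convention (desired minus current) are assumptions for illustration.

```cpp
#include <cmath>

// Smallest signed difference (rad) between the desired direction of a
// cabinet path point and the current orientation of the robot.
// The result lies in the interval [-pi, pi].
double headingError(double desired, double current) {
    const double pi = 3.14159265358979323846;
    double error = std::fmod(desired - current, 2.0 * pi);
    if (error > pi) error -= 2.0 * pi;
    if (error < -pi) error += 2.0 * pi;
    return error;
}
```

For example, a desired direction of 3.0 rad and a current orientation of -3.0 rad give an error of about -0.28 rad instead of 6.0 rad.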

| Point | X | Y |
|---|---|---|
| 0 (cabinet 0) | 0.4 | 3.2 |
| 1 (cabinet 1) | 0.4 | 0.8 |
| 2 (cabinet 2) | 0.4 | 5.6 |
| 3 (cabinet 3) | 6.3 | 3.2 |

| Point | X | Y |
|---|---|---|
| 4 (Start point) | 5.0 | 2.5 |
| 5 | 5.5 | 3.2 |
| 6 | 5.5 | 3.9 |
| 7 | 5.5 | 5.6 |
| 8 | 3.5 | 5.6 |
| 9 | 2.0 | 5.6 |
| 10 | 0.4 | 4.7 |
| 11 | 1.25 | 4.7 |
| 12 | 1.25 | 3.5 |
| 13 | 0.4 | 2.7 |
| 14 | 1.25 | 2.7 |
| 15 | 1.25 | 1.5 |
| 16 | 1.25 | 0.8 |
| 17 | 2.0 | 1.6 |
| 18 | 3.5 | 1.6 |
| 19 | 3.5 | 3.6 |

| Path | Length |
|---|---|
| 4->5 | 0.86 |
| 4->6 | 1.49 |
| 5->3 | 0.8 |
| 5->6 | 0.7 |
| 3->6 | 1.06 |
| 6->7 | 1.7 |
| 7->8 | 2.0 |
| 8->9 | 1.5 |
| 9->2 | 1.6 |
| 9->10 | 1.84 |
| 9->11 | 1.17 |
| 2->10 | 0.9 |
| 10->11 | 0.85 |
| 11->12 | 1.2 |
| 12->13 | 1.17 |
| 12->14 | 0.8 |
| 13->0 | 0.5 |
| 13->14 | 0.85 |
| 14->15 | 1.2 |
| 15->1 | 1.1 |
| 15->16 | 0.7 |
| 15->17 | 0.76 |
| 1->16 | 0.85 |
| 16->17 | 1.1 |
| 17->18 | 1.5 |
| 18->19 | 2.0 |
| 19->8 | 2.0 |
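The listed lengths appear to be the straight-line distances between the two points of a path, rounded to two decimals. For example, path 4->5 runs from (5.0, 2.5) to (5.5, 3.2), which gives a length of about 0.86. A minimal sketch of this calculation, with the hypothetical names `PathPoint` and `pathLength`:

```cpp
#include <cmath>

struct PathPoint {
    double x;
    double y;
};

// Straight-line distance between two path points.
double pathLength(const PathPoint& a, const PathPoint& b) {
    return std::hypot(b.x - a.x, b.y - a.y);
}
```

Calculating the lengths from the coordinates avoids having to maintain the length table by hand when a point is moved.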

Meeting notes

Week 2 - 1 May

Notes taken by Mike.

Every following meeting requires concrete goals in order for the process to make sense. An agenda is welcome, though it does not need to be as strict as the ones used in DBL projects. The main goal of this meeting is to get to know the expectations of the design document that needs to be handed in next Monday, and which should be presented next Wednesday. These and other milestones, as well as intermediate goals, are to be described in a week-based planning in this Wiki.

Design document

The focus of this document lies on the process of making the robot software succeed in the escape room competition and the final competition. It requires a functional decomposition of both competitions. The design document should be written out in both the Wiki and a PDF document that is to be handed in on Monday the 6th of May. This document is a mere snapshot of the actual design document, which grows and improves over time. That's what this Wiki is for. The rest of this section contains brainstormed ideas for each section of the design document.

Requirements:

- The entire software runs on one executable on the robot;

- The robot is to autonomously drive itself out of the escape room;

- The robot may not 'bump' into walls, where 'bumping' is judged by the tutors during the competition;

- The robot has five minutes to get out of the escape room;

- The robot may not stand still for more than 30 seconds.

Functions:

- Detecting walls;

- Moving;

- Processing the odometry data;

- Following walls;

- Detecting doorways (holes in the wall).

Components:

- The drivetrain;

- The laser rangefinder.

Specifications:

- Dimensions of the footprint of the robot, which is the widest part of the robot;

- Maximum speed: 0.5 m/s translation and 1.2 rad/s rotation.

Interfaces:

- Gitlab connection for pulling the latest software build;

- Ethernet connection to hook the robot up to a notebook to perform the above.

Measurement plan

The first two time slots next Tuesday have been reserved for us in order to get to know the robot. Everyone who is able to attend is expected to attend. In order for the time to be used efficiently, code is to be written to perform the tests that follow from a measurement plan. This plan involves testing the limits of the laser rangefinder, such as the maximum distance that the laser can detect, as well as the field of view and the noise level of the data.

Software design

The overall thinking process of the robot software needs to be determined in a software design. This involves a state chart diagram that depicts the global functioning of the robot during the escape room competition. This can be tested with code using the simulator of the robot with a map that resembles the escape room layout.

Tasks

Collin and Mike: write the design document and make it available to the group members by Saturday.

Kevin and Job: write a test plan with test code for the experiment session next Tuesday.

Yves: draft an initial global software design and make a test map of the escape room for the simulation software.

Week 3 - 8 May

Notes taken by Collin.

These are the notes from the group meeting on 8th of May.

Strategy

A change was made to the strategy for the Escape Room Challenge. The new strategy consists of two parts, a plan A and a plan B. First the robot will perform plan A. If this plan fails, plan B will be performed. Plan A is to make the robot perform a laser scan, then rotate the robot 180 degrees and perform another scan. This gives the robot information about the entire room. From this information the software will be able to locate the doorway and escape the room. This plan may not work, since the doorway may be too far away for the laser to detect, or the software may not be able to detect the doorway. Therefore, plan B exists. This plan is to drive the robot to a wall of the room. The wall will then be followed, keeping it on the right-hand side, until the robot crosses the finish line.

Presentation

A Powerpoint presentation was prepared by Kevin for the lecture that afternoon. A few remarks on the presentation were:

- Add the 'Concept system architecture', modified to have a larger font.

- Add 'Communicating the state of the software' as a function

- Keep the assignment explanation and explanation of the robot hardware short

Concept system architecture

The concept system architecture was made by Yves. The English in the diagram should be checked, since some sentences are unclear. A few changes were made to the spelling. The content of the diagram remained mostly the same.

Measurement results

The first test with the robot did not go smoothly. Connecting to the robot proved more difficult than expected. When the test program was run, it was discovered that the laser sensor data contained a lot of noise. A test situation resembling the escape room was built, and all the data from the robot was recorded and saved. From this data, an algorithm can be designed to condition the sensor data. The data can also be used for the Spatial Feature Recognition.

Tasks

The tasks to be finished before the next meeting:

- Spatial Feature Recognition and Monitoring: Mike, Yves

- Laser Range Finder data conditioning: Collin

- Control: Job

- Detailed software design for Escape Room Challenge: Kevin (Deadline: 9/5/2019)

The next robot reservations are:

- Tuesday 14/5/2019, from 10:45

- Thursday 16/5/2019, from 14:30

Next meeting: Wednesday 15/5/2019, 13:30 in Atlas 5.213

Week 4 - 15 May

Notes taken by Collin.

These are the notes from the group meeting on 15th of May.

Escape Room Challenge

The test of the software for the Escape Room Challenge was successful. Small changes have been made to the code regarding the current state of the software being shown in the terminal. Also, the distance between the robot and the wall has been increased and the travel velocity of the robot has been decreased. A state machine has been made and put on the Wiki which describes the software.

Wall detection

A Split and Merge algorithm has been developed in Matlab. It can detect walls and corners. The algorithm needs to be further tested and developed. Furthermore, an algorithm needs to be developed to use the information from the split and merge to find the position of the robot on the map. The current plan is to use a Kalman filter. This needs to be further developed.

Drive Control

The function to smoothly accelerate and decelerate the robot is not yet finished. Once the function has been shown to work in the simulation, it can be tested on the robot. This will be either Thursday 16th of May or the Tuesday after.

In order to succeed in the final challenge, better agreements and stricter deadlines need to be made and followed by the group.

Tasks

- Yves: Filter double points from the 'Split and merge' algorithm.

- Mike: Develop the architecture for the C++ project.

- Job: Code a function for the S-curve acceleration in the x and y directions and for the rotation about the z axis.

- Kevin: Develop a Kalman filter to compare the data from 'Split and merge' with a map.

- Collin: Develop a finite state machine for the final challenge

The next robot reservations are:

- Thursday 16/5/2019, from 14:30

Next meeting: Wednesday 22/5/2019, 13:30 in Atlas 5.213

Week 5 - 22 May

Notes taken by Kevin.

These are the notes from the group meeting on 22nd of May.

Finite State Machine and Path planning

Collin created a finite state machine (FSM) of the hospital challenge. The FSM gives a fairly complete picture of the hospital challenge, but a different ‘main’ FSM needs to be made in which the actions of the robot itself are shown in a clear manner. Collin also came up with a path planning method. In this method, important points are selected on the given map and connected with each other where possible. The robot will then be able to drive from point to point in a straight line. If some time is left, we could improve the robot by letting it drive between points in a smoother manner.

Wall detection

Yves has continued working on the split and merge algorithm. He has tried to port his Matlab implementation to C++, but this has proved to be more difficult than anticipated. He will continue working on this.

Drive Control

Job has continued working on the drive control, which is now almost finished. Some tests need to be done on the real robot to see if it functions properly in real life as well. Furthermore, the velocity in the drive control needs to be limited, as it is still unbounded at this time.

Architecture

Mike has worked on creating the overall architecture of the robot. All the other contributions can then be placed in the correct position in this architecture.

Spatial Awareness

Kevin has worked on the Kalman filter and spatial recognition. His idea is to first combine the state prediction and odometry information within a Kalman filter to give an estimated position and orientation. This estimate can then be combined with laser range data to correct for any remaining errors in the estimate.

Last Robot reservation

During this reservation we were finally able to quickly set up the laptop and the robot for the first time without any issues. During this test we collected a lot of data by putting the robot in different positions in a constructed room, and saving all the laser range data in a rosbag file. Most of the data is static, with the robot standing still, but we also got some data in which the robot drives forward and backwards a little bit.

Next robot reservations

The next reservation is on Thursday 23 May. During this reservation we will have two hours to test the drive control made by Job. Particular attention will be given to static friction and the maximum possible acceleration of the robot. Furthermore, since we want to use multiple threads in our program, a stress test will be made to see how much load the robot can handle in real life. The reservation for next week will be made for Wednesday 29 May, in the 3rd and 4th hour.

Tasks

- Job: finish drive control and integrate it in the architecture. Also create a main FSM with Collin.

- Kevin: Work on an implementation of the Kalman filter and spatial recognition software.

- Collin: Continue working on path planning implementation. Also create a main FSM with Job.

- Yves: Continue working on the C++ implementation of split and merge. Also look into the speech functions of the robot.

- Mike: Work on collision detection and on creating multiple threads.

- Everyone: Read old wikis of other groups to get some inspiration.

Next meeting: Wednesday 29/5/2019, 13:30 in Atlas 5.213

Week 6 - 29 May

Notes taken by Job

These are the notes from the group meeting of the 29th of May.

Progress

There has been little integration of functions and everyone has kept working on their separate tasks. It is vital to write the state machine in code so the different functions can be implemented and tasks that still need to be completed can be found.

Mike has worked on the potential field implementation and has achieved a working state for this function. This function needs to be expanded with a correction for the orientation of the robot.
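
The general idea of a potential field can be sketched as follows. This is a generic textbook form, not Mike's code: the goal attracts the robot with a unit vector, and every obstacle point within an influence radius pushes it away. All coordinates are assumed to be in the robot frame, and `Vec2`, `potentialFieldVector`, `influenceRadius` and `repulsiveGain` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 {
    double x;
    double y;
};

// Combine an attractive unit vector toward the goal with repulsive vectors
// from obstacle points closer than influenceRadius. Inputs are expressed in
// the robot frame, so the robot itself sits at the origin.
Vec2 potentialFieldVector(const Vec2& goal, const std::vector<Vec2>& obstacles,
                          double influenceRadius, double repulsiveGain) {
    assert(influenceRadius > 0.0 && repulsiveGain >= 0.0);
    Vec2 result{0.0, 0.0};

    const double goalDist = std::hypot(goal.x, goal.y);
    if (goalDist > 1e-9) {
        result.x = goal.x / goalDist;
        result.y = goal.y / goalDist;
    }

    for (const Vec2& o : obstacles) {
        const double d = std::hypot(o.x, o.y);
        if (d < 1e-9 || d >= influenceRadius) {
            continue;  // skip degenerate points and points out of range
        }
        // Repulsion grows quickly as the obstacle gets closer.
        const double magnitude =
            repulsiveGain * (1.0 / d - 1.0 / influenceRadius) / (d * d);
        result.x -= magnitude * o.x / d;
        result.y -= magnitude * o.y / d;
    }
    return result;
}
```

The resulting vector only gives a driving direction. It still has to be converted into a bounded velocity command, and it does not correct the orientation of the robot.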

Yves has worked on integrating the RANSAC function into the spatial recognition. This needs to be finished so it can be used for the Kalman filter Kevin has worked on.

Kevin needs the work from Yves to finish the Kalman filter and needs to add a rotation correction.

Collin has worked on the shortest path algorithm which is ready to be used.
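
The notes do not state which shortest path algorithm is used. As an illustration only, the sketch below applies Dijkstra's algorithm to a graph of navpoints, where each edge is a straight line connection with a cost such as its length. The names `Edge`, `Graph` and `shortestPath` are assumptions for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

struct Edge {
    int to;       // index of the neighbouring navpoint
    double cost;  // non-negative cost of the connection, e.g. its length
};
using Graph = std::vector<std::vector<Edge>>;

// Dijkstra's algorithm. Returns the navpoint indices from start to goal,
// or an empty vector when the goal cannot be reached.
std::vector<int> shortestPath(const Graph& graph, int start, int goal) {
    const int n = static_cast<int>(graph.size());
    assert(start >= 0 && start < n && goal >= 0 && goal < n);

    const double inf = std::numeric_limits<double>::infinity();
    std::vector<double> dist(n, inf);
    std::vector<int> prev(n, -1);
    using Item = std::pair<double, int>;  // (distance so far, navpoint)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> open;

    dist[start] = 0.0;
    open.push(Item(0.0, start));
    while (!open.empty()) {
        const Item top = open.top();
        open.pop();
        const double d = top.first;
        const int u = top.second;
        if (d > dist[u]) continue;  // outdated queue entry
        if (u == goal) break;
        for (const Edge& e : graph[u]) {
            const double nd = d + e.cost;
            if (nd < dist[e.to]) {
                dist[e.to] = nd;
                prev[e.to] = u;
                open.push(Item(nd, e.to));
            }
        }
    }

    std::vector<int> path;
    if (dist[goal] == inf) return path;
    for (int v = goal; v != -1; v = prev[v]) path.push_back(v);
    std::reverse(path.begin(), path.end());
    return path;
}
```

For an undirected navpoint graph, every connection has to be added in both directions.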

Job has improved the DriveControl functions after last week's test session and discussed the integration with the potential fields with Mike.

Planning

Since time is running short, hard deadlines have been set for the different tasks:

- State machine (+ speech function integration) - 02-06-2019, 22.00 - Collin + Job (+ Mike)

- Kalman filter - 04-06-2019, 22.00 - Kevin + Yves

- Presentation - 04-06-2019, 22.00 - Kevin

- Driving - 05-06-2019, 22.00 - Mike + Job

- Cabinet procedure - 02-06-2019, 22.00 - Collin + Job

- Map + Nav-points - 05-06-2019, 22.00 - Yves

- Visualisation OpenCV - Extra task, TBD

Test on Wednesday 14.30 - 15.25

- Test spatial recognition

Test on Thursday 13.30 - 15.25

- Driving + Map

- Cabinet procedure

- Total sequence

Week 7 - 6 June

Notes taken by Mike

Progress

Kevin has been working on the presentation and on the perception functions that fit the map to the detected features. Simple tests, in which the functions were manually fed made-up but realistic points as well as random points that should be ignored, suggest that this works. For some reason the code does not yet execute repeatedly, and it still requires some fine-tuning. The function takes the estimated robot position (odometry) and the LRF data as inputs and outputs the corrected position. It needs to be extended to take the previous navpoint as the origin of movement. Kevin expects this to be ready for testing by tomorrow's session.

The presentation is almost done. The architecture slide needs to be simplified to prevent an overwhelming amount of information being visible on screen. The same goes for the state machine.

Collin has integrated the state machine as much as possible. More public functions need to be made in the WorldModel object that allow the state machine to check whether the program can progress to a following state or not.

Job and Mike were working on the DriveControl object. The current challenge is driving from point to point. This involves correcting the angle when the robot deviates from its straight trajectory as a result of the potential vector pushing it away. This is going to be tested in the testing session after this meeting. It also requires the relative position of the end point of the current line trajectory, which has to come from Kevin's position estimation function.
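
A possible form of such an angle correction is sketched below. It assumes that the robot should keep facing the end point of the current line and uses a simple proportional rule. The function names and the gain are hypothetical and this is not the code in the DriveControl object.

```cpp
#include <cassert>
#include <cmath>

// Wrap an angle to the interval [-pi, pi).
double wrapAngle(double angle) {
    const double pi = std::acos(-1.0);
    angle = std::fmod(angle + pi, 2.0 * pi);
    if (angle < 0.0) {
        angle += 2.0 * pi;
    }
    return angle - pi;
}

// Proportional angular velocity that turns the robot toward the target.
// The robot pose (x, y, theta) and the target are in the same frame.
double headingCorrection(double robotX, double robotY, double robotTheta,
                         double targetX, double targetY, double gain) {
    assert(gain >= 0.0);
    const double desired = std::atan2(targetY - robotY, targetX - robotX);
    return gain * wrapAngle(desired - robotTheta);
}
```

Wrapping the angle error makes the robot turn in the shortest direction. The output still has to be limited to the maximum angular velocity.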

Yves (absent) has been working on implementing the published world map and supplying it with navpoints.

Planning

Thursday 6-6: appointment to work together in Gemini South OGO 0 from 9:00 to 10:45, then in Gemini South 4.23 until 12:30. The testing session is from 13:30 until 15:25. The plan is to integrate everything before this session, so that as much as possible can be tested.

Tuesday 11-6: appointment to work together from 8:45 until the testing session from 9:45 until 10:40. The entire code should be done by then. After the testing session, everything should be fine-tuned in the simulation environment.