PRE2017 3 Groep18

This page documents the group's planning, progress logs and meeting notes. Our product-specific page can be found at Clairvoyance.

The coaching questions can be found on the Coaching questions page.

Week 1

Meet the group members

Louis: Studied Software Science for a year starting in 2015, then switched to Psychology and Technology (PT) and am now in the second year of PT. I have some experience with Java, Python, CSS, JavaScript, Arduino and Stata. I am French but have mostly studied in English, so I can also help with documentation and writing. Decent at presenting.

Clara: Master's student in Mechanical Engineering. Can code in C++, Python, MATLAB and a tiny bit of C. Worked on image recognition before. Know a bit about neural networks, Caffe, datasets and JSON.

Joëlle: Currently in the second year of Biomedical Engineering. I don't have much programming experience, only with Python, but I'm very interested and would like to learn more.

Rens: Last year of Software Science. Experience with low-level, high-level and web-scale code. Also did some data science and machine learning for my job.

Bas: Second-year Mechanical Engineering student. Lots of experience with practical group work. As a mechanical engineer, I know a lot about mechanics, dynamics and control systems, and some fluid mechanics. I worked with Arduino once, but I'm not very good at it. I can work with MATLAB pretty well.

Nosa Dielingen: Second-year Electrical Engineering student. I can program in C, Arduino and a couple of other languages. Furthermore, with Google at hand, building a circuit is almost second nature.

What has been done in week 1?

In week 1, every team member had to come up with five ideas, both to feed a brainstorming session and to let us combine elements of each other's ideas. From these, five main ideas were chosen as potential final ideas.

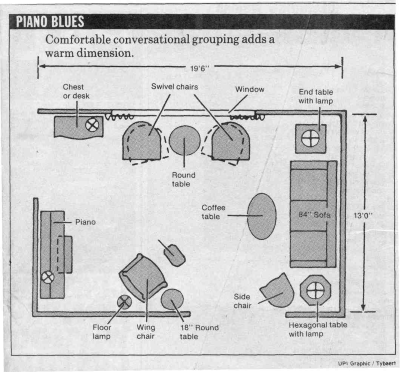

One of the chosen ideas was a furniture-moving robot that arranges furniture optimally for different circumstances. The robot would also restore a room's arrangement if something had been moved, so that users don't have to do it themselves.

Another idea was a wellness application that helps users improve their lives and reach personal goals. It would adapt itself to the user's needs, schedule and location.

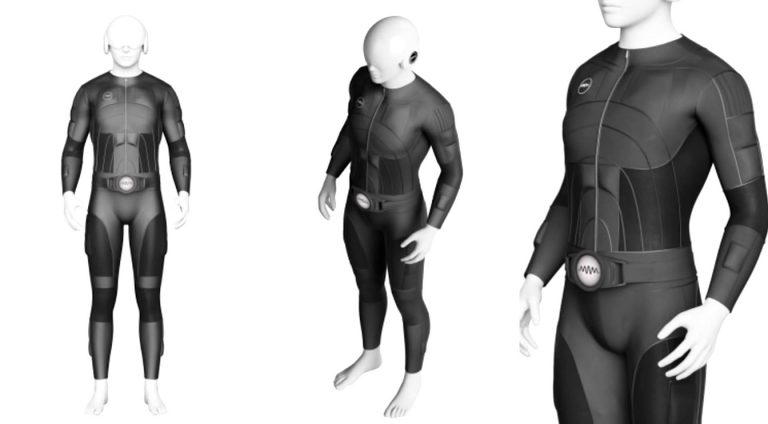

A third idea was a VR bodysuit, optionally worn together with a VR headset. The user would receive haptic feedback depending on what happens in the virtual environment they are viewing. The technology would mainly be used in the entertainment industry, for video games and movies, but also for military training or martial arts training.

A fourth idea was a cooking or tasting robot that could detect and display the chemical composition of an inserted sample, recommend similar recipes and share a small sample across different machines.

The final idea was an augmented reality mirror that alters the reflected image, in the same way that augmented reality overlays the real world. This technology has many possible uses, for example showing alternative presentations of reality or previewing selected options.

Week 2

A rubric was made to rate the five ideas, so that we could choose the best idea objectively. Two subjects were shortlisted: a smart mirror that alters the user's appearance, and a house system that puts all the furniture back in its place and can find lost objects. At the end of week 2, one of the two subjects will be chosen.
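A rubric like this usually comes down to a weighted score per idea. The sketch below assumes hypothetical criteria, weights and 1-5 scores for two placeholder ideas; it does not reproduce the group's actual rubric.

```python
from typing import Dict, List


def rubric_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted average of per-criterion scores (hypothetical 1-5 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    return sum(scores[c] * weights[c] for c in weights) / total_weight


def rank_ideas(ideas: Dict[str, Dict[str, int]], weights: Dict[str, float]) -> List[str]:
    """Return idea names sorted from highest to lowest weighted score."""
    return sorted(ideas, key=lambda name: rubric_score(ideas[name], weights), reverse=True)


if __name__ == "__main__":
    # Hypothetical criteria, weights and scores, for illustration only.
    weights = {"feasibility": 2.0, "user_value": 3.0, "novelty": 1.0}
    ideas = {
        "idea A": {"feasibility": 4, "user_value": 4, "novelty": 3},
        "idea B": {"feasibility": 2, "user_value": 4, "novelty": 4},
    }
    for name in rank_ideas(ideas, weights):
        print(name, round(rubric_score(ideas[name], weights), 2))
```

Making the weights explicit keeps the choice traceable: if the group disagrees with the outcome, the discussion moves to the weights rather than to the final ranking.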

Papers

In week 2 we collected papers to build up knowledge about our subjects. The papers are listed below.

This section of papers is for the house system:

Feng Shui Geomancy and the Environment in premodern Taiwan

QuadCopter Dynamics

[1] [2] [3] [4] [5] [6] [7] [8] [9] [10] [11] [12] [13]

This section of papers is for the smart mirror:

Dynamic Hair Manipulation in Images and Videos

This paper shows that high-resolution hair manipulation in still images is feasible, but that it is much harder in video because of depth issues and extravagant hairstyles. Simple video manipulation, however, is feasible.

This book on 3D modelling explains how multiple images taken from different viewpoints can be used to reconstruct a scene and recover its depth. It is useful for both the mirror idea and the home robot (furniture moving). It contains many algorithms and extensive information about different types of image/video reconstruction and alteration (600+ pages), so it is more of a reference for later, if we decide to build the product. It also contains an extensive list of potentially relevant sources.
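In the simplest multi-view case, two rectified cameras a known distance apart, depth follows from how far a point shifts horizontally between the two images (its disparity d): Z = f·B/d. The sketch below is a standard two-view illustration, not an algorithm taken from the book; it assumes rectified images, a shared focal length in pixels and a baseline in metres.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         x_left: float, x_right: float) -> float:
    """Depth Z = f * B / d of a point seen in two rectified images.

    focal_px:   focal length in pixels (assumed equal for both cameras)
    baseline_m: distance between the two camera centres, in metres
    x_left, x_right: horizontal pixel coordinate of the same point in each image
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("a point in front of both cameras must have positive disparity")
    return focal_px * baseline_m / disparity
```

For example, with a 700-pixel focal length, a 10 cm baseline and a 35-pixel disparity, the point lies about 2 m away. Because Z is inversely proportional to d, depth estimates for distant points (small disparities) become much less precise.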

Automating Image Morphing using Structural Similarity on a Halfway Domain

This article explains how corresponding points in two distinct images can be used to create a morph from one image to the other. This is interesting for making someone look different (or better) by taking a standard image and morphing it slightly towards another image. The method currently only works on still images, not on video, but combined with other research it could be useful.
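A generic point-based morph has three steps: interpolate the corresponding landmarks to an intermediate shape, warp both images to that shape, and cross-dissolve their pixel values. The sketch below shows only the first and last steps on plain Python lists; the warp (commonly a piecewise-affine warp over a triangulation of the landmarks) is omitted. It is the textbook feature-based morph, not the paper's halfway-domain method, which is about finding the correspondences automatically.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Image = List[List[float]]  # grayscale, row-major, values 0-255


def interpolate_landmarks(src: List[Point], dst: List[Point], alpha: float) -> List[Point]:
    """Intermediate shape: each landmark moves a fraction alpha from src towards dst."""
    if len(src) != len(dst):
        raise ValueError("landmark lists must correspond one-to-one")
    return [((1 - alpha) * xs + alpha * xd, (1 - alpha) * ys + alpha * yd)
            for (xs, ys), (xd, yd) in zip(src, dst)]


def cross_dissolve(img_a: Image, img_b: Image, alpha: float) -> Image:
    """Blend two images that have already been warped to the same intermediate shape."""
    return [[(1 - alpha) * a + alpha * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(img_a, img_b)]
```

A small alpha such as 0.2 keeps the result close to the user's own face, which matches the idea of morphing "slightly" towards a reference image.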

I like those glasses on you, but not in the mirror: Fluency, preference, and virtual mirrors

A very relevant paper about the effects of mirrors in a shop. It details the idea that people prefer their own mirror image to their actual (non-mirrored) image, while acquaintances prefer how you look face to face over your reflection. This is because someone familiar with you has mostly seen you face to face rather than in a mirror, so your reflection looks unfamiliar and therefore unusual to them. This is based on an earlier study [14].

Furthermore, it touches on how virtual mirrors already exist in different forms, though not in the way we want to use them. For example, some makeup and glasses companies let you upload a picture of yourself and return an image showing how their product would look on you. The paper also states that "neither virtual mirror technology itself nor its potential as a basic research tool has received much attention in consumer research." It focuses on processing fluency (how easily a visual cue can be processed by someone), explains the variables that affect fluency, and concludes that higher fluency increases aesthetic pleasure for the perceiver. It then explains that facilitated processing creates positive affect and activates the smiling muscles [15]. Finally, it describes an experiment testing whether people prefer seeing familiar and unfamiliar people in the mirror or face to face. With familiar people, the results favour the face-to-face view; with strangers, the two views are indistinguishable.

Large Pose 3D Face Reconstruction from a Single Image via Direct Volumetric CNN Regression

A state-of-the-art technique for reconstructing 3D facial meshes from 2D pictures (and videos) using volumetric CNN regression. It could let our product avoid expensive depth cameras. Once we have these meshes, we can transform them according to some beauty standard (for example, making faces thinner or eyes bigger).
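As a toy illustration of "making faces thinner", the sketch below scales a mesh's left-right coordinates towards the face's midline. It assumes the reconstructed mesh has already been converted to a list of (x, y, z) vertices with x as the left-right axis; both the representation and the uniform scaling are simplifying assumptions, not part of the paper.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]  # (x, y, z); x assumed to be the left-right axis


def thin_face(vertices: List[Vertex], factor: float = 0.95) -> List[Vertex]:
    """Narrow a face mesh by scaling x-coordinates towards the mean x (the midline).

    A factor below 1 makes the face thinner; y and z are left unchanged.
    """
    if not vertices:
        return []
    cx = sum(v[0] for v in vertices) / len(vertices)
    return [(cx + factor * (x - cx), y, z) for x, y, z in vertices]
```

Uniform scaling also narrows the nose and eyes; a more realistic edit would weight the scaling per region, for instance applying it only to vertices on the cheeks and jaw.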

Photorealistic Facial Texture Inference Using Deep Neural Networks

Generates facial textures from 2D images. These textures can be used for "photoshopping" purposes: we could even out skin tone, remove blemishes, or change skin and eye colour (which raises ethical issues). After this automatic retouching, the textures can be projected back onto the reconstructed mesh.

[16] [17] [18] [19] [20] [21] [22] [23] [24] [25]

Snapchat patents

In 2015 Snapchat bought the company Looksery, whose technology is closely related to what we want to build. The relevant patents are:

METHOD FOR REAL-TIME VIDEO PROCESSING INVOLVING CHANGING FEATURES OF AN OBJECT IN THE VIDEO

METHOD FOR REAL TIME VIDEO PROCESSING FOR CHANGING PROPORTIONS OF AN OBJECT IN THE VIDEO

The method first detects faces using the Viola-Jones algorithm. It then fits a face shape model (an Active Shape Model) to each face and maps that model onto Candide-3, a popular low-computation face mask/mesh, which is then used to actually transform the faces.
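Viola-Jones is fast because it evaluates simple rectangle (Haar-like) features on an integral image, where the sum over any rectangle costs four lookups regardless of its size. The sketch below shows only that feature computation in plain Python; the AdaBoost feature selection and the detection cascade are omitted, and in practice a pretrained detector such as OpenCV's `CascadeClassifier` would be used instead.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded first row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

A large positive value means the left half is brighter than the right half, the kind of light/dark contrast (for example around the eyes and nose bridge) that the trained cascade combines into a face detector.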

Week 3

During the tutor session of week 2, we were told to focus on a single idea and to be sure what our final product would be. In week 3 we decided to focus on the "augmented reality mirror" idea and started looking at the many uses this device could have. We figured the device could be very useful for tattoo parlours, hairdressers, the beauty sector and plastic surgery previews.

We then looked at the stakeholders for each application in order to map the various parties involved in our product. Different applications came with different private-sector stakeholders, e.g. hairdressers, tattoo parlours and surgeons. However, all of them are affected by the users and the government.

Week 4

A new main page was created for the product, which can be found at Clairvoyance.

Rens

Waiting for Clara to finish the RPCs (requirements, preferences and constraints) in order to work out the technical (research) side of the app. Found a website for generating a 3D model from a single picture. Updated the product wiki with research and will write a demo/prototype/Wizard-of-Oz description. Extended the requirements. Will work on the prototype after the tutor meeting.

Nosa

Will update the wiki with existing apps/products and write the introduction.

Joëlle

Did research on ethics & laws

Coming week: bringing the ethics & laws section of the wiki up to date.

Bas

In week 4 (and probably in the upcoming weeks as well), Bas was assigned to create 3D models of different hairstyles suited to different kinds of heads. These hairstyles will be modelled as static objects that can be placed over a person's head once a 3D model of that head has been made. Much of the system's appeal depends on whether it can show a representative haircut, so a considerable amount of effort must go into creating these digital haircuts.

Defining what a representative haircut is already poses several difficulties. First, each head has a different shape and would ideally require a different model. Second, a haircut is a very dynamic object that can take many shapes, even within a single day, so it is unclear which of those shapes is representative. Finally, it is an open question whether users want to see the haircut right after the visit to the barber, or how the hair will look several weeks later; in terms of being representative, the latter might be more useful.

As said, haircuts are very complex and dynamic objects, so modelling them won't be easy. After looking into different ways to model haircuts, and 3D objects in general, the group chose Maya as a suitable program. Although the group, and Bas in particular, has more experience with technically oriented software such as Siemens NX or AutoCAD, these programs offer too little freedom for organic, human-like modelling. Maya is rather complex software, so to get a good sense of how to model a haircut, Bas spent a lot of time this week learning to model basic objects in Maya. The skill level required to model a 3D haircut has not been reached yet, but a good basis has been laid.
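Placing a static hair model over a user's head model can be approximated with a similarity transform. The sketch below assumes, hypothetically, that each hairstyle is authored around a reference head and that all meshes are exported as lists of (x, y, z) vertices; it maps the reference head's bounding box centre onto the user's head and scales uniformly by the ratio of head widths.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def bbox_center_and_width(vertices: List[Vertex]) -> Tuple[Vertex, float]:
    """Centre of the axis-aligned bounding box and its extent along x."""
    xs, ys, zs = zip(*vertices)
    center = ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2, (min(zs) + max(zs)) / 2)
    return center, max(xs) - min(xs)


def fit_hair(hair: List[Vertex], reference_head: List[Vertex],
             target_head: List[Vertex]) -> List[Vertex]:
    """Move the hair from the reference head onto the target head: v' = c_t + s * (v - c_r)."""
    ref_c, ref_w = bbox_center_and_width(reference_head)
    tgt_c, tgt_w = bbox_center_and_width(target_head)
    s = tgt_w / ref_w  # uniform scale factor from the ratio of head widths
    return [tuple(tc + s * (p - rc) for p, rc, tc in zip(v, ref_c, tgt_c)) for v in hair]
```

A uniform scale ignores the head-shape differences described above; handling those would need a non-rigid fit, which is one reason a separate model per head shape may still be needed.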

Louis

Created the user survey.

Clara

Created RPCs

Questions for tutor

Evaluate the prototype description.

Structure of the app's wiki page:

- Introduction

- Existing work

- Research

- Existing apps/products

- User Survey

- Requirements, Preferences and Constraints

- Prototype Description (Wizard of Oz)

- Laws and Ethics

Week 5

During week 5, each group member went more in depth into the work they started in week 4.

Bas

Clara

Joëlle

Louis

Nosa

Having looked into the legal issues of keeping a database with people's information, we found the following.

The rules depend on the kind of information. The law is strict about personal information, but for a picture taken in a public place, the law does not prescribe how the information has to be stored.

Furthermore, we, the creators of the app, are responsible for how the information is stored.

The information we ask from our users and store is: the user's first and last name; a username, so that a profile can be created; a password, to provide a measure of security; and the pictures the user takes with the hairstyle they have chosen.

The law states that the user has to give permission for the provided information to be stored. This can be done with a disclaimer stating that, by using the app, a profile is created and its data are stored in our database.

The main concern was the pictures, but because they are taken in a public place, namely the hair salon, they can be stored and used by us. Furthermore, if the hairdresser decides to also take and store pictures, this is also allowed.
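The stored fields listed above could be captured in a profile record like the one sketched here. It is a minimal sketch under our own assumptions: the field names are hypothetical, the password is never stored in plain text but as a salted PBKDF2 hash from Python's standard `hashlib`, and no profile is created unless the user has accepted the storage disclaimer.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass, field
from typing import List

ITERATIONS = 200_000  # assumed PBKDF2 work factor; tune for the target hardware


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a password hash with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


@dataclass
class UserProfile:
    first_name: str
    last_name: str
    username: str
    salt: bytes
    password_hash: bytes
    consented_to_storage: bool
    picture_paths: List[str] = field(default_factory=list)  # pictures with chosen hairstyles


def create_profile(first_name: str, last_name: str, username: str,
                   password: str, accepted_disclaimer: bool) -> UserProfile:
    """Create a profile only after the user has accepted the storage disclaimer."""
    if not accepted_disclaimer:
        raise PermissionError("the storage disclaimer must be accepted first")
    salt = os.urandom(16)  # random per-user salt
    return UserProfile(first_name, last_name, username, salt,
                       hash_password(password, salt), consented_to_storage=True)


def check_password(profile: UserProfile, password: str) -> bool:
    """Compare hashes in constant time to avoid timing leaks."""
    return hmac.compare_digest(profile.password_hash, hash_password(password, profile.salt))
```

Storing only the salt and hash means that a leaked database does not directly reveal users' passwords, which supports the "measure of security" the password is meant to provide.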

Rens

Started app development. Created a basic camera UI and implemented a 3D model viewer. TODO: model textures, UI for changing the hairstyle.

Week 6

Bas

Clara

Joëlle

Louis

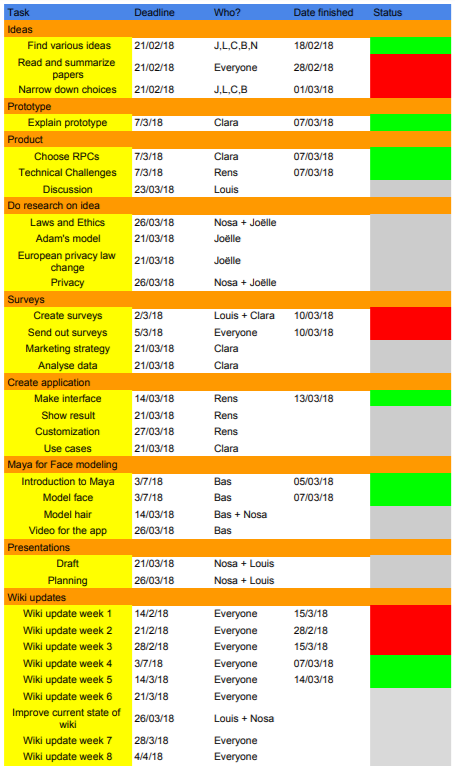

Finished the planning for the course, shown in the image above. The orange rows are topics and the yellow rows underneath are the tasks required for the topic above. For each task, the planning lists a deadline, who is working on the task and when it was completed, and it uses red and green colours to indicate whether the task was completed on time. The planning will be updated as time goes on.
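The on-time colouring can be expressed as a small rule over each task row. The sketch below assumes hypothetical field names, and that green marks a task finished on or before its deadline while red marks a late one; open tasks get no colour.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    topic: str                        # the orange topic row this task belongs to
    name: str
    owner: str
    deadline: date
    completed: Optional[date] = None  # None while the task is still open


def status_colour(task: Task) -> Optional[str]:
    """'green' if finished on or before the deadline, 'red' if finished late, None if open."""
    if task.completed is None:
        return None
    return "green" if task.completed <= task.deadline else "red"
```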