PRE2024 3 Group4

Max van Aken

Bram van der Pas

Jarno Peters

Simon B. Wessel

Javier Basterreche Blasco

Matei Razvan Manaila

Start of project

Problem statement and objective

Many swimmers struggle with improper technique. It is almost impossible to keep track of your own movements while underwater, so beginners usually need many hours of close monitoring and guidance. The quality and availability of trainers is also declining in many amateur clubs, and swimmers have to pay for access to the facilities themselves. Together, this makes picking up the sport rather costly.

To solve this problem, we set out to create a swimsuit with sensors that automates the job of a coach. It could be owned by either individuals or swimming clubs, who could use the technology as a cheaper and more readily available alternative to a professional coach, without paying by the hour.

The suit would gather representative data on the swimmer's motions, store them, and replay them as an animation within an app to help the swimmer visualise their movements. It would also provide an analysis of their technique, with intuitive feedback.

The users

The user groups are similar but differ considerably in requirements and reasons for purchase, so we analyze them separately. If the differences turn out to be large enough, we may consider developing different products for different user groups in the future.

General user needs and requirements

Since the start of the Covid pandemic, we have seen the introduction of a new concept: online coaching. One of the main factors behind its appeal is flexibility, since swimmers can schedule their sessions at any time of the day[1]. However, these online coaching platforms aren’t cheap. For example, the biggest online swimming community, "MySwimPro", charges a minimum of $297 per month for online coaching[2]. This is not an isolated case: other companies, such as "Train Daly" and "SwimSmooth", charge $200 and €167 respectively for just one hour of video feedback[3][4].

Creating a motion capture suit aimed at these swimmers could provide them with a more affordable alternative while retaining the flexibility of online coaching. Some considerations/requirements for a system aimed at individual amateur swimmers would be:

-The system would have to be affordable, at least relative to online coaching.

-Feedback given by the system should be easily understandable, and preferably instant.

-The system should be accurate, but should not require precise calibration by the user.

-Optical systems are impractical, as they would require too much set-up and would not be feasible for individuals.

-The suit should not feel uncomfortable or inhibit motion.

-The system should be self-contained and require nothing more than a wireless connection to a phone: wires or laptops would not be practical near pools.

Individual users, amateurs

For an amateur, basic movement tracking and feedback would suffice. It would not make sense for them to buy a personal unit yet: for only a small number of sessions, buying would cost more than renting a suit from a local club.

Individual users, professionals

This person may want a more detailed analysis, with specific insights that we would need to research, and potentially a more accurate version of the device that is capable of higher fidelity reproductions.

When starting out, this person may use their local club's suit. As they become more advanced, the relatively low cost of the system could make buying a personal unit more sensible than continuing to rent one from the club. Owning a unit may also be desirable for the flexibility it offers.

Amateur swim teams

Another approach could be to create suits meant to be bought by amateur swim teams. Coaches could then use these suits to provide more accurate feedback, or to lower their workload: a coach could monitor the entire team at the same time and provide assistance whenever needed, without having to split their focus between individual swimmers. They might only need to intervene when a swimmer is repeatedly struggling, or could act as a second check on the advice the app gives, to ensure the system is not missing anything important. This would free coaches to give swimmers more individual attention, which was mentioned as an issue in our interviews.

Professional swim teams

As the difference between winning gold and missing out on a medal can be only a few hundredths of a second, elite swimmers tend to focus on even the tiniest details when preparing for a race. Because of this, motion capture technology is already used by competitive swimmers[5], and research into making it more accurate is ongoing. The considerations for a system aimed at this audience would be:

-There is huge demand for better motion capture systems;

-The budget for such a suit would be significantly larger than for amateur swimmers;

-There will be little to no feedback given by the suit itself. At this elite level the correct technique cannot be pre-determined, so feedback has to be highly adaptive.

-The suit would have to be very accurate. The project would therefore focus mainly on finding more accurate sensors or creating a more accurate tracking algorithm, rather than on product development. We therefore doubt whether this would be feasible within the scope of this course.

User interviews

Interview 1:

Interviewer: How often do you swim per week?

Response: 2-3 times per week

I: For how long have you been swimming?

R: Almost my whole life

I: Are you also part of a swimming team?

R: Yes, I’m part of DBD

I: Do you also compete in swim meets?

R: Yes, I do

I: Have you ever had the feeling that you did not get enough technique feedback, or at least that you could use some more?

R: Yes, I feel like we are doing a lot of technique exercises, but we don’t really get personal tips

I: Have you ever made use of online services like online coaches or online videos to improve?

R: No, not really

I: Is there a specific reason for that?

R: No, I’ve just never done it.

I: We are working on a motion capture system that would be able to give technique tips. It would work by attaching sensors to certain joints and based on the relative position of those joints, be able to give feedback, like that you are pointing your arm too much to the side, or not bending it enough, etc. If such a system were to exist, would you be interested in it or do you feel like it wouldn’t have much added benefit for you?

R: That sounds pretty cool, I would definitely be interested.

I: If such a system were to exist, what would be your preferred method of feedback? For example, a video that you can watch back later that explains what to improve, an earpiece that gives automatic feedback while you are swimming, something like pressure on your joint indicating you need to move, or maybe you have your own idea.

R: I would say a combination of the video, and some feedback while you are actually swimming. I would then probably say the pressure on joints idea.

I: Why did you pick those?

R: The video is nice because you can see your own swimming afterwards, but I don’t think that alone will be enough, so some direct feedback to make you feel what you are doing would also be important.

I: Are there other things you would like the system to be able to track, like stroke frequency as an example?

R: If possible, something like stroke frequency or breathing frequency would be nice.

I: Which of the following things would you consider the most important in such a motion capture system: low price, comfort, accuracy, ease of use, or durability?

R: Firstly low price and comfort, then accuracy and durability. Ease of use I don’t find important.

I: With a lot of motion capture systems, a laptop is needed to interpret the data and give feedback. Would having to bring a laptop to the pool be a deal-breaker for you?

R: It wouldn’t be ideal, but not necessarily a deal-breaker. However having some laptop cover to prevent it from accidental splashing would be nice.

I: Lastly, if the system were to actually exist, and be helpful in improving technique, how much money would you be willing to pay for it?

R: It would depend on how well it would work, but probably around 50 to 100 euros, although I know this won’t be a very realistic price for such a product.

I: Thanks for the interview, do you have any additional thoughts regarding the product?

R: No, nothing right now.

Summary: This interview was done with a very experienced swimmer who is already part of a swimming team (so not necessarily our target audience). However, the swimmer did indicate a feeling of not getting adequate feedback at his team, and expressed interest in trying out our product if it became available. For feedback, the swimmer prefers a video to watch after he is done swimming, paired with some form of real-time feedback, preferably haptic. The swimmer finds comfort and low price the most important, while ease of use is not an issue. The swimmer would be willing to pay between 50 and 100 euros, but acknowledges that this might not be realistic.

Interview 2:

I: How often do you swim per week?

R: I swim 3 times per week

I: For how long have you been swimming?

R: Around 5 years I believe

I: Are you also part of a swimming team?

R: Yes

I: Do you also compete in swim meets?

R: Yes

I: How often?

R: Around 5 times a year

I: Have you ever had the feeling that the coaching you received at the swim team wasn’t enough?

R: I guess so

I: How come?

R: I feel like the trainers might have given up on giving feedback

I: Do you feel like there is too little focus on technique in general, or that there are not enough personal tips?

R: Coaches will usually make these general remarks like ‘focus on your technique’ so in that sense there is attention given to it, but too little personal tips.

I: Have you ever made use of online services like coaching or videos to improve your technique?

R: I have, but that was quite a while ago

I: What did you use specifically?

R: Online videos

I: Did you feel like they helped you?

R: Not really

I: Do you have any idea why?

R: No

I: We are working on a motion capture system that would be able to give technique tips. It would work by attaching sensors to certain joints and based on the relative position of those joints, be able to give feedback, like that you are pointing your arm too much to the side, or not bending it enough, etc. If such a system were to exist, would you be interested in it or do you feel like it wouldn’t have much added benefit for you?

R: That would sound fun to use

I: If such a system were to exist, what would be your preferred method of feedback? For example, a video to watch back after you are done swimming, an earpiece giving you real-time feedback, some pressure based feedback so you feel when your technique is off?

R: A video or an earpiece

I: Why do you pick those?

R: A video because I think it's nice to be able to watch your stroke back and I think this can help a lot. The earpiece, if it can give real-time feedback, would be really nice because you don’t usually get that. The pressure idea sounds like it would be irritating.

I: For the video, what would be the maximal time it could take for the video to be ready after you are done swimming? Does it have to be finished instantly, can it take a little while?

R: It's not a problem if it takes a couple of minutes

I: Are there more factors, like stroke rate, that the system would need to track?

R: No, not really.

I: Which of the following aspects would you consider the most important in such a motion capture system: low price, comfort, accuracy, ease of use, or durability?

R: Comfort, it's not of much use if you have to change the way you swim.

I: With a lot of motion capture systems it's necessary to bring a laptop to the pool for the analysis, would this be a deal-breaker for you?

R: Not a deal-breaker, but it's not ideal.

I: Lastly, if this system would work and actually be effective in improving your technique, what would be the maximum price you would pay for it?

R: Around 150 euros.

Summary: This interview was done with a relatively experienced swimmer who is already part of a swimming team. The swimmer indicates that there is a lack of personalized feedback at his club. For feedback, the swimmer would like an animation after he is done swimming, and an earpiece for real-time feedback. The swimmer considers comfort the most important factor for such a system, as having to swim differently because of the sensors would defeat its purpose. The swimmer would be willing to pay 150 euros for the system.

User survey

The following Survey has been created: https://docs.google.com/forms/d/e/1FAIpQLSfRmrGkf-iRCDJQGpLv0SILlQEPYvAIomGOxuePE_pGPYbJzA/viewform?usp=sharing

The SwimDepth account ended up sharing our survey in their story.

In total, 25 people responded to the survey. Almost all respondents are very motivated swimmers: 92% swim at least 3 times a week, and 56% even swim more than 5 times a week. The respondents are also quite experienced, with 56% having swum for more than 5 years and the other 44% for between 1 and 5 years. 92% of respondents have made use of in-person coaching (which probably just means that they are part of a swimming club), while only 8% have made use of personalised online coaching. The main challenges encountered in coaching were inadequate feedback (13 votes), high cost (11 votes), and difficulty finding good trainers (9 votes). The most important factors in the respondents' decision to buy the suit were comfort (19 votes), accuracy of feedback (18 votes), and price (17 votes). Durability and ease of use were considered less important, with only 11 and 10 votes respectively. As for the preferred method of feedback, 52% picked visual feedback, while audio and haptic feedback received 32% and 12% of the votes respectively. The respondents were split on the maximum price for the product: 52% would pay between $100 and $500, while 40% would only pay below $100. 64% of respondents indicated that having to bring a laptop to the pool would not be a dealbreaker for them. There does not seem to be a relation between how often a respondent swims and the price they are willing to pay for the system.

Requirements

These preliminary user requirements were written down based on the interviews, before the user survey had been answered. First, both interviewed swimmers found comfort to be among the most important factors for such a system. To ensure the system is comfortable, the sensors must be as light as possible and must be attached to the swimmer's joints in a way that does not restrict his/her natural swimming motion. In the interviews, ease of use was not considered a very important factor, so the calibration time may be relatively long. For feedback, both swimmers wanted an animation that they can watch back after swimming (ready within about 2 minutes), as well as some form of real-time feedback, but they did not agree on the exact form of the real-time feedback. The swimmers were willing to pay around 100 euros for such a system, but we have to keep in mind that both were already part of a swim team, so they are not necessarily our target audience. For our target audience (individual amateur swimmers, mainly those who already pay for online coaching services) the acceptable price point will most likely be higher.

| Attribute | Specification | Tolerance | Note |
|---|---|---|---|
| Weight | 1.241-2.5 kg | - | Subject to change, correlated with suit size |
| Dimensions | L*W = 0.0167168 m^2 | ±5% | Actual numbers may change slightly |
| Shape | Cd < 1.2 | +10% | The lower the better |
| Calibration | < 5 minutes | - | According to the interviews, ease of use was not a very important factor |
| Accessibility | Real-time feedback; computation time < 0.6 s | - | Visualisation of data is desirable |
| Waterproof | IPx8 | - | 'x' can be any number |
| Corrosion proof | - | +10% max. allowed amount | - |
| Robustness | Withstand a fall of E ≥ 9.81 J | - | - |
| Colour | HSL: (x, <50, <30), matte finish | HSL: (x, ±10, ±10) | - |
| Price | Max. 200 euro (tentative) | ±25 euro | Based upon user research |

References for the specifications:

Weight: The weight of the suit can be calculated with the following values:

ESP32 board: 8-12 g

MPU-6050 sensor: 3-5 g

Battery (Li-Po 3.7 V, 1000-2000 mAh): 20-50 g

Wiring: 6 g

Waterproofing: 20-40 g

Suit, 1 mm (ultra-thin, rash-guard style): 0.5-1 kg

The weight of a single sensor unit could vary from 57 g to 113 g, with the largest variation coming from the battery size. A compromise between weight and battery life needs to be found. The weight of the full suit, including all sensor units, also depends on the suit itself, whose weight depends on its size. In total, the weight would vary between 1.241 and 2.5 kg depending on suit and battery size.
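The weight estimate above can be reproduced with a few lines of Python. This is a sketch that assumes 13 sensor units (the count used in the price table) and simply adds the lower and upper bounds of each component range; the dictionary keys and function names are illustrative.

```python
# Component mass ranges per sensor unit, in grams (values from the list above).
UNIT_COMPONENTS_G = {
    "ESP32 board": (8, 12),
    "MPU-6050 sensor": (3, 5),
    "Li-Po battery, 1000-2000 mAh": (20, 50),
    "wiring": (6, 6),
    "waterproofing": (20, 40),
}
SUIT_G = (500, 1000)  # 1 mm rash-guard style suit, depends on size
N_UNITS = 13          # assumption: same unit count as in the price table


def unit_mass_range(components):
    """Return (min, max) mass in grams of one sensor unit."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return low, high


def suit_mass_range(n_units, components, suit):
    """Return (min, max) mass in kg of the suit with n_units sensor units."""
    unit_lo, unit_hi = unit_mass_range(components)
    return (n_units * unit_lo + suit[0]) / 1000, (n_units * unit_hi + suit[1]) / 1000


print(unit_mass_range(UNIT_COMPONENTS_G))                   # (57, 113)
print(suit_mass_range(N_UNITS, UNIT_COMPONENTS_G, SUIT_G))  # (1.241, 2.469)
```

The upper bound of 2.469 kg is what the text rounds to 2.5 kg.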

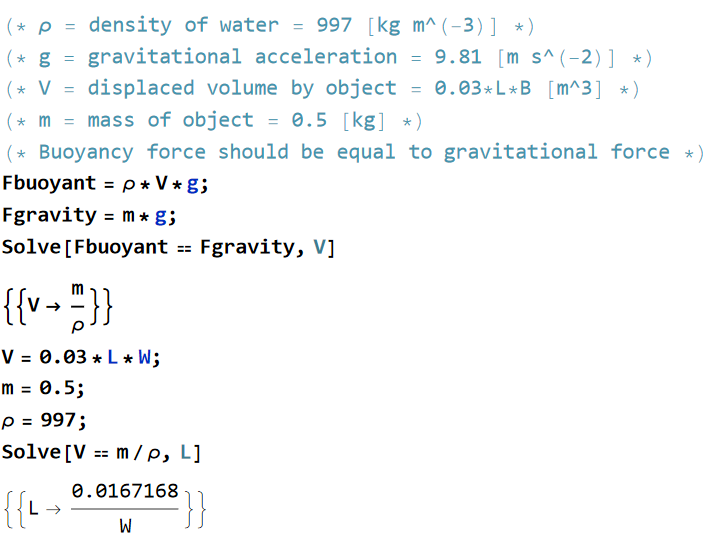

Dimensions: If the 'reference' sensor is placed on the back (the steadiest part of the body while swimming), it must not extend too far from the body, because we do not want to interfere much with the flow of water along the swimmer's streamlined body. (We assume the 'Shape' specification is already incorporated here.) The other 2 dimensions may be larger, since they affect the streamline less, but there is a limit to this, which is correlated to the weight. We want the device to float neutrally in the water, so that it is practically weightless during use. For neutral buoyancy, the device must displace a mass of water equal to its own mass. The volume required for a given mass is therefore calculated as shown in figure 1. We conclude that if the mass is 0.5 kg and the height 3 cm, then length*width = 0.0167168 m^2 for the device to float neutrally. As explained in 'Shape', the width (W) should be reduced as much as possible.
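The neutral-buoyancy condition behind figure 1 fits in a one-line function: ρ·L·W·H = m, so L·W = m/(ρ·H). The sketch below assumes a water density of 997 kg/m^3. That value is our assumption, but it reproduces the 0.0167168 m^2 quoted for a 0.5 kg, 3 cm high device.

```python
RHO_WATER = 997.0  # kg/m^3, assumed density of the pool water


def neutral_footprint(mass_kg, height_m, rho=RHO_WATER):
    """Return the L*W area (m^2) that makes a box of given mass and height neutrally buoyant."""
    # Displaced water mass equals device mass: rho * (L * W * H) = m
    return mass_kg / (rho * height_m)


area = neutral_footprint(0.5, 0.03)
print(f"{area:.7f} m^2")  # 0.0167168 m^2
```

Dividing the result by a chosen length then gives the matching width, which the 'Shape' requirement asks to keep as small as possible.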

Shape: The shape of the device is crucial if we want to minimise its effect on the swimmer's streamline. The general formula for drag force is $F_d = \frac{1}{2}\rho v^2 C_d A$, with $\rho$ the density of water, $v$ the flow velocity relative to the object, $C_d$ the drag coefficient and $A$ the frontal surface area. The frontal surface area can be reduced by minimising W in the equation under 'Dimensions'. The only remaining variable the design has influence over is the drag coefficient. For a 'general' swimmer it is roughly 1.1-1.3[1]. We want our product to be at least as streamlined as a swimmer, so we have chosen $C_d$ to be less than 1.2. The device then increases the total drag roughly in proportion to the frontal area it adds to the swimmer.
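A small sketch of the drag formula, and of why a device with a drag coefficient similar to the swimmer's adds drag in proportion to its frontal area: at the same speed, ρ and v^2 cancel in the ratio of the two drag forces. The speed (1 m/s), device width (5 cm) and swimmer frontal area (0.1 m^2) are hypothetical values chosen only for illustration.

```python
RHO_WATER = 997.0  # kg/m^3, assumed


def drag_force(v, cd, area, rho=RHO_WATER):
    """Drag force F_d = 1/2 * rho * v^2 * C_d * A, in newtons."""
    return 0.5 * rho * v ** 2 * cd * area


def relative_drag_increase(cd_dev, area_dev, cd_swim, area_swim):
    """Extra drag from the device as a fraction of the swimmer's own drag.

    At equal speed rho and v^2 cancel, leaving (C_d,dev * A_dev) / (C_d,swim * A_swim).
    """
    return (cd_dev * area_dev) / (cd_swim * area_swim)


# Hypothetical numbers for illustration only.
v = 1.0                    # m/s swimming speed
a_device = 0.05 * 0.03     # m^2: assumed 5 cm width times the 3 cm height
a_swimmer = 0.1            # m^2: assumed frontal area of a swimmer
print(f"{drag_force(v, 1.2, a_device):.3f} N")                             # 0.897 N
print(f"{relative_drag_increase(1.2, a_device, 1.2, a_swimmer):.1%}")      # 1.5%
```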

Calibration: The user should be able to complete the calibration alone, within 1 minute of putting on the sensors.

Accessibility: The calibration button should be easy to reach, but hard to hit unintentionally. A spot slightly above the wrist, on the top side, would fit this description. The feedback must be real-time, so that the user can optimise the stroke while swimming. Visual feedback is hard to achieve here: you cannot watch your wrist all the time, so you may miss some feedback. Spoken feedback through a set of earplugs seems ideal. The computation time, from the end of a stroke until the feedback is given, must be at most one 'general stroke time'[2]. For Olympic swimmers, analysing this video[3] gives roughly 100 strokes per minute, so the computation may take at most 60/100 = 0.6 seconds. The feedback should also be stored so that it can be visualised afterwards.
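The latency requirement can be expressed as a budget of one stroke period, i.e. 60 divided by the stroke rate. The sketch below checks a single analysis call against that budget. `analyse_stroke` is a hypothetical placeholder for the real analysis. On the actual system the timing would have to be measured on whatever device runs the analysis, not in desktop Python.

```python
import time


def stroke_period_s(strokes_per_minute):
    """Latency budget: one stroke period, 60 / stroke rate, in seconds."""
    return 60.0 / strokes_per_minute


def analyse_stroke(samples):
    """Hypothetical stand-in for the real per-stroke analysis (here just a mean)."""
    return sum(samples) / len(samples)


def check_latency(samples, strokes_per_minute=100):
    """Time one analysis call and compare it with the stroke-period budget."""
    budget = stroke_period_s(strokes_per_minute)
    start = time.perf_counter()
    analyse_stroke(samples)
    elapsed = time.perf_counter() - start
    return elapsed <= budget, elapsed, budget


ok, elapsed, budget = check_latency([0.1, 0.4, 0.2])
print(f"budget {budget:.2f} s, took {elapsed * 1000:.3f} ms, within budget: {ok}")
```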

Waterproof: The first numeral of the IP code is not important here, as it indicates protection against solid foreign objects. The second numeral refers to water. We decided that the main device needs an '8', meaning it is protected against continuous immersion in water[4]. The IP rating, however, does not take corrosion effects into account.

There are 2 parts that need to be waterproof: the units containing the electronics, and the wires connecting them.

For the limb-mounted units, the seam of the shell can be sealed with 2 O-rings along with plastic screws or rivets, since these units never need to be opened. The unit containing the battery, however, needs to be opened frequently. We therefore decided it would be more secure to design the product so that the battery can be removed, charged, placed back in the compartment, and the entire unit sealed before reaching the pool. This maximises the lifespan of the product and minimises the risk of water damage. Products with waterproof USB covers tend to fail at those covers over time, because they rely on friction-based sealing, which is not suitable for our type of application. We then decided to equip the battery compartment with a clip-on top for ease of use, while keeping the same seal design, so there is no compromise on reliability.

All power and data transfer to and from the base unit will be done through wires, so the user never needs to open any compartment other than the one containing the battery (for example, to change batteries in individual sensor units). This also makes it easier for the user to check that the critical parts are sufficiently dry before opening the compartment, so with proper use there is no risk of water damage.

As the suit is supposed to mold closely to the body, we decided it would be best to sew the wires between units onto the suit along a snaking path. This gives the wires enough extra length to allow flexibility; otherwise mobility would be severely limited. The wires could attach to the units via commercially available waterproof connectors.

To further increase the reliability of our product, we need to reduce the complexity of its physical components as much as possible. One way we achieve this is by moving towards a completely digital user interface. With our current design, the only part of the suit that requires interaction anywhere near a pool is the power button. Waterproof buttons are widely available commercially, rated to multiple standards, so the button does not compromise the overall integrity of the product. Once the suit is turned on, the user does not need to interact with any physical part of it again until they want to turn it off, as all other controls are handled through the associated app.

Corrosion Proof: Swimming pool water is subject to upper (and lower) limits on chlorine-based substances per volume: free chlorine ≥ 0.5 mg/L, combined chlorine ≤ 0.6 mg/L, chloride ≤ 1000 mg/L, and chlorate ≤ 30 mg/L[5]. The device must therefore be protected against these substances. PVC is not affected by chlorine or salt, and has a lifetime of roughly 15 years[6].

Robustness: We do not want the device to break when accidentally dropped. We therefore calculate the energy a 0.5 kg device has on impact after falling from 2 meters, which we take as the maximum height it could potentially fall from: E = mass*height*gravitational acceleration = 0.5*2*9.81 = 9.81 J.

Colour: We do not want the device to unintentionally reflect the pool lights into the eyes of the user. We could not find an academic source on how reflective a given colour is. However, darker objects reflect less light, and a matte finish reduces this 'reflectance' even further. Using the HSL (hue, saturation, lightness)[7] model, we can give intuitive limits for the saturation and lightness.
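The colour limits from the specification table (saturation below 50%, lightness below 30%) can be checked automatically for a candidate colour. This sketch uses Python's standard `colorsys` module. Note that `colorsys.rgb_to_hls` returns hue, lightness, saturation in that order, not H, S, L. The candidate colours are arbitrary examples, and the matte finish cannot be checked this way.

```python
import colorsys


def colour_within_spec(rgb, max_saturation=50.0, max_lightness=30.0):
    """Check an 8-bit RGB colour against the saturation and lightness limits (in %)."""
    r, g, b = (channel / 255.0 for channel in rgb)
    # colorsys uses H, L, S ordering.
    _hue, lightness, saturation = colorsys.rgb_to_hls(r, g, b)
    return saturation * 100 < max_saturation and lightness * 100 < max_lightness


candidates = [("dark navy", (20, 30, 50)), ("dark red", (100, 0, 0)), ("bright yellow", (255, 220, 0))]
for name, rgb in candidates:
    print(name, colour_within_spec(rgb))
# dark navy True
# dark red False       (dark, but fully saturated)
# bright yellow False
```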

Price: The material cost can be calculated from the price of all components combined.

| Component | Amount | Price per piece | Total |
|---|---|---|---|
| ESP | 13 | 0.98€ | 12.09€ |
| MPU | 13 | 0.93€ | 12.09€ |
| Batteries | 13 | 0.93€ | 12.09€ |
| Charging module | 13 | 0.93€ | 12.09€ |
| Wires | - | 0.93€ | 0.93€ |
| Waterproofing | 13 | 0.93€ | 12.09€ |
| Wet suit | 1 | 50€ | 50€ |
| Total | 67 | 55.58€ | 111.38€ |

Calibration

The purpose of calibration is to give the software the actual distances and angles between the various sensors at a given time, so that after the calibration we can track the location of the body parts (sensors). The expensive Xsens suit also requires calibration before use, and offers an 'accurate' and a 'fast' method[8]. For our suit we will focus on a partially adapted version of the 'fast' method. Human body proportions are not exactly the same for everyone, but there are tables with the relative lengths of body parts[9][10]; the proportions in table 1 from this paper can be used. Only the total height of the person then has to be measured, and the other important lengths can be approximated from it. The angles between the sensors also have to be calibrated. This can be done by agreeing on a (convenient) pose in which the angles are known. For example, with the arms straight up and the hands above the shoulders: elbow angle = 180, shoulder angle w.r.t. the xy plane = 0, shoulder angle w.r.t. the xz plane = 0. If the feet are placed right under the hips: knee angle = 180, hip angle w.r.t. the xy plane = 0, hip angle w.r.t. the xz plane = 0, and angle of the shoulders w.r.t. the xy plane = 0. Like the Xsens suit, we might include an option to enter all 11 measurements (2x lower leg, 2x upper leg, 2x lower arm, 2x upper arm, hip width, shoulder width, hip-shoulder height).
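The 'fast' calibration described above could work roughly like this. First estimate the 11 measurements from body height. Then let the user overwrite any of them (the 'accurate' option). Finally, store the per-joint offset between the sensor readings and the known calibration pose. The ratios in the sketch are assumptions: they are approximately the commonly cited Drillis and Contini proportions and should be replaced with the values from the table referenced above. All names are hypothetical.

```python
# Placeholder ratios of segment length to body height (approximately the commonly
# cited Drillis & Contini proportions; replace with the values from the cited table).
SEGMENT_RATIOS = {
    "upper_arm": 0.186,
    "lower_arm": 0.146,
    "upper_leg": 0.245,
    "lower_leg": 0.246,
    "shoulder_width": 0.259,
    "hip_width": 0.191,
    "hip_shoulder_height": 0.818 - 0.530,  # shoulder height minus hip height
}
PAIRED_SEGMENTS = ("upper_arm", "lower_arm", "upper_leg", "lower_leg")


def estimate_lengths(height_m, ratios=SEGMENT_RATIOS):
    """'Fast' calibration: estimate the 11 body measurements (m) from height alone."""
    lengths = {}
    for name, ratio in ratios.items():
        if name in PAIRED_SEGMENTS:
            lengths["left_" + name] = ratio * height_m
            lengths["right_" + name] = ratio * height_m
        else:
            lengths[name] = ratio * height_m
    return lengths


def with_measurements(estimates, measured):
    """'Accurate' option: overwrite estimates with any lengths the user measured."""
    merged = dict(estimates)
    merged.update(measured)
    return merged


def pose_offsets(measured_angles, reference_angles):
    """Per-joint offset between what the sensors read and the known calibration pose."""
    return {joint: measured_angles[joint] - reference_angles[joint] for joint in reference_angles}


lengths = with_measurements(estimate_lengths(1.80), {"left_lower_arm": 0.27})
for name, value in sorted(lengths.items()):
    print(f"{name}: {value:.3f} m")
print(pose_offsets({"elbow": 176.5, "knee": 181.0}, {"elbow": 180.0, "knee": 180.0}))
```

The offsets would then be subtracted from later sensor readings, so that the calibration pose reads exactly as the agreed angles.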

Approach

Preferably, we would like to do this using sensors on the suit, so that all of the technology is on the suit and no external infrastructure is required in the swimming pool. The sensors would be placed on top of or near joints in the body, such as the shoulder, elbow and wrist for the arms, and the hips, knees and ankles for the legs. Distances between joints would be determined using ultrasonic sensors, and orientation would be determined by gyroscopes at each joint location. With this approach, reference sensors would be required at the base of each arm and leg, so that the relative position data from the joints can be converted into more useful absolute data.
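Converting relative joint data into absolute positions amounts to walking along the limb from the reference sensor. The sketch below makes several assumptions. Each segment's orientation has already been reduced to a yaw and pitch in a shared frame (the gyroscope processing is not shown). Segment lengths are known from the distance measurements or the calibration. Roll is ignored, because it does not change where a segment points. The arm values are hypothetical.

```python
import math


def segment_direction(yaw_deg, pitch_deg):
    """Unit vector of a body segment from its yaw (about z) and pitch (above the xy plane)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def chain_positions(base, segments):
    """Absolute joint positions along a limb.

    base: (x, y, z) of the reference sensor, e.g. the shoulder.
    segments: list of (length_m, yaw_deg, pitch_deg), ordered from the base outward.
    """
    positions = [base]
    x, y, z = base
    for length, yaw, pitch in segments:
        dx, dy, dz = segment_direction(yaw, pitch)
        x, y, z = x + length * dx, y + length * dy, z + length * dz
        positions.append((x, y, z))
    return positions


# Hypothetical arm: upper arm pointing straight ahead, forearm pointing straight down.
shoulder = (0.0, 0.0, 0.0)
arm = [(0.33, 0.0, 0.0), (0.26, 0.0, -90.0)]
for joint, pos in zip(("shoulder", "elbow", "wrist"), chain_positions(shoulder, arm)):
    print(joint, tuple(round(c, 3) for c in pos))
```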

If the sensor idea turns out to be impossible to implement during this course, the alternative would be the principle of an optical motion capture suit, where bright white balls are placed on a black suit and their positions are determined using 2 cameras. One camera would view from the side, while the other would view from above. Based on this data, the same feedback can be constructed as with the sensor approach. However, this would require 2 cameras on rails to be installed in the swimming pool, and these cameras would need to follow the swimmer. This would likely make the system more expensive, as the rails would need to be either 25 or 50 meters long, depending on the pool. It would also be less practical for amateur clubs, as the pools they use would need to agree to install such rails.

Milestones and deliverables

Due to the time frame and scope of the course, a full-body suit is likely not feasible. To have something to show at the end of the course, a prototype will be built for one arm. There are also multiple swimming strokes; this project will focus on the front crawl.

The milestones for the construction of the arm suit would be as follows:

- Build the sleeve (for now without any sensors yet). Keep the type of sensor to be used in mind when creating a sleeve.

- Build a functional prototype, either by attaching sensors, or by making a construction with external cameras. The prototype should be able to send position data for each joint to a computer.

- Convert the raw position data to usable coordinates, likely with angles and distances between joints.

- Construct a program that can differentiate between correct and wrong technique. Some technique errors may be identified with hand-written rules, while others might require simple AI techniques. One method would be to collect data on both wrong and correct arm motion, extract simple features from it, such as the minimum and maximum angle of the elbow joint, and train a simple decision tree.

- (bonus) if there is some time left, it may be possible to also write a program for a different way of swimming, like backstroke or the butterfly.
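The third and fourth milestones can be sketched together. Compute the elbow angle from shoulder, elbow and wrist positions, summarise each stroke by its minimum and maximum elbow angle, and learn a split between correct and wrong strokes. For brevity, the sketch trains only a depth-one decision tree (a decision stump). A real implementation would more likely use a library such as scikit-learn's `DecisionTreeClassifier` on far more data. The training data below is made up purely to exercise the code.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle at joint b in degrees, e.g. a=shoulder, b=elbow, c=wrist (3D points)."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(p * q for p, q in zip(v1, v2))
    cos_angle = dot / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))  # clamp rounding error


def stroke_features(elbow_angles):
    """Simple per-stroke features: minimum and maximum elbow angle."""
    return (min(elbow_angles), max(elbow_angles))


def train_stump(X, y):
    """Depth-1 decision tree: the (feature, threshold) split with the fewest errors.

    y holds 1 for correct and 0 for wrong technique. Returns
    (feature index, threshold, label predicted when feature <= threshold).
    """
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for t in ((lo + hi) / 2 for lo, hi in zip(values, values[1:])):
            for left_label in (0, 1):
                errors = sum((left_label if row[j] <= t else 1 - left_label) != label
                             for row, label in zip(X, y))
                if best is None or errors < best[0]:
                    best = (errors, j, t, left_label)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    return best[1:]


def predict(stump, row):
    j, t, left_label = stump
    return left_label if row[j] <= t else 1 - left_label


# Made-up (min, max) elbow angles per stroke, purely to exercise the code.
X = [(95, 175), (100, 178), (105, 172), (150, 179), (160, 176), (155, 180)]
y = [1, 1, 1, 0, 0, 0]
stump = train_stump(X, y)
print(stump)                                                  # (0, 127.5, 1)
print(predict(stump, stroke_features([170, 130, 110, 150, 177])))  # 1
```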

Hardware: camera vs sensors

Camera

A recent literature review on motion capture for 3D animation mentions 5 main techniques [11]: passive optical markers, active optical markers, markerless capture, inertial motion sensors, and surface electromyography. In the context of swimming, either passive optical markers or no markers are the most promising. Passive markers are preferred over active ones because active markers emit their own light and therefore need electricity, which makes passive markers easier to incorporate in a swimsuit. Using no markers at all is also easier than using active markers, as it requires either no special swimsuit at all or only a plain wetsuit. One paper mentions that placing the optical markers can take a long time, and that the markers can affect the subject's movement [12]. Moreover, it can sometimes be a challenge to identify which marker is which, so an elbow marker might be confused with a shoulder marker in certain positions. Another paper, which studied the swimming behavior of horses using optical markers for motion capture, also had problems with noise, caused by markers occasionally being difficult to track [13]. For these reasons, a markerless system is the best of the camera-based options for our idea: it is less invasive and generally yields better and less noisy data. The paper in [12] mentions a neural network approach called OpenPose [14] as a method to identify joints and motion in the camera footage.

At the cutting edge of markerless motion capture, there are companies that implement this technology commercially with low errors. For example, a company called Theia has a 3D camera-based mocap system that can reach errors of less than 1 cm and less than 3 degrees [15].

Conclusion cameras: If a camera system is used, it will definitely be a markerless one. The fact that companies already sell this technology commercially indicates that there is not much for us to add on the hardware side.

Sensors

Almost all current papers on motion capture systems with sensors use inertial sensing technologies. Some systems are also very cheap, such as [16], where the individual sensors cost 10-20 euros each. The exact model used in that paper (MPU-9250) is no longer produced. However, its newer version, the MPU-9255, costs 13.50 euros per piece and is practically the same as its predecessor, according to TinyTronics. In [16], 15 sensors were used, so the sensors would cost 202.50 euros in total. The sensor suit of course contains more electronics, but this would still put the suit in the price range of a few hundred euros. This is affordable for swimming clubs if the suits can be made one-size-fits-all, as a handful of suits per club would then be enough. Another advantage of the sensors is that they are usually quite small and light, so they hardly inhibit the swimmer's motion.

The biggest challenge for sensors is that their positions are obtained by integrating the measured acceleration vectors twice. This means that the starting position with respect to the other sensors needs to be calibrated, and that small errors in acceleration or direction are integrated as well, so the error grows quickly over time. The initial calibration problem has already been tackled: Xsens, a company that sells motion capture suits to researchers, has a well-documented calibration procedure [17]. One property that could be exploited for drift compensation is that swimming is generally a periodic motion. In the front crawl, for example, the arms move forward and backward periodically, with the left and right arm in opposite phase. Since each arm repeatedly moves forward and then backward again, there is a moment of zero velocity at the end of each forward or backward phase, which might be usable as a reset point (this idea was formulated before reviewing the literature on drift, so it is not yet scientifically backed). Xsens has minimised drift in their motion capture suits by using machine learning and artificial intelligence to create an optimal algorithm [18], which according to them almost entirely eliminates drift. They also offer the option to combine the sensor suit with a camera tracking system for situations where no drift at all is acceptable, such as movies. Other research also uses various algorithms to counter drift [19][20][21].
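The reset-point idea can be tried out in a one-dimensional toy simulation: a hand moving back and forth periodically, an accelerometer with a small constant bias, and velocity forced to zero at the turning points. This resembles the zero-velocity updates (ZUPT) used in foot-mounted inertial navigation. The sketch assumes the turning points are known exactly; in practice they would have to be detected from the sensor data. The bias, amplitude and stroke period are hypothetical.

```python
import math


def integrate(accel, dt, zero_velocity_steps=frozenset()):
    """Double-integrate acceleration samples (trapezoidal rule) into positions.

    At any sample index in zero_velocity_steps the velocity estimate is reset to 0,
    which is the 'reset point' idea for periodic strokes.
    """
    v, x = 0.0, 0.0
    positions = [x]
    for i in range(1, len(accel)):
        v_new = v + 0.5 * (accel[i - 1] + accel[i]) * dt
        if i in zero_velocity_steps:
            v_new = 0.0
        x += 0.5 * (v + v_new) * dt
        v = v_new
        positions.append(x)
    return positions


def simulate(bias=0.02, amplitude=0.5, period=1.2, dt=0.01, duration=30.0):
    """Return the final position error (m) without and with zero-velocity resets."""
    omega = 2 * math.pi / period
    n = int(round(duration / dt))
    times = [i * dt for i in range(n + 1)]
    # True 1D hand motion x(t) = A(1 - cos wt): at rest at t = 0, T/2, T, ...
    true_x = [amplitude * (1 - math.cos(omega * t)) for t in times]
    measured = [amplitude * omega ** 2 * math.cos(omega * t) + bias for t in times]
    half = int(round(period / 2 / dt))
    resets = {i for i in range(1, n + 1) if i % half == 0}
    plain = integrate(measured, dt)
    reset = integrate(measured, dt, resets)
    return abs(plain[-1] - true_x[-1]), abs(reset[-1] - true_x[-1])


no_reset_err, reset_err = simulate()
print(f"error without resets: {no_reset_err:.2f} m, with resets: {reset_err:.2f} m")
```

With these numbers, the error without resets grows to several meters over 30 seconds, while with resets it stays at a small fraction of that. The position error still grows, only much more slowly, because resetting the velocity does not undo the position error already accumulated.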

Another, swimming-specific, minor challenge is that the sensor suit has to be waterproof enough to withstand the wet conditions of a swimming pool. The Movella DOT sensor, for example, is rated IP68, meaning it is sufficiently waterproof for underwater use [22].

Conclusion for sensors: sensor suits are a feasible option for a swimming mocap suit, and all major challenges (such as drift) already have solutions, to the point where there is not much for us to add as a group.

Conclusion

For both the sensor-based and the camera-based mocap system there is not much for us to do on the hardware side: for both methods, there are companies with low-error systems that could feed coordinate data into our software. For a swimming technique suit used in swimming pools, the sensor approach is the better fit, as it does not require mounting many cameras along the length of the pool. Given that we will likely focus on the software side of this idea, our choice of hardware does not matter a great deal, as we will assume a (near-)perfect data stream for our software to handle.

Perfect swimming technique

The freestyle stroke can generally be divided into 4 phases[23]:

-Entry & Catch (note that these are sometimes defined as 2 separate phases):

During the entry, the hand enters the water fingertips first, at an angle of 45 degrees with respect to the water surface[24], around half a meter in front of the shoulder[2]. The hand should enter at around shoulder width (imagine your head points to ‘12’ on a clock; your arms should then enter at around ‘11’ and ‘1’ [25]). After entering, the hand should reach forward and slightly down until the arm is fully extended, staying between 10 and 25 cm below the surface of the water[26]. While extending the arm, it is also important to rotate the body towards the same side. The shoulders should rotate somewhere between 32 and 40 degrees[27], up to 45 degrees on a breathing stroke. During this rotation, and during the entire stroke for that matter, the head should stay stationary and point forward at a 45-degree angle[28].

After fully extending the arm, the catch begins. Here, the fingertips are ‘pressed down’ and the elbow is bent so that the fingertips and forearm point towards the bottom of the pool. Generally, the earlier in the stroke you can set up this ‘early vertical forearm’, the better. It is extremely important that this is done while maintaining a ‘high elbow’, meaning that if one were to draw a straight line between your fingertips and your shoulder, the elbow would sit above that line. When the catch is set up, the elbow angle should be between 90 and 120 degrees[29].

-Pull: The catch smoothly transitions into the pull phase, where the arm is pulled straight back (the pull is straight, not the arm!) with the elbow above the hand for as long as possible[24].

-Push (or exit): When the hand reaches roughly the hips, the arm extends and pushes out the last bit of water, while transitioning smoothly into the recovery.

-Recovery: The recovery is the movement of the hand back over the water. It's important that during the recovery the elbow stays above the hand[25] and leads the movement, while the hand and forearm stay relaxed[30].
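Several of the technique rules above are concrete enough to check automatically from joint coordinates. Below is a minimal Python sketch of three of them: the shoulder roll, the elbow angle at the end of the catch, and the high elbow. It assumes a coordinate frame with x in the swimming direction, y to the swimmer's left and z pointing up towards the water surface. Since our data has no fingertip sensor, the wrist is used as a stand-in for the fingertips in the high-elbow test; that substitution, the frame and all function names are our own assumptions, not part of the cited sources.

```python
import math

# Assumed frame: x = swimming direction, y = lateral (left positive),
# z = vertical (up towards the water surface).


def _sub(a, b):
    return tuple(p - q for p, q in zip(a, b))


def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def shoulder_roll_deg(left_shoulder, right_shoulder):
    """Tilt of the shoulder line relative to the horizontal, in degrees."""
    dy, dz = _sub(left_shoulder, right_shoulder)[1:]
    return math.degrees(math.atan2(abs(dz), abs(dy)))


def elbow_angle_deg(shoulder, elbow, wrist):
    """Interior angle at the elbow between upper arm and forearm."""
    upper = _sub(shoulder, elbow)
    fore = _sub(wrist, elbow)
    cos_angle = _dot(upper, fore) / (math.hypot(*upper) * math.hypot(*fore))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def is_high_elbow(shoulder, elbow, wrist):
    """True if the elbow lies above the straight shoulder-wrist line."""
    line = _sub(wrist, shoulder)
    t = _dot(_sub(elbow, shoulder), line) / _dot(line, line)
    closest_z = shoulder[2] + t * line[2]  # height of the line at the elbow
    return elbow[2] > closest_z


def check_catch(shoulder, elbow, wrist, left_shoulder, right_shoulder, breathing=False):
    """Compare one frame at the end of the catch against the stated ranges."""
    roll = shoulder_roll_deg(left_shoulder, right_shoulder)
    angle = elbow_angle_deg(shoulder, elbow, wrist)
    return {
        "roll_ok": 32.0 <= roll <= (45.0 if breathing else 40.0),
        "elbow_angle_ok": 90.0 <= angle <= 120.0,
        "high_elbow": is_high_elbow(shoulder, elbow, wrist),
    }


r = math.radians(35)
left_sh = (0.0, 0.2 * math.cos(r), 0.2 * math.sin(r))
right_sh = (0.0, -0.2 * math.cos(r), -0.2 * math.sin(r))
print(check_catch((0.0, 0.0, 0.0), (0.3, 0.0, -0.1), (0.35, 0.0, -0.5), left_sh, right_sh))
```

In this made-up frame the body roll is 35 degrees and the elbow angle is about 116 degrees, so all three checks pass. A real implementation would also need to evaluate each rule at the right moment in the stroke, which requires some form of phase segmentation.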

Matlab script & visualization

Below is a link to the Google Drive folder where our MATLAB files and datasets are stored, for anyone who is interested:

https://drive.google.com/drive/folders/1Nkat2n8IxX_gXgsPh2etX_Fn2ceAWQ00

Week 3 progress

Currently there are 3 data files in the drive folder. Book1 is a small dataset of random numbers I came up with on the fly to get the basics of importing the data under control. This dataset was also used to get the basic code for calculating angles and distances working correctly. BookSin contains a sinusoidal wave with 2 periods, used to set up a basic system that selects 1 full period of the data by finding a minimum in the absolute velocity in the x-direction. In BookSin, x is a sine wave, y is a cosine wave, and z is a constant (1). This holds for all 3 joints, so the distances and angles calculated from this dataset are meaningless, and the basic plot does not work either. BookCos is similar to BookSin in that it is also periodic, but its values are chosen so that they also give a decent plot: the shoulder joint is at the origin, the elbow at x=sin(t), y=cos(t), z=0.5 and the wrist joint at x=2sin(t), y=1.3cos(t), z=1. (In week 3 this file also contains 2 periods; in week 4 it is extended to 12.)
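A minimal Python sketch of the period selection described above (the real version is MATLAB code in robots1.m). Turning points are taken as local minima of the absolute x-velocity, estimated with a central difference. Because |v_x| is minimal both at the front and at the back of the motion, this sketch additionally keeps only minima where x is above its mean; that filter, and the function name, are our own assumptions for picking exactly one turning point per period.

```python
import math


def select_period(x, dt):
    """Return (start, end) indices spanning one full period of x."""
    n = len(x)
    speed = [math.inf] * n  # endpoints stay at +inf so they never count as minima
    for i in range(1, n - 1):
        speed[i] = abs(x[i + 1] - x[i - 1]) / (2 * dt)  # central difference |v_x|
    mean_x = sum(x) / n
    turning = [
        i for i in range(1, n - 1)
        if speed[i] < speed[i - 1] and speed[i] < speed[i + 1] and x[i] > mean_x
    ]
    if len(turning) < 2:
        raise ValueError("fewer than two forward turning points found")
    return turning[0], turning[1]


# BookSin-style test signal: two periods of x = sin(t), 401 samples
N = 401
dt = 4 * math.pi / (N - 1)
x = [math.sin(i * dt) for i in range(N)]
print(select_period(x, dt))  # (50, 250)
```

The selected span runs from the first maximum of x (t = π/2) to the next one (t = 5π/2), i.e. exactly one period of 200 samples.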

Right now, the robots1.m script can select one period of data (i.e. one period of the swimming stroke) and isolate the position vectors of the (currently) 3 joints in the data: the shoulder, elbow and wrist joint. It calculates the distances between these 3 joints and several relevant angles. It also makes a simple 3D plot of one frame of the data (configurable in the script) that "models" the arm using 2 straight lines. After these functions were added to the script, a MATLAB app (robots.mlapp) was made from the script with the same 3D plot. This app has a slider, so the user can select a frame. You can also flip a switch to let the program go through all the frames automatically by moving the slider for you.
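The three joint distances can be sketched as follows for the BookCos arm defined above (Python rather than MATLAB; the function names are hypothetical). A side observation: a real limb has (near-)constant segment lengths, so comparing these distances across frames could double as a cheap sanity check on measured data. In BookCos only the upper arm has a constant length.

```python
import math


def bookcos_frame(t):
    """Joint positions of the synthetic BookCos arm at time t."""
    shoulder = (0.0, 0.0, 0.0)
    elbow = (math.sin(t), math.cos(t), 0.5)
    wrist = (2 * math.sin(t), 1.3 * math.cos(t), 1.0)
    return shoulder, elbow, wrist


def joint_distances(shoulder, elbow, wrist):
    """Pairwise distances between the three arm joints."""
    return {
        "upper_arm": math.dist(shoulder, elbow),
        "forearm": math.dist(elbow, wrist),
        "shoulder_wrist": math.dist(shoulder, wrist),
    }


# 50 frames covering one period
frames = [bookcos_frame(2 * math.pi * k / 50) for k in range(50)]
distances = [joint_distances(*f) for f in frames]

print({round(d["upper_arm"], 6) for d in distances})  # {1.118034}
print(round(distances[0]["forearm"], 3))              # 0.583
```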

Week 4 progress

In week 4 the script is expanded to 2 arms and some basic error identification is established. To accommodate this, the BookCos csv file is expanded from 2 to 12 periods. It also gets twice as many columns, with the original 9 for the right arm and the 9 new ones for the left arm. The 9 new columns are half a period behind the first 9, just like in an optimal front crawl. For further expansion, a new dataset called BookBody1 is used. It contains the same 18 columns as the BookCos file, plus a set of coordinates for a central sensor on the back, and 18 new columns for the legs, with each leg having a sensor at the hip, knee and ankle. In addition, the normalized direction vectors (the e_x, e_y and e_z unit vectors) of the central back sensor are stored in 9 columns. These tell us something about the general tilt of the swimmer (we can also identify whether the swimmer wobbles a lot while swimming). In the BookBody1 file, the vector e_x is unperturbed, but there is a small wobble in the yz-plane. BookBody2 is the same as BookBody1, but with a more significant wobble in the yz-plane. For both the BookBody1 and BookBody2 data files, the columns are distributed as follows:

xyz coordinates / vector elements (in that order) for: right shoulder, right elbow, right wrist, left shoulder, left elbow, left wrist, mid back, right hip, right knee, right ankle, left hip, left knee, left ankle, e_x back, e_y back, e_z back
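Because this layout is fixed (16 groups of 3 values, 48 columns in total), each row of BookBody1/BookBody2 can be split into named 3-vectors. A minimal Python sketch, assuming the csv files have no header row and use commas as separators; the names in BOOKBODY_LAYOUT are our own labels.

```python
import csv
import io

# Column groups in file order; each group occupies 3 consecutive columns.
BOOKBODY_LAYOUT = (
    "right_shoulder", "right_elbow", "right_wrist",
    "left_shoulder", "left_elbow", "left_wrist",
    "mid_back",
    "right_hip", "right_knee", "right_ankle",
    "left_hip", "left_knee", "left_ankle",
    "e_x_back", "e_y_back", "e_z_back",
)


def parse_row(row):
    """Map one csv row (48 values) to {name: (x, y, z)}."""
    values = [float(v) for v in row]
    expected = 3 * len(BOOKBODY_LAYOUT)
    if len(values) != expected:
        raise ValueError(f"expected {expected} columns, got {len(values)}")
    return {name: tuple(values[3 * k:3 * k + 3]) for k, name in enumerate(BOOKBODY_LAYOUT)}


def read_bookbody(text):
    """Parse the full file contents into a list of frames, skipping empty lines."""
    return [parse_row(row) for row in csv.reader(io.StringIO(text)) if row]


row = [str(i) for i in range(48)]
frame = parse_row(row)
print(frame["left_ankle"])  # (36.0, 37.0, 38.0)
```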

The expanded scripts for this week are robots2.m and the (newest) robotlegs1.m. Some global errors are now identified in the script, and the calculation of relative vectors and angles now happens in a for loop, so the 'smaller' errors that can be deduced from this data can be counted for every individual period. These smaller errors are also counted for each arm individually, of course. In the robotlegs1 script, a second for loop for data selection and error identification is added for the legs (the first one is for the arms). Legs and arms are handled in different loops, as leg periodicity generally differs from arm periodicity.

The visualization app, robots.mlapp, is also expanded to the whole body. A short video in the drive shows the current plot. A slightly different plotting method is used than before, which looks much nicer and more like a representation of a person. An optional wobble correction is also added. This corrects for the swimmer's wobble by transforming the coordinates to the frame spanned by the unit vectors of the back sensor. In my generated data, the joint coordinates describe a swimmer without wobble, while the back sensor direction does wobble, so the wobble correction works in reverse compared to how it would work with real data. To clearly see the effect of the wobble correction, BookBody2 needs to be loaded into the app, as this dataset has a larger wobble.

Week 5 progress

We finally managed to get some real measurement data from the system, and with it some practical errors can be removed from the system. The data is also useful for fine-tuning the error detection. The data only contains the arms, but this is fine, as the arm movement is the more important and more complex of the two. robotsreal.m is the newest version of the script.

One error was that a single maximum sometimes appeared in the data as multiple points. This can be seen by running the script on real data and inspecting an array like TFi or TFj in the command window. These arrays store the indices of local maxima, and in one case an array contained the points 793, 795, 1227, 1229 and 1235. This is a problem, since a period of the stroke is defined as the data points between consecutive maxima (these points). To solve this, a period of data is skipped in the error detection for loops if it is shorter than 10 data points. This is done separately for left and right, as the duplicates may occur at different moments on each side. Although it is not confirmed that this also happens with the leg data, it likely does, so the same if statements are added to the leg error detection.
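The short-period filter can be sketched in a few lines of Python (hypothetical function name; in our script it is an if statement inside the MATLAB for loops). Applied to the maxima from the example above, only one usable period survives:

```python
def periods_from_maxima(maxima, min_length=10):
    """Turn sorted maximum indices into (start, end) periods.

    Periods shorter than `min_length` samples are assumed to come from one
    maximum being detected several times, and are skipped.
    """
    periods = []
    for start, end in zip(maxima, maxima[1:]):
        if end - start < min_length:
            continue
        periods.append((start, end))
    return periods


print(periods_from_maxima([793, 795, 1227, 1229, 1235]))  # [(795, 1227)]
```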

Another coding mistake was that the errors of the right and left arm were evaluated in the same for loop. The number of iterations of that loop depends on whichever side has the fewest periods. For the perfect data used until this point this was fine, but in one of the real datasets the code detects 5 maxima very close together (<10 data points apart) on the right, while it does not do this on the left. Because 4 fewer maxima are detected on the left, the final 4 periods on the right are not evaluated, meaning most of the useful data is cut off. Separating the for loops for left and right solves this problem.
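The bug is easy to reproduce in a small Python sketch (hypothetical names): iterating over both sides together stops at the shorter list, just like a MATLAB loop bounded by the smaller period count, while separate loops evaluate every period on each side.

```python
def count_errors_jointly(left, right, has_error):
    # Buggy pattern: zip stops at the shorter list, so extra right periods are skipped
    left_count = right_count = 0
    for lp, rp in zip(left, right):
        left_count += has_error(lp)
        right_count += has_error(rp)
    return left_count, right_count


def count_errors_separately(left, right, has_error):
    # Fixed pattern: each side gets its own loop
    left_count = sum(1 for p in left if has_error(p))
    right_count = sum(1 for p in right if has_error(p))
    return left_count, right_count


def always(period):
    return True  # treat every period as containing an error


left = [(0, 100), (100, 200)]
right = [(0, 100), (100, 200), (200, 300), (300, 400)]

print(count_errors_jointly(left, right, always))     # (2, 2)
print(count_errors_separately(left, right, always))  # (2, 4)
```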

A final thing that was worked on was a new visualization program. Until now the only tool we had was robots.mlapp, which is good, but it could be better. I ported my visualization code to Unity (this took a while) to get a 3D model to follow the motion from the dataset. It is not finished yet, as the motion still looks a little odd, but a video named unityrobot is in the Google Drive.

Week 6 progress

The robotsreal.m script is finalized. A hierarchy is added that selects which error needs correcting the most. It first selects a group in order of priority: general errors first, then arm errors, and finally leg errors. Within that group, it selects the error with the largest (normalized) value to give feedback on. This error is identified by a number n, and a string "errorsound<n>.mp3" is produced. The script contains lines that play the appropriate prerecorded sounds, but they are commented out for now while I wait for the sounds from my group mates.
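The selection hierarchy can be sketched in Python as follows. The error names, their numbers n and the threshold for when an error counts as present are all hypothetical; the real values live in robotsreal.m.

```python
GROUP_PRIORITY = ("general", "arm", "leg")


def select_feedback(errors, threshold=0.0):
    """Pick the single error to give feedback on.

    errors maps group -> {error_name: (n, normalized_value)}.
    The first group in GROUP_PRIORITY with any value above `threshold` wins;
    within it, the error with the largest normalized value is chosen.
    """
    for group in GROUP_PRIORITY:
        present = {
            name: (n, value)
            for name, (n, value) in errors.get(group, {}).items()
            if value > threshold
        }
        if present:
            name, (n, _) = max(present.items(), key=lambda item: item[1][1])
            return name, f"errorsound{n}.mp3"
    return None  # nothing to correct


errors = {
    "general": {},
    "arm": {"dropped_elbow": (3, 0.4), "wide_entry": (4, 0.7)},
    "leg": {"knee_bend": (7, 0.9)},
}
print(select_feedback(errors))  # ('wide_entry', 'errorsound4.mp3')
```

Note that the leg error is ignored here even though its normalized value is larger, because the arm group has priority.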

The Unity-based visualization is finally finished, and it looks (in my humble opinion) quite awesome. Some videos are available in the drive, as is the current version of the .exe file. It has all the functions of the robots.mlapp app (autoslide, manual slide, wobble correction), but you can now also move freely through the room, which contains 2 models. Both models go through the same motion, but they use different meshes. The first is a pre-made humanoid model, which can look a bit odd and deformed at times, especially with wobble correction turned on. The second model fixes this by being made of spheres and interconnecting cylinders, so there is not much texture to deform.

To test and use the visualization, a new dataset called GoodTake2b.csv is made: I added the leg data from BookBody2 to GoodTake2, as the real data does not contain any leg data.

Logbook

| Name | Total hours | Week 1 |
|---|---|---|
| Max van Aken | 10h | Attended beginning lecture + meeting (2h), read sources (5h), summarise sources (3h) |
| Bram van der Pas | 13h | Attended lecture (2h), read sources (8h), summarized sources (3h) |
| Jarno Peters | 12h | Attended beginning lecture (2h), group meeting (2h), created start of project chapter (3h), summarized 5 sources for literature summary (5h) |
| Simon B. Wessel | 10h | Attended beginning lecture (2h), group meeting (2h), research literature (4h), summarise articles and papers (2h) |
| Javier Basterreche Blasco | 10h | Attended beginning lecture (2h), group meeting (2h), literature acquisition (4h), summarizing research (2h) |
| Matei Manaila | 9h | Researched and read sources (7h), summarise sources (2h) |

| Name | Total hours | Week 2 |
|---|---|---|
| Max van Aken | 6h | Investigated paper + dataset 'xyz dataset' (3h), searched for 'perfect swim data' (3h) |
| Bram van der Pas | 14h | Attend meeting (2h), research different possible users (10h), summarize findings (2h) |
| Jarno Peters | 15h | Attended feedback session & group meeting (2h), identify challenges for sensor & camera systems (2h), research state of the art (10h), write camera vs sensors section (3h) |
| Simon B. Wessel | 9h | Group meeting (1h), look into existing projects using MPUs (2h), downloading software and installing drivers for ESPs (3h), programming ESP (3h) |
| Javier Basterreche Blasco | 8h | Attended meeting (2h), researched project state of the art and potential areas of improvement (4h), researched viable programming languages (2h) |
| Matei Manaila | 14h | Attend meeting (2h), research of technologies and design brainstorming (12h) |

| Name | Total hours | Week 3 |
|---|---|---|
| Max van Aken | 15h | Researched specifications + references (15h) |
| Bram van der Pas | 12h | Attend feedback session and group meeting (2h), prepare survey and interviews (7h), hold interviews (1h), work out interviews (1h), try to spread survey (1h) |
| Jarno Peters | 17h | Attended feedback session & group meetings (2h), work on matlab script and app (13h), document matlab script progress (2h) |
| Simon B. Wessel | 8h | Research components needed and their specifications (4h), making virtual circuits with ESP and MPU (3h), thinking of approach for wireless communication between ESP and UDP server and making block diagram (1h) |
| Javier Basterreche Blasco | 7h | Looked into potential hardware applications and its data processing (4h), read existing code (3h) |
| Matei Manaila | 12h | Group meeting (2h), start learning matlab and understand existing code (10h) |

| Name | Total hours | Week 4 |
|---|---|---|
| Max van Aken | 10h | Help Jarno with matlab/possible errors in swimming (2h), calibration research/elaboration (8h) |
| Bram van der Pas | 8h | Attend meeting (2h), get survey to be spread out (4h), work on user requirements (2h) |
| Jarno Peters | 20h | Attend feedback session & group meetings (2h), matlab coding (15h), documenting matlab progress (3h) |
| Simon B. Wessel | 8h | Feedback session & group meetings (2h), research on component weights and specifications (3h), trying to get hold of a motion tracking system (1h), matlab programming (2h) |
| Javier Basterreche Blasco | 9h | Attended group meeting (1h), attempted to coordinate scheduling of a session with a measurement system (2h), researched past coding implementations (4h), read existing code (2h) |
| Matei Manaila | 10h | Group meeting (2h), catch up with code (3h), work on legs code (5h) |

| Name | Total hours | Week 5 |
|---|---|---|
| Max van Aken | | |
| Bram van der Pas | 11h | Attending meetings (2h), getting survey spread (4h), researching perfect swimming technique (5h) |
| Jarno Peters | 16h | Attended feedback session & group meeting (2h), measuring with OptiTrack lab (2h), processing measurement data (1h), matlab coding (5h), unity coding (4h), documenting progress (2h) |
| Simon B. Wessel | | |
| Javier Basterreche Blasco | | |
| Matei Manaila | 10h | Group meeting (2h), polished wiki (6h), caught up with code (2h) |

| Name | Total hours | Week 6 |
|---|---|---|
| Max van Aken | | |
| Bram van der Pas | | |
| Jarno Peters | 23h | Feedback session & group meeting (2h), adding legs to real data for visualization testing (1h), matlab coding (3h), unity coding (15h), wiki editing (2h) |
| Simon B. Wessel | | |
| Javier Basterreche Blasco | | |
| Matei Manaila | | |

| Name | Total hours | Week 7 |
|---|---|---|
| Max van Aken | | |
| Bram van der Pas | | |
| Jarno Peters | | |
| Simon B. Wessel | | |
| Javier Basterreche Blasco | | |
| Matei Manaila | | |

Literature Summaries

Wearable motion capture suit with full-body tactile sensors[31]

This article discusses a suit with not only motion sensors, but also tactile sensors. These sensors detect whether a part of the suit is touching something or not. The motion sensors consist of an accelerometer, several gyroscopes, and multiple magnetometers. The data from these sensors is processed on a local CPU and subsequently sent to a central computer, to decrease processing time and ensure real-time calculations. The goal of the suit is to give researchers in the field of sports and rehabilitation more insight into human motion and behavior, as no motion capture suit combining motion sensors and tactile sensors had been implemented before.

Motion tracking: no silver bullet, but a respectable arsenal[32]

This article goes over the different principles of motion sensors and which methods there are. They discuss mechanical, inertial, acoustic, magnetic, optical, radio and microwave sensing.

Mechanical sensing: Provides accurate data for a single target, but generally has a small range of motion. These sensors generally work by detecting mechanical stress, which is not a desirable approach for this project.

Inertial sensing: By using gyroscopes and accelerometers, the sensor can determine its orientation and acceleration. By compensating for gravity and double-integrating the acceleration, the position can be determined. One downside is that these sensors are quite sensitive to drift and errors: a small error integrated over time yields massive errors in the final position. For our project this would be very useful, as determining the sensors' positions with respect to each other is otherwise difficult because their orientation is hard to determine.

Acoustic sensing: These sensors transmit a short ultrasonic pulse and time how long it takes for it to return. This method has multiple challenges, such as the fact that it can only measure relative changes in distance, not absolute distance. It is also very sensitive to noise, as the sound wave can reflect off multiple surfaces, and those reflections can arrive back at the sensor at different times, causing all sorts of problems. To solve the reflection problem, the sensor can be programmed to consider only the first reflection and ignore the rest, as this first reflection is generally the one that is to be measured.

Magnetic sensing: These sensors rely on magnetometers for static fields and on a change in induced current for changing magnetic fields. One creative way to use this is to have a double coil produce a magnetic field at a given location and estimate the sensor's position and orientation based on the field it measures.

Optical sensing: These sensors consist of two components: a light source and a sensor. The article discusses these sensors further, but since water and air have different refractive indices, and the sensors will move in and out of the water at random, these sensors would be useless for us.

Radio and microwave sensing: Based on what the article says, this is generally used for long-range position determination, such as GPS. This is likely not useful for this project.

The Use of Motion Capture Technology in 3D Animation[11]

This article reviews the literature on motion capture in 3D animation, and it aims to identify the strengths and limitations of different methods and technologies. It starts by describing different motion capture systems, and later draws conclusions about the accessibility, ease of use, and future of motion capture in general. Although this last part is not very relevant for us, the descriptions of the different systems are.

Active & passive optical motion capture: The basic idea is that an object or a person wears a suit with either active or passive optical elements. Passive elements only reflect external light, and their position is measured using external cameras, generally multiple cameras from several directions. The material is usually selected such that it reflects infrared light. Active markers, on the other hand, emit their own light, which is again generally in the infrared part of the spectrum. The positions of active markers are likewise measured using cameras.

Inertial motion capture: This system uses inertial sensors (described in [32]) to determine the position of key joints and body parts. It does not depend on lighting and cameras, which increases the freedom of motion. A widely used inertial system is the Xsens MVN system.

Markerless motion capture: In this case, no markers or sensors are used; the motion is simply recorded with one or multiple cameras. Software then interprets the data and turns it into something usable for animators. For us this approach is not very useful.

Surface electromyography: This method is generally used to detect fine motions in the face using sensors that detect the electrical currents produced by contracting muscles. For us again not super useful.

Musculoskeletal model-based inverse dynamic analysis under ambulatory conditions using inertial motion capture[33]

This article discusses the use of inertial motion sensors from Xsens, which is now part of Movella. The specific model used here is the Xsens MVN Link. The researchers constructed a suit using these sensors and had the test subjects perform different movements. The root mean square error between the determined and the real values was found to lie between about 3 and 8 degrees, depending on the body part measured. If we can reach values like these with our prototype, that would be sufficient. Since this article is from 2019, current state-of-the-art technology might be even better.

Sensor network oriented human motion capture via wearable intelligent system[16]

This article uses 15 wireless inertial motion sensors placed on the limbs and other important locations of the body to capture a person's motion. The researchers focused on a lightweight design with small sensors and a low impact on behavior. The sensors used are MPU-9250 models, which cost only about 12 euros each. The researchers transform the coordinates obtained from the sensors and achieve an error of about 1.5% in the determined displacement.

Development of a non-invasive motion capture system for swimming biomechanics[34]

I still need to work this one out

Swimming Stroke Phase Segmentation Based on Wearable Motion Capture Technique[35]

In this source, a sensor-based motion capture system is used to distinguish the different phases of a swim stroke. To determine the body position, measurement nodes are placed on different points of the body. Each measurement node contains a 3-axis gyroscope, a 3-axis accelerometer, and a 3-axis magnetometer. An orientation estimation algorithm is used to determine the posture from the information gathered by the nodes, and supervised learning is used to create an algorithm capable of separating the different phases of the stroke. The accuracy was compared to an optical motion capture system and found to be comparable, although the error is quite large for rapidly changing joint angles.

Development of a Methodology for Low-Cost 3D Underwater Motion Capture: Application to the Biomechanics of Horse Swimming[13]

In this article, the motion capture is done using an optical marker-based system. Such systems usually involve spherical markers glued to important body parts and cameras to track them, but in this specific case color pigments were used instead of these markers. The article mentions that in general there are three types of motion capture: sensor-based, optical with markers, and optical without markers, of which optical with markers is considered the gold standard. It also mentions as a downside of sensor-based systems that the size of the sensors can cause additional drag. Six cameras were used to capture videos of the horses swimming. The videos were then preprocessed, which included calibrating the different camera angles and tracking the different markers. Lastly, algorithms were used to determine the horses' movements. The accuracy of the system was on the order of centimeters for segments and on the order of degrees for angles. A downside of the method is that the preprocessing involves many time-intensive tasks, such as manually tracking the markers, so the article recommends training an algorithm for these tasks. It also mentions problems that occur when certain anatomical points are not covered by overlapping camera views.

Markerless Optical Motion Capture System for Asymmetrical Swimming Stroke[12]

The article uses a markerless optical motion capture system that relies on only one underwater camera to record the swimming motion. It mentions that optical motion capture with markers is actually the most used method due to its relatively high accuracy, but that it also has downsides: the markers can affect the swimmer's movement, properly placing them takes quite a long time, and distinguishing the different markers from each other can be difficult. The system involved creating a human body model, which was then matched to the images of the swimmer to track their movement. An additional algorithm was required to distinguish the left and right side of the body from each other. The model seemed to match the images quite well. SWUM software was then used for dynamic analysis, such as analysing the force exerted on the water by the swimmer.

SwimXYZ: A large-scale dataset of synthetic swimming motions and videos[36]

According to the article, most limitations of motion capture systems are due to a lack of data: motion capture systems usually struggle to interpret aquatic data because they are not trained on it. Research has shown that synthetic data could complement or even replace real images in training computer models. This paper published a database of 3.4 million synthetic frames of swimming strokes that can be used for training algorithms. Using their database, the researchers also created their own stroke classifier, which seemed to provide accurate results. Limitations of the database are the lack of diversity in the subjects (gender, body shape) and in the pool background.

I have downloaded and watched the freestyle data from this paper, but I think the videos are too short and not of high enough quality to accurately track the posture of the (animated) person. Therefore I think tracking the movements in a few videos of (Olympic) swimmers, possibly manually, is the most achievable option to obtain reliable data and to give constructive feedback to the user.

Usable videos: https://www.youtube.com/watch?v=vFqX_KxqWtE

Current swimming techniques: the physics of movement and observations of champions[37]

This paper outlines how former champions swam and what their strokes looked like, which we could use as perfect data. It does this for multiple kinds of strokes, for both males and females. The paper also looks into what contributes most to drag for swimmers, and thus how a stroke can be improved. In addition, it contains experimental data on the drag coefficient of swimmers as a function of swimming depth.

Research on distance measurement method based on micro-accelerometer[38]

This paper shows one way to go from accelerometer data to distance measurements, which we will probably need to accurately calculate the positions and angles of the swimmer's body parts.

Experiments on human swimming : passive drag experiments and visualization of water flow[39]

This paper investigates the drag force on a swimmer in the streamline position. This is done by towing a person (so the person doesn't move any body parts). The experiment is also done with a sphere, to better understand how the water flows.

Smart Swimming Training: Wearable Body Sensor Networks Empower Technical Evaluation of Competitive Swimming[40]

This paper proposes a body-area sensor network for detailed analysis of swimming posture and movement phases. Wearable inertial sensors placed on 10 body parts capture motion data, which is processed using a motion intensity-based Kalman filter for improved accuracy. Deep learning models combine temporal and graph-based networks for automatic phase segmentation, achieving over 97% accuracy. The system accurately tracks joint angles and body posture, providing valuable feedback for swimming performance enhancement.

Using Wearable Sensors to Capture Posture of the Human Lumbar Spine in Competitive Swimming[41]

This study introduces a motion capture system based on wearable inertial sensors for tracking lumbar spine posture in competitive swimming. Using a multi-sensor fusion algorithm aided by a bio-mechanical model, the system reconstructs swimmers’ posture with high accuracy (errors between 1.65° and 3.66°). Experiments validate its reliability compared to an optical tracking system. Kinematic analysis across four swimming styles (butterfly, breaststroke, freestyle, and backstroke) reveals distinct lumbar movement patterns, helping coaches and athletes optimize performance. The system offers a practical alternative to video-based methods.

3D Orientation Estimation Using Inertial Sensors[42]

This paper discusses methods for 3D orientation estimation using data from accelerometers, gyroscopes and magnetometers. The study focuses on upper limb movements of patients with neural diseases. Additionally, it explores techniques for 2D and 3D position tracking by considering joint links and kinematic constraints.
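As an illustration of fusing sensors for orientation, here is a minimal complementary filter in Python. This is a common, simple technique and not necessarily the method used in the paper; the weighting alpha = 0.98, the 100 Hz sample rate, the 0.5 °/s gyroscope bias and the roll convention atan2(a_y, a_z) are all assumed values. The gyroscope is trusted for fast changes and the accelerometer's gravity-based tilt for the long-term average, which keeps the gyroscope bias from accumulating.

```python
import math


def complementary_filter(gyro_rates_dps, accel_yz, dt, alpha=0.98, initial_deg=0.0):
    """Estimate a roll angle (degrees) from gyro rate and accelerometer tilt.

    gyro_rates_dps: angular rate about x in deg/s, one value per sample.
    accel_yz: (a_y, a_z) per sample; at rest these are gravity components.
    """
    angle = initial_deg
    estimates = []
    for rate, (a_y, a_z) in zip(gyro_rates_dps, accel_yz):
        accel_angle = math.degrees(math.atan2(a_y, a_z))  # tilt from gravity
        # short term: integrate the gyro; long term: pull towards the accelerometer
        angle = alpha * (angle + rate * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# A sensor held still at 20 degrees roll, read by a biased gyroscope
dt, n, true_deg, gyro_bias = 0.01, 1000, 20.0, 0.5
accel = [(math.sin(math.radians(true_deg)), math.cos(math.radians(true_deg)))] * n
gyro = [gyro_bias] * n  # the true rate is 0, so this is pure bias

fused = complementary_filter(gyro, accel, dt)
gyro_only = true_deg + gyro_bias * dt * n  # plain integration from the true start

print(round(fused[-1], 3))   # about 20.245
print(round(gyro_only, 3))   # about 25.0
```

After 10 s, plain integration of the biased gyroscope has drifted by 5 degrees, while the filtered estimate settles at a small constant offset of alpha·bias·dt/(1 − alpha) ≈ 0.245 degrees.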

Motion Tracker With Arduino and Gyroscope Sensor[43]

This project focuses on making a 3D motion tracking device using an ESP and an MPU. The advantage of this approach is that it is wireless and could be mounted as a wearable.

Building a skeleton-based 3D body model with angle sensor data[44]

This paper presents a method for constructing a full-body motion model using data from wearable sensors.

Using Gyroscopes to Enhance Motion Detection [45]

In this article, the author explores the role of gyro sensors in improving motion detection and their functionality. The article also provides insight into how gyroscopes can be used to enhance the performance of various motion-sensitive technologies.

Wearable inertial sensors in swimming motion analysis: a systematic review[46]

With "the aim of describing the state of the art of current developments in this area", the paper presents a comprehensive review of the existing literature on swimming motion analysis, as well as an exhaustive exploration of current sensing capabilities, both inertial and magnetic. The research focuses more on physical methods than on digital ones, as the latter were found to introduce large delays and require too much set-up to be practical for consumers. Like most sources so far, the paper indicates inertial sensors to be the most reliable.

Development of a swimming motion display system for athlete swimmers’ training using a wristwatch-style acceleration and gyroscopic sensor device[47]

This is an older paper, hence advancements in sensing technology may have surpassed the sensor units used here. Despite this, the methods described may still prove useful: the paper details methods of data logging swimmers' motions using an accelerometer and a three-axis gyroscopic sensor device, where any one sensor measures its respective axis.

SmartPaddle as a new tool for monitoring swimmers' kinematic and kinetic variables in real time [48]

This paper explores a more modern sensing toolkit: the SmartPaddle, a small device strapped to each of the swimmer's palms that combines two pressure sensors with a 9-axis inertial measurement unit. The pressure sensors are used to measure and graph the force of each stroke, so that the forces of the two hands can be compared, and the motion of the hand during the stroke is visualized in three graphs: a side view, a top view and a back view. This product may be useful for our project, as it is a standalone sensing unit from which we could build a larger assembly that captures the motion of the entire body.
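The side, top and back views of the hand path are orthographic projections of a 3D trajectory onto three planes. The sketch below assumes a swimmer-fixed frame with x in the swimming direction, y to the side and z upward (our convention, not necessarily the device's), and compares the two hands with a symmetry index of the form 100·(L − R)/(0.5·(L + R)). The index, the function names and the sample values are our own choices for illustration, not the SmartPaddle software.

```python
from statistics import fmean

Point3 = tuple[float, float, float]
Point2 = tuple[float, float]


def project_views(path: list[Point3]) -> dict[str, list[Point2]]:
    """Project a 3D hand trajectory onto side, top and back views.

    Assumed axes: x = swimming direction, y = lateral, z = vertical (up).
    """
    return {
        "side": [(x, z) for x, _, z in path],  # seen from the side: forward vs. vertical
        "top": [(x, y) for x, y, _ in path],   # seen from above: forward vs. lateral
        "back": [(y, z) for _, y, z in path],  # seen from behind: lateral vs. vertical
    }


def symmetry_index(left_forces: list[float], right_forces: list[float]) -> float:
    """Percentage difference between the mean left- and right-hand stroke force.

    0 means perfectly symmetric; a positive value means the left hand pulls harder.
    """
    left, right = fmean(left_forces), fmean(right_forces)
    if left + right == 0:
        raise ValueError("mean forces must not both be zero")
    return 100.0 * (left - right) / (0.5 * (left + right))


if __name__ == "__main__":
    hand_path = [(0.0, 0.10, -0.05), (0.2, 0.05, -0.40), (0.5, 0.12, -0.20)]  # made-up samples (m)
    print(project_views(hand_path)["side"])
    print(f"{symmetry_index([40.0, 44.0, 42.0], [36.0, 38.0, 37.0]):.1f} %")  # made-up forces (N)
```

Projecting in the app rather than on the device means the same stored trajectory can be shown from any of the three views, or later from an arbitrary camera angle in the animation.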

Digital Analysis and Visualization of Swimming Motion[49]

Unlike the last three articles, this paper focuses on digital methods of motion tracking and analysis. It lays out a solid framework for digitizing videos of swimmers, using a module that takes a 2D video and outputs a 3D representation of the swimmer's body. The swimmer first uploads a picture of themselves standing in a T-pose, from which the module calculates the length of each limb, and then manually marks the joint locations in the recorded video. From this, a 3D digitization is produced that can be used to visualize and analyze the swimmer's motions.
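Knowing the true limb lengths from the T-pose picture is what makes a 2D-to-3D step possible. One classic way to use them, assumed here for illustration (the paper's module may work differently), is Taylor's reconstruction under scaled orthographic projection: if a limb of length L appears with pixel length d in an image with scale s (pixels per metre), the depth difference between its two joints is Δz = ±sqrt(L² − (d/s)²). We assume s is known; the sign cannot be determined from a single frame and has to be resolved by other means. The function name and the joint coordinates are hypothetical.

```python
import math


def limb_depth_offset(p1, p2, limb_length_m: float, pixels_per_m: float) -> float:
    """Unsigned depth difference (m) between a limb's two joints.

    Assumes scaled orthographic projection with a known, constant scale.
    p1, p2:        2D joint positions in pixels, as marked on the video frame.
    limb_length_m: true limb length, e.g. derived from the T-pose picture.
    pixels_per_m:  image scale s.
    """
    projected = math.dist(p1, p2) / pixels_per_m  # apparent limb length in metres
    if projected >= limb_length_m:
        # Limb lies in the image plane, or marking noise makes it look too long.
        return 0.0
    return math.sqrt(limb_length_m ** 2 - projected ** 2)


if __name__ == "__main__":
    elbow, wrist = (320.0, 240.0), (320.0, 320.0)  # hypothetical marked joints (px)
    dz = limb_depth_offset(elbow, wrist, limb_length_m=0.25, pixels_per_m=400.0)
    print(f"wrist is {dz:.2f} m in front of or behind the elbow")
```

Applying this limb by limb, starting from a root joint such as the pelvis, yields a full 3D pose once the sign of each Δz has been chosen.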

- ↑ https://swimmingtechnology.com/measurement-of-the-active-drag-coefficient/, Swimming Technology Research, Inc., Measuring the Active Drag Coefficient

- ↑ https://www.theendurancestore.com/blogs/the-endurance-store/stroke-rate-v-stroke-count-and-why-its-critical-for-swim-performance, The Endurance Store, Stroke Rate V Stroke Count, and why it's critical for swim performance

- ↑ https://www.youtube.com/watch?v=D-7YP5BGswA, Olympics, 🏊♂️ The last five Men's 100m freestyle champions 🏆

- ↑ https://www.iec.ch/ip-ratings, International Electrotechnical Commission, Ingress Protection (IP) ratings.

- ↑ https://wetten.overheid.nl/BWBR0041330/2025-01-01#Hoofdstuk15, Wetten.overheid.nl, Besluit activiteiten Leefomgeving (BaL)

- ↑ https://www.researchgate.net/publication/285642648_Degradation_of_plasticized_PVC, Yvonne Shashoua, Inhibiting the deterioration of plasticized poly(vinyl chloride) – a museum perspective

- ↑ https://global.canon/en/imaging/picturestyle/editor/matters05.html, Canon Inc., Understanding the "HSL" color expression system

- ↑ Movella, Body Dimensions: a detailed explanation of the body dimensions that can be entered in the configuration window of the MVN software, https://base.movella.com/s/article/Body-Dimensions?language=en_US#Offline

- ↑ Rudolfs Drillis, Ph.D., Renato Contini, B.S. and Maurice Bluestein, M.M.E., Body Segment Parameters: A Survey of Measurement Techniques, 1964

- ↑ Dr. Harless, The Static Moments of the Component Masses of the Human Body, 1860, https://apps.dtic.mil/sti/tr/pdf/AD0279649.pdf

- ↑ 11.0 11.1 WIBOWO, Mars Caroline; NUGROHO, Sarwo; WIBOWO, Agus. The use of motion capture technology in 3D animation. International Journal of Computing and Digital Systems, 2024, 15.1: 975-987. https://pdfs.semanticscholar.org/9514/28e966feece961d7100448d0caf17a8b93ec.pdf

- ↑ 12.0 12.1 12.2 erryanto, F., Mahyuddin, A. I., & Nakashima, M. (2022). Markerless Optical Motion Capture System for Asymmetrical Swimming Stroke. Journal of Engineering and Technological Sciences, 54(5), 220503. https://doi.org/10.5614/j.eng.technol.sci.2022.54.5.3

- ↑ 13.0 13.1 Giraudet, C., Moiroud, C., Beaumont, A., Gaulmin, P., Hatrisse, C., Azevedo, E., Denoix, J.-M., Ben Mansour, K., Martin, P., Audigié, F., Chateau, H., & Marin, F. (2023). Development of a Methodology for Low-Cost 3D Underwater Motion Capture: Application to the Biomechanics of Horse Swimming. Sensors, 23(21), 8832. https://doi.org/10.3390/s23218832

- ↑ Z. Cao, G. Hidalgo, T. Simon, S.-E. Wei and Y. Sheikh, "OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 1, pp. 172-186, 1 Jan. 2021, doi: 10.1109/TPAMI.2019.2929257, https://ieeexplore.ieee.org/document/8765346

- ↑ Theia Markerless, web page of a company that sells markerless motion capture technology, https://www.theiamarkerless.com/

- ↑ 16.0 16.1 16.2 Qiu S, Zhao H, Jiang N, et al. Sensor network oriented human motion capture via wearable intelligent system. Int J Intell Syst. 2022; 37: 1646-1673. https://doi.org/10.1002/int.22689

- ↑ Movella, article on the calibration of Xsens MVN motion capture suits, https://base.movella.com/s/article/Calibration-MVN-2021-0-and-older?language=en_US

- ↑ Movella, blog article on the methods used for drift compensation in Xsens motion capture suits, https://www.movella.com/resources/blog/mocap-without-limits-how-xsens-solved-the-drift-dilemma

- ↑ H. T. Butt, M. Pancholi, M. Musahl, P. Murthy, M. A. Sanchez and D. Stricker, "Inertial Motion Capture Using Adaptive Sensor Fusion and Joint Angle Drift Correction," 2019 22th International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2019, pp. 1-8, doi: 10.23919/FUSION43075.2019.9011359, https://ieeexplore.ieee.org/abstract/document/9011359

- ↑ I. Weygers et al., "Drift-Free Inertial Sensor-Based Joint Kinematics for Long-Term Arbitrary Movements," in IEEE Sensors Journal, vol. 20, no. 14, pp. 7969-7979, 15 July 2020, doi: 10.1109/JSEN.2020.2982459, https://ieeexplore.ieee.org/abstract/document/9044292

- ↑ N. O-larnnithipong and A. Barreto, "Gyroscope drift correction algorithm for inertial measurement unit used in hand motion tracking," 2016 IEEE SENSORS, Orlando, FL, USA, 2016, pp. 1-3, doi: 10.1109/ICSENS.2016.7808525, https://ieeexplore.ieee.org/abstract/document/7808525

- ↑ Movella DOT data sheet, https://www.xsens.com/hubfs/Xsens%20DOT%20data%20sheet.pdf

- ↑ Jailton G. Pelarigo, Benedito S. Denadai, Camila C. Greco, Stroke phases responses around maximal lactate steady state in front crawl, Journal of Science and Medicine in Sport, Volume 14, Issue 2, 2011, Pages 168.e1-168.e5, ISSN 1440-2440, https://doi.org/10.1016/j.jsams.2010.08.004.

- ↑ 24.0 24.1 Biskaduros, P. W. (2024, 26 September). How to swim freestyle with perfect technique. MySwimPro. https://blog.myswimpro.com/2019/06/06/how-to-swim-freestyle-with-perfect-technique/#comments

- ↑ 25.0 25.1 Fares Ksebati. (2021, 17 March). How to Improve Your Freestyle Pull & Catch [Video]. YouTube. https://www.youtube.com/watch?v=nD2dZVsrBq4

- ↑ Swim360. (2018, 10 October). Freestyle hand entry - find the sweet spot to speed up [Video]. YouTube. https://www.youtube.com/watch?v=3yReSXt9_8Q

- ↑ Ford, B. (2018, 27 July). How to avoid over rotation in freestyle. Effortless Swimming. https://effortlessswimming.com/how-to-avoid-over-rotation-in-freestyle/#:~:text=One%20of%20the%20key%20aspects,degrees%20during%20a%20breathing%20stroke

- ↑ Petala, A. (2022, 8 November). Everything YOU need to know about Freestyle Rotation. TritonWear. https://blog.tritonwear.com/everything-you-need-to-know-about-freestyle-rotation

- ↑ Jerszyński D, Antosiak-Cyrak K, Habiera M, Wochna K, Rostkowska E. Changes in selected parameters of swimming technique in the back crawl and the front crawl in young novice swimmers. J Hum Kinet. 2013 Jul 5;37:161-71. doi: 10.2478/hukin-2013-0037. PMID: 24146717; PMCID: PMC3796834.

- ↑ SwimGym. (2024, 9 March). Freestyle arm recovery common mistakes [Video]. YouTube. https://www.youtube.com/watch?v=zN7zZztMFiY

- ↑ Y. Fujimori, Y. Ohmura, T. Harada and Y. Kuniyoshi, "Wearable motion capture suit with full-body tactile sensors," 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 2009, pp. 3186-3193, doi: 10.1109/ROBOT.2009.5152758, https://ieeexplore.ieee.org/abstract/document/5152758

- ↑ 32.0 32.1 G. Welch and E. Foxlin, "Motion tracking: no silver bullet, but a respectable arsenal," in IEEE Computer Graphics and Applications, vol. 22, no. 6, pp. 24-38, Nov.-Dec. 2002, doi: 10.1109/MCG.2002.1046626, https://ieeexplore.ieee.org/abstract/document/1046626

- ↑ Angelos Karatsidis, Moonki Jung, H. Martin Schepers, Giovanni Bellusci, Mark de Zee, Peter H. Veltink, Michael Skipper Andersen, Musculoskeletal model-based inverse dynamic analysis under ambulatory conditions using inertial motion capture, Medical Engineering & Physics, Volume 65, 2019, Pages 68-77, ISSN 1350-4533, https://doi.org/10.1016/j.medengphy.2018.12.021

- ↑ Ascendo, G. (2021). Development of a non-invasive motion capture system for swimming biomechanics [Doctoral thesis]. Manchester Metropolitan University.

- ↑ J. Wang, Z. Wang, F. Gao, H. Zhao, S. Qiu and J. Li, "Swimming Stroke Phase Segmentation Based on Wearable Motion Capture Technique," in IEEE Transactions on Instrumentation and Measurement, vol. 69, no. 10, pp. 8526-8538, Oct. 2020, doi: 10.1109/TIM.2020.2992183.

- ↑ Guénolé Fiche, Vincent Sevestre, Camila Gonzalez-Barral, Simon Leglaive, and Renaud Séguier. 2023. SwimXYZ: A large-scale dataset of synthetic swimming motions and videos. In Proceedings of the 16th ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG '23). Association for Computing Machinery, New York, NY, USA, Article 22, 1–7. https://doi.org/10.1145/3623264.3624440

- ↑ https://coachsci.sdsu.edu/swim/bullets/Current44.pdf, Brent S. Rushall, Ph.D., 21 August 2013

- ↑ Yonglei Shi, Liqing Fang, Deqing Guo, Ziyuan Qi, Jinye Wang and Jinli Che, https://sci-hub.se/10.1063/5.0054463

- ↑ G. Custers, https://research.tue.nl/en/studentTheses/experiments-on-human-swimming-passive-drag-experiments-and-visual

- ↑ J. Li et al., "Smart Swimming Training: Wearable Body Sensor Networks Empower Technical Evaluation of Competitive Swimming," in IEEE Internet of Things Journal, vol. 12, no. 4, pp. 4448-4465, 15 Feb. 2025, doi: 10.1109/JIOT.2024.3485232.

- ↑ Z. Wang et al., "Using Wearable Sensors to Capture Posture of the Human Lumbar Spine in Competitive Swimming," in IEEE Transactions on Human-Machine Systems, vol. 49, no. 2, pp. 194-205, April 2019, doi: 10.1109/THMS.2019.2892318.

- ↑ Bai, L. (2022). 3D orientation estimation using inertial sensors. Journal of Electrical Technology UMY, 6(1), 1–8.

- ↑ Instructables. (2024, September 3). Motion tracker with arduino and gyroscope sensor. Instructables. https://www.instructables.com/Motion-Tracker-With-Arduino-and-Gyroscope-Sensor/

- ↑ Wang, Q., Zhou, G., Liu, Z., & Ren, B. (2021). Building a skeleton-based 3D body model with angle sensor data. Smart Health, 19, 100141. https://doi.org/10.1016/j.smhl.2020.100141

- ↑ Meyer, A. (2020). Using gyroscopes to enhance motion detection. Engineering Student Trade Journal Articles, (6). https://scholar.valpo.edu/stja/6/

- ↑ Magalhaes, Fabricio Anicio, et al. “Wearable inertial sensors in Swimming Motion Analysis: A systematic review.” Journal of Sports Sciences, vol. 33, no. 7, 30 Oct. 2014, pp. 732–745, https://doi.org/10.1080/02640414.2014.962574.