PRE2024 3 Group12

Group members

| Name | Student number |

|---|---|

| Mara Ioana Burghelea | 1788795 |

| Malina Corduneanu | 1816071 |

| Alexia Dragomirescu | 1793543 |

| Briana Anamaria Isaila | 1785923 |

| Marko Mrvelj | 1841246 |

| Ana Sirbu | 1829858 |

Introduction

Our group initially aimed to develop a prototype of an emotional support robot designed specifically to reduce stress in children with long-term hospital stays. However, as the project started to take shape, we found that the voice of the robot, and possibly its expressions, could have a very large impact on a person's mood. Because of this, we decided to research the most effective vocal characteristics for the robot, including factors such as pitch, speaking rate, intensity and other features that could enhance comfort and emotional support for the children.

Problem statement

The problem we aim to address arises from the difficulties children face during hospital stays. Many children with health problems are forced to stay in the hospital for prolonged periods of time. While an adult may be able to rationalise and accept this experience, children are often unable to process it, and it weighs heavily on their mental state [1]. Just as with any patient, hospitalised children cannot have visitors at all times and are not always offered the emotional help and attention they require. Hospitals currently offer time slots dedicated to play and socialisation for hospitalised children (*). However, this does not solve the isolation and anxiety these children face on a daily basis. What about the children who are not able to leave their room or are simply not allowed in the vicinity of other children due to their illness?

Solution

With this in mind, we propose a robot companion whose functionality is dedicated to interacting with children. We aim to provide each child in need with a round-the-clock companion capable of:

- Engaging in interactive conversations to provide companionship.

- Offering emotional support through soothing speech and expressive behaviours.

- Assisting in stressful moments, such as medical treatments, by providing reassurance.

- Being available for conversation and support at any moment.

In this way, at times of difficulty such as during treatment, the child can listen to their cheerful companion being there for them when no human can. It has been shown in many instances that children, and humans in general, are able to form meaningful connections with non-human entities such as animals, toys and robots. In this regard, we believe that a vocal and expressive companion would help relieve some of the anxiety surrounding the hospital.

Our project has shifted to researching the features of voices integrated in these robots.

We are conducting this research in collaboration with Language Studies internship student Nina van Roji from Utrecht University to investigate the impact of voice characteristics on emotional well-being. The voice is an important aspect of such robots and its effect on children is under-tested and under-researched, so we aim to contribute with our own research.

Users - description (Targeted stakeholders)

• Hospitals and caregiving facilities:

Implementing this technology could improve patient well-being and enhance pediatric care.

Hospitals and caregiving facilities could purchase this technology to improve the well-being of hospitalised children or children who need to be supervised or contained for longer periods due to illness. This technology would be helpful to such institutions because it would improve the mood of sick children, which, according to multiple studies, can strengthen the body and help it fight the illness.

• Parents:

Knowing that their child has an emotional support system in place when they are absent could provide them peace of mind.

• Children (aged )

They are the primary users. These young patients could benefit from companionship, playfulness and emotional support during their hospitalisation period. Illness itself is a very stressful thing both on the mind and on the body. For children, this becomes even more stressful since they do not fully understand what is happening to them. Feelings of loneliness and lack of interaction with loved ones and other children can have lasting effects on the development of the child and their mental well-being. Therefore, a robot that can keep them company during stressful moments and distract them by playing with them, talking to them, and telling them bedtime stories would make the treatment plan for the child more bearable and less frightening.

Deliverables

• Research paper on which voice features alleviate stress in children (using proven testing methods).

• General survey of adults on different voice features and which ones are the most soothing.

• Results from the Korein TU/e daycare about how children reacted to the robot's voice when performing a certain task.

State of the art

State of the art regarding stress relief in children using robots

Children who spend a lot of time in hospital and medical facilities are prone to experience stress, anxiety and emotional distress. Emotional support robots are emerging as a potential solution to provide companionship and comfort. In this section, we are going to talk about the state of the art in emotional support robots for children.

Several robots have been developed already with the scope of providing emotional support, but it is important to note that most of the robots developed were not designed specifically for children in hospitals. Some mentionable examples are:

PARO: a robotic seal designed to provide comfort through tactile interactions and soothing behaviors for elderly patients with Alzheimer's disease. This approach is similar to animal therapy, but with robots. While it has been shown to reduce stress, it is not specifically targeted at children and it does not engage in interactive play or personalized companionship tailored for young users.[2][3]

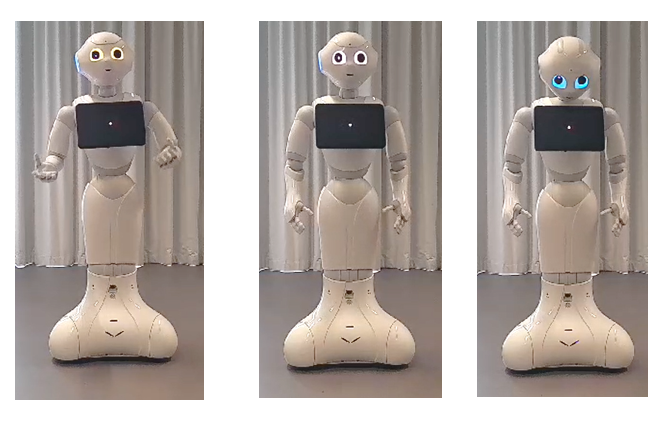

Pepper: a semi-humanoid robot with the ability to read emotions. It is used as a receptionist at several offices in the UK and is able to identify visitors using facial recognition, send alerts to meeting organisers and arrange for drinks to be made. While it has conversational capabilities and can recognize emotions, its primary function is not emotional support for children, and it lacks deep personalization and game-playing features as well as the caring aspect of emotional support needed for hospitalized children.[4]

Huggable: a robotic companion capable of active relational and touch-based interactions with a person, targeted at children in hospitals. However, its capabilities are still limited, and while it provides emotional support, its interactive play, personalization and tailoring to users are not as advanced as envisioned in an ideal emotional support robot.[5]

Moxi: a robotic assistant meant to help nurses and hospital staff with administering medication and with repetitive tasks. While it enhances efficiency in hospital environments, Moxi does not engage in direct patient interactions for emotional support or entertainment, making it unsuitable for pediatric emotional care.[6]

Despite the advancements in robotics and emotional support robots, some challenges remain. Developing robots that address the particular needs of children in hospitals and can adapt to the personal needs of every child is a key challenge. Another is holding a child's attention over long periods of time, which matters for children who must spend a lot of time in hospital; this requires constant updates to interaction style and content. To maximize effectiveness, emotional support robots should be seamlessly integrated into hospital care routines, working alongside medical staff and therapists. Lastly, ensuring data security and ethical use of AI in sensitive environments like hospitals is crucial. Regulations must be in place to protect children's privacy, particularly regarding data collection and emotional analysis.

In conclusion, as these challenges are addressed, such robots are likely to become more effective in providing meaningful emotional support. However, current solutions either lack a specific focus on children or do not incorporate essential features like interactive play and deep personalization.

State of the art regarding the impact of voice traits

The impact of voice traits such as intensity, duration and pitch has been studied for a very long time in developmental psychology and speech therapy. Recent advances in affective computing and human-computer interaction have allowed for the development of voice-based interactions aimed at reducing stress in children. This section reviews the state of research and how these voice traits influence stress levels in children, with a focus on empirical findings and technological applications.

Voice modulation plays a crucial role in emotional regulation. Studies in developmental psychology suggest that young children and infants are very responsive to different vocal characteristics, particularly those used by caregivers. Lower-pitched, soft-spoken voices have been associated with calming effects, while high-pitched, energetic tones can induce excitement. Physiologically, vocal traits influence the autonomic nervous system, with gentle, rhythmic speech patterns linked to lower cortisol levels.

Recent empirical studies have explored how voice characteristics can alleviate stress in children and the following remarks can be made regarding this topic.

A lower pitch and slow modulation are perceived as soothing, reducing psychological markers of stress in children. Moreover, slow and rhythmic speech patterns have been shown to promote relaxation and reduce anxiety, particularly in clinical settings.[7]

On top of this, soft speech as opposed to loud or abrupt tonality, has been found to lower the heart rate and improve emotional regulation in children with anxiety disorders.[8][9]

The integration of voice into AI-driven applications and therapeutic tools has become increasingly popular.

For example, conversational agents and Chatbots such as Woebot and Replika incorporate soothing voice modulation to assist children in managing stress.

Apps like Moshi use carefully modulated narration to help children relax before bedtime. Also, emerging research explores adaptive voice systems that modify tone and pitch in real-time based on physiological stress markers.

Despite promising findings, several challenges remain. Children’s responses to voice traits vary based on age, temperament, and cultural background. Moreover, more research is necessary to determine whether voice-based interventions provide lasting stress relief benefits.

In conclusion, research suggests that voice traits such as pitch, duration, and intensity play a significant role in stress reduction for children. While technological applications leveraging these findings are growing, further empirical validation is necessary to optimize interventions for diverse populations. Future research should focus on personalizing voice modulation strategies to maximize therapeutic effectiveness.

Literature research

Relationship between hospitalised children and AI companions

Long-term hospitalisation emotionally impacts any patient, especially children, and is defined as a long period of time during which the patient is hospitalised and experiences isolation from his or her family, friends and home[10]. Children who are required to be in the hospital for significant periods of time tend to experience trauma not only physically, that comes directly from the illness and the treatment, but also emotionally and socially. In addition to limited contact with families and missing the routine of school life, hospitalised children need to accept the rules and limitations of their new environment. This can further create a negative impact on their well-being. Pediatric intensive care unit (PICU) hospitalisation places children at increased risk of persistent psychological and behavioural problems following discharge[11]. Despite tremendous advances in the development of sophisticated medical technologies and treatment regimes, approximately 25% of children demonstrate negative psychological and behavioural responses within the first year post-discharge. Parents describe decreases in children’s self-esteem and emotional well-being, increased anxiety, and negative behavioural changes (e.g., sleep disturbances, social isolation) post-PICU discharge[12]. School-aged children report delusional memories and hallucinations, increased medical fears, anxiety, changes in friendships and in their sense of self. Psychiatric syndromes, including post-traumatic stress disorder and major depression, have been diagnosed. These studies have generally been conducted in the first year post-PICU discharge with the majority assessing symptoms within the first 6 months. Furthermore, children under the age of 6 years who constitute the bulk of the PICU population have rarely been included in research to date, suggesting the incidence of negative psychological and behavioural responses may be greatly underestimated.

One solution for navigating this issue is virtual communication. With increasingly impressive developments in recent years, it offers a way for adolescents to keep in touch with the outside world while maintaining friendships and keeping up with school work. The use of mobile technology has shown a positive impact on hospitalized individuals in recent studies[13]. Regretfully, the same effects cannot be replicated with younger children (0 - 6 years old), who are yet to develop friendships and for whom play has a highly important role in their overall development[14]. To this end, our robot is presented as a solution. The relationship that a child develops with their social robot friend has been shown to be beneficial when it happens in a controlled environment[15].

Research: General use of Socially Assistive Robots in hospitals

During the COVID-19 pandemic, there was an increased emphasis on minimising human contact to avoid spreading the disease. This also allowed for significant developments in the field of robotics that are used in healthcare. We can divide robots in healthcare into 5 main groups:

- Interventional Robots - employed in performing precise surgical procedures

- Tele-operated Robots - enable healthcare professionals to perform tasks remotely

- Socially Assistive Robots (SARs) - focus on providing cognitive and emotional support through social interaction

- Assistive Robots - focus on providing physical support for individuals with impairments and disabilities

- Service Robots - handle logistical tasks within healthcare facilities

We focus on the third group - Socially Assistive Robots.

SARs are designed to engage with individuals, offering companionship and assistance without physical contact. In Belgium, a study introduced a robot named James to older adults with mild cognitive impairment during the first lockdown. Participants reported that James helped alleviate feelings of loneliness and encouraged meaningful activities, highlighting the potential of SARs to support mental health during such challenging times[16].

In long-term care facilities, SARs have been employed to perform routine health screenings, reducing direct contact between staff and residents. A study conducted in a Canadian long-term care center utilised a robot to autonomously screen staff members for COVID-19 symptoms. The findings indicated that staff had a positive attitude towards the robot, suggesting that SARs can effectively assist in health monitoring tasks while minimising infection risks[17].

Beyond health monitoring, SARs have been instrumental in providing social interaction and reducing feelings of isolation. Research has shown that the presence of SARs can lead to improved mental health outcomes and a reduction in feelings of loneliness among older adults[18][19].

The pandemic has also influenced public perception of SARs. As traditional social interactions became limited, individuals began to recognize the potential benefits of SARs in providing companionship and support. Surveys conducted during the pandemic reveal a positive shift in attitudes toward SARs, with many viewing them as viable companions to mitigate social isolation[20].

In mental healthcare, SARs serve roles such as companions, coaches, and therapeutic play partners. They assist in monitoring treatment participation, offering encouragement, and leading users through clinically relevant activities. Research indicates that SARs can be effective tools in mental health interventions, providing support and enhancing patient engagement [21].

In summary, there is great demand and use for Socially Assistive Robots in healthcare settings. Their ability to provide emotional support, facilitate social interactions, and perform essential monitoring tasks positions them as vital tools in patient care and in addressing the challenges of social isolation.

Research: Human and child reactions to facial expressions

We take a look at five studies examining the influence of facial expressions on emotional and behavioral responses, with implications for stress reduction in hospitalized children aged 3-6. These insights are relevant to designing a robot voice to provide emotional support in a clinical setting.

In a study where adults were presented with emotional facial expressions, happy faces elicited automatic approach tendencies, while angry faces prompted conscious avoidance. Sad expressions produced a mixed response—initial approach followed by deliberate withdrawal—suggesting nuanced emotional processing.[22] This indicates that a robot voice with a positive tone may foster engagement and reduce stress in children, whereas an angry tone could heighten anxiety.

In an experiment involving adults maintaining a genuine smile after a stressful arithmetic task, those who did so exhibited faster heart rate recovery compared to those with neutral expressions. The effect was enhanced when participants were aware of the smile’s purpose, supporting the facial feedback hypothesis. This suggests that a robot conveying warmth could facilitate physiological stress recovery in children following a challenging activity.[23]

Another study measured adults’ facial muscle responses to happy and angry expressions, finding that happy faces rapidly activated smiling muscles, while angry faces triggered frowning muscles, reflecting instinctive mimicry.[24]

In research observing preschoolers aged 3-5 during peer interactions, children displaying positive behaviors, such as smiling, were often linked to maternal reports of secure relationships. Maternal sensitivity to emotional cues correlated with increased prosocial behavior in children. This implies that a robot voice emulating a nurturing caregiver could enhance feelings of security and mitigate stress in a hospital environment.[25]

Finally, in a study with infants aged 3-6 months, participants fixated longer on happy faces, accompanied by heart rate deceleration, while angry or fearful faces increased heart rate, signaling stress.[26]

Collectively, these findings demonstrate that positive facial expressions, or their vocal equivalents, promote engagement, attention, and physiological calm across developmental stages. Conversely, negative expressions like anger induce avoidance and stress responses. Automatic mimicry of positive cues further enhances emotional well-being. A robot voice with a calm, nurturing tone may thus effectively support stress reduction in hospitalized children.

Project Timeline

| Week | Tasks |

|---|---|

| Week 1 | Research on AI voice characteristics, user specifications, state of the art and existing robots. Research into parts (prior to switching to research project) and AI required (open source AI tools for developing an AI companion - idea scrapped) |

| Week 2 | Meeting with Nina van Roji, refining problem statement, refining ideas, discussing different testing methods used by other researchers, discussing the target of our research and what questions will be answered, discussing what voices will be used and what robots will be used, settling on the voice features we would test. |

| Week 3 | Discussing permissions and course of action with Korein TU/e daycare. Getting familiar with PRAAT and WaveNet. Creating the artificial voices. |

| Week 4 | Distributing the survey, collecting data, analysing survey results. |

| Week 5 | Implementing the voice. |

| Week 6 | Child response study at Korein TU/e daycare. |

| Week 7 | Final analysis and conclusions, writing research paper |

Research Methodology

Development Planning

To determine the most effective voice for an emotionally supportive robot, we will:

- Identify Key Voice Characteristics

- Survey Adult Participants

- Test in a Pediatric Setting

Testing Phases

1. Adult Survey

• Participants will listen to recordings with variations in intensity, pitch and speaking rate.

• They will rate each voice on a scale of 1 to 10 based on how soothing they find it.

• Results will determine which voice features are most effective in reducing stress.

2. Child Response Study (Korein TU/e Daycare)

• Children will engage in a stress-inducing activity (e.g. building LEGO with an unrealistic time constraint)

• Emotional responses are documented and results are compared.

(details on the exact set-up are still being discussed)

(details on how to test the facial expressions are also still being discussed)

Child Response Study @ Korein TU/e Daycare

Objective

To determine if the robot voice reduces stress and enhances performance in hospitalized children aged 3-6 during a stressful task.

Setup

- Voices: Single voice chosen by the survey (or an average of the features of the survey)

- Stressful situation: Lego-building challenge with a timer to induce mild stress. (if we decide to go with this)

- Measurements:

- Performance: Number of Lego pieces correctly placed within the time limit.

- Observed Stress: The behaviour of the child during the building task - were children visibly anxious or calm?

- Subjective Feedback: Talking to the children and getting their feedback.

Hypothesis

Children who listened to a 'calming' voice will perform better in the stressful situation than children who heard no voice.

Experimental Procedure

1. Preparation

- Randomly assign each child to one of the voice conditions.

2. Voice Exposure

- The robot plays the assigned voice reading the selected text.

3. Stressful Task

- After the voice exposure, present the Lego task.

- Start the 3-minute timer and observe the child’s performance.

- Note qualitative behaviors (e.g., frustration, calmness, verbal comments).

4. Post-Task Assessment

- Stop the timer and count correctly placed Lego pieces.

- Ask for the child’s subjective rating:

- “Did you like the robot’s voice?” (Yes/No)

- “How did the voice make you feel?”- show a smiley-face scale (happy 😊, neutral 😐, sad 😞)

5. Control Group

Repeat steps 1-4 without step 2, to gain information on how children perform the timed task on average.

6. Data Analysis

Examine the results of all groups and compare the mean and standard deviation for each group.
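As a rough illustration of steps 1 and 6, the sketch below randomly assigns children to a condition and then summarises the number of correctly placed Lego pieces per group. It is only a minimal sketch under stated assumptions: the condition labels `voice` and `no_voice`, the function names and all scores are made up for illustration and are not results from the study.

```python
import random
from statistics import mean, stdev


def assign_conditions(child_ids, conditions=("voice", "no_voice"), seed=0):
    """Shuffle the children and deal them round-robin into the conditions.

    The condition labels are hypothetical placeholders.
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(child_ids)
    rng.shuffle(shuffled)
    return {
        child: conditions[i % len(conditions)]
        for i, child in enumerate(shuffled)
    }


def summarise(scores_by_condition):
    """Return (n, mean, sample standard deviation) for each condition."""
    summary = {}
    for condition, scores in scores_by_condition.items():
        # stdev needs at least two data points
        spread = stdev(scores) if len(scores) > 1 else 0.0
        summary[condition] = (len(scores), mean(scores), spread)
    return summary


# Made-up counts of correctly placed Lego pieces, for illustration only.
example_scores = {
    "voice": [12, 15, 11, 14],
    "no_voice": [10, 9, 13, 8],
}
example_assignment = assign_conditions(range(6))
example_summary = summarise(example_scores)

for condition, (n, avg, spread) in example_summary.items():
    print(f"{condition}: n={n}, mean={avg:.2f}, sd={spread:.2f}")
```

With groups this small, the means and standard deviations are descriptive only; whether a formal significance test is appropriate depends on the final number of participants.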

Adult Survey

While researching what type of text would be best, we came across a text that was used in research on the effects of voice qualities on relaxation. The study, titled "The Effects of Voice Qualities in Mindfulness Meditation Apps on Enjoyment, Relaxation State, and Perceived Usefulness" [27], was conducted by Stephanie Menhart and James J. Cummings. Although the study focused on mindfulness meditation apps, the story itself can be used for our research on voice effects. File:Base Audio.mp3

The story/text used

"In a small village nestled between rolling hills and lush forests, there lived a young girl named Elara. Elara had a special gift: she could communicate with animals. Every morning, she would wander into the forest, where the birds, squirrels, and deer would gather around her, eager to share their stories. One day, as Elara was exploring a hidden glade, she stumbled upon a wounded fox. The fox's leg was caught in a trap, and it whimpered in pain. Elara's heart ached for the poor creature, and she gently approached it, speaking in soothing tones. "Don't worry, little one. I'll help you," she whispered. With great care, Elara freed the fox from the trap and tended to its wounds. The fox, grateful for her kindness, nuzzled her hand and licked her cheek. From that day on, the fox became Elara's loyal companion, following her on her daily adventures and protecting her from harm. As the seasons changed, Elara and the fox grew closer, their bond unbreakable. The villagers marvelled at their friendship, and Elara's gift of communication with animals became a source of wonder and inspiration for all who knew her. And so, Elara and her fox lived happily ever after, their hearts forever intertwined by the magic of their unique connection."

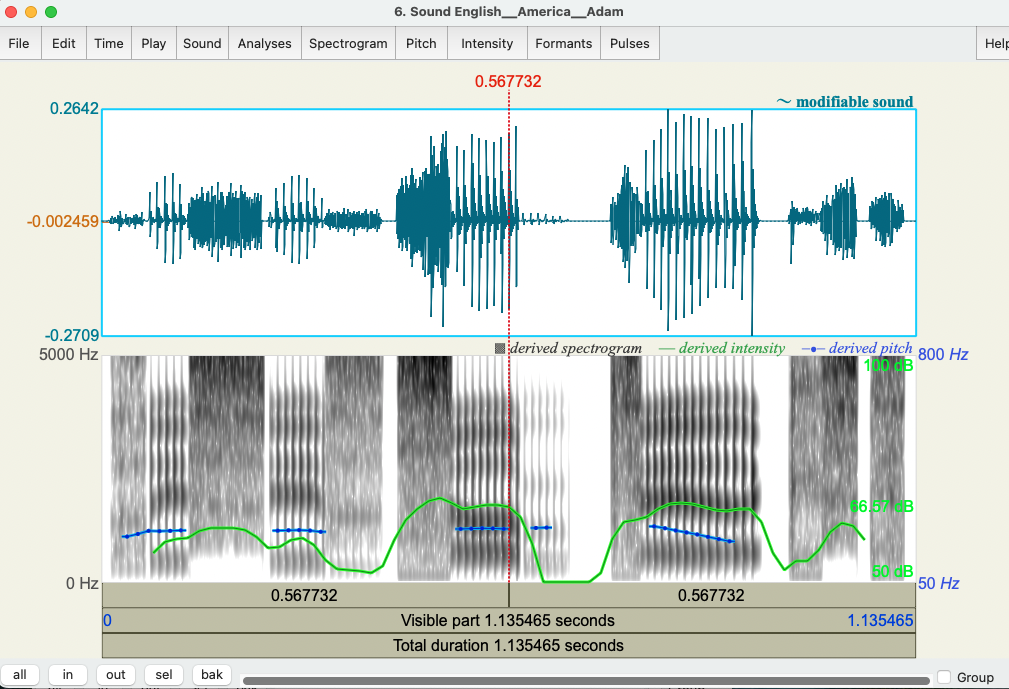

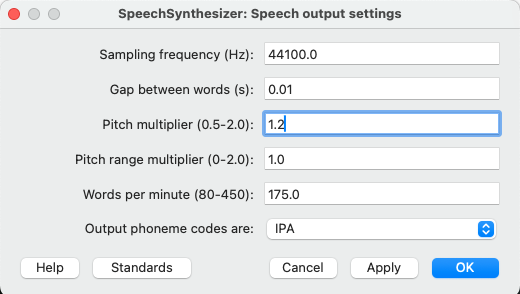

WaveNet transformed this text into speech (roughly 1 min), and we then used Praat to change the pitch, intensity and duration of the audio.

Is one minute enough to get a feel of the relaxing properties of the voice?

Yes! Multiple studies have found that, in guided meditation for example, one minute is enough for people to start feeling more relaxed(*). People taking the survey have a bit over a minute of audio to get a feel for the voice and choose a rating for each property, comparing the versions and finding which one is more relaxing.

Software and tools used

Praat

We began testing various voices in Praat to get an initial feel for this software's capabilities. Our focus was understanding how to manipulate key features such as pitch, intensity and words per minute. As we navigated through these features, we became more familiar with the interface and gained a better understanding of how these parameters could shape the overall speech output.
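To make the three parameters more concrete, the sketch below shows the arithmetic behind typical manipulations: a pitch shift in semitones corresponds to a frequency ratio of 2^(n/12), an intensity change in dB corresponds to an amplitude factor of 10^(dB/20), and a speaking-rate factor divides the duration. This is only a sketch; the base pitch of 200 Hz and the specific shift values are hypothetical and are not the settings used in our survey. Only the roughly one-minute base duration comes from the text above.

```python
def semitones_to_ratio(semitones):
    """Frequency ratio for a pitch shift given in semitones."""
    return 2.0 ** (semitones / 12.0)


def db_to_amplitude_factor(gain_db):
    """Amplitude scaling factor for an intensity change given in dB."""
    return 10.0 ** (gain_db / 20.0)


def scaled_duration(duration_s, rate_factor):
    """Duration after speeding up (factor > 1) or slowing down (factor < 1)."""
    return duration_s / rate_factor


BASE_PITCH_HZ = 200.0   # hypothetical mean pitch of the base voice
BASE_DURATION_S = 60.0  # the base recording is roughly one minute long

# Hypothetical variants: one parameter is changed at a time.
variants = []
for semitones in (-2, 2):
    variants.append(("pitch", semitones, BASE_PITCH_HZ * semitones_to_ratio(semitones)))
for gain_db in (-6, 6):
    variants.append(("intensity", gain_db, db_to_amplitude_factor(gain_db)))
for rate in (0.85, 1.15):
    variants.append(("rate", rate, scaled_duration(BASE_DURATION_S, rate)))

for feature, setting, value in variants:
    print(f"{feature:9s} setting={setting:>5} -> {value:.2f}")
```

Changing one parameter at a time keeps the survey conditions comparable, since any difference in ratings can then be attributed to a single feature.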

WaveNet

We used WaveNet, a deep neural network developed by Google DeepMind, to create a text-to-speech (TTS) system. WaveNet generates highly natural-sounding speech by modeling the raw waveform of audio, allowing it to produce realistic intonations, pitches, and nuances that mimic human speech. The generated speech had a lifelike quality, with smooth transitions between words and realistic pacing, which made the TTS output sound much more natural compared to traditional text-to-speech systems. The final result was a highly accurate and fluid voice that could be used for our research.
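For readers who want to reproduce a similar base voice, WaveNet voices are publicly available through the Google Cloud Text-to-Speech service. The sketch below only builds the JSON request body for that service's `text:synthesize` REST method and does not send it. It is an assumption that this route matches how our base audio was produced; the voice name `en-US-Wavenet-F`, the helper name and the default values are illustrative choices, not our actual settings.

```python
import json


def build_synthesis_request(
    text,
    voice_name="en-US-Wavenet-F",  # illustrative voice, not necessarily ours
    language_code="en-US",
    speaking_rate=1.0,
    pitch_semitones=0.0,
    volume_gain_db=0.0,
):
    """Build a request body for the Cloud Text-to-Speech text:synthesize method."""
    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": voice_name},
        "audioConfig": {
            "audioEncoding": "LINEAR16",  # uncompressed audio, easy to open in Praat
            "speakingRate": speaking_rate,
            "pitch": pitch_semitones,
            "volumeGainDb": volume_gain_db,
        },
    }


story_opening = (
    "In a small village nestled between rolling hills and lush forests, "
    "there lived a young girl named Elara."
)
request_body = build_synthesis_request(story_opening)
print(json.dumps(request_body, indent=2))
```

Sending this body requires a Google Cloud project and credentials, which are outside the scope of this sketch. Leaving the rate, pitch and gain at their neutral defaults yields an unmodified base voice, so that all manipulations can be applied afterwards in Praat.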

Progress - Workload

Week 1

| Name | Task | Work |

|---|---|---|

| Mara Ioana Burghelea | Users | Team meeting (1h), research (5h), user specifications on wiki (2h) |

| Malina Corduneanu | Research - psychology (AI/ robots and kids) | Team meeting (1h) |

| Alexia Dragomirescu | State of the art | Team meeting (1h), research(4h), state of the art(2h), study into existing robots and functionalities(1h) |

| Briana Anamaria Isaila | Functionality of the robot | Team meeting (1h), research papers (6h), looking up parts for the robot and open source software (3h), putting information on wiki (2h) |

| Marko Mrvelj | Research - robots in healthcare | Team meeting (1h), research (5h) |

| Ana Sirbu | Problem statement | Team meeting (1h) |

Week 2

| Name | Task | Work |

|---|---|---|

| Mara Ioana Burghelea | Team meeting with masters student Nina van Roji (1h), research into traits of relaxing voices (1h), talked to the TU/e daycare for possible robot testing. | |

| Malina Corduneanu | Team meeting with masters student Nina van Roji (1h) | |

| Alexia Dragomirescu | Team meeting with masters student Nina van Roji (1h), talked to the TU/e daycare for possible robot testing. | |

| Briana Anamaria Isaila | Team meeting with masters student Nina van Roji (1h), talked to the TU/e daycare for possible robot testing. | |

| Marko Mrvelj | Team meeting with masters student Nina van Roji (1h), updating wiki (2h) | |

| Ana Sirbu | Team meeting with masters student Nina van Roji (1h) | |

In the second week we set up a meeting with a Language studies internship student from Utrecht University, Nina van Roji.

Development Planning:

To make sure that we can develop a robot which is actually able to help children reduce their stress in special circumstances, such as hospital care, we first need to make sure that our technology can reduce stress in general, and only then dive deeper into the medical environment. Therefore, the first phase of development concerns finding the best voice for our child companion robot. To do this, we will conduct research alongside an internship student from Utrecht University, Nina van Roji, to find the most soothing voice for our robot. Based on the results, our technology aims to have an AI assistant implemented which communicates with the child using such a voice. Besides communication, the robot could have different verbal behaviors integrated, such as telling stories, playing music or possibly even playing games with the child, all alongside daily conversations.

Testing phases:

In order to make sure that our technology is able to reduce stress in children through verbal interaction, we will start by conducting some research into what type of AI voices make people feel most at ease and more relaxed. We want to start from the default, known robotic voice and modify it based on three factors: intensity, pitch, and speaking-rate.

Based on these, we will generate multiple variations of robotic voices and create a questionnaire that we will distribute. The questionnaire will have multiple recordings of the same phrase (possibly a breathing exercise), and the participants will be able to award each voice a grade from 1 to 10 based on how soothing they find it. This grading scheme aims to provide a more statistical approach to what is otherwise a very subjective experience, unique to each individual. Based on the answers from this questionnaire, we will then develop the most fitting AI voice based on the traits of the most soothing voices and apply it to our AI assistant, which we will train on our specific needs. These will focus on the interaction with the child, meaning we will implement functionalities such as storytelling, conversation and music playing.
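The grading scheme described above could be summarised along the following lines. This is a minimal sketch: the voice labels and all ratings are invented for illustration, and the function names are hypothetical rather than part of our actual analysis.

```python
from collections import defaultdict
from statistics import mean


def mean_rating_per_voice(responses):
    """Average the 1-10 grades per voice variant.

    `responses` is an iterable of (voice_label, grade) pairs.
    """
    ratings = defaultdict(list)
    for voice, grade in responses:
        if not 1 <= grade <= 10:
            raise ValueError(f"grade {grade} for {voice!r} is outside 1-10")
        ratings[voice].append(grade)
    return {voice: mean(grades) for voice, grades in ratings.items()}


def rank_voices(responses):
    """Sort voice variants from most to least soothing by mean grade."""
    averages = mean_rating_per_voice(responses)
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Invented example data: (voice variant, grade given by one participant).
example_responses = [
    ("low_pitch", 8), ("low_pitch", 7),
    ("high_pitch", 4), ("high_pitch", 5),
    ("slow_rate", 9), ("slow_rate", 8),
]
ranking = rank_voices(example_responses)

for voice, average in ranking:
    print(f"{voice}: {average:.1f}")
```

Averaging per variant is the simplest possible summary; because different participants use the 1 to 10 scale differently, it may also be worth comparing each participant's grades relative to their own mean.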

(The next stage would be to possibly test out technology on a child during playing time.)

After implementing an appropriate voice and having a fully developed model, our final testing step involves introducing the robot into the child’s environment and documenting their responses. Since the experience is highly subjective for each child involved, we have yet to decide how to set our parameters in order to gather the maximum amount of information on the children’s emotional response to our robot. One promising idea involves setting up a stress-inducing situation for the children, such as building Lego within an unrealistic time limit, and having the robot interact with the children, encouraging them and cheering them on. During and after this experience we will document how the child responds to the situation and to the robot. To obtain clearer results, we could compare the responses of children with whom the robot interacted during the stressful situation to those of children who were left to work alone in that time span. This way we would have a clear baseline for the situation we want to analyze.

Week 3

| Name | Task | Work |

|---|---|---|

| Mara Ioana Burghelea | Team meeting with master's student Nina van Roji (1h) | |

| Malina Corduneanu | Team meeting with master's student Nina van Roji (1h) | |

| Alexia Dragomirescu | State of the art, Implementation | Team meeting with master's student Nina van Roji (1h), Update Wiki (3h), research into Miro (3h), look into open-source software and tools (2h), research into Zenbo (1h) |

| Briana Anamaria Isaila | Create voices for the survey we are sending out to adults | Set up the base voice in WaveNet (2h), prepare the voice features for the survey in Praat (4h), Update Wiki (3h) |

| Marko Mrvelj | Team meeting with master's student Nina van Roji (1h), Preparing survey and detailed experiment plan (5h) | |

| Ana Sirbu | Team meeting with master's student Nina van Roji (1h) | |

During this week, our objectives crystallised and we got to create some voices in Praat and manipulate them for the survey.

Implementation

This week we also started looking into how to implement the different voice characteristics and which existing software we can use to help us achieve our goals.

For the scope of this course, we are allowed to use existing robots such as Miro and Zenbo. We decided to look into the specifications of both and try to assess which one is easier to work with for our purposes. We will start with Miro and present an overview of the implementation steps below, as well as some possible challenges and disadvantages of using this kind of robot.

Miro is a biologically inspired robot designed to interact using simple behaviours. It does not have built-in AI-powered speech synthesis but can be programmed to use predefined speech sounds or integrate external speech synthesis modules.

Because of this, in order to implement different speech characteristics, we would have to pre-record a set of sentences whose voice characteristics we would later manipulate. Then, we would have to use an external TTS engine running on a Raspberry Pi that controls Miro.

Some tools and software we could use:

1. Festival Speech Synthesis System – Festival is an open-source speech synthesis system that allows for basic pitch and speed control. It is useful for generating different speech characteristics without the need for deep learning.

2. Praat – Praat is a tool for phonetic analysis and speech modification. It allows researchers to manually or programmatically adjust pitch, intensity, and duration in recorded speech files.

3. SoX (Sound eXchange) – SoX is a command-line tool for manipulating audio files. It can be used to adjust pitch, speed, and volume in speech samples before playing them back through Miro.

4. Raspberry Pi + Python – A Raspberry Pi running Python scripts can control Miro and manage speech synthesis using external TTS software. Python libraries such as pyfestival and pydub can be used for integrating speech synthesis.

5. Arduino + DFPlayer Mini – If using pre-recorded speech, an Arduino board combined with a DFPlayer Mini module can store and play back different speech clips with varying characteristics.
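To make the SoX option more concrete, the sketch below builds a SoX command line that adjusts pitch, speaking rate and volume of a recorded phrase using SoX's `pitch`, `tempo` and `vol` effects. The file names and the helper `build_sox_command` are hypothetical, and the parameter values are arbitrary examples rather than settings we have chosen.

```python
import shlex


def build_sox_command(src, dst, semitones=0.0, tempo=1.0, volume=1.0):
    """Build a SoX argument list that shifts pitch, changes rate and scales volume.

    semitones: pitch shift; SoX's `pitch` effect expects cents (100 per semitone).
    tempo: speed factor for SoX's `tempo` effect (1.0 = unchanged, pitch preserved).
    volume: linear gain for SoX's `vol` effect (1.0 = unchanged).
    """
    if tempo <= 0 or volume < 0:
        raise ValueError("tempo must be positive and volume non-negative")
    cents = round(semitones * 100)
    return ["sox", src, dst,
            "pitch", str(cents),
            "tempo", str(tempo),
            "vol", str(volume)]


# Hypothetical file names: a lower, slower and slightly quieter variant
cmd = build_sox_command("phrase.wav", "phrase_calm.wav",
                        semitones=-2, tempo=0.9, volume=0.8)
print(shlex.join(cmd))
```

With SoX installed, the returned list could be passed to `subprocess.run(cmd, check=True)` to produce the modified file, which could then be played back through the robot.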

There is a lot of other open-source software that we could use to implement the goals of our project, such as Mozilla TTS (an open-source, deep-learning-based TTS with control over pitch and duration) and eSpeak NG (a lightweight, open-source TTS engine that supports multiple languages and voice customization).

A main disadvantage of Miro is that it has limited onboard computing power and no built-in AI speech model, so it relies on preprocessed or streamed audio. Further research into the implementation is needed, and other challenges might show up during the development process, but the research above outlines the possibilities Miro offers.

Week 4

Week 5

Week 6

Week 7

References

- ↑ https://www.researchgate.net/publication/338659403_The_Impact_of_Hospitalization_on_Psychophysical_Development_and_Everyday_Activities_in_Children

- ↑ Hung, L., Liu, C., Woldum, E. et al. The benefits of and barriers to using a social robot PARO in care settings: a scoping review. BMC Geriatr 19, 232 (2019). https://doi.org/10.1186/s12877-019-1244-6

- ↑ Wang, X., Shen, J., & Chen, Q. (2022). How PARO can help older people in elderly care facilities: A systematic review of RCT. International journal of nursing knowledge, 33(1), 29–39. https://doi.org/10.1111/2047-3095.12327

- ↑ Abdulrauf, Habeeb. (2024). Assessing the Influence of Pepper Robot Facial Design on Emotion and Perception of First-Time Users During Interaction. 10.13140/RG.2.2.25878.66888.

- ↑ Stiehl, W.D. & Lieberman, J & Breazeal, Cynthia & Basel, L. & Cooper, R. & Knight, H. & Maymin, Allan & Purchase, S.. (2006). The huggable: a therapeutic robotic companion for relational, affective touch. 1290- 1291. 10.1109/CCNC.2006.1593253.

- ↑ Kyrarini, Maria & Lygerakis, Fotios & Rajavenkatanarayanan, Akilesh & Sevastopoulos, Christos & Nambiappan, Harish Ram & Kodur, Krishna & ramesh babu, Ashwin & Mathew, Joanne & Makedon, Fillia. (2021). A Survey of Robots in Healthcare. Technologies. 9. 8. 10.3390/technologies9010008.

- ↑ Wong, E. C. H., Velleman, S. L., Tong, M. C. F., & Lee, K. Y. S. Pitch Variation in Children With Childhood Apraxia of Speech: Preliminary Findings.

- ↑ Hunter E. J. (2009). A comparison of a child's fundamental frequencies in structured elicited vocalizations versus unstructured natural vocalizations: a case study. International journal of pediatric otorhinolaryngology, 73(4), 561–571. https://doi.org/10.1016/j.ijporl.2008.12.005

- ↑ Quam, C., & Swingley, D. (2012). Development in children's interpretation of pitch cues to emotions. Child development, 83(1), 236–250. https://doi.org/10.1111/j.1467-8624.2011.01700.x

- ↑ View of STRESS IN PEDIATRIC PATIENTS – THE EFFECT OF PROLONGED HOSPITALIZATION. (n.d.). https://www.revmedchir.ro/index.php/revmedchir/article/view/273/240

- ↑ Rennick, J.E., Dougherty, G., Chambers, C. et al. Children’s psychological and behavioral responses following pediatric intensive care unit hospitalization: the caring intensively study. BMC Pediatr 14, 276 (2014). https://doi.org/10.1186/1471-2431-14-276

- ↑ Rennick JE, Rashotte J. Psychological outcomes in children following pediatric intensive care unit hospitalization: a systematic review of the research. Journal of Child Health Care. 2009;13(2):128-149. doi:10.1177/1367493509102472

- ↑ Maor, D., & Mitchem, K. (2020). Hospitalized Adolescents’ Use of Mobile Technologies for Learning, Communication, and Well-Being. Journal of Adolescent Research, 35(2), 225-247. https://doi.org/10.1177/0743558417753953

- ↑ Paukkonen, K., & Sallinen, K. (2022). Play Interventions in Paediatric Nursing for Children Aged 0-6 Years Old : literature review. Theseus. https://www.theseus.fi/handle/10024/748388

- ↑ Kurian, N. (2023). Toddlers and Robots? The Ethics of Supporting Young Children with Disabilities with AI Companions and the Implications for Children’s Rights. International Journal of Human Rights Education, 7(1). Retrieved from https://repository.usfca.edu/ijhre/vol7/iss1/9

- ↑ Van Assche, M., Moreels, T., Petrovic, M., Cambier, D., Calders, P., & Van de Velde, D. (2023). The role of a socially assistive robot in enabling older adults with mild cognitive impairment to cope with the measures of the COVID-19 lockdown: A qualitative study. Scandinavian Journal of Occupational Therapy, 30(1), 42-52.

- ↑ Getson, C., & Nejat, G. (2022). The adoption of socially assistive robots for long-term care: During COVID-19 and in a post-pandemic society. Healthcare Management Forum, 35(5), 301-309

- ↑ Getson, C., & Nejat, G. (2021). Socially Assistive Robots Helping Older Adults through the Pandemic and Life after COVID-19. Robotics, 10(3), 106.

- ↑ "Application of Social Robots in Healthcare: Review on the Field, Aware Home Environments" - Sensors, 2023.

- ↑ Ghafurian, M., Ellard, C., & Dautenhahn, K. (2020). Social Companion Robots to Reduce Isolation: A Perception Change Due to COVID-19. arXiv preprint, arXiv:2008.05382

- ↑ "Integrating Socially Assistive Robotics into Mental Healthcare Interventions: Applications and Recommendations for Expanded Use" - Clinical Psychology Review, 2015.

- ↑ Seidel, E.-M., Habel, U., Kirschner, M., Gur, R. C., & Derntl, B. (2010). The impact of facial emotional expressions on behavioral tendencies in females and males. *Journal of Experimental Psychology: Human Perception and Performance*, 36(2), 500-507. [PMC](https://pmc.ncbi.nlm.nih.gov/articles/PMC2852199/)

- ↑ Kraft, T. L., & Pressman, S. D. (2012). Grin and bear it: The influence of manipulated facial expression on the stress response. *Psychological Science*, 23(11), 1372-1378. [PMC](https://pmc.ncbi.nlm.nih.gov/articles/PMC3530173/)

- ↑ Dimberg, U., Thunberg, M., & Grunedal, S. (2000). Facial reactions to emotional facial expressions: Affect or cognition? *Biological Psychology*, 52(2), 153-164. [ScienceDirect](https://www.sciencedirect.com/science/article/abs/pii/S0006322300010660)

- ↑ Brophy-Herb, H. E., Horodynski, M., Dupuis, S. B., Bocknek, E. L., & Schiffman, R. (2017). Preschool children’s observed interactions and their mothers’ reflections of relationships with their children. *The Journal of Genetic Psychology*, 178(2), 118-131. [Taylor & Francis](https://www.tandfonline.com/doi/abs/10.1080/00221325.2017.1286630)

- ↑ Nelson, C. A., & Dolgin, K. G. (1985). Infants’ responses to facial expressions of happiness, anger, and fear. *Child Development*, 56(1), 221-229. [JSTOR](https://www.jstor.org/stable/1129477)

- ↑ Menhart, S., & Cummings, J. J. (2022). The Effects of Voice Qualities in Mindfulness Meditation Apps on Enjoyment, Relaxation State, and Perceived Usefulness. Technology, Mind, and Behavior, 3(4: Winter). https://tmb.apaopen.org/pub/pop6taai