PRE2023 3 Group4

Group members

This study was approved by the ERB on Sunday 03/03/2024 (number ERB2024IEIS22). Links to a few important documents that were used for this study are listed below:

| Name | Student Number | Current Study Program | Role or Responsibility |
|---|---|---|---|
| Margit de Ruiter | 1627805 | BPT | Note-taker |
| Danique Klomp | 1575740 | BPT | Contact person |
| Emma Pagen | 1889907 | BAP | Final responsibility for wiki updates |
| Liandra Disse | 1529641 | BPT | Planner |
| Isha Rakhan | 1653997 | BPT | Programming responsible |

Planning

Each week, there will be a mentor meeting on Monday morning, followed by a group meeting. Another group meeting will be held on Thursday afternoon, and by Sunday afternoon the wiki will be updated with the work done that week (weekly deliverable).

Week 1

- Introduction to the course and team

- Brainstorm ideas for the project and select one (inform the course coordinator)

- Conduct a literature review

- Specify the problem statement, user group and requirements, objectives, approach, milestones, deliverables and planning for the project

Week 2

- Get confirmation for using a robot lab and for which robot to use

- Ask for and obtain approval to conduct this study

- Create the research proposal (methods section of the research paper)

- If approval has already been given, start creating the survey, programming the robot or creating a video of the robot

Week 3

- If needed, discuss the final study specifics, including planning the session for conducting the study

- If possible, finish creating the survey, programming the robot or creating a video of the robot

- Make the consent form

- Start finding and informing participants

Week 4

- Final arrangements for the study set-up (milestone 1)

- Try to start conducting the study

Week 5

- Finish conducting the study (milestone 2)

Week 6

- Conduct the data analysis

- Finalize the methods section, e.g. by adding participant demographics and incorporating feedback

- If possible, start writing the results, discussion and conclusion sections

Week 7

- Finish writing the results, discussion and conclusion sections and incorporate feedback, so that all required sections of the research paper are written (milestone 3)

- Prepare the final presentation

Week 8

- Give the final presentation (milestone 4)

- Finalize the wiki (final deliverable)

- Fill in the peer review form (final deliverable)

Individual effort per week

Week 1

| Name | Total Hours | Breakdown |
|---|---|---|
| Danique Klomp | 13.5 | Intro lecture (2h), group meeting (2h), group meeting (2h), literature search (4h), writing summary of literature search (2h), writing first draft of problem statement (1.5h) |
| Liandra Disse | 13.5 | Intro lecture (2h), group meeting (2h), searching and reading literature (4h), writing summary (2h), group meeting (2h), updating project and meeting planning (1.5h) |
| Emma Pagen | 12 | Intro lecture (2h), group meeting (2h), literature search (4h), writing a summary of the literature (2h), writing the approach for the project (1h), updating the wiki (1h) |
| Isha Rakhan | 11 | Intro lecture (2h), group meeting (2h), group meeting (2h), collecting and summarizing literature (5h) |
| Margit de Ruiter | 13 | Intro lecture (2h), group meeting (2h), literature research (4h), writing summary of literature (3h), group meeting (2h) |

Week 2

| Name | Total Hours | Breakdown |
|---|---|---|
| Danique Klomp | 16.5 | Tutor meeting (35min), group meeting 1 (2.5h), group meeting 2 (3h), send/respond to mail (1h), literature on interview protocols and summarize (3h), literature on interview questions (6.5h) |
| Liandra Disse | 12 | Tutor meeting (35min), group meeting (3h), write research proposal (3h), group meeting (3h), finalize research proposal and create consent form (2.5h) |
| Emma Pagen | 11.5 | Tutor meeting (35min), group meeting (3h), write research proposal (2h), group meeting (3h), finalize research proposal and create consent form (1.5h), updating wiki (1.5h) |
| Isha Rakhan | 10 | Research on programming (7h), group meeting (3h) |
| Margit de Ruiter | 11.5 | Tutor meeting (35min), group meeting (3h), read and summarize literature on Pepper (3h), group meeting (3h), research comfort questions for the interview (2h) |

Week 3

| Name | Total Hours | Breakdown |
|---|---|---|
| Danique Klomp | 14 | Tutor meeting (35min), group meeting 1 (3h), meeting Task (3h), preparation of thematic analysis & protocol (2h), mail and contact (1.5h), meeting Zoe (1h), group meeting (3h) |
| Liandra Disse | 12 | Tutor meeting (35min), group meeting 1 (3h), meeting Task (3h), update (meeting) planning (1h), prepare meeting (1h), group meeting (3h), find participants (30min) |
| Emma Pagen | 12 | Tutor meeting (35min), group meeting 1 (3h), finish ERB form (1h), create LimeSurvey (1.5h), make an overview of the content sections of the final wiki page (1h), group meeting 2 (3h), updating the wiki (2h) |
| Isha Rakhan | 12.5 | Tutor meeting (35min), group meeting 1 (3h), meeting Zoe (1h), group meeting (3h), programming (5h) |
| Margit de Ruiter | n/a | *Was not present this week, but informed the group in advance and had a good reason* |

Week 4

| Name | Total Hours | Breakdown |
|---|---|---|
| Danique Klomp | 11.5 | Tutor meeting (35min), group meeting (3h), review interview questions (1h), finding participants (0.5h), mail and contact (1h), reading and reviewing wiki (1.5h), group meeting (4h), lab preparations (1h) |
| Liandra Disse | 11 | Prepare and catch up after missing a meeting due to sickness (1.5h), find participants (0.5h), planning (1h), group meeting (4h), set up final report and write introduction (4h) |
| Emma Pagen | 13 | Tutor meeting (35min), group meeting (3h), adding interview literature (2h), find participants (0.5h), group meeting (4h), reviewing interview questions (1h), going over introduction (1h), updating wiki (1h) |
| Isha Rakhan | 11.5 | Tutor meeting (35min), group meeting (3h), research and implement AI voices (2h), document choices for Pepper's behavior (2h), group meeting (4h) |
| Margit de Ruiter | 11 | Tutor meeting (35min), group meeting (1h), find participants (0.5h), testing interview questions (1h), group meeting (4h), start writing methods (4h) |

Week 5

| Name | Total Hours | Breakdown |
|---|---|---|
| Danique Klomp | 24.5 | Tutor meeting (30min), group meeting (experiment) (3h), transcribe first round of interviews (2h), familiarize with interviews (5h), highlight interviews (2h), group meeting (experiment) (3h), transcribe second round of interviews (2h), first round of coding (3h), refine and summarize codes (2h), prepare next meeting (1h), adjust participants in methods section (1h) |
| Liandra Disse | 21.5 | Tutor meeting (30min), group meeting (experiment) (3h), transcribe, familiarize and code first interviews (6h), incorporate feedback on introduction (1h), group meeting (experiment) (3h), transcribe, familiarize, code second interviews and refine codes (7h), extend methods section (1h) |
| Emma Pagen | 20.5 | Tutor meeting (30min), group meeting (experiment) (3h), transcribe first round of interviews (3h), familiarize with interviews and code first interviews (4h), group meeting (experiment) (3h), transcribe second round of interviews (3h), familiarize with interviews and code second interviews (4h) |
| Isha Rakhan | 14.5 | Tutor meeting (30min), group meeting (experiment) (3h), group meeting (experiment) (3h), transcribing all of the interviews (4h), coding all of the interviews (4h) |
| Margit de Ruiter | 17 | Tutor meeting (30min), group meeting (experiment) (3h), transcribing all the interviews (7h), group meeting (experiment) (3h), coding all the interviews (3.5h) |

Week 6

Introduction

The use of social robots, which are specifically designed to interact with humans and other robots, has been rising for the past several years. These robots differ from the robots we have grown used to over the past decades, which often perform only specific, dedicated tasks. Social robots are currently used mostly in service settings, as companions and as support tools[1][2]. In many promising sectors of application, such as healthcare and education, social robots must be able to communicate with people in ways that are natural and easily understood. To make this human-robot interaction (HRI) feel natural and enjoyable for humans, robots must follow human social norms[3]. This requirement stems from humans anthropomorphizing robots: we attribute human characteristics to robots, and engage and form relationships with them as if they were human. We do this to make the robot’s behavior familiar, understandable and predictable, and to infer the robot’s mental state. However, for this inference to be both correct and intuitive, the robot’s behavior must be aligned with our social expectations and our interpretations of mental states [4].

One very important element of human communication is the use of nonverbal expressions of emotion, such as facial expressions, gaze, body posture, gestures and actions[3][4]. In human-human as well as human-robot interaction, these nonverbal cues support and add meaning to verbal communication, and expressions of emotion specifically help build deeper and more meaningful relationships, facilitate engagement and co-create experiences[5]. Besides adding conversational content, humans have also been shown to unconsciously mimic the emotional expressions of their conversational partner, a phenomenon known as emotional contagion, which helps them empathize with others by simulating their feelings[5][3]. Due to our tendency to anthropomorphize robots, emotional contagion may also occur during HRI and could help users feel positive affect while interacting with a social robot[5]. Artificial emotions can be used in social robots not only to facilitate believable HRI, but also to provide feedback to the user about the robot’s internal state, goals and intentions[6]. Moreover, they can act as a control system through which we learn what drives the robot’s behavior and how this behavior is affected by, and adapts to, different factors over time[6]. Finally, the ability of social robots to display emotions is crucial for forming long-term social relationships, which people will naturally seek due to the anthropomorphic nature of social robots[3].

Altogether, the important role of emotions in human-robot interaction requires us to gather information about how robots can and should display emotions so that humans naturally recognize the intended emotion. A robot can display emotions by combining body posture, motion velocity, facial expressions and vocal cues (e.g. prosody, pitch, loudness), depending heavily on what the robot’s morphology and degree of anthropomorphism allow[7][8][9]. Social robots are often humanoid, which increases anthropomorphism and therefore requires the robot's behavior to match its appearance, to avoid falling into the uncanny valley, which elicits a feeling of uneasiness or disturbance[7][10]. Some research has already tested the capability of certain social robots, including Pepper, Nao and Misty, to display emotions, resulting in robot-specific guidelines on how to program the display of certain emotions[8][11][12].

Building on these established guidelines for displaying emotions, we can look further into how humans are affected by a robot’s emotional cues during interaction. We will research this in a context where we would also expect a human to display emotions, namely while telling an emotional story. Our research takes inspiration from the studies of Van Otterdijk et al.[13] and Bishop et al.[12], in which the robot Pepper was used to deliver either a positive or a negative message accompanied by congruent or incongruent emotional behavior. We will extend these studies by using a different combination of application context and research method: we study interaction with students, targeting an educational rather than a healthcare setting, and we use interviews rather than surveys to gain a deeper understanding. Moreover, students are an important target group for robots, because they represent the future workforce and future innovations. Understanding their needs can help developers design robots that are engaging, user-friendly and educational[14].

More specifically, the research question studied in this paper is: “To what extent does a match between the displayed emotion of a social robot and the content of the robot’s spoken message influence the acceptance of this robot?” We expect that participants will prefer interacting with the robot when it displays the emotion that fits the content of its message, and that they will be open to more future interactions like this with the robot. Conversely, we expect that a mismatch between the emotion displayed by the robot and the story it is telling will make participants feel less comfortable and therefore less accepting of the robot. Moreover, we expect the influence of congruent emotion display to be more prominent with a negative than with a positive message. The main focus of this research is thus on how accepting the students are of the robot after interacting with it, but also on gaining insight into potential underlying reasons, such as how much the students trust the robot and how comfortable they feel when interacting with it. The results could provide insights into the importance of congruent emotion display and into whether robots could be used as assistant robots on university campuses.

Method

Design

This research was an exploratory study with a within-subject design, in which all participants were exposed to all six conditions of the experiment: a 2 (positive/negative story) x 3 (happy/neutral/sad emotion displayed by the robot) design. The six conditions differ in whether the content of the story (positive or negative) matches the emotion displayed by the robot (happy, neutral or sad). An overview of the conditions can be seen in Table 1.

| Story / displayed emotion | Happy | Neutral | Sad |
|---|---|---|---|
| Positive | Congruent | Emotionless | Incongruent |
| Negative | Incongruent | Emotionless | Congruent |
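The condition structure in Table 1 can be expressed compactly in code. The sketch below is an illustration only and not part of the study materials: the names `STORIES`, `EMOTIONS`, `label_condition` and `all_conditions` are hypothetical, and the code simply encodes the rule from Table 1 that a happy display matches the positive story, a sad display matches the negative story, and the neutral display counts as emotionless.

```python
from itertools import product

STORIES = ("positive", "negative")
EMOTIONS = ("happy", "neutral", "sad")

# Emotion that matches the valence of each story (taken from Table 1).
MATCHING_EMOTION = {"positive": "happy", "negative": "sad"}


def label_condition(story: str, emotion: str) -> str:
    """Return the Table 1 label for one story/emotion combination."""
    if emotion == "neutral":
        return "emotionless"
    if emotion == MATCHING_EMOTION[story]:
        return "congruent"
    return "incongruent"


def all_conditions() -> list[tuple[str, str, str]]:
    """Enumerate the 2 x 3 within-subject conditions, story by story."""
    return [(s, e, label_condition(s, e)) for s, e in product(STORIES, EMOTIONS)]


if __name__ == "__main__":
    for story, emotion, label in all_conditions():
        print(f"{story:<8} {emotion:<7} {label}")
```

Because the design is within-subject, every participant goes through all six rows this function returns; per story, exactly one emotion is congruent, one incongruent and one emotionless.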

The independent variables in this experiment were the valence of the story (positive or negative) and the emotion displayed by the robot (happy, neutral or sad); their combination determines whether a condition is congruent, incongruent or emotionless. The dependent variable was the acceptance of the robot, which was measured by qualitatively analyzing the interviews held with the participants during the experiment.

Participants

The study investigated the viewpoint of students, and the participants were therefore recruited at the TU/e. We chose to target this specific group because of their generally higher openness to social robots and the increased likelihood that they will deal a lot with social robots in the near future[14].

Ten participants took part in this experiment, and all participants were exposed to all six conditions of the study. Five men and five women completed the study. Their ages ranged from 19 to 26 years, with an average of 21.4 years (± 1.96). All participants are students at Eindhoven University of Technology and took part as volunteers, meaning they were not financially compensated for participating in this study. The participants were recruited from the researchers’ own networks, but they came from multiple different study programs (see Table 2). The general attitude towards robots of all participants was measured, and all of them had relatively positive attitudes towards robots. Most participants saw robots as a useful tool that would help reduce the workload of humans; however, two participants commented that current robots would be unable to fully replace humans in their jobs. When asked whether they had been in contact with a robot before, six participants indicated that they had seen or worked with a robot before, and one participant was even familiar with the Pepper robot used in the experiment. Three participants responded that they had not been in contact with a robot before, but that they had experience with AI or Large Language Models (LLMs). One participant had never been in contact with a robot before and did not comment on whether they had used AI or LLMs. All in all, the participants were generally familiar with robots and the technology surrounding them, which is to be expected since robots are a large part of the curriculum at the technical university they are enrolled at.

| Study program | Number of participants |
|---|---|
| Psychology and Technology | 3 |
| Electrical Engineering | 2 |
| Mechanical Engineering | 1 |
| Industrial Design | 1 |
| Biomedical Technology | 1 |
| Applied Physics | 1 |
| Applied Mathematics | 1 |

Materials

Pepper

For this experiment, the robot Pepper was used, which is manufactured by SoftBank Robotics.

This robot was chosen because Pepper is a well-known robot that has been the subject of multiple studies and is already being applied in different settings, such as hospitals and customer service. Based on young adults' preferences for robot design, Pepper would also be most useful in student settings, given its human-like shape and its ability to engage emotionally with people[15]. The experiment itself was conducted in one of the robotics labs on the TU/e campus, where Pepper is readily available.

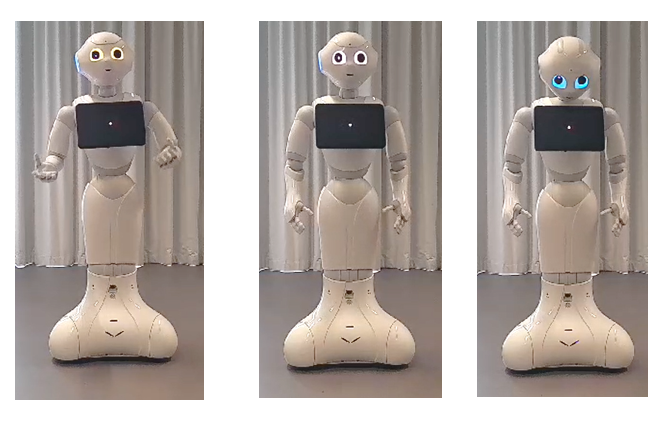

Pepper was programmed in Choregraphe to display happiness and sadness through voice pitch, body posture and gestures, and LED eye color. The behavior that Pepper displayed is shown in Table 3 and Figure 1, based on the research of Bishop et al. (2019)[12] and Van Otterdijk et al. (2021)[13]. Facial expressions could not be used, since Pepper's morphology does not allow for them.

| Cue | Happy | Neutral | Sad |
|---|---|---|---|
| Pitch of voice | High pitch and intonation | Average of happy and sad conditions | Low pitch and intonation |
| Body posture and gestures | Raised chin, extreme movements, upwards arms, looking around | Average of happy and sad conditions | Lowered chin, small movements, hanging arms, not looking around |
| LED eye color | Yellow | White | Light-blue |
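Table 3 defines the neutral condition as the average of the happy and sad conditions. The following sketch illustrates that rule with numeric stand-ins. It does not use Choregraphe or any Pepper API, and the parameter names and values (`pitch_shift`, `chin_angle_deg`, `gesture_amplitude`) are hypothetical assumptions chosen for illustration, not the settings that were actually programmed on Pepper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionCues:
    """Hypothetical numeric stand-ins for the cues listed in Table 3."""

    pitch_shift: float        # relative voice pitch; 1.0 = default voice (assumed scale)
    chin_angle_deg: float     # > 0 raises the chin, < 0 lowers it (assumed convention)
    gesture_amplitude: float  # 0.0 = no movement, 1.0 = largest movement (assumed scale)
    eye_color: str            # LED eye color as named in Table 3


# Illustrative values only; the real Choregraphe settings are not reported here.
HAPPY = EmotionCues(pitch_shift=1.2, chin_angle_deg=10.0, gesture_amplitude=1.0, eye_color="yellow")
SAD = EmotionCues(pitch_shift=0.8, chin_angle_deg=-10.0, gesture_amplitude=0.2, eye_color="light-blue")


def neutral_from(happy: EmotionCues, sad: EmotionCues) -> EmotionCues:
    """Average each numeric cue of the happy and sad conditions; the eyes stay white."""
    return EmotionCues(
        pitch_shift=(happy.pitch_shift + sad.pitch_shift) / 2,
        chin_angle_deg=(happy.chin_angle_deg + sad.chin_angle_deg) / 2,
        gesture_amplitude=(happy.gesture_amplitude + sad.gesture_amplitude) / 2,
        eye_color="white",  # Table 3 uses white, not a blend of yellow and light-blue
    )


NEUTRAL = neutral_from(HAPPY, SAD)
```

The eye color is a categorical cue that cannot be averaged, which is why it is set separately, matching the white LEDs listed in Table 3.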

Laptops

Four laptops were used in the full study. At the start of the experiment, three laptops were handed to the participants to fill in the demographics survey in LimeSurvey. During the experiment, one laptop in the control room was used to direct Pepper to tell the different stories with the different emotions, while two researchers who were present in the room with the participants and Pepper used their laptops to take notes and assisted if necessary. During the interviews, each researcher could choose to keep their laptop with them for taking notes or recording; if they chose not to, a mobile phone was used for recording.

LimeSurvey for demographics

The demographics survey that participants were asked to fill in consisted of the following questions:

- What is your age in years?
- What is your gender?
  - Male
  - Female
  - Non-binary
  - Other
  - Do not want to say
- What study program are you enrolled in currently?
- In general, what do you think about robots?
- Did you have contact with a robot before? Where and when?

These last two questions were based on an article by Horstmann & Krämer[16], which focused on the expectations that people have of robots, including their expectations when confronted with other social robot concepts.

Stories told by the robot

The positive and negative stories that the robot told are fictional stories about polar bears, inspired by the study of Bishop et al.[12]. The content of the stories is based on non-fictional internet sources, rewritten to best fit our purpose. We decided to keep the stories fictional and about animals rather than humans, because this carries a lower risk of causing emotional harm to participants through feelings elicited by their personal circumstances.

The positive story is an adaptation of Cole (2021)[17] and is shown below:

“When Arctic gold miners were working on their base, they were greeted by a surprising guest, a young lost polar bear cub. It did not take long for her to melt the hearts of the miners. As the orphaned cub grew to trust the men, the furry guest soon felt like a friend to the workers on their remote working grounds. Even more surprising, the lovely cub loved to hand out bear hugs. Over the many months that followed, the miners and the cub would create a true friendship. The new furry friend was even named Archie after one of the researcher’s children. When the contract of the gold miners came to an end, the polar bear cub would not leave their side, so the miners decided to arrange a deal with a sanctuary in Moscow, where the polar bear cub would be able to live a happy life in a place where its new-found friends would come to visit every day.”

The negative story is an adaptation of Alexander (n.d.)[18] and is shown below:

“While shooting a nature documentary on the Arctic Ocean island chain of Svalbard, researchers encountered a polar bear family of a mother and two cubs. During the mother's increasingly desperate search for scarce food, the starving family was forced to use precious energy swimming between rocky islands due to melting sea ice. This mother and her cubs should have been hunting on the ice, even broken ice. But they were in water that was open for as far as the eye could see. The weaker cub labors trying to keep up and the cub strained to pull itself ashore and then struggled up the rock face. The exhausted cub panicked after losing sight of its mother and its screaming could be heard from across the water. That's the reality of the world they live in today. To see this family with the cub, struggling due to no fault of their own is extremely heartbreaking.”

Interview questions

Two semi-structured interviews were held per participant: one after the first three conditions, in which the same story was told, and one after the second three conditions. The two interviews were practically identical, except for one extra question (question 7) in the second interview (see below). Questions 1-8 were mandatory, and questions a-q were optional probing questions. Researchers were free to use these probing questions or to ask new questions to gain a deeper understanding of the participant's opinion during the interview. Each interview also started with a short explanation. The interview guide was printed for each interview with additional space for taking notes.

The interview questions were largely based on literature research and were divided into three categories: attitude, trust and comfort. Together, these three categories should give insight into the students' general acceptance of robots. First, a manipulation check was done to make sure the participants had a correct impression of the story and of the emotion the robot was supposed to convey. This was followed by questions about attitude, focusing on the students' general impression of the robot, their likes and dislikes towards the robot, and their general preference for a specific robot emotion. These questions were mainly based on the research of Wu et al. (2014) and Del Valle-Canencia et al. (2022)[19]. The questions about the trustworthiness of the robot's different emotional states were based on Jung et al. (2021) and Madsen and Gregor (2000)[20]. The comfort category focused on how comfortable the participants felt with the robot; these questions were also based on literature[21]. Lastly, the participants were asked whether they thought Pepper would be suitable for use on campus, and for which tasks.

The complete interview, including the introduction, can be seen below:

You have now watched three iterations of the robot telling a story. During each iteration the robot had a different emotional state. We will now ask you some questions about the experience you had with the robot. We would like to emphasize that there are no right or wrong answers. If there is a question that you would not like to answer, we will skip it.

1. What was your impression of the story that you heard?
   - a. Briefly describe, in your own words, the emotions that you felt when listening to the three versions of the story.
   - b. Which emotion did you think would best describe the story?
2. How did you perceive the feelings that were expressed by the robot?
   - c. How did the robot convey this feeling?
   - d. Did the robot do something unexpected?
3. What did you like/dislike about the robot during each of the three emotional states?
   - e. What are concrete examples of this (dis)liking?
   - f. How did these examples influence your feelings about the robot?
   - g. What were the effects of the different emotional states of Pepper compared to each other?
     - i. What was the most noticeable difference?
4. Which of the three robot interactions do you prefer?
   - h. Why do you prefer this emotional state of the robot?
   - i. If sad/happy was chosen, did you think the emotion had added value compared?
   - j. If neutral was chosen, why did you not prefer the expression of the matching emotion?
5. Which emotional state did you find the most trustworthy? And which one the least trustworthy?
   - k. Why was this emotional state the most/least trustworthy?
   - l. What did the robot do to cause your level of trust?
   - m. What did the other emotional states do to be less trustworthy?
6. Which of the three emotional states of the robot made you feel the most comfortable in the interaction?
   - n. Why did this emotional state make you feel comfortable?
   - o. What effect did the other emotional states have?
7. Do you think Pepper would be suitable as a campus assistant robot and why (not)?
   - p. If not, in what setting would you think it would be suitable to use Pepper?
   - q. What tasks do you think Pepper could do on campus?
8. Are there any other remarks that you would like to leave, that were not touched upon during the interview, but that you feel are important?

Procedure

When participants entered the experiment room, they were instructed to sit down on one of the five chairs in front of Pepper (see Figure X). Each chair was placed at roughly the same distance from the robot, about 1 meter. Pepper was already making calm movements to get participants used to its motion, which was especially important since some participants were not yet familiar with Pepper.

Each session started with a short introduction to the study (see the research protocol linked below), after which participants were asked to read and sign the consent form (Link consent form in wiki) and to fill in a demographics survey on LimeSurvey. Two sessions were held, each with five participants. First, Pepper told the group a story about a polar bear, either the positive or the negative one (see Tables 4 and 5 for the exact condition order per session). Pepper told this story three times, each time displaying a different emotion: ‘happy’, ‘sad’ or ‘neutral’. These three iterations took approximately 6 minutes in total. When Pepper had finished, each of the five participants followed one of the researchers into an interview room. These one-on-one interviews were held simultaneously and lasted approximately 10 minutes. After the interview, the participants returned to the room where they started and listened to the other polar bear story: participants who had heard the positive story now heard the negative one, and vice versa. After Pepper had finished this story, the second interview was held under the same circumstances, followed by a short debriefing. In total, the experiment lasted about 45-60 minutes.

| Round | Story | Emotion 1 | Emotion 2 | Emotion 3 |
|---|---|---|---|---|
| 1 | Negative | Neutral | Sad | Happy |
| 2 | Positive | Neutral | Happy | Sad |

| Round | Story | Emotion 1 | Emotion 2 | Emotion 3 |
|---|---|---|---|---|
| 1 | Positive | Neutral | Happy | Sad |
| 2 | Negative | Neutral | Sad | Happy |
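Tables 4 and 5 follow a regular pattern: each session heard both stories in opposite order, and within each story Pepper started with the neutral display, followed by the congruent and then the incongruent emotion. The sketch below reproduces both tables from that rule. The function names are hypothetical, and the rule is inferred from the two tables rather than stated in the protocol.

```python
# Emotion that matches (is congruent with) each story, as in Table 1.
MATCHING_EMOTION = {"positive": "happy", "negative": "sad"}
OPPOSITE_EMOTION = {"happy": "sad", "sad": "happy"}


def emotion_order(story: str) -> tuple[str, str, str]:
    """Neutral first, then the congruent emotion, then the incongruent one."""
    congruent = MATCHING_EMOTION[story]
    return ("neutral", congruent, OPPOSITE_EMOTION[congruent])


def session_schedule(first_story: str) -> list[tuple[int, str, tuple[str, str, str]]]:
    """Return (round, story, emotion order) for a session starting with first_story."""
    if first_story not in MATCHING_EMOTION:
        raise ValueError(f"unknown story: {first_story!r}")
    second_story = "positive" if first_story == "negative" else "negative"
    return [
        (round_no, story, emotion_order(story))
        for round_no, story in enumerate((first_story, second_story), start=1)
    ]


if __name__ == "__main__":
    for first in ("negative", "positive"):  # one session per table
        for round_no, story, emotions in session_schedule(first):
            print(round_no, story, " -> ".join(emotions))
```

Swapping the story order between the two sessions counterbalances which story participants heard first, while the emotion order within a story stays fixed.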

The complete and more elaborate research protocol can be found via this link: add link in wiki

Data analysis

The interviews were analyzed using thematic analysis. The interviews were audio-recorded and transcribed from the recordings. As a first step in the data analysis, the raw transcripts were cleaned by removing filler words such as “uhm” and the Dutch “nou”. The speakers in each transcript were then labeled “interviewer” and “participant X” to make the analysis easier. After cleaning, the experimenters familiarized themselves with the transcripts and then started the initial coding stage: highlighting important answers and phrases that could help answer the research question, and labeling each highlighted passage with a short code. All of the above steps were done by each experimenter individually for their own interviews.
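The cleaning and labeling steps described above can be sketched as follows. This is a hypothetical illustration, not the researchers' own workflow (which is not specified): it assumes each raw transcript is a list of turns tagged `"I"` (interviewer) or `"P"` (participant), and the filler words beyond “uhm” and “nou” are assumed additions.

```python
import re

# "uhm" and "nou" come from the text; "uh" and "um" are assumed additions.
FILLER_WORDS = {"uhm", "uh", "um", "nou"}
# Hypothetical speaker tags for the raw transcript turns.
SPEAKER_LABELS = {"I": "interviewer", "P": "participant {id}"}


def clean_utterance(text: str) -> str:
    """Drop filler words, ignoring case and punctuation attached to them."""
    tokens = re.findall(r"\S+", text)
    kept = [t for t in tokens if t.lower().strip(".,!?;:") not in FILLER_WORDS]
    return " ".join(kept)


def label_transcript(turns: list[tuple[str, str]], participant_id: int) -> list[str]:
    """Clean every turn and prefix it with 'interviewer' or 'participant X'."""
    lines = []
    for speaker, text in turns:
        cleaned = clean_utterance(text)
        if not cleaned:  # the turn contained only filler words
            continue
        label = SPEAKER_LABELS[speaker].format(id=participant_id)
        lines.append(f"{label}: {cleaned}")
    return lines
```

For example, `label_transcript([("I", "Uhm, what did you think?"), ("P", "Nou, I liked it."), ("P", "uhm")], 3)` returns `["interviewer: what did you think?", "participant 3: I liked it."]`. A token-based filter like this only removes whole filler words; it leaves the highlighting and coding, which require interpretation, to the researchers.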

Next, the codes were combined and refined. During a group meeting, all codes were carefully examined and merged into a single list of codes. Each experimenter then recoded their own interviews with this list, and another experimenter checked the recoded transcript. Any uncertainties or disagreements about the coding were discussed in the next group meeting, in which some codes were added, removed or adjusted to complete the final list of codes. The codes in this final list were grouped into themes that can be used to answer the research question. After this meeting, the interviews were recoded once more using the final list of codes, and the results were compiled from this final coding.
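The bookkeeping side of combining individual codes into one list and grouping them into themes can be illustrated with a small sketch. The interpretive work (deciding which codes to merge, add or remove) was done by the researchers in group meetings and is not automated here. The code labels in the example are hypothetical, not data from this study, and using the interview categories attitude and trust as theme names is an assumption for illustration.

```python
from collections import defaultdict


def merge_codebooks(individual: dict[str, list[str]]) -> list[str]:
    """Combine each researcher's codes into one sorted list without duplicates.

    Codes are normalized (trimmed, lowercased) so that trivial differences
    such as "Trust in robot " and "trust in robot" end up as one code.
    """
    merged: set[str] = set()
    for codes in individual.values():
        merged.update(code.strip().lower() for code in codes)
    return sorted(merged)


def group_into_themes(codes: list[str], theme_of: dict[str, str]) -> dict[str, list[str]]:
    """Group codes by theme; codes without an assigned theme go under 'unassigned'."""
    themes: defaultdict[str, list[str]] = defaultdict(list)
    for code in codes:
        themes[theme_of.get(code, "unassigned")].append(code)
    return dict(themes)


# Hypothetical example input, not data from this study.
codebooks = {
    "researcher A": ["Trust in robot ", "likes voice"],
    "researcher B": ["trust in robot", "uneasy when happy"],
}
final_codes = merge_codebooks(codebooks)
themes = group_into_themes(final_codes, {"likes voice": "attitude", "trust in robot": "trust"})
```

The "unassigned" bucket mirrors the group-meeting step: codes that do not yet fit a theme are surfaced for discussion rather than silently dropped.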

Sources

[1] Biba, J. (2023, March 10). What is a social robot? Built In. https://www.nature.com/articles/s41598-020-66982-y#citeas

[2] Borghi, M., & Mariani, M. (2022, September). The role of emotions in the consumer meaning-making of interactions with social robots. ScienceDirect. https://www.sciencedirect.com/science/article/pii/S0040162522003687

[3] Kirby, R., Forlizzi, J., & Simmons, R. (2010). Affective social robots. Robotics and Autonomous Systems, 58(3), 322–332. https://doi.org/10.1016/J.ROBOT.2009.09.015

[4] Breazeal, C. (2004). Designing Sociable Robots. MIT Press. https://doi.org/10.7551/MITPRESS/2376.001.0001

[5] Chuah, S. H. W., & Yu, J. (2021). The future of service: The power of emotion in human-robot interaction. Journal of Retailing and Consumer Services, 61, 102551. https://doi.org/10.1016/J.JRETCONSER.2021.102551

[6] Fong, T., Nourbakhsh, I., & Dautenhahn, K. (2003). A survey of socially interactive robots. Robotics and Autonomous Systems, 42(3–4), 143–166. https://doi.org/10.1016/S0921-8890(02)00372-X

[7] Marcos-Pablos, S., & García‐Peñalvo, F. J. (2021). Emotional Intelligence in Robotics: A Scoping review. In Advances in Intelligent Systems and Computing (pp. 66–75). https://doi.org/10.1007/978-3-030-87687-6_7

[8] Miwa, H., Itoh, K., Matsumoto, M., Zecca, M., Takanobu, H., Roccella, S., Carrozza, M. C., Dario, P., & Takanishi, A. (2004). Effective emotional expressions with emotion expression humanoid robot WE-4RII. In 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), 3, 2203–2208. https://doi.org/10.1109/IROS.2004.1389736

[9] Crumpton, J., & Bethel, C. L. (2015). A Survey of Using Vocal Prosody to Convey Emotion in Robot Speech. International Journal of Social Robotics, 8(2), 271–285. https://doi.org/10.1007/s12369-015-0329-4

[10] Wang, S., Lilienfeld, S. O., & Rochat, P. (2015). The Uncanny Valley: Existence and Explanations. Review of General Psychology, 19(4), 393–407. https://doi.org/10.1037/gpr0000056

[11] Johnson, D. O., Cuijpers, R. H., & van der Pol, D. (2013). Imitating Human Emotions with Artificial Facial Expressions. International Journal of Social Robotics, 5(4), 503–513. https://doi.org/10.1007/s12369-013-0211-1

[12] Bishop, L., Van Maris, A., Dogramadzi, S., & Zook, N. (2019). Social robots: The influence of human and robot characteristics on acceptance. Paladyn, 10(1), 346–358. https://doi.org/10.1515/pjbr-2019-0028

[13] Van Otterdijk, M., Barakova, E. I., Torresen, J., & Neggers, M. E. M. (2021). Preferences of Seniors for Robots Delivering a Message With Congruent Approaching Behavior. https://doi.org/10.1109/ARSO51874.2021.9542833

[14] Manzi, F., Sorgente, A., Massaro, D., Villani, D., Di Lernia, D., Malighetti, C., Gaggioli, A., Rossignoli, D., Sandini, G., Sciutti, A., Rea, F., Maggioni, M. A., Marchetti, A., & Riva, G. (2021). Emerging Adults’ Expectations about the Next Generation of Robots: Exploring Robotic Needs through a Latent Profile Analysis. Cyberpsychology, Behavior, and Social Networking, 24(5), 315–323. https://doi.org/10.1089/CYBER.2020.0161

[15] Björling, E. A., Thomas, K. A., Rose, E., & Çakmak, M. (2020). Exploring teens as robot operators, users and witnesses in the wild. Frontiers in Robotics and AI, 7. https://doi.org/10.3389/frobt.2020.00005

[16] Kramer, S., & Horstmann, W. (2019). Perceptions and beliefs of academic librarians in Germany and the USA: a comparative study. LIBER Quarterly, 29(1), 1–18. https://doi.org/10.18352/lq.10314

[17] Cole, J. (2021, April). Orphaned Polar Bear That Loved to Hug Arctic Workers Gets New Life. Good News Network. https://www.goodnewsnetwork.org/orphaned-polar-bear-rescued-russian-arctic/

[18] Alexander, B. (n.d.). Polar bear cub's agonizing struggle in Netflix's 'Our Planet II' is telling 'heartbreaker'. USA Today Entertainment. https://eu.usatoday.com/story/entertainment/tv/2023/06/15/netflix-our-planet-2-polar-bear/70296362007/

[19] Valle-Canencia, M., Moreno, C., Rodríguez-Jiménez, R.-M., & Corrales-Paredes, A. (2022). The emotions effect on a virtual characters design–A student perspective analysis. Frontiers in Computer Science, 4. https://doi.org/10.3389/fcomp.2022.892597

[20] Madsen, M., & Gregor, S. (2000). Measuring human-computer trust.

[21] Erken, E. (2022). Chatbot vs. Social Robot: A Qualitative Study Exploring Students’ Expectations and Experiences Interacting with a Therapeutic Conversational Agent [Master's thesis, Tilburg University].

Appendix

The complete research protocol can be found via the following link: