PRE2019 4 Group3

SPlaSh

Group members

| Student name | Student ID | Study | Email |
|---|---|---|---|
| Kevin Cox | 1361163 | Mechanical Engineering | k.j.p.cox@student.tue.nl |
| Menno Cromwijk | 1248073 | Biomedical Engineering | m.w.j.cromwijk@student.tue.nl |
| Dennis Heesmans | 1359592 | Mechanical Engineering | d.a.heesmans@student.tue.nl |
| Marijn Minkenberg | 1357751 | Mechanical Engineering | m.minkenberg@student.tue.nl |
| Lotte Rassaerts | 1330004 | Mechanical Engineering | l.rassaerts@student.tue.nl |

First feedback meeting

SPLASH: THE PLASTIC SHARK (text by Dennis)

There is a great deal of plastic in the sea, and this causes many problems. One project that is already working on cleaning up the sea is The Ocean Cleanup.

We could do many different things with this topic. We could build a prototype (LEGO, CAD), we could do something with image recognition, and we could research the usefulness of the SPlaSh.

POSSIBILITIES

1. I think that, for the best result, it is best to make the design in CAD, and that we could possibly make a simulation of it in which you can see how it works.

2. The reason we could do something with image recognition is that the SPlaSh must be able to recognize plastic, fish and perhaps other things as well.

3. For the USE aspect of this course, we can investigate whether there is a need for the SPlaSh and whether people would invest money in it, since it will be a project that can be applied worldwide.

SOURCES

1. https://theoceancleanup.com/

2. https://nobleo-technology.nl/project/fully-autonomous-wasteshark/

3. https://www.portofrotterdam.com/nl/nieuws-en-persberichten/waste-shark-deze-haai-eet-plastic

Problem statement and objectives (Kevin)

Plastic in the ocean -> should go

Current solutions trap fish

Knowing the difference between fish and plastic

Who are the users? (Kevin)

Society / scientists?

What do they require? (Kevin)

A clean ocean, safety for fish

Approach, milestones and deliverables (Menno)

For the planning, a Gantt chart is created with the most important tasks. The overall plan is that the first two weeks are mainly used for research. In the first week, this research covers, among other things, the problem statement, the users and the current technology. In the second week, different types of neural networks and the workings of their layers should be investigated to gain more knowledge. This could also require installing multiple packages or programs on our laptops, and testing whether they work takes time. During the second week, a dataset that can be used to train our model should also be found or created. If no suitable dataset can be found online and one has to be created, this will take much more than one week, but it is hoped to be finished by the end of the third week. After this, the group is split into people who work on the design and applications of the robot and people who work on the neural network. After week 5, the idea for the robot should be elaborated using drawings or digital visualizations. All candidate neural networks should also be worked out and tried by then, so that in week 6 conclusions can be drawn about the best-performing neural network. This means that in week 7 the wiki page can be completed with a conclusion and discussion about the neural network that should be used and the working of the device. Finally, week 8 is used to prepare for the presentation.

Currently, the work has not yet been divided between the robotics hardware and the software. This should be done in the following weeks, after which the Gantt chart, shown below, can be filled in per person.

"I do not know yet how to get a picture of the Gantt chart in here"

For SoTa: In recent years, convolutional neural networks (CNNs) have shown significant improvements in, amongst others, image classification methods [1]. It has been demonstrated that representation depth is beneficial for classification accuracy, and that state-of-the-art performance on the ImageNet challenge dataset can be achieved using a conventional ConvNet architecture. Besides, VGG networks are known for their state-of-the-art performance in image feature extraction [2]. Their setup consists of repeated patterns of 1, 2 or 3 convolution layers and a max-pooling layer, finishing with one or more dense layers. The convolutional layer transforms the input data to detect patterns, edges and other characteristics in order to be able to classify the data correctly. The main parameters with which a convolutional layer can be changed are the activation function and the kernel size.

Max pooling layers reduce the number of pixels in the output of the previously applied convolutional layer(s). Max pooling is applied to reduce overfitting. A problem with the output feature maps is that they are sensitive to the location of the features in the input. One approach to address this sensitivity is to use a max pooling layer, which makes the resulting downsampled feature maps more robust to changes in the position of the feature in the image. The pool size determines the number of pixels from the input data that is turned into 1 pixel of the output data. Fully connected layers connect all input values via separate connections to an output channel. Since this project has to deal with a binary problem, the final fully connected layer will consist of 1 output.

Stochastic gradient descent (SGD) is the most common and basic optimizer used for training a CNN [3]. It updates the model parameters based on the gradient information of the loss function. However, many other optimizers have been developed that could give a better result. Momentum keeps the history of the previous update steps and combines this information with the next gradient step to reduce the effect of outliers [4]. RMSprop also tries to keep the updates stable, but in a different way than momentum: it scales each update by a running average of recent squared gradients, which reduces the need to adjust the learning rate manually [5]. Adam takes the ideas behind both momentum and RMSprop and combines them into one optimizer [6]. Nesterov momentum is a smarter version of the momentum optimizer that looks ahead and adjusts the update based on the gradient at that look-ahead position [7]. Nadam is an optimizer that combines RMSprop and Nesterov momentum [8].
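To make the difference between these optimizers concrete, the sketch below applies plain gradient descent, momentum and Adam to a one-parameter toy loss. It is only an illustration under stated assumptions: the loss function, the learning rate and the other hyperparameter values are picked for this example and are not taken from the project, and the gradient is exact rather than estimated from mini-batches as it would be in real SGD.

```python
import math

def grad(w):
    # Gradient of the assumed toy loss f(w) = (w - 3)^2, minimum at w = 3.
    return 2.0 * (w - 3.0)

def sgd(w, steps=500, lr=0.1):
    # Plain gradient descent: step against the current gradient only.
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def momentum(w, steps=500, lr=0.1, beta=0.9):
    # Momentum: keep a decaying history of past gradients in v.
    v = 0.0
    for _ in range(steps):
        v = beta * v + grad(w)
        w -= lr * v
    return w

def adam(w, steps=500, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    # Adam: momentum-like first moment m, RMSprop-like second moment v.
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

for name, optimizer in [("sgd", sgd), ("momentum", momentum), ("adam", adam)]:
    print(name, round(optimizer(0.0), 4))
```

On this simple convex loss all three end up close to the minimum; differences between optimizers mainly show up on the noisy, high-dimensional losses of a real CNN.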

[1] Gobert Lee. Deep Learning in Medical Image Analysis.

[2] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. In 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings, 2015.

[3] Rikiya Yamashita, Mizuho Nishio, Richard Kinh Gian Do, and Kaori Togashi. Convolutional neural networks: an overview and application in radiology, 2018.

[4] Ning Qian. On the momentum term in gradient descent learning algorithms. Neural Networks, 12(1):145–151, 1999.

[5] G. E. Hinton. Neural Networks for Machine Learning, Lecture 9a: Overview of ways to improve generalization. 2012.

[6] Diederik P. Kingma and Jimmy Lei Ba. Adam: A method for stochastic optimization. In 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings, 2015.

[7] Yurii Nesterov. A method for unconstrained convex minimization problem with the rate of convergence O(1/k^2). Doklady ANSSR, 27(2):543–547, 1983.

[8] Timothy Dozat. Incorporating Nesterov Momentum into Adam. ICLR Workshop, (1):2013–2016, 2016.

State-of-the-Art (Lotte, Dennis, Marijn)

Neural Networks (Dennis)

Neural networks are a set of algorithms that are designed to recognize patterns. They interpret sensory data through machine perception, labeling or clustering raw input. The patterns they recognize are numerical and contained in vectors. Real-world data, such as images, sound, text or time series, needs to be translated into such numerical data before it can be processed [3].
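As a small illustration of what "translating an image into numerical data" can look like, the sketch below flattens a tiny grayscale image into a vector of values between 0 and 1. The function name and the 2x2 example image are made up for this illustration; a real pipeline would typically use an image library and keep the color channels.

```python
def image_to_vector(pixels):
    """Flatten a grid of 8-bit grayscale pixels into a list of floats in [0, 1]."""
    return [value / 255.0 for row in pixels for value in row]

# Hypothetical 2x2 grayscale image (0 = black, 255 = white).
tiny_image = [
    [0, 255],
    [51, 204],
]
vector = image_to_vector(tiny_image)
print(vector)  # [0.0, 1.0, 0.2, 0.8]
```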

There are different types of neural networks [4]:

- Recurrent neural network: Recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while having hidden states. These networks are mostly used in the fields of natural language processing and speech recognition [5].

- Convolutional neural networks: Convolutional neural networks, also known as CNNs, are mostly used for image classification.

- Hopfield networks: Hopfield networks are used to store and retrieve memories, similar to the human brain. The network can store various patterns or memories. It is able to recognize any of the learned patterns when it is presented with partial or corrupted data about that pattern [6].

- Boltzmann machine networks: Boltzmann machines are used for search and learning problems [7].
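The pattern recall described for Hopfield networks in the list above can be sketched in a few lines. This is a minimal illustration, assuming the classic Hebbian learning rule and asynchronous sign updates; the two stored patterns and all function names are chosen for this example only.

```python
def train_hopfield(patterns):
    # Hebbian rule: w[i][j] sums p[i] * p[j] over all stored patterns,
    # with a zero diagonal (no unit is connected to itself).
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, sweeps=5):
    # Asynchronous update: each unit in turn takes the sign of its input.
    state = list(state)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            total = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if total >= 0 else -1
    return state

stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hopfield(stored)
noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with one flipped value
print(recall(weights, noisy) == stored[0])  # True
```

The corrupted input settles back into the first stored pattern. With many or strongly correlated patterns, recall becomes unreliable, which this toy example does not show.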

Convolutional Neural Networks

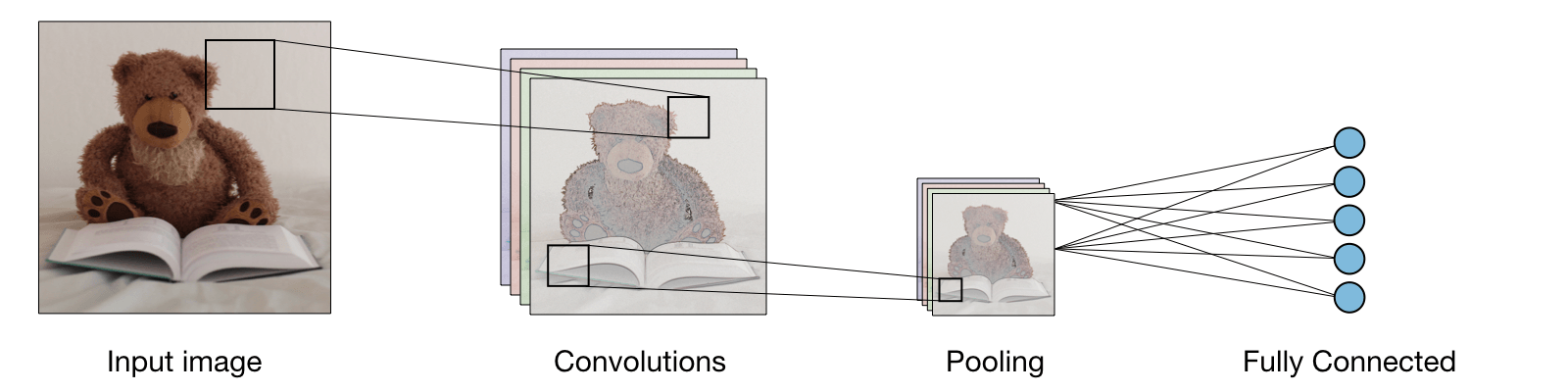

In this project, the neural network should retrieve data from images. Therefore a convolutional neural network will be used. Convolutional neural networks are a specific type of neural network that is generally composed of convolution layers, pooling layers and fully connected layers.

The convolution layer and the pooling layer can be fine-tuned with respect to hyperparameters [8]. The convolutional layer transforms the input data to detect patterns, edges and other characteristics in order to be able to classify the data correctly. The main parameters with which a convolutional layer can be changed are the activation function and the kernel size. Max pooling layers reduce the number of pixels in the output of the previously applied convolutional layer(s). Max pooling is applied to reduce overfitting. A problem with the output feature maps is that they are sensitive to the location of the features in the input. One approach to address this sensitivity is to use a max pooling layer, which makes the resulting downsampled feature maps more robust to changes in the position of the feature in the image. The pool size determines the number of pixels from the input data that is turned into 1 pixel of the output data. Fully connected layers connect all input values via separate connections to an output channel. Since this project has to deal with a binary problem, the final fully connected layer will consist of 1 output.
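The three layer types described above can be traced by hand on a very small input. The sketch below is a plain-Python illustration, not the network used in this project: the 5x5 image, the 2x2 edge kernel, the ReLU activation and the hand-picked dense weights are all assumptions made for the example, and in a real CNN the kernel and weights would be learned during training.

```python
import math

def conv2d(image, kernel):
    # Convolution without padding, stride 1 (implemented as cross-correlation).
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(feature_map):
    # Activation function: negative responses are set to zero.
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    # Each non-overlapping size x size window is reduced to its maximum.
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]

def dense_sigmoid(values, weights, bias):
    # Fully connected layer with 1 output, squashed to (0, 1) for a binary problem.
    z = sum(v * w for v, w in zip(values, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Assumed input: dark on the left, bright on the right (a vertical edge).
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
kernel = [[-1.0, 1.0],
          [-1.0, 1.0]]  # responds to dark-to-bright transitions

feature_map = relu(conv2d(image, kernel))  # 4x4 map, strong response at the edge
pooled = max_pool(feature_map, size=2)     # 2x2 map
flat = [v for row in pooled for v in row]
score = dense_sigmoid(flat, weights=[0.5, 0.5, 0.5, 0.5], bias=-1.0)

print(pooled)
print(round(score, 3))
```

The 5x5 input shrinks to a 4x4 feature map after the convolution and to 2x2 after pooling, and the single sigmoid output can be read as the score for one of the two classes.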

Ocean Cleanup + Current Solution (Marijn)

Image Recognition

Over the past decade or so, great steps have been made in developing deep learning methods for image recognition and classification [9]. In recent years, convolutional neural networks (CNNs) have shown significant improvements in image classification [10]. It has been demonstrated that representation depth is beneficial for classification accuracy [11]. A well-known example is the family of VGG networks, which are known for their state-of-the-art performance in image feature extraction. Their setup consists of repeated patterns of 1, 2 or 3 convolution layers and a max-pooling layer, finishing with one or more dense layers. The convolutional layer transforms the input data to detect patterns, edges and other characteristics in order to be able to classify the data correctly. The main parameters with which a convolutional layer can be changed are the activation function and the kernel size [11].
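To get a feeling for the VGG-style pattern of convolution blocks followed by max pooling, the sketch below traces the tensor shapes and counts the trainable parameters of a small, hypothetical stack. The input size, the number of blocks and the filter counts are assumptions for illustration and are much smaller than in the actual VGG networks; 3x3 kernels with "same" padding, 2x2 pooling and a single-output dense layer are assumed throughout.

```python
def vgg_style_summary(height, width, channels, blocks):
    """Trace shapes through blocks of 3x3 'same' convolutions and 2x2 max pooling.

    blocks is a list of (number_of_conv_layers, number_of_filters) tuples.
    Returns the list of (height, width, channels) shapes and the parameter count,
    including one final dense layer with a single output.
    """
    shapes = [(height, width, channels)]
    params = 0
    for n_conv, filters in blocks:
        for _ in range(n_conv):
            # Each filter has 3 * 3 * input_channels weights plus one bias.
            params += (3 * 3 * channels + 1) * filters
            channels = filters
            shapes.append((height, width, channels))
        # 2x2 max pooling halves height and width and has no parameters.
        height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    # Final dense layer: one weight per flattened value plus one bias.
    params += height * width * channels + 1
    return shapes, params

shapes, params = vgg_style_summary(64, 64, 3, blocks=[(1, 16), (2, 32), (3, 64)])
for shape in shapes:
    print(shape)
print("trainable parameters:", params)
```

Each pooling step halves the spatial size while the number of channels grows, which is the repeated pattern described above.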

There are still limitations to current image recognition technologies. First of all, most methods are supervised, which means they need large amounts of labelled training data that has to be put together by someone [9]. This could be addressed by using unsupervised deep learning instead of supervised learning. For unsupervised learning, instead of large labelled databases, only some labels are needed to make sense of the world. Currently, however, there are no unsupervised methods that outperform supervised ones, because supervised learning can better encode the characteristics of a set of data. The hope is that in the future unsupervised learning will provide more general features so that any task can be performed [12]. Another problem is that small distortions can sometimes cause a wrong classification of an image [9] [13]. Shadows on an object, which cause differences in color and shape, can already be enough [14]. A different pitfall is that the output feature maps are sensitive to the specific location of the features in the input. One approach to address this sensitivity is to use a max pooling layer. Max pooling layers reduce the number of pixels in the output of the previously applied convolutional layer(s) and are used to reduce overfitting. The pool size determines the number of pixels from the input data that is turned into 1 pixel of the output data. Using max pooling makes the resulting downsampled feature maps more robust to changes in the position of the feature in the image [11].
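The robustness to small position changes mentioned above can be checked on a toy feature map. In the sketch below, two made-up 4x4 feature maps contain the same activations, shifted by one pixel, and both give the same result after 2x2 max pooling. The values are invented for illustration, and the effect only holds as long as a feature stays inside the same pooling window.

```python
def max_pool(feature_map, size):
    """Non-overlapping max pooling: each size x size window becomes one pixel."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, cols - size + 1, size)]
            for r in range(0, rows - size + 1, size)]

original = [
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
    [0, 0, 0, 0],
]
shifted = [  # the same two features, each moved one pixel to the left
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 5, 0],
    [0, 0, 0, 0],
]

print(max_pool(original, 2))  # [[9, 0], [0, 5]]
print(max_pool(shifted, 2))   # [[9, 0], [0, 5]]
```

With a pool size of 2, every 4 input pixels are turned into 1 output pixel, so the 4x4 map becomes a 2x2 map.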

Logbook

Week 1

| Name | Total hours | Break-down |
|---|---|---|
| Kevin Cox | hrs | description (Xh) |
| Menno Cromwijk | hrs | description (Xh) |
| Dennis Heesmans | hrs | description (Xh) |
| Marijn Minkenberg | 1 | Setting up wiki page (1h), X |
| Lotte Rassaerts | 3 | State of the art: image recognition (3h) |

Template

| Name | Total hours | Break-down |
|---|---|---|
| Kevin Cox | hrs | description (Xh) |
| Menno Cromwijk | hrs | description (Xh) |
| Dennis Heesmans | hrs | description (Xh) |
| Marijn Minkenberg | hrs | description (Xh) |
| Lotte Rassaerts | hrs | description (Xh) |

References

1. CS231n: Convolutional Neural Networks for Visual Recognition. (n.d.). Retrieved April 22, 2020, from https://cs231n.github.io/neural-networks-1/

2. Du, K. L., & Swamy, M. N. S. (2019). Neural Networks and Statistical Learning. New York, United States: Springer Publishing.

3. Nicholson, C. (n.d.). A Beginner’s Guide to Neural Networks and Deep Learning. Retrieved April 22, 2020, from https://pathmind.com/wiki/neural-network

4. Cheung, K. C. (2020, April 17). 10 Use Cases of Neural Networks in Business. Retrieved April 22, 2020, from https://algorithmxlab.com/blog/10-use-cases-neural-networks/#What_are_Artificial_Neural_Networks_Used_for

5. Amidi, A., & Amidi, S. (n.d.). CS 230 - Recurrent Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-recurrent-neural-networks

6. Hopfield Network - Javatpoint. (n.d.). Retrieved April 22, 2020, from https://www.javatpoint.com/artificial-neural-network-hopfield-network

7. Hinton, G. E. (2007). Boltzmann Machines. Retrieved from https://www.cs.toronto.edu/~hinton/csc321/readings/boltz321.pdf

8. Amidi, A., & Amidi, S. (n.d.). CS 230 - Convolutional Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-convolutional-neural-networks

9. Seif, G. (2018, January 21). Deep Learning for Image Recognition: why it’s challenging, where we’ve been, and what’s next. Retrieved April 22, 2020, from https://towardsdatascience.com/deep-learning-for-image-classification-why-its-challenging-where-we-ve-been-and-what-s-next-93b56948fcef

10. Lee, G., & Fujita, H. (2020). Deep Learning in Medical Image Analysis. New York, United States: Springer Publishing.

11. Simonyan, K., & Zisserman, A. (2015, January 1). Very deep convolutional networks for large-scale image recognition. Retrieved April 22, 2020, from https://arxiv.org/pdf/1409.1556.pdf

12. Culurciello, E. (2018, December 24). Navigating the Unsupervised Learning Landscape - Intuition Machine. Retrieved April 22, 2020, from https://medium.com/intuitionmachine/navigating-the-unsupervised-learning-landscape-951bd5842df9

13. Bosse, S., Becker, S., Müller, K.-R., Samek, W., & Wiegand, T. (2019). Estimation of distortion sensitivity for visual quality prediction using a convolutional neural network. Digital Signal Processing, 91, 54–65. https://doi.org/10.1016/j.dsp.2018.12.005

14. Brooks, R. (2018, July 15). [FoR&AI] Steps Toward Super Intelligence III, Hard Things Today – Rodney Brooks. Retrieved April 22, 2020, from http://rodneybrooks.com/forai-steps-toward-super-intelligence-iii-hard-things-today/