PRE2024 3 Group3

Group Members

| Name | Student number | Study | Email |

|---|---|---|---|

| Andreas Sinharoy | 1804987 | Computer Science and Engineering | a.sinharoy@student.tue.nl |

| Luis Fernandez Gu | 1804189 | Computer Science and Engineering | l.fernandez.gu@student.tue.nl |

| Alex Gavriliu | 1785060 | Computer Science and Engineering | a.m.gavriliu@student.tue.nl |

| Theophile Guillet | 1787039 | Computer Science and Engineering | t.p.p.m.guillet@student.tue.nl |

| Petar Rustić | 1747924 | Applied Physics | p.rustic@student.tue.nl |

| Floris Bruin | 1849662 | Computer Science and Engineering | f.bruin@student.tue.nl |

Planning

Roadmap

Week 1: Problem ideation and specification

Week 2: Robot design and specifications

Week 3: Begin construction of prototype of the robot

Week 4: Conduct interviews with relevant user groups

Week 5: Finish prototype of robot

Week 6: Gather feedback for the prototype

Week 7: Finalize the prototype after having taken feedback into account

Milestones

- Selecting who and what problem we are going to address

- Selecting how our robot shall address our problem

- Conducting research and a literature review on our topic

- Creating a design for the robot

- Conducting interviews to gauge the receptiveness of the robot

- Speaking with our primary user group to obtain their feedback on our proposed solution

- Creating a prototype of the robot

Introduction

Problem Statement

Speech impairment diagnosis in children is a flawed area of current healthcare systems. According to research by (citation), a large number of children with speech impediments are misdiagnosed. Interviews with a professional speech therapist suggest that one reason lies in the testing procedure itself. Current tests involve up to three hours of rigorous questioning, which is exhausting for both the child and the therapist. The interviews pointed to two problems. First, the therapist's analysis may succumb to understandable human error and bias after hours of questioning. Second, the test environment may be inadequate for the child: tests are administered in a therapist's office, where the questioning may feel harsh or uncomfortable, so the child's responses may not be fully accurate or uninhibited. The format also makes the outcome depend heavily on how the child views and interacts with the therapist performing the test. Furthermore, as with the therapist, the quality of the child's answers may decline after hours of questioning.

Therefore, our project aims to address these issues by creating a robot that does the following. First, it questions the children consistently, with no decline in the quality of its questioning as the test progresses. Second, it records the child's responses and preprocesses them so that the therapist can analyze the results easily, listen to the recordings multiple times, and do so when they have the energy and state of mind for it. Finally, it partitions the exam into smaller, gamified chunks, while aiming to keep the test as effective as a full three-hour session. This gives the children breaks and a more suitable interface, which can improve the quality of their responses. In addition, its friendly, non-threatening appearance may give the child a more comfortable and engaging exam experience, although this will need to be tested empirically.

Such a robot also separates the therapist's office from the child's location. Diagnoses can then be made in places where access to therapists is limited or nonexistent, since the robot's recordings can be sent digitally to therapists elsewhere. It also allows several therapists to contribute to a diagnosis: with consent from the child's guardians, multiple therapists can access the recordings and test results, which makes the diagnosis more robust and reduces the bias of any single therapist.

Diagnosing speech impairments in children is a complex and evolving area within modern healthcare. While early diagnosis is essential for effective treatment, current diagnostic practices can present challenges that may impact both accuracy and accessibility. Several studies and practitioner reports have highlighted inefficiencies in existing assessment models, especially for young children (e.g., ASHA, 2024[1], leader.pubs.asha.org[2]).

Traditionally, speech and language therapists (SLTs) rely on structured, in-person testing methods. These assessments often involve up to three hours of standardized questioning, which can be mentally exhausting for both the child and the therapist. Extended sessions may introduce human fatigue and subjectivity, increasing the risk of inconsistencies in analysis. Additionally, children—especially those between the ages of 3 and 5—may struggle to remain focused and responsive during lengthy appointments, which can affect the quality and reliability of their responses.

Another significant consideration is the environment in which assessments take place. Therapist offices can feel unfamiliar or intimidating to young children, potentially affecting their comfort level and behavior during testing. The child’s perception of the therapist, the formality of the setting, and the pressure of the situation can all influence the outcome of the diagnostic process. Moreover, both therapist and child performance may decline over time, introducing bias and reducing the diagnostic clarity.

To address these challenges, this project proposes the use of an interactive, speech-focused diagnostic robot designed to support and enhance speech impairment assessments. This tool aims not to replace therapists but to assist them by mitigating known sources of bias and fatigue while making the process more accessible and engaging for children.

Objectives

- Create a robot that can ask questions, record answers, apply basic preprocessing to the answers, and follow suitable execution paths for unexpected situations, such as a child crying or giving incoherent responses.

- Perform multiple tests to understand the effectiveness of the robot. The first test analyzes how well a child interacts with the robot. The second compares a therapist's analysis of the results from the device with the standard results from a current test.

- Test how well the robot performs when faced with unexpected situations.

USE Analysis

Users

Speech-Language Therapist (Primary User)

Speech-language therapists are clinicians responsible for diagnosing and treating children with speech, language, and communication challenges. They often work under high workloads and constant pressure to deliver accurate assessments quickly.

Needs:

- Efficient Diagnostic Processes: Therapists require a tool that transforms traditional 2–3 hour assessments into shorter, engaging sessions that maintain clinical rigor. By reducing patient fatigue and maintaining diagnostic quality, this approach aims to enable therapists to focus on other patients and improve overall efficiency [citation].

- Comprehensive Data Capture: The therapists need the capability to capture high-quality audio and sensor data during assessments so that each session can then be reviewed later. This comprehensive data collection supports detailed analysis and would facilitate collaboration among specialists to enhance diagnostic precision [citation].

- Intuitive Digital Tools: Therapists benefit from a secure, user-friendly dashboard that lets them manage patient records, annotate sessions, and access data remotely and efficiently. Such tools should comply with data protection regulations such as the GDPR to ensure data integrity and patient confidentiality.

Child Patient (Secondary User)

Young children aged 5–10, who are the focus of speech assessments, often experience anxiety, discomfort or boredom in traditional clinical environments. Their engagement in therapy sessions is crucial for accurate assessment and treatment outcomes.

This age range has been identified as the most suitable for early diagnosis due to several factors. By the age of 4 to 5, most children can produce nearly all speech sounds correctly, making it easier to identify atypical patterns or delays (ASHA[3], 2024; RCSLT, UK[4]). Children within this range also possess a higher level of cognitive and behavioral development, which allows them to better understand and follow test instructions—critical for accurate assessments.

Early identification is crucial because speech sound disorders (SSDs) often begin to emerge at this stage. Addressing these issues early greatly improves outcomes in speech development, literacy, and overall academic performance (NHS, 2023[5]; European Journal of Pediatrics, 2013[6]).

While some speech impairments, such as developmental language disorders or fluency issues, are not apparent until after age 5, the younger demographic (ages 3–5) poses a unique challenge. Children aged 3–5 often have limited attention spans (6–12 minutes) and are in Piaget’s preoperational stage of development (CNLD.org[7]). As a result, they are often less willing or able to engage with structured diagnostic tasks. Diagnostic tools for this group must therefore be simple, engaging, and developmentally appropriate to ensure accurate and efficient assessment.

Needs:

- Interactive, Game-Like Experience: The device must offer a playful and interactive interface that transforms the clinical assessment into “playtime”, effectively encouraging natural participation. Such an approach has been shown to improve engagement and make the therapeutic process feel more like play ([citation]).

- Immediate, Clear Feedback: Children benefit from receiving real-time visual and auditory cues that help guide them through the session [citation]. The integration of LED indicators and sound effects aims to keep the patient engaged and focused, and to indicate progress during exercises.

Parent/Caregiver (Support User)

Parents or caregivers play an essential role in supporting the child’s therapy and need to feel confident that the process is both secure and effective. To ensure the therapy is reinforced outside the clinical setting, the parents need to be fully on-board.

Needs:

- Data Security and Transparency: Caregivers require assurance that all recordings and sensitive data are stored securely and handled in strict compliance with healthcare regulations, and that the collected data is used solely for clinical purposes relating to their child. This aims to build trust in the technology and to guarantee that their child’s information remains confidential.

- Accessible Progress Monitoring: A clear and intuitive interface should be available for caregivers to follow their child’s progress without disrupting or getting involved in the therapy session. This transparency aims not only to keep the parents informed, but also to motivate them to support their child’s ongoing development [citation].

Personas

Scenarios

Interactive Diagnostic Session

In a typical session, the therapist initiates the diagnostic process via a secure digital dashboard, or the parent starts the robot with a button. The robot then engages the child through a series of interactive, game-like exercises designed to incorporate standard diagnostic questions in an entertaining manner. During the session, the system records high-fidelity audio, video, and sensor data, providing a dataset for later review and analysis. This approach aims not only to make the sessions more inviting for the child, but also to give the therapist comprehensive data on longer assessments that would otherwise not be feasible.

Remote and Asynchronous Review

After the interactive session, the therapist logs into a secure platform where all recorded data is available for review. They can pause, rewind, and annotate key segments, which allows for a detailed and thoughtful analysis of the child’s responses and non-verbal cues.

Collaborative Consultation

In some cases, additional input or consultation from colleagues is required. The recorded sessions can then be shared with multiple experts after obtaining the necessary consents. This collaborative consultation allows for reviewing and discussing the diagnostic findings, leading to more comprehensive and consensus-driven treatment plans. Such an approach fosters an environment of continuous professional development and shared expertise [citation].

Requirements

For the Therapist

- Robust Hardware Integration: The system must incorporate reliable and durable hardware to ensure diagnostic sessions are completed without data loss or interruption. The design should aim to minimise technical failures during assessments, ensuring that every session's data remains intact and can be reviewed later.

- User-Friendly Dashboard: An intuitive and efficient digital dashboard is required to present clinicians with information on each recorded session. The dashboard should facilitate rapid review and analysis, with the goal of enabling therapists to quickly identify patterns or issues in speech. By streamlining navigation and data review, the tool should help therapists manage multiple patients efficiently while maintaining high diagnostic accuracy.

- Secure Remote Accessibility: In today’s increasingly digital healthcare environment, therapists must be able to access patient data remotely. The system must employ state-of-the-art encryption and robust user authentication protocols to protect sensitive patient data from unauthorised access. Having robust security is crucial for both clinicians and patients, as it reassures all parties that the integrity and confidentiality of the clinical data are maintained as per today’s standards.

For the Child and Parent

- Engaging and Comfortable Design: For young children, the physical design of the robot plays a crucial role in therapy success. A soft, plush exterior coupled with interactive buttons and LED feedback systems can create a friendly, non-intimidating interface that fosters positive human-robot interaction. Ultimately, the sessions should be a fun experience for the child, since otherwise no progress would be made and no speech data would be collected.

- Responsive Feedback Systems: Dynamic auditory and visual cues are essential components that should guide children through each step of a session. Real-time feedback, such as flashing LEDs synchronised with encouraging sound effects, aims to help the child understand when they are performing correctly and to gently correct mistakes when necessary. This immediate reinforcement not only keeps the child engaged and motivated but also provides parents with clear, observable evidence of their child’s progress. In essence, these cues ensure that the therapy sessions are both interactive and instructive.

- Robust Data Security: The system must implement comprehensive security measures such as end-to-end encryption and secure storage protocols to prevent unauthorised access or data breaches. The level of protection must reassure both parents and therapists that the child’s data is handled with the highest level of care and confidentiality. Adhering strictly to healthcare regulations is essential to maintain trust and protect privacy throughout the therapy process.

Society

Early, engaging assessments are reported to enhance long-term outcomes for children with speech challenges. Some studies have demonstrated that gamified diagnostic tools reduce anxiety and enable quicker detection of speech impediments, so that children can receive timely interventions during their formative years [citation]. Faster diagnosis can shorten the long waiting lists currently experienced in many places, ultimately leading to better educational and social outcomes for those on the waiting lists. Furthermore, digital platforms that enable “over-the-air” reviews and multi-organisation consultations have been shown to improve diagnostic accuracy and continuity of care [citation]. Digital care networks have also been shown to enhance collaboration among healthcare professionals, giving each patient an opportunity to receive additional evaluation from other experts [citation]. Early interventions not only address immediate speech challenges but also contribute to improved educational achievement and social integration in the long term. Some research shows that children who receive timely, engaging assessments exhibit better communication skills later in life, which promotes their inclusion in communities, activities, and the like [citation]. These improvements in communication and social skills ultimately contribute to a healthier, more productive society.

Enterprise

By transforming lengthy, in-person diagnostic sessions into concise, interactive assessments, the solution enables therapists to focus on cases that require direct intervention while still collecting high-quality diagnostic data. Reports have shown [citation] that digital diagnostic tools can increase clinician productivity significantly, thereby enhancing overall workflow efficiency and allowing for more timely patient care. The system’s capacity to collect anonymised diagnostic data offers opportunities in refining assessment algorithms and personalising treatment plans. Likewise, such refinement can be of service to a broader research initiative and other healthcare experts. Although reducing the need for extended in-person sessions inherently lowers operational costs, the true value of the technology lies in its ability to improve patient outcomes and support innovative care models. Some studies have highlighted that integrating such digital tools leads to a more adaptive healthcare ecosystem that better meets the needs of both patients and providers [citation].

State of the Art

Existing robots

To understand how we can create the best robot for our users, we have to look at which existing robots relate to our project. We analyzed the following robots and considered what we can adopt from them for our own robot.

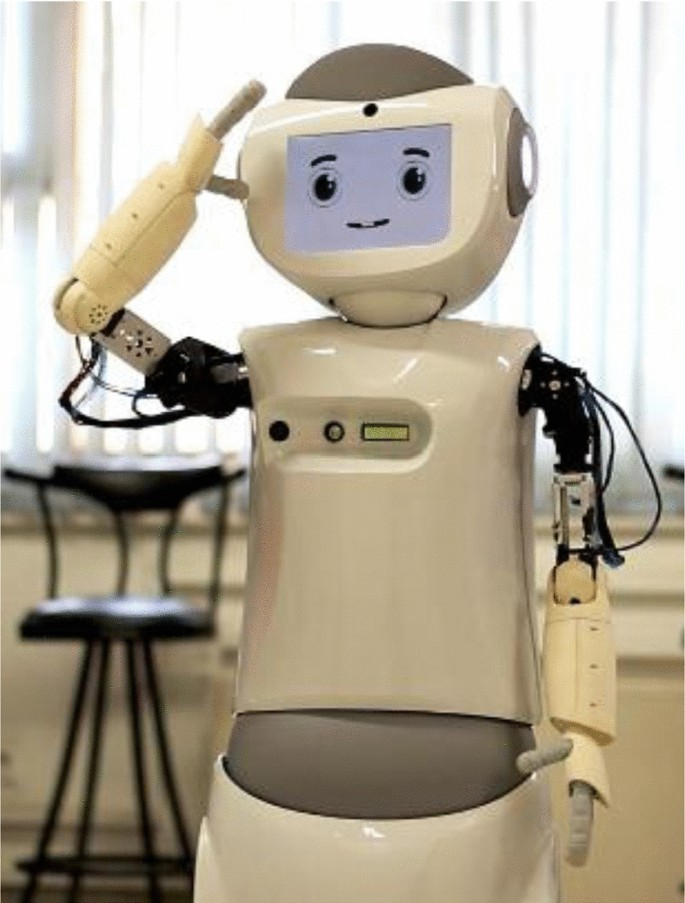

RASA robot

The RASA (Robot Assistant for Social Aims) robot is a socially assistive robot that has been used in speech therapy sessions for children with language disorders. The robot uses facial expressions that make therapy sessions more engaging for the children. It also uses a camera with facial expression recognition, based on convolutional neural networks, to observe how the children are speaking. This helps the therapist in improving the child's speech. Studies have shown that incorporating the RASA robot into therapy sessions increases children's engagement and improves language development outcomes.

Automatic Speech Recognition

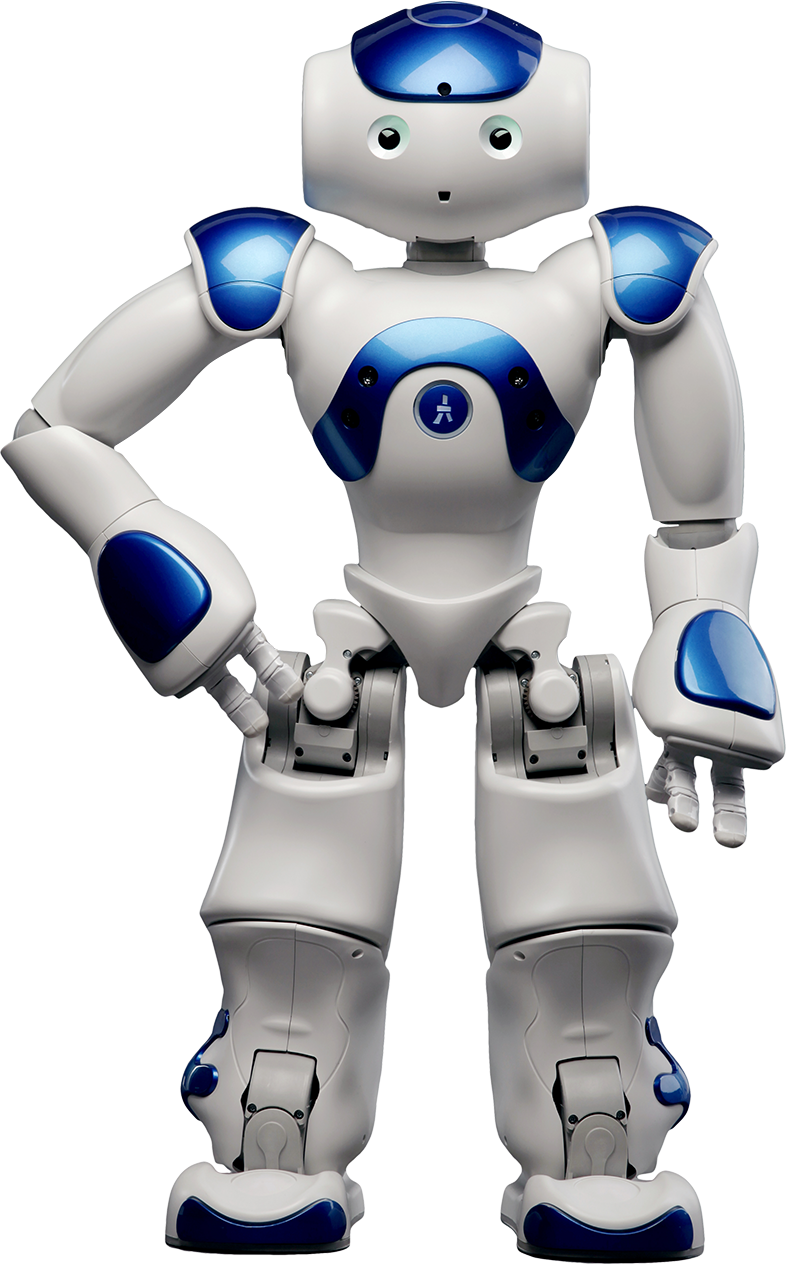

Nao robot

Developed by Aldebaran Robotics, the Nao robot is a programmable humanoid robot widely used in educational and therapeutic settings. Its advanced speech recognition and production capabilities make it a valuable tool in assisting speech therapy for children, helping to identify and correct speech impediments through interactive sessions.

Kaspar robot

Requirements

The aim of this project is to develop a speech therapy plush robot specifically designed to address issues in misdiagnoses of speech impediments in children. Our plush robot solution addresses these issues by transforming lengthy, traditional speech assessments into engaging, short, interactive sessions that are more enjoyable and less exhausting for both patients (children aged 5-10) and therapists. By providing an interactive plush toy equipped with audio recording, playback capabilities, local data storage, intuitive user interaction via buttons and LEDs, and secure data transfer, we aim to significantly reduce the strain on SLT resources and enhance diagnostic accuracy and patient engagement.

To achieve this, we must satisfy a set of requirements. The subsections below cover design specifications, functionality, user interaction, data handling, and performance goals, each with a test plan. All requirements are prioritized using the MoSCoW method:

| Category | Definition |

|---|---|

| Must Have | Essential for core functionality; mandatory for the product's success. |

| Should Have | Important but not essential; enhances usability significantly. |

| Could Have | Beneficial enhancements that can be postponed if necessary. |

| Won’t Have | Explicitly excluded from current scope and version. |

Design Specifications

| Ref | Requirement | Priority |

|---|---|---|

| DS1 | Plush toy casing made from child-safe, non-toxic materials. | Must |

| DS2 | Secure internal mounting of microphone, speaker, battery, and processing units. | Must |

| DS3 | Accessible button controls (Power, Next Question, Stop Recording). | Must |

| DS4 | Visual feedback via LEDs (Recording, Test Complete, Error, Low Storage, Low Battery). | Must |

| DS5 | The robot casing should withstand minor physical impacts or drops. | Should |

| DS6 | Device will not be waterproof. | Won’t |

The plush robot’s physical design prioritizes child safety, comfort, and ease of interaction. Using child-safe materials (DS1) and securely mounted electronics (DS2) ensures safety, while intuitive button controls (DS3) and clear LED indicators (DS4) simplify usage for young children. Durability considerations (DS5) ensure that the product withstands typical handling by children. Waterproofing (DS6) is excluded due to practical constraints and because it is not needed in the setting where the robot will be used.

The test plan is as follows:

| Ref | Precondition | Action | Expected Output |

|---|---|---|---|

| DS1 | N/A | Inspect the plush toy casing and its material certificates/specifications. | Plush toy casing is certified as child-safe and non-toxic. |

| DS2 | N/A | Inspect internal components of the plush toy. | Microphone, speaker, battery, and processing units are securely mounted internally. |

| DS3 | Plush robot is powered on. | Press each button (Power, Next Question, Stop Recording). | Each button activates its designated functionality immediately. |

| DS4 | Plush robot is powered on. | Observe LED indicators when performing different operations (recording, completing test, causing an error, storage nearly full, battery low). | LEDs correctly indicate each state as intended. |

| DS5 | N/A | Simulate minor drops or impacts on a safe surface. | Plush robot casing withstands minor impacts without significant damage or loss of functionality. |

| DS6 | N/A | N/A | N/A |
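
DS4 lists several LED indications that can be active at the same time, for example recording while the battery is low. The sketch below shows one possible way to decide which indication to show. The priority order, the `IDLE` default, and all names are assumptions made for illustration and are not part of the specification.

```python
from enum import Enum


class LedState(Enum):
    """Indications listed in DS4, plus an assumed idle default."""
    IDLE = "idle"
    RECORDING = "recording"
    TEST_COMPLETE = "test_complete"
    LOW_STORAGE = "low_storage"
    LOW_BATTERY = "low_battery"
    ERROR = "error"


# Hypothetical priority order: the first active state in this list wins,
# so faults are never hidden behind the normal "recording" indication.
PRIORITY = [
    LedState.ERROR,
    LedState.LOW_BATTERY,
    LedState.LOW_STORAGE,
    LedState.RECORDING,
    LedState.TEST_COMPLETE,
]


def select_led_state(active):
    """Return the highest-priority state in `active`, or IDLE if none is set."""
    for state in PRIORITY:
        if state in active:
            return state
    return LedState.IDLE
```

With this ordering, `select_led_state({LedState.RECORDING, LedState.LOW_BATTERY})` yields `LedState.LOW_BATTERY`. Whether a warning should override the recording light is a design choice to confirm with users.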

Functionalities

| Ref | Requirement | Priority |

|---|---|---|

| FR1 | Provide pre-recorded prompts for speech exercises. | Must |

| FR2 | Capture high-quality audio recordings locally in WAV format. | Must |

| FR3 | Securely store audio locally to maintain data privacy. | Must |

| FR4 | Enable intuitive button-based session controls. | Must |

| FR5 | Support secure data transfer via USB and/or Bluetooth. | Must |

| FR6 | Implement basic noise-filtering algorithms. | Should |

| FR7 | Automatically shut down after prolonged inactivity to conserve battery. | Should |

| FR8 | Optional admin panel for therapists to configure exercises and session lengths. | Could |

| FR9 | Optional voice activation for hands-free interaction. | Could |

| FR10 | Explicitly exclude cloud storage for privacy compliance. | Won’t |

These functional requirements reflect the need to split lengthy therapy sessions into manageable segments (FR1, FR4), to securely capture and store speech data for later analysis (FR2, FR3, FR5), and to keep interaction user-friendly. Privacy and data security are prioritized by excluding cloud storage (FR10). Noise filtering (FR6), auto-shutdown (FR7), an admin panel (FR8), and voice activation (FR9) further enhance practical usability and efficiency but are not essential for the core functionality of the robot.
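
Local WAV capture (FR2, FR3) could be prototyped with Python's standard `wave` module. The following is a minimal sketch and not the project's implementation. The 16 kHz mono 16-bit format, the function names, the file name, and the synthetic sine tone that stands in for microphone input are all assumptions.

```python
import math
import struct
import tempfile
import wave
from pathlib import Path


def write_wav(path, samples, sample_rate=16000):
    """Write 16-bit mono PCM samples (ints in [-32768, 32767]) to a WAV file."""
    with wave.open(str(path), "wb") as wav_file:
        wav_file.setnchannels(1)            # single microphone (assumption)
        wav_file.setsampwidth(2)            # 2 bytes per sample = 16-bit audio
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(struct.pack(f"<{len(samples)}h", *samples))


def wav_duration_seconds(path):
    """Read the file back and return its duration in seconds."""
    with wave.open(str(path), "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def make_tone(duration_s=0.5, freq_hz=440.0, sample_rate=16000):
    """Stand-in for microphone input: a sine tone as a list of ints."""
    count = int(duration_s * sample_rate)
    return [
        int(12000 * math.sin(2 * math.pi * freq_hz * i / sample_rate))
        for i in range(count)
    ]


def demo_wav_roundtrip():
    """Write a tone to a temporary file and return the duration read back."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "prompt_01.wav"   # hypothetical file name
        write_wav(path, make_tone())
        return wav_duration_seconds(path)
```

On the actual device, the sample list would come from the microphone driver, which this sketch does not cover.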

The test plan is as follows:

| Ref | Precondition | Action | Expected Output |

|---|---|---|---|

| FR1 | Plush robot is powered on. | Initiate a therapy session. | Robot plays clear pre-recorded speech exercise prompts correctly. |

| FR2 | Plush robot is powered on and ready to record. | Record speech audio using provided prompts. | High-quality WAV audio recordings are stored locally. |

| FR3 | Recording completed. | Inspect local storage on the plush robot (for example via SSH or USB). | Audio recordings are securely stored locally. |

| FR4 | Plush robot is powered on. | Use button controls to navigate through a session. | Session navigation (Start/Stop, Next Question) operates smoothly via buttons. |

| FR5 | Plush robot has recorded data. | Connect robot via USB/Bluetooth to transfer data securely. | Data transfer via USB/Bluetooth completes successfully with no data corruption or leaks. |

| FR6 | Robot is powered on and ready to record. | Record speech in a moderately noisy environment. | Recorded audio demonstrates effective noise filtering with reduced background noise. |

| FR7 | Plush robot powered on, idle for prolonged period. | Leave robot idle for X+ minutes. | Robot automatically powers off to conserve battery. |

| FR8 | Admin panel feature implemented. | Therapist configures new exercises/session lengths via admin panel. | Admin panel accurately saves and applies changes to exercises/session durations. |

| FR9 | Voice activation implemented. | Use voice commands to navigate exercises. | Robot successfully responds to voice commands. |

| FR10 | Check product documentation/design specification. | Inspect data storage and upload protocols. | Confirm explicitly stated absence of cloud storage capability. |
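
The auto-shutdown behaviour in FR7 can be sketched as a small idle timer that is reset on every button press. This is an illustrative sketch under assumptions: the class name and the injectable `clock` parameter are hypothetical, and the timeout is left as a parameter because the requirement does not fix a value.

```python
import time


class IdleTimer:
    """Tracks time since the last user interaction (sketch for FR7)."""

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock                  # injectable so tests need not wait
        self._last_activity = clock()

    def touch(self):
        """Call on every button press or other interaction."""
        self._last_activity = self._clock()

    def should_shut_down(self):
        """True once the device has been idle for at least timeout_s seconds."""
        return self._clock() - self._last_activity >= self.timeout_s
```

A main loop would call `touch()` from each button handler and poll `should_shut_down()` periodically. Passing a fake clock makes the FR7 test case checkable without leaving the robot idle for the full timeout.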

UI/UX

| Ref | Requirement | Priority |

|---|---|---|

| UI1 | Clear visual indication of active recording status through LEDs. | Must |

| UI2 | Easy navigation between prompts using physical buttons. | Must |

| UI3 | Dedicated button to stop recording and securely store audio. | Must |

| UI4 | Audio or Visual notifications/indications when storage or battery capacity is low. | Should |

| UI5 | Optional voice commands to navigate exercises. | Could |

| UI6 | Exclude advanced manual audio processing controls for simplicity. | Won’t |

User interactions are designed to be intuitive, enabling children to comfortably navigate therapy sessions (UI1, UI2, UI3) without assistance. Audio or visual notifications (UI4) warn when storage or battery capacity is low, and optional voice commands (UI5) may further simplify operation. Advanced settings are deliberately excluded (UI6) to keep the device simple for child users and because of the limited time available for the project.

The test plan is as follows:

| Ref | Precondition | Action | Expected Output |

|---|---|---|---|

| UI1 | Plush robot is powered on. | Initiate audio recording session. | LEDs clearly indicate active recording status immediately. |

| UI2 | Session ongoing. | Press "Next" button. | Plush robot navigates to next prompt immediately and clearly. |

| UI3 | Session ongoing, recording active. | Press dedicated "Stop" button. | Recording stops immediately and audio securely stored. |

| UI4 | Low storage/battery conditions simulated. | Fill storage nearly full and/or drain battery low. | Robot issues clear audio or visual notifications indicating low storage or battery. |

| UI5 | Voice commands implemented. | Navigate prompts using voice commands. | Robot accurately navigates prompts using voice interaction. |

| UI6 | Check product documentation/design specification. | Verify available UI options. | Confirm explicitly stated absence of advanced audio processing UI controls. |
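
The button-driven flow in UI2 and UI3 can be modelled as a small state machine. The sketch below is one possible interpretation rather than the project's design. It assumes that pressing "Next" first saves any active recording and that recording restarts automatically with each new prompt. The class and attribute names are hypothetical, and the `saved` list only records which prompts have a stored answer.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Button-driven prompt navigation (sketch for UI2 and UI3)."""
    prompts: list
    index: int = 0
    recording: bool = False
    finished: bool = False
    saved: list = field(default_factory=list)

    def start(self):
        """Begin the session: the first prompt plays and recording starts."""
        self.index = 0
        self.finished = not self.prompts
        self.recording = not self.finished

    def press_stop(self):
        """Stop button: end the active recording and keep it."""
        if self.recording:
            self.saved.append(self.prompts[self.index])
            self.recording = False

    def press_next(self):
        """Next button: save any active recording, then advance."""
        if self.finished:
            return
        self.press_stop()
        if self.index + 1 < len(self.prompts):
            self.index += 1
            self.recording = True
        else:
            self.finished = True             # would trigger the Test Complete LED
```

Keeping this logic free of hardware calls means the navigation rules in the UI test plan can be checked on a laptop before the buttons are wired up.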

Data Handling and Privacy

| Ref | Requirement | Priority |

|---|---|---|

| DH1 | Local, offline storage of all collected data. | Must |

| DH2 | No cloud uploads or third-party data sharing. | Must |

| DH3 | Optional encryption of stored data. | Should |

| DH4 | Facility for deletion of data post-transfer. | Should |

| DH5 | Optional automatic deletion feature to manage storage space. | Could |

| DH6 | No external analytics or third-party integrations. | Won’t |

Data privacy compliance is paramount, which is why all data must be stored locally (DH1) without cloud exposure (DH2). Encryption (DH3) and data deletion capabilities (DH4, DH5) strengthen security, while explicitly excluding third-party integrations (DH6) aligns with data protection and privacy goals.

The test plan is as follows:

| Ref | Precondition | Action | Expected Output |

|---|---|---|---|

| DH1 | Robot has collected data. | Inspect robot’s storage location and methods. | Confirm data is stored locally/offline with no online/cloud-based storage. |

| DH2 | Data transfer process. | Attempt transferring data and monitor network activity. | No cloud uploads or third-party data sharing occurs during or after data transfer. |

| DH3 | Data encryption implemented. | Attempt accessing stored data directly without proper keys. | Data is inaccessible or unreadable without proper decryption. |

| DH4 | Data has been transferred. | Delete data via provided facility after transfer. | Data is successfully deleted from local storage immediately after confirmation. |

| DH5 | Old data exists on the device. | Fill storage and observe automatic deletion feature. | Old data is automatically deleted to maintain adequate storage space. |

| DH6 | N/A | Inspect software. | Confirm absence of external analytics and third-party integrations... |
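
The optional automatic deletion feature (DH5) could work by removing the oldest recordings until the storage directory fits a size budget. The sketch below illustrates that idea using only the standard library. The function names, the `.wav` file pattern, and the byte budget are assumptions. A real device would probably also need to check that a recording has already been transferred (DH4) before deleting it, which this sketch does not do.

```python
import os
import tempfile
from pathlib import Path


def prune_oldest(directory, max_bytes):
    """Delete the oldest .wav files until the total size is within max_bytes.

    Returns the names of the deleted files, oldest first.
    """
    files = sorted(Path(directory).glob("*.wav"), key=lambda p: p.stat().st_mtime)
    total = sum(p.stat().st_size for p in files)
    deleted = []
    for path in files:
        if total <= max_bytes:
            break
        total -= path.stat().st_size
        path.unlink()
        deleted.append(path.name)
    return deleted


def demo_prune():
    """Create three 100-byte files with increasing timestamps, then prune."""
    with tempfile.TemporaryDirectory() as tmp:
        for age, name in enumerate(["a.wav", "b.wav", "c.wav"]):
            path = Path(tmp) / name
            path.write_bytes(b"\0" * 100)
            os.utime(path, (1000 + age, 1000 + age))   # a.wav is the oldest
        deleted = prune_oldest(tmp, max_bytes=150)
        remaining = sorted(p.name for p in Path(tmp).glob("*.wav"))
        return deleted, remaining
```

In the demo, 300 bytes of recordings are reduced to fit a 150-byte budget, so the two oldest files are removed and only `c.wav` remains.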

Performance

| Ref | Requirement | Priority |

| PR1 | Prompt playback latency under one second after interaction. | Must |

| PR2 | Recording initiation latency under one second post-activation. | Must |

| PR3 | Real-time or near-real-time audio noise filtering. | Could |

| PR4 | Optional speech detection for audio segment trimming. | Could |

| PR5 | Exclusion of cloud-based or GPU-intensive AI processing. | Won’t |

Performance standards demand rapid, seamless interaction (PR1, PR2) to maintain user engagement and provide a good user experience. Noise filtering (PR3) would make the recordings easier for therapists to work with, but it is not necessary for the robot to function. Optional speech detection (PR4) is likewise a nice-to-have, and cloud-based or GPU-intensive AI processing (PR5) is explicitly excluded to keep the system simple and, most importantly, to protect data security.
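To illustrate what the optional speech detection (PR4) could look like without any AI processing, here is a minimal amplitude-threshold sketch. It assumes the audio is already available as a list of samples normalised to the range -1 to 1. The function name `trim_silence` and the threshold value are our own assumptions, not part of the current design.

```python
def trim_silence(samples: list[float], threshold: float = 0.02, pad: int = 0) -> list[float]:
    """Drop leading and trailing samples whose absolute amplitude is below threshold.

    `pad` keeps that many extra samples on each side of the detected speech.
    Pauses inside the speech are left untouched.
    """
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []  # nothing above the threshold: treat the clip as silence
    start = max(loud[0] - pad, 0)
    end = min(loud[-1] + pad + 1, len(samples))
    return samples[start:end]
```

A fixed threshold is easily fooled by background noise, so this would only be a starting point for the real feature.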

The test plan is as follows:

| Ref | Precondition | Action | Expected Output |

|---|---|---|---|

| PR1 | Plush robot powered on. | Initiate speech prompt via interaction/button press. | Prompt playback latency is under one second. |

| PR2 | Plush robot powered on. | Start recording via interaction/button press. | Recording initiation latency is under one second. |

| PR3 | Noise filtering feature implemented. | Record audio in background-noise conditions and observe immediate playback. | Real-time or near-real-time audio noise filtering noticeably reduces background noise. |

| PR4 | Speech detection implemented. | Record speech with silent pauses. | Audio segments are correctly trimmed to include only relevant speech portions. |

| PR5 | N/A | Inspect software. | Confirm absence... |
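The latency checks for PR1 and PR2 could be automated with a small timing harness like the sketch below. It assumes the robot software exposes a callable that returns once playback or recording has actually started. The names `measure_latency` and `meets_latency_budget` are hypothetical, and the one-second budget is taken from PR1 and PR2.

```python
import time
from typing import Callable


def measure_latency(action: Callable[[], None]) -> float:
    """Return the wall-clock seconds taken by `action`."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def meets_latency_budget(action: Callable[[], None], budget_s: float = 1.0, runs: int = 5) -> bool:
    """True if the slowest of `runs` measurements stays under `budget_s`."""
    worst = max(measure_latency(action) for _ in range(runs))
    return worst < budget_s
```

Checking the worst run rather than the average is a deliberate choice here: a single slow response is enough to break the child's engagement.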

Legal & Privacy Concerns

Note: We only concern ourselves with EU legislation and regulations, as this is where we reside. Furthermore, most of these regulations apply to a full-scale implementation of this robot.

We will mainly refer to the following regulations and legislation:

- General Data Protection Regulation GDPR (https://gdpr-info.eu/)

- Medical Device Regulation MDR (https://eur-lex.europa.eu/eli/reg/2017/745/oj/eng)

- AI Act (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32024R1689)

- UN Convention on the Rights of the Child

- AI Ethics Guidelines (EU & UNESCO)

- Product Liability Directive (EU 85/374/EEC)

- ISO 13482 (Safety for Personal Care Robots)

- EN 71 (EU Toy Safety Standard)

Data Collection & Storage

The robot we want to build for this project requires specific audio snippets and data to be collected and stored somewhere that the therapists and professionals responsible for the patient's care can access. This data is sensitive, however, and must be secured and protected so that it is accessible only to those permitted to access it. We should also store only the minimum amount of data required about the patient using the robot. In the EU, these data collection and storage concerns are covered by Articles 5 and 9 of the GDPR.

In this context, this means the data collected by the robot should at most include:

- Speech audio data of the patient needed by the therapist to help treat the patient's impediment

- Minimal identification data to know which patient has what data.

- Other data may be needed, but its collection must be specifically justified (subject to change)

Furthermore all the data collected by the robot must be:

- encrypted, so that it cannot be interpreted if it is stolen

- securely stored, so it can be accessed by the relevant permitted parties
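As a minimal sketch of the data minimisation described above, a stored recording could be reduced to a pseudonymous patient ID plus a reference to the audio file. The field names, the `pseudonymous_id` helper and the use of HMAC-SHA256 are our own assumptions. Encryption of the audio itself is not shown, because Python's standard library does not include a symmetric cipher and a vetted cryptography library would be needed for that.

```python
import hashlib
import hmac
from dataclasses import dataclass


def pseudonymous_id(patient_name: str, secret_key: bytes) -> str:
    """Derive a stable identifier that cannot be reversed without the key."""
    digest = hmac.new(secret_key, patient_name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


@dataclass(frozen=True)
class RecordingEntry:
    patient_id: str  # pseudonymous ID, never the child's name
    prompt_ref: str  # which test prompt the child answered
    audio_file: str  # path to the locally stored recording
```

Only the therapist, who holds the secret key and the patient list, can link an entry back to a child.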

User Privacy & Consent

For the robot to be used and for data to be collected and shared with the relevant parties, the patient must consent to this and must also hold specific rights over the data (creation, deletion, restriction, etc.). On top of this, depending on the age of the patient, certain restrictions must be placed on the way data is shared, and all patients must have a way to opt out and withdraw consent from data collection if necessary. These points are covered in Articles 6, 7 and 8 of the GDPR.

In essence this means the user must have the most power and control over the data collected by the robot, and the data collected and its use must be made explicitly clear to the user to make sure that its function is legal and ethical.
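The opt-out requirement can be sketched as a consent record that the robot checks before every recording. This is an illustration only: the class `ConsentRecord`, its fields and the `may_record` helper are hypothetical, and a real implementation would also need to handle the deletion and restriction rights mentioned above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    patient_id: str                      # pseudonymous ID (hypothetical field)
    granted_by: str                      # e.g. "parent" for a young child
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    def withdraw(self) -> None:
        """Record the withdrawal; later recordings must be refused."""
        if self.withdrawn_at is None:
            self.withdrawn_at = datetime.now(timezone.utc)

    @property
    def active(self) -> bool:
        return self.withdrawn_at is None


def may_record(consent: Optional[ConsentRecord]) -> bool:
    """Deny by default: no consent record means no recording."""
    return consent is not None and consent.active
```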

Security Measures

Since we must exchange sensitive data between the patient and therapist, data must be secured and protected during transmission, storage and access. The relevant requirements are specified in Article 32 of the GDPR (Data Security Requirements).

This means that data communication must be end-to-end encrypted, and there must be secure and strong authentication protocols across the entire system. On the therapist's end, there must be role-based access control (RBAC) so that only the relevant admins can access the data. For long-term use, it should be possible to apply software updates to improve security.
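A role-based access check of the kind described above could look like the following sketch. The roles, the action names and the permission table are purely hypothetical examples. Which roles exist and what they may do would have to be decided together with the therapists.

```python
from enum import Enum


class Role(Enum):
    THERAPIST = "therapist"
    ADMIN = "admin"
    PARENT = "parent"


# Hypothetical permission table: which roles may perform which action.
PERMISSIONS = {
    "read_recordings": {Role.THERAPIST},
    "delete_recordings": {Role.THERAPIST, Role.PARENT},
    "manage_accounts": {Role.ADMIN},
}


def is_allowed(role: Role, action: str) -> bool:
    """Deny by default: unknown actions and unlisted roles are rejected."""
    return role in PERMISSIONS.get(action, set())
```

Denying by default means that a forgotten entry in the table results in a refused request rather than a data leak.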

Legal Compliance & Regulations

Since this robot can be considered as a health related or medical device, we must check and make sure that the data collected is used and treated as medical data. All regulations relevant to this are specified in the Medical Device Regulation.

This robot may also have certain AI-specific features or functionalities, so it must also adhere to the regulations and laws in the AI Act so that the functionality and usage of the robot are ethical.

Ethical Considerations

Since the patients using this device and interacting with it are children, we must make sure that the interactions with the child are ethical and the way in which data is used and analysed in order to form a diagnosis is not biased in any sort of way.

The robot must minimize the psychological risks of AI-driven diagnosis and prevent any distress, anxiety or deception that the interaction could cause. Training assessments should be analysed in a fair and unbiased manner, and decisions on treatment and on the data required for a particular stage of treatment should be made almost entirely by the therapist, with minimal AI involvement.

These are all outlined in the AI Ethics Guidelines and article 16 of the UN Convention on the Rights of the Child.

Third-Party Integrations & Data Sharing

Since we share the data collected by the robot with the therapist, strict data-sharing policies that require parental/therapist consent must be in place. Furthermore, if we use any third-party services, such as cloud storage providers, AI tools, or healthcare platforms, we must make sure the data is fully anonymised so that there is no risk of re-identification.

This is so we adhere to Article 28 of the GDPR.

Liability & Accountability

In case of malfunction or a potential data leak, we must make sure the responsible parties are held accountable. This is especially important for AI functionalities: responsibility cannot be placed on an AI system itself, so it must be placed on the manufacturers and programmers.

If there are technical issues with the function of the robot or with the encryption of data transmission, we (the creators of the product) must be held accountable. If there are issues with the storage of data due to failures of third-party systems, the creators of those systems must be held accountable for the issues in their systems. If the therapist or medical professional treating a patient leaks data or provides bad treatment, intentionally or otherwise, they must also be held accountable for their conduct and actions.

These are all specified in the Product Liability Directive under manufacturer accountability.

User Safety & Compliance

Since the robot interacts directly with children, we ensure its physical safety through non-toxic materials and a child-safe design, comply with toy safety regulations if applicable, prevent harmful AI behaviour by avoiding misleading feedback and ensuring therapist oversight, and adhere to assistive technology standards for accessibility and alternative input methods.

This is so we comply with ISO 13482 and EN 71 (EU Toy Safety Standard).

Interviews and Data collection

We will also conduct interviews on this matter with several relevant volunteers as well as experts in the field. To ensure ethical standards, we will obtain informed consent from all participants, clearly explain the purpose of the interviews, and allow them to withdraw at any time. Confidentiality will be maintained by anonymizing responses and securely storing data. Additionally, we will follow ethical guidelines for research involving human subjects, ensuring that no harm or undue pressure is placed on participants.

Design

Prototype

Device Description


Appearance:
