PRE2022 3 Group1

| Name | Student number | Major |
|---|---|---|
| Geert Touw | 1579916 | BAP |
| Luc van Burik | 1549030 | BAP |
| Victor le Fevre | 1603612 | BAP |
| Thijs Egbers | 1692186 | BCS |
| Adrian Kondanari | 1624539 | BW |
| Aron van Cauter | 1582917 | BBT |

Project plan

Problem statement and objectives

In a 2020 study[1], researchers examined 100 reports of man overboard (MOB) incidents, using 114 parameters to create an MOB event profile. In 88 cases the casualty died as a result of the MOB incident: 34 were assumed dead and 54 were witnessed dead. Of the 54 witnessed deaths, 18 occurred before the rescue and 31 after the rescue; in 5 cases this is unknown. The cause of death was recorded in 42 cases; the most common cause was drowning (26), followed by trauma (9), cardiac arrest (4) and hypothermia (3). Based on this study, the chance of surviving an MOB event is slim (12 of the 100 cases, i.e. 12%). We aim to increase the survival chance by developing a device that can locate the victim within 10 minutes and provide the victim with life-saving equipment such as a ring life buoy.

Scenario

The 2020 study[1] showed that 53% of MOB events happen on cargo ships, so our focus lies on developing a drone for a cargo ship. We assume that the weather conditions are mild at the time of the accident and during the search and rescue operation, i.e. small waves, a mild breeze, little rain and an average sea air temperature. We also assume that it is nighttime at the time of the accident and that there is a single victim who is capable of staying afloat, i.e. has no major or life-threatening injuries.

We assume that the crew knows within X minutes that a man has fallen overboard, so that the general search area is known.

Users

The end users of our product are personnel of cargo ships, shipping companies that own a fleet of cargo ships, ports, and possibly the military.

Requirements: MoSCoW method

Must

- Have sensors to detect a person within 10 minutes

- Have a communication system to communicate with the involved ship

- Be able to withstand an air temperature range of -3°C to 36°C.

- Be able to operate in the dark

- Be able to operate in the rain

- Be able to withstand winds up to Beaufort force 4.

Should

- Have life assist systems

- Resist (bad) weather to a degree

- Have a person recognition algorithm

Could

- Have communication between victim and ship

Won’t

- Have the ability to take the person to safety

Approach milestones and deliverables

TODO

Task division

| Person | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Week 6 | Week 7 | Week 8 |
|---|---|---|---|---|---|---|---|---|
| Luc | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): search patterns, remote drone control > necessary equipment | Search pattern and area, TIC, (remote control) | | | | |
| Thijs | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): communication systems > necessary equipment | Communication systems | | | | |
| Geert | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): influence of cold water, oceanic weather | Ethics of rescue robots, write on scenario (specifics) | | | | |
| Victor | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): image recognition/sensors > necessary equipment, take care of the wiki page | TIC, other sensors and image recognition | | | | |
| Adrian | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): night-time deck procedures > what does the victim have on him/her? | Finalize state-of-the-art, communication system (from Thursday) | | | | |
| Aron | Literature study (4-5 articles) + subject picking | Further brainstorming and subject refining | Literature study (4-5 articles): current tech > write a section on the current state of person detection tech in the wiki | Look into dropping equipment from the drone (take into account wind direction and ocean currents) | | | | |

State of the art: Literature Study

Search patterns

Search procedures for when a man goes overboard already exist, and some of them are explained in the IAMSAR manual. I want to start by discussing some of the search patterns it describes.

Different patterns are recommended for different environments and conditions. When the location of the man overboard is well known, an expanding square or sector search is recommended. If the location of the accident is not accurately known, other patterns such as the sweep search are recommended.

- Expanding square search - an expanding square search can only be done by one ship at a time. The pattern starts at the approximate location of the man overboard and spirals outwards with course alterations of 90°.

- Sector search - this can be done using one vessel, or using a vessel and an aircraft. The method is used to search a circular area. The pattern is depicted in .....

- Sweep search - this pattern is used to search a bounded area; the ship zigzags down it.
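To make the expanding square concrete, here is a minimal Python sketch that generates its waypoints in local coordinates (x east, y north, in meters) around the datum. It assumes the usual IAMSAR leg progression (legs of S, S, 2S, 2S, 3S, 3S, ... with a 90° turn after each leg); the function name, the initial northward heading and the idea of using the drone's swath width as the track spacing S are illustrative choices, not taken from the manual.

```python
def expanding_square(track_spacing_m, n_legs):
    """Waypoints (x east, y north, metres) of an expanding square search.

    Starts at the datum (0, 0). Leg lengths follow S, S, 2S, 2S, 3S, 3S, ...
    with a 90-degree clockwise turn after every leg.
    """
    # Unit vectors for north, east, south, west (clockwise order).
    headings = [(0, 1), (1, 0), (0, -1), (-1, 0)]
    x, y = 0.0, 0.0
    waypoints = [(x, y)]
    for leg in range(n_legs):
        # Every second leg the length grows by one track spacing.
        length = track_spacing_m * (leg // 2 + 1)
        dx, dy = headings[leg % 4]
        x += dx * length
        y += dy * length
        waypoints.append((x, y))
    return waypoints


print(expanding_square(20, 6))
```

For example, `expanding_square(20, 6)` returns the datum followed by six corner points, ending at (40.0, 40.0); each pair of legs widens the square by one track spacing on each side.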

IAMSAR also mentions other factors that need to be taken into account; for example, a person who falls into the water will be carried away by currents.

Remote Drone Control

A lot of research has already gone into autonomous drone flight; take autonomous drone racing, for example (https://link.springer.com/article/10.1007/s10514-021-10011-y). Many consumer drones are also capable of autonomous flight. DJI, for example, has a waypoint system based on GPS coordinates, and their drones are also capable of tracking a moving person or object. DJI's waypoint system works by loading a set of GPS coordinates onto the drone; the drone navigates itself to the first set of coordinates, then on to the next, until it has reached the final set. Moving object tracking is more complicated, as it involves image recognition.

http://acta.uni-obuda.hu/Stojcsics_56.pdf
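The waypoint behaviour described above can be sketched as follows. This is a hypothetical illustration of the logic (fly to each GPS coordinate in turn and move on once within an arrival radius), not DJI's SDK or any real flight-controller API; the class name, the 2 m arrival radius and the use of the haversine great-circle distance are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class WaypointMission:
    """Hypothetical waypoint follower: visit GPS waypoints in order."""

    def __init__(self, waypoints, arrival_radius_m=2.0):
        self.waypoints = list(waypoints)
        self.arrival_radius_m = arrival_radius_m
        self.index = 0

    def target(self, lat, lon):
        """Return the waypoint to fly towards, or None once the mission is complete."""
        # Skip every waypoint the drone is already within the arrival radius of.
        while self.index < len(self.waypoints):
            wlat, wlon = self.waypoints[self.index]
            if haversine_m(lat, lon, wlat, wlon) > self.arrival_radius_m:
                return self.waypoints[self.index]
            self.index += 1
        return None
```

A flight loop would call `target()` with every new GPS fix and steer towards the returned coordinate until it returns `None`.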

However, one problem with the autonomous flight available in most commercial drones is that it is not possible to interface with the controller via a Raspberry Pi. As of now, we therefore have three main options:

- We build a drone ourselves

- We manage to find a drone somewhere that can be controlled via a Raspberry Pi

- We work around the problem by separating the drone control from the camera input

Right now, option two seems the best to me (Thijs), but it depends entirely on us finding a drone we can use, which is not very likely unless the university has such a drone available. If we can't find a drone that we can control, it might be best to look more into building our own drone. One problem with that is that none of the group members has experience with this, and it adds complexity to the manufacturing of the prototype/scale model that might not be desirable, since constructing the base drone is not really a focal point of this project.

If we do want to make our own drone, there are some tutorials available, but it will probably still be difficult because not all of the exact materials they use are readily available and some parts are quite expensive. For example, the Pixhawk flight controller (as far as I can tell the most popular option for autonomous flight) costs between €60 for an older model and €185 for a newer model. There is also a tutorial that uses a cheaper alternative flight controller (€30), but it is unclear how well that controller would work for autonomous flight.

For the option where we work around not being able to control the drone and the cameras together, we could make the flight autonomous via the provided app/drone interface and have the camera system alert the ship whenever it detects a heat signature. The drawback is that the drone could then not stop to follow the heat signature and provide better localization.

https://www.hackster.io/korigod/programming-drones-with-raspberry-pi-on-board-easily-b2190e

https://www.instructables.com/The-Drone-Pi/

https://hobbyking.com/en_us/multiwii-328p-flight-controller-w-ftdi-dsm2-comp-port.html

https://ramyadhadidi.github.io/files/Drone-Build-Guide.pdf

https://www.researchgate.net/figure/Raspberry-Pi-camera-attached-to-the-UAV_fig4_338096276

Search Area

Objects at sea drift up to 10 nautical miles per day (http://www.oregonbeachcomber.com/2012/07/mechanics-of-drifting-on-open-sea.html#:~:text=Your%20average%20ocean%20wind%20is,blows%20552%20miles%20a%20day.)

This corresponds to about 0.2 m/s (10 × 1852 m / 86,400 s ≈ 0.21 m/s).

We define the search area as a circle with radius r = 0.2t, where r is in meters and t is the time in seconds since the person fell overboard. The search area is then A = πr².

The following table shows the search area size as a function of time.

| Time overboard (minutes) | Search area size (m²) |
|---|---|
| 1 | 452 |
| 5 | 11310 |
| 15 | 101788 |
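The numbers in the table follow directly from the drift estimate. A short Python sketch, assuming a flat sea and the drift speed rounded to 0.2 m/s as in the text, reproduces both the drift speed and the table:

```python
import math

NAUTICAL_MILE_M = 1852.0
SECONDS_PER_DAY = 86_400

# Drift of up to 10 nautical miles per day, converted to m/s.
drift_speed = 10 * NAUTICAL_MILE_M / SECONDS_PER_DAY


def search_area_m2(t_s, speed_m_s=0.2):
    """Area of a circular search area whose radius grows as r = speed * t."""
    radius = speed_m_s * t_s
    return math.pi * radius ** 2


print(f"drift speed: {drift_speed:.3f} m/s")
for minutes in (1, 5, 15):
    print(f"{minutes:>2} min: {search_area_m2(60 * minutes):,.0f} m^2")
```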

Drone search speed

The drone search speed will be limited by several factors, such as flight speed, sensor resolution and the quality/speed of the detection algorithm. Since some of these are still unknown, I would like to make some assumptions. Let's assume the algorithm is able to detect a human if they take up at least 25 by 25 pixels, and that a human at sea takes up 25 by 25 cm, so one pixel may cover at most about 1 cm.

A good sensor for detecting humans at sea would be an IR camera. These cameras take images in the IR spectrum and are characterized by a field of view (FOV), a resolution and a refresh rate.

The height at which the drone can fly is determined by the FOV and the resolution. A typical FOV for Raspberry Pi cameras is about 60 degrees horizontally (https://www.raspberrypi.com/documentation/accessories/camera.html), and a typical resolution would be 1920 × 1080 pixels, where 1920 is the horizontal direction.

If the drone had a sensor with these specs, it would be able to fly about 15 meters high and scan a strip about 19.2 meters wide.

Let's assume the algorithm needs to have the human in shot for 5 seconds to detect them (https://www.researchgate.net/publication/350981551_Real-Time_Human_Detection_in_Thermal_Infrared_Images).

The vertical FOV of Raspberry Pi sensors is about 40 degrees, so the drone would see about 10 meters of sea along its flight direction. Since a person has to stay in view for 5 seconds, the drone can fly at about 10 m / 5 s = 2 m/s.
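The camera geometry above can be checked with a little trigonometry. The sketch below assumes a nadir-pointing camera over a flat sea and uses the same assumptions as the text (1920 × 1080 pixels, 60° × 40° FOV, 1 cm per pixel, 5 s in view); the function names are illustrative. It takes the lower of the two altitude limits, since both image axes must stay at 1 cm per pixel or finer.

```python
import math


def ground_footprint_m(altitude_m, fov_deg):
    """Ground distance covered along one image axis by a nadir-pointing camera."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def max_altitude_m(pixels, fov_deg, metres_per_pixel):
    """Highest altitude at which one pixel along this axis covers at most metres_per_pixel."""
    return pixels * metres_per_pixel / (2 * math.tan(math.radians(fov_deg) / 2))


# Assumptions taken from the text.
H_PIX, V_PIX = 1920, 1080   # sensor resolution
H_FOV, V_FOV = 60.0, 40.0   # field of view in degrees
RES = 0.01                  # metres per pixel (25 px across a 25 cm person)
DWELL_S = 5.0               # seconds a person must stay in view

altitude = min(max_altitude_m(H_PIX, H_FOV, RES), max_altitude_m(V_PIX, V_FOV, RES))
swath = ground_footprint_m(altitude, H_FOV)        # width of the scanned strip
along_track = ground_footprint_m(altitude, V_FOV)  # sea visible in the flight direction
speed = along_track / DWELL_S                      # a point stays in view for DWELL_S seconds

print(f"altitude {altitude:.1f} m, swath {swath:.1f} m, "
      f"speed {speed:.2f} m/s, coverage {swath * speed:.0f} m^2/s")
```

Under these assumptions the vertical axis is the binding constraint: the drone can fly at about 14.8 m, where the strip is about 17.1 m wide and the drone can move at about 2.16 m/s, giving roughly 37 m²/s. The rounded figures in the text (about 20 m × 2 m/s = 40 m²/s) are therefore a slightly optimistic estimate.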

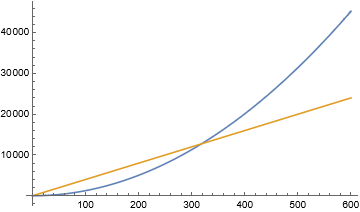

The area the drone can cover is thus 20 m × 2 m/s × t = 40t m², where t is the time in seconds since the drone was dispatched.

Can a drone keep up?

In the graph on the right, we see that after about 5 minutes the drone is no longer able to keep up with the growing search area (even with a perfect search pattern). If we take dispatch time into account, these 5 minutes would result in only about 2.5 minutes of effective search time. This would move the yellow line to the left, which also means that one drone is not quick enough and we would likely need multiple drones to search fast enough.
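The "keeping up" argument can be made quantitative under the same idealized model (a perfect search pattern, so only areas are compared). The sketch below assumes the 0.2 m/s drift and the rounded 40 m²/s coverage rate from the text; `dispatch_delay` is a hypothetical parameter for the time between the person falling overboard and the drone starting its search.

```python
import math

DRIFT_M_S = 0.2        # growth rate of the search radius (from the text)
COVERAGE_M2_S = 40.0   # 20 m swath * 2 m/s (rounded values from the text)


def search_area(t):
    """Area (m^2) that may contain the person t seconds after the fall."""
    return math.pi * (DRIFT_M_S * t) ** 2


def covered_area(t, dispatch_delay=0.0):
    """Area (m^2) one drone has scanned by time t if it starts dispatch_delay seconds late."""
    return COVERAGE_M2_S * max(0.0, t - dispatch_delay)


# No delay: the drone keeps up until 40 t = pi * 0.04 * t^2.
t_cross = COVERAGE_M2_S / (math.pi * DRIFT_M_S ** 2)

# With a delay d, covered - search = 40 (t - d) - pi * 0.04 * t^2 is largest at t_peak.
# The drone can only ever catch up if that maximum is >= 0, which bounds the delay.
t_peak = COVERAGE_M2_S / (2 * math.pi * DRIFT_M_S ** 2)
max_delay = t_peak - search_area(t_peak) / COVERAGE_M2_S

print(f"crossover without delay: {t_cross:.0f} s, largest workable delay: {max_delay:.0f} s")
```

Without any delay, the crossover is at about 318 s (5.3 minutes), in line with the graph. With a delay, the covered area can only catch up with the search area if the delay is at most about 80 s; beyond that, a single drone never covers the whole area in this model, which supports the conclusion that multiple drones are needed.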

Communication Systems

For communication at sea, RF (radio frequency) communication might be a good option. Its main limits seem to be the line-of-sight requirement, the transmission distance and the available bandwidth. Bandwidth is mostly a problem if we want to send (live) video in reasonable quality to the ship.

There is also research into using long-range Wi-Fi networks for connections at sea, but this probably wouldn't work well for a scale model, since we most likely want to test the model outdoors where there is little to no Wi-Fi signal.

https://ieeexplore.ieee.org/abstract/document/7151030

https://ieeexplore.ieee.org/abstract/document/6805843

For a model/prototype, we would probably have to use either a separate RF module for communication with the Raspberry Pi or an RF module built into the drone controller, if one is available.

https://behind-the-scenes.net/433mhz-rf-communication-to-a-raspberry-pi/
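Because bandwidth is the main limitation of a simple RF link such as the 433 MHz modules in the tutorial above, one option is to send short detection alerts instead of video. The sketch below packs an alert into 14 bytes with Python's `struct` module; the message layout (type, Unix time, latitude, longitude, confidence) is a hypothetical format, and actually transmitting the bytes over the RF module is not shown.

```python
import struct

# Hypothetical alert layout, little-endian without padding:
# B message type (1) + I Unix time (4) + f latitude (4) + f longitude (4) + B confidence (1).
ALERT_FORMAT = "<BIffB"
MSG_DETECTION = 1


def encode_alert(unix_time, lat, lon, confidence):
    """Pack a detection alert; confidence in [0, 1] is stored as an integer 0-255."""
    conf_byte = max(0, min(255, round(confidence * 255)))
    return struct.pack(ALERT_FORMAT, MSG_DETECTION, unix_time, lat, lon, conf_byte)


def decode_alert(payload):
    """Unpack an alert back into (type, time, lat, lon, confidence)."""
    msg_type, unix_time, lat, lon, conf_byte = struct.unpack(ALERT_FORMAT, payload)
    return msg_type, unix_time, lat, lon, conf_byte / 255


packet = encode_alert(1_678_000_000, 51.4416, 5.4697, 0.9)
print(len(packet), decode_alert(packet))
```

Storing the coordinates as 32-bit floats keeps the position accurate to well under a meter, which is ample for guiding a rescue boat to the victim.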

Influence of cold water and oceanic weather

(Geert)

Sensors

The main sensor used to locate a victim will be a thermal imaging camera (TIC). The sheer size of the ocean makes it nearly impossible to detect a person with a normal camera; it is like looking for a needle in a haystack. Since the general search area is small compared to the whole ocean, the water temperature will be roughly uniform across it. The victim will be much warmer than their surroundings, which makes them easy to detect. There are a few important requirements for the TIC. The camera needs a sufficiently high resolution to clearly distinguish the victim from other warm objects (think of marine life). It also needs a high refresh rate: our goal is to detect and locate the victim as soon as possible to maximize their chance of survival, so we need to 'scan' the search area quickly, which requires a sufficient refresh rate. For the same reason, the thermal camera needs a large field of view (FOV). Finally, it needs the right temperature sensitivity and a temperature bar in the interface.

Other components we might want to include are a microphone and a loudspeaker, which would allow us to make contact with the victim. However, the sound of the ocean is most likely so loud that it would be hard to understand the victim through a microphone, and trying to converse with the victim might also fatigue them. A loudspeaker can be used to tell the victim that rescue is on the way and help them calm down.

We also aim to provide life-saving equipment such as a ring life buoy. The buoy can be attached to the drone and dropped by a mechanism driven by servomotors. Dropping something near the victim from an altitude above the ocean is harder than it sounds, so wind sensors should be added to calculate where to release the buoy so that it lands close to the victim.
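As a first approximation of the idea that the victim is much warmer than the surrounding water, the sketch below flags pixels that are at least a few degrees above the median frame temperature and groups neighbouring hot pixels into blobs. This is a simple thresholding stand-in, not a trained person detector; the 5 °C margin and the minimum blob size of 4 pixels are hypothetical values, and the size filter is only a crude way to reject small warm objects.

```python
from collections import deque
from statistics import median


def find_hotspots(frame, delta_c=5.0, min_pixels=4):
    """Return blobs of pixels at least delta_c warmer than the median (water) temperature.

    frame: 2D list of temperatures in degrees Celsius.
    Each blob is a list of (row, col) pixel coordinates.
    """
    rows, cols = len(frame), len(frame[0])
    background = median(t for row in frame for t in row)
    hot = [[t >= background + delta_c for t in row] for row in frame]
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not hot[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected hot pixels.
            blob, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and hot[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) >= min_pixels:
                blobs.append(blob)
    return blobs
```

A real system would tune the thresholds to the camera's temperature sensitivity and pass candidate blobs on to a recognition algorithm.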
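To show why wind measurements matter, here is a deliberately crude release-point calculation in Python. It assumes the buoy falls without air resistance (fall time t = √(2h/g)) while drifting horizontally at the full wind speed, which overestimates the drift; the function names and the local x-east/y-north frame are illustrative assumptions, and ocean currents are ignored.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def release_offset_m(altitude_m, wind_speed_m_s):
    """Upwind distance at which to release the buoy under a crude, pessimistic model.

    Assumes a drag-free fall (t = sqrt(2h/g)) while the buoy moves horizontally
    at the full wind speed.
    """
    fall_time = math.sqrt(2 * altitude_m / G)
    return wind_speed_m_s * fall_time


def release_point(victim_xy, wind_from_deg, altitude_m, wind_speed_m_s):
    """Release point in local metres (x east, y north), upwind of the victim.

    wind_from_deg follows the meteorological convention: the direction the wind blows FROM,
    measured clockwise from north.
    """
    d = release_offset_m(altitude_m, wind_speed_m_s)
    theta = math.radians(wind_from_deg)
    # Upwind lies in the direction the wind comes from.
    return (victim_xy[0] + d * math.sin(theta), victim_xy[1] + d * math.cos(theta))


print(release_point((0.0, 0.0), wind_from_deg=0.0, altitude_m=10.0, wind_speed_m_s=7.9))
```

At the upper end of Beaufort force 4 from the requirements (about 7.9 m/s) and a 10 m drop, this model puts the release point roughly 11 m upwind of the victim.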

TIC

This TIC can take pictures with a resolution of 3280 × 2464 pixels and record video at 1080p at 30 fps, 720p at 60 fps and 640 × 480 at 90 fps. It has an angle of view (AOV) of 67 degrees.

Let's assume a human head is 20 cm wide and 25 cm long, giving a surface area of 500 cm². If we fly the drone 500 m above the water, we would cover an area of about 0.438 km² (roughly 438,000 m²). However, each pixel would then represent an area of 541.6 cm² in picture mode, so we would not be able to recognize a person, since their head would not even fill one pixel. The drone therefore has to fly quite low in order to detect a person. At 10 meters above sea level, a person's head would be represented by approximately 2306 pixels, i.e. a square of approximately 48 × 48 pixels. In video mode the TIC has a resolution of 1920 × 1080 pixels; at 10 meters above sea level a head would be represented by roughly 591 pixels, i.e. a square of about 24 × 24 pixels. At 720p and 10 meters above sea level, a head would be represented by a square of approximately 16 × 16 pixels.

The downside of flying 10 meters above sea level is that each image covers only about 175 m².
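The pixel counts above can be reproduced with the sketch below. Like the text, it assumes a square ground footprint with the 67° AOV along both sides and a 500 cm² head; the function names are illustrative.

```python
import math


def footprint_side_m(altitude_m, aov_deg=67.0):
    """Side length of the ground area seen from altitude_m, assuming a square footprint."""
    return 2 * altitude_m * math.tan(math.radians(aov_deg) / 2)


def cm2_per_pixel(altitude_m, width_px, height_px, aov_deg=67.0):
    """Sea surface (cm^2) represented by one pixel."""
    area_cm2 = footprint_side_m(altitude_m, aov_deg) ** 2 * 1e4
    return area_cm2 / (width_px * height_px)


def pixels_on_target(altitude_m, width_px, height_px, target_cm2=500.0, aov_deg=67.0):
    """Number of pixels covering a target of target_cm2 (a 20 x 25 cm head by default)."""
    return target_cm2 / cm2_per_pixel(altitude_m, width_px, height_px, aov_deg)


MODES = {"still": (3280, 2464), "1080p": (1920, 1080), "720p": (1280, 720)}
for mode, (w, h) in MODES.items():
    n = pixels_on_target(10, w, h)
    side = math.sqrt(n)
    print(f"{mode} at 10 m: {n:.0f} px, about {side:.0f} x {side:.0f}")
```

At 500 m the same footprint function gives about 438,000 m² (0.438 km²), with roughly 540 cm² per pixel in picture mode.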

FLIR (https://www.flir.eu/) offers top-of-the-line thermal imaging systems.

Servomotors

This servomotor is supported by a Raspberry Pi and has a torque of 11 kg·cm. Servomotors like this can be used to drop equipment. If we need to construct our own drone, its rotors would be driven by brushless motors rather than servomotors.
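A servo's torque rating translates into the load it can hold at a given lever arm: a torque of T kg·cm holds T / L kg at L cm from the shaft. The sketch below assumes the quoted 11 kg·cm is the rated torque and applies a hypothetical safety factor of 2; it only covers the case where the servo arm itself carries the load.

```python
def max_load_kg(torque_kg_cm, arm_length_cm, safety_factor=2.0):
    """Heaviest load (kg) the servo can hold at the tip of an arm of arm_length_cm.

    The safety factor keeps the servo well below its rated torque.
    """
    if arm_length_cm <= 0 or safety_factor <= 0:
        raise ValueError("arm length and safety factor must be positive")
    return torque_kg_cm / (arm_length_cm * safety_factor)


for arm_cm in (1.0, 2.0, 4.0):
    print(f"{arm_cm:.0f} cm arm: up to {max_load_kg(11, arm_cm):.2f} kg")
```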

Thermal image recognition

For the image recognition we can make use of TensorFlow, Google's Neural Network library.

Sea rescue drones (SOTA)

https://arxiv.org/abs/1811.05291

The research paper "Intelligent Drone Swarm for Search and Rescue Operations at Sea" presents a novel approach for using a swarm of autonomous drones to assist in search and rescue operations at sea. The authors propose a system that uses machine learning algorithms and computer vision techniques to enable the drones to detect and classify objects in real-time, such as a person in distress or a life raft.

The paper describes the hardware and software architecture of the drone swarm system, which consists of a ground station, multiple drones equipped with cameras, and a cloud-based server for data processing and communication. The drones are programmed to fly in a coordinated manner and share information with each other to optimize the search process and ensure efficient coverage of the search area.

The authors evaluate the performance of their system in various simulated scenarios, including detection of a drifting boat, a person in the water, and a life raft. The results show that the drone swarm system is capable of detecting and identifying objects accurately and efficiently, with a success rate of over 90%.

Overall, the research paper presents a promising solution for improving search and rescue operations at sea, leveraging the capabilities of autonomous drones and artificial intelligence.

"We propose an Intelligent Drone Swarm for tackling the aforementioned problems. The central idea is to use an autonomous fleet of intelligent UAVs to accomplish the task. The self-organizing UAVs network would enable the coverage of a larger area in less time, reducing the impact of the limited battery capacity. Recent works on UAVs networks shown their ability of coverage and connectivity improvements in emergency scenarios [15]. Indeed, the UAVs swarm is capable of generating a multi-hop communication network that will guarantee a higher bandwidth due to aerial-to-aerial communication links provided by longer LoS connections. Artificial Intelligence tools, in this case, would greatly improve not only the autonomy and coordination of the UAVs network, but also the communication efficiency. On one hand, autonomy and coordination are improved due to the autonomous adaptation of mobility actions and communication parameters to non-stationary environmental conditions. On the other, communication efficiency is ensured with the usage of smart detection algorithms locally running on the UAV, which limit the amount of information sent to the base station. Recent advances in AI techniques, such as specialized AI chips [16] and quantization/pruning techniques [17], may also help reducing the energy and computational resources needed for detection."

https://www.mdpi.com/2504-446X/3/4/78

The research paper "Requirements and Limitations of Thermal Drones for Effective Search and Rescue in Marine and Coastal Areas" explores the potential of thermal drones for search and rescue (SAR) operations in marine and coastal areas. The authors review the main features and capabilities of thermal drones, including their ability to detect heat signatures and identify objects in low light or night conditions.

The paper highlights the key requirements and limitations of using thermal drones for SAR operations, such as the need for high-resolution thermal cameras, long flight time, and reliable communication systems. The authors also discuss the challenges of operating drones in harsh weather conditions and the importance of complying with regulatory and ethical guidelines.

To demonstrate the effectiveness of thermal drones for SAR operations, the authors present a case study of a simulated rescue mission in a coastal area. The results show that thermal drones can provide valuable assistance in locating and identifying missing persons or distressed vessels, particularly in remote or inaccessible areas.

Overall, the research paper provides a comprehensive overview of the potential of thermal drones for SAR operations in marine and coastal areas, while also highlighting the technical and operational challenges that need to be addressed to ensure their effective use.

"We used a custom hexacopter drone based around a Tarot 680 airframe and Pixhawk 2.1 flight controller. The drone was equipped with a custom designed two-axis brushless gimbal mechanism to provide stabilisation of the camera in both roll and pitch. The gimbal also allowed independent and remote control of the camera’s pitch angle. The thermal camera used was a FLIR Duo Pro R. This consists of a Tau2 TIR detector (
[…] pixels, 60 Hz) with a 13 mm lens ([…] field of view), and a 4K RGB camera (4000 × 3000 pixels, […] field of view) affixed side-by-side. The camera was interfaced to the flight controller to enable remote triggering and geotagging information to be recorded. Live video feedback was available in flight via a 5.8 GHz analogue video transmitter and receiver system."

https://arxiv.org/abs/2005.03409

The research paper "AutoSOS: Towards Multi-UAV Systems Supporting Maritime Search and Rescue with Lightweight AI and Edge Computing" proposes a novel approach for using multiple unmanned aerial vehicles (UAVs) equipped with lightweight AI and edge computing to support maritime search and rescue (SAR) operations.

The authors describe the AutoSOS system, which consists of a ground station, multiple UAVs, and a cloud-based platform for data processing and communication. The UAVs are equipped with cameras and other sensors to detect and classify objects in real-time, such as a person in the water or a life raft. The lightweight AI algorithms are designed to operate on the UAVs, minimizing the need for high-bandwidth communication with the cloud-based platform.

The paper highlights the advantages of using a multi-UAV system for SAR operations, such as increased coverage area, improved situational awareness, and faster response times. The authors also discuss the technical challenges of designing and implementing the AutoSOS system, such as optimizing the UAV flight paths and ensuring the reliability of the communication and data processing systems.

To demonstrate the effectiveness of the AutoSOS system, the authors present a case study of a simulated SAR mission in a coastal area. The results show that the system is capable of detecting and identifying objects accurately and efficiently, with a success rate of over 90%.

Overall, the research paper presents a promising solution for improving SAR operations at sea, leveraging the capabilities of UAVs, lightweight AI, and edge computing.

The research paper "A Review on Marine Search and Rescue Operations Using Unmanned Aerial Vehicles" provides a comprehensive review of the current state of research on using unmanned aerial vehicles (UAVs) for search and rescue (SAR) operations in marine environments.

The paper discusses the main challenges of conducting SAR operations in marine environments, such as limited visibility, harsh weather conditions, and the vast and complex nature of the search areas. The authors then review the different types of UAVs and their applications in SAR operations, such as fixed-wing UAVs for long-range surveillance and multirotor UAVs for close-range inspection and search missions.

The paper highlights the advantages of using UAVs for SAR operations, such as increased coverage area, improved situational awareness, and reduced risk to human rescuers. The authors also discuss the technical challenges of designing and operating UAVs in marine environments, such as optimizing the flight paths, ensuring reliable communication and data transmission, and complying with regulatory and ethical guidelines.

To demonstrate the effectiveness of UAVs for SAR operations, the authors review several case studies of real-world applications, such as detecting and rescuing distressed vessels and locating missing persons in coastal areas. The results show that UAVs can provide valuable support to SAR operations, improving response times, and increasing the chances of successful rescue.

Overall, the research paper provides a comprehensive overview of the potential of UAVs for SAR operations in marine environments, while also highlighting the technical and operational challenges that need to be addressed to ensure their effective use.

- Commercially available / are they trial runs? (yes)

- Are they autonomous? (no)

- Covered area: the papers talk about covering areas of 10 × 10 km; the areas in our scenario are much smaller.

Current Person in water detection tech

Currently, radars are used to detect the distance and velocity of an object by sending out electromagnetic waves and detecting the echoes reflected by objects. In water environments, a radome is needed to protect the radar while letting the electromagnetic waves through. However, even with a radome, the signal strength falls to a third in a wet environment compared to a dry one. Multiple radar technologies exist, each with its own advantages and disadvantages. Pulse Coherent Radar (PCR) pulses the transmitted signal so that it uses only 1% of the energy. Another aspect of this radar is coherence: the signal has a consistent time and phase, so measurements can be very precise. It can also separate the amplitude, time and phase of the received signal to identify different materials, although this is not necessarily needed in our use case.

Of the radar's measurement services, the 'Sparse' service is best suited for detecting objects, since it samples the waves every 6 cm. Millimeter-precise measurements are not useful in a rough environment like the ocean, so these robust measurements are ideal.

Frotan, D., & Moths, J. (n.d.). Human body presence detection in water environments using pulse ... www.diva-portal.org. Retrieved March 6, 2023, from https://mau.diva-portal.org/smash/get/diva2:1668701/FULLTEXT02.pdf
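The ranging principle described above (timing the echo of an electromagnetic pulse) and the 6 cm sample spacing of the Sparse service can be illustrated with two small functions. This is a simplified sketch assuming propagation at the speed of light in air and a hypothetical sample grid starting at 0 m; it does not use the radar vendor's software.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def echo_range_m(round_trip_s):
    """Distance to a reflector; the pulse travels there and back, hence the factor 1/2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def sparse_sample_index(range_m, grid_start_m=0.0, step_m=0.06):
    """Index of the nearest sample on a grid with one sample every step_m (6 cm)."""
    return round((range_m - grid_start_m) / step_m)


delay_s = 20e-9  # a 20 ns round trip
r = echo_range_m(delay_s)
print(f"echo after {delay_s * 1e9:.0f} ns -> {r:.3f} m, nearest sample {sparse_sample_index(r)}")
```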

Seven core ethically relevant themes

From the paper "Ethical concerns in rescue robotics: a scoping review"

Fairness and discrimination

Hazards and benefits should be fairly distributed to avoid the possibility of some subjects incurring only costs while other subjects enjoy only benefits. This condition is particularly critical for search and rescue robot systems, e.g., when a robot makes decisions about prioritizing the order in which the detected victims are reported to the human rescuers or about which detected victim it should try to transport first.

Fairness and discrimination in our scenario

When multiple people have fallen overboard, discrimination is something to look out for. During the search itself, discrimination can't take place: once the robot has found one person it continues looking for the others, and since it doesn't take people out of the water, it doesn't need to decide whom to prioritize. However, we are also considering dropping lifebuoys, and here the order of priority does come into play. If the robot can carry only one lifebuoy, we propose that it drops the lifebuoy on the first person it finds, returns to the ship to restock, and then continues the search. This is the most efficient approach and also prevents discrimination between multiple victims.

False or excessive expectations

Stakeholders generally have difficulty accurately assessing the capabilities of a rescue robot. This may cause them to overestimate and rely too much on the robot, giving false hope that the robot will save a victim. On the other hand, if a robot is underestimated, it may be underutilized in cases where it could have saved a person.

False or excessive expectations in our scenario

It is important for us to accurately inform stakeholders about the capabilities and limitations of the rescue robot.

Labour replacement

Robots replacing human rescuers might reduce human contact, situational awareness and manipulation capabilities. Robots might also interfere with rescuers' attempts to provide medical advice and support.

Labour replacement in our scenario

Since it is important not to take the human aspect fully out of the equation, the robot should be equipped with speakers so that people on the ship can talk to the victim. They can check how the victim is responding and reassure them that help is coming, which will most likely help to calm them down. When the rescue attempt is made by the crew or other rescuers, the drone should fly away, since it is no longer useful, so as not to interfere with the rescue attempt.

Privacy

The use of robots generally leads to an increase in information gathering, which can jeopardize the privacy of personal information. This may be personal information about rescue workers, such as images or data about their physical and mental stress levels, but also about victims or people living or working in the disaster area.

Privacy in our scenario

The information gathered by the robots is not shared with anyone outside professional rescue organizations and is exclusively used for rescue purposes. We should also try to limit the gathering of irrelevant data as much as possible.

Responsibility

The use of (autonomous) rescue robots can lead to issues in ascribing responsibility, for example when accidents happen.

Responsibility in our scenario

Clearly state who is (legally) responsible in case accidents happen during a rescue (e.g. the operator or the manufacturer). Proposal: to ensure trust in our product, we should claim full (legal) responsibility as the manufacturer for accidents caused by design flaws or by decisions made by the autonomous system.

Safety

Rescue missions necessarily involve safety risks. Some of these risks can be mitigated by replacing operators with robots, but robots themselves may in turn introduce other safety risks, mainly because they can malfunction. Even when they perform correctly, robots can still be harmful: they may, for instance, fail to identify a human being and collide with them. In addition, robots can harm the well-being of victims in subtler ways. For example, the authors argue, being trapped under a collapsed building, wounded and lost, and suddenly being confronted with a robot, especially if there are no humans around, can in itself be a shocking experience.

Safety in our scenario

Risks should be contained as much as possible, but we acknowledge that rescue missions are never completely risk-free.

Trust

Trust depends on several factors, reputation and confidence being the most important ones. Humans often lack confidence in autonomous robots because they find them unpredictable.

Trust in our scenario

Ensure that the robot has a good reputation by informing people about successful rescue attempts and/or tests, and limit unpredictable behaviour as much as possible. We can also build trust by claiming full (legal) responsibility as the manufacturer for accidents caused by design flaws or by decisions made by the autonomous system.

Design phase

Components:

Sensors

- TIC

- Wind sensor

- Servomotors

Communication Equipment

- Microphone

- Loudspeaker

Drone

Brainstorm Phase

Possible projects

Man over board (MOB) drone (Final project)

During the MOB protocol, the most challenging part is locating the victim, which can be especially difficult in stormy weather or at night. A drone equipped with adequate sensors to locate the victim and with life-saving equipment would drastically increase the chances of survival for a man overboard. Another problem our drone needs to tackle is providing appropriate care for possible drowning, hypothermia or other injuries.

User: Ship's crew, rescue teams (coast guard); Problem: MOB, Requirement: Locate and provide appropriate care for the victim.

References: https://www.ussailing.org/news/man-overboard-recovery-procedure/, https://doi.org/10.1016/j.proeng.2012.06.236

Manure silo suffocation

Manure silos need to be cleaned, and during cleaning people can suffocate in the toxic gases released by the manure (even if the silo is almost empty). We want to develop a robot that alerts people when conditions become dangerous and, if a person is not able to leave the silo in time, supplies them with clean air.

User: Farmers, Problem: Manure silo suffocation, Requirement: Supply clean air before suffocation.

Some references: https://www.ad.nl/binnenland/vader-beukt-wanhopig-in-op-silo-maar-zoon-bezwijkt~a4159109/, https://www.mestverwaarding.nl/kenniscentrum/1309/twee-gewonden-bij-ongeval-met-mestsilo-in-slootdorp

Extreme Sports Accidents

Thrill-seekers are often somewhat reckless when it comes to safety. We want to design a flying drone that can help people out of sticky situations during parachuting, BASE jumping or even rock climbing. The victim would attach themselves to the drone using their parachute or climbing equipment, and the drone would set them down safely on the ground.

User: Extreme sports athletes, rescue teams; Problem: Dangerous accidents; Requirement: Can safely attach to people and put them on the ground.

Some references: https://www.nzherald.co.nz/travel/aussie-base-jumpers-two-hour-ordeal-after-parachute-gets-stuck-in-tree/HCN6DYMSSA4ZUBE3GVV2WCTNRQ/, https://www.tmz.com/2022/11/30/base-jumper-crash-cliff-dangling-parachute-death-defying-video-moab-tombstone-utah/

Literature study

To determine the state of the art relevant to our project, we will carry out a literature study.

Disaster robotics

This article gives an overview of rescue robotics and of characteristics that can be used to classify rescue robots. It also contains a case study of the Fukushima Daiichi nuclear power plant accident, describing how robots were used there. Finally, the article discusses challenges that remain in rescue robotics.

https://link.springer.com/chapter/10.1007/978-3-319-32552-1_60

A Survey on Unmanned Surface Vehicles for Disaster Robotics: Main Challenges and Directions

This article gives an overview of the use of unmanned surface vehicles (USVs) and gives recommendations for their use in disaster robotics.

https://www.mdpi.com/1424-8220/19/3/702

Underwater Research and Rescue Robot

This article is about an underwater rescue robot that provides the feedback needed in rescue missions. This robot has more computing power than current underwater drones and reduces communication delay by using an Ethernet cable.

https://www.researchgate.net/publication/336628369_Underwater_Research_and_Rescue_Robot

Mechanical Construction and Propulsion Analysis of a Rescue Underwater Robot in the case of Drowning Persons

This article is about an unmanned life-saving system that recovers conscious or unconscious people. It prevents bystanders from putting themselves in danger while trying to save others. The system is not fully autonomous, since it has to be operated by humans.

https://www.mdpi.com/2076-3417/8/5/693

Design and Dynamic Performance Research of Underwater Inspection Robots

Power plants along the coastline use seawater for cooling. The underwater drone presented in this paper inspects the water near such power plants and cleans their filtering systems to optimize the plant's efficiency.

https://www.hindawi.com/journals/wcmc/2022/3715514/

Semi Wireless Underwater Rescue Drone with Robotic Arm

This article highlights the challenges of underwater rescue of people and valuable objects. The biggest challenge is wireless communication in the harsh underwater environment. The drone is also equipped with a robotic arm to grab objects and a 4K camera with fog lights for navigating underwater.

https://www.researchgate.net/publication/363737479_Semi_Wireless_Underwater_Rescue_Drone_with_Robotic_Arm

Rescue Robots and Systems in Japan

This paper discusses the development of intelligent rescue systems using high-information and robot technology to mitigate disaster damages, particularly in Japan following the 1995 Hanshin-Awaji earthquake. The focus is on developing robots that can work in real disaster sites for search and rescue tasks. The paper provides an overview of the problem domain of earthquake disasters and search and rescue processes.

https://ieeexplore.ieee.org/abstract/document/1521744

Two multi-linked rescue robots: design, construction and field tests

This paper proposes the design and testing of two rescue robots, a cutting robot and a jack robot, for use in search and rescue missions. They can penetrate narrow gaps and hazardous locations to cut obstacles and lift heavy debris. Field tests demonstrate their mobility, cutting, and lift-up capacity, showing their potential use in rescue operations.

https://www.jstage.jst.go.jp/article/jamdsm/10/6/10_2016jamdsm0089/_pdf/-char/ja

The current state and future outlook of rescue robotics

This paper surveys the current state of robotic technologies for post-disaster scenarios, and assesses their readiness with respect to the needs of first responders and disaster recovery efforts. The survey covers ground and aerial robots, marine and amphibious systems, and human-robot control interfaces. Expert opinions from emergency response stakeholders and researchers are gathered to guide future research towards developing technologies that will make an impact in real-world disaster response and recovery.

https://doi.org/10.1002/rob.21887

Mobile Rescue Robot for Human Body Detection in Rescue Operation of Disaster

The paper proposes a mobile robot based on a wireless sensor network to detect and rescue people in emergency situations caused by disasters. The robot uses sensors and cameras to detect human presence and condition, and communicates with a network of other robots to coordinate rescue efforts. The goal is to improve the speed and efficiency of rescues in order to save more lives.

https://d1wqtxts1xzle7.cloudfront.net/58969822/12_Mobile20190420-67929-tn7req-libre.pdf?1555765880=&response-content-disposition=inline%3B+filename%3DMobile_Rescue_Robot_for_Human_Body_Detec.pdf&Expires=1676230737&Signature=YQXJqYheT6M0hsHXSWDx4FbuCauvv9o9uvDR1Hl8dJL~SmI~KObXAhXbq7dDYZAMLhsydh7ipP5RBOayNkzsM~K0xP7pcXLmOKcW3-WFdt1aTyHvQWeG5hUKzhb5KLaVAj4Frfb313Yi5oyhFaHVb~ODSxbtpN73SGd3YE3UouzuexfeGSVqFyWTWi-3qMqMIQ3qfUKGiBF24QfyArHlj9mKkq8gVItdJsAS9OGBUGeBQaf~8j37WsIauoABw8cO5V73RFxhfLR~ehXXMgJegTRxzwT1tBMhE14OVMK~PkfcpYSAVkHFi3gqf~sawW4SFIut7MetNdUcKfcAwHEBHA__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

Mine Rescue Robot System – A Review

Underground mining carries many risks, and it is very difficult for rescuers to reach trapped miners. Deploying a wireless robot with gas sensors and cameras in this situation is therefore valuable, as it can inform rescuers about the state of the trapped miners.

https://www.sciencedirect.com/science/article/pii/S187852201500096X

Ethical concerns in rescue robotics: a scoping review

We also have to take the ethics of rescue robots into account. The review identifies seven core ethical themes: fairness and discrimination; false or excessive expectations; labor replacement; privacy; responsibility; safety; and trust.

https://link.springer.com/article/10.1007/s10676-021-09603-0

Rescue robots for mudslides: A descriptive study of the 2005 La Conchita mudslide response

Robots assisted the rescuers who responded to the 2005 mudslide in La Conchita. The robots were waterproof and could thus be deployed in wet conditions, but they failed to navigate through the rubble, vegetation and soil. The paper thus suggests that rescue robots should be trained in a variety of environments, and advises manufacturers to be more conservative with their performance claims.

https://onlinelibrary.wiley.com/doi/abs/10.1002/rob.20207

Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots

The 2011 earthquake and tsunami in Japan caused a meltdown at the Fukushima Daiichi nuclear power plant. Because the radiation levels were too dangerous for humans, robots were deployed instead. First, various issues had to be resolved, such as whether the robots' electrical components could withstand the radiation. The robots' ability to navigate and communicate was tested at a different nuclear power plant similar to Fukushima.

https://onlinelibrary.wiley.com/doi/full/10.1002/rob.21439

A Coalition Formation Algorithm for Multi-Robot Task Allocation in Large-Scale Natural Disasters

Robots are more reliable than humans in many cases. This paper reviews prior research on older algorithms and proposes a new algorithm for multi-robot task allocation in rescue situations. Such algorithms must account for many factors, such as the sensors each task requires. The authors compare their algorithm with older ones across multiple scenarios, such as different problem sizes.

Appendix

Logbook

| Week | Name | Work done & hours spent | Total hours |
|---|---|---|---|
| 1 | Geert Touw | Group setup etc. (2h) | |
| | Luc van Burik | Group setup etc. (2h), Subject brainstorm (2h) | 4h |
| | Victor le Fevre | Group setup etc. (2h) | |
| | Thijs Egbers | Group setup etc. (2h) | |
| | Adrian Kondanari | Group setup etc. (2h) | |
| | Aron van Cauter | Group setup etc. (2h) | |
| 2 | Geert Touw | Meeting 1 (1h), Meeting 2 (1h) | |
| | Luc van Burik | Meeting 1 (1h), Meeting 2 (1h), Subject brainstorm (2h) | 4h |
| | Victor le Fevre | Meeting 1 (1h), Meeting 2 (1h) | |
| | Thijs Egbers | Meeting 1 (1h), Meeting 2 (1h), Subject brainstorm (2h) | 4h |
| | Adrian Kondanari | Meeting 1 (1h) | |
| | Aron van Cauter | Meeting 1 (1h) | |
| 3 | Geert Touw | | |
| | Luc van Burik | Meeting 2 (1h), IAMSAR reading (3.5h), Drone navigation research (1.5h) | 6h |
| | Victor le Fevre | Meeting 1 (1h), Meeting 2 (1h), Made the wiki look a bit more coherent (1h), Wrote the problem statement and objective, users, scenario and added points to the MoSCoW (1.5h), Read sensor and image recognition papers (1h) | 5.5h |
| | Thijs Egbers | | |
| | Adrian Kondanari | | |
| | Aron van Cauter | | |
| 4 | Geert Touw | Meeting 1 (1h), Meeting 2 (1h) | |
| | Luc van Burik | Meeting 1 (1h), Meeting 2 (1h), Search area (1.5h), Drone search speed (1.5h), Wiki writing (0.5h) | 5.5h |
| | Victor le Fevre | Meeting 1 (1h), Meeting 2 (1h) | |
| | Thijs Egbers | Meeting 1 (1h), Meeting 2 (1h) | |
| | Adrian Kondanari | Meeting 1 (1h), Meeting 2 (1h) | |
| | Aron van Cauter | | |
| 5 | Geert Touw | | |
| | Luc van Burik | | |
| | Victor le Fevre | | |
| | Thijs Egbers | | |
| | Adrian Kondanari | | |
| | Aron van Cauter | | |
| 6 | Geert Touw | | |
| | Luc van Burik | | |
| | Victor le Fevre | | |
| | Thijs Egbers | | |
| | Adrian Kondanari | | |
| | Aron van Cauter | | |
| 7 | Geert Touw | | |
| | Luc van Burik | | |
| | Victor le Fevre | | |
| | Thijs Egbers | | |
| | Adrian Kondanari | | |
| | Aron van Cauter | | |
| 8 | Geert Touw | | |
| | Luc van Burik | | |
| | Victor le Fevre | | |
| | Thijs Egbers | | |
| | Adrian Kondanari | | |
| | Aron van Cauter | | |

References

Things we might need later (to be deleted)

Weather: some waves, a breeze but still calm enough for a drone to fly, little rain, nighttime; water temperature 3 °C -> 15-30 minutes until exhaustion and unconsciousness, 30-90 minutes expected survival time.

Ship: container ship on the Atlantic Ocean, speed 25 knots (~46 km/h; faster than average because the ship had to take a detour and was behind schedule)

Cause of fall: dark outside, deck slippery from rain, person is alone, person drifts away without major injuries
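The ship speed in these notes also gives a first estimate of the search area. If we only know that the person fell overboard within the last X minutes, they lie somewhere on the stretch of track the ship covered in that time. The sketch below converts this into a distance and a drone flight time. It assumes the ship holds a constant speed and heading and ignores the victim's drift; the function names are hypothetical and the drone speed is an illustrative input, not a measured value of our design.

```python
KNOT_IN_KMH = 1.852  # 1 knot = 1 nautical mile per hour = 1.852 km/h


def ship_distance_km(speed_knots: float, minutes: float) -> float:
    """Distance the ship covers in `minutes` at a constant speed (straight track assumed)."""
    return speed_knots * KNOT_IN_KMH * minutes / 60.0


def drone_transit_min(distance_km: float, drone_speed_kmh: float) -> float:
    """Minutes a drone flying at a constant ground speed needs to cover distance_km."""
    return distance_km / drone_speed_kmh * 60.0


# Usage: at 25 knots, each 5 minutes of delay adds about 3.86 km of track to search.
track_km = ship_distance_km(25, 5)
print(round(track_km, 2))  # 3.86
# With a hypothetical drone ground speed of 60 km/h, reaching the far end takes about 3.9 min.
print(round(drone_transit_min(track_km, 60.0), 1))  # 3.9
```

Comparing such transit times with the 15-30 minutes until exhaustion in 3 °C water shows how much of the time budget is left for the actual search.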