PRE2015 4 Groep2

We are developing a neural network to determine fruit ripeness. The robot will initially be developed for quality control of strawberries. As building a complete prototype is probably not feasible in nine weeks, we start by focusing on the detection and sensing part. For that we will develop a system that scans fruits and determines their ripeness. It can also consider other factors, for example whether the fruit looks appealing.

Group members

- Birgit van der Stigchel (0855323)

- Cameron Weibel (0883114)

- Maarten Visscher (0888263)

- Mark de Jong (0896731)

- Raomi van Rozendaal (0842742)

- Yannick Augustijn (0856560)

Abstract

At the start of this project the goal we had in mind was to build a robot that can harvest fruit (strawberries in our case) autonomously. The idea was that the robot would drive along a row of plants, detect which of the fruits are ripe and harvest the fruits that are ripe enough for consumption. Our plan was to use a cheap camera, like a Kinect, to take low-resolution pictures of the fruit. These pictures would then be fed through a neural network (a convolutional neural network) and classified into several categories with different degrees of ripeness. This classification can then be used to determine whether the fruit should be harvested or not.

The robot would then use shears mounted on a robotic arm to cut the fruit and put it into baskets. The complete robot would be mounted on rails, so that it could move along the row of plants. It quickly became clear that this project would be far too ambitious. We had also not properly looked into the User, Society and Enterprise aspects; instead, we had focused completely on the technical aspects and neglected the USE approach.

We therefore decided to take a step back and evaluate the USE aspects relevant to our project. The first aspect with a significant impact on the project is the farmer's ability to influence the system. We decided not to build the complete robot, but rather to focus on the vision system and build a companion app. This app would give the farmer information about the robot and the farm, and allow him or her to influence the classification done by the vision system. If every user of the system is able to give feedback, for instance by telling the system whether or not certain classifications it made are correct, the accuracy of the system can be improved.

The next step we took was to interview a cucumber farmer, to determine whether a system like this would benefit him. He said that the system should have an accuracy of at least 80%, and that this accuracy should improve further once the system has been installed. It was also important that the system be at least as fast as a human worker, to prevent economic losses.

We also visited a workshop by PicknPack, a European project by Wageningen University. The focus of this project was on developing a machine that can package fruits. The part of this project most relevant to ours is the quality control module, which determines the ripeness of the fruit by looking at its colour; this confirmed the plausibility of our project. Another important lesson from the PicknPack project is their focus on transparency and traceability. Every fruit is labelled, which allows the machine to track each fruit individually and link it to a page with information about that specific fruit.

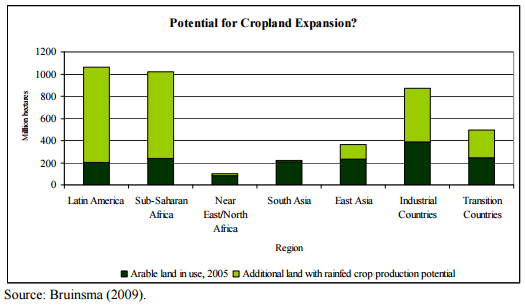

We wanted to implement something similar in our project. We decided that the best way to do this is to keep track of exactly how much fruit the robot harvests in each of several zones on the farm. The app can then display maps that show the farmer exactly where on the farm the fruit is coming from. It could also display a map of the average ripeness level in each of these zones. This gives the farmer the ability to determine which areas of his or her farm are performing badly.
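As a rough illustration of the per-zone bookkeeping described above, the sketch below aggregates individual harvest records into a fruit count and an average ripeness per zone, and lists the weakest zones first. The record fields (`zone`, and `ripeness` on an assumed 0 to 1 scale) and the function names are hypothetical, not the project's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class HarvestRecord:
    """One harvested fruit (hypothetical schema)."""
    zone: str        # farm zone where the fruit was picked
    ripeness: float  # classifier's ripeness score, assumed to be in [0, 1]


def summarize_zones(records):
    """Return {zone: (fruit_count, average_ripeness)} for every zone seen."""
    counts = defaultdict(int)
    totals = defaultdict(float)
    for record in records:
        counts[record.zone] += 1
        totals[record.zone] += record.ripeness
    return {zone: (counts[zone], totals[zone] / counts[zone]) for zone in counts}


def weakest_zones(summary, n=3):
    """The n zones with the lowest fruit count, lowest first."""
    return sorted(summary, key=lambda zone: summary[zone][0])[:n]
```

Running `summarize_zones` over one day's harvest would give the numbers behind both maps (fruit count and average ripeness), and `weakest_zones` points the farmer to the zones to inspect first.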

To check the relevance of this idea we visited another farmer. It turned out that this would be very relevant for his farm, since this information is currently not available: the fruit is harvested by workers who cannot keep track of how much fruit each tree produces. A system that provides this information would be tremendously helpful to a farmer, since it allows him to track down and fix problems very efficiently.

Problem statement

This section should be about the problem we are actually solving

Requirements

Functional requirements

- The robot should be able to detect fruit using a Kinect camera

- It should be able to classify the ripeness of the fruit using a convolutional neural network

- The robot will query an online database about the ripeness of a given fruit, and the database will return the ripeness percentile of that fruit based on image sets of different fruits

- The farmer should be able to rate pictures of fruit so that they can be added to the training set as feedback to improve the robot

- The farmer should be able to interface with the database as well as different harvesting metrics through a mobile device
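A minimal sketch of the percentile lookup in the third functional requirement, assuming the network outputs a ripeness score in [0, 1] and the database keeps the scores of previously classified reference images of the same fruit type. The function name and the score lists are hypothetical.

```python
from bisect import bisect_left, bisect_right


def ripeness_percentile(score, reference_scores):
    """Percentile rank (0-100) of `score` among reference ripeness scores.

    Reference scores equal to `score` count as half below and half above.
    """
    if not reference_scores:
        raise ValueError("reference set is empty")
    ref = sorted(reference_scores)
    below = bisect_left(ref, score)           # scores strictly lower
    equal = bisect_right(ref, score) - below  # scores exactly equal
    return 100.0 * (below + 0.5 * equal) / len(ref)
```

Sorting the reference set once per request keeps the sketch simple; a real database would more likely keep the scores per fruit type pre-sorted or pre-binned.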

Non-functional requirements

- It should be relatively simple to add the Kinect+Raspberry Pi to an existing harvesting system

- The farmer should be able to use the system with minimal prior knowledge

- The robot should perform better than a human quality controller

- The robot should have all the safety features necessary to prevent critical failures

USE aspects

User

Need

Primary users are farmers and their workers, who directly use the robot. The following aspects hold:

- Their work becomes far less intensive and heavy. Instead of harvesting directly, farmers can let the robot do the work. They would only occasionally need to check the harvest and possibly adjust some parameters. This work is less physically demanding than harvesting, so fewer health problems due to heavy work can be expected.

- More free time for other things, because the new work takes far less time. There is also no longer any need to train seasonal workers.

Secondary users are distributors who pick up the fruit from the farms. They use the robot occasionally, when they need to collect the fruit picked by the robot. Their work is mostly unaffected, but depending on how advanced the robot is, some parts of it can be left to the robot, such as selecting fruit based on ripeness and visual appeal. The robot could also directly package and seal the fruit.

A tertiary user is the company that is developing and maintaining this robot. It indirectly uses the robot during development.

Another tertiary user, which is arguably a societal aspect, is the harvesting worker. A worker who harvests fruit manually does not come into direct contact with the robot. The robot does, however, affect these workers, as it takes away their jobs. This aspect should be researched further. Harvesting workers are likely to be people with little formal education and students wanting to earn some extra money. The people with little education can be expected to have a hard time finding a new job.

Impact

Primary: Farmer (and permanent employees)

- Transparency

- Traceability

- Less labor-intensive work

Secondary: Quality control / Supermarkets

- Transparency

- Traceability

Tertiary: Maintenance / Supplier

- Increase in demand for their services

Society

Need

There are several problems to which our solution can relate such as:

- There will not be enough food in the near future for all the people

- There is an increasing shortage of workers due to an aging population

- Decrease in wage gap due to overabundance of food

- Post scarcity

Facts supporting these statements are:

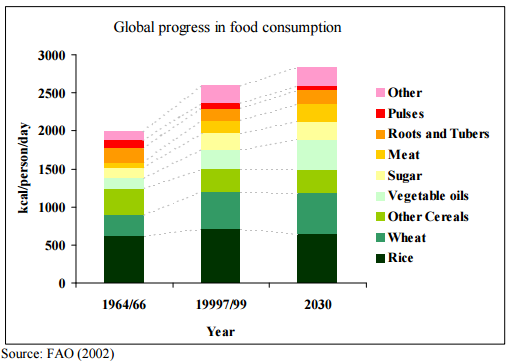

- The world population is predicted to grow from 6.9 billion in 2010 to 8.3 billion in 2030 and to 9.1 billion in 2050. By 2030, food demand is predicted to increase by 50% (70% by 2050). The main challenge facing the agricultural sector is not so much growing 70% more food in 40 years, but making 70% more food available on the plate.

- Roughly 30% of the food produced worldwide – about 1.3 billion tons - is lost or wasted every year, which means that the water used to produce it is also wasted. Agricultural products move along extensive value chains and pass through many hands – farmers, transporters, store keepers, food processors, shopkeepers and consumers – as it travels from field to fork.

- In 2008, the surge of food prices has driven 110 million people into poverty and added 44 million more to the undernourished. 925 million people go hungry because they cannot afford to pay for it. In developing countries, rising food prices form a major threat to food security, particularly because people spend 50-80% of their income on food.

Cited from: http://www.un.org/waterforlifedecade/food_security.shtml

Figure: Evolution of the average diet composition of a human

Figure: places with space and conditions appropriate for food production

Graphs’ source: http://www.fao.org/fileadmin/templates/wsfs/docs/expert_paper/How_to_Feed_the_World_in_2050.pdf

Based on the above, our project can address several calls listed in the Horizon 2020 Work Programme 2016-2017. Some would require a slight shift in focus, but they could also be addressed after our project is completed, since others can build on our knowledge to solve such issues in a similar way. These include:

- SFS-05-2017: Robotics Advances for Precision Farming

- SFS-26-2016: Legumes - transition paths to sustainable legume-based farming systems and agri-feed and food chains

- SFS-34-2017: Innovative agri-food chains: unlocking the potential for competitiveness and sustainability

Additional information on these calls: [1]

Our solution helps because, by integrating the robots' operating system into the systems of distributors, less food will be wasted, and more will therefore be available to others, for instance if the system is developed so that distribution can reach further. Additionally, we can help reduce poverty, or limit the number of people driven into poverty, because robots can reduce prices significantly in the medium to long term (certainly achievable before 2050). The procedure we use to make computer vision combined with machine learning identify strawberries in the field will also make it easier to produce more legumes (semi-)automatically, thus addressing the SFS-26-2016 call. Our solution meets the expected impact mentioned in SFS-05-2017, namely: ‘increase in the safety, reliability and manageability of agricultural technology, reducing excessive human burden for laborious tasks’, since our robotic solution aims to reduce the number of people needed at a farm site. It also specifically meets one of the expected impacts of the SFS-34-2017 call, which says solutions should ‘enhance transparency, information flow and management capacity’. This is what the system behind the robot is intended to achieve.

Impact

Short to middle term

- Decrease of jobs in agriculture

- Increase in robot maintenance jobs

- Increasing food security

- Lower prices

- Less waste

Long term

- More food available

Enterprise

Need

Impact

- Automation on farms correlates to more sales

- $4000 per 1% automation

- Lower costs

- Investment

- Expansion

- System improvement

- Other technical advances

- Investment

Validation of concept

Conversation with Cucumber Farmer

On the 12th of May we interviewed a cucumber farmer named Wilco Biemans. A friend of Maarten and Cameron works at his farm, and through her we were able to get his contact information and schedule an interview. During the interview we asked him several questions about his farm, the current state of the art, ongoing developments and his opinion on our system. His overall response was very positive: he saw the potential in our system, but he did point out several points for improvement. The main questions and his answers are outlined below. Please note that we are not reporting his exact answers, since the interview was conducted in Dutch and we did not record it.

What system is in place for detecting the ripeness of fruit?

The farmer said that currently the fruit is sorted by trained workers and machines. The cucumbers are picked by workers who have received training that allows them to identify which cucumbers are ripe enough for picking. These vegetables are put in crates, which are then transported to a sorting facility. There, the cucumbers that are deformed and not fit for consumption are separated from the rest and sold to the cosmetics or fertilizer industry. The remaining cucumbers are sorted by a machine into several weight categories (e.g. 400-500 g and 500-600 g), which are then sold for consumption.

What developments are currently being made in the agriculture industry, with respect to automated harvesting?

He said that there are systems available that are able to recognize and harvest fruit autonomously. However, these systems are not yet ready for commercial use. The biggest problem with these machines is the speed at which they operate: it is simply not financially worthwhile to replace a worker with one of these machines. He also pointed out that the entire infrastructure within the greenhouse, or on the farm, would have to be modified to accommodate these machines. The infrastructure is currently set up for maximum efficiency with human workers, but a robot might allow for a faster system (for instance, with conveyor belts instead of crates).

He then talked about some important design elements of these robots that we had not yet considered. The first was the use of a laser cutter instead of shears to harvest fruit. This choice was made to prevent the spread of diseases from one plant to the next, which could be catastrophic for a farm. The second was the ability of these robots to withstand the relatively harsh conditions within a greenhouse. There can be large fluctuations in temperature and extreme humidity, which the robot has to be able to withstand. To this end the robot should be waterproofed, since any internal condensation can ruin the electronics.

The next development we talked about was a solution to the problem that no two fruits are the same, even within one species. Every cucumber is different, which makes automated harvesting difficult. As a solution, the industry was working on combining two developments: new cucumber varieties and new methods of growing and harvesting. This new growing method is called 'hoge draad teelt' (high-wire cultivation) and is very common for tomatoes. It involves suspending the cucumbers on a high wire and letting them grow in the air, which ensures that most of the cucumbers grow at the same height and makes harvesting very easy. This does require a special variety of cucumber; currently about 10% of all cucumbers are grown this way. This method of growing and harvesting is also ideal for automation, since it makes locating the cucumbers very simple. If done properly, the growth can be controlled such that 95% of the fruit grows at the same height. Another reason this technology is very beneficial is that it standardizes the ways in which different fruits grow. Normally every fruit grows in its own specific way and thus requires its own way of harvesting. With this new method the fruits grow in a very similar way, which allows one machine to harvest different types of fruit, expanding the potential customer base.

Another development is a machine that can automatically sort cucumbers based on their length. Development of this machine has been ongoing for about 10 years. Methods for detecting rot inside fruits are also being developed. Finally, he pointed us to the work being done at Wageningen University; more on this can be found in the section on PicknPack and in the section on the state of the art.

Would a more accurate system be helpful?

"Of course," was his answer, but only if it can be faster and more accurate than a human worker and thus offer a way to save costs. He said that the workers make a lot of mistakes. This is natural, because there is no 100% accurate way for workers to identify which fruit is ripe. Currently this is mostly done by comparing the fruit to other fruits that are known to be ripe, but this process is prone to errors. He estimated that a machine that is just as fast as a human worker, with an accuracy of 80%, would be good enough for implementation. It is important, though, that this accuracy keeps improving over time until it is as close as possible to 100%.
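To make the farmer's acceptance criterion concrete, the sketch below compares the system's classifications with labels from a human check, tests the resulting accuracy against his 80% threshold, and compares throughput with that of a human worker. The function name and the way accuracy and speed are measured are assumptions; the interview did not specify a measurement procedure.

```python
def meets_farmer_criteria(predictions, true_labels, robot_per_hour, human_per_hour,
                          min_accuracy=0.80):
    """Check the two conditions from the interview.

    `predictions` and `true_labels` are equal-length sequences of class labels
    (e.g. "ripe"/"unripe"); the speeds are fruits handled per hour.
    Returns (criteria_met, accuracy).
    """
    if not predictions or len(predictions) != len(true_labels):
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == t for p, t in zip(predictions, true_labels))
    accuracy = correct / len(predictions)
    fast_enough = robot_per_hour >= human_per_hour
    return accuracy >= min_accuracy and fast_enough, accuracy
```

Because the farmer also expects the accuracy to keep improving, the same check could be rerun after every retraining with a gradually raised `min_accuracy`.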

Trip to PicknPack workshop at Wageningen University

Via one of our mentors we were pointed to the PicknPack project. In this project, research is being done on solutions for the easy packaging of food products. The goal of the research is to provide knowledge on possible solutions for picking food from (harvest) bins and packing it, while also performing quality control and adding traceability information. To that end they created a prototype industrial conveyor system with machines that provide this functionality. The system is designed to be flexible and to allow easy switching of the processed food type or category. While looking into the project, we saw an announcement of a workshop where they planned to show the prototype and give more information. The date suited us and the content was interesting, so we decided to go with a group of three (Mark, Cameron, Maarten). On May 26 we went to Wageningen University for the workshop.

The quality assessment module is the most interesting part for our project, and we were pleasantly surprised to learn that their approach used components that we were planning to use ourselves. In the quality assessment module, they set up an RGB camera, a hyperspectral camera, a 3D camera and a microwave sensor. The RGB camera is used for detecting ripeness, using a simple algorithm. Weight is estimated using the 3D camera. Features measured with the hyperspectral camera are sugar content, raw/cooked state (for meat products) and chlorophyll. The microwave sensor is used for measuring, among other things, freshness and water content. Cameron asked a PhD student working on the RGB ripeness detection about the use of machine learning in combination with the RGB camera. The student explained that they were not opposed to using machine learning, but in this project they chose a simpler approach using a statistical model. He mentioned its black-box nature and overfitting as potential problems of machine learning. The statistical model they used instead could be tuned by operators: a program showed several results of the algorithm with different parameters, and the operator could then indicate the best result.
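The PhD student did not describe their statistical model, so the following only illustrates the general idea of a simple, operator-tunable colour rule; it is not the PicknPack algorithm. A fruit is classified as ripe when the fraction of "red" pixels reaches a threshold, and the operator is shown the labels produced by several candidate thresholds so they can pick the one that looks best. The redness rule and all parameter values are assumptions.

```python
import numpy as np


def red_fraction(image, min_red=120, margin=40):
    """Fraction of pixels whose red channel is at least `min_red` and exceeds
    the green channel by at least `margin`. `image` is an H x W x 3 uint8 RGB array."""
    rgb = image.astype(np.int16)  # avoid uint8 wrap-around in the subtraction
    r, g = rgb[..., 0], rgb[..., 1]
    is_red = (r >= min_red) & (r - g >= margin)
    return float(is_red.mean())


def classify(image, threshold):
    """'ripe' if the red fraction reaches `threshold`, else 'unripe'."""
    return "ripe" if red_fraction(image) >= threshold else "unripe"


def threshold_preview(images, thresholds):
    """Labels each candidate threshold would give; an operator reviews these
    side by side and picks the threshold that matches their judgement."""
    return {t: [classify(img, t) for img in images] for t in thresholds}
```

The appeal of such a rule, as the student suggested, is that every parameter has a visible meaning an operator can adjust, unlike the weights of a neural network.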

Another big aspect of the project was traceability. A system was designed to closely track each food packet, using RFID, QR codes and barcodes, with a database in which the details are stored. Cleanliness was also very important. To deal with this, a robot was created that could move through all machines via the conveyor system. A separate cleaning procedure can be defined for each machine.
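A minimal sketch of per-item traceability in the spirit of what PicknPack showed: each packet gets a unique identifier (the value an RFID tag, QR code or barcode would carry) that keys a record in a database. Here an in-memory dictionary stands in for the database, and the class names and record fields are hypothetical.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class PacketRecord:
    """Traceability data for one food packet (hypothetical fields)."""
    origin: str                                 # e.g. farm and zone of harvest
    events: list = field(default_factory=list)  # processing steps, in order


class TraceabilityLog:
    """In-memory stand-in for the traceability database."""

    def __init__(self):
        self._records = {}

    def register(self, origin):
        """Create a record and return its ID, to be printed as a QR code or written to a tag."""
        packet_id = uuid.uuid4().hex
        self._records[packet_id] = PacketRecord(origin)
        return packet_id

    def add_event(self, packet_id, event):
        """Append a processing step (e.g. a quality check result) to a packet's history."""
        self._records[packet_id].events.append(event)

    def lookup(self, packet_id):
        """Return the full record for a scanned ID."""
        return self._records[packet_id]
```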

Trends identified by the PicknPack team

- More personalized fresh foods (online)

- World population growth

- Advancing technology

A collection of our own pictures can be found via this link.

Visit to Kwadendamme Farm

Overview

We learned an immense amount from the Steijn farmers in Kwadendamme. These farmers grow Conference pears and Elstar apples, and they process over 900,000 kg of fruit every year across 15 hectares of land. As seen in the picture above, the trees are kept at a relatively low height (around 2 meters) and are aligned neatly in even rows.

Farmer Problems

The main priority of the farmer is to maximize his revenue. In our conversations with farmer Rene, he mentioned that the biggest detractor from their bottom line was labor. The hourly cost of a Romanian or Polish harvester was 16 euros (including tax, healthcare and other employee benefits), even though only 7 euros of that goes directly to the worker. The farmers only hire farmhands during the 3-4 weeks of the year when they harvest their apples and pears; the rest of the year, most of their work goes into pruning, fertilizing and maintaining the plants.

Another way for the farmer to increase his bottom line is to drive top-line growth. If the farmer can grow plants that yield 20% more fruit, this translates into an almost proportional increase in revenue. The farmers mentioned that a robotic system that continually keeps track of how much produce each row of trees (or each individual tree, if such accuracy is attainable) is bearing would be valuable to them. We discussed the concept of a density map of their land, and the idea resonated with them. We decided here that we would develop a plan for a high-tech farm, in which our image processing system would serve as a proof of concept for one of the many technologies implemented on the farm. This allows us to take a broader perspective while still creating a technically viable solution.

Usefulness of Our System

The farmers recognized our system as being a necessary innovation within the quality assurance side of the produce supply chain, and they would benefit from a fruit-counting robot utilizing our machine vision software. The proof of concept we will demonstrate at the end of the year is an example implementation of our machine vision system as it applies to quality assurance, but from the farmers' comments it is clear there is plenty of room for innovation in computer vision within the agricultural field.

We also asked the farmers how they felt about certain technologies we saw at Delft, such as 3D X-ray scanners for detecting the internal quality of fruit. Again, they said that such technologies had their place in quality assurance, but were not useful to them: proving that an apple that looks good on the outside has internal defects only reduces the number of apples they can sell to the intermediary trader between them and Albert Heijn.

Design

Initial design

Our initial idea was to build an autonomous harvesting robot, made to harvest strawberries. It would be able to determine the level of ripeness of the strawberries and harvest the correct ones accordingly.

To determine the ripeness, the robot would use a camera mounted on a robotic arm. It would position the camera in front of the fruit and take a picture. This picture would then be sent to a neural network, which would have been trained using a large image set. This image set would contain many pictures of strawberries, sorted into different categories based on their level of ripeness. This would allow the neural network to determine the ripeness level of a strawberry in the field by comparing it to the categories in the database.

Once the robot has determined that a strawberry is ripe enough to be harvested, it would use a grabber and shears mounted on a different robotic arm to grab and harvest the strawberry. The strawberry would then be placed in a basket mounted on top of the robot. Special care would have to be taken not to damage the strawberry, since this would mean that it can no longer be sold.

To move the robot to the next strawberry we planned on using rails. These would be suspended between rows of plants, allowing the robot to reach all of the plants. The positioning of the robot would be fairly simple, since the position of each plant is known. The idea was to move the robot to pre-determined positions on the rail and then use the arm to reach the individual fruits.

This system would allow farmers to run much larger farms, since the robots can work day and night without rest. Another benefit is cost: if we were able to keep the robots relatively cheap, the farmer could save a lot of money. Finally, a robot can be a lot more accurate than a human worker, allowing for a higher harvesting efficiency. We also thought that the system could easily be adapted to harvest different types of fruit, which would give us a large customer base.

There were several problems with the approach we took at the beginning of the project. First of all, we had never spoken to anyone in the agriculture industry and therefore had no idea whether our initial design made any sense. Secondly, we had not really considered the problem from the side of the User, Society and Enterprise; instead, we approached the problem from a purely technical perspective.

We therefore decided to interview a farmer to learn what the current situation on a farm is. This would allow us to determine exactly where our solution would fit in and what requirements it would have to fulfil. We also decided to visit a farm, to see the state of the art with our own eyes. Finally, we attended a workshop of a European project on the automation of the food packing process. The results of these visits are described in the sections above.

Final design


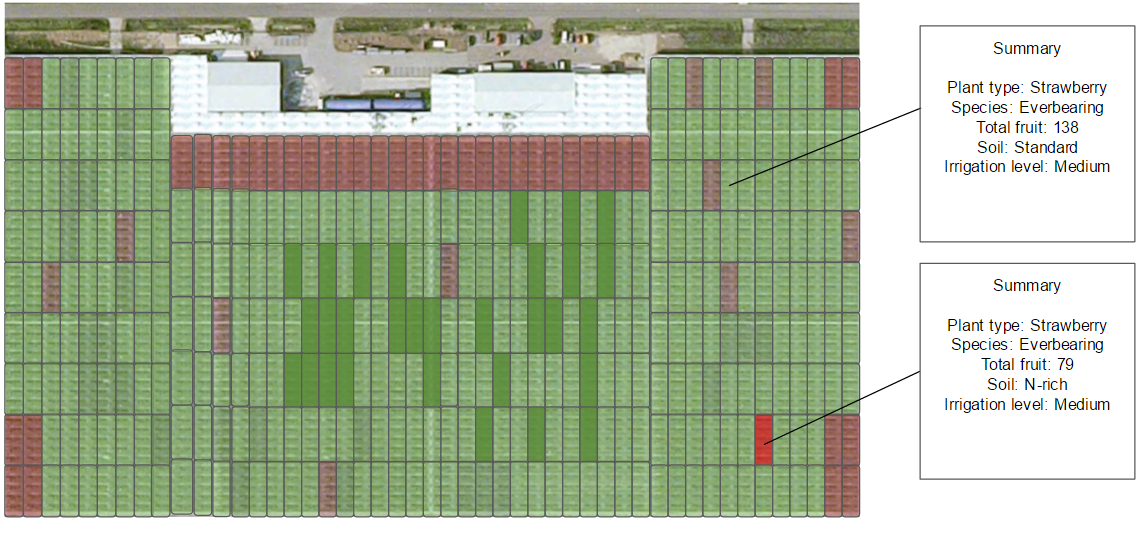

Image sets

We are creating image sets of ripe and unripe strawberries using the format as presented here:

The images are all 64x64 pixels, twice the height and width of the CIFAR-10 images (32x32), as we will use fewer images but of better quality. These images will be converted into a binary format and split into training sets. The images were taken with a Kinect camera attached to a Raspberry Pi. Uniform lighting conditions were used to match those of a quality control facility.
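The CIFAR-10 binary format stores each image as one label byte followed by the red, green and blue channels, each channel in row-major order. Assuming the same layout is kept for our 64x64 images (so each record is 1 + 3 x 64 x 64 = 12,289 bytes instead of 3,073), the conversion could look like the sketch below. The function names are hypothetical, and loading the JPEGs into arrays (e.g. with Pillow) is left out.

```python
import numpy as np

IMAGE_SIZE = 64
RECORD_BYTES = 1 + 3 * IMAGE_SIZE * IMAGE_SIZE  # label byte + R, G, B planes


def to_record(image, label):
    """Encode one 64 x 64 x 3 uint8 RGB image as a CIFAR-10-style record."""
    if image.shape != (IMAGE_SIZE, IMAGE_SIZE, 3) or image.dtype != np.uint8:
        raise ValueError("expected a 64x64x3 uint8 image")
    if not 0 <= label <= 255:
        raise ValueError("label must fit in one byte")
    planes = image.transpose(2, 0, 1)  # channel-major: all R, then all G, then all B
    return bytes([label]) + planes.tobytes()


def write_batch(path, images, labels):
    """Write matching lists of images and labels to one binary batch file."""
    with open(path, "wb") as f:
        for image, label in zip(images, labels):
            f.write(to_record(image, label))


def read_batch(path):
    """Decode a batch file back into (N x 64 x 64 x 3 images, N labels)."""
    raw = np.fromfile(path, dtype=np.uint8).reshape(-1, RECORD_BYTES)
    labels = raw[:, 0]
    images = raw[:, 1:].reshape(-1, 3, IMAGE_SIZE, IMAGE_SIZE).transpose(0, 2, 3, 1)
    return images, labels
```

Keeping the CIFAR-10 layout means the input pipeline from the CIFAR-10 tutorial only needs its image-size constants changed.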

The following setup is being used for taking/uploading images:

Vision system

The robot needs a vision system to detect whether a fruit in its field of view is ready to be picked. If a detected fruit is not ripe (green, in the case of strawberries), it should be left on the plant. If the fruit is ripe or overripe, it should be picked. Overripe fruits will be filtered out during later quality assessment phases that are already in place, often during the packaging phase.
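The pick-or-leave rule above can be written out as follows. This sketch assumes the classifier returns a probability per ripeness class and that the robot acts on the most probable class; the three class names are hypothetical.

```python
from enum import Enum


class Ripeness(Enum):
    """Hypothetical class labels produced by the ripeness classifier."""
    UNRIPE = "unripe"      # e.g. still green
    RIPE = "ripe"
    OVERRIPE = "overripe"  # removed later by the existing quality checks


def should_pick(label):
    """Pick ripe and overripe fruit; leave unripe fruit on the plant."""
    return label in (Ripeness.RIPE, Ripeness.OVERRIPE)


def decide(probabilities):
    """Given {Ripeness: probability}, return (most likely label, pick?)."""
    label = max(probabilities, key=probabilities.get)
    return label, should_pick(label)
```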

For the vision system we chose to use image recognition using machine learning.

Reasons for choosing image recognition over simple color detection:

- Wageningen: a PhD student working on ripeness detection was open to using machine learning for this task.

- More flexible

For the vision system we use a Kinect camera, hooked up to a Raspberry Pi for the demo.

TensorFlow Framework

For our classification of fruit ripeness, we are using TensorFlow, an open-source Python/C++ framework for machine learning. We are using a convolutional neural network (CNN) trained on a custom image set of different fruits at different levels of ripeness. We have followed the tutorials for the basic MNIST dataset as well as the CIFAR-10 CNN. We are using a modified CNN based on the Inception v3 model.

Training the neural network can take a significant amount of time: on several occasions, training the CNN with TensorFlow running in Docker on the standard-issue TU/e laptop took more than four hours.
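For orientation, a small convolutional network for 64x64 RGB inputs and two classes (ripe/unripe) can be defined with the Keras API bundled with TensorFlow 2. This is a sketch under assumptions, deliberately much simpler than the Inception v3-based model mentioned above and not the group's network; the layer sizes are arbitrary. Note that the stock Keras `InceptionV3` application requires inputs of at least 75x75 pixels, so 64x64 images would need resizing for that model.

```python
import tensorflow as tf


def build_model(num_classes=2, image_size=64):
    """Small CNN: two convolution/pooling stages followed by a dense classifier."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(image_size, image_size, 3)),
        tf.keras.layers.Rescaling(1.0 / 255),  # uint8 pixel values -> [0, 1]
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",  # integer class labels
                  metrics=["accuracy"])
    return model
```

Training would then be along the lines of `model.fit(images, labels, epochs=10, validation_split=0.2)`, with `images` an N x 64 x 64 x 3 array and `labels` integer class indices (for example 0 = unripe, 1 = ripe, by assumption). A network this small trains far faster than an Inception-based one, which matters on a laptop without a GPU.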

Database

Application

Application design (User interface)

Demo

We decided to use a standard off-the-shelf Raspberry Pi as the single-board computer for our implementation of the vision system. The idea is that any device can post an image of a ripe or unripe fruit to the database and receive a classification. Using the Raspberry Pi demonstrates that any device with an internet connection and sufficient processing power can use our fruit classification system.
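A minimal sketch of the "post an image, receive a classification" exchange using only the Python standard library. The endpoint URL, the JSON field names and the response format are all hypothetical; the text does not describe the actual API of the database.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint of the classification service.
CLASSIFY_URL = "http://example.com/api/classify"


def build_request(jpeg_bytes, fruit="strawberry", url=CLASSIFY_URL):
    """Wrap an image in a JSON POST request (image sent as base64 text)."""
    payload = {
        "fruit": fruit,
        "image": base64.b64encode(jpeg_bytes).decode("ascii"),
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify_remote(jpeg_bytes, timeout=10):
    """Send the image and return the decoded JSON answer,
    assumed to look like {"label": "ripe", "confidence": 0.93}."""
    with urllib.request.urlopen(build_request(jpeg_bytes), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Sticking to the standard library keeps the client usable on any device with Python, which fits the point that any internet-connected device should be able to use the classifier.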

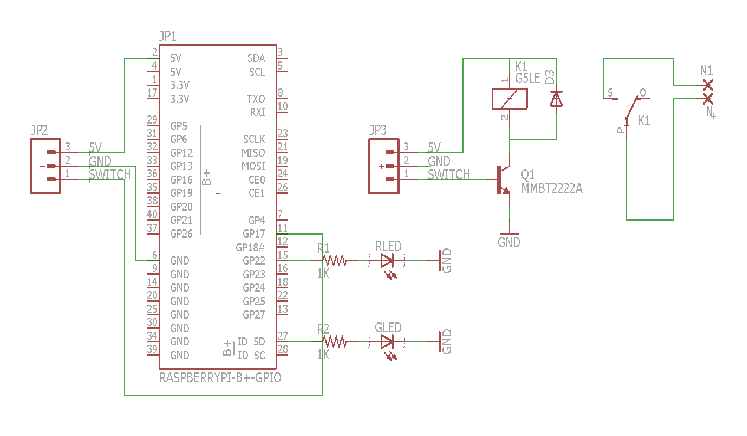

The Raspberry Pi used a single Python script to turn on the spotlight, take a picture using the Kinect, and flash a red or green LED depending on whether the fruit was rejected or accepted. The code can be found (INSERT LINK HERE). To turn on the spotlight, a GPIO pin on the Raspberry Pi was connected to a custom relay board that we produced, which was in turn connected to mains electricity. The neutral wire from the mains was split in two, with the relay in between, which could close the connection between…
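The control flow of that script can be sketched as below. Since the actual code is not linked here, this is an illustration under assumptions: the pin numbers are hypothetical, and the camera capture and classification steps are passed in as functions. The `gpio` argument is expected to behave like the `RPi.GPIO` module, so the logic can also be exercised on a normal computer with a fake object.

```python
import time

SPOTLIGHT_PIN = 17  # hypothetical BCM pin driving the relay board
GREEN_LED_PIN = 27  # hypothetical: lit when the fruit is accepted
RED_LED_PIN = 22    # hypothetical: lit when the fruit is rejected


def inspect_fruit(gpio, capture, classify, flash_seconds=1.0, warmup_seconds=0.5):
    """One inspection cycle: light on, take picture, light off, classify, flash LED.

    `gpio` offers setup/output and OUT/HIGH/LOW like RPi.GPIO,
    `capture()` returns an image and `classify(image)` returns True if accepted.
    """
    for pin in (SPOTLIGHT_PIN, GREEN_LED_PIN, RED_LED_PIN):
        gpio.setup(pin, gpio.OUT)
    gpio.output(SPOTLIGHT_PIN, gpio.HIGH)     # close the relay: spotlight on
    try:
        time.sleep(warmup_seconds)            # let the lighting settle
        image = capture()
    finally:
        gpio.output(SPOTLIGHT_PIN, gpio.LOW)  # always switch the light off again
    accepted = classify(image)
    led = GREEN_LED_PIN if accepted else RED_LED_PIN
    gpio.output(led, gpio.HIGH)
    time.sleep(flash_seconds)
    gpio.output(led, gpio.LOW)
    return accepted
```

On the Pi itself, `gpio` would be the `RPi.GPIO` module after `GPIO.setmode(GPIO.BCM)`, with `GPIO.cleanup()` called when the script exits. Switching the light off in a `finally` block means a failed capture never leaves the mains-powered spotlight on.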

App

Idea behind the app

One of the requirements of the system was that the user/farmer should be able to add pictures of unripe and ripe fruit to the database in order to improve the system. This was chosen because we hypothesized that more pictures, taken under different lighting and other circumstances and covering different classifications of the fruit, would result in a better and faster neural network, which would improve the overall performance of the system. Furthermore, we wanted to give the farmer an extra way of gaining insight into his/her farm and the system in use, for example how much fruit was picked, the overall quality of this fruit, the temperature, and ways to connect to the CCTV system of the farm and keep an eye on everything.

We decided to use an app for this, since we cannot expect the user to know how to program or to use other complicated means of adding pictures to the database. With this app the farmer would be able to add pictures to the database and label them, get more information about his/her farm, and go into the database to delete pictures or change their labels.

We decided to first create screenflows and functional PowerPoint versions of the app, to get a good idea of the look and feel before programming the whole thing. This often resulted in slight changes, because we found that some aspects did not work well or lacked an intuitive feel.

Screenflows

Version 1

The first version was made during the third week of the course and formed the base for the first version of the app. The initial idea was that a farmer should be able to alter the database: when checking the strawberries that the system had picked, the farmer could add new pictures of instances that did not meet expectations, so that the system could learn by using these pictures for additional training. Finally, it should have an option to view what the robot is doing by live-streaming the footage from the robot's camera. [2]

Version 2

After feedback we rethought the app's requirements and concluded that it would not be useful for the farmer to take pictures himself, since adding 10 pictures will have no noticeable impact on a database of 10,000 pictures. We therefore assume that the system is in operation at multiple farms and that these share a database in the cloud. By adding Tinder-like functionality for reviewing pictures taken by the robot that day, we hope to receive more feedback (as multiple farmers will do this regularly) and thus increase the learning capabilities of the system. An additional benefit is that this costs the farmer less work and is perceived as more fun. The database tab was changed so that it now displays statistics about the database, including the latest version, when that update took place and how many pictures it contains. The live feed was replaced by an overview of the farm with different 'filters' that show the performance of different sections in terms of, among others, revenue, average quality and expected harvest in the coming days. This can be seen for the farm as a whole, but also per section. [3]

Different versions of the app

Version 1

The first version of the app was made during the 4th week of the course. This app had the functionalities described in the "Idea behind the app" section. The user could log in to his/her personal account, take pictures, label them and add them to the database. Furthermore, the farmer could gain insight into the performance of his/her farm and could change and delete pictures in the database.

The first version of the app can be found here: File:Use app.pdf

Version 2

The second version of the app was made during the 6th week. It differed from the first version in that the user was no longer able to take pictures and upload them. During the meetings it was found that taking a picture, labeling it and then uploading it would simply take too much of the farmer's time to be worthwhile. Therefore the picture-upload part was replaced by a rating part. The system would take pictures of fruits for which it was not yet sure whether the quality was high enough. These pictures would then be stored and presented to the farmer, who could rate them as good or bad by swiping them to the right or the left of the screen. This allowed the user to rate pictures quickly and improve the system by feeding it more and more pictures.

The second version of the app can be found here: File:Use app v2.pdf
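The selection and rating step of this version can be sketched in Python. This is a minimal illustration, not the app's implementation: it assumes the classifier outputs a ripeness score between 0 and 1 for every picture, treats 0.7 to 0.9 as the "not yet sure" band (borrowing the 70% to 90% example given for the final app), and maps a right swipe to "good" and a left swipe to "bad". The names `Picture`, `select_for_review` and `swipe_to_label` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Picture:
    picture_id: str
    ripeness: float  # assumed classifier output in [0, 1]


def select_for_review(pictures, low=0.7, high=0.9):
    """Keep only pictures whose predicted ripeness lies in the uncertain band [low, high]."""
    return [p for p in pictures if low <= p.ripeness <= high]


def swipe_to_label(direction):
    """Translate a swipe into a label (hypothetical convention: right = good, left = bad)."""
    labels = {"right": "good", "left": "bad"}
    if direction not in labels:
        raise ValueError(f"unknown swipe direction: {direction!r}")
    return labels[direction]


today = [Picture("a", 0.95), Picture("b", 0.80), Picture("c", 0.40), Picture("d", 0.72)]
queue = select_for_review(today)
print([p.picture_id for p in queue])  # ['b', 'd']
print(swipe_to_label("right"))        # good
```

Only the fruits the network is unsure about reach the farmer, which keeps the daily rating queue short.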

Version 3

The third version of the app was made during the 7th week of the course and also had some major differences from the earlier versions. The main difference was that the insights into the farm were now presented per region of the farm. Using color coding, the farmer could now see which sectors of his/her farm were performing best, worst and everything in between. The farmer could then click on a region to see more statistics about that sector and its performance. Furthermore, the farmer could also see an overall view of all his/her produce and the overall performance of the farm.

The third version of the app can be found here: File:Use app v3.pdf
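The color coding of sectors can be sketched as a linear scale from red (worst sector) via yellow to green (best sector). This is an illustrative assumption: the page does not say which metric or palette the app uses, and `performance_color` and `color_sectors` are hypothetical names.

```python
def performance_color(value, worst, best):
    """Map a sector's score linearly onto a red -> yellow -> green scale as an (r, g, b) tuple."""
    if best == worst:
        return (255, 255, 0)  # all sectors score the same: show yellow
    t = (value - worst) / (best - worst)  # 0.0 = worst sector, 1.0 = best sector
    t = min(max(t, 0.0), 1.0)
    if t < 0.5:
        # first half: red (255, 0, 0) to yellow (255, 255, 0)
        return (255, round(510 * t), 0)
    # second half: yellow (255, 255, 0) to green (0, 255, 0)
    return (round(510 * (1 - t)), 255, 0)


def color_sectors(scores):
    """Give every sector a color based on where its score lies between the worst and best sector."""
    worst, best = min(scores.values()), max(scores.values())
    return {sector: performance_color(v, worst, best) for sector, v in scores.items()}


sectors = {"A": 90, "B": 50, "C": 70}
print(color_sectors(sectors))
# {'A': (0, 255, 0), 'B': (255, 0, 0), 'C': (255, 255, 0)}
```

Scaling relative to the worst and best sector always uses the full color range, so differences between sectors stay visible even when the whole farm performs well.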

Version 4

The fourth version of the app was also made during the 7th week of the course and included multiple screens that were color coded. The farmer could now also use the earlier mentioned segments for seeing the following stats: Processed today, Average Quality, Revenue, Time to harvest and Kgs left.

Furthermore, the database tab was changed to an overall system performance overview, since it was thought that changing 1 or 2 pictures at a time would not make a significant contribution to the performance of the system.

The fourth version of the app can be found here: File:Use app v4.pdf
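The per-segment statistics of this version could be computed along the lines of the sketch below. It assumes one record per fruit holding its segment, weight, quality estimate and two status flags, and a single fixed price per kilogram; `FruitRecord`, `segment_stats` and the field names are hypothetical, and the time-to-harvest statistic is left out.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FruitRecord:
    segment: str
    weight_kg: float
    quality: float         # estimated quality in percent (0-100)
    processed_today: bool  # handled by the robot today
    harvested: bool


def segment_stats(records, price_per_kg):
    """Group fruit records by segment and compute the overview statistics for each segment."""
    groups = defaultdict(list)
    for record in records:
        groups[record.segment].append(record)

    stats = {}
    for segment, rs in groups.items():
        harvested_kg = sum(r.weight_kg for r in rs if r.harvested)
        stats[segment] = {
            "processed_today": sum(1 for r in rs if r.processed_today),
            "average_quality": sum(r.quality for r in rs) / len(rs),
            "revenue": harvested_kg * price_per_kg,
            "kgs_left": sum(r.weight_kg for r in rs if not r.harvested),
        }
    return stats


records = [
    FruitRecord("A", 0.5, 80, True, True),
    FruitRecord("A", 0.25, 60, True, False),
    FruitRecord("B", 0.5, 90, False, False),
]
print(segment_stats(records, price_per_kg=4.0)["A"])
# {'processed_today': 2, 'average_quality': 70.0, 'revenue': 2.0, 'kgs_left': 0.25}
```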

Version 5 and Version 5.1

Versions 5 and 5.1 of the app were also made during the 7th week and included some minor changes with respect to the fourth version. The names of the buttons in the main menu were changed and numbers were added to the different segments in the overview, so that the farmer has a better overview and does not have to press any buttons to check the numbers of each sector. Furthermore, the time-to-harvest indication was changed to an overview of how much a farmer could pick per day. This was also added to the total overview to give the farmer an idea of how much produce he/she can expect on certain days. This can then be used to schedule the workforce and run the farm more efficiently.

Versions 5 and 5.1 of the app can be found here: File:Use app v5.pdf & File:Use app v5.1.pdf
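Summing the per-sector harvest forecasts into a farm-wide forecast, and turning that into a rough number of pickers per day for workforce scheduling, could look like the sketch below. The per-sector forecasts (kg that can be picked on each coming day) are taken as given, and the picking rate per worker is a hypothetical parameter; `total_per_day` and `pickers_needed` are illustrative names.

```python
import math


def total_per_day(sector_forecasts):
    """Add up per-sector forecasts (kg pickable on day 0, 1, 2, ...) into one farm-wide list."""
    days = max(len(forecast) for forecast in sector_forecasts.values())
    totals = [0.0] * days
    for forecast in sector_forecasts.values():
        for day, kg in enumerate(forecast):
            totals[day] += kg
    return totals


def pickers_needed(totals, kg_per_picker_per_day):
    """Round up so that every day's expected harvest can be picked."""
    return [math.ceil(kg / kg_per_picker_per_day) for kg in totals]


forecast = {"A": [100, 150, 80], "B": [50, 70]}
totals = total_per_day(forecast)
print(totals)                      # [150.0, 220.0, 80.0]
print(pickers_needed(totals, 60))  # [3, 4, 2]
```

Sectors with a shorter forecast simply contribute nothing to the later days.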

Version 6

The sixth version was made during the final week and included some extra screens. It gave the user the possibility to zoom in on different levels, giving him/her even more insight at a more detailed level. This could be done by clicking on one of the sectors/rows, which would give the user a better overview of the results of that specific row/sector.

It was decided to use rows at this level because the farmers that were visited explained that they mostly experiment with different soil contents and irrigation plans per row. Therefore they said that a division per row at the first level was sufficient.

The sixth version of the app can be found here: File:Use app v6.pdf

The final app

For the final app, we chose to focus on three main features. From our interviews with farmers and the visit to Wageningen, we found that transparency of the system is very important to the farmers. The farmer also wants to be able to distinguish between different parts of the farm, for example if he wants to experiment with different kinds of fertilizers. These remarks led to the "Insights" part of our app. In this part of the app, the farmer can obtain information about different parts of his farm: how much fruit is left, how much fruit has been picked, the revenue, the average quality of the fruit and an estimated average time until the majority of the fruit can be harvested. This information can be viewed for the farm as a whole, but also for a specific part of the farm.

The prediction of the average time to harvest is based on the average quality percentage, i.e. the quality of all fruits in the selected area, averaged.
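The averaging step is straightforward; how the average is turned into a time to harvest is not specified here, so the sketch below uses a purely hypothetical linear model in which quality rises by a fixed number of percentage points per day until it reaches a harvest target. The target (90%) and daily gain (5 points) are placeholder values, not measured figures, and both function names are illustrative.

```python
import math


def average_quality(qualities):
    """Mean quality percentage of all fruits in the selected area."""
    if not qualities:
        raise ValueError("the selected area contains no fruit")
    return sum(qualities) / len(qualities)


def estimated_days_to_harvest(avg_quality, target=90.0, gain_per_day=5.0):
    """Hypothetical linear model: days until the average quality reaches the target."""
    remaining = max(0.0, target - avg_quality)
    return math.ceil(remaining / gain_per_day)


area = [70, 80, 60]
avg = average_quality(area)
print(avg)                             # 70.0
print(estimated_days_to_harvest(avg))  # 4
```

A real estimate would need ripening data per variety and season; the sketch only shows where such a model would plug into the averaged quality.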

The second main feature of the app is that it allows the farmer to help improve the learning algorithm by adding pictures to a database. For this, we made a page in the app to which all pictures taken by the Kinect camera that fall in a specific ripeness range (for example between 70% and 90% ripeness) are sent, and where different farmers can swipe the pictures of the fruit to "approve" or "disapprove" it. Open questions: how will these pictures be sent back to the database, and how many farmers rate each fruit?
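One possible approach to the open question of how many farmers rate each fruit, sketched in Python: collect the swipes of several farmers per picture and only turn them into a training label once enough farmers agree. The minimum of three votes and the 75% agreement threshold are hypothetical choices, as is the function name `aggregate_ratings`.

```python
from collections import Counter


def aggregate_ratings(votes, min_votes=3, min_agreement=0.75):
    """Combine several farmers' swipes on one picture into a single training label.

    Returns "approve" or "disapprove", or None when there are too few votes
    or the farmers disagree too much for the label to be trusted.
    """
    if len(votes) < min_votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= min_agreement:
        return label
    return None


print(aggregate_ratings(["approve", "approve", "disapprove", "approve"]))  # approve
print(aggregate_ratings(["approve", "disapprove"]))                        # None
```

Pictures that return None could stay in the rating queue until more farmers have swiped them.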

The last feature of the app is a total overview and prediction.

As an improvement to the application, a setting could be added in which the farmer specifies how detailed the density maps in the app should be. For example, the farm could be divided into 10 areas, or each of these 10 areas could be subdivided into another 10 areas.
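Such a detail setting could be sketched as follows, assuming each fruit's position is known as a distance along the field (a one-dimensional simplification) and that every level of detail splits each area into 10 equal parts. `density_map` and its parameters are hypothetical.

```python
def density_map(positions, field_length, depth=1, splits=10):
    """Count fruits per area when the field is divided into splits**depth equal areas.

    depth=1 gives 10 areas; depth=2 divides each of those into another 10 (100 areas).
    Area i at depth 2 lies inside area i // splits at depth 1.
    """
    n_areas = splits ** depth
    counts = [0] * n_areas
    for x in positions:
        if not 0 <= x < field_length:
            raise ValueError(f"position {x} lies outside the field")
        # min() guards against floating-point rounding pushing a position into a non-existent area
        counts[min(int(x * n_areas / field_length), n_areas - 1)] += 1
    return counts


positions = [5, 15, 17, 95]
print(density_map(positions, 100))  # [1, 2, 0, 0, 0, 0, 0, 0, 0, 1]
```

Because the areas nest, the coarse map can always be recovered from the detailed one by summing each group of 10 neighbouring areas.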

- Another improvement could be a 3D version, in which the farmer can look at the pictures in the rating system from different angles to better determine the ripeness.

- This system could also be used to determine the aesthetic appeal of the fruits.

Three main features:

- Detailed statistics
  - General information

- Density maps
  - Give the farmer detailed information about the farm
  - Ability to troubleshoot problems
  - See the results of experiments

- Feedback
  - All users can give feedback
  - Used to improve the classification

The app was made using Android Studio. We chose this program because it is fairly easy to use and because Android is supported by many phone and tablet manufacturers.

link to ZIP-file containing the app

Discussion


Suggestions for further research or improvement


Conclusion

Research

Manual strawberry harvesting process


Harvesting robots

- Description of an autonomous cucumber harvesting robot, designed and tested in 2001

- Paper from 1993 describing the then state of the art and economic aspects. It has a chapter on economic evaluation.

- Recent TU/e paper discussing the state of the art in tomato harvesting. It focuses on the mechanical part and does not include sensing and detection.

Sensing technology

(Older)

- Yamamoto, S., et al. "Development of a stationary robotic strawberry harvester with picking mechanism that approaches target fruit from below (Part 1)-Development of the end-effector." Journal of the Japanese Society of Agricultural Machinery 71.6 (2009): 71-78.

- Corbett-Davies, Sam, Tom Botterill, Richard Green, and Valerie Saxton. "An expert system for automatically pruning vines." Proceedings of the 27th Conference on Image and Vision Computing New Zealand, Dunedin, New Zealand, November 26-28, 2012.

- Hayashi, Shigehiko, Katsunobu Ganno, Yukitsugu Ishii, and Itsuo Tanaka. "Robotic Harvesting System for Eggplants." JARQ: Japan Agricultural Research Quarterly 36.3 (2002): 163-68.

- Blasco, J., N. Aleixos, and E. Moltó. "Machine Vision System for Automatic Quality Grading of Fruit." Biosystems Engineering 85.4 (2003): 415-23.

- Cubero, Sergio, Nuria Aleixos, Enrique Moltó, Juan Gómez-Sanchis, and Jose Blasco. "Advances in Machine Vision Applications for Automatic Inspection and Quality Evaluation of Fruits and Vegetables." Food and Bioprocess Technology 4.4 (2010): 487-504.

- Tanigaki, Kanae, et al. "Cherry-harvesting robot." Computers and Electronics in Agriculture 63.1 (2008): 65-72.

- Evaluation of a cherry-harvesting robot. It picks by grabbing the peduncle and lifting it upwards.

- Hayashi, Shigehiko, et al. "Evaluation of a strawberry-harvesting robot in a field test." Biosystems Engineering 105.2 (2010): 160-171.

- Evaluation of a strawberry-harvesting robot.

State of the art

A small number of tests have been done with machines for harvesting strawberries. These are large, bulky and expensive machines such as Agrobot, with prices on the order of 50,000 dollars. Todo: add citations.

A lot of research has been done on inspection by means of machine vision. Todo: add citations and continue.

Planning

Week 2

Clarifying our project goals

Working on USE aspects

Finalize planning and technical plan

Sketch a prototype

Week 3

Preliminary design for app

First implementation of app

Database/Server setup

CNN and basics of neural networks

Week 4

App v1.0 with design fully implemented

Kinect interfacing to Raspberry Pi completed

USEing intensifies

Finish back-end design and choose frameworks

Week 5

App v2.0 with design fully implemented and tested

Further training of CNN

Working database classification (basic)

Casing (with studio lighting LED shining on fruit)

Week 6

Improve CNN

Expand training sets beyond strawberries (if possible)

User testing on app

Reflect on USE aspects and determine if we still preserve our USE values

Week 7

Implement feedback from testing app

Improve aesthetic appeal detection (if time)

Week 8

Finish everything

Buffer period

Final reflection on USE value preservation

Week 9

Improve wiki for evaluation

Peer review

Fallback:

App for the user to report feedback in the form of images of high/low quality fruit.

Have the Raspberry Pi take pictures using the Kinect and send them to the database

Choose a simpler, binary classification (below/above 50% quality)

Flesh out the design more (if implementation fails)
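The binary fallback classification amounts to a single threshold on the quality estimate. Whether a fruit at exactly 50% counts as high or low quality is not specified; this sketch assigns it to the high class, and `binary_quality` is a hypothetical name.

```python
def binary_quality(quality_percent, threshold=50.0):
    """Collapse a quality estimate (0-100 %) into one of two classes: 'high' or 'low'."""
    if not 0.0 <= quality_percent <= 100.0:
        raise ValueError("quality must be between 0 and 100")
    return "high" if quality_percent >= threshold else "low"


print([binary_quality(q) for q in (30, 50, 72)])  # ['low', 'high', 'high']
```

With only two classes, far fewer labelled pictures per class are needed than with several ripeness categories, which is what makes this a sensible fallback.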

Further reading

- Aeroponics (we most likely won’t use this as an irrigation method)

- Why to avoid monoculture