Mobile Robot Control 2021 Group 7

Group Members

Tim van Esch - 1235917

Aron Prinsen - 1243943

Thom Samuels - 1267566

Naomi de Vos - 1233610

Joey Wouters - 0813063

Introduction to the course

The subject of the course is to program a robot (PICO) in such a way that it is able to accomplish certain tasks autonomously. There are two challenges that need to be tackled. The first challenge is an escape room, which will have rectangle shape by approximation that consists of one exit. The robot is not allowed to make contact to the wall in this challenge. The second challenge will be the hospital challenge, in which the robot needs to visit certain cabinets in a predefined order. During the challenge, the robot is not allowed to touch static objects, such as the wall or doors, or dynamic objects, such as people.

Design Document

The Design Document: Media:4SC020_Design_Document_07.pdf

Requirements

Requirements specify what the system should do. Some requirements are challenge dependent, as the environment and task at hand differ per challenge, while others are general. These general requirements are both given by the client and decided upon by the team. The following requirements are applicable to both challenges, where the first three are given by the client and the others are preferences of the group:

- Wall clearance: The robot should stay (0.5-2r)/2 meters away from the wall, where r is the radius of the robot and 0.5 is the minimum width of the corridor of the escape room challenge.

- Speed limit: 0.5 m/s translational, 1.2 rad/s rotational.

- Sensible movement: The robot should make a sensible move within 30 seconds.

- Local path planning: The ability to create a path which needs to be followed, within the same room.

- Effective path: Used time to complete a challenge should be minimized.

- Scalability: The solutions should work for different environments.

- Communication: The robot needs to be able to communicate what it is doing.

For the escape room challenge, there are only two extra needed requirement, both given by the client, which are the following:

- Challenge completion: The robot needs to cross the finish line, at the end of the corridor, completely to pass the escape room challenge.

- Maximum time: Efficient computing and control speed is required to be able to complete the escape room challenge within 5 minutes.

The requirements needed specifically for the hospital challenge are listed below. In this list, the first four requirements come from the client, while the others are decided upon by the group, in order to improve efficiency.

- Challenge completion: The robot needs to visit the cabinets in the given order.

- Maximum time: Efficient computing and control speed is required to be able to complete the hospital challenge within 10 minutes.

- Object detection: Unknown and possibly moving objects should be detected. If unexpected objects and/or people are in the way, the robot needs to go by them, avoiding collisions at all time.

- Orientation with respect to cabinets: The robot should approach the cabinets with the right orientation.

- Door detection: The robot needs to recognize door openings. If a door is locked the robot needs to look for another entry.

- Global path planning: The ability to create a sequence of consecutive intermediate points which needs to be visited in order, divided over multiple rooms.

- Emergency stop: The robot should stop directly when detecting an unexpected object/ person in front of it.

- Keeping right: In hallways, the robot should stay on the right side if possible.

Functions

Functions are requirements that the software needs to meet. These functions can be split up in two types, namely functions that improve the quality of the code and functions that specify what the robot needs to be able to do. The functions that the code needs to meet are as follows:

- Readability: The software needs to be well readable, this includes using indents and comments and best practices.

- Structure: The software needs to have a logical structure, such as dividing the code in multiple files. This also helps improve readability.

- Maintainability: It needs to be possible to maintain the software, this includes that all team members need to be able to work on the software.

The functions that specify what the software needs to enable the robot to do are as follows:

- Perception: Process the LRF data and based on the found distances between the robot and objects, walls and people at a certain angle, which is used to perceive what static and dynamic objects are surrounding the robot.

- Localization: Pinpointing the location of the robot, using the given map of the environment and perception module.

- Path planning: Planning a needed path, based on the given general layout of the environment, together with the given target position and its current position.

- Navigation: Enabling the robot to follow a route from its current location to a target location.

- Collision avoidance with dynamic objects: Detection and reaction to motion of other objects and at all time try to avoid collision with these.

- Alignment: Making sure the robot has the right orientation with respect to the cabinets and hallways.

- Communication: The software needs to be able to communicate with the user.

Components

The software consists of multiple components. These components are as follows:

- Localization: The robot needs to localize itself with respect to the environment.

- Perception: The robot needs to perceive its surroundings, including the detection of objects.

- Navigation: The robot needs to be able to navigate through the environment.

- Path planning: The robot needs to plan its movement towards its target position.

- World modeling: The robot needs to make a model of the world for itself, including the dynamic objects.

- System planning: The robot needs to decide which components to use at what time, in order to come up with a strategy for completing the task.

Specifications

Specifications specify what the system can do. The following specifications can be set up:

- The robot can detect (the distance to) walls/objects using its Laser Range Finder (LRF).

- The robot can estimate its change in position over time, using the wheel encoders.

- The robot can move in all directions, using its holonomic base with omni-wheels.

- The robot can use speech to communicate with its environment.

Interfaces and strategy

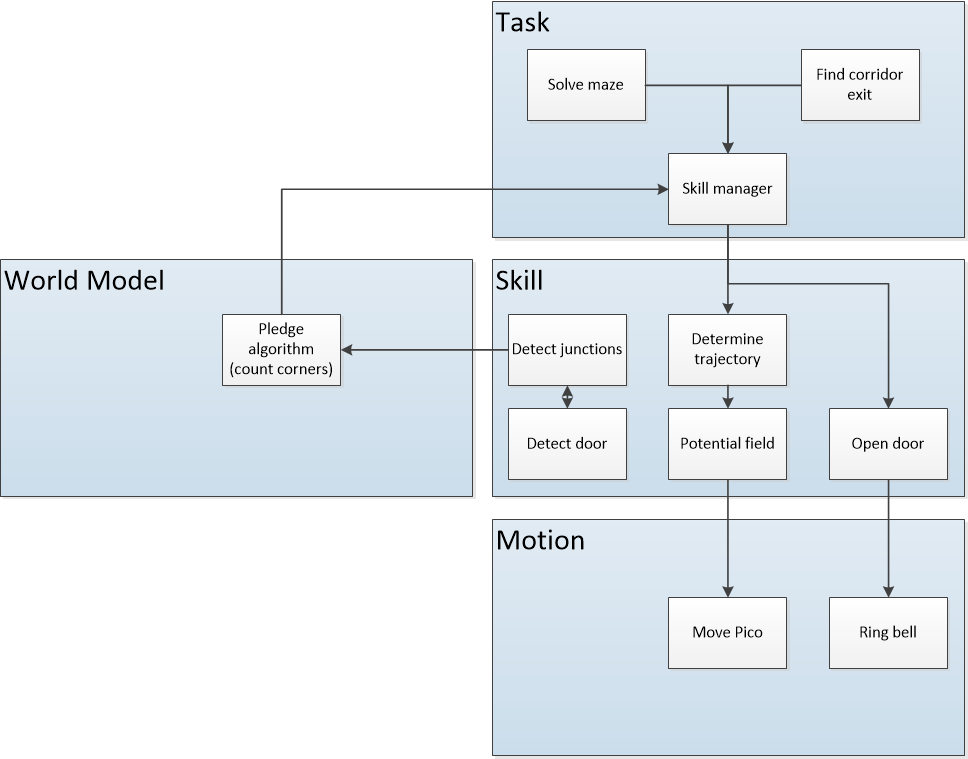

Interfaces link the different components of the software. The interfaces can be seen in the following figure:

Here, the inputs of the world model include the target location(s)/goal, which for the escape room challenge is "leaving the room", and the information that is known before hand, such as the lay-out of the rooms in the hospital challenge. Also, the world model is used to store known data.

In order to complete the escape room challenge, multiple strategies can be applied. In order to compare strategies and to be sure that there is at least one working strategy, two are implemented. The first strategy is following the walls, either clockwise or anti-clockwise, until the robot has left the room. This strategy is probably not the fastest, as you will travel a greater distance, but it is a safe option, as you will always end at the exit, because this is where the walls end. The second strategy consists of multiple steps. The first step is scanning the whole room and based on the LRF data determine the location of the corridor/exit. After determining this location, the robot needs to then drive to this point straightaway. When the exit is localized, it is known that the robot needs to only drive forwards until it has crossed the finish line.

In order to implement the strategies, the state machine of the robot is used. This state machine looks as follows:

Escape Room Competition

Objective

Simply speaking, the escape room challenge can be subdivided two major part: 1) setting the objective based on the LRF data, and 2) driving towards the objective avoiding any obstacle in the way. The following part will elaborate on setting the objective. This is done in three steps: 1) Extract all line segments from the LRF data, 2) for each line segment, identify the type of end point, and 3) based on this classification, set the objective.

Line Extraction - Split and Merge

To extract the relative location of the walls from the ordered LRF data, the split and merge algorithm has been used. This algorithm determines a list of start and end points and/or indices from a set of points in Cartesian coordinates. The procedure is as follows:

- 1. Transform the LRF data from Polar coordinates to Cartesian coordinates.

- 2. Initially, assume there is one line segment, i.e., two vertices.

- 3. Loop over all line segments.

- 4. Assume a straight line between the start point and the end point of a line segment.

- 5. Compute the minimum distance of within a line segment to this line.

- 6. If the maximum distance exceeds the threshold of 0.2 m, the point corresponding to this maximum distance will become a new vertex.

- 7. Repeat steps 3.-6. until no new line segment is found.

An improvement, which is not implemented for the escape room challenge, is to afterwards perform a least square fit for each line segment to improve the accuracy of the wall localization.

Corner Classification

A set of vertices of start and end points is not yet useful without classifying the type of endpoint/corner. A distinction is made between 1) an inside corner, i.e., corner in the room, 2) an outside corner, i.e., corner connecting the room to the corridor, and 3) an endpoint, i.e., point which is not part of a corner. The type conditions are the following, note that the conditions are checked in the order they are presented:

- 1. End point: the vertex is labeled as an end point if the distance between the vertex itself and its direct neighbours exceeds the threshold of 0.2 m.

- 2. Inside corner: the vertex is labeled as an inside corner if r2 > r1 and r2 > r3, where r1 is the previous vertex, r2 the currect vertex, and r3 the next vertex in the list of start and end points.

- 3. Outside corner: the vertex is labeled as an inside corner if r2 < r1 and r2 < r3, where r1 is the previous vertex, r2 the currect vertex, and r3 the next vertex in the list of start and end points.

Exit Detection

Potential Field

A full potential field was implemented. The laser measurements were converted to cartesian coordinates with pico as the origin. All laser measurements with a distance of less than 0.5 meters to pico are used to generate a repulsion field. This is done by taking the vector from pico to each measured point, reversing its direction, and using its length to determine a length for the new vector. The new length is determined such that the new vector is longer if pico is closer to a measured point and infinite if the measured point has a distance of less than 0.1 to pico. All vectors are added together to obtain the repulsion vector. The coordinates of the goal are used as an attraction vector. The repulsion and attraction vector are multiplied by a weight that can be used to determine which is more relevant. The repulsion vector and the attraction vector are summed to obtain the potential field's direction and magnitude at a specific point in pico's spacetime. Using the weights the potential field is implemented as follows: The repulsion vector is only used to actuate the holonomic base and the rotation and forward velocity are only actuated by the attraction vector.

Back-up method

In order to ensure that at least one of the two allowed attempts of the escape room competition is successful, a back-up algorithm was also designed. The goal of this back-up algorithm was to be less risky, while being more reliable. At the same time, because the algorithm is less risky, the total needed time to complete the escape room challenge is also longer.

For the back-up method, a wall following approach has been chosen. When the algorithm is executed, the robot drives forward until it encounters a wall. It detects this wall, using the laser range finder. When the laser range finders detects data points closer to the robot than its set threshold, it classifies the scanned object as a wall. When this wall is detected in front of the robot, the robot turns 90 degrees clockwise and precedes driving forwards, keeping the distance to the wall right from it at the set threshold value. The robot continuous doing this, until it reaches a new wall, at which point the robot turns 90 degrees clockwise again, or until the robot reaches the end of a wall. The end of the wall is again detected using the laser range finder and is recognized based on the fact that the set threshold of the distance from the robot towards the point right from the robot is no longer met. When the end of a wall is detected, this means that the robot has located the corridor. Therefore, the robot turns 90 degrees counter-clockwise, entering the corridor. In this corridor, the robot again keeps its distance to the wall right from it on the set threshold and precedes driving forwards, until it has finished the challenge.

In case the robot classifies a data point from the laser range finder as being closer to itself than the radius of the robot, this point is disregarded, in order to prevent it from influencing the behavior of the robot.

Evaluation and Reflection

During the escape room challenge, the robot behavior was as expected. The feature recognition is designed to localize convex corners and end points of lines, on which the location of the door was based. During the escaperoom challenge the robot first moved to the far end of the room, this was caused by the fact that the wall on the far end of the room was too far away to be recognized by the laser range finder. Because of this, two end points where localized by the feature recognition algorithm, making the robot think it has found the exit and drive towards the goal set by the objective calculation. Once the robot arrived at the dead end at the end of the room, it rotated and re-localized a new goal, this time at the correct exit. The robot then managed to drive towards the correct goal and finish the escaperoom challenge well within time.

The robot could have finished sooner with a more robust feature recognition algorithm. For example, a threshold could have been set on the maximum allowed distance between convex corners and line end points, this may have avoided that the robot first drove towards the wall on the far end of the room. The robot would then take more time to rotate and find two points which are within this threshold, improving the localization of the exit. Which ultimately makes the process of exiting the escaperoom more efficient.