PRE2019 4 Group1: Difference between revisions


Revision as of 08:23, 23 May 2020

CALL-E

We present an in-house assistant to help those in self-isolation through tough times. The assistant will be called CALL-E (Crisis Assistant Leveraging Learning to Exercise).

Group members

| Name | Student ID | Department |

|---|---|---|

| Bryan Buster | 1261606 | Psychology and Technology |

| Edyta W. Koper | 1281917 | Psychology and Technology |

| Sietze Gelderloos | 1242663 | Computer Science and Engineering |

| Matthijs Logemann | 1247832 | Computer Science and Engineering |

| Bart Wesselink | 1251554 | Computer Science and Engineering |

Problem statement and objectives

With about one-third of the world’s population living under some form of quarantine due to the COVID-19 outbreak [1], scientists sound the alarm on the negative psychological effects of the current situation [2]. Studies on the impact of massive self-isolation in the past, such as in Canada and China in 2003 during the SARS outbreak or in West African countries during the Ebola outbreak in 2014, have shown that the psychological side effects of quarantine can be observed several months or even years after an epidemic is contained [3]. Among others, prolonged self-isolation may lead to a higher risk of depression, anxiety, poor concentration and lowered motivation [2]. The negative effects on well-being can be mitigated by introducing measures that help people adapt to the new situation during the quarantine. Such measures should aim at reducing boredom (1), improving communication within a social network (2) and keeping people informed (3) [2]. With the following project, we propose an in-house assistant that addresses these three objectives. Our target group is elderly people who need to stay home in order to practice social distancing.

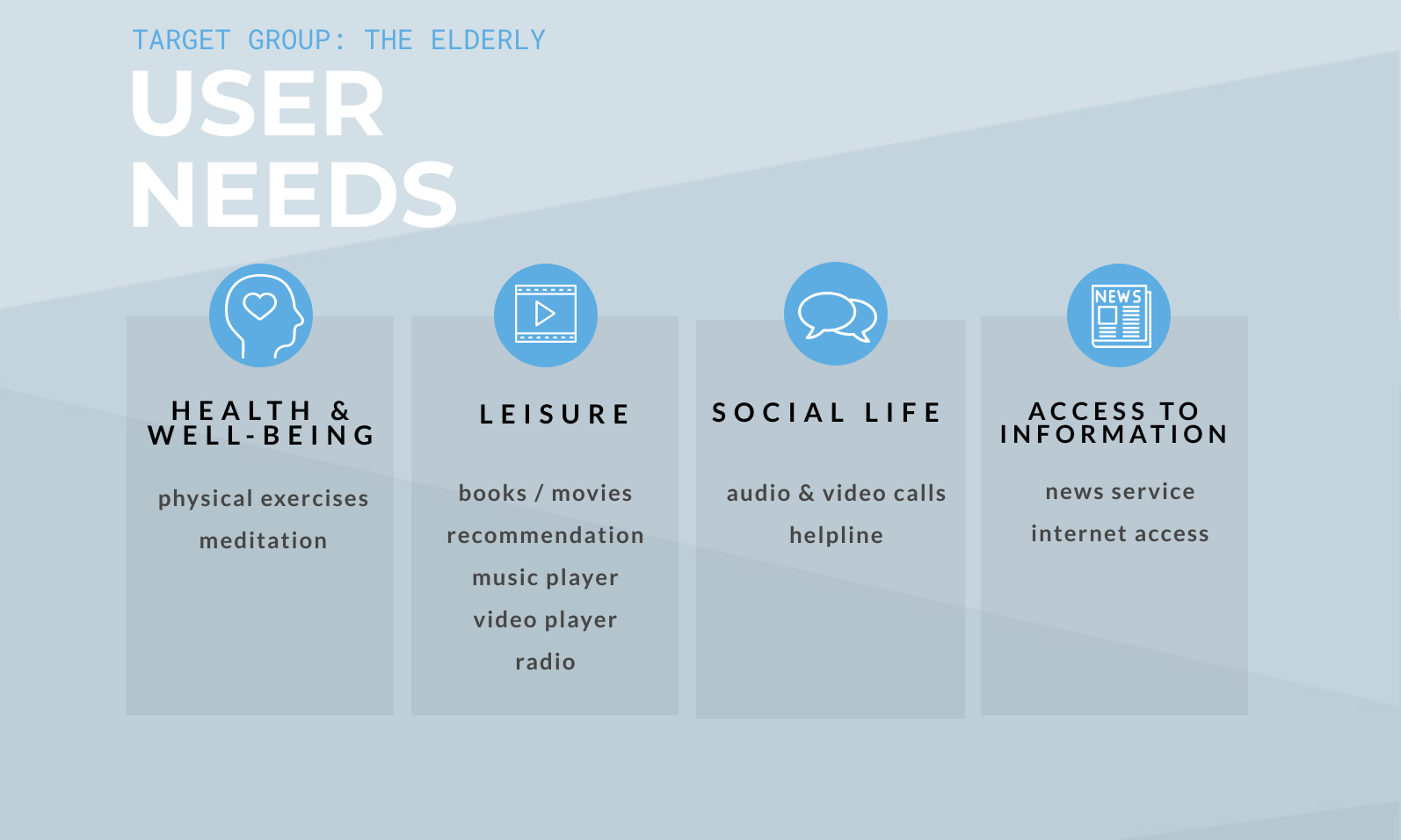

User needs

Having defined our main objectives, we can further specify the assistant's functionalities, which are tailored to our target group. In order to facilitate the accommodation of the assistant, we have focused on users' daily life activities. Research has shown that the elderly most often engage in activities such as reading books, watching TV/DVDs/videos, socialising and physical exercises[4]. Moreover, as we do not want to expose our prospective users to unnecessary stress attributed to the introduction of new technology, we have examined the use of computers and the Internet. It has been found that the most common computer uses among the elderly are: (1) Communication and social support (email, instant messaging, online forums); (2) Leisure and entertainment (activities related to offline hobbies); (3) Information seeking (health and education); (4) Productivity (mental stimulation: games, quizzes)[5]. Additionally, we have added two extra activities: meditation and listening to music, as they have been shown to bring significant social and emotional benefits for the elderly in social isolation[6][7].

Considering our objectives and findings from the literature, we have identified four users' needs and proposed features for each category. The overview is presented below.

Approach

In order for us to tackle the problem as described in the problem statement, we will start with careful research on topics that require our attention, like how and where we can support the mental and physical health of people. From this research, we can create a solution consisting of different disciplines and techniques.

From there, we will start building an assistant that has the following features:

- Speech:

- Speech synthesis: being able to interact with an assistant via speech is a key part of the assistant, to tackle the loneliness problem. Using hardware microphones and pre-existing software, we can create a ‘living’ assistant.

- Speech recognition: being able to talk back to the assistant sparks up the conversation, and makes the assistant more human-like.

- Tracking: the robot has functionalities that enable it to look towards a human person when it is being activated.

- Exercising: to counter the lack of physical activities, the robot has functionalities that can prompt or motivate the user to be physically active, and to track the user’s activity.

- General information: the robot prompts the user to hear the latest news twice every day, once in the morning and once in the evening. Users can always request a new update through a voice command.

- Graphical User Interface: when the user is unable to talk to the assistant, there will be an option to interact with a graphical user interface, displayed on a touch screen.

- Vision: skeleton tracking and facial detection. This data is used to count jumping jacks and to look at the person the assistant is talking to. (Closely linked with navigation)
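To illustrate how skeleton data could be turned into a jumping-jack count, here is a minimal sketch. It assumes the tracker delivers the vertical positions of the head and both hands per frame; the `Pose` type, the field names and the "hands above head" criterion are our own illustrative assumptions, not the interface of any particular Kinect library.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    """Vertical joint positions in metres, y pointing up (assumed convention)."""
    head_y: float
    left_hand_y: float
    right_hand_y: float


class JumpingJackCounter:
    """Counts one repetition each time both hands rise above the head and drop again."""

    def __init__(self):
        self.count = 0
        self._arms_up = False

    def update(self, pose: Pose) -> int:
        hands_above = pose.left_hand_y > pose.head_y and pose.right_hand_y > pose.head_y
        hands_below = pose.left_hand_y < pose.head_y and pose.right_hand_y < pose.head_y
        if hands_above and not self._arms_up:
            # First frame of the "arms up" phase.
            self._arms_up = True
        elif hands_below and self._arms_up:
            # Arms came back down: one full repetition completed.
            self._arms_up = False
            self.count += 1
        return self.count
```

Feeding `update` one pose per camera frame keeps the count current; a real implementation would probably also check leg spread and smooth noisy joint positions.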

Using different feature sets allows our team members to (partly) work individually, speeding up the development process in the difficult time that we are in right now. The parts should be tested together, not only at the end but also while building. This can be done by scheduling meetings with the person responsible for building the physical robot. The team members can share code via a platform like GitHub, from which it can then be uploaded onto the robot, allowing members to test their part.

When all parts work together, a video will be created, highlighting all bot functionalities.

The features

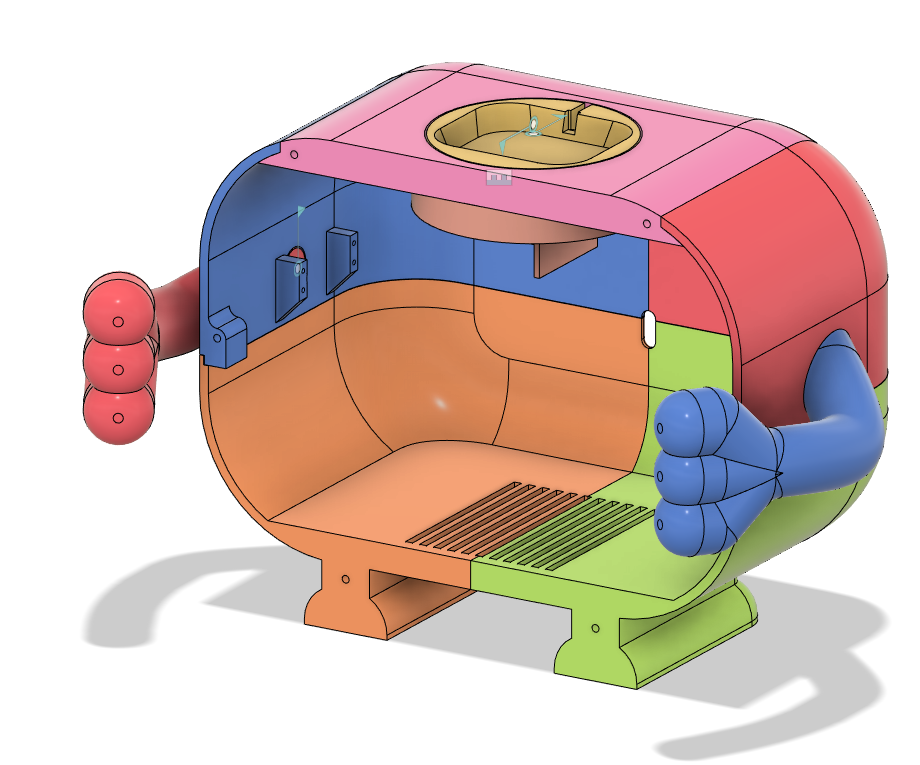

Physical design

Will be formatted nicer in the future. For now, download a turntable view of the preliminary design here.

Physical exercise

Meditation

Books/Movies recommendation

Music Player

Video Player

Radio

Audio & video calls

Helpline

Newsservice

Internet access

Deliverables

By the end of the project, we will deliver the following items:

- Project Description (report via wiki)

- Project Video demonstrating functionalities

- Physical assistant

- Source code

Milestones

| Week | Deliverable |

|---|---|

| 1 (April 20th till April 26th) | Problem statement, think of a concept |

| 2 (April 27th till May 3rd) | Research of SotA |

| 3 (May 4th till May 10th) | Further research, list of required components |

| 4 (May 11th till May 17th) | Power delivery finished, performed all research and acquire components/software for construction |

| 5 (May 18th till May 24th) | Finish physical construction |

| 6 (May 25th till May 31st) | Finish software |

| 7 (June 1st till June 7th) | Finish integration and test with the prospective user |

| 8 (June 8th till June 14th) | Create a presentation, finish Wiki |

| 9 (June 15th till June 21st) | Presentation, on Wednesday |

State of the Art

Indoor localisation has become a highly researched topic over the past years. A robust and compute-efficient solution is important for several fields of robotics. Whether the application is an automated guided vehicle (AGV) in a distribution warehouse or a vacuum robot, the problem is essentially the same. Let us take these two examples and find out how state-of-the-art examples solve this problem.

Logistics AGVs

AGVs for use in warehouses are typically designed solely for use inside a warehouse. Time and money can be invested to provide the AGV with an accurate map of its surroundings before deployment. The warehouse is also custom-built, so fiducial markers [8] are less intrusive than they would be when placed in a home environment. These fiducial markers allow Amazon’s warehouse AGVs to locate themselves in space very accurately. Knowing their location, Amazon’s AGVs find their way around warehouses as follows. They send a route request to a centralised system, which takes into account other AGVs’ paths in order to generate an efficient path, and commands the AGV exactly which path to take. The AGV then traverses the grid marked out with floor-mounted fiducial markers. [9] The AGVs don’t blindly follow this path, however, as they keep on the lookout for any unexpected objects on their path. The system described in Amazon’s relevant patents[8][9][10][11] also includes elegant solutions to detect and resolve possible collisions, and even the notion of dedicated larger cells in the ground grid, used by AGVs to be able to make a turn at elevated speeds.

Robotic vacuum cleaners

A closer-to-home example can be found in iRobot’s implementation of VSLAM (Visual Simultaneous Localisation And Mapping) [12] in their robotic vacuum cleaners. SLAM is a family of algorithms intended for vehicles, robots and (3D) scanning equipment to both localise themselves in space and to augment the existing map of their surroundings with new sensor-derived information. The patent[12] involving an implementation of VSLAM by iRobot describes a SLAM variant which uses two cameras to collect information about its surroundings. It uses this information to build an accurate map of its environment, in order to cleverly plan a path to efficiently clean all floor surfaces it can reach. The patent also includes mechanisms to detect smudges or scratches on the lenses of the cameras, and notify the owner of this fact. Other solutions for gathering environmental information for robotic vacuum cleaners use a planar LiDAR sensor to gather information about the boundaries of the floor surface. [13]

SLAM algorithms

This is the point in research about SLAM that had us rethink our priorities. When reading daunting papers about approaches to solve SLAM [14], we were both intrigued and overwhelmed at the same time. While it seems a very interesting field to step into, it promised to consume too large of a portion of our resources during this quartile for us to justify an attempt to do so. Furthermore, we discussed that having our assistant be mobile at all does not directly serve a user need. Thus we decided to leave this out of the scope of the project.

Human - robot interaction

Socially assistive robotics

Socially assistive robots (SARs) are defined as an intersection of assistive robots and socially interactive robots [15]. The main goal of SARs is to provide assistance through social interaction with a user. The human-robot interaction is not created for the sake of interaction (as is the case for socially interactive robots), but rather to effectively engage users in all sorts of activities (e.g. exercising, planning, studying). External encouragement, which can be provided by SARs, has been shown to boost performance and help to form behavioural patterns [15]. Moreover, SARs have been found to positively influence users’ experience and motivation [16]. Additionally, due to limited human-robot physical contact, SARs have lower safety risks and can be tested extensively. Ideally, SARs should not require additional training and should be flexible when it comes to the user’s changing routines and demands. SARs do not necessarily have to be embodied; however, embodiment may help in creating social interaction [15] and may increase motivation [16].

Social behaviour of artificial agents

Robots that are intended to interact with people have to be able to observe and interpret what a person is doing and then behave accordingly. Humans convey a lot of information through nonverbal behavior (e.g. facial expression or gaze patterns), which in many cases is difficult to encode by artificial agents. This fact can be compensated by relying on other cues such as gestures or position and orientation of interaction [17]. Moreover, human interaction seems to have a given structure that can be used by an agent to break down human behavior and organize its own actions.

Voice recognition

Robots that interact with humans need some form of communication. For the robot we are designing, one of the means of communication will be audio. State-of-the-art voice recognition systems use neural networks[18] together with natural language processing to process the input and to learn the voice of the user over time. An example of such a system is the Microsoft 2017 conversational speech recognition system.[19] The capabilities of such systems are currently limited to understanding literal sentences, and thus their use will be limited to recognizing voice commands.

Speech Synthesis

To allow the robot to respond to the user, a text-to-speech system will be used. Examples of such systems that are based on deep neural networks include Tacotron[20], Tacotron2[21] and Deep Voice 3[22]. The current state of the art uses end-to-end systems that are trained on huge data sets of (English) voices to create a human-like voice. Such a system can then be commanded to generate speech that is not limited to a previously set vocabulary. These systems are not all equally robust, and thus newer systems focus on making the already high-performing approach more robust, an example being FastSpeech[23].

Attracting attention of users

Attracting the attention of the user is the prerequisite of an interaction. There are multiple ways to grab this attention, for example touching, moving around, making eye contact, flashing lights and speaking. Research shows that when the user is watching TV, speaking and waving are the best options to attract attention while making sure that people consider the robot grabbing attention to be a positive experience. [24] Trying to establish eye contact during this experiment did not have positive results; eye contact should therefore not be used to acquire attention, but rather to maintain it.

Ethical Responsibility

The robot that we are designing will be interacting with people, specifically the elderly in this case. This raises the question of which tasks we can let a robot perform without causing the user to lose human contact. Several research papers establish an ethical framework for this [25] [26].

Next to that, research has also been conducted on what to expect in a relationship with a robot, which is what the elderly will engage in[27][28]. If we want the robot to persuade the user to engage in physical activities, it is important that we look at how it can be persuasive [29].

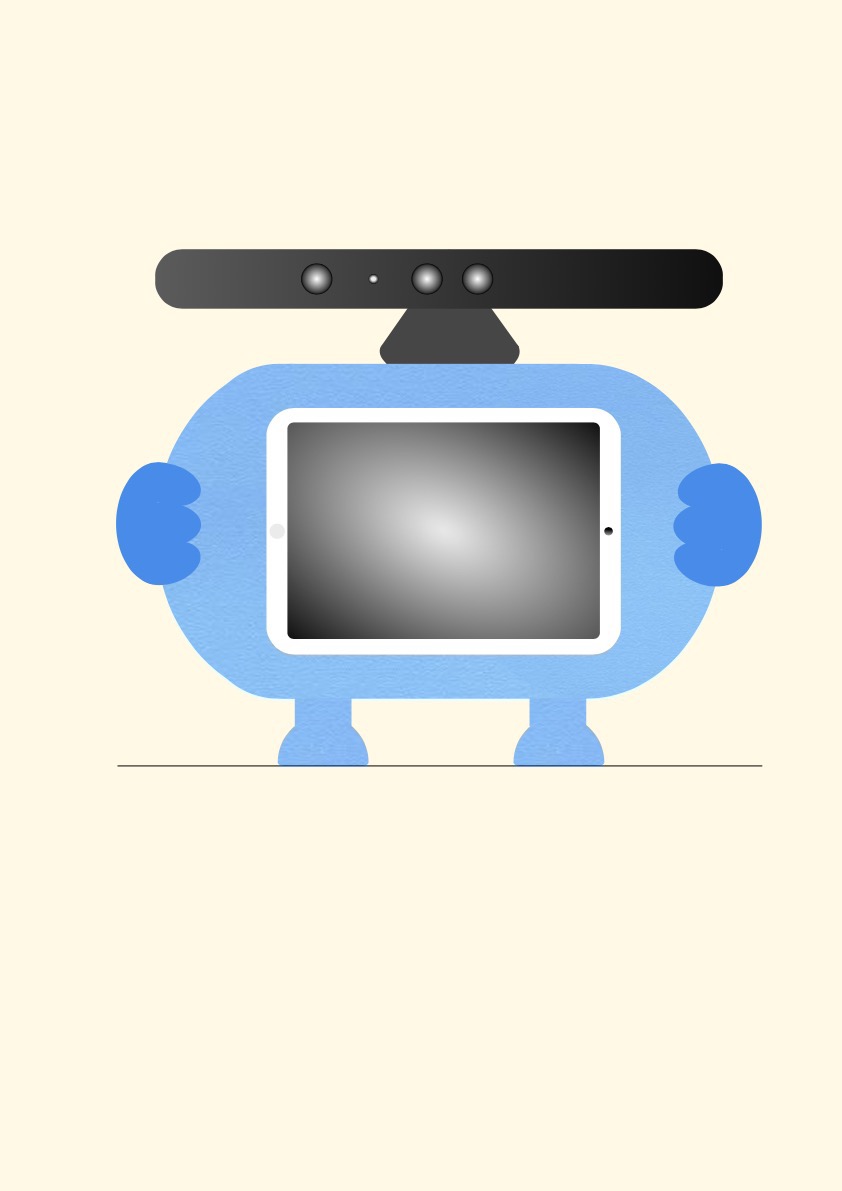

The design

Physical appearance and motivation

The main building component of CALL-E is a torso with a mounted iPad and a Kinect placed on top. The legs and hands are added to increase the friendliness of the assistant. The only moving part is the Kinect, which in the given configuration serves as a head with eyes, and can both pan (using a servo) and tilt (using the Kinect's internal tilt motor). Having only one movable component aims at maintaining a balance between the complexity of the design and its physical attractiveness. We decided that making other parts able to move would add too much complexity to the mechanical design, and we figured that any more movement is unnecessary. The main body of CALL-E is 3D printed and the whole assistant is relatively portable (size…, weight…), which makes it easy and comfortable to use in many places in a house. The assistant combines functionalities that are normally offered by separate devices. It allows the elderly to enjoy the range of activities without the burden of learning to navigate through several systems. On top of that, CALL-E is designed in the vein of socially assistive robotics, which we hope will improve users’ experience and motivation. We believe that all these details make CALL-E a valuable addition to the daily life of the elderly.

Interface

The interface is designed to facilitate user experience and accessibility. To better address user needs, the design process was based on research and already existing solutions. The minimalistic design aims at preventing cognitive overload in older adults [30]. The dominant colors are soft pastels. Research has shown that the elderly find some combinations of colors more pleasant than others. In particular, soft pinky-beiges contrasted with soft blue/greens were indicated as soothing and peaceful [31]. To avoid unnecessary distraction, the screen background is homochromous, and the icons have simple, unambiguous shapes. The font across the tabs is consistent, with increased spacing between letters [32]. The design includes tips for functionality, such as “Swipe up to begin”. The user can adjust the text and button size [33].

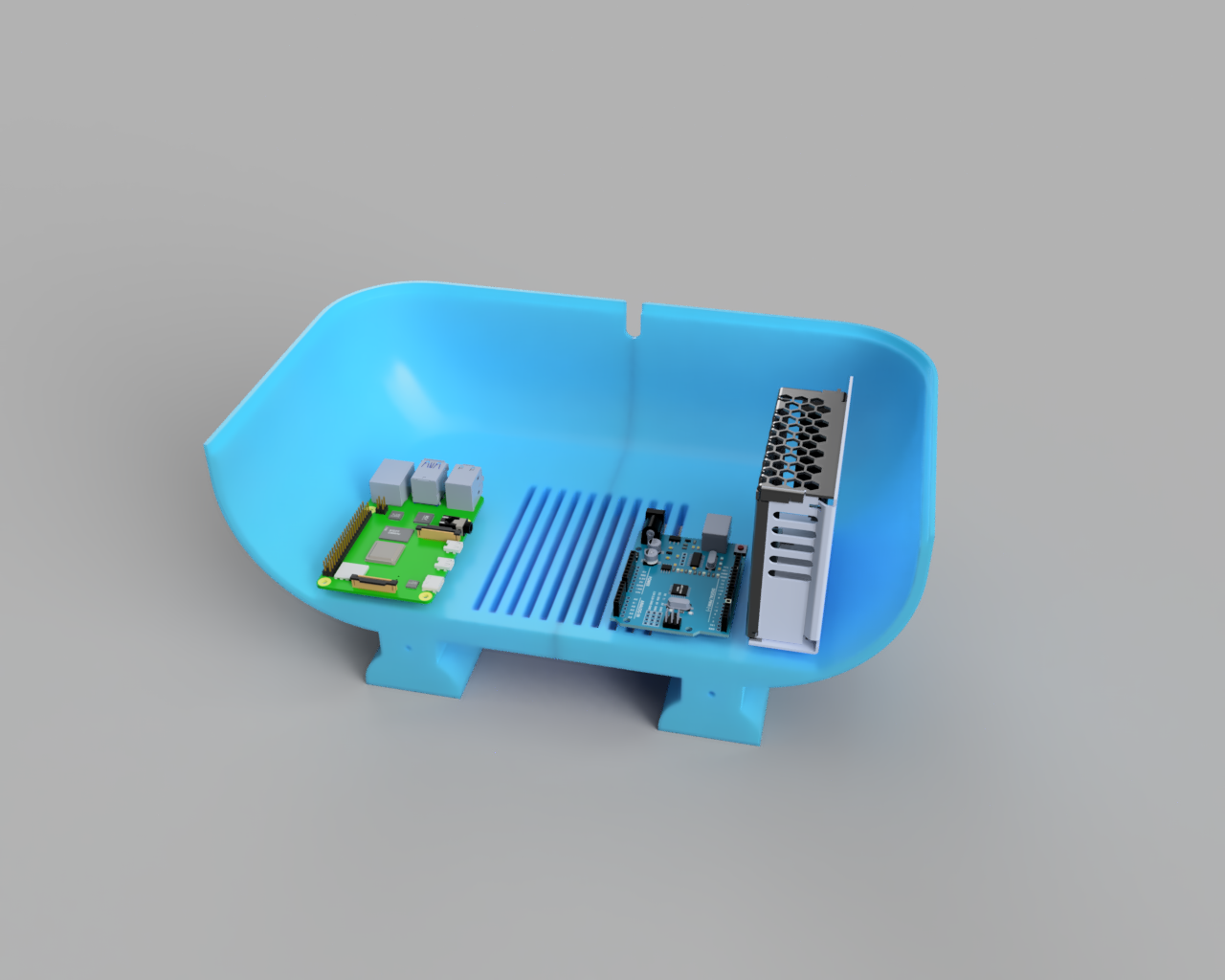

Hardware

Raspberry Pi

The Raspberry Pi was chosen for its computational capabilities and will be used for the computations that CALL-E will need for its features like voice recognition, speech synthesis, visual processing and decision making. Current plans will place the Raspberry Pi away from the Power supply as can be seen in 'Unrestricted access to electronics'. This is to optimise the heat flow as overheating the Pi could cause issues.

Raspberry Pi Fan

The current-generation Raspberry Pi that will be used gets very hot, up to 75 degrees Celsius[34], and thus we added a fan to cool it. Other components may also be cooled by the fan if placement allows this. Since current plans do not require overclocking the Raspberry Pi, we do not have to go to the extreme of an ICE tower fan, but such options exist if higher clock rates are required.

Arduino

An Arduino was added because, even though we already have a Raspberry Pi, we needed a device that processes in real time. The Arduino will thus be used for tasks that the Raspberry Pi cannot handle in real time, like controlling the servos. The Arduino will be placed next to the power supply, away from the Raspberry Pi, to optimise the heat flow even more.

Servos

Two servomotors, also called servos, will be used: the first to rotate the Kinect and the second to move the right arm of CALL-E. Their movement will be controlled by the Arduino, as mentioned earlier.

Power Supply

The last part is the power supply. Its task is first to supply power and secondly to act as an AC/DC converter. This is required because the Arduino and the Raspberry Pi run on DC and at a lower voltage than most outlets supply. The power supply is placed away from the Pi, once more because of heat flow.

Kinect

The main purpose of Kinect is to track the user’s movement and detect faces. Moreover, as mentioned above, Kinect serves the role of the assistant’s head which can turn to look towards a person. This property is employed to facilitate the interaction with the user and make the assistant look more appealing.
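As a rough sketch of how the head could turn towards a detected face, the horizontal pixel position of the face can be converted into a pan correction for the servo. The field of view (57 degrees), the image width (640 pixels), the servo range, the gain and the sign convention are all assumptions made for illustration; they depend on the camera mode and on how the servo is mounted.

```python
import math

KINECT_H_FOV_DEG = 57.0  # assumed horizontal field of view of the camera
IMAGE_WIDTH_PX = 640     # assumed image width


def pan_correction_deg(face_x_px, image_width=IMAGE_WIDTH_PX, fov_deg=KINECT_H_FOV_DEG):
    """Angle in degrees between the camera axis and the face.

    Positive means the face is to the right of the image centre.
    """
    half_width = image_width / 2
    # Focal length in pixels, derived from the pinhole camera model.
    focal_px = half_width / math.tan(math.radians(fov_deg / 2))
    return math.degrees(math.atan2(face_x_px - half_width, focal_px))


def next_servo_angle(current_deg, face_x_px, gain=0.5, min_deg=0.0, max_deg=180.0):
    """Move a fraction (gain) of the way towards the face, clamped to the servo range."""
    target = current_deg + gain * pan_correction_deg(face_x_px)
    return max(min_deg, min(max_deg, target))
```

Using a gain below 1 makes the head approach the face over several frames, which tends to look smoother and avoids overshooting when the detection is noisy.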

iPad

The advantage of using an iPad is that there are already some built-in functionalities that can be used for developing the assistant. Given the time constraints of the project, deployment of the already existing solutions seems both attractive and reasonable. With an iPad as a design component, CALL-E is equipped with a screen and speakers. An iPad is mainly used as an input device and to convey information when the user prefers visual display over voice communication. Additionally, the screen is used during other activities, such as video calls, websites browsing and video playing.

Mechanical considerations

Though not user-centered, the physical construction of CALL-E needed to be ergonomic for whoever might assemble it, even though we are simply making a prototype.

Designing for 3D printing

The parts we are not buying will need to be 3D printed. While 3D printing might be considered magic by machinists, it certainly has its limits, and it brings along a plethora of new requirements on the actual shape of our parts. Firstly, we need to take into account the build volume of the printer. Since CALL-E is taller, wider and deeper than the printer we are using can produce, we need to split it into several parts. This split also needs to take into account the tolerances of parts fitting together, and further printability. We will not go into the reasons behind the split displayed here. Every printed part has a preferred print orientation, chosen for what we believe is the best possible combination of bed adhesion and least negative draft.

Mechanical highlights

Serviceability

CALL-E will be easily serviceable, which is very convenient when working on a prototype. After removing the six screws holding on the front of CALL-E, this front can be taken out.

The top, which was previously contained by a 3D printed ledge interlocking the two halves of the main body, can now be lifted out to provide unrestricted access to the internal electronics. This step also opens up the rear slot used to route power cables through. This slot could even be used to route any additional cables to the Raspberry Pi, say for attaching a monitor or other peripherals for debugging purposes.

3D printed bearing surfaces

The Kinect has an internal motor which we will use to tilt the 'head' of CALL-E up and down. Since we want CALL-E to be able to really look at the user, we needed another axis of rotation; pan. We will be using one of the servo's included so-called 'horns', as it features the exact spline needed to properly connect to the servo. This spline has such tight features and tolerances that we prefer to attach our 3D prints to this horn, rather than to the servo directly. We cannot reliably 3D print this horn's spline. This horn will be screwed into a 3D printed base in which the Kinect's base sits. This base will be printed with a concentric bottom layer pattern, and so will the surface this base rests on. This combination makes for two surfaces that experience very little friction when rotating, even when a significant normal force is applied. In our case, this normal force will be applied by the (cantilevered) weight of the Kinect. The movable arm features a slightly different approach, since the bearing surface in that case is cylindrical rather than planar. This allowed for the arm to bear directly into the shell of the main body, without the need to add much complexity to the already difficult to manufacture body shell.

Software

Voice control

We will be using Mycroft [citation needed] as our platform for voice control. In order to set our own 'wake word', and have CALL-E start listening to the user as soon as he or she calls its name, we used PocketSphinx [citation needed]. We translated the phrase "Hey CALL-E" into phonemes using the phoneme set used in the CMU Pronouncing Dictionary [citation needed]. The phrase becomes: 'HH EY . K AE L . IY .' The periods indicate pauses in speech, or generally whitespace.
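The translation into phonemes can be sketched as a simple lookup. The small `PRONUNCIATIONS` table below is a hypothetical stand-in for a real pronunciation dictionary; its entries simply reproduce the transcription given above and were not looked up in the CMU dictionary itself.

```python
# Hypothetical mini-dictionary; entries reproduce the transcription from the text.
PRONUNCIATIONS = {
    "hey": ["HH", "EY"],
    "call": ["K", "AE", "L"],
    "e": ["IY"],
}


def to_wake_word_phonemes(phrase):
    """Turn a phrase into a phoneme string, closing every word with a period."""
    # Hyphens separate spoken words, so "CALL-E" is treated as "call" + "e".
    words = phrase.lower().replace("-", " ").split()
    return " ".join(" ".join(PRONUNCIATIONS[word]) + " ." for word in words)


print(to_wake_word_phonemes("Hey CALL-E"))  # HH EY . K AE L . IY .
```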

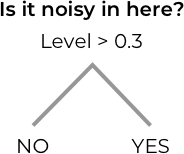

Decisions

The communication with our robot is two-way, meaning that either the user starts the interaction, or the robot starts the interaction with the user (to suggest exercising, for example). The latter process is driven by a decision tree [35]. A decision tree is a tree of conditions: starting at the root, each condition on the input determines which branch to follow, until an outcome is reached. So, the decision tree has several input features, and one output.

A decision tree is trained by determining which choices give the most information. The features that we collected were:

- Average noise level over the past 60 minutes

- Current noise level

- Time of day

- Time since the last exercise

- Time since the last call

- Action (call, exercise, play news or do nothing) (label)
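The idea of "choices that give the most information" is commonly formalised as information gain: the drop in label entropy obtained by splitting the data on one feature. The following is a minimal sketch of that criterion; the feature names and example values are invented for illustration, and the training program we used may apply a different criterion.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, feature, threshold):
    """Entropy reduction from splitting on rows[i][feature] <= threshold."""
    left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
    right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
    if not left or not right:
        return 0.0  # the split separates nothing
    weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(labels)
    return entropy(labels) - weighted


# Invented example data on a normalised 0 to 1 scale.
rows = [
    {"time_since_exercise": 0.9, "current_noise": 0.2},
    {"time_since_exercise": 0.8, "current_noise": 0.7},
    {"time_since_exercise": 0.1, "current_noise": 0.3},
    {"time_since_exercise": 0.2, "current_noise": 0.8},
]
labels = ["exercise", "exercise", "do nothing", "do nothing"]
```

In this toy data, splitting on `time_since_exercise` at 0.5 separates the two actions perfectly, while splitting on `current_noise` at 0.5 tells us nothing, so a tree learner would pick the former as its first condition.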

Based on these inputs, we generated a random dataset (without labels). Because of the current (corona) circumstances, it is not possible for us to gather the data ourselves using the sensors. To circumvent this problem, we scaled all the features to a normalised scale (e.g. from 0 to 1), which enables us to train the decision tree on random data. After the random data had been generated, we labeled it with what we think the robot should do. Using a training program, a decision tree was then generated that can run in the background while our robot is active: it now has brains!
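The data generation and labelling steps can be sketched as follows. The feature names, the raw value ranges and especially the thresholds in `label` are illustrative assumptions; they are not the rules we actually applied when labelling.

```python
import random

FEATURES = ["avg_noise_60min", "current_noise", "time_of_day",
            "time_since_exercise", "time_since_call"]
ACTIONS = ["call", "exercise", "play news", "do nothing"]


def min_max_scale(value, low, high):
    """Map a raw sensor value onto the 0 to 1 scale, clamping out-of-range input."""
    scaled = (value - low) / (high - low)
    return max(0.0, min(1.0, scaled))


def random_sample(rng):
    """One unlabelled sample with every feature drawn uniformly from [0, 1)."""
    return {name: rng.random() for name in FEATURES}


def label(sample):
    """Hand-written labelling rule with assumed, illustrative thresholds."""
    if sample["current_noise"] > 0.7:
        return "do nothing"  # the user seems to be busy
    if sample["time_since_exercise"] > 0.8:
        return "exercise"
    if sample["time_since_call"] > 0.8:
        return "call"
    if abs(sample["time_of_day"] - 0.35) < 0.02 or abs(sample["time_of_day"] - 0.8) < 0.02:
        return "play news"  # assumed morning and evening news slots
    return "do nothing"


def generate_dataset(size, seed=0):
    """Generate random samples first, then attach a label to each of them."""
    rng = random.Random(seed)
    samples = [random_sample(rng) for _ in range(size)]
    return [(sample, label(sample)) for sample in samples]
```

Because real sensor readings would pass through `min_max_scale` before reaching the tree, a tree trained on such synthetic data sees inputs in the same range later on. The labelled pairs can be handed to any decision-tree learner, for example scikit-learn's `DecisionTreeClassifier`.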

System Overview Diagram

References

- ↑ Infographic: What Share of the World Population Is Already on COVID-19 Lockdown? Buchholz, K. & Richter, F. (April 3, 2020)

- ↑ 2.0 2.1 2.2 Psychological Impact of Quarantine and How to Reduce It: Rapid Review of the Evidence Brooks, S. K., Webster, R. K., Smith, L. E., Woodland, L., Wessely, S., Greenberg, N., & Rubin, G. J. (2020)

- ↑ Depression after exposure to stressful events: lessons learned from the severe acute respiratory syndrome epidemic. Comprehensive Psychiatry, 53(1), 15–23 Liu, X., Kakade, M., Fuller, C. J., Fan, B., Fang, Y., Kong, J., Wu, P. (2012)

- ↑ Leisure Participation and Enjoyment Among the Elderly: Individual Characteristics and Sociability. Chen & Fu (2008). https://www.researchgate.net/profile/Su_Yen_Chen/publication/233269125_Leisure_Participation_and_Enjoyment_Among_the_Elderly_Individual_Characteristics_and_Sociability/links/5689f04908ae1975839ac426.pdf

- ↑ Computer use by older adults: A multi-disciplinary review. Wagner, Hassanein & Head (2010). https://www.sciencedirect.com/science/article/pii/S0747563210000695

- ↑ Integrative Review of Research Related to Meditation, Spirituality, and the Elderly. Lindberg (2005). https://www.sciencedirect.com/science/article/pii/S0197457205003794

- ↑ Uses of Music and Psychological Well-Being Among the Elderly. Laukka (2006). https://link.springer.com/article/10.1007/s10902-006-9024-3

- ↑ 8.0 8.1 US20160334799A1: Method and System for Transporting Inventory Items, Amazon Technologies Inc, Amazon Robotics LLC. (Nov 17, 2016) Retrieved April 26, 2020

- ↑ 9.0 9.1 CA2654260: System and Method for Generating a Path for a Mobile Drive Unit, Amazon Technologies Inc. (November 27, 2012) Retrieved April 26, 2020.

- ↑ US8220710B2: System and Method for Positioning a Mobile Drive Unit, Amazon Technologies Inc. (July 17, 2012) Retrieved April 26, 2020.

- ↑ US20130302132A1: System and Method for Maneuvering a Mobile Drive Unit, Amazon Technologies Inc. (Nov 14, 2013) Retrieved April 26, 2020.

- ↑ 12.0 12.1 US10222805B2: Systems and Methods for Performing Simultaneous Localization and Mapping using Machine Vision Systems, Irobot Corp. (March 5, 2019) Retrieved April 26, 2020.

- ↑ US10162359B2: Autonomous Coverage Robot, Irobot Corp. (Dec 25, 2018) Retrieved April 26, 2020.

- ↑ FastSLAM: A Factored Solution to the Simultaneous Localization and Mapping Problem, M. Montemerlo et al.

- ↑ 15.0 15.1 15.2 Defining socially assistive robotics. Feil-Seifer D., (2005)

- ↑ 16.0 16.1 Attitudes Towards Socially Assistive Robots in In- telligent Homes: Results From Laboratory Studies and Field Trials. Torta, E., Oberzaucher, J., Werner, F., Cuijpers, R. H., & Juola, J. F. (2013)

- ↑ Etiquette: Structured Interaction in Humans and Robots. Ogden B. & Dautenhahn K. (2000)

- ↑ Amodei, D., Ananthanarayanan, S., Anubhai, R., Bai, J., Battenberg, E., Case, C., ... & Chen, J. (2016, June). Deep speech 2: End-to-end speech recognition in English and mandarin. In International conference on machine learning (pp. 173-182)

- ↑ Xiong, W., Wu, L., Alleva, F., Droppo, J., Huang, X., & Stolcke, A. (2018, April). The Microsoft 2017 conversational speech recognition system. In 2018 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 5934-5938). IEEE

- ↑ Yuxuan Wang, RJ Skerry-Ryan, Daisy Stanton, Yonghui Wu, Ron J Weiss, Navdeep Jaitly, Zongheng Yang, Ying Xiao, Zhifeng Chen, Samy Bengio, et al. Tacotron: Towards end-to-end speech synthesis. arXiv preprint arXiv:1703.10135, 2017

- ↑ Jonathan Shen, Ruoming Pang, Ron J Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, Rj Skerrv-Ryan, et al. Natural tts synthesis by conditioning wavenet on mel spectrogram predictions. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 4779–4783. IEEE, 2018.

- ↑ Wei Ping, Kainan Peng, Andrew Gibiansky, Sercan O. Arik, Ajay Kannan, Sharan Narang, Jonathan Raiman, and John Miller. Deep voice 3: 2000-speaker neural text-to-speech. In International Conference on Learning Representations, 2018.

- ↑ Ren, Y., Ruan, Y., Tan, X., Qin, T., Zhao, S., Zhao, Z., & Liu, T. Y. (2019). Fastspeech: Fast, robust and controllable text to speech. In Advances in Neural Information Processing Systems (pp. 3165-3174)

- ↑ Torta, E. (2014). Approaching independent living with robots. (pp. 89-100)

- ↑ Robot carers, ethics, and older people. Sorell T., Draper H., (2014)

- ↑ Socially Assistive Robotics. Feil-Seifer D., Mataric M., (2011). https://ieeexplore.ieee.org/document/5751968

- ↑ An Ethical Evaluation of Human-Robot Relationships. De Graaf M., (2016)

- ↑ Review: Seven Matters of Concern of Social Robots and Older People. Frennert S., (2014)

- ↑ Robotic Nudges: The Ethics of Engineering a More Socially Just Human Being. Borenstein J., (2016)

- ↑ Polyuk, Sergey. “A Guide to Interface Design for Older Adults.” Toptal Design Blog, Toptal, 20 June 2019, www.toptal.com/designers/ui/ui-design-for-older-adults.

- ↑ Colours for living and learning. (n.d.). Retrieved from https://www.resene.co.nz/homeown/use_colr/colours-for-living.htm

- ↑ Digital, Spire. “Accessible Design: Designing for the Elderly.” Medium, UX Planet, 28 Feb. 2019, uxplanet.org/accessible-design-designing-for-the-elderly-41704a375b5d

- ↑ Ollie (co-founder & CEO at Milanote). “Designing For The Elderly: Ways Older People Use Digital Technology Differently.” Smashing Magazine, 5 Feb. 2015, www.smashingmagazine.com/2015/02/designing-digital-technology-for-the-elderly/

- ↑ https://www.martinrowan.co.uk/2019/06/raspberry-pi-4-hot-new-release-too-hot-to-use-enclosed/

- ↑ Matching and Prediction on the Principle of Biological Classification. Belson W., (1959)

Image Kinect: https://upload.wikimedia.org/wikipedia/commons/thumb/6/67/Xbox-360-Kinect-Standalone.png/266px-Xbox-360-Kinect-Standalone.png

Image Servo: https://4.imimg.com/data4/AI/UT/MY-7759807/servo-motor-500x500.png

Image microphone: https://d2dfnis7z3ac76.cloudfront.net/shure_product_db/product_images/files/c4f/0d9/07-/thumb_transparent/c165d06247b520e9c87156f8322804a2.png

Image speakers: https://lh3.googleusercontent.com/proxy/W0IEorEMeX71Yi4WEW4CdYwa2Oi9BpnRL60v1ZT8EKelTDm8Tx-S8qQP3pivQIPlE8pdWb7pYhfVN-BAqsxv04WDVVVYcvT5aqmwFtMvOGeaDq28pE0qYUHb

Image iPad: https://www.powerchip.nl/6403-thickbox_default/ipad-wi-fi-128gb-2019-zilver.jpg