PRE2019 4 Group6

Revision as of 12:09, 7 May 2020

Group Members

| Name | Student ID | Study | Email |

|---|---|---|---|

| Coen Aarts | 0963485 | Computer Science | c.p.a.aarts@student.tue.nl |

| Max van IJsseldijk | 1325930 | Mechanical Engineering | m.j.c.b.v.ijsseldijk@student.tue.nl |

| Rick Mannien | 1014475 | Electrical Engineering | r.mannien@student.tue.nl |

| Venislav Varbanov | 1284401 | Computer Science | v.varbanov@student.tue.nl |

Introduction

%% TODO

Problem statement

For the past few decades, the elderly population has been increasing rapidly due to advances in healthcare education. This increase means that many more caretakers are needed to help these people through the day, not only physically but also mentally. Depression and loneliness are a real risk for many elderly people when there is not enough interaction with caretakers or family members. Many studies have demonstrated that the prevalence of depressive symptoms increases with age.[1] Furthermore, other studies have shown that the feeling of loneliness is one of the three major factors that lead to depression, suicide and suicide attempts.[2] It is therefore vital that there are enough caretakers to prevent this. Unfortunately, the shortage of caretakers is growing. To combat this, care robots are being developed quite extensively. These robots cannot yet replace the full physical help a real person can give, but they can offer some mental support by giving the elderly someone to talk to. This social interaction can include having a simple conversation with the person or routing a call to family members. To hold a more complicated conversation, the robot needs to understand more about the state of the person. Determining this state is very important, as humans are emotional creatures: if a robot could get a clue about how someone is feeling, this could greatly improve the interaction. While increasing the general intelligence of the robot would solve this issue, doing so proves to be very difficult. Therefore, only small steps in widening the robot's world view can be made.

Objective

The proposal for this project is to use real-time emotion detection to estimate how the person is feeling, so that the robot can use this information to help the person further. Reading emotions from the face will not be perfect, but neural networks have already produced some good results.[3] However, applying this emotion detection specifically to the elderly, in combination with a chatbot, has not been explored very deeply.

State of the Art

Previous Work

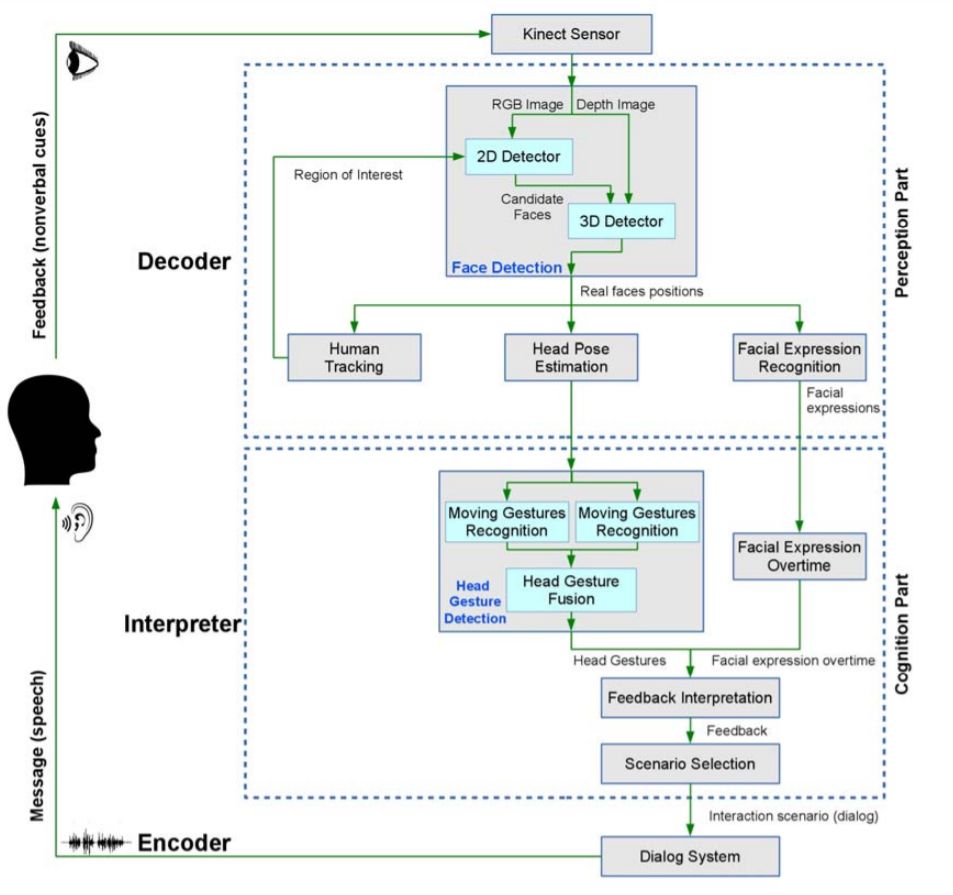

The facial recognition with emotion detection software has already been done before. One method that could be employed for facial recognition is done by the following block diagram:

This setup was proposed for a robot that interacts with a person based on the person's emotion. However, the verbal feedback was not implemented.

Limitations and issues

% TODO

USE Aspects

Users

Lonely elderly people are the main users of the robot. These people are reached through a government-funded institute, where applications for such a robot can be submitted. An application can be filed by anyone (the elderly themselves, friends, psychiatrists, family, caretakers), provided the elderly person consents. In cases where the health of the elderly person is in danger due to illness (for example, final-stage dementia), the consent of the elderly person is not necessary if the application is filed by a doctor or psychiatrist. If applicable, a caretaker or employee of the institute will visit the elderly person to check whether the care robot is really necessary or whether a different solution can be found. If the robot is found suitable, the elderly person will be assigned one. They will interact with the robot daily by talking to it, and the robot will build an emotional profile of the person, which it uses to help the person through the day. When the robot detects certain negative emotions (e.g. sadness or anger), it can ask whether it can help with various actions such as calling family, friends or real caretakers, or having a simple conversation with the person.

Society

Society comprises three main stakeholders: the government, medical assistance and visitors. The government is the primary funder of the institute that regulates the distribution of the emotion-detecting robots. This set-up makes it easier to regulate distribution, which matters because real-time emotion detection is a considerable intrusion on privacy. Furthermore, the government is responsible for making laws that regulate what the robots may do, and how and what data may be sent to family members or third parties. Secondly, the robots may deliver feedback to hospitals or therapists in cases of severe depression or other negative symptoms that cannot be solved by a simple conversation. The elderly person, for whom autonomy remains the primary value, must always give consent before data is shared or certain people are called. For people with severe illnesses, this can be overruled by doctors or psychiatrists to force the person to get help in an emergency. Finally, any visitor may be indirectly exposed to the robot. To ensure that visitors' emotions are not measured against their will and that their privacy is not compromised, laws and regulations must be set up.

Enterprise

Robots must be developed, created and dispatched to the elderly. The relevant enterprise stakeholders in this case are the developing companies, the government, the hospitals and the therapists, who together ensure logistic and administrative validity.

Approach

This project has multiple problems that need to be solved in order to create a system or robot that is able to combat the emotional problems that the elderly are facing. To categorize them, the problems are split into three main parts:

Technical

The main technical problem for our robot is to reliably read the emotional state of a person and to process that data. After processing, the robot should be able to choose accordingly from a set of different actions.

Social / Emotional

The robot should be able to act appropriately; research is therefore needed into which types of action the robot can perform to get positive results. For example, the robot could have a simple conversation with the person or start playing an audiobook in order to keep the person active during the day.

Physical

What type of physical presence is optimal for the robot? Is a more conventional robot with a somewhat humanoid look needed? Or does a system that interacts through speakers and screens distributed over the room get better results? Maybe a combination of both works best.

The main focus of this project will be the technical problem stated above; however, for a more complete use case the other subjects should be researched as well.

Papers on Emotions, Creating the AI and creating the Chatbot

Implementation of facial data in chatbot

Once the facial recognition software has gathered information about the emotional state of the person, this information has to be put to use. This section discusses how the state of the person helps the robot personalize its reactions to that person.

Data to use

The recognition software will detect whether there is a face in view, which emotions are expressed and with what intensity. This data can then be used to determine how positive or negative the person is feeling. Negative emotions are very important to detect, as prolonged negative feelings may lead to depression and anxiety. Recognizing these emotions in time can prevent this, removing the need for help from medical practitioners.[5] For our model the following outputs will be used:

- Is there a face in view, Yes/No

- What emotions are expressed, happiness/sadness/disgust/fear/surprise/anger.

- How intense are the different emotions, 0 to 1 for every emotion.
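The three outputs listed above could be carried in one record per video frame. The following is a minimal sketch in Python, not the project's actual code: the names `EmotionReading`, `EMOTIONS` and `dominant` are hypothetical and do not come from any existing library.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# The six basic emotions used as model outputs.
EMOTIONS = ("happiness", "sadness", "disgust", "fear", "surprise", "anger")


@dataclass
class EmotionReading:
    """One frame of (hypothetical) recognizer output."""
    face_in_view: bool
    # Intensity per emotion, each in the range 0 to 1.
    intensities: Dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject emotion names and intensities outside the agreed format.
        for name, value in self.intensities.items():
            if name not in EMOTIONS:
                raise ValueError(f"unknown emotion: {name}")
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"intensity out of range for {name}: {value}")

    def dominant(self) -> Optional[str]:
        """Return the most intense emotion, or None if no face is visible."""
        if not self.face_in_view or not self.intensities:
            return None
        return max(self.intensities, key=self.intensities.get)
```

Validating the record when it is created keeps malformed recognizer output from reaching the chatbot logic.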

Emotional Model

One very important choice is the kind of emotional model used, as there are many different models for describing complex emotions. The most basic interpretation uses six basic emotions that were found to be universal between cultures. This model was developed during the 1970s, when psychologist Paul Ekman identified these six basic emotions as happiness, sadness, disgust, fear, surprise, and anger.[6] As the goal of the robot is to help elderly people through the day, a more complex model will probably not be necessary, since the goal is mostly to detect the presence of negative feelings. Further classifying which emotion is expressed can help in finding a way to help the person.

How negative or positive a person is feeling can be expressed by the valence of the person's state. Valence is a measure of affective quality, referring to intrinsic goodness (positive feelings) or badness (negative feelings). For some emotions the valence is quite clear, e.g. the negative affect of anger, sadness, disgust and fear, or the positive affect of happiness. Surprise, however, can be either positive or negative depending on the context.[7] Because of this, surprise will not be taken into account in the valence measurement. The valence will simply be calculated from the intensity of each emotion and whether that emotion is positive or negative. When the results are not as expected, the weights of the different emotions can be altered to better fit the situation.
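One possible reading of this calculation is a signed, weighted sum of the emotion intensities. The sketch below assumes that form. The function name `valence`, the starting weights of 1.0 and the clamping to the range -1 to 1 are illustrative assumptions, not values taken from the project.

```python
from typing import Dict

# Signed weight per emotion. Surprise is left out because its valence
# depends on context. The magnitudes of 1.0 are an assumed starting point
# that can be tuned when results are not as expected.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "happiness": 1.0,
    "sadness": -1.0,
    "disgust": -1.0,
    "fear": -1.0,
    "anger": -1.0,
}


def valence(intensities: Dict[str, float],
            weights: Dict[str, float] = DEFAULT_WEIGHTS) -> float:
    """Weighted sum of emotion intensities, clamped to [-1, 1]."""
    total = sum(weights[name] * value
                for name, value in intensities.items()
                if name in weights)  # emotions without a weight are ignored
    return max(-1.0, min(1.0, total))
```

For example, `valence({"sadness": 0.5, "anger": 0.25})` gives -0.75, while a reading that contains only surprise gives 0.0.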

Implementation of valence

Once the presence of every emotion has been detected, along with whether the individual is feeling positive or negative, the robot can use this information. When the robot detects that the person is having negative emotions, it will ask if something is wrong. If the person answers yes, the robot will ask how it can help with the problem. In the case of a light negative feeling, the robot will help by having a simple conversation. When simple psychiatric help is needed, the robot can help by using the ELIZA program. This early chatbot, with its DOCTOR script, imitates a psychotherapist by turning the person's statements into follow-up questions, which can encourage the person to talk about the problem. If the person does not want this, the robot can also ask whether the person would like to contact family members or friends. If this is also declined, the robot will ask whether the person is sure the robot cannot help. When the answer is again no, the robot will back off for 30 minutes for light negative emotions. When the emotions become more negative, the robot will immediately react again by asking why the person is feeling so negative. When given consent, the robot can call medical specialists or a psychiatrist so that the person can talk to them. If the person declines, the robot will point out that it is there to help and that calling someone will really help. If this is declined again, the robot will back off again, respecting the autonomy of the person.

When the person has a specific illness, the settings can be changed so that the robot will always call for help, even if the person does not give consent. To change this setting, a doctor and a psychologist have to confirm that the person's autonomy can be reduced.
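The escalation behaviour described in this section can be sketched as a small decision function. This is a simplified illustration, not the project's implementation. The thresholds, the action names and the `accepts` callback (which stands in for asking the person a question and hearing yes or no) are all assumptions, and the initial "is something wrong?" exchange is left out.

```python
from typing import Callable

# Assumed valence thresholds; the project text does not specify values.
LIGHT_THRESHOLD = -0.2
SEVERE_THRESHOLD = -0.6

# Offers for light negative feelings, in the order described in the text.
LIGHT_OFFERS = ("simple_conversation", "eliza_session",
                "contact_family_or_friends")


def respond(valence: float,
            accepts: Callable[[str], bool],
            always_call_help: bool = False) -> str:
    """Return the action the robot settles on for one detected state."""
    if valence > LIGHT_THRESHOLD:
        return "no_action"

    if valence > SEVERE_THRESHOLD:
        # Light negative feeling: go through the offers one by one.
        for offer in LIGHT_OFFERS:
            if accepts(offer):
                return offer
        # Final check: is the person sure the robot cannot help?
        if accepts("any_other_help"):
            return "ask_how_to_help"
        return "back_off_30_minutes"

    # Strongly negative state.
    if always_call_help:
        # Setting enabled by a doctor and a psychologist: consent not required.
        return "call_specialist"
    for _attempt in range(2):  # the second ask follows a reassurance
        if accepts("call_specialist"):
            return "call_specialist"
    return "back_off"
```

For example, `respond(-0.3, lambda offer: False)` returns `"back_off_30_minutes"`, and `respond(-0.8, lambda offer: False, always_call_help=True)` returns `"call_specialist"`. Passing the person's answers in as a callback keeps the policy separate from speech recognition, so it can be tested without a running robot.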

Planning

| Week | Task | Date/Deadline | Coen | Max | Rick | Venislav |

|---|---|---|---|---|---|---|

| 1 | Introduction meeting | 20.04 | 00:00 | | | |

| 1 | Group meeting: subject choice | 25.04 | 00:00 | 00:00 | 00:00 | |

| 2 | Wiki: problem statement | 29.04 | 00:00 | | | |

| 2 | Wiki: objectives | 29.04 | 00:00 | | | |

| 2 | Wiki: users | 29.04 | 00:00 | | | |

| 2 | Wiki: user requirements | 29.04 | 01:00 | | | |

| 2 | Wiki: approach | 29.04 | 00:00 | | | |

| 2 | Wiki: planning | 29.04 | 00:00 | | | |

| 2 | Wiki: milestones | 29.04 | 00:00 | | | |

| 2 | Wiki: deliverables | 29.04 | 00:00 | | | |

| 2 | Wiki: SotA | 29.04 | 00:00 | 00:00 | 00:00 | 00:00 |

| 2 | Group meeting | 30.04 | 00:00 | | | |

| 2 | Tutor meeting | 30.04 | 00:00 | | | |

Milestones

| Week | Milestone |

|---|---|

| 1 (20.04 - 26.04) | Subject chosen |

| 2 (27.04 - 03.05) | Project initialised |

| 3 (04.05 - 10.05) | Facial/Emotional recognition research finalised |

| 4 (11.05 - 17.05) | Facial/Emotional recognition software developed |

| 5 (18.05 - 24.05) | Chatbot research finalised |

| 6 (25.05 - 31.05) | Chatbot implemented |

| 7 (01.06 - 07.06) | Facial/Emotional recognition software integrated in Chatbot |

| 8 (08.06 - 14.06) | Wiki page completed |

| 9 (15.06 - 21.06) | Chatbot demo video and final presentation completed |

| 10 (22.06 - 28.06) | N/A |

| 11 (29.06 - 05.07) | N/A |

References

- ↑ Misra N., Singh A. (2009, June). Loneliness, depression and sociability in old age. Referenced on 27/04/2020.

- ↑ Green B. H., Copeland J. R., Dewey M. E., Sharma V., Saunders P. A., Davidson I. A., Sullivan C., McWilliam C. Risk factors for depression in elderly people: A prospective study. Acta Psychiatr Scand. 1992;86(3):213–7. https://www.ncbi.nlm.nih.gov/pubmed/1414415

- ↑ Zhentao Liu, Min Wu, Weihua Cao, Luefeng Chen, Jianping Xu, Ri Zhang, Mengtian Zhou, Junwei Mao. A Facial Expression Emotion Recognition Based Human-robot Interaction System. IEEE/CAA Journal of Automatica Sinica, 2017, 4(4): 668-676 http://html.rhhz.net/ieee-jas/html/2017-4-668.htm

- ↑ Saleh, S.; Sahu, M.; Zafar, Z.; Berns, K. A multimodal nonverbal human-robot communication system. In Proceedings of the Sixth International Conference on Computational Bioengineering, ICCB, Belgrade, Serbia, 4–6 September 2015; pp. 1–10. http://html.rhhz.net/ieee-jas/html/2017-4-668.htm

- ↑ Maja Pantic and Marian Stewart Bartlett (2007). Machine Analysis of Facial Expressions, Face Recognition, Kresimir Delac and Mislav Grgic (Ed.), ISBN: 978-3-902613-03-5, InTech, Available from: http://www.intechopen.com/books/face_recognition/machine_analysis_of_facial_expressions

- ↑ Ekman P. (2017, August). My Six Discoveries. Referenced on 6/05/2020. https://www.paulekman.com/blog/my-six-discoveries/

- ↑ Maital Neta, F. Caroline Davis, and Paul J. Whalen (2011, December). Valence resolution of facial expressions using an emotional oddball task. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3334337/#__ffn_sectitle/

A Natural Visible and Infrared Facial Expression Database for Expression Recognition and Emotion Inference, 2010 https://guilfordjournals.com/doi/abs/10.1521/pedi.1999.13.4.329

Emotional factors in robot-based assistive services for elderly at home, 2013 https://ieeexplore.ieee.org/abstract/document/6628396

Evidence and Deployment-Based Research into Care for the Elderly Using Emotional Robots https://econtent.hogrefe.com/doi/abs/10.1024/1662-9647/a000084?journalCode=gro

Development of whole-body emotion expression humanoid robot, 2008 https://ieeexplore.ieee.org/abstract/document/4543523

Affective Robot for Elderly Assistance, 2009 https://www.researchgate.net/publication/26661666_Affective_robot_for_elderly_assistance

Robot therapy for elders affected by dementia, 2008 https://ieeexplore.ieee.org/abstract/document/4558139