Football Table Simulation Visualization Tool

| Line 26: | Line 26: | ||

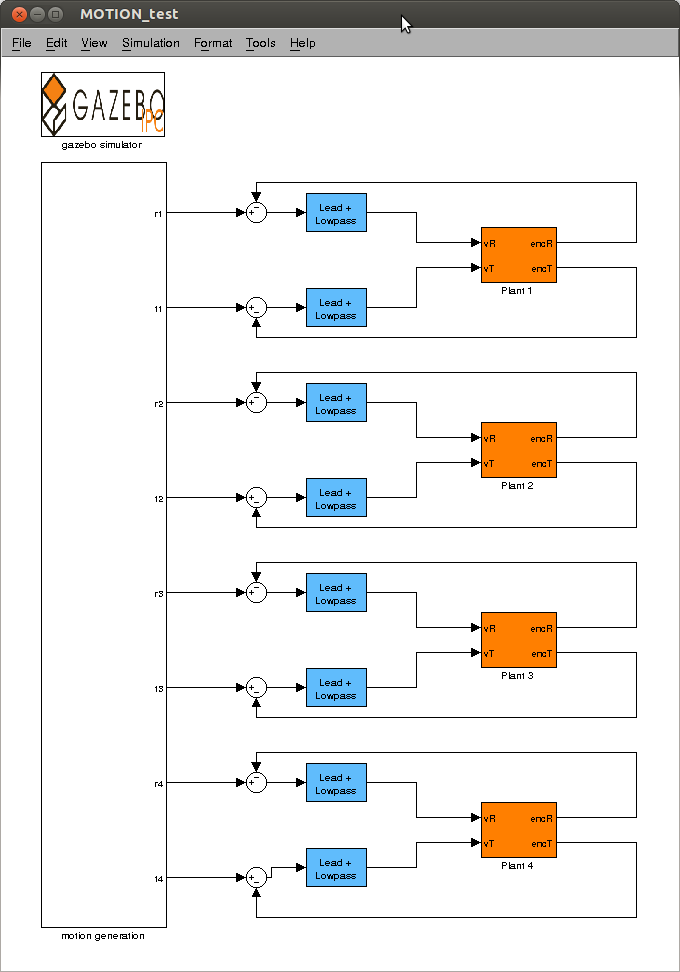

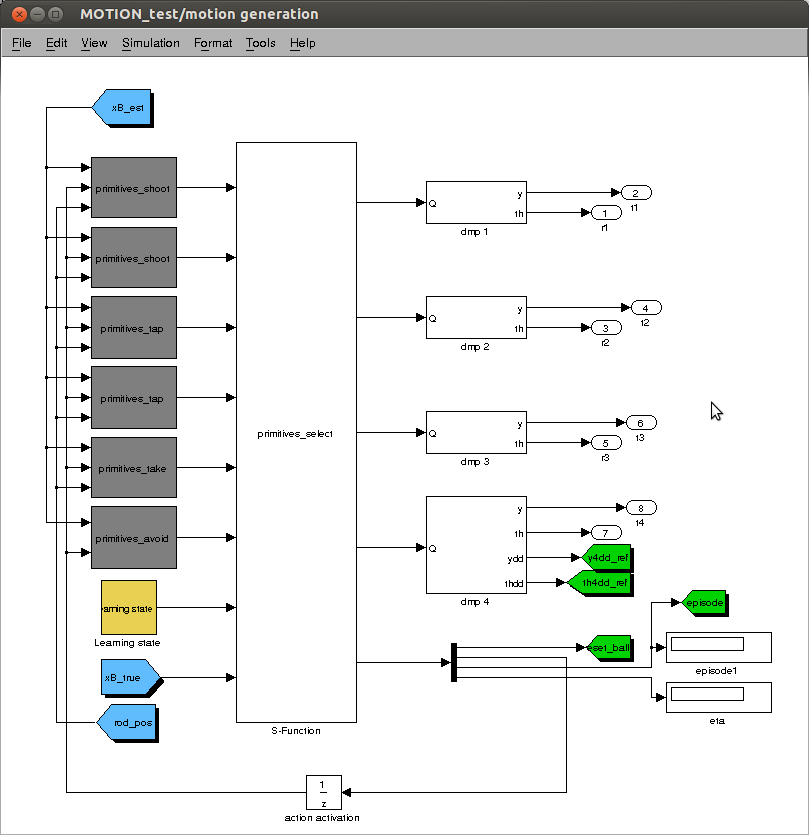

[[File:MOTION_test_RL.png|thumb|center|upright=1.5| Motion generation window <code>MOTION_test.mdl</code>]] | [[File:MOTION_test_RL.png|thumb|center|upright=1.5| Motion generation window <code>MOTION_test.mdl</code>]] | ||

<p> | <p> | ||

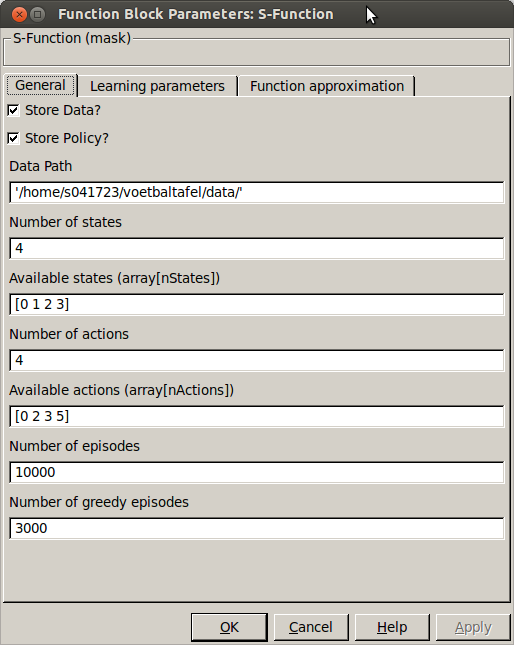

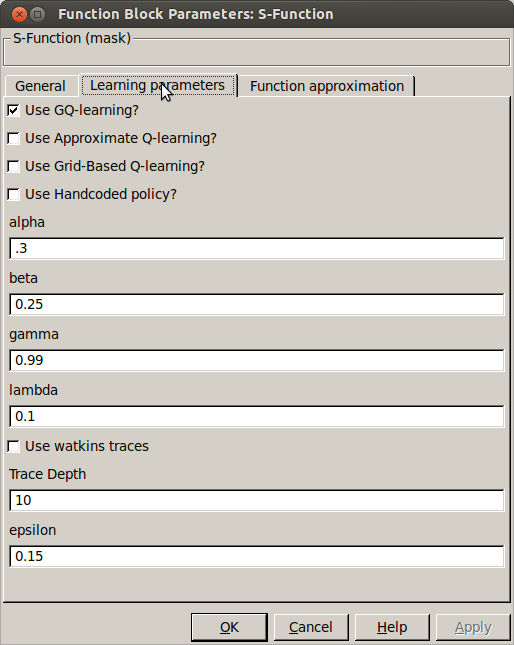

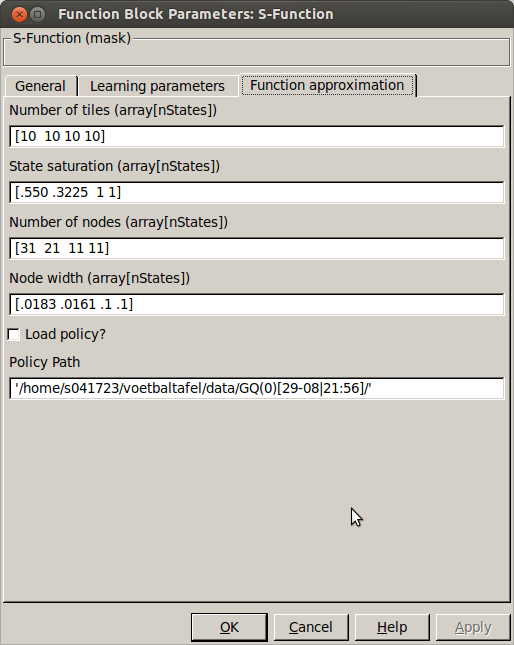

Most relevant settings regarding the actions and the reinforcement learning are set in their respective masks. In the mask of <code>primitives_select.cpp</code> you can set all the parameters described in [http://cstwiki.wtb.tue.nl/index.php?title=Football_Table_RL#Library_functions this RL section]. | Most relevant settings regarding the actions and the reinforcement learning are set in their respective masks. In the mask of <code>primitives_select.cpp</code> you can set all the parameters described in [http://cstwiki.wtb.tue.nl/index.php?title=Football_Table_RL#Library_functions this RL section].<br/> | ||

In the tab named ''general'' the following settings are available: | |||

;Store Data | |||

:Checkbox to enable the storage of data, this will store the buffers and the performance of the simulation you run. | |||

;Store Policy | |||

:Checkbox to enable storage of the policy. This will store the learned weight vectors <math>\theta,~w</math>, but also other settings such as the node positions (centers), number of states and actions etc. | |||

;Number of states | |||

:Number of states used as input to the learning agent | |||

;Available states | |||

:Vector with indices of the states, the allow quick changes you can select a couple of seperate states from the input of the simulink block. I.e. we only want the lateral position and speed of the ball. We can then say: Number of states = 2, available states [1 3] indicating index 1 and 3 in the learning state. Make sure to adjust the function approximation accordingly. | |||

</p> | </p> | ||

[[File:RL_tab1.png|thumb|center|upright=1.0| General settings ]][[File:RL_tab2.png|thumb|center|upright=1.0| RL settings ]][[File:RL_tab3.png|thumb|center|upright=1.0| RL settings ]] | [[File:RL_tab1.png|thumb|center|upright=1.0| General settings ]] | ||

[[File:RL_tab2.png|thumb|center|upright=1.0| RL settings ]][[File:RL_tab3.png|thumb|center|upright=1.0| RL settings ]] | |||

Revision as of 14:01, 16 September 2013

Author: Erik Stoltenborg

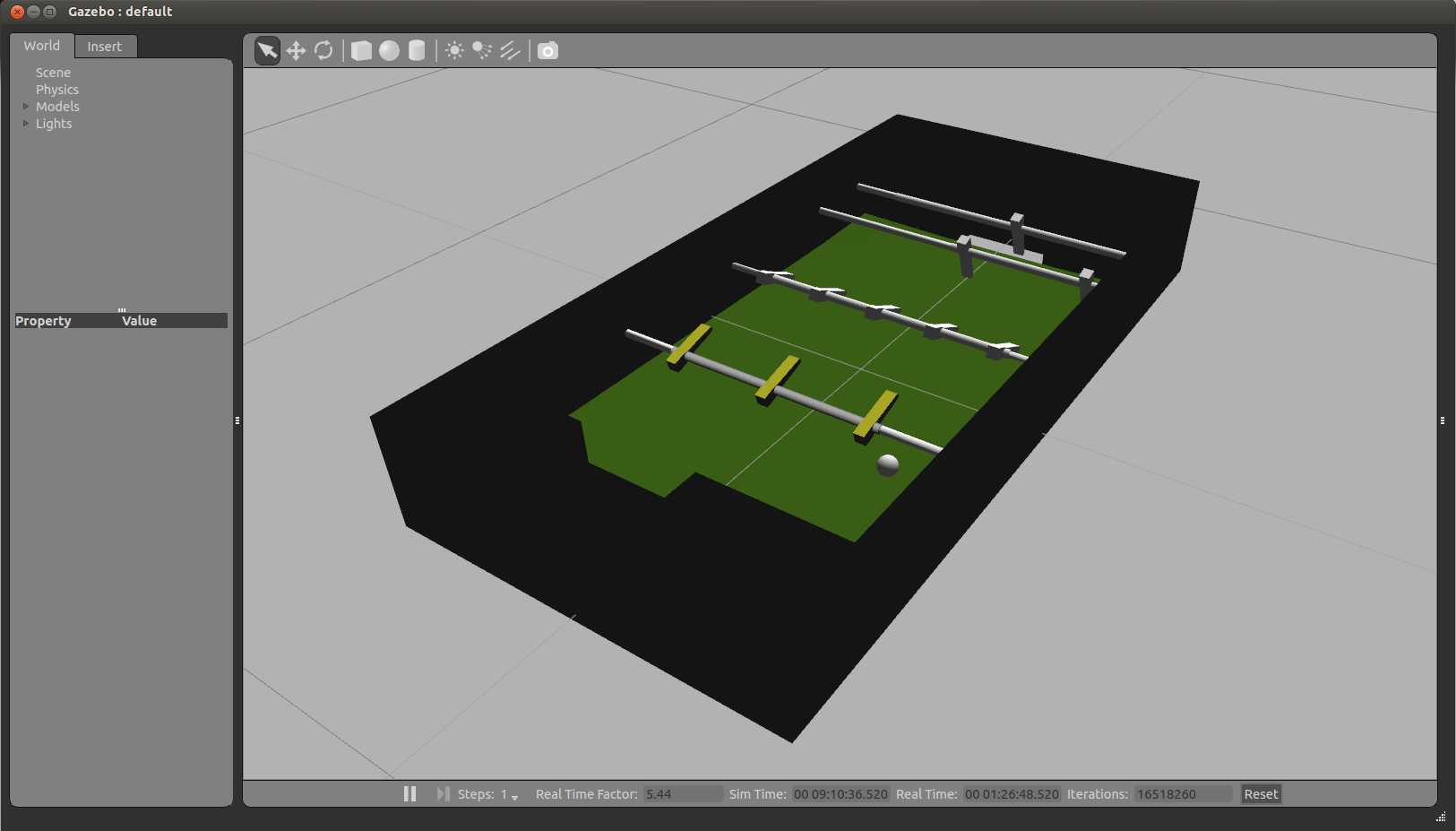

Gazebo

A simulator has been developed to easily test new algorithms without depending on the actual robot. It is built on Gazebo (without the use of ROS), a so-called physics abstraction layer that employs ODE for the physics combined with OGRE for rendering. Gazebo has been very well maintained since 2012, when it became the official simulator for the DARPA Robotics Challenge. The environment and robots are described in the SDF format, which is XML-based. SDF can easily be combined with CAD files; in this case it is combined with a Collada [1] drawing for the more complex geometry. A previous attempt used MORSE, but this was abandoned because of the poor tunability of the physics and the limited options for communication.

Synchronized Inter-process Communication

To date, Gazebo is mostly used in combination with [http://wiki.ros.org/ ROS]. However, using ROS plugins yields a lot of overhead; moreover, the timers and communication provided there are not accurate enough to ensure an accurate causal link. Therefore a plug-in was created that enables fast, lightweight inter-process communication, allowing the simulation to run up to 20 times faster than real time without loss of causality.

The simulation communicates with Matlab Simulink using an Interprocess Communication (IPC) wrapper library around the POSIX libraries; the wrapper makes shared memory more accessible and easier to use. It uses shared memory protected by mutexes and condition variables, enabling thread-safe, synchronized, causal communication between two processes, e.g. Gazebo and Simulink. This allows us to use the Gazebo simulator as a plant in our Simulink control loop. Moreover, this wrapper library could be used for (safe) IPC between two arbitrary processes. More on this library, its basic principles and how it is used can be found here.
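The lock-step idea described above can be sketched as follows. This is a minimal illustration and not the actual wrapper library: the `SharedBlock` layout, the `turn` flag and all function names are hypothetical, and an anonymous shared mapping with `fork()` stands in for the named shared memory that two independent processes such as Gazebo and Simulink would need. A process-shared mutex and condition variable force the "controller" and the "plant" to alternate, so every plant step sees exactly one new command.

```cpp
#include <cassert>
#include <pthread.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical layout of the shared block (not the real library's layout).
struct SharedBlock {
    pthread_mutex_t mutex;
    pthread_cond_t cond;
    int turn;        // whose turn it is: CONTROLLER or PLANT
    double command;  // written by the controller, read by the plant
    double state;    // written by the plant, read by the controller
};

enum { CONTROLLER = 0, PLANT = 1 };

// Map a block that is shared across fork() and initialise the
// synchronisation primitives as process-shared.
SharedBlock* create_block() {
    void* mem = mmap(nullptr, sizeof(SharedBlock), PROT_READ | PROT_WRITE,
                     MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED) return nullptr;
    SharedBlock* b = static_cast<SharedBlock*>(mem);

    pthread_mutexattr_t ma;
    pthread_mutexattr_init(&ma);
    pthread_mutexattr_setpshared(&ma, PTHREAD_PROCESS_SHARED);
    pthread_mutex_init(&b->mutex, &ma);
    pthread_mutexattr_destroy(&ma);

    pthread_condattr_t ca;
    pthread_condattr_init(&ca);
    pthread_condattr_setpshared(&ca, PTHREAD_PROCESS_SHARED);
    pthread_cond_init(&b->cond, &ca);
    pthread_condattr_destroy(&ca);

    b->turn = CONTROLLER;
    b->command = 0.0;
    b->state = 0.0;
    return b;
}

// Block until it is `me`'s turn; returns with the mutex held.
void wait_turn(SharedBlock* b, int me) {
    pthread_mutex_lock(&b->mutex);
    while (b->turn != me) pthread_cond_wait(&b->cond, &b->mutex);
}

// Hand the turn to the other side and release the mutex.
void pass_turn(SharedBlock* b, int next) {
    b->turn = next;
    pthread_cond_signal(&b->cond);
    pthread_mutex_unlock(&b->mutex);
}

// Run `steps` lock-step exchanges and return the final plant state,
// or -1.0 if the shared block or the child process cannot be created.
double run_lockstep(int steps) {
    assert(steps >= 0);
    SharedBlock* b = create_block();
    if (b == nullptr) return -1.0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(b, sizeof(SharedBlock));
        return -1.0;
    }
    if (pid == 0) {  // child process plays the plant (simulator)
        for (int k = 0; k < steps; ++k) {
            wait_turn(b, PLANT);
            b->state += b->command;  // toy plant: integrate the command
            pass_turn(b, CONTROLLER);
        }
        _exit(0);
    }
    for (int k = 0; k < steps; ++k) {  // parent plays the controller
        wait_turn(b, CONTROLLER);
        b->command = 1.0;  // toy controller: constant command
        pass_turn(b, PLANT);
    }
    waitpid(pid, nullptr, 0);  // the plant finishes its last step first
    double result = b->state;
    pthread_cond_destroy(&b->cond);
    pthread_mutex_destroy(&b->mutex);
    munmap(b, sizeof(SharedBlock));
    return result;
}
```

Because neither side can advance until the other has handed over the turn, the exchange stays causal regardless of how fast either process runs, which is the property that allows a simulation to run faster than real time.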

Matlab Simulink

The Simulink side of things can be found in MOTION_test.mdl. This model is not run as an external, but in Simulink itself in the regular way. The main window is shown below; the simulator part is the gazebo sub-system. The motion-generation part contains the reinforcement learning, attractor dynamics and constraints for the actions.

Motion generation window MOTION_test.mdl

Most relevant settings regarding the actions and the reinforcement learning are set in their respective masks. In the mask of primitives_select.cpp you can set all the parameters described in [http://cstwiki.wtb.tue.nl/index.php?title=Football_Table_RL#Library_functions this RL section].

In the tab named general the following settings are available:

- Store Data: Checkbox to enable the storage of data. This stores the buffers and the performance of the simulation you run.

- Store Policy: Checkbox to enable storage of the policy. This stores the learned weight vectors [math]\displaystyle{ \theta,~w }[/math], as well as other settings such as the node positions (centers), the number of states and actions, etc.

- Number of states: Number of states used as input to the learning agent.

- Available states: Vector with the indices of the states. To allow quick changes, you can select a few separate states from the input of the Simulink block. For example, if we only want the lateral position and speed of the ball, we can set Number of states = 2 and Available states = [1 3], indicating index 1 and 3 in the learning state. Make sure to adjust the function approximation accordingly.
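The effect of the Available states setting can be illustrated with a small sketch. The helper `select_states` is hypothetical and is not taken from primitives_select.cpp; it assumes the indices are 1-based, as in Matlab, and the ordering of the block input is likewise an assumption made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper: pick the entries of the full block input that the
// learning agent should see. Indices are assumed to be 1-based.
std::vector<double> select_states(const std::vector<double>& full_state,
                                  const std::vector<std::size_t>& available_states) {
    std::vector<double> learning_state;
    learning_state.reserve(available_states.size());
    for (std::size_t idx : available_states) {
        if (idx < 1 || idx > full_state.size()) {
            throw std::out_of_range("state index outside the block input");
        }
        learning_state.push_back(full_state[idx - 1]);  // 1-based to 0-based
    }
    // The result length corresponds to the "Number of states" setting.
    assert(learning_state.size() == available_states.size());
    return learning_state;
}
```

With a four-element input and Available states = [1 3], the learning state contains the first and third input entries, so Number of states must be set to 2 and the function approximation has to be sized for a two-dimensional state.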