Embedded Motion Control 2017 Group 9: Difference between revisions

TUe\s167500 (talk | contribs) |


----

We use a similar method to detect whether the door has opened after 5 seconds, using the forward laser bundle. If both boundary beams measure a distance longer than the detection radius, and more than 75% of the laser beams inside the bundle also measure longer than the detection radius, PICO considers the door to be open.

====Open Space Detection====

----

{| class="wikitable" style="text-align: left; width: 1500px; height: 200px;"

|-

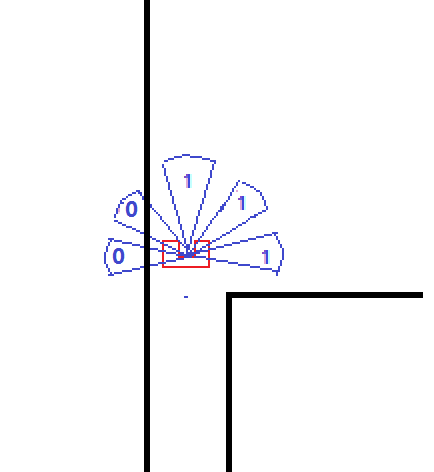

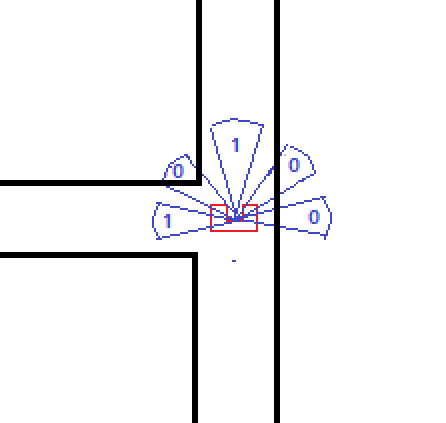

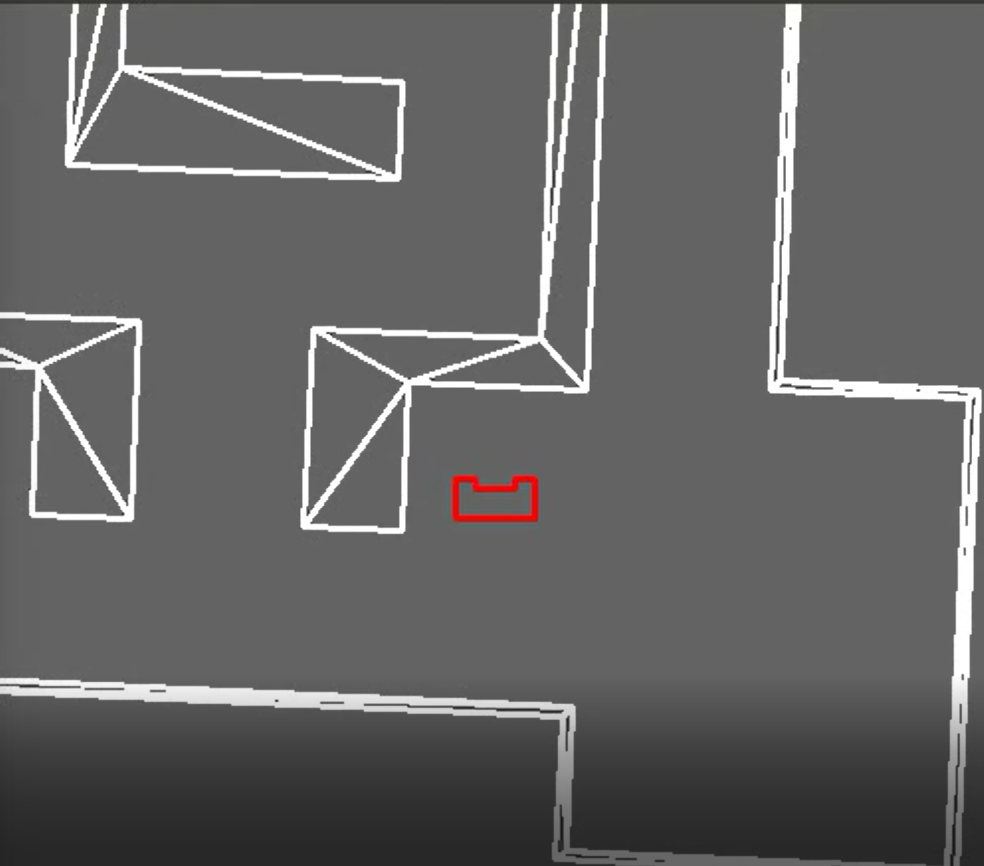

|The "Open Space Detection" block is an extension of the "Junction Detection" block. By adding two more laser beams, at -45° and 45°, open space can be distinguished from a normal junction. The differences are described below for three cases:<br>

|-

|

:::'''Case 1:'''<br>

:::Open space appears on both sides of the robot, as shown in Figure "openspace_Detection_case1". This case is easily confused with a cross junction, shown in Figure "openspace_Detection_case1" (right). With the laser beams at -45° and 45° added, the two can be told apart: in open space, the beams at -45° and 45° detect no obstacle within their detection radius, whereas at a cross junction they do detect an obstacle.<br>

|[[File:Openspace detection case1.1.png|300px|thumb|openspace_Detection_case1]]

|[[File:Openspace detection case1.png|225px|thumb|openspace_Detection_case1]]

|-

|

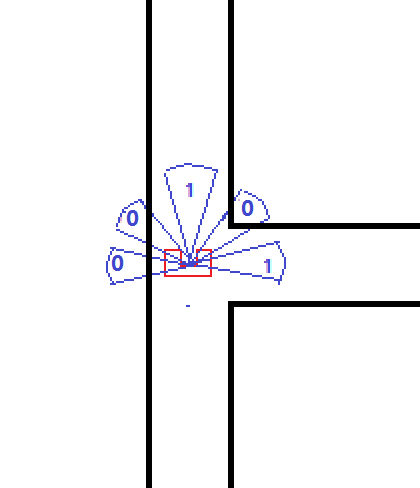

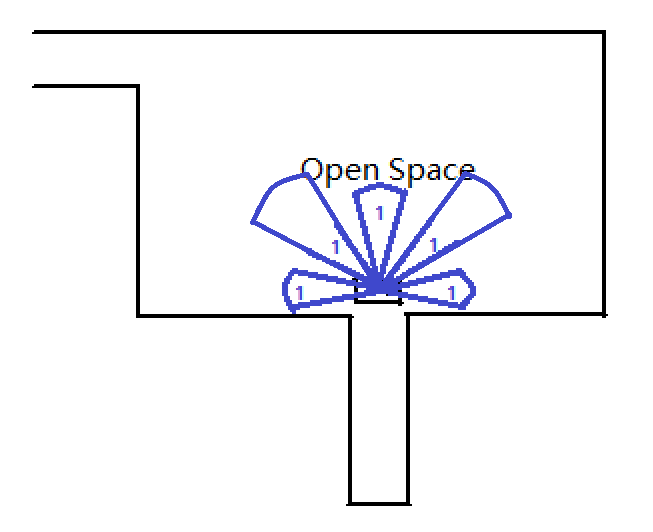

:::'''Case 2:'''<br>

:::Open space appears only on the right side of the robot, as shown in Figure "openspace_Detection_case2". This case resembles a right T-junction, shown in Figure "openspace_Detection_case2" (right). In the same way, the laser beam at -45° helps to distinguish them.

|[[File:Openspace detection case2.1.png|300px|thumb|openspace_Detection_case2]]

|[[File:Openspace detection case2.png|280px|thumb|openspace_Detection_case2]]

|-

|

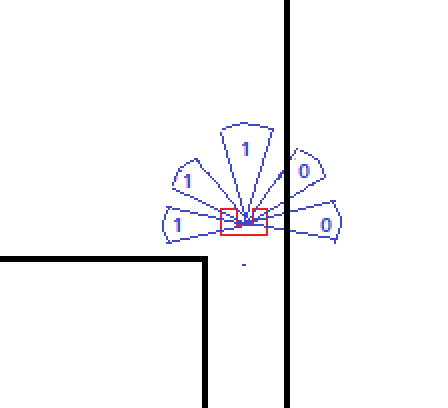

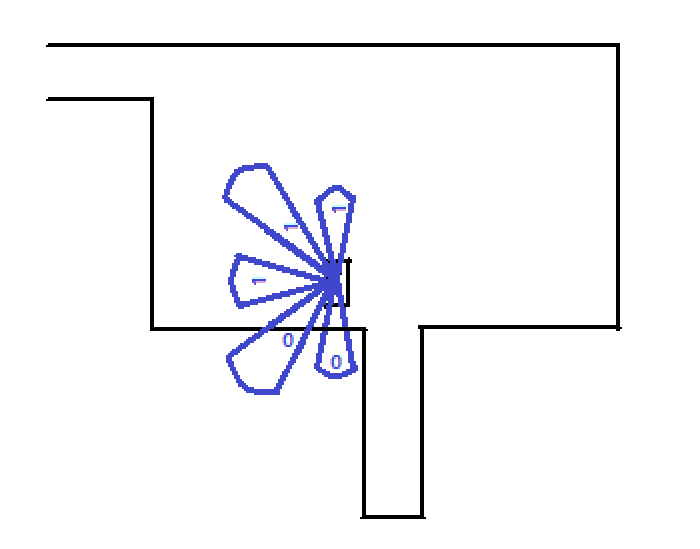

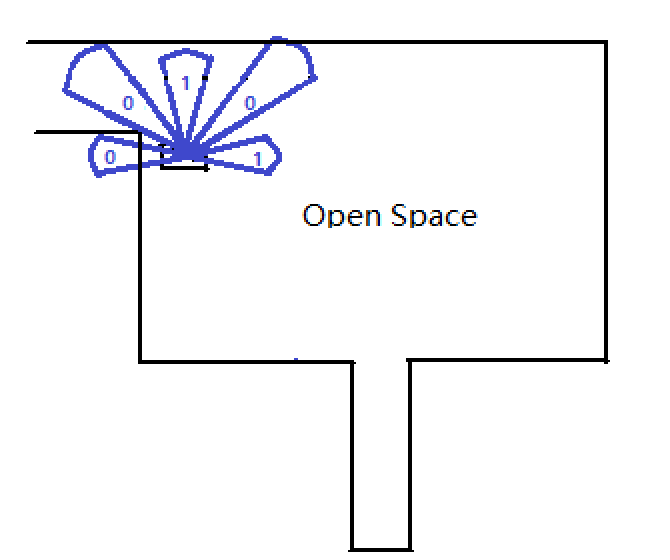

:::'''Case 3:'''<br>

:::Open space appears only on the left side of the robot, as shown in Figure "openspace_Detection_case3". This case resembles a left T-junction, shown in Figure "openspace_Detection_case3" (right). The laser beam at 45° helps to distinguish them. <br><br><br>

:::The code that adds the front-left and front-right laser beams to the environment detection sits at the end of "junction_detection.cpp", after junction detection has completed. At that point junction_flag and the int array junction_direction, which indicates the directions of the available corridors at the junction, have already been assigned. The LRF readings at -45° and 45° are then checked. If no obstacle is found in at least one of these two directions, junction_flag is reset and the left-junction information is cleared, i.e. junction_direction[2] is set to zero. The variable openspace_flag is set to indicate that open space has been found, whether on the left or on the right.

Next comes the decision block. Because junction_flag is now zero, the Decision Block does not execute the "Corridor Decision Block": its condition does not hold, as shown in the figure '''"Flow Chart of Decision Block"'''. The condition for the first case in the "Open Space Decision Block" does hold, so the decision for this round is set to 2 (the indicator for a left turn), and the variable "openspace_decision_flag" is set at the end of this sub-block. When the left turn is completed, the same detection procedure is repeated. Because open space is now found on the right, the junction indicator keeps being cleared and the left-junction indicator stays at 0 at the end of the "Junction_detection Block", while openspace_flag is set as described above. The second time the "Decision Block" is executed, the condition of the first case in the "Open Space Decision Block" no longer holds, because "openspace_decision_flag" has been set to 1 and no block has reset it yet. Only in "Openspace_case3" does the "Decision Block" execute sub-block 3 and reset "openspace_decision_flag". That is how the open-space algorithm works.

|[[File:Openspace detection case3.png|300px|thumb|openspace_Detection_case3]]

|[[File:Openspace detection case3.1.png|290px|thumb|openspace_Detection_case3]]

|-

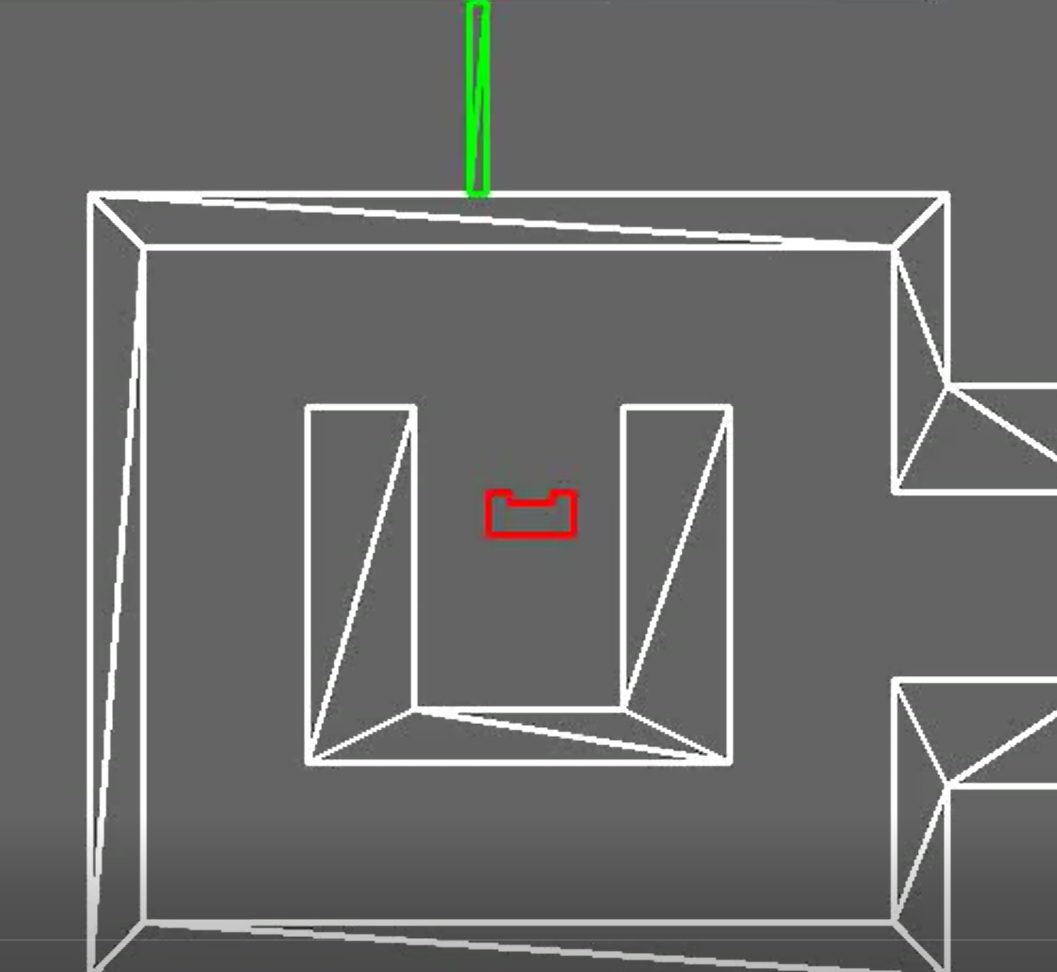

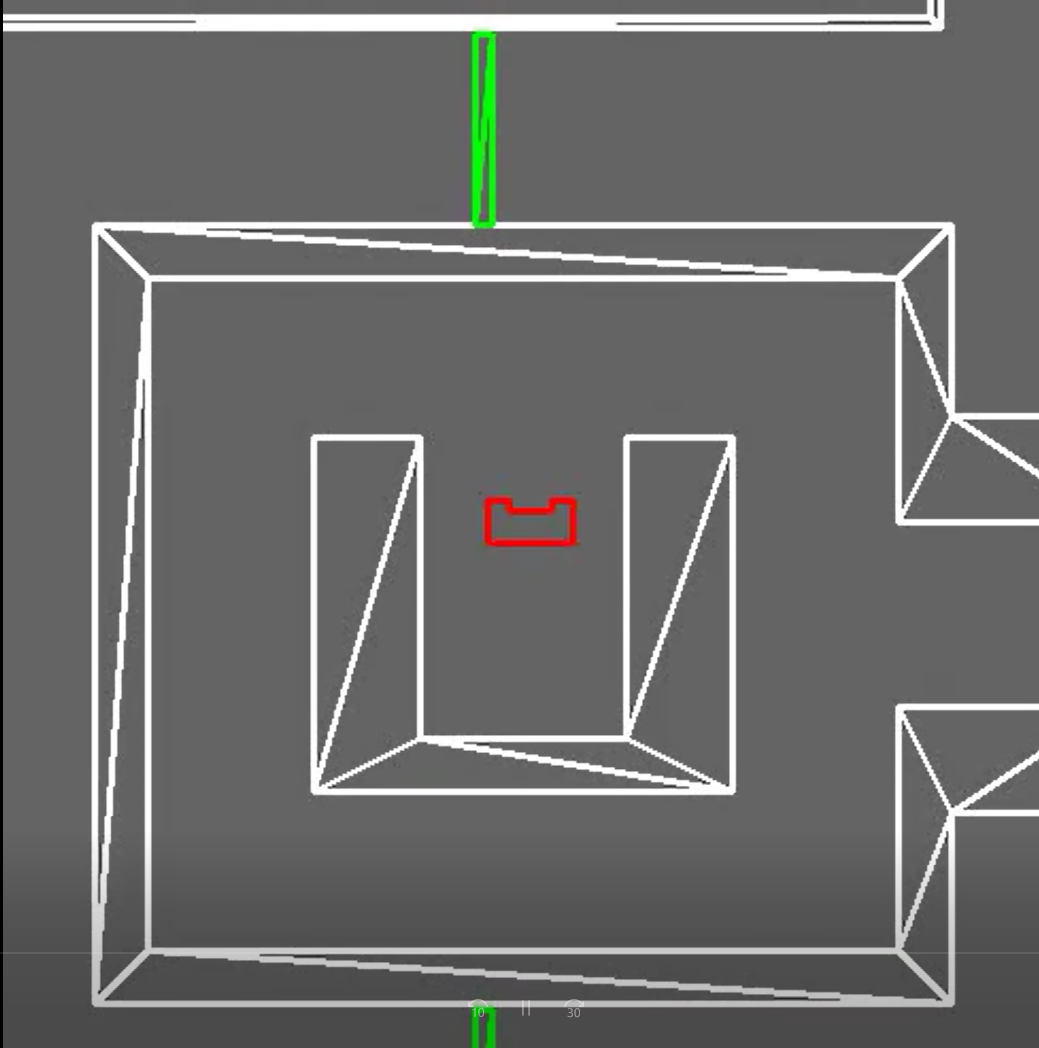

|The performance of the algorithm is shown in the video "'''Open space performance in simulator'''"<br>

|-

||[[File:Open spaceg92017.png|center|400px|link=https://youtu.be/3DkRhq_-63E|Open space performance in simulator|link=https://youtu.be/3DkRhq_-63E]]

|-

|

::::::::::::'''Open space performance in simulator'''

|}
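The open-space check that runs at the end of junction detection can be sketched as follows. This is a minimal illustration, not the code in junction_detection.cpp: the struct `DetectionState`, the function `applyOpenSpaceCheck` and the parameter `radius` are hypothetical names, and the sketch assumes that a beam "finds no obstacle" when its range exceeds the detection radius.

```cpp
// Hypothetical container for the detection results named in the text.
struct DetectionState {
    int junctionFlag;          // 1 when a left or right junction was found
    int junctionDirection[3];  // [0] right, [1] forward, [2] left
    bool openspaceFlag;        // true when open space was found
};

// Applies the -45 / +45 degree check after junction detection has filled in
// `state`. Assumption: a range larger than `radius` means no obstacle was seen.
void applyOpenSpaceCheck(DetectionState& state, double rangeMinus45,
                         double rangePlus45, double radius) {
    const bool rightClear = rangeMinus45 > radius;
    const bool leftClear = rangePlus45 > radius;
    if (rightClear || leftClear) {
        state.junctionFlag = 0;          // reset the junction indicator
        state.junctionDirection[2] = 0;  // clear the left-junction information
        state.openspaceFlag = true;      // open space found, left or right
    }
    // When both beams see an obstacle, the state is left unchanged here; where
    // the real code resets openspace_flag is not covered by this sketch.
}
```

Because the function clears junctionFlag whenever it sets openspaceFlag, the two indicators cannot both be set after the check, which matches the mutual exclusiveness that the decision logic relies on.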

===Open Space Detection===

Revision as of 20:24, 18 June 2017

Group Members

| Name: | Student id: |

| Mian Wei | 1035075 |

| Zhihao Wu | 1041226 |

| Petrus Teguh Handoko | 1033085 |

| Bo Deng | X |

| Bo Cong | 0976759 |

| Jian Wen Kok | 0808353 |

| Nico Huebel | Tutor |

Initial Design

The initial design for the maze challenge is elaborated below. It includes the requirements, functions, components, schematic of program structure, specifications and interfaces to define the working of PICO. The file for the initial design is included here: File:Assignment-for-week1.pdf

Requirements

➢ PICO drives autonomously through maze

➢ Being able to take a turn without touching a wall

➢ Being able to detect a turn or branching corridors

➢ Avoiding collisions with obstacles (including the walls)

➢ Driving straight and rotating smoothly

➢ PICO should not stand still for 30 seconds

➢ Avoid getting trapped in a loop of the maze

➢ Being able to recognize the door

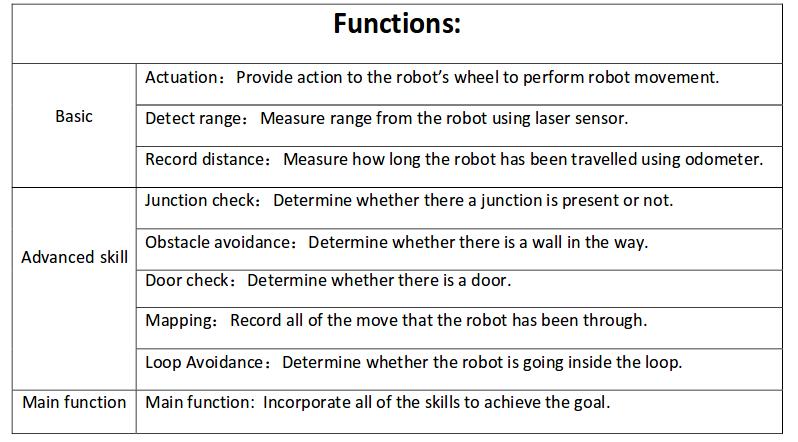

Functions

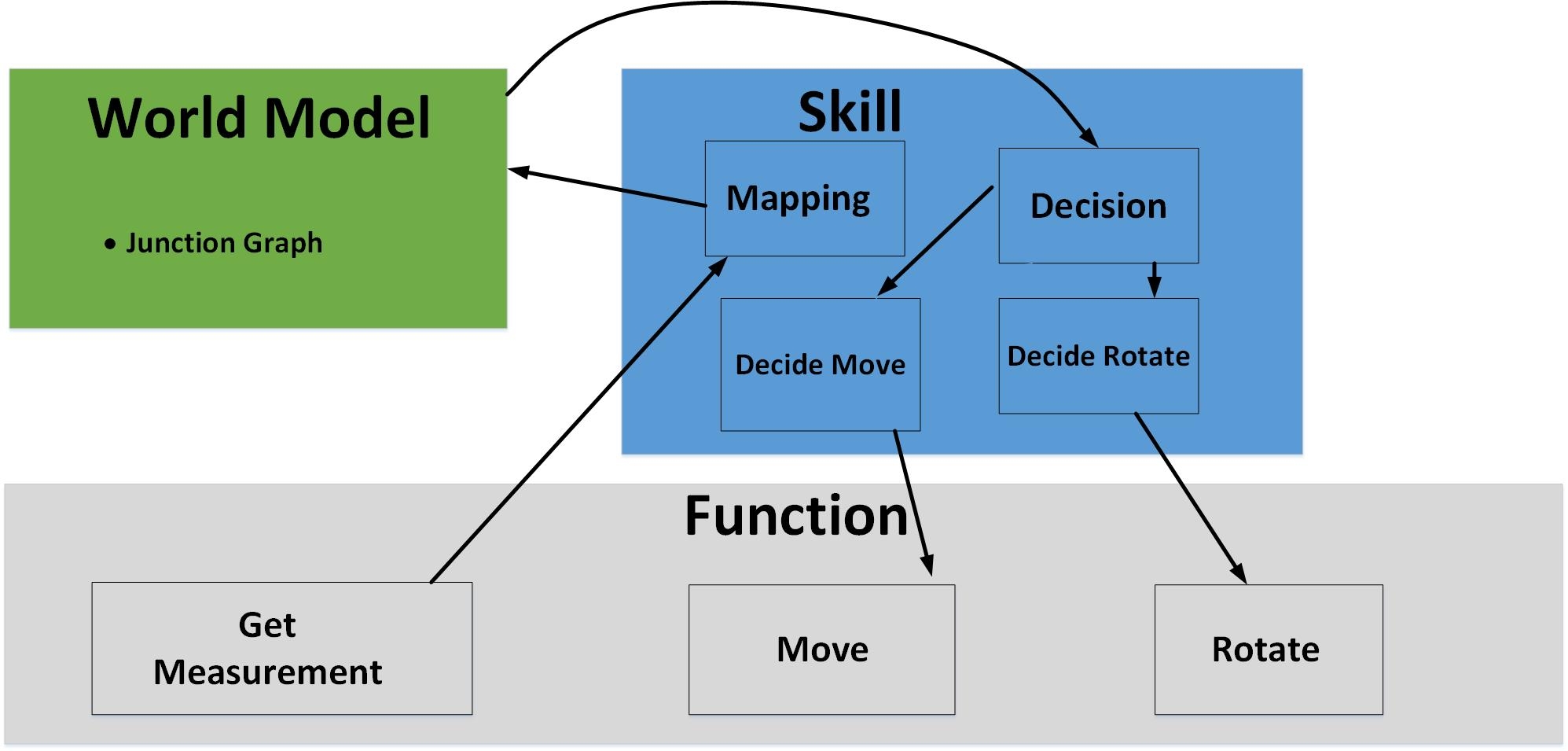

This scheme shows the functions of PICO. The basic skills enable the advanced skills, and both are combined in the main function to complete the maze.

Components

drive control

‐Holonomic base (omni‐wheels)

‐Pan‐tilt unit for head

detection

‐170° wide‐angle camera (Unavailable in this project)

‐Asus Xtion Depth sensor (Unavailable in this project)

‐Laser Range Finder (LRF)

‐Wheel encoders (odometry)

world model

computer

‐Intel I7

‐Ubuntu 14.04

Specifications

Interfaces

The odometer and LRF generate data for mapping the environment.

The algorithm sets nodes at the junctions as setpoints for navigation, plans the route, and drives the actuators accordingly.

The odometer and LRF continuously track the environment, and the software recognizes obstructions, junctions, and dead ends that might be doors.

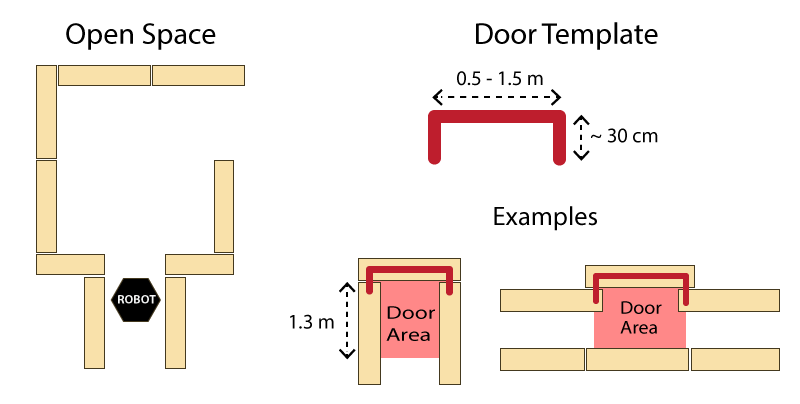

Corridor Challenge

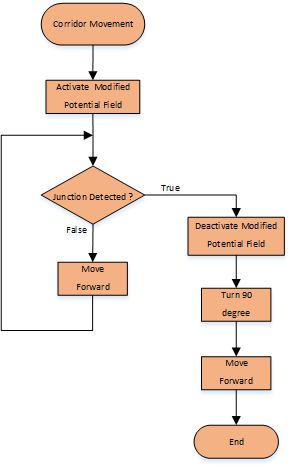

Design

At first, PICO moves forward using a modified potential field.

When it detects the junction, the potential field is switched off and PICO stops once it is in the middle of the junction.

PICO then rotates 90 degrees, moves forward, and finishes the challenge.

modified potential field

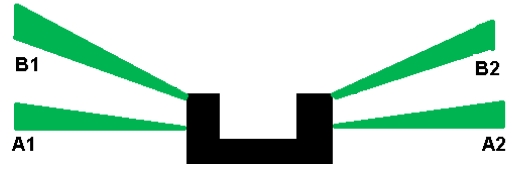

Laser beams A1 and A2 are used to check the relative distance. Based on this relative distance, the robot will be directed to the middle of the corridor by giving both a sidewards and rotational velocity.
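The centring behaviour described above can be sketched as a simple proportional controller. This is only an illustration under stated assumptions, not the group's potential field code: the struct `CenteringCommand`, the function `centeringCommand`, and all gains and speed limits are hypothetical, and A1 and A2 are assumed to be the distances to the left and right wall respectively.

```cpp
#include <algorithm>

// Hypothetical command: sideways velocity [m/s] and rotational velocity [rad/s].
struct CenteringCommand {
    double vy;      // positive = move to the left
    double vtheta;  // positive = rotate towards the left
};

// Assumption: a1 is the distance to the left wall and a2 the distance to the
// right wall, both in metres. Gains and limits are illustrative values only.
CenteringCommand centeringCommand(double a1, double a2) {
    const double kSide = 0.5;    // hypothetical sideways gain
    const double kRot = 0.5;     // hypothetical rotational gain
    const double maxSide = 0.2;  // hypothetical sideways speed limit [m/s]
    const double maxRot = 0.2;   // hypothetical rotation speed limit [rad/s]

    // Positive error: more room on the left, so PICO is too close to the right wall.
    const double error = a1 - a2;

    CenteringCommand cmd;
    cmd.vy = std::max(-maxSide, std::min(maxSide, kSide * error));
    cmd.vtheta = std::max(-maxRot, std::min(maxRot, kRot * error));
    return cmd;
}
```

With equal distances the command is zero, so PICO keeps driving straight along the centre line; a larger imbalance saturates at the speed limits instead of producing an aggressive correction.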

potential field

junction detection

Laser beams B1 and B2 are used to check whether a junction exists. When a junction is detected, the potential field is switched off and PICO waits for A1 and A2 to detect the junction as well, which ensures that PICO can make the turn after rotating 90 degrees.

junction detection

Result

The corridor challenge failed.

In the first trial, PICO moved straight forward without the potential field.

When it detected the junction, it stopped and rotated 90 degrees in the wrong direction.

This inevitably resulted in a crash into the wall.

In the second trial, which ran the latest version of the program, PICO did not detect the junction and drove straight ahead.

Evaluation

Maze Challenge

Design

Architecture

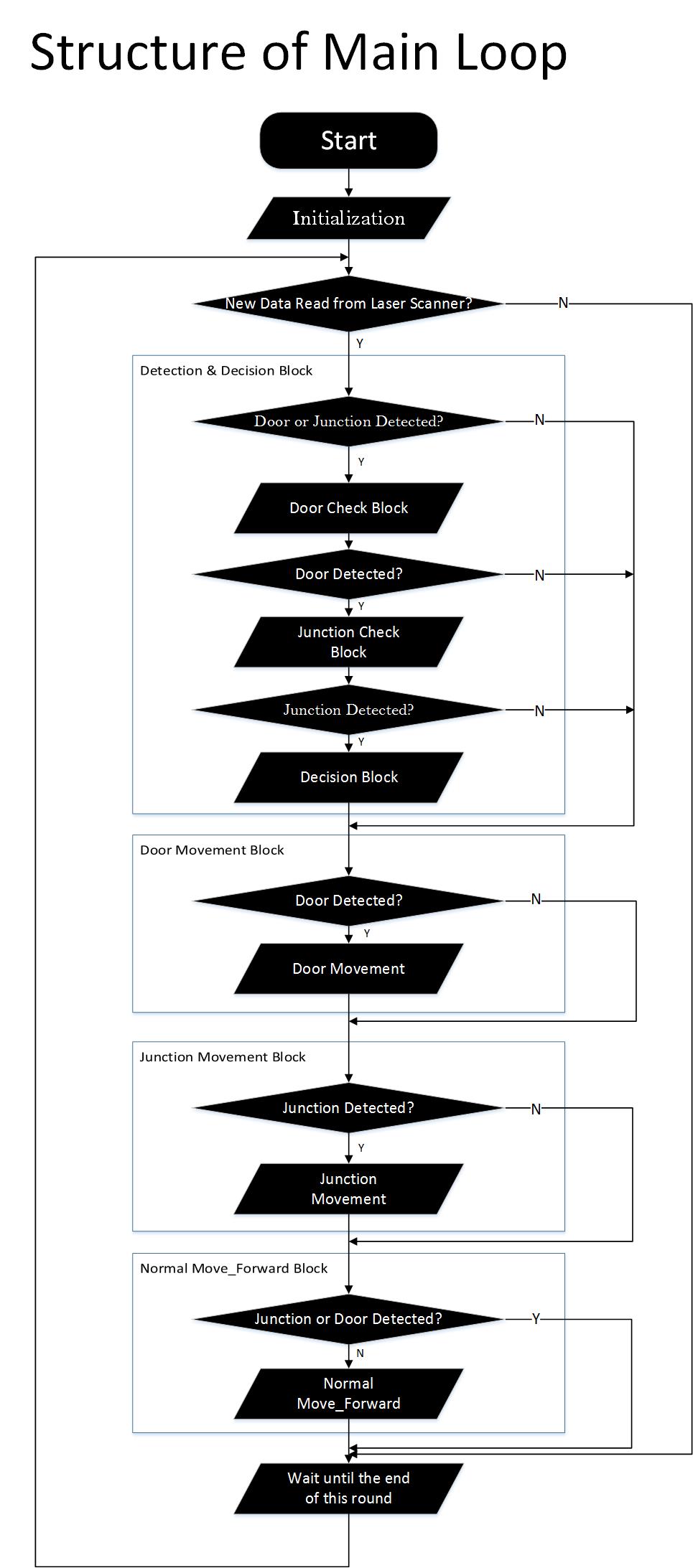


Main Flow

Detection

Junction Detection

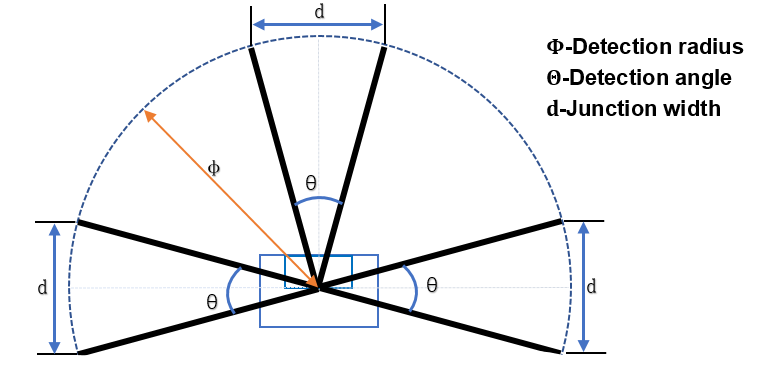

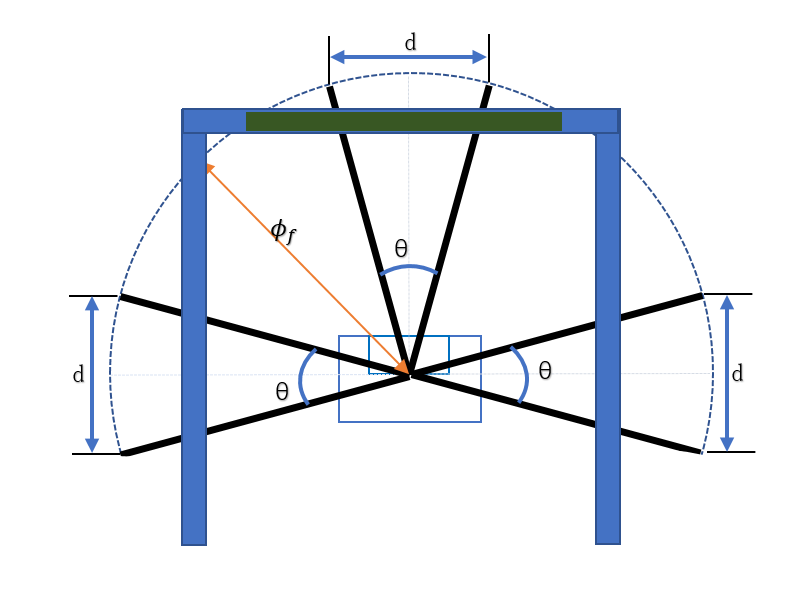

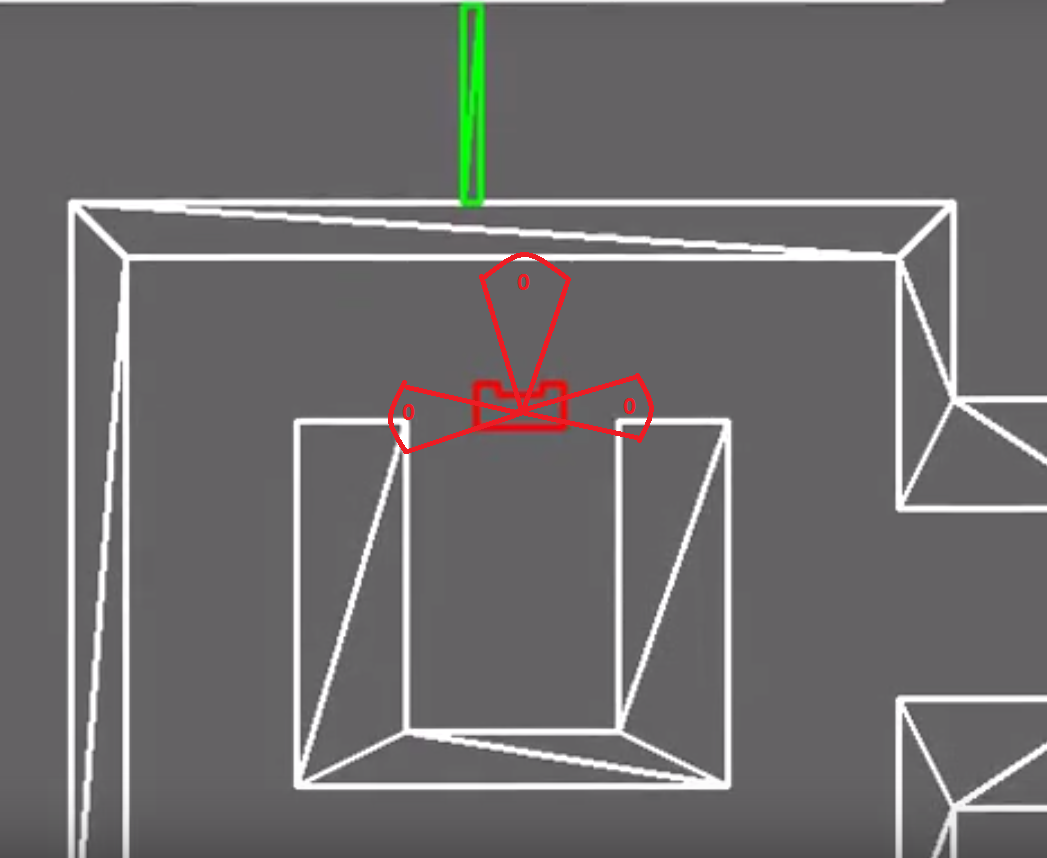

As the following picture shows, three laser bundles, in the forward, left and right directions, are used to detect a junction. To detect junctions reliably, the detection radius and detection angle must be chosen carefully. The detection angle is set to 24° on either side of each bundle's centre line: the maximum detection range of the LRF is ±114°, so a side bundle centred at ±90° can extend at most 24° to either side.

As a result, the boundaries of three laser bundles are:

- Left bundle: +114° and +66°

- Forward bundle: +24° and −24°

- Right bundle: −114° and −66°

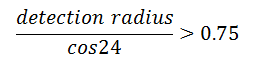

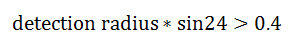

The width of the corridor is between 0.5 m and 1.5 m. To detect a junction in the widest corridor, the following equation needs to be satisfied:

Also, the width of a junction should be larger than PICO's width, so:

These two equations ensure that PICO can find a junction in the widest corridor and can also drive through the junction in open loop. Finally, the detection radius is set to 0.85 m.

To find a junction, both boundary beams of a laser bundle must measure a distance longer than the detection radius. To make the detection more robust, all beams inside the bundle are then checked: if at least 80% of them measure a distance longer than the detection radius, PICO considers a junction to be present.
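The two-step bundle test can be sketched as below. This is a minimal sketch, not the code from junction_detection.cpp: the function name `bundleIsOpen` and the vector representation of a bundle are assumptions, and the 80% threshold is checked with integer arithmetic to avoid rounding issues.

```cpp
#include <cstddef>
#include <vector>

// Returns true when a laser bundle indicates an opening.
// `ranges` holds the distances [m] of the beams in one bundle, ordered from
// one boundary beam to the other (hypothetical representation).
bool bundleIsOpen(const std::vector<double>& ranges, double radius = 0.85) {
    if (ranges.size() < 2) return false;

    // Step 1: both boundary beams must be longer than the detection radius.
    if (ranges.front() <= radius || ranges.back() <= radius) return false;

    // Step 2: at least 80% of all beams in the bundle must be longer too.
    std::size_t longer = 0;
    for (double r : ranges) {
        if (r > radius) ++longer;
    }
    return 5 * longer >= 4 * ranges.size();
}
```

Checking the boundary beams first is cheap and rejects most non-junction scans before the full bundle is counted.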

Two flags are used to describe the existence and the features of junctions: the junction flag and the direction flag.

Junction flag:

- The junction flag describes whether a junction exists

- 0: no junction or only forward junction

- 1: existence of left or right junction

Direction flag:

- The direction flag D[3] is an array in which each element represents a direction

- D[0]=true: right direction available

- D[1]=true: forward direction available

- D[2]=true: left direction available

If the junction flag becomes 1, the decision block is triggered and chooses a direction to move in based on the direction flag.
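The two flags can be derived from the three bundle results as in the following sketch. The struct `JunctionResult` and the function `makeJunctionResult` are hypothetical names introduced for illustration; only the meaning of the flag values comes from the description above.

```cpp
// Hypothetical container for the junction flag and the direction flag D[3].
struct JunctionResult {
    int junctionFlag;   // 0: no junction or only forward; 1: left or right junction
    bool direction[3];  // D[0] right, D[1] forward, D[2] left
};

// Builds the flags from the open/closed result of each laser bundle.
JunctionResult makeJunctionResult(bool rightOpen, bool forwardOpen, bool leftOpen) {
    JunctionResult result;
    result.direction[0] = rightOpen;
    result.direction[1] = forwardOpen;
    result.direction[2] = leftOpen;
    // A forward opening alone is just the corridor continuing, so it does not
    // raise the junction flag.
    result.junctionFlag = (leftOpen || rightOpen) ? 1 : 0;
    return result;
}
```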

Door Detection

There are two kinds of door: front doors and side doors.

- Front door detection method

The distances in three ranges are checked. Each range is defined by an angle θ=48° and a detection radius φf.

We treat a front door as a dead end, so the detection checks whether there is a dead end. If the average distance in each of the three detection ranges is smaller than φf, there is a dead end and possible_door_flag=2 is returned (meaning there is a front door).
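The dead-end test can be sketched as follows. This is an illustration under assumptions: the three ranges are taken to be the left, forward and right ranges, the value of φf is passed in as `phiF` because the text does not state it, and the return value 0 for "no front door" is a placeholder that does not come from the original code.

```cpp
#include <numeric>
#include <vector>

// Average distance [m] over the beams of one detection range.
// The caller must pass a non-empty vector.
double averageRange(const std::vector<double>& ranges) {
    return std::accumulate(ranges.begin(), ranges.end(), 0.0) /
           static_cast<double>(ranges.size());
}

// Returns 2 (possible front door) when the average distance in all three
// ranges is below phiF, otherwise 0 (hypothetical "no front door" value).
int frontDoorFlag(const std::vector<double>& left,
                  const std::vector<double>& forward,
                  const std::vector<double>& right, double phiF) {
    if (left.empty() || forward.empty() || right.empty()) return 0;
    const bool deadEnd = averageRange(left) < phiF &&
                         averageRange(forward) < phiF &&
                         averageRange(right) < phiF;
    return deadEnd ? 2 : 0;
}
```

Averaging over each range makes the test tolerant of a single outlier beam, at the cost of possibly missing a narrow gap inside an otherwise closed range.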

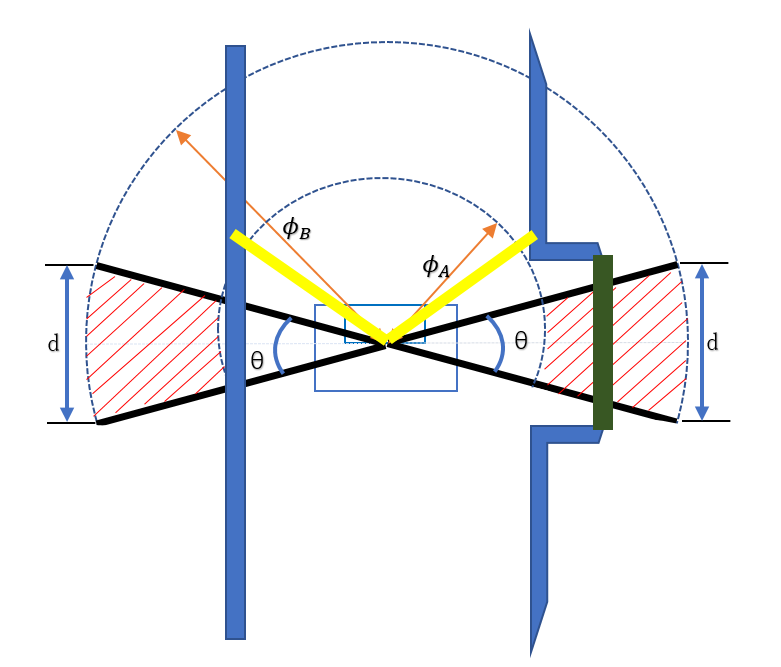

- Side door detection method

The left and right side detection ranges are used to detect a side door. Given the restrictions that the depth of the door template is around 0.3 m and the corridor width is between 0.5 m and 1.5 m, we use an annulus with limits φA=0.7 m and φB=1.3 m to define a possible door (the red shading in the figure). To keep the robot from getting trapped in a side door detection loop, we also require the measurement at about 45° to the front to be smaller than φC=1 m (the yellow line in the figure).

For example, consider the right door in the figure. First, check whether the two edge beams, data.212 and data.2 (±24°), lie in the red shaded region and whether the measurement at 45° to the front (laser data.303) is smaller than φC. If this first condition is satisfied, check the second condition on all the other beams between data.212 and data.2: if 75% of them satisfy the length condition (lie in the red shaded region), there is a right side door and possible_door_flag=1 is returned (for a left door, possible_door_flag=3).
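The two-condition side door test can be sketched as below. The function names, the vector layout of the side bundle and the return value 0 for "no door" are assumptions made for this illustration; the thresholds φA, φB, φC and the flag values 1 and 3 come from the description above.

```cpp
#include <cstddef>
#include <vector>

// True when a range lies inside the annulus [phiA, phiB].
bool inAnnulus(double range, double phiA, double phiB) {
    return range >= phiA && range <= phiB;
}

// Returns `sideFlag` (1 for the right side, 3 for the left side) when a side
// door candidate is found, otherwise 0 (hypothetical "no door" value).
// `side` holds the beams of the side bundle from one edge beam to the other;
// `front45` is the range at about 45 degrees to the front on that side.
int sideDoorFlag(const std::vector<double>& side, double front45, int sideFlag,
                 double phiA = 0.7, double phiB = 1.3, double phiC = 1.0) {
    if (side.size() < 2) return 0;

    // First condition: both edge beams in the annulus, 45-degree beam short.
    if (!inAnnulus(side.front(), phiA, phiB)) return 0;
    if (!inAnnulus(side.back(), phiA, phiB)) return 0;
    if (front45 >= phiC) return 0;

    // Second condition: at least 75% of the beams between the edges lie in
    // the annulus (integer arithmetic avoids rounding issues).
    const std::size_t inner = side.size() - 2;
    std::size_t hits = 0;
    for (std::size_t i = 1; i + 1 < side.size(); ++i) {
        if (inAnnulus(side[i], phiA, phiB)) ++hits;
    }
    return (4 * hits >= 3 * inner) ? sideFlag : 0;
}
```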

Door Open Detection

We use a similar method to detect whether the door has opened after 5 seconds, using the forward laser bundle. If both boundary beams measure a distance longer than the detection radius, and more than 75% of the laser beams inside the bundle also measure longer than the detection radius, PICO considers the door to be open.

Open Space Detection

Open Space Detection

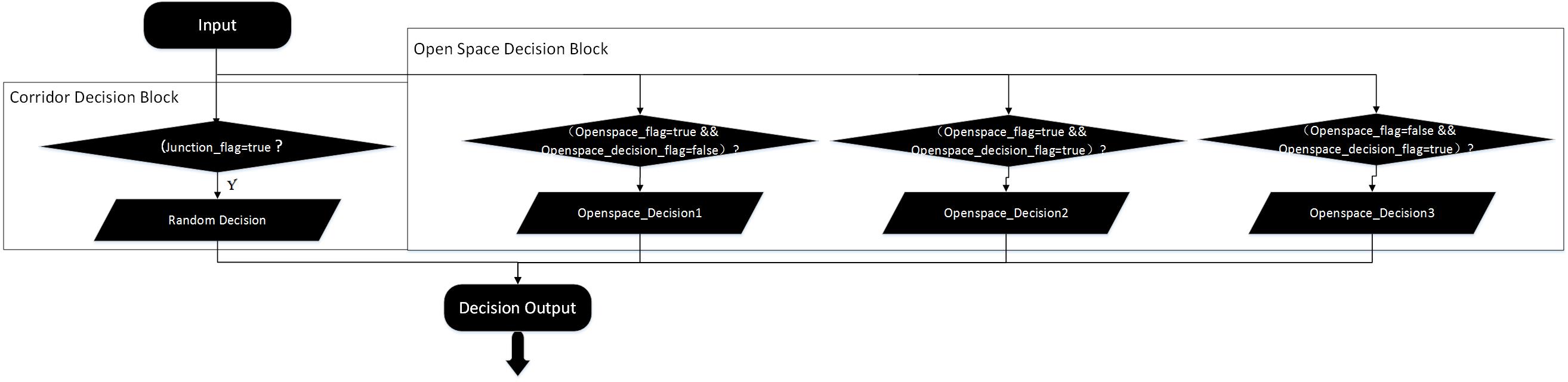

Decision Block

- The Decision Block consists of two sub-blocks: the “Corridor Decision Block” and the “Open Space Decision Block”. They handle decisions in corridors and in open space respectively. Switching between the two sub-blocks is based on the value of “openspace_flag”, which indicates the existence of open space.

- As shown in “Figure2 Flow-Chart of Decision block”, when the “Decision Block” is executed, the program is directed to one of the sub-blocks. The variables that steer this choice are provided by the “junction_detection” block. By the internal logic of the “junction_detection” block, “junction_flag” and “openspace_flag” cannot be true at the same time, which ensures that the “Decision” block cannot execute the “Corridor Decision Block” and the “Open Space Decision Block” in the same round.

- The detailed decision logic is explained below:

- The “Decision Block” is called after the “Detection Block” (junction_detection & door_check) has been executed and has provided the information indicating the existence of a door, a junction, or open space. The values of junction_flag, openspace_flag and openspace_decision_flag are checked.

- Junction Mode: When junction_flag is true, a junction has been found and, because the junction indicator and the open space indicator are mutually exclusive, there is no open space. The Decision Block then goes to the “Corridor Decision Block”, which makes an arbitrary choice between the available corridor directions.

- Open Space Mode: If open space is detected, the “Open Space Decision Block” is executed, and different priorities are given to the different choices. “Turning left” has the highest priority, so the availability of a corridor on the left side of the robot is checked first, then the forward direction, and finally the right side. With this order, the robot follows the left wall in open space. The second and third sub-blocks of the “Open Space Decision Block” differ in the move-forward mode they use. When “openspace_flag==true && openspace_decision_flag==true” holds, the robot is moving within the open space or has just entered it. In these two circumstances, the forward movement is controlled in closed loop to avoid bumping into the wall. When “openspace_flag==false && openspace_decision_flag==true” holds, the robot is about to reach the exit of the open space. Because open space is detected ahead of the robot, the open space indicator has already been reset at this point. The variable openspace_decision_flag is declared to ensure that the robot can move to the middle line of the exit before making the next decision. This variable distinguishes the last forward movement before leaving the open space from the other forward movements in the open space: the last forward movement is open-loop controlled and moves the robot forward by 0.5 meters. The “Protection Block” is executed all the time, which protects the robot from bumping into walls during open-loop procedures.

- The first sub-block in the "Open Space Decision Block" gives the movement command "Turning left" and sets the variable "openspace_decision_flag" at its end, which ensures that it is executed only once in every open space, at the moment the robot enters the open space.

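The way the three flags select a sub-block can be sketched as a small state function. This is a simplified illustration, not the group's Decision Block: the enum `Decision`, the struct `DecisionState` and the function `decide` are hypothetical, and the left/forward/right availability checks inside the open-space sub-blocks are left out.

```cpp
// Hypothetical outcome of one round of the Decision Block.
enum class Decision {
    None,                // nothing to decide this round
    CorridorChoice,      // "Corridor Decision Block" picks a direction
    TurnLeft,            // open-space sub-block 1: entering the open space
    ForwardClosedLoop,   // open-space sub-block 2: moving inside the open space
    ForwardOpenLoopExit  // open-space sub-block 3: last 0.5 m step at the exit
};

// Hypothetical container for the flags named in the text.
struct DecisionState {
    bool junctionFlag;
    bool openspaceFlag;
    bool openspaceDecisionFlag;
};

// Selects the sub-block for this round and updates openspaceDecisionFlag.
// junctionFlag and openspaceFlag are assumed to be mutually exclusive.
Decision decide(DecisionState& s) {
    if (s.junctionFlag) return Decision::CorridorChoice;
    if (s.openspaceFlag && !s.openspaceDecisionFlag) {
        s.openspaceDecisionFlag = true;  // sub-block 1 runs once per open space
        return Decision::TurnLeft;
    }
    if (s.openspaceFlag && s.openspaceDecisionFlag) {
        return Decision::ForwardClosedLoop;
    }
    if (!s.openspaceFlag && s.openspaceDecisionFlag) {
        s.openspaceDecisionFlag = false;  // sub-block 3 resets the flag
        return Decision::ForwardOpenLoopExit;
    }
    return Decision::None;
}
```

Calling `decide` repeatedly while the robot crosses an open space yields one left turn on entry, closed-loop forward steps inside, and a single open-loop step at the exit, after which the state is back to normal corridor driving.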


Movement

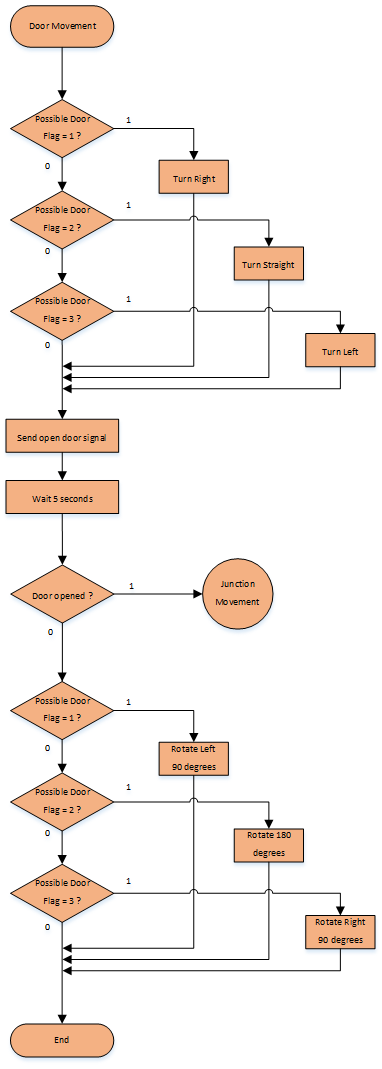

Door Movement

code snippet: door_movement.cpp

Junction Movement

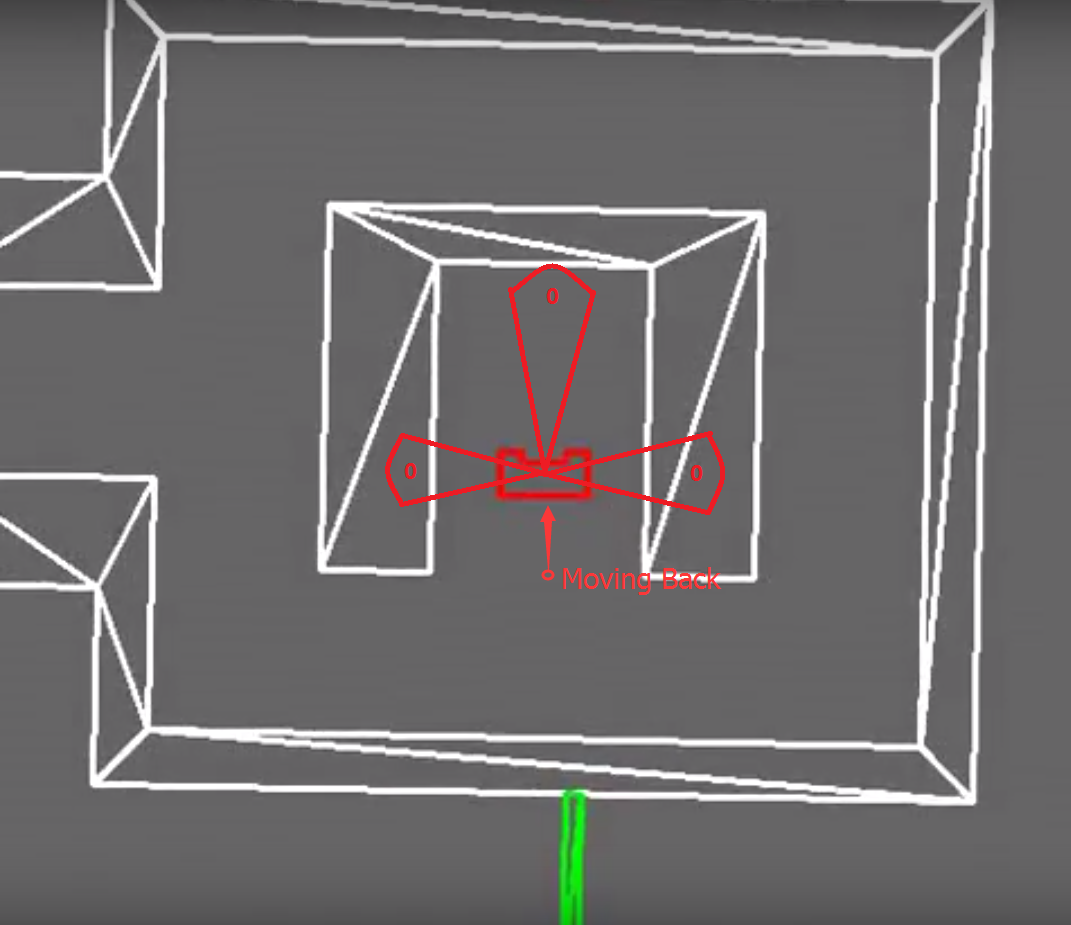

The Junction Movement function consists of five cases: turnStraight, turnRight, turnLeft, protection and movForward.

turnStraight:

PICO moves straight for 0.5 m, or until the 3 s counter has expired, and then continues with the movForward state. The counter prevents the program from getting stuck in a loop when PICO is unable to cover the distance. The forward movement is protected by 5 laser sectors. When PICO is too close to a wall or obstruction in front, it moves backwards at 0.2 m/s (a forward velocity of -0.2 m/s). When the laser beams for obstruction detection on the sides of PICO detect that it is too close to a wall, the program enters the protection state and moves PICO away from the obstruction.

turnRight and turnLeft:

Both turnRight and turnLeft rotate PICO 90 degrees in the corresponding direction, using the odometer to measure the rotation and determine when to stop. To keep the odometer accurate enough, the rotation speed is set to 0.2 rad/s.

movForward:

After turnStraight has finished, the code enters the movForward state. PICO moves straight for 5 m or stops when the 5 s counter has expired. This movement is exactly the same as the turnStraight movement except for the distance and the counter time.

protection:

When an obstruction is detected during turnStraight or movForward, PICO stops and moves sideways to avoid the obstruction.
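The stop conditions of these cases can be sketched as two small predicates. This is an illustration, not the content of junction_movement.cpp: the function names are hypothetical, and the odometry yaw is assumed to be reported in radians and to wrap around at ±π.

```cpp
#include <cmath>

// True when a forward step should end: the target distance has been covered
// according to odometry, or the counter time has expired.
bool forwardStepDone(double travelled, double elapsed,
                     double targetDistance, double timeLimit) {
    return travelled >= targetDistance || elapsed >= timeLimit;
}

// Maps any angle [rad] into the interval (-pi, pi].
double wrapAngle(double angle) {
    return std::atan2(std::sin(angle), std::cos(angle));
}

// True when PICO has rotated at least 90 degrees away from `startYaw`,
// in either direction, according to the odometry yaw.
bool quarterTurnDone(double startYaw, double currentYaw) {
    const double quarterTurn = 1.5707963267948966;  // pi / 2
    return std::fabs(wrapAngle(currentYaw - startYaw)) >= quarterTurn;
}
```

For turnStraight the call would be `forwardStepDone(travelled, elapsed, 0.5, 3.0)`, and for movForward `forwardStepDone(travelled, elapsed, 5.0, 5.0)`. Wrapping the yaw difference keeps the turn check correct when the odometry angle jumps from +π to -π during a rotation.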

The protection in junction_movement.cpp and move_forward.cpp is explained here: protection

The code snippet is included below:

code snippet: junction_movement.cpp

Move Forward

Like junction_movement.cpp, move_forward.cpp uses three front laser bundles to adjust the forward speed. The forward speed is 0.5 m/s when the detection allows it and -0.2 m/s otherwise. Unlike junction_movement.cpp, the forward movement does not stop while the sideways movement adjusts to avoid an obstruction. The sideways speed is adjusted using 6 laser bundles, 3 on each side: when an obstruction is detected, PICO moves sideways to avoid it.

code snippet: move_forward.cpp

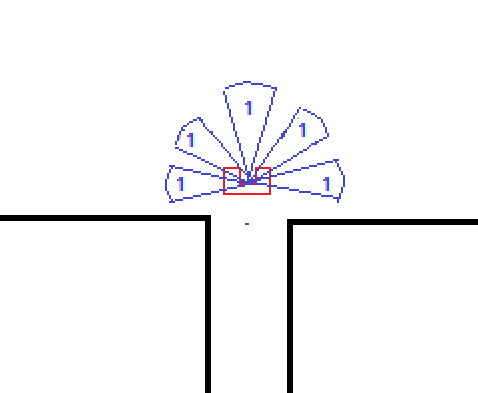

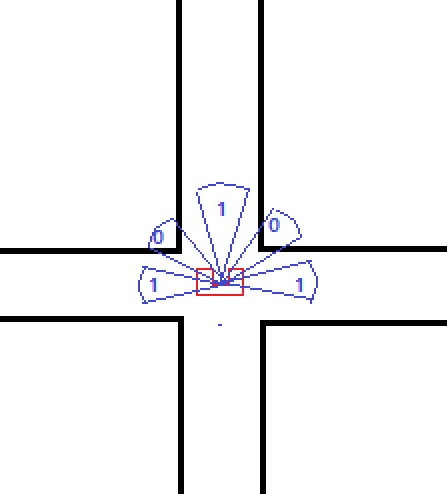

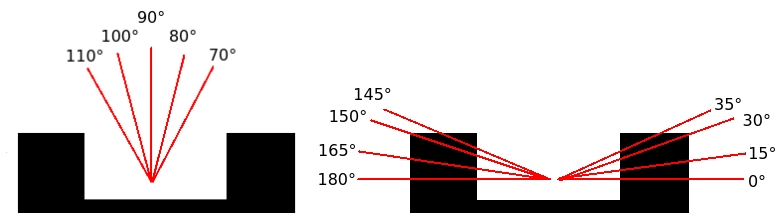

Protection

The picture on the left shows the front detection used to adjust the forward velocity, and the picture on the right shows the side detection used to adjust the sideways velocity. Each laser beam value is the average of 15 points. The forward speed is 0.5 m/s when there is no obstruction in front and -0.2 m/s otherwise. The sideways speed is 0.2 m/s when an obstruction is detected and 0 m/s otherwise. Below is the protection in action to avoid collision.
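The speed selection can be sketched as follows. This is a minimal sketch and not the group's protection code: the names `averagedBeam`, `Velocity` and `protectionVelocity` are hypothetical, the sign convention (positive sideways speed means moving left) is an assumption, and so is the choice to stand still sideways when both sides are blocked.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Mean of up to `count` consecutive laser ranges starting at index `first`.
// Returns 0 when the window is empty.
double averagedBeam(const std::vector<double>& ranges, std::size_t first,
                    std::size_t count = 15) {
    if (first >= ranges.size() || count == 0) return 0.0;
    const std::size_t last = std::min(ranges.size(), first + count);
    double sum = 0.0;
    for (std::size_t i = first; i < last; ++i) sum += ranges[i];
    return sum / static_cast<double>(last - first);
}

// Hypothetical velocity command: forward and sideways speed in m/s.
struct Velocity {
    double vx;  // forward
    double vy;  // sideways, positive = left (assumed convention)
};

// Picks the speeds from the obstruction checks described in the text.
Velocity protectionVelocity(bool frontBlocked, bool leftBlocked, bool rightBlocked) {
    Velocity v;
    v.vx = frontBlocked ? -0.2 : 0.5;
    if (leftBlocked && !rightBlocked) {
        v.vy = -0.2;  // obstruction on the left: move right
    } else if (rightBlocked && !leftBlocked) {
        v.vy = 0.2;   // obstruction on the right: move left
    } else {
        v.vy = 0.0;   // no side obstruction, or both sides blocked (assumption)
    }
    return v;
}
```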

The code snippet for side protection is here: side protection

The code snippet for forward protection is here: forward protection

Open Space

Result

Evaluation

The problem of complexity:

Adding states increases the complexity of the system, which can easily result in undesired behavior, as was observed. PICO can only perform 20 tasks per second while moving at 0.5 m/s; combined with the many states in the program, this can lead to further undesired behavior.

[mention the problems here or in the blocks itself?]

Right now, states are used to prevent undesired behavior. This approach requires creating repeated states with different conditions, which in itself increases the complexity.

It is proposed to cut down the number of states and focus only on the key tasks: a moving block, a detection block, a door handling block and a maze solving block. A supervisory controller can be used to prevent undesired behavior.

The problem of open loop:

where do we have open loop. what problems , how to solve.

The problem of junction detection: use node is more robust--> make lines

Evaluation group9 EMC 2017

Code snippets

corridor challenge

potential field

junction detection

maze challenge

Door Movement

code snippet: door_movement.cpp

Movement

code snippet: move_forward.cpp

code snippet: junction_movement.cpp