PRE2015 4 Groep1: Difference between revisions


Revision as of 11:33, 19 June 2016

Group members

- Laurens van der Leden - 0908982

- Thijs van der Linden - 0782979

- Jelle Wemmenhove - 0910403

- Joshwa Michels - 0888603

- Ilmar van Iwaarden - 0818260

Project Description

The aim of this project is to create an anthropomorphic robot that can be used to hug others when separated by a large distance. This robot will copy, or shadow, a hugging movement performed by a human using motion capture sensors. To realize this goal, the robot AMIGO will (if allowed and possible) perform the hugs, while the commands are generated using Kinect sensors that capture the movement of a human.

USE Aspects

Before designing the hugging robot it is important to analyze the benefits for, and the needs of, the users, society and enterprises. What might drive them to invest in the technology, and what are their needs and wishes?

Who are the USE?

- Primary users: As the hugging robot's intended use is to connect people who are separated from each other, the primary users will be people separated from their loved ones for a longer period. As such, the primary users will mainly be elderly people, distant relatives or friends, and children or students.

- Secondary users: As primary users want to use the hugging robot, the secondary users will be institutions where many primary users can be found. As such, hugging robots will be used by nursing or care homes, hospitals, private or boarding schools, and educational institutions that host many international students, such as universities.

- Tertiary users: The hugging robot will probably be in high demand and will be used frequently, possibly by many different people. Therefore there will be a demand for maintenance, and the tertiary users will be the maintenance staff.

- Society: As the hugging robot will be placed in many government institutions, national and local governments will be the ones that distribute the technology.

- Enterprise: The enterprises that will benefit from the hugging robot are the companies that help produce it. As such, virtual-reality enterprises and robot-producing companies stand to benefit from this technology.

What are the needs of the USE?

- Primary user needs: As a large part of the primary users might find new technology complicated or intimidating, the hugging robot has to be safe, both physically and psychologically, and easy to use. The fact that people are hugging the robot requires that it is comfortable to touch.

- Secondary user needs: As the secondary users are likely to have more than one robot, they would prefer a relatively low price. As they probably cannot afford to train people to become experts with the robot, the robot has to be easy to install and use. An educational institution could reserve a room for the hugging robot, but in a hospital or nursing home the patient might not be able to move. In that case it must be possible to move the robot to the patient, so the robot must not be too big or too heavy. Because multiple people will use one robot, it may be desirable for the appearance of the robot to be adaptable.

- Tertiary user needs: As the robot has to be relatively cheap, its maintenance cannot be very intensive. This requires the robot to be easy to clean, and broken hardware and software to be easily accessible and replaceable.

- Society needs: The hugging robot will be a device to connect people over large distances, in a better way than modern communication devices can. As such it will fight against loneliness and help strengthen family values.

- Enterprise needs: For the companies it is vital that the hugging robot will make a profit and to achieve this, the robot must be cheap to produce.

How can we incorporate these needs into the project?

- Safety: In order not to harm the primary users, the robot has to have pressure sensors to make sure the hug is comfortable and not painful. As an approaching robot might be frightening, the robot cannot give a hug until the user allows it. And by giving the robot an easy-to-reach kill switch, the user will not be trapped if the robot malfunctions.

- Comfortable: To make sure the user will enjoy the hug, the robot has to have a soft skin, which might be made of cushions, and cannot be cold to the touch. By giving the robot a tablet, which might show a photo of the relative, and by using a voice similar to that of the relative, we hope to make the user more at ease when alone with the robot. Dressing the robot in clothes and playing background music or sounds can add to that effect. Giving the robot's interface two separate buttons, one for the phone function and one for movement activation, lets the user choose whether or not the robot should hug them and gives the user the sense of being in control.

- Easy to use: As most people are already familiar with telephone functions, we want to design an interface that is as simple as that.

- Adaptable appearance: The robot can have a set of clothes and/or different skins to adapt to different situations.
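The safety points above (pressure sensing, explicit permission and a kill switch) can be sketched as a simple decision rule. This is only an illustration and not part of the project's code: the names `RobotState` and `hug_command`, the command strings and the pressure threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical comfort limit; a real value would have to come from user tests.
MAX_PRESSURE_KPA = 5.0


@dataclass
class RobotState:
    user_consented: bool       # the user pressed the "allow hug" button
    kill_switch_pressed: bool  # the easy-to-reach emergency stop
    pressure_kpa: float        # highest reading over all pressure sensors


def hug_command(state: RobotState) -> str:
    """Return the arm command for the current state."""
    if state.kill_switch_pressed:
        return "release"  # the kill switch always takes priority
    if not state.user_consented:
        return "wait"     # never start a hug without permission
    if state.pressure_kpa >= MAX_PRESSURE_KPA:
        return "hold"     # stop tightening once the hug is firm enough
    return "close"        # keep closing the arms gently
```

The checks are ordered by priority, so a pressed kill switch releases the user regardless of consent or sensor readings.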

Planning

Week 1

Idea in one sentence: A robot that can imitate arm movements remotely, where we mainly focus on a hugging robot (i.e. a robot that can give hugs from a distance).

Type of robot: AMIGO hugging robot

Sub-questions/challenges: 1. How is the force that the AMIGO/robot applies to the hugged person fed back to the hugging person (pressure sensors, real time?)?

2. How do you register the movement of the hugging person (Kinect)?

3. Lkj

Action items:

1. Email about the exact content of the presentation next Monday (25-4-2016) - Present the specific idea - USE aspects already? - Give an idea of the end product - Literature already?

2. Look up literature/state of the art: Laurens and Thijs

3. Scenario/situation sketch: Jelle

4. Write the minutes of the brainstorm session: Joshwa

5. Set up the wiki page: Joshwa

Week 2

We continued to discuss our idea of a robot capable of shadowing a hugging motion using Kinect. This week we contacted someone from TechUnited and asked if it would be possible to use their robot AMIGO for our project.

Week 3

During our presentation on Monday 2-5-2016 the teachers indicated that our plan still lacked clarity and that our USE aspects were missing. Afterwards we discussed this with the group for a few hours and then divided the tasks.

After we sent a second email to the people from TechUnited on the Monday, we were invited to come to the robotics lab in the building "Gemini-Noord" on Tuesday evening to discuss our idea. While the initial plan was to work with AMIGO from the start if possible, our plan changed a little. The people from TechUnited strongly advised us to create a virtual simulation first (something that is done there a lot to test scenarios) before considering applying anything to the real AMIGO. If we could get the simulation to work properly, we could consider trying the real AMIGO. The people from the lab told us which software and systems to use to make such a simulation.

Jelle, Joshwa and Laurens have discussed the matter, since they will be working on the simulation. The three have installed some of the software and have started working with it.

Thijs has worked out the USE aspects in order to clarify what can be done to take USE into account for our robot. (If I forgot anything, please add what is missing.)

Ilmar has started working on the literature research regarding: State-of-the-art, User requirements of elderly people, ways of making a human-like presence

Week 4

Jelle, Joshwa and Laurens will work through tutorials to get to know the programming software used to make an AMIGO-simulation.

Week 5

Jelle, Joshwa and Laurens will finish the tutorials this week and hope to lay the groundwork for the AMIGO-simulation.

Week 6

Jelle, Joshwa and Laurens will work on the AMIGO-simulation this week.

Week 7

Jelle, Joshwa and Laurens hope to finish the AMIGO-simulation this week. If possible they can apply it on the real AMIGO.

Week 8

Jelle, Joshwa and Laurens will, if possible, run tests with the real AMIGO using the AMIGO-simulation and prepare the final demonstration with either the AMIGO-simulation or AMIGO itself.

Ilmar will work on and finish the slides for the final presentation.

Week 9

The Wiki will receive its final update this week. The course-related presentations suggest that the final presentation is this week. Depending on the exact date, this week will serve as a buffer to run some final tests with either AMIGO or the AMIGO-simulation itself.

Milestones project

Robot building/modifying process

1. Get robot skeleton

We have to acquire a robot mainframe we can modify in order to make a robot that has the functions we want it to have. Building an entire robot from scratch is not possible in eight weeks. If the owners allow us we can use the robot Amigo for this project.

2. Learn to work with its control system

Once we have the “template robot” we have to get used to its control system and programming system. We must know how to edit and modify things in order to change its actions.

3. Get all the required materials for the project

A list has to be made that includes everything we need to order or get elsewhere to execute the project. Then everything has to be ordered and collected.

4. Write a script/code to make the AMIGO do what you want

We will have to program the robot or edit the existing script of the robot to make it do what we want. This includes four stages:

4a Make it perform certain actions by giving certain commands

We must learn to edit the code to make sure the robot executes certain actions by entering a command directly.

4b Make sure these commands are linked to Kinect

Once we have the robot reacting properly on our entered commands we have to make sure these commands are linked to Kinect. We must ensure that the robot executes the action as a result of our own movements.

4c Include a talk function that gives the robot a telephone function

The robot must be equipped with a function that reproduces the words spoken by the person controlling it, like a telephone.

4d Make sure the robot is fully able to hug at will (is presentable to the public)

After the robot is Kinect-driven we must modify it so that it works fully according to plan. In this case it must be able to perform hugs exactly as we want it to, as a real shadow of ourselves.

Wiki

5. Have a complete wiki page of what was done

This milestone means that we simply have to possess a wiki page which describes our project well.

Literature

6. State of the art

Find useful articles about existing shadow robotics and hugging robots.

Evaluation

Completed Milestones

Most of the milestones were completed while the project was in progress. We managed to make a deal with Tech United, who allowed us to use their robot AMIGO for the project but instructed us to practice and test with a simulator first, before applying our code and scripts to the real AMIGO. With that we had our robot (1).

Over the course of the weeks we learned to work with the Robot Operating System, or ROS for short, the software framework used for AMIGO, or at least with the functions we needed to proceed (2). As for materials, we had most of what we needed once we had acquired the AMIGO files and installed ROS. The only other things we needed in the end were a Kinect and software that could process the data captured by the Kinect. Since we would mainly use software and one of our group members, Jelle, had a Kinect at home, we didn't need to order any further materials (3).

Once we had learned how to work with the simulator, we learned several commands that could make the robot perform certain actions (4a). We could later link these commands to data captured by the Kinect and passed on to ROS (4b). This allowed us to let the robot shadow actions performed by a person standing in front of the Kinect, albeit only for the arms and with some delay due to the internet connection (4d).
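As an illustration of step 4b, the following is a minimal sketch of one way Kinect skeleton data could be turned into an arm joint command. It is not the code used in the project: the function names, the elbow limits and the example coordinates are all assumptions, and the actual link to ROS and AMIGO is left out.

```python
import math


def joint_angle(a, b, c):
    """Return the angle (radians) at point b between the segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0.0:
        raise ValueError("joint positions must not coincide")
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def clamp(value, lower, upper):
    """Keep a commanded angle inside the robot's joint limits."""
    return max(lower, min(upper, value))


# Hypothetical elbow limits in radians (not AMIGO's real limits).
ELBOW_MIN, ELBOW_MAX = 0.0, 2.2

# Hypothetical 3D joint positions (metres) as a skeleton tracker might report them.
shoulder = (0.0, 0.0, 0.0)
elbow = (0.3, 0.0, 0.0)
wrist = (0.3, 0.3, 0.0)

# A straight arm has an interior angle of pi, so flexion is pi minus that angle.
flexion = math.pi - joint_angle(shoulder, elbow, wrist)
command = clamp(flexion, ELBOW_MIN, ELBOW_MAX)
```

Clamping before sending a command is a cautious choice: a tracking glitch then cannot ask the arm to move beyond its allowed range.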

At the end of the project we put every part of information concerning our project we deemed important on the Wiki in order to give a good view of what we had done in our project (5).

Ilmar and Thijs spent a lot of time searching for literature and articles about our subject and about what already existed in this area of robotics. Some of these articles proved useful for our project, or at least for the description of the idea (6). These articles can be found under Research.

Failed Milestones

We did not manage to include the telephone function in our prototype, mainly because other parts of the design had more priority and we were running out of time (4c). While the telephone function was not the most important thing we wanted to include it is certainly a part of the project that should be included in a more advanced version of our prototype.

Conclusion regarding Milestones

Overall, nearly all our milestones were completed with relative success during the course of the project, the sole exception being the telephone function. It is unfortunate that this milestone was not completed, but it did not ruin the project, as we still had something fun to demonstrate to the public and learned a lot from the project. Failing to complete any of the other milestones would have caused significantly bigger problems for the project.

Research

State of the art

- Telenoid: The Telenoid robot is a small white human-like doll used as a communication robot. The idea is that random people and caregivers can talk to the elderly people from a remote location using the internet, and brighten the day of the elderly people by giving them some company. A person can control the face and head of the robot using a webcam, in order to give people the idea of a human-like presence. --[1 Telenoid]

- Paro: Paro is a simple seal robot that is able to react to its surroundings, using minimal movement and showing different emotional states. It is used in nursing homes to improve the mood of elderly people and thereby reduce the workload of the nursing staff. Paro proved not only that a robot is able to improve the mood of elderly people, but also that a robot is able to encourage more interaction and more conversations.

- Telepresence Robot for interpersonal Communication (TRIC): TRIC is going to be used in a nursing home for the elderly. The goal of TRIC is to allow elderly people to maintain a higher level of communication with loved ones and caregivers than via traditional methods. The robot is small and lightweight so that it is easy for elderly people to use, and it uses a webcam and an LCD screen.

Interesting findings from the literature research:

- Ways to create a human-like presence:

- Using a soft skin made of materials such as silicone and soft vinyl.

- Using humans to talk (teleoperate) instead of using a chat program, so the conversations are real and feel real.

- Unconscious motions such as breathing and blinking are generated automatically to give a sense that the android is alive.

- Minimal human design, so it can be any kind of person the user wants it to be, using imagination. (Male/female, young/old, known person/unknown person)

- From the participant’s view, the basic requirement for interpersonal communication using telepresence is that the participants must realize whom the telepresence robot represents. The two main options are using an LCD screen or creating mechanical facial expressions. (Mechanical facial expressions increase humanoid characteristics and therefore encourage more communication.)

- User requirements for the elderly:

- Affordable

- Easy to use:

- Lightweight

- Easy / Simple Interface

- Automatic recharging

- Loud speakers (capable of 85 dB), because elderly people prefer louder sounds for hearing speech.

- Maximum speed of 1.2 m/s (average walking speed)

- First of all, it is important to know that whenever someone has a negative attitude towards robots, the robot will feel less human-like and the experienced social distance between humans and embodied agents will increase. Secondly, a proactive robot in this study was seen as less machine-like and more dependable when the interaction was complemented with physical contact between the human and the agent. Whenever people have a positive attitude towards robots and the robot is proactive, the experienced social distance between humans and agents will decrease.

- Both the robots Paro and Telenoid proved that elderly people are able to accept robots. (9/10 of the people who used Telenoid accepted it, and thought the robot was cute)

- Both the robots Paro and Telenoid proved that robots are able to improve the mood of elderly people, by encouraging them to have more conversations.

Articles

- Telenoid

Telenoid 1 https://www.ituaj.jp/wp-content/uploads/2015/10/nb27-4_web_05_ROBOTS_usingandroids.pdf

This article is about the Telenoid robot. Here they mention that an ageing society with increasing loneliness is becoming a problem, “These days, the social ties of family, neighbors and work colleagues do not bond people together as closely as they used to and as a result, the elderly are becoming increasingly isolated from the rest of society. When elderly people become more isolated, they can lose their sense of purpose, become more susceptible to crime, and may even end up dying alone. Preventing isolation is essential if we are to create a safe and secure environment in the super-ageing society that Japan is having to confront ahead of any other country.”

In order to confront this isolation problem they developed the robot Telenoid, a communication robot. The idea is that random people and caregivers can talk to the elderly people from a remote location using the internet, and brighten the day of the elderly people by giving them some company. A person can control the face and head of the robot using a webcam, in order to give people the idea of a human-like presence.

These articles were useful because they gave us some interesting USE aspects we could implement in our hugging robot, such as things that make a robot more human-like. This article also showed us that elderly people do indeed react positively to human-robot interaction. This might not sound interesting, but one of my biggest fears was that robots would not be accepted by elderly people.

- Paro

Paro is a simple seal robot that is able to react to its surroundings, using minimal movement and showing different emotional states. It is used in nursing homes to improve the mood of elderly people and thereby reduce the workload of the nursing staff. Paro proved not only that a robot is able to improve the mood of elderly people, but also that a robot is able to encourage more interaction and more conversations.

This article was not really useful; it just confirmed that robots can have a positive effect on people's mood and that they encourage conversations and interaction between elderly people. I had expected this article to be useful because every article or report about social robots cited it and mentioned the robot "Paro".

- Telepresence Robot for interpersonal Communication

This article describes the development of a telepresence robot called TRIC (Telepresence Robot for interpersonal Communication), which is going to be used in a nursing home for the elderly. The goal of TRIC is to allow elderly people to maintain a higher level of communication with loved ones and caregivers than via traditional methods. The paper further describes the decisions the authors made during the robot development process.

Interesting findings:

- From the participant’s view, the basic requirement for interpersonal communication using telepresence is that the participants must realize whom the telepresence robot represents. The two main options are using an LCD screen or creating mechanical facial expressions. (Mechanical facial expressions increase humanoid characteristics and therefore encourage more communication.)

- A telepresence robot should possess some form of autonomous behaviors. This is needed in order to be able to handle certain situations on its own, when the user does not know of this situation or is not able to control the robot properly.

- TRIC has the ability to automatically recharge its battery when needed.

- Affordable, lightweight and with a maximum speed of 1.2 m/s (walking speed)

- Loud speakers (capable of 85dB), because elderly people prefer louder sounds for hearing speech sounds.

We found a lot of interesting points in this article, some of which we can implement in our demonstration using the Amigo. The article also explains the definition of "telepresence" really well. We always thought that the robot had to feel like a human to the people around it, but it is actually the other way around: the teleoperator has to feel as if he is physically in the same environment as the robot.

- The effect of touch on people’s responses to embodied social agents

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.147.5975&rep=rep1&type=pdf

This study drew a couple of conclusions about the effect of physical contact between humans and robots. First of all, it is important to know that whenever someone has a negative attitude towards robots, the robot will feel less human-like and the experienced social distance between humans and embodied agents will increase. Secondly, a proactive robot in this study was seen as less machine-like and more dependable when the interaction was complemented with physical contact between the human and the agent. Whenever people have a positive attitude towards robots and the robot is proactive, the experienced social distance between humans and agents will decrease.

Nothing too much out of the ordinary here; we now know that a proactive robot is more readily accepted than a reactive robot when it comes to a robot touching a human. Unfortunately, the Amigo robot's movements are not quite what we would have liked, which would have made it a little awkward for the receiver if Amigo initiated the hug. Therefore we decided to program the Amigo robot to be reactive and let the receiver be the initiator. Thus, the article was still useful, but only for our conceptual idea and not so much for the actual demonstration.

- The Hug: An Exploration of Robotic Form For Intimate Communication

DiSalvo, C., Gemperle, F., Forlizzi, J., & Montgomery, E. (2003). The Hug: An Exploration of Robotic Form for Intimate Communication. Proceedings of the Ro-Man 2003. Millbrae. http://bdml.stanford.edu/twiki/pub/Haptics/TauchiRehabilitationProject/TheHugRobot.pdf

This article is about a robot called “the Hug”. The Hug is a pillow-shaped robot with two outstretched arms, made to support long-distance communication between elderly people and their relatives. The designers chose a design that easily fits in a home context; the Hug is soft and made from silk upholstered fabrics. It uses voice, vibration and heat patterns to create an intimate hug. Using two of these robots, people can chat and send each other hugs. When one of them is not available, voice messages, heat patterns and vibrations can be left and received later.

This article was useful because it gave us ideas for our own concept of a hugging robot. There are two main hugging robots available right now, and “the Hug” is one of them. What’s really interesting here is that thermal fibers can create a comfortable radiating warmth that makes a hug feel more natural. This is something we had speculated about but had not yet seen confirmed in the literature. What’s also interesting is that the designers did not choose a humanlike robot, but something that easily fits in a home context. Furthermore, we learned that stroking the back during a hug is really important to make it a real hug, and that open, outstretched arms invite and encourage people to hug a robot.

In our conceptual idea of our hugging robot, we could make use of thermal fibers and vibration as well, but unfortunately this is not possible with the Amigo robot. In Amigo’s case we can stretch out his arms to invite people to hug him, and we can try to make it possible to stroke someone’s back during the hug.

- Recognizing Affection for a Touch-based Interaction with a Humanoid Robot

Cooney, M. D., Nishio, S., & Ishiguro, H. (2012). Recognizing affection for a touch-based interaction with a humanoid robot. Paper presented at the 1420-1427.

In this article they talk about ways to recognize certain gestures and how affectionate these gestures are. They use two “mock-up” robots with touch sensors and a Microsoft Kinect camera to recognize these gestures. Some gestures were more easily recognized through the Kinect camera and others by the touch sensors. The gestures “Hug and pat back”, “hug” and “touch all over” were the most difficult gestures to recognize. The recognizing behavior was broken down in two sub problems: classifying gestures and estimating affection values. For the former they used Support Vector Machines, they also implemented the k-Nearest Neighbor algorithm. These all combined gave a high accuracy of 90.3%.

This article was useful for our conceptual idea of the hugging robot. Our conceptual hugging robot has to be proactive and initiate the hugging, so it needs to see and recognize what the receiver does. At first we wanted to use just one Kinect camera, but this is clearly not enough, so now we are thinking about two Kinect cameras and some touch sensors. For our demonstration using the Amigo, the article is less useful. The Amigo robot is what it is, and we are not allowed to change anything hardware-related. Therefore we assume that during the demonstration the hug sender is able to see the receiver and the robot at all times during the hug.

The Support Vector Machines are a subject that needs to be further looked into.
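As a starting point for that follow-up, here is a minimal sketch of the simpler of the two classifiers mentioned above, k-Nearest Neighbor. It is not the authors' implementation: the two features (contact area and contact duration, both scaled to 0..1), the gesture labels and all numbers are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical training data: (contact_area, contact_duration) -> gesture label.
TRAINING = [
    ((0.90, 0.90), "hug"),
    ((0.80, 0.70), "hug"),
    ((0.95, 0.80), "hug"),
    ((0.10, 0.20), "pat"),
    ((0.20, 0.10), "pat"),
    ((0.15, 0.30), "pat"),
]


def knn_classify(samples, query, k=3):
    """Label a query point by majority vote among its k nearest training samples."""
    nearest = sorted(samples, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


gesture = knn_classify(TRAINING, (0.85, 0.80))
```

With an odd `k` and two labels the vote cannot tie. A real recognizer would need many more features and samples than this toy set.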

- MOTION CAPTURE FROM INERTIAL SENSING FOR UNTETHERED HUMANOID TELEOPERATION

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.85.2155&rep=rep1&type=pdf

Here the authors describe the motion suit made for the NASA Robonaut, which uses sensors with inertial measurement units (IMUs). They explain that their suit is a cost-effective way of capturing motion, while the majority of current commercial motion capture systems are cost-prohibitive and have major flaws. This motion suit avoids those problems by using IMU sensors, which only require gravity and the earth’s magnetic field to work. The only limitation is the number of sensors needed; other than that, the authors claim that this system is better than all the other systems and that it will play an important role in future human-robot interaction research.

This article was interesting up to the point where it became clear that the system needs about 7 sensors for the upper body alone, which costs approximately 300*7+100 = $2200 for the sensors alone. That becomes quite expensive when you consider that every relative who would like to use the hugging robot would have to buy this. The system is really interesting but far too expensive, and therefore this article was not useful.
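The cost estimate above can be written out as a short calculation. The sensor count, the price per sensor and the additional 100 dollars are the figures quoted above (the text does not say what the additional 100 dollars covers); the number of relatives is a hypothetical example.

```python
def suit_sensor_cost(n_sensors=7, price_per_sensor=300, extra=100):
    """Sensor cost in dollars for one upper-body motion suit."""
    return n_sensors * price_per_sensor + extra


def total_cost(n_relatives, cost_per_suit):
    """Cost if every relative who wants to send hugs needs their own suit."""
    return n_relatives * cost_per_suit


per_suit = suit_sensor_cost()     # 7 * 300 + 100 = 2200
family = total_cost(3, per_suit)  # hypothetical: three relatives -> 6600
```

The total grows linearly with the number of senders, which is why a per-sender cost of this size matters for the concept.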

- The Hug Therapy Book

Kathleen Keating (1994). The Hug Therapy Book. Hazelden PES. ISBN 1-56838-094-1.

This book is all about hugs: the ethics and rules of conduct regarding hugs (be sure to have permission, ask permission, be responsible, etc.), the different kinds of hugs (for example: bear hug, side-to-side hug, heart-centered hug, A-frame hug, etc.), the where, when and why (time of day, environment, reasons to hug) and, last but not least, advanced techniques regarding hugs (visualising, zen hugs).

This book really helped us a lot. It is really hard to find literature that simply explains what a hug is, or at least we were not able to find it. This book does just that: it simply explains what a hug is, which is difficult for some people to do because hugging comes so naturally to us. Thanks to this book we were able to choose a type of hug for our concept, namely the bear hug (the definition of the bear hug follows below). The book also stated the importance of stroking one's back during the hug and of the warmth of a hug, which we had read before in other articles as well.

The Bear Hug: " In the traditional bear hug (named for members of the family Ursidae, who do it best), one hugger usually is taller and broader than the other, but this is not necessary to sustain the emotional quality of bear-hugging. The taller hugger may stand straight or slightly curved over the shorter one, arms wrapped firmly around the other’s shoulders. The shorter of the pair stands straight with head against the taller hugger’s shoulder or chest, arms wrapped—also firmly!—around whatever area between waist and chest that they will reach. Bodies are touching in a powerful, strong squeeze that can last five to ten seconds or more. We suggest you use skill and forbearance in making the hug firm rather than breathless. Always be considerate of your partner, no matter what style of hug you are sharing. The feeling during a bear hug is warm, supportive, and secure.

Bear hugs are for: Those who share a common feeling or a common cause. Parents and offspring. Both need lots of reassuring bear hugs. Grandparents and grandoffspring. Don’t leave grandparents out of family bear hugs. Friends (this includes marrieds and lovers, who hopefully are friends too)." This makes it perfect for our concept and our demonstration.

- A Design-Centred Framework for Social Human-Robot Interaction

This article is about a framework for social human-robot interaction. The authors have five categories in their framework: form, modality, social norms, autonomy and interactivity. Each category has basically three levels, and for each category they briefly explain what you should do when building a social robot. Applying this framework to our hugging robot, we should for example build a humanoid robot, because it is going to act like a human and we want to give the impression that it could be a human (form). For modality, we do not really have emotions and communication channels of the robot's own because the hugging robot is tele-operated, but you could argue that the robot needs to express the emotions of the teleoperator.

It is always good to have a set of ground rules about social robots and to check whether your robot concept satisfies those rules. Apart from that basic set of rules/requirements, the article was not as useful as the title led me to expect.

Used Literature/Further reading

Links subsection "challenges design realization"

- Advances in Telerobotics

AU: Manuel Ferre, Martin Buss, Rafael Aracil, Claudio Melchiorri, Carlos Balaguer ISBN: 978-3-540-71363-0 (Print) 978-3-540-71364-7 (Online) http://link.springer.com.dianus.libr.tue.nl/book/10.1007%2F978-3-540-71364-7

This book gives a description of telerobotics and the advances within the field up to 2007. Several topics are discussed, ranging from different interfaces and control architectures and their performance to the applications of telerobotics. While the book starts with a general idea of each topic, the authors go into depth soon after. This can make it hard to fully understand for readers not familiar with the field.

This book was partly useful as it gives a general idea about telerobotics, which is ultimately the foundation of the hugging robot. However, it becomes complicated quickly and not all topics discussed are equally relevant, so no practical answers were found. The book may be more useful when designing the hug for the robot.

- Telerobotics

AU: T.B. Sheridan http://www.sciencedirect.com.dianus.libr.tue.nl/science/article/pii/0005109889900939

This article discusses the historical developments in telerobotics and current and future applications of the technology. Different interfaces and control architectures are discussed. Its focus, however, is not on hardware or software but on robot-human interaction.

This article is useful as an introduction to telerobotics. It offers nothing practical concerning software or hardware, but it may offer something concerning the USE aspects, although the article might be slightly outdated.

- An Intelligent Simulator for Telerobotics Training

AU: Khaled Belghith et al. http://ieeexplore.ieee.org.dianus.libr.tue.nl/xpl/abstractAuthors.jsp?arnumber=5744073&tag=1

This article discusses an architecture for path planning, learning and training. This might be useful for future research and development of the hugging robot, but it is outside the scope of this project.

- Telerobotic Pointing Gestures Shape Human Spatial Cognition

AU: John-John Cabibihan, Wing-Chee So, Sujin Saj, Zhengchen Zhang http://link.springer.com.dianus.libr.tue.nl/article/10.1007%2Fs12369-012-0148-9

This paper investigates the effect of pointing gestures in combination with speech in the design of telepresence robots. Can people interpret the gestures and does this improve over time?

This paper is useful, as the hugging robot will have to make its intentions clear when it will try to hug a user. Hand gestures and pointing can play a large part in this. Based on the results of this paper, the hugging robot should have gestures incorporated in its request to make a hug.

- Haptics in telerobotics: Current and future research and applications

AU: Carsten Preusche , Gerd Hirzinger http://link.springer.com.dianus.libr.tue.nl/article/10.1007%2Fs00371-007-0101-3

This paper discusses the importance of haptics in telerobotics. It gives an introduction to haptics, telerobotics and telepresence. The paper is not very useful other than introducing the reader to these subjects. Any implementation of haptics might be too complicated to achieve with Amigo.

Force feedback

In order to establish an exchange of forces that makes a hug more comfortable than a static envelopment by the arms, some research was done into force feedback. We hope that this will help to make a hug more realistic and enjoyable.

Literature:

http://link.springer.com.dianus.libr.tue.nl/article/10.1007%2Fs12555-013-0542-6

http://servicerobot.cstwiki.wtb.tue.nl/files/PERA_Control_BWillems.pdf

http://www-lar.deis.unibo.it/woda/data/deis-lar-publications/d5aa.Document.pdf

Regeltechniek, M. Steinbuch & J.J. Kok

Modeling and Analysis of Dynamical systems, Charles M. Close & Dean H. Frederick & Jonathan C. Newell. 3rd edition

Engineering Mechanics Dynamics, J.L. Meriam & L.C. Kraige

Mechanical Vibrations, B. de Kraker

http://www.tandfonline.com/doi/pdf/10.1163/016918611X558216

http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5246502

Experiment

Simulink model

As the ROS simulation did not give any information or feedback about the acting forces, a Simulink model was created. For this simulation the physical application has to be translated into a mathematical model before a controller can be designed.

Simplification and equation of motion

When describing a hug, the horizontal movement of the arms is more dominant than the vertical movement. Therefore we considered the movement to be 2D in the x-y plane. This has the disadvantage that gravitational forces are not included in the model, which makes it somewhat less accurate. Because of symmetry, it suffices to describe only one arm. This arm consists of an upper and a lower arm; for simplification the hands are not included in the model. (Figure to be added.) When creating a mathematical model, first the free body diagram is considered. (Figure to be added.) As the robot moves the upper and lower arm independently, each with its own motor, the corresponding plant consists of one arm. The plant can then be described with the balance of moments.

- [math]\displaystyle{ \sum M = \frac{1}{2} F_1 L_1 + T \dot{\theta_1} + K \theta_1 + M_2 = I_0 \ddot{\theta_1} }[/math]

In this formula [math]\displaystyle{ F_1 }[/math] is the force pressing on the arm at half its length [math]\displaystyle{ L_1 }[/math], [math]\displaystyle{ T }[/math] is the friction coefficient that describes the friction in the joints, [math]\displaystyle{ K }[/math] is the stiffness of the arm itself and [math]\displaystyle{ I_0 }[/math] is the inertia of the arm. The reaction moment from the lower arm acting on the upper arm is added in the form of [math]\displaystyle{ M_2 }[/math]. The inertia can be calculated because the mass [math]\displaystyle{ m_1 }[/math] and the length of the arm are known.

- [math]\displaystyle{ I_0 = m_1 L_1 ^2 }[/math]
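As a quick numerical check of the balance of moments, the sketch below evaluates the inertia and the resulting angular acceleration in Python. It follows the sign convention of the equation exactly as written above. The function names and all numerical values are illustrative assumptions, not measured AMIGO parameters.

```python
def arm_inertia(m1, L1):
    """Inertia of the arm as used in the model: I_0 = m_1 * L_1^2."""
    return m1 * L1 ** 2


def angular_acceleration(F1, L1, T, K, M2, theta, theta_dot, m1):
    """Solve the balance of moments for the angular acceleration of the arm."""
    # Sum of moments, with the signs taken over from the equation of motion.
    moment_sum = 0.5 * F1 * L1 + T * theta_dot + K * theta + M2
    return moment_sum / arm_inertia(m1, L1)


if __name__ == "__main__":
    # Illustrative values only (assumed, not measured on AMIGO).
    alpha = angular_acceleration(F1=10.0, L1=0.3, T=0.0, K=0.0, M2=0.0,
                                 theta=0.0, theta_dot=0.0, m1=2.0)
    print("angular acceleration [rad/s^2]:", alpha)
```

Evaluating the acceleration for a few hand-picked forces is an easy way to check that the units and orders of magnitude are plausible before the model is built in Simulink.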

With this a controller can be designed.

Simulink model

- [math]\displaystyle{ H=\frac{1}{I_0 \ddot{\theta_1} + T \dot{\theta_1} + K \theta_1} }[/math]

| Factor | Value |
|---|---|
| Kp | to be determined |
| Ki | to be determined |
| Kd | to be determined |
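To illustrate how the three gains in the table act on the arm, the following is a minimal sketch of a discrete PID controller driving a plant of the form used in H, that is, inertia, friction and stiffness terms acting on the angle. It is written in Python rather than Simulink, and the gains and plant values are placeholder assumptions chosen only so that the loop is stable; they are not the tuned values for AMIGO.

```python
class PID:
    """Textbook discrete PID controller acting on the angle error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative action on the very first sample (no previous error yet).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid, setpoint, I0, T, K, dt=0.001, duration=20.0):
    """Integrate I0*theta'' + T*theta' + K*theta = u with semi-implicit Euler."""
    theta, omega = 0.0, 0.0
    for _ in range(round(duration / dt)):
        u = pid.update(setpoint - theta, dt)      # controller moment
        alpha = (u - T * omega - K * theta) / I0  # angular acceleration
        omega += alpha * dt
        theta += omega * dt
    return theta


if __name__ == "__main__":
    # Placeholder gains and plant parameters (assumptions, not AMIGO values).
    final_angle = simulate(PID(kp=20.0, ki=10.0, kd=2.0), setpoint=0.5,
                           I0=0.18, T=0.5, K=1.0)
    print("final angle [rad]:", final_angle)
```

With an integral term the steady-state error caused by the stiffness K is removed, which is why the final angle settles on the setpoint rather than slightly below it.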

Technical Aspects

Introduction

Explain what is discussed in this section.

Incorporate the research into the desired robot technology

Challenges Design realization (possibly remove entirely)

(Creating a robot that serves as a kind of avatar that moves exactly like you is an ambitious idea. It will not be possible to realize this over a very long distance, as that would require a very strong signal with a large range. Since we will be focussing on a prototype that can copy just one or a few basic actions within a close range, it might be possible to realize this.

To make this work there are a few components that have to be taken into account:

- The robot must be controlled from a (close) distance

- The robot must be able to recognize some human movements or gestures

- The robot must be able to translate these percepts into action

The first of these three components can be realized by using an Arduino with a Bluetooth module linked to a device, most likely a portable device or laptop. There is a sensor system with accompanying software called Kinect. It uses a depth sensor to register movement. The Xbox uses the Kinect for certain games, allowing people to play a game using gestures and their own movement rather than a controller. The Kinect software appears to be available for Windows.

A few tutorials and examples can be found on the internet of people using the Kinect software on a computer to control a device with gestures and arm movement. In one example a man makes a robot hand copy his hand gestures using this software. Another example features a man making a robot kart move with his own gestures (using Kinect and Bluetooth).

As building an entire robot body could prove difficult we hope to borrow a robot body or prototype from the “right people” and arm it with tools mentioned above. As some people have proven the Kinect controlled robot to be possible, it should be possible to make a hugging robot.

--Links to the tutorials and clips of the examples mentioned above can be found at the end of this wiki under the name "Links subsection challenges design realization")

Requirements AMIGO

Exact Usage Scenario

The aim of this section is to provide an exact description of a hug that the AMIGO robot needs to perform during the final demonstration.

Assumptions

- The AMIGO robot’s shoulders are lower than the hug-receiver’s shoulders.

- The hug-sender has a clear view of the AMIGO robot and the hug-receiver without any cameras.

- The hug-sender can see what the AMIGO’s main camera sees using a display.

Hug description

- The hug-sender and the hug-receiver have already established a communication session via telephone.

- The hug-receiver turns the AMIGO robot on.

- The hug-sender turns the KINECT system on.

- The hug-sender performs several test movements: by taking several poses focused on the hug-sender’s arms and checking whether the AMIGO robot’s arms take on the same poses.

- The hug-sender spreads their arms to indicate they are ready to give the hug. The AMIGO robot also spreads its arms.

- Both the hug-sender as well as the hug-receiver are notified that a hug can now be given. This can be done for example by changing the AMIGO’s color or having it pronounce a certain message.

- The hug-receiver approaches the AMIGO robot.

- The hug-receiver begins to hug the AMIGO robot (a so-called ‘bear’-hug).

- The hug-receiver tells the hug-sender that they are ready to receive a hug from the AMIGO.

- The hug-sender makes a hugging movement by closing their arms.

- The AMIGO robot takes over after the hug-sender’s arms have reached a certain point. This is because the hug-sender cannot see the hug-receiver and the AMIGO’s arms clearly enough to give the hug-receiver a comfortable hug.

- By measuring the resistance through the AMIGO’s actuators, the AMIGO can estimate the amount of pressure being exerted on the hug-receiver. The AMIGO starts to slowly close its arms around the hug-receiver, starting with its upper arms and ending with the hands.

- (optional) By moving its arms closer together or farther apart, the hug-sender can make the AMIGO robot hug tighter or looser.

- The hug-sender or the hug-receiver indicates that they would like to end the hug.

- The AMIGO robot slowly spreads its arms outwards.

- The hug-receiver stops hugging the robot and walks away.

- The AMIGO robot and the KINECT system are turned off.

Requirements (other name)

Must-have

- The AMIGO must be able to process the arm movements of the hugging person within reasonable time (ideally in real time, but this is probably unrealistic) and mimic them credibly and reasonably fluently towards the person ‘to be hugged’.

- The arms of the AMIGO must be able to embrace the person ‘to be hugged’. More specifically; the AMIGO must be able to make an embracing movement with his arms.

Should-have

- There should be a force-stop function in the AMIGO so that the person ‘to be hugged’ can stop the hug anytime if he/she desires (for example because he/she feels uncomfortable).

- The AMIGO should have a feedback function as to if and how much his arms are touching a person (pressure sensors).

Could-have

- The AMIGO could get a message from the ‘hug-giver’, the person in another place wanting to give a hug.

- The AMIGO could inform the ‘hug-receiver’ that a hug has been ‘sent’ to him/her and ask if he/she wants to ‘receive’ the hug now.

- The AMIGO could receive a message from the ‘hug-giver’ that the hug has ended.

ROS

The predefined simulator of AMIGO is used for this project. This simulator runs in the Robot Operating System (ROS). ROS is a software framework used in many robot projects and cannot be run on Windows (yet). Since all work on AMIGO in ROS is done on the operating system Ubuntu 14.04, this is the operating system we installed for the project.

All functions for controlling the AMIGO robot in the simulator, as well as its main environment, are already defined and could be downloaded from GitHub. The only things we had to figure out were the commands to move the arms and how to process these in a script. This can be done with some predefined operations. We used Python as a programming language and, based on a template, created a script that controls the arms of AMIGO based on data from the Kinect. This script can be executed in the terminal and will send orders to the simulator as long as the simulator is active. The simulator can be started by typing the following commands in separate terminals.

- roscore, this command will activate the ROS master and the network of nodes. Without this the script and the simulator can't communicate.

- astart, this is a command that will launch a file that starts up certain parts of the AMIGO simulator.

- amiddle, this is a second command that will launch a file that starts up the rest of the AMIGO simulator and the world models.

- rviz-amigo, this will start a visualizer that shows the robot model and its environment. This can be used to see what the robot is doing.

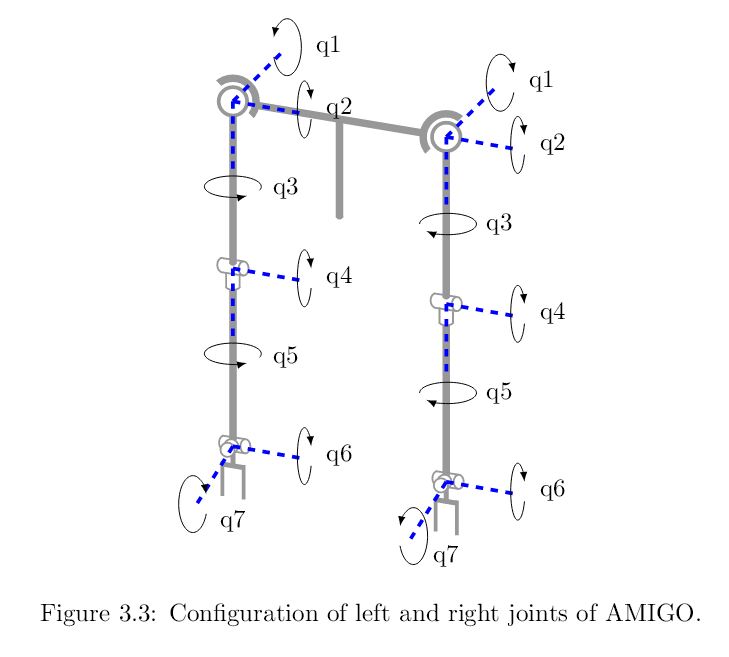

The arm control functions used for our simulation are Amigo.leftarm.default_trajectories and Amigo.rightarm.default_trajectories. These commands work as follows. As discussed in the parts on Amigo's degrees of freedom and Coordinates conversion, AMIGO has seven joints in each arm, each of which can be rotated by a certain amount. Amigo.leftarm.default_trajectories and Amigo.rightarm.default_trajectories can be used to predefine a sequence of positions for the seven joints in the left and right arm of the robot, respectively. The previously defined sequences of poses can be executed with the commands “Amigo.leftarm.send_joint_trajectory” and “Amigo.rightarm.send_joint_trajectory”. These commands send the sequences for the arms to the simulator, and AMIGO will then move its arms into the given positions.

A ROS node is a small part of a program in ROS with a certain function. ROS nodes can communicate with each other through ROS topics. This can be compared with two mobile devices communicating with each other on a certain frequency. A node can send messages or instructions through a topic, and another node can receive these messages and carry out the instructions.

The AMIGO simulator can be seen as a node and our script that can send the commands is a node as well that sends the commands over a topic to be received by the simulator node.

The script receives a 1x14 array of numbers from the computer running the Kinect software, derived from the Kinect data. This array is sent over the topic, and the script is written in such a way that it can receive this array and split it into two 1x7 arrays that hold the joint coordinates for the two arms of AMIGO. The script on Windows continuously sends a new array with numbers based on the Kinect data. This way the script continuously receives data, which it then processes and sends to the simulator, so that AMIGO continuously adjusts its arm poses to the poses of the person in front of the Kinect. More on how this works is discussed under Kinect and Connection ROS-Kinect.
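The splitting step described above can be sketched in a few lines of Python. The function name split_joint_array is hypothetical, and the ordering (first seven values for the left arm, last seven for the right arm) is an assumption made for illustration; the actual script may order the values differently.

```python
def split_joint_array(values):
    """Split a flat list of 14 joint values into two lists of 7.

    Assumption: values[0:7] belong to the left arm and values[7:14]
    to the right arm.
    """
    values = list(values)
    if len(values) != 14:
        raise ValueError("expected 14 joint values, got %d" % len(values))
    return values[:7], values[7:]


if __name__ == "__main__":
    left, right = split_joint_array([0.1 * i for i in range(14)])
    print("left arm joints: ", left)
    print("right arm joints:", right)
```

Rejecting arrays of the wrong length early avoids sending an incomplete pose to the simulator when a message arrives damaged.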

Progress Report (remove)

The software running the AMIGO simulation (and hopefully the AMIGO robot itself) is an essential part of this project. After the first consultation with a team member of the Tech United Team (Janno …), it became clear that ROS, the software used to control the AMIGO robot, has quite a steep learning curve. Because of this we decided to dedicate three team members (Laurens, Joshwa and Jelle) to the task of getting to know ROS over the course of the coming two weeks. The following is a report on the progress of the programming of the AMIGO simulation and the obstacles encountered.

Week 4

The ROS version used for controlling the AMIGO runs on the Ubuntu 14.04 operating system. In the weekend preceding this week, we had tried to install Ubuntu on a USB stick. We deemed this to be a safer alternative than partitioning the hard drive of our TU/e notebooks. At the start of this week, we set to installing ROS and going through several tutorials. These tutorials were aimed at creating an environment for writing ROS programs and getting familiar with the terminal and ROS commands. The latter was quite confusing to us, as we had never used Linux and therefore the terminal before.

Halfway during the week, we encountered a problem. It appeared that we had installed a try-out version of Ubuntu which does not save any changes made at all. Luckily, we had found the following tutorial. This allows the user to reserve about 4GB on the USB stick for personal storage. After installing Ubuntu properly this time, we ran through the installation process and the tutorials again.

Despite the setbacks this week, we obtained a better understanding of ROS. When Janno showed us how several things worked in ROS, we were mostly confused, seeing all these commands for the first time. We have now obtained some insight into what he was getting at. However, some issues still bothered us: we were still in the dark about how to actually program the AMIGO robot, and we had all spent our time doing the same tasks.

Week 5

This week Joshwa and Jelle spent more time on completing the beginner ROS tutorials. These tutorials cover how two ROS programs communicate using a so-called ROS topic, and conclude by having you program two communicating ROS programs in ROSPython, an adaptation of regular Python by ROS. Laurens focused on getting the TU/e’s own ROS programs and adaptations running, in particular the ROS simulation. Whilst doing so, he encountered a big problem: the 4GB reserved earlier for personal storage proved to be insufficient for the extra software installed. He then tried to resolve this problem by installing Ubuntu in a different way. After installation on a USB 2.0 stick, which is unsuitable for direct installation of an operating system, Laurens got the simulation running, despite not having figured out how it works yet.

Week 6

TBA

Week 7

TBA

Week 8

TBA

Kinect

The Kinect is used in order to record the movements of the hug giver giving the hug. The coordinates of the joints (in this case the shoulder, elbow and wrist) are then converted into relevant values corresponding with Amigo's degrees of freedom.

Amigo's degrees of freedom

source: pdf

Amigo uses two PERA manipulators that have seven degrees of freedom (DOF) each.

Coordinates conversion

Amigo will allow someone to give a hug over a distance. The Kinect records this motion and produces the Cartesian coordinates of each of the joints. To convert these to useful values for Amigo, the Cartesian coordinates must be converted to spherical coordinates. As the software might have trouble tracking the different joints, the user is given colored dots on these places. It is these dots that the software tracks and converts into relevant information. The user is instructed to first stretch their arms horizontally so that the software can initialise. By doing so the relevant parameters can be measured: the lengths of the upper ([math]\displaystyle{ \rho_1 }[/math]) and lower ([math]\displaystyle{ \rho_2 }[/math]) arms, as well as a reference point.

The angles of the upper arm can then be found by first making A the new origin, subtracting the position of the shoulder (A) from the position of the elbow (B), and then converting the position of the elbow (B') to spherical coordinates. (Angle definitions still to be added.)
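The initialisation step can be illustrated with a small Python sketch that measures the two arm lengths from one frame in which the arms are stretched. The function names and the example coordinates (in metres) are assumptions for illustration; the real values would come from the Kinect tracking.

```python
import math


def segment_length(p, q):
    """Euclidean distance between two 3D points given as (x, y, z)."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(p, q)))


def calibrate(shoulder, elbow, wrist):
    """Return (upper arm length, lower arm length) from one calibration pose."""
    return segment_length(shoulder, elbow), segment_length(elbow, wrist)


if __name__ == "__main__":
    # Assumed example pose: arm stretched horizontally along the x-axis.
    upper, lower = calibrate((0.0, 0.0, 1.4), (0.30, 0.0, 1.4), (0.55, 0.0, 1.4))
    print("upper arm length:", upper, "lower arm length:", lower)
```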

[math]\displaystyle{ \overline{B_c}-\overline{A_c}=\overline{B'_c} }[/math]

- [math]\displaystyle{ \begin{bmatrix} \rho \\ \theta \\ \phi \end{bmatrix} = \begin{bmatrix} \rho_1 \\ \arccos(\frac{z}{\rho}) \\ \arctan(\frac{y}{x}) \end{bmatrix} }[/math]
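The conversion of the elbow position to spherical coordinates can be sketched in Python as follows. The function name to_spherical is hypothetical. Note that the sketch uses atan2(y, x) instead of arctan(y/x): this is a deliberate substitution that gives the same angle where both are defined, but also handles x = 0 and keeps the correct quadrant.

```python
import math


def to_spherical(shoulder, elbow):
    """Return (rho, theta, phi) of the elbow relative to the shoulder.

    theta is the polar angle measured from the z-axis, phi the azimuth
    in the x-y plane, matching the arccos and arctan terms above.
    """
    # Shift the origin to the shoulder: B' = B - A.
    x, y, z = (b - a for a, b in zip(shoulder, elbow))
    rho = math.sqrt(x * x + y * y + z * z)
    if rho == 0.0:
        raise ValueError("shoulder and elbow coincide")
    theta = math.acos(z / rho)
    phi = math.atan2(y, x)
    return rho, theta, phi


if __name__ == "__main__":
    # Assumed example: elbow 0.3 m to the side of the shoulder along y.
    print(to_spherical((0.0, 0.0, 1.4), (0.0, 0.3, 1.4)))
```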

[math]\displaystyle{ \overline{C}' = \begin{bmatrix} \cos{\theta} & \sin{\theta} & 0 \\ -\sin{\theta} & \cos{\theta} & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y\\ z \end{bmatrix} }[/math]

From that the relevant coordinates of the next joint can be found by converting [math]\displaystyle{ \overline{B'_c} }[/math] to a new set so that B is now the origin. [math]\displaystyle{ \overline{C_c}-\overline{B_c}=\overline{C'_c} }[/math]

[math]\displaystyle{ \begin{bmatrix} \cos(\phi) & \sin(\phi) & 0\\ -\sin(\phi) & \cos(\phi) & 0\\ 0 & 0 & 1 \end{bmatrix} }[/math]

[math]\displaystyle{ \begin{bmatrix} \cos(\theta) & 0 & -\sin(\theta) \\ 0 & 1 & 0 \\ \sin(\theta) & 0 & \cos(\theta) \end{bmatrix} }[/math]
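One way to express the wrist position relative to the upper arm is sketched below in Python. It assumes a particular convention that this page has not yet fixed: first a frame rotation about the z-axis by the azimuth phi, then a frame rotation about the y-axis by the polar angle theta, so that the upper arm ends up along the new z-axis. The function names are hypothetical, and a different angle definition would change the signs.

```python
import math


def rotate_z(v, phi):
    """Express v in a frame rotated about the z-axis by phi."""
    x, y, z = v
    c, s = math.cos(phi), math.sin(phi)
    return (c * x + s * y, -s * x + c * y, z)


def rotate_y(v, theta):
    """Express v in a frame rotated about the y-axis by theta."""
    x, y, z = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * z, y, s * x + c * z)


def to_upper_arm_frame(v, theta, phi):
    """Apply the z-rotation first, then the y-rotation (assumed order)."""
    return rotate_y(rotate_z(v, phi), theta)


if __name__ == "__main__":
    theta, phi = 0.7, 1.1
    # Unit vector along the upper arm, built from its spherical angles.
    arm = (math.sin(theta) * math.cos(phi),
           math.sin(theta) * math.sin(phi),
           math.cos(theta))
    print(to_upper_arm_frame(arm, theta, phi))
```

A useful sanity check for any chosen convention is that the upper arm direction itself maps onto a single axis of the new frame, as the example does.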

Connection ROS - Kinect

(To do: describe how we make the Kinect on Windows and ROS work together.) In order to have the computer that runs ROS and Ubuntu communicate with the computer that runs Windows and the Kinect software, a special socket node is used. This socket node is installed on the Ubuntu laptop and is predefined to receive messages from the (...name of windows software sending information...) . The socket node then posts the message on a topic that all other active nodes in ROS can read. This way the node of the used script can receive the messages sent by the computer with Windows.
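The receiving side of such a socket connection can be sketched with Python's standard socket module. The message format is not specified on this page, so the sketch assumes one text line of 14 comma-separated numbers per pose; the function names are hypothetical, and publishing the result on a ROS topic is left out.

```python
import socket


def parse_joint_message(line):
    """Parse one text line of 14 comma-separated numbers (assumed format)."""
    values = [float(part) for part in line.strip().split(",")]
    if len(values) != 14:
        raise ValueError("expected 14 values, got %d" % len(values))
    return values


def receive_joint_values(conn):
    """Read a single newline-terminated message from a connected socket."""
    # A long-running node would keep this stream open and loop over lines.
    with conn.makefile("r", encoding="ascii") as stream:
        line = stream.readline()
    return parse_joint_message(line)


if __name__ == "__main__":
    # Local demonstration with a connected socket pair instead of two laptops.
    sender, receiver = socket.socketpair()
    sender.sendall(",".join(str(0.1 * i) for i in range(14)).encode("ascii") + b"\n")
    print(receive_joint_values(receiver))
    sender.close()
    receiver.close()
```

In the real setup the sender would be the Windows computer and the receiver the socket node on the Ubuntu laptop, connected over the network rather than through a local socket pair.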

scripts

Download link for the scripts we created

Evaluation

Evaluation of the whole project: what did we ultimately do and what had we actually wanted to do

Remaining used literature (what do we do with this?)

https://www.youtube.com/watch?v=KnwN1cGE5Ug

https://www.youtube.com/watch?v=AZPBhhjiUfQ

http://kelvinelectronicprojects.blogspot.nl/2013/08/kinect-driven-arduino-powered-hand.html

http://www.intorobotics.com/7-tutorials-start-working-kinect-arduino/

Anand B, Harishankar S Hariskrishna T.V. Vignesh U. Sivraj P. Digital human action copying robot 2013

http://singularityhub.com/2010/12/20/robot-hand-copies-your-movements-mimics-your-gestures-video/

http://www.telegraph.co.uk/news/1559760/Dancing-robot-copies-human-moves.html

http://www.emeraldinsight.com/doi/abs/10.1108/01439910310457715

http://www.shadowrobot.com/downloads/dextrous_hand_final.pdf

https://www.shadowrobot.com/products/air-muscles/

MORE TO FOLLOW