PRE2015 3 Groep2 week7

= Report =

[[Media:Second_version_report_group_2_quarter_3_2015-2016.pdf]]

Latest revision as of 19:43, 28 March 2016

Mapping

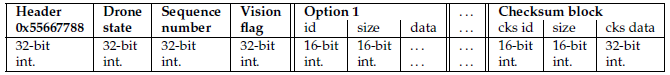

Structure of navigation data packets:

Each packet uses little-endian byte order: the least significant byte (LSB) of a multi-byte value is stored at the lowest memory address, so when the bytes are written out in memory order it is the leftmost byte. The first 4 bytes of each packet hold the unique identifier “0x55667788”, which appears in memory order as the bytes 88 77 66 55 (“ˆwfU” when displayed as text; the byte 0x88 falls outside 7-bit ASCII). The next 4 bytes (bytes 4 through 7) form a 32-bit field that represents the drone’s state. Bytes 8 through 11 form the sequence number, which is useful for synchronization: the packets are sent over UDP, an unreliable transport protocol, so packets can get lost or arrive out of order, and any navigation data packet older than the latest one received can be discarded. Bytes 12 through 15 form the fly state bit field. The semantics of this field are unknown: the SDK documentation does not explain it, and nothing about it has been discovered from the official SDK library.

After these first 16 bytes, the option segments follow. Every option shares a common structure: a 4-byte header consisting of a 2-byte option ID followed by a 2-byte size. The size covers the whole option segment, including the 4-byte header, so the length of the payload is size - 4 bytes. The payload follows directly after the 2-byte size field, and its structure depends on the specific option. The SDK documentation says nothing about these structures; you have to investigate them yourself by inspecting the file “navdata_common.h” in the official SDK library.
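The layout described above can be sketched in Python with the standard `struct` module. This is a minimal sketch, not the SDK's own parser: the function name `parse_navdata` and the returned dictionary are hypothetical, and it assumes the option header is a 2-byte ID followed by a 2-byte size, both little-endian.

```python
import struct

NAVDATA_HEADER = 0x55667788  # unique identifier from the first 4 bytes


def parse_navdata(packet: bytes) -> dict:
    """Split a navdata packet into its 16-byte header and raw option payloads."""
    # "<" selects little-endian; "4I" reads four unsigned 32-bit integers (16 bytes).
    magic, state, sequence, fly_state = struct.unpack_from("<4I", packet, 0)
    if magic != NAVDATA_HEADER:
        raise ValueError("not a navigation data packet")

    options = []
    offset = 16
    while offset + 4 <= len(packet):
        # Assumed option header: 2-byte ID, then 2-byte size (size includes this header).
        option_id, size = struct.unpack_from("<HH", packet, offset)
        if size < 4 or offset + size > len(packet):
            break  # malformed or truncated option, stop parsing
        payload = packet[offset + 4 : offset + size]  # size - 4 bytes of data
        options.append((option_id, payload))
        offset += size

    return {
        "state": state,
        "sequence": sequence,
        "fly_state": fly_state,
        "options": options,
    }
```

A receiver could compare the returned `sequence` with the highest value seen so far and drop any packet that is not newer.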

Each navigation data option has a fixed length, even when some of its fields are unused. This was probably done to keep parsing consistent and simpler than it would be if the option size shrank with the amount of useful data it contains. Keep this in mind when parsing an option segment such as Vision Detect: even if only one tag has been detected, the packet still contains information for 4 tags, and the fields of the other 3 tags are simply padded with dummy data.

How do I know which navigation data is sent to the client? To find out which navigation data options are enabled, inspect the configuration parameter “general:navdata_options”. The value stored in this parameter is the integer value of a 32-bit field.

The following navigation data options are available, listed with their corresponding ID, name and description. In total there are currently 28 available options, excluding the checksum option, which is not an option you request or activate. We have only provided a description for the most important options, because we simply do not know the semantics of every navigation data option. The documentation says nothing about their structure; to figure it out, one must reverse-engineer part of the C code of the official library, which can be difficult. The library file that describes these structures is “navdata_common.h”. However, many of the fields in these structures are undocumented. Sometimes their semantics can be inferred from the variable name, but not always, and for some properties it is useful to know the value domain, which is also unspecified and must be worked out by yourself. Another quirk is that some of these fields are not actually in use, but are reserved for future use.

Navigation Data Options: File:Table data packets.pdf

Note: you won’t find the correct structure of “ZIMMU_3000”, which represents the GPS data, in the “navdata_common.h” file. The actual structure is published at “https://github.com/lesire/ardrone_autonomy/commit/a986b3380da8d9306407e2ebfe7e0f2cd5f97683”.

To check whether a certain option is enabled, compute the bit mask for that option and take the bitwise AND of the options value with the mask. If the result equals the mask, the option is enabled. The mask is computed with the expression bitmask(id) = 1 << id, where “<<” denotes the left-shift operator and id is the ID of the desired option. In other words, the id-th bit is set to 1 and all other bits are 0. Example: suppose our options bit field is “1 0000 0000 0000 0001” and we want to check whether vision detect is enabled. Vision detect has option ID 16 and therefore mask “1 0000 0000 0000 0000”. We compute:

| 1 0000 0000 0000 0001 | (options) |

| 1 0000 0000 0000 0000 | (mask) |

| 1 0000 0000 0000 0000 | (Result) |

We note that the result equals the mask, so we know the option is enabled.
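The same check can be written as a short Python sketch. The function names `bitmask` and `is_enabled` are illustrative and do not come from the SDK; the option ID 16 for vision detect is taken from the example above.

```python
def bitmask(option_id: int) -> int:
    """Return a value with only the option_id-th bit set."""
    return 1 << option_id


def is_enabled(options: int, option_id: int) -> bool:
    """True if the bit for option_id is set in the options bit field."""
    mask = bitmask(option_id)
    return (options & mask) == mask


VISION_DETECT_ID = 16
options = 0b1_0000_0000_0000_0001  # bit field from the example

print(is_enabled(options, VISION_DETECT_ID))  # True
print(is_enabled(options, 1))                 # False
```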

The maximum packet size is 4096 bytes; this value comes from the file “navdata_common.h” in the official SDK.

We are particularly interested in the navigation data options “DEMO” and “VISION_DETECT”. The bit field that activates these options is “10000000000000001”, which translates to the integer 65537. This value must be assigned to the configuration parameter “general:navdata_options” through the following AT command: “AT*CONFIG,<SEQ>,\"general:navdata_options\",\"65537\"”. The backslash denotes the escape character: the parameter and the value must each be surrounded by quotes, and because the AT command itself is a string that is also written in quotes, the inner quotes must be escaped.
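As an illustration, the value and the command text can be built as follows. This is a sketch under stated assumptions: the helper names are hypothetical, DEMO is taken to be option ID 0 (the lowest bit of the bit field above), and sending the command to the drone, including any line terminator it requires, is left out.

```python
DEMO_ID = 0            # assumed: lowest bit of the bit field shown above
VISION_DETECT_ID = 16  # option ID of vision detect


def navdata_options_value(option_ids) -> int:
    """Combine option IDs into the integer value of the options bit field."""
    value = 0
    for option_id in option_ids:
        value |= 1 << option_id
    return value


def config_command(seq: int, key: str, value) -> str:
    """Format an AT*CONFIG command with the key and value in double quotes."""
    # Single-quoted Python string, so the inner double quotes need no escaping.
    return f'AT*CONFIG,{seq},"{key}","{value}"'


value = navdata_options_value([DEMO_ID, VISION_DETECT_ID])
print(value)  # 65537
print(config_command(1, "general:navdata_options", value))
# AT*CONFIG,1,"general:navdata_options","65537"
```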

A navigation data packet containing only DEMO and VISION_DETECT has a fixed size of 500 bytes: 16 bytes of header data + 148 bytes for the DEMO option + 328 bytes for the VISION_DETECT option + 8 bytes for the CHECKSUM option.

Checksum

The checksum of a packet is computed by summing all the bytes in the packet, excluding the last 8 bytes, which form the CHECKSUM option, and treating each byte as an 8-bit unsigned integer. The computed checksum must equal the value in the checksum option. If it does not, the packet is corrupted and should be ignored.
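A minimal sketch of this verification in Python is shown below. The function name `checksum_ok` is hypothetical, and the sketch assumes that the 8-byte CHECKSUM option consists of the common 4-byte option header followed by the checksum as a little-endian unsigned 32-bit integer.

```python
import struct


def checksum_ok(packet: bytes) -> bool:
    """Verify a navdata packet against the checksum stored in its last option."""
    if len(packet) < 8:
        return False
    # Indexing a bytes object yields integers 0..255, so sum() adds unsigned bytes.
    computed = sum(packet[:-8])
    # Assumed layout of the last 8 bytes: 4-byte option header, then the
    # checksum value as a little-endian unsigned 32-bit integer.
    (stored,) = struct.unpack_from("<I", packet, len(packet) - 4)
    return computed == stored
```

With a maximum packet size of 4096 bytes the sum stays far below 2^32, so no overflow handling is needed.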

Vision Tags

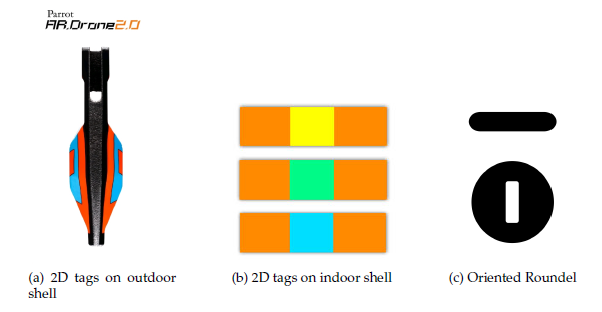

The AR.Drone 2.0 supports automatic detection of three pre-defined tags or markers. The following tags are supported:

Tag detection is not enabled by default. It has to be activated by sending “AT*CONFIG” commands that modify the following configuration parameters: “detect:detect_type”, “detect:enemy_colors”, “detect:detections_select_h”, “detect:detections_select_v” and “detect:detections_select_v_hsync”.

With “detect:detect_type” you specify which tags should be detected. It can be set to a multi-detection mode, in which you can further specify which camera should try to detect which tag type. It is strongly advised not to configure both cameras to detect the same tag type, since this gives unexpected results. To set the tag type for the horizontal camera, update the “detect:detections_select_h” parameter accordingly. For the vertical camera there are two parameters to choose from: “detect:detections_select_v” and “detect:detections_select_v_hsync”. Use exactly one of them to specify which tag type the vertical camera should detect, and never both, since this gives unexpected behavior. The difference is that the latter performs detection at 30 fps, as opposed to 60 fps for the former. If you do not need 60 fps detection, you are advised to use the 30 fps version, since this reduces the CPU load.

With “detect:enemy_colors” you can specify which of the three colored stripe tags should be detected. This setting is not used when “oriented roundel detection” is configured.

The AR.Drone 2.0 can detect up to four tags in a frame. The limit is four because detecting these tags is computationally expensive. The documentation states briefly that you can detect up to 4 tags or a roundel shell, which leaves it unclear whether you can detect a roundel together with 3 other tags, or only either 4 standard tags or a roundel shell. We will test this in the upcoming week. When the onboard detection algorithm detects tags in the current frame, it publishes its detections through the navigation data stream: if the VISION_DETECT option is enabled, the navigation data packet contains that option, and the information about the detected tags can be parsed from it. For the oriented roundel, the drone’s orientation with respect to the roundel’s direction is also published. With this information one can perform a yaw movement to align the drone’s direction with that of the roundel.

The complete vision detect option is 328 bytes long, hence the payload is 324 bytes. The payload starts with the 4-byte “# of detect tags” field, so the description of each tag takes (324 - 4) / 4 = 80 bytes. Every property field except “# of detect tags” is actually an array with a fixed size of 4. This means that the data fields of one tag are not stored consecutively; instead, the values of all four tags for a particular field are stored consecutively. For example, the 16 bytes from byte 4 up to (but not including) byte 20 hold the 4 type field values. Be aware of this structure while parsing.
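The field-by-field layout can be illustrated with a small Python sketch. It assumes that offsets are measured from the start of the payload, that “# of detect tags” is a little-endian unsigned 32-bit integer at offset 0, and that each type value is an unsigned 32-bit integer; the helper names are hypothetical.

```python
import struct

MAX_TAGS = 4  # every per-tag field is an array of 4 values


def read_field_array(payload: bytes, offset: int, fmt: str = "I") -> tuple:
    """Read the 4 consecutive values of one field, one value per tag slot."""
    return struct.unpack_from(f"<{MAX_TAGS}{fmt}", payload, offset)


def parse_detected_types(payload: bytes) -> list:
    """Return the type values of the tags that were actually detected."""
    # Assumed: the tag count is a uint32 at payload offset 0.
    (count,) = struct.unpack_from("<I", payload, 0)
    # The 4 type values occupy payload bytes 4 up to (not including) 20.
    types = read_field_array(payload, 4)
    # Slots beyond the count hold dummy data and are dropped.
    return list(types[: min(count, MAX_TAGS)])
```

Other fields can be read the same way by passing their offset from the table to `read_field_array`, then picking index i from each array to assemble the data for tag i.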

Detailed structure of the vision detect option: File:Table vision detection.pdf

After digging deep into the official SDK, we found that the type is not a simple value but a composition of two bit fields with different semantics: the upper 16 bits represent the camera source, and the lower 16 bits represent the actual tag type that has been detected. The offset given in the table is for convenience: it denotes the byte offset at which the first element of the array for the corresponding field starts, which is useful for parsing. To extract the source from the type field, compute “(type value >> 16) & 0x0FF”; to extract the tag type, compute “type value & 0x0FF”, according to the definitions in “ardrone_api.h”.
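A minimal Python sketch of this decomposition follows. It assumes the camera source sits in the upper 16 bits of the type value, so it is recovered by shifting right by 16 bits before masking; the function names are illustrative and are not the SDK's macro names.

```python
def extract_source(type_value: int) -> int:
    """Camera source: shift the upper 16 bits down, then keep the low byte."""
    return (type_value >> 16) & 0x0FF


def extract_tag(type_value: int) -> int:
    """Tag type: keep the low byte of the lower 16 bits."""
    return type_value & 0x0FF


# Hypothetical type value: source 1 in the upper half, tag type 8 in the lower half.
type_value = (1 << 16) | 8
print(extract_source(type_value))  # 1
print(extract_tag(type_value))     # 8
```

Note that shifting left instead of right would always produce 0 after masking with 0x0FF, because a left shift by 16 clears the lowest 16 bits.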