PRE2024 3 Group18: Difference between revisions

Revision as of 15:18, 19 March 2025

Members

| Name | Student Number | Division |

|---|---|---|

| Bas Gerrits | 1747371 | B |

| Jada van der Heijden | 1756710 | BPT |

| Dylan Jansen | 1485423 | B |

| Elena Jansen | 1803654 | BID |

| Sem Janssen | 1747290 | B |

Approach, milestones and deliverables

| Week | Milestones |

|---|---|

| Week 1 | Project orientation; Brainstorming; Defining deliverables and limitations; Creating planning; Initial topic ideas; Defining target users |

| Week 2 | Wiki layout; Task distribution; SotA research; Identifying and determining specifications (hardware, software, design (MoSCoW Prioritization)); UX Design: User research |

| Week 3 | UX Design: User Interviews; Wiki: Specifications, Functionalities (sensors/motors used) |

| Week 4 | Prototyping cycle: building and mock-ups; UX Design: Processing interviews; Order needed items |

| Week 5 | Evaluating and refining final design |

| Week 6 | Demo/scenario testing, fine-tuning; UX Design: Second round of interviews (evaluation); Wiki: Testing results |

| Week 7 | Presentation/demo preparations; Wiki: Finalize and improve (e.g. Conclusion, Future work, Discussion) |

| Name | Responsibilities |

|---|---|

| Bas Gerrits | Arduino control |

| Jada van der Heijden | Administration, UX design, Wiki |

| Dylan Jansen | Code, Electronics, SotA |

| Elena Jansen | Designing, user research |

| Sem Janssen | Hardware |

Problem statement and objectives

Problem Statement

In an environment as ever-changing and unpredictable as traffic, it is important for every traffic participant to have as much information about the situation available to them as possible, for safety reasons. Most roads are already covered in guiding materials: traffic lights, crosswalks and level crossings have visual, tactile and auditory signals that relay as much information to road users as possible. Unfortunately, some user groups are more dependent on certain types of signals than others, for example due to disabilities. Not every crossing or road has all of these sensory cues, so it is important to find a solution for those user groups that struggle with this lack of information and therefore feel less safe in traffic. Specifically, we are looking at visually impaired people, and at creating a system/robot/design that will aid them in crossing roads in most traffic situations, even when external sensory cues are lacking.

Main objectives:

- The design should be able to aid the user in crossing a road, regardless of external sensory cues already put in place, with the purpose of giving more autonomy to the user

- The design must have audible, or otherwise noticeable, alerts for the user, that are easily understood by said user

- The design must have a reliable detection system

- The design must be 'hidden': it should not disrupt the user's QoL and could be barely noticeable as technology

- Ex. hidden in the user's clothing

An extended list of all features can be found in the MoSCoW Requirements section.

State of the Art Literature Research

Existing visually-impaired aiding materials

Today there already exist a lot of aids for visually impaired people. Some of these can also be applied to help cross the road. The most common aids for visually impaired people when crossing are audio traffic signals and tactile pavement. Audio traffic signals provide audible tones when it’s safe to cross the road. Tactile pavement consists of patterns on the sidewalk that alert visually impaired people to hazards or important locations like crosswalks. These aids are already widely applied but come with the drawback that they are only available at dedicated crosswalks. This means visually impaired people might still be unable to cross at locations they would like to, which doesn’t positively affect their autonomy.

Another option is smartphone apps. There are currently two different types of apps that visually impaired people can use. The first is apps that use a video call to connect visually impaired people to someone that can guide them through the use of the camera. Examples of these apps are Be My Eyes and Aira. The second type is an app utilizing AI to describe scenes using the phone’s camera. An example of this is Seeing AI by Microsoft. The reliability of this sort of app is of course a major question.

There have also been attempts to make guidance robots. These robots autonomously guide the user, avoid obstacles, stay on a safe path, and help the user get to their destination. Glidance is one of these robots currently in the testing stage. It promises obstacle avoidance, the ability to detect doors and stairs, and a voice to describe the scene. In its demonstration it also shows the ability to navigate to and across crosswalks. It navigates to a nearby crosswalk, slows down and comes to a standstill before the pavement ends, and keeps traffic in mind. It also gives the user subtle tactile feedback to communicate certain events to them. These robots could in some ways take over the tasks of guide dogs. There are also projects that try to make such robots dog-like, even though this might make the implementation harder than it needs to be. The reason for the dog shape seems to be to make the robot feel more like a companion.

There also exist some wearable/accessory options for blind people. One example is the OrCam MyEye: a device attachable to glasses that helps visually impaired users by reading text aloud, recognizing faces, and identifying objects in real time. Another is the eSight Glasses: electronic glasses that enhance vision for people with legal blindness by providing a real-time video feed displayed on special lenses. There is also the Sunu Band (no longer available): a wristband equipped with sonar technology that provides haptic feedback when obstacles are near, helping users detect objects and navigate around them. While these devices can all technically assist in crossing the road, none of them are specifically designed for that purpose. The OrCam MyEye could maybe help identify oncoming traffic but may not be able to judge its speed. The eSight Glasses are unfortunately not applicable to all types of blindness. And the Sunu Band would most likely not react fast enough to fast-driving cars. Lastly, there are some smart canes that come with features like haptic feedback or GPS that can help guide users to the safest crossing points.

About road aids

??

User Experience Design Research

For this project, we will employ a process similar to UX design:

- We will contact the stakeholders or target group, which is in this case visually impaired people, to understand what they need and what our design could do for them

- Based on their insight and literature user research, we will further specify our requirements list from the USE side

- Combined with requirements formed via the SotA research, a finished list will emerge with everything needed to start the design process

- From then we build, prototype and iterate until needs are met

Below are the USE related steps to this process.

USEr research

Target Group

Primary Users:

- People with affected vision that would have sizeable trouble navigating traffic independently: ranging from visually impaired to fully blind

Secondary Users:

- Road users: any moving being or thing on the road will be in contact with the system.

- Fellow pedestrians: the system must consider other people when moving. This is a separate user category, as the system may have to interact with these users in a different way than, for example, oncoming traffic.

Users

What Do They Require?

The main takeaway here is that visually impaired people are still very capable people, and have developed different strategies for how to go about everyday life.

‘Not having 20/20 vision is not an inability or disability, it's just not having the level of vision the world deems acceptable. I'm not disabled because I'm blind but because the sighted world has decided so.’ (TEDx Talks, 2018). This was said by Azeem Amir in a TED talk about how being blind would never stop him. In the interviews and research it came up again and again that being blind does not mean you cannot be an active participant in traffic. While some people have lost a lot of independence due to being blind, there is a large group that goes outside daily, takes the bus, walks in the city and crosses the street. There are, however, still situations where it does not feel safe to cross a busy street. In those cases users have reported having to walk around it, sometimes for kilometers, or asking a stranger for help, which can be annoying and frustrating. In a Belgian interview with blind children, a boy said the worst thing about being blind was not being able to cycle and walk everywhere by himself (Karrewiet van Ketnet, 2020); our own interviews with users reflected this as well. So our number one objective with this project is to give back that independence.

A requirement of the product is that it does not draw too much attention to the user. While some have no problem showing the world they're legally blind and need help, others prefer not to have a device announce that they are blind over and over.

!!From user research we will probably find that most blind people are elderly. We can conclude somewhere here then that we will focus on elderly people, and that that will have implications for the USEr aspects of the design as elderly people are notorious for interacting and responding differently to technology.!!

Society

How is the user group situated in society? How would society benefit from our design?

People who are visually impaired are treated somewhat differently in society. In general, there are plenty of guidelines and forms of help accessible to this group of people, considering that globally at least 2.2 billion people have a near or distance vision impairment (https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment). In the Netherlands there are 200 000 people with between 0% and 10% sight ('ernstige slechtziendheid' and 'blindheid', i.e. severe visual impairment and blindness). It is important to remember that a large share of people who are legally blind can still see something. Peter, whom we interviewed for this project and who is a member of innovation space on campus, has 0.5% sight with tunnel vision. This means Peter can only see something in the very center. Sam from a Willem Wever interview has 3% sight with tunnel vision and can be seen in the interview wearing special glasses through which he can still watch TV and read (Kim Bols, 2015). Society often forgets this and simply assumes blind people see nothing at all. This is true for George, a 70-year-old man we interviewed, but not for all target users.

The most popular aids for visually challenged people are currently the cane and the support dog. Using these, blind people navigate their way through traffic. In the Netherlands the law states that cars have to stop when the cane is raised. In reality this does not always happen, and bikes in particular do not stop; this was said in multiple interviews. Many people do have the decency to stop, but it is not a fail-proof method. Blind people thus remain dependent on their environment. Consistency, markings, auditory feedback and physical buttons are there to make being independent more accessible. These features are more prominent in big cities, which is why our scenario takes place in a town.

Many interviewees mentioned apps on their phones, projects from other students, reports of products in other countries, devices with a monthly fee, etc. Many options for improvement are being designed or researched, but none are being brought to the wider market.

As society stands right now, the existing aids do help visually impaired people in their day-to-day life, but do not give them the security and confidence needed to feel more independent and on a more equal footing with people without impaired vision. To close this gap, it is key to create a design that focuses on giving these people a greater sense of independence and self-reliance.

Enterprise

What businesses and companies are connected to this issue?

Peter from Videlio says the community does not get much funding or attention when it comes to new innovations. This is why he has taken it into his own hands to create a light-up cane for use at night, which until now did not exist for Dutch users. There is a lot of research at universities and in school projects because this is an 'interesting weak user group', but these projects do not result in actual new products on the market.

REFERENCES

TEDx Talks. (2018, November 8). Blind people do not need to see | Santiago Velasquez | TEDxQUT [Video]. YouTube. https://www.youtube.com/watch?v=LNryuVpF1Pw

Karrewiet van Ketnet. (2020, October 15). hoe is het om blind of slechtziend te zijn? [Video]. YouTube. https://www.youtube.com/watch?v=c17Gm7xfpu8

Kim Bols. (2015, February 18). Willem Wever - Hoe is het om slechtziend te zijn? [Video]. YouTube. https://www.youtube.com/watch?v=kg4_8dECL4A

Stakeholder Analysis

To come into closer contact with our target groups, we reached out to multiple associations via mail. The following questions were asked (in Dutch), as a way to gather preliminary information:

Following these questions, we got answers from multiple associations that were able to give us additional information.

From this, we were able to set up interviews via Peter Meuleman, researcher and founder of Videlio Foundation. This foundation focuses on enhancing daily life for the visually impaired through personal guidance, influencing governmental policies and stimulating innovation in the field of assistive tools. Peter himself is very involved in the research and technological aspects of these tools, and was willing to be interviewed by us for this project. He also provided us with interviewees via his foundation and his personal circle.

Additionally, via personal contacts, we were also able to visit an art workshop at StudioXplo on x meant for the visually impaired. We were able to conduct some interviews with the intended users on site.

(how do we cross the road vs. hard of seeing)

Creating the solution

MoSCoW Requirements

Prototypes/Design

Here we will put some different versions of a design of what we think will be a solution. We can pick one to work on.

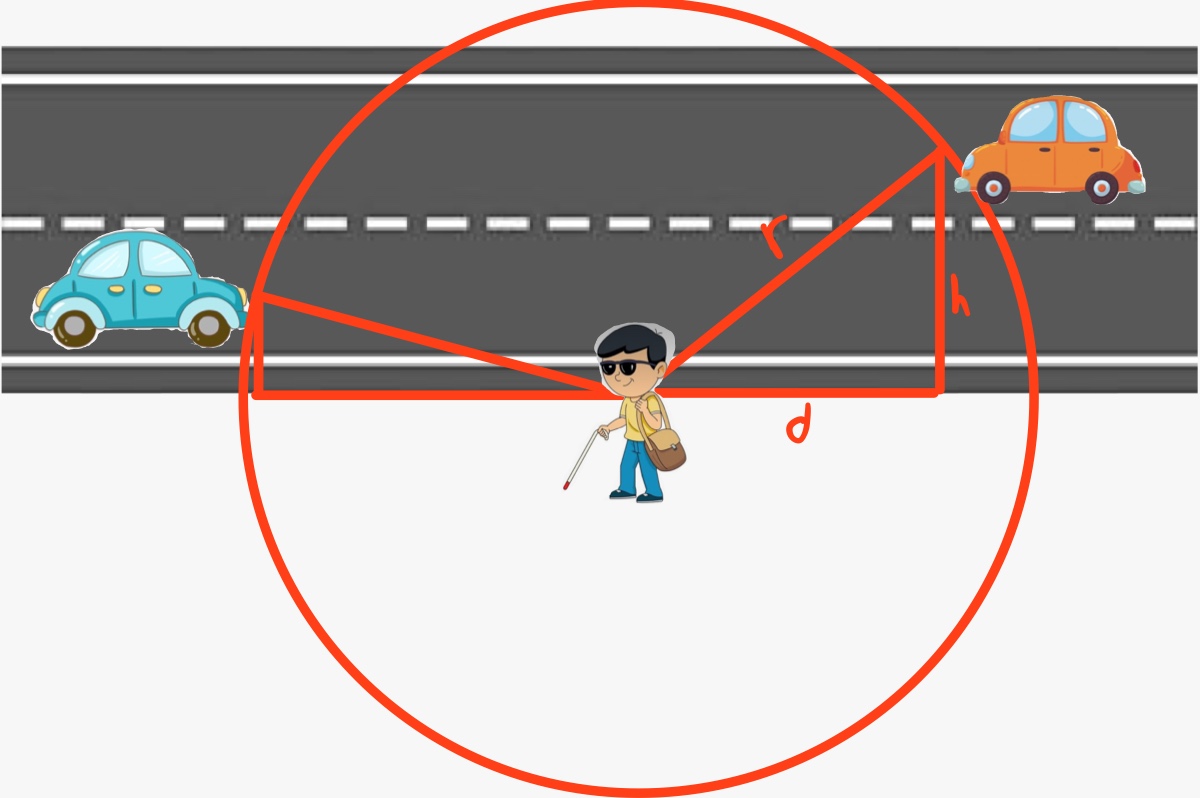

To safely cross a road with a speed limit of 50 km/h using distance detection technology, we need to calculate the minimum distance the sensor must measure.

Assuming a standard two-lane road width of 7.9 meters to be on the safe side, the middle of the second lane is at 5.8 meters. This gives us a height h of 5.8 meters.

The average walking speed is 4.8 km/h, which converts to 1.33 m/s. Therefore, crossing the full 7.9-meter road width takes approximately 5.925 seconds.

The distance d can now be calculated. A car traveling at 50 km/h moves at 13.88 m/s, so:

d = 13.88 × 5.925 ≈ 82.24 meters

Applying the Pythagorean theorem, the radius r is:

r = sqrt(d^2 + h^2) = sqrt(82.24^2 + 5.8^2) ≈ 82.44 meters

This means the sensor needs to measure a minimum distance of approximately 82.44 meters to ensure safe crossing.
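The calculation above can be reproduced with a short Python sketch. The function name and parameter names are our own, chosen for illustration; the default values are the ones stated in the text. Because the script does not round the intermediate car speed (the text uses 13.88 m/s), it arrives at a marginally larger range of about 82.5 meters.

```python
import math


def required_sensor_range(road_width_m=7.9, lateral_offset_m=5.8,
                          walking_speed_kmh=4.8, car_speed_kmh=50.0):
    """Return (crossing_time_s, d_m, r_m) for the crossing geometry.

    Hypothetical helper: d is the distance a car covers along the road
    while the pedestrian crosses, r is the straight-line sensor range.
    """
    walking_speed_ms = walking_speed_kmh / 3.6   # km/h -> m/s
    car_speed_ms = car_speed_kmh / 3.6           # km/h -> m/s
    crossing_time_s = road_width_m / walking_speed_ms
    d_m = car_speed_ms * crossing_time_s
    r_m = math.hypot(d_m, lateral_offset_m)      # sqrt(d^2 + h^2)
    return crossing_time_s, d_m, r_m


t, d, r = required_sensor_range()
print(f"crossing time: {t:.3f} s, d: {d:.2f} m, r: {r:.2f} m")
```

Changing the defaults (for example a lower speed limit or a narrower road) shows directly how the required range scales.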

Sensors that are capable of this cost more than 2000 euros, which is outside the budget for the course, so for the prototype a scaled-down version needs to be used to prove the concept. The scaled speed will depend on the distance the sensor can measure, and follows from the ratio below.

speed/distance = 50/82.44
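As a minimal sketch, the ratio can be turned into a small helper that gives the test speed for a given sensor range. The function name and the 4-meter example range are assumptions for illustration only; no specific prototype sensor has been chosen in the text.

```python
FULL_SCALE_SPEED_KMH = 50.0   # speed limit used in the calculation
FULL_SCALE_RANGE_M = 82.44    # required full-scale sensor range


def scaled_speed_kmh(sensor_range_m):
    """Speed at which a scaled-down test keeps the same speed/distance ratio."""
    return sensor_range_m * FULL_SCALE_SPEED_KMH / FULL_SCALE_RANGE_M


# Hypothetical example: a prototype sensor that reaches 4 meters.
print(f"{scaled_speed_kmh(4.0):.2f} km/h")
```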

Idea 1

The first design uses a servomotor to rotate the distance sensor. The sensor measures distances while turning 180 degrees, after which the motor rotates back 180 degrees, the sensor measures again, and the two sets of distances are compared. A change in the distances means there is movement in the environment, in which case the device tells the user that it is not safe to cross the road.
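The comparison step of Idea 1 could look roughly like the Python sketch below. It is not the prototype code: it assumes both sweeps are stored as lists of distances ordered by the same angle steps, and the function name and the noise tolerance of 0.2 meters are hypothetical choices.

```python
def movement_detected(first_sweep, second_sweep, tolerance_m=0.2):
    """Return True if any angle shows a distance change above the tolerance.

    Both sweeps are assumed to hold one distance (in meters) per angle step,
    in the same angular order. The tolerance absorbs sensor noise.
    """
    if len(first_sweep) != len(second_sweep):
        raise ValueError("sweeps must contain the same number of readings")
    return any(abs(a - b) > tolerance_m
               for a, b in zip(first_sweep, second_sweep))


# Hypothetical readings: the middle angle changed from 5.0 m to 3.5 m.
sweep_out = [5.0, 5.0, 5.0]
sweep_back = [5.0, 3.5, 5.0]
print("not safe to cross" if movement_detected(sweep_out, sweep_back)
      else "no movement detected")
```

If the sensor records the return sweep in reverse angular order, that list would have to be reversed before the comparison.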

Idea 2

The second design uses a better distance sensor which measures even further and will calculate the speed of the road users. Depending on this speed the device tells the user if it is safe to cross the road.
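One way the speed-based decision of Idea 2 could work is sketched below in Python. This is a simplification under stated assumptions: speed is estimated from two successive distance readings along the line of sight, and the road is considered safe when the vehicle needs longer to arrive than the 5.925 seconds crossing time derived earlier. The function names and sample values are hypothetical.

```python
def approach_speed_ms(previous_distance_m, current_distance_m, interval_s):
    """Estimate approach speed from two readings; positive means approaching."""
    return (previous_distance_m - current_distance_m) / interval_s


def safe_to_cross(distance_m, speed_ms, crossing_time_s=5.925):
    """Safe if the object is not approaching or arrives after the crossing."""
    if speed_ms <= 0:
        return True  # stationary or moving away
    return distance_m / speed_ms > crossing_time_s


# Hypothetical readings one second apart: 80.0 m, then 66.12 m.
speed = approach_speed_ms(80.0, 66.12, 1.0)
print(safe_to_cross(66.12, speed))
```

A real device would likely average several readings before deciding, since a single noisy measurement could flip the outcome.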

Idea 3

Wearable AI camera

This wearable device has a camera built into it in order to view traffic scenes that visually impaired people might want to cross but lack the vision to do so safely. The camera sends its video to some kind of small computer running an AI image processing model. This model would then extract parameters that are important for safely crossing the road, such as whether there is oncoming traffic, whether there are other pedestrians, how long crossing would take, or whether there are traffic islands. This information can then be communicated back to the user according to their preferences: audio (like a voice or certain tones) or haptic (through vibration patterns). The device could be implemented in various wearable forms, for example a camera attached to glasses, something worn around the chest or waist, a hat, or something worn on the shoulder. The main concern is that the user feels comfortable with the solution: it should be easy to use, not be in the way, and be hidden if it makes them self-conscious.

This idea requires quite a sophisticated AI model. The computer must be able to quickly detect cars and relay the information back to the user, or else the window to safely cross the road might have already passed. It also needs to be reliable: should it misidentify a car or misjudge its speed, this could put the user's life at risk. Running an AI model like the one proposed could also pose a challenge on a small computer. The computer has to be attached to the user in some way and can be quite obtrusive if it is too large. Users might also feel uncomfortable if it is very noticeable that they are wearing an aid, and a large computer could make it difficult to make the device discreet. On the other hand, an advanced model could probably not run locally on a very small machine, especially considering the fast reaction times required.

Implementation

Evaluation

Conclusion

Discussion

Appendix

Timesheets

| Week | Names | Breakdown | Total hrs |

|---|---|---|---|

| Week 1 | Bas Gerrits | Meeting & brainstorming (3h), SotA research (4h), working out concepts (1h), sub-problem solutions (2h) | 10 |

| | Jada van der Heijden | Meeting & brainstorming (3h), planning creation (2h), wiki cleanup (2h), SotA research (3h) | 10 |

| | Dylan Jansen | Meeting & brainstorming (3h), SotA research (3h), working out concepts (2h), looking for reference material (2h) | 10 |

| | Elena Jansen | Meeting & brainstorming (3h) | |

| | Sem Janssen | Meeting & brainstorming (3h) | |

| Week 2 | Bas Gerrits | Meeting (2h), User research (2h), MoSCoW (2h), design (2h), design problem solving (4h) | |

| | Jada van der Heijden | Meeting (2h), Editing wiki to reflect subject change (2h), Contacting institutions for user research (1h), Setting up user research (interview questions) (1h), User research (2h) | 7 |

| | Dylan Jansen | Meeting (2h), Contacting robotics lab (1h), SotA research for new subject (4h), Looking for reference material (2h), Updating Wiki (1h), Prototype research (2h) | 12 |

| | Elena Jansen | Meeting (2h) | |

| | Sem Janssen | Meeting (2h) | |

| Week 3 | Bas Gerrits | Meeting (3h), Looking at sensors and such to see what is possible (making a start on program) (3h), Theoretical situation conditions (3h) | |

| | Jada van der Heijden | Meeting (3h), Editing User Research wiki (4h), Refining interview questions (2h) | 9 |

| | Dylan Jansen | Meeting (3h), Updating Wiki (1h), uploading and refining SotA (4h), Ideas for prototype (2h), Bill of materials for prototype (2h) | 12 |

| | Elena Jansen | Meeting (3h), Making interview questions for online, updating user research wiki | |

| | Sem Janssen | Meeting (3h), Looking at different options for relaying feedback (design) | |

| Week 4 | Bas Gerrits | Meeting (3h) | |

| | Jada van der Heijden | Meeting (3h), Editing User Research wiki (xh), Refining interview questions (2h), Interviewing (xh) | |

| | Dylan Jansen | Meeting (3h), Working out a potential design (3h), Adding design description to wiki (2h), Discussion on best solution (2h) | 10 |

| | Elena Jansen | | |

| | Sem Janssen | Meeting (3h) | |

| Week 5 | Bas Gerrits | Tutor meeting + after meeting (2h), online meeting (1h) | |

| | Jada van der Heijden | Tutor meeting + after meeting (2h), online meeting (1h) | |

| | Dylan Jansen | Tutor meeting + after meeting (2h), online meeting (1h) | |

| | Elena Jansen | Tutor meeting + after meeting (2h), online meeting (1h), interview 1 (1h), interview 2 (2h), real life visit for interviews + preparation (6h), analysing forms interviews (1h), updating wiki (2h), emailing with participants (30m), designing bracelet + sensor wearable (sketching + 3d) (4h) | |

| | Sem Janssen | Online meeting (1h) | |

| Week 6 | Bas Gerrits | | |

| | Jada van der Heijden | | |

| | Dylan Jansen | | |

| | Elena Jansen | | |

| | Sem Janssen | | |

| Week 7 | Bas Gerrits | | |

| | Jada van der Heijden | | |

| | Dylan Jansen | | |

| | Elena Jansen | | |

| | Sem Janssen | | |

140 pp, 700 total, ~20 hrs a week