PRE2024 1 Group2


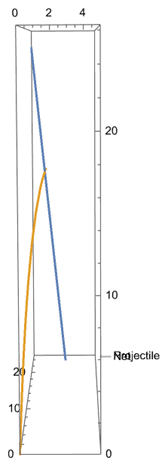

Wind has a large effect on the paths of both the projectile and the net, so the model must also work under these circumstances. To determine the accelerations of the projectile and the net, the drag force on each object must be known. The drag force depends mainly on two factors: the drag coefficient and the frontal area of the object. Because different projectiles are used in warfare, such as hand grenades or Molotov cocktails, the exact drag coefficient and frontal area of the projectile are unknown. After a literature review, we decided to use average values for the drag coefficient and the frontal area, since the reported values lie within a similar range. The frontal area can be estimated reasonably well, because drones can only carry objects of limited size. Our calculations show that if the net (including the weights on its corners) weighs 3 kg, the wind-induced accelerations of the projectile and the net are identical, so the interception still succeeds, as shown in the figure. This result is based on literature values; at a later stage, the exact drag coefficient and surface area of the net must be determined and the net's weight adjusted accordingly. For projectiles whose drag coefficient or frontal area differ from the assumed values, the model shows that deviations of up to 50% do not shift the projectile enough for the interception to miss. This range covers almost all theoretical values found for the different projectiles, which makes the model robust against wind.
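As a minimal sketch of the reasoning above, assuming the standard quadratic drag law F_d = ½ρC_dAv² and a = F_d/m: two objects in the same crosswind are accelerated equally when C_dA/m is the same for both, which fixes the net mass for a given projectile. The helper names and every numerical value below (projectile mass, drag coefficients, areas, wind speed) are hypothetical placeholders, not the literature values used in our model.

```python
RHO_AIR = 1.225  # air density at sea level in kg/m^3 (standard atmosphere)


def drag_acceleration(v_rel, cd, area, mass, rho=RHO_AIR):
    """Acceleration magnitude from quadratic drag: a = 0.5*rho*Cd*A*v_rel^2 / m.

    v_rel is the air speed relative to the object (e.g. a crosswind component).
    """
    return 0.5 * rho * cd * area * v_rel ** 2 / mass


def matching_net_mass(cd_net, area_net, cd_proj, area_proj, mass_proj):
    """Net mass for which wind accelerates the net and the projectile equally.

    Equal drag accelerations require Cd*A/m to be the same for both objects.
    """
    return mass_proj * (cd_net * area_net) / (cd_proj * area_proj)


# Hypothetical placeholder values, for illustration only.
proj_cd, proj_area, proj_mass = 0.5, 0.005, 0.4  # small grenade-like object
net_cd, net_area = 1.0, 0.02                     # assumed effective values for a mesh net
wind = 5.0                                       # m/s crosswind relative to both objects

m_net = matching_net_mass(net_cd, net_area, proj_cd, proj_area, proj_mass)
a_proj = drag_acceleration(wind, proj_cd, proj_area, proj_mass)
a_net = drag_acceleration(wind, net_cd, net_area, m_net)
# A projectile whose Cd is 50% higher than assumed drifts faster than the net:
a_proj_dev = drag_acceleration(wind, 1.5 * proj_cd, proj_area, proj_mass)
print(f"net mass {m_net:.2f} kg, a_proj {a_proj:.4f}, a_net {a_net:.4f}, "
      f"a_proj(+50% Cd) {a_proj_dev:.4f} m/s^2")
```

Whether such a mismatch still leads to a hit depends on the flight time and the net size, which is what the full model evaluates.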

An uncertainty within the system is the exact location of the drone. We aim to know accurately where the drone, and thus the projectile, is. In reality, perfect positional accuracy is unachievable, but we can get close, so the sensors in the system must be optimized to locate the drone as precisely as possible. Fortunately, there are sensors that achieve high accuracy, for example a LiDAR sensor with a range of 2000 m and an accuracy of 2 cm. This range is well within the distances at which our system operates, and the 2 cm accuracy is far smaller than the size of the net (100 cm by 100 cm), so it should not cause problems for the interception.
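The margin argument above can be made explicit with a small sketch. The assumptions are ours, for illustration: the net is square and centred on the predicted interception point, the position error acts along a single axis in the worst case, and the projectile radius is a hypothetical parameter.

```python
def interception_margin(net_width_m, position_error_m, projectile_radius_m=0.0):
    """Slack (m) left before a worst-case position error pushes the projectile
    past the edge of a square net centred on the predicted interception point."""
    return net_width_m / 2 - projectile_radius_m - position_error_m


def error_share(net_width_m, position_error_m):
    """Fraction of the net's half-width consumed by the sensor position error."""
    return position_error_m / (net_width_m / 2)


# 100 cm x 100 cm net and 2 cm LiDAR accuracy, as in the text above;
# the 5 cm projectile radius is a hypothetical value.
margin = interception_margin(1.0, 0.02, projectile_radius_m=0.05)
share = error_share(1.0, 0.02)
print(f"margin {margin:.2f} m, sensor error uses {share:.0%} of the half-width")
```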

![Imagevvd](Imagevvd.png)

Revision as of 09:33, 19 October 2024

Group Members

| Name | Student ID | Department |
|---|---|---|
| Max van Aken | 1859455 | Applied Physics |
| Robert Arnhold | 1847848 | Mechanical Engineering |
| Tim Damen | 1874810 | Applied Physics |
| Ruben Otter | 1810243 | Computer Science |
| Raul Sanchez Flores | 1844512 | Computer Science / Applied Mathematics |

Problem Statement

The goal of this project is to create an easily portable system that can be used as the last line of defense against incoming projectiles.

Objectives

To come up with a solution for our problem we have the following objectives in mind:

- Determine how drones and projectiles can be detected.

- Determine how a drone or projectile can be intercepted and/or redirected.

- Build a prototype of this portable device.

- Explore and determine ethical implications of the portable device.

- Prove the system’s utility.

Planning

We will work on this project over the upcoming 8 weeks. The table below lays out the project's tasks and the week in which each will be performed.

| Week | Task |
|---|---|
| 1 | Initial planning and setting up the project. |
| 2 | Literature research. |
| 3 | Create ethical framework. |
| | Conduct an interview with an expert to confirm and construct the use cases. |
| | Start constructing prototype and software. |
| | Determine potential problems. |
| 4 | Continue constructing prototype and software. |
| 5 | Finish prototype and software. |
| 6 | Test prototype to verify its effectiveness and use cases. |
| | Evaluate testing results and make final changes. |
| 7 | Finish Wiki page. |
| 8 | Create final presentation. |

Users and their Requirements

We currently have two main use cases for this project in mind:

- Military forces facing threats from drones and projectiles.

- Privately-managed critical infrastructure in areas at risk of drone-based attacks.

The users of the system will require the following:

- Minimal maintenance

- High reliability

- System should not pose an additional threat to its surroundings

- System must be personally portable

- System should work reliably in dynamic, often extreme, environments

- System should be scalable and interoperable in concept

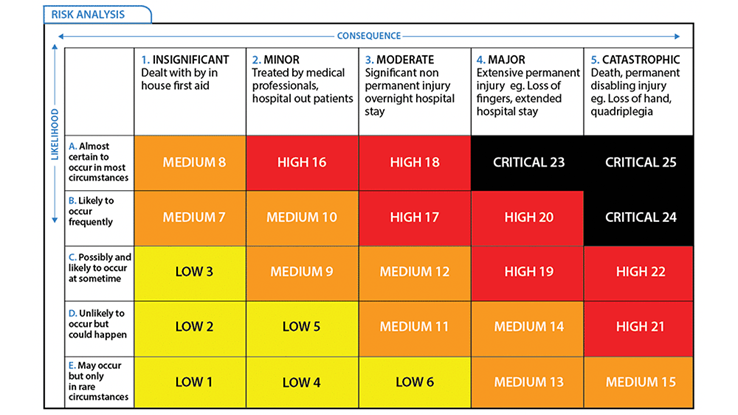

Risk Evaluation

A risk evaluation matrix can be used to determine where the risks in our project lie. It is based on two factors: the consequence if a task is not fulfilled and the likelihood that this happens. Both factors are rated on a scale from 1 to 5, and the matrix below is used to determine the final risk, which can be low, medium, high or critical. Knowing the risks beforehand helps prevent failures, since it shows where special attention is required.

| Task | Consequence (1-5) | Likelihood (1-5) | Risk |
|---|---|---|---|
| Collecting 25 articles for the SoTA | 1 | 1 | Low |
| Interviewing a front-line soldier | 1 | 2 | Low |
| Finding features for our system | 4 | 1 | Medium |
| Making a prototype | 3 | 3 | Medium |
| Making the wiki | 5 | 1 | Medium |
| Finding a detection method for drones and projectiles | 4 | 1 | Medium |
| Determining an (ethical) method to intercept or redirect drones and projectiles | 5 | 1 | Medium |
| Proving the system's utility | 5 | 2 | High |
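The table can be reproduced with a simple score-based classification. This is only a sketch: it assumes the risk follows from the product consequence × likelihood, and the thresholds (Low up to 3, Medium up to 9, High up to 16, Critical above that) are hypothetical values chosen to agree with every row of the table, not the exact boundaries of the matrix we used.

```python
def risk_level(consequence: int, likelihood: int) -> str:
    """Classify a task's risk from two 1-5 ratings (hypothetical thresholds)."""
    if not (1 <= consequence <= 5 and 1 <= likelihood <= 5):
        raise ValueError("ratings must be between 1 and 5")
    score = consequence * likelihood
    if score <= 3:
        return "Low"
    if score <= 9:
        return "Medium"
    if score <= 16:
        return "High"
    return "Critical"


# (task, consequence, likelihood) rows from the table above
TASKS = [
    ("Collecting 25 articles for the SoTA", 1, 1),
    ("Interviewing a front-line soldier", 1, 2),
    ("Finding features for our system", 4, 1),
    ("Making a prototype", 3, 3),
    ("Making the wiki", 5, 1),
    ("Finding a detection method", 4, 1),
    ("Determining an (ethical) interception method", 5, 1),
    ("Proving the system's utility", 5, 2),
]

for task, consequence, likelihood in TASKS:
    print(f"{task}: {risk_level(consequence, likelihood)}")
```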

Detection

Drone Detection

The Need for Effective Drone Detection

With the rapid advancement and production of unmanned aerial vehicles (UAV), particularly small drones, new security challenges have emerged for the military sector.[2] Drones can be used for surveillance, smuggling, and launching explosive projectiles, posing threats to infrastructure and military operations.[2] Within our project we mostly look at the threat of drones launching explosive projectiles. Our objective is to develop a portable, last-line-of-defense system that can detect drones and intercept and/or redirect the projectiles they launch. An important aspect of such a system is the capability to detect drones reliably in real time, possibly in dynamic environments.[3] The challenge is to create a solution that is not only effective but also lightweight, portable, and easy to deploy.

Approaches to Drone Detection

Numerous approaches have been explored in the field of drone detection, each with its own set of advantages and disadvantages.[4][3] The main methods include radar-based detection, radio frequency (RF) detection, acoustic-based detection, and vision-based detection.[2][4] It is essential for our project to analyze these methods within the context of portability and reliability, to identify the most suitable method, or combination of methods.

Radar-Based Detection

Radar-based systems are considered one of the most reliable methods for detecting drones.[4] Radar systems transmit electromagnetic waves that bounce off objects in the environment and return to the receiver, allowing the system to determine attributes of the object such as its range, velocity, and size.[4][3] Radar is especially effective at detecting drones in all weather conditions and can operate over long ranges.[2][4] Radars such as active pulse-Doppler radar can track the movement of drones and distinguish them from other flying objects based on the Doppler shift caused by the motion of the propellers (the micro-Doppler effect).[2][3][4]

Despite its effectiveness, radar-based detection systems come with certain limitations that must be considered. First, traditional radar systems are rather large and require significant power, making them less suitable for a portable defense system.[4] Additionally, radar can struggle to detect small drones flying at low altitudes due to their limited radar cross-section (RCS), particularly in cluttered environments like urban areas.[4] Millimeter-wave radar technology, which operates at high frequencies, offers a potential solution by providing better resolution for detecting small objects, but it is also more expensive and complex.[4][2]
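The range and velocity measurements mentioned above rest on two standard relations: the echo delay gives the range, R = c·Δt/2, and the Doppler shift of a monostatic radar gives the radial speed, f_D = 2v/λ. The sketch below applies them; the echo delay, Doppler shift and 24 GHz carrier are hypothetical example values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def echo_range(round_trip_time_s):
    """Target range from the echo delay; the wave travels out and back, so R = c*t/2."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2


def radial_velocity(doppler_shift_hz, carrier_freq_hz):
    """Radial speed from the Doppler shift of a monostatic radar: f_D = 2v/lambda."""
    wavelength = SPEED_OF_LIGHT / carrier_freq_hz
    return doppler_shift_hz * wavelength / 2


# Hypothetical example: an echo after 2 microseconds, and a 1.6 kHz shift
# measured by a radar with an assumed 24 GHz carrier.
print(f"range: {echo_range(2e-6):.1f} m")
print(f"radial speed: {radial_velocity(1600, 24e9):.2f} m/s")
```

Because f_D scales with 1/λ, a higher carrier frequency (as in millimeter-wave radar) produces a larger Doppler shift for the same target speed, which is one reason these radars resolve small, slow targets better.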

Radio Frequency (RF)-Based Detection

Another common method is detecting drones through radio frequency (RF) analysis.[2][3][4][5] Most drones communicate with their operators via RF signals, typically in the 2.4 GHz and 5.8 GHz bands.[2][4] RF-based detection systems monitor the electromagnetic spectrum for these signals, allowing them to identify the presence of a drone and its controller on these bands.[4] One advantage of RF detection is that it does not require line-of-sight, meaning the detection system does not need a direct view of the drone.[4] It can also operate over long distances, making it effective in a wide range of scenarios.[4]

However, RF-based detection systems have their limitations. They cannot detect drones that do not communicate with an operator, such as autonomous drones.[3] The systems are also less reliable in environments where many RF signals are present, such as cities.[4] Therefore, in situations where high precision and reliability are a must, RF-based detection may not be suitable.
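The simplest form of RF monitoring is energy detection: flag a band when the received power rises clearly above the noise floor. The sketch below does this for the 2.4 GHz (2400 to 2500 MHz) and 5.8 GHz (5725 to 5875 MHz) ISM bands; the function name, the 10 dB margin and the sweep values are hypothetical. It also illustrates the limitation described above: energy detection alone cannot tell a drone link from Wi-Fi in the same band, so practical systems additionally analyse signal signatures.

```python
# Band edges in MHz: the 2.4 GHz and 5.8 GHz ISM bands that drone links commonly use.
DRONE_BANDS_MHZ = {
    "2.4 GHz": (2400.0, 2500.0),
    "5.8 GHz": (5725.0, 5875.0),
}


def active_drone_bands(spectrum, noise_floor_dbm, margin_db=10.0):
    """Names of the bands in which some bin rises at least margin_db above the noise floor.

    spectrum: iterable of (frequency_mhz, power_dbm) pairs from a spectrum sweep.
    """
    threshold = noise_floor_dbm + margin_db
    active = set()
    for freq_mhz, power_dbm in spectrum:
        if power_dbm < threshold:
            continue  # too weak to distinguish from noise
        for name, (low, high) in DRONE_BANDS_MHZ.items():
            if low <= freq_mhz <= high:
                active.add(name)
    return sorted(active)


# Hypothetical sweep: a strong 2.44 GHz signal, a weak 5.8 GHz bin, and an out-of-band signal.
sweep = [(2440.0, -50.0), (5800.0, -95.0), (900.0, -30.0)]
print(active_drone_bands(sweep, noise_floor_dbm=-90.0))
```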

Acoustic-Based Detection

Acoustic detection systems rely on the unique sound signature produced by drones, particularly the noise generated by their propellers and motors.[4] These systems use highly sensitive microphones to capture these sounds and then analyze the audio signals to identify the presence of a drone.[4] The advantages of this type of detection are that it is relatively low cost and does not require line-of-sight; it is therefore mostly used for detecting drones behind obstacles in non-open spaces.[4][2]

However, it also has disadvantages. In noisy environments, such as battlefields, these systems are less effective.[3][4] Additionally, some drones are designed to operate silently.[3] Finally, they only work at relatively short ranges, since sound weakens over distance.[4]
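One way to look for a propeller's tonal signature is to measure the signal power at the expected blade-pass frequency (rotations per second times the number of blades). The sketch below uses the Goertzel algorithm, which evaluates a single DFT bin cheaply; this is our illustrative choice, not necessarily the analysis used in the cited systems, and the rotor speed, blade count and sample rate are hypothetical.

```python
import math


def blade_pass_frequency(rpm, blades):
    """Fundamental propeller tone in Hz: rotations per second times blade count."""
    return rpm / 60.0 * blades


def goertzel_power(samples, sample_rate, target_freq):
    """Power in the DFT bin nearest target_freq, computed with the Goertzel algorithm."""
    n = len(samples)
    k = round(n * target_freq / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


# Hypothetical rotor: 6000 rpm with 2 blades, sampled at 8 kHz for 0.25 s.
rate = 8000
f_bp = blade_pass_frequency(6000, 2)
tone = [math.sin(2 * math.pi * f_bp * i / rate) for i in range(2000)]
on_tone = goertzel_power(tone, rate, f_bp)
off_tone = goertzel_power(tone, rate, 900.0)
print(f"blade-pass {f_bp:.0f} Hz, power on tone {on_tone:.3g}, off tone {off_tone:.3g}")
```

In a noisy environment the on-tone power must be compared with the surrounding bins rather than with zero, which is exactly where battlefield noise reduces reliability.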

Vision-Based Detection

Vision-based detection systems use cameras, operating in either the visible or the infrared spectrum, to detect drones visually.[2][4] These systems rely on image-recognition algorithms, often based on machine learning.[4][5] Drones are then detected based on their shape, size and movement.[5] The main advantage of this type of detection is that operators can confirm the presence of a drone themselves and can differentiate between a drone and other objects such as birds.[4]

However, vision-based detection systems also have disadvantages.[3][4] They are highly dependent on environmental conditions: they need a clear line-of-sight and good lighting, and weather conditions can affect their accuracy.[3][4]

Best Approach for Portable Drone Detection

For our project, which focuses on a portable system, the ideal drone detection method must balance effectiveness, portability and ease of deployment. Based on this, a sensor fusion approach appears to be the most appropriate.[4]

Sensor Fusion Approach

Given the limitations of each individual detection method, a sensor fusion approach, which combines radar, RF, acoustic and vision-based sensors, offers the best chance of achieving reliable and accurate drone detection in a portable system.[4] Sensor fusion allows the strengths of each detection method to compensate for the weaknesses of the others, providing more effective detection in dynamic environments.[4]

- Radar Component: A compact millimeter-wave radar system would provide reliable detection in different weather conditions and across long ranges.[3] While radar systems are traditionally bulky, recent advancements make it possible to develop portable radar units that can be used in mobile systems.[4] These portable units would most likely be less effective than larger radars, which the sensor fusion approach would compensate for.[4]

- RF Component: Integrating an RF sensor will allow the system to detect drones communicating with remote operators.[4] This component is lightweight and relatively low-power, making it ideal for a portable system.[4]

- Acoustic Component: Adding acoustic sensors can help detect drones flying at low altitudes or behind obstacles, where radar may struggle.[2][4] This component is essentially a microphone, with the rest handled in software, which also makes it ideal for a portable system.[4]

- Vision-Based Component: A camera system equipped with machine learning algorithms for image recognition can provide visual confirmation of detected drones.[5][4] This component can be added using a lightweight, wide-angle camera, which again does not prevent the device from being portable.[4]
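A minimal sketch of how the four components could be combined, assuming each sensor reports its own probability that a drone is present. We use naive-Bayes log-odds fusion, one common textbook scheme that treats the sensors as conditionally independent; it is an illustrative choice, not a design decision from the cited sources, and the readings are hypothetical. A sensor without a usable reading (for example RF against an autonomous drone) is simply skipped.

```python
import math


def _logit(p):
    p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp to avoid infinite log-odds
    return math.log(p / (1.0 - p))


def fuse_detections(probabilities, prior=0.5):
    """Fuse per-sensor drone probabilities into one probability.

    Assumes conditionally independent sensors, each reporting its posterior
    P(drone | reading) computed with the same prior. None values are skipped.
    """
    prior_logit = _logit(prior)
    log_odds = prior_logit
    for p in probabilities:
        if p is not None:
            log_odds += _logit(p) - prior_logit  # add this sensor's evidence
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical readings: radar, RF (silent autonomous drone), acoustic, vision.
readings = [0.7, None, 0.6, 0.8]
print(f"fused probability of a drone: {fuse_detections(readings):.3f}")
```

Several moderately confident sensors thus reinforce each other, while one missing sensor does not block detection, which is the complementarity argued for above.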

Conclusion

To achieve portability, we have to restrict certain sensors and/or components. To still achieve effective drone detection, the best approach is therefore sensor fusion. The system would integrate radar, RF, acoustic and vision-based detection, which together compensate for each other's limitations, resulting in an effective, reliable and portable system.

Interception

Drone interception refers to a range of methods used to incapacitate or destroy rogue drones once they have been detected and identified as threats. The rise of small drones, including both off-the-shelf and custom-built UAVs, has led to the development of many counter-drone systems that aim to neutralise drones in a non-destructive or destructive manner. The choice of method depends on various factors, including the environment, threat level, and available technology.

Key Approaches to Interception

- Kinetic Interceptors: Kinetic methods physically destroy or incapacitate drones through direct impact. These include missile systems and kinetic projectiles, which engage drones at medium to long ranges. While effective, kinetic interceptors are typically expensive and may pose risks of collateral damage. Systems like the U.S. Army’s 40mm net grenade are non-lethal alternatives that physically trap drones without destruction, and at a low cost.

- Electronic Warfare (Jamming and Spoofing): One of the most common drone neutralization techniques involves electronic warfare, such as radio frequency (RF) and GNSS jamming. These methods disrupt the drone’s control signals or GPS navigation, forcing it to lose connectivity and potentially crash. Spoofing, on the other hand, involves hijacking the drone’s communication system, allowing operators to redirect it. While jamming is non-lethal, it may affect other nearby electronics and is ineffective against autonomous drones that don’t rely on external control signals.

- Directed Energy Weapons (Lasers and Electromagnetic Pulses): Directed energy systems like lasers and electromagnetic pulses (EMP) are designed to disable drones by damaging their electrical components or destroying them outright. Lasers offer precision and instant engagement but are costly and susceptible to environmental conditions like rain or fog. EMP systems can disable multiple drones at once but may also interfere with other electronics in the vicinity.

- Net-Based Capture Systems: These systems use physical nets to ensnare drones, rendering them incapable of flight. The nets can be launched from ground-based platforms or other drones and are highly effective against low-speed, low-altitude UAVs. This method is non-lethal and minimizes collateral damage but has limitations in range and reloadability.

- Geofencing: Geofencing involves creating virtual boundaries around sensitive areas using GPS coordinates. Drones equipped with geofencing technology are automatically programmed to avoid flying into restricted zones. This method is proactive but can be bypassed by modified or non-compliant drones.

Objectives of Effective Drone Neutralization

When designing or selecting a drone interception system, several key objectives must be prioritised:

- Low Cost-to-Intercept: Cost-effectiveness is critical, as small off-the-shelf and custom-built UAVs often cost far more to intercept than to build, which puts militaries at a net economic disadvantage, especially in environments where multiple drones may need to be intercepted over time. Using a $2 million Standard Missile-2 to intercept a drone that may cost as little as $2,000 is a clear example of this asymmetry (https://www.csis.org/analysis/cost-and-value-air-and-missile-defense-intercepts). Some methods, such as net-based systems, offer a low-cost solution, while others, like lasers or kinetic interceptors, are more expensive (1-s2.0-S266737972200023…).

- Portability: The ideal counter-drone system should be portable, lightweight, and collapsible for easy transportation and deployment in various settings. Systems like RF jammers and net throwers are typically more portable than missile-based solutions (AeroDefense Blog).

- Ease of Deployment: The ability to quickly set up and deploy a system is vital, particularly in fast-moving scenarios such as military operations or protecting large events. Systems that can be operated from vehicles or drones provide greater flexibility in dynamic environments (Counter_Drone_Technolog…).

- Quick Reloadability or Automatic Reloading: In high-threat environments, rapid or automatic reloading ensures continuous protection against multiple drone incursions. Laser-based systems and RF jammers offer this advantage, whereas net throwers and kinetic projectiles may need manual reloading (Counter_Drone_Technolog…).

- Minimal Collateral Damage: Especially in civilian areas, minimizing collateral damage is critical. Non-lethal methods such as jamming, spoofing, and net-based systems are preferred in such environments, as they neutralize threats without causing widespread damage (Counter_Drone_Technolog…)(1-s2.0-S266737972200023…).
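The cost asymmetry in the first objective can be quantified as a cost-exchange ratio: the defender's cost per intercept divided by the attacker's cost per drone. The function names and the shots-per-target parameter are our own illustrative choices; the missile and drone prices come from the example above and the $50 figure is the per-interception target from requirement R9.

```python
def cost_exchange_ratio(cost_per_intercept, target_cost):
    """Defender's cost per intercept divided by the attacker's cost per target.

    A ratio above 1 means each interception costs more than the drone it stops.
    """
    return cost_per_intercept / target_cost


def engagement_cost(n_targets, cost_per_intercept, shots_per_target=1):
    """Total defender cost to stop n_targets, assuming a fixed number of shots per target."""
    return n_targets * shots_per_target * cost_per_intercept


missile_ratio = cost_exchange_ratio(2_000_000, 2_000)  # SM-2 against a $2,000 drone
net_ratio = cost_exchange_ratio(50, 2_000)             # net interception at the R9 target
swarm_cost = engagement_cost(20, 50, shots_per_target=2)  # hypothetical: 20 drones, 2 nets each
print(missile_ratio, net_ratio, swarm_cost)
```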

Evaluation of Drone Interception Methods

Pros and Cons of Drone Interception Methods

- Jamming (RF/GNSS)

  - Pros: Effective at neutralizing communication between a drone and its operator. It is non-destructive, widely applicable, and can target multiple drones simultaneously.

  - Cons: Limited effectiveness against autonomous or pre-programmed drones that don’t rely on external signals. Can interfere with other electronics in the area.

- Net Throwers

  - Pros: Non-lethal and environmentally safe; nets can physically capture drones without destroying them, making them ideal for urban settings where collateral damage is a concern.

  - Cons: Limited range and only effective on slower, low-altitude drones. Reloading can be slow unless automated.

- Missile Launch

  - Pros: High precision and range, effective at engaging fast-moving or long-range drones. Can target multiple drones.

  - Cons: Extremely high cost per intercept and a risk of collateral damage. These systems are less portable and require significant infrastructure to deploy.

- Lasers

  - Pros: Silent, fast, and capable of engaging multiple drones quickly without physical debris. Lasers offer precision and minimal collateral damage.

  - Cons: Expensive and susceptible to weather conditions (fog, rain). High energy requirements make portability a challenge.

- Hijacking

  - Pros: Allows operators to take control of drones without destroying them. It is a non-lethal approach, ideal for situations where it is essential to capture the drone intact.

  - Cons: Collateral damage to surrounding electronics, limited range, and high operational costs.

- Spoofing

  - Pros: Redirects or manipulates drone signals to mislead operators. It is non-destructive and can be used to safely divert drones away from sensitive areas.

  - Cons: Complex to execute, especially on drones with advanced anti-spoofing countermeasures.

- Geofencing

  - Pros: Prevents drones from entering restricted zones proactively. Non-lethal and offers permanent coverage in geofenced areas.

  - Cons: Can be bypassed by modified or non-compliant drones. Requires drone manufacturers to implement the technology.

Pugh Matrix

| Method | Cost-to-Intercept | Portability | Ease of Deployment | Reloadability | Minimal Collateral Damage | Effectiveness | Total Score |
|---|---|---|---|---|---|---|---|
| Jamming (RF/GNSS) | Medium | High | High | High | High | Medium | 10 |
| Net Throwers | Low | High | High | Medium | High | High | 11 |
| Missile Launch | Low | Low | Medium | Low | Low | High | 5 |
| Lasers | High | Medium | Medium | High | High | High | 8 |
| Hijacking | Low | High | Medium | Low | High | Medium | 8 |
| Spoofing | Medium | High | Medium | Medium | High | Medium | 8 |
| Geofencing | Low | High | High | High | High | Low | 10 |
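The totals in the matrix are consistent with a simple scoring rule: High = 2, Medium = 1 and Low = 0 points, except for cost-to-intercept, where the scale is reversed because a low cost is desirable. This is our reading of the table rather than a documented formula; the sketch below encodes it and recomputes two of the rows.

```python
# Our reading of the table's scoring: High = 2, Medium = 1, Low = 0, except that
# cost-to-intercept is reversed because a low cost is desirable.
LEVEL_POINTS = {"Low": 0, "Medium": 1, "High": 2}
CRITERIA = (
    "cost_to_intercept",
    "portability",
    "ease_of_deployment",
    "reloadability",
    "minimal_collateral_damage",
    "effectiveness",
)
LOWER_IS_BETTER = {"cost_to_intercept"}


def pugh_total(ratings):
    """Sum the points for one method; ratings maps each criterion to Low/Medium/High."""
    total = 0
    for criterion in CRITERIA:
        points = LEVEL_POINTS[ratings[criterion]]
        if criterion in LOWER_IS_BETTER:
            points = 2 - points  # Low cost earns 2 points, High cost earns 0
        total += points
    return total


net_throwers = dict(zip(CRITERIA, ["Low", "High", "High", "Medium", "High", "High"]))
lasers = dict(zip(CRITERIA, ["High", "Medium", "Medium", "High", "High", "High"]))
print(pugh_total(net_throwers), pugh_total(lasers))
```

Encoding the ratings this way makes it easy to recheck a total after changing a rating, or to add per-criterion weights if some objectives matter more than others.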

Is it ethical to deflect or redirect drones and projectiles?[6][7][8][9][10][11][12]

The goal of this project is to deflect or redirect drones and projectiles to keep soldiers safe. However, deflected or redirected drones and projectiles do not disappear, and there is a chance that they injure other people. So is it even ethical to deflect or redirect these drones or projectiles?

Let us start by noting that this technology is in no way meant to harm anyone; its purpose is to keep people safe. However, under International Humanitarian Law (IHL), specifically Article 49 of Additional Protocol I to the Geneva Conventions, an attack is defined as an act of violence against the adversary, whether in offence or in defence. This means that deflecting or redirecting drones and projectiles counts as an attack. Since this technology is used in war zones, where attacks are common, this should not be a major problem: deflecting or redirecting an attack is simply a continuation of an attack that is already under way. This would suggest that it is ethical to deflect or redirect drones and projectiles. However, deflection or redirection can also harm other people on the defending side, or even civilians, because there is no guarantee that the attack will be sent back toward the attacking side. In this respect, the technology is comparable to autonomous cars. Both are designed to protect people, but both can also harm people as a side effect and may have to make life-or-death decisions. Much research has been done on the ethics of autonomous cars, and these findings can be used to study the ethics of our product. We do this by examining different scenarios that could occur in war.

In a one-on-one situation in war, with one person on the attacking side and one on the defending side, deflecting or redirecting drones and projectiles is ethical, as argued above: it continues an already existing attack. Since it is a one-on-one situation, no one else can be harmed.

If we expand the situation to a battlefield with more than one person on both the attacking and the defending side, there is a chance that a deflected or redirected drone or projectile hits a member of your own team, or a member of the opposing team who did not launch the attack. The second case is not a major problem, since in war you may attack those who are fighting you: even if this person did not send the drone or projectile toward you, they belong to the attacking side and intend to fight you. Deflecting or redirecting a drone or projectile toward a member of your own team, however, means attacking someone who is not attacking you, which is not allowed. This situation can be compared to an autonomous car that, in an accident, minimizes the risk for the driver by increasing the risk for the passengers. In an ideal scenario, the car should minimize the risk for everyone involved in the accident and should not be biased toward one side or one person.

Although not all risks of autonomous cars can be resolved, we still use them because they have the potential to reduce the number of accidents, especially as the technology advances. For the same reason, deflecting or redirecting drones and projectiles may be acceptable in this scenario, provided that we aim to develop the technology further to reduce potential harm and that we remove bias from the system.

A final expansion of the situation includes civilians nearby who can be harmed if a drone or projectile is deflected or redirected. For autonomous cars, this is comparable to a situation in which the car, in order to prevent a collision, hits a pedestrian who posed no threat to the driver (or to the occupant, since the car is autonomous). The ethics of autonomous cars shows that applying the technology in this situation is not straightforward, since the outcome depends on how the technology is designed, used and regulated, which requires a multidisciplinary approach.

Ethical Framework

Description

The goal of this project is to create an easily portable system that can be used as the last line of defence against incoming projectiles. To develop a sufficient ethical framework, this description needs to be specified and categorised further. The device under consideration is capable of neutralising an incoming projectile. However, an incoming projectile does not always have to be neutralised, and this can differ between situations. Because the device is mainly intended for combat, we focus on that type of scenario, which is described as follows:

- This device could be used in war zones. For example, soldiers in trenches are hard for the enemy to hit, which is why drones are now used against them in Ukraine [???]. A war zone is in general a rapidly evolving environment, so soldiers and equipment need to be able to adapt to it [1]. To ensure that the device neutralises the harmful projectile, an extensive software framework is needed that can, for example, distinguish birds from drones and also detect grenades dropped from higher altitudes.

In this scenario, the device will be actively used in a war zone, so it should comply with the Geneva Conventions. It should therefore be able to assess the impact of its actions on civilians, and it must be certain that it will not harm civilians directly or indirectly. To illustrate: if a kamikaze drone is heading toward a military vehicle, the device will either redirect the drone or make it explode above the ground, depending on the type of interception chosen. If the drone is redirected away from the military vehicle, it might injure civilians. If the drone explodes above the ground, the fragments will still travel downward and can cause injuries over a larger area, possibly including civilians [???]. This would not only violate the Geneva Conventions but also defeat the purpose of preventing harm.

In principle, this is a trolley problem in disguise. On the one hand, soldiers may be killed if the system is not activated; on the other hand, civilians may be killed if it is. To prevent the latter, the device must be able to choose a desirable and achievable location to redirect an incoming drone toward. From a utilitarian point of view, we want to minimise harm in order to maximise happiness. Triggering the drone's explosion in the air, with proper warnings to the surrounding people, might achieve this and is examined further in chapter????.

After all, the situation in which there is no harmless place to redirect the drone to is fairly specific and not an everyday problem. Moreover, this specific problem can be seen as a side effect that is expected to be far outweighed by the benefits of the product.

[1] https://ndupress.ndu.edu/Portals/68/Documents/jfq/jfq-101/jfq-101_78-83_Lynch.pdf?ver=Gu3iNHVHh5wYTbAPOqwd7Q%3d%3d

Specifications

| ID | Requirement | Preference | Constraint | Category | Priority | Testing Method |
|---|---|---|---|---|---|---|
| R1 | Detect Rogue Drone | Detection range of at least 30 m | No false negatives | Hardware & Software | M | Simulate rogue drone scenarios in the field |
| R2 | Object Detection | 100% recognition accuracy | Detects even small, fast-moving objects | Software | M | Test with various object sizes and speeds in the lab |
| R3 | Detect Drone Direction | Accuracy of 90% | Must account for evasive drone movements | Hardware & Software | M | Use drones flying in random directions for validation |
| R4 | Detect Drone Speed | Accuracy within 5% of actual speed | Must be effective up to 20 m/s | Hardware & Software | M | Measure speed detection in controlled drone flights |
| R5 | Detect Projectile Speed | Accurate speed detection for fast projectiles | Must handle speeds above 10 m/s | Hardware & Software | M | Fire projectiles at varying speeds and record accuracy |
| R6 | Detect Projectile Direction | Accuracy within 5 degrees | No significant deviation in direction detection | Hardware & Software | M | Test with fast-moving objects in random directions |
| R7 | Track Drone with Laser | Tracks moving targets within a 1 m² area | Must follow targets precisely within the boundary | Hardware | S | Use a laser pointer to follow a flying drone in real time |
| R8 | Can Intercept Drone/Projectile | Drone/projectile is within the 1 m² square | Must not damage surroundings or pose a threat | Hardware | C | Test in a field, using projectiles and drones in motion |
| R9 | Low Cost-to-Intercept | Interception cost under $50 per event | | Hardware & Software | S | Compare operational cost per interception in trials |
| R10 | Low Total Cost | Less than $2000 | Should include all components (detection + net) | Hardware | C | Budget system components and assess affordability |
| R11 | Portability | System weighs less than 3 kg | | Hardware | C | Test for total weight and ease of transport |
| R12 | Easily Deployable | Setup takes less than 5 minutes | Must require no special tools or training | Hardware | C | Timed assembly by users in various environments |
| R13 | Quick Reload/Auto Reload | Reload takes less than 30 seconds | Must be easy to reload in the field | Hardware | C | Measure time to reload net launcher in real-time scenarios |
| R14 | Environmental Durability | Operates in temperatures between -20°C and 50°C | Must work reliably in rain, dust, and strong winds | Hardware | W | Test in extreme weather conditions (wind, rain simulation) |

Testing Scenarios

To verify whether we have met some of these requirements, we have to devise test scenarios that allow us to quantitatively determine our prototype's accuracy. The notion of accuracy may of course be ambiguous: we cannot test our prototype in a warzone-like environment, so accuracy in a lab may not translate into accuracy in the trenches. However, we will attempt to simulate such an environment using a projector and speakers.

R1: Detect Rogue Drone

Objective: Verify that the system can detect a rogue drone within a specific detection range, minimizing false negatives.

Setup Description:

1. Use a single drone labeled as “rogue.” Start by positioning the drone at a distance of 5 meters from the detection system, and increase the distance in steps of 2 meters until the maximum possible distance within the room or 30 meters, whichever is smaller, is reached.

2. The room should be marked at each meter interval so that the drone can be placed accurately at each distance point.

Test Procedure:

1. At each distance interval, power on the drone and ensure it is hovering stably. The system should attempt to detect the drone’s presence at each point.

2. Record whether the detection system correctly identifies the rogue drone's presence at each interval.

Measurement Method:

1. Detection Confirmation: The system should provide a visible or audible signal (like an LED indicator, a beep, or a message on a screen) upon detecting the drone.

2. Record Detection: Log each detection attempt, noting the distance at which the detection occurred successfully or failed.

Quantitative Criteria:

- Pass: The system should detect the rogue drone at all distances up to 30 meters (or the room’s maximum achievable distance) without any missed detections.

- Fail: If the system fails to detect the drone at any distance within this range, it does not meet the requirement.

R4: Detect Drone Speed

Objective: Validate that the system can measure the drone’s speed accurately within an enclosed space.

Setup Description:

1. Mark two points on the floor, exactly 5 meters apart, to serve as start and end points for the drone to travel in a straight line.

2. Program the drone to fly between these points at different speeds (e.g., 5 m/s, 10 m/s, 15 m/s) if supported by the drone’s settings.

3. Position a high-speed camera or timer-based tool to measure the time taken for the drone to travel between the start and end points.

Test Procedure:

1. Set the drone to fly from the starting point to the end point at each speed setting (5 m/s, 10 m/s, 15 m/s).

2. Use a stopwatch or high-speed camera to record the time taken for the drone to cross the 5-meter distance for each speed trial.

3. Calculate the actual speed based on the time and distance traveled.

Measurement Method:

1. Manual Speed Calculation: Calculate the actual speed using the formula \( \text{Speed} = \frac{\text{Distance}}{\text{Time}} \) based on the measured time across the 5-meter distance.

2. Compare with System Detection: Log the detected speed from the system and compare it with the manually calculated speed.

Quantitative Criteria:

- Pass: The system’s detected speed should be within ±5% of the manually calculated speed for each test.

- Fail: If the detected speed deviates by more than ±5% from the calculated speed in any trial, the requirement is not met.
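The reference-speed calculation and tolerance check can be sketched as follows. This is a minimal sketch with hypothetical function names. Each trial is assumed to be logged as the measured crossing time over the 5 m track plus the speed reported by the system, and the ±5% tolerance is applied to every trial, as in the pass criterion.

```python
def measured_speed(distance_m: float, time_s: float) -> float:
    """Reference speed from the stopwatch/camera: Speed = Distance / Time."""
    if time_s <= 0:
        raise ValueError("time must be positive")
    return distance_m / time_s


def relative_error(detected: float, reference: float) -> float:
    """Relative deviation of the system's detected speed from the reference."""
    return abs(detected - reference) / reference


def r4_passes(trials: list[tuple[float, float]], distance_m: float = 5.0,
              tolerance: float = 0.05) -> bool:
    """Each trial is (measured crossing time in s, speed reported by the
    system in m/s). Passes only if every trial is within the tolerance."""
    return all(
        relative_error(detected, measured_speed(distance_m, t)) <= tolerance
        for t, detected in trials
    )
```

The reference measurement itself matters at higher speeds. At 15 m/s the drone crosses the 5 m track in about 0.33 s, so a timing error of 0.1 s already gives roughly a 30% error in the reference speed. This makes the high-speed camera the more suitable reference at these speeds.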

R7: Track Drone with Laser

Objective: Test whether the system’s laser can accurately track the drone as it moves within a 1m² area.

Setup Description:

1. Mark a 1m² square area on the floor, with a 1m x 1m boundary.

2. Place the drone within this marked area and set it to fly in circular, random, or figure-eight patterns within the boundary, keeping it stable and at a consistent height.

3. Ensure the laser tracking system is positioned to follow the drone’s movements within this confined area.

Test Procedure:

1. Start the drone’s movement within the 1m² square and activate the laser tracking system.

2. Observe the laser’s movement in real-time, ensuring it follows the drone as it stays within the boundaries of the 1m² area.

Measurement Method:

1. Visual Observation: Record the laser's accuracy with a high-speed camera to capture any deviations outside the 1m² boundary.

2. Boundary Tracking Analysis: Play back the recorded footage and analyze whether the laser consistently stays within the 1m² boundary around the drone.

Quantitative Criteria:

- Pass: The laser must remain within the 1m² boundary around the drone for at least 95% of the movement time during the test.

- Fail: If the laser tracking drifts outside the boundary for more than 5% of the time, the system does not meet the requirement.
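The boundary analysis can be automated once positions have been extracted from the footage. This sketch assumes, hypothetically, that the laser spot and the drone have already been located in each frame and converted to metres in the same plane. A frame counts as on target when the spot lies inside the 1 m by 1 m square centred on the drone. The function names are illustrative.

```python
def fraction_on_target(laser_xy: list[tuple[float, float]],
                       drone_xy: list[tuple[float, float]],
                       half_width_m: float = 0.5) -> float:
    """Fraction of video frames in which the laser spot lies inside the
    1 m x 1 m square centred on the drone (|dx| <= 0.5 m and |dy| <= 0.5 m)."""
    if len(laser_xy) != len(drone_xy) or len(laser_xy) == 0:
        raise ValueError("need the same, non-zero number of frames for laser and drone")
    inside = sum(
        1 for (lx, ly), (dx, dy) in zip(laser_xy, drone_xy)
        if abs(lx - dx) <= half_width_m and abs(ly - dy) <= half_width_m
    )
    return inside / len(laser_xy)


def r7_passes(laser_xy, drone_xy) -> bool:
    """R7 passes if the laser is on target for at least 95% of the frames."""
    return fraction_on_target(laser_xy, drone_xy) >= 0.95
```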

R8: Can Intercept Drone/Projectile

Objective: Verify the system’s ability to intercept the drone within a 1m² area without causing any unintended impact outside the target zone.

Setup Description:

1. Define a 1m² target area on the floor, marking it clearly.

2. Position the drone in the center of this area and program it to hover or move slowly within the 1m² space.

3. Configure the interception mechanism (net launcher, tagging device, etc.) to target the drone within this specified area.

Test Procedure:

1. Once the drone is positioned within the 1m² area, initiate the interception mechanism.

2. Repeat the interception test at different points within the 1m² target area to ensure consistency.

Measurement Method:

1. Interception Accuracy: Use a high-speed camera to capture the interception action, confirming that it occurs entirely within the designated 1m² area.

2. Surrounding Impact Analysis: Review the footage to ensure that the interception mechanism does not affect any area outside the 1m² boundary.

Quantitative Criteria:

- Pass: The interception must occur within the 1m² target area with no impacts or interference outside the boundary for at least 95% of trials.

- Fail: If the interception strays outside the target area or impacts surroundings in more than 5% of cases, the system does not meet the requirement.
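Tallying R8 trials is simple, but the 95% threshold has a consequence worth noting: with fewer than 20 trials, a single failed interception already fails the requirement. Below is a minimal sketch with hypothetical function names. It assumes each trial is logged as a boolean that is True if the interception stayed inside the 1m² area without affecting the surroundings.

```python
import math


def success_rate(trial_outcomes: list[bool]) -> float:
    """Fraction of interception trials that stayed entirely inside the 1 m² area."""
    if not trial_outcomes:
        raise ValueError("no trials recorded")
    return sum(trial_outcomes) / len(trial_outcomes)


def r8_passes(trial_outcomes: list[bool], threshold: float = 0.95) -> bool:
    return success_rate(trial_outcomes) >= threshold


def max_allowed_failures(n_trials: int, threshold: float = 0.95) -> int:
    """Largest number of failed trials that still meets the threshold.
    The small epsilon guards against floating-point round-off."""
    return math.floor(n_trials * (1 - threshold) + 1e-9)
```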

Prototype

Components Possibilities

Radar Component (Millimeter-Wave Radar):

Component: Infineon BGT60ATR12C

- Price: Around €25-30

- Description: A 60 GHz radar sensor module, compact and designed for small form factors, ideal for detecting the motion of drones.

- Software: Infineon's Radar Development Kit (RDK), a software platform to develop radar signal processing algorithms.

- Website: https://www.infineon.com/cms/en/product/sensor/radar-sensors/radar-sensors-for-automotive/60ghz-radar/bgt60atr24c/

Component: RFBeam K-LC7 Doppler Radar

- Price: Around €55

- Description: A Doppler radar module operating at 24 GHz, designed for short to medium range object detection. It’s used in UAV tracking due to its cost-efficiency and low power consumption.

- Software: Arduino IDE or MATLAB can be used for basic radar signal processing.
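A Doppler module such as the K-LC7 reports a frequency shift rather than a speed. For a target moving at radial speed \( v \), the shift is \( f_d = 2 v f_0 / c \), where \( f_0 \) is the carrier frequency. This sketch assumes a 24 GHz carrier (the exact value should come from the module's datasheet) and recovers only the radial component of the drone's velocity. The function names are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift_hz(radial_speed_mps: float, carrier_hz: float = 24e9) -> float:
    """Expected Doppler shift for a target moving radially at the given speed."""
    return 2.0 * radial_speed_mps * carrier_hz / SPEED_OF_LIGHT


def radial_speed_mps(doppler_hz: float, carrier_hz: float = 24e9) -> float:
    """Invert the Doppler relation: v = f_d * c / (2 * f0)."""
    return doppler_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)
```

At the 20 m/s limit from R4, this gives a shift of roughly 3.2 kHz. A drone flying across the beam rather than towards it produces a smaller shift, so a single Doppler sensor underestimates tangential motion.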

RF Component:

Component: LimeSDR Mini

- Price: Not available for delivery at the moment

- Description: A compact, full-duplex SDR platform supporting frequencies from 10 MHz to 3.5 GHz, useful for RF-based drone detection.

- Software: LimeSuite, a suite of software for controlling LimeSDR hardware and custom signal processing.

Component: RTL-SDR V3 (Software-Defined Radio)

- Price: Around €30-40

- Description: An affordable USB-based SDR receiver covering a wide frequency range (500 kHz to 1.75 GHz). Note that this range does not include the popular drone communication bands (2.4 GHz and 5.8 GHz), so monitoring those would require an external downconverter. While not as advanced as higher-end SDRs, it’s widely used in hobbyist RF applications.

- Software: GNU Radio or SDR# (SDRSharp), both of which are open-source platforms for signal demodulation and analysis.

Acoustic Component:

Component: Knowles SPH0645LM4H-B

- Price: Around €2

- Description: A MEMS (Micro-Electro-Mechanical System) digital microphone, highly sensitive for acoustic detection, designed for low-power applications.

- Software: MATLAB for processing acoustic signatures, implementing signal processing algorithms to differentiate drone sounds from background noise.

- Website: https://nl.mouser.com/datasheet/2/218/ph0645lm4h_b_datasheet_rev_c-1525723.pdf

Component: TDK InvenSense ICS-43434

- Price: Around €2

- Description: A low-noise, omnidirectional MEMS microphone designed for precise sound capture.

- Software: Python with SciPy and NumPy libraries to analyze and filter acoustic signals in real-time.

- Website: https://invensense.tdk.com/products/ics-43434/

Component: KY-037 High Sensitivity Microphone Module

- Price: Around €3

- Description: A low-cost microphone sensor module with high sensitivity to detect the sound signatures of drone propellers. It is compact and designed for basic acoustic detection.

- Software: Arduino IDE for signal processing, or Python with SciPy for audio filtering and analysis.

- Website: https://arduinomodules.info/ky-037-high-sensitivity-sound-detection-module/

Component: Adafruit I2S MEMS Microphone (SPH0645LM4H-B)

- Price: Around €7-10

- Description: A low-cost MEMS microphone offering high sensitivity, commonly used in sound detection projects for its clarity and noise rejection.

- Software: Arduino IDE or Python with SciPy for sound signature recognition.

- Website: https://www.adafruit.com/product/3421
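Whichever microphone is chosen, a very basic acoustic check is to measure how much of the recorded energy falls in a frequency band where propeller noise is expected. This sketch uses only NumPy's FFT for brevity; SciPy offers equivalent routines. The 100 to 2000 Hz band and the 0.6 threshold are hypothetical placeholders that would have to be tuned on real drone recordings. A deployed system would more likely use learned acoustic signatures.

```python
import numpy as np


def band_energy_ratio(samples: np.ndarray, sample_rate_hz: float,
                      band_hz: tuple[float, float] = (100.0, 2000.0)) -> float:
    """Fraction of the signal's spectral energy inside band_hz.
    The default band is a placeholder, not a measured drone signature."""
    samples = samples - np.mean(samples)            # remove DC offset
    window = np.hanning(len(samples))               # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(samples * window)) ** 2
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate_hz)
    total = spectrum.sum()
    if total == 0:
        return 0.0
    in_band = (freqs >= band_hz[0]) & (freqs <= band_hz[1])
    return float(spectrum[in_band].sum() / total)


def sounds_like_drone(samples: np.ndarray, sample_rate_hz: float,
                      threshold: float = 0.6) -> bool:
    """Hypothetical decision rule: flag a drone if most energy is in the band."""
    return band_energy_ratio(samples, sample_rate_hz) >= threshold
```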

Vision-Based Component:

Component: Arducam 12MP Camera (Visible Light)

- Price: Around €60

- Description: A lightweight, high-resolution camera module, ideal for machine learning and visual detection.

- Software: OpenCV combined with machine learning libraries like TensorFlow or PyTorch for object detection and tracking.

- Website: https://www.arducam.com/product/arducam-12mp-imx477-mini-high-quality-camera-module-for-raspberry-pi/

Component: Raspberry Pi Camera Module v2

- Price: Around €15-20

- Description: A small, lightweight 8MP camera compatible with Raspberry Pi, offering high resolution and ease of use for vision-based drone detection. It can be paired with machine learning algorithms for object detection.

- Software: OpenCV with Python for real-time image processing and detection, or TensorFlow for more advanced machine learning applications.

- Website: https://www.raspberrypi.com/products/camera-module-v2/

Component: ESP32-CAM Module

- Price: Around €10-15

- Description: A highly affordable camera module with a built-in ESP32 chip for Wi-Fi and Bluetooth, ideal for wireless image transmission. It’s a great choice for low-cost vision-based systems.

- Software: Arduino IDE or Python with OpenCV for basic image recognition tasks.

- Website: https://www.tinytronics.nl/en/development-boards/microcontroller-boards/with-wi-fi/esp32-cam-wifi-and-bluetooth-board-with-ov2640-camera

Sensor Fusion and Central Control

Component: Raspberry Pi 4

- Price: Around €35

- Description: A small, affordable single-board computer that can handle sensor fusion, control systems, and real-time data processing.

- Software: ROS (Robot Operating System) or MQTT for managing communications between the sensors and the central processing unit.

- Website: https://www.raspberrypi.com/products/raspberry-pi-4-model-b/

Component: ESP32 Development Board

- Price: Around €10-15

- Description: A highly versatile, Wi-Fi and Bluetooth-enabled microcontroller. It’s lightweight and low-power, making it ideal for sensor fusion in portable devices.

- Software: Arduino IDE or MicroPython, with MQTT for sensor data transmission and real-time control.

- Website: https://www.espressif.com/en/products/devkits
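The central controller has to combine position estimates from the different sensors. One simple, standard technique is inverse-variance weighting, which is the static special case of a Kalman filter update. This sketch assumes each sensor reports an estimate of the same coordinate with a known, positive standard deviation, and that the errors are independent. In practice it would be applied per axis. The function name and the example numbers are hypothetical.

```python
from math import sqrt


def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Combine independent measurements (value, std_dev) of the same quantity
    by inverse-variance weighting. Returns (fused value, fused std_dev)."""
    if not estimates:
        raise ValueError("no estimates to fuse")
    if any(sd <= 0 for _, sd in estimates):
        raise ValueError("standard deviations must be positive")
    weights = [1.0 / (sd ** 2) for _, sd in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, sqrt(1.0 / total)
```

For example, fusing a radar range of 50.4 ± 0.5 m with a camera estimate of 49.8 ± 1.0 m gives about 50.28 m with a standard deviation of about 0.45 m. The more precise sensor dominates, and the fused estimate is better than either one alone.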

Path Prediction of Projectile[13][14][15][16][17][18][19][20][21][22][23]

Theory:

Catching a projectile requires several steps. First, the projectile has to be detected, after which its trajectory has to be determined. If we know how the projectile is moving in space and time, the net can be shot to catch it. However, depending on the distance of the projectile, the net takes different amounts of time to reach it, and in this time the projectile has moved to a different location. The net must therefore be shot at the position where the projectile will be in the future, so that they collide.

Since projectiles do not make sound and do not emit RF waves, they are not as easy to detect as drones. For this part, we assume that the projectile is visible. Making the system also detect projectiles that are not visible would probably be possible, but it would complicate things a lot. The U.S. Army uses electronic fields that can detect passing bullets. Something similar could be used to detect projectiles that are not visible, but this will not be done in this project due to its complexity.

To detect a projectile, a camera with tracking software is used. Objects smaller than 10 cm³ will have to be detected by this camera. This size is chosen because the system should not detect the movement of people, while it is large enough to detect most small projectiles that drones can drop. Choosing a size larger than 10 cm³ would also make the system bigger, while the aim is to create a compact system. The software also needs to be trained with AI to determine whether the detected object really is a projectile or, for example, a bird.
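Whether the camera can actually resolve such a small object depends on how many pixels it covers at the relevant distance. This sketch uses a pinhole-camera approximation. It assumes the Raspberry Pi Camera Module v2 listed above at full resolution (3280 pixels wide, about 62.2° horizontal field of view), and it treats a 10 cm³ object as a cube with an edge of about 2.15 cm. The function name is illustrative.

```python
import math


def pixels_across(object_size_m: float, distance_m: float,
                  image_width_px: int = 3280, hfov_deg: float = 62.2) -> float:
    """Approximate width in pixels of an object seen head-on (pinhole camera
    model). Defaults assume a Raspberry Pi Camera Module v2 at full resolution."""
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return object_size_m / scene_width_m * image_width_px


# Edge of a cube with a volume of 10 cm³ (1e-5 m³), about 0.0215 m.
edge_m = (10e-6) ** (1.0 / 3.0)
```

Under these assumptions, such an object spans roughly two pixels at 30 m and about six at 10 m. This suggests that detecting the smallest projectiles at long range would need a narrower lens or a higher-resolution sensor.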

Now that the projectile is in sight, its trajectory has to be determined. Existing software can help here: an object detector such as YOLOv5 can locate the projectile in each video frame, and the trajectory can then be estimated from its successive positions. The prediction assumes that the projectile moves only under the influence of gravity, which speeds it up, and air friction, which slows it down. For a first model, air friction can be neglected to get a good approximation of the flight of the projectile. Since not every projectile experiences the same amount of air resistance, the best friction coefficient should be found experimentally by dropping different projectiles: the best coefficient is the one for which the system catches the most projectiles. An improvement is to pre-assign different friction coefficients to different sizes of projectiles. Since surface area plays a big role in the amount of friction a projectile experiences, this is a reasonable approach.
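Once a detector provides the projectile's height in successive frames, the drag-free model makes the height a quadratic function of time, so the trajectory can be estimated with a least-squares fit. This sketch uses `numpy.polyfit` and assumes the tracked positions have already been converted to metres with known timestamps. The function names are hypothetical. Leaving the acceleration as a free parameter also gives a simple check: if the fitted value is noticeably smaller in magnitude than 9.81 m/s², air friction is probably not negligible for that projectile.

```python
import numpy as np


def fit_vertical_motion(times_s, heights_m):
    """Fit h(t) = h0 + v0*t + 0.5*a*t^2 to tracked heights.
    Returns (h0, v0, a); for drag-free fall, a should be close to -9.81 m/s^2."""
    # polyfit returns coefficients from the highest degree down: [c2, c1, c0].
    c2, c1, c0 = np.polyfit(np.asarray(times_s), np.asarray(heights_m), 2)
    return float(c0), float(c1), 2.0 * float(c2)


def predict_height(params, t_s):
    """Extrapolate the fitted parabola to time t_s."""
    h0, v0, a = params
    return h0 + v0 * t_s + 0.5 * a * t_s ** 2
```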

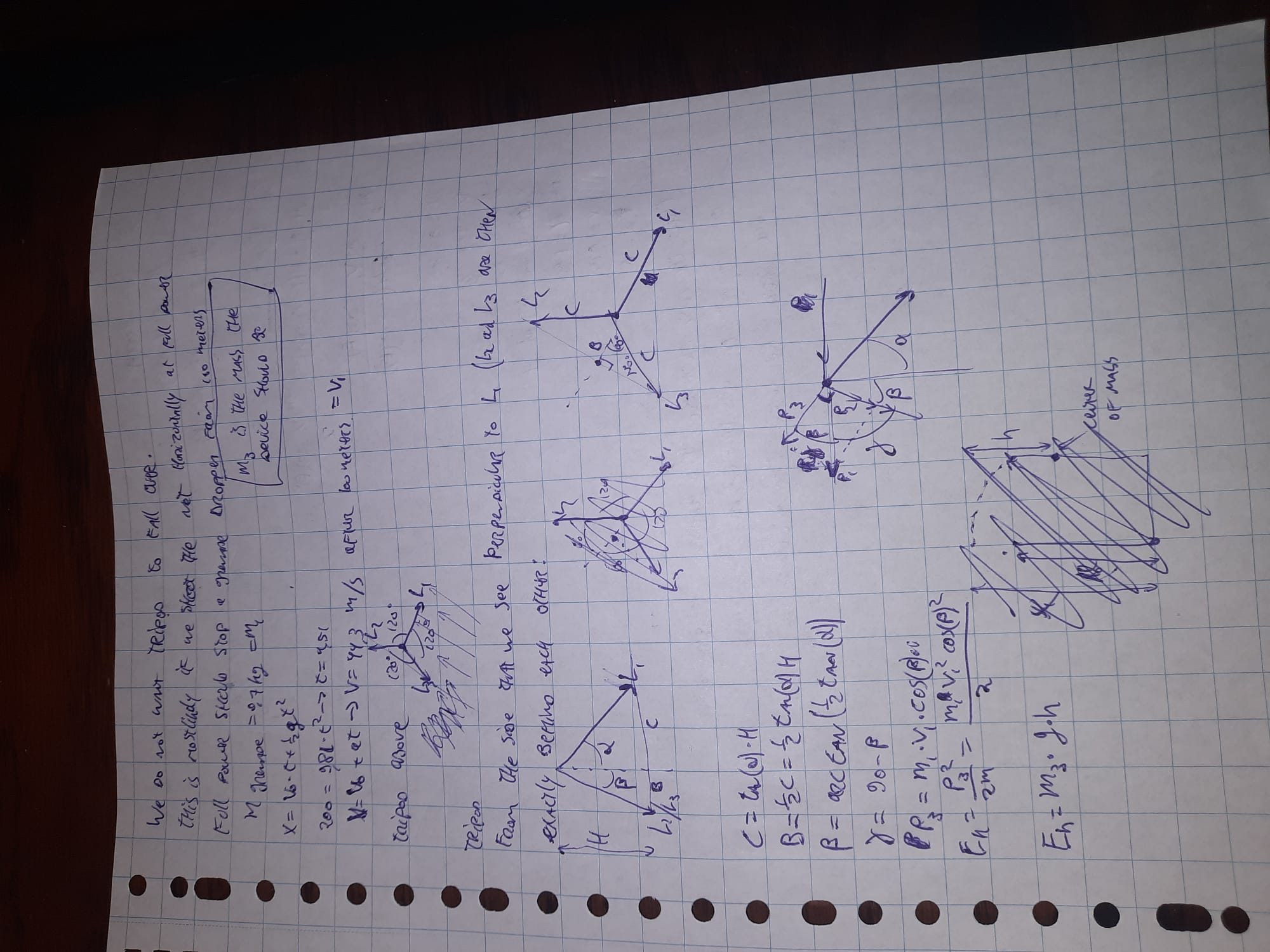

With the expected path of the projectile known, the net can be launched to catch the projectile midair. Basic kinematics gives accurate results for this problem. The problem can also be treated as two-dimensional: since we only protect against projectiles coming towards the system, we can always define a plane that contains both the trajectory of the projectile and the system. If the path of the projectile left this plane and the problem became three-dimensional, the system would not need to protect against that projectile, as it does not form a threat to a system located in the (2D) plane.

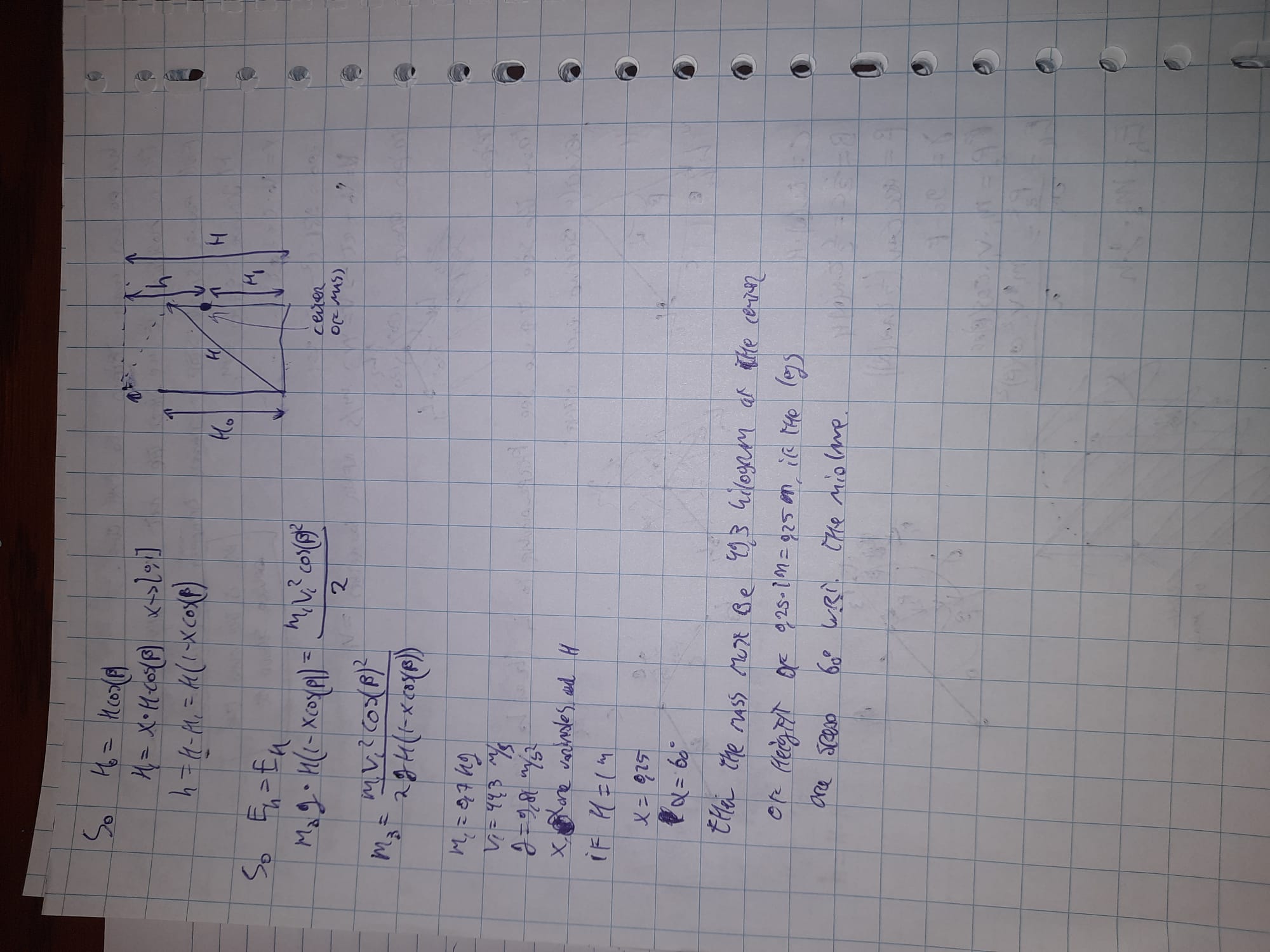

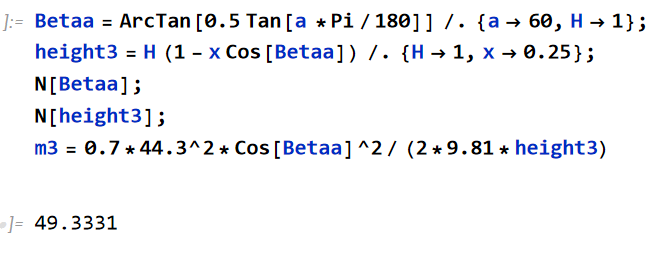

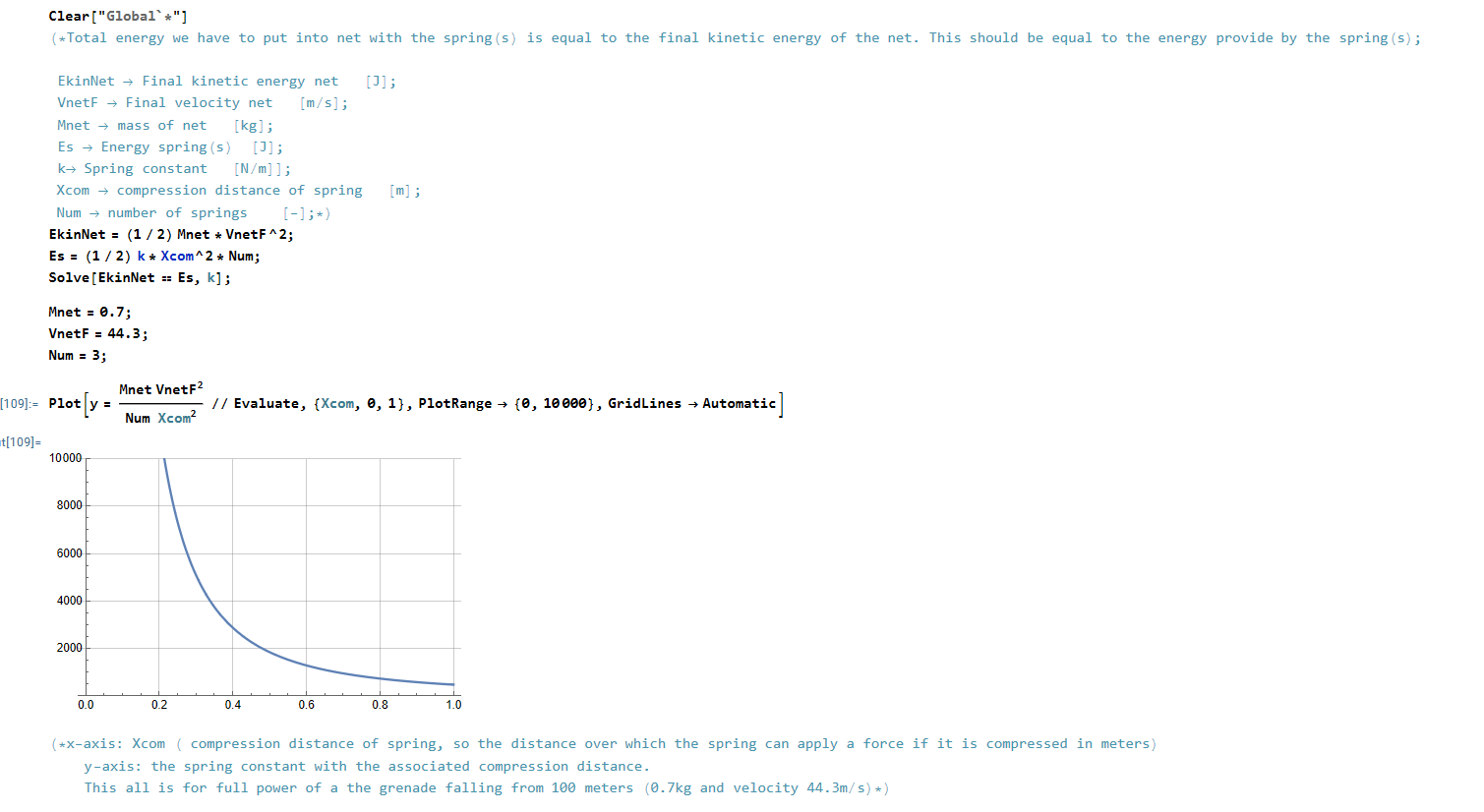

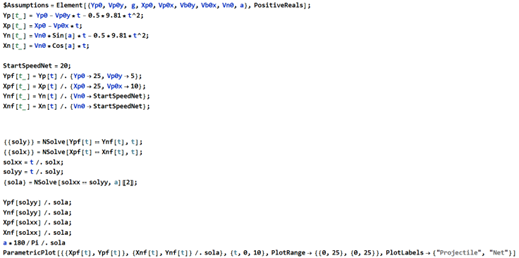

Calculation:

The Mathematica script shows the calculation that determines the angle at which the net should be shot. The script currently uses made-up values, which have to be determined experimentally for the hardware that is used. For example, the speed at which the net is shot should be measured and entered in the code to get a good result. The distance and speed of the projectile can be determined using sensors on the device. The output of the Mathematica script is shown in the second picture: it gives the angle at which to shoot the net, as well as a graph that visualizes how the interception will happen.
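The Mathematica script itself is not reproduced in this text. As an illustration only, the following Python sketch solves one version of the 2D problem under stated assumptions. There is no drag, and the net is fired from the origin at a known speed \( v \). The projectile falls straight down at horizontal distance \( D \), and has height \( h_0 \) and downward speed \( u_0 \) at the moment the net is fired. Matching positions gives \( v \cos\theta \, t = D \) and \( v \sin\theta \, t = h_0 - u_0 t \); gravity cancels because it acts equally on both objects. Squaring and adding gives a quadratic for the flight time: \( (v^2 - u_0^2)\,t^2 + 2 h_0 u_0\, t - (D^2 + h_0^2) = 0 \). The function name is hypothetical.

```python
import math

G = 9.81  # m/s^2


def intercept(distance_m: float, height_m: float, net_speed_mps: float,
              fall_speed_mps: float = 0.0):
    """Return (launch angle in degrees, time to impact in s, impact height in m)
    for a net fired from the origin at a projectile falling straight down.

    Assumes no drag and equal gravitational acceleration for net and
    projectile, so gravity cancels in the relative motion."""
    v, u, d, h = net_speed_mps, fall_speed_mps, distance_m, height_m
    if v <= u:
        raise ValueError("net must be faster than the falling projectile")
    a = v * v - u * u
    b = 2.0 * h * u
    c = -(d * d + h * h)
    t = (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)  # the positive root
    vx = d / t              # horizontal component of the launch velocity
    vy = h / t - u          # vertical component of the launch velocity
    angle_deg = math.degrees(math.atan2(vy, vx))
    impact_height = h - u * t - 0.5 * G * t * t
    return angle_deg, t, impact_height
```

With \( u_0 = 0 \), the angle reduces to \( \arctan(h_0 / D) \): the net is aimed straight at the projectile, which is the classic "monkey and hunter" result. A negative impact height means that the projectile would reach the ground before the net could reach it.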

Accuracy:

The height from which projectiles are dropped can be estimated from footage of projectile drops. Neglecting air resistance, which is a reasonable first approximation for these compact projectiles, the height follows from the fall time as \( h = \tfrac{1}{2} g t^2 \). Using a YouTube video[24] as data, it can be seen that almost every drop takes at least 4 seconds. This means that the projectiles are dropped from at least 78.5 m. Suppose we catch the projectile at two thirds of its fall, so that it still has plenty of height to be redirected safely, and the net is 1 by 1 meter (half a meter from its center to its edge). Then the projectile must not be dropped more than 0.75 meter next to us (outside of the plane; see the figure with the calculation), since the system would not catch it even if everything else went perfectly. Even if the projectile were dropped 0.7 meter next to the device, the net would hit the projectile with its edge, which does not guarantee that the projectile stays in the net.
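The drop-height figure follows from free-fall kinematics with \( g = 9.81\ \mathrm{m/s^2} \). The second line is our own worked illustration. It assumes that "two thirds of its fall" means two thirds of the drop height, which may differ from the definition used in the original calculation.

```latex
h = \tfrac{1}{2} g t^2 = \tfrac{1}{2} \cdot 9.81\ \mathrm{m/s^2} \cdot (4\ \mathrm{s})^2 \approx 78.5\ \mathrm{m}

\tfrac{2}{3} h \approx 52.3\ \mathrm{m}
\quad\Rightarrow\quad
t_{2/3} = \sqrt{\frac{2 \cdot \tfrac{2}{3} h}{g}} = 4\ \mathrm{s} \cdot \sqrt{\tfrac{2}{3}} \approx 3.27\ \mathrm{s},
\qquad h - \tfrac{2}{3} h \approx 26.2\ \mathrm{m}
```

Under this interpretation, the net has about 3.3 s after release to reach the projectile, minus the time needed for detection and processing.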

An explosive projectile will do damage even when it lands 0.75 meters away from a person. This means that the earlier assumption, that a 2D model suffices because everything happens in a plane, does not fulfill all our needs. Enemy drones do not need to be accurate to the centimeter, since explosive projectiles like grenades can kill a person even 10 meters away. For better results, a 3D model should therefore be used.

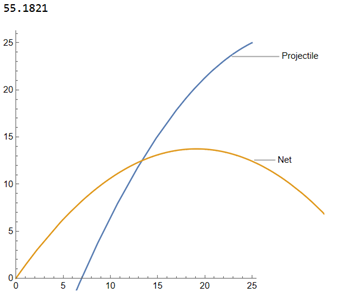

3D model:

We tried to replicate the 2D model in 3D, but this did not work out with the same generality. For this reason, some extra assumptions were made. These assumptions are based on reality and are therefore still valid for this system. The only thing they change is the generality of the model: the 2D model could be used in many different cases, whereas the 3D model only covers projectiles dropped from drones.

In the 2D model, the starting velocity of the projectile could be changed. In reality, however, drones do not have a launching mechanism and simply drop the projectile without any starting velocity. This means that the projectile drops straight down (apart from some sideways movement due to external forces like wind). We noted this after watching a lot of drone warfare footage. We also noted that drones usually do not crash into people but mainly into tanks, since hitting a tank requires an accurate hit between the base and the turret. For people, drones drop the projectile from a standstill (to get the required aim). This simplification also makes the 2D model valid again: since the projectile has no sideways movement, it never leaves the plane, and we can define a plane between the path of the projectile and the mechanism that shoots the net.

Since this mechanism works in the real (3D) world, we decided to plot the 2D model at the required angle in 3D, so that there is a good representation of how the mechanism will work. The new model also gives the required shooting angle and then shows the paths of the net and the projectile in 3D. For further insight, the 2D trajectories of the net and the projectile are also plotted; this can be seen in the figure.
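Placing the 2D solution in 3D amounts to first choosing the vertical plane through the launcher and the drone (the azimuth), then solving the 2D problem inside that plane. This sketch makes several assumptions. The launcher sits at the origin of a local east/north/up frame, the drone's position is known, there is no drag, and the net is fired at the instant the projectile is released from rest. In that special case, the 2D elevation is simply the line of sight to the drone. The function name is hypothetical.

```python
import math


def aim_3d(drone_east_m: float, drone_north_m: float, drone_height_m: float):
    """Azimuth (degrees clockwise from north), elevation (degrees) and
    horizontal distance (m) for a net launcher at the origin.

    Assumes the projectile is released from rest at the moment the net is
    fired and no drag, so the elevation equals the line of sight."""
    azimuth = math.degrees(math.atan2(drone_east_m, drone_north_m))
    horizontal = math.hypot(drone_east_m, drone_north_m)
    elevation = math.degrees(math.atan2(drone_height_m, horizontal))
    return azimuth, elevation, horizontal
```

If there is a delay between release and firing, the projectile is already moving downward. The elevation inside the chosen plane then has to come from a full 2D solution that includes the projectile's current fall speed, rather than from the line of sight.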

Accuracy 3D model:

The 3D model as it is set up now only works in a “perfect” world, without wind, air resistance, or any other external factors that may influence the paths of the projectile and the net. We also assume that the system knows where the drone is with infinite accuracy. This is not true in reality, but it is important to know how closely this model replicates reality and whether it can be used.

Wind plays a big role in the paths of the projectile and the net, and the model must also function under these circumstances. To determine the acceleration of the projectile and the net, the drag force on both objects must be determined. The drag force depends on two important factors: the drag coefficient and the frontal area of the object. Since different projectiles are used in warfare, like hand grenades or Molotov cocktails, the exact drag coefficient and frontal area of the projectile are unknown. After a literature review, it was decided to take average values for the drag coefficient and the frontal area, since the reported values lie in a similar range. The frontal area could be predicted, since drones are only able to carry objects of limited size. Calculations showed that if the net (including the weights on the corners) weighs 3 kg, the wind-induced accelerations of the projectile and the net are identical. This still leads to a perfect interception, as can be seen in the figure. These results are based on literature values; at a later stage, the exact drag coefficient and surface area of the net must be measured and the weight changed accordingly. For projectiles that do not exactly match the assumed drag coefficient or surface area, the model shows that deviations of up to 50% from the assumed values do not shift the projectile enough for the interception to miss. This range includes almost all theoretical values found for the different projectiles, which makes the model reliable under the influence of wind, at least within this range.
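The matching condition behind the 3 kg figure can be made explicit. The drag force is \( F_D = \tfrac{1}{2} \rho C_d A v^2 \), so the drag acceleration is \( a = F_D / m \). At the same relative airspeed, the wind pushes the net and the projectile equally when \( C_d A / m \) is the same for both, which gives the net mass below. This is a simplified sketch: it ignores that the fast-moving net and the falling projectile see different relative airspeeds. Any values used to exercise it are hypothetical and should be replaced with the measured drag coefficient and area of the actual net.

```python
AIR_DENSITY = 1.225  # kg/m^3, sea-level standard atmosphere


def drag_acceleration(cd: float, area_m2: float, mass_kg: float,
                      airspeed_mps: float, rho: float = AIR_DENSITY) -> float:
    """Magnitude of the drag acceleration a = 0.5 * rho * Cd * A * v^2 / m."""
    return 0.5 * rho * cd * area_m2 * airspeed_mps ** 2 / mass_kg


def matching_net_mass(cd_net: float, area_net_m2: float,
                      cd_proj: float, area_proj_m2: float,
                      mass_proj_kg: float) -> float:
    """Net mass for which net and projectile get the same drag acceleration
    at the same relative airspeed: (Cd*A/m)_net == (Cd*A/m)_proj."""
    return mass_proj_kg * (cd_net * area_net_m2) / (cd_proj * area_proj_m2)
```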

Another uncertainty within the system is the exact location of the drone. We aim to know accurately where the drone, and thus the projectile, is. An infinitely accurate location is unachievable in reality, but we can get close. The sensors in the system must be optimized to locate the drone as well as possible. Fortunately, some sensors achieve high accuracy, for example a LiDAR sensor with a range of 2000 m and an accuracy of 2 cm. Our system operates well within this 2000 m range. An error of 2 cm is also much smaller than the size of the net (100 cm by 100 cm), so it should not cause problems for the interception.
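The effect of localization error on the interception can be estimated with a short Monte Carlo simulation. This sketch isolates that single error source and makes three assumptions: the net is aimed perfectly at the estimated position, the error is Gaussian and independent in the two axes of the net plane, and the quoted 2 cm accuracy corresponds to one standard deviation. None of these are sensor specifications. The function name is hypothetical.

```python
import random


def hit_rate(position_sigma_m: float, net_half_width_m: float = 0.5,
             trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often the true projectile position still
    falls inside the 1 m x 1 m net when the estimated position has independent
    Gaussian errors (std dev position_sigma_m) in both axes of the net plane."""
    rng = random.Random(seed)  # seeded for reproducible results
    hits = 0
    for _ in range(trials):
        dx = rng.gauss(0.0, position_sigma_m)
        dy = rng.gauss(0.0, position_sigma_m)
        if abs(dx) <= net_half_width_m and abs(dy) <= net_half_width_m:
            hits += 1
    return hits / trials
```

With a 2 cm standard deviation, every simulated interception lands inside the net. Even with a 0.5 m standard deviation, a bit under half still would.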

Literary Research

Autonomous Weapons Systems and International Humanitarian Law: Need for Expansion or Not[25]

A significant challenge with autonomous systems is ensuring compliance with international laws, particularly IHL. The paper delves into how such systems can be designed to adhere to humanitarian law and discusses critical and optional features such as the capacity to identify combatants and non-combatants effectively. This is directly relevant to ensuring our system's utility in operational contexts while adhering to ethical norms.

Artificial Intelligence Applied to Drone Control: A State of the Art[5]

This paper explores the integration of AI in drone systems, focusing on enhancing autonomous behaviors such as navigation, decision-making, and failure prediction. AI techniques like deep learning and reinforcement learning are used to optimize trajectory, improve real-time decision-making, and boost the efficiency of autonomous drones in dynamic environments.

Drone Detection and Defense Systems: Survey and Solutions[2]

This paper provides a comprehensive survey of existing drone detection and defense systems, exploring various sensor modalities like radio frequency (RF), radar, and optical methods. The authors propose a solution called DronEnd, which integrates detection, localization, and annihilation functions using Software-Defined Radio (SDR) platforms. The system highlights real-time identification and jamming capabilities, critical for intercepting drones with minimal collateral effects.

Advances and Challenges in Drone Detection and Classification[4]

This state-of-the-art review highlights the latest advancements in drone detection techniques, covering RF analysis, radar, acoustic, and vision-based systems. It emphasizes the importance of sensor fusion to improve detection accuracy and effectiveness.

Autonomous Defense System with Drone Jamming capabilities[26]

This patent describes a drone defense system comprising at least one jammer and at least one radio detector. The system is designed to send out interference signals that block a drone's communication or GPS signals, causing it to land or return. It also uses a technique where the jammer temporarily interrupts the interference signal to allow the radio detector to receive data and locate the drone's position or intercept its control signals.

Small Unmanned Aerial Systems (sUAS) and the Force Protection Threat to DoD[27]

This article discusses the increasing threat posed by small unmanned aerial systems (sUAS) to military forces, particularly the U.S. Department of Defense (DoD). It highlights how enemies are using these drones for surveillance and delivery of explosives.

The Rise of Radar-Based UAV Detection For Military: A Game-Changer in Modern Warfare[3]

This article discusses how radar-based unmanned aerial vehicle (UAV) detection is transforming military operations. SpotterRF’s systems use advanced radar technology to detect drones in all conditions, including darkness or bad weather. By integrating AI, these systems can distinguish between drones and non-threats like birds, improving accuracy and reducing false positives.

Swarm-based counter UAV defense system[28]

This article discusses autonomous systems designed to detect and intercept drones. It emphasizes the use of AI and machine learning to improve the real-time detection, classification, and interception of drones, focusing on autonomous UAVs (dUAVs) that can neutralize threats. The research delves into algorithms and swarm-based defense strategies that optimize how drones are intercepted.

Small Drone Threat Grows More Complex, Deadly as Tech Advances[29]

The article highlights the growing threat of small UAVs to military operations. It discusses how enemies use these systems for surveillance and direct attacks, and the various countermeasures the U.S. Department of Defense is developing to stop these attacks. It explores the use of jamming (interference with the connection between drone and controller), radio frequency sensing, and mobile detection systems.

US Army invents 40mm grenade that nets bad drones[30]

This article discusses recently developed technology that involves a 40mm grenade that deploys a net to capture and neutralise hostile drones. This system can be fired from a standard grenade launcher, providing a portable, low-cost method of taking down small unmanned aerial systems (sUAS) without causing significant collateral damage.

Making drones to kill civilians: is it ethical?[31]

Usually, anything that does harm to innocent people is seen as unethical. This would mean that every company that somehow supplies items used in war does something that is at least partially unethical. However, during war, international law states that a country is not bound by all traditional ethics. This makes it harder to decide what is ethical and what is not.

Sociocultural objections to using killer drones against civilians:

- Civilians (not in war) are getting killed by drones since the drones are not able to see the difference between people in war and people not in war

- We should not see war as a ‘clash of civilizations’, as this would imply that civilians are also part of war

Is it ethical to use drones to kill civilians?:

- As said above, international law applies during war between countries. This law implies:

o Killing civilians = murder = prohibited

- People attacked by drones say that it is not the drones that kill people, but people who kill people

A simple solution is to follow the 3 laws of robotics from Isaac Asimov:

- A robot may not injure a human being or allow a human being to come to harm

- A robot must obey orders given to it by human beings except when such orders conflict with the first law

- A robot must protect its own existence, provided such protection does not conflict with the first or second law

But following these laws would be too simple, as they are not actual laws.

The current drone killer’s rationale:

- A person is targeted only if he/she is harmful to the interests of this country so long as he/she remains alive

This rationale is objected to by:

- This rationale is simply assumed since the international law says nothing about random targeting of individuals

This objection is disproved by:

- If the target is not in warzone, it is not harmful to the interests of the country, thus such a person would not be a random person

Is it legal and ethical to produce drones that are used to kill civilians?:

A manufacturer of killer drones may not assume its drones are only being used peacefully.

The manufacturers of killer drones often have cautionary recommendations, which are there to put these manufacturers in a legally safe place.

Conclusion:

The problem is that drone killing is not covered in the traditional war laws. Literature is not uniform in opposition to drone killing, but the majority states that killing civilians is unethical.

Ethics, autonomy, and killer drones: Can machines do right?[32]

The article looks into the ethics of certain weapons used in war (in the US). Since we can look back on weapons that were new at the time (like atomic bombs), we can check whether what was considered ethical then is what we consider ethical now. The article uses two different viewpoints to judge the ethics of a war technology, namely teleology and deontology. Teleology focuses on the outcome of an action, while deontology focuses more on the duty behind an action.

The article first looks at the atomic bomb, which from a teleological viewpoint could be seen as ethical, as it would bring a quick end to the war and thus save lives in the long term. Deontology also says it could be ethical, since having such strong weapons would show superiority and intimidate other countries in war.

Next up for discussion is a torture program. According to teleology, this is an ethical thing to do, since torturing some people to extract critical information from them could prevent more deaths in the future.

Next, the article examines AI-enabled drones. For AI ethics, the AI should always be governed by humans, bias should be reduced (many civilians are getting killed now), and there should be more transparency. For a country this is more challenging, since it also has to focus on safety and winning a war. This is why there now is a contrast between teleology and deontology, unlike with the atomic bomb, where both viewpoints agreed. Teleology focuses on the outcome, thus on security and protection. Deontology focuses on global values, like human rights. The article says the challenge is to use AI technologies effectively while following ethical principles, and to get everyone to do the same.

Survey on anti-drone systems: components, designs, and challenges[33]

Requirements an anti-drone system must have:

- Drone specialized detection (detect the drone)

- Multi drone defensibility (Defend against multiple drones)

- Cooperation with security organizations (Restrictions on functionality should be discussed with public security organizations, e.g. police/military)

- System portability (lightweight and use wireless networks)

- Non-military neutralization (Don’t use military weapons to defend against drones)

Ways to detect drones:

- Thermal detection (Motors, batteries and internal hardware produce heat)

o Works in all weather

o Affordable

o Limited range

- RF scanner (capture wireless signals)

o Can’t detect drones that don’t produce RF signals

o Long range

- Radar detection (Detect objects and determine the shape)

o Long range

o Cannot detect a drone that is not moving, since it is classified as a static obstacle

- Optical camera detection (detect from a video)

o Short range

o Weather-dependent

Hybrid drone detection systems:

- Radar + vision

- Multiple RF scanners

- Vision + acoustic

Drone neutralization:

- Hijacking/spoofing (create a fake signal to prevent the drone from moving)

- Geofencing (Prevent drone from approaching a certain point)

- Jamming (Stopping radio communication between drone and controller)

- Killer drones (Using drones to damage attacking drones)

- Capture (physically capture a drone, for example with a net)

o Terrestrial capture systems (human-held or vehicle-mounted)

o Aerial capture systems (System on defender drones)

Determination of threat level:

- Object

- Flight path

- Available time

(NOTE: The article goes into more depth about some mathematics to determine the threat level, which could be used in our system)
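The survey derives the threat level with its own mathematics, which is not reproduced here. As a minimal sketch of how the three factors above could be combined in our system, the Python below scores a track from its object class, its flight path (distance and closing speed toward the protected point) and the time available before arrival. The class names, weights, the 10 s reaction time and the multiplicative combination are all hypothetical assumptions, not values from the article.

```python
import math
from dataclasses import dataclass

# Hypothetical weights per object class (assumptions, not from the survey).
OBJECT_WEIGHT = {"bird": 0.0, "hobby_drone": 0.4, "armed_drone": 1.0}
UNKNOWN_WEIGHT = 0.5  # hypothetical weight for classes the classifier cannot name


@dataclass
class Track:
    object_class: str        # classifier output, e.g. "armed_drone"
    distance_m: float        # current distance to the protected point
    closing_speed_ms: float  # speed toward the protected point; <= 0 means not approaching


def time_to_arrival(track: Track) -> float:
    """Seconds until the object reaches the protected point, or inf if it is not approaching."""
    if track.closing_speed_ms <= 0.0:
        return float("inf")
    return track.distance_m / track.closing_speed_ms


def threat_score(track: Track, reaction_time_s: float = 10.0) -> float:
    """Combine object, flight path and available time into a score between 0 and 1."""
    obj = OBJECT_WEIGHT.get(track.object_class, UNKNOWN_WEIGHT)
    t = time_to_arrival(track)
    if math.isinf(t):
        urgency = 0.0                  # flight path does not lead toward the protected point
    elif t <= reaction_time_s:
        urgency = 1.0                  # less time left than the assumed reaction time
    else:
        urgency = reaction_time_s / t  # urgency drops as more time remains
    return obj * urgency
```

With these assumed weights, an armed drone 200 m away closing at 20 m/s has 10 s left and scores 1.0, while a hobby drone 400 m away closing at 10 m/s has 40 s left and scores 0.1. Multiplying the factors means a harmless object, or a flight path leading away from the protected point, always scores zero; a weighted sum would be an equally plausible design choice.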

Artificial intelligence, robotics, ethics, and the military: a Canadian perspective[34]

The article looks not only at the ethics, but also at the social and legal aspects of using artificial intelligence in the military. To do so, it considers three main aspects of AI, namely Accountability and Responsibility, Reliability, and Trust.

Accountability and Responsibility:

The article states that accountability and responsibility for the actions of an AI system lie with the operator, who is a human. However, when the AI malfunctions, it becomes challenging to determine who is accountable.

Reliability:

Current AI is not reliable enough and only performs well in the very specific situations it was designed for. In military use it is impossible to know in advance what situation an AI will be in, which causes a lack of reliability. Verification of AI technologies is therefore necessary, especially when dealing with matters of life and death.

Trust:

People who use AI in the military should be taught how the AI works and to what extent they can trust it. Too much or too little trust in AI can lead to serious mistakes. The makers of these AI systems should be more transparent, so that it can be understood what the AI does.

We need a proactive approach to minimize the risks that come with AI. This means that everyone who uses or is involved with AI in the military should carefully consider the risks it brings.

When AI goes to war: Youth opinion, fictional reality and autonomous weapons[35]

The article examines the responsibilities and risks of fully autonomous robots in war. It does so by questioning young participants and combining their views with other research and theory.

The article found that the participants felt that humans should be responsible for the actions of autonomous robots. This is supported by theory stating that, since robots do not have emotions like humans do, they cannot be responsible for their actions in the same way humans are. If autonomous robots were programmed with ethics in mind, a robot could to some extent be held accountable for its actions as well. How responsibility should be divided between humans and robots remained unclear in the article. Some said responsibility lay purely with the engineers, designers and government, while others said that the human and the robot shared responsibility.

The article also found that fears of fully autonomous robots persist. These stem from old myths and from social media, which claim that autonomous robots can turn against humans and destroy them.

As for the legal side, autonomous robots can potentially violate laws during war, especially if they are not held responsible for their actions. This worries the young participants.

For the young participants, the threats that fully autonomous robots bring outweigh the benefits. This is a signal for the scientific community to further develop and implement norms and regulations for autonomous robots.

Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review[36]

Summary:

•The paper provides a comprehensive review of drone detection and classification technologies. It covers the various methods used to detect and identify drones, including radar, radio frequency (RF), acoustic, and vision-based systems. After weighing the strengths and weaknesses of each, the author discusses 'sensor fusion', where combining detection methods improves system performance and robustness.

Key takeaways:

•Sensor fusion should be incorporated into the system to improve performance and robustness
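One simple, well-known form of sensor fusion is inverse-variance weighting: each sensor's estimate of the same quantity is weighted by how precise it is. The sketch below fuses, for example, a radar and a camera range estimate for one drone. It assumes the sensor errors are independent and that each sensor reports a variance; practical systems often use a Kalman filter instead, and nothing here is taken from the reviewed paper.

```python
from __future__ import annotations


def fuse(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    estimates: (value, variance) pairs, e.g. a drone's range from radar and from a camera.
    Returns the fused value and its variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0.0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]  # more precise sensors get larger weights
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused variance is never larger than the smallest input variance
```

For a radar range of 100 m (variance 4 m²) and a camera range of 104 m (variance 12 m²), the fused range is 101 m with variance 3 m², which is smaller than either sensor's variance on its own.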

Counter Drone Technology: A Review[37]

Summary:

•The article provides a comprehensive analysis of current counter-drone technologies and categorizes counter-drone systems into three main groups: detection, identification, and neutralization technologies. Detection technologies include radar, RF detection, etc. Once a drone is detected, it must be identified as friend or foe. The review discusses methods such as machine learning algorithms and signature signal libraries. It covers various neutralization methods, including jamming (RF and GPS), laser-based systems, and kinetic solutions like nets or projectiles, and the challenges each method faces.

Key takeaways:

•Integration of multiple sensor technologies is critical

•Non-kinetic neutralization methods should be prioritized where possible to avoid unintended consequences

A Soft-Kill Reinforcement Learning Counter Unmanned Aerial System (C-UAS) with Accelerated Training[38]

Summary:

•This article discusses the development of a counter-drone system that uses reinforcement learning together with non-lethal (“soft-kill”) techniques. The system is designed to learn and adapt to various environments and drone threats using simulated environments.

Key takeaways:

•C-UAS systems must be rapidly deployable

•C-UAS systems should be trained in simulated environments to improve robustness and adaptability

Terrorist Use of Unmanned Aerial Vehicles: Turkey's Example[39]

Summary:

•The article examines how terrorist organizations have used drones for surveillance, intelligence gathering, and attacks. It highlights the growing accessibility of consumer drones, which are repurposed for malicious use, as well as the various counter-UAV technologies and tactics employed by Turkish forces to mitigate this threat.

Key takeaways:

•Running costs must be kept minimal, since affordable drones are widely accessible.

•Both kinetic and non-kinetic interception must be available if the system is to be used in urban or otherwise populated environments

Impact-point prediction of trajectory-correction grenade based on perturbation theory[40]

Summary:

•The article discusses trajectory prediction and correction methods for improving the accuracy of artillery projectiles. By modeling and simulating the effects of small perturbations in projectile flight, the study proposes an impact-point prediction algorithm. While this algorithm is intended for improving artillery accuracy, it could potentially be used to predict the trajectory and impact location of drone-dropped explosives.

Key takeaways:

•Detailed description of real-time trajectory prediction corrections

•Challenge to balance efficiency with accuracy in path prediction algorithms
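The article's perturbation-theory algorithm is not reproduced here. As a much simpler baseline, the impact point of a drone-dropped object can be predicted by numerically integrating its equations of motion with quadratic air drag and a horizontal wind, using the drag coefficient and frontal area discussed for our model. The sketch below is a 2D explicit Euler integration; the air density, the time step and any parameter values passed in are assumptions, and it ignores lift, spin, vertical wind and gusts.

```python
import math

RHO_AIR = 1.225  # assumed air density at sea level, kg/m^3
G = 9.81         # gravitational acceleration, m/s^2


def predict_impact(x0, z0, vx0, vz0, mass, cd, area, wind_x=0.0, dt=0.001):
    """Predict where and when a dropped object hits the ground (z = 0).

    x is horizontal distance and z is height, both in m; velocities in m/s.
    Drag acts on the velocity relative to the air:
        a_drag = -(0.5 * rho * Cd * A / m) * |v_rel| * v_rel
    Uses explicit Euler steps of size dt and returns (x_impact, t_impact).
    """
    if z0 <= 0.0:
        return x0, 0.0
    k = 0.5 * RHO_AIR * cd * area / mass  # drag constant per unit mass, 1/m
    x, z, vx, vz, t = x0, z0, vx0, vz0, 0.0
    while True:
        rel_x = vx - wind_x               # horizontal velocity relative to the moving air
        rel_z = vz                        # no vertical wind assumed
        speed = math.hypot(rel_x, rel_z)
        ax = -k * speed * rel_x
        az = -G - k * speed * rel_z
        x_new = x + vx * dt
        z_new = z + vz * dt
        vx += ax * dt
        vz += az * dt
        if z_new <= 0.0:
            # interpolate linearly inside the last step to find the ground crossing
            frac = z / (z - z_new)
            return x + frac * (x_new - x), t + frac * dt
        x, z, t = x_new, z_new, t + dt
```

Dropped from 100 m without drag, the predicted fall time is close to the analytic sqrt(2·100/9.81) ≈ 4.52 s; with drag and a 5 m/s wind, the object falls more slowly and drifts downwind. A smaller dt trades speed for accuracy, which is the efficiency-versus-accuracy balance noted in the takeaways above.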

Armed Drones and Ethical Policing: Risk, Perception, and the Tele-Present Officer[41]

This paper discusses the tele-present officer operating ‘unmanned drones’. It approaches the topic from the point of view of attacking, but it can also be viewed from the point of view of ‘attacking’ incoming drones, where the question remains whether a person should ‘pull the trigger’ to intercept a drone, given the potential risk of redirecting it toward another crowd.

The Ethics and Legal Implications of Military Unmanned Vehicles[42]

This paper states that human soldiers/marines also do not agree on what ethical warfare is. It gives a few examples of questions on which the soldiers/marines gave controversial answers. (We may use this to argue why our device should or should not be autonomous.)

Countering the drone threat implications of C-UAS technology for Norway in an EU and NATO context[43]

This paper gives clear insight into different scenarios in which drones can be a threat, for example to large crowds but also in warfare. However, it does not give a concrete solution.

An anti-drone device based on capture technology[44]

This paper explores the capabilities of capturing a drone with a net. It also addresses some other forms of anti-drone devices, such as lasers, hijacking and RF jamming.

The rest of the paper is a highly technical treatment of net capture.

Four innovative drone interceptors[45]

This paper lists five different ways of detecting drones: acoustic detection and tracking with microphones positioned in a particular grid, video detection by cameras, thermal detection, radar detection and tracking, and finally detection through the drone's radio emissions. Because we also want to be able to catch ‘off-the-shelf’ drones, we have to investigate which of these methods are appropriate. For taking down the drone, the paper gives six options: missile launch, radio jamming, net throwers, machine guns, lasers and drone interceptors. The four drone interceptors it introduces are somewhat above our budget, as they are real drones equipped with various kinds of generators to take down a drone (for example with a high electric pulse), but we could still look into them.

Comparative Analysis of ROS-Unity3D and ROS-Gazebo for Mobile Ground Robot Simulation[46]

This paper examines the use of Unity3D with ROS versus the more traditional ROS-Gazebo for simulating autonomous robots. It compares their architectures, performance in environment creation, resource usage, and accuracy, finding that ROS-Unity3D is better for larger environments and visual simulation, while ROS-Gazebo offers more sensor plugins and is more resource-efficient for small environments.

Interview Preparation

Interview 1: Introductory interview with F.W.

General understanding:

- What types of anti-drone systems are currently used in the military?

- What are key features that make an anti-drone system effective in the field?

- What are the most common types of drones that these systems are designed to counter?

- What are the most common types of drone interception that these systems employ?

- Are there any specific examples of successful or failed anti-drone operations you could share?

- DroneShield

- Anduril

Limitation of current systems:

- What are the most significant limitations of current anti-drone systems?

- Are there any specific environments (urban, desert, etc.) where anti-drone systems struggle to perform well?

Cost-related:

- Can you give a rough idea of the costs involved in deploying these systems?

- Purchase cost

- Maintenance cost

- Cost-to-intercept

- What are usual price ranges for systems like these?

- Affordable options

- Full-scale, full-feature systems (military-grade, fully-equipped, etc.)

Ethics discussion:

- Which ethical concerns may be associated with the use of anti-drone systems, particularly regarding urban, civilian areas?

- How does the military handle ethical issues when deploying these technologies?

Potential improvements:

- What improvements do you think are necessary to make anti-drone systems more effective? What are current shortcomings?

- Are there specific threats (related to the build of the drone or other factors) that these systems are weak against?

- Do you think AI or machine learning could help enhance anti-drone systems? To what extent is it currently being used?

Technical questions:

- Is significant training required for personnel to effectively operate anti-drone systems?

- Time required for training

- Infrastructure required for training

- Cost?

- How do these systems usually handle multiple drone threats or swarm attacks?

- Can you explain how systems differentiate between hostile and non-hostile drones?

- How are these systems tested and validated before they are deployed in the field?

Logbook

| Name | Total | Break-down |
|---|---|---|
| Max van Aken | 10h | Attended lecture (2h), Attended meeting with group (2h), Analysed papers/patents [21], [22], [23], [24], [25] (5h), Summarized and described key takeaways for papers/patents [21], [22], [23], [24], [25] (1h) |
| Robert Arnhold | 16h | Attended lecture (2h), Attended meeting with group (2h), Analysed papers/patents [11], [12], [13], [14], [15] (10h), Summarized and described key takeaways for papers/patents [11], [12], [13], [14], [15] (2h) |
| Tim Damen | 16h | Attended lecture (2h), Attended meeting with group (2h), Analysed papers [12], [13], [14], [15], [16] (10h), Summarized and described key takeaways for papers [12], [13], [14], [15], [16] (2h) |
| Ruben Otter | 17h | Attended lecture (2h), Attended meeting with group (2h), Analysed papers/patents [1], [2], [3], [4], [5] (10h), Summarized and described key takeaways for papers/patents [1], [2], [3], [4], [5] (2h), Set up Wiki page (1h) |
| Raul Sanchez Flores | 16h | Attended lecture (2h), Attended meeting with group (2h), Analysed papers/patents [6], [7], [8], [9], [10] (10h), Summarized and described key takeaways for papers/patents [6], [7], [8], [9], [10] (2h) |

| Name | Total | Break-down |
|---|---|---|
| Max van Aken | 13h | Attended lecture (30min), Attended meeting with group (1h), Researched kinds of situations for the device (2h), Wrote about situations (1.5h), Researched ethics (6h), Wrote about ethics (2h) |