PRE2020 4 Group4: Difference between revisions


The costs for the project depend on the complexity of the chatbot. Because the chatbot we are designing is artificially intelligent and has several features, the costs will be somewhat higher than the minimum. The costs for Coco are therefore estimated at around $40,000 to $55,000, also based on the estimates from <ref name = 'Hyena'>How Much Does it Cost to Develop Virtual Assistant Apps 2021. (2021, May 28). https://www.hyena.ai/how-much-does-it-cost-to-develop-virtual-assistant-apps/ </ref>.

Responses to our second survey showed that the largest group of respondents was willing to pay 0 to 5 euros a month for Coco. This means that the costs of $55,000 would be covered within one year if a little more than 1,500 people had a paid yearly subscription to Coco. This seems achievable, especially considering that there are currently about 344,000 university students in the Netherlands <ref name = 'Kengetallen'>Prognose aantal studenten wo | Kengetallen | Onderwijs in cijfers. (n.d.). Retrieved June 7, 2021, from https://www.onderwijsincijfers.nl/kengetallen/wo/studenten-wo/prognoses-aantal-studenten-wo </ref>.
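The break-even estimate above can be checked with a small calculation. The sketch below is a minimal illustration, not part of the original cost analysis: it assumes that all revenue comes from yearly subscriptions, ignores operating costs and taxes, treats dollars and euros as roughly equal, and uses hypothetical monthly prices within the 0 to 5 euro range from the survey. The function name `break_even_subscribers` is our own.

```python
import math


def break_even_subscribers(total_cost: float, monthly_price: float, months: int = 12) -> int:
    """Smallest number of yearly subscribers whose fees cover total_cost."""
    if monthly_price <= 0:
        raise ValueError("monthly_price must be positive")
    yearly_fee = monthly_price * months
    return math.ceil(total_cost / yearly_fee)


# Upper end of the estimated development cost; dollars and euros treated as equal (assumption).
TOTAL_COST = 55_000

# Hypothetical monthly prices within the 0 to 5 euro range from the second survey.
for price in (2.0, 3.0, 4.0, 5.0):
    print(f"{price:.2f} per month -> {break_even_subscribers(TOTAL_COST, price)} subscribers")
```

Under these assumptions, a price of 3 per month requires 1,528 subscribers, which matches the estimate of a little more than 1500 subscribers and corresponds to roughly 0.44% of the 344,000 students mentioned. At the upper end of the survey range (5 per month), about 917 subscribers would suffice.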

Evaluation of Alternatives

Revision as of 11:31, 12 June 2021

Coco, The Computer Companion

Group Description

Members

| Name | Student ID | Department | Email address |
|---|---|---|---|
| Eline Ensinck | 1333941 | Industrial Engineering & Innovation Sciences | e.n.f.ensinck@student.tue.nl |
| Julie van der Hijde | 1251244 | Industrial Engineering & Innovation Sciences | j.v.d.hijde@student.tue.nl |
| Ezra Gerris | 1378910 | Industrial Engineering & Innovation Sciences | e.gerris@student.tue.nl |
| Silke Franken | 1330284 | Industrial Engineering & Innovation Sciences | s.w.franken@student.tue.nl |
| Kari Luijt | 1327119 | Industrial Engineering & Innovation Sciences | k.luijt@student.tue.nl |

Logbook

See the following page: Logbook Group 4

Subject

We want to analyze and design an AI companion that addresses problems associated with online learning and working from home, such as diminished motivation, loneliness and physical health problems. To address these problems, we introduce Coco, the computer companion. Coco will be an artificially intelligent, interactive agent that users can easily install on their laptop or PC.

Problem Statement and Objectives

Problem Statement

Due to the COVID-19 pandemic that emerged at the beginning of 2020, everyone's lives have been turned upside down. Working from home as much as possible was, and in many places still is, the norm all around the world. This applies to office workers, but also to college, university and high school students. Working from home is also expected to remain common in the post-pandemic world, where online work will be combined with working physically at school or in the office [1].

However, even though working from a home office may have benefits, it also has many disadvantages that affect people's health, motivation and concentration. Multiple studies have found such effects, both mental and physical, resulting from the work-from-home situation [2] [3].

Mental issues that might arise include emotional exhaustion, as well as feelings of loneliness, isolation and depression. Moreover, because people are highly exposed to computer screens, they can experience fatigue, tiredness, headaches and eye-related symptoms [4]. Additionally, people exercise less while working from home during the pandemic. This can affect metabolic, cardiovascular and mental health, and may ultimately increase the risk of mortality [3].

Other issues relate to the concentration and motivation of people working from home. Office workers who work at home while also taking care of their families find it hard to stay on one task, because they want to run errands for their families [4]. In addition, communication requires greater concentration from home office workers [5]. Students have also reported experiencing a heavier workload, fatigue and a loss of motivation due to COVID-19 [6].

Because of the shift to working online, all these mental and physical health problems are expected to persist in the post-pandemic world. According to our first survey, it is primarily students who experience the negative effects of working from home. We therefore changed the user group to exclude office workers, and during this project we will focus on finding a solution for college, university and high school students.

Objectives

Our objectives are the following:

- Help with concentration and motivation (study-buddy)

- Improve physical health

- Provide social support for the user

USE: User, Society and Enterprise

Target user group

The user groups for this project will be office workers and college, university and high school students, since these groups experience the most negative effects of the work-from-home restrictions. Each group has several requirements, most of which are related to COVID-19. First of all, there are requirements related to mental health. It is important for people to have social interactions from time to time. Individuals living alone could develop mental health issues such as depression and loneliness due to the lack of social interaction caused by the restrictions [4]. It is also important that people can concentrate well when they are working and that they can maintain their motivation and focus. Studies show that due to COVID-19, students experience a heavier workload, fatigue and a loss of motivation [6].

Regarding physical health, it is important that students and office workers are physically active and healthy. Working from home can cause several physical health problems for students and employees. People with an office job often get little physical exercise during their work hours, and quarantine measures have reduced this even further [4]. This can affect cardiovascular and metabolic health, and even mental health [3]. In addition, the increased exposure to computer screens since the outbreak of COVID-19, which especially applies to high school students, can result in tiredness, headaches and eye-related symptoms [4]. Hence, students and employees should become more physically active to improve their physical (and mental) health.

Secondary users

When people use Coco, they should gain better concentration, motivation and physical health than without the computer assistant. Moreover, people who feel lonely can find social support in Coco. Parents of students will also profit from these benefits, because they need to worry less about their children and their education, as Coco will assist the children while studying.

Besides parents, teachers will profit from Coco too. Since Coco will help the students with studying, the teachers can focus on their actual educational tasks.

Moreover, co-workers and managers will profit from their colleagues using Coco. Coco can help workers maintain their physical and mental health, which in turn leads to a better work mentality and environment.

Society

When people use Coco the computer companion, they will have better concentration, motivation, and mental and physical health. This means that many people in society will have a higher well-being, which in turn results in a healthier society. Moreover, because people work and study better, both companies and schools will achieve better results.

Enterprise

There are two main types of enterprise stakeholders. First, Coco needs to be developed, and this is where a software company comes in. Such a company will develop the virtual agent and sell licenses to other organizations. These organizations are the second type of stakeholder: they are interested in buying Coco for their employees or students. This could be a small enterprise that wants to buy a license for a small group of employees, but also a large university that wants to provide the virtual agent to all its students. The effects for the software development company will be economic, since it will earn money by selling Coco software licenses. For the buying organizations, the license means that the physical and mental health of their employees or students will improve, i.e., they gain the primary users' benefits.

Approach

To address these consequences and improve the health and motivation of people working from home, we introduce Coco, the computer companion. Coco will be an artificially intelligent, interactive agent that users can easily install on their laptop or PC.

Regarding the mental health of users, a main problem is loneliness. Previous research has examined the impact of robotic technologies on social support. Ta et al. [7] found that artificial agents provide social support not only in laboratory experiments but also in daily life situations. Furthermore, Odekerken-Schröder et al. (2020) found that companion robots can reduce feelings of loneliness by building supportive relationships [8].

Regarding the physical well-being of users, technology can help increase physical activity. As stated by Cambo et al. (2017), a mobile application or wearable that tracks self-interruptions and initiates a playful break could induce physical activity in the daily routine of users [9]. Moreover, Henning et al. (1997) found that at smaller work sites, users' well-being improved when exercises were included in short breaks [10].

Finally, regarding the productivity of users, a paper by Abbasi and Kazi (2014) shows that learning chatbot systems can enhance the performance of students [11]. In an experiment where one group used Google and another group used a chatbot to solve problems, the chatbot had an impact on the students' memory retention and learning outcomes. The previously mentioned research by Henning et al. also showed that not only the users' well-being, but also their productivity would increase in the presence of a chatbot [12]. Moreover, an experiment by Lester et al. (1997) showed that the presence of a lifelike character in an online learning environment can strongly influence the perceived learning experience of students around the age of 12. Adding such an interactive agent to the learning process can make it more fun, and the agent is also perceived as helpful and credible [13].

Method

At the end of the project, we will present our complete concept of the AI companion. This will include its design and functionality, which are based on both literature research and statistical analysis of distributed questionnaires. The questionnaires will be completed by the user group, to make sure the actual users of the technology have input in the development and analysis of the companion. Moreover, the user needs and perceptions will be described. The broader societal and entrepreneurial effects will also be taken into account. In this way, all USE aspects will be addressed. Finally, a risk assessment will be included, as limitations related to the costs and privacy of the product are also important for the realization of the technology. These deliverables will be presented both on this wiki page and in a final presentation. A schematic overview of the deliverables can be found in Table 1.

Table 1: Schematic overview of the deliverables

| Topic | Deliverable |
|---|---|
| Functionality | Literature study |
| | Results questionnaire 1: user needs |
| Design | Results questionnaire 2: design |
| | Example companion |
| Additional | Risk assessment |

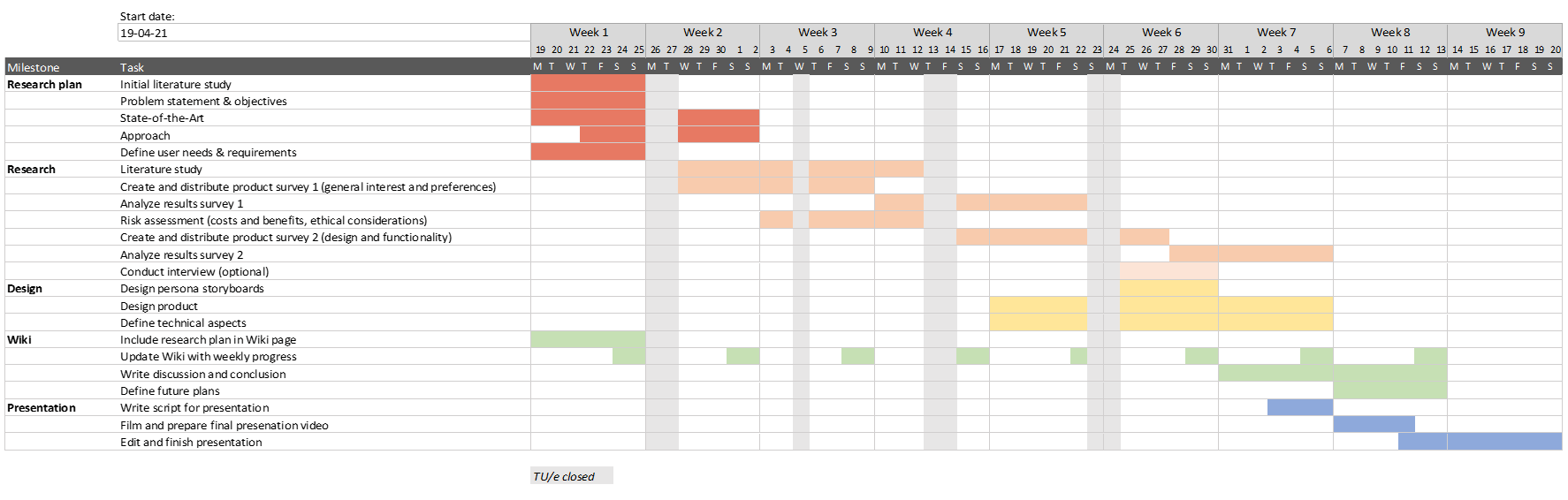

Milestones

During the project, several milestones are planned. These milestones correspond to the deliverables mentioned in the section above and can be found in Table 2.

Table 2: Overview of the milestones

| Topic | Milestone |
|---|---|
| Organization | Complete planning |
| Functionality | Complete literature study |
| | Responses questionnaire 1: user needs |
| | Complete analysis questionnaire 1: user needs |
| Design | Responses questionnaire 2: design |
| | Complete analysis questionnaire 2: design |
| | Design of the companion |

Survey

To find out more about the group of people that will be most interested in a virtual companion, a survey will be conducted. This survey is meant to specify the target group and to learn about their preferences regarding the functionality of the virtual agent. The survey consists of 21 questions about Coco, divided into the topics demographics, general computer work, productivity, tasks of a VA (virtual assistant), privacy and interest. The link to the survey can be found here, and the analysis of the survey can be found here.

Planning

Research

State of the Art

Productivity agents

As discussed by Grover et al. [14], multiple applications exist that focus on task and time management. They all try to assist their users, but in different ways. “MeTime”, for example, tries to make its users aware of their distractions by showing which apps they use and for how long. “Calendar.help”, on the other hand, is connected to its user's email and can schedule meetings accordingly. Other examples include “RADAR”, which tackles the problem of “email overload”, and “TaskBot”, which focuses on teamwork.

Grover et al. mention how Kimani et al. [15] designed a so-called productivity agent in an attempt to incorporate all the aforementioned applications with different functions into one artificially intelligent system. The conversational agent they described focused on improving productivity and well-being in the workplace. By means of a survey and a field study, they investigated the optimal functionality of a productivity agent. The findings suggest four tasks that are most important for such agents: distraction monitoring, task scheduling, task management and goal reflection [14].

With their research, Grover and colleagues [14] wanted to gain more insight into the influence of an anthropomorphic appearance in agents, compared to a simple text-based bot with lower perceived emotional intelligence. Even though productivity increased in the presence of a chatbot, the outcomes suggest that there was no significant performance difference between the virtual agent and the text-based agent. Interaction with the virtual agent was, however, perceived as more pleasant, supporting the idea that higher emotional intelligence in agents can reduce negative emotions like frustration [16]. The researchers also found that the appearance of an agent should match its capabilities, meaning that an agent should only have an anthropomorphic appearance if it can also act human-like. Other suggestions for improvement focused on the agent's inflexible task management skills and inappropriately timed distraction monitoring messages. These last points in particular will guide the design of an improved agent system architecture during this research. Grover et al. suggest including an additional dialog model in the agent architecture, which could be initiated by the user when they want to reschedule a task or change its duration. They also suggest extending the distraction detection functionality and letting users personalize their list of distracting websites and applications.
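To make the suggested user-initiated dialog model and the personalizable distraction list more concrete, here is a minimal rule-based sketch. It is not Grover et al.'s implementation: the class and method names (`Planner`, `handle`, `is_distracting`) and the fixed command phrasings are hypothetical, and the regular expressions merely stand in for the natural language understanding a real agent would need.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from datetime import time


@dataclass
class Task:
    name: str
    start: time
    minutes: int


@dataclass
class Planner:
    """Hypothetical planner with a user-initiated dialog and a personal distraction list."""
    tasks: dict[str, Task] = field(default_factory=dict)
    distractions: set[str] = field(default_factory=set)

    def add_task(self, task: Task) -> None:
        self.tasks[task.name.lower()] = task

    def is_distracting(self, site_or_app: str) -> bool:
        return site_or_app.lower() in self.distractions

    def handle(self, message: str) -> str:
        """Match a few fixed phrasings; a stand-in for real language understanding."""
        text = message.strip().lower()

        # "move <task> to HH:MM": reschedule an existing task
        if m := re.fullmatch(r"move (.+) to (\d{1,2}):(\d{2})", text):
            task = self.tasks.get(m[1])
            if task is None:
                return f"I don't know a task called '{m[1]}'."
            hour, minute = int(m[2]), int(m[3])
            if hour > 23 or minute > 59:
                return "That is not a valid time."
            task.start = time(hour, minute)
            return f"Moved {task.name} to {task.start:%H:%M}."

        # "make <task> <N> minutes": change the duration of a task
        if m := re.fullmatch(r"make (.+) (\d+) minutes", text):
            task = self.tasks.get(m[1])
            if task is None:
                return f"I don't know a task called '{m[1]}'."
            task.minutes = int(m[2])
            return f"{task.name} now takes {task.minutes} minutes."

        # "add <site> to distractions" / "remove <site> from distractions"
        if m := re.fullmatch(r"(add|remove) (\S+) (?:to|from) distractions", text):
            if m[1] == "add":
                self.distractions.add(m[2])
            else:
                self.distractions.discard(m[2])
            return f"Your distraction list: {sorted(self.distractions)}"

        return "Sorry, I can only reschedule tasks, change their duration or edit your distraction list."


planner = Planner(distractions={"youtube.com"})
planner.add_task(Task("Statistics homework", time(14, 0), 60))
print(planner.handle("Move statistics homework to 15:30"))  # Moved Statistics homework to 15:30.
print(planner.handle("add reddit.com to distractions"))
```

A rule-based matcher keeps the example small and predictable, but it only understands these exact phrasings. That is precisely the kind of inflexibility a trained language model would have to remove in a real version of Coco.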

Companion agents

On the Play Store or App Store, you can download “Replika: My AI Friend”. This is a companion chatbot that imitates human-like conversations, and the more you use the app, the more it learns about you. Ta et al. [7] investigated the effects of this advanced chatbot. They found that it is successful in reducing loneliness, as it offers a form of companionship. Some other benefits were found as well, including its ability to positively affect its users by sending positive and caring messages, to give advice, and to enable conversation without fear of judgement.

Physical health agents

Cambo, Avrahami, & Lee [17] investigated the application “BreakSense” and concluded that the technology should let users decide for themselves when to take a break. They found that, in this way, physical activity became part of the users' daily routine.

Computer assistants

Although these agents focus on specific tasks, there are also personal computer assistants that are developed to help users, for instance children, with their daily activities more generally. The study by Kessens et al. [18] investigated such a computer assistant, namely the Philips iCat. This animated virtual robot can show varying emotional expressions and fulfilled the roles of companion, educator and motivator.

Related Literature

General

A list of related scientific papers, including short summaries stating their relevance, can be found here.

Design of the Virtual Agent

In week 3, a separate literature study was conducted, specifically focused on the design aspect of the virtual agent. The aim of this study is to form a scientifically grounded recommendation for the design of the virtual agent, based on the overall direction of all papers taken together.

An overview of the collected data regarding the design and appearance of the agent can be found here.

Motivation for virtual agent

This section explains why we decided to develop a virtual agent instead of a physical robot. Several important aspects of Coco will be discussed to support this choice.

Pros and cons of a physically embodied agent

There are a few clear pros for an embodied agent. Firstly, a physically embodied agent enhances social telepresence, which is the feeling of face-to-face interaction while a person is not physically present (e.g., in video calls) [19]. According to Lee et al., an embodied agent is also able to provide affordances for proper social interaction, meaning that a physical robot has fundamental properties that determine how it is used [20]. These two points show that a physically present robot is better able to provide good social interaction than a virtual agent, since a virtual agent does not have the same affordances.

However, these arguments for a physically embodied agent can also be countered. The social telepresence mentioned before decreases the smoothness of the participant's speech [19]. Besides this, Lee et al. found that if an agent had an anthropomorphic physical embodiment, humans had very high expectations of the robot. However, if the robot then was not able to respond to touch input, these high expectations dropped immediately and people became frustrated and disappointed in the robot. This is a clearly negative effect of physical embodiment [20].

Another con of physically embodied robots is that they are very expensive to develop and maintain (the many embedded sensors and motors can cause many technical difficulties). A paper on the design of social robots by Puehn et al. compares the low-cost social robot ‘Philos’ to commercial social robots. Their design, Philos, has a commercial value of $3,000, and the associated software is free. However, the more widely known, higher-quality robots, like the animal robot Paro and the humanoid robot Nao, cost much more, namely $6,000 and $15,000 respectively [21]. This shows that even a low-cost social robot will still be sold for about $3,000, which will probably not attract our user group: students.

Pros and cons of a virtual agent

A virtual agent also has several clear pros. Firstly, a virtual agent can process both spoken and written language, which allows easier communication between Coco and its user. Written communication in particular avoids confusion due to accents or mispronounced words. The communication software, for example language processing software, can also be updated very easily. Secondly, a virtual agent can be accessed at any time and does not have to be repaired, only updated (Milne, Luerssen, Lewis, Leibbrandt & Powers). Besides this, a virtual agent can easily communicate with other applications on a laptop, computer or phone [22].

Conclusion

The physically embodied robot and the virtual agent can be compared on different aspects. The largest pro of a physically embodied agent is that it enhances social interaction, which is of course favorable, since it has a positive influence on students. However, since the target group of our research consists of pupils and students, we have to take their needs into account. The average student does not have the money to invest in a physically embodied robot such as Paro. Even low-cost social robots, such as the aforementioned ‘Philos’, will still cost at least $3,000 (even more if they need repairs), and if the robot is not realistic, people's expectations will drop. With that, the advantage of a physically embodied agent becomes minimal.

The agent that we want to develop needs to have multiple functionalities. To improve students' concentration and motivation (objective 1), Coco would benefit from permission to control apps or websites. Imagine, for instance, that someone has their social media open while trying to do homework. Incoming messages from the social media platform would cause a lot of distraction, which could be prevented by (temporarily) blocking the platform. Additionally, if Coco is able to monitor activity on your computer, it can provide you with summaries and recommendations on how to invest your time more wisely. These recommendations can be made even better when Coco is able to see your online planning. All of these desired functionalities are easier to implement in a virtual agent than in a physically embodied agent because of the pros mentioned earlier. The software can easily be updated online, and since it is a virtual agent, it can easily communicate with other applications (such as Google Calendar), for example to help you schedule tasks. This is not possible with a physical agent. Additionally, a virtual agent can process both spoken and written language, allowing easy communication with the user. Lastly, you can take a virtual agent with you everywhere, which is harder with a physical robot.
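The monitoring and temporary blocking described above could be sketched as follows. This assumes a hypothetical activity log of (application or website, minutes) pairs collected with the user's permission, a 25% threshold for suggesting a block that we chose purely for illustration, and a blocker that only keeps track of which sites are blocked until when. Actually enforcing a block would require operating-system or browser integration that is not shown here, and all function and class names are our own.

```python
from __future__ import annotations

from collections import Counter
from datetime import datetime, timedelta


def summarize(log: list[tuple[str, int]], distracting: set[str]) -> tuple[Counter, float]:
    """Total minutes per app or site, plus the share of time spent on distracting ones."""
    totals: Counter = Counter()
    for app, minutes in log:
        totals[app] += minutes
    total = sum(totals.values())
    distracted = sum(m for app, m in totals.items() if app in distracting)
    return totals, (distracted / total if total else 0.0)


def recommend(share: float, threshold: float = 0.25) -> str:
    """Turn the distraction share into a short message (the threshold is an assumption)."""
    if share > threshold:
        return f"{share:.0%} of your screen time went to distractions. Shall I block them during your next study session?"
    return f"Nice focus: only {share:.0%} of your screen time went to distractions."


class TemporaryBlocker:
    """Remembers which sites are blocked until when; it does not enforce the block itself."""

    def __init__(self) -> None:
        self._until: dict[str, datetime] = {}

    def block(self, site: str, minutes: int, now: datetime) -> None:
        self._until[site] = now + timedelta(minutes=minutes)

    def is_blocked(self, site: str, now: datetime) -> bool:
        until = self._until.get(site)
        return until is not None and now < until


log = [("word", 90), ("instagram.com", 30), ("word", 30), ("youtube.com", 30)]
totals, share = summarize(log, {"instagram.com", "youtube.com"})
print(totals.most_common(1))  # [('word', 120)]
print(recommend(share))

start = datetime(2021, 6, 12, 14, 0)
blocker = TemporaryBlocker()
blocker.block("instagram.com", 45, start)
print(blocker.is_blocked("instagram.com", start + timedelta(minutes=30)))  # True
```

Passing the current time in explicitly, rather than reading the clock inside the class, keeps the blocking logic easy to test and lets the same code be reused for planned study sessions.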

Artificial Intelligence applications

One of the important characteristics of our computer companion Coco is that it is not an ordinary chatbot, but an advanced virtual agent. This means that it uses artificial intelligence (AI) to function better and meet the user needs. These user needs were investigated in the first survey we sent out. Based on this survey, the main objectives have been set to (1) help with concentration and motivation, and (2) provide social support.

Natural Language Processing

First of all, Coco will need natural language processing to analyze the user's textual input and subsequently provide correct textual responses. What makes Coco an artificial agent is that it does not have all its questions and answers preprogrammed. Instead, it processes and analyzes the conversation and responds to it.

In the first survey, only 26% of the respondents in our user group (people aged 25 or younger) would give permission to access the microphone. For this reason, we have chosen to communicate only via text messages. Another benefit of this approach is that less confusion will arise, since wrong pronunciations or background noise cannot interfere. Coco itself will also not “speak” to its users, but only communicate via text messages and pop-ups. This makes it easier to use Coco in settings where other people are present as well (e.g., public places).

Sentiment Analysis

Furthermore, Coco will include sentiment analysis. As explained before, we would like Coco to show emotionally intelligent behavior, so that it can provide the right social support. Sentiment analysis would be an important step in reaching this goal, as it allows Coco to analyze its users' emotions. Models often categorize sentiments as either positive or negative [23]. More precise distinctions could be made, although this is more difficult. Coco could then adapt its responses to the sentiment of the user. For example, an extremely happy response would not be appropriate if the user is feeling sad. However, it would be appropriate if the user just received some positive news (e.g., that they passed an exam).

Also, by analyzing the user's sentiment, Coco would be able to see whether the user needs social support. Imagine that someone is feeling bored or lonely and is therefore responding negatively. Coco could detect this and ask how they are doing. It could also send some suggestions to feel better or provide some motivating messages to support the user. By analyzing the user's response again, Coco could tell whether the person appreciates its support (e.g., by checking the emojis used). After all, sentiment analysis should be able to analyze different types of input, such as text, emoticons or symbols [23].
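As an illustration of the sentiment analysis described above, the sketch below uses a tiny hand-made lexicon of words, emojis and emoticons, plus a simple negation rule, and picks a reply whose tone matches the score. This is a deliberately simplified stand-in, not the models discussed in [23] or [24]: the word lists, scores and replies are hypothetical, and a real system would use a trained classifier.

```python
import re

# Tiny illustrative lexicons (hypothetical); a real system would use a trained model.
POSITIVE = {"good", "great", "happy", "passed", "nice", "love", "fun"}
NEGATIVE = {"bad", "sad", "lonely", "bored", "tired", "failed", "stressed", "hate"}
NEGATORS = {"not", "no", "never", "don't", "isn't", "wasn't"}
# Emojis and emoticons with their polarity.
SYMBOLS = {"🙂": 1, "😀": 1, "🎉": 1, "👍": 1, ":)": 1, "😢": -1, "😞": -1, "👎": -1, ":(": -1}


def sentiment(text: str) -> int:
    """Sum of word and symbol polarities; a negator flips the next sentiment word."""
    score = 0
    negate = False
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in NEGATORS:
            negate = True
            continue
        polarity = (word in POSITIVE) - (word in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
            negate = False
    for symbol, polarity in SYMBOLS.items():
        score += polarity * text.count(symbol)
    return score


def reply(text: str) -> str:
    """Pick a response whose tone matches the detected sentiment."""
    score = sentiment(text)
    if score > 0:
        return "That's great to hear! 🎉"
    if score < 0:
        return "I'm sorry you feel this way. Would a short break or a chat help?"
    return "Thanks for letting me know. How is your studying going?"


print(sentiment("I passed my exam :)"))  # 2
print(reply("I feel so lonely 😢"))
```

The sketch also illustrates the limitations discussed next: a sarcastic message such as "Yeah, great, another exam" receives a positive score, because the lexicon only sees the word "great".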

To conclude, sentiment analysis would be important for providing the right social support, which is one of the main objectives of Coco. However, current analysis methods are not yet perfect. Difficulties can arise for various reasons, such as sarcasm and punctuation, but also the specific context in which a word is used. As an example, the model discussed in the TED talk by Andy Kim reached an accuracy of around 60% [24]. However, as the technology keeps developing, the accuracy is expected to increase further.

Preference Learning

Preference learning allows Coco to adapt to its specific users. For instance, if Coco starts making jokes to cheer its user up and the user reacts positively, it could learn that this user likes jokes as a motivator. However, if the user reacts with “stop it”, this user does not appreciate jokes. Another example could be Coco asking whether the user would like to take a short break. If a certain person always responds “no” to breaks at 15:00 and “yes” to breaks at 16:00, Coco could learn that this user prefers a break around 16:00 instead of 15:00.

As these two examples show, it is necessary to save user data in order to create such preference models. However, the first survey showed that only 46% of the participants from our user group (people aged 25 or younger) would give permission to save their user data. Hence, this last AI-related application of Coco could be added as an optional extra feature, which people could enable if they feel comfortable with having their usage data saved.
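Both examples above, together with the consent requirement, could be handled with a simple counting approach. The sketch below uses frequency counts with add-one (Laplace) smoothing rather than a full preference-learning model; the class name `PreferenceModel`, the context labels and the 0.5 threshold are hypothetical choices, and nothing is stored unless the user has opted in.

```python
from __future__ import annotations

from collections import defaultdict


class PreferenceModel:
    """Per-user acceptance counts for Coco's suggestions, kept only with consent."""

    def __init__(self, consent: bool) -> None:
        self.consent = consent
        # (kind, context) -> [times accepted, times declined]
        self._counts: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])

    def record(self, kind: str, context: str, accepted: bool) -> None:
        if not self.consent:
            return  # opt-in feature: without consent, no user data is saved
        self._counts[(kind, context)][0 if accepted else 1] += 1

    def acceptance(self, kind: str, context: str) -> float:
        """Add-one (Laplace) smoothed acceptance rate; 0.5 when nothing is known yet."""
        accepted, declined = self._counts.get((kind, context), (0, 0))
        return (accepted + 1) / (accepted + declined + 2)

    def should_offer(self, kind: str, context: str, threshold: float = 0.5) -> bool:
        return self.acceptance(kind, context) >= threshold

    def best_context(self, kind: str, options: list[str]) -> str:
        """Option with the highest smoothed acceptance (first one on ties)."""
        return max(options, key=lambda option: self.acceptance(kind, option))


model = PreferenceModel(consent=True)
for _ in range(3):
    model.record("break", "15:00", accepted=False)
    model.record("break", "16:00", accepted=True)
model.record("joke", "cheer up", accepted=False)  # user replied "stop it"

print(model.best_context("break", ["15:00", "16:00"]))  # 16:00
print(model.should_offer("joke", "cheer up"))  # False
```

Smoothing gives unseen contexts a neutral score of 0.5, so Coco keeps offering suggestions until it has evidence against them. A drawback of this simple rule is that a single rejection already stops the suggestion in that context; a real system would occasionally try again to see whether the preference has changed.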

Design and Appearance Recommendation

Text-based or visually represented

There are several ways in which the virtual assistant can communicate with the user. For example, the communication could be (primarily) text-based or speech-based, but the agent could also have a visual appearance. This appearance could be a static image, or the agent could have facial expressions. When looking at the visual design of a virtual agent, it is important to ask ourselves whether giving the virtual assistant an appearance is actually a good idea in the first place. Many past studies show additional benefits of having an interface, related to the user's performance and social interaction. We will look into this in more detail.

First of all, having an interface will make the virtual assistant seem more emotionally intelligent. Agents with more emotional intelligence in their interaction have been shown to increase positive affect in users and to decrease negative emotions [25] [26] [27]. As Grover et al. [14] have shown, this can in turn make users feel more productive and focused, and hence more satisfied with their achievements. Zhou et al. noted a similar effect: they found that having an interface can make users more aroused, although on the negative side, users are usually less relaxed when using an interface [28]. Furthermore, the mere presence of the virtual assistant can improve task performance, which is in line with social facilitation theory, stating that people increase their effort in the (imagined) presence of others [29]. Shiban et al. [30] confirmed this, indicating that having an interface can increase the motivation of the user, which in turn has a positive influence on their performance. Finally, based on their meta-analysis, Yee et al. [31] also indicate that the presence of an interface can produce more social interaction, so it seems to be better than having no interface. They also mention increased task performance, although overall effect sizes are relatively small.

To conclude, all these findings suggest that it is better for the user to have an interface for the virtual agent, primarily to improve productivity, positive affect and social interaction.

Human-like characteristics

The next question to focus on is: to what extent should the virtual agent be human-like? This question is interesting in multiple ways. Firstly, we can look at dynamic anthropomorphic characteristics like facial expressions and gestures. Secondly, we can focus on the static appearance of the agent: is it better to closely resemble a real human, to use a cartoon figure, or perhaps to use an animal or robot design? This section addresses the first question.

Non-verbal communication is highly important. It can even replace verbal communication, and when verbal and non-verbal communication conflict, people tend to trust the non-verbal communication more and use it to find the true meaning behind the message [32]. According to the Computers as Social Actors theory, people act towards AI devices as if they were humans, and also expect them to act in a socially appropriate manner [33]. Therefore, non-verbal communication should not be left out of the VA's design process.

Facial expressions

Facial expressions are one important form of non-verbal communication. Including emotional expressions can help enhance the perceived emotional intelligence of the agent [27]. As stated by Grover et al. [14], enhancing the perceived emotional intelligence of the agent can have a positive effect on the perceived productivity and focus of the user. According to Fan et al. [34], high emotional intelligence leads to an increased level of trust, so if we want users to be able to trust the agent, we should design the agent to be highly emotionally intelligent. When facial expressions are added to a virtual assistant, the communication becomes clearer, since the emotions of the agent are easier to read [35]. Facial expressions enrich the interaction between assistant and user and make the agent's behavior appear more natural [36]. Virtual assistants are also better accepted when they have high social intelligence, and they are preferred over non-socially intelligent robots [37]. This reasoning is also connected to the idea of facial imitation: when humans observe someone's expression, they tend to mimic this expression, leading to emotional contagion. This could in turn lead to enhanced social interaction and trust [38]. Since (humanoid) robots rarely have the same facial detail as humans, the level of emotional contagion is expected to be lower. Frith [39], however, described a study in which humans did recognize the robot's emotional expressions, indicating at least some resemblance to humans.

To conclude, facial expressions can be included to improve communication between the virtual assistant and the user and to improve acceptance of the virtual assistant. The effect will likely be largest when ample anthropomorphic features of the agent are present.

Body language

Can adding a (non-static) body to the agent representation have additional advantages in comparison to just showing a face? A study by De Gelder et al. [40] addresses exactly this question. They conclude that both faces and bodies can convey information that is crucial for social interaction. Both however do this in a slightly different way. While faces are useful for conveying subtle emotions and intentions in a close-by setting, body language is important for both close-by interaction as well as (action) intention over a larger distance.

Facilitating communication over a larger distance is of course not relevant for an on-screen virtual agent. Still, body language can enhance emotional appraisal, and when face and body show conflicting emotions, people are biased towards interpreting the emotion as expressed by the body [41]. This shows the impact of body language, but it also shows the importance of always displaying the bodily posture that best fits the current emotional message.

In contrast to the above findings, a review of studies comparing computer-mediated communication with face-to-face communication concluded that conveying emotions is just as easy in a text-based context as it is offline. Especially with the use of emojis [14], online communication is known to show explicit emotion more often than face-to-face interaction [42].

Lastly, by not including a (human) body in the interface, a potential decrease in the user's self-image can also be avoided [43]. This disadvantage can, however, also be avoided by using a humanoid robot appearance without a visibly human-like body shape or gender.

Based on the information above, adding a bodily representation to the virtual agent could potentially be beneficial for better social interaction, but this is not necessarily needed.

Gesturing

Hand gestures, including deictic (pointing) gestures, form another part of non-verbal communication. People use gestures on their own, for example when they cannot talk to somebody because of loud noise or distance, but also in addition to speech. These "co-speech gestures" can enhance language comprehension and have a positive influence on later recall [44]. However, a study by Craig et al. [45] found that deictic gestures do not influence retention, so the actual effects of deictic gestures are uncertain.

Deictic gestures can be especially important when the agent wishes to orient the user to the part of a document or the screen that is currently under discussion [46]. Shiban et al. [30] noted that deictic gestures indeed guide attention, especially when the virtual agent is static.

Lester et al. described the agent's persona as “the presence of a lifelike character in an interactive learning environment” [13]. Implementing deictic gestures is expected to strengthen the virtual agent's persona significantly, which will increase its social presence [47]. In accordance with social facilitation theory, this in turn can increase a user's task performance [29].

The inclusion of hand gestures can improve the user's relationship with the virtual agent. The user will most likely feel more comfortable interacting with an agent that can communicate in more natural ways [48].

Dynamic vs. static

It is also important to consider whether a dynamic agent adds value compared to a static agent. A study by Garau et al. [49] reported that users are less likely to start an interaction with a static agent than with a moving one, since they had a low sense of personal contact with the non-moving agent. However, this might not be a disadvantage for our virtual agent, since users do not necessarily have to start interactions with it.

A paper by Bergmann et al. [50] noted that the 'uncanny valley' effect is stronger for dynamic agents than for static agents. The uncanny valley effect describes how a robot becomes more appealing as it appears more human-like, but only up to a certain point. Beyond that point, people feel uneasy about, or even averse to, highly realistic humanoid robots [50].

Lastly, older people are better at correctly matching emotions when looking at a static expression compared to a dynamic expression. However, for younger people there appears to be no difference between static and dynamic expressions when recognizing emotions [51].

So, a dynamic agent might make users more inclined to start an interaction, but this is not essential for the objectives of our research. Moreover, since our target group mainly consists of younger people (students), for whom there appears to be no difference in recognizing emotions from static or dynamic expressions, a static agent would not make the agent's emotions harder to read. In addition, the uncanny valley effect is stronger for dynamic agents, which also indicates that a static agent can be more successful in avoiding discomfort.

Human-like appearance

Many researchers have attempted to show the beneficial effects of using an agent that closely resembles a human. Generally, many studies support the notion that more human-likeness in a virtual agent can lead to higher perceived social fidelity (the realism of socially relevant attributes) [52] and social presence [53]. Both Davis [47] and Zhou et al. [28] mention that these factors may lead to more positive attitudes towards the agent and to longer and more positive social interactions. However, neither study found significant positive effects of an anthropomorphic appearance on learning outcomes or task performance. Shiban et al. [30], on the other hand, describe how cartoon figures could lead to lower motivational outcomes than more realistic agents. This could, however, be due to the specific design of the cartoon characters in their study. Yee et al. [31] confirm in their meta-analysis that human-like chatbot representations seem to increase positive social interactions with users. It should be noted, however, that the effect sizes were small. Moreover, the effects were only found in subjective measures, where demand characteristics might have influenced people's responses.

Pelau, Dabija & Ene [54] studied the relationship between human-like characteristics of agents and users' acceptance of and trust in those AI devices. They found that anthropomorphic characteristics do not automatically lead to better acceptance of the agents, but that they do when combined with characteristics such as empathy and interaction quality. These findings suggest that user satisfaction will be highest when a virtual agent looks, acts and behaves like a real human.

This notion connects to the reasoning of Chaves-Steinmacher and Gerosa [55], who emphasize how an overly humanized agent can significantly raise user expectations. When the agent then does not fully behave like a human, this very often leads to frustration and annoyance. Furthermore, an agent that fully resembles a human could also cause discomfort [56]. It is therefore important in the design process to give the agent the right identity cues, in line with the agent's capabilities and user expectations.

Identity

Even just with a static appearance, or a so-called avatar, a virtual agent can elicit several feelings in its viewers. These feelings can for example be related to stereotypes or user expectations.

Multiple studies have found that similarity is an important factor for increasing trust and motivation in users. For example, users might consider the agent more trustworthy when its ethnicity and gender match those of the user [57] [58] [59]. Moreover, Rosenberg-Kima, Plant, Doerr & Baylor [60] showed that agents of similar race and gender are most successful in motivating users and helping them raise their self-efficacy. The same study, however, also reported on the stereotypes associated with agents of different races. Such stereotyping is not rare: De Angeli and Brahnam [61][62] described how disclosing the agent's gender can cause users to use more swear words and sexual words during communication, and how chatbots representing Black people led users to engage in racist attacks.

To avoid this kind of negative stereotyping, it might be best to design a virtual agent's physical appearance without obvious disclosure of race or gender. The same authors, for example, also show that a chatbot with a robot embodiment caused users to engage in sex talk significantly less often [61]. As Chaves-Steinmacher and Gerosa [55] conclude in their analysis, it is important in the design process to include the kind of identity cues that are appropriate to the context and capabilities of the agent.

Color

It is widely known that colors can have a profound impact on human feelings and behavior, including aspects of working behavior such as focus and productivity. Since the virtual agent is not present the whole time, it is more important to focus on colors that radiate calmness, trust and positivity. This can have a positive influence on the acceptance of the virtual agent. It might, however, also be beneficial to choose a color that is known for its positive effect on productivity. This way, the agent might be more effective in motivating the user to start (or continue) working.

The colors red and yellow are more arousing than blue and green: the first two can increase the user's heart rate, whereas blue and green can decrease it [63]. Blue and green can focus people inward and are perceived as quieting and agreeable [64]. These colors also generate stable behavior. In addition, pale colors are perceived as radiating calmness and freshness, and appear more pleasant and less sharp [63]. However, in the study by Al-Ayash [63], participants reported feeling less motivated to study because the pale colors made them feel less energetic.

So, it might be best to use a vivid blue and/or green for the agent, to increase the positive feeling of the user toward the agent and their motivation.

Conclusion and recommendation

The above findings generally indicate that it would be wise to include a visual representation of the virtual agent to accompany the dialogue bubble. Moreover, it will be beneficial to add distinctive facial expressions to it, corresponding to the emotions conveyed via text.

When it comes to adding a body, there are both advantages and disadvantages. Since a body is not strictly necessary for social interaction or emotional appraisal, it might not need to be included in the design. However, leaving it out removes the possibility of including (deictic) gestures. To avoid potential incongruence between the emotions shown by the face and the body, as well as negative influences on the user's body image, a good alternative might be to represent the virtual agent as a humanoid robot.

Even though positive attitudes towards the agent and levels of trust might be higher with highly anthropomorphized agents, there is also a significantly increased risk of discomfort, frustration and cognitive dissonance (because of user expectations). To avoid these negative consequences while still keeping the human aspect, a humanoid robot seems to be a good alternative in our situation as well. It is recommended to design this representation without obvious cues of gender or race, to avoid stereotyping.

If a human representation is used, it is best to make it static, because a static appearance has no noticeable disadvantages, whereas a dynamic one risks causing discomfort. With a robot representation, both static and dynamic representations are possible. A slightly moving image might in this case cause some more positive affect in users, but it is uncertain whether this effect is large enough to outweigh the effort of implementing it.

Regarding the color of the agent, it would be best to make it vivid green or blue, or a combination of these colors, as this may increase the user's positive feeling toward the agent and their motivation.

Analysis Survey 1

This section focuses on the main topics we are interested in. All parts are extensively analyzed using the statistics application Stata. The Stata code for the analysis of survey 1 can be found in Appendix A.

Interest

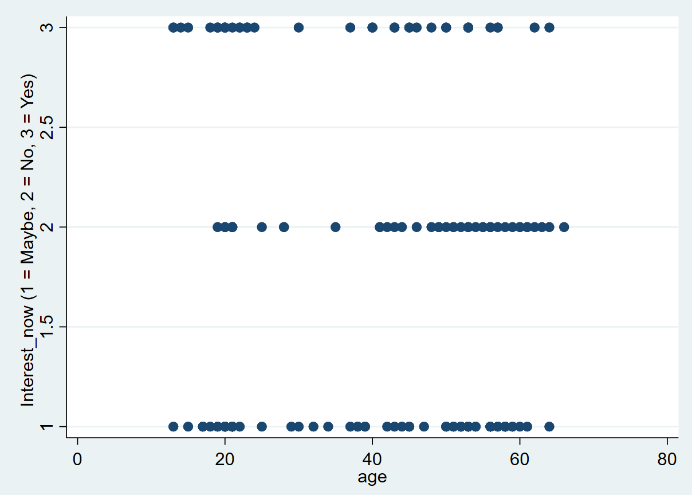

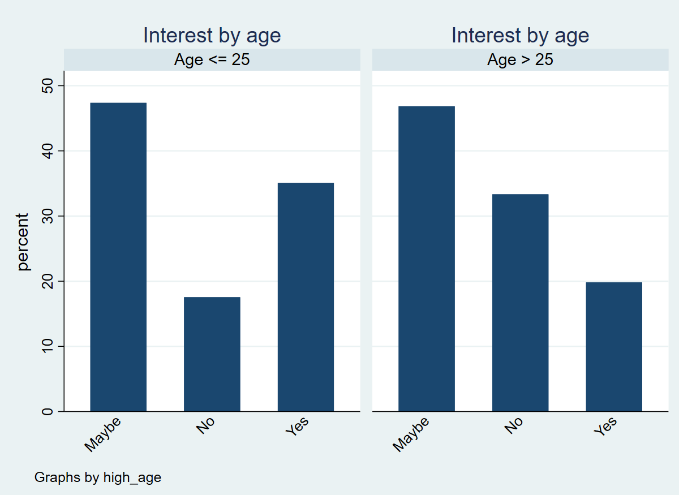

The first part of the analysis was exploratory: respondents were asked whether they would be interested in having a virtual agent as described in the survey. The research question for this first part was: 'What are the differences in interests and preferences between people with different demographic characteristics?'

When it comes to interest in the VA, almost half of the respondents expressed their doubts. They did not immediately reject the idea, but thought their choice depended on for example the situation and the functionality of the agent. When looking at age, people that were not sure were distributed very evenly. A Wilcoxon rank-sum test however shows a significant difference in age for people who say they would be interested and people who say they are not (p=0.001). It seems younger people are more open to the idea of having a virtual agent.
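The Wilcoxon rank-sum test compares two groups by ranking all observations together and checking whether one group's ranks are systematically higher than the other's. As an illustration only, the sketch below implements the large-sample normal approximation with a tie-corrected variance in plain Python. The function names and the example ages are hypothetical and not taken from the survey; the actual analysis was done in Stata (Appendix A).

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Returns (W, z, p), where W is the rank sum of sample x.
    """
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    w = sum(ranks[:n1])
    expected = n1 * (n + 1) / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (w - expected) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return w, z, p


# Hypothetical ages of "interested" vs "not interested" respondents.
interested = [19, 21, 22, 22, 23, 24, 26]
not_interested = [24, 31, 38, 45, 52, 57]
w, z, p = rank_sum_test(interested, not_interested)
print(f"W = {w}, z = {z:.3f}, p = {p:.4f}")
```

A negative z means the first group (here, the interested respondents) tends to have lower ranks, i.e. is younger. For very small samples an exact test would be preferable to the normal approximation.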

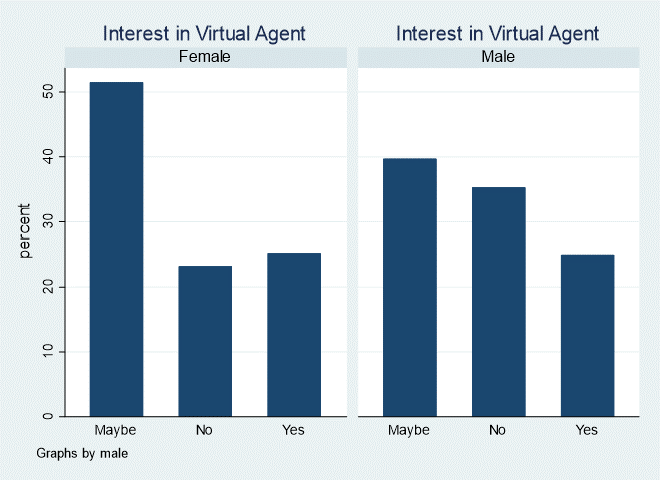

Looking at gender, around 25% of both males and females say they are interested. Even though Figure X below suggests that females more often consider the idea of having a virtual agent, this difference is not significant (χ² test, p = 0.07).
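The χ² test of independence compares the observed counts in a gender × answer table with the counts expected if gender and answer were unrelated. The minimal sketch below computes Pearson's statistic and its p-value in plain Python, evaluating the χ² tail probability through a series for the regularized incomplete gamma function. The counts are hypothetical, not the survey's, and the helper names are illustrative.

```python
import math


def chi2_sf(stat, df):
    """P(X >= stat) for a chi-square variable with df degrees of freedom.

    Uses the series for the regularized lower incomplete gamma function
    P(df/2, stat/2); adequate for the small statistics typical of surveys.
    """
    if stat <= 0:
        return 1.0
    s, x = df / 2.0, stat / 2.0
    term = 1.0 / s
    total = term
    k = 1
    while term > total * 1e-15:
        term *= x / (s + k)
        total += term
        k += 1
    lower = math.exp(s * math.log(x) - x - math.lgamma(s)) * total
    return max(0.0, 1.0 - lower)


def chi2_independence(table):
    """Pearson chi-square test of independence for a table of counts."""
    row_sums = [sum(row) for row in table]
    col_sums = [sum(col) for col in zip(*table)]
    n = sum(row_sums)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_sums[i] * col_sums[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_sums) - 1) * (len(col_sums) - 1)
    return stat, df, chi2_sf(stat, df)


# Hypothetical counts: rows = male/female, columns = yes/no/I don't know.
counts = [[12, 18, 17],
          [11, 9, 25]]
stat, df, p = chi2_independence(counts)
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.3f}")
```

The approximation assumes reasonably large expected counts per cell; with very small cells, Fisher's exact test is the usual alternative.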

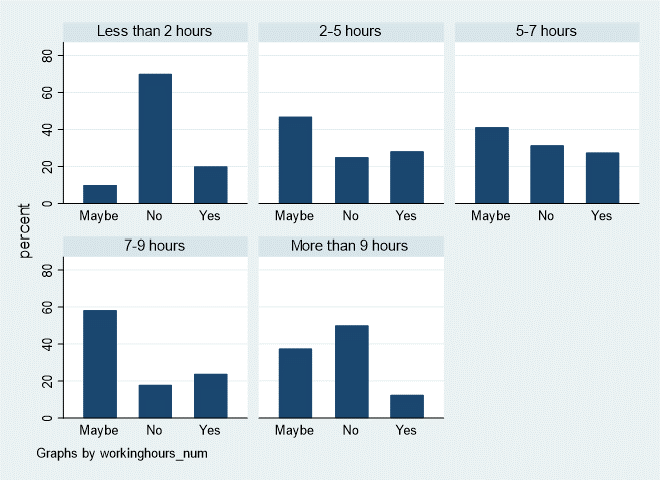

From Figure 4 we can also conclude that people who use their laptop around 7-9 hours a day are most open to the idea of a virtual agent. Least interested are people who use their computer less than 2 hours or more than 9 hours a day; respondents in both groups indicated that they do not need such a virtual agent.

The open question of whether people would be interested in Coco, and why (not), yielded a wide range of answers. Quite a few people worried about privacy, the idea of Big Brother, and employers gaining insight into their data. It was also mentioned that people do not want to become too dependent on the agent. Moreover, some people wanted to be able to test the program first and needed more information. Finally, many people gave suggestions of what they wished Coco would be able to do. Some examples are listed below:

- Program should not be too distracting, like an RSI program

- People wish to be able to ignore the agent and keep scrolling on Instagram for example

- Be able to pause the pop-ups (e.g. during a lecture, while teaching or when wanting to focus), or define blocks of time in which the VA may pop up and blocks in which it won't

- How to maintain flexibility? Needs can differ daily

- Create a rhythm

- AI for making your planning

- Combine multiple apps in the VA

- Maybe not always block certain sites, mostly help with planning

- Trouble with starting work, instead of continuing to work

For the open question of why people would (or would not) be interested in Coco after the corona crisis, we examined the relation between the closed question (yes/no/I don't know) and the open answers. Most people who answered no thought they did not need the help of a virtual agent. Other reasons were privacy concerns and the expectation that a VA would be distracting. People who answered yes mainly reasoned that the corona crisis does not affect the usefulness of Coco. Other reasons were that Coco could be a friend, could help with a daily rhythm, could give feedback, and could make sure no tasks are forgotten. People who answered I don't know were mostly unsure about how exactly Coco would function and improve their productivity, and about what the circumstances after corona would be.

- Temporarily shut off Coco

- Privacy regarding employers’ insight

- Explain how Coco makes decisions so users can improve their own behavior without becoming dependent on the VA

In conclusion, there are four main findings from the Stata analysis. First of all, around 75% of the respondents would potentially be interested in a virtual productivity agent, although the open questions revealed some concerns about the privacy and effect of Coco. Moreover, younger people (age <= 25) express interest more often than older people (age > 25). Furthermore, the interested group consists of roughly equal numbers of males and females. Finally, people who work on their computer for 2-9 hours a day seem to be interested most often.

Corona impact on productivity and health

The second part of the analysis was about the influence of corona on productivity and health. Several research questions have been formulated:

Do people feel that their productivity, including concentration and motivation have decreased because of the corona virus?

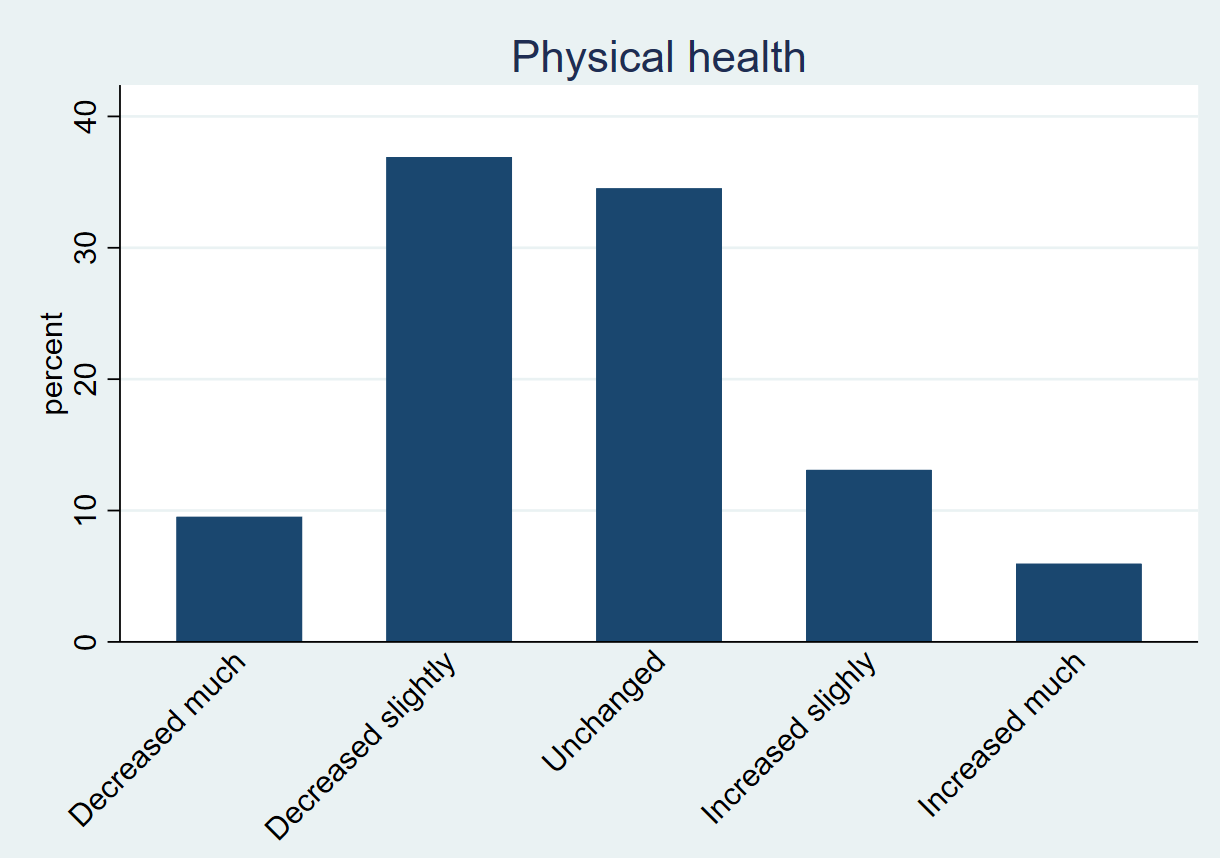

Do people feel that their physical health has decreased because of the corona virus?

Do people feel that their mental health has decreased because of the corona virus?

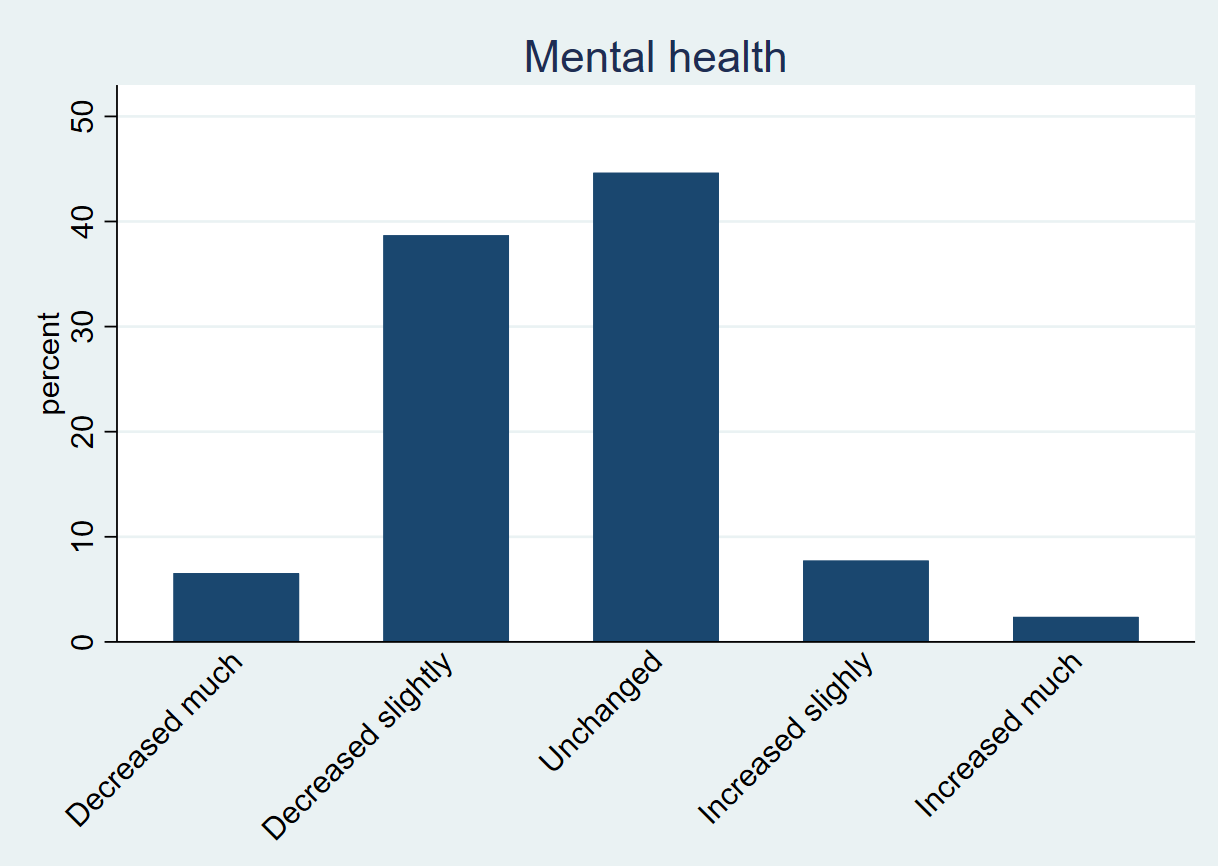

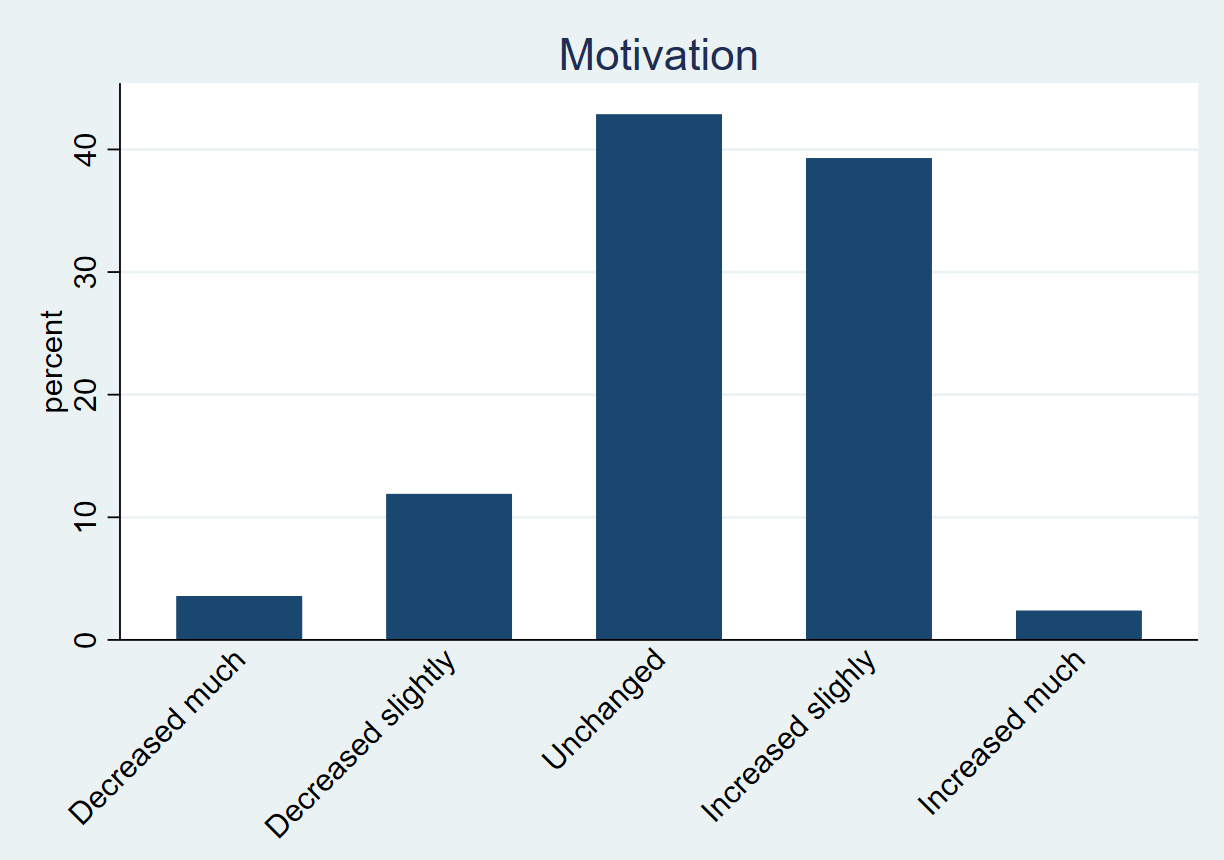

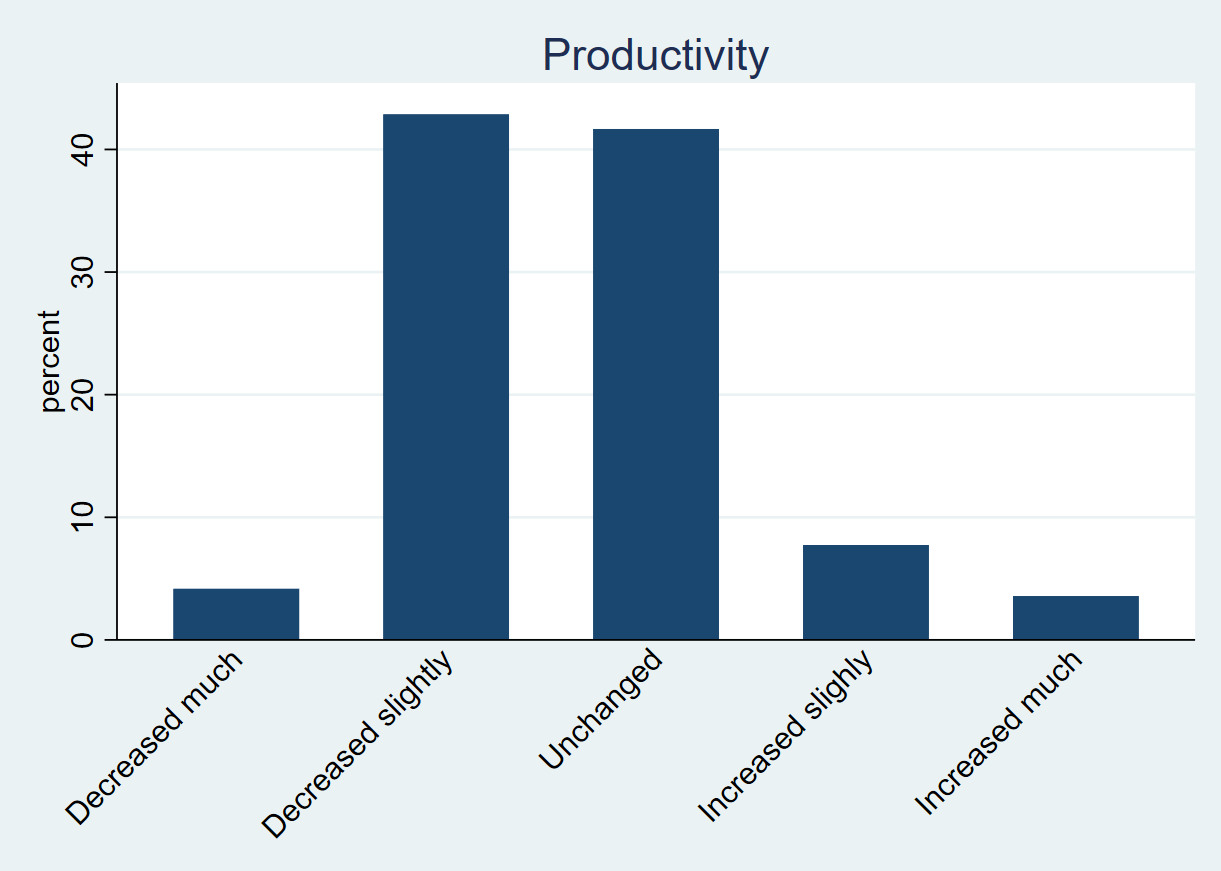

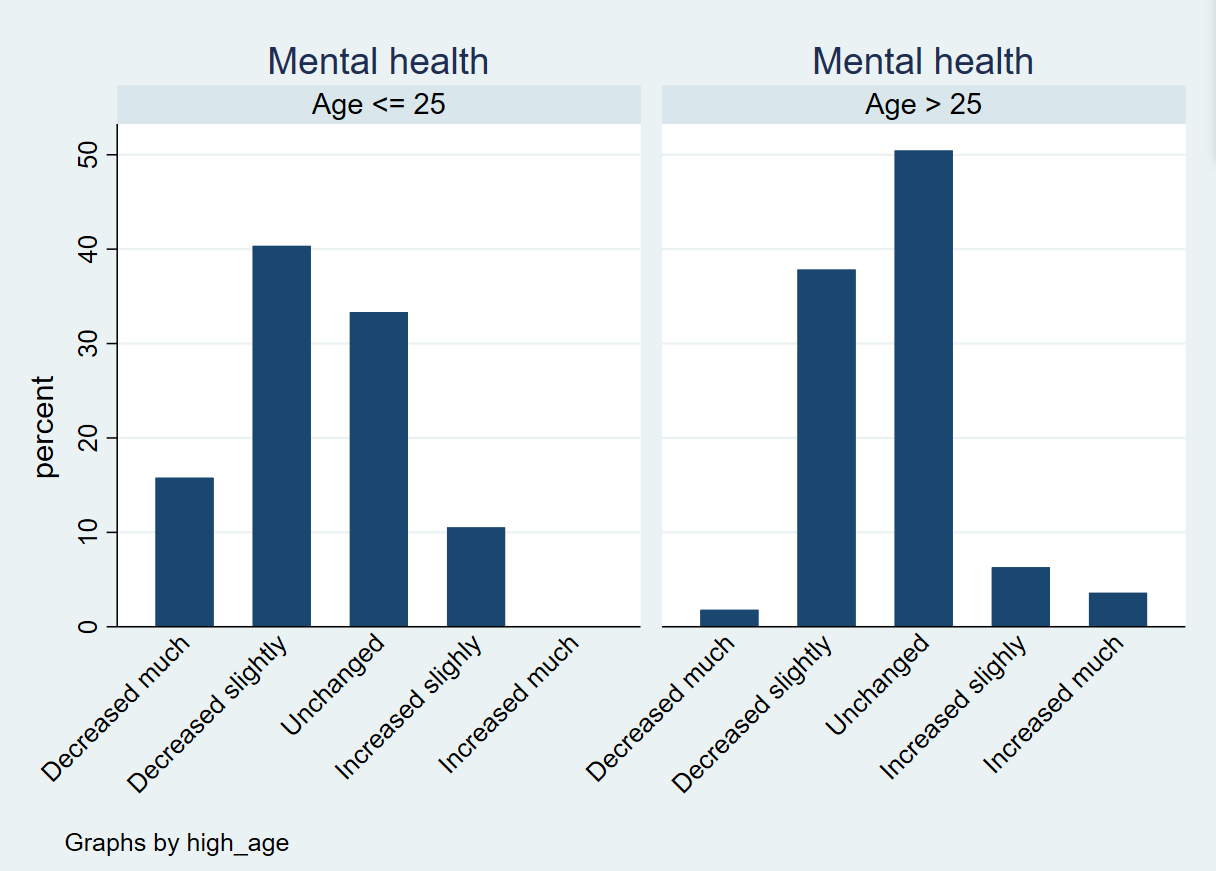

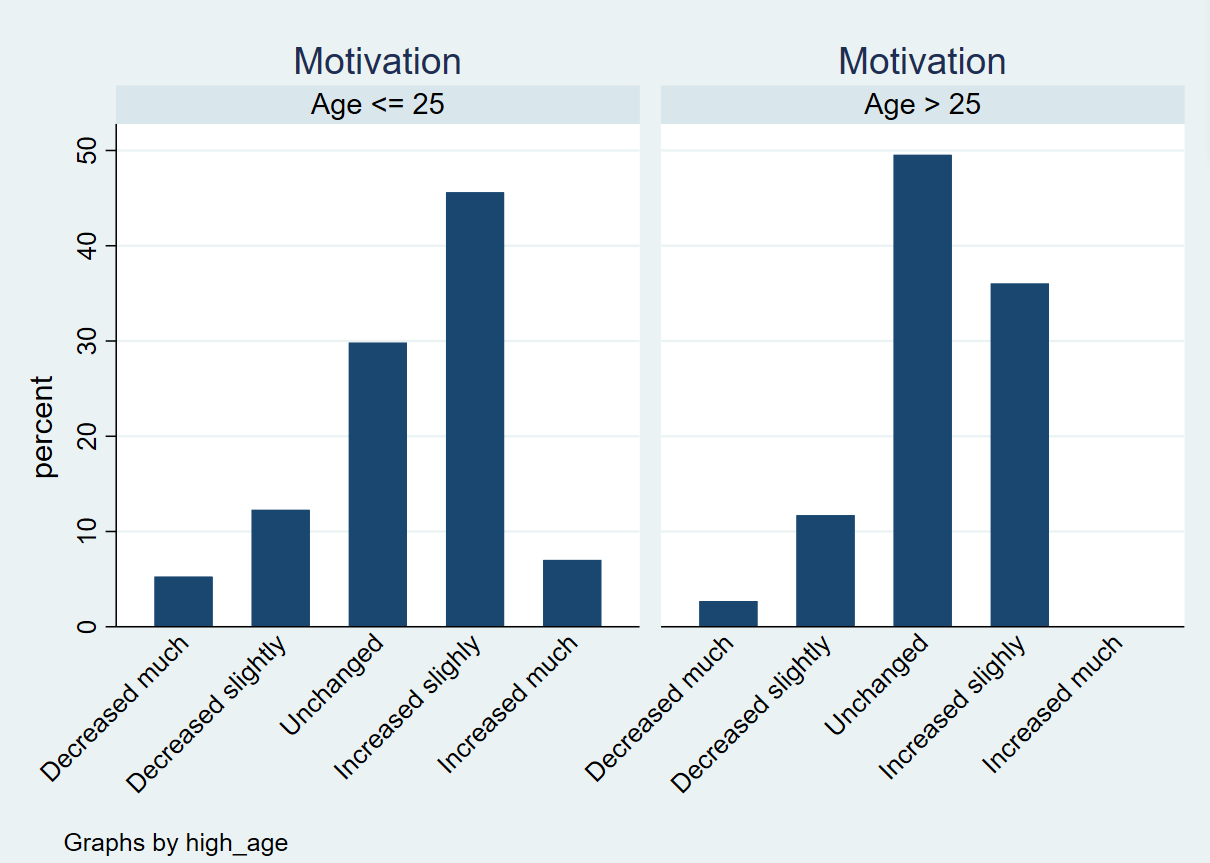

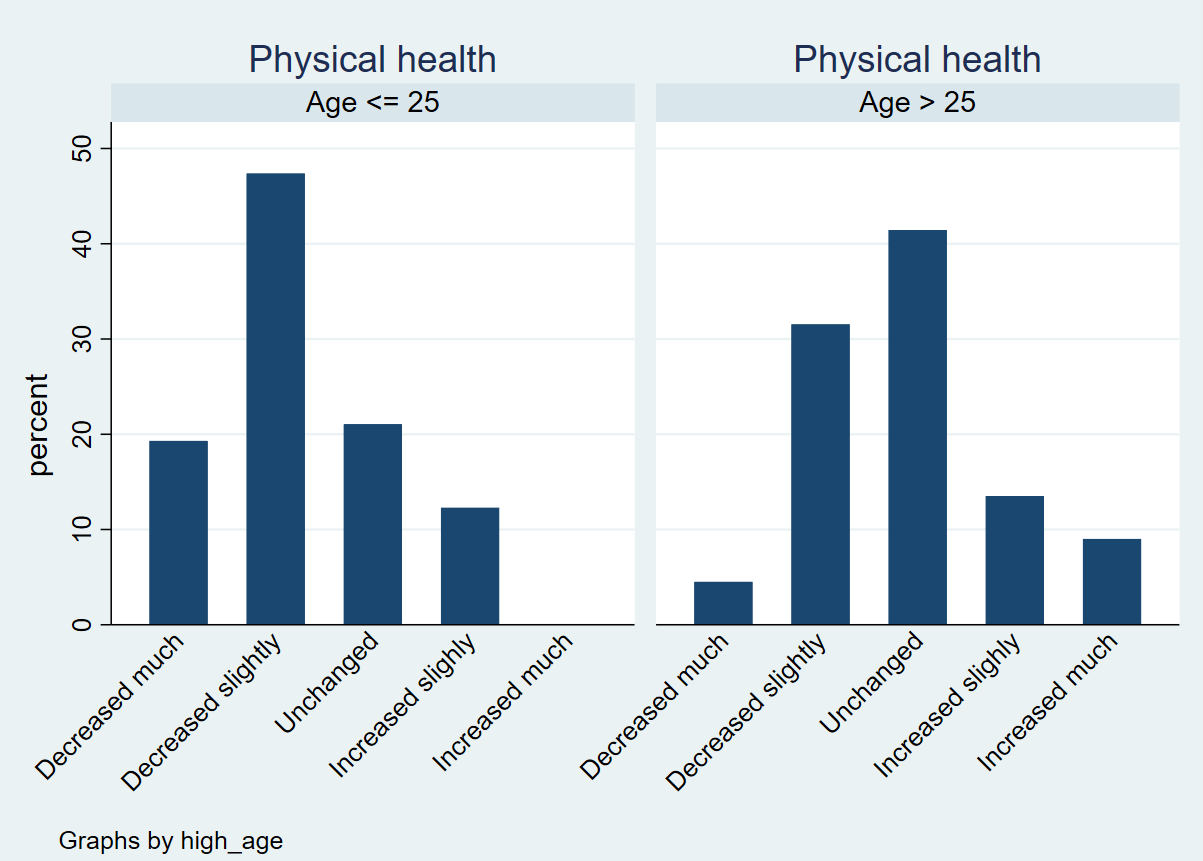

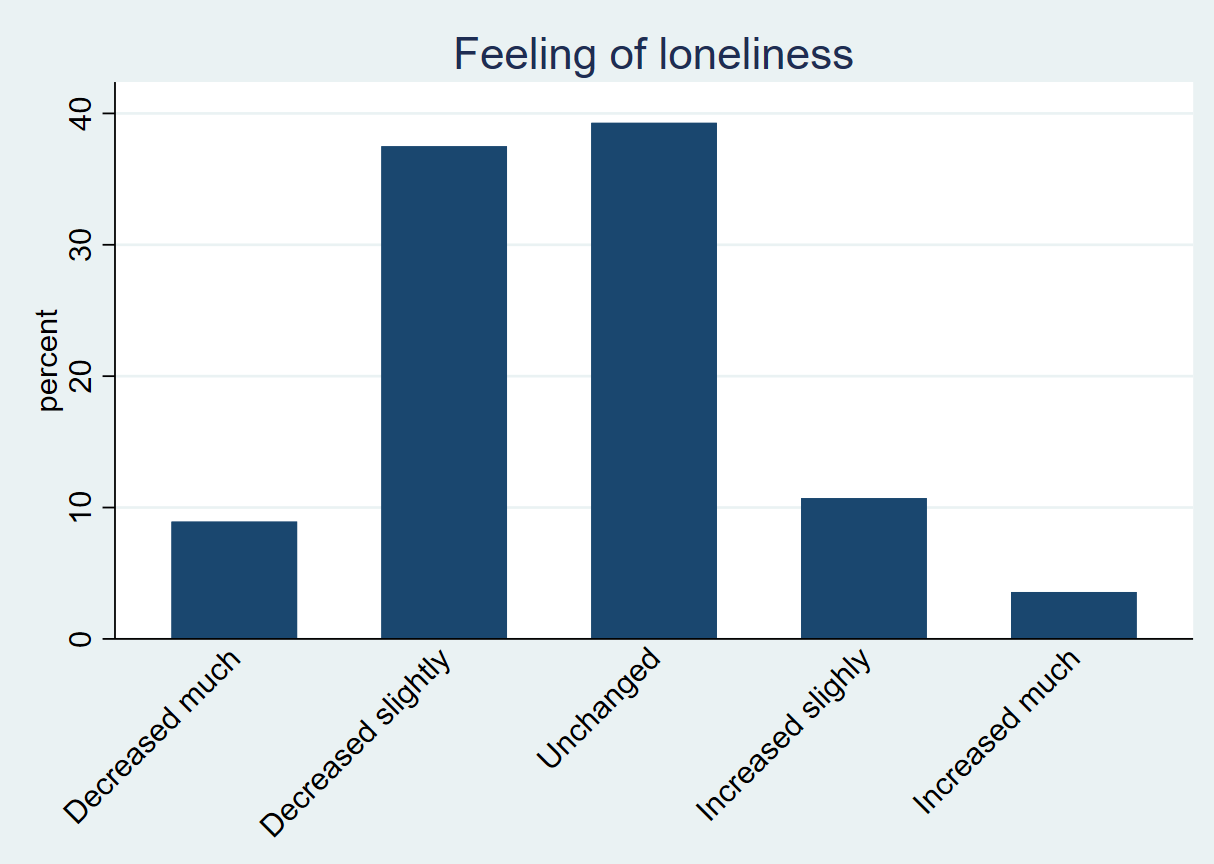

Considering the effect of COVID-19 on people's perception of their work situation, several observations can be made. Generally, mental health, loneliness and productivity are the three areas that have been negatively impacted by the corona crisis the most.

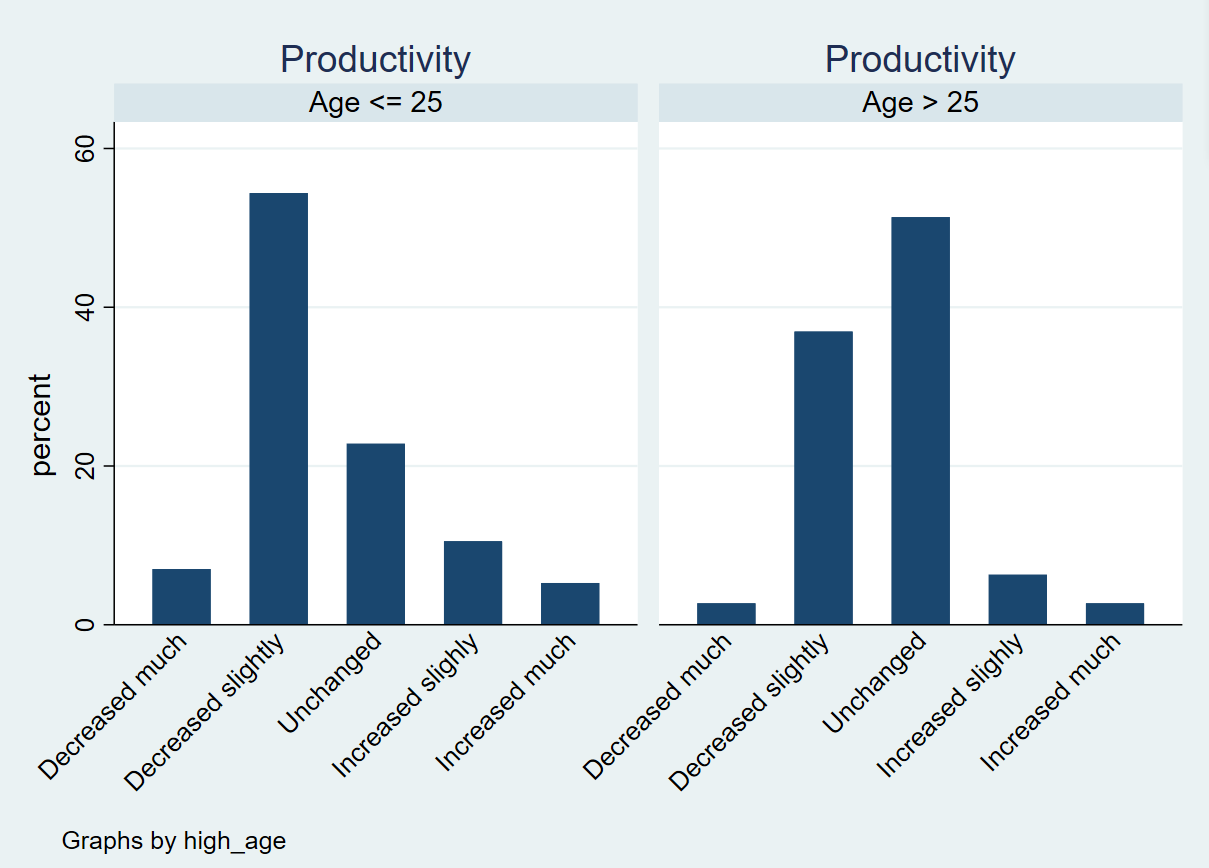

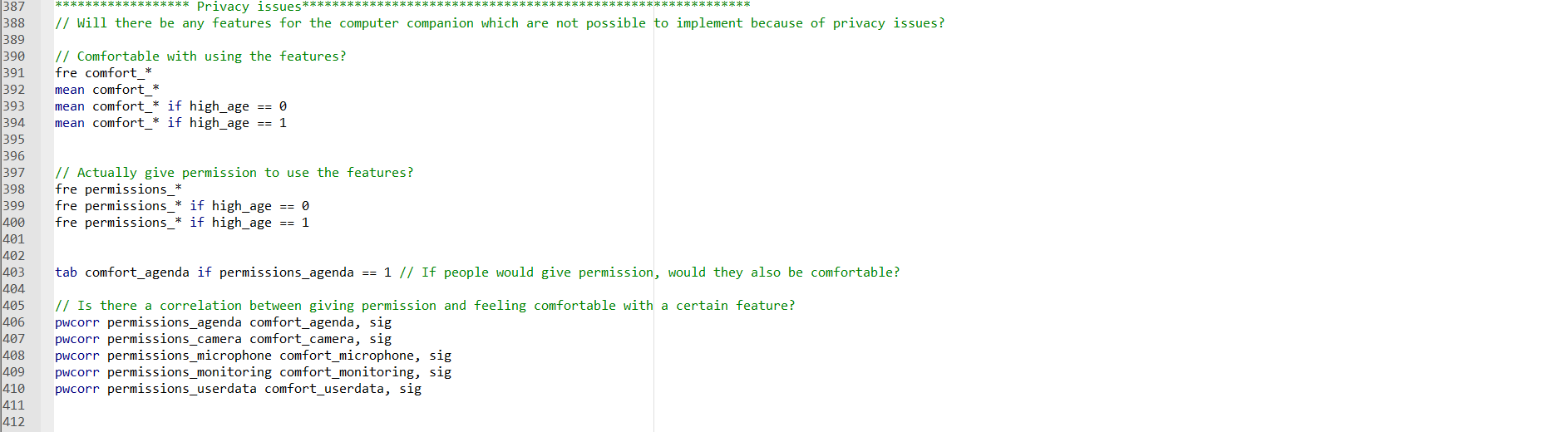

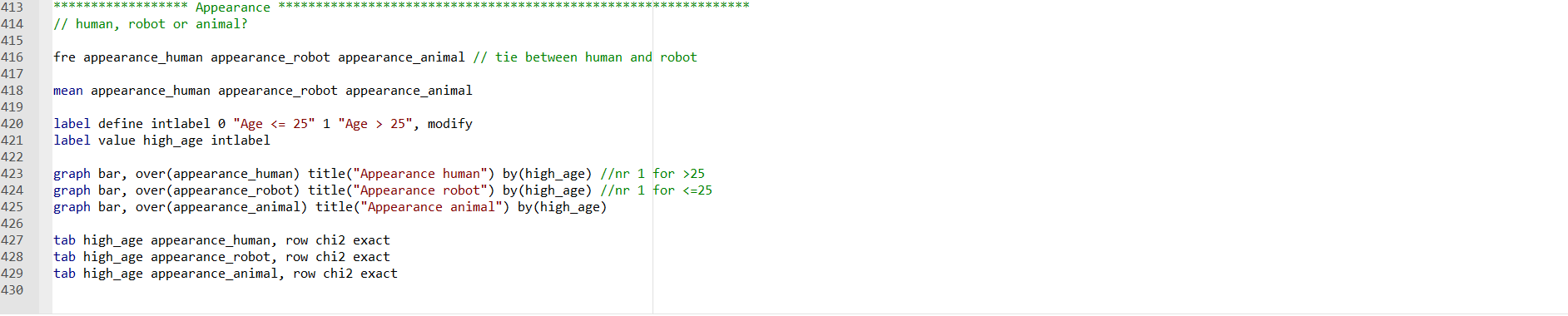

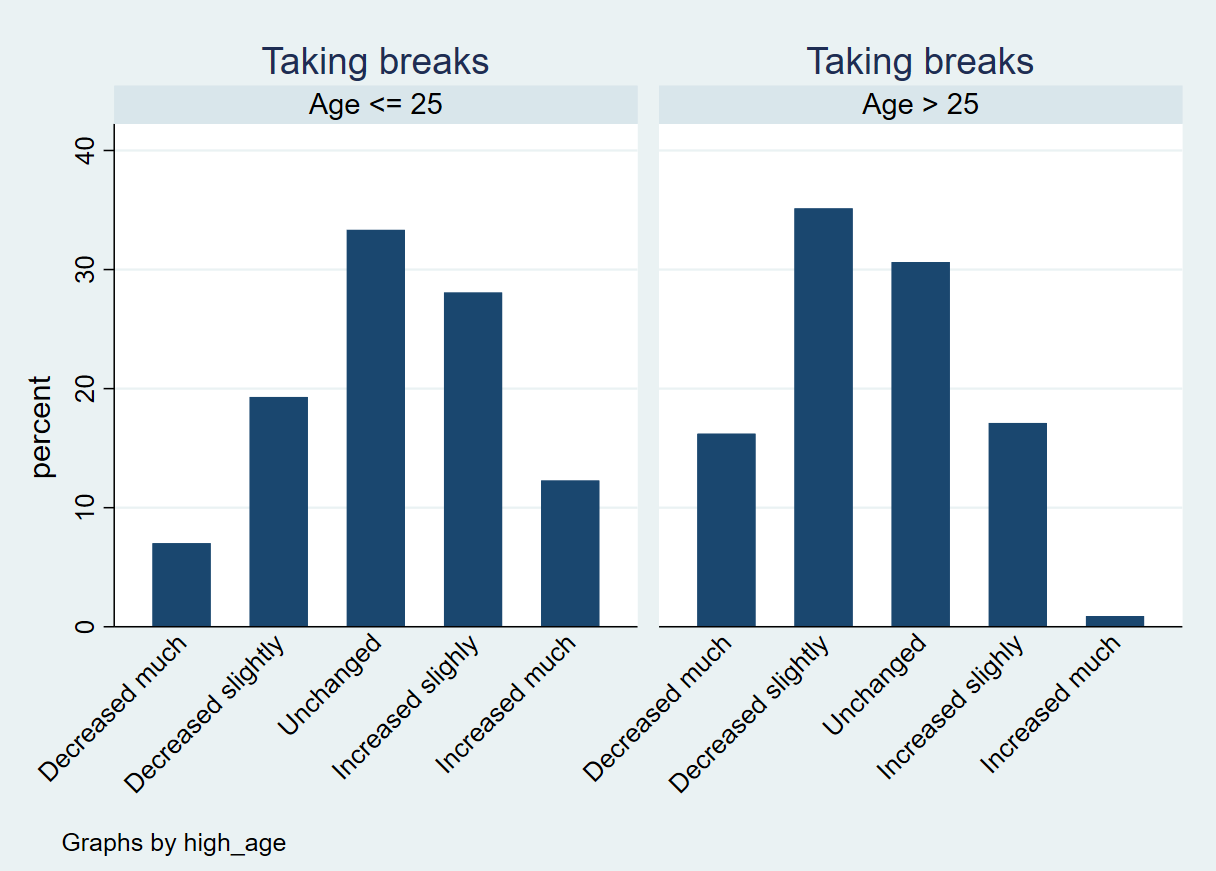

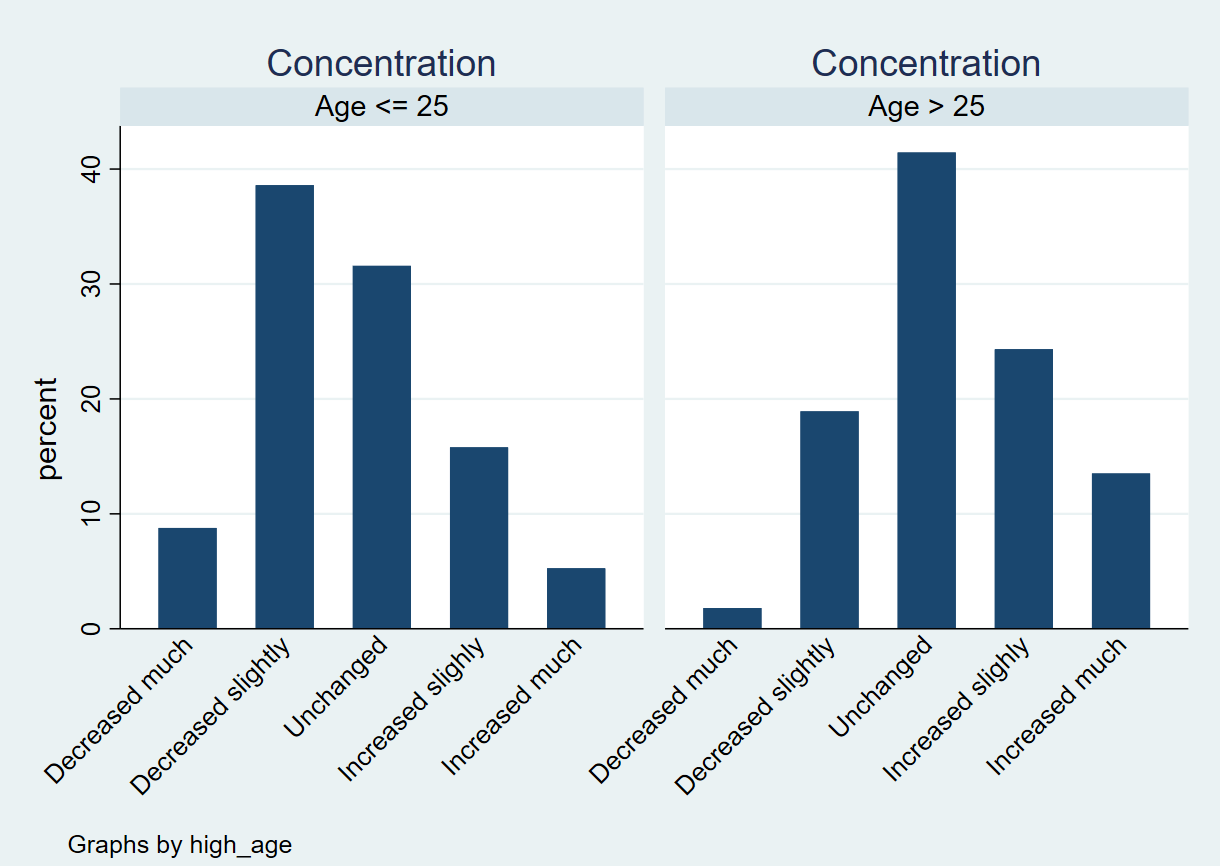

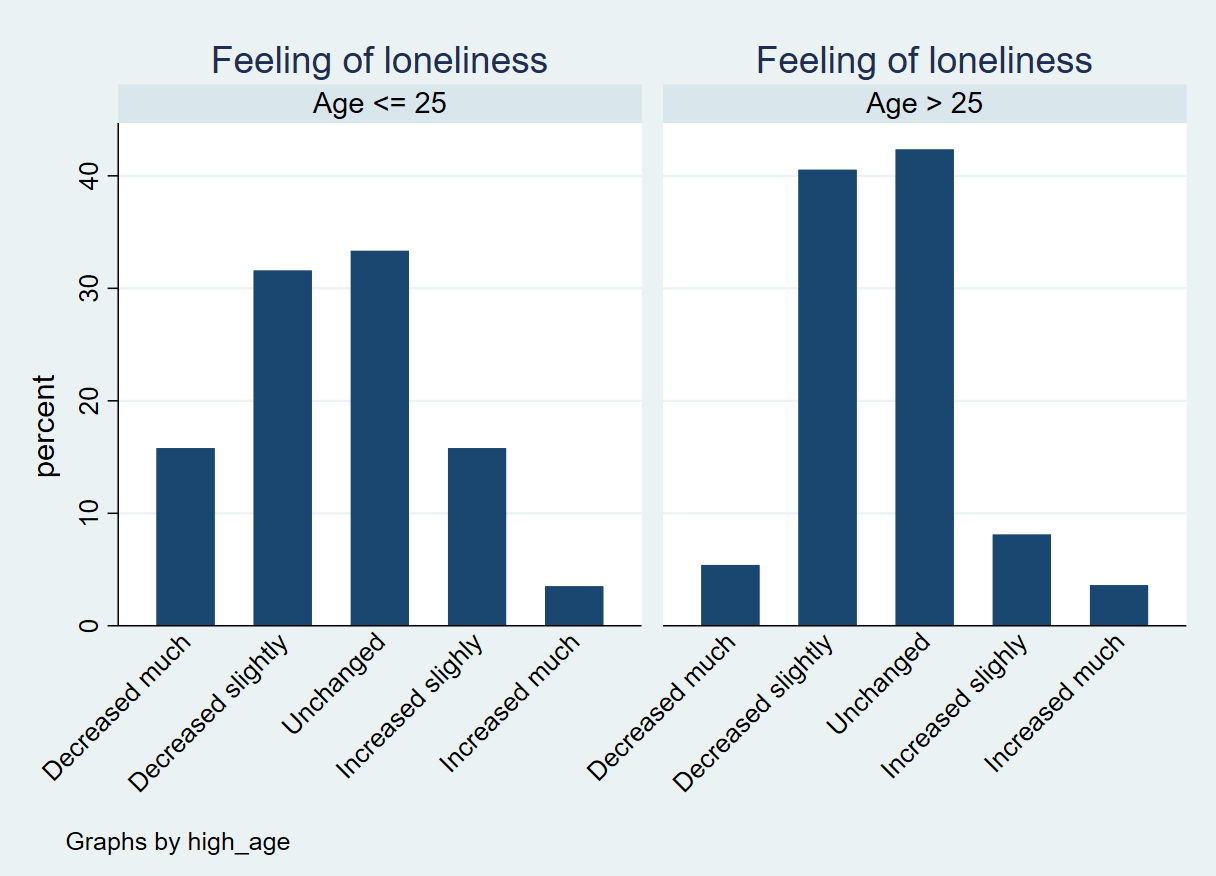

Since this questionnaire was also meant to determine which user group would need the help of a VA most, the following aspects are also analyzed by age. Generally, people older than 25 take fewer breaks since COVID-19, whereas younger people tend to take more breaks. Younger people generally feel their concentration has decreased since COVID-19, while people older than 25 generally say it has increased. However, both groups state that their productivity has decreased. Although both groups state that their mental health, physical health and feeling of loneliness have decreased since COVID-19, the younger group reports a greater negative impact.

Table 1 shows the means of the two groups to support these statements. A mean below 0 indicates an average decrease, and a mean above 0 an average increase. The difference between the two groups is significant if the p-value is smaller than 0.05. All this suggests that it might be best to focus on people aged 25 or younger for this research and for the development of the computer companion.

Table 1: The means for several corona impact measurements and the differences between the different age groups.

| Aspect | Mean (<= 25 years) | Mean (> 25 years) | p-value |
|---|---|---|---|
| Taking breaks | 0.19 | -0.49 | 0.0001 |
| Concentration | -0.30 | 2.9 | 0.0004 |
| Feeling of loneliness | -0.40 | -0.36 | 0.7745 |
| Mental health | -0.61 | -0.28 | 0.0117 |
| Motivation | 0.37 | 0.19 | 0.1868 |
| Physical health | -0.74 | -0.09 | 0.0001 |
| Productivity | -0.47 | -0.31 | 0.2165 |

Regarding the open question 'Are there other activities than the ones mentioned above that you often experience to negatively impact your productivity?', around 20 people mentioned that phone calls, applications like Netflix, and message platforms like Microsoft Teams and WhatsApp disturb their productivity. Besides that, 15 participants mentioned that their family and other people in their household negatively impact productivity. Children for example ask for a lot of attention when parents are working at home, and they need to be helped with homeschooling (unfortunately, Coco will not be able to solve this problem). Besides these two main impact factors, some participants also mentioned no breaks, lack of physical contact, the mailman, spontaneous household chores, unstructured schedules, bad sleep quality, a non-stimulating workplace, ambient noise, and the monotony of the day as things that negatively impact productivity.

To summarize, across all respondents productivity, physical health and mental health have decreased. Generally, concentration has not been affected by COVID-19, and respondents even indicated an increase in motivation. Once the respondents were grouped by age, a clear difference in responses could be seen. People aged 25 and younger indicated a slight decrease in concentration, while people over 25 indicated a slight increase. Even though both groups indicated a decrease for most other aspects, people aged 25 and younger indicated a larger decrease in mental health, physical health and productivity. Motivation increased for both groups, but more so for the younger group.

Helpful tasks for a virtual agent

The third part of the analysis concerned the tasks that users would find useful for a virtual agent. The research question for this part is: Which tasks are most important for a computer companion to be able to perform?

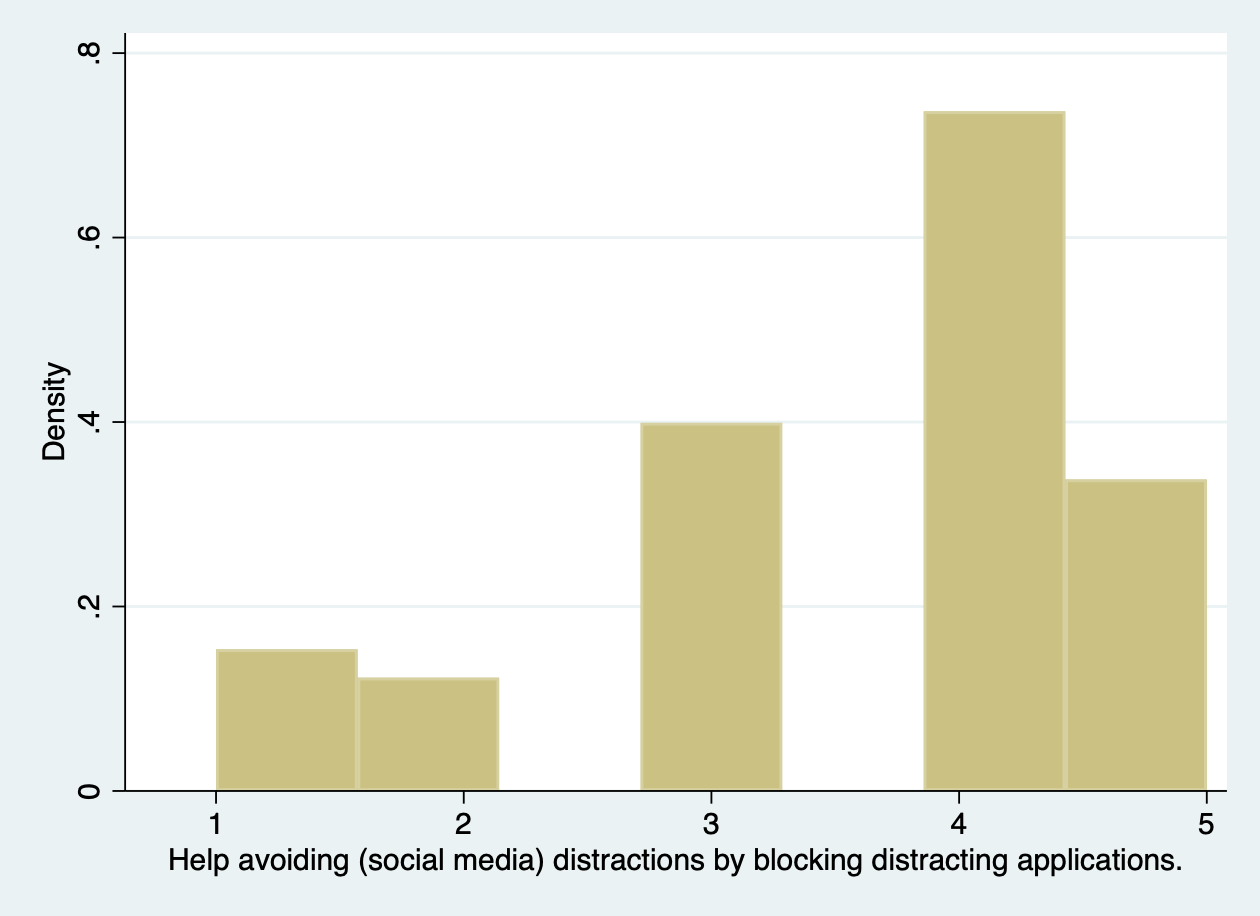

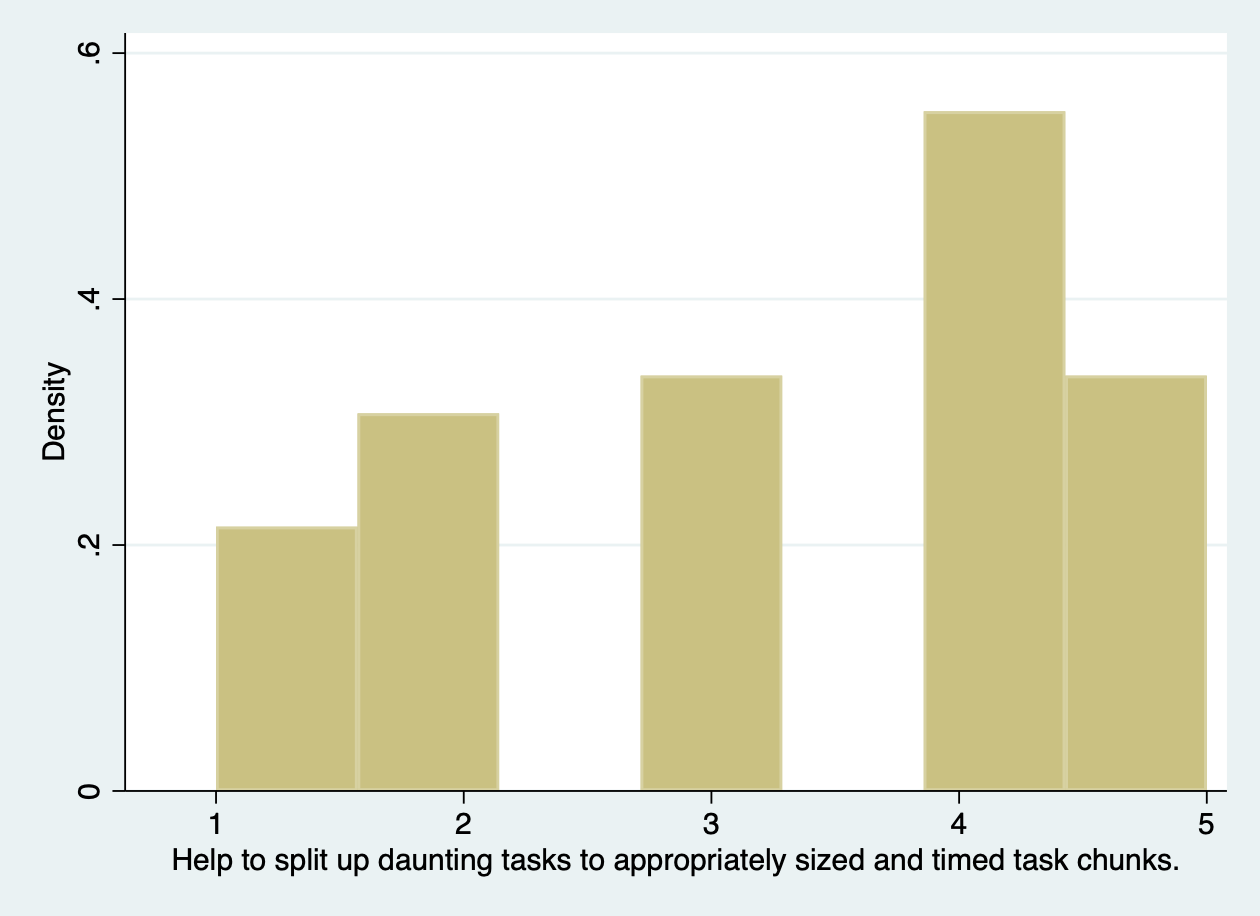

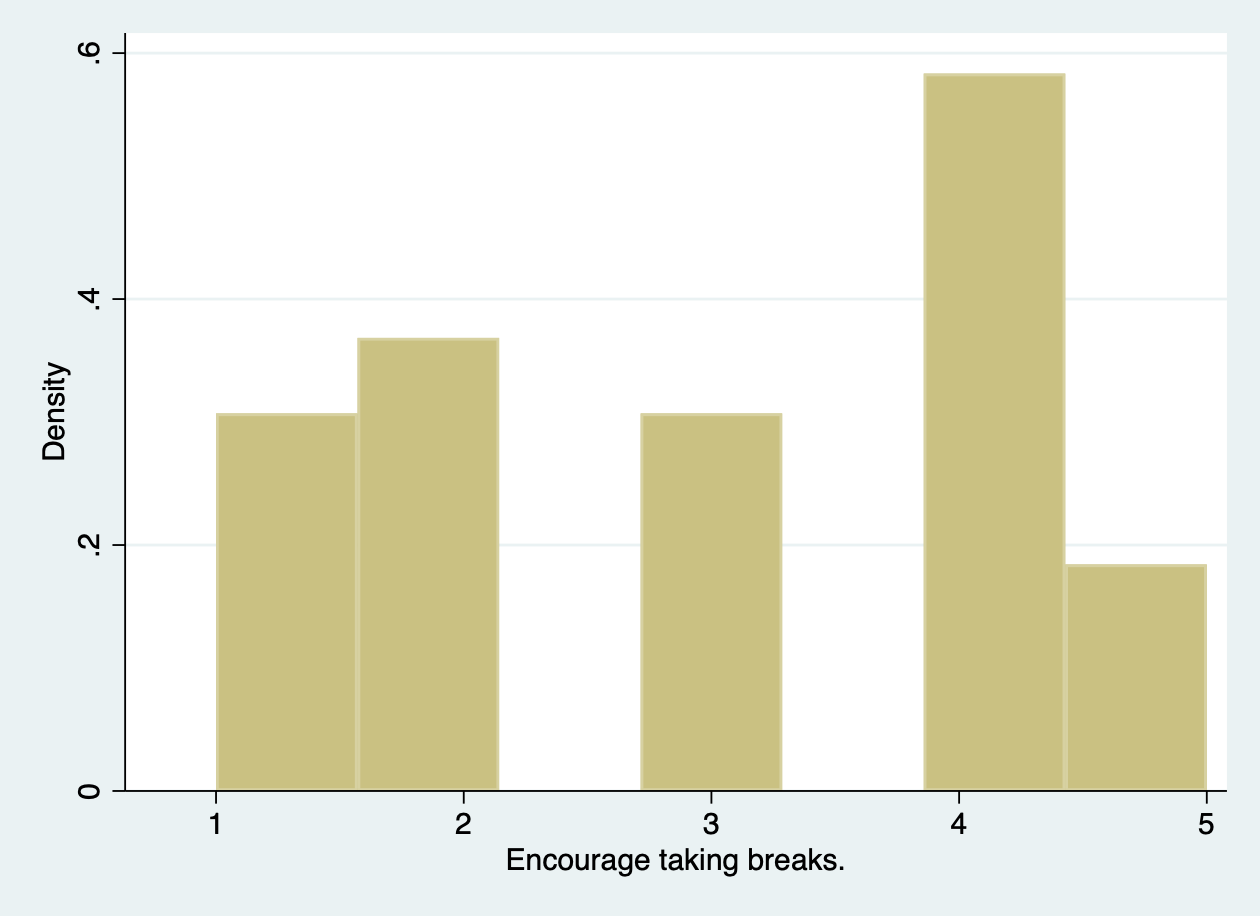

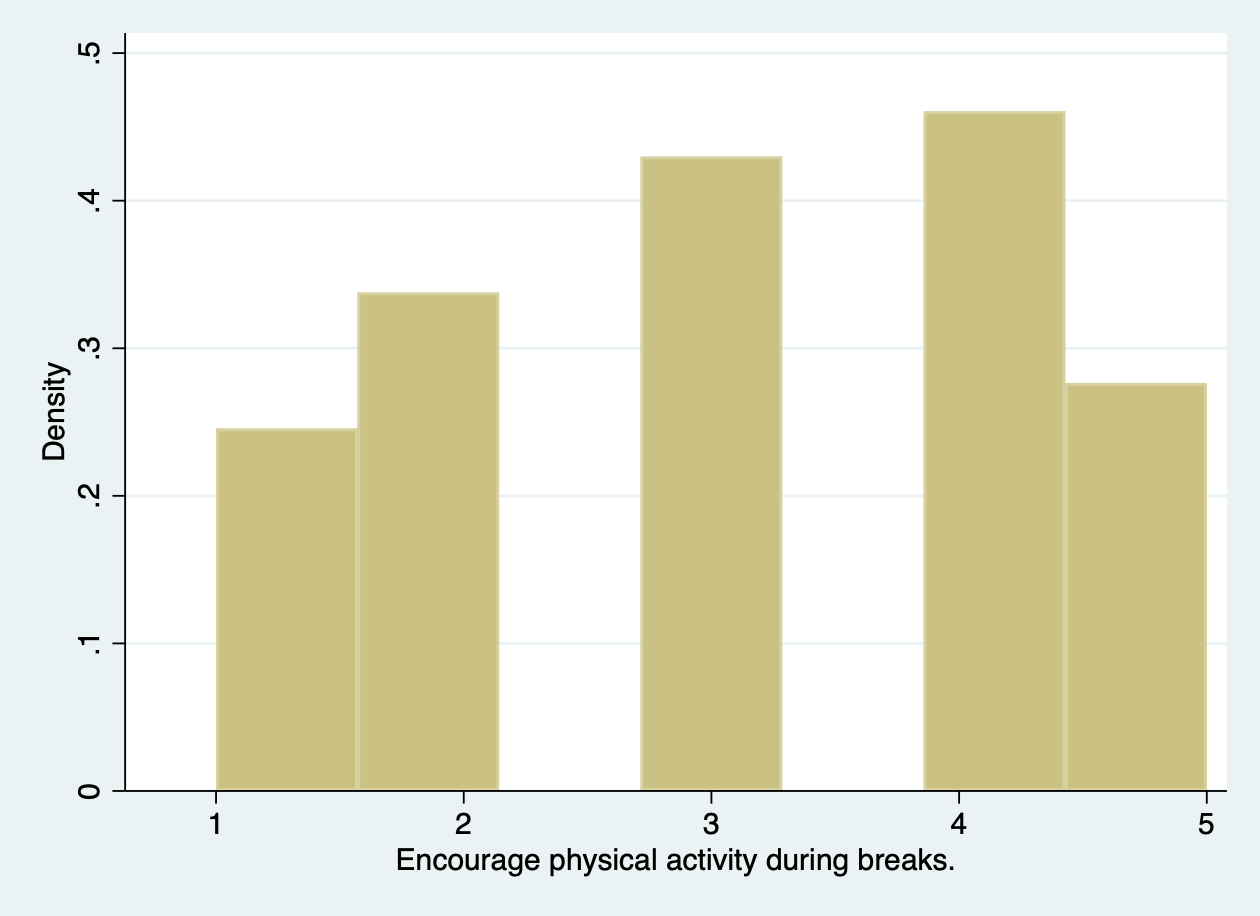

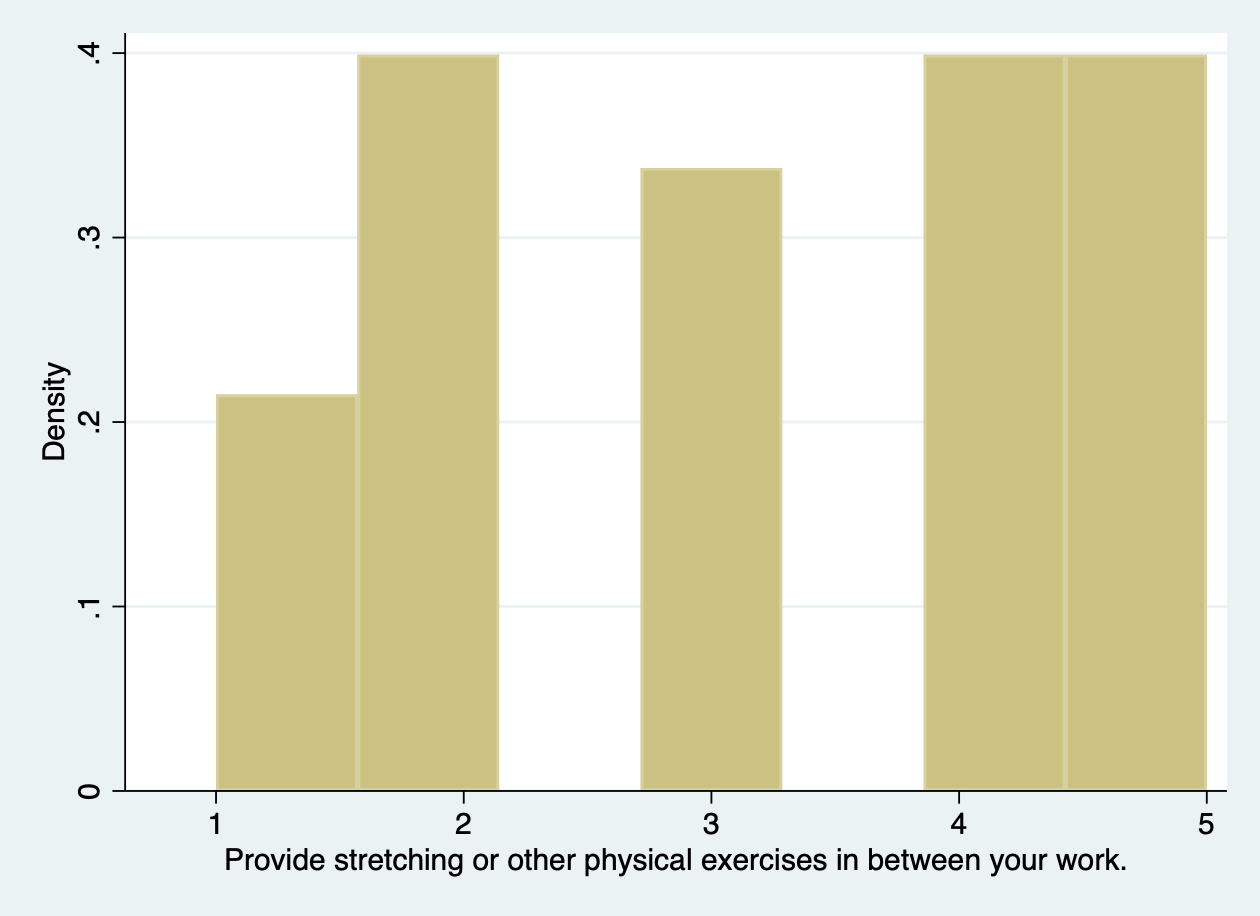

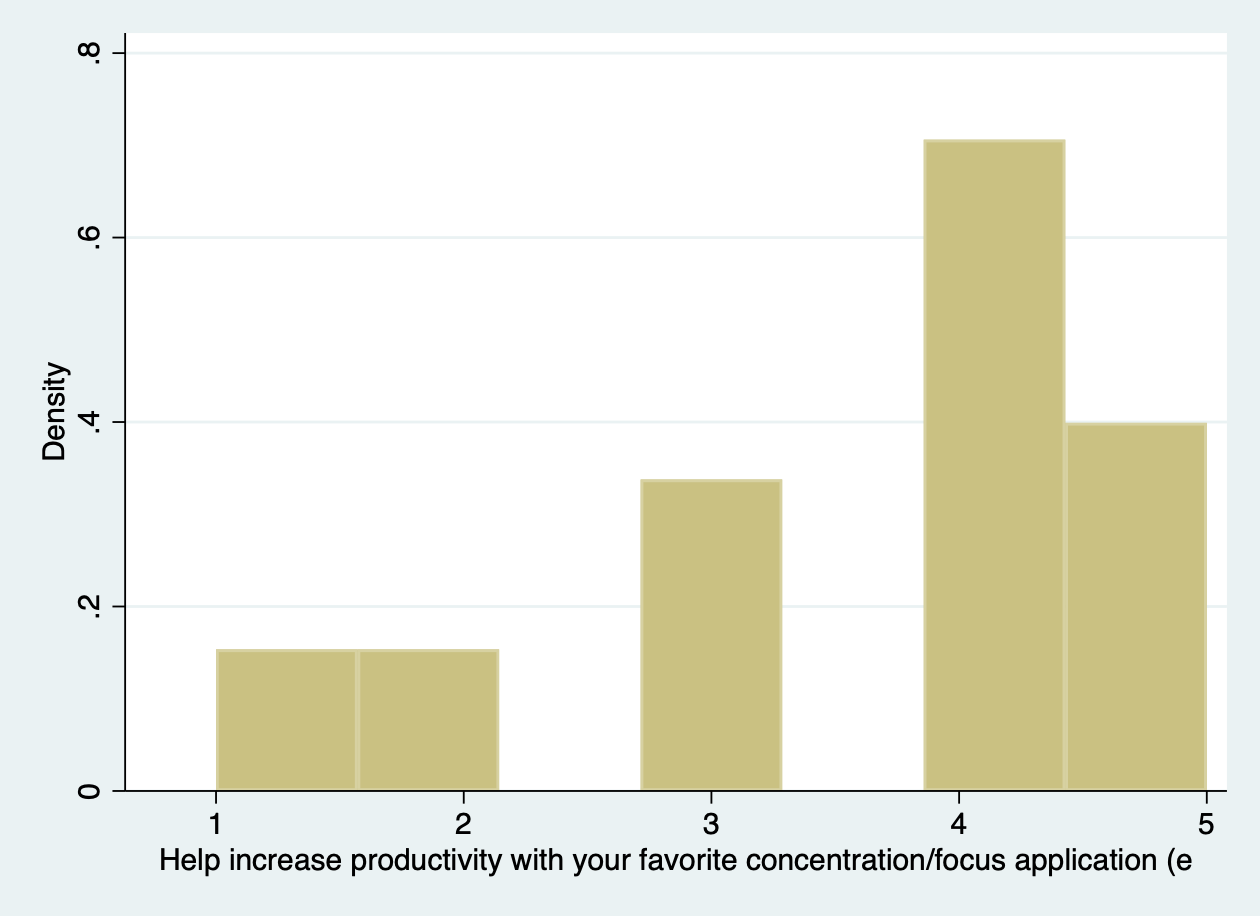

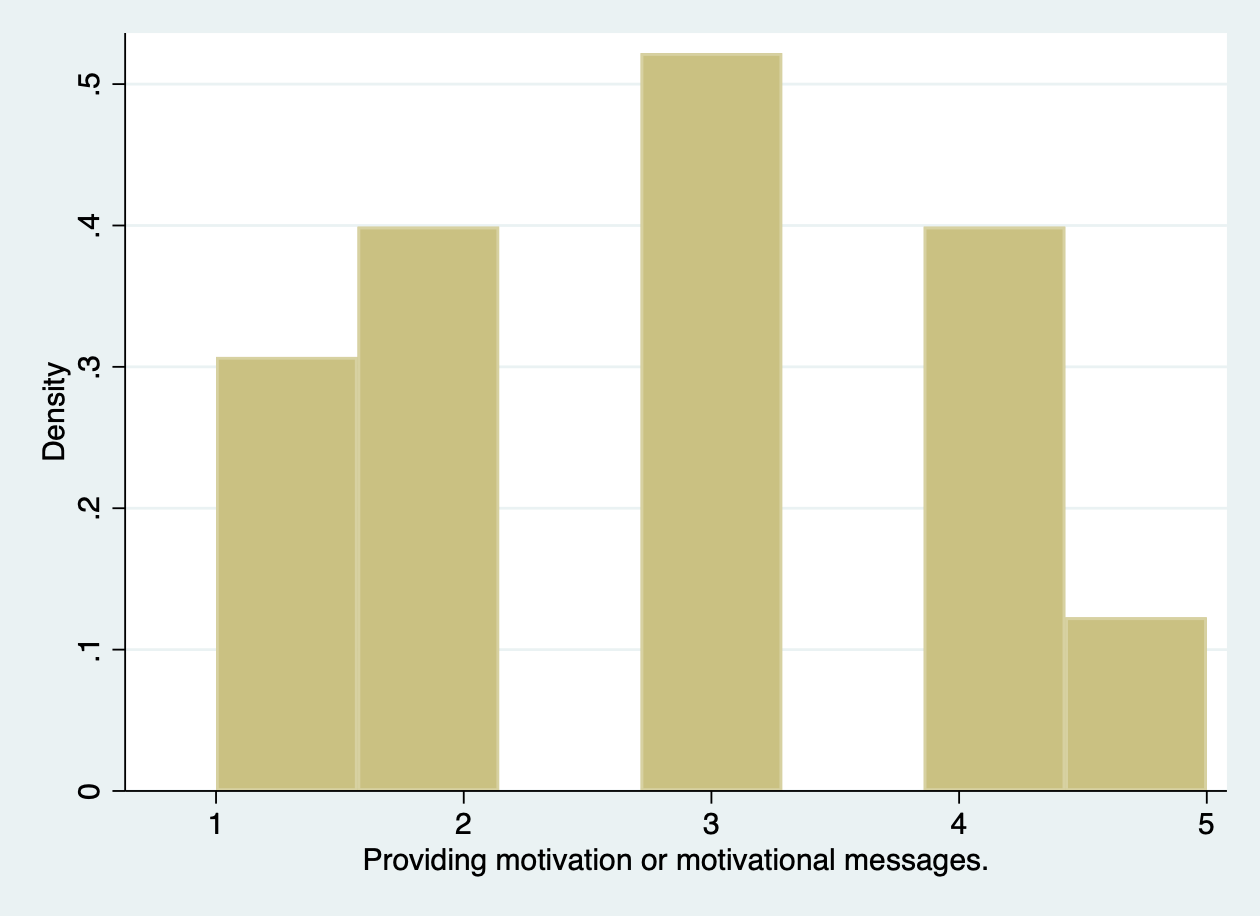

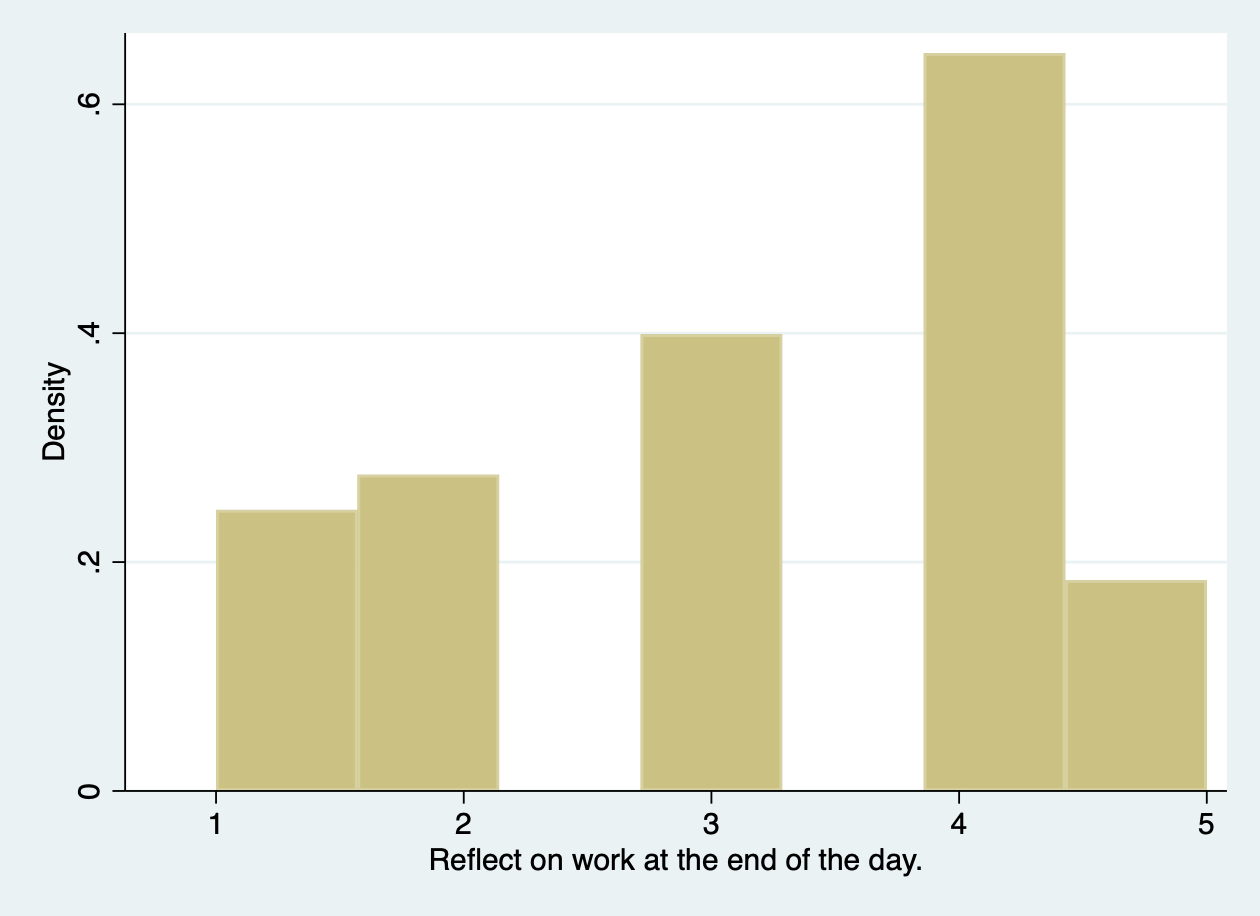

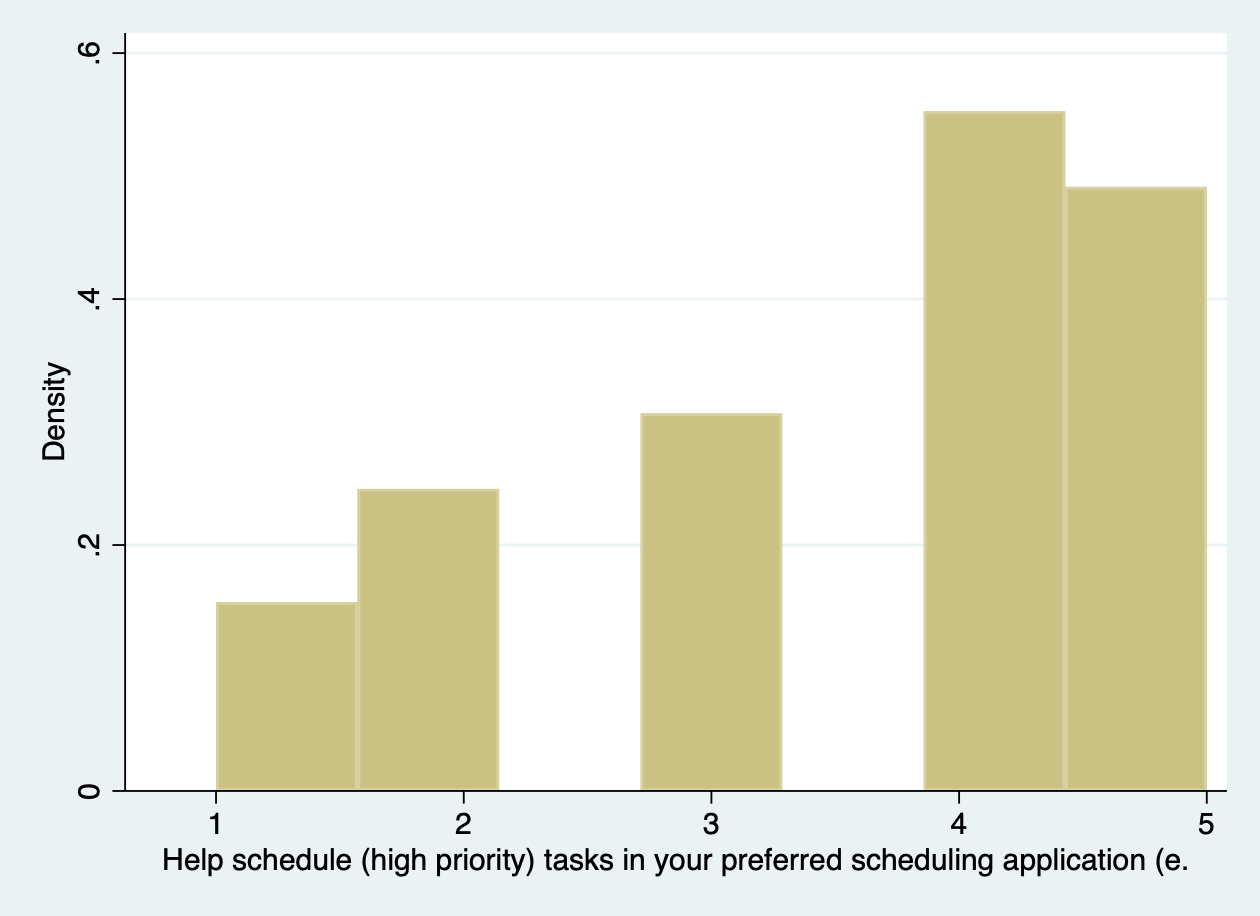

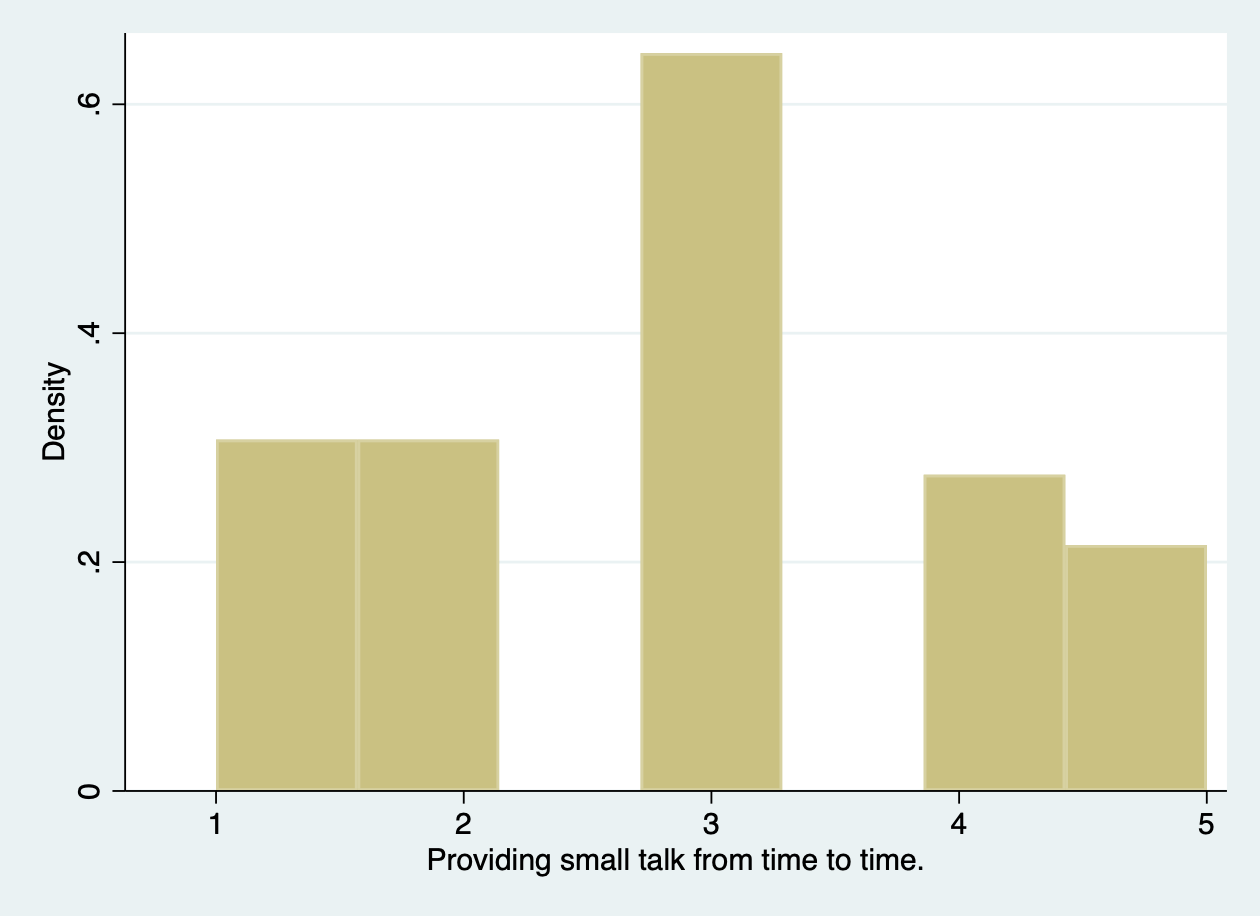

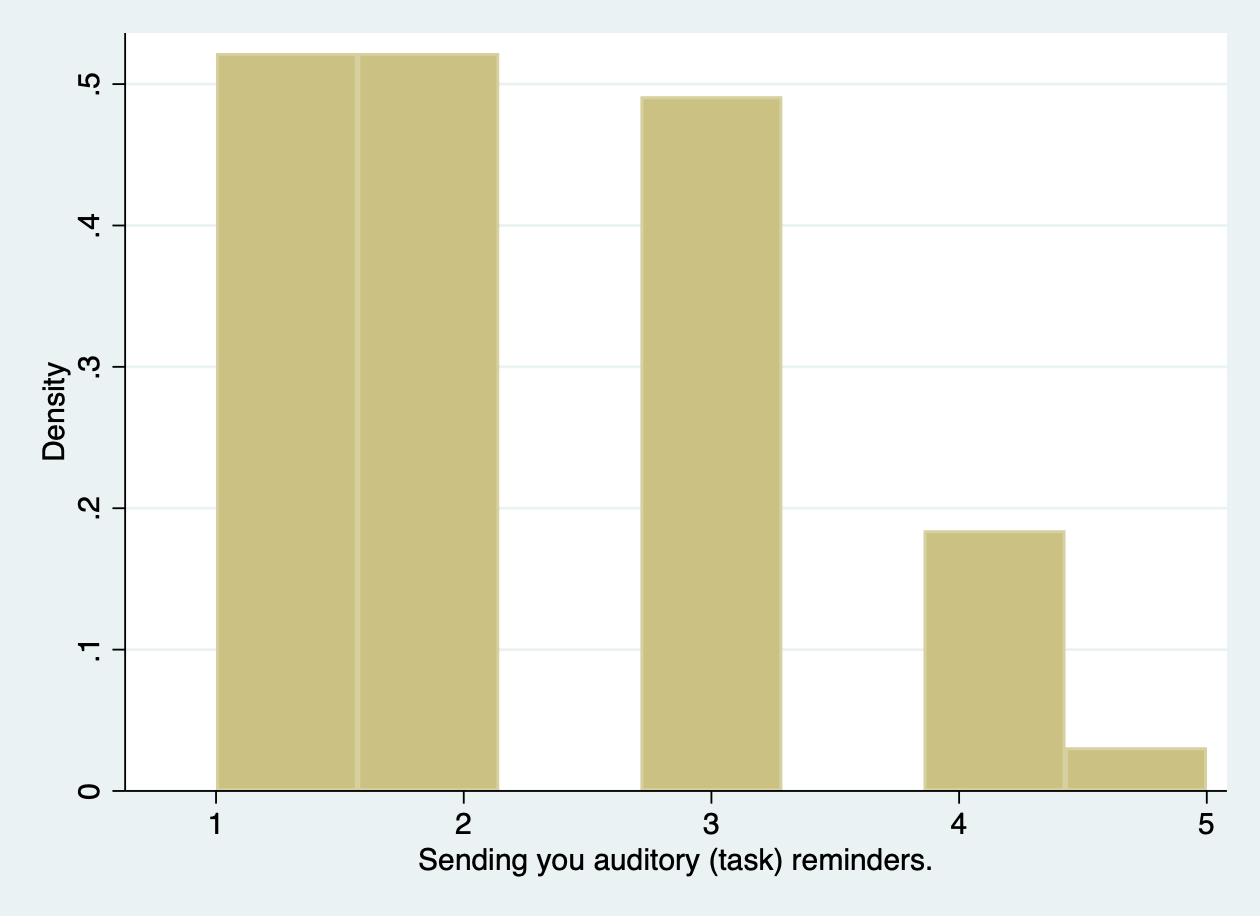

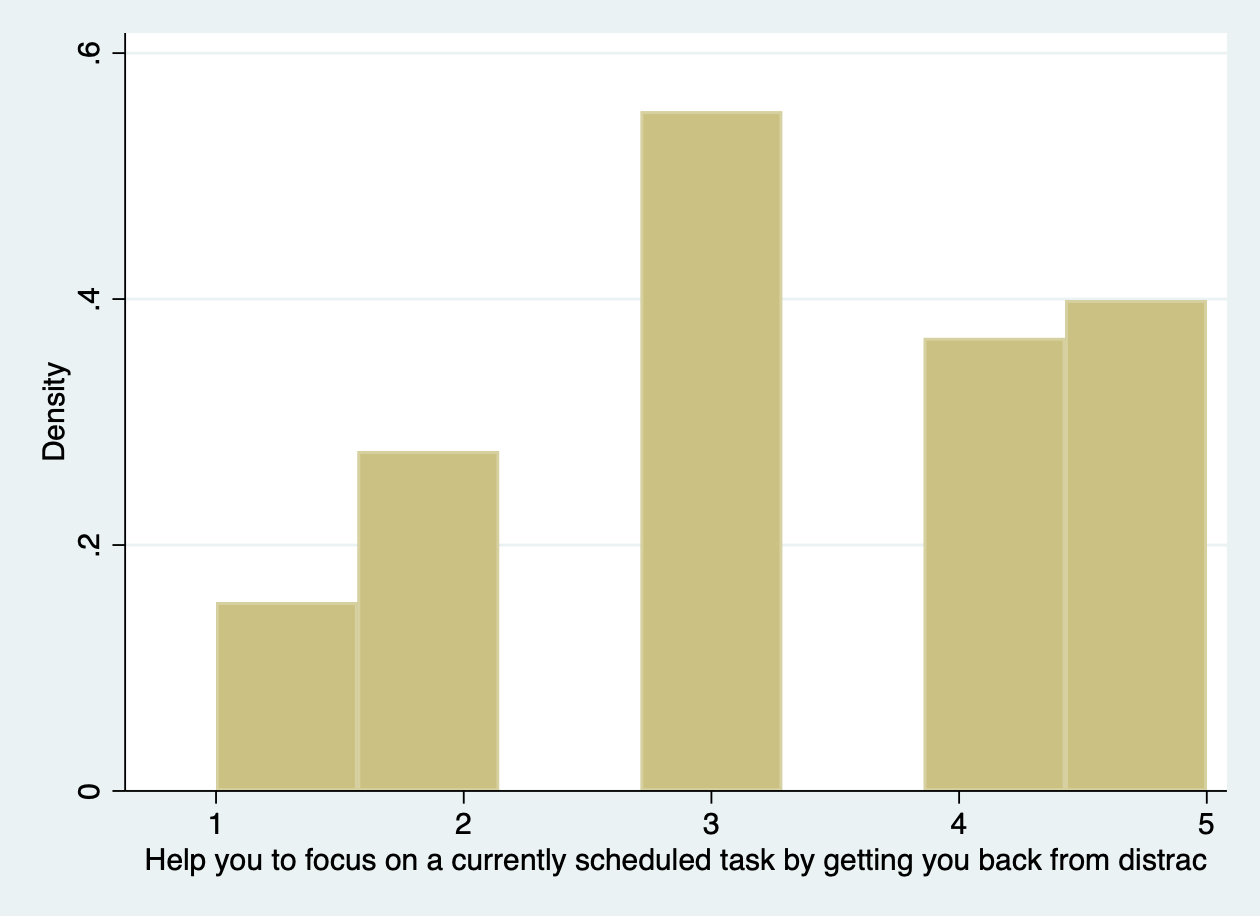

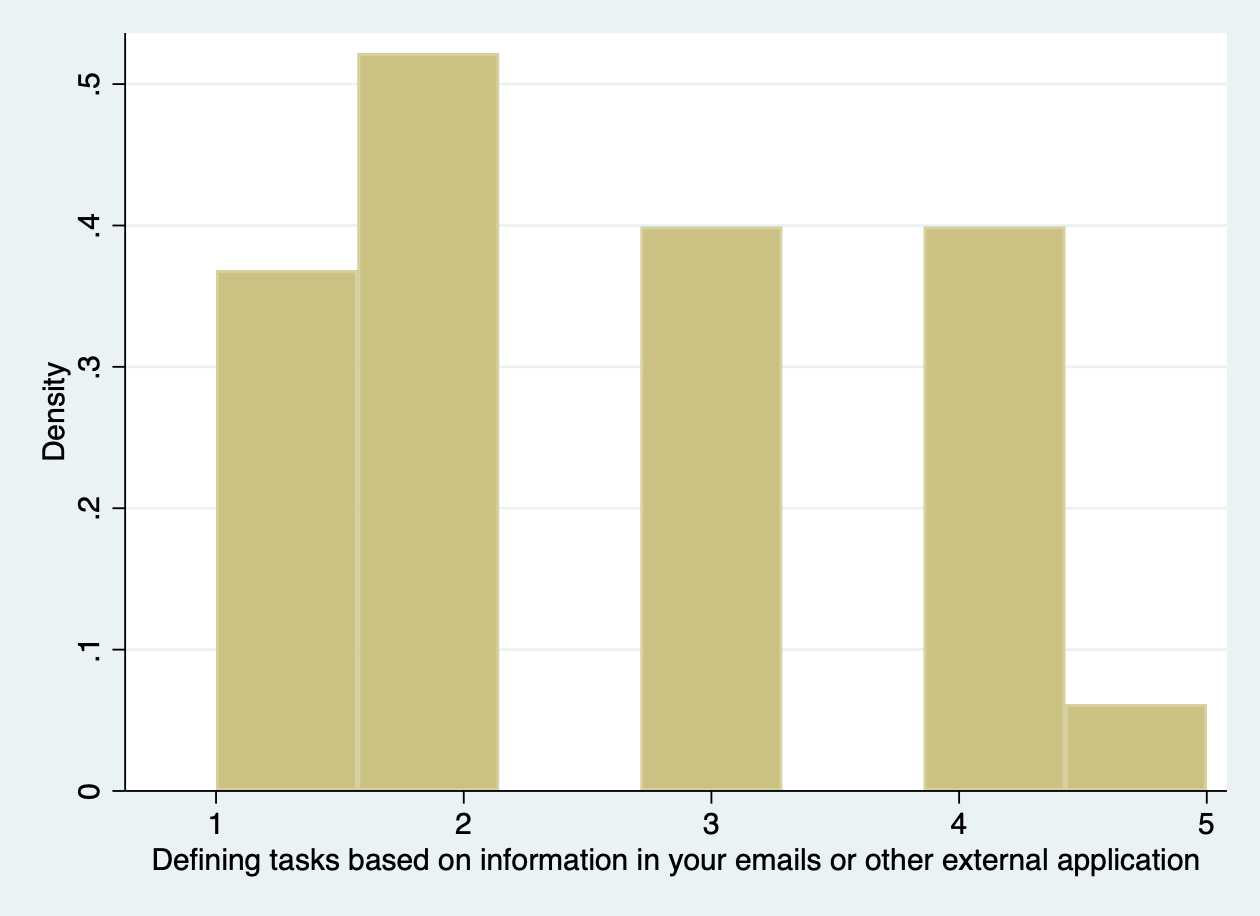

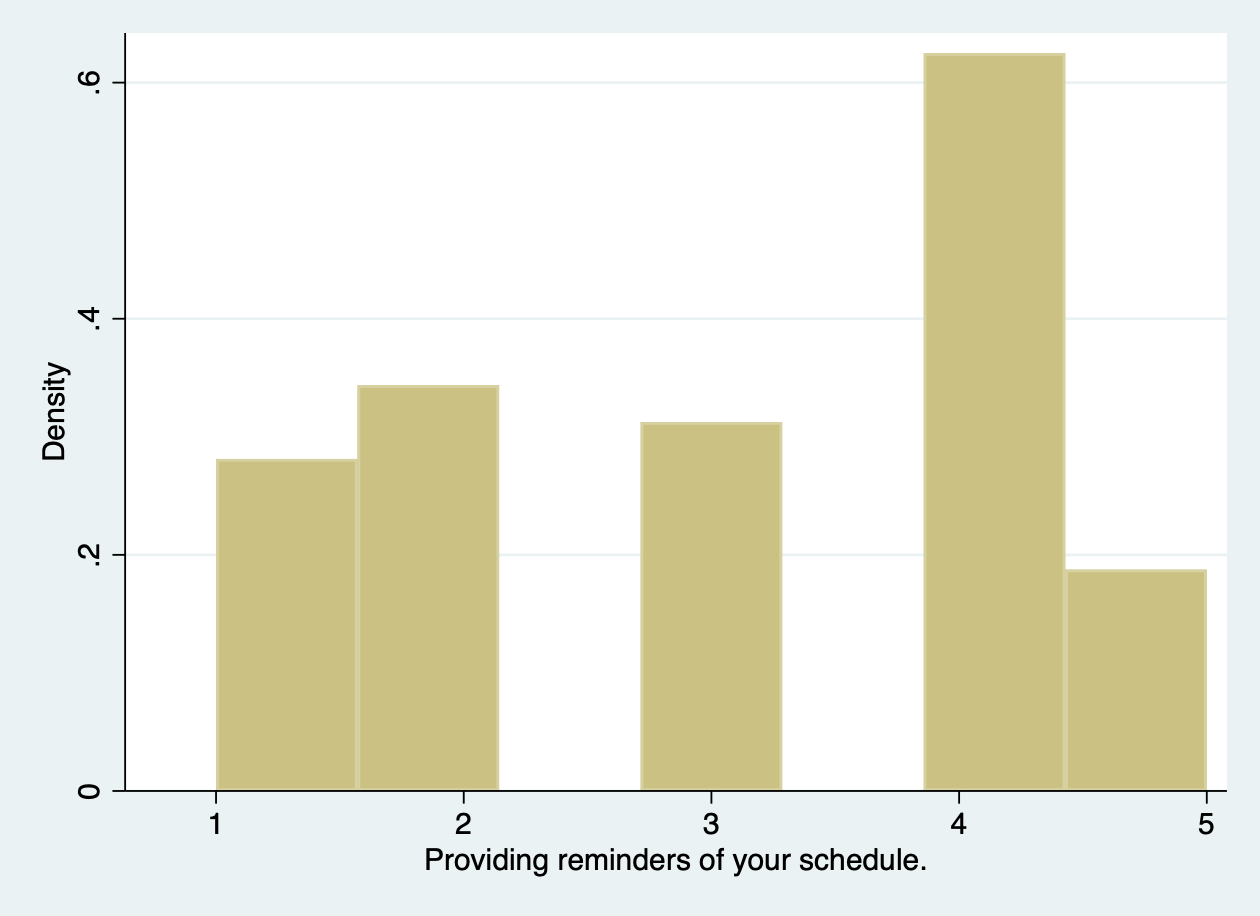

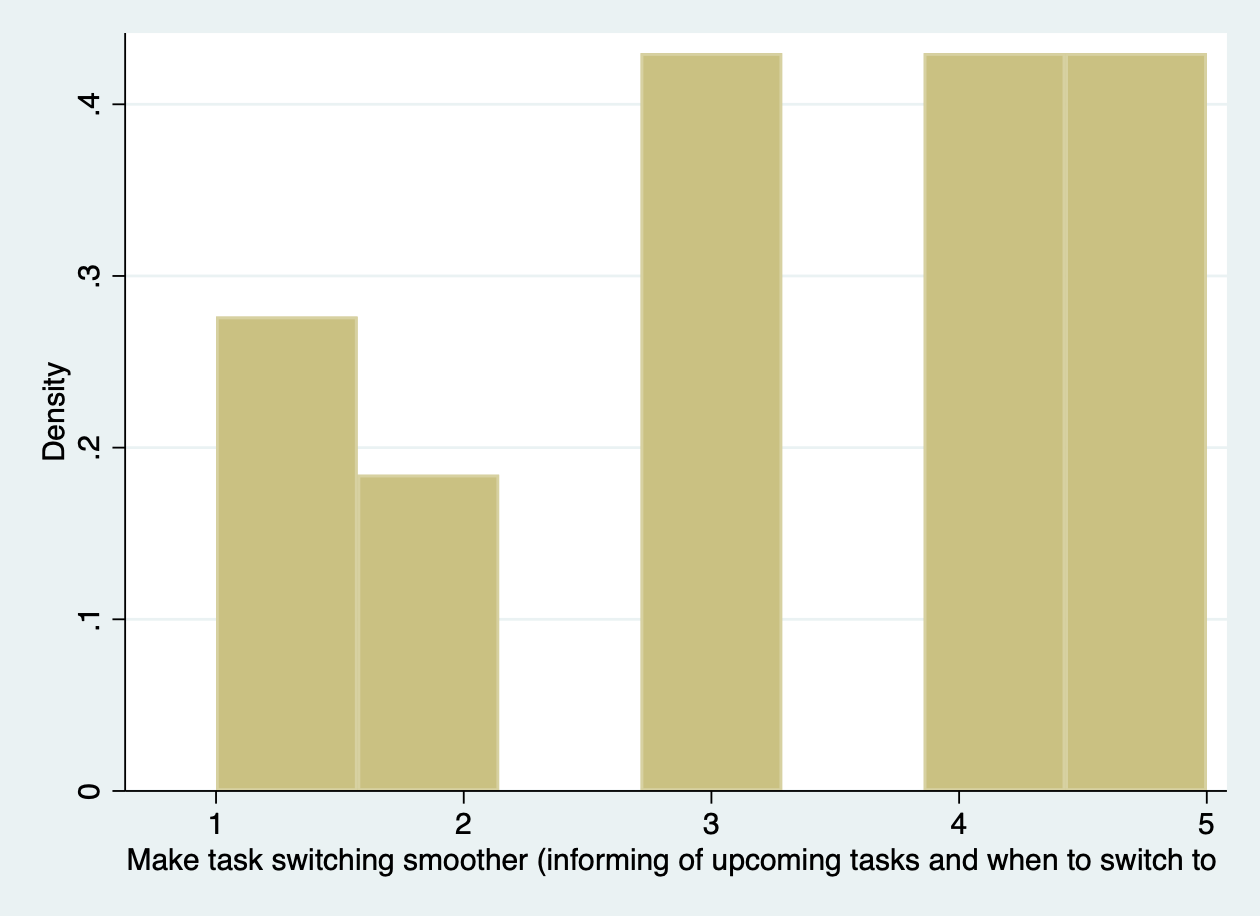

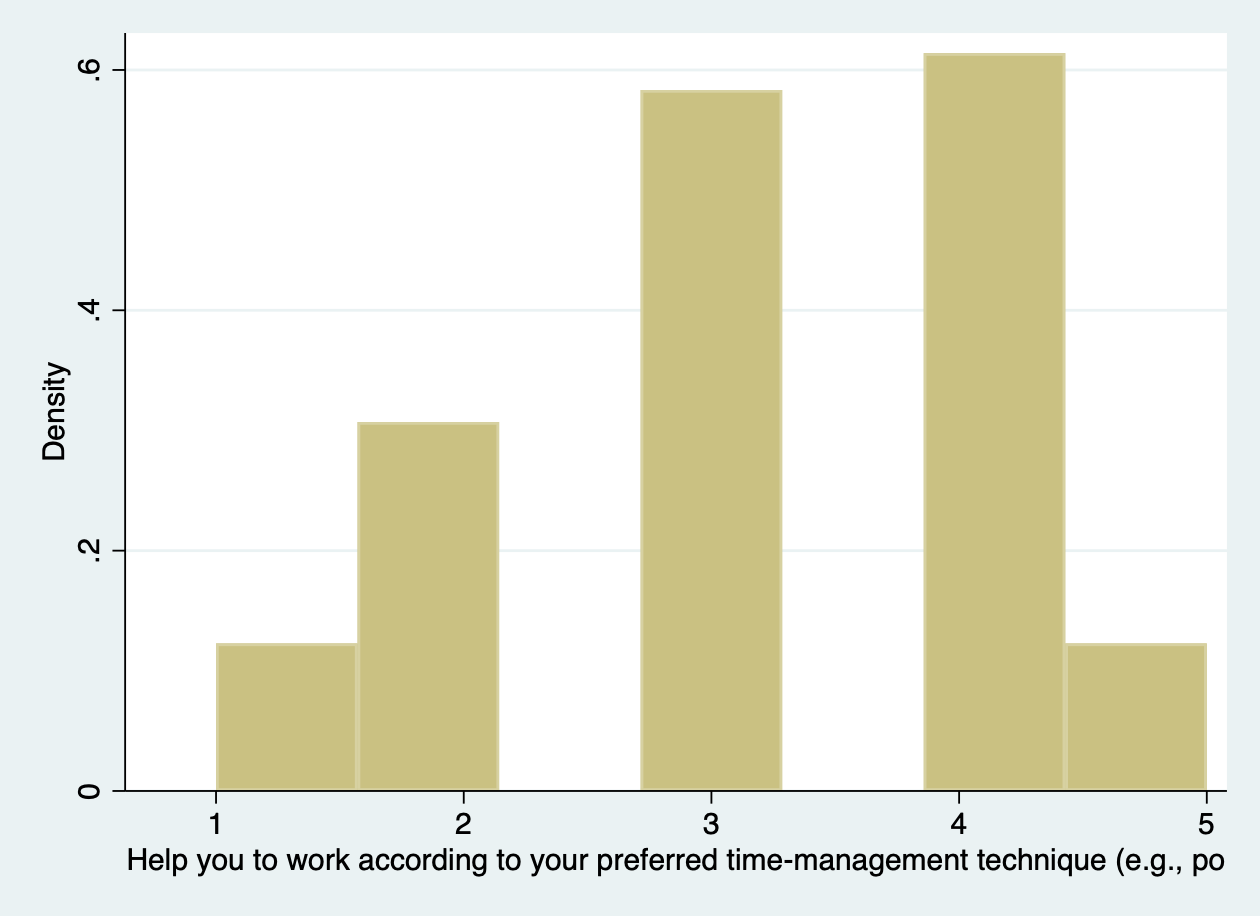

Sixteen different variables are used to see which tasks users would like a virtual agent to perform. For every variable, respondents could choose one of five options on a Likert scale ranging from ‘Not useful at all’ to ‘Very useful’. The histograms show that ‘Help avoiding distractions by blocking applications’, ‘Help to split up daunting tasks’, ‘Encourage taking breaks’, ‘Encourage physical activity during breaks’, ‘Help increase productivity with your favorite concentration/focus application’, ‘Reflect on work at the end of the day’, ‘Help schedule tasks in your preferred scheduling application’, ‘Help you to focus on a currently scheduled task by getting you back from distractions’, ‘Providing reminders of your schedule’, ‘Make task switching smoother’ and ‘Help you to work according to your preferred time-scheduling technique’ all lean to the right side, indicating that they are considered more useful.

These histograms are supported by the skewness values. A negative skewness means that the distribution has a longer left tail, so most responses lie on the right ('useful') side of the scale, while a positive skewness means that most responses lie on the left side. We therefore expect negative skewness values for the tasks mentioned above. As can be seen in Table 2, all their skewness values are indeed negative; however, some are more strongly skewed than others and were thus more often rated as useful. In conclusion, the most useful tasks (with skewness values between -0.57 and -0.80) are, from highest to lowest: ‘Help avoiding distractions by blocking applications’, ‘Help increase productivity with your favorite concentration/focus application’ and ‘Help schedule tasks in your preferred scheduling application’. All other variables have skewness values closer to zero (above -0.39) and are thus considered less helpful than those mentioned above.

Table 2: The skewness values for several variables of what could be helpful tasks of a VA.

| Variable | Skewness |
|---|---|
| Help avoiding distractions by blocking applications | -0.7914 |
| Help to split up daunting tasks | -0.3372 |
| Encourage taking breaks | -0.1631 |
| Encourage physical activity during breaks | -0.1469 |
| Help increase productivity with your favorite concentration/focus application | -0.7717 |
| Reflect on work at the end of the day | -0.3852 |
| Help schedule tasks in your preferred scheduling application | -0.5737 |
| Help you to focus on a currently scheduled task by getting you back from distractions | -0.2038 |
| Providing reminders of your schedule | -0.2560 |
| Make task switching smoother | -0.3755 |
| Help you to work according to your preferred time-scheduling technique | -0.3555 |
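For reference, a minimal sketch of the moment-based sample skewness (the third central moment divided by the second central moment to the power 1.5), followed by a ranking of the Table 2 values. We assume this is the estimator reported by Stata's summarize command with the detail option, but that is an assumption; other software often reports a bias-corrected variant that gives slightly different numbers. The Likert responses in the example are hypothetical.

```python
def skewness(values):
    """Moment-based sample skewness g1 = m3 / m2**1.5 (population moments)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


# Hypothetical Likert responses (1 = not useful at all, 5 = very useful).
responses = [5, 5, 4, 4, 4, 3, 2]
print(f"skewness = {skewness(responses):.3f}")  # negative: mass on the 'useful' side

# Skewness values from Table 2; the most negative values are ranked first.
table2 = {
    "Help avoiding distractions by blocking applications": -0.7914,
    "Help to split up daunting tasks": -0.3372,
    "Encourage taking breaks": -0.1631,
    "Encourage physical activity during breaks": -0.1469,
    "Help increase productivity with your favorite concentration/focus application": -0.7717,
    "Reflect on work at the end of the day": -0.3852,
    "Help schedule tasks in your preferred scheduling application": -0.5737,
    "Help you to focus on a currently scheduled task by getting you back from distractions": -0.2038,
    "Providing reminders of your schedule": -0.2560,
    "Make task switching smoother": -0.3755,
    "Help you to work according to your preferred time-scheduling technique": -0.3555,
}
top_three = sorted(table2, key=table2.get)[:3]
for task in top_three:
    print(f"{table2[task]:+.4f}  {task}")
```

Sorting in ascending order puts the most negatively skewed, and hence most often 'useful', tasks first, which reproduces the top three named in the text.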

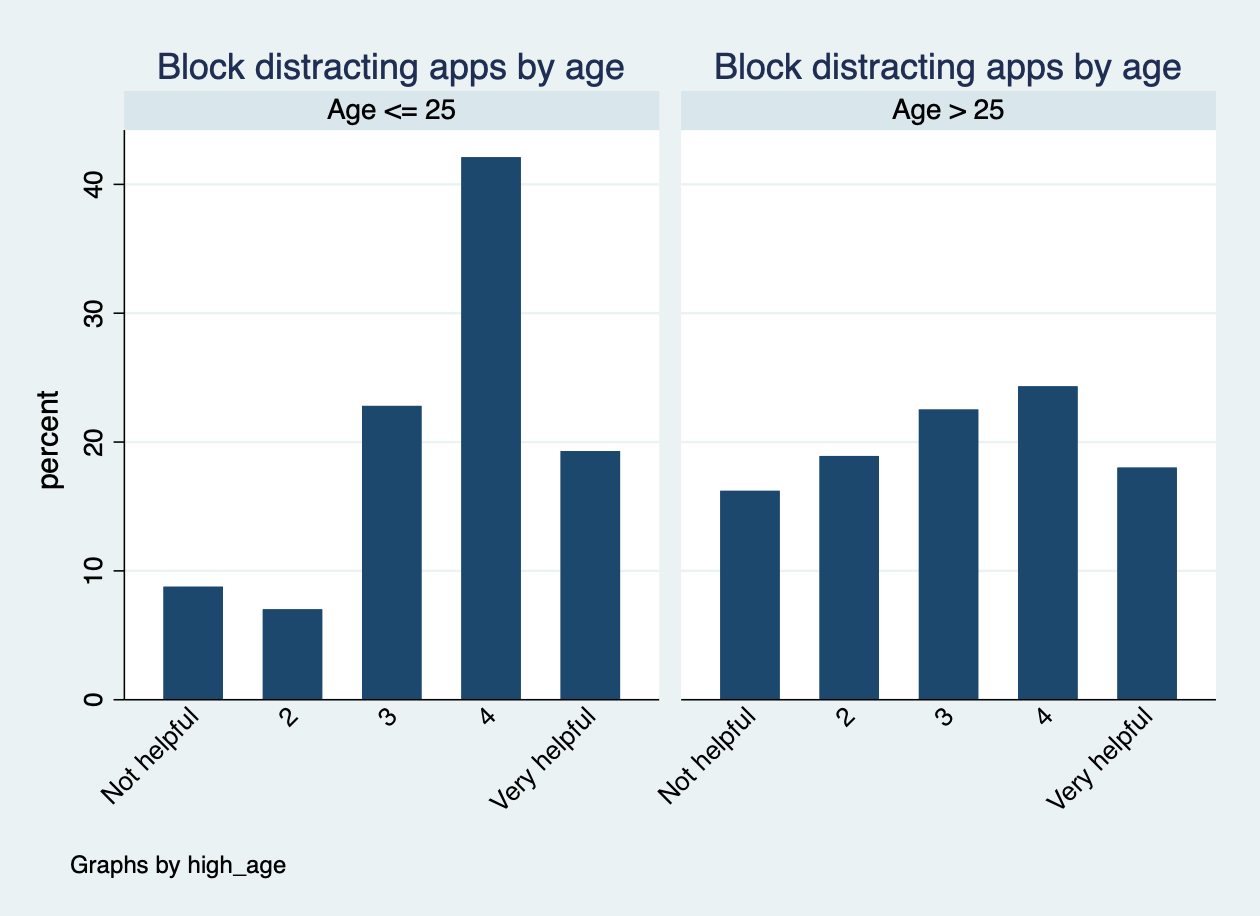

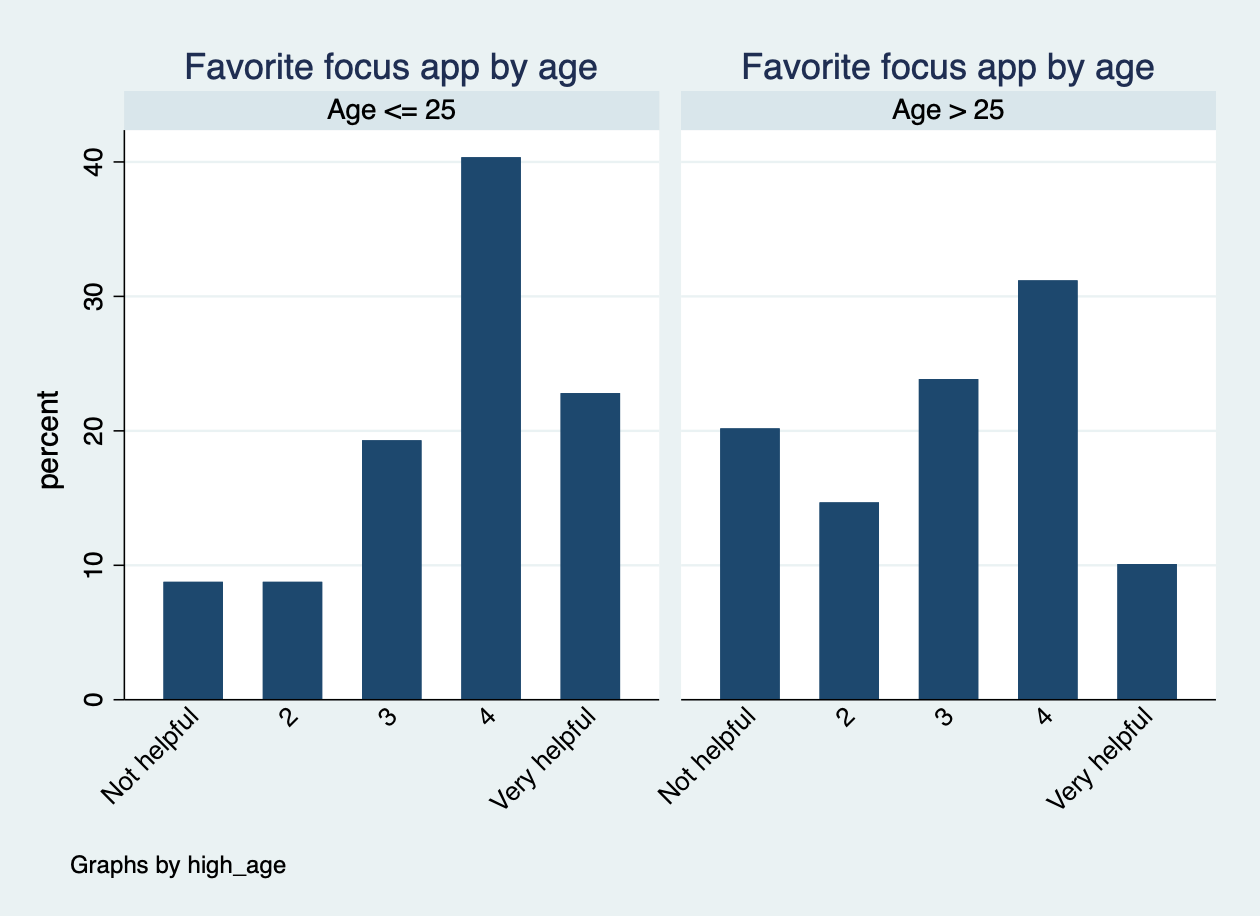

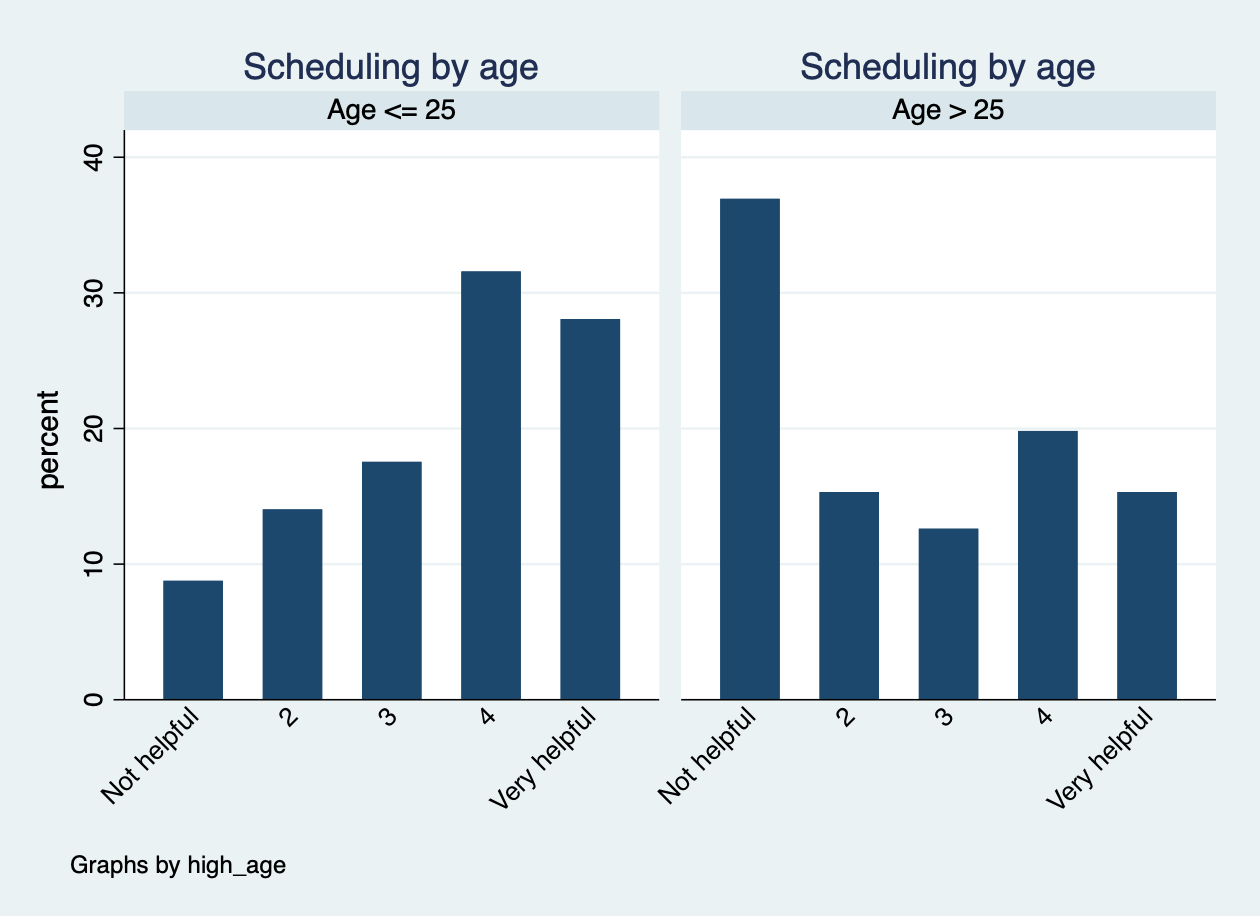

To check whether it was a good decision to focus on respondents aged 25 and younger, we made bar charts of the three most important variables, grouped by age. These charts show that this is indeed a good choice: more younger people are interested in the three most helpful tasks, while older people are doubtful or even sure that these tasks will not be helpful.

Furthermore, t-tests (or, if their assumptions are not met, rank-sum tests) can be used to check whether there is a significant difference between the age groups for these variables. We start by performing Shapiro-Wilk and skewness/kurtosis tests to see whether the data is normally distributed. Both p-values must be larger than 0.05 for the null hypothesis (‘The data is normally distributed’) not to be rejected. If this is the case, we may perform a t-test; otherwise, only a non-parametric test is allowed. We only test for a significant difference in the three most helpful tasks identified above. Table 3 shows that all these variables have a p-value smaller than 0.05, so there is a significant difference between the two age groups for each of these helpful tasks.
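The decision rule above can be written down explicitly. This is a minimal sketch, assuming that the rule is applied per age group and that a t-test is used only if every group passes both normality tests; the function name and the p-values in the example are hypothetical, and the normality tests themselves are assumed to have been run beforehand (e.g. in Stata).

```python
def choose_test(p_shapiro_wilk, p_skew_kurtosis, alpha=0.05):
    """Pick a two-group test following the decision rule described above.

    Normality is only accepted when neither normality test rejects it,
    i.e. when both p-values exceed alpha.
    """
    if p_shapiro_wilk > alpha and p_skew_kurtosis > alpha:
        return "t-test"
    return "rank-sum test"


# Hypothetical normality-test p-values (Shapiro-Wilk, skewness/kurtosis) per age group.
groups = {"<= 25": (0.003, 0.012), "> 25": (0.210, 0.340)}
normal_everywhere = all(choose_test(*p) == "t-test" for p in groups.values())
print("t-test" if normal_everywhere else "rank-sum test")
```

Five-point Likert items rarely pass normality tests, so in practice this rule will usually point to the rank-sum test.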

Table 3: The corresponding significance values for the three main helpful tasks variables.

| Variable | Significance value (p) |
|---|---|
| Help avoiding distractions by blocking applications | 0.0287 |
| Help increase productivity with your favorite concentration/focus application | 0.0023 |
| Help schedule tasks in your preferred scheduling application | 0.0001 |

Regarding the open question 'Are there any tasks which aren't mentioned in the list above that you would like a virtual agent to be able to do?', around 20 people have given useful suggestions for extra features of Coco or have confirmed that already existing features will be helpful. Quite a lot of people mentioned that Coco should be connected to streaming services for music or meditations. Furthermore, some people mentioned that they would like it if Coco were connected to their coffee machine or alarm.

Many respondents also mentioned that Coco should suggest a planning for the day based on their tasks and sort their email by priority. Moreover, a few people mentioned that Coco should be able to block certain applications and signal to colleagues whether they are busy or not; some added that this should happen automatically. We already had these ideas, so it is encouraging that the respondents think the same.

In the survey's additional comments, two people gave further suggestions for functionality. The first mentioned that it would be useful to be able to turn off Coco for a while, especially during the weekend. Another person worried that Coco would make them lose their concentration: “The last thing I want is another application that sends me unsolicited and annoying notifications.”

In conclusion, the three most helpful tasks for Coco would be to help avoid distractions by blocking applications, to help increase productivity with the user's favorite concentration/focus application, and to help schedule tasks in the user's preferred scheduling application. Furthermore, there is indeed a difference between the younger age group (<= 25 years) and the older age group (> 25 years) in whether tasks are seen as helpful. For all three main tasks, this difference is significant (p < 0.05).

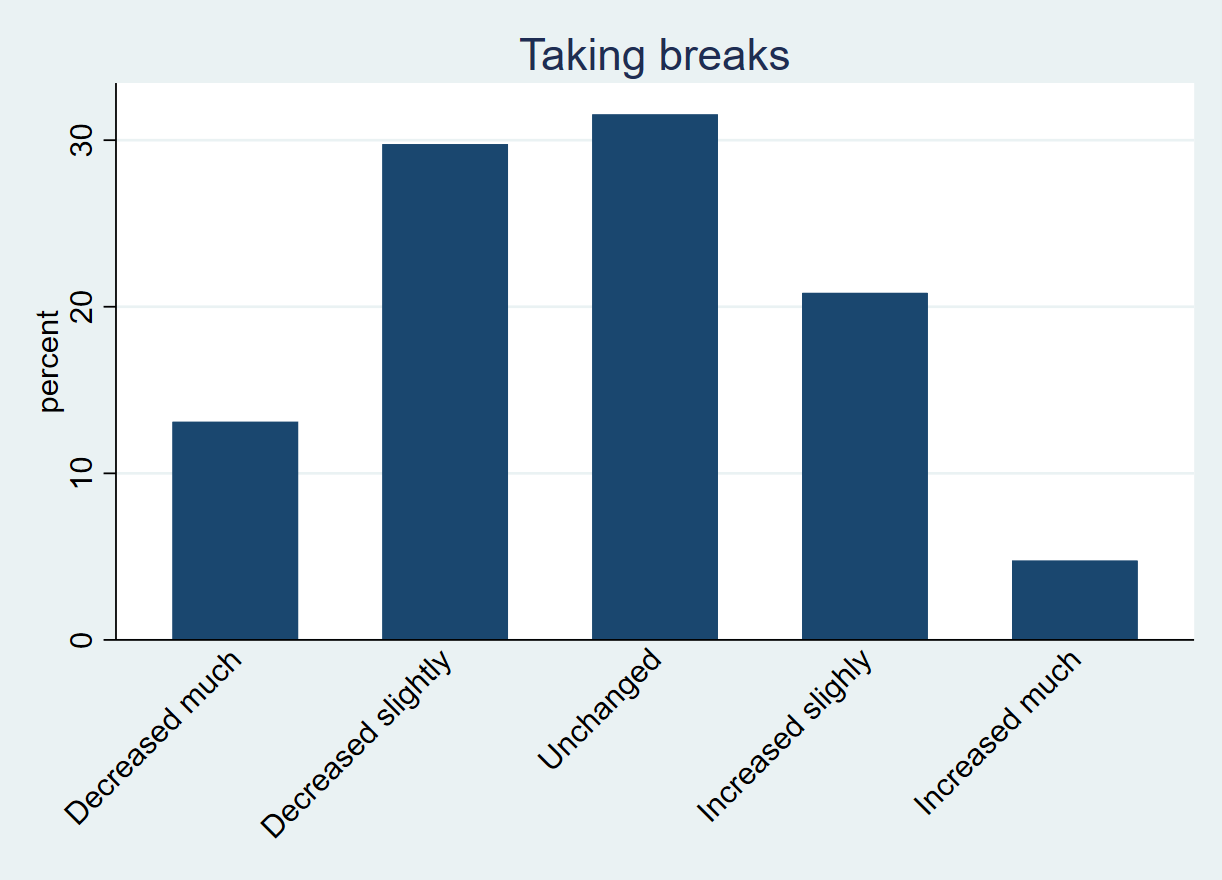

Privacy

The following section will cover possible privacy concerns, related to the use of Coco. It will try to answer the research question “Will there be any computer features for the computer companion which are not possible to implement because of privacy issues?”. If so, it would be important to know this beforehand, since potential users of Coco should be comfortable using Coco.

Subquestion 1: 'Are people generally comfortable with the computer features being used for the computer companion (that require access to personal data)?'

To investigate whether people would feel comfortable with Coco using personal data acquired via, for instance, the user's camera, microphone or agenda, several questions were asked in the survey. On a Likert scale ranging from 1 (not comfortable at all) to 3 (completely comfortable), people could indicate how they felt about Coco using their agenda, camera and microphone, as well as about it monitoring their activity, giving popups, and saving user data. These questions are encoded as “comfort_agenda”, “comfort_camera”, “comfort_microphone”, “comfort_monitoring”, “comfort_popups”, and “comfort_userdata”. Their means are shown in Table 4.

Table 4: Means of comfort questions.

| Feature | Mean (all participants) | Mean (participants <= 25 years) | Mean (participants > 25 years) |
|---|---|---|---|
| Agenda | 2 | 2.33 | 1.82 |
| Camera | 1.34 | 1.38 | 1.33 |
| Microphone | 1.51 | 1.44 | 1.54 |
| Monitoring | 2.05 | 2.24 | 1.95 |
| Popups | 2.23 | 2.53 | 2.06 |
| User data | 1.80 | 1.87 | 1.77 |

As can be seen from Table 4, people feel most comfortable receiving popups from the computer companion and least comfortable giving access to their camera. A score of 2 means that people are “somewhat comfortable”, so a score higher than 2 could be seen as the minimum for accepting a feature. For our chosen user group of people aged 25 or younger, this means that the computer companion could freely manage the user's agenda, monitor his/her activity, and send popup messages. In general, our user group feels more comfortable with the discussed features than the older user group. The only exception is access to the microphone, although the difference is small.
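The acceptance rule above can be made explicit using the Table 4 means. This short sketch applies the "higher than 2" cut-off to the younger group and lists the features for which the younger group scores below the older group; the variable names are illustrative only.

```python
# Mean comfort scores from Table 4 (1 = not comfortable at all, 3 = completely comfortable).
comfort = {
    # feature: (all participants, <= 25 years, > 25 years)
    "Agenda": (2.00, 2.33, 1.82),
    "Camera": (1.34, 1.38, 1.33),
    "Microphone": (1.51, 1.44, 1.54),
    "Monitoring": (2.05, 2.24, 1.95),
    "Popups": (2.23, 2.53, 2.06),
    "User data": (1.80, 1.87, 1.77),
}

THRESHOLD = 2.0  # "somewhat comfortable"

# Features the younger group rates above the threshold.
accepted_young = [f for f, (_, young, _) in comfort.items() if young > THRESHOLD]

# Features the younger group is less comfortable with than the older group.
young_less_comfortable = [f for f, (_, young, old) in comfort.items() if young < old]

print("Accepted by <= 25:", accepted_young)
print("Younger group less comfortable with:", young_less_comfortable)
```

The first list gives the agenda, monitoring and popups, and the second list contains only the microphone, matching the conclusions drawn from Table 4.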

Subquestion 2: 'Would people give permission for including the different computer features to improve the computer companion's performance?'

The survey also asked people whether they would actually give permission for the features mentioned before (which require access to certain programs and/or personal data), with the side note that allowing these features could improve Coco's performance. People could choose between “no”, “yes”, and “I don't know” for Coco using their agenda, camera and microphone, as well as for it monitoring their activity, giving popups, and saving user data. These questions are encoded as “permissions_agenda”, “permissions_camera”, “permissions_microphone”, “permissions_monitoring”, “permissions_popups”, and “permissions_userdata”. The frequencies of people answering “yes” are shown in Table 5.

Table 5: Results of permissions questions.

| Feature | Frequency 'yes' (all participants) | Frequency 'yes' (participants <= 25 years) | Frequency 'yes' (participants > 25 years) |
|---|---|---|---|
| Agenda | 58.93% | 66.67% | 54.95% |
| Camera | 19.64% | 15.79% | 21.62% |
| Microphone | 32.14% | 26.32% | 35.14% |
| Monitoring | 60.71% | 64.91% | 58.56% |
| Popups | 69.05% | 78.95% | 63.96% |
| User data | 40.48% | 45.61% | 37.84% |

As can be seen in table 5, people are most likely to give permission for popups and least likely to give permission to use the camera. These results are in line with those in table 4, which suggests that people more readily give permission for a feature they feel comfortable with. This relation can be confirmed by calculating the correlation between the variables “comfort_X” and “permissions_X” for each feature X (agenda, camera, etc.). The correlations vary between 0.7498 and 0.8312 and are all significant at the 0.05 level. Comparing the two age groups, the younger group gives permission more often on average. As with comfort, however, the older group is more positive about the use of the microphone. Another notable difference is that the older group is more likely to give permission to use the camera than the younger group, although the two groups differed only very slightly in how comfortable they were with the camera.
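The correlation check can be sketched as a Pearson coefficient per feature, followed by the usual t statistic for testing zero correlation with n - 2 degrees of freedom. The report does not state how the “no”, “I don't know”, and “yes” answers were coded for this calculation; the numeric coding in the toy data below (0, 1, 2) is an assumption, as are the function names.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t statistic for H0: no correlation, with n - 2 degrees of freedom (needs |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Toy data: comfort score and a hypothetical permission code
# (0 = "no", 1 = "I don't know", 2 = "yes") for six respondents.
comfort = [1, 1, 2, 2, 3, 3]
permission = [0, 0, 1, 2, 2, 2]
r = pearson_r(comfort, permission)
print(round(r, 4), round(t_statistic(r, len(comfort)), 2))
```

The correlation is significant at the 0.05 level when |t| exceeds the two-sided critical value of the t distribution with n - 2 degrees of freedom. In SciPy, `scipy.stats.pearsonr` returns the coefficient together with its p-value.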

To summarize, four main conclusions can be drawn from the privacy analysis. First, people feel comfortable with the use of popups, monitoring, and the agenda, and a majority gives permission to use these features. Second, people do not feel comfortable with the use of the camera, microphone, and user data, and only a minority gives permission to use these features. Third, there are significant correlations between feeling comfortable with a feature and giving permission for it. Finally, the younger age group (<= 25 years) seems to be more positive about the features than the older age group (> 25 years): they report being more comfortable with most of the features and give permission more often. The only exceptions are the microphone and the camera, for which older people gave permission more often.

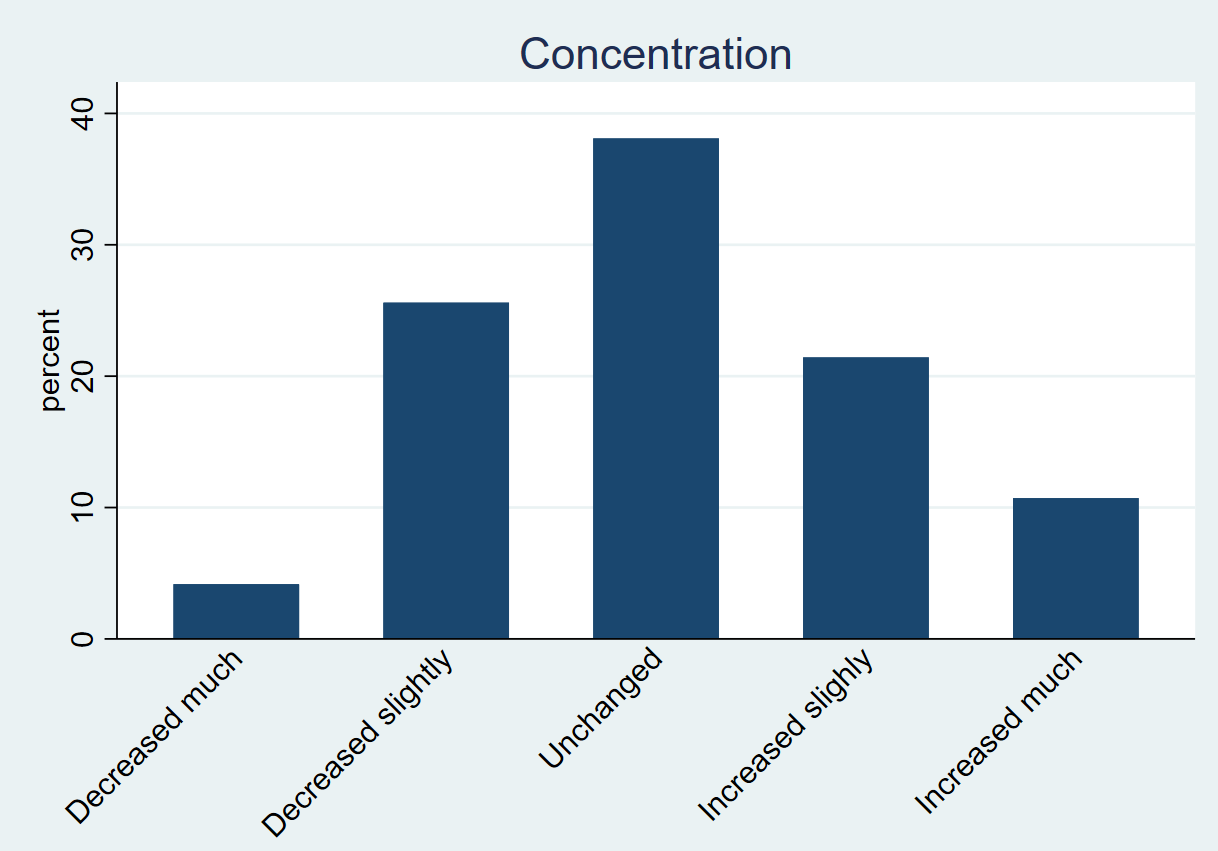

Appearance

The following section tries to answer the research question “Do people have a preference regarding the appearance of the computer companion?”. These results will be useful when designing Coco in a later stage of the project.

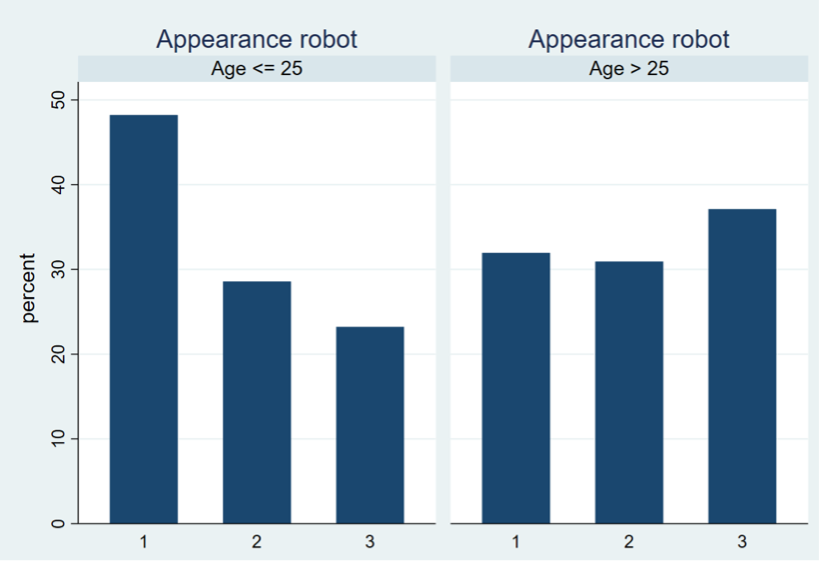

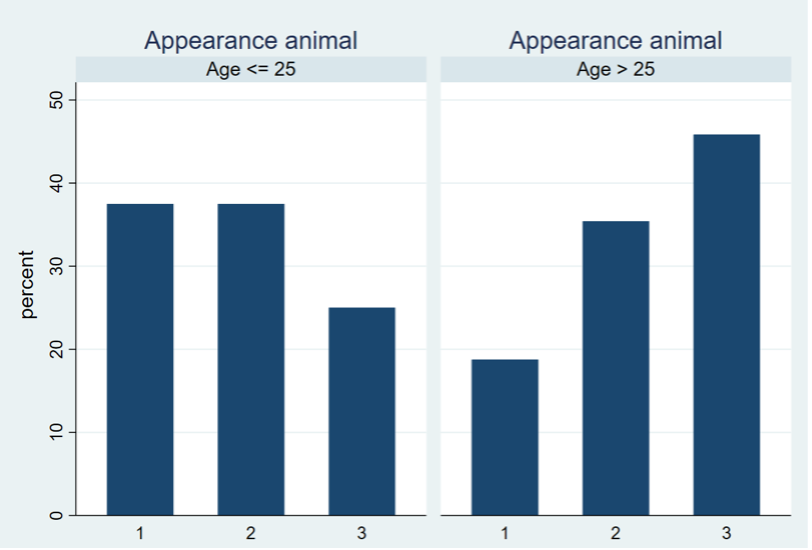

In the survey, participants indicated their preferences for the appearance of Coco by ranking three options (human, robot, animal) from 1 (most favorable) to 3 (least favorable). The rank given to each appearance was encoded in three variables (“human_appearance”, “robot_appearance”, and “animal_appearance”). The percentage of participants giving each rank is shown in table 6.

Table 6: Percentages of ranking human, robot, and animal appearance.

| Ranking | Human | Robot | Animal |
|---|---|---|---|
| Ranking 1 | 35.71% | 34.52% | 23.21% |
| Ranking 2 | 27.98% | 27.38% | 32.74% |
| Ranking 3 | 29.17% | 29.17% | 34.52% |

As table 6 shows, people like a human and a robot appearance almost equally. An animal appearance, on the other hand, is somewhat less favored: it is chosen less often as the first option and more often as the second or third option. The second survey should therefore focus more on the preference between a human and a robot appearance.

Subquestion: 'Do people from different age groups differ in their preferences regarding the appearance of the computer companion?'

To check for different preferences between younger (<= 25 years) and older (> 25 years) participants, the histograms shown in Figure X, Figure X, and Figure X are analyzed. These plots suggest that some differences exist. To check whether these differences are significant, a Chi-square test was performed between each of the variables “human_appearance”, “robot_appearance”, and “animal_appearance” and the variable “high_age”, which separates younger (<= 25 years) from older (> 25 years) participants.

The significance level (alpha) was set at the conventional value of 0.05, and the p-values are <0.001 (human), 0.096 (robot), and 0.012 (animal). Hence, there is a significant difference between younger and older people in their preferences for a human or an animal appearance. Older people find a human appearance most favorable, while younger people find it least favorable. Also, older people prefer an animal appearance less than younger people do. The difference for the robot appearance, however, is not significant.
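Each of these tests compares two age groups across three possible ranks, so it works on a 2 × 3 contingency table with (2 - 1)(3 - 1) = 2 degrees of freedom. For exactly two degrees of freedom the upper-tail probability of the chi-square distribution is exp(-x/2), which keeps the sketch below free of statistics libraries. The function name and the counts in the usage line are hypothetical; the actual contingency tables are not given in this report.

```python
import math


def chi_square_2x3(table):
    """Pearson chi-square test of independence for a 2 x 3 table of counts.

    Rows: age groups (<= 25, > 25); columns: rank 1, 2, 3 for one appearance.
    With 2 degrees of freedom the p-value is exactly exp(-chi2 / 2).
    """
    if len(table) != 2 or any(len(row) != 3 for row in table):
        raise ValueError("expected a 2 x 3 table")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count under independence
            chi2 += (observed - expected) ** 2 / expected
    return chi2, math.exp(-chi2 / 2)


# Hypothetical counts: how often each age group gave rank 1, 2, 3 to one appearance.
print(chi_square_2x3([[20, 10, 10], [10, 20, 10]]))
```

A p-value below the chosen alpha of 0.05 indicates that the ranking of that appearance depends on the age group. SciPy's `scipy.stats.chi2_contingency` performs the same test for tables of any shape.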

Regarding the open question 'Would you rather have a different look for your computer buddy than indicated above?', most people did not have any additional comments about the appearance of Coco; however, some trends could be seen among those who did. First, five people mentioned that they would like the option to customize the appearance of Coco. For instance, they would like to choose one figure on one day and another on the next day; one person even distinguished between work and leisure. They would also like the option to adapt the figures themselves. Of course, this would require more (and more complex) options in the software. Furthermore, six people mentioned that Coco does not need an appearance at all: something more minimalistic, such as Siri (mentioned by a couple of respondents), would be sufficient in their opinion. Also, eighteen people suggested additional options that could be designed, ranging from “more natural elements” and “a Disney character” to “a self-chosen picture”. Lastly, two people suggested changing our proposed appearances: one suggested making them “somewhat older”, while the other would like to see more detail.

In conclusion, people like a human and a robot appearance almost equally, and an animal appearance less. Furthermore, comparing the younger (<= 25 years) and older (> 25 years) age groups yields three findings. First, older people prefer the human appearance the most, whereas younger people prefer it the least. Second, younger people prefer the animal appearance more than older people do. Both of these differences are significant. Finally, the differences in preferences concerning the robot appearance are not significant.

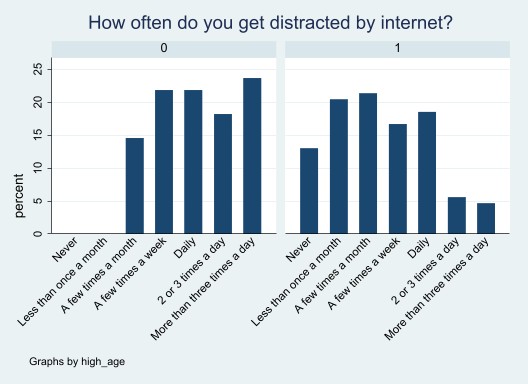

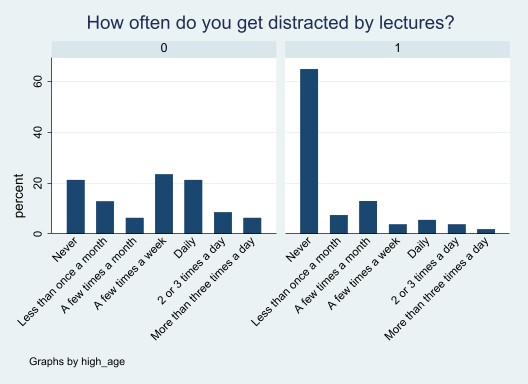

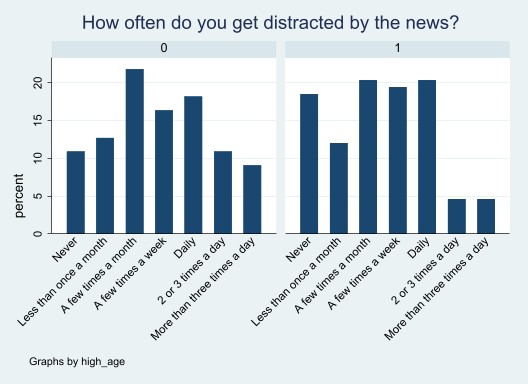

Distractions that negatively impact productivity

This section tries to answer two research questions. The first is whether there is a significant difference in the extent to which the two groups (workers, aged > 25, and students, aged <= 25) experience a negative impact on their productivity. If there is a large difference between the two groups, it might be important to narrow down our user group. The second is which types of distractions have the most influence on productivity. This question is asked so that we can prioritize the functions that Coco needs to have.

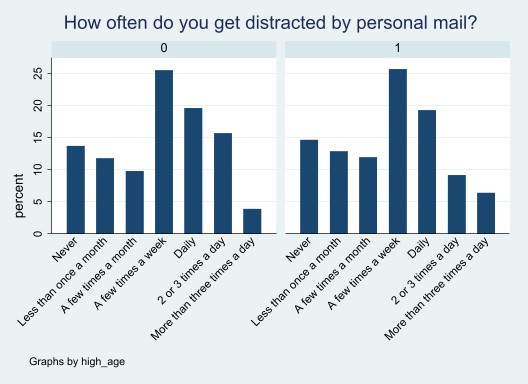

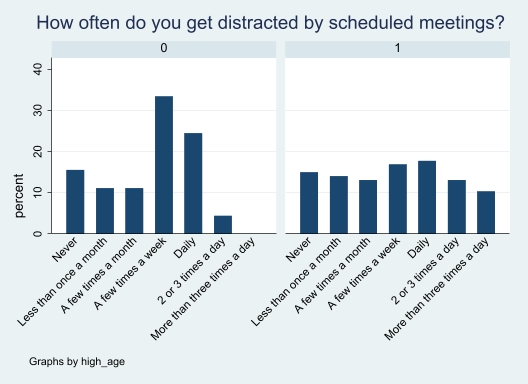

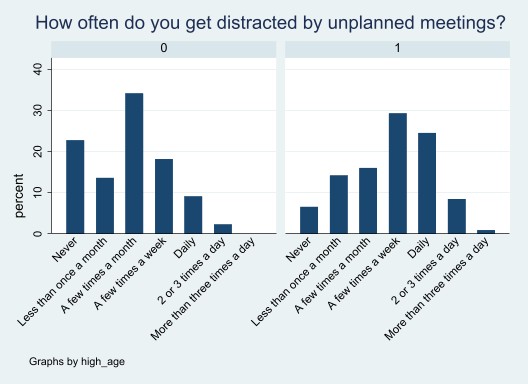

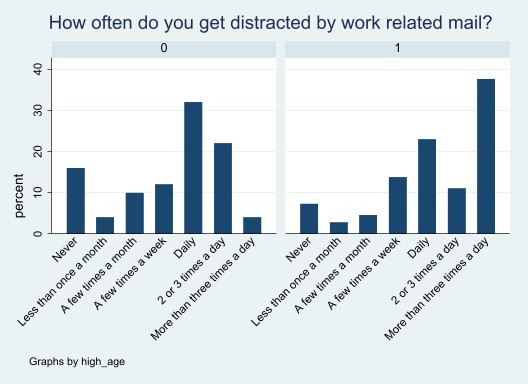

In the survey, we asked our respondents: 'How often do you get distracted from your work by the following activities?'. The activities were the internet, lectures, news, personal mail, scheduled meetings, online shopping, social media, unplanned meetings, and work mail. Respondents could answer never, less than once a month, a few times a month, a few times a week, daily, 2 or 3 times a day, or more than three times a day; these answers were coded from 1 (never) to 7 (more than three times a day). To see which types of distractors have the most influence on productivity, we calculated the mean response for both students and workers. The mean amount of distraction per distraction factor is shown for both age groups in table 7. Because the effect of a distractor can differ per age group, the table also shows the p-value of the Wilcoxon rank-sum test comparing the two groups for each factor.
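The coding of the answers and the group comparison can be sketched as follows. Answers are mapped to 1 to 7 in the order listed above, and the two groups are compared with a two-sided Wilcoxon rank-sum test using the normal approximation with a correction for ties, which matter for a 7-point scale. The report does not say which software or options were used (for example a continuity correction), so p-values from this sketch may differ slightly from table 7. The function names and toy answers are hypothetical.

```python
import math
from statistics import mean

# Answer options in survey order, coded 1 ("never") to 7.
SCALE = [
    "never", "less than once a month", "a few times a month",
    "a few times a week", "daily", "2 or 3 times a day",
    "more than three times a day",
]
CODE = {label: i + 1 for i, label in enumerate(SCALE)}


def encode(answers):
    """Map answer labels to their 1-7 codes."""
    return [CODE[a] for a in answers]


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie-corrected).

    Returns the rank sum of x, the z score, and the p-value.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    values = sorted(x + y)
    avg_rank, tie_term, i = {}, 0, 0
    while i < n:
        j = i
        while j < n and values[j] == values[i]:
            j += 1
        t = j - i                              # size of this group of tied values
        avg_rank[values[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        tie_term += t ** 3 - t
        i = j
    w = sum(avg_rank[v] for v in x)
    expected = n1 * (n + 1) / 2
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:                          # all answers identical
        return w, 0.0, 1.0
    z = (w - expected) / math.sqrt(variance)
    return w, z, math.erfc(abs(z) / math.sqrt(2))


# Toy answers for one distractor (not survey data).
students = encode(["daily", "2 or 3 times a day", "a few times a week", "daily"])
workers = encode(["a few times a month", "a few times a week", "never", "daily"])
print(mean(students), mean(workers), rank_sum_test(students, workers))
```

The Wilcoxon rank-sum test is equivalent to the Mann-Whitney U test, which SciPy provides as `scipy.stats.mannwhitneyu`.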

Table 7: Distraction factors

| Distraction factor | Mean for age <= 25 | Mean for age > 25 | p-value (Wilcoxon rank-sum) |
|---|---|---|---|
| Internet | 4.97 | 3.22 | < 0.0001 |
| Lecture | 3.6 | 1.92 | < 0.0001 |
| News | 3.71 | 3.08 | 0.1617 |
| Personal mail | 3.86 | 3.36 | 0.6041 |
| Scheduled meetings | 3.6 | 3.46 | 0.2918 |
| Shopping | 3.03 | 1.82 | < 0.0001 |
| Social media | 5.4 | 2.9 | < 0.0001 |
| Unplanned meetings | 2.8 | 3.58 | 0.0002 |
| Work mail | 4.06 | 4.64 | 0.0005 |

As table 7 shows, the mean distraction is higher for students than for workers for every type of distraction except unplanned meetings and work mail. This indicates that the productivity of students (people aged <= 25) is more often affected by these distractions than that of working people. For example, a mean of 4.97 (internet) for people aged 25 or younger means that they are distracted roughly 'daily' on average. The differences between the two groups are significant for internet, lectures, shopping, social media, unplanned meetings, and work mail. The table also shows which types of distractors have the most influence on each group. For clarity, table 8 below shows the rank order of the amount of distraction per age group.

Table 8: Rank order of distraction factors

| Rank | Students | Workers |
|---|---|---|
| 1. | Social media | Work mail |
| 2. | Internet | Unplanned meetings |
| 3. | Work mail | Scheduled meetings |
| 4. | Personal mail | Personal mail |
| 5. | News | Internet |
| 6. | Lectures and scheduled meetings (tied) | News |
| 7. | Shopping | Social media |
| 8. | Unplanned meetings | Lectures |
| 9. | | Shopping |
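The rank order in table 8 follows directly from the means in table 7: sort the factors by mean distraction, highest first, and let factors with equal means share a rank. A minimal sketch of that dense ranking, using the table 7 means (the dictionary layout and function name are illustrative):

```python
# Mean distraction scores from table 7: (age <= 25, age > 25).
MEANS = {
    "Internet": (4.97, 3.22),
    "Lectures": (3.6, 1.92),
    "News": (3.71, 3.08),
    "Personal mail": (3.86, 3.36),
    "Scheduled meetings": (3.6, 3.46),
    "Shopping": (3.03, 1.82),
    "Social media": (5.4, 2.9),
    "Unplanned meetings": (2.8, 3.58),
    "Work mail": (4.06, 4.64),
}


def rank_order(means, column):
    """Dense ranking, highest mean first; factors with equal means share a rank."""
    ordered = sorted(means, key=lambda f: means[f][column], reverse=True)
    ranks, rank, previous = {}, 0, None
    for factor in ordered:
        value = means[factor][column]
        if value != previous:  # a new, lower mean starts the next rank
            rank += 1
            previous = value
        ranks.setdefault(rank, []).append(factor)
    return ranks


for label, column in (("Students", 0), ("Workers", 1)):
    print(label, rank_order(MEANS, column))
```

Dense ranking explains why the students' column ends at rank 8: lectures and scheduled meetings share rank 6, so the nine factors occupy only eight ranks.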

The figures in this section visualize these results.

To summarize, the two age groups indeed differ significantly in how often they are distracted by several of the factors, which confirms that it is a good idea to split up the groups and investigate them separately. Both the graphs and the statistical analysis show that students are distracted more often than workers by most factors. For these students, the two largest distraction factors are, by far, social media and the internet: on average, social media distracts them between daily and two or three times a day (mean 5.4), and the internet about daily (mean 4.97).

Money

People gave quite different answers to the question of how much they were willing to pay for Coco. The answers fall into three main categories: paying per period, for example a monthly or yearly fee; paying once, as a one-time purchase; and other answers.

Payments per period

Around 17 people said that they would like to pay monthly or yearly; however, the amount they were willing to pay varied widely. Most of these people wanted to pay somewhere between 5 and 20 euros per month.

One-time buy

The amount that people were willing to pay for a one-time purchase also varied widely, from 2 to 500 euros. The most common answers were 10 and 50 euros, and the average price that people were willing to pay was approximately 68 euros.

Other

Seventeen people mentioned that they did not want to pay for the software, and respondents also put forward some additional ideas. For example, 9 people mentioned that 'work should pay for this'. Some people said that they wanted a free trial first to see the functionalities before paying; if the results were good, they would be willing to pay around 10 euros per month or around 100 euros per year. Moreover, a few people commented that their answer depended on the type of license: a one-time purchase, or a monthly or yearly subscription. This ambiguity in the question could have been avoided on our side.

General conclusion of analysis 1

From the findings about interest in Coco, it is not immediately clear which group of people would profit most from a virtual productivity agent. The results do, however, suggest that younger people who (need to) work on their laptop for more than two hours a day are somewhat more open to the idea of a virtual agent as described in the study.

As indicated in the second part of the analysis, 'Corona impact on productivity and health', it might be best to focus on people aged 25 or younger. Their productivity, concentration, mental health, and physical health have decreased the most since the start of COVID-19. Coco would be of most help to these users if it supported them during their work with these aspects in mind.

Our research question “Which tasks are most important for a computer companion to possess?” is answered with the three best options for the user group of people aged 25 or younger. Coco should be able to help avoid distractions by blocking applications, help increase productivity via the user's favorite concentration/focus application, and help schedule tasks in the user's preferred scheduling application. Moreover, some tasks are not helpful at all, for instance sending auditory reminders.

Given the privacy concerns, and judging by the percentages of people giving permission for each feature, it seems logical to focus only on the use of the agenda, monitoring, and popups. On the other hand, it does not seem advisable to require access to the user's camera and microphone. Since people have mixed feelings about access to specific user data, this could be included as an optional feature that people can enable in order to receive personalized feedback. Coming back to the research question “Will there be any features for the computer companion which are not possible to implement because of privacy issues?”: there are indeed some features that cannot be implemented because of the privacy concerns of potential users. These include the use of the microphone and camera, and perhaps also the use of personal user data.
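The feature selection above combines two criteria from the privacy analysis: a mean comfort score above 2 (table 4) and a majority answering “yes” to the permission question (table 5). The report does not state a formal rule, so the sketch below assumes the two criteria are simply combined with a logical AND and applies it to the values for the <= 25 group. The function name and threshold parameters are illustrative.

```python
# Values for the <= 25 group: mean comfort (table 4) and % answering "yes" (table 5).
COMFORT = {"Agenda": 2.33, "Camera": 1.38, "Microphone": 1.44,
           "Monitoring": 2.24, "Popups": 2.53, "User data": 1.87}
PERMISSION = {"Agenda": 66.67, "Camera": 15.79, "Microphone": 26.32,
              "Monitoring": 64.91, "Popups": 78.95, "User data": 45.61}


def classify(comfort, permission, comfort_min=2.0, majority=50.0):
    """Split features into (enabled by default, not enabled by default).

    Assumed rule: a feature is enabled only if its mean comfort score is above
    comfort_min AND more than `majority` percent would give permission.
    """
    enabled, not_enabled = [], []
    for feature in comfort:
        if comfort[feature] > comfort_min and permission[feature] > majority:
            enabled.append(feature)
        else:
            not_enabled.append(feature)
    return enabled, not_enabled


print(classify(COMFORT, PERMISSION))
```

This rule only separates the default features from the rest; whether a remaining feature such as user data becomes an opt-in option or is dropped entirely is the qualitative judgment made in the text.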

Concerning the appearance of Coco, the rankings for our user group (<= 25 years), from most favorable to least favorable, are: robot, animal, and human appearance. Most of the literature we found focuses on a human appearance, but this is the least favorable option according to our user group. We would therefore recommend looking more closely at the differences between a human appearance and a robot appearance, the most favorable option.