Embedded Motion Control 2014 Group 1


[[File:schematic_EMC.jpg|450px]]

Revision as of 10:25, 12 June 2014

Group Info

| Name: | Student id: | Email: |

| Group members | | |

| Sander Hoen | 0609581 | s.j.l.hoen@student.tue.nl |

| Marc Meijs | 0761519 | m.j.meijs@student.tue.nl |

| Wouter van Buul | 0675642 | w.b.v.buul@student.tue.nl |

| Richard Treuren | 0714998 | h.a.treuren@student.tue.nl |

| Joep van Putten | 0588616 | b.j.c.v.putten@student.tue.nl |

| Tutor | | |

| Sjoerd van den Dries | n/a | s.v.d.dries@tue.nl |

Meetings

Weekly meetings are planned during the course. Every Wednesday a standard meeting is held to discuss progress within the group and with the tutor. Presentations from these weekly meetings can be found via the presentation links below, and important meeting decisions via the meeting links below. In addition to the standard weekly meetings, evening meetings are planned to work on the software design as a group. For some meetings, the minutes can be viewed by clicking on the hyperlinks below.

- Meeting - 2014-05-02

- Meeting - 2014-05-12

- Meeting - 2014-05-14

- Meeting - 2014-05-15

- Meeting - 2014-05-16

- Meeting - 2014-05-21

- Meeting - 2014-05-23

- Meeting - 2014-05-27

- Meeting - 2014-05-28

- Meeting - 2014-06-02

- Meeting - 2014-06-04

- Meeting - 2014-06-05

- Meeting - 2014-06-06

- Meeting - 2014-06-11

- Meeting - 2014-06-13

- File:Presentatie week 3.pdf

- File:Presentation week 4.pdf

Time Table

Fill in the time you spend on this course in the Dropbox file "Time survey 4k450.xlsx".

Planning

Week 1 (2014-04-25 - 2014-05-02)

- Installing Ubuntu 12.04

- Installing ROS

- Following tutorials on C++ and ROS.

- Set up SVN

- Plan a strategy for the corridor challenge

Week 2 (2014-05-03 - 2014-05-09)

- Finishing tutorials

- Interpret laser sensor

- Positioning of PICO

Week 3 (2014-05-10 - 2014-05-16)

- Starting on software components

- Writing dedicated corridor challenge software

- Division of the software blocks:

- Line detection - Sander

- Position (relative distance) - Richard

- Drive - Marc

- Situation - Wouter

- State generator - Joep

- File:Presentatie week 3.pdf

Week 4 (2014-05-17 - 2014-05-25)

- Finalize software structure maze competition

- Start writing software for maze competition

- File:Presentatie week 4.pdf

Week 5 (2014-05-26 - 2014-06-01)

- Start with arrow detection

- Additional tasks

- Arrow detection - Wouter

- Update wiki - Sander

- Increase robustness of line detection - Joep

- Position (relative distance) - Richard

- Link the different nodes - Marc

Week 6 (2014-06-02 - 2014-06-08)

- Make arrow detection more robust

- Implement line detection

- Determine bottlenecks for maze competition

- Make system more robust

Week 7 (2014-06-09 - 2014-06-15)

- Implement arrow detection

- Start with more elaborate situation recognition for dead-end detection

- Test system

Week 8 (2014-06-16 - 2014-06-22)

- Improve robustness of the system for the maze competition

Corridor challenge

For the corridor challenge, a straightforward approach is used which requires minimal software effort to exit the corridor successfully.

Approach

For PICO the following approach is used to solve the corridor challenge.

- Initialize PICO

- Drive forward with use of feedback control

- Position [math]\displaystyle{ x }[/math] and angle [math]\displaystyle{ \theta }[/math] are controlled

- A fixed speed [math]\displaystyle{ v }[/math] is used

- Safety is included by defining a minimum distance from PICO to the wall: PICO stops if this distance becomes [math]\displaystyle{ \lt 0.1 }[/math] m

- The distances to the left and right wall are calculated. If one of these distances becomes too small, PICO corrects its position to stay in the middle of the corridor

- If no wall is detected feedforward control is used

- Drive for [math]\displaystyle{ T_e }[/math] [s] after no wall is detected

- After [math]\displaystyle{ T_e }[/math] [s] PICO rotates +/-90 degrees using the odometry

- +/- depends on which side the exit is detected

- Drive forward with use of feedback control

- Stop after [math]\displaystyle{ T_f }[/math] [s] when no walls are detected
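The feedback part of this approach can be summarised as a single control law. The snippet below is a minimal sketch, not the code that ran on PICO: the structs, gains, speed and angular-velocity limit are illustrative assumptions, and the lateral position is corrected through the angular velocity (as for a differential-drive base). Only the 0.1 m safety distance and the fallback to driving straight when a wall disappears come from the list above.

```cpp
#include <algorithm>
#include <optional>

// Inputs of the corridor controller; an empty optional means "no wall seen".
struct CorridorInput {
    std::optional<double> dist_left;   // distance to the left wall [m]
    std::optional<double> dist_right;  // distance to the right wall [m]
    double dist_front;                 // distance to the nearest obstacle ahead [m]
    double theta;                      // heading w.r.t. the corridor axis [rad], CCW positive
};

struct Command {
    double v;      // forward velocity [m/s]
    double omega;  // angular velocity [rad/s], CCW positive
};

constexpr double kSafetyDistance = 0.1;  // stop distance from the list above [m]
constexpr double kSpeed = 0.2;           // fixed speed v [m/s] (assumed value)
constexpr double kGainOffset = 1.5;      // gain on the lateral offset (assumed)
constexpr double kGainTheta = 2.0;       // gain on the heading error (assumed)
constexpr double kMaxOmega = 0.5;        // angular velocity limit [rad/s] (assumed)

Command corridorControl(const CorridorInput& in) {
    // Safety: stop when anything is closer than the safety distance.
    double closest = in.dist_front;
    if (in.dist_left) closest = std::min(closest, *in.dist_left);
    if (in.dist_right) closest = std::min(closest, *in.dist_right);
    if (closest < kSafetyDistance) return {0.0, 0.0};

    // Feedforward: a wall is missing (e.g. at the exit), so drive straight on.
    if (!in.dist_left || !in.dist_right) return {kSpeed, 0.0};

    // Positive offset: PICO is right of the centre line, so steer left (CCW).
    const double offset = 0.5 * (*in.dist_left - *in.dist_right);
    const double omega = kGainOffset * offset - kGainTheta * in.theta;
    return {kSpeed, std::clamp(omega, -kMaxOmega, kMaxOmega)};
}
```

The heading term and the offset term act together: a robot that is off-centre but already turning back towards the centre line receives a smaller correction, which damps oscillation between the walls.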

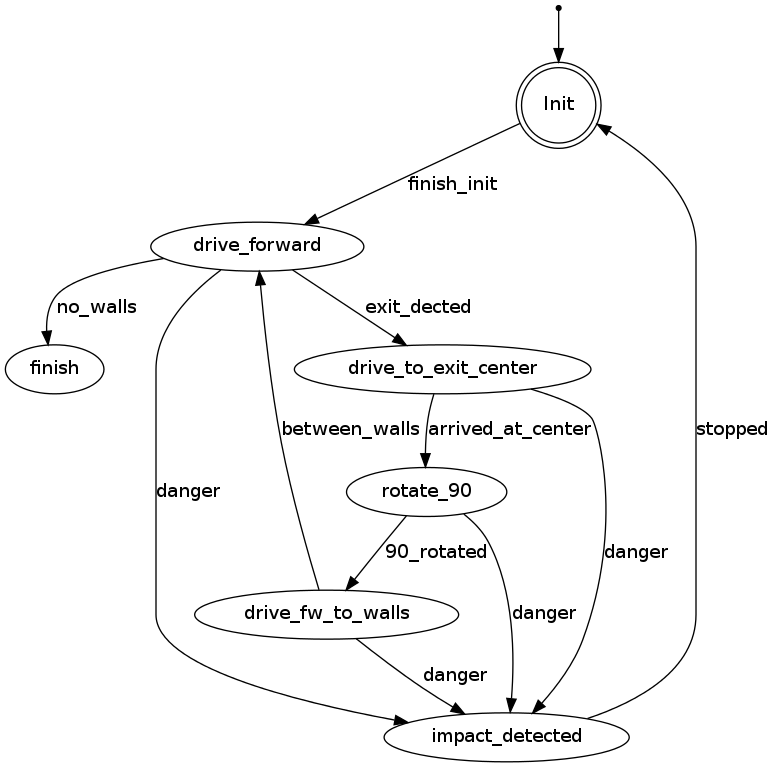

States PICO corridor challenge

The states of PICO for the corridor challenge are visualized in the following figure:

Result

First place!!

Maze challenge

Maze solving algorithm: the wall follower

The choice for this algorithm is based on the fact that it is simple and easy to program, but nevertheless effective.

Working principle:

This algorithm follows passages and, whenever a junction is reached, always turns right (or left). This is equivalent to a human solving a maze by putting their hand on the right (or left) wall and keeping it there while walking through.
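The right-hand variant of this rule reduces to a fixed priority order over the open directions at each decision point. A minimal sketch, where the Openings struct and Action enum are hypothetical names rather than part of the group's code:

```cpp
// Hypothetical actions and free directions at a decision point.
enum class Action { TurnRight, GoStraight, TurnLeft, TurnAround };

struct Openings {
    bool right;  // an exit is free on the right
    bool front;  // the way ahead is free
    bool left;   // an exit is free on the left
};

// Keep the "hand" on the right wall: prefer right, then straight, then left,
// and turn around only in a dead end.
Action wallFollowerRight(const Openings& o) {
    if (o.right) return Action::TurnRight;
    if (o.front) return Action::GoStraight;
    if (o.left) return Action::TurnLeft;
    return Action::TurnAround;
}
```

Swapping the first and third checks gives the left-hand variant.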

Examples

Examples of a maze solved by the Wall follower algorithm are shown below.

Software architecture

The chosen software architecture for the maze competition is shown in the figure below. Each block represents a process that sends its output to another process. The architecture is introduced briefly here; the individual processes are elaborated on further down this page.

Lines are generated from the incoming laser data of Pico using a Hough transform. Using the laser data and the line data, situations are recognized; in this architecture, examples of situations are exit left, exit right and dead end. This information is sent to the state machine. In addition to the situation recognition, information about a detected arrow is sent to the state machine, which simply indicates whether there is an arrow to the left or to the right. With this information, the state machine determines what kind of behavior is needed in the given situation. For example, the robot may need to turn 90 degrees to the right because a T-junction is recognized. The state machine then sends the command to rotate the robot at a given speed until the odometry shows that the robot has turned 90 degrees.

The required behavior of the robot is sent to the drive process, which sends the velocities to Pico. The drive block also makes sure that the robot drives in the middle of the maze corridors and has an orientation perpendicular to the driving direction. This is done using the laser and line input. Depending on the state of the robot, this control is switched on or off.
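The "rotate until the odometry shows 90 degrees" step needs some care because the yaw angle wraps around at ±π. A minimal sketch of such a check, assuming yaw in radians (as published on /pico/yaw), counter-clockwise positive, and a hypothetical tolerance. It is only valid for targets smaller than π in magnitude, so a 180-degree turn would be done as two 90-degree turns.

```cpp
#include <cmath>

// Wrap an angle to the interval (-pi, pi].
double wrapAngle(double a) {
    const double pi = std::acos(-1.0);
    while (a > pi) a -= 2.0 * pi;
    while (a <= -pi) a += 2.0 * pi;
    return a;
}

// True once the robot has rotated by the signed target angle since the turn
// started (positive = counter-clockwise, e.g. -pi/2 for a right turn).
// tol is a hypothetical tolerance in radians; requires |target| < pi.
bool turnComplete(double yaw_start, double yaw_now, double target, double tol = 0.02) {
    const double turned = wrapAngle(yaw_now - yaw_start);
    return (target >= 0.0) ? turned >= target - tol : turned <= target + tol;
}
```

Working with the wrapped difference instead of the raw yaw values means a turn that starts near +π and ends near -π is still measured as 90 degrees.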

I/O structure

The different components visualized in the software architecture scheme are elaborated on here.

| Node | Subscribes to topic | Input | Publishes on topic | Output | Description |

| Line detection | /pico/laser | Laser scan data | /pico/lines | Array of detected lines. Each line consists of a start and an end point (x1,y1),(x2,y2) | Transformation of raw data into lines using the Hough transform. Each element of lines is a line consisting of two points with an x-, y- and z-coordinate in a Cartesian coordinate system centred on Pico, i.e. Pico is at (0,0). The x- and y-axes are in meters; the x-axis points to the front/back of Pico and the y-axis to the left/right. |

| Arrow detection | /pico/camera | Camera images | /pico/vision | Arrow detected to the left or the right | Detection of arrows pointing to the left or right. |

| Odometry | /pico/odom | Quaternion | /pico/yaw | float with yaw angle | Transforms the quaternion into roll, pitch and yaw angles. Only the yaw angle is sent, because roll and pitch are zero. |

| Distance | /pico/line_detection | Line coordinates | /pico/dist | (y_left, y_right, x, theta_left, theta_right), also known as the 'relative position' | Determines the distance to the left, right and front walls, as well as the angles with respect to the left and right walls. |

| Drive | /pico/state, /pico/dist | (y_left, y_right, dy, x, dx, theta_left, theta_right, dtheta) | /pico/cmd_vel | Pico moving | Moves Pico in the desired direction. |

| Situation | /pico/line_detection, /pico/laser, /pico/vision | Line data, vision interpretation and possibly laser data | /pico/situation | The situation Pico is in, for example corridor to the right, T-junction, etc. | Situation parameters. |

| State generator | /pico/situation, /pico/yaw | Situation and yaw angle of Pico | /pico/state | dx, dy, dtheta | Based on the situation, Pico goes through different states and continues to drive. Every state defines an action that Pico needs to perform. |
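The Odometry node's conversion from quaternion to yaw can be sketched with the standard ZYX Euler formula. This is an illustrative sketch rather than the node's actual code (ROS 1's tf library also offers a getYaw helper for the same purpose). With roll and pitch zero, x = y = 0 and the expression reduces to atan2(2wz, w² − z²) for a unit quaternion.

```cpp
#include <cmath>

// Yaw (rotation about the z-axis) of a unit quaternion (x, y, z, w), in radians.
double quaternionToYaw(double x, double y, double z, double w) {
    const double siny_cosp = 2.0 * (w * z + x * y);
    const double cosy_cosp = 1.0 - 2.0 * (y * y + z * z);
    return std::atan2(siny_cosp, cosy_cosp);
}
```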

PICO messages

The different nodes communicate with each other by sending messages. Each node sends a set of messages using a publisher. The messages that are sent between the nodes are defined in this section.

Lines

The line message includes two points, p1=(x1,y1) and p2=(x2,y2), which are defined by the geometry messages package. A point consists of (x,y,z) coordinates; for points p1 and p2 the z-coordinate is set to zero.

Points p1 and p2 are connected to represent a line, and each line is stored in an array:

Line[] lines

The detected lines are visualized as a Marker in rviz, by using the visualization_msgs package.

Situation

Basically, there are eight main situations, which are shown in the figure below. If PICO does not detect any corner points, it is located in a corridor and can go straight on. If PICO detects any corner points, it might need to turn; whether it needs to turn depends on the situation. The different types of situations are:

- T-junction exit left/right

- T-junction exit front/right

- T-junction exit front/left

- Corridor

- Corridor right

- Corridor left

- Intersection

- Dead end

Drive

The drive message sends commands to the drive node, which actually sends the velocity commands to Pico. The message contains two float32 fields: one for the angular velocity with which Pico rotates around its axis and one for the forward velocity.

float32 speed_angle

float32 speed_linear

Vision

The vision message contains two booleans that indicate whether an arrow pointing to the left or an arrow pointing to the right is detected.

bool arrow_left

bool arrow_right

Software components

Line detection

Input: "/pico/laser"

Output: "/pico/lines"

Principle

Transformation of raw laser data into lines representing the walls of a corridor. This transformation is done using the Hough transform.

Approach

- Convert laser data to points (x,y,z)

- Transform from sensor_msgs::LaserScan to pcl::PointCloud<T>

- Convert PointCloud to 2D matrix

- Overlay grid, color each point white in the 2D image (cv::Mat)

- Apply Hough transform

- Transform lines in image coordinates back to world coordinates

- Result: std::vector<cv::Vec4f>

- Filter detected lines

- Increases robustness by neglecting small gaps within the corridor
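The core of these steps, converting the polar scan to Cartesian points and letting those points vote for lines, can be illustrated without ROS, PCL or OpenCV. The sketch below is not the group's implementation: instead of rasterising the points into a cv::Mat image and calling OpenCV, each point votes directly in a (rho, theta) accumulator, and only the single strongest line is returned. The resolution parameters are assumptions; the frame follows the page's convention of x forward and y sideways.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Point2D { double x, y; };

// Convert a laser scan (beam i at angle angle_min + i * angle_increment) to
// Cartesian points in PICO's frame, skipping invalid or out-of-range beams.
std::vector<Point2D> scanToPoints(const std::vector<float>& ranges, double angle_min,
                                  double angle_increment, double range_max) {
    std::vector<Point2D> pts;
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double r = ranges[i];
        if (!std::isfinite(r) || r <= 0.0 || r > range_max) continue;
        const double a = angle_min + static_cast<double>(i) * angle_increment;
        pts.push_back({r * std::cos(a), r * std::sin(a)});
    }
    return pts;
}

// Bare-bones Hough transform: every point votes for all lines
// rho = x*cos(theta) + y*sin(theta) through it (theta in [0, pi));
// the accumulator cell with the most votes is returned as (rho, theta).
std::pair<double, double> strongestLine(const std::vector<Point2D>& pts, double rho_max,
                                        double rho_res, int n_theta) {
    const double pi = std::acos(-1.0);
    const int n_rho = static_cast<int>(std::ceil(2.0 * rho_max / rho_res)) + 1;
    std::vector<int> acc(static_cast<std::size_t>(n_rho) * n_theta, 0);
    for (const Point2D& p : pts) {
        for (int t = 0; t < n_theta; ++t) {
            const double theta = pi * t / n_theta;
            const double rho = p.x * std::cos(theta) + p.y * std::sin(theta);
            const int r = static_cast<int>(std::lround((rho + rho_max) / rho_res));
            if (r >= 0 && r < n_rho) ++acc[static_cast<std::size_t>(r) * n_theta + t];
        }
    }
    std::size_t best = 0;
    for (std::size_t i = 1; i < acc.size(); ++i)
        if (acc[i] > acc[best]) best = i;
    const int r = static_cast<int>(best / n_theta);
    const int t = static_cast<int>(best % n_theta);
    return {r * rho_res - rho_max, pi * t / n_theta};
}
```

A wall parallel to the driving direction at y = 0.5 m shows up as theta = π/2 and rho = 0.5. A complete line detector would extract several peaks and convert each one into end points, matching the std::vector<cv::Vec4f> result listed above.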

Arrow detection

Inputs: "/pico/camera"

Outputs: Booleans 'left arrow' or 'right arrow' on topic "/pico/vision"

Principle

The goal of the arrow detection block is to detect arrows in the corridor using the Asus Xtion camera mounted on PICO. Three different approaches are considered to achieve this goal:

- Template matching: Template matching is a technique which tries to find the reference image in the current 'video image'. The technique slides the smaller reference image over the current image, and at every location a metric is calculated that represents how “good” or “bad” the match at that location is (i.e. how similar the patch is to that particular area of the source image). This was implemented using the OpenCV function matchTemplate, which results in a matrix of 'correlation' values from which it can be concluded where the template is matched best in the current image. See the following video:

One problem of this technique is that only one reference of a certain size is compared to the image. Because the apparent size of the arrow changes as PICO drives towards it, the arrow is only detected correctly if its size in the camera image is exactly the size of the reference arrow. To make this method work, many reference images of different sizes would be needed, which is not suitable.

- Feature detection: Another approach is to use algorithms which detect characteristic 'features' in both the reference and camera image and attempt to match these. Because these features are generally not scale or rotation dependent, this method does not run into the same problems as described for template matching. Therefore, this approach was investigated in the following way:

- The OpenCV library contains several algorithms for feature detection and matching. In general, some steps are followed to match a reference to an image:

- Detect features or 'keypoints'. For this several algorithms are available, notably the SIFT[1] and SURF[2] algorithms. Generally, these algorithms detect corners in images on various scales, which after some filtering results in detected points of interest or features.

- From the detected features, descriptors are calculated, which are vectors describing the features.

- The features of the reference image are matched to the features of the camera image by use of a nearest-neighbor search algorithm, i.e. the FLANN algorithm[3]. This results in a vector of points in the reference image and a vector of the respective matched points in the camera image. If many such points exist, the reference image can be said to be detected.

- Finally, using the coordinates of the points in both images, a transformation or homography from the plane of the reference image to that of the camera image can be inferred. This transformation tells us how the orientation has changed between the reference image and the image seen by the camera.

- Implementation of the previously described steps resulted in detection of the arrow in a sample camera image. However, it was found that the orientation of the arrow could not accurately be determined by means of the algorithms in the OpenCV library. Due to the line symmetry of the reference arrow, a rotation-independent detector would match equally many points from the 'top' of the arrow to the top and the bottom of the arrow in the camera image, as the 'bottom' in the camera image could just as well be the top of the arrow had it been flipped horizontally.

- One solution to the above problem could be to compare the vectors between the matched points in both images. Because it is only of interest whether the arrow is facing left or right, a simple algorithm could be defined which determines if many points which were located 'left' in the reference image are now located 'right', and vice versa. If this is the case, the orientation of the image has been reversed, allowing us to determine which way the arrow is pointing. However, this approach is probably quite laborious and a different method of detecting the arrow was found to also be suitable, which is why the solution described above was not pursued.


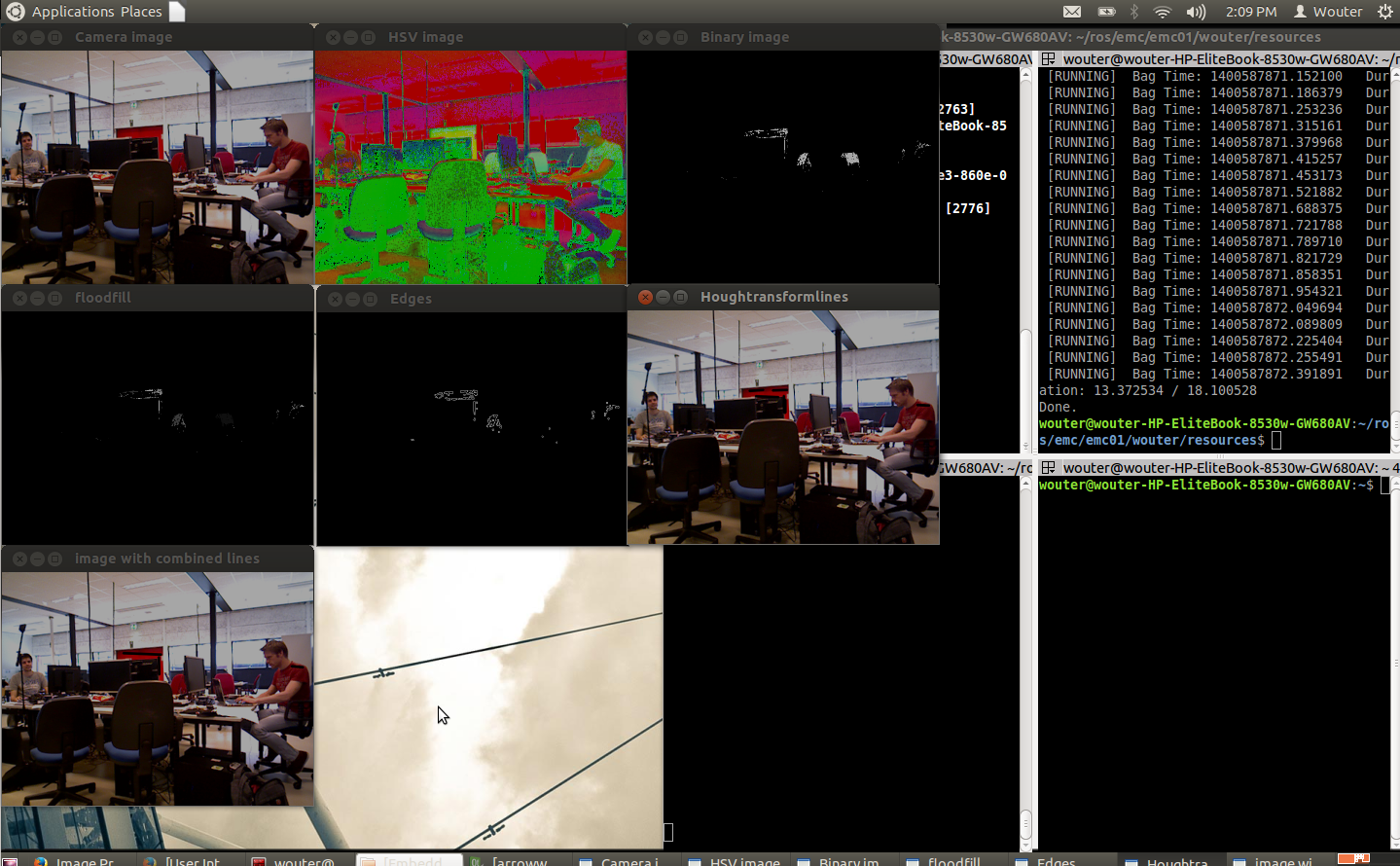

- Edge detection: By using the edge detection method, several steps are performed to recognize the edges of a red object within the camera image. Using those lines an eventual arrow can be detected. The steps are as follows:

- Receive the RGB camera image.

- Convert the RGB camera image to an HSV image.

- Convert the HSV image to a binary image: red pixels become white and all other pixels become black.

- Smooth the 'red' area using a flood-fill algorithm (8-connectivity is used).

- Apply edge detection: an edge is a 'jump' in intensity and can therefore be recognized. This is done using the Canny edge detection algorithm.

- Fit lines through the detected edges using a Hough transform.

- Use the CombineLines function to 'neaten' the lines coming out of the Hough transform; its documentation can be found under 'Line detection', because exactly the same code is used.

- By recognizing the slanted lines (of about 30 degrees) the arrow head can be recognized. And the direction of the arrow can be derived.
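The colour-segmentation step (RGB to HSV, then a binary red mask) can be sketched per pixel as follows. In OpenCV this would typically be done with cv::cvtColor and cv::inRange; the plain C++ version below only makes the logic explicit. The hue, saturation and value thresholds are illustrative assumptions, not the group's tuned values, and hue is expressed in degrees (OpenCV's 8-bit HSV images use a 0 to 180 hue range instead).

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RGB { std::uint8_t r, g, b; };

// Convert one RGB pixel to HSV: h in degrees [0, 360), s and v in [0, 1].
void rgbToHsv(const RGB& p, double& h, double& s, double& v) {
    const double r = p.r / 255.0, g = p.g / 255.0, b = p.b / 255.0;
    const double mx = std::max({r, g, b});
    const double mn = std::min({r, g, b});
    const double d = mx - mn;
    v = mx;
    s = (mx > 0.0) ? d / mx : 0.0;
    if (d == 0.0) { h = 0.0; return; }  // grey pixel: hue undefined
    if (mx == r)      h = 60.0 * std::fmod((g - b) / d, 6.0);
    else if (mx == g) h = 60.0 * ((b - r) / d + 2.0);
    else              h = 60.0 * ((r - g) / d + 4.0);
    if (h < 0.0) h += 360.0;
}

// Binary mask: 255 (white) for red pixels, 0 (black) otherwise. Red hue wraps
// around 0 degrees, so both ends of the hue circle are accepted.
// All thresholds are illustrative assumptions.
std::vector<std::uint8_t> redMask(const std::vector<RGB>& pixels) {
    std::vector<std::uint8_t> mask(pixels.size(), 0);
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        double h = 0.0, s = 0.0, v = 0.0;
        rgbToHsv(pixels[i], h, s, v);
        const bool red_hue = (h <= 15.0 || h >= 345.0);
        if (red_hue && s >= 0.5 && v >= 0.3) mask[i] = 255;
    }
    return mask;
}
```

Thresholding in HSV rather than RGB makes the mask less sensitive to lighting, because brightness changes mostly affect V while the hue of the red arrow stays roughly constant.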

All the steps can be seen in the following video:

It can be seen that the arrow is recognized quite well and often, but to ensure more robustness, the boolean 'arrow detected' is only set when the same arrow is recognized in 3 consecutive frames.
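The three-consecutive-frames rule amounts to a small debouncing filter on the per-frame detections. A minimal sketch, with a hypothetical Arrow enum and ArrowDebouncer class:

```cpp
// Per-frame detection result (hypothetical encoding).
enum class Arrow { None, Left, Right };

// Reports an arrow only after the same direction was detected in
// `required` consecutive frames (3 on this page).
class ArrowDebouncer {
public:
    explicit ArrowDebouncer(int required = 3) : required_(required) {}

    // Feed the raw detection of one frame; returns the confirmed arrow or None.
    Arrow update(Arrow frame) {
        if (frame != Arrow::None && frame == last_) ++count_;
        else count_ = (frame == Arrow::None) ? 0 : 1;
        last_ = frame;
        return (count_ >= required_) ? frame : Arrow::None;
    }

private:
    int required_;
    int count_ = 0;
    Arrow last_ = Arrow::None;
};
```

A single frame with a different or missing detection resets the counter, so one spurious frame can never trigger a turn on its own.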

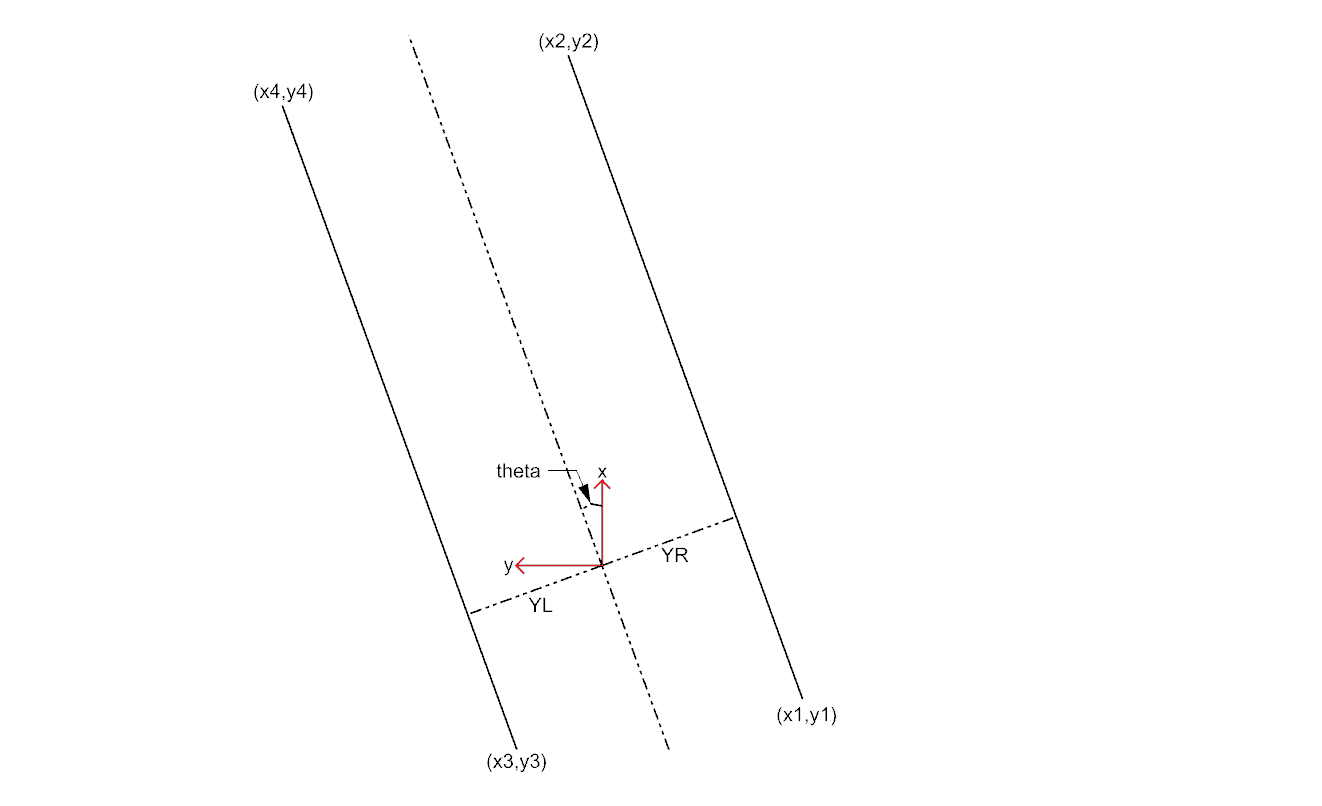

Relative distance

input topic: /pico/lines

function: Determines the distance to the left, right and front walls. Also determines the angle theta with respect to the corridor.

output: (Y_left, Y_right, X (forward), theta_left, theta_right)

output topic: /pico/dist

msg: dist.msg

float32 YR

float32 YL

float32 THR

float32 THL

float32 X

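The angle theta of a wall can be calculated with the following formula, where (x1,y1) and (x2,y2) are the end points of the detected line:

[math]\displaystyle{ \theta = \arctan\left(\frac{y_2-y_1}{x_2-x_1}\right) }[/math]

The position perpendicular to the line/wall is calculated with the following formulas, where (x1,y1),(x2,y2) are the end points of the right wall with angle θ1, and (x3,y3),(x4,y4) those of the left wall with angle θ2:

[math]\displaystyle{ X_r = \left(y_2 - \frac{y_2-y_1}{x_2-x_1}\,x_2\right)\cos(\theta_1) }[/math]

[math]\displaystyle{ X_l = \left(y_4 - \frac{y_4-y_3}{x_4-x_3}\,x_4\right)\cos(\theta_2) }[/math]

The term in brackets is the y-coordinate at which the wall crosses the line x = 0 through Pico; multiplying it by the cosine of the wall angle projects it onto the wall normal, which gives the perpendicular distance.

Theta is the average of the left and right angles, or only the left or right angle, depending on the situation.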
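The angle and perpendicular-distance computation for one wall can be written as a small function: the slope gives θ = atan(slope), and the distance is the intercept (y2 − slope · x2) multiplied by cos θ. This is a sketch with hypothetical names; it returns a signed distance (the sign tells on which side of PICO the wall lies) and assumes x2 ≠ x1, i.e. the line is not parallel to PICO's y-axis.

```cpp
#include <cmath>

struct WallEstimate {
    double theta;     // angle of the wall w.r.t. PICO's x-axis [rad]
    double distance;  // signed perpendicular distance from PICO to the wall [m]
};

// theta = atan((y2 - y1) / (x2 - x1));
// distance = (y2 - slope * x2) * cos(theta), i.e. the intercept at x = 0
// projected onto the wall normal. Requires x2 != x1.
WallEstimate wallFromLine(double x1, double y1, double x2, double y2) {
    const double slope = (y2 - y1) / (x2 - x1);
    const double theta = std::atan(slope);
    const double intercept = y2 - slope * x2;  // y where the line crosses x = 0
    return {theta, intercept * std::cos(theta)};
}
```

For a wall along y = x + 1 this gives cos(45°) ≈ 0.707 m, which equals the textbook point-to-line distance 1/√2.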

Drive

This node sends velocity commands to Pico based on input from the state generator node and the relative position node. The state generator node sends a velocity in x and theta based on the state it is in. The relative position node sends the distance and angle relative to the left and right walls, with Pico as the centre. When possible, this information is used for the orientation and positioning of Pico.

Situation

inputs: lines, vision, relative position

Two different approaches are taken into account: a simple approach, in which it is only detected whether the left, front and right sides of Pico are free of walls/obstacles, and a more elaborate approach, in which situations such as junctions, T-junctions, crossings and dead ends are detected.

Lines can be categorized in two types of lines:

Longitudinal lines: y-coordinates of begin and end point are similar

Lateral lines: x-coordinates of begin and end point are similar
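The two categories can be expressed as a simple test on the end points of each line. The tolerance and the extra Other category for short or diagonal segments are assumptions added for this sketch:

```cpp
#include <cmath>

enum class LineType { Longitudinal, Lateral, Other };

// Line segment from (x1, y1) to (x2, y2) in PICO's frame [m].
struct Segment { double x1, y1, x2, y2; };

// Longitudinal: start and end have similar y (the line runs along the corridor).
// Lateral: start and end have similar x (the line runs across the corridor).
// tol is an illustrative tolerance in meters.
LineType classify(const Segment& s, double tol = 0.1) {
    const bool similar_y = std::fabs(s.y2 - s.y1) < tol;
    const bool similar_x = std::fabs(s.x2 - s.x1) < tol;
    if (similar_y && !similar_x) return LineType::Longitudinal;
    if (similar_x && !similar_y) return LineType::Lateral;
    return LineType::Other;  // too short or diagonal: ignored
}
```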

Situations to be recognized:

- In between two walls

No obstacles in front and no lateral line detected within X m.

2 longitudinal lines are detected.

- Junction

3 lines are detected, of which two are longitudinal and one is lateral (within X m).

Detect the direction of the junction by comparing the x-values of the longitudinal lines with the x-value of the lateral line.

Left junction: when the x-value of the left line (the line with the smallest y-values) is at least the 'minimum corridor width' smaller than the x-value of the lateral line, a gap on the left side is recognized.

Right junction: when the x-value of the right line (the line with the largest y-values) is at least the 'minimum corridor width' smaller than the x-value of the lateral line, a gap on the right side is recognized.

- Dead end

3 lines are detected, of which two are longitudinal and one is lateral (within X m).

Detect a dead end by comparing the x-values of the longitudinal lines with the x-value of the lateral line. When the x-values of both longitudinal lines are similar to that of the lateral line, a dead end is recognized.

- T-junction: 3 situations, named T-right, T-left and T-right-left.

T-right: 3 longitudinal lines and 1 lateral line on the right side of Pico are detected.

T-left: 3 longitudinal lines and 1 lateral line on the left side of Pico are detected.

T-right-left: 2 longitudinal lines and 4 lateral lines are detected.

- X junction

4 longitudinal and 4 lateral lines are detected
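The junction and dead-end rules above compare the x-values of the two longitudinal lines with the lateral line in front. A minimal sketch, assuming the "x-value" of a longitudinal line means the x-coordinate of its far end point; the function and enum names are hypothetical:

```cpp
// Hypothetical situation labels for the front of a corridor segment.
enum class Situation { DeadEnd, JunctionLeft, JunctionRight, JunctionLeftRight };

// x_left_end / x_right_end: far-end x of the left and right longitudinal
// lines [m]; x_lateral: x of the lateral line in front [m].
Situation classifyFront(double x_left_end, double x_right_end, double x_lateral,
                        double min_corridor_width) {
    // A side wall that ends at least one corridor width before the front
    // wall leaves a gap on that side.
    const bool gap_left = x_left_end < x_lateral - min_corridor_width;
    const bool gap_right = x_right_end < x_lateral - min_corridor_width;
    if (gap_left && gap_right) return Situation::JunctionLeftRight;
    if (gap_left) return Situation::JunctionLeft;
    if (gap_right) return Situation::JunctionRight;
    return Situation::DeadEnd;  // both side walls reach the front wall
}
```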

State generator

input: Situation, relative position

input topic: /pico/sit

Principle

To successfully navigate a maze, PICO must make decisions based on the environment it perceives. Because of the multitude of situations it may encounter and the desire to accurately define its behavior in those different situations, the rules for making the correct decisions may quickly become complex and elaborate. One way to structure its decision making is by defining it as a Finite State Machine (FSM). In an FSM, a set of states is defined in which specific behavior is described. Events may be triggered by, for instance, changes in the environment, and may cause a transition to another state with different behavior. By accurately specifying the states, the transitions between states and the behavior in each state, complex behavior can be described in a well-structured way.
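A minimal table-driven FSM illustrates the idea: a transition table maps (current state, event) to the next state, and events without an entry for the current state are ignored. The states and events below are hypothetical examples loosely based on the maze behaviour described on this page, not the group's actual state generator:

```cpp
#include <map>
#include <utility>

// Hypothetical states and events for illustration only.
enum class State { DriveStraight, TurnLeft, TurnRight, TurnAround, Stop };
enum class Event { ExitLeft, ExitRight, DeadEnd, TurnDone, Finished };

class StateMachine {
public:
    StateMachine() {
        add(State::DriveStraight, Event::ExitRight, State::TurnRight);
        add(State::DriveStraight, Event::ExitLeft, State::TurnLeft);
        add(State::DriveStraight, Event::DeadEnd, State::TurnAround);
        add(State::DriveStraight, Event::Finished, State::Stop);
        add(State::TurnLeft, Event::TurnDone, State::DriveStraight);
        add(State::TurnRight, Event::TurnDone, State::DriveStraight);
        add(State::TurnAround, Event::TurnDone, State::DriveStraight);
    }

    State state() const { return state_; }

    // Apply an event; events without a transition from the current state are ignored.
    State handle(Event e) {
        auto it = table_.find(std::make_pair(state_, e));
        if (it != table_.end()) state_ = it->second;
        return state_;
    }

private:
    void add(State from, Event e, State to) { table_[std::make_pair(from, e)] = to; }

    State state_ = State::DriveStraight;
    std::map<std::pair<State, Event>, State> table_;
};
```

In such a setup, a TurnDone event would come from the odometry check and the exit and dead-end events from the situation node; because events that do not apply in the current state are ignored, a new exit detected halfway through a turn cannot interrupt it.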

Approach

- SMACH http://wiki.ros.org/smach

- decision_making FSM http://wiki.ros.org/smach

- Own simple framework

- Object-oriented, can be re-used and used as a pseudo-hierarchical FSM

- Simple interface; events triggered from any other node

Videos

Simulation

| Corridor challenge | Line detection | Maze |

| | | TBD |

Real-time

| Corridor challenge | Maze |

| | TBD |

References

- [1] Introduction to the SIFT algorithm in OpenCV, http://docs.opencv.org/trunk/doc/py_tutorials/py_feature2d/py_sift_intro/py_sift_intro.html

- [2] Introduction to the SURF algorithm in OpenCV, http://docs.opencv.org/trunk/doc/py_tutorials/py_feature2d/py_surf_intro/py_surf_intro.html

- [3] Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration, http://www.cs.ubc.ca/~lowe/papers/09muja.pdf