Mobile Robot Control 2020 Group 3

Revision as of 17:19, 17 June 2020

Team members:

M.N. de Boer (Martijn)

G.J.L. Creugers (Gijs)

P. Leonavicius (Pijus)

S. Narla (Shashank)

A.L. Nooren (Anna Lisa)

M.K. Salahuddin (Mohamed Kaleemuddin)

Design Document:

Requirements:

The following requirements regarding task performance, safety and software should be satisfied by the simulated PICO robot:

- Task performance

- The robot must be able to recognize target cabinets

- The robot is capable of planning a path and is able to adapt to unexpected circumstances, for instance a closed door.

- PICO can rotate in place in order to reposition itself in front of a cabinet.

- PICO must be able to announce the completion of the current objective.

- The robot should not be inactive for more than 25 seconds.

- The robot has to be able to detect static and dynamic objects and represent them in the world model.

- Safety

- The robot avoids bumping into walls and doors

- The robot avoids collisions with static and dynamic obstacles

- PICO must obey the limits on translation and rotation velocity

- PICO should maintain a stopping distance of 5 cm from obstacles.

- Software

- The software is started by a single executable

- The software can be easily updated.

- User interaction should be minimal and user-friendly.

Functions:

Input data processing

- Laser range finder interpretation

- Inputs: distance and angle from LaserData. Interprets the data generated by the laser range finder using a 2D SLAM algorithm.

- Odometer interpretation

- Inputs: OdometryData. Calculates the speed of the mobile robot from successive position values and relays the data to the SLAM algorithm.

- Sensor Fusion

- Information combined from multiple sensors is subject to uncertainty. This module provides a reliable information flow by correlating the data from the different sensors.

- Vector map data interpretation

- A function that structures the data obtained from the provided map of the testing area, to be used as input for the position estimation and path planning functions.
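The odometry interpretation step can be illustrated with a finite-difference speed estimate. This is a minimal sketch, not the team's code: the sample fields `x`, `y`, `a` (heading) and `t` (time in seconds) are an assumption modeled on a pose-plus-timestamp odometry message, and `OdomSample`, `wrap_angle` and `estimate_velocity` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class OdomSample:
    """Hypothetical odometry sample: pose (x, y, a) in the odometry frame, time t in seconds."""
    x: float
    y: float
    a: float
    t: float


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def estimate_velocity(prev: OdomSample, curr: OdomSample) -> tuple[float, float, float]:
    """Finite-difference velocity (vx, vy, omega) in the robot frame of the previous sample."""
    dt = curr.t - prev.t
    if dt <= 0.0:
        raise ValueError("samples must be strictly increasing in time")
    dx = curr.x - prev.x
    dy = curr.y - prev.y
    # Rotate the odometry-frame displacement into the robot frame at the previous pose
    cos_a, sin_a = math.cos(prev.a), math.sin(prev.a)
    dx_robot = cos_a * dx + sin_a * dy
    dy_robot = -sin_a * dx + cos_a * dy
    # Wrap the heading change so a jump from +pi to -pi is not read as a full turn
    da = wrap_angle(curr.a - prev.a)
    return dx_robot / dt, dy_robot / dt, da / dt
```

Wrapping the heading difference matters because an odometry heading typically jumps from +π to −π when the robot turns past that boundary. Since raw encoder data is noisy, such estimates would still need filtering before use.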

Mapping world model

- Surroundings detection:

- Compares the expected surroundings based on the vector map with the output of the LRF interpretation function.

- Obstacle recognition:

- Given the detected surroundings, the robot has to decide whether they are known walls or unknown obstacles and mark them accordingly.

- Position estimation:

- Estimates the position of the robot by comparing the expected surroundings from the provided vector map with the outputs of the LRF and odometry interpretation functions.

Control

- Path planning:

- A function based on Dijkstra's algorithm, A* or dynamic programming. It uses data from the provided vector map and the outputs of the LRF and odometry interpretation functions, and constantly recalculates the optimal path based on detected obstacles or changes in the environment, such as closed doors.

- Movement functions:

- Describe the motion routines that are sent as inputs to the base controller of the robot.

- Final re-positioning:

- After the objective position is reached, the rotation of the robot is compared to the required value obtained from the vector map data.

- Signaling function:

- A print output marking the completion of an objective: called once the final state of the path planning algorithm is reached and the correct orientation of the robot is achieved.

- Safety function:

- Constantly running in the background (as a separate process/thread) in order to detect anomalous behavior of the mobile robot and interrupt the operation of the robot if necessary.
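The path planning function lists Dijkstra's algorithm, A* and dynamic programming as candidates. The sketch below shows A* only, under the hypothetical assumption that the vector map has been rasterized into a grid of free (0) and occupied (1) cells with unit cost per 4-connected move; `astar` is an illustrative name. Replanning after a closed door is detected then amounts to marking the door cells as occupied and calling the planner again.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def astar(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* on a 4-connected occupancy grid (0 = free, 1 = occupied). Returns a cell path or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance is admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: dict[Cell, Cell] = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + heuristic((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable
```

With a zero heuristic the same loop reduces to Dijkstra's algorithm, which is one reason the two are often interchangeable in practice.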

Specifications:

- Maintain a distance of 20 cm between PICO and walls; stop and reroute if the distance is less than 20 cm

- Maintain a distance of 80 cm from any moving object

- Move at a maximum speed of 0.3 m/s while tracking a moving object

- Move forward slightly if the robot has been stationary for 30 s

- Maximum speed of 0.5 m/s translational and 1.2 rad/s rotational

- Orient PICO at π rad with respect to the cabinet upon arrival

- Visit the cabinets in the required order
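The speed limits in these specifications can be enforced in one place before each command is sent to the base. A minimal sketch, assuming a holonomic command `(vx, vy, omega)`: the translational limit is applied to the magnitude of `(vx, vy)` so that the direction of motion is preserved. `limit_velocity` and the constant names are hypothetical.

```python
import math

# Limits taken from the specifications
MAX_TRANS = 0.5           # m/s, normal translational limit
MAX_TRANS_TRACKING = 0.3  # m/s, while tracking a moving object
MAX_ROT = 1.2             # rad/s, rotational limit


def limit_velocity(vx, vy, omega, tracking_moving_object=False):
    """Scale (vx, vy) down to the allowed speed, keeping its direction, and clamp omega."""
    v_max = MAX_TRANS_TRACKING if tracking_moving_object else MAX_TRANS
    speed = math.hypot(vx, vy)
    if speed > v_max:
        scale = v_max / speed
        vx, vy = vx * scale, vy * scale
    omega = max(-MAX_ROT, min(MAX_ROT, omega))
    return vx, vy, omega
```

Clamping `vx` and `vy` separately would be simpler, but a diagonal command could then exceed 0.5 m/s in total and its direction would change.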

Components:

Sensors

- Laser range Finder

- Provides a set of points in polar coordinates relative to the PICO robot.

- Wheel encoders

- Measure the distance translated and the angle rotated; the readings are, however, highly sensitive to noise and require filtering.

Actuators

- Holonomic base wheel

- Realize the required degrees of freedom (translation in x and y, and rotation about the z-axis) without any position-level constraints.

Computation unit

- Contains the software module that drives the robot and lets all other components communicate.

Interfaces:

Design Document download:

Escape room challenge

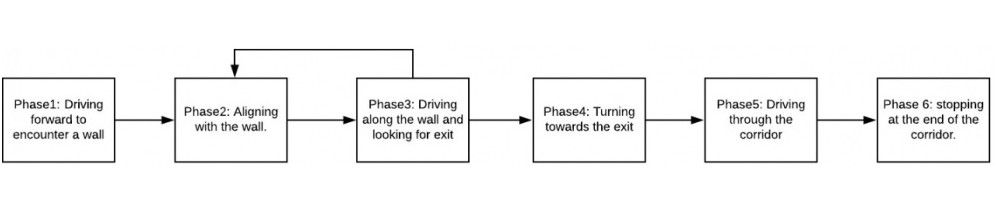

For the escape room challenge, a wall-following algorithm has been implemented. This algorithm consists of multiple phases, which are visualized in the schematic below.

Phase 1:

Since the robot does not know its initial position, it first has to find a wall before the actual wall following can start. It does this by driving in a straight line until a wall is detected. When a wall has been found, the software switches to phase 2.

Phase 2:

Now that a wall has been located, the next step is to align the robot parallel to the wall. The angular position is estimated by comparing a number of beams from the laser range finder. The robot rotates until it is aligned in the right direction, then it moves to phase 3.
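Estimating the wall angle from laser beams can be sketched as follows. Using exactly two beams is an assumption, as are the function names; the text only states that a number of beams are compared. Each beam (range `r`, angle `theta` in the robot frame, x pointing forward) is converted to a point, and the angle of the line through the two points is folded into [-pi/2, pi/2) because a wall has no preferred direction. Phase 2 would rotate the robot until this angle is close to zero.

```python
import math


def polar_to_xy(r, theta):
    """Convert one laser beam (range, angle) to a point in the robot frame."""
    return r * math.cos(theta), r * math.sin(theta)


def wall_angle(r1, theta1, r2, theta2):
    """Angle of the wall through two beam endpoints relative to the robot's x-axis, in [-pi/2, pi/2)."""
    x1, y1 = polar_to_xy(r1, theta1)
    x2, y2 = polar_to_xy(r2, theta2)
    angle = math.atan2(y2 - y1, x2 - x1)
    # A line has no direction: angle and angle + pi describe the same wall
    return (angle + math.pi / 2) % math.pi - math.pi / 2
```

Because of the folding, the result does not depend on which of the two beams is passed first.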

Phase 3:

In phase 3, the robot drives along the wall while looking for the exit. Two things can happen in this phase. First, the robot might sense that it is no longer aligned and switch back to phase 2; this can happen because of drift or because a corner has been found. Second, the exit may be found, in which case the robot switches to phase 4.

Phase 4:

Phase 4 takes care of aligning with the exit of the escape room: the robot tries to bring the gap in the wall to the center of its view. When this is done, it moves to phase 5.
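Spotting the exit and centering on it both rely on finding the gap in the wall in the laser scan. One simple way, assumed here and not taken from the team's code, is to look for the widest run of consecutive beams whose range exceeds a threshold and return the bearing of its middle; phase 4 would then rotate until that bearing is approximately zero. `find_gap`, `angle_min`, `angle_increment` and `threshold` are illustrative names.

```python
def find_gap(ranges, angle_min, angle_increment, threshold):
    """Bearing (rad) of the centre of the widest run of beams longer than threshold, or None."""
    best_start, best_len = -1, 0
    start = None
    # A short sentinel beam at the end closes a run that reaches the last beam
    for i, r in enumerate(list(ranges) + [0.0]):
        if r > threshold:
            if start is None:
                start = i  # a new run of long beams begins
        elif start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None
    if best_len == 0:
        return None  # no gap visible in this scan
    centre_index = best_start + (best_len - 1) / 2
    return angle_min + centre_index * angle_increment
```

A fixed threshold is the weakest part of this sketch; in a real room it would have to be chosen relative to the expected wall distance.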

Phase 5:

In this phase, the robot moves in a straight line through the corridor until it reaches the end. To make sure it does not collide with the walls because of misalignment or drift, a feedback loop keeps the robot correctly oriented.
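Such a feedback loop can be sketched as a proportional controller. This is an illustration under assumptions: it uses the holonomic base to correct the lateral offset directly with `vy`, corrects the heading with `omega`, and the gains `k_angle` and `k_lat` are hypothetical values that would need tuning. The input `wall_angle` is the corridor direction relative to the robot (positive to the left), and `d_left`, `d_right` are side distances measured by the laser.

```python
def corridor_command(wall_angle, d_left, d_right, v_forward=0.3,
                     k_angle=1.5, k_lat=1.0, max_rot=1.2, max_lat=0.3):
    """Return (vx, vy, omega) that keep the robot parallel to and centred in the corridor."""
    # Turn towards the corridor direction, clamped to the rotational speed limit
    omega = max(-max_rot, min(max_rot, k_angle * wall_angle))
    # Positive when the robot is closer to the right wall, so it shifts left (y points left)
    vy = max(-max_lat, min(max_lat, k_lat * (d_left - d_right) / 2.0))
    return v_forward, vy, omega
```

With the defaults, the combined translational speed stays below about 0.43 m/s, inside the 0.5 m/s limit.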

Phase 6:

When the robot reaches the end of the corridor, it switches to phase 6, which stops the movement to make sure it does not keep going to infinity and beyond.
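The six phases form a simple state machine. The sketch below captures only the transitions described above; the `Observation` flags are hypothetical summaries that the laser processing would have to compute every cycle, and checking for the exit before re-checking alignment in phase 3 is an assumption, since the text does not state a priority.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    FIND_WALL = auto()       # phase 1
    ALIGN_WALL = auto()      # phase 2
    FOLLOW_WALL = auto()     # phase 3
    ALIGN_EXIT = auto()      # phase 4
    DRIVE_CORRIDOR = auto()  # phase 5
    STOP = auto()            # phase 6


@dataclass
class Observation:
    """Hypothetical boolean summaries of the laser data for one control cycle."""
    wall_detected: bool = False
    aligned_with_wall: bool = False
    exit_found: bool = False
    centred_on_exit: bool = False
    corridor_end: bool = False


def next_phase(phase: Phase, obs: Observation) -> Phase:
    """Return the phase for the next control cycle."""
    if phase is Phase.FIND_WALL:
        return Phase.ALIGN_WALL if obs.wall_detected else phase
    if phase is Phase.ALIGN_WALL:
        return Phase.FOLLOW_WALL if obs.aligned_with_wall else phase
    if phase is Phase.FOLLOW_WALL:
        if obs.exit_found:
            return Phase.ALIGN_EXIT
        # Drift or a corner: go back to aligning with the wall
        return phase if obs.aligned_with_wall else Phase.ALIGN_WALL
    if phase is Phase.ALIGN_EXIT:
        return Phase.DRIVE_CORRIDOR if obs.centred_on_exit else phase
    if phase is Phase.DRIVE_CORRIDOR:
        return Phase.STOP if obs.corridor_end else phase
    return Phase.STOP  # phase 6 is terminal
```

Keeping the transitions in one pure function separates the decision logic from the motion commands of each phase, which makes it easy to test without the simulator.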

Video of escape-room simulation:

Click here for a simulation video in which the robot shows its ability to escape the room.

GIF of escape room challenge:

Below is a GIF of the actual escape room challenge, in which the robot failed to exit the room due to a minor imperfection in the software.

Hospital challenge

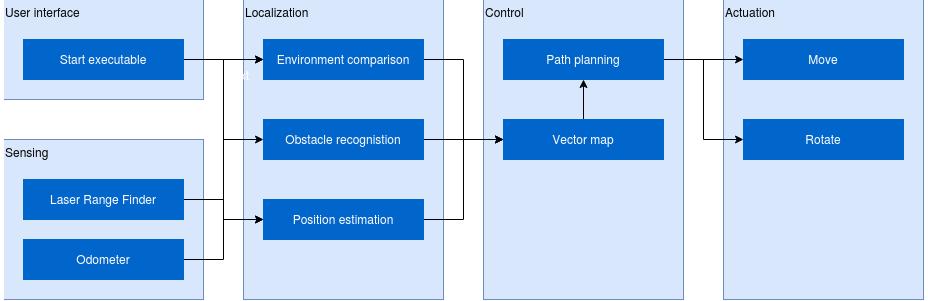

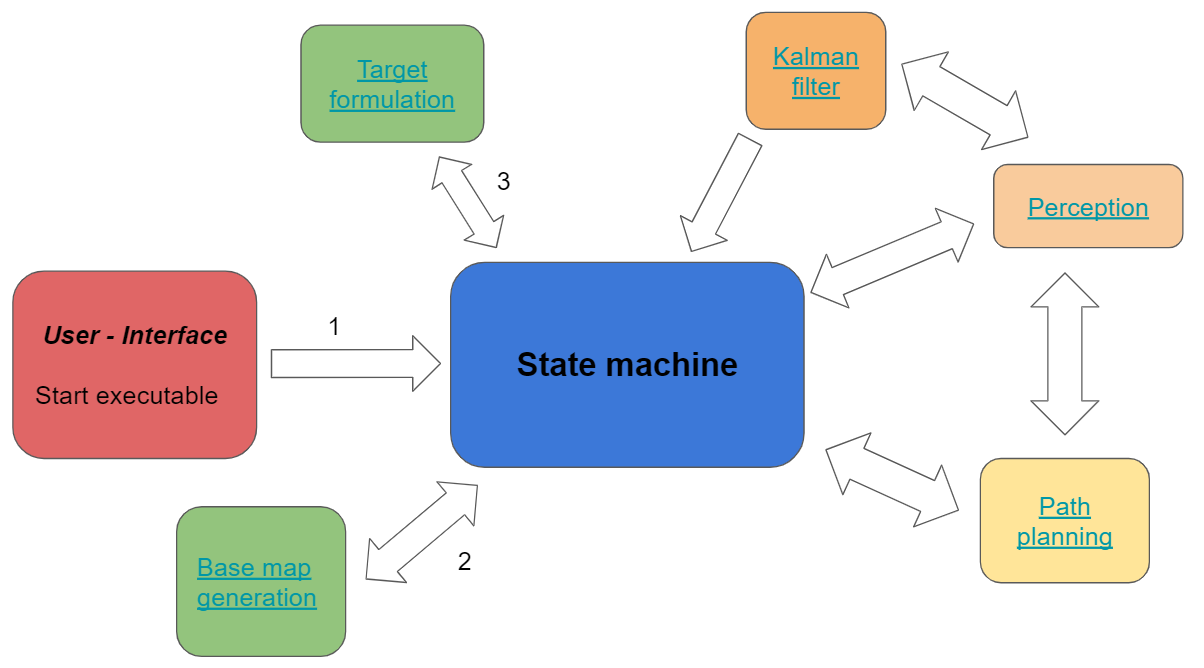

In the escape room challenge, the Pico simulation environment was explored in a relatively simple way. The hospital challenge is clearly more advanced, but some modules can be adapted and reused. A schematic visualization of the world model for the hospital challenge is shown below. The separate modules are elaborated in the following paragraphs.

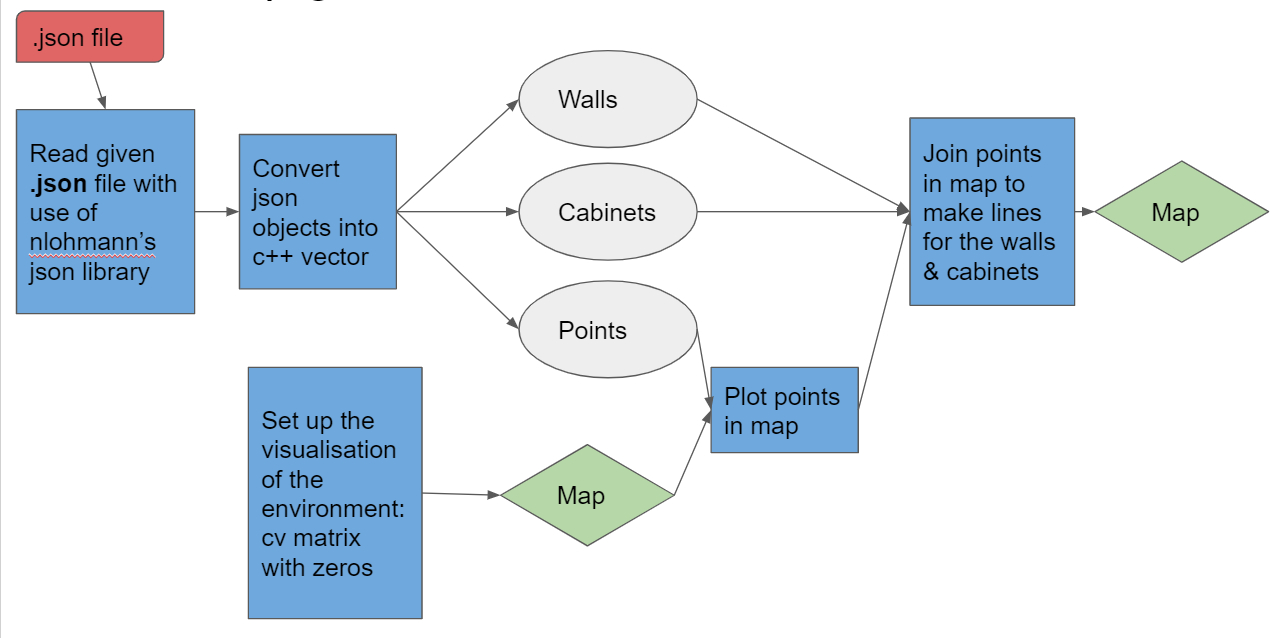

Base map generation

The pre-known layout of the hospital is given to the system as a .json file. Features such as walls, cabinets and points are extracted from this file and converted into a map that Pico uses to navigate and can update on the go with new data. A visualization of the base map generation is shown below:

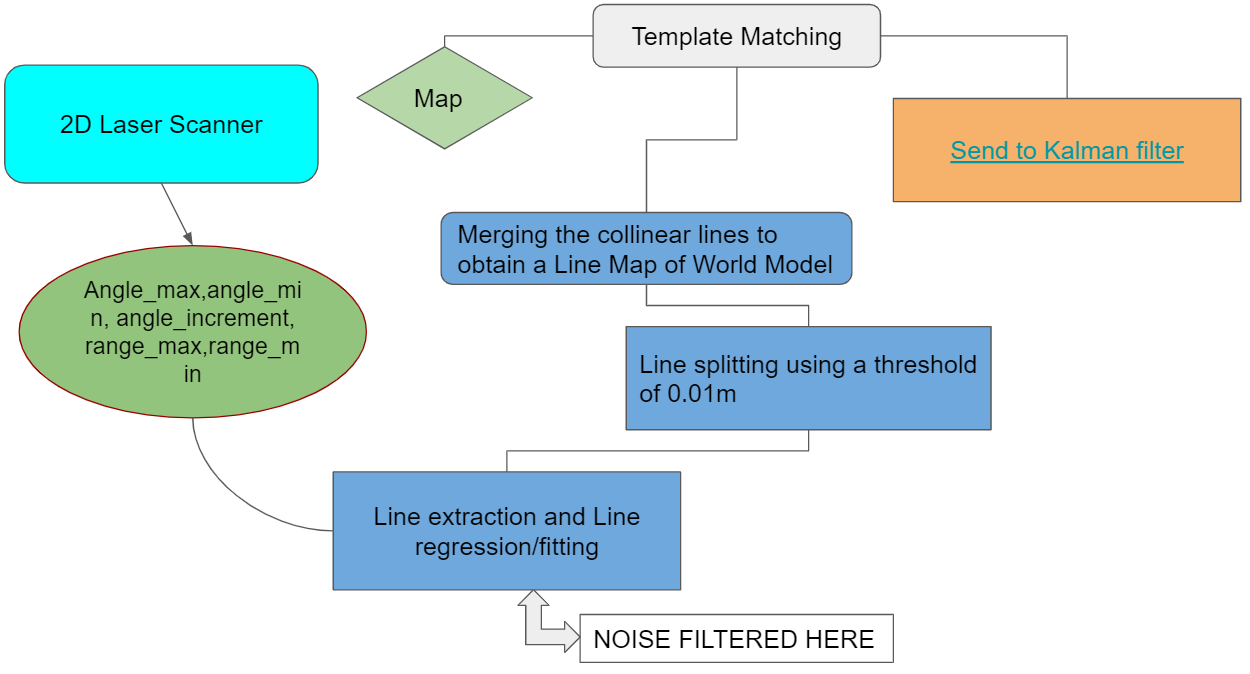
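Parsing the map file can be sketched with Python's standard `json` module. The schema used here, a list of `points` with `walls` and `cabinets` given as lists of point indices, is an assumption for illustration only; the actual layout of the provided .json file may differ, and `build_base_map` is a hypothetical name.

```python
import json


def build_base_map(json_text):
    """Convert a map description into wall segments and cabinet polygons.

    Hypothetical schema:
        {"points":   [{"x": .., "y": ..}, ...],
         "walls":    [[i, j], ...],        # pairs of indices into points
         "cabinets": [[i, j, k, l], ...]}  # corner indices into points
    """
    data = json.loads(json_text)
    points = [(p["x"], p["y"]) for p in data["points"]]
    # Each wall becomes a line segment between two map points
    walls = [(points[i], points[j]) for i, j in data.get("walls", [])]
    # Each cabinet becomes the polygon of its corner points
    cabinets = [[points[i] for i in corners] for corners in data.get("cabinets", [])]
    return walls, cabinets
```

Storing walls as explicit segments makes it straightforward to compare them with the line segments extracted from the laser data later on.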

Perception

Perception is mainly based on the laser scanner data. The raw data from the laser scanner contains the angle and range of each laser beam; with line extraction and line regression, this data is translated into a local map. This local map is then compared to the base map using template matching, and finally a Kalman filter is applied, as discussed in the next paragraph. A visualization of this perception module is displayed below:

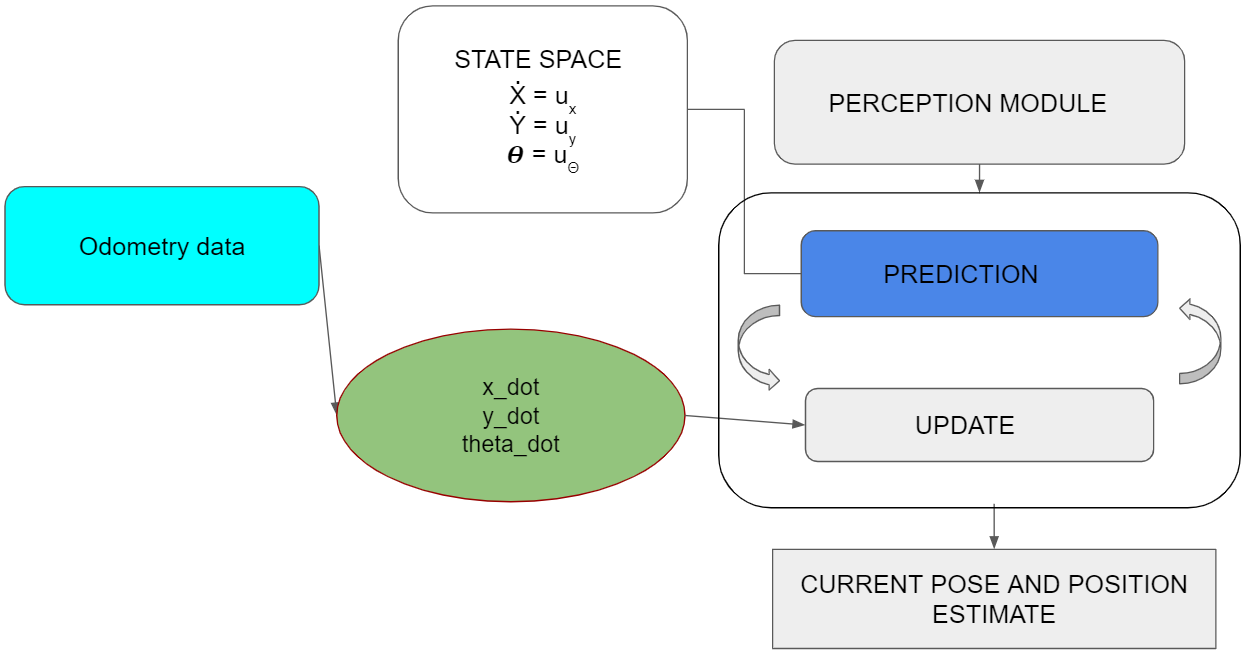
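The line regression step can be illustrated with a total-least-squares fit, which, unlike ordinary least squares on y(x), also handles walls that are vertical in the robot frame. This is a sketch under assumptions: splitting the scan into separate segments (line extraction) is not shown, the scan is assumed to be a list of ranges with start angle `angle_min` and step `angle_increment`, and `polar_to_points` and `fit_line` are hypothetical names.

```python
import math


def polar_to_points(ranges, angle_min, angle_increment):
    """Convert a laser scan to Cartesian points in the robot frame."""
    return [(r * math.cos(angle_min + i * angle_increment),
             r * math.sin(angle_min + i * angle_increment))
            for i, r in enumerate(ranges)]


def fit_line(points):
    """Total-least-squares line fit: (direction angle in [-pi/2, pi/2], distance to the robot)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    xm = sum(p[0] for p in points) / n
    ym = sum(p[1] for p in points) / n
    sxx = sum((p[0] - xm) ** 2 for p in points)
    syy = sum((p[1] - ym) ** 2 for p in points)
    sxy = sum((p[0] - xm) * (p[1] - ym) for p in points)
    # Principal axis of the point cloud = direction of the best-fit line
    phi = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    # Perpendicular distance from the origin to the line through the centroid
    distance = abs(-xm * math.sin(phi) + ym * math.cos(phi))
    return phi, distance
```

The pair (angle, distance) is a compact line description that can be matched against the wall segments of the base map.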

Kalman filter

To merge the odometry data into the position estimate, a Kalman filter is implemented. A state-space prediction is sent to the Kalman filter together with the current speeds of the robot from the odometry data, and the filter continuously updates this prediction into the current pose and position estimate. This is schematically visualized in the figure below:

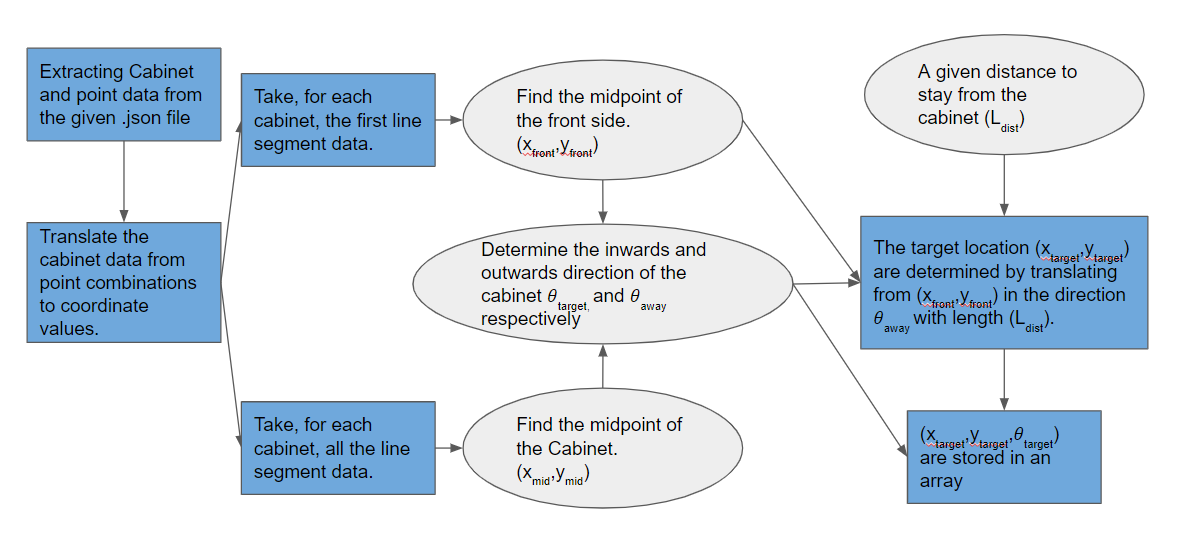
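A strongly simplified version of such a filter is sketched below. It assumes that the odometry velocities have already been expressed in the map frame and that the noise on x, y and θ is uncorrelated, so the filter decouples into three scalar Kalman filters; the noise values `q` and `r` are hypothetical. The measurement `z` stands for the pose obtained from template matching. `PoseKalmanFilter` and `wrap` are illustrative names.

```python
import math
from dataclasses import dataclass, field


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


@dataclass
class PoseKalmanFilter:
    """Per-axis linear Kalman filter on the pose [x, y, theta] in the map frame."""
    x: list = field(default_factory=lambda: [0.0, 0.0, 0.0])     # pose estimate
    p: list = field(default_factory=lambda: [1.0, 1.0, 1.0])     # variance per axis
    q: list = field(default_factory=lambda: [0.01, 0.01, 0.005])  # process noise per second (hypothetical)
    r: list = field(default_factory=lambda: [0.05, 0.05, 0.02])   # measurement noise (hypothetical)

    def predict(self, v, dt):
        """Prediction with map-frame velocities v = [vx, vy, omega] over a time step dt."""
        for i in range(3):
            self.x[i] += v[i] * dt
            self.p[i] += self.q[i] * dt  # uncertainty grows while dead-reckoning
        self.x[2] = wrap(self.x[2])

    def update(self, z):
        """Correction with a measured pose z = [x, y, theta]."""
        for i in range(3):
            innovation = z[i] - self.x[i]
            if i == 2:
                innovation = wrap(innovation)  # take the short way round for angles
            k = self.p[i] / (self.p[i] + self.r[i])  # Kalman gain
            self.x[i] += k * innovation
            self.p[i] *= 1.0 - k
        self.x[2] = wrap(self.x[2])
```

If the velocities are given in the robot frame instead, rotating them with the estimated heading makes the model nonlinear, and an extended Kalman filter with coupled covariance would be the more appropriate choice.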

Target formulation

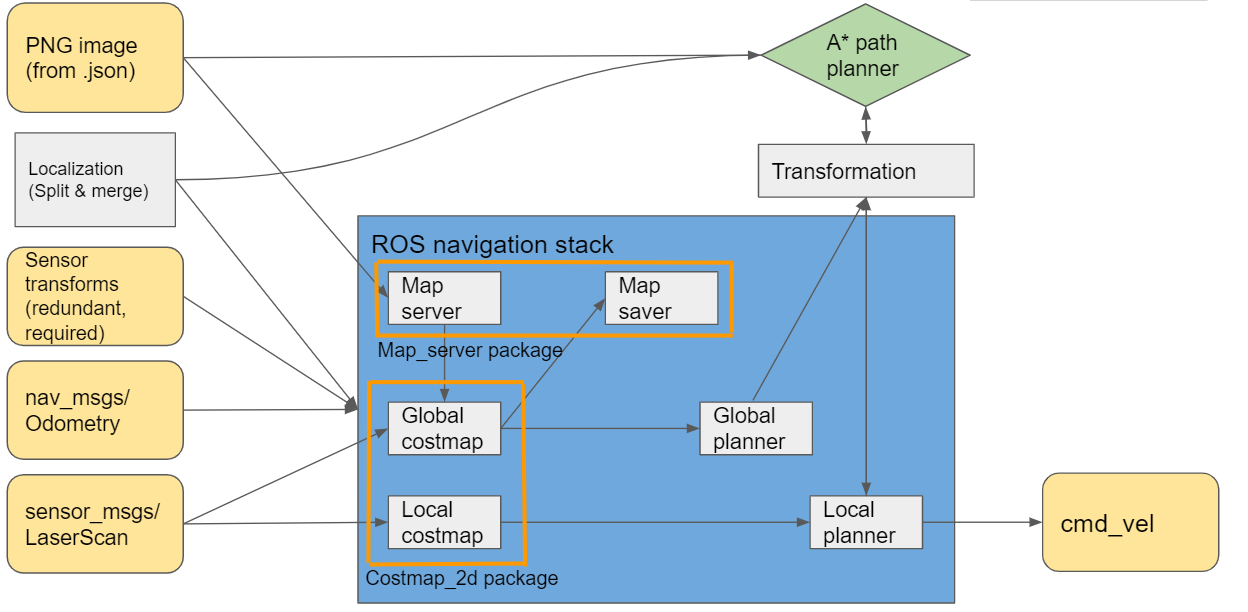

Path planning
