PRE2019 4 Group3


=== From data to information ===

Stakeholders like Rijkswaterstaat do not want raw data, but information. The data therefore needs to be converted into useful information to satisfy the stakeholders. To do so, the DIKAR model can be used, which stands for data, information, knowledge, action and result.

Data represents the raw numbers, stored but not organized in a way that allows them to be processed easily. Data becomes information when it is processed: it takes a form that makes it easier to understand or to find relationships. The last three stages (knowledge, action and result) are carried out by the stakeholder. When information is understood, it becomes knowledge of the stakeholder. With this knowledge, actions can be taken that in the end give results. The design collects data in the form of images, which are then labeled by the image recognition program. When processed correctly, these separate labeled images together form information. The image recognition will label what kind of plastic can be seen in an image or within a frame. All of this can be combined into information on how much of a certain type of plastic is picked up within a certain amount of time. The location where the plastic is picked up, i.e. where the Noria was deployed, could also be included. This can show relationships between the amount (and type) of waste and the location. If the image recognition allows it, the size of the plastic waste could also be included, as well as the brand of the product (e.g. Coca Cola, Red Bull, etc.). All of this can provide useful information and relationships that can be used to take action.
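As a minimal sketch of the data-to-information step, the Python snippet below counts labeled detections per plastic type, per location and per hour. The `Detection` record layout, the location names and the example records are hypothetical; the actual output format of the image recognition program is not specified here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One labeled image or frame (hypothetical record layout)."""
    location: str  # where the Noria was deployed
    hour: int      # hour of the day the image was taken
    label: str     # plastic type assigned by the image recognition


def summarize(detections):
    """Aggregate raw labeled detections (data) into counts (information)."""
    per_type = Counter(d.label for d in detections)
    per_location_type = Counter((d.location, d.label) for d in detections)
    per_hour = Counter(d.hour for d in detections)
    return per_type, per_location_type, per_hour


# Hypothetical example records, not measured data.
detections = [
    Detection("Borgharen", 9, "bottle"),
    Detection("Borgharen", 9, "bag"),
    Detection("Borgharen", 10, "bottle"),
    Detection("Roermond", 10, "cup"),
]

per_type, per_location_type, per_hour = summarize(detections)
print(per_type.most_common())                      # [('bottle', 2), ('bag', 1), ('cup', 1)]
print(per_location_type[("Borgharen", "bottle")])  # 2
print(per_hour[10])                                # 2
```

Counts like these are what could be plotted per location or per time window, as in the example displays below.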

[[File:Ex1information.PNG|300px|Image: 300 pixels|left|thumb|Example of information display of counted plastic.]]

[[File:Ex2information.PNG|300px|Image: 300 pixels|center|thumb|Example of information display of hotspots of plastic.]]

=Test Plan=

Revision as of 14:10, 30 May 2020

SPlaSh: The Plastic Shark

Group members

| Student name | Student ID | Study | Email |
|---|---|---|---|
| Kevin Cox | 1361163 | Mechanical Engineering | k.j.p.cox@student.tue.nl |
| Menno Cromwijk | 1248073 | Biomedical Engineering | m.w.j.cromwijk@student.tue.nl |
| Dennis Heesmans | 1359592 | Mechanical Engineering | d.a.heesmans@student.tue.nl |
| Marijn Minkenberg | 1357751 | Mechanical Engineering | m.minkenberg@student.tue.nl |
| Lotte Rassaerts | 1330004 | Mechanical Engineering | l.rassaerts@student.tue.nl |

Problem statement

Over 5 trillion pieces of plastic are currently floating around in the oceans [1]. Part of this so-called plastic soup consists of large plastics, such as bags, straws and cups. But it also contains a vast concentration of microplastics: pieces of plastic smaller than 5 mm [2]. There are five garbage patches across the globe [1]. In the garbage patch in the Mediterranean Sea, the most prevalent microplastics were found to be polyethylene and polypropylene [3].

A study in the North Sea showed that 5.4% of the fish had ingested plastic [4]. The plastic consumed by the fish accumulates: new plastic does go into the fish, but does not come out. The buildup of plastic particles results in stress in their livers [5]. Besides that, fish can become stuck in the larger plastics. Thus, the plastic soup is becoming a threat to sea life.

A lot of this plastic comes from rivers. A study published in 2017 found that about 80% of plastic trash flows into the sea via 10 rivers that run through heavily populated regions. The other 20% of plastic trash enters the ocean directly [6], for example trash blown from a beach or discarded from ships.

In 2019, over 200 volunteers walked along parts of the Maas and Waal [7]. They found 77,000 pieces of litter, of which 84% was plastic; this number was higher than expected. The best way to help clean up the oceans is to first stop the influx, and to stop the influx, it must be known how much plastic is flowing through the rivers. That the amount of litter was higher than expected suggests that the plastic flow in the rivers is currently not monitored well.

In this project, a contribution will be made to gathering information on the litter flowing through the river Maas, specifically the part in Limburg. This is done by providing a concept of an information-gathering 'shark'. This machine uses image recognition to identify the plastic. A design will be made and the image recognition will be tested. Lastly, a way will be worked out for the shark to store the information and communicate it.

Objectives

- Do research into the state of the art of current recognition software, river cleanup devices and neural networks.

- Create a software tool that distinguishes garbage from marine life.

- Test this software tool and form a conclusion on the effectiveness of the tool.

- Create a design for the SPlaSh.

- Devise a way to store and communicate the gathered information.

Users

In this part, the different users are discussed. Here, 'users' means the different groups that are involved with this problem.

Schone rivieren (Schone Maas)

Schone rivieren is a foundation established by IVN Natuureducatie, Plastic Soup Foundation and Stichting De Noordzee. Its goal is to make all Dutch rivers plastic-free by 2030. It relies on volunteers to collectively clean up the rivers and gather information. The foundation would benefit a lot from the SPlaSh, because it provides the organization with useful data that can be used to optimize the river cleanup.

A few of the partners are listed below. These give an indication of the organizations this foundation is involved with.

- University of Leiden - The science communication and society department of the university does a lot of research into the interaction between science and society; this expertise is used by the foundation.

- Rijkswaterstaat (executive agency of the Ministry of Infrastructure and Water Management) - Rijkswaterstaat will provide knowledge that can be used for the project. Rijkswaterstaat is therefore also a user in its own right, which is discussed later.

- Nationale Postcode Loterij (national lottery) - Donated 1,950,000 euros to the foundation. This indicates that the problem is seen as significant. The donation helps the foundation to grow and allows it to use resources such as the SPlaSh.

- Tauw - Tauw is a consultancy and engineering agency that offers consultancy, measurement and monitoring services in the environmental field. It also works on the sustainable development of the living environment for industry and governments.

Lastly, the foundation also works with the provinces Brabant, Gelderland, Limburg and Utrecht.

Rijkswaterstaat

Rijkswaterstaat is the executive agency of the Ministry of Infrastructure and Water Management, as mentioned before. This means that it is the part of the government that is responsible for the rivers of the Netherlands. It is also the largest source of data on rivers and all water-related topics in the Netherlands, and independent researchers can request data from its database. This makes Rijkswaterstaat a good user, since this project could add important data to that database. Rijkswaterstaat also funds projects, which could prove helpful if the concept worked out in this project is ever developed into a prototype.

RanMarine Technology (WasteShark)

RanMarine Technology is a company specialized in the design and development of industrial autonomous surface vessels (ASVs) for ports, harbours and other marine and water environments. The company is known for the WasteShark, a device that floats on the water surface of rivers, ports and marinas to collect plastics, bio-waste and other debris [8]. It currently operates at coasts, in rivers and in harbours around the world, including the Netherlands. The idea is to collect the plastic waste before a tide takes it out into the deep ocean, where the waste is much harder to collect.

WasteSharks can collect 200 liters of trash at a time before having to return to an on-land unloading station, where they are also charged. The WasteShark has no carbon emissions, since it operates on solar power and batteries. The batteries can last 8-16 hours. Both an autonomous model and a remote-controlled model are available [8]. The autonomous model can even collaborate with other WasteSharks in the same area, so they can make decisions based on shared knowledge [9]. For example, when one WasteShark senses that it is filling up very quickly, other WasteSharks can join it, since there is probably a lot of plastic waste in that area.

This concept seems to tick all the boxes (autonomous, energy neutral and scalable) set by The Dutch Cleanup. A fully autonomous model can be bought for under $23,000 [10], which makes it relatively affordable for governments to invest in.

The autonomous WasteShark detects floating plastic in its path using laser imaging detection and ranging (LIDAR) technology. This means that the WasteShark sends out a signal and measures the time it takes until a reflection is detected [11]. From this time, the software can calculate the distance to the object that caused the reflection. The WasteShark can then decide to approach the object, or to stop or back up a little if the object is coming closer [10], presumably for self-protection. The design of the WasteShark makes it easy for plastic waste to get in, but hard for it to get out. The only moving parts of the design are two thrusters that propel the WasteShark forward or backward [9]. This makes the design very robust, which is important in the environment it is designed to work in.
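The distance calculation follows from the round-trip time of the signal: the pulse travels to the object and back, so the distance is half the path length, d = c · t / 2. Below is a minimal Python sketch, assuming a light pulse travelling at the vacuum speed of light; the helper names and the "approaching" rule with its margin are hypothetical and not taken from RanMarine's software.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; the slightly lower speed in air is ignored


def tof_distance(round_trip_time_s: float) -> float:
    """Distance (m) to the reflecting object from the round-trip time of flight (s)."""
    # The pulse travels to the object and back, so only half the path counts.
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def is_approaching(previous_m: float, current_m: float, margin_m: float = 0.05) -> bool:
    """Hypothetical rule: the object is closing in if the distance shrank by more than a margin."""
    return previous_m - current_m > margin_m


d1 = tof_distance(66.7e-9)  # about 10.0 m
d2 = tof_distance(60.0e-9)  # about 9.0 m
print(round(d1, 2), round(d2, 2), is_approaching(d1, d2))
```

Comparing consecutive distance measurements like this is one simple way a controller could decide between approaching an object and backing off.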

The fully autonomous version of the WasteShark can also simultaneously collect water quality data, scan the seabed to chart its shape, and filter chemicals out of the water [10]. These extra measurement devices and gadgets are offered as add-ons. To operate autonomously, the design also has a mission planning ability. In the future, the device should even be able to construct a predictive model of where trash collects in the water [9]. The information provided by the SPlaSh could be used by RanMarine Technology in the future to guide the WasteShark to areas with a large amount of litter.

Albatross

A second device that focuses on collecting datasets of microplastics in rivers and oceans is the Albatross from the company Pirika Inc. [12]. It collects water samples, which are analysed with microscopes afterwards. The microplastics are collected using a plankton net with openings of 0.1 or 0.3 mm. However, the device does not operate or navigate on its own; it performs a static measurement. A plankton net could be added to the WasteShark to focus on microplastics instead of macroplastics.

Noria

Noria focuses on the development of innovative methods and techniques to tackle the problem of plastic waste in the water, from the moment the plastic ends up in the water until it reaches the sea [13]. In the figure below, the system of Noria can be seen. Via Rijkswaterstaat, contact has been made with the founder and owner of Noria, Rinze de Vries, who is interested in working together on this project. It was therefore decided to apply an image recognition system to the Noria system to detect the amount and type of garbage that it collects.

A pilot has been carried out with the Noria, aimed at testing a plastic catch system in the lock of Borgharen. The following conclusions can be drawn from this pilot:

- More than 95% of the plastic waste released into the lock was taken out of the water with the Noria system. This applies to plastic waste as well as organic waste with a size of 10 to 700 mm.

- At this moment, it is quite a challenge to drain the waste from the system.

Requirements

The requirements below are conditions or tasks that must be met to ensure the completion of the project.

Requirements for the Software

- The program that is written should be able to identify and classify different types of plastic;

- The program should be able to identify plastic in the water correctly for at least 85 percent of the time based on … database;

- The image recognition should work with photos;

- The image recognition should be live;

- The same piece of plastic should not be counted multiple times.

Requirements for the Design

To make the requirements of the design concrete and relevant, it was decided to contact potential users. One of the users, Rijkswaterstaat, responded to the request and agreed to an interview with one of its employees, Ir. Brinkhof, a project manager. He specializes in the Maas region and has insight into all projects and maintenance there.

- The design should be weatherproof;

- The image recognition should operate at all moments when Noria is also operating;

- The robot should be robust, so it should not be damaged easily;

- The design should have its own power source.

Finally, literature research about the current state of the art must be provided. At least 25 sources must be used for the literature research of the software and design.

Planning

Approach

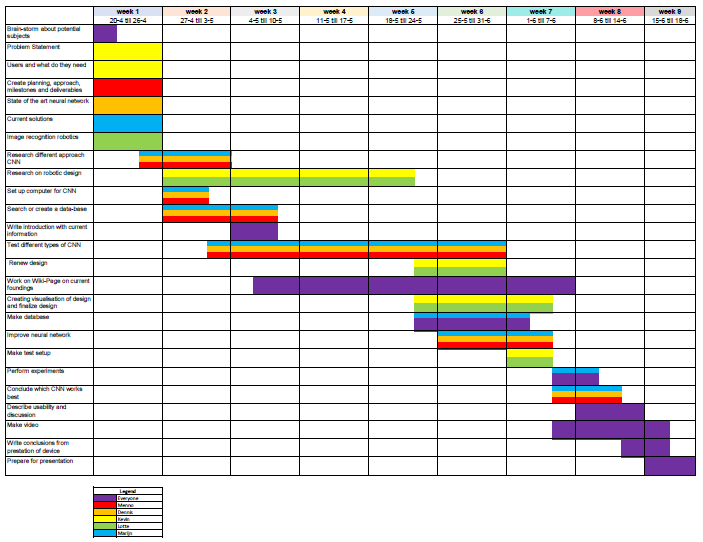

For the planning, a Gantt chart with the most important tasks has been created. Overall, a lot of research has to be done in the first two weeks, among other things for the problem statement, the users and the current technology. In the second week, different types of neural networks and the working of different layers will be investigated further to gain more knowledge. This may require installing several packages or programs on our laptops, and testing whether they work takes time. Also during this second week, a dataset should be created or found that can be used to train our model. If no suitable dataset can be found online and one has to be created, this will take considerably more than one week; however, the aim is to finish it by the end of the third week. After this, the group splits into people who work on the design and applications of the robot and people who work on the neural network. After week 5, the robot concept should be elaborated with drawings or digital visualizations. All candidate neural networks should also be elaborated and tested, so that in week 8 conclusions can be drawn about which neural network works best. This means that in week 8 the wiki page can be completed with a conclusion and discussion about the neural network that should be used and about the working of the device. Finally, week 9 is used to prepare for the presentation.

Currently, the activities are divided into the neural network / image recognition and the design of the device. Kevin and Lotte will work on the design of the device, and Menno, Marijn and Dennis will work on the neural networks.

Milestones

| Week | Milestones |
|---|---|
| 1 (April 20th till April 26th) | Correct information and knowledge for first meeting |
| 2 (April 27th till May 3rd) | Further research on different types of Neural Networks and having a working example of a CNN. |
| 3 (May 4th till May 10th) | Elaborate the first ideas of the design of the device and find or create a usable database. |
| 4 (May 11th till May 17th) | First findings of correctness of different Neural Networks and tests of different types of Neural Networks. |
| 5 (May 18th till May 24th) | Conclusion of the best working neural network and final visualisation of the design. |
| 6 (May 25th till May 31st) | First set-up of wiki page with the found conclusions of Neural Networks and design with correct visualisation of the findings. |
| 7 (June 1st till June 7th) | Creation of the final wiki-page |
| 8 (June 8th till June 14th) | Presentation and visualisation of final presentation |

Deliverables

- Design of the SPlaSh

- Software for image recognition

- Complete wiki-page

- Final presentation

State-of-the-Art

Quantifying plastic waste

Plastic debris in rivers has been quantified before in three ways [14]: by quantifying the sources of plastic waste, by quantifying plastic transport through modelling, and by quantifying plastic transport through observations. The last one is most in line with what will be done in this project. No uniform method for counting plastic debris in rivers exists, so several plastic monitoring studies each devised their own. These methods can be divided into five subcategories [14]:

1. Plastic tracking: Using GPS (Global Positioning System) to track the travel path of plastic pieces in rivers. The pieces are prepared beforehand so that they can be tracked by GPS. This method can show where plastic accumulates, where preferred flow lines are, etc.

2. Active sampling: Collecting samples from riverbanks or beaches, or with a net hanging from a bridge or a boat. This method not only quantifies the plastic transport but also qualifies it, since it is possible to inspect what kinds of plastics are in the samples, how degraded and how large they are, etc. This method mainly covers the top layer of the river. The riverbed can be inspected by taking sediment samples, for example using a fish fyke [15].

3. Passive sampling: Collecting samples from debris accumulations around existing infrastructure. In the few cases where infrastructure to collect plastic debris is already in place, it can easily be used to quantify and qualify the plastic that gets caught. This method does not require any extra investment. Like active sampling, it is mostly focused on the top layer of the plastic debris, since the infrastructure is too.

4. Visual observations: Watching plastic float by from a bridge and counting it. This method is very easy to execute, but it is less reliable than other methods, due to observer bias and because small plastics in a river may not be visible from a bridge. This method is adequate for showing seasonal changes in plastic quantities.

5. Citizen science: Using the public to quantify plastic debris. Several apps have been made that allow many people to participate in ongoing research on classifying plastic waste. This method gives insight into the transport of plastic on a global scale.

Visual observations, done automatically

Cameras can be used to improve visual observations. One study carried out such a visual observation on a beach, using drones that flew about 10 meters above it. Based on input from cameras on the UAVs, plastic debris could be identified, located and classified by a machine learning algorithm [16]. Similar systems have also been used to identify macroplastics in rivers.

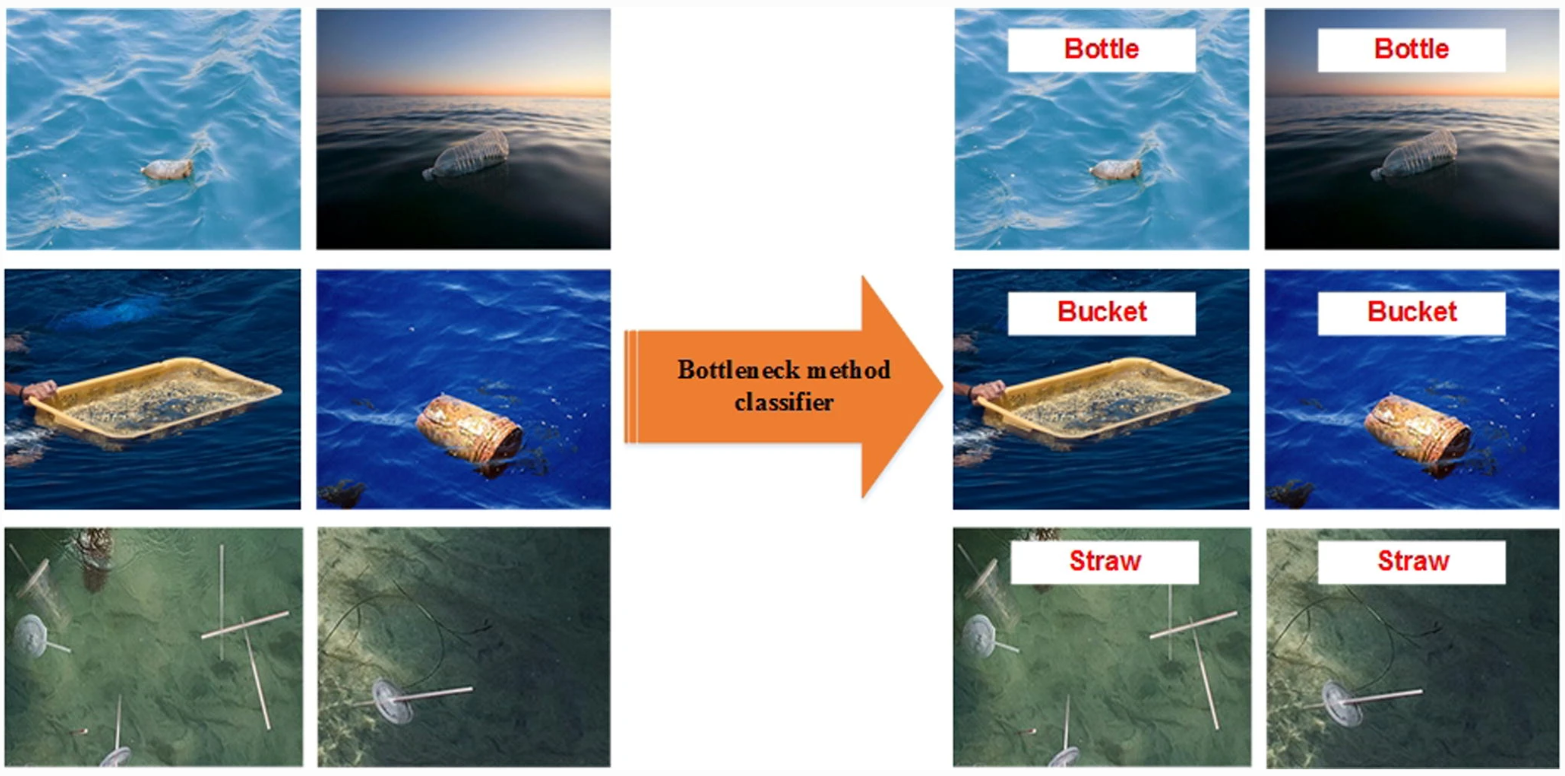

Another study developed a deep learning algorithm (specifically a CNN, namely a "Visual Geometry Group-16 (VGG16) model, pre-trained on the large-scale ImageNet dataset" [17]) that was able to classify different types of plastic from images. These images were taken from above the water, so this study also focused on the top layer of plastic debris.

The algorithm had a training set accuracy of 99%. However, this says little about its performance, because it only measures how well the algorithm categorizes the training images, which it has seen many times before. To find out how well an algorithm performs, it has to be evaluated on images it has never seen before (that is, images that are not in the training set). The algorithm recognized plastic debris on 141 out of 165 new images that were fed into the system [17], which corresponds to a validation accuracy of about 85.5%. The authors concluded that this shows the algorithm performs its task well.
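The arithmetic behind the validation accuracy can be made concrete with a few lines of Python. This only illustrates the calculation using the counts reported above (141 correct out of 165 unseen images); the function name is hypothetical.

```python
def accuracy(correct: int, total: int) -> float:
    """Fraction of images classified correctly."""
    return correct / total


# Training accuracy is measured on images the model has already seen,
# validation accuracy on images it has never seen before.
validation_accuracy = accuracy(141, 165)
print(f"{validation_accuracy:.1%}")  # 85.5%
```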

The points for improvement identified by the study are that the accuracy could be even higher and that more kinds of plastic could be distinguished, without making the computation time too long. This is something we should look into in this project, too.

Neural Networks

Neural networks are a set of algorithms that are designed to recognize patterns. They interpret sensory data through machine perception, labeling or clustering raw input. The patterns they recognize are numerical, contained in vectors. Real-world data, such as images, sound, text or time series, needs to be translated into such numerical data to process it [18].
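Below is a minimal sketch of how an image can be turned into such a numerical vector, assuming an 8-bit grayscale image stored as nested Python lists. Real pipelines typically use an array library and keep the color channels; this only shows the idea of flattening and scaling.

```python
def image_to_vector(pixels):
    """Flatten a grayscale image (rows of 0-255 values) into one vector scaled to [0, 1]."""
    return [value / 255.0 for row in pixels for value in row]


tiny_image = [
    [0, 255],
    [51, 102],
]
vector = image_to_vector(tiny_image)
print(len(vector), vector[:2])  # 4 [0.0, 1.0]
```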

There are different types of neural networks [19]:

- Recurrent neural network: Recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while having hidden states. These networks are mostly used in the fields of natural language processing and speech recognition [20].

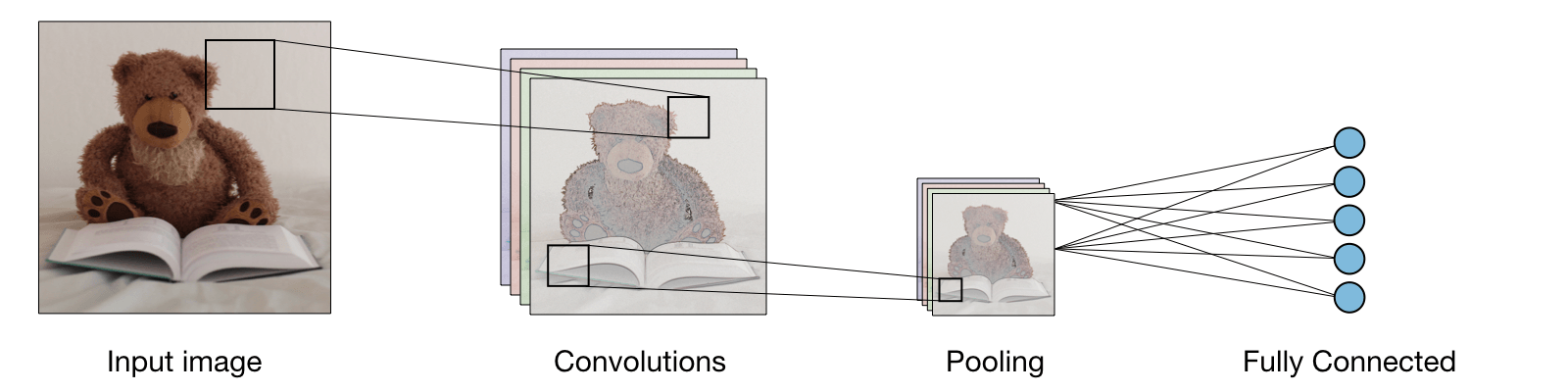

- Convolutional neural networks: Convolutional neural networks, also known as CNNs, are used for image classification.

- Hopfield networks: Hopfield networks are used to store and retrieve memories, similar to the human brain. The network can store various patterns or memories, and it can recognize any of the learned patterns when it is given partial or noisy data about that pattern [21].

- Boltzmann machine networks: Boltzmann machines are used for search and learning problems [22].
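To illustrate how a Hopfield network retrieves a learned pattern, the sketch below stores one bipolar (+1/-1) pattern with the Hebbian learning rule and recovers it from a corrupted copy. It is a minimal plain-Python illustration with an arbitrarily chosen 8-element pattern, not part of the SPlaSh software.

```python
def train_hopfield(patterns):
    """Hebbian weights for bipolar (+1/-1) patterns, without self-connections."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, sweeps=5):
    """Asynchronous recall: each neuron in turn takes the sign of its weighted input."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(weights[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


stored = [1, 1, 1, -1, -1, -1, 1, -1]
weights = train_hopfield([stored])
noisy = [1, -1, 1, -1, -1, -1, 1, 1]  # two elements flipped
print(recall(weights, noisy) == stored)  # True
```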

Convolutional Neural Networks

In this project, the neural network should retrieve data from images. Therefore a convolutional neural network will be used. Convolutional neural networks are generally composed of the following layers [23]:

The convolutional layer transforms the input data to detect patterns, edges and other characteristics, so that the data can be classified correctly. The main parameters of a convolutional layer that can be adjusted are its activation function and its kernel size.

Max pooling layers reduce the number of pixels in the output of the preceding convolutional layer(s), which also helps to reduce overfitting. A problem with the output feature maps is that they are sensitive to the location of the features in the input. Max pooling addresses this sensitivity: it makes the resulting downsampled feature maps more robust to changes in the position of a feature in the image. The pool size determines how many pixels of the input are turned into 1 pixel of the output.

Fully connected layers connect every input value via a separate connection to each output. Since this project deals with a binary problem, the final fully connected layer will consist of 1 output.

Stochastic gradient descent (SGD) is the most common and basic optimizer used for training a CNN [24]. It updates the model parameters using the gradient of the loss function. However, many other optimizers have been developed that could give better results. Momentum keeps a history of previous update steps and combines it with the next gradient step, which reduces the effect of outliers [25]. RMSProp also tries to keep the updates stable, but in a different way than momentum: it scales each step by a running average of recent squared gradients, which reduces the need to tune the learning rate by hand [26]. Adam combines the ideas behind momentum and RMSprop in one optimizer [27]. Nesterov momentum is a refinement of momentum that looks ahead: it evaluates the gradient at the position the momentum is about to move the parameters to [28]. Nadam is an optimizer that combines RMSprop and Nesterov momentum [29].
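The differences between the optimizers can be seen on a toy problem. The sketch below minimizes f(x) = x² with plain SGD, momentum, RMSProp and Adam, written out by hand in Python. The learning rates and decay constants are common textbook defaults chosen for illustration, not values tuned for this project; Nesterov momentum and Nadam are left out to keep it short.

```python
import math


def grad(x: float) -> float:
    """Gradient of the toy loss f(x) = x**2."""
    return 2.0 * x


def sgd(x, steps, lr=0.1):
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def momentum(x, steps, lr=0.1, mu=0.9):
    velocity = 0.0
    for _ in range(steps):
        # Keep a decaying history of past steps and add the new gradient step.
        velocity = mu * velocity - lr * grad(x)
        x += velocity
    return x


def rmsprop(x, steps, lr=0.1, rho=0.9, eps=1e-8):
    avg_sq = 0.0
    for _ in range(steps):
        g = grad(x)
        # A running average of squared gradients scales the step size.
        avg_sq = rho * avg_sq + (1 - rho) * g * g
        x -= lr * g / (math.sqrt(avg_sq) + eps)
    return x


def adam(x, steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # momentum-like first moment
        v = beta2 * v + (1 - beta2) * g * g  # RMSProp-like second moment
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


start = 5.0
results = {f.__name__: f(start, steps=200) for f in (sgd, momentum, rmsprop, adam)}
for name, x in results.items():
    print(f"{name:8s} x after 200 steps: {x:+.4f}")
```

On a real CNN the parameters are millions of weights instead of one number, but each optimizer applies the same update rule to every weight.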

Image Recognition

Over the past decade or so, great steps have been made in developing deep learning methods for image recognition and classification [30]. In recent years, convolutional neural networks (CNNs) have shown significant improvements in image classification [31]. It has been demonstrated that representation depth is beneficial for classification accuracy [32]. Another method is the use of VGG networks, which are known for their state-of-the-art performance in image feature extraction. Their setup consists of repeated patterns of 1, 2 or 3 convolutional layers and a max-pooling layer, finishing with one or more dense layers. The convolutional layer transforms the input data to detect patterns, edges and other characteristics, so that the data can be classified correctly. The main parameters of a convolutional layer that can be adjusted are its activation function and its kernel size [32].
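The repeated convolution-plus-pooling pattern of VGG networks can be traced with a short shape calculation. This sketch assumes the standard VGG16 configuration (thirteen 3x3 convolutional layers with padding 1 and five 2x2 max-pooling layers) and a 224x224 RGB input; it computes shapes only and does not build a network.

```python
# Standard VGG16 convolutional part: integers are 3x3 convolutional layers
# (output channels), "M" is a 2x2 max-pooling layer with stride 2.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]


def feature_map_shape(config, size=224, channels=3):
    """Return (height, width, channels) after the convolutional blocks."""
    height = width = size
    for layer in config:
        if layer == "M":
            height, width = height // 2, width // 2  # pooling halves each side
        else:
            channels = layer  # 3x3 conv with padding 1 keeps height and width
    return height, width, channels


conv_layers = sum(1 for layer in VGG16 if layer != "M")
print(feature_map_shape(VGG16))  # (7, 7, 512)
print(conv_layers + 3)           # 16 weight layers: 13 conv + 3 fully connected
```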

There are still limitations to current image recognition technologies. First of all, most methods are supervised, which means they need large amounts of labelled training data that someone has to put together [30]. This could be solved by using unsupervised instead of supervised deep learning: instead of large databases, only some labels would be needed to make sense of the world. Currently, however, no unsupervised methods outperform supervised ones, because supervised learning can better encode the characteristics of a set of data. The hope is that in the future unsupervised learning will provide more general features, so that any task can be performed [33]. Another problem is that small distortions can sometimes cause an image to be classified wrongly [30] [34]. Even shadows on an object can cause this, since they change its apparent color and shape [35]. A different pitfall is that the output feature maps are sensitive to the specific location of the features in the input. One approach to address this sensitivity is to use a max pooling layer. Max pooling layers reduce the number of pixels in the output of the preceding convolutional layer(s); the pool size determines how many pixels of the input are turned into 1 pixel of the output. This makes the resulting downsampled feature maps more robust to changes in the position of a feature in the image [32].
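A small example of the translation robustness that max pooling provides: a 2x2 max pooling with stride 2 maps a feature to the same output whether it sits at one position or at a neighbouring position inside the same pooling window. This is a plain-Python sketch with made-up feature maps.

```python
def max_pool_2d(fmap, pool=2):
    """Non-overlapping max pooling (stride equal to the pool size)."""
    rows, cols = len(fmap), len(fmap[0])
    return [
        [max(fmap[r + i][c + j] for i in range(pool) for j in range(pool))
         for c in range(0, cols - pool + 1, pool)]
        for r in range(0, rows - pool + 1, pool)
    ]


# A single strong activation ("feature") in a 4x4 feature map...
a = [[0, 0, 0, 0],
     [0, 9, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]
# ...and the same feature shifted one pixel to the left.
b = [[0, 0, 0, 0],
     [9, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]

print(max_pool_2d(a))  # [[9, 0], [0, 0]]
print(max_pool_2d(b))  # [[9, 0], [0, 0]]
```

The robustness is limited: if the feature moves across the boundary of a pooling window, the pooled output does change.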

Specific research has been carried out into image recognition and classification of fish in the water. For example, one study used state-of-the-art object detection to detect, localize and classify fish species in visual data obtained by underwater cameras. Its initial goal was to recognize herring and mackerel, and the work was specifically developed for poorly conditioned waters. Experiments on a dataset obtained at sea showed a successful detection rate of 66.7% and a successful classification rate of 89.7% [36]. There are also studies on image recognition and classification of microplastics. By using computer vision to analyze the images and machine learning techniques to develop classifiers for four types of microplastics, an accuracy of 96.6% was achieved [37].

For these recognition tasks, image databases of fish and plastic are needed. First of all, ImageNet can be used, which is a database with many pictures of different subjects. Secondly, the following databases of different fish species have been found:

- http://groups.inf.ed.ac.uk/f4k/GROUNDTRUTH/RECOG/

- https://wiki.qut.edu.au/display/cyphy/Fish+Dataset

YOLO

YOLO is a deep learning algorithm that came out in May 2016. It is popular because it is very fast compared with other deep learning algorithms [38]. YOLO takes a completely different approach from prior detection systems. In prior detection systems, a model is applied to an image at multiple locations and scales, and high-scoring regions of the image are considered detections. In YOLO, a single deep convolutional neural network is applied to the full image. This network divides the image into a grid of cells, and each cell directly predicts bounding boxes and an object classification [39]. These bounding boxes are weighted by the predicted probabilities [40].
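The grid-based prediction can be sketched in a few lines. Following the original YOLO formulation, the cell that contains an object's center is responsible for detecting it, and a box's class-specific score is its confidence multiplied by the conditional class probability. The grid size s = 7 and the 448x448 input match the first YOLO paper; the function names and example numbers are hypothetical, and this is not the YOLO v3 implementation.

```python
def responsible_cell(cx, cy, img_w, img_h, s=7):
    """(row, col) of the s x s grid cell that contains the object's center."""
    col = min(int(cx / img_w * s), s - 1)  # clamp centers on the right/bottom edge
    row = min(int(cy / img_h * s), s - 1)
    return row, col


def class_scores(box_confidence, class_probs):
    """Class-specific score: box confidence times conditional class probability."""
    return {name: box_confidence * p for name, p in class_probs.items()}


# Hypothetical detection on a 448 x 448 input image.
print(responsible_cell(cx=300, cy=100, img_w=448, img_h=448))  # (1, 4)
print(class_scores(0.5, {"bottle": 0.75, "bag": 0.25}))        # {'bottle': 0.375, 'bag': 0.125}
```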

The newest version of YOLO is YOLO v3. It uses a variant of Darknet, which originally has 53 layers trained on ImageNet. For the task of detection, 53 more layers are stacked onto it, so YOLO v3 uses a 106-layer fully convolutional underlying architecture in total. The following figure shows the architecture of YOLO v3 [41].

Further exploration

Location

Rivers are seen as a major source of debris in the oceans [42]. The tide has a big influence on the direction of the floating waste. During low tide, the waste flows towards the sea, and during high tide it can be carried across the river towards the riverbanks [43].

A major consequence of plastic waste in rivers, seas and oceans and on riverbanks is that many animals can mistake plastic for food, which often results in death. There are also economic consequences. More waste in the water makes water purification more difficult, especially because of microplastics, so purifying the water costs extra money. Cleaning up waste in river areas also costs millions a year [44].

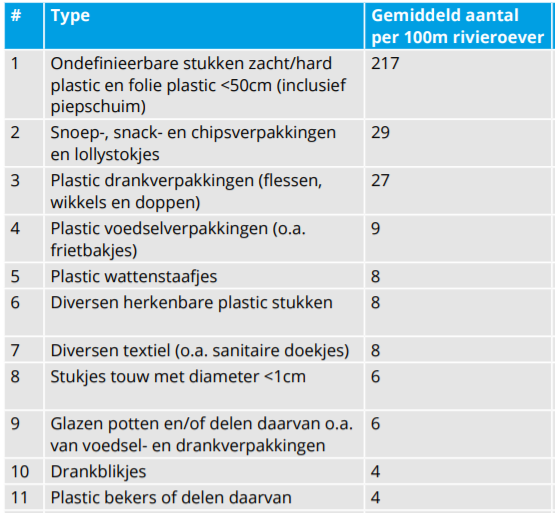

A large-scale investigation has been carried out into plastic washing up on riverbanks. On the banks of the Maas, an average of 630 pieces of waste per 100 meters of riverbank were counted, of which 81% is plastic. Some measurement locations showed a count of more than 1200 pieces of waste per 100 meters of riverbank and can be marked as hotspots. A large concentration of these hotspots can be found on the banks of the Maas in the south of Limburg, where a lot of waste originating from France and Belgium flows into the Dutch part of the Maas. Evidence for this is the large amount of plastic packaging with French text. In these hotspots, the proportion of plastic is also higher, namely 89% instead of 81% [43].
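To express these counts as plastic pieces, the number of waste pieces can be multiplied by the plastic share. A short Python check using the figures quoted above; the function name is only illustrative, and the hotspot figure is a lower bound because hotspots have more than 1200 pieces.

```python
def plastic_pieces(waste_per_100m: float, plastic_share: float) -> float:
    """Number of plastic pieces per 100 m of riverbank."""
    return waste_per_100m * plastic_share


average = plastic_pieces(630, 0.81)   # Maas average
hotspot = plastic_pieces(1200, 0.89)  # lower bound for hotspots in the south of Limburg
print(round(average), round(hotspot))  # 510 1068
```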

The SPlaSh should help to tackle the problem of the plastic soup at its roots: the rivers. Because of the high plastic concentration in the Maas in the south of Limburg, the live image recognition program and robot will be designed specifically for this part of the Maas. Many different things have to be taken into account to avoid negative influences of the SPlaSh. The two main ones are river animals and boats: the SPlaSh should, of course, not 'eat' animals, and it should not be broken by boats.

Plastic

Extensive research into the amount of plastic on the river banks of the Maas has been carried out [43]. As explained before, plastic in rivers can either float into the oceans or end up on river banks. The amount of plastic counted on the river banks of the Maas is therefore only part of the total amount of plastic in the river, since another part flows into the ocean. Exact figures for how much plastic flows into the oceans are not available. However, it is known that in the south of Limburg an average of more than 1200 pieces of waste per 100 meters of river bank of the Maas were counted, 89% of which was plastic.

A top 15 was made of the most frequently encountered types of waste. The most common type of plastic consists of indefinable pieces of soft/hard plastic and plastic film smaller than 50 cm, including styrofoam. These indefinable pieces also include nurdles: small plastic granules that are used as raw material for plastic products. Again, the south of Limburg has the highest concentration of this type of waste, because there are relatively more industrial areas there. Another large part of the counted plastics is disposable plastic, often used as food and drink packaging. In total, 25% of all encountered plastic is disposable plastic from food and drink packaging.

Only plastic that has washed up on the river banks has been counted. Not much is known about how much plastic there is below the water surface. The state-of-the-art research gives indications that plastic is present not only at the surface of the water, but also at deeper levels. The robot and image recognition program that will be designed will help to map the amount of plastic in the deeper waters of the Maas in the south of Limburg. This gives a better idea of how much plastic passes through that part of the river in total.

Image Database

The CNN can first be pre-trained on the large-scale ImageNet dataset. Through this pre-training, the model learns general image features from a large dataset. Next, the neural network should be trained on a database specific to this subject. This database should be randomly divided into 3 groups. The largest group is the training data, which the neural network uses to learn patterns. The second group, the validation data, is used to check how well the network predicts data it has not been trained on. After the validation data has been evaluated, a new epoch (another pass over the training data) starts; the validation data itself is not used to update the network's weights. Once a final model has been created, the third group, the test dataset, is used to analyze its performance.
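The random three-way split described above can be sketched in plain Python. The 70/15/15 proportions, the fixed seed and the function name `split_dataset` are illustrative assumptions, not values chosen by the team. In Keras, the validation part would typically be passed to `fit` through its `validation_data` argument.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=42):
    """Randomly split items into training, validation and test lists.

    The proportions and the seed are illustrative assumptions.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must lie between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # reproducible random order
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # everything left over is the test set
    return train, val, test
```

For example, `split_dataset(range(100))` gives 70 training, 15 validation and 15 test items, and no item appears in more than one group.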

It is difficult to find a database that corresponds perfectly to our subject. First of all, a large dataset of plastic waste in the ocean is available [45]. This could potentially be used to detect plastic deeper in the river. However, we would also like to detect plastic at the surface, since that is where most macroplastics float. This database contains a total of 3644 images of underwater waste, including 1316 images of plastic. Further, a large dataset of plastic shapes can be used, although these images were not taken underwater [46]. Using image preprocessing, it could still be possible to match these shapes to plastic in the pictures taken by the underwater camera. Lastly, we could create a dataset ourselves by taking screenshots from nature documentaries.

Neural Network Design

The first findings from using a VGG-7 model on the database found are as follows. The Deep-sea Debris Database has been split into a training and a validation dataset with a ratio of 80:20. The VGG-7 model, a simplification of the more advanced VGG-16 model, had previously been used for binary classification. It has been changed into a multiclass model by using a softmax activation with 8 outputs in the final dense layer. The problem is that the data is unbalanced; the classes contain the following numbers of images:

- Cloth (67)

- Glass (63)

- Metal (507)

- Natural debris (439)

- Other artificial debris (1423)

- Paper/Lumber (39)

- Plastic (1417)

- Rubber (51)

Because of these differences, the predicted probabilities are identical for all validation images. For every image, the predicted chance of belonging to each class is as follows:

Because plastic and other artificial debris occur most often, the highest accuracy is obtained when every image is given the highest probability for these two classes.

This difference in class sizes could be addressed with the argument class_weight = 'auto'. With this argument the probabilities do differ between images, but the probability is still highest for the plastic class, which is one of the two largest classes. This model and database can be found on the group's OneDrive.
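The class counts listed above make the effect of the imbalance concrete. The sketch below uses plain Python, and its helper names are hypothetical. It computes two things:

- the accuracy of a model that always predicts the largest class;
- 'balanced' class weights, using the formula n_samples / (n_classes x n_samples_in_class). This is the same formula scikit-learn uses for `class_weight='balanced'`.

In recent Keras versions, `fit` expects `class_weight` as a dictionary that maps integer class indices to weights. The class names would therefore still have to be mapped to the indices used by the data generator.

```python
# Class counts of the Deep-sea Debris Database, as listed above
CLASS_COUNTS = {
    "Cloth": 67,
    "Glass": 63,
    "Metal": 507,
    "Natural debris": 439,
    "Other artificial debris": 1423,
    "Paper/Lumber": 39,
    "Plastic": 1417,
    "Rubber": 51,
}


def majority_baseline_accuracy(counts):
    """Accuracy of a 'model' that always predicts the largest class."""
    return max(counts.values()) / sum(counts.values())


def balanced_class_weights(counts):
    """Weight per class: n_samples / (n_classes * n_samples_in_class)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {name: total / (n_classes * n) for name, n in counts.items()}
```

The full list contains 4006 images. Always predicting 'Other artificial debris' already gives an accuracy of about 35.5%, which is why a network can score reasonably while ignoring the small classes. With the balanced weights, the rare class 'Paper/Lumber' gets a weight of about 12.8, while 'Plastic' gets about 0.35.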

YOLO: You Only Look Once. This makes it possible to analyze videos at a reasonably good frame rate. Below are 3 good videos and explanations for this (in order):

1. Testing with existing weights on an image: https://pysource.com/2019/06/27/yolo-object-detection-using-opencv-with-python/ To be able to import cv2: pip install opencv-python==3.4.5.20

2. Testing with existing weights on a video/webcam: https://www.youtube.com/watch?v=xKK2mkJ-pHU

Both of these work well for me: they can detect things on my desk or cars driving past.

3. Training on a custom dataset: https://pysource.com/2020/04/02/train-yolo-to-detect-a-custom-object-online-with-free-gpu/ I made a dataset of 'plastic bottles' with the labelImg program. I have not been able to train it yet, because the DarkNet GitHub repository cannot be 'cloned'.
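The tutorials above load YOLO with OpenCV's DNN module and then filter its raw output. The functions below sketch that post-processing step in NumPy. They assume the usual YOLOv3 row layout `[cx, cy, w, h, objectness, class scores...]`, with box values normalized to the image size. They turn the output rows into pixel boxes and then apply a simple non-maximum suppression.

OpenCV itself provides `cv2.dnn.NMSBoxes` for the suppression step. This version only shows what that step does. The thresholds of 0.5 and 0.4 are hypothetical.

```python
import numpy as np


def decode_yolo_outputs(outputs, img_w, img_h, conf_threshold=0.5):
    """Turn raw YOLO rows into pixel boxes [x, y, w, h], scores and class ids.

    Each row is assumed to be [cx, cy, w, h, objectness, class scores...]
    with box values normalized to the image size. As a simplification, the
    confidence is the best class score and objectness is ignored.
    """
    boxes, scores, class_ids = [], [], []
    for row in outputs:
        class_scores = row[5:]
        class_id = int(np.argmax(class_scores))
        confidence = float(class_scores[class_id])
        if confidence < conf_threshold:
            continue  # drop weak detections
        cx, cy = row[0] * img_w, row[1] * img_h
        w, h = row[2] * img_w, row[3] * img_h
        boxes.append([cx - w / 2, cy - h / 2, w, h])  # top-left corner + size
        scores.append(confidence)
        class_ids.append(class_id)
    return boxes, scores, class_ids


def iou(a, b):
    """Intersection over union of two [x, y, w, h] boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.4):
    """Return indices of boxes to keep, highest score first.

    A box is dropped if it overlaps an already kept box too much.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```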

Testing on database

The neural network has been tested with four different databases, each containing a completely different type of image.

The following datasets have been used:

- Patch-CAMELYON dataset

  - This dataset contains images that are used for medical image analysis.

- Rock-paper-scissors dataset

  - This dataset contains a large set of images of hands playing the rock-paper-scissors game.

- Beans dataset

  - This is a dataset of images of beans taken in the field using smartphone cameras. It consists of 3 classes: 2 disease classes and the healthy class. The diseases depicted are Angular Leaf Spot and Bean Rust. The data was annotated by experts from the National Crops Resources Research Institute (NaCRRI) in Uganda and collected by the Makerere AI research lab.

- Cats and Dogs dataset

  - This dataset contains a large set of images of cats and dogs.

These datasets all gave quite good results, but there is some room for improvement. For instance, the hyperparameters can be tuned better and the neural network can be extended with more layers.

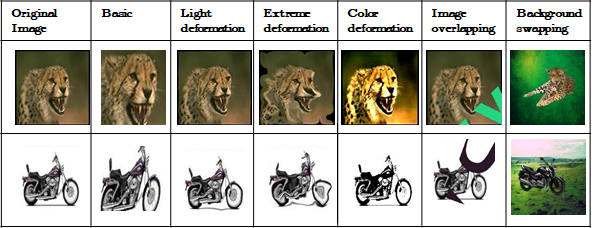

Data Augmentation

The dataset does not contain as many images as desired. If there is not enough data, neural networks tend to over-fit to the little data there is, which is undesirable. Therefore, a way has to be found to increase the size of the dataset. One way to do so is data augmentation: not only the original images are fed into the neural network, but also slightly altered copies of them. Alterations include:

- Translation

- Rotation

- Scaling

- Flipping

- Illumination

- Overlapping images

- Gaussian noise, etc.

Every altered image is treated as a new sample by the neural network. This is why the network can train on this duplicated data with much less risk of over-fitting to it.

Neural networks benefit from having more data to train on, simply because the classifications become more robust with more data. On top of that, neural networks that are trained on translated, resized or rotated images are much better at classifying objects that are slightly altered in any way (this is called invariance). For plastic under water, training a CNN to be invariant makes a lot of sense: there is no telling whether a piece of plastic will be upside down, up close, far away, etc.
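A few of the alterations listed above (flipping, rotation, illumination and Gaussian noise) can be sketched in NumPy. The sketch assumes square H x W x C uint8 images, such as the 500 x 500 test photos, because 90-degree rotations swap height and width. The probabilities, ranges and noise level are hypothetical choices.

Keras offers comparable transformations through `ImageDataGenerator`, for example `rotation_range`, `horizontal_flip` and `brightness_range`. Plain NumPy is used here only to make each step visible.

```python
import numpy as np


def augment(image, rng):
    """Return a randomly altered copy of a square H x W x C uint8 image."""
    out = image.astype(np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))  # rotate by 0, 90, 180 or 270 degrees
    out = out * rng.uniform(0.8, 1.2)  # illumination: make darker or brighter
    out = out + rng.normal(0.0, 5.0, size=out.shape)  # Gaussian noise
    return np.clip(out, 0, 255).astype(np.uint8)


def augment_dataset(images, copies_per_image, seed=0):
    """Keep every original image and add `copies_per_image` altered copies of it."""
    rng = np.random.default_rng(seed)
    augmented = []
    for img in images:
        augmented.append(img)
        augmented.extend(augment(img, rng) for _ in range(copies_per_image))
    return augmented
```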

Two sources for data augmentation in Keras:

https://towardsdatascience.com/machinex-image-data-augmentation-using-keras-b459ef87cd22

Data Augmentation code has been written and can be implemented once the dataset is final.

Transfer learning

- Finding out whether pre-training on a database such as ImageNet improves performance / transfer-learning method (Menno)

Transfer learning is a technique where, instead of randomly initializing the parameters of a model, a model that was pre-trained for a different task is used as the starting point. There are two ways to transfer the pre-trained model to the new task:

- fine-tuning the complete model;
- using it as a fixed feature extractor, on top of which a new (usually linear) model is trained.

For example, a neural network that was trained on the popular ImageNet dataset, which consists of images of objects (including categories such as "parachute" and "toaster"), can be applied to cancer metastasis detection.

Transfer learning seems to be a method that could work well for a smaller database. Currently, however, the validation images are only classified into the two largest classes. I think the biggest problem is the unbalanced database. This should be solved and then tested on both the VGG model and the transfer learning model.
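The NumPy sketch below illustrates the second option: a fixed feature extractor with a new linear model on top. The extractor is frozen and only a softmax classifier is trained on its outputs.

The extractor here is a hypothetical stand-in (a fixed random projection followed by ReLU), not an ImageNet model. In Keras, the frozen part would typically be a pre-trained network such as `keras.applications.VGG16(weights='imagenet', include_top=False)` with its `trainable` attribute set to `False`. The learning rate and the number of epochs are arbitrary choices.

```python
import numpy as np

_rng = np.random.default_rng(0)

# Stand-in for a pre-trained network: these weights are fixed and never updated.
FROZEN_W = _rng.normal(size=(64, 32))


def extract_features(images):
    """Hypothetical frozen feature extractor for 8 x 8 images (a real one would be a pre-trained CNN)."""
    flat = images.reshape(len(images), -1)
    return np.maximum(flat @ FROZEN_W, 0.0)  # ReLU features


def train_linear_head(features, labels, n_classes, lr=0.1, epochs=200):
    """Train only a softmax (linear) classifier on top of the frozen features."""
    mean, std = features.mean(axis=0), features.std(axis=0) + 1e-8
    x = (features - mean) / std
    w = np.zeros((x.shape[1], n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = x @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / len(x)  # cross-entropy gradient w.r.t. the logits
        w -= lr * x.T @ grad
        b -= lr * grad.sum(axis=0)
    return mean, std, w, b


def predict(images, head):
    """Classify images with the frozen extractor plus the trained linear head."""
    mean, std, w, b = head
    x = (extract_features(images) - mean) / std
    return np.argmax(x @ w + b, axis=1)
```

Only `w` and `b` change during training; `FROZEN_W` stays exactly as it was. This is what distinguishes the feature-extractor approach from fine-tuning the complete model.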

Dataset

With the new research goal, a fitting dataset is needed. There were doubts about whether the previously found dataset (Deep-sea Debris Database) would work for the application we now have in mind. Either another dataset could be searched for, or a new dataset could be made. The latter requires more time, but could give much better results if it is done right.

The idea is that a test setup will be created and placed in a bath tub, swimming pool or similar. Since the final proof-of-concept test will use such a setup, it makes sense to make the dataset under similar conditions. This is why the dataset will consist of self-taken pictures using the same setup (position, camera angle, lighting) as the test setup.

At least 200 different pictures should be taken. This number can later be increased using data augmentation. The pictures will be taken in a bath tub, with the camera facing down 30 cm above the water, the light on and no reflections. Different types of plastic will be gathered and submerged in the water of the bath tub. There will be images of:

- Plastic bags

- Plastic bottles

- Other macroplastics (cotton buds/butter cups/straws/milk packages/caps)

The ground truth of the images will be labelled by hand, either with a folder structure or with the labelling program labelImg. With labelImg, the position of each object can also be indicated. More than one type of plastic can appear in one image. There will also be some noise in the water, to make it somewhat harder for the neural network to recognize the plastics. This noise will take the form of leaves, similar to the noise that will be encountered at the Noria installation.

This means that our final product will mostly be a proof of concept. If the idea is actually realized on the Noria, it is advised to recreate the dataset in the Noria's environment. Otherwise the neural network may be confused by the sudden change in water colour and similar conditions. Based on its effectiveness in the bath tub setup, the possible effectiveness of the neural network in the Noria's environment will be discussed at the end of the project.
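labelImg can save labels as Pascal VOC XML files or in YOLO format. In YOLO mode, each image gets a text file with one line per object: `class_id x_center y_center width height`, with the coordinates normalized to the image size.

The small sketch below assumes that format. It converts these lines back to pixel corner coordinates, for example to check the labels visually or to compute overlaps. The function names are hypothetical.

```python
def yolo_line_to_box(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels.

    Assumes 'class_id x_center y_center width height' with coordinates in [0, 1].
    """
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(p) for p in parts[1:])
    x_min = (xc - w / 2) * img_w
    y_min = (yc - h / 2) * img_h
    x_max = (xc + w / 2) * img_w
    y_max = (yc + h / 2) * img_h
    return class_id, x_min, y_min, x_max, y_max


def read_yolo_labels(text, img_w, img_h):
    """Parse the contents of one label file, skipping empty lines."""
    return [yolo_line_to_box(line, img_w, img_h)
            for line in text.splitlines() if line.strip()]
```

For a 500 x 500 photo, the line `0 0.5 0.5 0.2 0.4` becomes class 0 with corners (200, 150) and (300, 350).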

Photos

A couple of test photos (about 70), containing only bags, bottles and leaves, have been made to practice with. They were scaled down to 500 x 500 pixels using a Photoshop script. See the examples below.

They were then categorized as 'bag' and 'bottle' (for now), after which data augmentation was applied, expanding the dataset to 1473 photos. The resulting dataset is only 20 MB and can be found on the group's OneDrive.

There are definitely improvements that can be made to this dataset, but that is exactly why this test dataset was made: to get feedback from group members.

Design

Besides the image recognition program, the robot itself will need to meet the requirements mentioned at the beginning of this page. There, it is stated that the robot should operate whenever the Noria is operating. This means that the battery life should be long, or that some form of power generation must be present at the Noria itself. The design should also be weatherproof and robust. The robot will need certain functionalities to meet these requirements. The focus will be on the parts of the robot that are essential to its operation. These are:

- Image recognition hardware;

- Data transfer;

- Power source;

- General assembly.

Image recognition hardware

The camera that is used must be weatherproof. It must also be possible to power the camera continuously, i.e. it should not rely only on a removable battery. In addition, it must be possible to retrieve the images from the camera immediately, so that they can be used for image recognition. Finally, the image quality must of course be high enough for the image recognition to work well.

A commonly used camera is the GoPro. The GoPro Hero6, Hero7 and Hero8 can be powered externally, also with a weatherproof connection [47] [48]. The internal battery can be left in place as a safety net in case external power cannot be provided. Without an internal battery, the camera turns off when the external power supply stops, and it does not turn back on automatically when the power is restored. With an internal battery, it switches seamlessly when necessary. The disadvantage is of course that the internal battery can also run out. GoPros do not offer very long battery life when recording for a long time; there are ways to improve this, which are elaborated on in the Power source part below.

For now, the focus is on the resolution that the GoPro cameras offer. The newest GoPro, the GoPro Hero8 Black, takes 12 MP photos and records video (including time-lapses) in 4K at up to 60 fps. It also has improved video stabilization, called HyperSmooth 2.0, which can come in handy when there are more waves, e.g. in rougher weather [49]. However, many external extensions (such as additional power sources from other companies) are not yet compatible with the newest GoPros. The GoPro Hero7 Black has about the same specifications when it comes to image and video quality. It also has video stabilization, but an older version, namely HyperSmooth [50].

GoPros are compact and relatively cheap compared to DSLR (Digital Single Lens Reflex) cameras. However, as mentioned before, battery life can be an issue. Another option could therefore be the Cyclapse Pro, which can also come with extensions such as solar panels. It has a built-in Nikon or Canon camera, which can provide higher quality [51]. The standard camera is the Canon T7, which takes 24.1 MP pictures and records full HD video at 30 fps [52]. The camera itself costs $700 USD, more than twice as much as a GoPro, and the costs increase quickly when additional components are bought. The complete Cyclapse Pro includes:

- a Digisnap Pro controller with Bluetooth to enable time-lapse photography;
- a Cyclapse weatherproof housing;
- a lithium-ion battery [51].

Because of this, the Cyclapse Pro module costs over $3000 USD. The module is also not as compact as a GoPro, since DSLR cameras themselves are already much larger than GoPros. Before a choice can be made between the two options, the data transfer options and additional power sources must be considered.

Data transfer

A GoPro creates its own Wi-Fi signal, to which a phone can be connected using the GoPro app. The data could then be sent from the phone to a computer. Another option could be Auto Upload, which is part of GoPro Plus: for a monthly or yearly fee, the GoPro automatically uploads its footage to the cloud [53] [54]. However, this works through the GoPro app, which requires a mobile device, while the image recognition itself will run on a computer. Also, when auto uploading to the cloud, the images/videos are not deleted from the GoPro's internal storage. Deleting them will be necessary for the operation of our device, since otherwise the GoPro storage will fill up quickly. Besides, it is not completely clear whether Auto Upload requires the GoPro and the mobile device to be connected to the same Wi-Fi network. Finally, to auto upload, the GoPro must be connected to a power source and be charged to at least 70%. The power source solution described below can provide this, but it may not always be possible to keep the battery above 70%.

The Cyclapse Pro also offers Wi-Fi options to transfer data [55]. The DigiSnap Pro within the Cyclapse Pro can transfer images from the camera to an FTP (File Transfer Protocol) server on the local network or the internet. The DigiSnap Pro is most commonly used with FTP image transfers via USB cellular modems and with local USB download. The DigiSnap Pro also comes with an Android app. In this app, the DigiSnap Pro can be configured to transfer every image taken by the camera automatically to a specified FTP folder on the internet, using a USB cellular modem.
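On the computer side, images that the DigiSnap Pro has uploaded to an FTP server could be fetched with Python's standard `ftplib` module. This is a minimal sketch that assumes a flat remote folder of JPEG files. The host, credentials and folder names in the usage comment are placeholders. Keeping track of already downloaded files in a set is just one possible design.

```python
import os


def download_new_images(ftp, remote_dir, local_dir, already_downloaded):
    """Download JPEG files from remote_dir that have not been fetched before.

    `ftp` is a connected, logged-in ftplib.FTP object (or any object with the
    same cwd/nlst/retrbinary methods). Returns the paths of the new local files.
    """
    os.makedirs(local_dir, exist_ok=True)
    ftp.cwd(remote_dir)
    new_paths = []
    for name in ftp.nlst():  # file names in the current remote folder
        if name in already_downloaded or not name.lower().endswith((".jpg", ".jpeg")):
            continue
        local_path = os.path.join(local_dir, name)
        with open(local_path, "wb") as f:
            ftp.retrbinary(f"RETR {name}", f.write)  # stream the file to disk
        already_downloaded.add(name)
        new_paths.append(local_path)
    return new_paths

# Example usage (placeholder host, credentials and folders):
# import ftplib
# with ftplib.FTP("ftp.example.com") as ftp:
#     ftp.login("user", "password")
#     seen = set()
#     new_images = download_new_images(ftp, "/noria", "images", seen)
```

Calling the function periodically with the same `seen` set only fetches images that arrived since the previous call. Those images can then be passed on to the image recognition.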

Power source

CamDo offers an add-on for the GoPro Hero3 to Hero7, called SolarX. It is a weatherproof module designed to be used together with the Blink or BlinkX time-lapse camera controllers [56], and it enables long-term operation of GoPro cameras for time-lapse photography. It includes a 9 W solar panel to recharge the included V50 battery. The solar panel can be upgraded to 18 W for use in cloudy or rainy areas. The solar panel charges the included lithium polymer battery, which outputs 5 volts to power the camera and can also power other accessories within the weatherproof enclosure. The solar panel can be attached directly to the casing or placed separately for optimal use. The complete module adds significant size to the GoPro, but the casing has extra space for additional accessories.

Whether the camera can run indefinitely on the solar panel alone depends on the weather and on the camera settings (e.g. how often a picture is taken). This in turn depends on the type of data that is needed. That is still being investigated, since it is not yet clear whether images or video footage will be used for image recognition. CamDo made a calculator to determine battery life and the best setup. Once the data type is clear, it can be used to determine the battery life and the number of solar panels needed [57].

With the BlinkX controller mentioned earlier, up to 10 separate daily or weekly schedules can be programmed, for either time-lapse or motion detection in photo, day, night, burst or video modes [58]. BlinkX powers the GoPro camera down between intervals. This increases battery life significantly and makes long-term time-lapse sequences possible. The controller can be powered from the GoPro battery and does not require a separate power source. The SolarX itself costs $995 USD (with a 9 W solar panel), the BlinkX controller is $355 USD and the GoPro Hero7 Black is around $230 USD. In total, this is cheaper than the Cyclapse Pro, which does not yet include a rechargeable power source.

An important remark is that CamDo is an external company that makes add-ons for GoPro cameras. Its products are essentially a workaround to let GoPros do what they were not designed for, and they can therefore be less reliable than the Harbortronics Cyclapse Pro. However, the GoPro is a relatively cheap and much lighter option. Also, these reliability problems mainly apply to older GoPro versions such as the Hero4 Black [59]. This has probably been improved in newer versions (more research on this is needed before a choice is made).

The Cyclapse Pro also offers a solar panel extension [60]. Without a solar panel, a full battery can take around 3000 images [55]. The 20 W solar panel can keep the battery charged. A second battery pack can be added to increase how long the system operates without charging (e.g. under cloudy skies) [61]. Like the GoPro setup, it uses a controller, the Digisnap Pro, to reduce battery usage and offer programming options [51]. The total costs (depending on the specific add-ons) are around $4000 USD, which is significantly higher than for the GoPro.

Data transfer increases the required power: transferring data more frequently uses more battery power. The Cyclapse Pro offers a setting to upload after every 30 pictures, which is a good balance between battery life and frequent uploads.
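Adding up the prices quoted above for the GoPro setup, and comparing them with the price given for the Cyclapse Pro module:

```latex
\underbrace{995}_{\text{SolarX (9 W)}} + \underbrace{355}_{\text{BlinkX}} + \underbrace{230}_{\text{GoPro Hero7 Black}} = 1580\ \text{USD} \;<\; 3000\ \text{USD (Cyclapse Pro module)}
```

These are the approximate prices mentioned in the text. Upgrades such as the 18 W solar panel are not included.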
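How long one charge lasts depends on how often a picture is taken. Below is a rough sketch. It assumes a constant capture interval around the clock and ignores solar charging and the extra power used for data transfer. The figure of 3000 images per charge comes from the text; the intervals in the example are hypothetical.

```python
def days_per_charge(images_per_charge, interval_minutes, battery_packs=1):
    """Estimate how many days the camera runs on fully charged batteries.

    Assumes one image every `interval_minutes`, day and night, and no
    recharging; data transfer and idle consumption are ignored.
    """
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    images_per_day = 24 * 60 / interval_minutes
    return battery_packs * images_per_charge / images_per_day


for interval in (1, 5, 10):
    print(f"one image every {interval} min: "
          f"{days_per_charge(3000, interval):.1f} days")
```

Under these assumptions, one image per minute empties a single battery in about two days, while one image every 10 minutes lasts about three weeks. A second battery pack doubles these numbers.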

Decision Matrix

| Factors | Resolution | Data transfer options | Power usage | Power generation | Costs | Total |
|---|---|---|---|---|---|---|
| GoPro Hero7 Black | (+)1 | (-)1? | (+)1? | (+)1 | (+)1 | (+)3 |
| Cyclapse Pro | (+)1 | (+)1 | (+)1? | (+)1 | (-)1 | (+)3 |
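The totals in the matrix are simply the sums of the factor scores. The small sketch below recomputes them, treating the provisional "?" scores as their current values. It also accepts an optional weight per factor. Weighting is not part of the matrix above, and the example weight for Costs is hypothetical, but it shows how the tie could be broken once it is agreed which factors matter most.

```python
# Scores copied from the decision matrix; "?" entries are treated as provisional.
SCORES = {
    "GoPro Hero7 Black": {"Resolution": 1, "Data transfer options": -1,
                          "Power usage": 1, "Power generation": 1, "Costs": 1},
    "Cyclapse Pro": {"Resolution": 1, "Data transfer options": 1,
                     "Power usage": 1, "Power generation": 1, "Costs": -1},
}


def totals(scores, weights=None):
    """Sum the factor scores of each option; factors without a weight count once."""
    weights = weights or {}
    return {option: sum(weights.get(factor, 1) * score for factor, score in factors.items())
            for option, factors in scores.items()}
```

`totals(SCORES)` reproduces the tie of 3 points each. With a hypothetical double weight on costs, `totals(SCORES, {"Costs": 2})`, the GoPro scores 4 and the Cyclapse Pro scores 2.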

Remarks:

- Do more research into the data transfer options of the GoPro.

- Do more research into energy usage (depending on the data type we want and the data transfer rate).

Localization and obstacle avoidance

The following options are the most common ways for robots to localize themselves and avoid collisions.

- Sonar: so(und) na(vigation) (and) r(anging)

Sonar is a technique that uses sound waves to determine the shape of the environment [62]. It is used by both machines and animals to locate and map objects. This is done by emitting a pulse of sound in a certain direction. When the sound waves hit an object, part of them is reflected back to the emitter. The time the echo takes to return gives the distance to the object, and the returning echo also gives information about the object's size. Sonar is known to be especially effective in water, since sound waves can travel longer distances in water.

The transmitters are mostly made of piezoelectric materials. Piezoelectric means that a voltage is generated when the material is deformed. The opposite also holds: when a voltage is applied to the material, it deforms. This property makes these materials useful as both receivers and transmitters.

There are two types of sonar: active and passive. Active sonar uses a transmitter and a receiver, whereas passive sonar only listens without transmitting. For this project, active sonar is the most usable, since the goal is to detect the sides of the river and ships. Ships can be found with passive sonar because they emit sound, but the edges of the river and shallow parts do not. Therefore, active sonar is the best option if sonar is chosen.
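The distance to an object follows from the time between emitting a pulse and receiving its echo. The sound travels to the object and back, so the distance is half the round-trip path. The sketch below assumes a speed of sound of about 1480 m/s in fresh water; the exact value depends on temperature. The function name is hypothetical.

```python
SPEED_OF_SOUND_WATER = 1480.0  # m/s, approximate value for fresh water at about 20 °C


def echo_distance(round_trip_time_s, speed_m_s=SPEED_OF_SOUND_WATER):
    """Distance (m) to a reflecting object from the echo's round-trip time (s)."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return speed_m_s * round_trip_time_s / 2  # the pulse travels there and back


print(echo_distance(0.01))  # an echo arriving after 10 ms
```

An echo that arrives after 10 ms therefore corresponds to an object about 7.4 m away.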

- Radar: ra(dio) d(etection) a(nd) r(anging)

Radar works on the same principle as Sonar, except that periodic radio waves are sent out instead of sound waves. If an object is moving towards or away from the transmitter, the frequency of the reflected radio waves changes slightly due to the Doppler effect [63].
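For a radar whose transmitter and receiver are at the same place, the frequency shift of the echo is approximately f_d = 2 v f_0 / c. Here v is the speed at which the object approaches along the line of sight, f_0 is the transmitted frequency and c is the speed of light. The factor 2 appears because the wave is shifted on the way to the object and again on the way back.

The sketch below implements this relation and its inverse. It is valid for speeds far below the speed of light. The 10 GHz carrier in the example is a hypothetical choice, not a property of a specific radar considered here.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift(radial_speed_m_s, carrier_hz):
    """Frequency shift (Hz) of the echo from an object approaching at radial_speed_m_s.

    A positive speed (approaching) raises the echo frequency;
    a negative speed (moving away) lowers it.
    """
    return 2 * radial_speed_m_s * carrier_hz / SPEED_OF_LIGHT


def radial_speed(shift_hz, carrier_hz):
    """Inverse of doppler_shift: approach speed (m/s) from a measured shift (Hz)."""
    return shift_hz * SPEED_OF_LIGHT / (2 * carrier_hz)


# A boat approaching at 5 m/s, seen by a hypothetical 10 GHz radar:
print(f"{doppler_shift(5.0, 10e9):.1f} Hz")
```

The shift is small: a boat approaching at 5 m/s changes a 10 GHz signal by only about 334 Hz. This is why the radar measures a frequency difference rather than comparing absolute frequencies.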

- LIDAR: li(ght) d(etection) a(nd) r(anging)

LIDAR also works on the same principle as Sonar; however, light is emitted instead of sound, and its reflection is observed by the receiver [64].

Best choice for the project

LIDAR is the worst of the three options, since the SPlaSh operates in a river environment. The Maas has a relatively high flow rate, especially where it enters the country, so the water contains many bouncing sand grains [65]. The light will be scattered by the sand, which leads to a distorted signal being received by the robot. Therefore, the choice has to be made between Sonar and Radar.

Radar is not able to detect smaller objects [66]. This might be a problem when an item such as a rock is not on the bottom of the river but a bit above it: it can hit the robot and potentially damage it. The specific dimensions of items that can or cannot be detected by Radar are not known at this point, and more research is needed if Radar technology is chosen. Sonar seems like the ideal solution, for three reasons:

- it is frequently used underwater in oceans;
- it can identify smaller objects;
- it is not disturbed by sand or other natural causes.

However, Sonar uses sound, and this sound might be harmful to marine life or scatter small objects [66]. The harm to marine life mostly concerns animals that use echolocation themselves, such as whales, which do not live in rivers. However, it is not known whether river wildlife is also affected by Sonar.

The conclusion is that Radar is the safest option for marine life, but since it cannot identify small objects, the robot itself might be damaged. Sonar, on the other hand, might be harmful to marine life, but it can identify smaller objects and can therefore do a better job of avoiding them.

In the end, the potential harm to the environment and marine life rules out Sonar, which means that Radar will be implemented in the design.

How will radar be implemented

As explained before, Radar sends out electromagnetic waves (radio signals) that are reflected and received by the robot. This is done repeatedly, and each time it can be determined whether an object moves towards or away from the robot. If an object approaches faster than the speed at which the robot itself is travelling, the object is moving towards the robot. This can be used to identify ships: if a ship moves towards the robot, the robot has to find a safe way to move aside. If an object approaches at the same speed as the robot is travelling, it is a stationary object, such as the side of the river or a large object in the river. This is used to determine the path.

More research is to be done on this subject, for example:

- Is the technology able to detect shallow waters?

- How big does an object have to be to be detected?

If the answers to these questions turn out to be very unfavourable, an alternative will have to be found.
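The reasoning above compares how fast an object approaches with the robot's own speed. Below is a sketch of that rule, under three assumptions:

- the robot travels straight towards the object;
- its own speed is measured over ground, because the river current moves the water but not the banks;
- a hypothetical tolerance of 0.2 m/s absorbs measurement noise.

```python
def classify_object(closing_speed, robot_speed, tolerance=0.2):
    """Rough classification of a radar target, following the reasoning above.

    closing_speed: rate (m/s) at which the distance to the object shrinks,
                   e.g. derived from the Doppler shift of the echo.
    robot_speed:   the robot's own speed over ground (m/s), assuming it
                   heads straight towards the object.
    """
    difference = closing_speed - robot_speed
    if abs(difference) <= tolerance:
        return "stationary"   # river bank or large fixed object: use for path planning
    if difference > 0:
        return "approaching"  # e.g. a ship coming towards the robot: move aside
    return "moving away"      # object moving in the robot's direction of travel
```

For example, a robot moving at 1 m/s that measures a closing speed of 5 m/s sees an approaching object. A closing speed of 1 m/s means the object is standing still.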

From data to information

Stakeholders like Rijkswaterstaat do not want raw data, but information. The data will therefore need to be converted into useful information to satisfy the stakeholders. To do so, the DIKAR model can be used, which stands for data, information, knowledge, action and result. Data represents the raw numbers: stored, but not managed in a way that allows them to be processed easily. Information comes from data when it is processed: it gets a form that makes it easier to understand or to find relationships. The last three stages (knowledge, action and result) are carried out by the stakeholder. When information is understood, it becomes knowledge of the stakeholder. With this knowledge, actions can be taken that in the end give results.

The design collects data by means of images. These images are then labelled by the image recognition program. When processed correctly, all these separately labelled images together form the information. The image recognition will label what kind of plastic can be seen in an image or within a frame. All of this can be combined into information about how much of a certain type of plastic is picked up within a certain amount of time. The location where the plastic is picked up, i.e. where the Noria was placed, can be included as well. This can show relationships between the amount of (each type of) waste and location. If the image recognition allows it, the size of the plastic waste could also be included, as well as the brand of the product (e.g. Coca Cola, Red Bull etc.). All of this can provide useful information and relationships that can be used to take action.
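The step from labelled images (data) to information can be as simple as counting detections per location and plastic type within a time window. This is a minimal sketch. The `Detection` record, its fields and the location names in the example are hypothetical, since the output format of the image recognition has not been decided yet.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Detection:
    """One object labelled by the image recognition (hypothetical record)."""
    timestamp: datetime
    location: str      # where the Noria was placed
    plastic_type: str  # label predicted by the network, e.g. "bottle"


def counts_per_location_and_type(detections, start, end):
    """Count detections per (location, plastic type) in the interval [start, end)."""
    return Counter(
        (d.location, d.plastic_type)
        for d in detections
        if start <= d.timestamp < end
    )


detections = [
    Detection(datetime(2020, 6, 1, 9, 0), "site A", "bottle"),
    Detection(datetime(2020, 6, 1, 9, 5), "site A", "bag"),
    Detection(datetime(2020, 6, 1, 9, 7), "site A", "bottle"),
    Detection(datetime(2020, 6, 2, 14, 0), "site B", "bag"),
]
week = counts_per_location_and_type(detections, datetime(2020, 6, 1), datetime(2020, 6, 8))
print(week.most_common())
```

The resulting counts per location and type are the kind of information a stakeholder could turn into knowledge. Extra fields such as size or brand could be added to the record if the image recognition supports them.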

Test Plan

Goal

Test how many of the plastic pieces in the water are identified correctly.

Hypothesis

At least 85% of the plastic pieces in 50 images of plastic in water will be identified correctly.

Materials

- Camera

- Plastic waste

- Image recognition software

- Reservoir with water

Method

- Throw different types of plastic waste in the water

- Take 50 different images of this from above, with the camera

- Add the images to a folder

- Run the image recognition software

- Analyze how many pieces of plastic are correctly identified
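The analysis step can be written down explicitly, so that the hypothesis is checked the same way every time. This sketch assumes the result for each image is recorded as a pair (pieces identified correctly, pieces present). That recording format and the function names are hypothetical.

```python
def identification_rate(results):
    """Fraction of plastic pieces identified correctly over all images.

    `results` holds one (n_correct, n_present) pair per image.
    """
    correct = sum(c for c, _ in results)
    present = sum(p for _, p in results)
    if present == 0:
        raise ValueError("no plastic pieces in the results")
    return correct / present


def hypothesis_holds(results, threshold=0.85, expected_images=50):
    """Check the hypothesis: at least 85% correct over exactly 50 images."""
    if len(results) != expected_images:
        raise ValueError(f"expected {expected_images} images, got {len(results)}")
    return identification_rate(results) >= threshold
```

For example, suppose 45 images each have both of their 2 pieces recognized and 5 images have none. The rate is then 90 out of 100 pieces, so the hypothesis holds.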

Useful sources

Convolutional neural networks for visual recognition [67]

Datasets (Marijn)

- Dataset #1 underwater plastic - J-EDI dataset: http://www.godac.jamstec.go.jp/catalog/dsdebris/metadataList?lang=en

- Dataset #2 underwater debris, plants, and fish - MBARI research image gallery: https://www.mbari.org/products/image-gallery/

Data from the two sources above will have to be annotated by hand. The Google Open Images Dataset (OID), on the other hand, already has annotated images:

- Dataset #3 plastic in all environments - Google Open Images Dataset: https://storage.googleapis.com/openimages/web/visualizer/index.html?set=valtest&type=segmentation&c=%2Fm%2F05gqfk (images can be downloaded from https://storage.googleapis.com/openimages/web/download.html)

Datasets (Dennis)

- Dataset #1 underwater debris - The Australian Marine Debris Database: https://www.tangaroablue.org/database/

  - Since 2004, more than 15 million pieces of data have been entered into the Australian Marine Debris Database, creating a comprehensive overview of the amounts and types of marine debris affecting beaches around the country.

- Dataset #2 waste dataset - Kaggle (requires an account)

  - A dataset containing images of cardboard, glass, metal, paper, plastic and trash.

Logbook

Week 1

| Name | Total hours | Break-down |
|---|---|---|
| Kevin Cox | 6 | Meeting (1h), Problem statement and objectives (1.5h), Who are the users (1h), Requirements (0.5h), Adjustments on wiki-page (2h) |
| Menno Cromwijk | 9 | Meeting (1h), Thinking about project-ideas (4h), Working out previous CNN work (2h), creating planning (2h). |
| Dennis Heesmans | 8.5 | Meeting (1h), Thinking about project-ideas (3h), State-of-the-art: neural networks (3h), Adjustments on wiki-page (1.5h) |
| Marijn Minkenberg | 7 | Meeting (1h), Setting up wiki page (1h), State-of-the-art: ocean-cleaning solutions (part of which was moved to Problem Statement) (4h), Reading through wiki page (1h) |
| Lotte Rassaerts | 7 | Meeting (1h), Thinking about project-ideas (2h), State of the art: image recognition (4h) |

Week 2

| Name | Total hours | Break-down |
|---|---|---|
| Kevin Cox | 4.5 | Meeting (1.5h), Checking the wiki page (1h), Research and writing maritime transport (2h) |
| Menno Cromwijk | 10 | Meeting (1.5h), Installing CNN tools (2h), searching for biodiversity (4.5h), reading and updating wiki (2h) |
| Dennis Heesmans | 6.5 | Meeting (1.5h), Installing CNN tools (2h), USE analysis (3h) |
| Marijn Minkenberg | 9 | Meeting (1.5h), Checking the wiki page (1h), Installing CNN tools (2h), Research & writing WasteShark (4.5h) |
| Lotte Rassaerts | 5.5 | Meeting (1.5h), Research & writing Location and Plastic (4h) |

Week 3

| Name | Total hours | Break-down |
|---|---|---|
| Kevin Cox | 7.5 | Meeting (4h), project statement, objectives, users and requirements rewriting (3.5h) |
| Menno Cromwijk | 16 | Meeting (4h), planning (1h), reading wiki (1h), searching for database (6h), research Albatros (2h), reading and updating wiki page (2h) |
| Dennis Heesmans | 11 | Meeting (4.5h), Research & writing Plastic under water (2h), Calling IMS Services and installing keras (3h), Requirements and Test plan (1.5h) |
| Marijn Minkenberg | 13.5 | Meeting (4.5h), Research & writing Quantifying plastic waste (4h), Calling IMS Services and installing keras (3h), Reading through, and updating, wiki page (2h) |
| Lotte Rassaerts | 13 | Meeting (4h), Research & rewriting further exploration (3h), Research & writing robot requirements & functionalities (3h), Requirements and Test plan (1.5h), Start on ideas for robot design (1.5h) |

Week 4

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | 10 | Meeting (3h), contacting users (2h), Writing and researching localization and obstacle avoidance + contacting company about possibilities (5h) |

| Menno Cromwijk | 11.5 | Meeting (3.5h) Working on VGG model (4h) Working on tansfer learning (4h) |

| Dennis Heesmans | 11 | Meeting (4.5h), Installing Anaconda and corresponding packages (3h), Running test script (0.5h), Search database (1h), Trying to test CNN with other images (2h) |

| Marijn Minkenberg | 11 | Meeting (4.5h), Re-installing Anaconda and keras (1.5h), Running test script (0.5h), Finding 3 datasets (2.5h), Research & Writing Data Augmentation (2h) |

| Lotte Rassaerts | 9 | Meeting (3h), Thinking of and researching ideas/concepts for the design (2h), Researching power source/battery life (4h) |

Week 5

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | 9 | Meeting (4.5h), Meeting with Hans Brinkhof (Rijkswaterstaat) (1h), Getting in touch with Hans Brinkhof (1h), Writing minutes regarding the meeting (0.5h), Getting in touch with Rinze de Vries (1h), meeting with Rinze de Vries (1h) |

| Menno Cromwijk | 4.5 | Meeting (4.5h) |

| Dennis Heesmans | 19 | Meeting (4.5h), Implement different datasets in neural network (8h), Searching datasets (1h), Adjustments planning (0.5h), Wiki cleanup (2h), Write about Noria (2h), Watch object detection videos (1h) |

| Marijn Minkenberg | 15.5 | Meeting (4.5h), Coding Data Augmentation (3h), Dataset plan (2h), Set-up and taking test photos (3h), Processing photos for dataset using Photoshop and Spyder (3h) |

| Lotte Rassaerts | 15 | Meeting (4.5h), Meeting with Hans Brinkhof (Rijkswaterstaat) (1h), Adjusting planning and requirements (0.5h), Working on design (camera and power source) (6h), Working on design (data transfer and decision matrix) (3h) |

Week 6

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | 4 | Meeting (3h), Meeting with Rinze (1h) |

| Menno Cromwijk | 3 | Meeting (3h) |

| Dennis Heesmans | 11.5 | Meeting (3h), Watching videos YOLO (3h), Labeling images (4h), Meeting with Rinze (1h), Writing about YOLO (0.5h) |

| Marijn Minkenberg | 11 | Meeting (3h), Taking new pictures for test set (2h), Training model via Google Colab (2h), Expanding test set and labelling (4h) |

| Lotte Rassaerts | 6 | Meeting (3h), Researching data transfer for the GoPro (3h) |


References

- ↑ 1.0 1.1 Oceans. (2020, March 18). Retrieved April 23, 2020, from https://theoceancleanup.com/oceans/

- ↑ Wikipedia contributors. (2020, April 13). Microplastics. Retrieved April 23, 2020, from https://en.wikipedia.org/wiki/Microplastics

- ↑ Suaria, G., Avio, C. G., Mineo, A., Lattin, G. L., Magaldi, M. G., Belmonte, G., … Aliani, S. (2016). The Mediterranean Plastic Soup: synthetic polymers in Mediterranean surface waters. Scientific Reports, 6(1). https://doi.org/10.1038/srep37551

- ↑ Foekema, E. M., De Gruijter, C., Mergia, M. T., van Franeker, J. A., Murk, A. J., & Koelmans, A. A. (2013). Plastic in North Sea Fish. Environmental Science & Technology, 47(15), 8818–8824. https://doi.org/10.1021/es400931b

- ↑ Rochman, C. M., Hoh, E., Kurobe, T., & Teh, S. J. (2013). Ingested plastic transfers hazardous chemicals to fish and induces hepatic stress. Scientific Reports, 3(1). https://doi.org/10.1038/srep03263

- ↑ Stevens, A. (2019, December 3). Tiny plastic, big problem. Retrieved May 10, 2020, from https://www.sciencenewsforstudents.org/article/tiny-plastic-big-problem

- ↑ Peels, J. (2019). Plasticsoep in de Maas en de Waal veel erger dan gedacht, vrijwilligers vinden 77.000 stukken afval [Plastic soup in the Maas and the Waal much worse than expected, volunteers find 77,000 pieces of litter]. Retrieved May 6, 2020, from https://www.omroepbrabant.nl/nieuws/2967097/plasticsoep-in-de-maas-en-de-waal-veel-erger-dan-gedacht-vrijwilligers-vinden-77000-stukken-afval

- ↑ 8.0 8.1 WasteShark ASV | RanMarine Technology. (2020, February 27). Retrieved May 2, 2020, from https://www.ranmarine.io/

- ↑ 9.0 9.1 9.2 CORDIS. (2019, March 11). Marine Litter Prevention with Autonomous Water Drones. Retrieved May 2, 2020, from https://cordis.europa.eu/article/id/254172-aquadrones-remove-deliver-and-safely-empty-marine-litter

- ↑ 10.0 10.1 10.2 Swan, E. C. (2018, October 31). Trash-eating “shark” drone takes to Dubai marina. Retrieved May 2, 2020, from https://edition.cnn.com/2018/10/30/middleeast/wasteshark-drone-dubai-marina/index.html

- ↑ Wikipedia contributors. (2020, May 2). Lidar. Retrieved May 2, 2020, from https://en.wikipedia.org/wiki/Lidar

- ↑ Albatross. (n.d.). Floating microplastic database. Retrieved from https://en.opendata.plastic.research.pirika.org/

- ↑ 13.0 13.1 Noria - Schonere wateren door het probleem bij de bron aan te pakken. (2020, January 27). Retrieved May 21, 2020, from https://nlinbusiness.com/steden/munchen/interview/noria-schonere-wateren-door-het-probleem-bij-de-bron-aan-te-pakken-ZG9jdW1lbnQ6LUx6YXdoalp2cGpvcEVXbVZYaFI=

- ↑ 14.0 14.1 Emmerik, T., & Schwarz, A. (2019). Plastic debris in rivers. WIREs Water, 7(1). https://doi.org/10.1002/wat2.1398

- ↑ Morritt, D., Stefanoudis, P. V., Pearce, D., Crimmen, O. A., & Clark, P. F. (2014). Plastic in the Thames: A river runs through it. Marine Pollution Bulletin, 78(1–2), 196–200. https://doi.org/10.1016/j.marpolbul.2013.10.035

- ↑ Martin, C., Parkes, S., Zhang, Q., Zhang, X., McCabe, M. F., & Duarte, C. M. (2018). Use of unmanned aerial vehicles for efficient beach litter monitoring. Marine Pollution Bulletin, 131, 662–673. https://doi.org/10.1016/j.marpolbul.2018.04.045

- ↑ 17.0 17.1 Kylili, K., Kyriakides, I., Artusi, A., & Hadjistassou, C. (2019). Identifying floating plastic marine debris using a deep learning approach. Environmental Science and Pollution Research, 26(17), 17091–17099. https://doi.org/10.1007/s11356-019-05148-4

- ↑ Nicholson, C. (n.d.). A Beginner’s Guide to Neural Networks and Deep Learning. Retrieved April 22, 2020, from https://pathmind.com/wiki/neural-network

- ↑ Cheung, K. C. (2020, April 17). 10 Use Cases of Neural Networks in Business. Retrieved April 22, 2020, from https://algorithmxlab.com/blog/10-use-cases-neural-networks/#What_are_Artificial_Neural_Networks_Used_for

- ↑ Amidi, A., & Amidi, S. (n.d.). CS 230 - Recurrent Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-recurrent-neural-networks

- ↑ Hopfield Network - Javatpoint. (n.d.). Retrieved April 22, 2020, from https://www.javatpoint.com/artificial-neural-network-hopfield-network

- ↑ Hinton, G. E. (2007). Boltzmann Machines. Retrieved from https://www.cs.toronto.edu/~hinton/csc321/readings/boltz321.pdf

- ↑ Amidi, A., & Amidi, S. (n.d.). CS 230 - Convolutional Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-convolutional-neural-networks

- ↑ Yamashita, R., Nishio, M., Do, R., & Togashi, K. (2018). Convolutional neural networks: an overview and application in radiology. Insights into Imaging, 9. https://doi.org/10.1007/s13244-018-0639-9

- ↑ Qian, N. (1999, January 12). On the momentum term in gradient descent learning algorithms. PubMed. Retrieved April 22, 2020, from https://www.ncbi.nlm.nih.gov/pubmed/12662723

- ↑ Hinton, G., Srivastava, N., Swersky, K., Tieleman, T., & Mohamed, A. (2016, December 15). Neural Networks for Machine Learning: Overview of ways to improve generalization [Slides]. Retrieved from http://www.cs.toronto.edu/~hinton/coursera/lecture9/lec9.pdf

- ↑ Kingma, D. P., & Ba, J. (2015). Adam: A Method for Stochastic Optimization. Presented at the 3rd International Conference for Learning Representations, San Diego.

- ↑ Nesterov, Y. (1983). A method for unconstrained convex minimization problem with the rate of convergence O(1/k^2).

- ↑ Dozat, T. (2016). Incorporating Nesterov Momentum into Adam. Retrieved from https://openreview.net/pdf?id=OM0jvwB8jIp57ZJjtNEZ

- ↑ 30.0 30.1 30.2 Seif, G. (2018, January 21). Deep Learning for Image Recognition: why it’s challenging, where we’ve been, and what’s next. Retrieved April 22, 2020, from https://towardsdatascience.com/deep-learning-for-image-classification-why-its-challenging-where-we-ve-been-and-what-s-next-93b56948fcef

- ↑ Lee, G., & Fujita, H. (2020). Deep Learning in Medical Image Analysis. New York, United States: Springer Publishing.

- ↑ 32.0 32.1 32.2 Simonyan, K., & Zisserman, A. (2015, January 1). Very deep convolutional networks for large-scale image recognition. Retrieved April 22, 2020, from https://arxiv.org/pdf/1409.1556.pdf

- ↑ Culurciello, E. (2018, December 24). Navigating the Unsupervised Learning Landscape - Intuition Machine. Retrieved April 22, 2020, from https://medium.com/intuitionmachine/navigating-the-unsupervised-learning-landscape-951bd5842df9

- ↑ Bosse, S., Becker, S., Müller, K.-R., Samek, W., & Wiegand, T. (2019). Estimation of distortion sensitivity for visual quality prediction using a convolutional neural network. Digital Signal Processing, 91, 54–65. https://doi.org/10.1016/j.dsp.2018.12.005

- ↑ Brooks, R. (2018, July 15). [FoR&AI] Steps Toward Super Intelligence III, Hard Things Today – Rodney Brooks. Retrieved April 22, 2020, from http://rodneybrooks.com/forai-steps-toward-super-intelligence-iii-hard-things-today/

- ↑ Christensen, J. H., Mogensen, L. V., Galeazzi, R., & Andersen, J. C. (2018). Detection, Localization and Classification of Fish and Fish Species in Poor Conditions using Convolutional Neural Networks. 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV). https://doi.org/10.1109/auv.2018.8729798

- ↑ Castrillon-Santana, M., Lorenzo-Navarro, J., Gomez, M., Herrera, A., & Marín-Reyes, P. A. (2018, January 1). Automatic Counting and Classification of Microplastic Particles. Retrieved April 23, 2020, from https://www.scitepress.org/Papers/2018/67250/67250.pdf

- ↑ Canu, S. (2019, June 27). YOLO object detection using Opencv with Python. Retrieved May 26, 2020, from https://pysource.com/2019/06/27/yolo-object-detection-using-opencv-with-python/

- ↑ Brownlee, J. (2019, October 7). How to Perform Object Detection With YOLOv3 in Keras. Retrieved May 29, 2020, from https://machinelearningmastery.com/how-to-perform-object-detection-with-yolov3-in-keras/

- ↑ Redmon, J. (2019, November 15). pjreddie/darknet. Retrieved May 29, 2020, from https://github.com/pjreddie/darknet/wiki/YOLO:-Real-Time-Object-Detection

- ↑ 41.0 41.1 Kathuria, A. (2018, April 23). What’s new in YOLO v3? Retrieved May 29, 2020, from https://towardsdatascience.com/yolo-v3-object-detection-53fb7d3bfe6b

- ↑ Lebreton. (2018, January 1). OSPAR Background document on pre-production Plastic Pellets. Retrieved May 3, 2020, from https://www.ospar.org/documents?d=39764

- ↑ 43.0 43.1 43.2 Schone Rivieren. (2019). Wat spoelt er aan op rivieroevers? Resultaten van twee jaar afvalmonitoring aan de oevers van de Maas en de Waal. Retrieved from https://www.schonerivieren.org/wp-content/uploads/2020/05/Schone_Rivieren_rapportage_2019.pdf

- ↑ Staatsbosbeheer. (2019, September 12). Dossier afval in de natuur. Retrieved May 3, 2020, from https://www.staatsbosbeheer.nl/over-staatsbosbeheer/dossiers/afval-in-de-natuur

- ↑ Buffon, X. (2019, May 20). Robotic Detection of Marine Litter Using Deep Visual Detection Models. Retrieved May 9, 2020, from https://ieeexplore.ieee.org/abstract/document/8793975

- ↑ Thung, G. (2017, April 10). Dataset of images of trash; Torch-based CNN for garbage image classification. Retrieved May 9, 2020, from https://github.com/garythung/trashnet

- ↑ Coleman, D. (2020, April 8). Can You Run a GoPro HERO8, HERO7, or HERO6 with External Power but Without an Internal Battery? Retrieved May 22, 2020, from https://havecamerawilltravel.com/gopro/external-power-internal-battery/

- ↑ Air Photography. (2018, April 29). Weatherproof External Power for GoPro Hero 5/6/7 | X~PWR-H5. Retrieved May 22, 2020, from https://www.youtube.com/watch?v=S6Y7a3ZtoeE

- ↑ GoPro. (n.d.). HERO8 Black Tech Specs. Retrieved May 22, 2020, from https://gopro.com/en/nl/shop/hero8-black/tech-specs?pid=CHDHX-801-master

- ↑ GoPro. (n.d.-a). HERO7 Black Action Camera | GoPro. Retrieved May 22, 2020, from https://gopro.com/en/nl/shop/cameras/hero7-black/CHDHX-701-master.html

- ↑ 51.0 51.1 51.2 Harbortronics. (n.d.-b). Cyclapse Pro - Starter | Cyclapse. Retrieved May 22, 2020, from https://cyclapse.com/products/cyclapse-pro-starter/

- ↑ Canon USA. (n.d.). Canon U.S.A., Inc. | EOS Rebel T7 EF-S 18-55mm IS II Kit. Retrieved May 22, 2020, from https://www.usa.canon.com/internet/portal/us/home/products/details/cameras/eos-dslr-and-mirrorless-cameras/dslr/eos-rebel-t7-ef-s-18-55mm-is-ii-kit

- ↑ GoPro. (2020, May 22). Auto Uploading Your Footage to the Cloud With GoPro Plus. Retrieved May 23, 2020, from https://community.gopro.com/t5/en/Auto-Uploading-Your-Footage-to-the-Cloud-With-GoPro-Plus/ta-p/388304

- ↑ GoPro. (2020a, May 14). How to Add Media to GoPro PLUS. Retrieved May 23, 2020, from https://community.gopro.com/t5/en/How-to-Add-Media-to-GoPro-PLUS/ta-p/401627

- ↑ 55.0 55.1 Harbortronics. (n.d.-d). Support / DigiSnap Pro / Frequently Asked Questions | Cyclapse. Retrieved May 23, 2020, from https://cyclapse.com/support/digisnap-pro/frequently-asked-questions-faq/

- ↑ CamDo. (n.d.-b). SolarX Solar Upgrade Kit. Retrieved May 22, 2020, from https://cam-do.com/products/solarx-gopro-solar-system

- ↑ CamDo. (n.d.). Photography time-lapse calculator. Retrieved from https://cam-do.com/pages/photography-time-lapse-calculator

- ↑ CamDo. (n.d.-a). GoPro Motion Detector X-Band Sensor with Cable for Blink and BlinkX. Retrieved May 22, 2020, from https://cam-do.com/collections/blink-related-products/products/blinkx-time-lapse-camera-controller-for-gopro-hero5-6-7-8-cameras