Embedded Motion Control 2014 Group 7


Revision as of 21:48, 1 June 2014

Group members

| Name | Email |

|---|---|

| Maurice van de Ven | m dot l dot j dot v dot d dot ven @ student dot tue dot nl |

| Pim Duijsens | p dot j dot h dot duijsens @ student dot tue dot nl |

| Glenn Roumen | g dot h dot j dot roumen @ student dot tue dot nl |

| Wesley Peijnenburg | w dot peijnenburg @ student dot tue dot nl |

| Roel Offermans | r dot r dot offermans @ student dot tue dot nl |

Planning

| Week | Deadline | General plan | Group member | Specific task |
|---|---|---|---|---|
| 4 | 16-05-2014 | Complete Corridor Challenge | | |
| 5 | 19-05-2014 | Research Maze Strategies and define modules | | |
| | 19-05-2014 before 10:45 h. | | Roel | Research possibilities for wall and corner detection with OpenCV |
| | 19-05-2014 before 10:45 h. | | Glenn | Research possibilities for wall and corner detection with OpenCV |
| | 19-05-2014 before 10:45 h. | | Wesley | Research possibilities for arrow detection with OpenCV |
| | 19-05-2014 before 10:45 h. | | Maurice | Research possibilities for path planning and path tracker/driver |
| | 19-05-2014 before 10:45 h. | | Pim | Research possibilities for path planning and path tracker/driver |
| 6 | 26-05-2014 | Research functionality of ROS Packages: Gmapping and move_base | | |
| | 28-05-2014 | Decide: use packages or write completely new code | | |
| | 30-05-2014 | Conceptual versions of all modules are finished | | |
| 7 | 06-06-2014 | Integration of the modules | | |
| 8 | 13-06-2014 | Final presentation of the Maze Strategy | | |
| 9 | 20-06-2014 | Maze Competition | | |

Progress

| Name | Week 3 | Week 4 | Week 5 | Week 6 |

|---|---|---|---|---|

| Maurice van de Ven | All tutorials, not all C++ | |||

| Pim Duijsens | All tutorials, but not all C++ | |||

| Glenn Roumen | All tutorials | |||

| Wesley Peijnenburg | Tutorial #7 | |||

| Roel Offermans | All tutorials, not all C++ |

Time Table

| | Lectures | Group meetings | Mastering ROS and C++ | Preparing midterm assignment | Preparing final assignment | Wiki progress report | Other activities |

|---|---|---|---|---|---|---|---|

| Week 1 | |||||||

| Pim | 2 | ||||||

| Roel | 2 | ||||||

| Maurice | 2 | ||||||

| Wesley | 2 | ||||||

| Glenn | 2 | ||||||

| Week 2 | |||||||

| Pim | 2 | 2 | 4 | 3 | |||

| Roel | 0 | 2 | 10 | 6 | |||

| Maurice | 2 | 2 | 6 | 3 | |||

| Wesley | 2 | 2 | 4 | 2 | |||

| Glenn | 2 | 2 | 5 | 2 | |||

| Week 3 | |||||||

| Pim | 2 | 1 | 12 | 2 | |||

| Roel | 2 | 4 | 10 | 2 | |||

| Maurice | 3 | 10 | 4 | ||||

| Wesley | 2 | 3 | 10 | 1 | |||

| Glenn | 2 | 2 | 13 | 4 | |||

| Week 4 | |||||||

| Pim | 2 | 5 | 1 | 7 | 3 | ||

| Roel | 2 | 5 | |||||

| Maurice | 5 | 15 | |||||

| Wesley | 2 | 5 | 9 | 1 | |||

| Glenn | 2 | 5 | 6 | 1 | |||

| Week 5 | |||||||

| Pim | 2 | 2.5 | 7 | 0.5 | |||

| Roel | |||||||

| Maurice | 2 | 2.5 | 8 | ||||

| Wesley | 2 | 5 | 7 | 3 | |||

| Glenn | 2 | 2.5 | 7 | ||||

| Week 6 | |||||||

| Pim | |||||||

| Roel | |||||||

| Maurice | |||||||

| Wesley | 2 | 4 | 8 | 14 | |||

| Glenn | |||||||

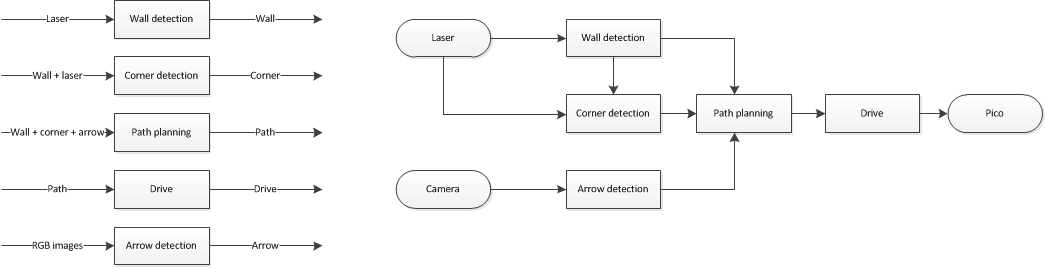

Different nodes and flow chart

Strategy for the Corridor Challenge

GMapping / SLAM

GMapping is being investigated as the SLAM algorithm.

To run GMapping correctly, a launch file gmap.launch is set up, which initializes the correct settings.

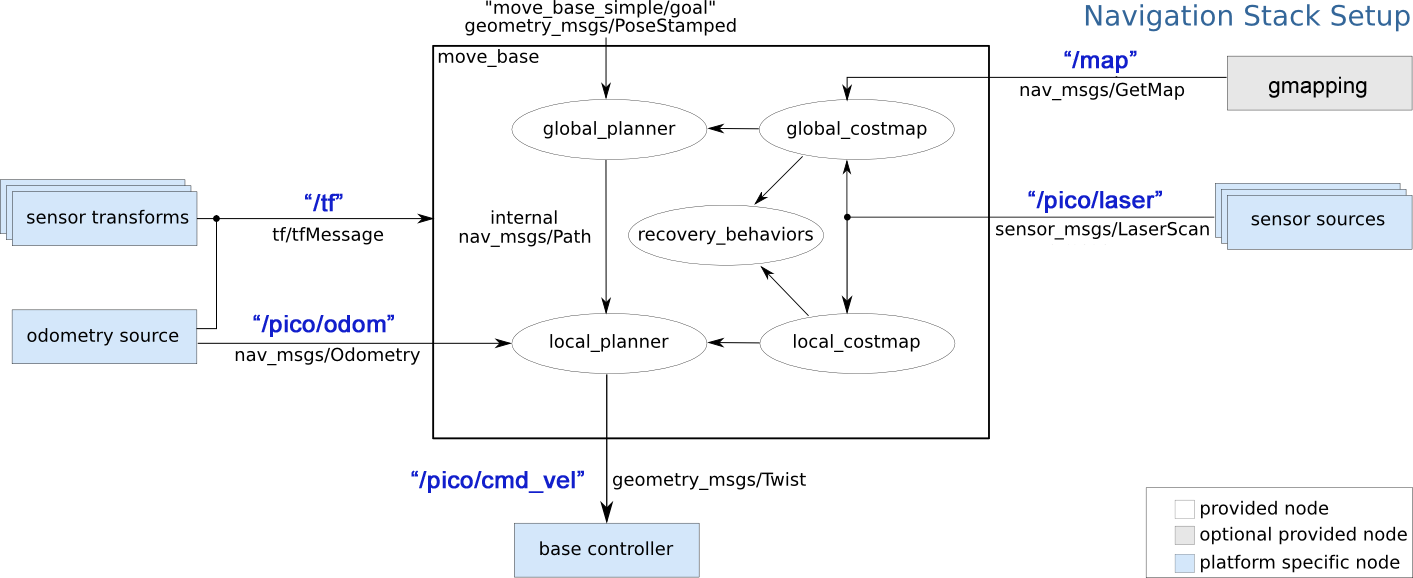

move_base is used as the navigation stack. A global overview of the move_base stack is given in the figure on the right.

To launch the move_base stack, a second launch file is created which initializes the correct settings. Overall, the move_base.launch file consists of the following files and settings, each covering a specific task:

gmap.launch

costmap_common_params.yaml

global_costmap_params.yaml

local_costmap_params.yaml

base_local_planner_params.yaml

remap /cmd_vel -> pico/cmd_vel

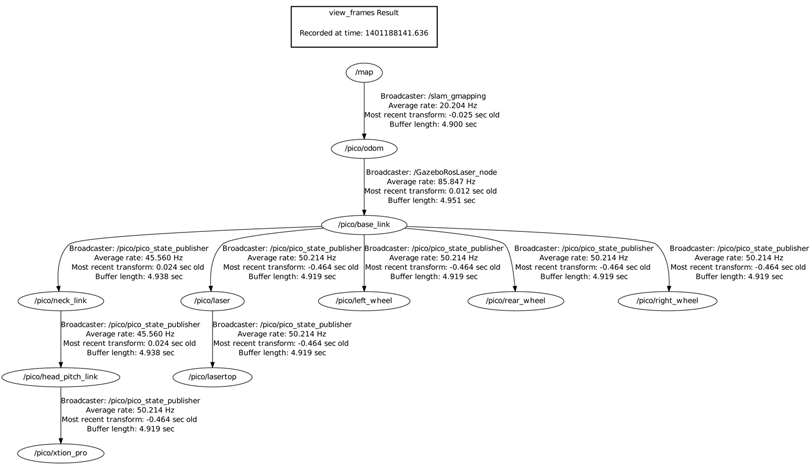

While gmapping and move_base are running a tf tree can be made to see how the different frames are linked. Viewing a tf tree can be done by:

$ rosrun tf view_frames

For correct settings of gmapping and move_base this results in:

Running GMapping and move_base in simulation:

1. Start Gazebo:

$ gazebo

2. Using another terminal, spawn the maze:

$ rosrun gazebo_map_spawner spawn_maze

3. Launch PICO:

$ roslaunch pico_gazebo pico.launch

4. Start RViz to visualize how PICO perceives the world through its sensors.

$ rosrun pico_visualization rviz

5. Launch the gmapping plugin for PICO

$ roslaunch pico_2ndnav gmap.launch

6. Launch the move_base package for PICO to navigate through the maze with the preferred settings.

$ roslaunch pico_2ndnav move_base.launch

Remarks

In local_costmap_params.yaml, static_map should be false: a local costmap is not static, because it moves with the robot.

In costmap_common_params.yaml, inflation_radius should probably be smaller than 0.55, because the robot has a much smaller radius (in safe_drive we used 0.25, if I remember correctly).

In pico_configuration.launch, we should look very closely at the pkg and type attributes that are used (for example, shouldn't the types end with .msg?). Furthermore, it seems that the launch file tries to run the messages as executables, which is probably not our intention.

OpenCV - Arrow recognition

The OpenCV C++ files can be compiled using one of the given commands below. For the g++ command, the packages need to be specified manually.

$ g++ demo_opencv.cpp `pkg-config opencv --cflags --libs` -o demo_opencv

Or you can have ROS find the libraries for you:

$ rosmake demo_opencv

Executing the program:

$ ./demo_opencv <argcount> <arg(s)>

Troubleshooting:

There have been problems with the OpenCV and Highgui libraries (static libraries). This problem can be solved by commenting out the old includes and replacing them as follows:

// #include "cv.h" // (commented out)

#include "opencv2/opencv.hpp"

// #include <highgui.h> // (commented out)

#include <opencv/highgui.h>

The program might not always respond to input. This can be due to a subscription to the wrong topic. Make sure you are streaming the images and check the ROS topics with:

rostopic list

The output then lists "/pico/camera", "/pico/asusxtion/rgb/image_color", or a different name. Replace the reference in the following line of code with the topic that is listed:

ros::Subscriber cam_img_sub = nh.subscribe("/pico/asusxtion/rgb/image_color", 1, &imageCallback);

Running OpenCV on an image stream from a bag file:

In three separate terminals:

$ roscore

$ rosbag play <location of bag file>

$ ./demo_opencv

Arrow recognition strategy:

The image is cropped using:

cv::Rect myROI(120,220,400,200); // Rect_(x,y,width,height)

cv::Mat croppedRef(img_rgb, myROI); // The window is 480 rows and 640 cols
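Constructing a cv::Mat from a region of interest fails with an assertion when the rectangle does not fit inside the image, so it can help to check the crop window first. The sketch below does this with a plain struct instead of cv::Rect, so that it compiles without OpenCV. The names Roi, roiFitsImage and clampRoi are hypothetical and do not come from the project code.

```cpp
#include <algorithm>

// Plain stand-in for cv::Rect (x, y, width, height); hypothetical name.
struct Roi {
    int x, y, width, height;
};

// True if the ROI lies completely inside an image of cols x rows pixels.
bool roiFitsImage(const Roi& roi, int cols, int rows) {
    return roi.x >= 0 && roi.y >= 0 &&
           roi.width > 0 && roi.height > 0 &&
           roi.x + roi.width <= cols &&
           roi.y + roi.height <= rows;
}

// Shrinks the ROI so that it stays inside the image. An ROI that lies
// completely outside the image comes back with zero width or height.
Roi clampRoi(const Roi& roi, int cols, int rows) {
    const int x0 = std::max(0, std::min(roi.x, cols));
    const int y0 = std::max(0, std::min(roi.y, rows));
    const int x1 = std::max(0, std::min(roi.x + roi.width, cols));
    const int y1 = std::max(0, std::min(roi.y + roi.height, rows));
    return Roi{x0, y0, x1 - x0, y1 - y0};
}
```

For the crop window used here, roiFitsImage(Roi{120, 220, 400, 200}, 640, 480) holds, because 120 + 400 <= 640 and 220 + 200 <= 480.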

The image is converted from red-green-blue (RGB) to hue-saturation-value (HSV). The red arrow is printed on a white background. With the PICO camera, this gives a transient (green) edge colour, which is very useful for arrow recognition. A binary (two-colour) image is therefore created by iterating over every pixel and setting it to white if it has this specific green colour. All other pixels remain black; the result is called the threshold image. This image is then blurred, and an edge detection algorithm returns the edges it can find, each in the form Vec4i line[x1,y1,x2,y2].
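The per-pixel thresholding step can be sketched as follows. This is a minimal illustration on a flat pixel buffer rather than a cv::Mat, so it compiles without OpenCV. The types HsvPixel and HsvRange and the function thresholdMask are hypothetical names, and the green range used in the usage note is an assumed value, not the one tuned for the PICO camera. In OpenCV itself, cv::inRange performs the same test on a whole image.

```cpp
#include <cstdint>
#include <vector>

// One HSV pixel in OpenCV's 8-bit convention: H in 0..179, S and V in 0..255.
struct HsvPixel {
    std::uint8_t h, s, v;
};

// Inclusive lower and upper bound per channel; hypothetical helper type.
struct HsvRange {
    HsvPixel lo, hi;
};

// Builds the binary threshold image: 255 (white) where the pixel falls
// inside the range on all three channels, 0 (black) everywhere else.
std::vector<std::uint8_t> thresholdMask(const std::vector<HsvPixel>& image,
                                        const HsvRange& range) {
    std::vector<std::uint8_t> mask;
    mask.reserve(image.size());
    for (const HsvPixel& p : image) {
        const bool inRange = p.h >= range.lo.h && p.h <= range.hi.h &&
                             p.s >= range.lo.s && p.s <= range.hi.s &&
                             p.v >= range.lo.v && p.v <= range.hi.v;
        mask.push_back(inRange ? 255 : 0);
    }
    return mask;
}
```

With an assumed range such as HsvRange{{40, 100, 100}, {80, 255, 255}}, a saturated green pixel (hue 60) becomes white, while red pixels and weakly saturated pixels stay black.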

The list of edges is the basis for the arrow recognition. All lines are defined from left to right (so x2 > x1). The lines are filtered so that only lines with a slope of +30 [deg] or -30 [deg], within a margin, remain. Every line with a negative slope is compared with every line with a positive slope. Both the +30 [deg] and the -30 [deg] line have a begin point and an end point. If the left (begin) points are close enough to each other, the output is "LEFT ARROW"; if instead the right (end) points are close enough to each other, the arrow points to the right.
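The filtering and comparison described above can be sketched as follows. This is an illustration under assumptions, not the project's actual code: the lines are stored as std::array<int, 4> with the same {x1, y1, x2, y2} layout as cv::Vec4i, the margin of 10 degrees and the maximum point distance of 10 pixels are assumed values, and the strings "RIGHT ARROW" and "NO ARROW" are assumed counterparts of the "LEFT ARROW" output.

```cpp
#include <array>
#include <cmath>
#include <cstdlib>
#include <string>
#include <vector>

// Same layout as cv::Vec4i: {x1, y1, x2, y2}, defined left to right (x2 > x1).
using Line = std::array<int, 4>;

// Angle of the line in degrees. In image coordinates y grows downward, so the
// sign is mirrored compared to a normal plot; the test below is symmetric.
double angleDeg(const Line& l) {
    const double kPi = 3.14159265358979323846;
    return std::atan2(double(l[3] - l[1]), double(l[2] - l[0])) * 180.0 / kPi;
}

// True if two points are within maxDist pixels of each other on both axes.
bool pointsClose(int ax, int ay, int bx, int by, int maxDist) {
    return std::abs(ax - bx) <= maxDist && std::abs(ay - by) <= maxDist;
}

// marginDeg and maxDist are assumed tuning values.
std::string classifyArrow(const std::vector<Line>& lines,
                          double marginDeg = 10.0, int maxDist = 10) {
    std::vector<Line> positive, negative;
    for (const Line& l : lines) {
        const double a = angleDeg(l);
        if (std::fabs(a - 30.0) <= marginDeg) {
            positive.push_back(l);  // slope of about +30 degrees
        } else if (std::fabs(a + 30.0) <= marginDeg) {
            negative.push_back(l);  // slope of about -30 degrees
        }
    }
    // Compare every positive-slope line with every negative-slope line.
    for (const Line& p : positive) {
        for (const Line& n : negative) {
            if (pointsClose(p[0], p[1], n[0], n[1], maxDist)) {
                return "LEFT ARROW";   // begin points meet: tip on the left
            }
            if (pointsClose(p[2], p[3], n[2], n[3], maxDist)) {
                return "RIGHT ARROW";  // end points meet: tip on the right
            }
        }
    }
    return "NO ARROW";  // assumed fallback when no pair matches
}
```

For example, the two lines {100, 100, 150, 129} and {100, 100, 150, 71} share their begin point and have angles of roughly +30 and -30 degrees, so they are classified as a left arrow.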