PRE2019 3 Group17


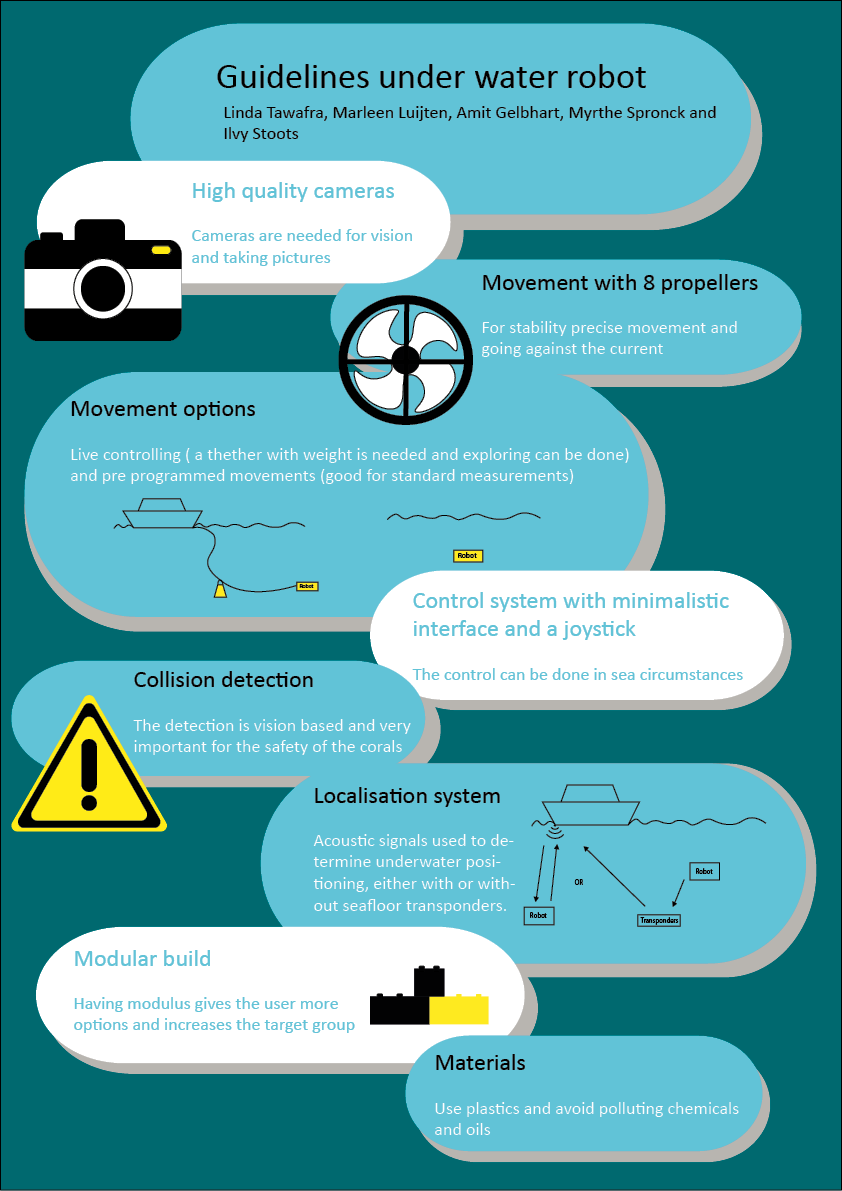


Revision as of 11:18, 3 April 2020

Group

Team Members

| Name | ID | Email | Major |

|---|---|---|---|

| Amit Gelbhart | 1055213 | a.gelbhart@student.tue.nl | Sustainable Innovation |

| Marleen Luijten | 1326732 | m.luijten2@student.tue.nl | Industrial Design |

| Myrthe Spronck | 1330268 | m.s.c.spronck@student.tue.nl | Computer Science |

| Ilvy Stoots | 1329707 | i.n.j.stoots@student.tue.nl | Industrial Design |

| Linda Tawafra | 0941352 | l.tawafra@student.tue.nl | Industrial Design |

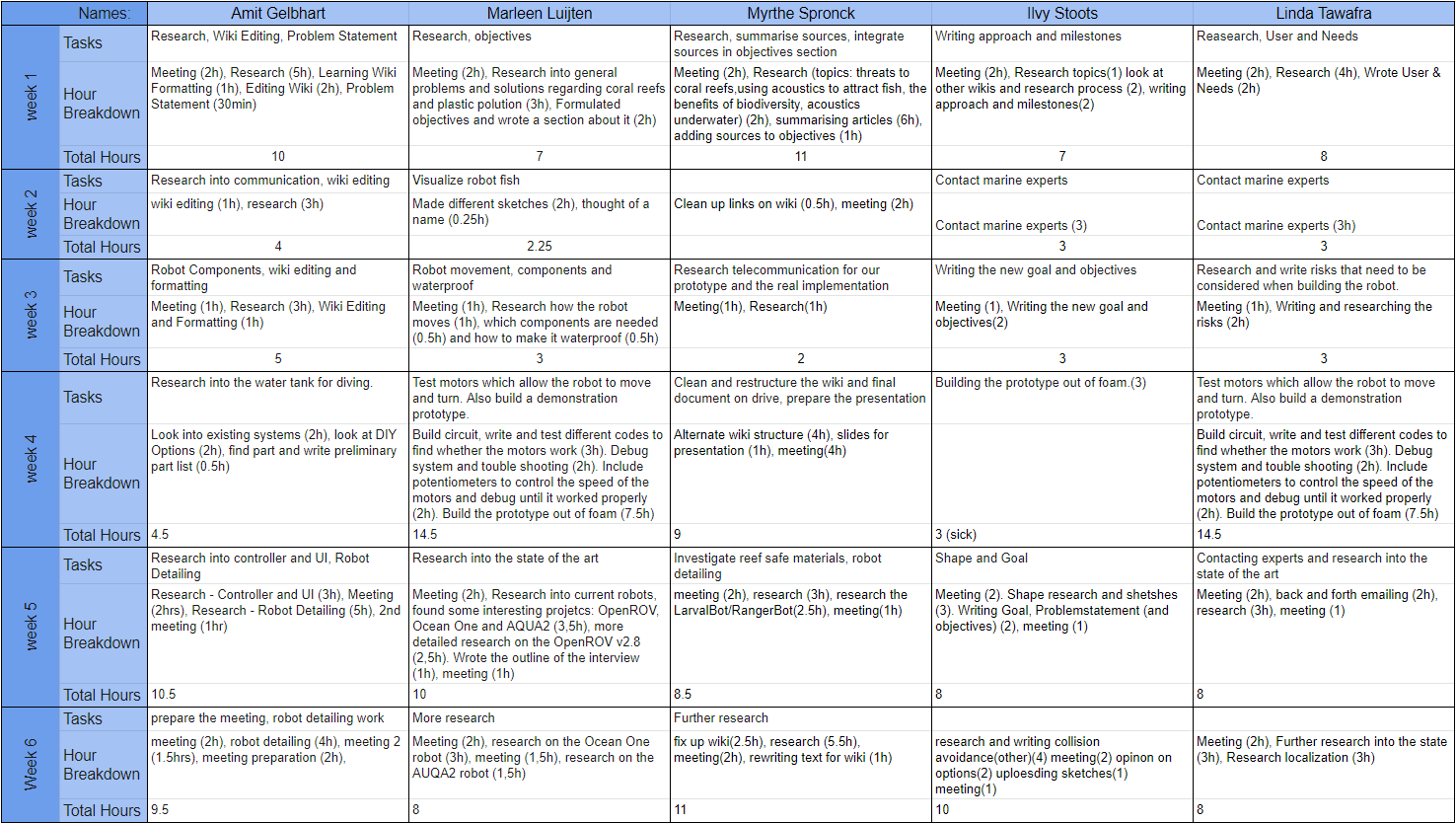

Time Sheets

Peer Review

| Name | Peer review individual relative grades |

|---|---|

| Amit Gelbhart | 0 |

| Marleen Luijten | 0 |

| Myrthe Spronck | 0 |

| Ilvy Stoots | 0 |

| Linda Tawafra | 0 |

Project Goal

The Problem

Due to ocean acidification and warming caused by climate change, as well as pollution and destructive fishing practices (Ateweberhan et al., 2013), the extent and variety of coral reefs are declining. Understanding and tracking these developments is key to finding ways to protect and help the coral reefs. Monitoring the reefs is labor-intensive and time-intensive work, which often involves diving down to take measurements and pictures. This kind of work is structured, often repeated and carried out in an environment humans are not suited to, so it could benefit greatly from robotic assistance. However, for scientific work in a fragile environment like the coral reefs, not just any robot will do. In this project, we will investigate what such a specialized robot would need in order to be attractive and helpful to coral reef researchers.

To design a robot specialized for coral reef environments, we will investigate the needs of both coral reef researchers and the corals themselves. When the robot is used in an already threatened ecosystem, it must not cause any harm to the corals. Every aspect of the robot will need to be assessed in light of these needs. Interviewing experts will give us insight into both categories, and we will also do our own research into coral reefs. This project focuses on the specialized needs of robots that operate near coral reefs, rather than on underwater robot design in general. We will therefore base much of our design on existing underwater robots and focus on what needs to be modified.

Deliverables

- An in-depth exploration of current robots similar to our objective and an assessment of how suitable they are for coral reefs.

- A suggestion, based on our research, of a robot specialized for coral reefs.

- A report on what coral reef researchers desire and how our suggested design could meet those desires.

Problem Background

One of the great benefits of coral reefs is their effect during natural hazards such as coastal storms: on average, a reef can reduce wave energy by 97% (Ferrario, 2014). This means that reefs can reduce the impact of storms and flooding and thus protect coastal inhabitants. Roughly 40% of the world's population lives within 100 km of the coast (Ferrario, 2014), so protecting coral reefs can prevent a great amount of damage, not only to human lives but also to the environment. In the case of such natural hazards, it is the government that is held accountable for the resulting devastation. Sadly, coral reefs have been degrading for a long time, and they are not recovering sufficiently.

Our research focused mainly on proper monitoring of the reefs, to track their development over time and to identify the factors that help and harm them. However, a specialized coral reef robot could do much more. The initial idea behind this project was to design a robot that would not assist the researchers working with the corals, but would instead help the reefs directly. Later in the project, this focus shifted so that we could work more directly on the user aspect. Even so, other potential uses for a coral reef robot were always on our minds.

For instance, two factors can prevent the degradation of a reef. The first is its resistance, the ability to prevent permanent phase-shifts. The second is its resilience, the ability to bounce back from phase-shifts (Nyström et al., 2008). These phase-shifts are undesirable because the reef can end up in a state from which it can no longer return to a coral-dominated state (Hughes et al., 2010). A reef with better resilience can bounce back more quickly. One way to improve a reef's resilience is to increase species richness and abundance through acoustic enrichment (Gordon et al., 2019). This protects the reef against macroalgae (Burkepile and Hay, 2008). For a coral reef to flourish, a wide biodiversity of animals is needed. Fish that lay their larvae on corals are one of the essential components of a healthy reef ecosystem. However, once the corals are dying, the fish no longer use them for their larvae, and the whole system ends up in a negative cycle. By playing sounds at different frequencies, fish can be tricked into believing that the corals are alive, so that they return with their larvae. This attracts other marine animals, which allows the entire system to flourish again (Gordon et al., 2019).

A good coral-reef-oriented robot with a modular design could be adapted to go down near the reefs and place speakers for acoustic enrichment. This would allow the speakers to be placed faster, and in areas that are potentially dangerous to humans.

Additionally, our robot discussion is oriented towards the current standard coral reef research methods, which mainly involve photography. However, there are other ways of monitoring reefs that a robot could assist with. Monitoring the sounds of a reef has been suggested as a way of analyzing its fauna (Lammers et al., 2008), and any number of other sensors could be attached to the robot. Specifications for a good coral-reef-oriented robot can therefore be applied to a wide variety of applications.

User Needs Research

The goal of this project is to design a robot that is specifically adapted to coral reefs, so the robot can be used for many things. Its users will be researchers who could use the robot to study coral reefs, interact with them and support them. More broadly, it is meant for anyone who is trying to help the corals, either by making changes to the reefs or by researching them. The robot will have varying tasks and attachment modules, so its users and use cases can differ. A user could be a researcher trying to determine the current state of the coral reefs. A user could also be a member of an organization that is trying to help the corals through acoustic enrichment or by delivering larvae. All members of our user groups are passionate about coral reefs. One of their priorities will therefore be the safety of the corals, so the robot must not harm its environment. The robot will also need to support a variety of attachment modules, since it will be used in many different use cases. We made contact with members of one of our user groups and held an interview with researchers at a university to find out their perspectives on this project.

Interview

We interviewed Dr. Erik Meesters and Dr. Oscar Bos of Wageningen University & Research. Dr. Meesters works with coral reefs and specifically studies the long-term development of the reefs in Bonaire and Curaçao. Dr. Bos focuses on the preservation of biodiversity in the North Sea. They recently bought a robot that helps them with their research. The purpose of the interview was to identify user needs, so we asked a series of questions about what they would want from a robot like ours.

We only managed to arrange this meeting later in the project, when we had already started researching current underwater robots and coral reefs. This made the interview flow better, as we could refer to our findings and relate them to the information we already had.

We intended to let the discussion flow naturally and to gather as much information as possible on what the researchers want, without steering them in any particular direction with leading questions. We did, however, prepare a list of questions for when the discussion slowed down, and to make sure we covered all relevant parts of our project:

- What issues do you face while studying/managing/maintaining coral reefs?

- How do you deal with them now?

- How could a robot help?

- Is an underwater robot specialized for coral reefs relevant?

- Do you like the robot you bought recently?

- Why or why not?

- What is the primary reason you chose to invest in this robot over the other robots on the market?

- Which aspects of your robot should be carried over into our design? And are those aspects already good enough or do they need to be optimized further?

- Is it easy to control the robot? If not, is the problem more in the UI or the input method?

- Are you satisfied with the control method? (At this point, we can show the various control methods we have seen for existing robots and ask for their preferences)

- Regarding the UI, what kind of information would you like to be visible on screen while you are controlling the robot?

- What things should we keep in mind regarding useful and safe movement of the robot?

- In videos of current drones moving around coral reefs, the drones usually float near the top and do not go into grooves or move between the corals. Would that be a helpful function?

- How much fluidity and freedom do you need in movement? (Here we can show the robots with very complex movement, like the Scubo, and those with more basic movement like the RangerBot)

- Most underwater robots use thrusters, how do you feel about some alternative movement methods? (Here we can show the AQUA2 and the Jellyfish)

- What are your concerns about having a robot moving near the corals? Is it just collisions, or also the way it moves through the water?

- How fast do you need the robot to move? Is fast movement necessary for your research? Is there potential danger in having fast-moving propellers?

- Is changing the depth of the robot via buoyancy control a viable method in your opinion?

- What materials would a robot need to be made from to be considered safe?

- Are there certain chemicals to avoid specifically?

- Are there certain battery types you would recommend avoiding?

- Are there any parts of your current drone that concern you in this area?

- What would you consider a reasonable price for a robot that could assist in your research?

- If a robot was available at a reasonable price, what applications would you want to use it for, besides just research?

Interview Results

The interview was very detailed, so only the most valuable and least obvious findings are listed below.

They told us that the most important feature for them is lights for photographs. The researchers' work involves taking a lot of pictures, and in the North Sea there can be too little light at the bottom of the sea. They also made it clear that a positioning system is very important to them. Knowing where a picture was taken is essential for repeating measurements, returning to the same spot and analyzing data. They suggested sonar or GPS; their own robot has an acoustic positioning device that measures the relative distance to a stick. Positioning is important but can be expensive: they told us that half of their money was spent on the positioning device. They also stated that a scale bar on the pictures could be useful.
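A scale bar could be derived automatically with a simple pinhole-camera approximation. A camera at distance d from a flat surface, with horizontal field of view FOV, sees a strip 2·d·tan(FOV/2) wide. The sketch below assumes the FOV is the value measured in water (a flat housing port narrows the in-air FOV) and ignores lens distortion. The distance, FOV and image width are hypothetical.

```python
import math


def metres_per_pixel(distance_m: float, fov_deg: float, image_width_px: int) -> float:
    """Approximate real-world width covered by one pixel for a camera facing a flat surface."""
    footprint_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint_m / image_width_px


def scale_bar_length_px(bar_length_m: float, distance_m: float, fov_deg: float,
                        image_width_px: int) -> float:
    """How many pixels a scale bar of the given real-world length should span."""
    return bar_length_m / metres_per_pixel(distance_m, fov_deg, image_width_px)


# Hypothetical values: camera 1.5 m from the reef, 60 degree in-water FOV, 4000 px wide image.
gsd = metres_per_pixel(1.5, 60.0, 4000)
print(f"{gsd * 1000:.2f} mm per pixel")
print(f"a 10 cm scale bar spans {scale_bar_length_px(0.10, 1.5, 60.0, 4000):.0f} px")
```

The pixel size grows linearly with distance, so a scale bar is only trustworthy if the robot measures or holds its distance to the reef.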

3D imaging is an appealing way of capturing the reefs, but it is not very practical. The researchers have seen results of similar techniques, which require a lot of computer processing. This makes 3D imaging far too slow for their use. It could be useful for measuring the depth of the reefs, but this can also easily be done with the technique they already use, which involves a chain.

We asked how deep the robot should go into the reefs (below their highest point). They told us that many of today's reefs are no longer very deep, so floating above the reefs will be sufficient. They also liked the fact that staying above the reefs would give more options and would be cheaper. They advised staying very close to the reef, because this gives better-quality pictures.

Collision detection is needed. There have been times when one of the researchers got his robot stuck. He said that vision was important, and that it would have helped if the robot could also look up. According to the researchers, collisions are the only major safety concern and there are no other big safety threats, which makes collision avoidance all the more important. Sand is terrible for pictures, but it is not dangerous for the corals if the robot gets close to the ocean floor. The researchers suggested making the robot slightly lighter than water, so that it does not sink to the bottom when the motors shut off.
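The suggestion to make the robot slightly lighter than water can be quantified with Archimedes' principle. The net vertical force is (ρ·V − m)·g, where ρ is the water density, V the displaced volume and m the robot's mass. The sketch below assumes a typical seawater density of 1025 kg/m³. The 15 kg mass and 14.6 L volume are hypothetical example values, and any added float material is treated as weightless.

```python
G = 9.81  # gravitational acceleration, m/s^2
RHO_SEAWATER = 1025.0  # typical seawater density in kg/m^3; varies with temperature and salinity


def net_buoyancy_n(mass_kg: float, displaced_volume_m3: float, rho: float = RHO_SEAWATER) -> float:
    """Net vertical force in newtons; positive means the robot floats up when the thrusters stop."""
    return (rho * displaced_volume_m3 - mass_kg) * G


def extra_volume_for_lift_m3(mass_kg: float, displaced_volume_m3: float, target_lift_n: float,
                             rho: float = RHO_SEAWATER) -> float:
    """Extra displaced volume needed to reach the target upward force (ignores the float's own mass)."""
    missing_n = target_lift_n - net_buoyancy_n(mass_kg, displaced_volume_m3, rho)
    return max(missing_n, 0.0) / (rho * G)


# Hypothetical robot: 15 kg, displacing 14.6 litres of seawater.
print(f"net buoyancy: {net_buoyancy_n(15.0, 0.0146):+.2f} N")
extra = extra_volume_for_lift_m3(15.0, 0.0146, 2.0)
print(f"extra volume for +2 N of lift: {extra * 1000:.2f} L")
```

A small positive margin like this keeps the robot off the sand when the motors stop. The thrusters must then continuously counter that force to hold depth.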

On the subject of controls, they told us that controlling the robot on an iPad is very hard. On a boat everything is harder and you can get seasick, so different controllers could be a solution. One of the researchers was not familiar with the Xbox One controller, so we should not assume that everyone knows how to use it. For the screen, the researchers prefer a "less is more" approach, since more options could make you seasick. They like the standard set of on-screen information and consider depth important. An indication of where the camera is looking would be nice, as would multiple screens, including one showing the position on a map. They do not want too many distractions: voltage and battery information are not needed.

When asked whether they needed complicated movement, they told us that this really depends on what research is being done. For pictures, it is important for the robot to be vertical and steady. For exploring caves and wrecks, however, some more complicated movements would be useful. Navigating the robot can be hard, so they also suggested giving the robot some preprogrammed movements, which would be good for doing measurements and repeating them. The robot should move slowly but must be able to move against the current, so it needs enough power. After being shown some examples, the researchers preferred thrusters over other ways of moving the robot. Thrusters are good for moving in multiple directions, and they are more flexible and precise. They also pointed out that lines and currents can cause a lot of drag on the robot. This could be solved by putting a weight on the line so that it stays in place.
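The preprogrammed movements the researchers suggested could, for example, take the form of a "lawnmower" (boustrophedon) survey, a common pattern for covering a rectangular area in parallel lanes. The sketch below generates such waypoints in a local x/y frame in metres. The plot size, lane spacing and altitude are hypothetical parameters. Actually following the waypoints would still depend on the positioning system the researchers described.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float, float]  # (x, y, altitude above the reef), all in metres


def lawnmower_waypoints(width_m: float, length_m: float, lane_spacing_m: float,
                        altitude_m: float) -> List[Waypoint]:
    """Back-and-forth lanes parallel to the y-axis covering a width_m x length_m rectangle."""
    if lane_spacing_m <= 0:
        raise ValueError("lane spacing must be positive")
    n_lanes = math.ceil(width_m / lane_spacing_m) + 1
    waypoints: List[Waypoint] = []
    for lane in range(n_lanes):
        x = min(lane * lane_spacing_m, width_m)  # last lane is clamped to the edge of the area
        # Alternate the direction of travel so the robot never transits back along an empty lane.
        y_start, y_end = (0.0, length_m) if lane % 2 == 0 else (length_m, 0.0)
        waypoints.append((x, y_start, altitude_m))
        waypoints.append((x, y_end, altitude_m))
    return waypoints


# Hypothetical 10 m x 20 m survey plot, lanes 2.5 m apart, 1.5 m above the reef.
for waypoint in lawnmower_waypoints(10.0, 20.0, 2.5, 1.5):
    print(waypoint)
```

The same inputs always produce the same waypoints, so the same route can be flown again on a later dive to repeat a measurement.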

On the subject of materials, they said there are no big problems, although salt water can break down some materials. Oils and grease need to be biodegradable. Batteries can cause some trouble, since some types are not allowed on planes. Battery chargers can also explode, as one of the researchers had experienced. The researchers' budget for a robot was between 15 and 80-100 thousand euros. They stated that a modular robot would be great because it could be used by multiple teams. This makes it practical for an organisation and also means more money can be spent on it.

Literature Research - still being added into the wiki

Coral Reefs

General Underwater Robotics

Research Results

From our literature research and our user interview, we can identify a number of important factors to keep in mind when designing a robot for coral reef researchers. A brief summary of those points:

- The robot's movement should not damage the reefs or the fish around the reefs.

- This means that obstacle avoidance, or some other way of preventing damage to the reefs in case of collision, is required. According to Dr. Meesters, obstacle avoidance is the way to go here.

- The method of movement should also not damage the reefs. For instance, if thrusters are used, there should be no danger of fish or parts of the corals getting stuck in them. We expected this to be important, but the interview told us it was not a major concern, mainly because the robot will likely not get that close to the corals.

- The movement of the robot should not cause permanent changes to the environment of the reef. If there are no collisions, this is less of a major concern. Kicking up sand when taking off is a problem to consider, but this is less important for the reef environment and more for the quality of the photos and the robot not getting damaged.

- The materials that make up the robot should not be damaging to the coral reefs, even if the robot gets damaged itself.

- Some way of locating and retrieving the robot in case of failure will be needed, to prevent the robot from getting lost and becoming waste in the ocean.

- Batteries should be chosen so as to minimize damage in case of leakage, and they should also be safe to transport and charge.

- Chemicals or materials that might damage the reefs should not be used.

- The robot's physical design should suit the coral reefs.

- Fish should not see it as prey or as a predator, to avoid the robot being damaged by fish or causing too great a disruption.

- Depending on how close the robot moves to the corals, it should not have parts that stick out and might get caught in the corals. Based on our interview, the robot will not need to move that close to the corals, but a sleek design will still be useful for movement.

- The robot should suit the needs of the researchers (our user group) in use.

- The main task the robot will assist with is taking pictures for research into the state and development of the reefs. This means at least one good camera is needed, and the pictures should be taken in a way that keeps the information consistent and useful. The camera should take all pictures at the same angle, and each picture should include information such as its scale and the location where it was taken.

- The user interface and control methods should be adapted to the needs of the user; simplicity is key here. The robot should not be confusing or difficult to control, and more nuanced control might actually make it harder to get consistent results.

- Specific attention should be paid to how the needs of coral reef researchers differ from those of hobbyists or general divers, since our research into existing robots will include robots aimed at different user bases.

- The researchers should want to buy this robot.

- Besides the usefulness of the robot, this involves researching what cost would be acceptable. The main point here seems to be identifying the features that would make a university, research institute or organisation willing to pay for such a product. For instance, the robot may be more likely to be bought if it can be applied in multiple different ways.

Research into Current Underwater Drones

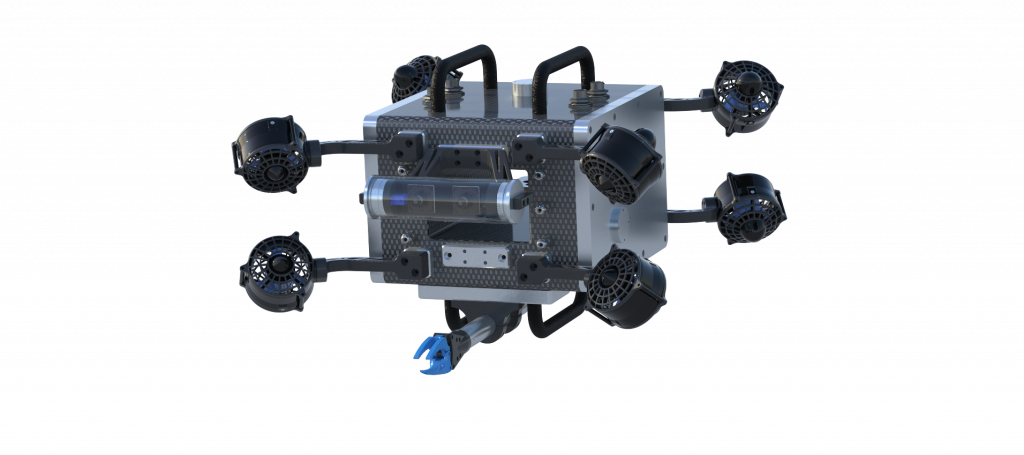

Scubo / Scubolino

Scubo is an ROV built by Tethys Robotics, a robotics team from ETH Zurich (Tethys, 2019). Scubo's defining features are its omnidirectional movement and its modularity. Scubo uses 8 propellers that protrude from its main body, allowing the tethered robot to move with extreme agility underwater. The robot's body is a carbon cuboid with a hole through the middle for better water flow and for cooling the electronic components. It is constructed to be neutrally buoyant, so its depth is controlled with the same 8 propellers used for all other movement. Its body has 5 universal ports for modularity. The robot's tether supplies power to the onboard batteries and allows direct control from a computer outside the water. It is controlled with a SpaceMouse joystick. For processing, Tethys say they use an Arduino Due for hard real-time tasks and an Intel NUC for high-performance calculations (Arduino Team, 2016).

LarvalBot / RangerBot

Description

Initially, the Queensland University of Technology (QUT) designed the COTBot to deal with the crown-of-thorns starfish, which threatens coral reefs. This design was improved considerably (most importantly, it was reduced in size and cost) to create the RangerBot, which has the same purpose as the COTBot. The design was then adapted to create the LarvalBot. It is extremely similar to the RangerBot, but instead of killing starfish it is used to deliver coral larvae to promote the growth of new coral. All three robots were designed specifically for coral reefs. Most sources on the robots focus primarily on their video analysis capabilities, so that seems to be where their innovation lies, rather than in the coral reef application. The RangerBot also has some extra features, such as water quality sensors and the ability to collect water samples (Braun, 2018).

Movement

Both RangerBot and LarvalBot have 6 thrusters (Zeldovich, 2018) that allow full six-degrees-of-freedom control, including hovering (Dunabin et al., 2019). Both are controlled with an app that, according to the creators, takes just 15 minutes to learn (Dunabin, August 2018). The LarvalBot only follows preselected paths at a constant altitude, with the release of the larvae controlled by user input (Dunabin, November 2018). It is unclear whether the RangerBot also follows a preselected path. This is likely, however, since it operates fully automatically to dispatch the crown-of-thorns starfish and none of the reports mention automatic pathfinding.

Collision Detection

RangerBot and LarvalBot have 2 stereo cameras (Zeldovich, 2018). The RangerBot uses these for obstacle avoidance, among other functions; it is not specified whether the LarvalBot has obstacle avoidance (Dunabin, August 2018). RangerBot uses video for obstacle avoidance because the usual method, sonar, does not work in coral reef environments. Underwater use of sonar and ultrasound is difficult in any case, and the complexity of coral reefs makes it unmanageable. Video is usually not used for this purpose because deep in the water it quickly gets dark and murky. However, coral reefs are quite close to the surface (or at least the coral reefs we are concerned with are) and they are in clear water. This environment is therefore uniquely suited to cameras as sensors (Dunabin et al., 2019).
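The standard stereo relation shows why cameras can replace sonar in clear, shallow water. For a rectified camera pair, depth Z = f·B/d. Here f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity, the horizontal shift of the same point between the two images. The sketch below applies this relation to a simple "too close" check. The focal length, baseline and safety distance are hypothetical values, not RangerBot specifications, and the sketch does not show how RangerBot itself processes its images.

```python
from typing import Iterable, Optional


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> Optional[float]:
    """Distance (m) to a point seen by a rectified stereo pair; None if the disparity is not positive."""
    if disparity_px <= 0:
        return None  # no valid match, or the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


def obstacle_too_close(disparities_px: Iterable[float], focal_px: float, baseline_m: float,
                       safety_distance_m: float) -> bool:
    """True if any matched point in the region ahead is closer than the safety distance."""
    for d in disparities_px:
        z = depth_from_disparity(focal_px, baseline_m, d)
        if z is not None and z < safety_distance_m:
            return True
    return False


# Hypothetical camera: 700 px focal length, cameras 10 cm apart, 1 m safety margin.
print(depth_from_disparity(700.0, 0.10, 35.0))                   # 2.0
print(obstacle_too_close([10.0, 35.0, 80.0], 700.0, 0.10, 1.0))  # True
```

Depth resolution degrades as disparity shrinks, so stereo works best at the short ranges where obstacle avoidance matters most.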

Design, Materials, and Shape

RangerBot weighs 15 kg and is 75 cm long (Dunabin, August 2018). The LarvalBot is bigger because it carries the larvae on board, but the base robot is the same size. The RangerBot floats above the reefs and can only reach easily accessible crown-of-thorns starfish (Zeldovich, 2018). This is why its slightly bulky shape and the handle on top do not cause concern about bumping into the reefs. Unfortunately, no information is available online on what materials the robot is made of.

Power Source

RangerBot and LarvalBot can both operate untethered. However, the current version of LarvalBot is still tethered (Dunabin, December 2018). The report does not mention why. We assume it is because reliable real-time video is needed to tell the LarvalBot to drop the larvae at the right time. Without a tether, the robot can last 8 hours on its rechargeable batteries (Queensland University of Technology, 2018).

OpenROV v2.8

Movement

The purpose of the robot kit is to bring an affordable underwater drone to the market. The kit costs about 800 euros and the assembled robot about 1300 euros (OpenROV, n.d.). The user is challenged to assemble the robot correctly, which makes for a fun and rewarding experience. Once the robot is built, the user is free to decide where and how to use it. The most common use is exploring the underwater world and making underwater videos.

The robot is remotely controlled and has a thin tether. The user controls the robot via a software program that is provided and can be installed on a computer. The camera on the robot provides live feedback on the robot's surroundings and on how it reacts to direction changes.

Two horizontal and one vertical motor allow the robot to smoothly move through the water. When assembling the robot, one should pay special attention to the pitch direction of the propellers on the horizontal motors. As the horizontal motors are counter rotating, the pitch direction of the propeller differs.

Other crucial parts are a Genius WideCam F100 camera, a BeagleBone Black processor, an Arduino, a tether, lithium batteries and extra weights.

Design, Material and Shape

All the components are held in place by a frame and stored in waterproof casings. The frame and casings are made from laser-cut acrylic parts, which are glued together with either acrylic cement or super glue. When wires are soldered together, heat-shrink tubing is used to secure a waterproof connection. Epoxy is used to fill the gaps between wires to prevent leakage.

The design of the robot does not look very streamlined, but it moves surprisingly smoothly. A drawback of the design is that the lower part, which contains the batteries, is always visible in the camera feed. This can be annoying when watching the video.

The robot is relatively small, roughly the size of a toaster. This is ideal for moving through places such as shipwrecks and coral reefs.

Power source

The robot uses 26650 (26.5 mm × 65.4 mm) lithium batteries, which need to be charged beforehand. The guide warns the user that the batteries could be dangerous and must be charged at the correct voltage, which is 3V.

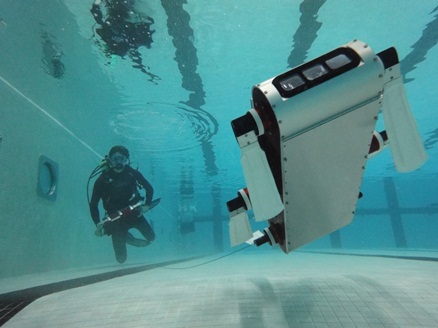

Aqua 2

Movement

The robot is designed to assist human divers. It is currently employed for monitoring underwater environments and provides insights regarding robotic research and propulsion methods. The robot can go 30 meters deep and has a maximum speed of 1 m/s (AUVAC, n.d.).

The robot can operate autonomously or be controlled via a tablet. The tablet is waterproof, so that the divers can control the robot while being underwater. When the user tilts the tablet in a certain direction, the robot moves in the same direction (De Lange, 2010).

Aqua2 has six flippers that move independently. The fins move slowly, which causes little disturbance to the robot's surroundings. The robot can also be used on land, where it walks at a speed of 0.7 m/s.

Design, Material and Shape

The rectangular body of the Aqua2 robot is made of aluminum. The flippers are made of vinyl and have steel springs inside. These materials are resistant to seawater.

Power source

The robot is powered by two lithium batteries. The batteries have a voltage of 28.8 V and a capacity of 7.2 Ah. After 5 hours of use the batteries must be recharged, which takes 8 hours.

Image recognition

The robot is equipped with image collection and processing software, which allows it to operate autonomously. The image processing is made available through the ROS development environment and the OpenCV vision library.

Ocean One

Movement

The robot is designed for research purposes. Thanks to its design, it can take over tasks from human divers, such as exploring archaeological sites or collecting specimens. Moreover, it can access places where human divers cannot go, such as depths below 50 meters.

The robot has a high level of autonomy and is controlled via an intuitive haptic interface. The interface “provides visual and haptic feedback together with a user command center (UCC) that displays data from other sensors and sources” (Khatib et al., 2016, p.21). There are two haptic devices (sigma.7), a 3D display, foot pedals and a graphical UCC. A relay station connected to the controller allows the robot to operate without a tether.

“The body is actuated by eight thrusters, four on each side of the body. Four thrusters control the yaw motion and planar translations, while four others control the vertical translation, pitch, and roll. This thruster redundancy allows full maneuverability in the event of a single thruster failure” (Khatib et al., 2016, p.21).
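Ocean One's redundancy claim can be illustrated with a thruster allocation matrix, which maps each thruster's force to the resulting forces and torques on the body. Suppose the matrix still has full rank after one column (one thruster) is removed. Then the remaining thrusters can still produce any combination of those motions, assuming the thrusters can push in both directions and ignoring thrust limits. The sketch below only models the horizontal group from the quote (yaw and planar translations), using a hypothetical layout of four thrusters angled at 45 degrees. Ocean One's real geometry is not given in the source, and MINIMAL is a made-up counterexample.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Thruster = Tuple[float, float, float]  # (x, y, thrust angle in degrees) in the body frame, metres
Column = Tuple[float, float, float]    # (surge force, sway force, yaw torque) per newton of thrust


def allocation_columns(thrusters: Sequence[Thruster]) -> List[Column]:
    cols = []
    for x, y, angle_deg in thrusters:
        fx = math.cos(math.radians(angle_deg))
        fy = math.sin(math.radians(angle_deg))
        cols.append((fx, fy, x * fy - y * fx))  # yaw torque = z-component of r x F
    return cols


def det3(a: Column, b: Column, c: Column) -> float:
    """Determinant of the 3x3 matrix with columns a, b, c, computed as a . (b x c)."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            + a[1] * (b[2] * c[0] - b[0] * c[2])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


def has_full_rank(cols: Sequence[Column], tol: float = 1e-9) -> bool:
    """A 3 x n allocation matrix has rank 3 iff at least one 3x3 minor is non-zero."""
    return any(abs(det3(*trio)) > tol for trio in combinations(cols, 3))


def survives_single_failure(thrusters: Sequence[Thruster]) -> bool:
    """True if surge, sway and yaw stay fully controllable after losing any one thruster."""
    cols = allocation_columns(thrusters)
    return all(has_full_rank(cols[:i] + cols[i + 1:]) for i in range(len(cols)))


# Hypothetical layouts (not Ocean One's real geometry).
VECTORED = [(0.2, 0.15, -45.0), (0.2, -0.15, 45.0), (-0.2, 0.15, 45.0), (-0.2, -0.15, -45.0)]
MINIMAL = [(0.0, 0.15, 0.0), (0.0, -0.15, 0.0), (0.0, 0.0, 90.0)]  # two forward, one sideways

print(has_full_rank(allocation_columns(MINIMAL)), survives_single_failure(MINIMAL))    # True False
print(has_full_rank(allocation_columns(VECTORED)), survives_single_failure(VECTORED))  # True True
```

Both layouts can move freely in the plane, but only the one with a spare thruster stays fully controllable after a failure. This is the property the quote describes for Ocean One's eight thrusters.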

Design, Material, and Shape

The robot has an anthropomorphic shape, as it should have the same capabilities as human divers. The design of the robot’s hands allows “delicate handling of samples, artifacts, and other irregularly-shaped objects” (Khatib et al., 2016, p.21). “The lower body is designed for efficient underwater navigation, while the upper body is conceived in an anthropomorphic form that offers a transparent embodiment of the human’s interactions with the environment through the haptic-visual interface” (Khatib et al., 2016, p.21).

Power Source

The relay station can be used as a nearby charging station.

Collision Detection

In the paper, the authors seem to hint that the robot is equipped with a collision detection system. The hard- and software behind it is not explained.

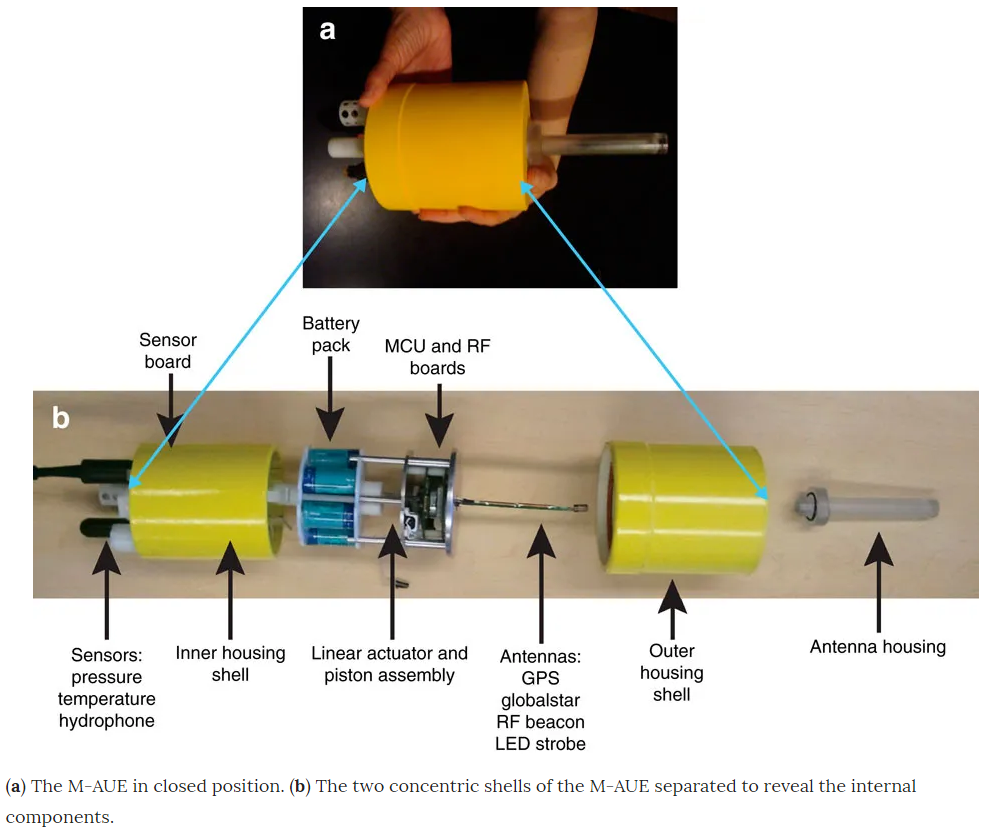

M-AUE

Current Use

The M-AUEs are used to answer questions about plankton and to study environmental processes in the ocean (Reisewitz, 2017).

Movement

The idea of the M-AUEs is that they move just like plankton in the ocean. By adjusting their buoyancy (through programming), they go up and down while drifting with the current (Reisewitz, 2017). Reisewitz further states that the M-AUEs move vertically against the currents caused by internal waves, which they do by repeatedly changing their buoyancy.

“The big engineering breakthroughs were to make the M-AUEs small, inexpensive, and able to be tracked continuously underwater,” said Jaffe (inventor of the M-AUEs) in Reisewitz (2017).

Control

The M-AUEs are designed not to dive so deep that they come close to the seafloor. This means that, if used for coral research, they would not maneuver through the coral but would instead hover above it. The M-AUEs are preprogrammed with a PID control algorithm: they sense their current depth and adjust their buoyancy to reach the desired depth.
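Below is a minimal sketch of PID depth control on a toy vertical model of a float. The controller output is treated as the net vertical force produced by the buoyancy engine. The mass, drag, 0.2 N positive-buoyancy bias and controller gains are all hypothetical values chosen for this toy model, not M-AUE specifications.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_measurement: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # Derivative of the measurement (not the error) avoids a spike when the setpoint changes.
        if self.prev_measurement is None:
            derivative = 0.0
        else:
            derivative = -(measurement - self.prev_measurement) / dt
        self.prev_measurement = measurement
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_depth_hold(target_depth_m: float = 10.0, duration_s: float = 600.0,
                        dt: float = 0.1) -> float:
    """Toy model of a buoyancy-controlled float; returns the final depth in metres."""
    # Hypothetical float: 2 kg effective mass, linear drag 4 N*s/m, and a constant
    # 0.2 N upward force because it is trimmed slightly positively buoyant.
    mass, drag, upward_bias = 2.0, 4.0, 0.2
    depth, velocity = 0.0, 0.0  # depth is positive downward
    controller = PID(kp=1.5, ki=0.1, kd=2.0)
    for _ in range(int(duration_s / dt)):
        # Controller output = net vertical force from the buoyancy engine (positive = downward).
        force = controller.update(target_depth_m, depth, dt)
        acceleration = (force - upward_bias - drag * velocity) / mass
        velocity += acceleration * dt
        depth += velocity * dt
    return depth


print(f"final depth: {simulate_depth_hold():.2f} m")
```

The integral term removes the offset caused by the constant upward bias. With only the proportional and derivative terms, the float would settle about 0.2 / 1.5 ≈ 0.13 m above the target.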

Localizing

According to Reisewitz (2017), acoustic signals are used to keep track of the M-AUEs while they are submerged, since GPS does not work underwater. Three-dimensional location information received every 12 seconds shows exactly where the M-AUEs are in the ocean. Augliere (2019) says that GPS-equipped moorings (which float on the surface of the ocean) send out sonar pings every 12 seconds. These pings are received by the hydrophones of the M-AUEs, which are located around 50 meters below the surface, and the resulting data lets researchers localize the M-AUEs.
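The localisation described above comes down to trilateration: each ping's travel time gives a distance to a mooring whose GPS position is known. The sketch below makes several assumptions. The float knows when each ping was sent (for example through synchronised clocks), the speed of sound is a constant 1500 m/s, sound travels in straight lines, and the depth is known from a pressure sensor, which reduces the problem to 2D. It is a textbook linearised solution with three moorings, not necessarily the method used for the M-AUEs, and the mooring and float positions are made up.

```python
import math
from typing import List, Tuple

SPEED_OF_SOUND = 1500.0  # m/s, a typical seawater value; it varies with temperature, salinity and depth


def horizontal_range(travel_time_s: float, depth_m: float, c: float = SPEED_OF_SOUND) -> float:
    """Slant range from a one-way ping, projected onto the horizontal plane (mooring at the surface)."""
    slant = c * travel_time_s
    return math.sqrt(max(slant ** 2 - depth_m ** 2, 0.0))


def trilaterate_2d(anchors: List[Tuple[float, float]], ranges: List[float]) -> Tuple[float, float]:
    """Solve for (x, y) from three anchors by subtracting the first circle equation from the others."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1 ** 2 - r2 ** 2 + x2 ** 2 - x1 ** 2 + y2 ** 2 - y1 ** 2
    b2 = r1 ** 2 - r3 ** 2 + x3 ** 2 - x1 ** 2 + y3 ** 2 - y1 ** 2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det  # Cramer's rule


# Hypothetical moorings on a 1 km grid and a float at (300, 400) m, 50 m deep.
moorings = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]
true_x, true_y, depth = 300.0, 400.0, 50.0
times = [math.sqrt((true_x - mx) ** 2 + (true_y - my) ** 2 + depth ** 2) / SPEED_OF_SOUND
         for mx, my in moorings]
ranges = [horizontal_range(t, depth) for t in times]
print(trilaterate_2d(moorings, ranges))  # approximately (300.0, 400.0)
```

With noisy travel times and more than three moorings, the same linear equations would normally be solved in a least-squares sense instead.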

Battery

According to Augliere (2019), the M-AUEs have batteries that last for several days and data storage that lasts just as long. “The system is powered by a battery pack made from 6 Tenergy 1.2 V, 5000 mA hour NiMH cells in a series configuration. The battery pack is fused with a 2 A fuse and is recharged at an optimal rate of 600 mA” (Jaffe et al., 2017).
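The quoted pack can be checked with basic series-pack arithmetic. In a series configuration, the cell voltages add up while the capacity stays that of one cell, and the stored energy is voltage times capacity. The charge_factor parameter is our own addition. NiMH charging is not loss-free, so real charge times are somewhat longer than capacity divided by current.

```python
from typing import Tuple


def series_pack(cell_voltage_v: float, cell_capacity_mah: float, n_cells: int) -> Tuple[float, float, float]:
    """Cells in series: voltages add, capacity stays that of a single cell. Returns (V, mAh, Wh)."""
    voltage = cell_voltage_v * n_cells
    energy_wh = voltage * cell_capacity_mah / 1000.0
    return voltage, cell_capacity_mah, energy_wh


def charge_time_h(capacity_mah: float, charge_current_ma: float, charge_factor: float = 1.0) -> float:
    """Idealised charge time in hours; a charge_factor above 1 accounts for charging losses."""
    return capacity_mah * charge_factor / charge_current_ma


voltage, capacity, energy = series_pack(1.2, 5000.0, 6)
print(f"{voltage:.1f} V, {capacity:.0f} mAh, {energy:.0f} Wh")  # 7.2 V, 5000 mAh, 36 Wh
print(f"{charge_time_h(capacity, 600.0):.1f} h at 600 mA without losses")  # 8.3 h
```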

Design and Material

The M-AUEs are about the size of a grapefruit. Each consists of two concentric shells made of syntactic foam (Jaffe et al., 2017) that slide over each other. The batteries, a piston and an antenna are positioned inside these shells, and multiple sensors are mounted on the outside of the shell at the bottom.

Possible Future Work

Reisewitz (2017) states that adding a camera to the M-AUEs would provide images of the state of the ocean. These images would make it possible to map coral habitats and the movement of larvae, or to study ocean creatures such as plankton. Adding audio recording devices (hydrophones) would let the M-AUEs function as an ‘ear’ that keeps track of the sounds in the ocean.

Jellyfish - still being added into wiki

Current Robots Research Conclusion

From this research, we conclude that a considerable number of underwater robots are currently being developed or are already on the market. Some of these robots are specialized for operating in specific environments, such as coral reefs. Looking at the state of the art provides insights and inspiration for the design of our robot.

Movement and Control

Movement

Many ROVs [Remotely Operated Vehicles] and AUVs [Autonomous Underwater Vehicles] use a wide variety of methods and techniques to navigate underwater. Some, like the Aqua 2, employ biomimicry: they move through the water in ways inspired by nature. However, most robots aimed at professional users, such as marine researchers, use a number of propellers to move in all directions underwater. Systems like the Scubo 2.0 use 8 propellers mounted around the frame to navigate the water. This arrangement gives full omnidirectional control and, with sufficiently powerful thrusters, the ability to stabilise in a current. Full omnidirectional movement is a key feature for most ROVs, and the thrusters must be powerful enough to move against the ocean current.
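A thrust-allocation sketch shows how several fixed propellers combine into omnidirectional control. To keep it short, it covers only the horizontal plane (surge, sway and yaw). It uses four hypothetical thrusters mounted at 45° at the corners of a 0.4 m square, which is not the Scubo 2.0's actual eight-thruster layout. The desired forces and moment are mapped to individual thrusts with the minimum-norm (pseudoinverse) solution. Cramer's rule is used here so that no external libraries are needed.

```python
import math

# Hypothetical horizontal layout: ((x, y) position in metres, thrust direction in degrees)
THRUSTERS = [
    ((0.2, 0.2), -45.0),
    ((0.2, -0.2), 45.0),
    ((-0.2, 0.2), 45.0),
    ((-0.2, -0.2), -45.0),
]


def allocation_matrix(thrusters):
    """Rows give surge force Fx, sway force Fy and yaw moment Mz per newton of each thruster."""
    fx, fy, mz = [], [], []
    for (x, y), angle in thrusters:
        ux, uy = math.cos(math.radians(angle)), math.sin(math.radians(angle))
        fx.append(ux)
        fy.append(uy)
        mz.append(x * uy - y * ux)  # moment about the vertical axis
    return [fx, fy, mz]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def allocate(tau, thrusters=THRUSTERS):
    """Minimum-norm thrusts T = A^T (A A^T)^-1 tau for tau = (Fx, Fy, Mz)."""
    a = allocation_matrix(thrusters)
    m = [[sum(p * q for p, q in zip(r1, r2)) for r2 in a] for r1 in a]  # A A^T
    d = det3(m)
    lam = []
    for k in range(3):  # Cramer's rule: replace column k with tau
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = tau[i]
        lam.append(det3(mk) / d)
    return [sum(a[k][i] * lam[k] for k in range(3)) for i in range(len(thrusters))]


print([round(t, 2) for t in allocate((10.0, 0.0, 0.0))])  # pure forward push
```

A pure forward command gives four equal thrusts. Any combination of sideways force and turning moment is spread over the same four thrusters, which is what allows the robot to hold position against a current from any direction.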

Through interviewing the researchers, we gained insight into the range and type of movement the robot needs. First and foremost, the robot should have two operation modes: tethered live control (teleoperation) and tetherless pre-programmed routes. These two modes ensure that the robot is comfortable to use and suits a wide range of tasks. A robot that can be used for many different tasks is, after all, a more cost-effective and sustainable solution. The teleoperated mode would ideally be used to explore new sectors and find new sites to scan underwater. The tetherless mode is intended for scanning and photographing known sites, which removes the need for an expert operator to study those sites. We also asked the researchers about biomimicry, that is, robots whose design and operation are inspired by nature. Our conclusion is that biomimicry is not the answer for most types of reef research, because the forms of movement these robots use are not nearly as stable and precise as a thruster system. The way underwater animals move is inherently different from what this robot needs to do, which is to provide stability for underwater pictures and precise positioning for measurements. We therefore decided that biomimicry is not a suitable movement method for our robot. A multi-propeller system like that of the Scubo 2.0 is much preferred for its agility and its ability to move fluently in all directions. Furthermore, omnidirectional thrusters can stabilize the robot while it takes photographs or scans underwater. According to the researchers, steady and clear scans and photographs are among the most important features this robot can have. The thrusters must also be powerful enough to counter strong currents (up to 0.5 knots) so that the robot remains stable in the water.
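The thruster requirement can be estimated with the standard drag equation, F = ½ρC_dAv². The sketch below assumes a seawater density of 1025 kg/m³. It also assumes, hypothetically, a drag coefficient of 1.0 and a frontal area of 0.1 m², since real values depend on the robot's final shape.

```python
RHO_SEAWATER = 1025.0       # kg/m^3, typical seawater density
KNOT_IN_MS = 1852 / 3600    # one knot in metres per second


def drag_force(current_knots: float, frontal_area_m2: float, drag_coeff: float) -> float:
    """Drag (N) the thrusters must cancel to hold position in a steady current."""
    v = current_knots * KNOT_IN_MS
    return 0.5 * RHO_SEAWATER * drag_coeff * frontal_area_m2 * v ** 2


# Hypothetical robot: Cd = 1.0, frontal area 0.1 m^2, facing the 0.5 knot limit from the interviews
print(f"{drag_force(0.5, 0.1, 1.0):.2f} N")
```

This gives roughly 3.4 N. Because drag grows with the square of the current speed, a margin on top of this figure is sensible, and the robot also needs thrust left over for manoeuvring.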

UI and Control

The User Interface [UI] and control of an ROV are crucial. Without an easy way to send instructions to the robot, the operator cannot carry out the required task efficiently. In research use cases, this can mean poor data collection and wasted time. Most current robots are controlled through a computer interface using one of a few kinds of input methods. Most amateur-oriented robots use on-screen controls on a tablet or smartphone. Most professionally oriented robots instead use standard gamepads, joysticks, or more specialised systems for more unique robot operation (such as the sigma.7 haptic controller and foot pedals used to control the Ocean One robot). A standard joystick or gamepad seems to be the most common input method. This is likely because such hardware is widely available, easy to operate and very cost-effective (a standard Xbox One controller costs 60 euros (Xbox)). On-screen controls seem to be missing from most serious professional ROVs. The user interfaces of most of these robots seem to share a general feature list, while the on-screen layout varies slightly from system to system. Features found on these interfaces include a live camera feed, speedometer, depth meter, signal strength, battery life, robot orientation, compass and current robot location. Some systems also show other, less relevant features.

The system used by the researchers we interviewed uses an Xbox One controller to navigate the robot. They had little to say on this matter, as neither of them is an avid gamer and neither has used this robot yet. This leads us to believe that the specific input device matters less in general. What matters is that the control input is standardized, so that any user can plug in their controller of choice. Users can then work with their preferred hardware and reuse existing hardware instead of spending resources on another device. Each person has their own preferred way of controlling the robot, which matters all the more because operating from a moving vessel on the water is difficult. Letting them choose will therefore make users more comfortable controlling the robot.
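One way to make the input device interchangeable is to translate each controller's raw axes into a device-independent command. Supporting a new controller then only means adding a new mapping. The sketch is hypothetical. The axis indices and signs in `GAMEPAD_MAPPING` are placeholders rather than the layout of any real controller, and the dead zone of 0.1 is an assumed value.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """Device-independent motion command, each axis in [-1, 1]."""
    surge: float
    sway: float
    heave: float
    yaw: float


# Hypothetical mapping: command axis -> (raw axis index, sign used to flip the axis)
GAMEPAD_MAPPING = {"surge": (1, -1), "sway": (0, 1), "heave": (4, -1), "yaw": (3, 1)}


def dead_zone(value: float, threshold: float = 0.1) -> float:
    """Ignore small stick drift and rescale the rest back to the full [-1, 1] range."""
    value = max(-1.0, min(1.0, value))
    if abs(value) < threshold:
        return 0.0
    scaled = (abs(value) - threshold) / (1.0 - threshold)
    return scaled if value > 0 else -scaled


def to_command(raw_axes, mapping, threshold: float = 0.1) -> Command:
    """Translate one controller's raw axis readings into a Command via its mapping."""
    values = {name: dead_zone(sign * raw_axes[index], threshold)
              for name, (index, sign) in mapping.items()}
    return Command(**values)


print(to_command([0.05, -1.0, 0.0, 0.5, 0.0], GAMEPAD_MAPPING))
```

Only the mapping table depends on the hardware. The rest of the control software sees the same `Command` whichever controller the researcher prefers.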

Furthermore, we discussed the UI with the researchers and concluded that the less busy the display is, the easier the system is to use. We concluded that the most important features are the depth meter, the compass and orientation display, and the current location of the robot. According to the researchers, the most important thing when controlling a robot underwater is knowing where it is at all times. This is why underwater localization systems are so complex, expensive and crucial to these operations.

The movement section discussed two operation methods: tethered live control and tetherless pre-programmed operation. For the tetherless operation, the UI is just as crucial, for two distinct reasons. The first is that users want to be able to easily program the path and instructions the robot should follow. Most marine researchers are not also programmers, so the path-programming software needs to be very intuitive and offer the right features. Primarily, users should be able to set the area of operation and the type and size of the photographs (or other readings) the robot will take. The second use of this software resembles the tethered operation without the live control. It serves for monitoring and ideally has exactly the same features as the tethered setting, with the small addition of a progress meter showing how far the robot has progressed along the route.
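The path-programming software would have to compute routes like the one in this sketch. It generates a common survey pattern, the "lawnmower" (boustrophedon) route, in which parallel lanes are spaced so that neighbouring photographs overlap. The rectangle size, camera footprint and overlap are hypothetical inputs. The `progress` function measures distance along the route, which is one possible basis for the progress meter described above.

```python
import math


def lawnmower(width_m, length_m, footprint_m, overlap=0.3):
    """Waypoints (x, y) covering a width x length rectangle in alternating lanes."""
    spacing = footprint_m * (1.0 - overlap)          # lane spacing so that photos overlap
    lanes = math.ceil(width_m / spacing) + 1
    waypoints = []
    for i in range(lanes):
        x = min(i * spacing, width_m)                # last lane sits on the far edge
        ends = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        waypoints.extend((x, y) for y in ends)
    return waypoints


def route_length(waypoints):
    """Total straight-line distance along consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def progress(waypoints, reached_index):
    """Fraction of the route's distance covered once waypoint `reached_index` is reached."""
    return route_length(waypoints[:reached_index + 1]) / route_length(waypoints)


# Hypothetical survey: 10 m x 20 m patch, 4 m camera footprint, 50% overlap between lanes
route = lawnmower(10, 20, 4, overlap=0.5)
print(len(route), route_length(route), f"{progress(route, 1):.0%}")
```

The researcher would only choose the area, photo size and overlap. The software turns these into waypoints and reports progress along the route.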

Design, Materials, and Shape - still being added into wiki

Power Source

In the robots we researched, power was supplied by batteries, a tether, or a combination of both. There are different kinds of tethers: some only provide power, others transfer data, and some do both (Kohanbash, 2016). The most commonly used batteries are lithium-based. As the online guides from OpenROV point out, one should be cautious when using lithium batteries, as they can be dangerous (OpenROV, n.d.). During the interview, the researchers told us that something once went wrong while charging a battery and an entire ship caught fire.

As described in the movement chapter, the robot we are designing operates in two settings. In the first, the user programs a path in software on a laptop and uploads it, and the robot autonomously follows the path. In this case, batteries alone can provide enough power, and without a tether the robot can move closer to the reefs without the risk of getting entangled. In the second setting, the user controls the robot with a controller and real-time video feedback. In that case, a tether is needed for data transfer, although power can still be supplied by the batteries alone.

During the interview, the possibility of incorporating both settings was discussed. The researchers got very excited about this idea and the opportunities it provides for research. As mentioned in the movement chapter, having both functions would make the robot unique.

Additional Features

Collision Detection

The robot will be teleoperated, but since the operator is only human, we recommend also using some form of collision avoidance. This system ensures that no collision happens if the user misjudges a situation. This is important because no harm should come to the corals.
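A simple way to let the system override a wrong judgment is to scale down the operator's forward command as the distance to the nearest obstacle shrinks, while always allowing the robot to back away. The sketch assumes that a distance estimate is already available, for example from the vision-based detection discussed below. It uses hypothetical stop and slow-down distances of 0.5 m and 2 m.

```python
def limit_forward(command: float, obstacle_distance_m: float,
                  stop_m: float = 0.5, slow_m: float = 2.0) -> float:
    """Scale a forward command in [-1, 1] down near obstacles; reversing is never limited."""
    if command <= 0 or obstacle_distance_m >= slow_m:
        return command                     # backing away, or nothing close ahead
    if obstacle_distance_m <= stop_m:
        return 0.0                         # too close: refuse to move further forward
    # Linear ramp from full speed at slow_m down to zero at stop_m
    return command * (obstacle_distance_m - stop_m) / (slow_m - stop_m)


print([limit_forward(1.0, d) for d in (3.0, 1.25, 0.3)])
```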

Sonar is frequently used for collision detection, but the resolution and reliability of sonar sensors degrade close to objects. Since the robot will have to manoeuvre a lot within coral reefs, sonar alone will not work well enough (Dunbabin, Dayoub, Lamont & Martin, 2018).

Real-time vision-based perception approaches make it possible to give a robot in coral reefs collision avoidance capabilities. To make obstacle avoidance possible, the challenges of real-time vision processing in coral reef environments need to be overcome. Image enhancement, obstacle detection and visual odometry can be used for this. Cameras are not frequently used in underwater robots, but they will work well in coral reefs. The reefs are quite close to the surface (Spalding, Green & Ravilious, 2001), and the water in these areas is very clear.

Image enhancement makes the processed images more valuable, and different types of colour correction are applied to achieve this. For detection, semantic monocular obstacle detection can be used. Dunbabin et al. (2018) explain that visual odometry was explored for shallow-water position estimation, combined with operational methods to limit odometry drift. This early work used a vision-only AUV and already showed navigation errors of less than 8% of the distance travelled. This visual odometry can therefore be used for the robot.
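The sketch below gives one concrete example of colour correction, using the gray-world assumption. The average colour of a scene is taken to be neutral grey, so each channel is scaled to the same mean. Underwater images tend to look blue-green because water absorbs red light most strongly, so this correction boosts the red channel. Gray-world is our illustrative choice, not necessarily the correction used by Dunbabin et al. (2018). Pixels are plain (R, G, B) tuples in the 0-255 range.

```python
def gray_world(pixels):
    """Scale each RGB channel so that its mean matches the overall grey level."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    grey = sum(means) / 3
    gains = [grey / m if m > 0 else 1.0 for m in means]   # >1 boosts a weak channel (red)
    return [tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in pixels]


bluish = [(50, 100, 150), (70, 120, 170)]  # hypothetical blue-tinted pixels
print(gray_world(bluish))
```

More elaborate underwater methods also model how attenuation grows with distance, but this sketch already shows the basic idea of rebalancing the colour channels.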

Localization

GPS does not work below the surface and thus cannot be used on its own to determine the location of our underwater robot. It is, however, possible to transfer GPS positions underwater. As Kussat, Chadwell, & Zimmerman (2005, p. 156) state, the location of autonomous underwater vehicles (AUVs) can be determined by acquiring GPS ties before and after the vehicle is submerged. The acceleration, velocity and rotation of the vehicle are then integrated over the time it is underwater. However, they go on to state that this method causes an error of 1% of the distance traveled, which means a 10 m error over an AUV track of 1 km. According to them, this error is due to the quality of the inertial measurement unit (IMU).
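A simplified 2-D sketch can illustrate dead reckoning and why IMU quality matters. It integrates forward acceleration and yaw rate into a position. This is not Kussat et al.'s implementation. The constant acceleration bias in the example is hypothetical and only shows how a tiny sensor error accumulates into metres of position error. The `drift_error` helper reproduces the 1%-of-distance arithmetic from the text.

```python
import math


def dead_reckon(samples, dt, speed=0.0, heading=0.0, x=0.0, y=0.0):
    """Integrate (forward acceleration m/s^2, yaw rate rad/s) samples into a 2-D position."""
    for accel, yaw_rate in samples:
        speed += accel * dt
        heading += yaw_rate * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    return x, y


def drift_error(distance_m, error_fraction=0.01):
    """Position error if it grows at 1% of distance travelled (Kussat et al., 2005)."""
    return distance_m * error_fraction


true_x, _ = dead_reckon([(0.0, 0.0)] * 100, dt=1.0, speed=1.0)
biased_x, _ = dead_reckon([(0.001, 0.0)] * 100, dt=1.0, speed=1.0)  # hypothetical 0.001 m/s^2 bias
print(f"error after {true_x:.0f} m: {biased_x - true_x:.2f} m")
print(f"1% rule for a 1 km track: {drift_error(1000):.0f} m")
```

Even this very small bias produces a position error of about 5 m after 100 m, which is why combining dead reckoning with external position fixes is so important.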

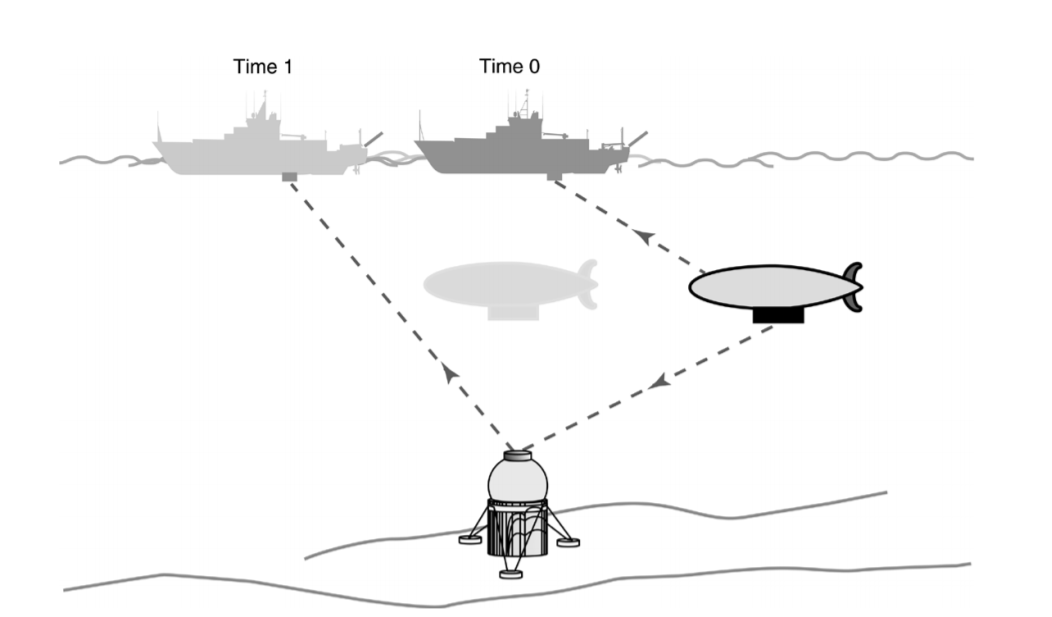

An error of this size in a teleoperated underwater robot makes it hard to find out exactly where the robot has been. The ocean is still a largely unknown and vast space, and its constant movement keeps disturbing the location estimate. This is problematic if researchers want to return to the same location for further research. For this reason, it is highly important to determine an object's location as accurately as possible, both above water and underwater. Kussat et al. (2005, p. 156) go on to explain that much more precise localization of AUVs can be achieved by combining precise underwater acoustic ranging with kinematic GPS positioning. The M-AUEs use this approach as well.

This method requires precise measurement of travel time. Travel time data with a resolution of only a couple of microseconds can be acquired by improving the correlator system (Spiess, 1997). This is done with fixed delay lines and cross-correlation of a coded signal in the transponders (Kussat et al., 2005, p. 156). Kussat et al. (2005, p. 156) explain that the method starts by determining the location of the transducers by means of kinematic GPS. These transducers are either aboard a ship or, in the case of the M-AUEs, in the moorings floating on the surface. In their method, transponders were placed on the seafloor to receive signals from the transducers, so that a globally referenced coordinate frame could be established. As a second step, the autonomous underwater vehicles were located relative to these transponders by means of acoustic signals (see fig. 1).
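The cross-correlation step works roughly as follows. The receiver slides a copy of the known coded signal along the recorded samples, and the offset with the highest correlation marks the arrival time. A 7-element Barker code is used here because its correlation sidelobes are small, which makes the peak unambiguous. The code choice and the 1 MHz sample rate (one sample per microsecond) are assumptions for illustration, not details of the systems cited above.

```python
BARKER_7 = [1, 1, 1, -1, -1, 1, -1]


def estimate_delay(code, received):
    """Sample offset at which `received` correlates best with the known `code`."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(code) + 1):
        score = sum(c * received[lag + i] for i, c in enumerate(code))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def travel_time_s(lag, sample_rate_hz):
    """Convert a delay in samples into seconds; resolution is one sample period."""
    return lag / sample_rate_hz


# Simulated recording: the code arrives after 37 samples, with a small alternating disturbance.
signal = [0.0] * 37 + [float(b) for b in BARKER_7] + [0.0] * 20
received = [s + 0.1 * (-1) ** i for i, s in enumerate(signal)]
lag = estimate_delay(BARKER_7, received)
print(lag, f"{travel_time_s(lag, 1_000_000) * 1e6:.0f} microseconds")
```

The achievable timing resolution is set by the sample period, so microsecond-level travel times require correspondingly fast sampling or interpolation around the correlation peak.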

The M-AUEs are localized with a similar method. The difference is that Kussat et al. (2005, p. 156) use transponders located on the seafloor, whereas the M-AUEs receive the acoustic signal with their built-in hydrophones and respond back to the floating moorings. The moorings send out 8-15 kHz signals at 2-second intervals, localizing the M-AUEs with an accuracy of ± 0.5 km (Jaffe et al., 2017). The method using transponders on the seafloor, by contrast, achieves an accuracy of ± 1 m (2σ) in the horizontal positioning of the AUVs (Kussat et al., 2005, p. 156). Which method is best depends on the type of research the robot will be used for. For example, when the state of coral reefs is analyzed globally, the exact location of each image matters less than in repeated research in one area.

Overview Table

(Under development)

Final Results

Guidelines

(Under Construction)

Infographic

process


Discussion

References

- Hawkins, J.P., & Roberts, C.M. (1997). "Estimating the carrying capacity of coral reefs for SCUBA diving", Proceedings of the 8th International Coral Reef Symposium, volume 2, pages 1923–1926.

- Kohanbash, D. (2016, September 20). "Tether’s: Should Your Robot Have One?" Retrieved March 27, 2020

- Spalding, M. D., Green, E. P., & Ravilious, C. (2001). World Atlas of Coral Reefs (1st edition). Berkeley, Los Angeles, London: University of California Press.