PRE2019 3 Group12


Regulations

As opposed to the system with the chairs, the rooms system does make it possible to pursue people who do not follow the rules and regulations. People need to reserve rooms through their account, so they can be held responsible for what happens to a room for as long as they have it reserved (the account holder's name, picture, student number, etc. are all known once a person has reserved a room through his/her account).

The rules and regulations for reserving a room are as follows:

Revision as of 15:41, 20 February 2020

Group Members

| Name | ID | Major |
|---|---|---|

Subject

A real-time camera-based recognition device capable of identifying vacant seats in areas with dynamic workplaces. These include areas such as the study workspaces at Metaforum, seats in a library, or empty desks at flexible workplaces in a company. This information can be communicated to the users, which will minimize the time and effort it takes to find a place to work.

Problem Statement

During examination weeks many students want to work and study at the workplaces in the MetaForum. However, during the day it is often very busy and it might take a while to find a place to sit, costing effort and precious study time. If students knew the locations of vacant seats, it would significantly reduce the time it takes to find one and the distance that needs to be walked, which in turn decreases the noise for the people already studying. The same issue could arise at various other places, such as public libraries or flexible workspaces. An automatic method to determine the vacant workplaces would be ideal to deal with situations like this.

Objectives

- Libraries and other flexible workplaces

The system should be able to tell the user whether there are vacant seats available and if so, in what area.

- Room reservation

The system should be able to detect whether there are people in a room and, if there have been none for a certain amount of time, set the room to available for reservation.
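The room-reservation objective boils down to a timer: a room is released only after it has stayed empty for a whole grace period, so a short absence does not cancel a booking. A minimal sketch, assuming a hypothetical `RoomMonitor` class that receives one person count per camera frame with a timestamp in seconds; the grace period is an assumed parameter, since the objective only speaks of "a certain amount of time".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoomMonitor:
    """Hypothetical helper: releases a reserved room once it has been empty long enough."""
    grace_period_s: float = 15 * 60  # assumed value, not fixed by the project
    empty_since: Optional[float] = None
    available: bool = False

    def update(self, people_detected: int, timestamp_s: float) -> bool:
        """Feed one detection result; return True if the room may be offered for reservation."""
        if people_detected > 0:
            # Someone is present: reset the timer and keep the reservation.
            self.empty_since = None
            self.available = False
        elif self.empty_since is None:
            # First empty reading: start the timer.
            self.empty_since = timestamp_s
        elif timestamp_s - self.empty_since >= self.grace_period_s:
            # Empty for the whole grace period: release the room.
            self.available = True
        return self.available


monitor = RoomMonitor(grace_period_s=600)
print(monitor.update(2, 0))    # False: people present
print(monitor.update(0, 60))   # False: timer starts
print(monitor.update(0, 500))  # False: empty for only 440 s
print(monitor.update(0, 700))  # True: empty for 640 s
```

Resetting the timer on every non-empty reading is the simplest policy; in practice it would be combined with smoothing over several frames so that one missed detection does not restart the clock.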

Users

The users we plan to target with our project include multiple groups of people, since the system can be applied in various environments. Possible users include students, employees making use of flexible workspaces, and library visitors. Each of these users can benefit from the technology in two situations. The first is in larger study or working halls, comparable to Metaforum, for checking whether there are places available. The second is in smaller study rooms, comparable to the bookable rooms in Atlas, for cancelling reservations if the student doesn't show up or leaves early.

Society

Our system could have both positive and negative effects on society as a whole, assuming that it would be widely adopted by public institutions and companies alike. If our system is widely adopted by companies, it might act as a driving force behind the growing popularity of flexible workspaces. Since research has shown that flexible workspaces can provide several advantages to employees' working environment, leading to increased productivity and work satisfaction, this could be a positive force in society.

There might also be negative consequences, such as increased concern for privacy due to the widespread implementation of our system. Since our technology relies on cameras, the workspaces will need to be monitored constantly for it to work properly. This might make people uneasy and cause anxiety about being watched. However, since our technology only uses the footage for the algorithm to make a decision in real time, there is no need to record the footage or for it to be viewed by humans at all. This means that properly informing employees and other users about how our technology works can considerably reduce the concerns regarding privacy.

Enterprise

Companies can benefit from our solution in several ways. First and foremost, companies that implement our system in their flexible workspaces would need fewer buildings to accommodate their employees, because the existing space would be used more efficiently. This saves building costs, especially for larger companies or high-tech companies that might require expensive equipment in their flexible workspaces.

Approach

Here a number of possible approaches are described with their advantages and disadvantages. Our goal is to use the "camera-based recognition software approach", but if that fails, we have some other possible methods to achieve our goal.

Camera-based recognition software: The system uses a camera to detect empty workplaces. It can tell whether a person is sitting at the workplace or whether it is free for other people. The camera will be mounted high up to reduce the chance of other objects obstructing its view.

Advantages:

- Not only can it tell whether a person is sitting at a certain workplace, it can also tell whether that person is only taking a break or has left the workplace for someone else to take (by checking whether the person has also taken their belongings)

- (Relatively) easy to install

- Only a limited number of cameras is needed, since one camera (if placed correctly) can cover a large area, which also reduces the overall cost

Disadvantages:

- Visual data of people will be captured, which means that people might feel that their privacy is violated.

- Developing an algorithm that reliably draws all of these conclusions might be difficult

Echolocation-based:

The system is based on the reflection of sound waves emitted by a sound source. The reflected sound will be different based on whether a person is sitting on the chair or not. The distance the sound has to travel is shorter if there is a person (taller than the chair) sitting on the chair.
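The echolocation idea rests on time of flight: the pulse travels to the reflecting surface and back, so the distance is the speed of sound times the round-trip time, divided by two. A minimal sketch, assuming a sensor mounted above the chair, a speed of sound of about 343 m/s (air at roughly 20 °C), a per-chair calibration distance measured once with the chair empty, and a hypothetical 0.3 m noise margin:

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting surface: the pulse travels there and back, so halve the path."""
    return SPEED_OF_SOUND_M_S * round_trip_s / 2


def seat_occupied(round_trip_s: float, empty_distance_m: float, margin_m: float = 0.3) -> bool:
    """Occupied if the echo returns from something clearly closer than the empty seat.

    empty_distance_m is calibrated with the chair empty; margin_m (assumed) absorbs noise.
    """
    return echo_distance_m(round_trip_s) < empty_distance_m - margin_m


# Sensor assumed to hang above the chair, with the empty seat about 2.55 m away.
print(seat_occupied(0.0149, empty_distance_m=2.55))  # False: echo from about 2.56 m, the seat
print(seat_occupied(0.0100, empty_distance_m=2.55))  # True: echo from about 1.72 m, a seated person
```

The margin trades sensitivity for robustness: a larger margin ignores small objects such as a bag on the seat, which matches the limitation that this method only detects whether someone is in the chair.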

Advantages:

- It does not capture any visual data, so people are less likely to feel that their privacy is violated.

- A single sensor can cover quite a large range, since it measures the differences between signals over time and always has something to compare against.

Disadvantages:

- Other objects close to the chair or person can interfere with the signal, making the measurement of the sound waves less accurate.

- It can only detect whether someone is in the chair; it cannot tell whether the person has left belongings on the desk, indicating that he or she is coming back.

Movement-based:

Movement-based sensors can work on multiple principles: passive infrared (PIR), microwave, ultrasonic, tomographic motion detection, video camera software, and gesture detection. Many current movement-based sensors combine two of these methods to detect movement. In general, a movement-based sensor can also be divided into zones, each of which detects movement separately.

Advantages:

- A person studying will generally move while writing, typing, etc., so it is easy to detect a person sitting in a chair or at a location. This also makes it useful for finding quiet spaces.

- Depending on which detection method is used, it does not need to capture any visual data, so people are less likely to feel their privacy is violated.

Disadvantages:

- It can only detect movement: if there is no movement in a certain zone, it cannot know whether there is actually a chair at that place; it only sees that there is no movement.

- A movement-based sensor needs many separate detection zones. If the whole area is one big zone, any movement in it will mark it as busy, which makes it hard to detect empty chairs.

- Since chairs are not fixed in one location, a chair can sit between two zones. This could mark both zones as busy while a chair in one of them is still empty, which the sensor cannot see.
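For the "video camera software" variant, zone-based motion detection can be illustrated with simple frame differencing: a zone counts as busy when enough of its pixels changed between two frames. This is a sketch of that one technique only (PIR or microwave sensors work differently); the zone layout, the pixel threshold and the minimum changed fraction are all assumed values, and frames are plain grayscale lists to keep it dependency-free.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]           # grayscale image, frame[y][x] in 0..255
Zone = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive


def zones_with_motion(prev: Frame, curr: Frame, zones: Dict[str, Zone],
                      pixel_threshold: int = 25, min_fraction: float = 0.05) -> Dict[str, bool]:
    """Frame differencing per zone: a zone is busy if enough of its pixels changed."""
    result = {}
    for name, (x0, y0, x1, y1) in zones.items():
        changed = sum(
            1
            for y in range(y0, y1)
            for x in range(x0, x1)
            if abs(curr[y][x] - prev[y][x]) > pixel_threshold
        )
        area = (x1 - x0) * (y1 - y0)
        result[name] = changed / area >= min_fraction
    return result


prev = [[0] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][1] = curr[1][2] = 200  # one movement right on the border between two zones
print(zones_with_motion(prev, curr, {"left": (0, 0, 2, 4), "right": (2, 0, 4, 4)}))
# {'left': True, 'right': True}
```

The example reproduces the last disadvantage above: a single movement on a zone border marks both zones as busy, even if one of them still contains an empty chair.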

Thermographic-based / Infrared:

Thermographic detection relies on the temperature differences between objects to distinguish them. Since humans, chairs, tables and the floor have different temperatures, a thermographic image should be able to distinguish them based on their temperatures.

Advantages:

- A thermographic camera detects heat, so when a person is sitting on a chair it will measure a higher temperature, indicating that the chair is occupied, while a colder reading suggests that the chair is empty.

- Heat lingers after someone leaves: the chair will be warmer than normal for a while, since the person who just sat on it transferred heat to it. This could be used as a buffer to make sure that a chair has been empty for a while before marking it as empty.

- While visual data is collected, a thermographic image makes it hard to actually identify a person, which reduces the privacy concerns people might have.

Disadvantages:

- The base temperature of a chair will probably vary with the weather over the year and even within a single day. A chair next to a window in the sun will probably be warmer than a chair in the shade. In summer, unless the temperature is very well regulated, the area in view of the camera will generally be warmer than in winter, which could make it harder to detect differences. Many factors therefore have to be incorporated into the design to compensate for these effects.

- Typical consumer thermographic cameras have a narrow field of view and are only precise at relatively short distances. Further away, the precision of the camera goes down, so more cameras must be installed on lower ceilings to stay accurate, which can become quite expensive.
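Two of the points above, lingering heat as a buffer and a drifting base temperature, can be handled together with a per-chair baseline and hysteresis. A minimal sketch, assuming a hypothetical `ThermalSeat` fed one temperature reading per chair region, a first reading taken while the chair is empty, and illustrative thresholds (4 °C to become occupied, within 1 °C of baseline to become empty again, and a slow baseline update rate); none of these values come from measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThermalSeat:
    """Hypothetical per-chair state for a thermographic occupancy check."""
    occupied_delta_c: float = 4.0  # assumed: a seated person reads at least this far above baseline
    release_delta_c: float = 1.0   # assumed: empty again once within this of the baseline
    baseline_alpha: float = 0.05   # assumed: how quickly the baseline follows slow drift
    baseline_c: Optional[float] = None
    occupied: bool = False

    def update(self, reading_c: float) -> bool:
        if self.baseline_c is None:
            # Calibrate on the first reading, assumed to be of an empty chair.
            self.baseline_c = reading_c
        excess = reading_c - self.baseline_c
        if not self.occupied and excess >= self.occupied_delta_c:
            self.occupied = True
        elif self.occupied and excess <= self.release_delta_c:
            # Lingering heat keeps the chair "occupied" until it has cooled down.
            self.occupied = False
        if not self.occupied:
            # Follow slow drift (sun, season, heating) only while the chair is empty.
            self.baseline_c += self.baseline_alpha * (reading_c - self.baseline_c)
        return self.occupied


seat = ThermalSeat()
for reading in (21.0, 30.0, 24.0, 21.5):
    print(reading, seat.update(reading))
# 21.0 False / 30.0 True / 24.0 True (still cooling down) / 21.5 False
```

The gap between the two thresholds is what turns lingering heat into a buffer, while updating the baseline only for empty chairs lets a sunlit chair slowly get a warmer reference without a seated person being absorbed into it.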

User Requirements

In order to develop a sound engineering product, it is important not to lose touch with the actual requirements and needs of our intended users. Although we as students are largely users ourselves, the anecdotal problems we personally face can still mislead our assumptions about what the user requirements and needs are. For this reason we have made a questionnaire with the purpose of determining the stance of our fellow students on the following subjects:

- What their largest frustrations regarding study places are

- How often they have trouble locating a free study place

- Whether our product is seen as a suitable solution

The preliminary poll can be found here:

State-of-the-art

Neural Networks

What is a neural network

Neural networks are a set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input. The patterns they recognize are numerical, contained in vectors, into which all real-world data, be it images, sound, text or time series, must be translated. Neural networks help us cluster and classify. You can think of them as a clustering and classification layer on top of the data you store and manage. They help to group unlabeled data according to similarities among the example inputs, and they classify data when they have a labeled dataset to train on. (Neural networks can also extract features that are fed to other algorithms for clustering and classification; so you can think of deep neural networks as components of larger machine-learning applications involving algorithms for reinforcement learning, classification and regression.) The most important applications in our case are:

Classification

All classification tasks depend upon labeled datasets; that is, humans must transfer their knowledge to the dataset in order for a neural network to learn the correlation between labels and data. This is known as supervised learning. Examples include:

- Detect faces, identify people in images, recognize facial expressions (angry, joyful)

- Identify objects in images (stop signs, pedestrians, lane markers…)

- Recognize gestures in video

- Detect voices, identify speakers, transcribe speech to text, recognize sentiment in voices

- Classify text as spam (in emails) or fraudulent (in insurance claims); recognize sentiment in text (customer feedback)

Any labels that humans can generate, any outcomes that you care about and which correlate to data, can be used to train a neural network.

Clustering

Clustering or grouping is the detection of similarities. Deep learning does not require labels to detect similarities. Learning without labels is called unsupervised learning. Unlabeled data is the majority of data in the world. A common rule of thumb in machine learning is that the more data an algorithm can train on, the more accurate it tends to be. Unsupervised learning therefore has the potential to produce highly accurate models. Applications include:

- Search: comparing documents, images or sounds to surface similar items.

- Anomaly detection: the flipside of detecting similarities is detecting anomalies, or unusual behavior. In many cases, unusual behavior correlates highly with things you want to detect and prevent, such as fraud.

Model:

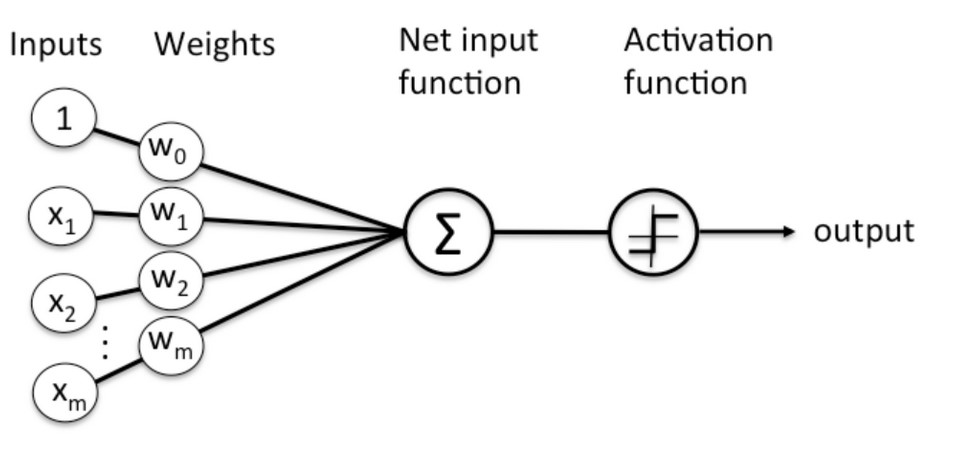

Here’s a diagram of what one node might look like.

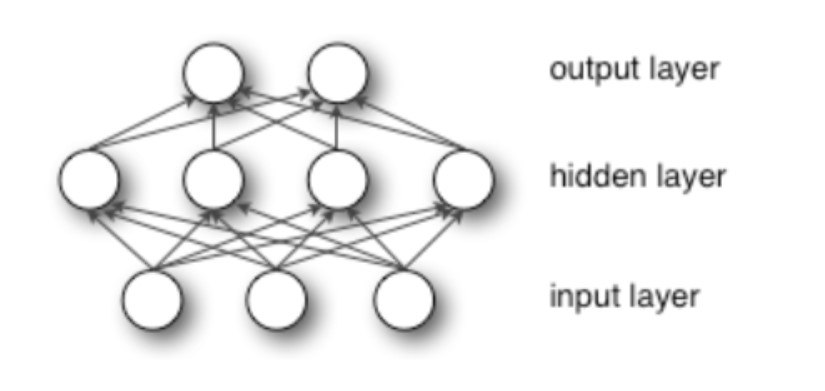

A node layer is a row of those neuron-like switches that turn on or off as the input is fed through the net. Each layer’s output is simultaneously the subsequent layer’s input, starting from an initial input layer receiving your data.
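The node and layer description above can be written out directly: a node computes a weighted sum of its inputs plus a bias and passes it through an activation, and a forward pass feeds each layer's output into the next layer. A minimal sketch in plain Python, assuming a sigmoid activation (a smooth version of the "on/off switch") and hand-picked weights; in a real network the weights are learned during training.

```python
import math
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (one weight row per node, one bias per node)


def sigmoid(z: float) -> float:
    """Smooth switch: close to 0 for very negative z, close to 1 for very positive z."""
    return 1.0 / (1.0 + math.exp(-z))


def node(inputs: List[float], weights: List[float], bias: float) -> float:
    """One node: weighted sum of its inputs plus a bias, passed through the activation."""
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)


def layer(inputs: List[float], params: Layer) -> List[float]:
    """A node layer: every node sees the same inputs and produces one output."""
    weight_rows, biases = params
    return [node(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(inputs: List[float], network: List[Layer]) -> List[float]:
    """Feed data through the net: each layer's output is the next layer's input."""
    activations = inputs
    for params in network:
        activations = layer(activations, params)
    return activations


# Two inputs -> hidden layer of two nodes -> one output node (weights chosen by hand).
network = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[2.0, -2.0]], [0.0]),
]
print(forward([1.0, 1.0], network))  # [0.5]
```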

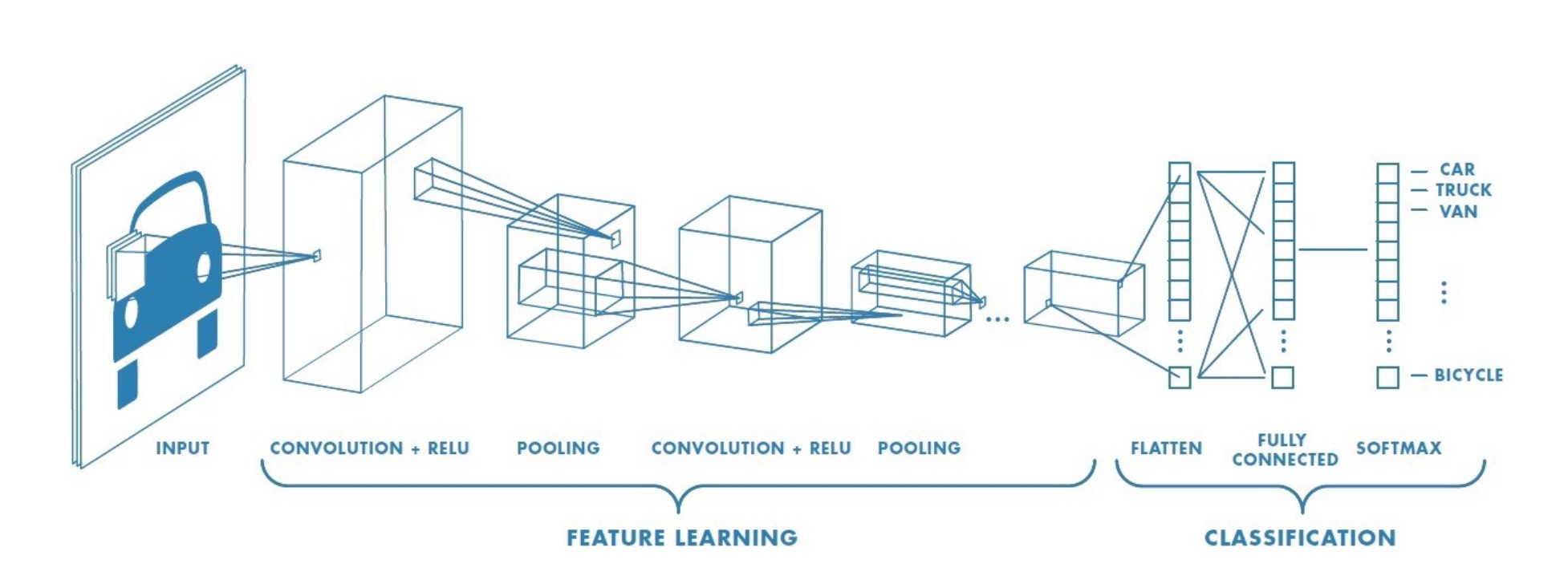

Convolutional Neural Network

For computer vision and image recognition, the most commonly used type of neural network is the Convolutional Neural Network (ConvNet/CNN). A CNN is a deep learning algorithm that can take in an input image, assign importance (learnable weights and biases) to various aspects/objects in the image, and differentiate one from the other. The pre-processing required by a ConvNet is much lower than for other classification algorithms. While in primitive methods filters are hand-engineered, with enough training ConvNets have the ability to learn these filters/characteristics.
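The "filters" mentioned above are small kernels slid over the image. A minimal sketch of that operation in plain Python ("valid" mode, no padding, stride 1; like most CNN libraries it does not flip the kernel, so strictly it is a cross-correlation), applied with a hand-engineered vertical-edge filter of the kind a ConvNet would instead learn during training:

```python
from typing import List

Image = List[List[float]]


def convolve2d(image: Image, kernel: Image) -> Image:
    """'Valid' 2D convolution as used in CNN layers (kernel not flipped, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Hand-engineered vertical-edge filter; a CNN learns values like these itself.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]  # dark left half, bright right half
print(convolve2d(image, VERTICAL_EDGE))  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

The output is large only where the window straddles the dark-to-bright boundary, which is exactly the kind of feature early CNN layers learn to respond to.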

Model:

Current Knowledge

Neural Networks

A widely used library for computer vision and machine learning is OpenCV (Open Source Computer Vision Library), an open source computer vision and machine learning software library. OpenCV was built to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. Being a BSD-licensed product, OpenCV makes it easy for businesses to utilize and modify the code.

The library has more than 2500 optimized algorithms, which include a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, extract 3D models of objects, produce 3D point clouds from stereo cameras, stitch images together to produce a high resolution image of an entire scene, find similar images from an image database, remove red eyes from images taken using flash, follow eye movements, recognize scenery and establish markers to overlay it with augmented reality, etc. OpenCV has a user community of more than 47 thousand people and an estimated number of downloads exceeding 18 million. The library is used extensively in companies, research groups and by governmental bodies.

At the time of writing, the state of the art in object detection is an algorithm called Cascade Mask R-CNN. Cascade R-CNN is a multi-stage object detection architecture consisting of a sequence of detectors trained with increasing IoU (intersection over union) thresholds, so that each stage is more selective against close false positives than the previous one. Compared with the previous year's state-of-the-art algorithm, Cascade Mask R-CNN shows an overall 15% increase in performance, and a 25% increase compared with the state of the art of 2016.
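IoU measures how well a predicted box matches a ground-truth box: the area of their intersection divided by the area of their union. The sketch below computes it for axis-aligned boxes given as (x1, y1, x2, y2) and shows why raising the threshold stage by stage makes detectors stricter; the thresholds 0.5, 0.6 and 0.7 are illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
detection = (2, 0, 12, 10)  # shifted 2 units to the right
overlap = iou(ground_truth, detection)  # 80 / 120, about 0.667
for threshold in (0.5, 0.6, 0.7):
    print(threshold, overlap >= threshold)  # True, True, then False at 0.7
```

A slightly misplaced box that counts as a correct detection at 0.5 is treated as a close false positive at 0.7, which is the effect the cascade exploits.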

This approach is described in the following papers:

Hybrid Task Cascade for Instance Segmentation [1]

CBNet: A Novel Composite Backbone Network Architecture for Object Detection [2]

Cascade R-CNN: High Quality Object Detection and Instance Segmentation [3]

A paper describing the workings, differences and origins of several infrared cameras and techniques can be found in [4].

Parking Space Vacancy Detection

Similar systems have already been implemented in real-life situations for car parking spaces.[5] Many parking environments identify vacant parking spaces in an area using one of several methods: counter-based systems, which only provide information on the number of vacant spaces; sensor-based systems, which require ultrasound, infrared light or magnetic sensors to be installed on each parking spot; and image- or camera-based systems. Camera-based systems are the most cost-efficient method, as they only require a few cameras to function and many areas already have cameras installed. However, such systems are rarely implemented, as they do not work well in outdoor situations.

Researchers from the University of Melbourne have created a parking occupancy detection framework using a deep convolutional neural network (CNN) to detect outdoor parking spaces from images with an accuracy of up to 99.7%.[6] This shows the potential of image-based systems using CNNs for these types of tasks. A major difference between our project and this research, however, is that the spaces move. Parking spaces always stay in the same position, so a CNN can focus on a specific part of the camera image and look at one parking space at a time. In our situation the chairs can move around and are not in a fixed position. The neural network will therefore first have to find and identify all the chairs in its view before it can detect whether each one is vacant, an additional challenge we need to overcome.

Another research group performed similar research using deep learning in combination with a video system for real-time parking measurement.[7] Their method combines information across multiple image frames in a video sequence to remove noise and achieves higher accuracy than purely image-based methods.
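The benefit of combining several frames can be illustrated with a much simpler technique than the one in the cited work: a majority vote over the last few per-frame predictions for each seat, so that a single misclassified frame does not flip the seat's state. A minimal sketch; the class name and the window of 5 frames are assumptions for illustration.

```python
from collections import deque
from typing import Deque


class SmoothedOccupancy:
    """Majority vote over the last `window` per-frame predictions for one seat."""

    def __init__(self, window: int = 5) -> None:
        self.history: Deque[bool] = deque(maxlen=window)

    def update(self, occupied_this_frame: bool) -> bool:
        """Add this frame's prediction and return the smoothed state."""
        self.history.append(occupied_this_frame)
        # Occupied only if more than half of the remembered frames say so.
        return sum(self.history) * 2 > len(self.history)


seat = SmoothedOccupancy(window=5)
frames = [True, True, False, True, True, False, False, False]
print([seat.update(f) for f in frames])
# [True, True, True, True, True, True, False, False]
```

The single noisy `False` in the third frame is ignored, while a real departure is reported after a delay of a few frames; a larger window removes more noise at the cost of reacting more slowly.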

Seat or Workspace Occupancy Detection

A group of students from the Singapore Management University have tried to tackle a similar problem.[8] In their research they proposed a method using capacitance and infrared sensors to solve the problem of seat hogging. With this method they can accurately determine whether a seat is empty, occupied by a human, or whether the table is occupied by items. This method does require a sensor to be placed underneath each table, and since the chairs in our situation move around, it cannot be used everywhere.

A seat occupancy detection method using capacitive sensing has also been proposed.[9] The research focused on car seats, and the method can also detect the position of the seated person. A prototype has shown that it is feasible in real-time situations.

The Institute of Photogrammetry has also researched an intelligent airbag system, in this case using camera footage.[10] They were also able to detect whether the passenger seat was occupied and what the positions of the people were.

A method using cameras for counting people in seats is described in research by the National Laboratory of Pattern Recognition in China.[11] They propose a coarse-to-fine framework to detect the number of people in a meeting using a surveillance camera, with an accuracy of 99.88%.

Autodesk Research also proposed a method for detecting occupancy, this time for a cubicle or an entire room.[12] They used decision trees in combination with several types of sensors to determine which sensor is the most effective. The individual feature that best distinguished presence from absence was the root mean square of a passive infrared motion sensor's signal. It achieved an accuracy of 98.4%, while using multiple sensors only made the result worse, probably due to overfitting. This method could be implemented for rooms around the university but not for individual chairs.
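The winning feature in that study, the root mean square (RMS) of the PIR signal, is easy to compute per time window, and the simplest decision tree on a single feature is one threshold (a "decision stump"). A minimal sketch under those assumptions; the sample windows are made-up illustrative values, not data from the study, and the study's actual trees and window lengths are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple


def rms(window: Sequence[float]) -> float:
    """Root mean square of one window of raw PIR sensor samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def fit_stump(features: List[float], present: List[bool]) -> Tuple[float, int]:
    """One-split decision tree: the threshold that misclassifies the fewest training windows.

    Predicts "present" when feature >= threshold; returns (threshold, training errors).
    """
    best = (0.0, len(features) + 1)
    for t in sorted(set(features)):
        errors = sum((f >= t) != p for f, p in zip(features, present))
        if errors < best[1]:
            best = (t, errors)
    return best


# Illustrative windows: small signal when the room is empty, larger when someone is there.
absent = [[0.1, -0.1, 0.1, -0.1], [0.2, -0.2, 0.2, -0.2]]
present = [[1.0, -1.0, 1.0, -1.0], [0.8, -0.6, 0.7, -0.9]]
features = [rms(w) for w in absent + present]
labels = [False, False, True, True]
threshold, errors = fit_stump(features, labels)
print(round(threshold, 3), errors)  # 0.758 0
```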

A similar idea was envisioned a few years ago with the creation of an app called RoomFinder which allowed students at Bryant University to find empty rooms around campus to study in.[13] This system, created by one of the students, taps into the sensors used by the automatic lighting system in these rooms, to detect whether a room is currently being used and sends that information to the app. As that system was already implemented around campus, it didn't require any capital investment.

Other Image Detection Research

Aerial and satellite images can also be used for object detection.[14] An automatic content-based analysis of aerial imagery has been proposed to mark objects or regions, with an accuracy of 98.6%.

Planning

Milestones

For our project we have decided upon the following milestones each week:

- Week 1: Decide on a subject, make a planning and do research on existing similar products and technologies.

- Week 2: Finish preparatory research on the USE aspects and the subject, and have a clear idea of the possibilities for our project.

- Week 3: Start writing code for our device and finish the design of our prototype.

- Week 4: Create the dataset of training images and buy the required items to build our device.

- Week 5: Have a working neural network and train it using our dataset.

- Week 6: Test our prototype in a staged setting and gather results and possible improvements.

- Week 7: Finish our prototype based on the test results and do one more final test.

- Week 8: Completely finish the Wiki page and the presentation on our project.

Deliverables

This project plans to provide the following deliverables:

- A Wiki page containing all our research and summaries of our project.

- A prototype of our proposed device.

- A presentation showing the results of our project.

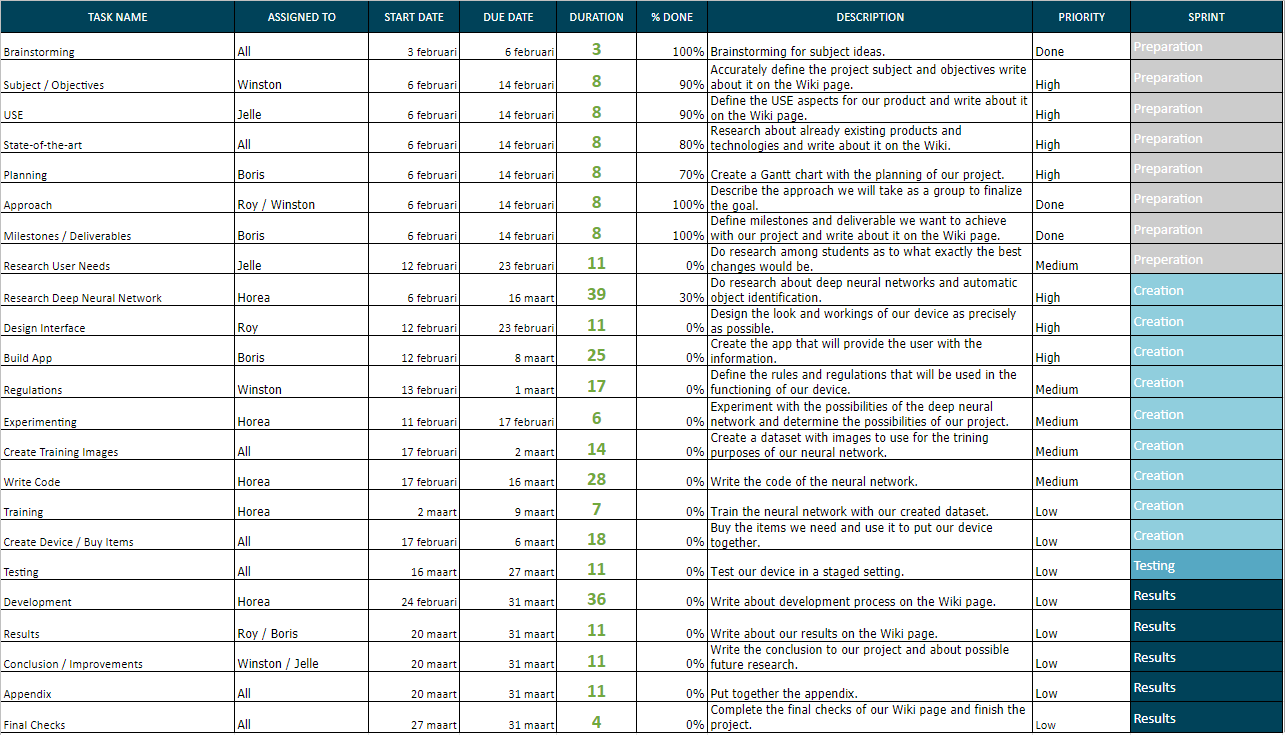

Schedule

Our current planning can be seen in the table below. This planning is not entirely finished and will be updated as the project goes on.

Chairs

Regulations

For the system to run as well as possible, people are strongly encouraged to follow the proposed rules and regulations. These are as follows:

- A person can only stay at a workplace that he or she has reserved

- A person cannot stay at a workplace outside of the reserved time

- A person can only claim one workplace at a time for him or herself

- Chairs should not be removed from the table or workplace they belong to

- No one is allowed to claim any chair or workplace for other people

However, the system is not (yet) able to tell who a person is based on their appearance. It treats everything as "objects" and therefore cannot distinguish one person from another. Moreover, even if the system were able to do so, detecting people's faces is an ethical problem in its own right: some people do not want their faces to be detected and tracked, so the system would raise privacy issues. As a result, one drawback is that it is not possible to punish or pursue people who have violated any of these rules or are causing trouble in general.

Interface Design

There are different ways to convey the information from the cameras to the people who want to use the system. Whatever is chosen, it should be easily accessible, since people probably won’t use an inconvenient display. One of the more convenient options is to make the information accessible through a smartphone, since almost everyone has one. Moreover, most people keep their smartphone somewhere easily accessible to them. A smartphone is also mobile, which helps people navigate the environment, since unlike with a computer screen they are not tied to one place.

Looking at possible ways to convey the information through a smartphone, there are multiple options, but the most commonly used are an app or a website. Both require the phone to have an internet connection, since the data has to come from the server; otherwise the information could be outdated and people could walk to chairs that are already occupied.

Both of these options have their advantages and disadvantages. First, consider a website. A website is (or should be) accessible from all platforms, so it does not depend on support for a particular operating system. This probably means less development time, since only one platform needs to be developed. One possible downside is that a browser tends to forget things like log-in details far more often than an app, since that information may be cleared when the browser is closed.

An app, by comparison, depends more on the operating system: separate apps may have to be developed for Android, iOS, Windows and other platforms, which will probably increase development time whenever changes are made. Its advantage is that, if a login system is used, the app can store the login information locally more easily. However, an app has to be downloaded separately and cannot be used by someone who has not installed it.

Leaving the choice of platform aside, there is also the question of how the information should be presented to people. Should the system just count the number of empty chairs on the whole floor? Should it divide the floor further into sections and show the count of empty chairs in each section? Another option is a complete floor plan with all chairs visible, using colours to indicate whether they are occupied or not. It is also possible to combine these: first select the area you want to work in, shown with its count of empty chairs, and then see the chairs in that area.
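The combined option (first a count per area, then the chairs within the chosen area) needs only a small amount of logic on top of the detector's output. A minimal sketch, assuming a hypothetical `Chair` record per detected chair with an area name, a floor-plan position and an occupied flag; the area names in the usage are made up.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chair:
    """Hypothetical record produced per detected chair."""
    chair_id: int
    area: str       # section of the floor, e.g. a wing of a study hall
    x: float        # position on the floor plan
    y: float
    occupied: bool


def empty_chairs_per_area(chairs: List[Chair]) -> Dict[str, int]:
    """Overview screen: number of empty chairs in each section (sections with none show 0)."""
    counts = Counter({chair.area: 0 for chair in chairs})
    counts.update(chair.area for chair in chairs if not chair.occupied)
    return dict(counts)


def empty_chairs_in_area(chairs: List[Chair], area: str) -> List[Chair]:
    """Detail screen: the empty chairs to draw on the floor plan of one section."""
    return [c for c in chairs if c.area == area and not c.occupied]


chairs = [
    Chair(1, "north", 0.1, 0.2, occupied=False),
    Chair(2, "north", 0.3, 0.2, occupied=True),
    Chair(3, "south", 0.5, 0.8, occupied=True),
]
print(empty_chairs_per_area(chairs))  # {'north': 1, 'south': 0}
print([c.chair_id for c in empty_chairs_in_area(chairs, "north")])  # [1]
```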

Choosing a floor plan with all chairs visible might have some unintended effects. If occupied chairs are visible, they also indicate that the desk at which a chair is located is probably occupied. This is useful because at long tables, like those in Metaforum at the TU/e, a chair might occasionally be free while there is no room at the desk to sit. It also lets people look for a place where they can sit together with several people, since they can see the location of the occupied chairs. However, this matters less when chairs stay at the same place at one desk, which is more common in flexible workspaces at companies.

Showing occupied chairs might also lead to an ethical problem: since everyone with access could use the system, they could track the movements of the people sitting on those chairs. People with bad intentions could in theory use it to stalk fellow students or colleagues from a safe distance, if they know their initial position. If an occupied chair moves, it is very likely that the person who is or was sitting on it is on the move as well.

Returning to the choice between an app and a website, privacy also has to be taken into account. If anyone can access the system, people could track others' movements with bad intentions, as explained above. One solution is a login system, so that only certain people can access the system; the downside is that the system becomes less accessible and might be used less. Another option is to hide occupied chairs. This makes people's activity harder to track, since if someone moves with a chair, it is unknown where it moves to. On the other hand, it removes the extra functionality of checking for free space at a long table.
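One possible way to combine the two privacy options is on the server side: anonymous clients receive only vacant chairs, and logged-in users additionally see occupied ones. A minimal sketch of that filtering step, assuming chairs are plain dictionaries with hypothetical `id`, `x`, `y` and `occupied` keys; how users log in is outside its scope.

```python
from typing import Dict, List


def floor_plan_payload(chairs: List[Dict], logged_in: bool) -> List[Dict]:
    """Build the chair list sent to a client.

    Anonymous clients only receive vacant chairs, so occupied chairs (and with them
    people's movements) cannot be followed; logged-in users also see occupied chairs.
    """
    visible = chairs if logged_in else [c for c in chairs if not c["occupied"]]
    return [{"id": c["id"], "x": c["x"], "y": c["y"], "occupied": c["occupied"]} for c in visible]


chairs = [
    {"id": 1, "x": 0.1, "y": 0.2, "occupied": False},
    {"id": 2, "x": 0.3, "y": 0.2, "occupied": True},
]
print([c["id"] for c in floor_plan_payload(chairs, logged_in=False)])  # [1]
print([c["id"] for c in floor_plan_payload(chairs, logged_in=True)])   # [1, 2]
```

Filtering on the server rather than only hiding chairs in the app matters: data that is never sent cannot be read out of the app's network traffic.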

App Creation

The first approach we tried was using Android Studio to make a suitable app. This is relatively easy, as a basic app with some buttons and an interface does not take much time to create. After a few online tutorials, a basic interface was created in which areas on campus can be selected, each leading to a different page. This looks quite slick and can be updated quickly and easily. However, doing anything beyond the bare basics requires considerable programming knowledge of Java or Kotlin. This causes two issues. First, if we want to use a database for the project, implementing it will be a challenge, although some guides on this have been found and it seems quite doable with a little knowledge of Java or Kotlin. Second, there is the question of how to display the information about vacant seats to the users. If we want to use green dots as discussed, this will be very difficult in Android Studio. No way of doing this has been found so far; there may be one, but it will surely be tough to implement.

Another way of creating the app is using Unity. This is a program that can create various types of software for many platforms, including Android and iOS apps. Experimenting with Unity has been quite successful: in a relatively short amount of time I was able to get the placement of the green dots to work. The other parts of the interface were also easy to implement, to the point where only two things still need to be added: the overall design needs to be changed/adapted to be optimal for our users, and the positions of the dots need to be read from a database. Currently the app imports the positions from a txt file, but changing this to import them from, for example, an SQL table shouldn't be hard. The basics of this are already partially implemented as well.
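Replacing the txt file with an SQL table could look roughly like the sketch below, written with Python's built-in `sqlite3` module. The table name `chair_dots`, its columns and the normalised 0..1 dot coordinates are hypothetical, and the Unity (C#) side that would query this data, for example through a small web service, is not shown.

```python
import sqlite3

# Hypothetical schema: one row per chair dot on a floor-plan image.
SCHEMA = """
CREATE TABLE IF NOT EXISTS chair_dots (
    chair_id INTEGER PRIMARY KEY,
    area     TEXT NOT NULL,
    x        REAL NOT NULL,  -- dot position on the floor-plan image, 0..1 from the left
    y        REAL NOT NULL,  -- 0..1 from the top
    occupied INTEGER NOT NULL DEFAULT 0
)
"""


def set_status(conn: sqlite3.Connection, chair_id: int, area: str,
               x: float, y: float, occupied: bool) -> None:
    """Called by the detection side whenever a chair's state or position changes."""
    conn.execute(
        "INSERT OR REPLACE INTO chair_dots (chair_id, area, x, y, occupied) VALUES (?, ?, ?, ?, ?)",
        (chair_id, area, x, y, int(occupied)),
    )
    conn.commit()


def green_dots(conn: sqlite3.Connection, area: str):
    """What the app would read instead of the txt file: vacant chair positions in one area."""
    rows = conn.execute(
        "SELECT chair_id, x, y FROM chair_dots WHERE area = ? AND occupied = 0 ORDER BY chair_id",
        (area,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
set_status(conn, 1, "north", 0.20, 0.30, occupied=False)
set_status(conn, 2, "north", 0.50, 0.50, occupied=True)
print(green_dots(conn, "north"))  # [(1, 0.2, 0.3)]
```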

The decision of which method to use depends mostly on the design of the app. If we only want to show the number of seats per area, for example, Android Studio would probably be easier. However, if we want to use the green dots, Unity would clearly be the better choice, as the dots already largely work there.

Once the final design has been decided and we have a clearer idea of how we will use the database, finishing the app shouldn't take much time or effort anymore.

Neural Network

Using the Yolo Algorithm

When the question of how to detect objects came up, the first thing we thought of was a neural network. To detect an object, you need a powerful type of algorithm that can classify that object, attributing a label to it, which in our case is "chair" with the states "empty" or "occupied". We chose Python as the programming language because of its large neural network community and its clear, easy-to-read syntax.

A neural network can be built in two ways:

- Create your own model, with your own functions and the mathematical formulas behind them

- Use an existing model and adapt it so that it is useful for your desired task

Due to a lack of time to create our own neural network model, we chose the second option. In recent years, deep learning techniques have achieved state-of-the-art results for object detection, such as on standard benchmark datasets and in computer vision competitions. Notable is the “You Only Look Once,” or YOLO, family of Convolutional Neural Networks, which achieve near state-of-the-art results with a single end-to-end model that can perform object detection in real time. YOLO is a clever convolutional neural network (CNN) for doing object detection in real time. The algorithm applies a single neural network to the full image, then divides the image into regions and predicts bounding boxes and probabilities for each region. These bounding boxes are weighted by the predicted probabilities.
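To make "divides the image into regions" concrete: in the original YOLO formulation (YOLOv1) the image is split into an S × S grid, the cell containing an object's centre is responsible for predicting it, and each box's class-specific score is its confidence multiplied by the conditional class probability. A small sketch of those two steps, assuming S = 7 as in YOLOv1 and hypothetical class names; later YOLO versions predict at several grid sizes with anchor boxes, so this is an illustration rather than what a particular YOLO release does internally.

```python
from typing import Dict, Tuple


def responsible_cell(center_x: float, center_y: float, img_w: int, img_h: int,
                     s: int = 7) -> Tuple[int, int]:
    """Grid cell (row, col) containing the box centre; in YOLOv1 this cell predicts the object."""
    col = min(int(center_x / img_w * s), s - 1)
    row = min(int(center_y / img_h * s), s - 1)
    return row, col


def class_scores(box_confidence: float, class_probs: Dict[str, float]) -> Dict[str, float]:
    """Class-specific score of one predicted box: box confidence times conditional class probability."""
    return {name: box_confidence * p for name, p in class_probs.items()}


print(responsible_cell(300, 100, 448, 448))  # (1, 4)
scores = class_scores(0.8, {"chair_empty": 0.9, "chair_occupied": 0.1})
print({name: round(v, 2) for name, v in scores.items()})  # {'chair_empty': 0.72, 'chair_occupied': 0.08}
```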

YOLO is popular because it achieves high accuracy while also being able to run in real-time. The algorithm “only looks once” at the image in the sense that it requires only one forward propagation pass through the neural network to make predictions. After non-max suppression (which makes sure the object detection algorithm only detects each object once), it then outputs recognized objects together with the bounding boxes.
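Non-max suppression can be sketched as a greedy loop: visit the boxes from highest to lowest score and keep a box only if it does not overlap an already kept box by more than an intersection-over-union (IoU) threshold. This is a minimal plain-Python version assuming corner-format boxes `(x1, y1, x2, y2)` and a threshold of 0.5; real YOLO pipelines usually run it per class and rely on an optimised library routine instead.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(0.0, w) * max(0.0, h)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep this box only if no already-kept box overlaps it too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
    print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

The first two boxes overlap with an IoU of 0.81, so only the higher-scoring one survives; the distant third box is kept as a separate detection.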

With YOLO, a single CNN simultaneously predicts multiple bounding boxes and class probabilities for those boxes. YOLO trains on full images and directly optimizes detection performance.

In this project we use YOLO to identify the empty chairs in a classroom. The classification is done as follows:

The chairs can be:

- Empty

- Occupied

These two tags are assigned to the chairs according to the following criteria:

- If the chair is clean (there is nothing in front of it or on it), then the chair is empty

- If the chair is empty and someone puts an object in front of it or sits on it, then the chair becomes occupied
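The two criteria above can be turned into a simple rule on the detector's output. The sketch assumes the network already returns separate lists of person and object boxes, and it approximates "in front of or on the chair" by how much of the chair's box another box covers. The 0.3 coverage threshold and all names are hypothetical and would need tuning on real camera images.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), corner format


def coverage(chair: Box, other: Box) -> float:
    """Fraction of the chair's box area that another box covers."""
    w = min(chair[2], other[2]) - max(chair[0], other[0])
    h = min(chair[3], other[3]) - max(chair[1], other[1])
    inter = max(0.0, w) * max(0.0, h)
    chair_area = (chair[2] - chair[0]) * (chair[3] - chair[1])
    return inter / chair_area if chair_area > 0 else 0.0


def chair_state(chair: Box, persons: List[Box], objects: List[Box],
                min_coverage: float = 0.3) -> str:
    """'occupied' if a person or an object covers enough of the chair, else 'empty'."""
    for box in persons + objects:
        if coverage(chair, box) >= min_coverage:
            return "occupied"
    return "empty"


if __name__ == "__main__":
    chair = (0, 0, 10, 10)
    print(chair_state(chair, persons=[(2, 0, 12, 10)], objects=[]))   # occupied
    print(chair_state(chair, persons=[], objects=[(20, 20, 25, 25)]))  # empty
```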

There is also a small problem: when the algorithm sees a person, it cannot tell from that first look whether there is also a chair at that location. It has to see the chair first in order to assign it a tag.
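One way to work around this is to remember where chairs were seen while they were visible and keep checking those remembered positions, so that a seat stays known even when a person hides the chair. The sketch below is a hypothetical design, not the group's implementation: the `SeatMemory` class, its overlap thresholds, and the corner-format boxes are all assumptions. It also matches ALR14, because a remembered seat returns to "empty" once nothing overlaps it anymore.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), corner format


def _intersection(a: Box, b: Box) -> float:
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def _area(a: Box) -> float:
    return (a[2] - a[0]) * (a[3] - a[1])


class SeatMemory:
    """Hypothetical tracker that remembers where chairs were seen."""

    def __init__(self, match_overlap: float = 0.5, occupied_overlap: float = 0.3):
        self.seats: List[Box] = []
        self.match_overlap = match_overlap        # same seat if a detection covers this much of it
        self.occupied_overlap = occupied_overlap  # occupied if a person/object covers this much

    def _register(self, chair: Box) -> None:
        for seat in self.seats:
            if _intersection(seat, chair) >= self.match_overlap * _area(seat):
                return  # already a known seat
        self.seats.append(chair)

    def update(self, chairs: List[Box], persons: List[Box],
               objects: List[Box]) -> Dict[int, str]:
        """Process one frame and return the state of every remembered seat."""
        for chair in chairs:
            self._register(chair)
        states: Dict[int, str] = {}
        for idx, seat in enumerate(self.seats):
            covered = any(
                _intersection(seat, box) >= self.occupied_overlap * _area(seat)
                for box in persons + objects
            )
            states[idx] = "occupied" if covered else "empty"
        return states


if __name__ == "__main__":
    memory = SeatMemory()
    print(memory.update(chairs=[(0, 0, 10, 10)], persons=[], objects=[]))      # {0: 'empty'}
    print(memory.update(chairs=[], persons=[(0, 0, 10, 12)], objects=[]))      # {0: 'occupied'}
    print(memory.update(chairs=[(0.5, 0, 10.5, 10)], persons=[], objects=[]))  # {0: 'empty'}
```

A person seen at a place where no chair has ever been detected still produces no seat at all, which is exactly the limitation described above; the memory only helps once the chair has been seen at least once.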

Requirements of the model

| Id | Description | MoSCoW |

|---|---|---|

| ALR1 | The system shall correctly identify 4 types of objects, namely: empty chairs, taken chairs, persons, and miscellaneous objects in front of the chairs. | Must have |

| ALR2 | The system shall conclude that if a person sits on a chair, then that chair is taken. | Must have |

| ALR3 | The system shall conclude that if there are miscellaneous objects in front of a chair, then that chair is taken. | Must have |

| ALR4 | The system shall work on a real-time feed. | Must have |

| ALR5 | The system shall keep track of the persons it sees. | Won't have |

| ALR6 | The system shall also focus on people's faces. | Won't have |

| ALR7 | When someone places an object in front of a chair, the system shall consider that chair occupied. | Must have |

| ALR8 | When a chair has an empty table in front of it, the system shall consider it empty. | Must have |

| ALR9 | The system shall save the number of empty chairs found in a database on cloud. | Must have |

| ALR10 | The system shall update the database once every 2 minutes | Must have |

| ALR11 | The system shall communicate with the Android app through the database. | Must have |

| ALR12 | The Android app shall use the given database | Must have |

| ALR13 | The Android app shall display the information from the database | Must have |

| ALR14 | When someone leaves a clean chair, the system shall consider it empty. | Must have |
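Requirements ALR9 to ALR13 describe a simple pattern through the database: the detector writes the number of empty chairs per area at most once every 2 minutes, and the app reads the latest value. The sketch below uses Python's `sqlite3` as a local stand-in for the cloud database; the `empty_seats` table, the area name, and all function names are assumptions.

```python
import sqlite3
from typing import Iterable, Optional, Tuple

UPDATE_INTERVAL_S = 120.0  # ALR10: update the database once every 2 minutes


def due_for_update(last_update: Optional[float], now: float,
                   interval: float = UPDATE_INTERVAL_S) -> bool:
    """True if nothing has been published yet or the interval has passed."""
    return last_update is None or now - last_update >= interval


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS empty_seats ("
        "area TEXT PRIMARY KEY, empty_count INTEGER NOT NULL, updated_at REAL NOT NULL)"
    )


def publish_count(conn: sqlite3.Connection, area: str, empty_count: int, now: float) -> None:
    """Detector side (ALR9): store the latest number of empty chairs for an area."""
    conn.execute(
        "INSERT OR REPLACE INTO empty_seats (area, empty_count, updated_at) VALUES (?, ?, ?)",
        (area, empty_count, now),
    )
    conn.commit()


def read_count(conn: sqlite3.Connection, area: str) -> Optional[int]:
    """App side (ALR12/ALR13): read the most recent count, or None if there is none."""
    row = conn.execute(
        "SELECT empty_count FROM empty_seats WHERE area = ?", (area,)
    ).fetchone()
    return row[0] if row else None


def run_publisher(conn: sqlite3.Connection, area: str,
                  samples: Iterable[Tuple[float, int]]) -> None:
    """Feed (timestamp, empty_count) samples; publish only when an update is due."""
    last: Optional[float] = None
    for now, count in samples:
        if due_for_update(last, now):
            publish_count(conn, area, count, now)
            last = now


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    # Samples every 60 s: t=60 is skipped, t=120 is published.
    run_publisher(conn, "MetaForum", [(0.0, 5), (60.0, 3), (120.0, 7)])
    print(read_count(conn, "MetaForum"))  # 7
```

Keeping only the latest row per area keeps the app's query trivial; storing a history instead would be a design choice for later.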

Rooms

Regulations

As opposed to the system with the chairs, the rooms system actually makes it possible to pursue people who do not follow the rules and regulations. People need to reserve rooms through their account, which makes it possible to hold them responsible for what happens to the room while they have it reserved (the name, picture, student number, etc. of the account holder are all known once a person has reserved a room through his/her account).

The rules and regulations for reserving a room are as follows:

- A person can only make use of a room when he or she has reserved it

- Rooms can be reserved up to 7 days in advance

- Reservations may not conflict with other requests for using the room

- If no one is in the room 15 minutes after the start of the reservation, the reservation gets cancelled

- No one is allowed to stay in a room outside of the available reservation times

- If chairs and/or tables have been moved in the room, they need to be put back in their original positions before leaving the room
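The checkable rules in the list above (the 7-day booking window, no conflicting reservations, and the 15-minute no-show cancellation) could be enforced with checks like the following. This is a hypothetical sketch: the `Reservation` fields, the room name, and how "someone is in the room" is detected (`first_seen`) are assumptions, and the rules about staying outside reservation times and moving furniture are not modelled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

MAX_ADVANCE = timedelta(days=7)        # rooms can be reserved up to 7 days in advance
NO_SHOW_GRACE = timedelta(minutes=15)  # cancel if nobody shows up within 15 minutes


@dataclass
class Reservation:
    room: str
    start: datetime
    end: datetime


def overlaps(a: Reservation, b: Reservation) -> bool:
    """Two reservations conflict if they are for the same room and their intervals intersect."""
    return a.room == b.room and a.start < b.end and b.start < a.end


def rejection_reason(new: Reservation, existing: List[Reservation],
                     now: datetime) -> Optional[str]:
    """Return None if the reservation is allowed, otherwise the rule it breaks."""
    if new.end <= new.start:
        return "end must be after start"
    if new.start - now > MAX_ADVANCE:
        return "more than 7 days in advance"
    if any(overlaps(new, r) for r in existing):
        return "conflicts with an existing reservation"
    return None


def should_cancel(res: Reservation, first_seen: Optional[datetime], now: datetime) -> bool:
    """No-show rule: once 15 minutes have passed, cancel if nobody was seen before then."""
    deadline = res.start + NO_SHOW_GRACE
    return now >= deadline and (first_seen is None or first_seen > deadline)


if __name__ == "__main__":
    now = datetime(2020, 2, 20, 9, 0)
    booked = Reservation("Room A", datetime(2020, 2, 21, 10, 0), datetime(2020, 2, 21, 12, 0))
    clash = Reservation("Room A", datetime(2020, 2, 21, 11, 0), datetime(2020, 2, 21, 13, 0))
    print(rejection_reason(booked, [], now))       # None
    print(rejection_reason(clash, [booked], now))  # conflicts with an existing reservation
    print(should_cancel(booked, None, booked.start + timedelta(minutes=20)))  # True
```

Back-to-back reservations (one ending exactly when the next starts) are treated as not conflicting, because the overlap test uses strict inequalities.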

In order to prevent people from abusing the room reservation system, those who violate the rules will receive a punishment, which is based on the severity of the violation and the number of previous violations.

[Write part about punishments and the rules of punishments]

Logbook

Week 1

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | Brainstorming (1), Research Papers (3), Write Planning (2.5), Write State-of-the-art (4), Write Subject and Problem Statement (0.5), Other Wiki Writing (2) | 13 |

| Winston | Brainstorming (1), Look for relevant papers (2), Write subject, objectives, and approach (3), Clean up state of the art (1), clean up the references (1), Wiki writing (2), Rules and Regulations (2) | 12 |

| Jelle | Brainstorming (1), Writing users, society and enterprise (4), exploring relevant papers (2), adding to approach (2), Other wiki writing (1) | 10 |

| Roy | Brainstorming (1), Exploring relevant papers (2), Write Approach (2) | 5 |

| Horea | Brainstorming (1), Look for papers (2), Object detection and neural network (4), State-of-the-art (2), Wiki writing (2) | 11 |

Week 2

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | Meeting (0.5), Update planning (0.5), Clean up state-of-the-art (0.5), Group Discussion (2), App Creation (8) | 11.5 |

| Horea | Meeting (0.5), Group Discussion (2), Neural Network Creation (6), Learning to create the Neural Network (4) | 12.5 |

| Jelle | Meeting (0.5), User Requirements and questionnaire creation (4), Group Discussion (2) | 6.5 |

| Roy | Meeting (0.5), Keep in Contact with Peter Engels (0.5), Group Discussion (2), Design interface (7) | 10 |

| Winston | Group Discussion (2), write rules and regulations (3) | 5 |

Week 3

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | Meeting (0.5), Group Discussion (0.5), Contact Engels (0.5), App Creation (9) | 10.5 |

| Horea | Meeting (0.5), Group Discussion (0.5), Implementing Yolo object detection on live feed (5) | 6.5 |

| Jelle | Meeting (0.5), Group Discussion (0.5), Contact Engels (0.5) | 1.5 |

| Roy | Meeting (0.5), Group Discussion (0.5), Design interface (3), Creating Mockups (2) | 6 |

| Winston | Meeting (0.5), Group Discussion (0.5), Contact Engels (0.5), adjust rules and regulations (2) | 3.5 |


Week 4

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | ... | 0 |

| Horea | ... | 0 |

| Jelle | ... | 0 |

| Roy | ... | 0 |

| Winston | ... | 0 |

Week 5

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | ... | 0 |

| Horea | ... | 0 |

| Jelle | ... | 0 |

| Roy | ... | 0 |

| Winston | ... | 0 |

Week 6

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | ... | 0 |

| Horea | ... | 0 |

| Jelle | ... | 0 |

| Roy | ... | 0 |

| Winston | ... | 0 |

Week 7

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | ... | 0 |

| Horea | ... | 0 |

| Jelle | ... | 0 |

| Roy | ... | 0 |

| Winston | ... | 0 |

Week 8

| Name | Activities (hours) | Total time spent |

|---|---|---|

| Boris | ... | 0 |

| Horea | ... | 0 |

| Jelle | ... | 0 |

| Roy | ... | 0 |

| Winston | ... | 0 |

References

- Kai Chen et al., "Hybrid Task Cascade for Instance Segmentation"

- Yudong Liu et al., "CBNet: A Novel Composite Backbone Network Architecture for Object Detection", Sep 2019

- Zhaowei Cai, Nuno Vasconcelos, "Cascade R-CNN: High Quality Object Detection and Instance Segmentation", Jun 2019

- Carlo Corsi, "History highlights and future trends of infrared sensors", pages 1663-1686, April 2010

- Bong, D.B.L., "Integrated Approach in the Design of Car Park Occupancy Information System (COINS)", IAENG International Journal of Computer Science, 2008

- Acharya, Debaditya, "Real-time image-based parking occupancy detection using deep learning", CEUR Workshop Proceedings, 2018

- Cai, Bill Yang, "Deep Learning Based Video System for Accurate and Real-Time Parking Measurement", IEEE Internet of Things Journal, 2019

- Nguyen, Huy Hoang, "Real-time Detection of Seat Occupancy and Hogging", IoT-App, 2015

- George, Boby, "Seat Occupancy Detection Based on Capacitive Sensing", IEEE Transactions on Instrumentation and Measurement, 2009

- Faber, Petko, "Image-based Passenger Detection and Localization inside Vehicles", International Archives of Photogrammetry and Remote Sensing, 2000

- Liang, Hongyu, "People in Seats Counting via Seat Detection for Meeting Surveillance", Communications in Computer and Information Science, 2012

- Hailemariam, Ebenezer, "Real-Time Occupancy Detection using Decision Trees with Multiple Sensor Types", Autodesk Research, 2011

- Chrisni, "App helps students find empty study space", University Business, 2014

- Sevo, Igor, "Convolutional Neural Network Based Automatic Object Detection on Aerial Images", IEEE Geoscience and Remote Sensing Letters, 2016