Embedded Motion Control 2019 Group 3: Difference between revisions

| Line 366: | Line 366: | ||

[[File:V_delta_calc_EMC3_2019.png|center|frameless]] | [[File:V_delta_calc_EMC3_2019.png|center|frameless]] | ||

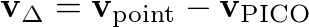

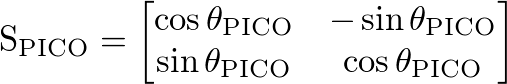

Then the coordinate space matrix of PICO [[File:S_pico_EMC3_2019.png|frameless|upright=0.2]] needs to be determined in order to create the transition matrix. This is done by using the absolute rotation of PICO [[File:Th_pico_EMC3_2019.png|frameless|upright=0. | Then the coordinate space matrix of PICO [[File:S_pico_EMC3_2019.png|frameless|upright=0.2]] needs to be determined in order to create the transition matrix. This is done by using the absolute rotation of PICO [[File:Th_pico_EMC3_2019.png|frameless|upright=0.2]] in order to calculate the vector components of the x- and y-axes of the PICO coordinate space. | ||

[[File:S_pico_calc_EMC3_2019.png|center|frameless]] | |||

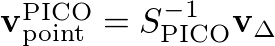

Then the relative position vector of the pathpoint in PICO's coordinate space [[File:V_point_pico_EMC3_2019.png|frameless|upright=0.2]] can be calculated by multiplying the inverse of the PICO space matrix with the difference vector: | |||

[[File:V_point_pico_calc_EMC3_2019.png|center|frameless]] | |||

==== Visualisation ==== | ==== Visualisation ==== | ||

Revision as of 22:28, 16 June 2019

Group members

| Collin Bouwens | 1392794 | |

| Yves Elmensdorp | 1393944 | |

| Kevin Jebbink | 0817997 | |

| Mike Mostard | 1387332 | |

| Job van der Velde | 0855969 |

Useful information

PDF of initial Design Document

Planning

| Week 2 | Week 3 | Week 4 | Week 5 | Week 6 | Week 7 | Week 8 |

|---|---|---|---|---|---|---|

| Wed. 1 May: initial meeting: getting to know the requirements of the design document. | Mon. 6 May: design document handed in by 17:00. Responsibility: Collin and Mike. | Wed. 15 May: escape room competition. | Wed. 5 June: final design presentation. | Wed. 12 June: final competition. | ||

| Tue. 7 May: first tests with the robot. Measurement plan and test code is to be made by Kevin and Job. | Tue. 14 May: Implementing and testing the code for the Escape Room Challenge | |||||

| Wed. 8 May: meeting: discussing the design document and the initial tests, as well as the software design made by Yves.

Presentation of the initial design by Kevin during the lecture. |

Wed. 15 May: Developing the software design for the Final Challenge |

Introduction

This wiki page describes the approach and process of group 3 concerning the Escape room challenge and the Hospital challenge with the PICO robot. The PICO robot is a telepresence robot that is capable of driving around while monitoring its environment. In the Escape Room Competition, the robot is placed somewhere inside a rectangular room with unknown dimensions with one doorway that leads to the finish line. Once the robot crosses the finish line without bumping into walls, the assignment is completed. The Hospital challenge involves a dynamic hospital-like environment, where the robot is assigned to approach a number of cabinets based on a known map, while avoiding obstacles.

The wiki is subdivided in the following parts: Firstly, the approach for the Escape room challenge is explained and evaluated. The second topic is the approach and evaluation of the Hospital challenge. This is followed by a full description of the system architecture used to perform the Hospital challenge. After the system architecture, the most important tests and test results are explained. Lastly, a conclusion and recommendation is provided.

Escape room challenge

This chapter summarizes the approach for the escape room challenge and offers some reflection on the execution of the challenge. Below a small clip of the escape room challenge.

Approach

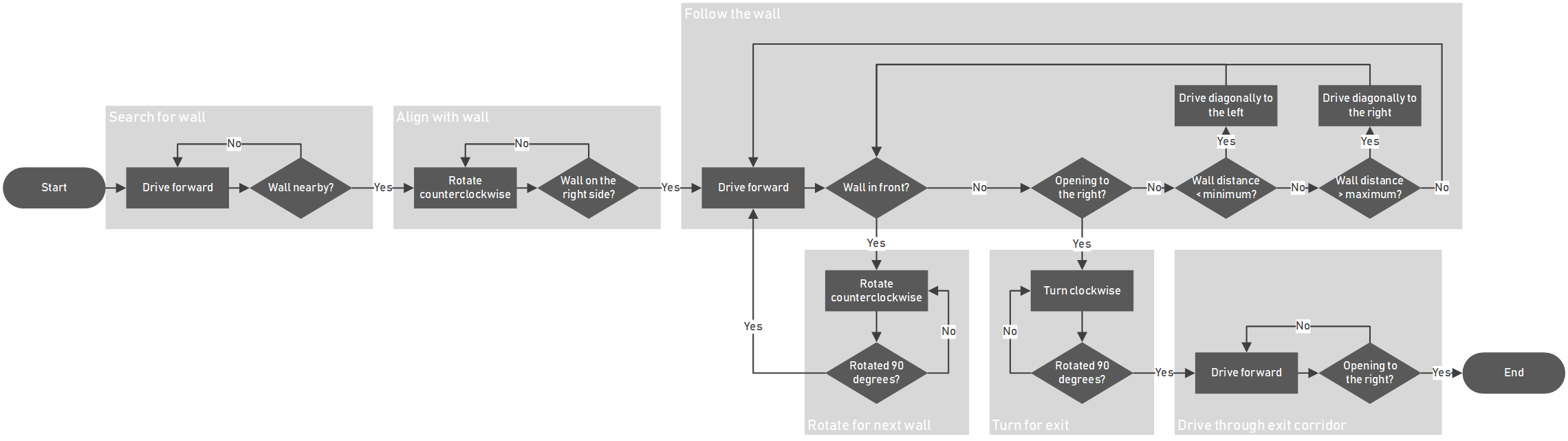

The state chart below depicts the wall following program that the robot is to execute during the escape room challenge. In a nutshell: the robot drives forward until a wall is detected, lines up with said wall to the right, and starts following it by forcing itself to stay between a minimum and a maximum distance to the wall. When something is detected in front, it is assumed that the next wall to follow is found, and thus the robot should rotate 90 degrees counterclockwise so it can start following the next wall. When a gap is detected to the right of the robot, it is assumed that the exit corridor has been found, and thus the robot should turn into the exit. Then the robot keeps following the right wall in the corridor until, once again, a gap is detected to the right of the robot. At this point, the robot should have crossed the finish line.

Reflection

Due to a lack of time and more resources being put into the final challenge, the code for the escape room challenge had to be simplified. The original plan was to have the robot scan the environment, identify the exit, and when identified, drive towards the exit and drive to the finish line. In case the robot could not identify the exit, the robot would start following the wall instead, as a robust backup plan. The testing session before the challenge proved to be too short, and only the wall follower could be tested. Therefore, only the wall follower program was executed during the challenge.

As a precaution to not bump into the walls, we reduced the speed of the robot and increased the distance the robot would keep to the wall by modifying the config file in the software. Although our program did succeed the challenge, we were the slowest performing group as a result of the named modifications to the configuration. We felt however that these modifications were worth the slowdown and proved the robustness of the simple approach our software took.

Hospital Competition

This chapter summarizes the approach for the hospital challenge and offers some reflection on the execution of the challenge.

Approach

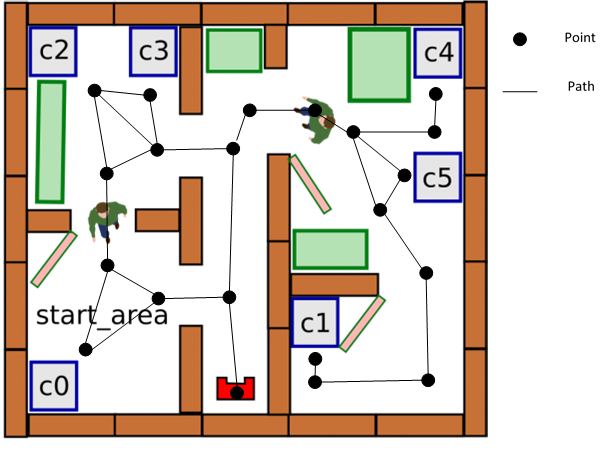

The general approach to the challenge is to create a point map of the map of the hospital. The figure below shows such a point map:

A point is placed on different locations on the map. These locations are: at cabinets, on junction, in front of doorways and in rooms. In the placement of these points, it is important that each point can be approached from a different point in a straight line. The goal of these points is that the robot can navigate from one side of the hospital to the other by driving from point to point. The points that the robot can drive to in a straight line from a point are its neighboring points.

The placement of each point is defined by the distance and direction to its neighboring points and its surrounding spatial features. When the robot is on a point (A) and wants to drive to a different point (B), the robot can use the distance and direction to from A to B to drive to where B approximately is. Then, using the spatial features surrounding point B, the robot can more accurately determine its location compared to B and drive to B. For the path between points, it can be defined whether this path is through a doorway or hallway, or whether its though a room. This can help in how the robot trajectory should be controlled during the driving from point to point.

If the robots needs to drive from a startpoint to an endpoint which is not neighbouring, the software will create a route to that point. This route is a list of points to which the robot needs to drive to get to the endpoint. To make sure the route is as efficient as possible, an algorithm is used which calculates the shortest route. The algorithm that is used is called "Dijkstra'a algorithm". A similar algorithm is also used in car navigation systems to obtain the shortest route.

Reflection

TBD

- Kevin + gifjes enzovoorts

System Design

This chapter describes the final system design for the hospital challenge. The system design is based on the original Design Document that can be found under Useful Documents.

Components

The PICO robot is a modified version of the Jazz robot, which is originally developed by Gostai, now part of Aldebaran. The key components of the robot that are relevant to this project are the drivetrain and the laser rangefinder. The drivetrain is holonomic, as it consists of three omni-wheels that allow the robot to translate in any direction without necessarily rotating. This adds the benefit of scanning the environment in a fixed orientation, while moving in any direction. The software framework allows the forward and sideways velocity to be set, as well as the horizontal angular velocity. The framework also approximates the relative position and angle from the starting position.

The laser rangefinder is a spatial measurement device that is capable of measuring the horizontal distance to any object within a fixed field of view. The software framework measures a finite number of equally distributed angles within the field of view and notifies when new measurement data is available. Using this data, walls and obstacles in the environment of the robot can be detected.

Lastly, the robot is fitted with loudspeakers and a WiFi connection according to the data sheet of the Jazz robot. This can be useful for interfacing during operation, as described in the 'Interfaces' section. Whether the PICO robot actually has these speakers and the WiFi connectivity remains to be determined.

Requirements

Different requirement sets have been made for the Escape Room Competition and the Final Competition. The requirements are based on the course descriptions of the competitions and the personal ambitions of the project members. The final software is finished once all the requirements are met.

The requirements for the Escape Room Competition are as follows:

- The entire software runs on one executable on the robot.

- The robot is to autonomously drive itself out of the escape room.

- The robot may not 'bump' into walls, where 'bumping' is judged by the tutors during the competition.

- The robot may not stand still for more than 30 seconds.

- The robot has five minutes to get out of the escape room.

- The software will communicate when it changes its state, why it changes its state and to what state it changes.

The requirements for the Final Competition are as follows:

- The entire software runs on one executable on the robot.

- The robot is to autonomously drive itself around in the dynamic hospital.

- The robot may not 'bump' into objects, where 'bumping' is judged by the tutors during the competition.

- The robot may not stand still for more than 30 seconds.

- The robot can visit a variable number of cabinets in the hospital.

- The software will communicate when it changes its state, why it changes its state and to what state it changes.

- The robot navigates based on a provided map of the hospital and data obtained by the laser rangefinder and the odometry data.

Functions

A list of functions the robot needs to fulfil has been made. Some of these functions are for both competitions, while some are for either the Escape Room or Final Competition. These functions are:

- In general:

- Recognising spatial features;

- Preventing collision;

- Conditioning the odometry data;

- Conditioning the rangefinder data;

- Communicating the state of the software.

- For the Escape Room Competition:

- Following walls;

- Detecting the end of the finish corridor.

- For the Final Competition:

- Moving to points on the map;

- Calculating current position on the map;

- Planning the trajectory to a point on the map;

- Approaching a cabinet based on its location on the map.

The key function in this project is recognising spatial features. The point of this function is to analyse the rangefinder data in order to detect walls, convex or concave corners, dead spots in the field of view, and gaps in the wall that could be a doorway. This plays a key role during the Escape Room Competition in order to detect the corridor with the finish line in it, and therefore has a priority during the realisation of the software. For this function to work reliably, it is essential that the rangefinder data is analysed for noise during the initial tests. If there is a significant amount of noise, the rangefinder data needs to be conditioned before it is fed into the spatial feature recognition function. As a safety measure, it is important to constantly monitor the spatial features in order to prevent collisions with unexpected obstacles.

Lastly, the trajectory planning function plays a major role during the Final Competition, as this determines the route that the robot needs to follow in order to get to a specified cabinet. This function needs to take obstacles into account, in case the preferred route is obstructed. This is possible, as the documentation about the Final Competition show a map in which multiple routes lead to a certain cabinet. One of these routes can be blocked, in which case the robot needs to calculate a different route.

Specifications

The specifications describe important dimensions and limitations of the hardware components of the robot that will be used during the competitions. For each component, the specifications of that components will be given, with a source of where this specification comes from.

The drivetrain of the robot can move the robot in the x and y directions and rotate the robot in the z direction. The maximum speed of the robot is limited to ±0.5 m/s translation and ±1.2 rad/s rotation. These values are from the Embedded Motion Control Wiki page. The centre of rotation of the drivetrain needs to be known in order to predict the translation of the robot after a rotation. This will be determined with a measurement.

The dimensions of the footprint of the robot need to be known in order to move the robot through corridors and doorways without collision. The footprint is 41 cm wide and 35 cm deep, according to the Jazz robot datasheet. A measurement will be made to check these dimensions.

The laser rangefinder will be used to detect and measure the distance to objects in the vicinity of the robot. The measurement distance range of the sensor is from 0.1 m to 10.0 m with a field of view of 229.2°. The range of the sensor is divided into 1000 parts. These values are determined with the PICO simulator and need to be verified with measurements on the real robot.

Interfaces

The interfacing of the robot determines how the project members interact with the robot in order to set it up for the competitions. It also plays a role during operation, in the way that it interacts with the spectators of the competitions. On the development level there is an Ethernet connection available to the robot. This allows a computer to be hooked up to the robot in order to download the latest version of the software using git, by connecting to the Gitlab repository of the project group. This involves using the git pull command, which downloads all the content from the repository, including the executable that contains the robot software.

On the operation level it is important for the robot to communicate the status of the software. This is useful for debugging the software, as well as clarifying the behaviour during the competitions. This can be made possible with the loudspeaker, by recording voice lines that explain what the robot currently senses and what the next step is that it will perform. Not only is this functionally important, but it can also add a human touch to the behaviour of the robot. In case that the PICO robot has been altered to not have loudspeakers, it needs to be determined during testing if the WiFi interface can be utilised in order to print messages in a terminal on a computer that is connected to the robot.

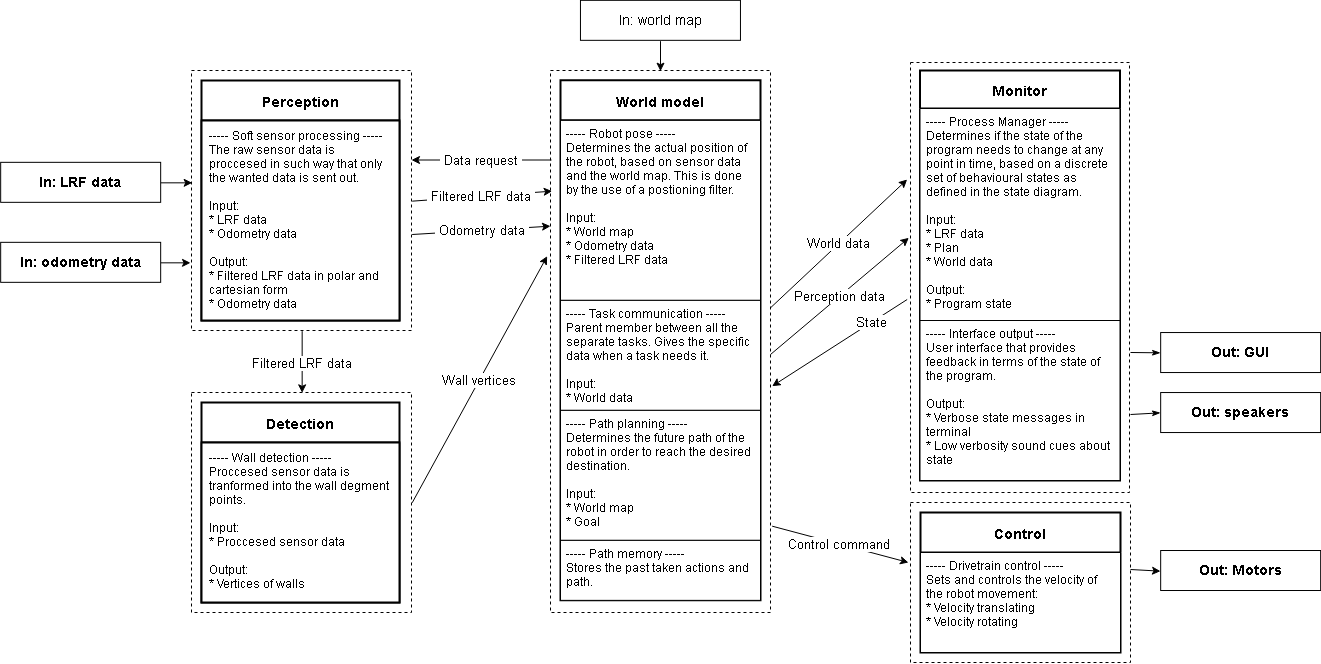

System architecture

Perception block

The purpose of the perception object is to condition the sensor data. This mainly involves filtering invalid points from the LRF measurements, such that these points cannot pollute the information that is fed into the feature detection algorithm. Such invalid points include points that are erroneously measured at the origin of the sensor, probably as a result of dust on the sensor.

Detection

- Collin dijkstra shit (of toch niet hier, maar in WorldModel!!)

Path planning

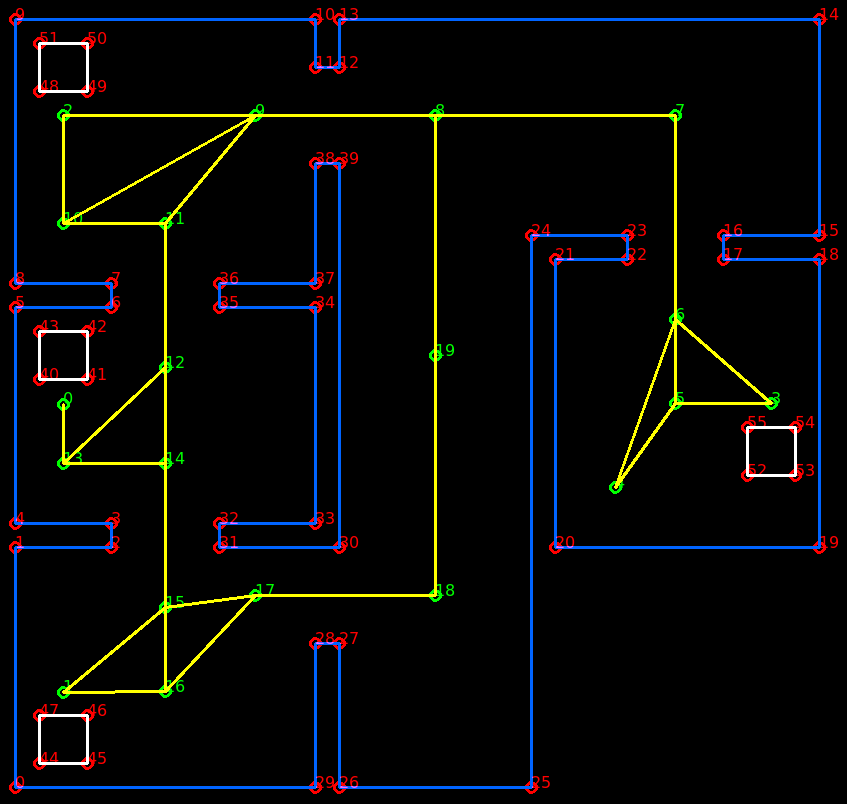

The method of determine the path points is done automatic and by hand. The program will load the Json map file when the program starts. The code will detect where all the cabinets are and what the front is of a cabinet. Each cabinet path point will exactly be placed in the middle of the virtual area that is specified in front of the cabinet. The rest of the path points are put in by hand. A path point has three variables: the x and y coordinates and the direction. The direction only applies when the path point is in front of a cabinet. The orientation that PICO needs to have to be in front of the cabinet is specified within the direction variable. The direction will be subtracted to the real orientation of PICO and afterward be corrected if PICO is not aligned right.

| Point | X | Y |

|---|---|---|

| 0 (cabinet 0) | 0.4 | 3.2 |

| 1 (cabinet 1) | 0.4 | 0.8 |

| 2 (cabinet 2) | 0.4 | 5.6 |

| 3 (cabinet 3) | 6.3 | 3.2 |

| Point | X | Y |

|---|---|---|

| 4 (Start point) | 5.0 | 2.5 |

| 5 | 5.5 | 3.2 |

| 6 | 5.5 | 3.9 |

| 7 | 5.5 | 5.6 |

| 8 | 3.5 | 5.6 |

| 9 | 2.0 | 5.6 |

| 10 | 0.4 | 4.7 |

| 11 | 1.25 | 4.7 |

| 12 | 1.25 | 3.5 |

| 13 | 0.4 | 2.7 |

| 14 | 1.25 | 2.7 |

| 15 | 1.25 | 1.5 |

| 16 | 1.25 | 0.8 |

| 17 | 2.0 | 1.6 |

| 18 | 3.5 | 1.6 |

| 19 | 3.5 | 3.6 |

| Path | Length |

|---|---|

| 4->5 | 0.86 |

| 4->6 | 1.49 |

| 5->3 | 0.8 |

| 5->6 | 0.7 |

| 3->6 | 1.06 |

| 6->7 | 1.7 |

| 7->8 | 2.0 |

| 8->9 | 1.5 |

| 9->2 | 1.6 |

| 9->10 | 1.84 |

| 9->11 | 1.17 |

| 2->10 | 0.9 |

| 10->11 | 0.85 |

| 11->12 | 1.2 |

| Path | Length |

|---|---|

| 12->13 | 1.17 |

| 12->14 | 0.8 |

| 13->0 | 0.5 |

| 13->14 | 0.85 |

| 14->15 | 1.2 |

| 15->1 | 1.1 |

| 15->16 | 0.7 |

| 15->17 | 0.76 |

| 1->16 | 0.85 |

| 16->17 | 1.1 |

| 17->18 | 1.5 |

| 18->19 | 2.0 |

| 19->8 | 2.0 |

Wall finding algorithm

To allow PICO to navigate safely, he must know where he is in the world map and what is around him. PICO is equipped with a LIDAR scanner that scans the environment with the help of laser beams. This data is then processed to be able to determine where all walls and objects are. There are many ways in which you can process the data into useful information. A commonly used algorithm is the split and merge algorithm with the RANSAC algorithm as an extension. These methods are also used within this project. In the case of this design, we do the following processing steps:

- Filtering measurement data

- Recognizing and splitting global segments (recognizing multiple walls or objects)

- Apply the split algorithm per segment

- Determine end points of segment

- Determine the linear line between these end points (by = ax + c)

- For each data point between these end points, determine the distance perpendicular to the line (d = abs(a*x+b*y+c)/sqrt(a^2+b^2))

- Compare the point with the longest distance with the distance limit value

- If our value falls below the limit value then there are no more segments (parts) in the global segment.

- If the value falls above the limit value, the segment is split at this point and steps 3.1 to 3.4 are performed again for the left and right parts of this point.

- Lines are fitted from the segment points using the RANSAC algorithm.

Below is a visual representation of the split principle. The original image is used from the EMC course of 2017 group 10 [1]:

As mentioned earlier, each segment is fitted to a line using the RANSAC algorithm. RANSAC (RANdom SAmple Consensus) iterates over various random selections of two points and determines the distance of every other point to the line that is constructed by these two points. If the distance of a point falls within a threshold distance, this point is considered an inlier. The distance d of this inlier to the line is then compared to the threshold value t to determine how well it fits the current line iteration. This is described by the score for this point, which is calculated as (t - d)/t. The sum of all scores for one line iteration is then divided by the number of points in the segment that is being evaluated. This value is the final score of the current line iteration. By iterating over various random lines among the points in the segment, the line with the highest score can be selected as being the best fit. The image below demonstrates the basic principle of an unweighted RANSAC implementation, where only the number of inliers accounts for the score of each line.

The reason that the RANSAC algorithm was selected for fitting the lines in the segments over linear fitting methods, such as least squares, is robustness. During initial testing it became clear that when the laser rangefinder scans across a dead angle, it would detect points in this area that are not actually on the map. These points should not be taken into account when fitting the line. As visualised above, these outliers are not taken into account by RANSAC. If a linear fitting algorithm such as least squares were to be used, these outliers would skew the actual line, resulting in inaccurate line detection.

A final line correction needs to be done because the RANSAC function implementation only returns start and endpoints somewhere between the found vertices. The lines need to be fitted so that the corners and endpoints align to the real wall lines. This is simply done by determining the lines between the points and then equate the lines to each other. The final endpoints are determined by equating the point on the line where the found vertices are perpendicular to this line.

Monitor block

- Collin

The monitor object, as the name implies, monitors the execution of the program. In this object, the state machine is being run. On every tick, it is checked whether the state has fulfilled its exit TODO

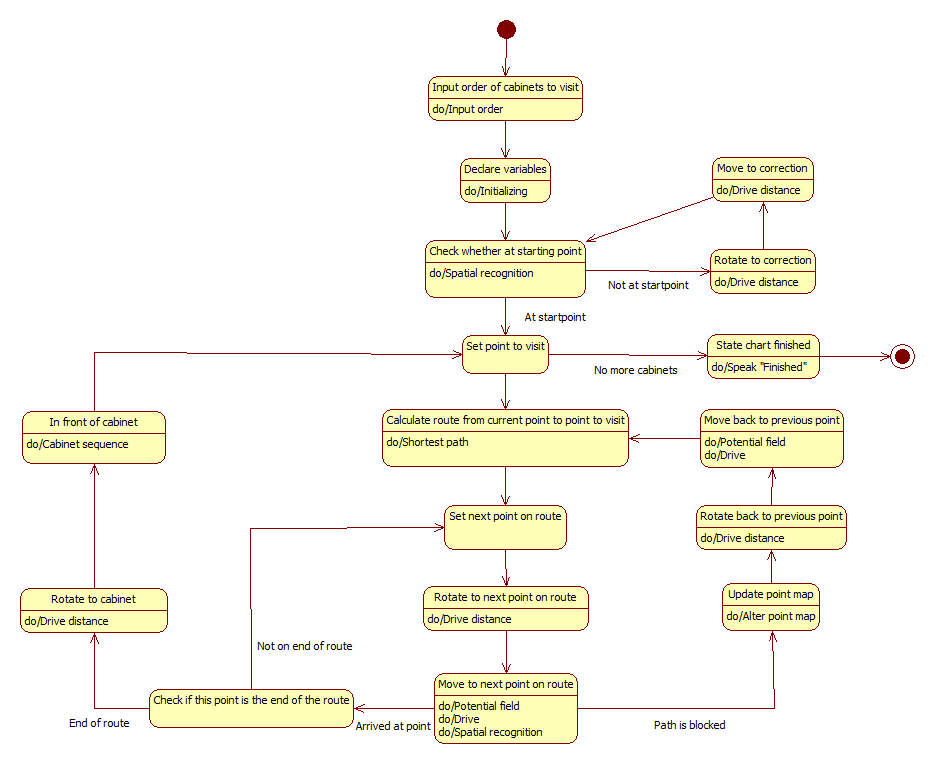

The figure below shows the state machine for this challenge. The state chart will be a part of the "World model block" form the system architecture. This diagram will be used as basis for the software written for the final challenge.

Per state, the functions which need to be performed are stated. These exclude functions, such as tracking the position of the robot on the map, which will always run in a separate thread. The state chart is designed such that all the requirements of the final challenge will be fulfilled.

World model block

The world model is the central object in the software architecture. The purpose of the world model is to act on changes in the positioning of PICO, such as sensing where on the map PICO is, determining to which pathpoints to drive, and in which direction to drive in order to reach a pathpoint. An additional responsibility of the world model is to visualise the conditioned LRF data and the pathpoint to which PICO is driving.

Position estimation

- Kevin: positiebepaling

Pathpoint route calculation

- Collin: Dijkstra algoritme

Target-based driving

Now that both absolute positions of PICO and the next pathpoint are known on the map, the robot needs to know in which direction to drive. That means that the location of the pathpoint that PICO should drive to, needs to be transformed to the coordinate space of PICO. This way it can be figured out in which relative direction PICO should drive and how much PICO should rotate before driving.

The first step is to determine the difference vector ![]() between the position of the pathpoint

between the position of the pathpoint ![]() and the position of PICO

and the position of PICO ![]() . This is done by a simple subtraction:

. This is done by a simple subtraction:

Then the coordinate space matrix of PICO ![]() needs to be determined in order to create the transition matrix. This is done by using the absolute rotation of PICO

needs to be determined in order to create the transition matrix. This is done by using the absolute rotation of PICO ![]() in order to calculate the vector components of the x- and y-axes of the PICO coordinate space.

in order to calculate the vector components of the x- and y-axes of the PICO coordinate space.

Then the relative position vector of the pathpoint in PICO's coordinate space ![]() can be calculated by multiplying the inverse of the PICO space matrix with the difference vector:

can be calculated by multiplying the inverse of the PICO space matrix with the difference vector:

Visualisation

- Mike: hier nog een stukje schrijven over het visualiseren van de laserdata, PICO zelf en zijn beoogde doel

Control block

The control block contains the actuator control, called Drivecontrol. This block provides output to the actuators based on inputs from the Worldmodel.

Drivecontrol

The actuators are controlled such that the movement of the robot is fluent. This is achieved via implementing an S-curve for any velocity change. The S-curve implementation was chosen to limit Jerk in the robot's movement and thus preventing slip. The reduction of slip in the motion of PICO increases the accuracy of its movement on top of the fluency. The S-curve is implemented in two different functions; the function 'Drive' accelerates and decelerates smoothly to a certain speed or rotation in any direction. The second function 'Drive distance' accurately accelerates and decelerates over a fixed distance or rotation. General information on S-curves can be found via the link under Useful Information.

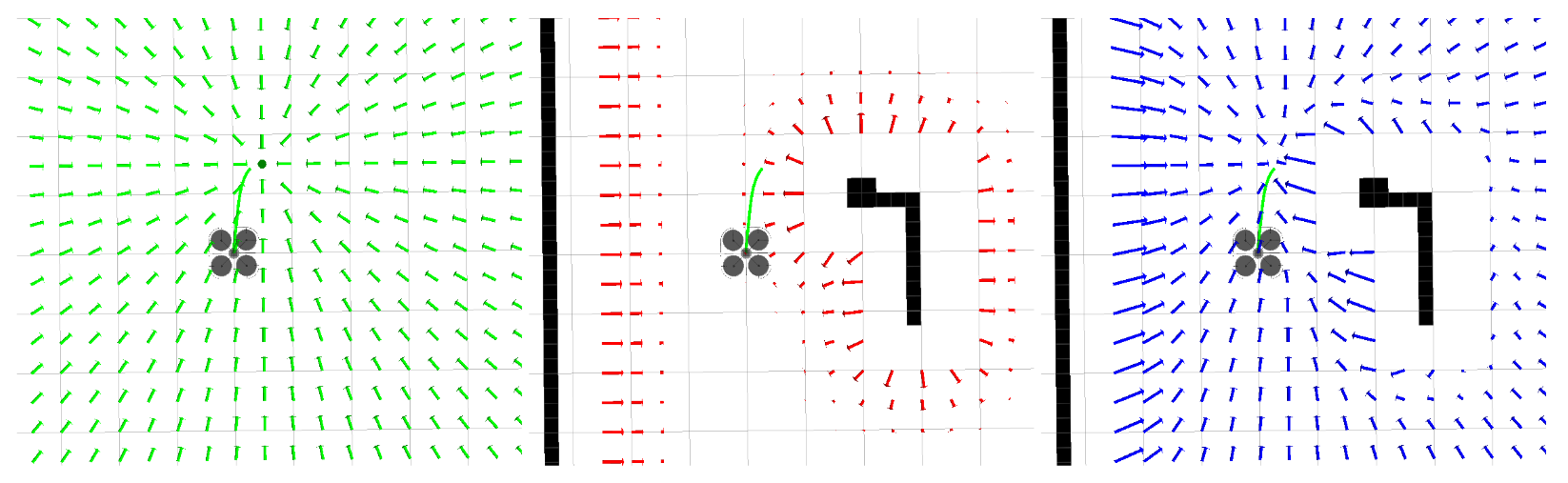

Drive has been further incorporated in a function that uses a potential field. This function prevents the robot from bumping into objects in a fluent manner. See the figure below for a visual representation of an example potential field. The leftmost image shows the attraction field to the goal, the middle image shows the repulsion from obstacles and the rightmost image shows the combination of the two. Any wall or object is taken into account for this function.

Image obtained from: [[2]]

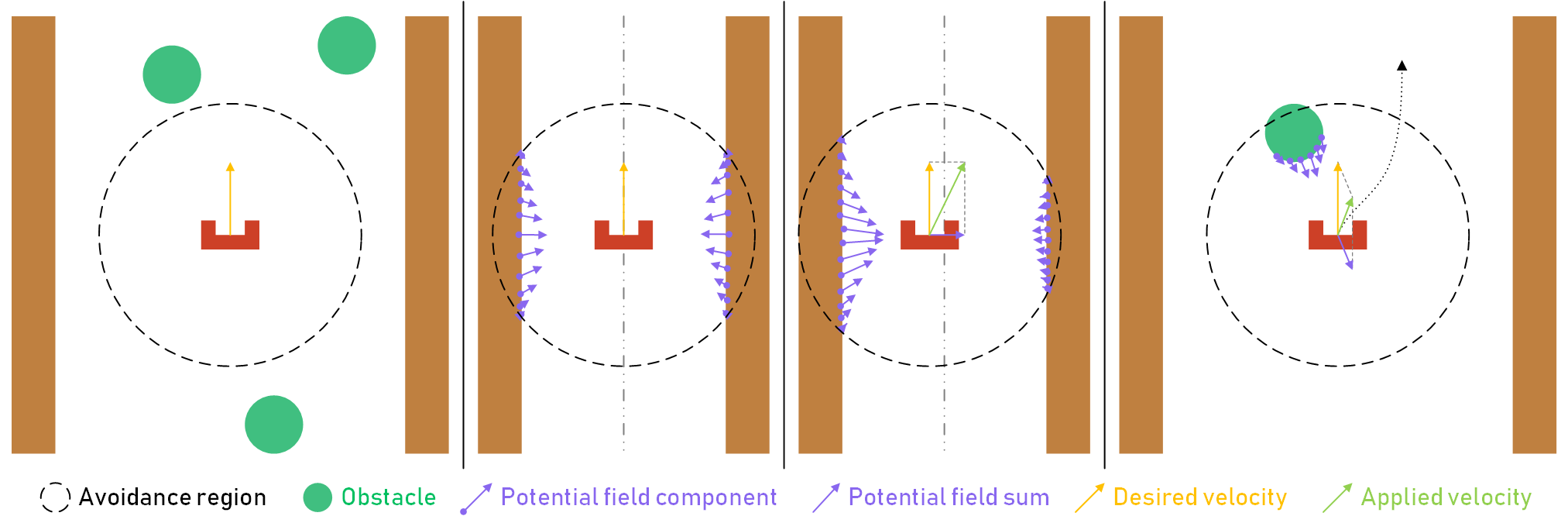

However, the implementation used for PICO does not use an attraction field, only repulsion from obstacles. The potential field vector is calculated in real-time, as the robot is expected to run into dynamic obstacles in the final challenge. This also takes the imperfections in the physical environment into account. The way the potential field is obtained is visualised in the figure below.

The first image shows how the robot is far away enough from any walls or obstacles, and thus the potential field vector is zero, causing the robot to keep its (straight) trajectory. In the second image, the robot is driving through a narrow corridor. As a result of the symmetry of the environment, the potential field component vectors cancel each other out, causing the potential field sum vector to be zero. Once again, the robot keeps its trajectory. In the third image however, the robot is closer to the left wall, causing the left potential field component vectors to outweigh the right ones. As such, the potential field sum vector points to the right, causing the robot to drive towards the middle of the corridor, until the sum vector reaches its steady state value when the robot is in the middle again. The fourth image depicts a situation where an obstacle, such a random box or a walking person, enters the avoidance region around the robot. Once again, the potential field sum vector points away from the obstacle, causing the robot to drive around the obstacle as depicted by the dotted line.

Although the potential field prevents collision with obstacles, it also pushes PICO off course. To make sure that PICO still reaches its goal, an orientation correction was implemented. This function uses the odometry data to calculate PICO's orientation relative to its current goal. If this orientation differs from the desired orientation, namely that PICO looks directly at its goal, the difference in angle is corrected. Although the odometry data is not very accurate, it is sufficient for this purpose, since PICO navigates from point to point and resets its odometry data when it reaches the next point.

Testing

This chapter describes the most important tests and test results during this project.

Test Goals

Several tests were executed during the course of the project, each with a different goal. The most important goals have been summarised below:

- Test the laser range finder and the encoders.

- Determine the static friction in the actuators for the x- and y-direction, and the rotation.

- Collect laserdata for the spatial recognition functions.

- Test the Drivecontrol functionality, consisting of the S-curve implementation and the potential field.

- Test the full system on the example map.

Results

The results from each tests are described in separate parts.

Laser Range Finder & Encoders

The range of the laser range finder according to the simulation is 10cm to 10m, the angle is +114.6 to -114.6 degrees as measured from the front of the robot. This angle is measured in 1000 parts, per an amount of time that can be determined by the user. However, the actual range is much larger. During the test, laserdata appeared within the 10cm radius of the robot. This data produced false positives and had to be filtered out. The maximum range of the range finder was actually larger than 10 meters, but this does not limit the functionality of the robot. The other properties of the laser range finder were accurate.

The values supplied by the encoders are automatically converted to distance in the x- and y-direction and a rotation a in radians. Due to the three wheel base orientation of the actuators, the x- and y-direction are less accurate than the rotation.

Static Friction

The actuators have a significant amount of static friction. The exact amount of friction in both translational and the rotational direction was difficult to determine. An attempt was made by slightly increasing the input velocity for a certain direction until the robot began to move. This differed for each test, so the average was taken as a final value for the Drivecontrol. It is important to note that the friction was significantly less for the rotational direction than the translational directions. The y-direction had the most friction and also had the urge to rotate instead of moving in a straight line.

Laserdata

Due to the limited testing moments, some laserdata was recorded in different situations. This data could be used to test the spatial recognition functions outside the testing moments. Data was recorded in different orientations and also during movement of the robot.

Drivecontrol Test

This test was executed to determine if the smoothness and accuracy of the Drivecontrol functions were sufficient or if the acceleration would have to be reduced. Both the smoothness and the accuracy were satisfactory, especially for the rotational movement.

The potential field was also tested by walking towards the robot when it was driving forward. It successfully evaded the person in all directions while continuously driving forward.

Full System Test

The full system test was executed on the provided example map. However, during this test, no dynamic or static obstacles were present that were not on the map. PICO was able to find the cabinets in the correct order from different starting positions and orientations, but the limited space between the two cabinets provided some difficulties. Since PICO is supposed to return to his previous navigation point if the orientation in front of a cabinet failed, and this point was in between the two cabinets this caused some issues. However, in the Hospital challenge map, no two navigation points were placed as close together as in the example map, which should solve this issue.

Conclusion & Recommendations

- Mike/Kevin

Appendices

This chapter contains some documents that are of minor importance to the project.

Minutes

This document contains the minutes of all meetings: Minutes