Embedded Motion Control 2013 Group 8

Revision as of 15:27, 23 October 2013

Group members

| Name: | Student id: | Email: |

| Robert Berkvens | s106255 | r.j.m.berkvens@student.tue.nl |

| Jorie Teunissen | s102861 | j.a.m.teunissen@student.tue.nl |

| Martin Tetteroo | s081356 | m.tetteroo@student.tue.nl |

| Rob Verhaart | s080654 | r.a.verhaart@student.tue.nl |

Tutor

| Name: | Email: |

| Rob Janssen | r.j.m.janssen@.tue.nl |

Final Strategy

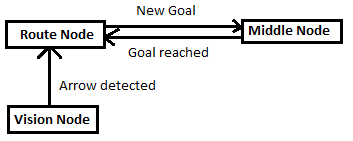

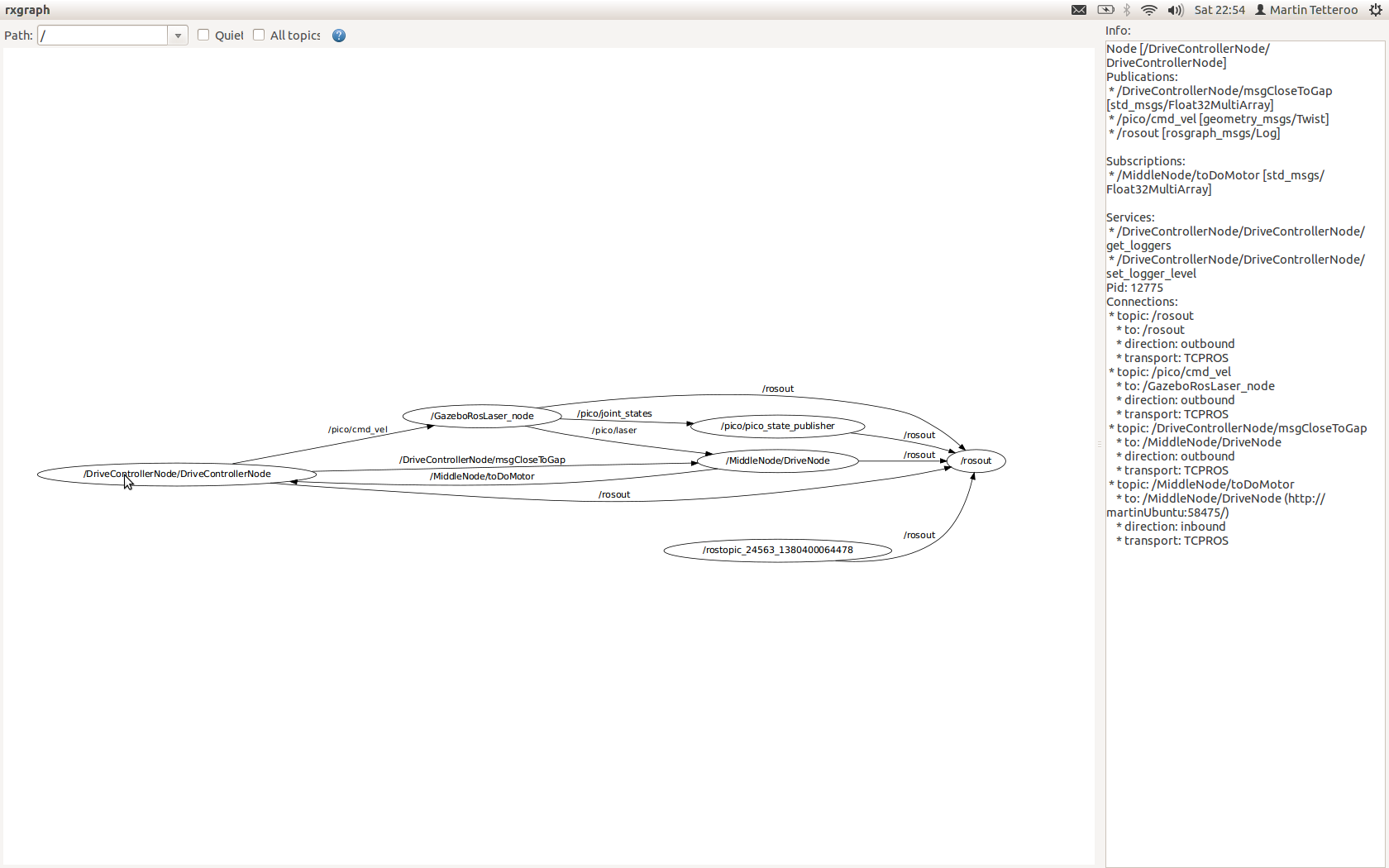

In the implemented strategy we work with three nodes: the routeNode, the visionNode and the middleNode. The communication between them is shown in the picture.

Vision node

The vision node is responsible for detecting arrows on the walls. If an arrow is detected, a message is sent to the routeNode. The visionNode runs at a rate of 1 Hz; processing the images faster is not useful.

Route node

This node keeps track of the route history (the choices made and what the corridors looked like) and calculates new goals depending on the current corridor. To view the corridor, the node listens to the laserdata topic. Every time a goal has been calculated, it is sent to the middle node. When the middle node has reached a goal, it sends a message to the route node, which then calculates a new goal and sends it to the middle node. If the vision node detects an arrow, this overrules the current goal and an update is sent to the middle node. After an arrow has been detected, the vision node is ignored until the next goal has been reached.
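The arrow-override rule described above can be sketched as a small piece of state. This is only an illustration, not the group's actual code: the `Goal` values, the class name `RouteState` and its methods are hypothetical, and only the arrow codes 1 (left) and 2 (right) come from the VisionNode description.

```cpp
// Hypothetical goal codes; the source does not list the real ones.
enum Goal { GOAL_NONE, GOAL_LEFT, GOAL_RIGHT, GOAL_STRAIGHT, GOAL_TURN_AROUND };

class RouteState {
public:
    RouteState() : current_(GOAL_NONE), ignoreVision_(false) {}

    // Goal calculated from the laser data of the current corridor.
    void setPlannedGoal(Goal g) { current_ = g; }

    // Arrow message from the vision node: 1 = left, 2 = right.
    // Returns true if the goal was overruled and an update should be sent
    // to the middle node; returns false if the arrow was ignored.
    bool onArrow(int direction) {
        if (ignoreVision_) return false;
        if (direction != 1 && direction != 2) return false;
        current_ = (direction == 1) ? GOAL_LEFT : GOAL_RIGHT;
        ignoreVision_ = true;  // ignore vision until the next goal is reached
        return true;
    }

    // "Goal reached" message from the middle node.
    void onGoalReached() { ignoreVision_ = false; }

    Goal current() const { return current_; }

private:
    Goal current_;
    bool ignoreVision_;
};
```

Keeping the "ignore vision" flag inside the route state means that a second detection of the same arrow, one second later, cannot overrule the goal twice.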

Middle node

This node is responsible for driving in the middle of the corridor and executing the goals chosen by the route node. To be able to drive through the corridors, this node listens to the laser topic. When a goal has been reached, the node sends a goal-reached message to the route node and waits for another goal.

Algorithms

VisionNode

The VisionNode performs several processing steps on the camera image, before it starts detecting the arrow. The image processing steps are as follows:

- Take 'region of interest' from camera image

- Transform image from rgb (red-green-blue) into hsv (hue-saturation-value)

- Threshold the hsv image, to filter out 'red' pixels

- Blur image by using a 2D filter (close gaps)

- Sharpen image by histogram equalization

The camera image is now processed, such that the contrast is high enough to start arrow detection. The arrow detection performs the following steps:

- Find biggest (area) contour

- Find extreme values (north,south,east,west)

- Determine if (area) contour is arrow

- Determine arrow direction

The VisionNode eventually publishes a '1' for arrow direction LEFT and a '2' for arrow direction RIGHT; this is then handled by the MiddleNode.
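The last detection step, deriving a direction from the four extreme points of the contour, could look roughly like the sketch below. The heuristic is an assumption and not necessarily the rule the group used: it relies on the top-most and bottom-most points of an arrow lying on the arrowhead, so their horizontal position relative to the centre of the contour indicates the direction. The `Point` struct, the function name and the return value 0 for "undecided" are hypothetical; the codes 1 and 2 follow the text above.

```cpp
// Pixel coordinates of a contour point (x grows to the right, y downwards).
struct Point {
    int x;
    int y;
};

// Returns 1 for LEFT and 2 for RIGHT (the values published by the VisionNode).
// Returns 0 when the extremes do not agree; this value is an assumption.
int arrowDirection(const Point& north, const Point& south,
                   const Point& east, const Point& west) {
    // Horizontal centre between the left-most and right-most contour points.
    const double midX = 0.5 * (west.x + east.x);

    const bool bothLeft = north.x < midX && south.x < midX;
    const bool bothRight = north.x > midX && south.x > midX;

    if (bothLeft) return 1;   // arrowhead on the left half: arrow points left
    if (bothRight) return 2;  // arrowhead on the right half: arrow points right
    return 0;                 // ambiguous shape, probably not an arrow
}
```

Returning an explicit "undecided" value lets the caller skip publishing when the biggest red contour does not look like an arrow.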

Route Node

Middle Node

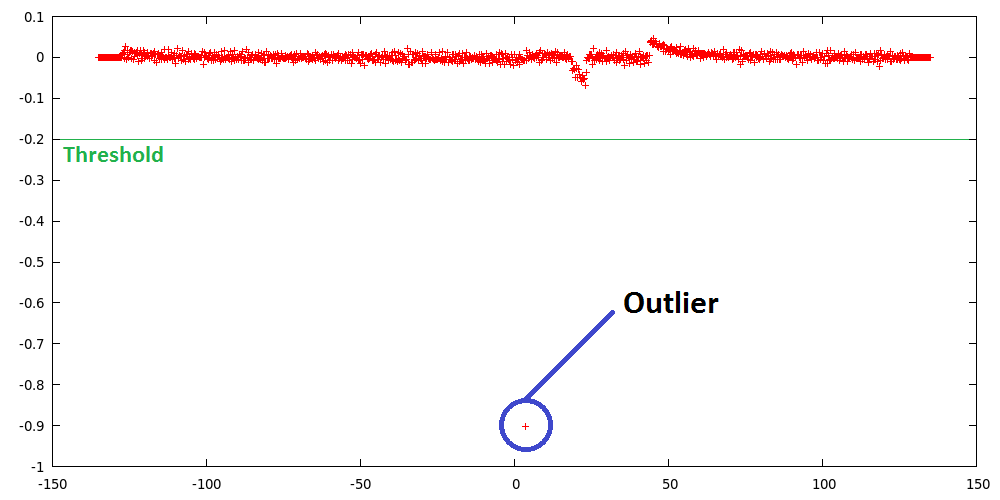

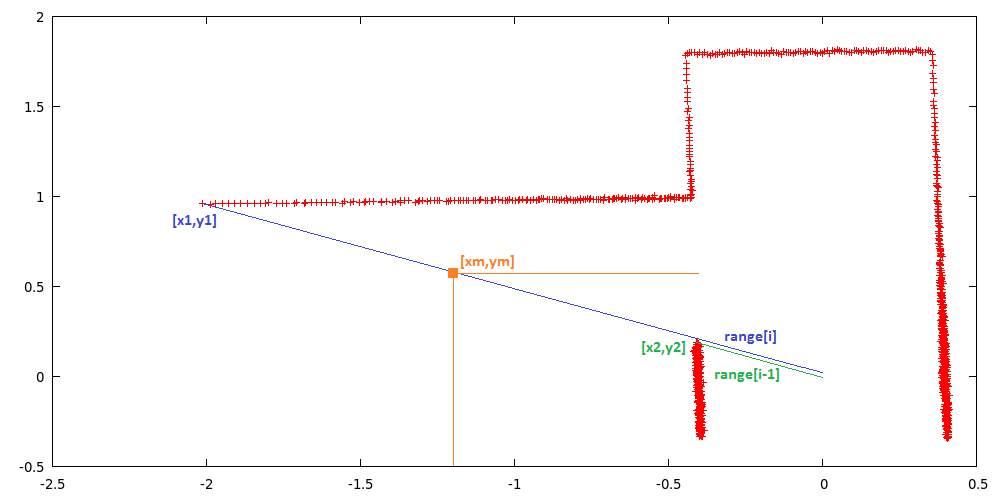

Gapdetection

Input: Laser_vector[1081]. Output: gap location [vector x; vector y; vector i]

A gap is detected by thresholding the derivative of the laser ranges. This derivative is defined as the difference between laser range [i] and laser range [i-1]. If this difference is larger than the threshold, laser ranges [i] and [i-1] are labeled as gap corners. The function then calculates the position of these laser reflection points relative to the robot. The corner closest to the robot is labeled point1 [x1,y1] and the other point2 [x2,y2]. The function also outputs the Euclidean middle of both points [xm,ym]. The second gap point [x2,y2] is determined by scanning the lasers (starting AFTER the gap index) and looking for the minimum value.
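A minimal sketch of this thresholding step is given below. It makes several assumptions: the scan geometry is passed in as `angleMin` and `angleIncrement` (as in a ROS `sensor_msgs/LaserScan`), x points forward and y to the left, and the names `Gap` and `detectGaps` are hypothetical. For brevity the second corner is simply the other beam at the jump; the refinement that scans beyond the gap index for the minimum range is left out.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// One detected gap: index of the range jump, both corners and their midpoint.
struct Gap {
    std::size_t index;
    double x1, y1;  // corner closest to the robot
    double x2, y2;  // other corner
    double xm, ym;  // Euclidean middle of both corners
};

// Marks a gap wherever two neighbouring ranges differ by more than threshold.
std::vector<Gap> detectGaps(const std::vector<double>& ranges,
                            double angleMin, double angleIncrement,
                            double threshold) {
    std::vector<Gap> gaps;
    for (std::size_t i = 1; i < ranges.size(); ++i) {
        if (std::fabs(ranges[i] - ranges[i - 1]) <= threshold) continue;

        // The beam with the shorter range hits the corner closest to the robot.
        const std::size_t iNear = (ranges[i] < ranges[i - 1]) ? i : i - 1;
        const std::size_t iFar = (iNear == i) ? i - 1 : i;
        const double aNear = angleMin + iNear * angleIncrement;
        const double aFar = angleMin + iFar * angleIncrement;

        Gap g;
        g.index = i;
        g.x1 = ranges[iNear] * std::cos(aNear);
        g.y1 = ranges[iNear] * std::sin(aNear);
        g.x2 = ranges[iFar] * std::cos(aFar);
        g.y2 = ranges[iFar] * std::sin(aFar);
        g.xm = 0.5 * (g.x1 + g.x2);
        g.ym = 0.5 * (g.y1 + g.y2);
        gaps.push_back(g);
    }
    return gaps;
}
```

A fixed threshold on the raw range difference is sensitive to noise at large distances, so in practice the threshold has to be tuned on real scans.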

Middle driving functionality

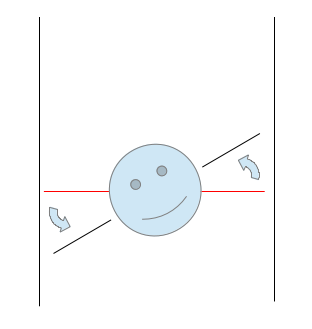

Basics

Pico needs to drive in the middle of the corridor. This is done by first making sure that Pico drives parallel to the wall and secondly keeping the left wall at the same distance as the right one. Driving parallel to the walls is done by searching for the shortest distance to both walls. The laser index of the shortest laser is compared to the index of the laser located at 90 degrees. The difference in index can be used to calculate the angle needed to compensate. Since the laser scan outputs 270 degrees of measurements in 1081 indices, the angle difference for each index is 270/1081 ≈ 0.2498 degrees.

Getting to the middle of the corridor is done simply by checking whether the distance to the left wall is close to the distance to the right wall. If this is not the case, an additional angle is added to the previously calculated parallel driving angle. Pico's input should be in radians, so the angle needs to be converted.

For example: the closest laser on the left side has index 850 and the index for 90 degrees is 900. Then 850-900=-50. The angle is -50*(270/1081) ≈ -12.488 degrees, which is -12.488*(pi/180) ≈ -0.218 radians. Let's say Pico is not in the middle of the corridor, so an additional angle of -0.1 radians is applied: -0.218-0.1=-0.318 radians.

Controller

The calculated angle is used as input to a feedback controller. The controller uses a proportional and a differential gain. These gains were determined experimentally. A proportional-only controller proved too sensitive to walls that are not perfectly aligned. A small differential gain helps to smooth these sudden changes. With this controller applied, Pico drives through the corridor very nicely.

First Strategy (not implemented)

The method described below was the strategy we wanted to implement in the first weeks. While programming and rethinking the whole project, we decided to radically change our strategy. Modules:

- Route calculation node

- Corridor detection

- Relative location in corridor

- map

- route calculation

- motor controller

- Drive node

- Arrow node

- Drive controller node

A diagram of the node communication File:Node communication.pdf

MainNode

The goal of this node is to solve the maze. It keeps track of the previous movements of the robot and calculates the route to solve the maze. The following modules are defined on this node.

Output message to drive_controller: MessageRoute[float DistanceToDrive, int toDoMotor], where toDoMotor is turnRight, turnLeft or driveStraight

Corridor detection

This function detects all the walls. The walls are outputted as line segments in x,y coordinates.

Input: Laser_vector[1081] output: Vector[Lines], with corners[float x_gap; float y_gap; int i_gap; float x_corner; float y_corner; int i_corner]

Relative location

This function calculates the distance from the wall on the left and on the right and the angle of the robot towards these walls.

Input: Laser_vector[1081], Vector[Lines] Output: Location_rel[ float Dist_left; float dist_right, float Theta]

If the shortest distance to a wall is not the laser data directly right or left, pico is not parallel to the wall. The angle can be calculated by taking the difference of the shortest laser angle and 90 degrees.

Map

Using the results of the previous modules, this module updates the current map. The location of the robot and the walls, corners and dead ends are drawn in the map. The origin of the map (0,0) is the starting point of the robot, so the map is always calculated relative to this point. As an improvement, a particle filter might be needed to draw a better map.

Input: Vector[Lines], Location_rel Output: Location_abs[ float x, float y, float theta] Global: Map[vector[Lines], locations_visited[x,y]]

Route Calculation

This module calculates the route to the next goal. If the goal is in a different corridor and multiple small steps are needed to drive to this goal, a reachable setpoint is calculated and the goal is saved.

Input: Location_abs, location_rel, Map Output: Location_goal[float x, float y], Location_setpoint[float x, float y] Global: Location_goal[float x, float y]

Motor Instruction

This module translates the calculated route and the current location into a message to the motor. The motor commands are turnLeft, turnRight and driveStraight. The module outputs a message to the drive controller node.

Input: Location_setpoint[float x, float y], location_rel[float x, float y] Output: float distanceToDrive, int toDoMotor (left, right, driveStraight are defined as integers)

MiddleNode

This node makes sure that Pico keeps driving in the middle of a corridor, so that it does not crash into one of the walls. The node can receive an input message from the Drive Controller Node about the distance to a gap in the walls. If Pico is close to a gap, the function does not try to drive in the middle of the corridor, but parallel to the wall without the gap. The node outputs messages to the Drive Controller Node with information about how to remain in the middle of the corridor. This node updates faster than the Route Calculation Node.

Input messages From Drive controller node: messageCloseToGap[bool closeToGapLeft, bool closeToGapRight] Output messages messageDriveNode[float angle]

Since the standard ROS messages (std_msgs) do not include a boolean array type, it is easier to use std_msgs::Float32MultiArray.
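One way to carry the two flags of messageCloseToGap in a float array is sketched below. The `std::vector<float>` stands in for the `data` field of a `std_msgs::Float32MultiArray`; the struct, the function names, the element order and the 0.5 decision level are assumptions.

```cpp
#include <vector>

// The two flags of messageCloseToGap.
struct CloseToGap {
    bool left;
    bool right;
};

// Encode each flag as 1.0f (true) or 0.0f (false); order: left, right.
std::vector<float> encodeCloseToGap(const CloseToGap& m) {
    std::vector<float> data(2);
    data[0] = m.left ? 1.0f : 0.0f;
    data[1] = m.right ? 1.0f : 0.0f;
    return data;
}

// Decode with a 0.5 level; missing elements are treated as false.
CloseToGap decodeCloseToGap(const std::vector<float>& data) {
    CloseToGap m;
    m.left = data.size() > 0 && data[0] > 0.5f;
    m.right = data.size() > 1 && data[1] > 0.5f;
    return m;
}
```

Sender and receiver must agree on the element order, since the array itself carries no field names.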

Pseudo code

if(middleDrive)

- Drive in the middle of the corridor (if not close to a gap)

elseif(parallelLeft)

- If a gap is on the right side of Pico, start driving parallel to the left wall. The location of a gap is sent by the Drive Controller Node using messageCloseToGap

elseif(parallelRight)

- Same as left, but now the gap is on the left.

elseif(turnLeft)

- If in the middle of a gap, start making the turn to the left

elseif(turnRight)

- Same as above, but now turn right
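The mode selection in the pseudo code could be expressed as a small function like the one below. This is a sketch under assumptions: the `inMiddleOfGap` input and the enum names are hypothetical, the function always takes a gap it is standing in (in the planned design that decision would come from the route calculation), and when gaps are reported on both sides the left one wins.

```cpp
enum DriveMode { MIDDLE_DRIVE, PARALLEL_LEFT, PARALLEL_RIGHT, TURN_LEFT, TURN_RIGHT };

// closeToGapLeft / closeToGapRight correspond to messageCloseToGap.
// inMiddleOfGap is a hypothetical flag: Pico stands in front of the gap.
DriveMode selectMode(bool closeToGapLeft, bool closeToGapRight, bool inMiddleOfGap) {
    if (inMiddleOfGap && closeToGapLeft) return TURN_LEFT;
    if (inMiddleOfGap && closeToGapRight) return TURN_RIGHT;
    if (closeToGapLeft) return PARALLEL_RIGHT;  // gap on the left: follow the right wall
    if (closeToGapRight) return PARALLEL_LEFT;  // gap on the right: follow the left wall
    return MIDDLE_DRIVE;                        // no gap nearby
}
```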

VisionNode

This node is optional; if we have enough time, we will try to implement it. This node uses the camera information to detect arrows. If an arrow with a direction is detected, a message is sent to the drive controller node telling it where to drive to.

Output Messages MessageArrow[bool turnLeft]

DriveControllerNode

This node decides which commands are sent to the motor. This function is on its own node, because it has to listen to both the calculated route and the middle drive. The route calculation is needed less frequently than the middle drive check.

Input messages: MessageRoute[float DistanceToDrive, int toDoMotor], messageDriveNode[int toDoMotor], MessageArrow[bool turnLeft]. Output Messages messageCloseToGap[bool closeToGapLeft, bool closeToGapRight]

Simulation results

Corridor Competition

We did not succeed in the corridor competition. We were given two opportunities to detect a hole and drive through it. In the first attempt, the safety function overruled the state machine that we had built, and the robot got stuck. In the second attempt, the robot didn't turn far enough and Pico was unable to correct its angle, after which it again got stuck in the safety mode.

First Attempt

When the robot was started, the MiddleDrive function worked properly and Pico started off nicely. Whenever the robot drove towards a wall, MiddleDrive corrected for it and made sure that the robot drove straight ahead. However, when the robot came within 0.5 meter of the corner, it seemed to correct in order to drive exactly to the middle of the hole. Since the workspace is rather small and SafeDrive defines a space of 40 cm around every angle of the robot, the robot approached the wall too closely and SafeDrive overruled the normal behavior of the robot. So the robot tried to correct for the hole, but instead drove too close to the wall and got stuck in the SafeDrive mode.
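A safety check of the kind described here can be as simple as the sketch below. It is an assumption about how such a SafeDrive test might work, not the group's code: the function name is hypothetical, the 0.40 m default comes from the 40 cm mentioned above, and filtering of invalid readings is left out.

```cpp
#include <cstddef>
#include <vector>

// Returns true if any laser range is inside the safety radius (meters).
bool safeDriveTriggered(const std::vector<double>& ranges,
                        double safetyRadius = 0.40) {
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        if (ranges[i] < safetyRadius) return true;
    }
    return false;
}
```

Because the check covers every beam, a wall beside the robot triggers it just as easily as a wall in front, which matches the behaviour seen when turning in a narrow corridor.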

Second Attempt

In the second attempt the robot started off nicely again, driving straight ahead. When the robot came within 0.5 meter of the hole on the left, it slowed down. Pico corrected a bit to the right, but stopped nicely in the middle of the hole. However, when the turn was started, it seemed to approach a wall again, so that SafeDrive took over again. We confirmed that the turn was never completed and the robot again got stuck in its safety mode.

Conclusion

Unfortunately, we didn't succeed in the corridor competition, but we learned from our mistakes and know how to approach the problem correctly. Next time we will have to reserve more time with the robot to learn its behavior in practical situations. All in all, we have to make sure that the safety function doesn't overrule the normal behavior of the robot too soon.

Maze Competition

During the maze competition we didn't succeed in solving the maze. The first attempt got stuck at the first crossing, and during the second attempt Pico didn't even move. So basically we have been programming for 7 weeks to be able to move the robot about 1 meter. The good news is that we know why we failed the maze competition, and we have simulations showing that our algorithm should be able to solve mazes.

First attempt

During the first attempt Pico failed because the crossing wasn't seen as a crossing. The crossing was too close by to be detected correctly. If Pico had seen this crossing correctly, it would have turned left first, then turned around (because it saw a dead end), then gone straight and turned again, and finally it would have turned right to further explore the corridor. In reality Pico started by turning right, which indicates that the crossing wasn't detected as a crossing but simply as 2 different gaps that are not in front of each other.

With a relatively simple adjustment to the code, this problem can be solved. To detect whether two gaps are across from each other, the index values of both gaps in the laser array are compared. If the absolute difference of these indexes is less than 25, the gaps are marked as across from each other and the crossing is detected correctly. For crossings far away this value of 25 is indeed a good value, but if the crossing is rather close by (which was the case in the maze competition), 25 seems to be a bit too low. So the problem can be solved by comparing the indexes of gaps close by with a value higher than 25 and comparing the indexes of gaps far away with 25.
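The proposed adjustment can be sketched as a distance-dependent tolerance. Only the value 25 comes from the text; the near tolerance of 50, the 1.0 m boundary between "close by" and "far away", and the function name are assumptions. The sketch also assumes the two gap indexes are expressed so that directly opposite gaps give (nearly) the same value.

```cpp
#include <cstdlib>

// Decides whether two gaps lie across from each other.
// farTolerance = 25 is the value from the text; nearTolerance and
// nearDistance are illustrative assumptions that would need tuning.
bool gapsAcross(int indexA, int indexB, double distanceToCrossing,
                double nearDistance = 1.0,
                int farTolerance = 25, int nearTolerance = 50) {
    const int tolerance =
        (distanceToCrossing < nearDistance) ? nearTolerance : farTolerance;
    return std::abs(indexA - indexB) < tolerance;
}
```

A close crossing spans more laser indexes than a distant one, which is why the tolerance has to grow as the distance shrinks.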

Second attempt

During the second attempt Pico didn't even move and no messages were sent over the ROS topics. This indicates that something went wrong in our start-up phase. During testing a week before the maze competition we had a similar situation, but we thought we had solved this problem.