PRE2017 3 Groep5


The final model was not built completely from scratch. For training, we used convolutional weights that were pre-trained on [http://image-net.org Imagenet] for [https://pjreddie.com/darknet/imagenet/#extraction the Extraction model].

Revision as of 15:54, 30 March 2018

Group members

Bogdans Afonins, 0969985

Andrei Agaronian, 1017525

Veselin Manev, 0939171

Andrei Pintilie, 0980402

Stijn Slot, 0964882

Project

Project definition

Subject

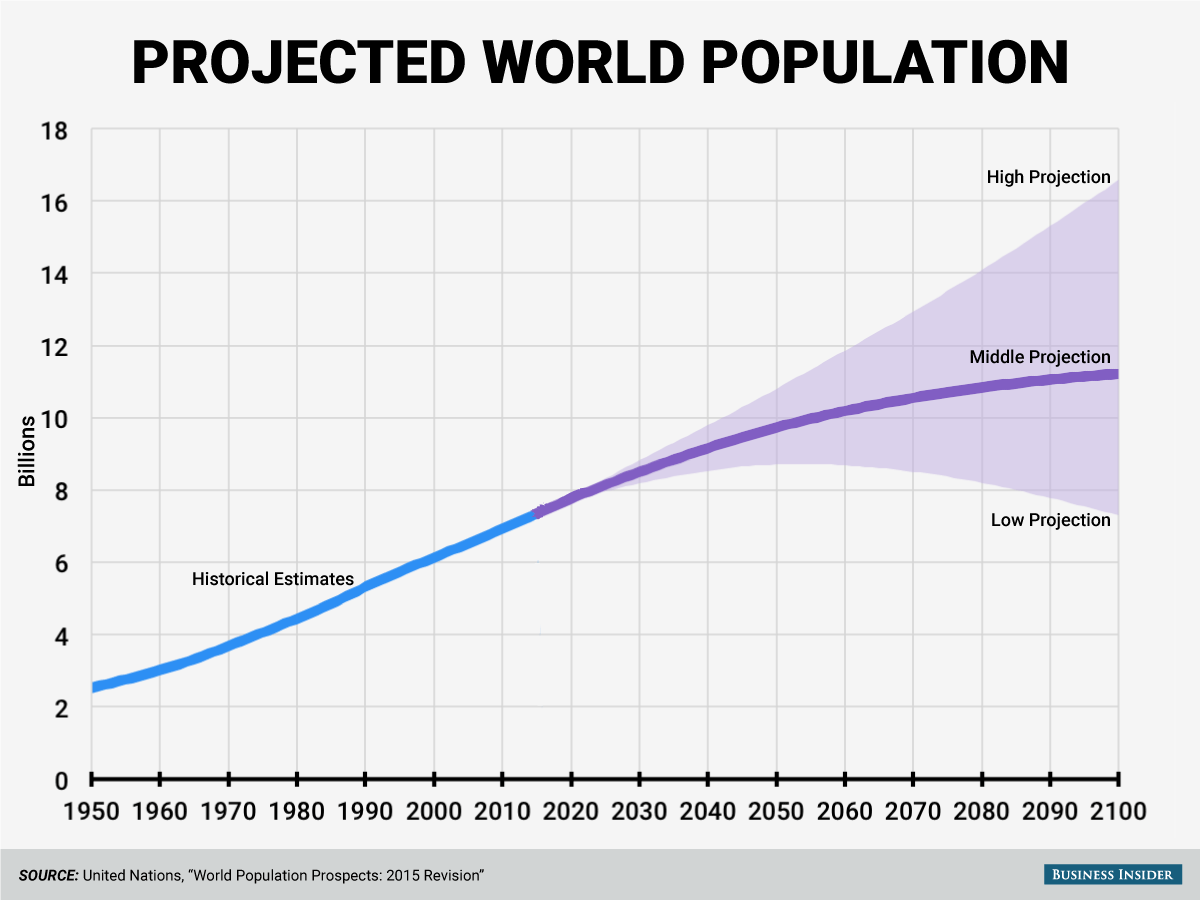

The world population continues to grow and is expected to reach 9.8 billion by 2050 and 11.2 billion by 2100.[1] The increase in population, combined with the development of poorer nations, is projected to double global food intake by 2050.[2] There will be a greater demand for commodities such as eggs, meat, milk, leather and wool. To keep up with increasing demand, farmers need to either keep ever larger numbers of animals or increase their productivity. One way to increase productivity is to automate part of the farming business. Manually tracking individual animals is time-consuming and ineffective. Automatically detecting when an animal is in heat, sick, or otherwise in need of attention can increase an animal's "output" and wellbeing. Monitoring one animal among hundreds of others can prove difficult. However, current advances in technology can be adopted in the farming sector to track animals. Accurately tracking animals' behavior will not only improve their wellbeing but also increase productivity and ease the work of farmers around the world.

Objectives

The objective is to create a design for the automation of the livestock farming sector. More precisely, the goal is to realize a cow tracking system using cameras, which is capable of first distinguishing the individual animals and then determining their behavior and activities during the day. We will explore possible tracking methods, outline a design and create a simulation for tracking cows.

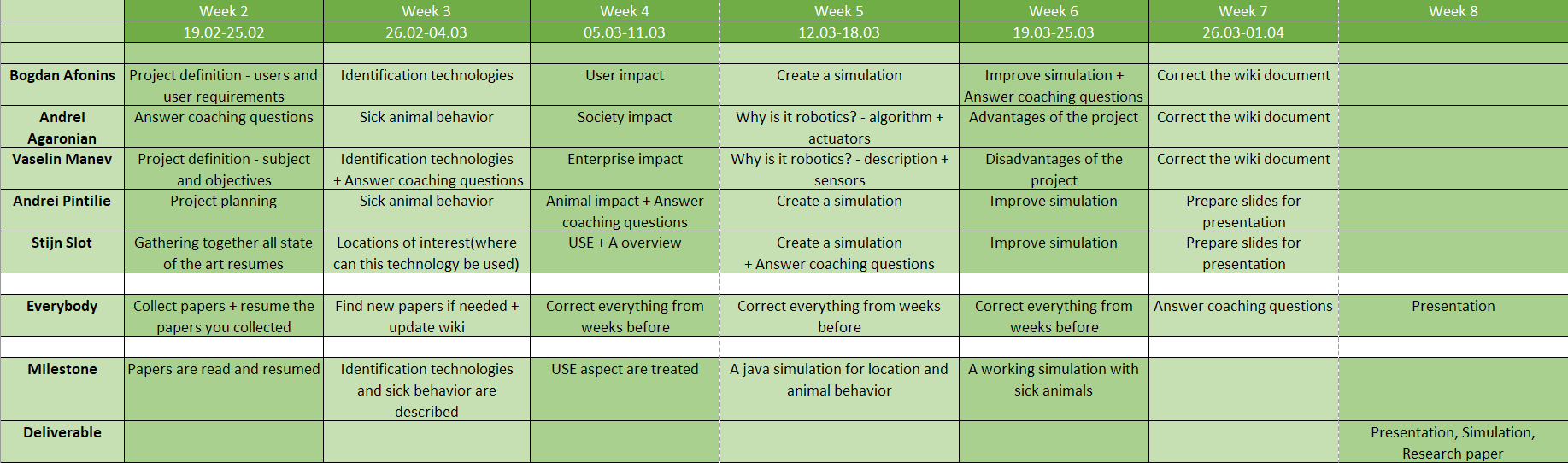

Project Planning

Approach

In order to complete the project within the deadlines, we plan to read and summarize the papers in the first week, followed by establishing the USE aspects and the impact of the technology on the animals. Already in the fifth week, we plan to start working on the prototype: a simulation showing a location (still to be determined) and a few types of animals (chosen according to the study of sick behavior). The user can select an animal, which then becomes sick; the simulation detects this and reports it to the user. During the last weeks, we also have to specify why this is robotics and what advantages and disadvantages are present.

Planning

Milestones

Milestones are shown in the planning picture.

- In the first week, we plan to have summarized the papers and used them to identify patterns in sick animals' behavior and the technologies that can be used to detect these changes.

- In the 3rd week, the milestone is to deliver a full analysis of the USE aspects.

- During the following two weeks, we expect the simulation to work.

- In the last week, a milestone will be preparing and holding the final presentation.

- The last milestone is to prepare all deliverables for handing in.

Deliverables

As deliverables we decided to prepare the following:

- A presentation, which will be held in the last week. During this presentation, all of our work will be presented and a simulation will run.

- A simulation of the subject treated. For example, a barn that holds many animals (about 200-300) of different species (cows, pigs, chickens). The user will be allowed to place the identification technologies around the barn and "make" some of the animals sick. The simulation shall be able to discover which animal is sick by analyzing its behavior, and the user is notified which animal might be sick.

- A research paper on the technology described above, which will take into account the advantages, disadvantages, costs, and impact of such an implementation.

Background

Animal sickness

Diseases in animals can come in many forms. Recent disease epidemics are estimated to have cost billions of dollars and millions of animal lives.[3] The most affected species are cattle and swine. Therefore, there is a large incentive to find and cure these diseases as early as possible. Luckily, there are various clues and methods for detecting when an animal is sick.

A physical exam is most often needed for finding and treating sick animals.[4] The role of this physical exam is to identify and treat diseases, prevent them from spreading, protect the food supply and improve animal welfare. Once a cow is identified as sick (some of the measured vitals are abnormal: heart rate, respiration rate, temperature, rumen contractions), the problem should be determined. Different potential disorders are considered depending on the group of animals. For cows, the stress level is of great importance in determining sickness, especially when a new cow has to adapt to environmental changes. When multiple types of stress accumulate, a breaking point is reached and the immune system starts to fail. This immune suppression makes cows respond poorly to vaccines and more likely to become infected. There are four areas for detecting disease in new cows: temperature, appetite, uterine discharge and hydration status. To determine whether a cow is sick, watch its attitude (lying down in corners, less energy, looking depressed) and its appetite (aggressive eating, not eating), and check its hydration (by checking the skin). Even normal cows must be monitored.

Behavioral clues can be used for identifying illness in animals.[5] There are several examples of behavior related to sickness: hydrophobia indicates rabies, star-gazing might indicate polioencephalomalacia in cattle, and abnormal feeding and drinking is an indicator of general malaise. Behavior changes in response to pain (physical injuries can be spotted), a general feeling of malaise (decreased feeding and reproductive activity, increased rest time to conserve energy) or other diseases. It is essential to understand which behaviors change and why.

Thermography

Thermography is the use of infrared cameras to obtain thermographic images. It can also be used for detecting when an animal is sick. Veterinarian and researcher Mari Vainionpää considers thermal imaging cameras to be a great tool for finding out, for instance, whether an animal is in pain.[6] Changes in organic activity cause changes in the amount of heat that is emitted, and these deviations in the heat pattern can be detected with a thermal imaging camera. Moreover, in Vainionpää's experience, thermal imaging cameras can reveal inflammations, bruises, tendon or muscle related injuries, superficial tumors, nerve damage and blood circulation issues. Thermal imaging is quick and reliable: there is no need to sedate or touch the animal, and using a thermal imaging camera does not expose the animal to potentially harmful radiation. The animal's paws and teeth can also be viewed thermally to gain additional data for more precise results.

Eye temperature measured using thermography is also a non-invasive tool for evaluating the stress response in cattle to surgical procedures.[7] Moreover, a rapid drop in eye temperature is likely a sympathetically mediated response via vasoconstriction that can be used to detect fear and/or pain related responses in animals to different handling procedures. Thermography may be used in the future as an objective outcome-based measure for the evaluation and assessment of animal welfare.

Visual tests on cows

To detect possible sickness in cows, visual tests can be used as a general indicator. These can be performed either by a veterinarian or by using cameras. The tests can be divided into four categories: injuries, skin related problems, behavior related problems and feeding problems.

Injuries are the easiest to identify and report, since most are immediately visible. They can be seen either directly on camera or through their effect on the movement of the cow. An important examination is the one of the feet and legs of animals, which is intended to spot injuries.[4] Even though it might be difficult to use cameras to identify problems low on the legs, the cow's movement can betray such a problem without the injury itself being seen by the camera. Foot problems deserve particular emphasis, since they can reveal real underlying problems.

Skin problems are most often present during the winter season, the most common being warts and ringworm. These two problems appear especially in young cattle, until they have built up immunity. If they occur in older cattle, an immune deficiency is suspected. Besides these diseases, unusual spots, dry skin, changes in shape and other signs can also point to problems.

Behavior is a good indicator of problems. Behavior related problems are easy to spot, most of them being related to attitude, appetite and movement.[4] The attitude of a cow can be stressed, alert, depressed or otherwise, and each of these changes the normal flow of things. A cow that is not in the right mood might refuse to eat and isolate itself from the others. The feeding routine of the cow is also important for identifying underfed or obese animals.

All of these problems are related to each other, and one can lead to another, ultimately exposing the cow to sickness. Any of these factors can be the sign of a disease and requires a detailed clinical examination once it is found.

Sickness predictors

Injuries

A not uncommon cause of injury in cattle is the penetration of foreign objects into the sole of the foot.[8] This leaves a wound in the foot of the animal, which provides a good environment for bacteria. Another injury that can cause a great deal of pain is damage to, or even loss of part of, the hoof wall. The most common hoof defects are cracks, which can be either vertical or horizontal. Horizontal cracks are related to stress or disruption in the animal's health, while vertical ones can be caused by environmental conditions or by the weight of the animal. Most of these problems are not easy to spot, but, as described in the visual tests above, they often affect the movement of the animal.

It is also possible that the animal will not show signs of pain, so visual identification might be a problem.[6] Thermal imaging cameras could be considered by users who want to invest more in monitoring their herd in order to detect all these problems. Since they do not involve touching the animal, thermal imaging cameras can provide an interesting solution. They are an effective way to increase the range of signs found, but also more expensive.

Skin problems

Infections in cattle are often visible at the skin level.[9] Two of the most common are warts and ringworm spots.

Warts are caused by a virus, and there are at least 12 different papillomaviruses that cause them. They often show up where the skin has been broken (for example in the ears after tagging). They can even appear from a scratch, but fortunately most of them disappear within a few months. The virus can be spread from animal to animal by simple touch, or by cows rubbing against the same fence. Also, if a new cow with warts is brought into the herd, it may infect the animals that have not developed immunity to this virus. There are vaccines against this virus, and warts can be easily removed if detected in time.

Ringworm is a fungal skin disease that usually appears in calves during winter and disappears in spring. The problem with this fungus is that it can also spread to other species, such as humans, and the variety of fungus types is immense, so it is unlikely that any animal has immunity against all of them. The disease can be spread by direct contact, or by spores carried on equipment that was used on an infected cow. That is why the same paper recommends disinfecting the utensils. Another important aspect is that "Spores may survive in the environment for years. Young cattle may develop ringworm in the fall and winter even if there weren't animals in the herd with ringworm during summer;", which makes treating ringworm frustrating. There are medications, but they are expensive to use on large animals, so prevention is the best solution.

There are also other skin viruses and diseases that can be identified by visual inspection.[4] Most of them show up as spots or changes in the shape or color of the skin, or as immediately visible symptoms such as nasal discharge and coughing.

Behavior and feeding

According to Dr. Ruth Wonfor, understanding dairy cow behavior makes it possible to detect some illnesses even before there are any visible symptoms or clinical signs.[10] Illness clearly affects animal behavior, as it does in humans. Typical behavioral changes are self-isolation and loss of appetite, and there are even more aspects to track, including social exploration. For example, lame cows spend more time lying down, change their weight distribution and walk much slower than healthy animals. Dairy cows with mastitis idle more and spend less time lying down, eating, ruminating and grooming. As chronic illnesses appear to cause more behavioral changes, monitoring these changes can help distinguish whether an animal has an acute or a chronic illness.

Furthermore, research has been carried out on a number of clinical production diseases to find out whether behavioral indicators can act as early disease indicators. It is worth mentioning that in most of the cases examined, feeding behavior so far seems to be a relatively reliable predictor of disease onset. Cows with acute lameness showed a reduction in time spent at the feeder of around 19 minutes per day in the week before lameness was observed, along with a reduction in the number of visits to the feeder. Cows that develop metritis 7-9 days after calving spend less time at the feed bunk even before they have calved. For animals with ketosis, feeding and activity behavior changes, with a reduction in feed intake in the 3 days before diagnosis and a 20% increase in standing time in the week before calving.
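As a minimal sketch of how the feeder-time signal described above could be turned into an automatic check, the Python function below compares a cow's average daily time at the feeder over the most recent week with the week before it. The function name, the 7-day windows and the 15-minute alert threshold are assumptions for illustration; they are not taken from the cited research, which only reports the roughly 19-minute daily reduction.

```python
from statistics import mean


def feeder_time_drop(daily_minutes, baseline_days=7, window_days=7, threshold_min=15.0):
    """Compare the last `window_days` of feeder time with the `baseline_days` before them.

    daily_minutes: minutes per day spent at the feeder, oldest first.
    Returns (drop_in_minutes_per_day, flagged).
    """
    needed = baseline_days + window_days
    if len(daily_minutes) < needed:
        raise ValueError(f"need at least {needed} days of data")
    baseline = daily_minutes[-needed:-window_days]
    recent = daily_minutes[-window_days:]
    drop = mean(baseline) - mean(recent)
    # threshold_min is a hypothetical alert level, not a clinically validated value
    return drop, drop >= threshold_min


# Example: a cow that used to spend about 200 min/day at the feeder drops to 181 min/day
history = [200] * 7 + [181] * 7
drop, flagged = feeder_time_drop(history)
print(drop, flagged)
```

Comparing each cow with her own recent history, rather than with a herd-wide norm, avoids flagging animals that simply eat faster or slower than average.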

Moreover, research by Thompson Rivers University demonstrated that eye temperature was more effective at detecting bovine viral diarrhea, as changes occurred as early as one day, compared to 5-6 days for other areas such as the nose, ears, body and hooves, which are commonly used as clinical signs.[7] As the authors suggest, infrared values, which can be obtained with a thermal camera, for example, were as effective as or even more effective than clinical scores. However, eye temperature is not the only thing that matters: sunken eyes in combination with droopy ears are a sign that something is wrong. Coming back to appetite as a factor, panting and excessive salivation are also known signs that the cow is not feeling well.

Pain in cows

Finding out whether an animal is in pain can sometimes be a real challenge for a vet. Most animals will try to hide their weakness and will only show that they are in pain when the pain has become unbearable. A common method to determine whether an animal is in pain is therefore to touch the animal in the area where pain is suspected and closely monitor its response. This is, however, not always reliable, since the animal might be very determined not to show signs of pain. Another consideration is that owners often do not like the veterinarian causing their beloved animal more pain. Thermal imaging cameras can provide an interesting solution to this problem, since they do not involve touching the animal and can show anomalies in the thermal pattern.

Animal heat detection

For a farmer, it is very important to detect when an animal is in heat and ready to be (artificially) inseminated. Missing this window is a lost opportunity and decreases fertility and productivity. Today, most farmers rely on manually inspecting their animals' behavior to see when they are in estrus. However, on average about half of all heats go undetected in the United States, and roughly 15% of the cows that are inseminated are estimated not to be in heat at all.[11] Using a monitoring system to detect heat automatically can not only improve accuracy, and therefore productivity, but also free up a significant amount of the farmer's time.

Heat in cattle

Cattle, like other mammals, have a distinct period in which they ovulate and can become pregnant. This period recurs roughly every three weeks and lasts on average about 15 to 18 hours, but may vary from 8 to 30 hours.[11] Most animals show distinct behavior when in heat, and cows are no exception. There are several ways to detect heat, for example based on hormones or behavior. However, for this project we are mostly interested in what can be detected with a camera, that is, behavior. The main indicator of heat in cattle is a cow standing to be mounted.[11] If a cow quickly moves away when being mounted, she is not in heat. Other behavioral indicators are mounting other cows, restlessness, chin resting and decreased feeding.
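The main camera-detectable indicator above, a cow standing to be mounted, can be expressed as a simple rule once a vision system has produced mount events. The sketch below assumes a hypothetical `MountEvent` record holding the mounted cow's ID and how long she stood still; the 3-second cut-off separating "standing" from "moving away quickly" is a placeholder, not a value from the source.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MountEvent:
    mounted_cow: str         # ID of the cow that was mounted
    standing_seconds: float  # how long she stood still before moving away


def cows_in_standing_heat(events, min_standing_s=3.0, min_events=1):
    """Return IDs of cows that stood to be mounted at least `min_events` times.

    A cow that moves away quickly (standing_seconds < min_standing_s) is treated
    as not in heat. Both thresholds are hypothetical tuning parameters.
    """
    standing = Counter(e.mounted_cow for e in events if e.standing_seconds >= min_standing_s)
    return {cow for cow, n in standing.items() if n >= min_events}


events = [MountEvent("cow12", 5.0), MountEvent("cow31", 0.8), MountEvent("cow12", 4.2)]
print(cows_in_standing_heat(events))
```

Requiring more than one standing event (`min_events`) would trade some sensitivity for fewer false alarms caused by misdetected mounts.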

Animal detection

For farmers, it can be very useful to track animal locations and detect when an animal does a certain action. At the moment, ear tags are mostly used for identification of animals, but with modern technology, animals can be more closely tracked. For example, identifying and tracking animals can show an animal's history and detecting animals at the food station can give insights into its food intake.

Greene describes the effort of the U.S. Department of Agriculture (USDA) to trace farm animals by identifying them, so that it can react rapidly to diseases as soon as they occur.[3] Identification is done using a national animal ID, which allows users to track previous ownership, prevent theft and check all details about an animal. The U.S. National Animal Identification System (NAIS) sets standards and rules for animal health, living conditions and the trade of animals. NAIS and USDA work together to establish a new approach to animal disease traceability. The main objectives of this project are to identify animals and to track their food habits, their origins and their owners. Advantages include disease eradication, minimizing economic impact, increasing marketing opportunities, providing a tool for producers and addressing national safety regulations. However, there are also disadvantages, such as invasion of privacy (collection of personal data), increased costs for farmers (to install the systems) and market domination by large retailers.

RFID

Radio Frequency Identification (RFID) tags can store and transmit data through electromagnetic transmission. RFID readers can detect RFID tags within a certain range. A combination of RFID tags and readers can be used for detecting moving objects such as animals.

RFID tags can be used for tracking large numbers of moving objects.[12] The idea is as follows: each entity that is to be tracked is equipped with a basic RFID tag that can receive queries from, and respond to, so-called readers. The readers are static and positioned all around the area. Every reader has a certain operating range, so it can only communicate with or detect entities within that range, for example 5 or 10 meters. The readers pass the presence information of a tag to a central server, which stores this information. The central server is responsible for gathering and processing the data, for example by approximating the path a tag took or by keeping track of the number of times a certain tag appeared at a certain location.
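The central-server role described above can be sketched in a few lines of Python. This is a hypothetical illustration, not the system from the cited paper: it assumes readers report `(timestamp, reader_id, tag_id)` tuples and that each static reader covers one named area. Consecutive reports from the same area are merged into one visit, which gives both an approximate path per tag and a per-location visit count.

```python
from collections import Counter, defaultdict


def build_paths(detections, reader_location):
    """Turn raw reader reports into an approximate path and visit counts per tag.

    detections: iterable of (timestamp, reader_id, tag_id) tuples.
    reader_location: maps each static reader to the area it covers.
    """
    paths = defaultdict(list)
    visits = defaultdict(Counter)
    for _, reader_id, tag_id in sorted(detections):  # process in time order
        location = reader_location[reader_id]
        path = paths[tag_id]
        # Repeated reports from the same area count as one continuing visit
        if not path or path[-1] != location:
            path.append(location)
            visits[tag_id][location] += 1
    return dict(paths), {tag: dict(c) for tag, c in visits.items()}


detections = [(0, "r1", "cow7"), (5, "r1", "cow7"), (10, "r2", "cow7"),
              (20, "r1", "cow7"), (3, "r2", "cow9")]
reader_location = {"r1": "feeder", "r2": "water"}
paths, visits = build_paths(detections, reader_location)
print(paths)
print(visits)
```

Because readers only report presence within their range, the resulting path is a sequence of covered areas rather than exact positions.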

In a US patent by Huisma, RFID tags are used for detecting animal visits to feeding stations.[13] Animals are equipped with an RFID tag that can be read in close proximity to the feeding stations. The detected visits are combined with weighing devices in the feeding troughs, which measure the difference in weight before and after a consumption event using a mathematically weighted filter technique. The reduction in food is divided between the RFID tag last seen and the next one. By detecting animals at the food stations with RFID tags, the food intake of each animal can be recorded. This information can be used to find animals with abnormal feeding behavior. The patent mentions the obstacles of this method, namely inaccurate RFID readings (a delay of a few seconds, readings by other stations) and inaccurate food reduction measurements (wind, rodents, inaccurate division).
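The patent's idea of dividing a trough's weight loss over the tags seen at the station can be illustrated with a deliberately simplified sketch. Instead of the mathematically weighted filter the patent describes, it splits each weight drop between the tag last seen before the drop ended and the next tag seen, using a fixed, hypothetical `share_last` fraction; weight increases (refills) are ignored. All names are illustrative.

```python
from bisect import bisect_right
from collections import defaultdict


def attribute_intake(weights, reads, share_last=0.5):
    """Divide trough weight losses over the RFID tags seen at one feeding station.

    weights: (time, kg) trough readings, sorted by time.
    reads:   (time, tag_id) RFID reads at the same station, sorted by time.
    Each drop is split between the tag last seen before the drop ended and the
    next tag seen after it. The fixed split is a simplification, not the
    weighted filter described in the patent.
    """
    read_times = [t for t, _ in reads]
    intake = defaultdict(float)
    for (_, w0), (t1, w1) in zip(weights, weights[1:]):
        eaten = w0 - w1
        if eaten <= 0:  # refills or sensor noise are ignored
            continue
        i = bisect_right(read_times, t1)  # reads[:i] happened at or before t1
        last_tag = reads[i - 1][1] if i > 0 else None
        next_tag = reads[i][1] if i < len(reads) else None
        if last_tag is not None and next_tag is not None and last_tag != next_tag:
            intake[last_tag] += eaten * share_last
            intake[next_tag] += eaten * (1 - share_last)
        elif last_tag is not None:
            intake[last_tag] += eaten
        elif next_tag is not None:
            intake[next_tag] += eaten
    return dict(intake)


weights = [(0, 10.0), (10, 9.0), (20, 8.0)]
reads = [(5, "A"), (8, "A"), (15, "B")]
print(attribute_intake(weights, reads))
```

The sketch also makes the patent's listed obstacles concrete: a delayed RFID read shifts which tag counts as "last seen", and a gust of wind on the scale looks exactly like a consumption event.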

Camera detection

Cameras and computer vision can be used to detect and monitor animals. Different camera technologies, such as RGB, infrared (IR) and Time-of-Flight (TOF), can all be used for animal identification, location tracking and status monitoring. We outline several papers in which camera technology is used for detecting and identifying animals.

In a paper by Zhu et al., a 3D machine vision system for livestock is described.[14] In previous papers, Internet Protocol (IP) cameras were used to track the weight of animals and to ensure they do not become unhealthy. IP cameras capture RGB images, which makes them sensitive to room lighting, shadows and contact between animals. To tackle these problems, the method used in the paper adds IR depth information to the RGB image. This gives the depth of every pixel, providing true 3D data for more accurate detection of the animals. The authors use a Microsoft Kinect, which has an RGB camera, an IR projector and an IR camera. By setting different thresholds and developing software, it is possible to estimate the weight of a pig very accurately and find pigs that are overweight or underweight.
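A rough sketch of the depth-threshold idea: with an overhead depth camera, pixels sufficiently closer to the camera than the floor are taken to belong to the animal, and the summed height of those pixels serves as a volume proxy. The linear mapping from that proxy to kilograms, its coefficients and the 300 mm height threshold are assumptions for illustration only; the paper's actual thresholds and model are not reproduced here, and a real system would be calibrated against scale weights.

```python
def animal_pixels(depth_mm, floor_mm, min_height_mm=300):
    """Heights (mm above the floor) of pixels assumed to belong to the animal.

    depth_mm: 2D list of distances from an overhead depth camera to the scene.
    Pixels less than min_height_mm above the floor are treated as background.
    """
    heights = []
    for row in depth_mm:
        for d in row:
            h = floor_mm - d
            if h >= min_height_mm:
                heights.append(h)
    return heights


def estimate_weight_kg(depth_mm, floor_mm, kg_per_unit=0.1, offset_kg=0.0, min_height_mm=300):
    """Hypothetical linear volume-to-weight model; coefficients must be calibrated."""
    volume_proxy = sum(animal_pixels(depth_mm, floor_mm, min_height_mm))
    return offset_kg + kg_per_unit * volume_proxy


# Tiny 3x3 depth frame: the camera is 2500 mm above the floor
depth = [[2500, 2500, 2500],
         [2500, 2000, 1900],
         [2500, 2000, 2500]]
print(animal_pixels(depth, 2500))
print(estimate_weight_kg(depth, 2500))
```

Using depth rather than colour is what makes the segmentation independent of room lighting and shadows, which is the motivation given in the paper.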

In a paper by Salau et al., a TOF camera is used for determining body traits of cows.[15] They first introduce the manual method of body trait determination, which relies on two measures that describe a cow's body condition: the Body Condition Score, which is obtained by visually and manually judging the fat layer over specific bone structures and how sunken the animal's rear area is, and the BackFat Thickness (BFT). Compared to an automated method, however, this manual system has higher costs, is more stressful for the animals, is prone to errors during manual data transcription and cannot provide large volumes of data for use in genetic evaluation. The paper then focuses on the automated system, which relies on data collected by a Time-of-Flight camera mounted in a cow barn, and presents its technical aspects, implementation and test results. The automated system was able to carry out camera setup, calibration, animal identification, image acquisition, sorting, segmentation, determination of the region of interest and extraction of body traits automatically. The authors conclude that applying TOF to the determination of body traits is promising, since traits could be gathered with precision comparable to BFT. However, the animal effect is very large, so further analyses are needed to identify the properties of the cows that lead to differences in image quality, measurement reliability and trait values.

A paper by Kumar et al. focuses on tracking lost pet animals.[16] The authors mention that the number of pet animals that are abandoned, lost, swapped, etc. is increasing, and that the current methods to identify and distinguish them are manual and not effective. Examples are ear-tagging, ear-tipping or notching, and embedding microchips in the body of pet animals. The authors point out that these methods are not robust and do not solve the problem of identifying an animal. The idea is to use animal biometric characteristics to recognize an individual animal. To recognize and monitor pet animals (dogs), the paper proposes an automatic recognition system: facial images are used for recognition and surveillance cameras for tracking. The results of the research are quite impressive, but the system has yet to be tested in a real-life environment.

Similarly, a paper by Yu et al. describes an automated method for identifying wildlife species in pictures captured by remote camera traps.[17] The researchers not only described the technical aspects of the method but also tested it on a dataset of over 7,000 camera trap images of 18 species from two different field sites, achieving an average classification accuracy of 82%. This shows that object recognition techniques from computer vision can be used effectively to recognize and identify wild mammals in sequences of photographs taken by camera traps in nature, which are notorious for high levels of noise and clutter. In future work, the authors say, biometric features that are important for species analysis, such as color, spots and body size, which are partly responsible for determining body traits, will be included in the local features.

Animal monitoring

Monitoring animals can be of great importance for farmers and for the animals themselves. First of all, farmers and feedlot operators commonly miss the time when a certain animal is in heat, which increases the cost of running a farm. Also, detecting whether an animal is sick requires manual detection by a feedlot operator. This can happen several days after the animal has initially become sick, causing pain and stress for the animal and reducing productivity. What is more, even when an animal is detected to be sick, it is usually treated with a range of different antibiotics, regardless of whether the animal needs treatment for all of these illnesses.

GPS

One method of tracking animals is using GPS. This technology uses a receiver that communicates with satellites to determine its global position. The positional information can be stored to gain knowledge of an animal's location throughout the day. GPS is accurate to within about 5 meters, which may or may not be enough. Two papers that use GPS for tracking animals are outlined here.

Guichon et al. propose a method for gathering reference movement patterns of animals, for example patterns that resemble the behavior of a sick, overfed, underfed or market-ready animal, and comparing them with the movement patterns of the current livestock.[18] These patterns can also be used to identify the exact illness an animal has, because the system tracks all places the animal visited and can therefore narrow down the possible illnesses. Animals are tracked with a GPS tracker that polls the GPS satellites every 15 seconds and collects raw GPS data representing the animal's position. The data is then loaded onto a server that stores it and runs several smoothing/filtering programs to "clean" the raw GPS data. Since the data is processed continuously, the SQL database with coordinates and timestamps is kept up to date. Afterwards, the collected data is compared with reference patterns, for example to count how many times a particular animal passes through different zones of a cowshed, such as the water zone, feedlot or activity zone.
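The smoothing and zone-counting steps described above might look roughly like this in Python. This sketch is not the method from the cited paper: it assumes fixes have already been projected to local x/y coordinates in metres, uses a 3-point moving median as the "cleaning" filter and models zones as hypothetical rectangles. The example shows how a single erroneous fix that jumps into the feedlot is counted as a visit in the raw data but suppressed after smoothing.

```python
from statistics import median


def smooth_fixes(fixes, window=3):
    """Moving-median filter over (t, x, y) fixes to suppress single-fix GPS jumps.

    x and y are assumed to be local coordinates in metres.
    """
    half = window // 2
    smoothed = []
    for i, (t, _, _) in enumerate(fixes):
        chunk = fixes[max(0, i - half): i + half + 1]
        smoothed.append((t, median(f[1] for f in chunk), median(f[2] for f in chunk)))
    return smoothed


def zone_at(x, y, zones):
    """Return the name of the rectangular zone containing (x, y), or None."""
    for name, (x0, y0, x1, y1) in zones.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return None


def count_zone_entries(fixes, zones):
    """Count how many times the animal enters each zone."""
    counts = {name: 0 for name in zones}
    current = None
    for _, x, y in fixes:
        zone = zone_at(x, y, zones)
        if zone is not None and zone != current:
            counts[zone] += 1
        current = zone
    return counts


zones = {"water": (0, 0, 5, 5), "feedlot": (10, 0, 15, 5)}
# One fix every 15 s; the fix at t=30 is a GPS error that jumps into the feedlot
fixes = [(0, 1, 1), (15, 2, 2), (30, 12, 2), (45, 1, 2), (60, 2, 1)]
print(count_zone_entries(fixes, zones))
print(count_zone_entries(smooth_fixes(fixes), zones))
```

A median is used rather than a mean because a single large jump drags a mean towards it, while the median ignores it as long as the neighbouring fixes agree.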

Similarly, in a paper by Panckhurst et al., an ear tag for livestock is developed, which is lightweight (less than 40 g) and solar powered.[19] It has a GPS module, which is used for determining the location of the animals; this location is then sent to a base station, where the data is collected. The paper gives a technical description of the ear tag and the base station.

Sensors

To monitor an animal and possibly detect sickness, sensor data can be used. By placing sensors on or in an animal that measure information such as blood pressure, temperature or activity, it is possible to gain knowledge of the animal's condition.

In a paper by Umega and Raja, different sensors are placed on the animal to monitor its wellbeing.[20] These include a heart rate sensor, an accelerometer, a humidity sensor and others. RFID tags are used to identify the different animals, and the data is transmitted over Wi-Fi. The authors realize the design using an Arduino Uno microcontroller, which extracts the data from the sensors so that it can be displayed to the end user.

Similarly, a paper by Zhou and Dick proposes an intelligent system for livestock disease surveillance.[21] The main purpose is to detect whether animals are sick or about to become sick, so they can be treated before the disease spreads to other animals and infects the whole herd. In this method, uniquely identifiable sensors attached to every animal collect both the animal's (cow's) temperature and the ambient temperature every 5 minutes. The collected data is then sent via a network to the main server, where it is processed. The processed temperature readings can then be used to detect diseases.
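As a minimal sketch of how 5-minute temperature readings could feed a disease alert, the function below compares the last 30 minutes of body temperature with the animal's own median over the preceding 24 hours, and uses the ambient reading only to suppress alerts in hot weather. The 1.0 °C rise, the 30 °C ambient cut-off and the window lengths are hypothetical; the cited paper's actual processing is not reproduced here.

```python
from statistics import median


def fever_alert(readings, baseline_n=288, sustained_n=6, delta_c=1.0, hot_ambient_c=30.0):
    """Flag a sustained rise in body temperature above the animal's own baseline.

    readings: (body_temp_c, ambient_temp_c) pairs, oldest first, one every 5 minutes,
    so baseline_n=288 covers the previous 24 hours and sustained_n=6 the last 30 minutes.
    delta_c and hot_ambient_c are hypothetical thresholds.
    """
    if len(readings) < baseline_n + sustained_n:
        return False  # not enough history for a baseline yet
    history = readings[-(baseline_n + sustained_n):-sustained_n]
    baseline = median(body for body, _ in history)
    recent = readings[-sustained_n:]
    # Readings taken in hot weather are not trusted: heat stress also raises body temperature
    return all(body - baseline >= delta_c and ambient < hot_ambient_c for body, ambient in recent)


normal_day = [(38.5, 15.0)] * 288
print(fever_alert(normal_day + [(39.8, 15.0)] * 6))  # elevated on a cool day
print(fever_alert(normal_day + [(39.8, 32.0)] * 6))  # elevated, but during a heat wave
```

Requiring every reading in the short window to be elevated keeps a single faulty sample from raising an alarm, at the cost of a 30-minute detection delay.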

In a paper by Maina, the use of IoT and Big Data is explored for determining an animal's health status and increasing productivity.[22] Interconnected sensors can be used to sample and collect the vital signs of the animals and determine their health status based on them. A prototype sensor system was developed and attached to the necks of cows. This sensor can distinguish between several activities, such as eating grass, standing and walking. The paper, however, does not explore the actual connection of many sensors in a single network.
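To illustrate how a neck-mounted accelerometer could distinguish the activities mentioned above, here is a simple rule-based sketch. It is not the classifier from the cited paper: it assumes an axis convention in which `ax` points along the neck, and the two thresholds (variability of the acceleration magnitude for walking, a low mean `ax` for grazing) are placeholders that would need to be fitted to labelled data.

```python
import math
from statistics import mean, pstdev


def classify_activity(samples, walk_std_g=0.15, head_down_g=-0.3):
    """Classify one window of neck-collar accelerometer samples.

    samples: (ax, ay, az) in g. Assumes (hypothetically) that ax points along the
    neck, so it becomes clearly negative when the head is lowered to graze.
    """
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in samples]
    if pstdev(magnitudes) > walk_std_g:
        return "walking"   # strong, repeated changes in acceleration
    if mean(ax for ax, _, _ in samples) < head_down_g:
        return "eating"    # head held low
    return "standing"


print(classify_activity([(0.0, 0.0, 1.0)] * 10))
print(classify_activity([(-0.7, 0.0, 0.7)] * 10))
print(classify_activity([(0.0, 0.0, 0.6), (0.0, 0.0, 1.4)] * 5))
```

Checking for walking first matters: while walking, the head also moves up and down, so the head-position rule alone would misclassify some walking windows as eating.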

Lastly, a US patent by Yarden describes a system of basic tags, smart tags, a mobile unit and a PC unit for monitoring cattle and detecting illness.[23] The basic tag is put on the ear of an animal and collects data from sensors at various locations on or in the animal's body. The smart tag is similar to the basic tag, but also collects information from various basic tags in its proximity. A basic tag either sends the raw sensor data or processes it and only sends a health indicator. The smart tag periodically sends the information to the mobile unit, which relays it to the PC unit, where it is stored and displayed to the end user. When illness is detected, the basic tags can raise an alarm with an LED and a buzzer, and a message is sent to the PC unit.

Monitoring devices

Similarly to using various sensors, animals can also be monitored by attaching a single monitoring device to them that gathers sensory data such as location and vitals. This information can then be used for tracking the animals and determining their health status. These devices can be used to detect sickness in animals at an early stage. We outline two patents and a paper that describe such a device, although many more can be found.

A US patent by Ridenour describes a monitoring device, placed on an animal's appendage, that contains biosensors that can measure vitals.[24] Additionally, it can be extended with a GPS tracker or a tag for monitoring visits to food and water stations. These measurements are used to determine when an animal is sick, by detecting when conditions fall outside normal ranges. When sickness is detected, the device sends out an alarm. A transmitter can also be included to send physiological information to a base station where all animals are monitored. Advantages of this device compared to other monitoring devices are that it is non-intrusive, easy to use and adaptable to a user's needs.
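The core check described above, raising an alarm when a measured condition falls outside its normal range, can be sketched as follows. The ranges in `NORMAL_RANGES` are placeholders for illustration only, not veterinary reference values, and the function names and message format are hypothetical.

```python
# Placeholder ranges for illustration only; real limits should be set per herd with a vet.
NORMAL_RANGES = {
    "temperature_c": (38.0, 39.3),
    "heart_rate_bpm": (48, 84),
    "respiration_rate_bpm": (26, 50),
}


def out_of_range(vitals, ranges=NORMAL_RANGES):
    """Return the measured vitals that fall outside their normal range."""
    return {
        name: value
        for name, value in vitals.items()
        if name in ranges and not ranges[name][0] <= value <= ranges[name][1]
    }


def check_animal(animal_id, vitals):
    """Return an alarm message for the base station, or None if all vitals are normal."""
    problems = out_of_range(vitals)
    if not problems:
        return None
    details = ", ".join(f"{name}={value}" for name, value in sorted(problems.items()))
    return f"ALARM {animal_id}: {details}"


print(check_animal("cow3", {"temperature_c": 38.6, "heart_rate_bpm": 70}))
print(check_animal("cow3", {"temperature_c": 40.1, "heart_rate_bpm": 70}))
```

Keeping the ranges in a separate table is one way to make such a device "adaptable to a user's needs": a farmer or vet can tune limits per herd without changing the checking logic.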

Similarly, a US patent by Ingley and Risco outlines a device for monitoring activity, ketone emission and vital signs of cows, such as body temperature, nose wetness and humidity.[25] The device is worn either as a nose ring or as an ear tag. It takes measurements and logs them for at least 8 hours. When the cow enters a milking facility, the data can be downloaded and used as an indicator of the cow's health.

In a paper by Lopes and Carvalho, a low-power monitoring device is attached to livestock to prevent theft.[26] The animal carries a low-power module with a wake-up radio. A drone is used to wake up the animal's module and collect the gathered information. Furthermore, the module should be highly resistant to water, impacts and dust. With such a system, each animal can be identified by the drone and theft can be detected early.

Farm visits

To get a better view of the farming sector and of what a farm looks like, we thought it would be very helpful to contact an expert and visit a farm. We were encouraged to speak to Lenny van Erp, lector in Precision Livestock Farming at HAS Hogeschool. She not only agreed to answer our questions, but also invited us to accompany her on a visit to two dairy farms that use technology to boost their productivity. These farms are partners of HAS, meaning they open up their farm to students and teachers and support research. On Monday 12 March we visited the two farms and saw first-hand what such farms look like.

Hoeve Boveneind

The first farm we visited was Hoeve Boveneind in Herwijnen, which has a long history of dairy farming in the area. Most importantly, it now uses monitoring technology on its cows to increase productivity and to aid research. The farm houses roughly 100 cows and also owns some acres of land where grass and maize are grown. The cows stay inside the barn all year, where they are milked three times a day by an automatic voluntary milking robot. This robot uses computer vision to find each individual teat and attach the suction cup.

The farm uses tracking devices to gain insight into the behavior of its cows and to detect when they are in heat or need (medical) attention. The cows are equipped with a collar that is used for identification, localization and activity tracking. Localization works by communicating with devices attached to the ceiling, which can triangulate the position of the cow. Additionally, the cows are fitted with a pedometer on their ankle that counts the steps taken. The activity readings from the collar and pedometer are then used to determine whether a cow is lying, standing or walking. For now, this information is used mainly to detect when cows are in heat, since heat shows up as a significant increase in activity. Unusual activity is also reported, so that these cows can receive attention.
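The heat-detection rule described above, a significant increase in activity, can be illustrated by comparing a cow's latest daily step count with her own recent baseline. This is a minimal sketch, not the algorithm used by the farm's commercial system; the 7-day baseline window and the factor of 1.5 are hypothetical values chosen for the example.

```python
from statistics import mean


def heat_alert(daily_steps, baseline_days=7, factor=1.5):
    """Flag a possible heat when the latest day's step count exceeds
    `factor` times the mean of the preceding `baseline_days` days.
    baseline_days and factor are illustrative assumptions, not calibrated values."""
    if len(daily_steps) < baseline_days + 1:
        return False  # not enough history to form a baseline
    baseline = mean(daily_steps[-baseline_days - 1:-1])
    return daily_steps[-1] > factor * baseline


# Hypothetical pedometer totals (steps per day) for one cow
history = [2100, 1980, 2050, 2200, 2010, 1950, 2080, 4300]
print(heat_alert(history))  # True
```

Comparing each cow with her own history, rather than with a fixed step count, accounts for the fact that some cows are naturally more active than others.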

Melkveehouderij Pels Beheer BV

The second farm we visited was the dairy farm Pels Beheer BV, which is slightly bigger, housing roughly 170 cows. Here the cows graze outside in the summer. They are milked twice a day in a carousel milking system, where the suction cups are attached manually by personnel. The cows are also fitted with monitoring devices, which are used for identification and for tracking activity to detect heat.

What was special about this farm was the use of a camera that determines a body condition score. It was placed above the exit of the milking carousel, so that the cows pass underneath it every day. The exit was made narrow so that the cows pass straight underneath it one by one. The body condition score ranges from 1.0 to 5.0, where a lower score corresponds to a lower body mass. This information is used for research purposes, but the farmer can also use the score to decide when best to inseminate a cow. When the monitoring devices signal that a cow is in heat, the body condition score can be checked to see whether her weight is stable. After calving the score drops, since the cow loses weight while producing a lot of milk, but the score should stop decreasing after a month, when the cow is ready for insemination.
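The farmer's check, whether the score has stopped decreasing, can be expressed as a simple rule on the daily scores. A minimal sketch, assuming one score per day and a hypothetical tolerance of 0.1 points of net drop over the last 7 readings; these values are illustrative, not taken from the farm's system.

```python
def bcs_stable(scores, window=7, tolerance=0.1):
    """Return True if the body condition score (1.0-5.0) has stopped decreasing,
    judged by the net drop between the first and last of the last `window`
    readings. window and tolerance are illustrative assumptions."""
    if len(scores) < window:
        return False  # too few readings to judge
    recent = scores[-window:]
    return recent[0] - recent[-1] <= tolerance


post_calving = [3.5, 3.3, 3.1, 2.9, 2.8, 2.75, 2.75, 2.75, 2.75, 2.75, 2.7, 2.7]
print(bcs_stable(post_calving))      # True: the score has levelled off
print(bcs_stable(post_calving[:7]))  # False: still dropping right after calving
```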

What we learned

During these visits we were able to ask Lenny van Erp many questions, and she gave us interesting information and insights. We also saw two working dairy farms, which helped us make our solution more concrete.

The most important thing we learned was what matters to a farmer. In particular, we learned that we should seriously consider detecting cows in heat, since this is most important for productivity. Heat can be recognized from the behavior and activity of a cow. Here machines can do a better job than manual detection, and they also save the farmer a lot of time. Before the visits we had mostly considered detecting diseases, but the same camera system can also be used for heat detection.

Apart from that, it was very useful to see the layout of two farms, since this gave us some insight into what a typical barn looks like. We also asked many questions about cow behavior and what a cow's daily "schedule" looks like.

USE aspects

Users

The technology we focus on aims to improve the monitoring and tracking of domestic animals, namely cows. Hence the main users are people involved in keeping livestock, collecting the commodities and delivering them to the market.

The main user, around whom our solution is designed, is the farmer. From the farm visits we obtained some basic user requirements. One of them concerns cows in heat. Because heat in cows lasts only a short time and is sometimes difficult to detect by eye, it is impractical for farmers to keep watch constantly and do everything by hand. This limits their free time and rest, decreasing productivity. Additionally, farmers constantly want to optimize the output of their farms. For example, if they miss a cow in heat, they have to wait another 3 weeks for the next heat before the cow can be inseminated, which delays her milk production.

Another requirement is sickness detection. Cows are expensive animals (compared to pigs, for example), which forces farmers to check the health of their animals constantly. A system that does this automatically can greatly improve the overall health of the herd by pointing out individual animals that show unusual behavior. It also reduces the required manual labor, decreasing costs (workers' salaries) and increasing productivity (more healthy cows producing more milk). Sometimes a cow is healthy and not in heat, but still needs attention, for example because of weight problems. The system has to recognize this and notify the workers so that they can take the necessary action.

There are also some indirect users. For example, when a cow is found to be sick, a veterinarian is called in, who can use statistics on the cow's behavior over the past weeks to reach a better diagnosis. In this way the illness history of the cows can be tracked, and the cause of a sickness can be investigated if it occurs too often. Another indirect user is the slaughterhouse: its workers can check whether a particular cow is healthy and can be used in the food industry.

Cows can also be seen as indirect users: they should not be physically hurt by the technology, and it should not disrupt their everyday activities.

Maintenance engineers are also users of the system. They should be able to detect issues with the system as quickly as possible and, if necessary, make repairs with as little effort as possible.

Society

As mentioned in the introduction, the world population is rising, so more food will be required. Since cattle are one of the main sources of food, either the number of farms or their productivity must increase. Our technology directly addresses the second option and can also help with the first.

Implementing this technology has, of course, both positive and negative sides. On the one hand, most farms rely on manual labor, which is often performed by immigrants who are willing to work for a low income. With this technology, cases like the one in Wisconsin, where low-paid workers perform difficult tasks during long shifts, would become less common. As a consequence, however, fewer workers will be needed, which is a negative effect for society: it could increase unemployment, which in turn could increase crime.

On the other hand, the main advantage for society is that cow-related products will be of higher quality. Among these products are milk-related products such as cheese, milk and yogurt; leather products (without sensors, the cows' skin is not pierced, so more material can be used for quality products); and fat-related products such as paints, make-up and pharmaceuticals. Additionally, prices will drop, which will make cow-related products affordable to more people.

Lastly, having all the technology in place will allow the creation of new farms and the expansion of existing ones. A fully automated farm gives farmers enough time for their personal life, which might make farming an attractive option for people who want to change careers. After the initial investment in the product and the acquisition of the farm, it will be easy to keep the farm running with only a few feedlot operators.

As a snowball effect, cow-related products will become cheaper, since the need to import them will be reduced.

Enterprise

The enterprise sector would be heavily affected by any negative impact of the described technology on farms. This impact is amplified by the fact that farmers usually do not have a second source of income: they depend on selling dairy products, beef or whole animals to earn their living. Besides that, trade is necessary to improve the farm's facilities and fund its development.

As described by the Organisation for Economic Co-operation and Development (OECD), beef is one of the main sources of meat in some countries, while in many others it is in the top three.[27] These data make it easy to see why enterprises invest more and more money in cow farms, which can generate substantial revenue.

Among all possible enterprise actors, the most influential are the companies that buy goods like milk and meat. They require the products to be fresh and edible. This is where our technology has an advantage over others: camera detection is not intrusive. Unlike a tag (for which the skin has to be pierced) or a collar (which can harm the cow), both of which can be breeding grounds for bacteria (as described in the sickness section), a camera whose only purpose is to track the animals and detect possible issues can increase the detection rate without harming them. This is a good reason for companies to start investing in such a technology.

A second major actor is the owner of the farm. Although the owner can also be considered a user, when the focus is on money and income the owner becomes part of the enterprise. The owner wants as many accurate predictions as possible while keeping costs low, both of which our technology addresses.

Other enterprise interests may come from camera manufacturers, which will try to offer competitive prices to make their products attractive to farmers, and from transport companies, which will have more to transport (by detecting more sick animals, they can be treated or the spread of disease can be stopped, resulting in more viable products to bring to market), among others. Overall, everybody who invests in or expects income from such a technology is part of the enterprise sector, but the main beneficiaries are the sellers of goods and the farm owners.

System design

Locations of interest

Our main location of interest is, of course, the farm, and in particular the cow barn. To track the cows with cameras, we need to be able to observe them, preferably at all times. Since cows spend most of their time in the barn, this is where monitoring can be done most successfully.

Inside the barn we can further distinguish certain regions. The place where food is put out and the cows eat is the food zone. This zone is important, since visits to a food station and feeding time are prime indicators of sickness.[28] Similarly, we can distinguish a water zone where the cows drink; typically this is a small zone next to a drinking trough. Furthermore, most farms divide the barn further into activity zones and resting zones. The resting zones are usually cubicles, where each cow has an individual space enclosed by bars. In the activity zone the cows can stand and walk around, usually in free walking lanes. Tracking cows in these locations can also provide very meaningful information on their wellbeing. For example, an indicator of mastitis, a common disease in dairy cows, is an increase in standing relative to lying down.[29] For dairy cows we can also identify the milking zone. Nowadays milking is often done by a milking robot; typical designs for the milking zone are a carousel or a stationary lane. Lastly, the farm might contain a dedicated hospital and/or processing area, where sick animals are treated and new animals are prepared.
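The zone-based indicators above (feeding time, time in the cubicles versus the activity area) require assigning each tracked position to a zone and summing the time spent there. A minimal sketch, assuming a hypothetical barn layout made of axis-aligned rectangles in meters and positions sampled every 2 seconds; a real barn would need its own measured zone boundaries.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


# Hypothetical barn layout in meters (non-overlapping rectangles).
ZONES = [
    Zone("food", 0, 0, 40, 4),
    Zone("water", 40, 0, 44, 4),
    Zone("cubicles", 0, 16, 44, 20),
    Zone("activity", 0, 4, 44, 16),
]


def zone_of(x, y):
    """Name of the zone containing (x, y), or 'unknown' if outside all zones."""
    for zone in ZONES:
        if zone.contains(x, y):
            return zone.name
    return "unknown"


def time_per_zone(positions, interval_s=2.0):
    """Total seconds spent in each zone, for positions sampled every interval_s seconds."""
    totals = {}
    for x, y in positions:
        name = zone_of(x, y)
        totals[name] = totals.get(name, 0.0) + interval_s
    return totals


track = [(10, 2), (10, 2), (12, 8), (5, 18), (5, 18), (5, 18)]
print(time_per_zone(track))  # {'food': 4.0, 'activity': 2.0, 'cubicles': 6.0}
```

Summed over a day, totals like these give the feeding time and the standing-versus-lying balance mentioned above.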

Other locations of interest are the milking facility and the slaughterhouse, for dairy cows and beef cows respectively. In these facilities the welfare of the cows is important for productivity: whether or not an animal is sick can affect the quality of the product. Additionally, handling sick animals at these locations can be time-consuming and reduce productivity.

Requirements and constraints

Assumptions

1. The cows spend a significant amount of time in the barn.

2. The cows do not have any other species of animals inside their enclosure.

3. The barn does not have areas which are obscured from view by cameras mounted at ceiling level.

4. The barn has a distinguished food zone, where feeding takes place.

5. Cows cannot be on top of each other.

Requirements

1. The system should identify cows.

2. The system should distinguish between cows to uniquely identify an animal.

3. The system should inform the farm workers which data is for which cow.

4. The system should inform the farm workers about deviations from the standard behavior of a cow.

5. The system should track the position of each cow at any given moment.

6. The system should persist historical data of every cow.

7. The system should gather information from the whole barn area.

8. The system should detect visits to a feeding station by a cow.

9. The system should operate remotely.

10. The system should operate without disturbing the cows in any way.

11. It should be possible for a layman to set up the system.

Preferences

- The system should be as cheap as possible.

- The system should be as accurate as possible.

Constraints

1. Total maintenance and operational costs should be lower than the cost of the manual operations that the system replaces.

2. The system should be at least as effective as manual inspection.

Design choices

As explained in the project definition, our objective is to automatically detect diseases in animals. Doing this early can save costs, decrease the chance of disease outbreaks and increase productivity. Since there are countless design choices for achieving this objective, we have laid down the requirements our system should implement.

Animal

Because farm animals differ, the system cannot be designed to support all possible animals. For simplicity, only one animal is supported. By mutual agreement, the group chose cows (Requirement 1). This does not mean that cows are more important than other animals; the choice is made solely for simplicity. One reason for choosing cows is that we believed them to be easier to distinguish. Another is that, since cows are large and relatively expensive, it is easier for the system to be cost-effective.

Further details follow once the technology and its place of use have been identified. As mentioned at the beginning, the purpose of this system is to increase the productivity of farms by detecting sickness and animals in heat. Where manual inspection is currently used, the system must be at least as effective (Constraint 2).

Monitoring technology

Many technologies can be used for monitoring cows and tracking behavior. In the section on identification technologies many possible solutions are proposed.

We chose cameras as our technology for monitoring cows and detecting diseases. Cameras can be used to identify, distinguish and monitor cows continuously, satisfying requirements 1, 2, 6 and 8. Multiple cameras can be used to monitor the entire barn, satisfying requirement 7. Furthermore, cameras do not disturb the cows in any way and are easy for a layman, such as a farmer, to set up. Cameras are also “passive”, since they do not physically touch the animal.

Other technologies could also accomplish our objectives. Cows can be monitored using wearable devices, implanted chips containing sensors, and/or RFID/GPS technology. However, such devices would violate requirement 10, which states that the system should not disturb the cows in any way. We believe this is important because we are obliged to safeguard animal welfare, and when introducing a new technology we want to minimize its negative impact on the animal. Requirement 2 also excludes technologies that cannot easily differentiate between individual animals, such as pressure sensors, microphones, etc.

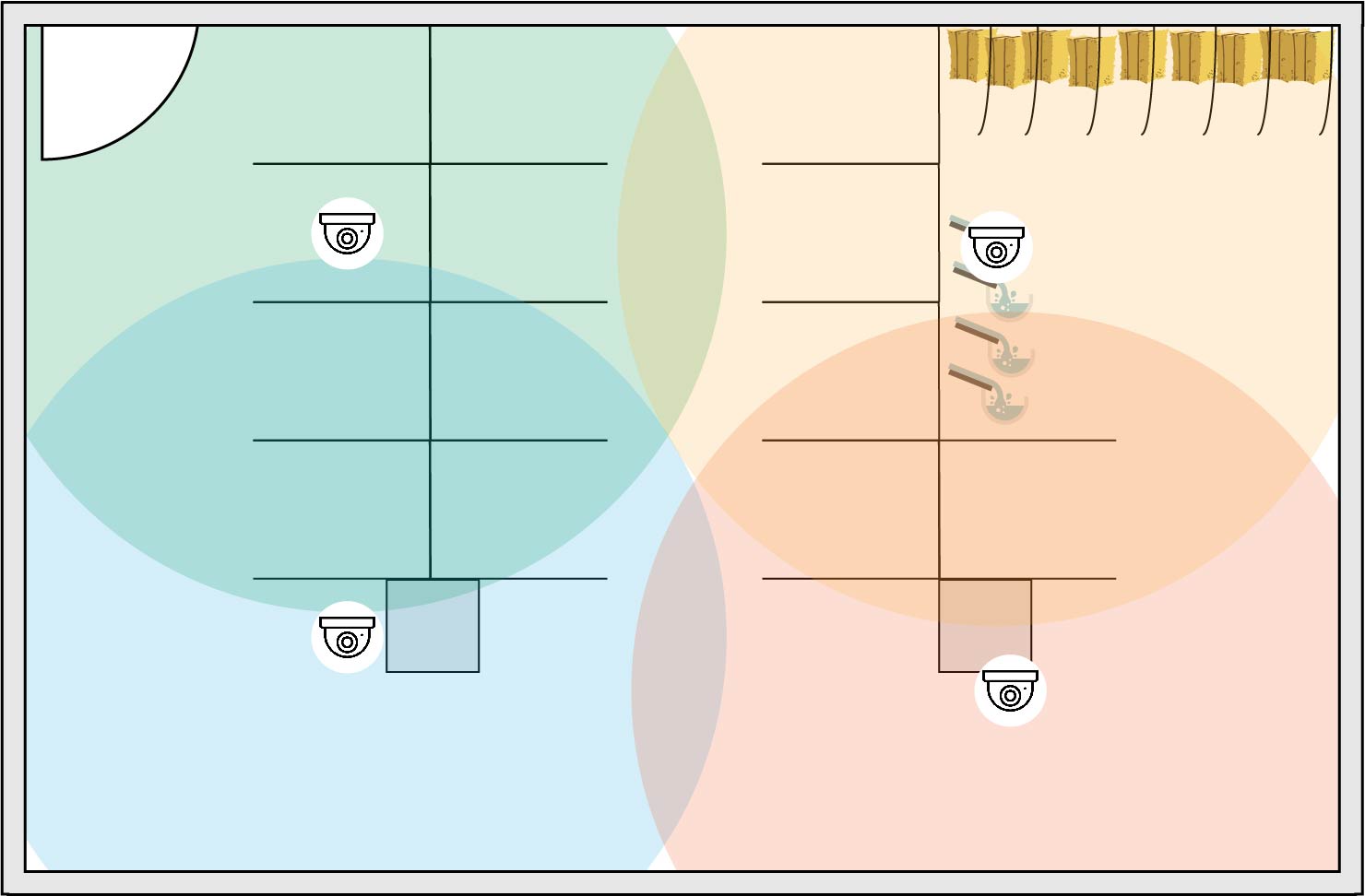

Camera position

We decided that the cameras will be mounted on the ceiling of the barn, possibly at a very slight angle. Mounting them this way makes it easy to identify and distinguish individual cows (requirements 1 and 2), as long as cows are not on top of each other (assumption 5). This is important because, to track a cow continuously, it must not be confused with neighboring animals.

Other positions, for example placing the camera at ground level for a side view, may result in an animal being hidden behind another. This would hinder the ability to identify and distinguish between animals (requirements 1 and 2).

Monitoring technologies

Here we describe the technologies that are relevant to our system and that are used, or could be used in the future, to automate cow tracking and health monitoring with regular surveillance cameras. In the previous sections we discussed several promising technologies that could be or already are used for animal tracking, for example thermal cameras, RFID, GPS and others. Nevertheless, we narrow the scope of the devices involved to cameras only. The reason is the simplicity of video surveillance: cameras need to be installed only once at specific locations and do not require regular maintenance. Also, the animals do not feel the presence of the cameras, as they do with many sensor systems.

Hardware

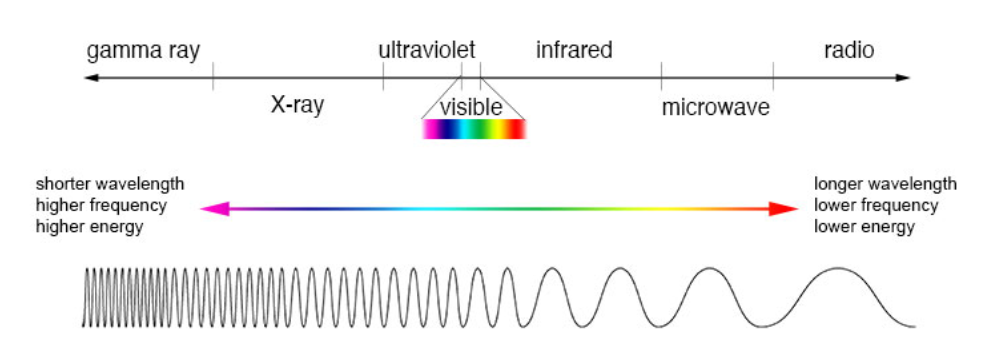

There are three main camera types that can be used, each operating in a different part of the electromagnetic spectrum (Fig. TBD): RGB cameras in visible light, thermal cameras, and infrared cameras at a specific wavelength that use triangulation to find the depth of every pixel, based on the Microsoft Kinect technology.[30]

RGB

The RGB camera uses a CMOS sensor to measure the visible light (380-780 nm) that hits every pixel, separated into red, green and blue components. It is the simplest and cheapest of the camera technologies mentioned above. Using image processing techniques, valuable information can be extracted from its images. The disadvantage of using RGB alone is that it does not provide depth information. One way to obtain depth is to combine more than one camera and triangulate the distance to every point, but this is unreliable: shadows interfere, and if the foreground and the background have the same color, it is impossible to find which point in the first camera's image corresponds to which point in the second.[31]
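The triangulation mentioned above follows the standard relation for two rectified, parallel cameras: with focal length f (in pixels) and baseline B (the distance between the cameras), a point that appears shifted by a disparity of d pixels between the two images lies at depth Z = f·B/d. A minimal sketch with hypothetical camera parameters; it also shows where the approach breaks down: when no matching point can be found, there is no valid disparity and therefore no depth.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in meters of a point seen by a rectified stereo pair: Z = f * B / d.
    Returns None when the disparity is not positive (no valid correspondence)."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 800 px focal length, cameras 0.25 m apart.
print(stereo_depth(800, 0.25, 40))  # 5.0
print(stereo_depth(800, 0.25, 0))   # None
```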

Infrared camera with projector based on Microsoft Kinect technology

This technology is based on a patent by Microsoft. Its purpose is to obtain the depth of an image independently of the visible color or the presence of ambient light and shadows. It uses an infrared projector that casts a pattern of many distinguishable points onto the observed surface. The infrared camera "sees" those points and, using triangulation, calculates the distance to every point (or every pixel). The advantage, as mentioned, is independence from ambient light and object color. For example, if a cow is covered in mud and the background is also mud, the Kinect sensor can still calculate the depth accurately. The disadvantages are that the price is much higher than that of RGB cameras, and that the Kinect sensor must be used indoors, because the projected dots are "washed out" by the infrared radiation in sunlight.

Thermal camera

Thermal cameras are based on the fact that all objects, living or not, emit heat energy. Thermal cameras use this energy instead of the contrast between objects illuminated by the sun or another light source. Because of this, a thermal camera can operate at all times, even in complete darkness. To present heat in a format suitable for human vision, thermal cameras convert the temperature of objects into shades of grey. Thermal imagery is very rich in data, sensing temperature variations as small as 1/20th of a degree. Thermal cameras convert these variations, represented as 16,384 shades of grey, into about 250 grey levels to more closely match the human eye's ability to distinguish shades of grey. Although thermal cameras are used more and more in commercial applications, their prices remain high compared to, for example, RGB cameras.
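The conversion described above, from 16,384 raw levels (14 bits) to roughly 256 displayable grey levels (8 bits), can be illustrated with a simple linear contrast stretch. This is a minimal sketch under the assumption of a plain min-max mapping; commercial thermal cameras generally use more elaborate automatic gain control, and the raw values below are hypothetical.

```python
def to_display_grey(raw, lo=None, hi=None):
    """Linearly map raw 14-bit thermal counts (0..16383) to 8-bit grey (0..255).
    lo/hi default to the frame's own min/max (a simple contrast stretch);
    values outside [lo, hi] are clipped."""
    lo = min(raw) if lo is None else lo
    hi = max(raw) if hi is None else hi
    span = max(hi - lo, 1)  # avoid division by zero on a uniform frame
    out = []
    for value in raw:
        clipped = min(max(value, lo), hi)
        out.append(round((clipped - lo) * 255 / span))
    return out


frame = [8000, 8100, 8200, 8400]  # hypothetical raw counts from one row of pixels
print(to_display_grey(frame))  # [0, 64, 128, 255]
```

Stretching to the frame's own range spends all 256 grey levels on the temperatures actually present, which is why small differences, such as a warm udder against a cooler body, remain visible.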

Identification devices

Once the system can "see" the cows, it should be able to distinguish the individual animals. This is necessary to gather useful data about each cow from the footage and to communicate it to the farmer. Below, two technologies are given that are relevant to this issue and could potentially solve it.

RFID identification

One way to identify cows is to attach something to them that carries a unique ID and can transmit it. RFID technology, discussed in an earlier section, can be used for this. RFID tags could be attached to the cows and read at certain locations for identification. For example, placing just a few readers at the entrance is enough to have the cows "check in" to the barn; from that point on, they are tracked by the cameras.
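The "check-in" idea can be sketched as binding a tag ID to the anonymous camera track that is closest to the reader at the moment the tag is read. A minimal sketch under several assumptions: a hypothetical entrance reader that reports only a tag ID, a tracker that reports track IDs with positions in barn coordinates (meters), and a hypothetical 2 m reader range. The tag and track names are made up for illustration.

```python
import math


def bind_tag_to_track(tag_id, reader_xy, tracks, max_dist=2.0, bindings=None):
    """Assign an RFID tag to the anonymous camera track nearest to the reader.
    tracks: {track_id: (x, y)} positions in barn coordinates at read time.
    max_dist (m) is an illustrative assumption for the reader's range.
    Returns the updated {track_id: tag_id} mapping."""
    bindings = {} if bindings is None else bindings
    best, best_d = None, max_dist
    for track_id, (x, y) in tracks.items():
        d = math.dist(reader_xy, (x, y))
        if d <= best_d:
            best, best_d = track_id, d
    if best is not None:
        bindings[best] = tag_id
    return bindings


tracks = {"t1": (0.5, 0.4), "t2": (6.0, 3.0)}
print(bind_tag_to_track("NL-123", (0.0, 0.0), tracks))  # {'t1': 'NL-123'}
```

Once bound, the tag ID follows the track, so the cameras only need to keep the track alive rather than re-identify the cow in every frame.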

Collar with LED

Another possible solution is to attach a device to the cow that can be uniquely identified by a camera. The low cost and durability of LED lights make them a good option, and since one of our main goals is to keep the cost of the system down, this solution is also taken into account. The LED light can be integrated into the cows' collars. Current RFID collars require batteries and additional equipment to read the tags. This is not the case with the LED, which can be powered wirelessly.[32] The identity of each cow can be encoded as a blinking pattern of the LED light,[33] which can be detected using only the camera. This eliminates the need for complex or expensive technologies.
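The cited encoding scheme [33] is not reproduced here. As a minimal sketch of the general idea, assume each camera frame captures one bit (LED on = 1, off = 0) and that a cow's ID is sent as a fixed start marker followed by the ID as a 10-bit binary number. The start marker, the bit width and the brightness threshold are all hypothetical choices.

```python
PREAMBLE = [1, 1, 1, 0]  # hypothetical start marker
ID_BITS = 10             # supports IDs 0..1023 in this sketch


def encode_id(cow_id):
    """Blink pattern (one entry per frame) for a cow ID: start marker + ID in binary."""
    if not 0 <= cow_id < 2 ** ID_BITS:
        raise ValueError("ID out of range")
    bits = [int(b) for b in format(cow_id, f"0{ID_BITS}b")]
    return PREAMBLE + bits


def decode_id(brightness, threshold=128):
    """Recover the ID from per-frame LED brightness values (0-255): threshold
    each frame into a bit, then read the ID bits after the first start marker."""
    bits = [1 if v >= threshold else 0 for v in brightness]
    n = len(PREAMBLE)
    for i in range(len(bits) - n - ID_BITS + 1):
        if bits[i:i + n] == PREAMBLE:
            payload = bits[i + n:i + n + ID_BITS]
            return int("".join(map(str, payload)), 2)
    return None  # no complete pattern observed


pattern = encode_id(417)
frames = [30, 20] + [230 if bit else 25 for bit in pattern]  # simulated brightness
print(decode_id(frames))  # 417
```

Because the marker 1110 can also occur inside an ID, a decoder that starts listening mid-pattern could lock onto the wrong position; a practical scheme would need a marker that cannot appear in the payload, or a checksum. The sketch also assumes exactly one bit per frame; in practice each bit would be held for several frames so that the pattern survives timing differences between the LED and the camera.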

Software

Placing cameras in the barn is not enough, since decisions have to be made based on what they record. Suitable software must therefore be found or created to extract useful information from the recorded images. This kind of software is called machine vision. Machine vision technologies are evolving rapidly, and there are currently plenty of open-source solutions that can serve as a base platform for the software part of the project. After some research we decided to focus on two of them: OpenCV and YOLO. In this section, we give an overview of both projects and describe how each can be used for animal tracking.

OpenCV

OpenCV is an open-source machine vision library that offers rich functionality for applications that require 2D and 3D image and video processing. Its main focus is real-time computer vision, which is why it suits our needs. It is written in C++, but wrappers for other languages also exist, which helps the library spread. The library supports hardware acceleration on CUDA-capable GPUs; for demanding real-time workloads, a sufficiently powerful GPU may be needed. The library runs smoothly on most operating systems, such as Windows, Linux and macOS.

You Only Look Once (YOLO)

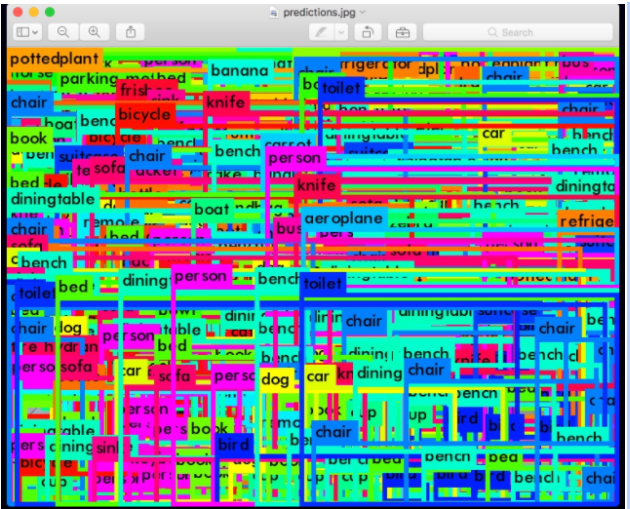

YOLO is a free, real-time object detection system based on the open-source neural network framework Darknet.[34] It is written in C and CUDA and supports both CPU and GPU computation. It works by applying a single neural network to the full image. The network divides the image into regions and predicts bounding boxes and probabilities for each region (Fig TBD). Predictions below a preset threshold are then discarded, and the rest are displayed as boxes around the objects. If the threshold is too low, many incorrect predictions are displayed and the image becomes cluttered, as shown in fig. TBD. Another useful aspect of YOLO is that it can be used on live data, as in our case. We decided to train YOLO on our own images of cows. To do so, a separate program, YOLO mark, is used, in which pictures can be loaded and boxes can be drawn around the objects of interest. The results of training the system are presented in the simulation section.
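The thresholding step described above can be illustrated as follows. This is not Darknet's code but a minimal sketch of confidence thresholding followed by non-maximum suppression (NMS), the post-processing that YOLO-style detectors commonly apply so that several overlapping boxes around the same cow collapse into one. Boxes are (x1, y1, x2, y2, score) in pixels; both thresholds are illustrative values.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2, ...)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, score_thresh=0.25, iou_thresh=0.45):
    """Drop boxes below score_thresh, then greedily keep the highest-scoring box
    and discard any remaining box that overlaps a kept box by more than iou_thresh."""
    candidates = sorted((b for b in boxes if b[4] >= score_thresh),
                        key=lambda b: b[4], reverse=True)
    kept = []
    for box in candidates:
        if all(iou(box, k) <= iou_thresh for k in kept):
            kept.append(box)
    return kept


raw = [
    (100, 100, 300, 200, 0.92),  # cow A
    (105, 98, 305, 205, 0.80),   # duplicate detection of cow A
    (400, 120, 600, 230, 0.75),  # cow B
    (50, 300, 90, 340, 0.10),    # low-confidence noise
]
print(filter_detections(raw))
# [(100, 100, 300, 200, 0.92), (400, 120, 600, 230, 0.75)]
```

Lowering `score_thresh` lets more weak predictions through, which is the cluttered result described above; `iou_thresh` controls how close two cows may stand before one of their boxes is suppressed.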

Costs

Costs are essential to this project, as they are a driving force that may persuade farmers to replace their current surveillance systems, or even old-school manual behavior monitoring, with a camera-based one.

To be more precise, two financial factors affect farmers' opinions the most: expenses and profit. Lower expenses and higher profit are what entrepreneurs look for in a new offer. However, it is hardly possible to estimate the increase in output at the current stage of development, which leaves only one factor to consider: the outlay.

First, consider the present situation: the most common automated surveillance system today requires a controller, readers, antennas, cables, positioning beacons, collars and collar sensors. The camera-based surveillance system replaces the beacons, collars and their sensors with only two kinds of devices: RFID tags and cameras.

To make this concrete, consider an actual example. Hoeve Boveneind in Herwijnen uses exactly the system described above, which in their case is scaled for 140 cows. The elements that the new system would replace cost 34,150 euro in total: 12,750 euro for 10 positioning beacons, 4,600 euro for the collars and 16,800 euro for their sensors.

An active RFID tag costs around 14 euro on average, which for 140 cows comes to 1,960 euro. This leaves 32,190 euro to spend on cameras for the new system to cost no more than the current one. With an average camera price of around 300 euro, up to 107 cameras could be installed while still keeping expenses lower. Moreover, this is only the upper bound for breaking even; the number of cameras actually needed is expected to be significantly smaller, which would result in an even bigger cost difference.
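The break-even arithmetic above can be written out so that the inputs are easy to change for another farm. All figures come from the text; like the text, the comparison covers only the replaced components and leaves out installation, cabling and the back-end computer.

```python
# Components replaced at Hoeve Boveneind (euro), as quoted above
beacons, collars, collar_sensors = 12750, 4600, 16800
replaced_total = beacons + collars + collar_sensors  # 34150

cows = 140
rfid_tag_price = 14  # average active RFID tag price (euro)
camera_price = 300   # average camera price (euro)

rfid_total = cows * rfid_tag_price               # 1960
camera_budget = replaced_total - rfid_total      # 32190
max_cameras = camera_budget // camera_price      # 107, rounded down to stay under budget

print(replaced_total, rfid_total, camera_budget, max_cameras)
# 34150 1960 32190 107
```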

Overall, the current estimate is not as accurate as one might want, simply because it is hard to predict exact values at this stage. However, the outline provided is very promising for cutting the up-front price, which would significantly lower the threshold for adopting automation on cow farms.

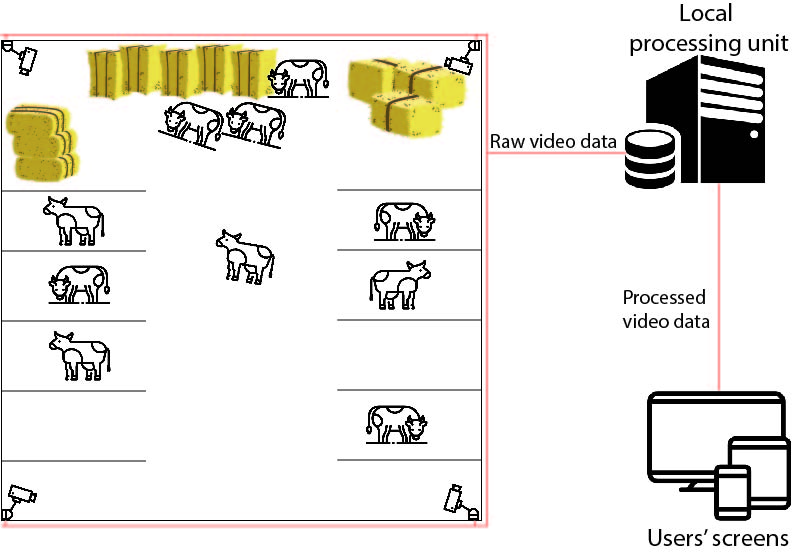

System specification

The system is divided into three parts: the camera network, the back-end and the user interface. The camera network has to be placed in the barn such that the whole area can be observed. Every camera runs 24/7 and captures footage that is fed into the back-end. The back-end gathers the footage from all cameras and stitches it together into one image, which it feeds into a neural network for detection. Together with an identification system, this allows each cow to be tracked individually. From the tracks, the system derives measurements over the past few hours, such as activity/distance traveled, time spent in the feeding zone, time spent in the cubicle zone, etc. These measurements, together with the live camera feed, can then be sent to the user, who can thus monitor his/her cows. The system will also be able to detect anomalies, like sickness and heat, and notify the user when a cow needs attention.
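One of the measurements named above, distance traveled, follows directly from the tracked positions. A minimal sketch, assuming positions in barn coordinates (meters) sampled at a fixed interval, and a hypothetical 0.2 m minimum step so that small detection jitter around a standing cow is not counted as walking.

```python
import math


def distance_traveled(positions, min_step=0.2):
    """Sum the distances (m) between consecutive positions, ignoring steps
    shorter than min_step, which are treated as detection jitter.
    min_step is an illustrative assumption."""
    total = 0.0
    for p, q in zip(positions, positions[1:]):
        step = math.dist(p, q)
        if step >= min_step:
            total += step
    return total


# Jitter around (0, 0), then two 5 m moves
track = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
print(distance_traveled(track))  # 10.0
```

The jitter threshold matters: without it, a cow lying still for hours would accumulate distance from detection noise alone, which would distort exactly the activity signal used for heat detection.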

The back-end will be a computer, either on-site or, if the farm has an internet connection, in the cloud. The computer needs to stitch together the footage of several cameras and feed it into a neural network for detection. It also needs to perform identification, gather useful metrics and notify the user in case of an anomaly. The system could run entirely offline, but in that case the user cannot be notified without being on site.

In the user interface, the user can view the various measurements made for each cow, for example a graph of activity levels. The user can also see a list of all cows that need attention, each with a level of urgency. The footage from all cameras is also available from this interface, in case the user wants to check on his/her cows. If the back-end is online or has internet access, the interface can be accessed from multiple devices (computers, smartphones, etc.). This gives the user maximum usability when he/she is not near the barn or a computer.

In the following subsections we elaborate on each part of the system: the camera network, cow detection, cow identification, metric gathering and anomaly detection. In the simulation section we explain the simulation we ran to showcase one particular part of the specification, namely cow detection. This simulation was done using YOLO, the Darknet framework and around 7000 hand-labeled pictures.

Camera network

For our setup, we need cameras that capture live footage of the cows in the barn. Requirement 5 states that we want to track each cow constantly, so we need several cameras that together cover the entire barn. In the design choices we decided that the cameras should be mounted on the ceiling, which gives the best unobstructed view of the cows. The cameras are placed as high as possible, as long as there are no obstructions, so that each one sees the largest possible part of the barn. From our farm visits we know that most barns are relatively high (around 5 meters), so this is not hard to realize.

There are several types of cameras, but for our case we should choose a camera that records in 4K, supports both day and night recording, and has a good price. For example, an IR Dome IP67 camera meets our requirements. The price is between 250 and 350 euros per camera, which is not very expensive.

Cow detection

When the camera records footage of the barn, we need to detect where the cows are, that is, detect the cows and their positions in the footage. For this, we will take frames at certain intervals (say every 2 seconds) from the video and feed them into a (convolutional) neural network. The network has to output where it detects cows, in the form of bounding boxes. This neural network has to be trained beforehand on images in which the cows are labeled with bounding boxes. Such images can be obtained from many (online) resources, such as [1].
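The frame-sampling step can be sketched as follows. This is a minimal illustration, assuming frames arrive as any iterable (for example, decoded one at a time from a camera stream) and that the stream's frame rate is known; the function name `sample_frames` is hypothetical, and the 2-second default simply mirrors the example interval above.

```python
from typing import Iterable, Iterator, Tuple, TypeVar

Frame = TypeVar("Frame")


def sample_frames(
    frames: Iterable[Frame], fps: float, interval_s: float = 2.0
) -> Iterator[Tuple[float, Frame]]:
    """Yield (timestamp_s, frame) pairs, keeping one frame every `interval_s` seconds.

    `frames` is any iterable of decoded video frames; `fps` is the stream's frame rate.
    """
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    step = max(1, round(fps * interval_s))  # number of frames between two samples
    for index, frame in enumerate(frames):
        if index % step == 0:
            yield index / fps, frame
```

With OpenCV, the frames could come from a `cv2.VideoCapture` read in a loop (`ok, frame = cap.read()`), and the frame rate from `cap.get(cv2.CAP_PROP_FPS)`; each sampled frame would then be passed to the detection network.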

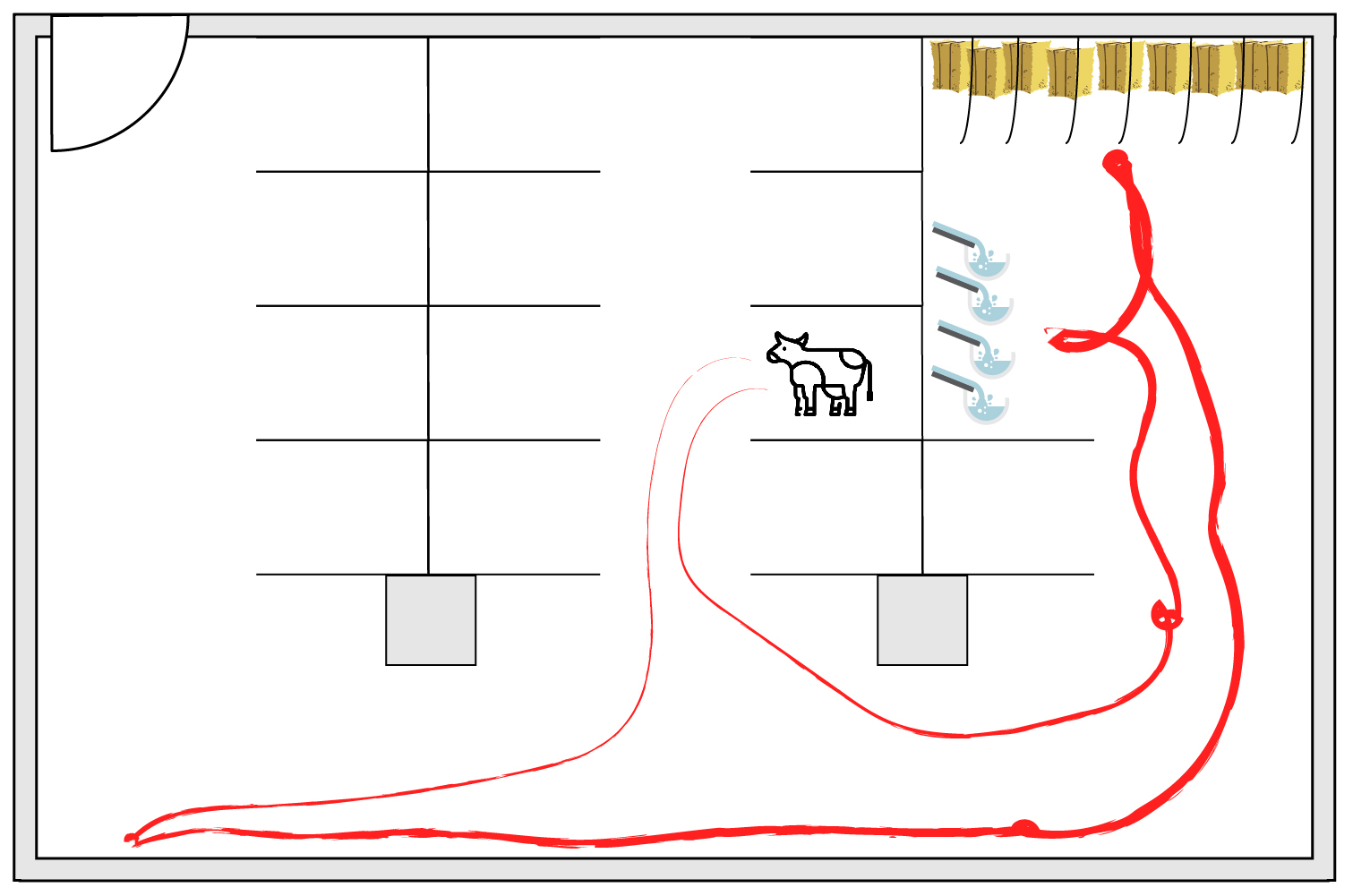

After detecting cows with bounding boxes in the image, we need to find each cow's position as coordinates in order to use the data. Since the cameras point top-down, this is not very difficult: we map the "pixel coordinates" of the footage to "barn coordinates", so that we know where each cow in the image is located in the barn. These positions can later be used to track a cow's position over time and to measure activity and other useful metrics.
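A minimal sketch of the pixel-to-barn mapping, assuming each ceiling-mounted camera looks straight down at an axis-aligned rectangle of the barn floor whose position and size are known, and ignoring lens distortion. The `CameraView` calibration record and its fields are hypothetical; a cow's position is taken as the center of its bounding box.

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


@dataclass(frozen=True)
class CameraView:
    """Hypothetical calibration of one top-down camera (no distortion, no perspective)."""
    image_width_px: int
    image_height_px: int
    origin_m: Tuple[float, float]  # barn coordinates of the image's top-left corner
    size_m: Tuple[float, float]    # width and depth of the floor area in view, in meters

    def to_barn(self, x_px: float, y_px: float) -> Tuple[float, float]:
        """Linearly scale a pixel position to barn coordinates in meters."""
        scale_x = self.size_m[0] / self.image_width_px
        scale_y = self.size_m[1] / self.image_height_px
        return (self.origin_m[0] + x_px * scale_x, self.origin_m[1] + y_px * scale_y)


def box_center_to_barn(view: CameraView, box: Box) -> Tuple[float, float]:
    """Map the center of a detected bounding box to barn coordinates."""
    left, top, right, bottom = box
    return view.to_barn((left + right) / 2, (top + bottom) / 2)
```

For example, `box_center_to_barn(CameraView(1000, 500, (10.0, 0.0), (20.0, 10.0)), (400, 200, 600, 300))` maps the box center to roughly (20.0, 5.0) m. If the cameras are not perfectly top-down, a perspective transform (for instance one estimated with OpenCV's `cv2.getPerspectiveTransform` from four known floor points) would be a more general choice than this linear scaling.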

Cow identification

To keep accurate tracking data for each individual cow (Requirement 6), we need some way to identify a cow. Furthermore, we need to communicate this data to the farm workers in such a way that they know which data belongs to which cow (Requirement 3). This is usually done by communicating the cow-ID, the unique number every cow has. This ID is usually shown on an ear tag. Farm workers can easily check the ear tags to identify a cow, but obtaining the ID automatically is trickier for our system. To solve this problem, we came up with two solutions that could detect the cow-ID.

One solution is to attach an additional RFID tag to the ear tag. Since cows already have to wear an ear tag (by law in the Netherlands [35]), this causes no additional disturbance to the cow and therefore does not break Requirement 10. These RFID tags can then be used to find a cow's ID, since each tag is paired with the ear tag and the corresponding ID. We propose to place RFID readers at each entrance to the barn, so that the cows always pass a reader when entering the barn. You can see this as the cows "checking in". The camera system can then match the detection of a new cow to the closest RFID reader and retrieve the cow most recently seen at that reader. This method makes it possible to match detected cows directly with their IDs without causing additional harm. A further benefit is that the cows can enter and leave the barn whenever they please. The downside is the additional cost and work of buying and installing the RFID tags and readers.
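The "check-in" matching described above could look roughly like this. It is a sketch under assumptions not in the original: each reader logs `(timestamp, cow_id)` reads, reader positions are known in barn coordinates, and a read only counts if it happened at most `max_age_s` seconds (a hypothetical 30 s default) before the new cow was first detected. All names are illustrative.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # barn coordinates in meters


@dataclass
class RfidReader:
    """Hypothetical RFID reader mounted at one barn entrance."""
    name: str
    position: Point
    reads: List[Tuple[float, str]] = field(default_factory=list)  # (timestamp_s, cow_id)

    def latest_cow(self, at: float, max_age_s: float) -> Optional[str]:
        """Most recent cow ID read no later than `at` and at most `max_age_s` earlier."""
        recent = [(t, cow) for t, cow in self.reads if at - max_age_s <= t <= at]
        return max(recent, key=lambda read: read[0])[1] if recent else None


def identify_new_track(
    position: Point, seen_at: float, readers: List[RfidReader], max_age_s: float = 30.0
) -> Optional[str]:
    """Give a newly detected cow the ID last read at the reader nearest to it."""
    nearest = min(readers, key=lambda r: math.dist(r.position, position))
    return nearest.latest_cow(seen_at, max_age_s)
```

This rule is deliberately simple: if two cows pass the same reader within a few seconds of each other, it can assign the wrong ID, so a real system would also need to keep track of which IDs are already attached to tracked cows.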

Another solution is to make full use of the cameras already placed in the barn. The footage could be used to identify the cows by taking advantage of their unique features: body patterns of cows can be used for identification.[36] By training a (convolutional) neural network on each cow's pattern, the system could find the corresponding cow-ID from frames taken from the footage. This would require many images of each cow's body pattern to train an accurate network. This is a downside, since it requires the additional work of taking pictures and retraining the model for every new cow, which might clash with Requirement 11 (the system should be implementable by a layman). To avoid taking images and retraining the model for every new cow, one could instead train the network on certain symbols. These symbols could then be painted on the cows' backs, one per cow, such that they are visible from the ceiling-mounted cameras. Using a background subtraction method, one could extract the regions with the symbols for every cow and then identify them.[37]
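To illustrate the background subtraction idea, here is a toy sketch on small grayscale frames stored as nested lists. It assumes a running-average background model, a fixed intensity threshold (30, a hypothetical value) and a single region of interest per frame crop; it only extracts the changed region and does not implement the symbol classifier itself. In practice, OpenCV's `cv2.createBackgroundSubtractorMOG2` offers a more robust model.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]  # grayscale frame: rows of pixel intensities


def update_background(background: Image, frame: Image, alpha: float = 0.05) -> Image:
    """Running-average background model: blend the new frame in with weight `alpha`."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]


def foreground_mask(background: Image, frame: Image, threshold: float = 30.0) -> List[List[bool]]:
    """Mark pixels that differ from the background by more than `threshold`."""
    return [[abs(f - b) > threshold for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """(left, top, right, bottom) around all foreground pixels, or None if there are none."""
    coords = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not coords:
        return None
    xs, ys = zip(*coords)
    return min(xs), min(ys), max(xs), max(ys)
```

The extracted region would then be cropped from the frame and passed to a network trained on the painted symbols.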

Metric gathering

With the cow positions obtained from the detection and identification system, we then need to collect useful metrics for identifying anomalies, like illness and heat. We know a cow's position at every time interval, and from this data we can determine certain characteristics. From the positional data, we can compute the distance traveled over a certain time frame, which we call the activity. When we combine the positions with a map of the barn, we can also find the time spent in certain regions or zones, like the feeding zone or the cubicle. This can be very informative about a cow's health and behavior; for example, excessive time spent in the cubicle might indicate general malaise.
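Both metrics can be computed directly from a cow's list of timestamped positions. This minimal sketch assumes positions in meters, zones described as non-overlapping axis-aligned rectangles (a simplification of a real barn map), and credits each interval between two samples to the zone the cow was in at the start of that interval.

```python
import math
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float]       # (timestamp_s, x_m, y_m) for one cow
Zone = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in meters


def distance_traveled(track: List[Sample]) -> float:
    """Activity: total distance between consecutive positions, in meters."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(track, track[1:]))


def time_in_zones(track: List[Sample], zones: Dict[str, Zone]) -> Dict[str, float]:
    """Seconds spent in each named zone; each interval counts for the zone of its start point."""
    totals = {name: 0.0 for name in zones}
    for (t0, x, y), (t1, _, _) in zip(track, track[1:]):
        for name, (x_min, y_min, x_max, y_max) in zones.items():
            if x_min <= x <= x_max and y_min <= y <= y_max:
                totals[name] += t1 - t0
    return totals
```

With samples every 2 seconds, as in the detection step, the error from crediting whole intervals to the starting zone stays small.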

These metrics will be calculated as averages over a certain time frame, to make them less sensitive to sudden movements and behavior. For example, activity levels can be reported every hour, which gives a good indication of the average activity in the recent past. Time spent in certain zones must be measured over longer time frames (say a day), since it can be heavily influenced by the time of day (sleeping time, feeding time, etc.) and the cow's current behavior.
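Aggregating activity per time window can be sketched like this, assuming the same `(timestamp_s, x_m, y_m)` samples as before and crediting each step to the window in which it starts. The function name is hypothetical; hourly windows are the default, and `window_s=86400.0` would give daily totals instead.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float]  # (timestamp_s, x_m, y_m) for one cow


def activity_per_window(track: List[Sample], window_s: float = 3600.0) -> Dict[int, float]:
    """Distance traveled per time window (hourly by default), keyed by window index.

    Each step between consecutive samples is credited to the window in which it starts.
    """
    totals: Dict[int, float] = defaultdict(float)
    for (t0, x0, y0), (_, x1, y1) in zip(track, track[1:]):
        totals[int(t0 // window_s)] += math.dist((x0, y0), (x1, y1))
    return dict(totals)
```

Summing per window, rather than looking at individual 2-second steps, is what makes the metric insensitive to single sudden movements.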

Anomaly detection

After gathering the metrics described in the previous subsection, namely activity and time spent in certain zones, we can try to detect whether a cow is acting strangely. The main things we want to detect are heat and illness, since these are most important for the productivity of the farm. Heat is very important to detect, since it means the cow can be inseminated as early as possible, which allows her to produce more milk. Illness can negatively affect productivity if a cow becomes less able or unable to produce milk.

For heat detection, we believe activity will be the most important indicator, because the most prominent behavioral change in this period is an increase in activity and "restlessness". Since these periods can be as short as 6 hours, it is also important to detect them early, before the period is over. Since the other metrics need to be averaged over longer timespans, activity is the main indicator for heat. We want to detect any abnormal increase in activity compared to the cow's normal activity levels.
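One simple way to flag an "abnormal increase" is a one-sided z-score against the cow's own history. This is a sketch, not a validated heat detector: it assumes `history` holds this cow's previous hourly activity values, and the 3-standard-deviation cutoff is a hypothetical default that would need tuning on real farm data.

```python
import statistics
from typing import Sequence


def is_activity_spike(history: Sequence[float], current: float, z_threshold: float = 3.0) -> bool:
    """Flag an abnormal increase in hourly activity relative to this cow's own history."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return current > mean
    # One-sided test: only increases count, since heat shows up as extra restlessness.
    return (current - mean) / spread > z_threshold
```

Because the check runs on hourly values, a spike can be flagged within the first hours of a heat period. Comparing against the same hour of previous days, rather than all hours, would reduce false alarms caused by the daily rhythm of feeding and resting.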

For illness we can use activity as well as the time spent in zones. We believe both will give us a good indicator of whether a cow is healthy or not. In this case, we simply look for any anomaly that cannot be explained by heat. For example, an increase/decrease in time spent in the food zone can indicate that the cow is not feeling well and needs attention. An increase in the time spent in the cubicle might mean the cow is ill. Any abnormal deviation from the cow's normal measurements can indicate that the cow will need attention from the farmer and/or a veterinarian.
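For illness, deviations in both directions matter. The sketch below flags zones whose daily time deviates from the cow's own baseline and then applies a deliberately simple rule: a zone anomaly counts as possible illness only when no heat has been detected. The threshold of 2.5 standard deviations and all names are hypothetical assumptions, not values from our design.

```python
import statistics
from typing import Dict, List, Sequence


def zone_anomalies(
    history: Dict[str, Sequence[float]], today: Dict[str, float], z_threshold: float = 2.5
) -> List[str]:
    """Zones whose daily time deviates (up or down) from the cow's own baseline."""
    flagged = []
    for zone, past in history.items():
        if len(past) < 2 or zone not in today:
            continue  # no usable baseline or no measurement for this zone
        mean = statistics.mean(past)
        spread = statistics.stdev(past)
        deviation = today[zone] - mean
        if (spread == 0 and deviation != 0) or (spread > 0 and abs(deviation) / spread > z_threshold):
            flagged.append(zone)
    return flagged


def possible_illness(zone_flags: List[str], in_heat: bool) -> bool:
    """Simple rule: an anomaly that is not explained by heat warrants attention."""
    return bool(zone_flags) and not in_heat
```

For example, a cow that normally spends about 300 minutes per day in the feeding zone but only 200 minutes today would be flagged, and if she is not in heat, the farmer would be notified.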

Simulation

Training set

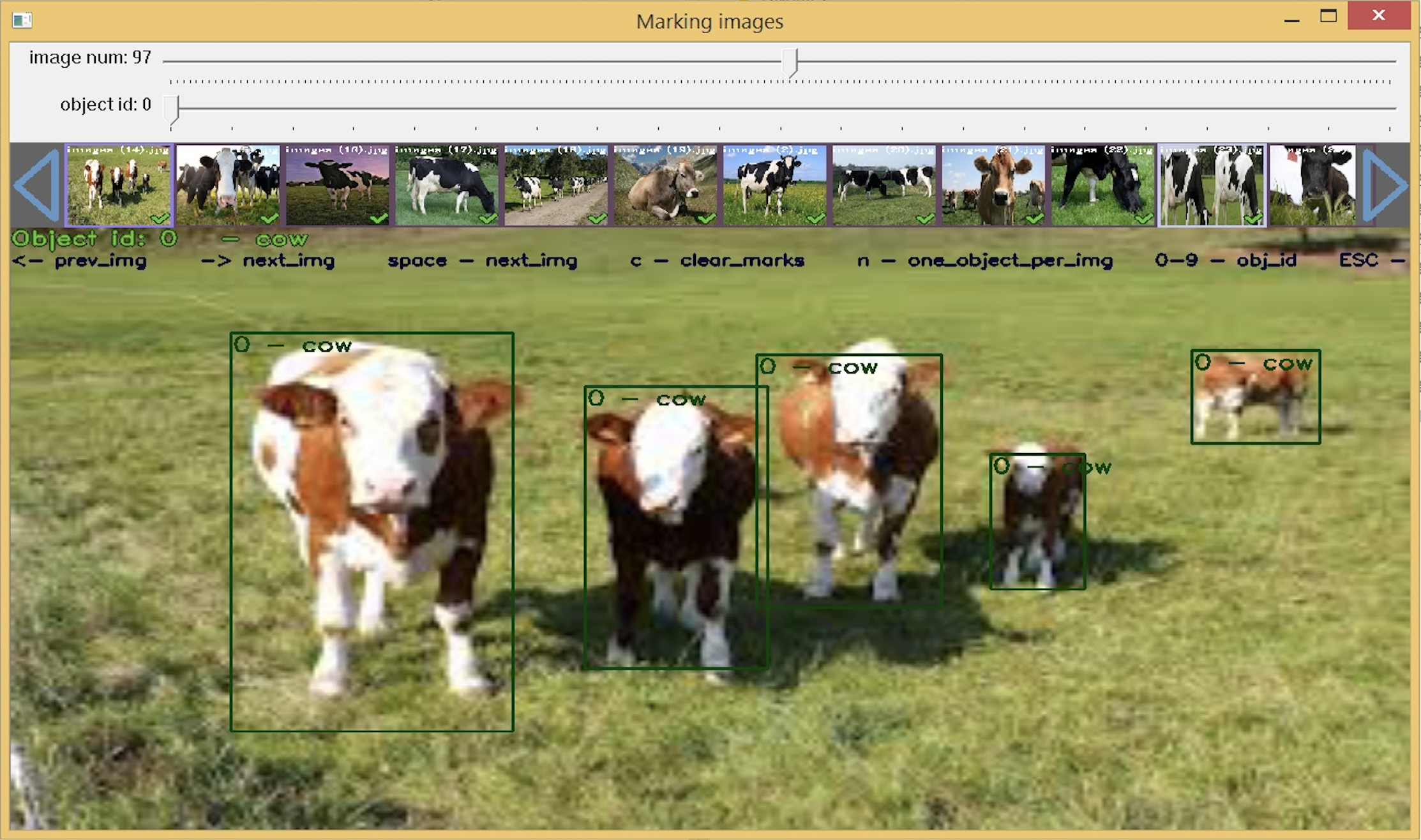

In order to develop the core component of the system, namely the computer vision part that performs animal recognition and tracking, we have to train the system to perform this task. For this purpose, we collected more than 7,000 images of cows taken from different angles, in different environments and under different conditions. YOLO uses these images to train the underlying artificial neural network of the Darknet framework. The YOLO Mark labelling software is used to draw bounding boxes around objects, explicitly informing the neural network about the classes of interest. YOLO Mark produces an additional .txt file per picture that describes the bounding boxes in a format that Darknet can process. The whole labelling process is manual.

Note that if labelling is done carelessly and the bounding boxes are drawn wrong too often (too much padding around the object, or important parts of the object cut off), the detected bounding boxes will be of poor quality, which can reduce the final accuracy of the detection system. Also, since it is crucial for the system to detect partially visible objects, we have to include images in our set that represent this as well. In that case, cutting corners off some images is a good idea.

After labelling an image, YOLO Mark will produce a .txt file describing all objects present in the image, namely the class of an object and the coordinates of its bounding box.

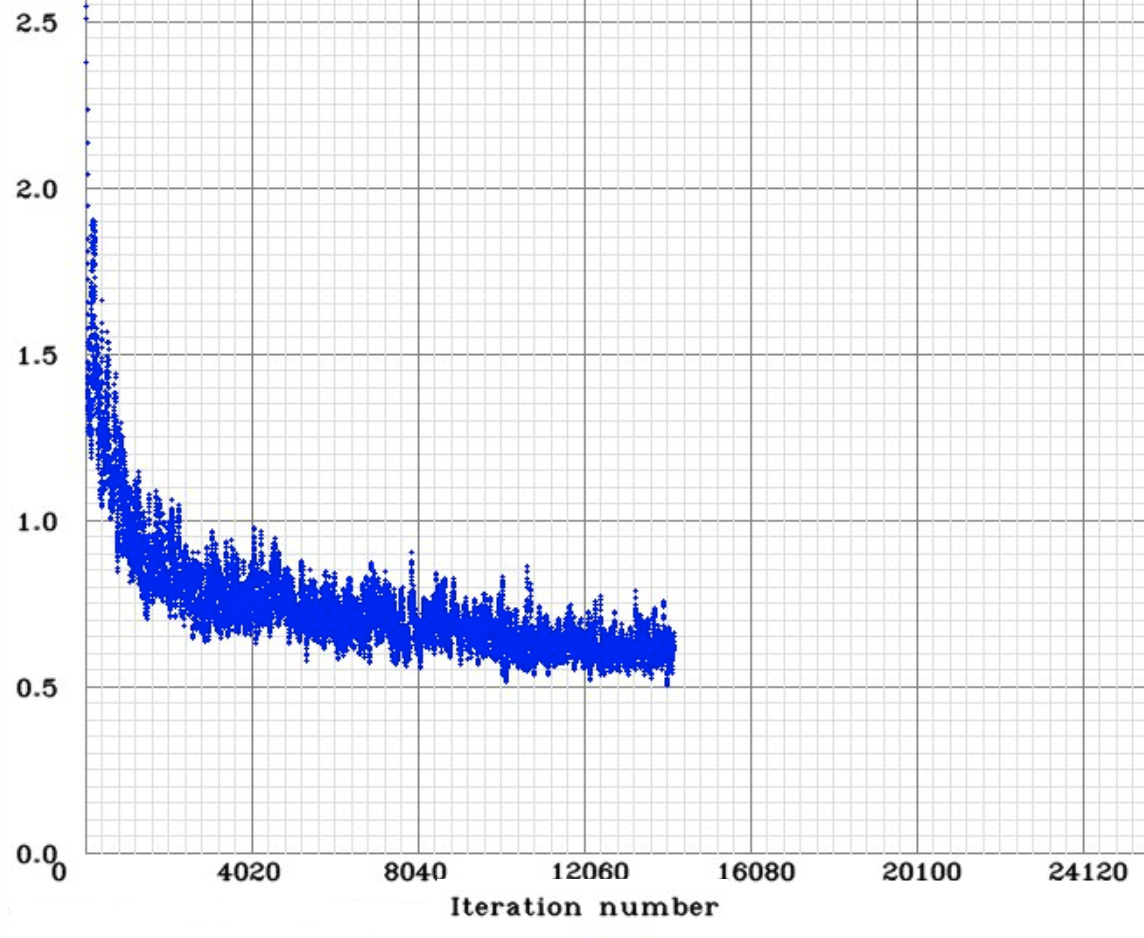

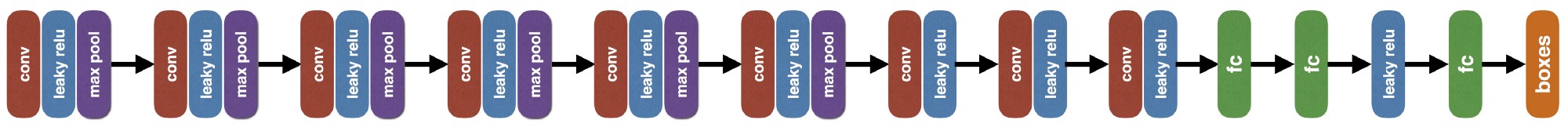
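Each line of such a .txt file has the form `<class_id> <x_center> <y_center> <width> <height>`, with the box center and size given relative to the image width and height. Since our model has a single class, every line starts with `0`. The helper names below are hypothetical; the sketch converts a pixel box (left, top, right, bottom) to a label line and back, which is useful for checking labels or for converting labels from other annotation tools.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Encode a pixel box as '<class> <x_center> <y_center> <width> <height>' (normalized)."""
    left, top, right, bottom = box
    x_center = (left + right) / 2 / img_w
    y_center = (top + bottom) / 2 / img_h
    width = (right - left) / img_w
    height = (bottom - top) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int) -> Tuple[int, Box]:
    """Decode one label line back into (class_id, pixel box)."""
    class_token, *values = line.split()
    xc, yc, w, h = (float(v) for v in values)
    xc, w = xc * img_w, w * img_w
    yc, h = yc * img_h, h * img_h
    return int(class_token), (xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)
```

For a 400×500 image with a cow at pixels (100, 50, 300, 250), `to_yolo_line(0, (100, 50, 300, 250), 400, 500)` gives `"0 0.500000 0.300000 0.500000 0.400000"`.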

Learning

Once all images in the training set are labeled, training can start. As mentioned earlier in the Software section, the YOLO detection system is our choice for detection and classification. YOLO uses OpenCV as a dependency to interact with video files, e.g. to draw bounding boxes around detected objects, hence we use it as well. Note that we do not explain the internals of YOLO; we simply use the system as a framework through its public API and the advanced samples from its documentation. For more information about YOLO, consult YOLO [38] and YOLO9000 [39].

The YOLO approach to object detection consists of two parts: the neural network part, which predicts a vector from an image, and the post-processing part, which interprets that vector as box coordinates and class probabilities.
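Part of this post-processing, in YOLO as in most detectors, is non-maximum suppression (NMS): when several predicted boxes cover the same cow, only the most confident one is kept. The following is a generic greedy NMS sketch, not Darknet's own code; the 0.45 overlap threshold is an assumed value.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)
Detection = Tuple[Box, float]            # (box, confidence)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections: List[Detection], iou_threshold: float = 0.45) -> List[Detection]:
    """Greedy NMS: keep the most confident box, drop boxes overlapping it too much, repeat."""
    remaining = sorted(detections, key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining if iou(best[0], d[0]) < iou_threshold]
    return kept
```

Note that a strict overlap threshold can also suppress a genuine second cow standing very close to the first, which is one more reason why the top-down camera position (less overlap between cows) helps detection.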

For this project, we used the tiny YOLO configuration with some changes for our specific problem. Namely, we set the number of classes to 1 and adjusted the number of filters in the last convolutional layer accordingly. Overall, the configuration consists of 9 convolutional layers and 3 fully connected layers. Each convolutional layer consists of convolution, leaky ReLU and max-pooling operations. The first 9 convolutional layers can be understood as the feature extractor, whereas the last three fully connected layers can be understood as the “regression head” that predicts the bounding boxes.

The final model was not built completely from scratch. For training, we used convolutional weights that were pre-trained on Imagenet for the Extraction model.

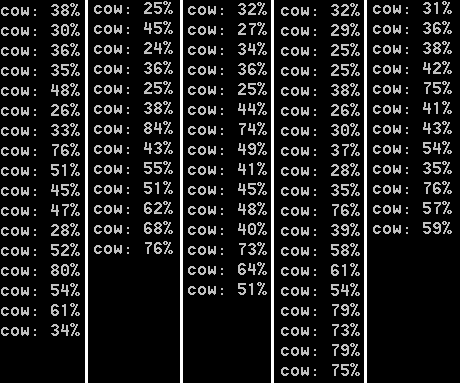

Result

In order to verify the model, we fed a video from the actual farm into the network to check its accuracy. The result can be seen in this video.

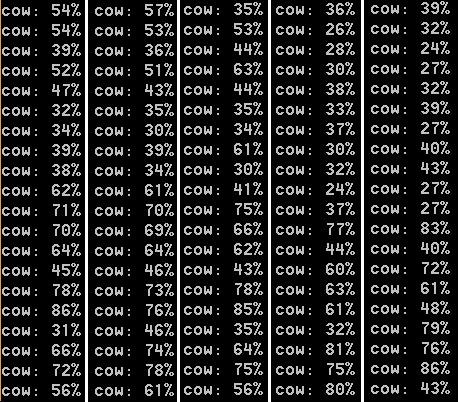

One can see that the model draws accurate bounding boxes and is quite precise in detecting and classifying cows. The figure below presents the classification results. It shows 5 snapshots taken at different times while the network processed the video mentioned before. As you can see, the model outputs high confidence percentages for cows in the foreground of the camera's viewport, but the results for partially visible cows or cows in the background are much lower, varying between 20% and 40%.

However, the number of detected and classified cows can vary drastically during the runtime, as you can see in the figure below.

Regarding the results of the simulation, we would like to make several remarks:

- The position of the camera differs from the one we consider in the project. As mentioned in the Camera position section, we expect cameras to be mounted just under the ceiling and to point straight down. This approach minimizes the chance that cows overlap, which would make the detection procedure more complex.

- The quality of the video is lower than what we expect the system to receive. For example, the barn door is open, and light coming from outside masks several cows standing in that area.

- Only a single camera is used. As mentioned in the Camera network section, our design requires several cameras to evenly cover the whole barn area.

- We were limited in the time available for the training phase. More time would allow us to train the model longer and, with a proper configuration, obtain better results.

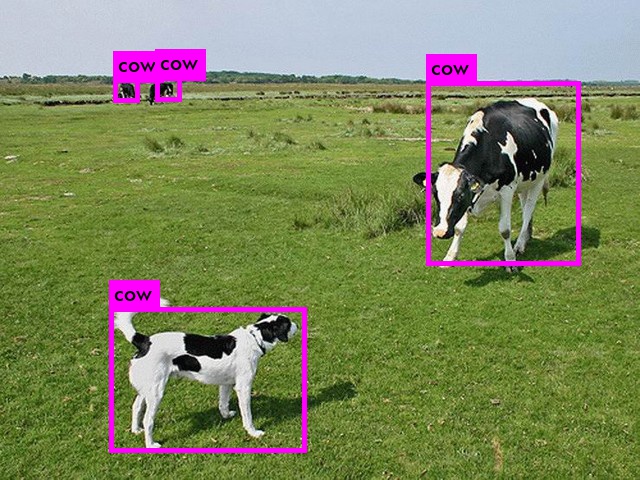

It is worth noting that the model was trained to classify only a single class of objects (cows), so it is unaware of any other type of object. This can cause inconsistencies, as shown in the figure.

Here, the network falsely classified a dog as a cow. This happened because the dog has a coloring and spot pattern similar to those of the cows we used to train our model. There are several ways to solve this issue:

- The first solution requires no extra time: we can simply raise the classification threshold. In this particular case, the neural network reports a 25% chance of the dog being a cow, so raising the threshold to 30% would discard this false positive. However, it would also discard some of the partially visible cows, for which the network reports only 20-40%.

- A more robust solution would be to train the network on different object classes, say dogs, pigs, cats, etc., so that the model can distinguish between them and discard the classes our system is not interested in. Note that this requires more time for labelling and for the actual training phase.

- A third option is to add negative examples: images that contain objects similar to cows but are left unlabeled. This could be a good way to remove false positives, but it also requires extra time for gathering these images and for training.
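The trade-off of the first option can be made concrete with a small filter over (name, confidence) pairs. Only the dog's 25% and the 20-40% range for background cows come from our results; the other numbers and names below are illustrative. Darknet's command-line detector accepts a `-thresh` option for the same purpose.

```python
from typing import List, Tuple

Detection = Tuple[str, float]  # (illustrative object name, confidence in [0, 1])


def filter_detections(detections: List[Detection], threshold: float) -> List[Detection]:
    """Keep only detections whose confidence reaches `threshold`."""
    return [d for d in detections if d[1] >= threshold]


# Illustrative frame: one clear cow, two weak background cows, and the misclassified dog.
frame_detections = [
    ("cow_foreground", 0.92),
    ("cow_background", 0.35),
    ("cow_partial", 0.22),
    ("dog", 0.25),
]

for threshold in (0.20, 0.30, 0.40):
    kept = filter_detections(frame_detections, threshold)
    print(threshold, [name for name, _ in kept])
```

At 30% the dog disappears, but so does the partially visible cow at 22%; at 40% only the foreground cow remains. This is why the second and third options are the more robust fixes.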

Conclusion

In this report, we have outlined a system for tracking cows and identifying illness and heat. The purpose was to help farmers, increase a farm's productivity, and improve cow wellbeing. We first did thorough background research on the state of the art in animal monitoring systems. We found that there is still much to be gained in this sector, since most detection was done either manually or with an expensive sensor system.

To gain more insight into farms, we visited two farms together with Lenny van Erp, a lector in Precision Livestock Farming. We were able to ask many questions and gain useful experience of the (dairy) farming sector. This was very useful for specifying the USE aspects of our project, namely the user, society and enterprise wishes and requirements for our system.

We specified a system that can track and monitor cows using cameras. The footage is processed to obtain location information (coordinates) for each cow at every timestep. This location information is used to track the cow's path through the barn. From this path, we derive measurements such as activity levels and time spent in certain zones. From these measurements, we will be able to detect illness and heat in cows. If the system is implemented well, we believe it will be able to

Future improvements