Embedded Motion Control 2017 Group 4: Difference between revisions


Revision as of 11:24, 9 June 2017

Reports

Group 4

| M.R.M. Beurskens | 0843080 |

| N.H.J. Janssen | 0842075 |

| M.C.W. Schouten | 0869793 |

| J.A.E. Verest | 0844791 |

| M.S. de Wildt | 0776941 |

| R.H.M. Winters | 0854048 |

Initial design

Introduction

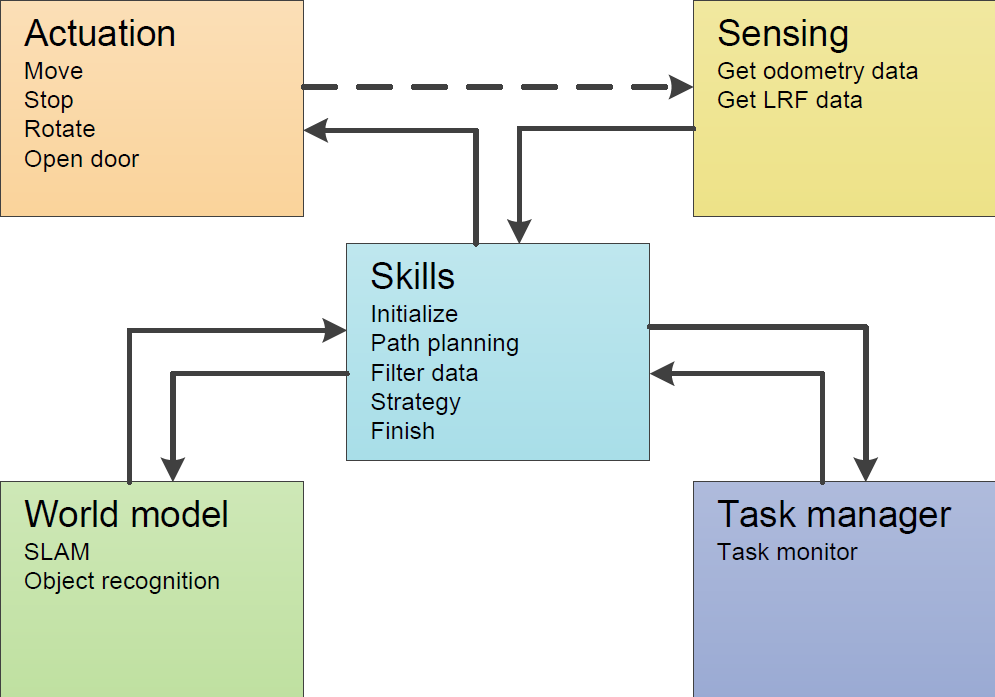

In this design chapter, the initial design of a maze solving robot is specified in terms of requirements, specifications, components, functions and interfaces. It is implemented on the PICO hardware platform. A task-skill-motion framework is used to describe the robot behaviour; this framework will be implemented in C++.

Requirements and specifications

PICO is required to autonomously navigate through a maze and find the exit without bumping into any of the walls. A door will be present in the maze, and must be opened by "ringing the bell" in a suitable location whilst standing still. Open spaces might be present, and the walls are approximately axis aligned. The maze might also feature loops. Therefore PICO is required to do the following:

- Recognize walls and corridors in a robust manner. The walls are axis aligned, however not perfectly. PICO should allow for an alignment error. It also has to stay away from the walls with a sufficient margin (e.g. 10 cm between robot and wall). Bumping into a wall will count as a failed attempt.

- Identify possible "door areas" in a robust manner. "The door will be situated at a dead end, a dead end being defined as a piece of wall with minimum length 0.5 meter and maximum length 1.5 meter with side-walls of at least 0.3 meter length". Alignment errors again need to be taken into account.

- Map the maze to determine whether the area has been visited and to build a world model. Odometry and a Laser Range Finder (LRF) are used to build a world map and determine the location in the world. The odometry and LRF data should be synchronized to avoid drift in world location and mapping (SLAM).

- Recognize corners as nodes in order to build an abstract and simplified maze representation consisting of nodes and lines.

- Carry out a maze solving strategy based on its world model, and compute and execute the corresponding route. The solving routine and the routing should be able to handle loops and open spaces and are based on the simplified maze representation. This enables more general strategy composition and faster computation.

- Open doors by ringing a bell in the door area. No part of PICO should be located outside the 1.3 meter range of the door and PICO should be standing still whilst ringing the bell. The door opens completely within 5 seconds after PICO's request. Once the door is completely open, PICO needs to pass the open doorway to find the exit of the maze.

- The software should be easy to set up and deploy, i.e. using git pull and with a single executable.

- Clear the maze as soon as possible, with a total available time of seven minutes for two attempts. A maximum speed of 0.5 m/s translational and 1.2 rad/s rotational is given.

- Do not stand still for more than thirty seconds when inside the maze.

- Recognize when the maze is solved.

Components

Pico features a Laser Range Finder (LRF) to detect surrounding obstacles and wheel encoders to measure odometry data. The wheel base is holonomic, enabling it to turn on the spot and drive in any direction. An emergency button is present on the remote. PICO runs on batteries and should always be charging when not driving around.

The software runs on an Intel i7 processor running Ubuntu 14.04 LTS. The Robot Operating System (ROS) is used as a framework for PICO's software. However, an intermediate software layer is provided which can be used to access sensor data and actuate the robot. Our own software layer is divided into five main components: actuation, sensing, world model, task manager and skill.

Functions

Configuration file

- Configuration: Defines a number of parameters. This is a header file, so it has no inputs or outputs.

Actuation

- Move: This function calculates the translational velocity in x and y direction and the rotational velocity corresponding to the direction vector and net velocity outputted by the path planning function. The calculated velocities are sent to the base actuators.

- Input: Direction vector, total velocity, Output: -.

- Stop: Stops the robot by sending the base velocities of magnitude zero.

- Input:-, Output:-.

- Rotate: Rotates the robot 90 or 180 degrees. It first checks that the robot has enough space to rotate without hitting the wall. If this is not the case, a call to Move is made first to move the robot away from the wall.

- Open door: Makes sure the robot stands still, rings the bell to open the door and waits until the door is opened.

- Input: LRF data, Output: -.
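As an illustration of the Move function, the sketch below turns a direction vector and a requested speed into base velocities. It is a minimal sketch, not the group's implementation: the names `BaseVelocity` and `computeBaseVelocity`, the robot frame (x forward, y left) and the proportional `turn_gain` used for the rotational velocity are all assumptions. Only the limits of 0.5 m/s and 1.2 rad/s come from the requirements.

```cpp
#include <algorithm>
#include <cmath>

// Speed limits taken from the requirements section.
constexpr double kMaxTranslational = 0.5;  // m/s
constexpr double kMaxRotational = 1.2;     // rad/s

// Hypothetical container for the three base velocities.
struct BaseVelocity {
    double vx;     // forward velocity, m/s
    double vy;     // sideways velocity, m/s
    double omega;  // rotational velocity, rad/s
};

// Hypothetical helper: (dx, dy) is the direction vector in the robot frame
// (assumed x forward, y left). turn_gain is an assumed tuning parameter that
// turns the robot towards the direction vector.
BaseVelocity computeBaseVelocity(double dx, double dy, double speed,
                                 double turn_gain = 1.0) {
    const double norm = std::hypot(dx, dy);
    if (norm < 1e-9) {
        return {0.0, 0.0, 0.0};  // no direction given: behave like Stop
    }
    // Clamp the requested speed to [0, kMaxTranslational].
    const double v = std::max(0.0, std::min(speed, kMaxTranslational));
    // Rotate proportionally to the heading of the direction vector, clamped.
    const double heading = std::atan2(dy, dx);
    const double omega = std::max(-kMaxRotational,
                                  std::min(turn_gain * heading, kMaxRotational));
    return {v * dx / norm, v * dy / norm, omega};
}
```

Clamping inside this helper means that no caller can exceed the allowed speeds, whatever the path planning function outputs.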

Sensing

- Get LRF data: Gets the data from the laser range finder.

- Input: -, Output: LRF data

- Get odometry data: Gets position data from omni-wheels.

- Input: -, Output: odometry data

World Model

- SLAM: Simultaneous Localization And Mapping, this updates the position of PICO in the world model using new sensor data.

- Input: LRF data, Odometry data, Output: World model

- Object recognition: This function will estimate which objects there are in the World Model using the geometries of the environment.

- Input: World model, Output: Objects with their coordinates.

Task Manager

- Task monitor: Determines which piece of code will be executed next.

- Input: -, Output: -

Skill

- Filter data: Moving average filter to minimize noise.

- Input: noisy data, Output: averaged data.

- Finish: Recognizes that the finish line has been crossed and that Pico can stop searching for the exit. The main script can be terminated.

- Input: World model, Output: -

- Strategy: Gives a general direction based on the maze solving algorithm that is chosen.

- Input: World model data, LRF data. Output: Target point.

- Path planning: Uses an Artificial Potential Field to output a direction vector considering the target point outputted by the strategy function and the LRF data.

- Input: Target point, LRF data. Output: Direction vector
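The Filter data function listed above is described as a moving average filter. A minimal sketch of such a filter for a single scalar signal is given below; the class name `MovingAverage` and the choice of window length are assumptions, since the text does not specify them.

```cpp
#include <cstddef>
#include <deque>

// Hypothetical moving average filter over the last `window` samples.
class MovingAverage {
public:
    // A window of 0 is treated as 1 to avoid dividing by zero.
    explicit MovingAverage(std::size_t window)
        : window_(window == 0 ? 1 : window) {}

    // Adds a noisy sample and returns the average of the samples in the window.
    double update(double sample) {
        buffer_.push_back(sample);
        sum_ += sample;
        if (buffer_.size() > window_) {
            sum_ -= buffer_.front();  // drop the oldest sample
            buffer_.pop_front();
        }
        return sum_ / static_cast<double>(buffer_.size());
    }

private:
    std::size_t window_;
    std::deque<double> buffer_;
    double sum_ = 0.0;
};
```

For LRF data, one such filter per beam could be used. A longer window suppresses more noise but makes the filtered value react more slowly to real changes.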

Interfaces

The software functions have multiple inputs and outputs and can be grouped by functionality. If data from the sensing component is used by a function from the skill component, the data is transferred between these components; such a transfer is referred to as an interface. A rough overview of the interfaces is shown in Figure 1. The dashed line indicates that the actions of the robot influence what the sensors measure.

Corridor Challenge

Repulsion & Angle correction

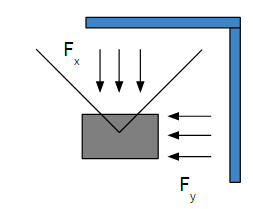

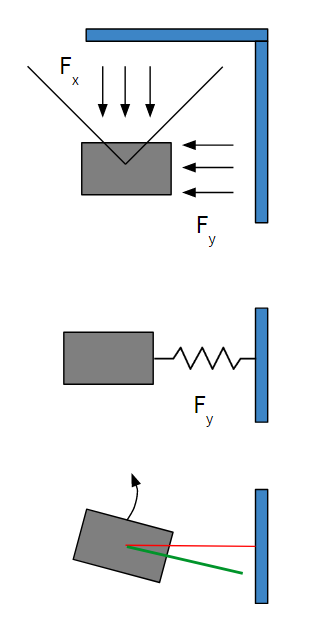

- The first thing that was considered for the corridor challenge was some kind of repulsion from the walls to make sure Pico does not bump into anything. For the repulsion a simple version of an Artificial Potential Field is considered, which is given by the formula below

[math]\displaystyle{ Insert formula here }[/math]

For a range of laser data in front of Pico, this formula is used to calculate a force Fx in the forward direction. To get a force Fy in the sideways direction, the remaining laser range data is used. Note that if the laser sees something at a very large distance or does not see anything at all, the returned distance is very small or even negative. Therefore all distances smaller than 0.01 meter are set to 10 meter.
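The replacement of invalid readings described above can be sketched as follows. The thresholds 0.01 m and 10 m come from the text; the function name `sanitizeRanges` and the representation of the scan as a `std::vector<double>` are assumptions.

```cpp
#include <vector>

constexpr double kMinValidRange = 0.01;     // m, readings below this are treated as invalid
constexpr double kReplacementRange = 10.0;  // m, value assigned to invalid readings

// Hypothetical helper: returns a copy of the scan in which very small or
// negative distances are replaced by a large distance, so that they do not
// produce a repulsive force.
std::vector<double> sanitizeRanges(std::vector<double> ranges) {
    for (double& r : ranges) {
        if (r < kMinValidRange) {
            r = kReplacementRange;
        }
    }
    return ranges;
}
```

Doing this before the force calculation keeps a spurious reading near zero from being interpreted as a wall that touches the robot.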

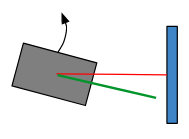

- For the angle correction all the laser data at the right side of Pico is considered. The smallest distance to the wall and the corresponding angle are found using a for-loop. After subtraction of 90 degrees, the resulting angle is used as input for the desired rotation speed. This approach can be interpreted as a torsional spring connected to Pico.
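The angle correction can be sketched as below. This is an illustration under assumptions, not the group's code: the function name `angleCorrection`, the `gain` parameter (the stiffness of the torsional spring) and the angle convention are hypothetical. The angles are assumed to be in radians and measured such that a wall parallel to Pico's heading has its closest point at 90 degrees.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// ranges[i] and angles[i] describe the right-side laser beams.
// Returns the desired rotational velocity; the sign convention is an assumption.
double angleCorrection(const std::vector<double>& ranges,
                       const std::vector<double>& angles,
                       double gain) {
    if (ranges.empty() || ranges.size() != angles.size()) {
        return 0.0;  // no usable data: do not rotate
    }
    // Find the beam with the smallest distance to the wall using a for-loop.
    std::size_t closest = 0;
    for (std::size_t i = 1; i < ranges.size(); ++i) {
        if (ranges[i] < ranges[closest]) {
            closest = i;
        }
    }
    // Subtract 90 degrees; the remainder is the misalignment with the wall.
    const double half_pi = std::acos(0.0);
    return gain * (angles[closest] - half_pi);
}
```

When Pico is aligned with the wall the closest beam lies at 90 degrees and the output is zero, which matches the torsional spring interpretation.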

Final design

Interface

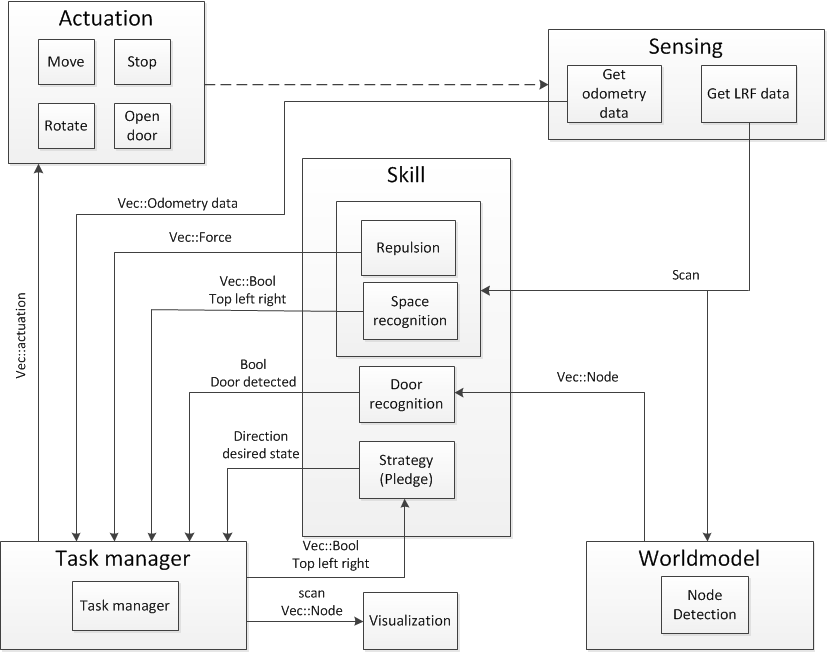

After the corridor challenge, the software architecture was changed slightly. The different software parts with their corresponding inputs and outputs are depicted in Figure 2.

Maze solving algorithm

The Pledge algorithm is chosen to solve the maze. The Pledge algorithm has a preferred direction and counts the corners the robot takes. A left corner counts as -1 and a right corner as +1. When the counter is 0, the robot goes in its preferred direction. The Pledge algorithm is implemented in the following manner:

- If Pico is driving in preferred direction:

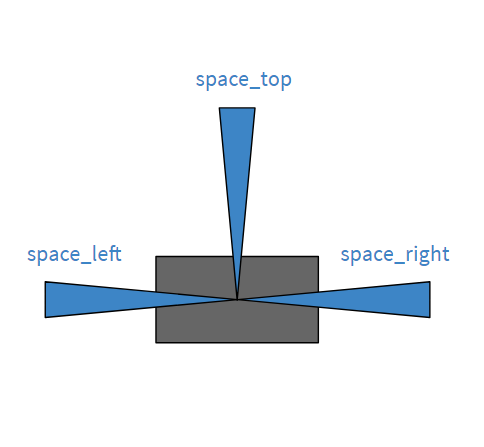

Figure 3: Space around Pico - if space_top = true: Go straight in preferred direction

- if space_top = false & space_left = true: rotate 90 degrees left

- if space top = false & spacel_left = false & space_right = false: rotate 180 degrees left

- Else

- if space_right = true: rotate 90 degrees right

- if space right = false & space top = true: go straight

- if space_right = false & space_top = false & space_left = true: rotate 90 degrees left

- if space_right = false & space_top = false & space_left = false: rotate 180 degrees left
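The decision rules above can be written as a small function. The sketch below is an illustration only: the names `Action`, `decide` and `updateCounter` are hypothetical. The case in which Pico drives in the preferred direction with the front and left blocked but the right free is not covered by the listed rules, so it is returned as `Unspecified` rather than guessed. Counting a 180 degree left turn as two left corners is also an assumption.

```cpp
// Hypothetical set of actions that follow from the rules.
enum class Action {
    GoStraight,
    RotateLeft90,
    RotateRight90,
    RotateLeft180,
    Unspecified  // combination not covered by the listed rules
};

// Selects an action from the three space flags of Figure 3.
Action decide(bool in_preferred_direction, bool space_top,
              bool space_left, bool space_right) {
    if (in_preferred_direction) {
        if (space_top) return Action::GoStraight;
        if (space_left) return Action::RotateLeft90;
        if (!space_right) return Action::RotateLeft180;
        return Action::Unspecified;  // front and left blocked, right free
    }
    // Not in the preferred direction: the right side is checked first.
    if (space_right) return Action::RotateRight90;
    if (space_top) return Action::GoStraight;
    if (space_left) return Action::RotateLeft90;
    return Action::RotateLeft180;
}

// Corner counter: a left corner counts as -1 and a right corner as +1.
// Assumption: a 180 degree left turn counts as two left corners.
int updateCounter(int counter, Action action) {
    switch (action) {
        case Action::RotateLeft90:  return counter - 1;
        case Action::RotateRight90: return counter + 1;
        case Action::RotateLeft180: return counter - 2;
        default:                    return counter;
    }
}
```

In use, `in_preferred_direction` would be true whenever the counter equals 0, and the counter would be updated after each executed rotation.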

In Figure 3 Pico is depicted with three beams: space_left, space_right and space_top. Each beam uses 10 degrees of LRF data.

Repulsion & wall following

World model

The world model is based on two types of nodes: endnodes and cornernodes. Nodes are found by applying the following checks to all data-points:

- The current data-point is a cornernode if the distance between the current data-point and the next data-point is larger than a given value.

- The current data-point is an endnode if the distance between the current data-point and the next data-point is smaller than a given value.

- A data-point that lies at the maximum range or at the maximum angles is considered an endnode.

- The current data-point is a cornernode if it passes the 90-degrees test. This test considers a previous data-point and a next data-point, each at a set distance from the current data-point. Using the cosine rule with the angles and distances to the three points, the actual distance between the previous and next data-point is computed and compared to the distance that Pythagoras would give for these points. Pythagoras only holds if the angle is 90 degrees, so if the two values are equal, the corner is 90 degrees.
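The 90-degrees test can be sketched as follows. The names `PolarPoint`, `squaredDistance` and `isRightAngleCorner` are hypothetical, and the relative tolerance `rel_tol` is an assumption: measured data will never make the two values exactly equal, so some margin is needed.

```cpp
#include <cmath>

// A laser data-point in polar form: distance r (m) and beam angle theta (rad).
struct PolarPoint {
    double r;
    double theta;
};

// Squared distance between two polar points, obtained with the cosine rule.
double squaredDistance(const PolarPoint& a, const PolarPoint& b) {
    return a.r * a.r + b.r * b.r - 2.0 * a.r * b.r * std::cos(a.theta - b.theta);
}

// Returns true if the corner prev-cur-next is approximately 90 degrees,
// i.e. if |prev-cur|^2 + |cur-next|^2 matches |prev-next|^2 (Pythagoras).
bool isRightAngleCorner(const PolarPoint& prev, const PolarPoint& cur,
                        const PolarPoint& next, double rel_tol = 0.1) {
    const double a2 = squaredDistance(prev, cur);
    const double b2 = squaredDistance(cur, next);
    const double c2 = squaredDistance(prev, next);
    return std::fabs(a2 + b2 - c2) <= rel_tol * c2;
}
```

For three points on a straight wall the squared distance between the previous and next point is larger than the sum of the other two, so the test fails and the point is not marked as a corner.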