Embedded Motion Control 2012 Group 11

Revision as of 12:44, 6 June 2012

Group Info

| Group Members | E-mail | Student nr. |
|---|---|---|
| Christiaan Gootzen | c.f.t.m.gootzen@student.tue.nl | 0655133 |
| Sander van Zundert | a.w.v.zundert@student.tue.nl | 0650545 |

Tutor

Sjoerd van den Dries - s.v.d.dries@tue.nl

Week 1 and 2:

-> Installed the required software.

-> Fixed software issues: two members had to reinstall Ubuntu three times, and some wireless network issues had to be fixed.

Week 3:

-> Finished all tutorials.

-> Working on processing a 2D map in the Jazz simulator and RViz so that the robot can navigate autonomously. We are trying to measure the distance in front of the robot in order to make decisions.
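Measuring the distance in front of the robot comes down to mapping laser beam indices to angles. The sketch below mirrors the relevant fields of the ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, `range_min`, `range_max`, `ranges`) in a plain struct so that it runs without ROS. The helper name `frontDistance` and the choice to take the minimum over a small frontal window, rather than a single beam, are assumptions for illustration, not the group's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Plain stand-in for the fields of a ROS sensor_msgs/LaserScan message used
// here (angles in radians, ranges in metres), so the sketch runs without ROS.
struct Scan {
    float angle_min;        // angle of the first beam
    float angle_increment;  // angular spacing between consecutive beams
    float range_min;        // readings below this are invalid
    float range_max;        // readings above this are invalid
    std::vector<float> ranges;
};

// Hypothetical helper: smallest valid range among the beams within
// +/- half_width radians of the robot's heading (beam angle 0).
// Returns +infinity when no beam in that window holds a valid reading.
float frontDistance(const Scan& scan, float half_width) {
    assert(scan.angle_increment > 0.0f && half_width >= 0.0f);
    float best = std::numeric_limits<float>::infinity();
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        // Beam i points at angle_min + i * angle_increment in the laser frame.
        const float angle = scan.angle_min + static_cast<float>(i) * scan.angle_increment;
        if (std::fabs(angle) > half_width) continue;  // outside the frontal window
        const float r = scan.ranges[i];
        // The negated test also rejects NaN readings.
        if (!(r >= scan.range_min && r <= scan.range_max)) continue;
        if (r < best) best = r;
    }
    return best;
}
```

Taking the minimum over a narrow window makes the reading less sensitive to a single noisy or invalid beam, at the cost of reacting to obstacles slightly off the centre line.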

Week 4:

-> The robot can now move autonomously through a given maze using only the LaserScan data.

-> Given a corridor with exits, the robot is able to take the first exit.

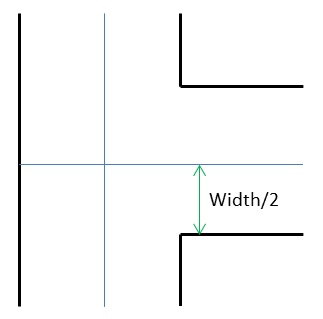

Exit detection

Two candidate algorithms were chosen to detect an exit. In the first algorithm, a sudden jump in the laser data is detected; the robot then keeps moving forward until it has traversed half the width of the exit, halts, and turns by 90 degrees. In the second algorithm, the time required to traverse half the width of the exit is multiplied by the loop rate, and the corresponding number of laser readings is monitored before an exit is detected; the robot then similarly halts and turns by 90 degrees. To make the detection robust, both algorithms were applied together.
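Combining the two exit checks can be sketched as follows. This is a minimal illustration, not the group's implementation: it assumes one side-facing range reading per control-loop iteration and a constant forward speed, and the names `ExitParams`, `ExitDetector` and `iterationsForHalfWidth`, as well as all parameter values, are hypothetical. The required number of iterations is $N = \lceil (w/2)/v \cdot f \rceil$ for exit width $w$, speed $v$ and loop rate $f$.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical parameters; the values used by the group are not given.
struct ExitParams {
    double jump_threshold;  // [m] increase in side range that counts as a sudden jump
    double exit_width;      // [m] expected width of an exit
    double forward_speed;   // [m/s] constant forward speed while searching
    double loop_rate;       // [Hz] control-loop rate
};

// Second algorithm: loop iterations needed to drive half the exit width,
// i.e. (half width / speed) * loop rate, rounded up.
int iterationsForHalfWidth(const ExitParams& p) {
    assert(p.forward_speed > 0.0 && p.loop_rate > 0.0);
    const double t_half = (p.exit_width / 2.0) / p.forward_speed;  // [s]
    return static_cast<int>(std::ceil(t_half * p.loop_rate));
}

// Both checks combined: a jump in the side range opens a candidate exit
// (first algorithm); it is confirmed only if the side stays open for the
// number of iterations that covers half the exit width (second algorithm).
// Feed one side-range reading per control-loop iteration; once update()
// returns true the caller should halt and turn 90 degrees.
class ExitDetector {
public:
    explicit ExitDetector(const ExitParams& p)
        : p_(p), needed_(iterationsForHalfWidth(p)) {}

    bool update(double side_range) {
        if (open_) {
            // Still open while the range stays well beyond the wall distance.
            if (side_range - wall_range_ > p_.jump_threshold) {
                ++count_;
            } else {
                open_ = false;  // wall came back: a short gap, not an exit
            }
        } else if (has_prev_ && side_range - prev_ > p_.jump_threshold) {
            open_ = true;
            wall_range_ = prev_;  // side distance just before the jump
            count_ = 0;
        }
        prev_ = side_range;
        has_prev_ = true;
        return open_ && count_ >= needed_;
    }

private:
    ExitParams p_;
    int needed_;
    bool has_prev_ = false;
    bool open_ = false;
    double prev_ = 0.0;
    double wall_range_ = 0.0;
    int count_ = 0;
};
```

Requiring both conditions means a short gap in the wall (shorter than half the exit width) resets the detector instead of triggering a turn.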

Tasks for Week 5:

-> Improve the algorithm implementation to correct existing flaws.

-> Develop an algorithm for arrow detection using the camera in RViz.

-> Work on methods to incorporate odometry data into the system.

Week 5:

-> A new self-aligning algorithm was implemented; in the simulator, the robot is able to align itself from any initial angle and position inside the maze.

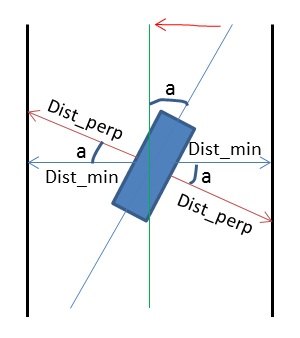

Alignment algorithm

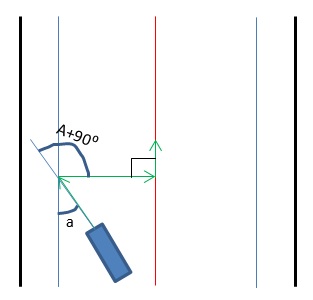

Case 1: Robot in the middle. The angle of inclination is calculated with trigonometry from the minimum distance and the perpendicular distance to the walls, and the corresponding angle correction is applied to the robot.
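A possible form of the Case 1 calculation is sketched below, under our own interpretation: "minimum distance" is the shortest beam in the scan (the true perpendicular distance to a straight wall), and "perpendicular distance" is the range of the beam perpendicular to the robot's heading. If the robot is rotated by psi, that side beam measures d_side = d_min / cos(psi), so |psi| = acos(d_min / d_side); the sign follows from whether the shortest beam lies ahead of or behind the side beam. The function name and sign convention are hypothetical; the correction to apply is a rotation by -psi.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Heading error psi [rad] of the robot relative to a straight wall,
// counter-clockwise positive. Inputs (robot frame, angles in radians):
//   d_min      shortest range in the scan, taken as the perpendicular wall distance
//   alpha_min  angle of the beam that measured d_min
//   d_side     range of the beam perpendicular to the robot's heading
//   side_angle angle of that beam: +pi/2 for a left wall, -pi/2 for a right wall
// Geometry: d_side = d_min / cos(psi), so |psi| = acos(d_min / d_side);
// the wall normal lies at side_angle - psi, which fixes the sign.
double headingError(double d_min, double alpha_min, double d_side, double side_angle) {
    assert(d_min > 0.0 && d_side > 0.0);
    // Sensor noise can push the ratio slightly above 1, outside acos's domain.
    const double ratio = std::min(1.0, d_min / d_side);
    const double magnitude = std::acos(ratio);
    return (side_angle - alpha_min >= 0.0) ? magnitude : -magnitude;
}
```

The arccos is insensitive near psi = 0, so for small misalignments the angle of the shortest beam alone (psi = side_angle - alpha_min) is an alternative estimate, limited by the scan's angular resolution.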

Case 2: Robot close to the wall (within the collision threshold). The angle of inclination is calculated in the same way, and the robot rotates by the sum of the inclination angle and 90 degrees. It then travels perpendicular to the walls until it reaches the midpoint of the corridor, and finally makes a 90-degree turn in the opposite direction, which aligns it with the centre of the track.
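The Case 2 manoeuvre can be written as a plan of three open-loop steps. This sketch assumes the heading error psi (counter-clockwise positive) and the perpendicular distances to both walls are already known; `RecenterPlan` and `planRecenter` are hypothetical names. The drive distance is the offset from the centre line, (d_left + d_right)/2 - d_right = (d_left - d_right)/2.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical representation of the Case 2 manoeuvre as three open-loop steps.
struct RecenterPlan {
    double first_turn;   // [rad] counter-clockwise positive
    double drive;        // [m] straight drive towards the corridor centre line
    double second_turn;  // [rad] counter-clockwise positive
};

// psi: heading error relative to the corridor direction [rad], CCW positive.
// d_left, d_right: perpendicular distances to the left and right walls [m].
RecenterPlan planRecenter(double psi, double d_left, double d_right) {
    assert(d_left >= 0.0 && d_right >= 0.0);
    const double half_pi = std::acos(-1.0) / 2.0;
    // +1 if the centre line lies to the robot's left (it is near the right wall), else -1.
    const double toward = (d_left >= d_right) ? 1.0 : -1.0;
    RecenterPlan plan;
    plan.first_turn = -psi + toward * half_pi;       // cancel inclination, then face across the corridor
    plan.drive = std::fabs(d_left - d_right) / 2.0;  // offset from the centre line
    plan.second_turn = -toward * half_pi;            // 90 degrees back in the opposite direction
    return plan;
}
```

The two turns sum to -psi, so after the manoeuvre the robot points along the corridor, provided the turns and the drive are executed accurately. Without odometry feedback, errors in each open-loop step accumulate.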

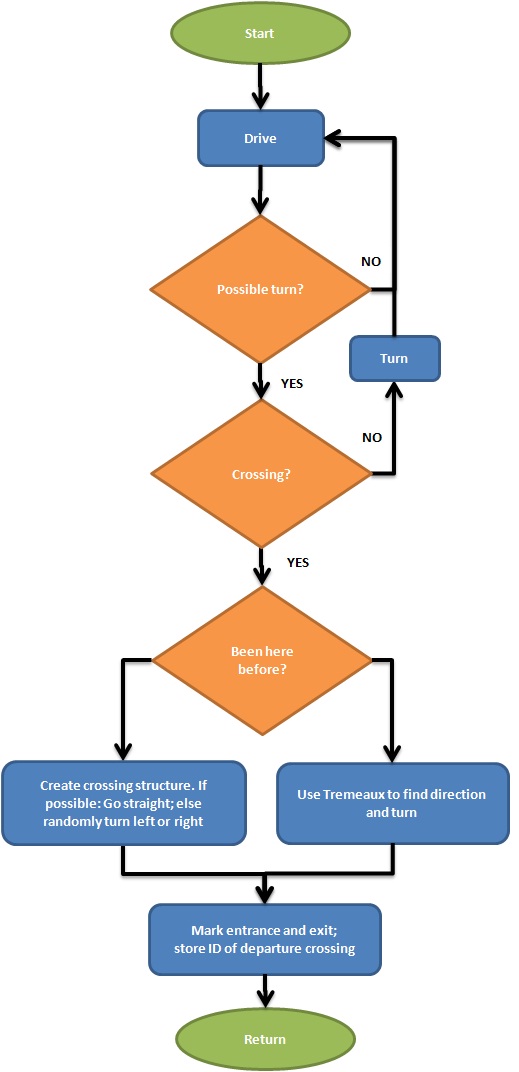

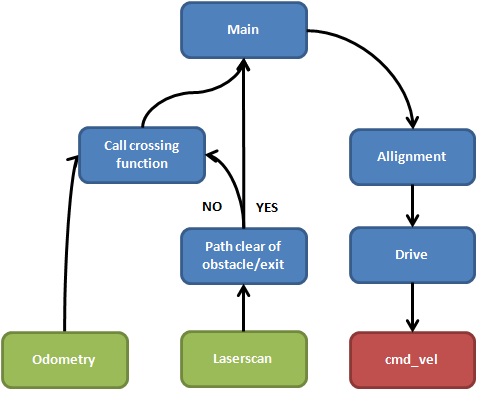

Week 6:

-> The algorithm was made more robust by making it depend on fewer varying parameters. More complicated mazes were designed for later testing of the maze-solving algorithm. The backbone structure of the maze-solving algorithm was designed and partially implemented. The alignment and exit strategies were tested on the robot.

-> Working on publishing the odometry data using tf.

Corridor competition: The failure to align and take the exit was possibly due to the lack of feedback from the robot, since odometry feedback was not implemented in the code. We are currently working on incorporating odometry data into the code to make the algorithm more robust.
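One way odometry feedback could close the loop on the 90-degree turns is sketched below. It reads the yaw from an orientation quaternion (such as the `pose.pose.orientation` field of a ROS `nav_msgs/Odometry` message) and recomputes the remaining angle every iteration instead of turning for a fixed time. The function names, the proportional gain and the tolerance are assumptions for illustration, not the group's implementation.

```cpp
#include <cassert>
#include <cmath>

// Yaw [rad] from a unit quaternion (x, y, z, w), e.g. an odometry orientation.
double yawFromQuaternion(double x, double y, double z, double w) {
    return std::atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z));
}

// Wraps an angle to [-pi, pi), so turns that cross +/-pi take the short way.
double wrapAngle(double a) {
    const double pi = std::acos(-1.0);
    a = std::fmod(a + pi, 2.0 * pi);
    if (a < 0.0) a += 2.0 * pi;
    return a - pi;
}

// Hypothetical closed-loop turn by target_delta [rad] relative to start_yaw.
// Returns a proportional angular velocity command [rad/s], saturated at
// max_speed; 0 means the remaining angle is within tolerance (turn complete).
double turnCommand(double start_yaw, double current_yaw, double target_delta,
                   double gain, double max_speed, double tolerance) {
    assert(gain > 0.0 && max_speed > 0.0 && tolerance >= 0.0);
    const double remaining = wrapAngle(start_yaw + target_delta - current_yaw);
    if (std::fabs(remaining) <= tolerance) return 0.0;
    const double cmd = gain * remaining;
    return std::fmax(-max_speed, std::fmin(max_speed, cmd));
}
```

Because the remaining angle is measured rather than assumed, wheel slip or a delayed start no longer leaves the robot misaligned after the turn, which is the kind of feedback that was missing during the corridor competition.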