Embedded Motion Control 2012 Group 6


Revision as of 21:03, 4 June 2012

SVN guidelines

- Always update your working copy first, using the following command:

svn up

- Commit only files that actually work

- Clearly describe what has changed in the file; this comes in handy for logging purposes

- As we all work with the same account, identify yourself with a tag (e.g. [AJ], [DG], [DH], [JU], [RN])

- To add files, use

svn add $FILENAME

For multiple files, e.g. all C files, use

svn add *.c

Note: again, commit only source files.

- If the same part of a file is edited by several people simultaneously, a conflict will arise. When resolving it, you can choose to:

- use version A

- use version B

- show the differences

How to create a screen video?

- Go to Applications --> Ubuntu Software Centre.

- Search for recordmydesktop and install it.

- When the program is running, you will see a record button at the top right, and a window pops up.

- First use the "Save as" button to give your video a name; after that you can press the record button.

- This software creates .ogv files, which are no problem for YouTube but are a problem for many editing tools.

- Want to edit the movie? Use HandBrake to convert the OGV files to MP4.

Useful links

Documentation from Gostai

Standard Units of Measure and Coordinate Conventions

Specs of the robot (buy one!)

ToDo

List of planned items.

- Improve arrow-recognition

- Use recognized arrows in navigation algorithm

- Add maze-specific cases to navigation algorithm (such as dead end detection)

- Apply speed- and error-dependent corrections for aligning with walls

Remarks

- The final assignment will consist of one single run through the maze

- Paths have a fixed width, within some tolerance

- Every team starts at the same position

- The robot may start at a floating wall

- The arrow detection can be simulated by using a webcam

- Slip may occur -> difference between commands, odometry and reality

- Dynamics at acceleration/deceleration -> max. speed and acceleration will be set

Presentation, chapter 8

- [RN] introduction

- [RN] semaphores

- [DH] pipes

- [JU] event registers

- [AJ] signals

- [DG] condition variables

- [RN] summary

The presentation is now available online.

Progress

Progress of the group. The newest item is listed on top.

- Week 1

- Started with tutorials (all)

- Installed ROS + Eclipse (all)

- Installed Ubuntu (all)

- Week 2

- Finished with tutorials (all)

- Practiced with simulator (all)

- Investigated sensor data (all)

- Week 3

- Overall design of the robot control software

- Division of work for the various blocks of functionality

- First rough implementation: the robot is able to autonomously drive through the maze without hitting the walls

- Evidence on youtube http://youtu.be/qZ7NUgJI8uw?hd=1

- Week 4

- Studying chapter 8

- Creating presentation slides (finished)

- Rehearsing presentation over and over again (finished)

- Creating dead end detection (finished)

- Creating pre safe system (finished)

- Week 5

- Evidence on youtube http://youtu.be/p8DRld3NVaY?hd=1

Progress report

This progress report aims to give an overview of the results of group 6. In some cases details are omitted for confidentiality reasons.

The software to be developed has one specific goal: driving out of the maze. It is still uncertain whether the robot will start inside the maze or will need to enter the maze itself. Hitting a wall results in a time penalty, which imposes the constraint not to hit the walls. In addition, speed is important in order to set an unbeatable time.

Given this goal and these constraints, the requirements for the robot can be described. First, movement is necessary to even start the competition; second, vision (object detection) is mandatory to understand what is going on in the surroundings. But the most important, of course, is strategy. Besides following the indicating arrows, a back-up strategy will be implemented. Clearly, the latter should be robust and designed such that it always yields a satisfying result (i.e. team 6 wins).

Several modules, which form the building blocks of the complete software framework, are being developed at this very moment and will be completed in the upcoming weeks.

But first things first: our lecture this coming Monday! Be sure to be present, all of you, because it's going to be legendary ;) Seriously, you don't want to miss this event! Oh, and be sure to have read Chapter 8 beforehand, otherwise you won't be able to ask intelligent questions.

To be continued...

Arrow recognition

edit in progress by [RN]

The arrows help the robot navigate through the maze. In order to decide where to go, the robot should be able to perform the following steps:

- identify an arrow shape on the wall

- check if the arrow is red

- determine the arrow point direction

- use the obtained information
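The "check if the arrow is red" step can be sketched as follows. The C++ fragment converts an 8-bit RGB pixel to HSV (hue in degrees, saturation and value in [0, 1]) and then applies a threshold. It is not the group's implementation. The names `rgbToHsv`, `isRed` and `RedThresholds` are hypothetical, and so are all threshold values. The sketch does show one pitfall that is specific to red: red lies at both ends of the hue circle, so two hue ranges have to be accepted. OpenCV's 8-bit HSV conversion stores the hue halved (0-179), so in that case the same bounds would have to be divided by two.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hue in degrees [0, 360); saturation and value in [0, 1].
struct Hsv {
    double h;
    double s;
    double v;
};

// Standard RGB -> HSV conversion for one 8-bit pixel.
Hsv rgbToHsv(std::uint8_t r8, std::uint8_t g8, std::uint8_t b8) {
    const double r = r8 / 255.0, g = g8 / 255.0, b = b8 / 255.0;
    const double maxC = std::max({r, g, b});
    const double minC = std::min({r, g, b});
    const double delta = maxC - minC;

    double h = 0.0;  // hue is undefined for greys; use 0 by convention
    if (delta > 0.0) {
        if (maxC == r) {
            h = 60.0 * std::fmod((g - b) / delta, 6.0);
        } else if (maxC == g) {
            h = 60.0 * ((b - r) / delta + 2.0);
        } else {
            h = 60.0 * ((r - g) / delta + 4.0);
        }
        if (h < 0.0) h += 360.0;
    }
    const double s = (maxC > 0.0) ? delta / maxC : 0.0;
    return {h, s, maxC};
}

// Hypothetical bounds; they would need tuning for each lighting situation.
struct RedThresholds {
    double hueTolerance = 15.0;   // degrees on either side of 0/360
    double minSaturation = 0.5;   // rejects pale colours such as light pink
    double minValue = 0.3;        // rejects very dark pixels
};

bool isRed(const Hsv& p, const RedThresholds& t = RedThresholds{}) {
    // Red wraps around the hue circle, so accept both ends of it.
    const bool redHue = p.h <= t.hueTolerance || p.h >= 360.0 - t.hueTolerance;
    return redHue && p.s >= t.minSaturation && p.v >= t.minValue;
}
```

A saturated red such as (220, 20, 30) gets a hue of about 357 degrees and passes. Orange (255, 140, 0) gets a hue of about 33 degrees and is rejected. White paper is rejected because its saturation is zero.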

ROS provides a package for face recognition, see http://www.ros.org/wiki/face_recognition. One could regard the arrow image as a poor representation of a face and teach the robot the possible appearances of this face. The program would then be trained to recognise a left-pointing "face" and a right-pointing "face". However, the robustness of this approach is doubtful. First, the package is not intended for arrow recognition, so it would be misused, and some elements that are crucial for arrow recognition may not be implemented in it. Second, face recognition requires a frontal view. If the robot and the wall with the arrow are not perfectly aligned, the package may fail to identify the arrow.

With these observations in mind, a different approach is clearly necessary. Various literature was studied to find the best practice. It turned out that the term image recognition does not describe the objective correctly (see for example Noij2009239); it is better to use the term pattern recognition. Searching for this term in combination with Linux leads to various sites mentioning OpenCV. This tutorial is of particular interest: http://www.shervinemami.co.cc/blobs.html. In addition, ROS supports OpenCV as well. For more information see: http://www.ros.org/wiki/cv_bridge/Tutorials/UsingCvBridgeToConvertBetweenROSImagesAndOpenCVImages

OpenCV reference: http://www710.univ-lyon1.fr/~bouakaz/OpenCV-0.9.5/docs/ref/OpenCVRef_ImageProcessing.htm

Using webcam in ROS: http://pharos.ece.utexas.edu/wiki/index.php/How_to_Use_a_Webcam_in_ROS_with_the_usb_cam_Package

After a discussion with the software department at AAE, Helmond (where [RN] has a job :-)), we learned that OpenCV is frequently used in automation as well. In addition, two reference books were obtained, namely: Open Source Computer Vision Library - Reference Manual by Intel, and Learning OpenCV by O'Reilly.

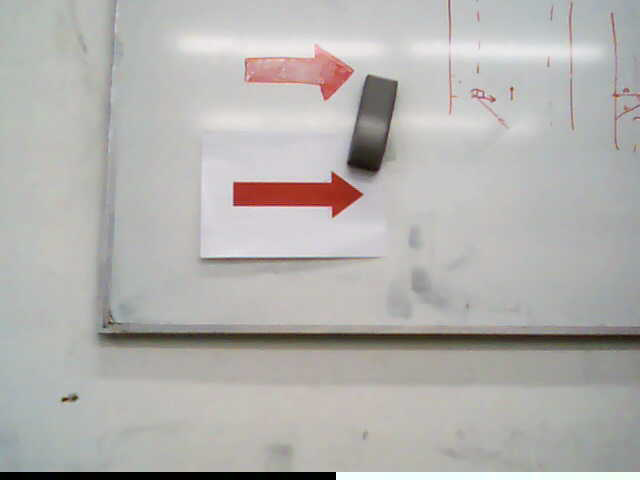

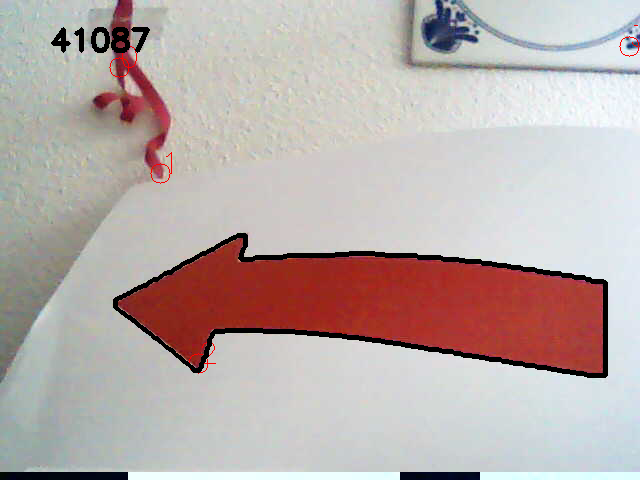

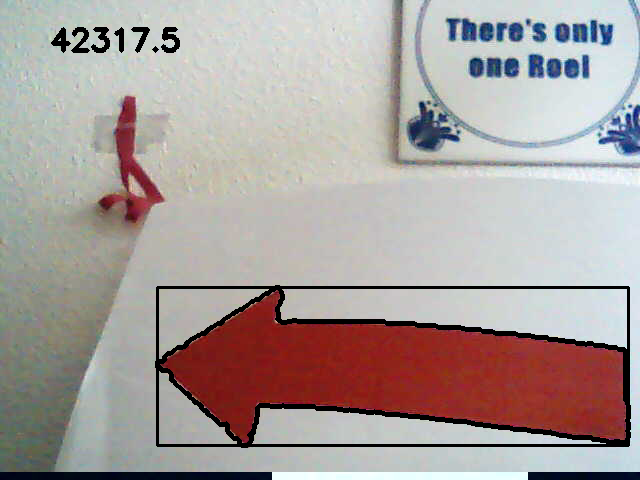

Results:


Issues:

The procedure described above works fine at first glance. However, robustness is an important criterion. Two things can fool the procedure:

- different light source

- colours close to red

Concerning the first, different light sources, a Guided Self Room at the University was an ideal setup. This room offered four different lighting situations:

- all lights off

- all lights on

- one half of the lights on

- other half of the lights on

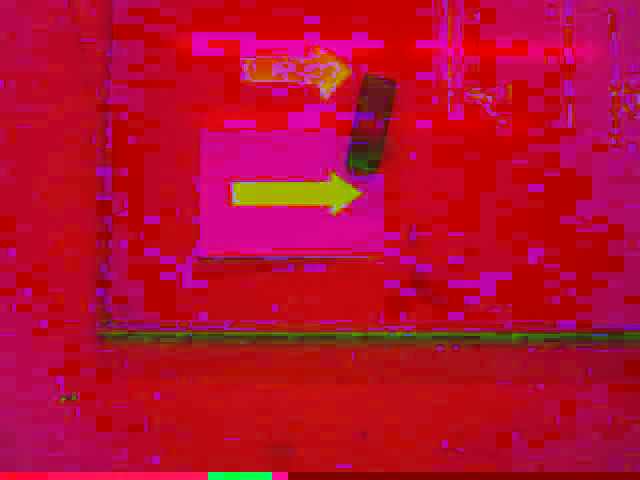

The picture below indicates the problem, although not very clearly: the HSV transform of the arrow is not homogeneous. This resulted in a very noisy Canny edge detection and in other issues in the rest of the algorithm.


It is certain that the arrow is printed on a white sheet of paper. Nothing is specified about the environment of the maze, however, which forced us to check the robustness in a less ideal environment. Colours close to red include, e.g., pink and orange. A test set-up was created at [RN]'s place, consisting of orange scissors on one side and a nice pink ribbon on the other.


Clearly, the algorithm is fooled. False corners are detected on both objects.

Because the lighting influences the way red is detected, setting the boundaries for the HSV extraction is quite tricky, if not impossible. Another solution to cope with this problem is currently being developed.

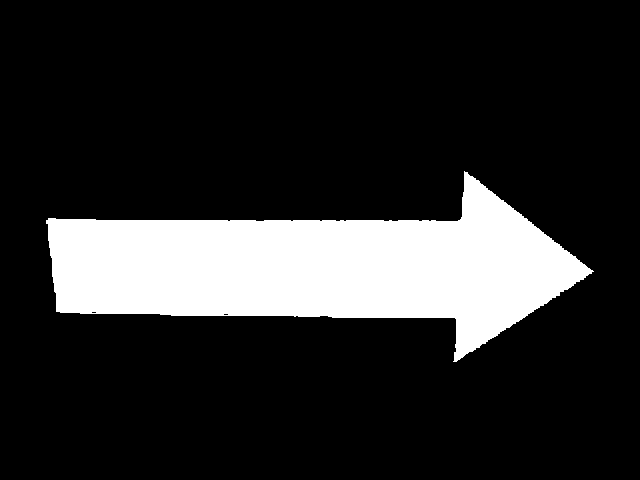

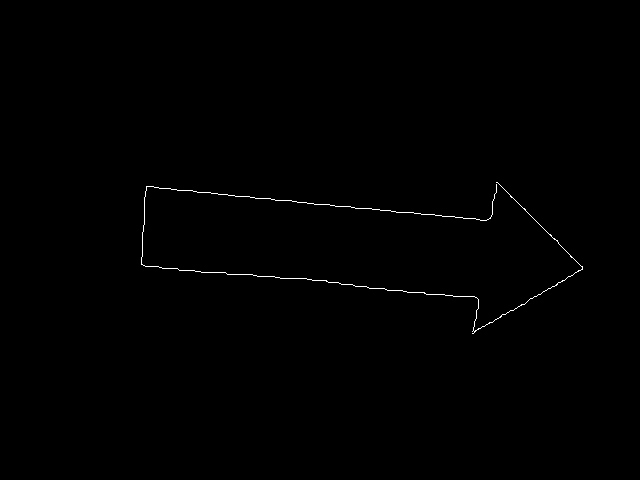

Result:

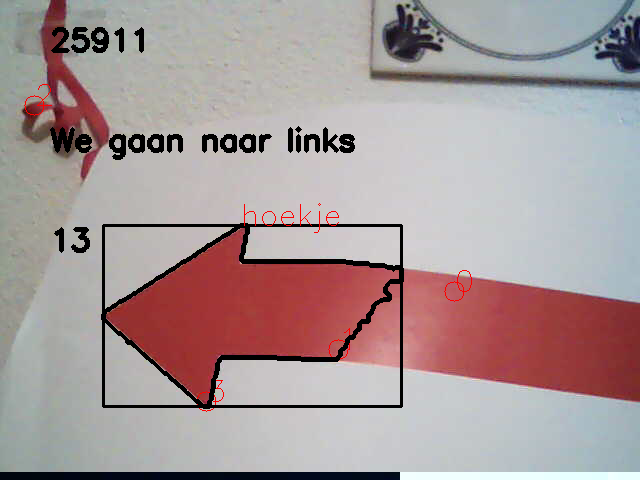

Prrrr, boom (drum roll): the script can tell us where to go. See the results in the two files below.


These files contain some numbers. The top one (e.g. 25911) is used to determine the biggest red object in the current view. We assume that we will not be fooled by a red object larger than the arrow (note: point of improvement, check that the object is a real arrow). One specific property of an arrow is checked, which enables us to handle glitches such as the one seen in the arrow indicating a left direction. Due to the lighting conditions, a white dash has clearly formed on the arrow body. The algorithm handles these glitches without problem.
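The "biggest red object" step can be sketched as a connected-component search on a binary red mask. Each 4-connected group of red pixels is flood-filled with a breadth-first search, and the group with the most pixels is kept. The resulting pixel count plays the same role as the number (e.g. 25911) printed in the result files. This is an assumed reconstruction, not the group's code. The mask layout, the 4-connectivity and the names `Blob` and `largestBlob` are all hypothetical. In OpenCV a common alternative is `cv::findContours` combined with `cv::contourArea`.

```cpp
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// A connected group of "red" pixels.
struct Blob {
    std::size_t area = 0;                     // number of pixels
    std::vector<std::pair<int, int>> pixels;  // (x, y) image coordinates
};

// Return the largest 4-connected component of a binary mask stored row by row
// (mask[y * width + x] != 0 means "red"; mask.size() must be width * height).
// Returns an empty Blob if the mask contains no red pixels.
Blob largestBlob(const std::vector<unsigned char>& mask, int width, int height) {
    std::vector<bool> visited(mask.size(), false);
    Blob best;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int start = 0; start < width * height; ++start) {
        if (!mask[start] || visited[start]) continue;
        Blob current;
        std::queue<int> frontier;  // breadth-first flood fill
        frontier.push(start);
        visited[start] = true;
        while (!frontier.empty()) {
            const int idx = frontier.front();
            frontier.pop();
            const int x = idx % width, y = idx / width;
            current.pixels.emplace_back(x, y);
            for (int k = 0; k < 4; ++k) {
                const int nx = x + dx[k], ny = y + dy[k];
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                const int n = ny * width + nx;
                if (mask[n] && !visited[n]) {
                    visited[n] = true;
                    frontier.push(n);
                }
            }
        }
        current.area = current.pixels.size();
        if (current.area > best.area) best = std::move(current);
    }
    return best;
}
```

Keeping only the largest blob matches the stated assumption that no red object larger than the arrow is in view. It is also exactly where a large red chair could fool the detection.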

Using some maths, the coordinate of "hoekje" (Dutch for "little corner") is checked against a reference frame to determine the direction.
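The page does not describe the exact check against the reference frame. One plausible sketch rests on two assumptions: the tip ("hoekje") has already been located, and the reference is the centroid of the arrow's pixels. An arrow pointing right then has its tip to the right of its centroid, and vice versa. `Direction` and `arrowDirection` are hypothetical names.

```cpp
#include <stdexcept>
#include <utility>
#include <vector>

enum class Direction { Left, Right };

// Hypothetical reference check: compare the x-coordinate of the arrow tip
// ("hoekje") with the centroid of the arrow's pixels. Image x grows to the
// right, so a tip left of the centroid means the arrow points left.
Direction arrowDirection(const std::vector<std::pair<int, int>>& pixels,
                         std::pair<int, int> tip) {
    if (pixels.empty()) {
        throw std::invalid_argument("arrowDirection: empty pixel list");
    }
    double sumX = 0.0;
    for (const auto& p : pixels) sumX += p.first;
    const double centroidX = sumX / static_cast<double>(pixels.size());
    return (tip.first < centroidX) ? Direction::Left : Direction::Right;
}
```

Comparing with a centroid rather than a fixed image column keeps the result independent of where the arrow appears in the camera view.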

Result II:

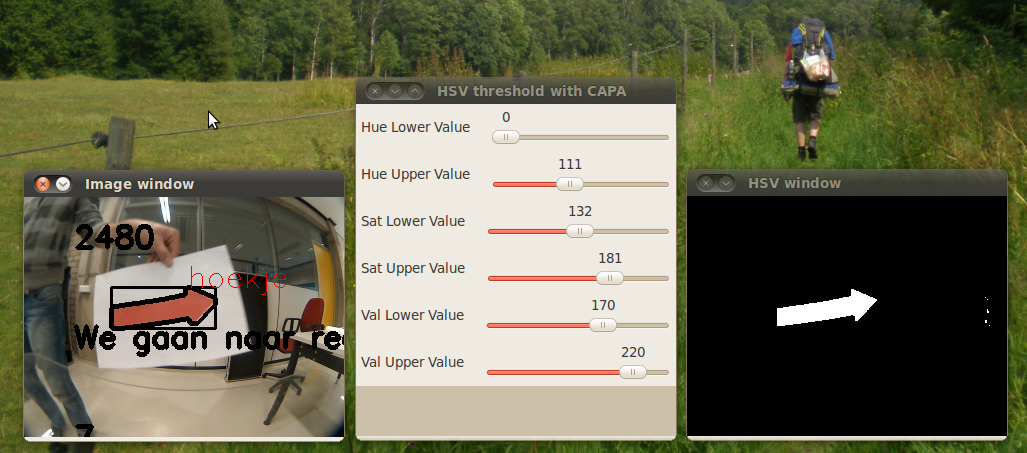

Well, theory works differently in practice, and practice at home works differently from practice at the university. Before the corridor competition, part of the remaining testing time was used to check the above algorithm. The results were disappointing, possibly due to different cameras, colour schemes, and so on. The algorithm had been assumed to work perfectly; the only possible flaw was expected in the colour extraction (i.e. the HSV image). Using the published rosbag file, this problem was studied in greater detail. Apparently, the yellow door and the red chair are challenging objects for proper filtering. As changing variables, rebuilding the code and testing the result got boring, a computer-aided parameter adjustment tool was developed. See the result in the figure below.


Line detection

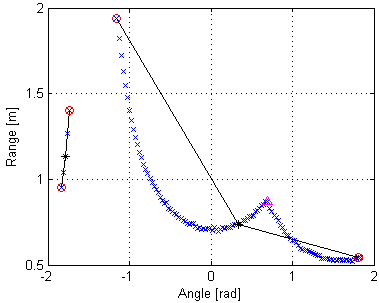

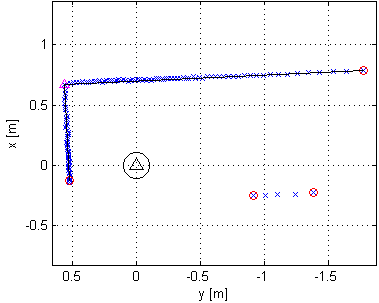

edit in progress by [AJ]

Since both the corridor and the maze challenge contain only straight walls, without other disturbing objects, the strategy is based on information about walls in the neighborhood of the robot. These can be walls near the current position of the robot, but also a history of waypoints derived from the wall information. The wall information is extracted from the laser sensor data. The detection algorithm needs to be robust: the sensor data will be contaminated by noise, and the actual walls will not be perfectly straight lines and may not be ideally aligned.

Each arrival of laser sensor data is followed by a call to the wall detection algorithm, which returns an array of line objects. These line objects contain the mathematical description of the surrounding walls and are used by the strategy algorithm. The same lines are also published as markers for visualization and debugging in RViz.

The wall detection algorithm consists of the following steps:

- Set measurements outside the range of interest to a large value, to exclude them from being detected as a wall.

- Using the first and second derivatives of the measurement points, sharp corners and the ends of walls are detected. This gives a first rough list of lines.

- A detected line may still contain one or more corners. Two additional checks are done recursively on each detected line segment.

- The line is split in half, and the midpoint is connected to both endpoints. For each measurement point, the distance to these two straight lines is calculated (in the polar grid). Most embedded corners are then detected by looking at the peak values.

- In certain unfavorable conditions, a detected line may still contain a corner. The endpoints of the line are connected (in the xy-grid), and the absolute peaks of the distance to this connecting line are used to find any remaining corners.

- Lines that do not contain enough measurement points to be regarded as reliable are thrown away.
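The steps above can be sketched in C++ as follows. This is a simplified illustration, not the group's code, and it differs from the description in several places:

- Out-of-range readings are masked out instead of being set to a large value.
- Wall ends are found with a first-derivative (range jump) test only.
- The corner checks are represented by the recursive xy-chord split from the last check, applied until no point deviates from its chord by more than a tolerance.
- The polar midpoint check and the second-derivative test are omitted.

All names and parameter values (`SegmentParams`, `detectWalls`, thresholds in metres) are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

// Index range [first, last] (inclusive) of one wall segment in the scan.
struct Segment { std::size_t first, last; };

// Hypothetical tuning parameters (not the group's actual values).
struct SegmentParams {
    double minRange = 0.1;          // [m] closer readings are ignored
    double maxRange = 3.0;          // [m] end of the range of interest
    double jumpThreshold = 0.2;     // [m] range jump that ends a wall
    double cornerThreshold = 0.05;  // [m] max. deviation from the chord
    std::size_t minPoints = 5;      // shorter segments are discarded
};

struct ScanLines {
    std::vector<Point> points;   // scan converted to xy (robot frame)
    std::vector<Segment> walls;  // detected wall segments
};

// Perpendicular distance from p to the line through a and b.
double distanceToChord(const Point& p, const Point& a, const Point& b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    const double len = std::hypot(dx, dy);
    if (len == 0.0) return std::hypot(p.x - a.x, p.y - a.y);
    return std::fabs(dy * (p.x - a.x) - dx * (p.y - a.y)) / len;
}

// Recursively split [first, last] at the point farthest from the chord
// connecting its endpoints, until every point lies close to its chord.
void splitAtCorners(const std::vector<Point>& pts, std::size_t first,
                    std::size_t last, const SegmentParams& prm,
                    std::vector<Segment>& out) {
    double worst = 0.0;
    std::size_t worstIdx = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        const double d = distanceToChord(pts[i], pts[first], pts[last]);
        if (d > worst) { worst = d; worstIdx = i; }
    }
    if (worst > prm.cornerThreshold) {
        splitAtCorners(pts, first, worstIdx, prm, out);  // corner point is shared
        splitAtCorners(pts, worstIdx, last, prm, out);
    } else if (last - first + 1 >= prm.minPoints) {
        out.push_back({first, last});  // keep only segments with enough points
    }
}

ScanLines detectWalls(const std::vector<double>& ranges, double angleMin,
                      double angleIncrement, const SegmentParams& prm) {
    ScanLines res;
    std::vector<bool> valid(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double angle = angleMin + static_cast<double>(i) * angleIncrement;
        // Step 1: readings outside the range of interest are excluded.
        valid[i] = ranges[i] >= prm.minRange && ranges[i] <= prm.maxRange;
        res.points.push_back({ranges[i] * std::cos(angle), ranges[i] * std::sin(angle)});
    }
    // Step 2: a run of valid readings without large range jumps is one rough line.
    std::size_t i = 0;
    while (i < ranges.size()) {
        if (!valid[i]) { ++i; continue; }
        std::size_t j = i;
        while (j + 1 < ranges.size() && valid[j + 1] &&
               std::fabs(ranges[j + 1] - ranges[j]) < prm.jumpThreshold) {
            ++j;
        }
        // Steps 3-4: split at embedded corners, drop segments with too few points.
        splitAtCorners(res.points, i, j, prm, res.walls);
        i = j + 1;
    }
    return res;
}
```

Splitting at the point farthest from the chord is the "split" half of the well-known split-and-merge line extraction. The split point ends up in both resulting segments, so a corner belongs to both adjacent walls.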

The figures shown below give a rough indication of the steps involved and of the result.


Characteristic properties of each line are saved. Because the coefficients of the typical straight-line equation (y = c*x + b) are stored, a wall can be virtually extended to any desired position. Also, the slope of the line indicates the angle of the wall relative to the robot. Furthermore, the endpoints are saved by their x- and y-coordinates and by their index in the array of laser measurements. Some other parameters (range of interest, tolerances, etc.) are introduced to match the algorithm to the simulator and to the real robot. Several experiments showed that the algorithm functions properly. This is an important step, since all higher-level algorithms depend entirely on the correctness of the provided line information.
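Here is a minimal sketch of how the saved line properties could be obtained. It assumes an ordinary least-squares fit of y = c*x + b through the points of one segment; the page does not say whether the group fitted the line this way or simply through the endpoints. `WallLine` and `fitWallLine` are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

// Hypothetical container mirroring the properties listed above.
struct WallLine {
    double c = 0.0;                            // slope in y = c*x + b
    double b = 0.0;                            // intercept
    Point start{}, end{};                      // endpoint coordinates
    std::size_t startIndex = 0, endIndex = 0;  // indices in the laser array

    // Virtually extend the wall: y-coordinate at an arbitrary x.
    double yAt(double x) const { return c * x + b; }
    // Angle of the wall relative to the robot's x-axis, in radians.
    double angle() const { return std::atan(c); }
};

// Ordinary least-squares fit of y = c*x + b through pts[first..last].
// Returns false when all x are (nearly) equal, i.e. the slope is undefined.
bool fitWallLine(const std::vector<Point>& pts, std::size_t first,
                 std::size_t last, WallLine& out) {
    const double n = static_cast<double>(last - first + 1);
    double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0;
    for (std::size_t i = first; i <= last; ++i) {
        sx += pts[i].x;
        sy += pts[i].y;
        sxx += pts[i].x * pts[i].x;
        sxy += pts[i].x * pts[i].y;
    }
    const double denom = n * sxx - sx * sx;
    if (std::fabs(denom) < 1e-12) return false;
    out.c = (n * sxy - sx * sy) / denom;
    out.b = (sy - out.c * sx) / n;
    out.start = pts[first];
    out.end = pts[last];
    out.startIndex = first;
    out.endIndex = last;
    return true;
}
```

The y = c*x + b form has one limitation. A wall with constant x has an undefined slope; with the ROS convention of x pointing forward, this is a wall straight ahead, perpendicular to the heading. That is why the sketch returns false in that case. The normal form x*cos(alpha) + y*sin(alpha) = rho avoids this problem.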

[Video to be added soon…]