Mobile Robot Control 2023 Ultron: Difference between revisions

No edit summary |

|||

| Line 71: | Line 71: | ||

The diagram for data flow was created based on the available data to the robot from its environment through its sensors namely, the bumper data, laser data and the odometry data, as well as the known data from the map and the input table sequence. Each of these inputs were used in different segments of the code to generate intermediate data. The odometry and laser data were used to perform localization which in turn gives the pose (position and orientation) of the robot. The laser data was also used in the process of detecting obstacles and doors. This data is used further down the line in other processes like local and global path planning. Overall, the smooth transfer and interaction of data between each individual part of the code ensures that the robot moves in a predictable manner.[[File:StateFlowDiagram1.jpg|thumb|State Diagram|border]] | The diagram for data flow was created based on the available data to the robot from its environment through its sensors namely, the bumper data, laser data and the odometry data, as well as the known data from the map and the input table sequence. Each of these inputs were used in different segments of the code to generate intermediate data. The odometry and laser data were used to perform localization which in turn gives the pose (position and orientation) of the robot. The laser data was also used in the process of detecting obstacles and doors. This data is used further down the line in other processes like local and global path planning. Overall, the smooth transfer and interaction of data between each individual part of the code ensures that the robot moves in a predictable manner.[[File:StateFlowDiagram1.jpg|thumb|State Diagram|border]] | ||

====State Diagram==== | ====State Diagram==== | ||

<br /> | <br />[[File:Grid map.png|alt=Grid length bigger than the final grid length used. |thumb|Grid Map]] | ||

==== | |||

==== Navigation ==== | |||

The navigation process for mobile robots are categorized into four parts: environmental mapping, path planning, motion control, and obstacle avoidance. | |||

First and foremost, it becomes imperative to provide the robot with some information about the environment in which it is to operate. This is achieved by creating a map that can be as accurate to the real world as possible. The next section describes the method utilized to creating a map for the robot to plan its path from its starting position to all the necessary tables. | |||

====== Map Creation ====== | |||

[Just listed the bullet points. Should be written in full sentences] | [Just listed the bullet points. Should be written in full sentences] | ||

| Line 82: | Line 88: | ||

*Dimensions of few inner walls and table were increased by a small margin so that the grids are not created close to them. | *Dimensions of few inner walls and table were increased by a small margin so that the grids are not created close to them. | ||

<br /> | <br /> | ||

====Global Navigation==== | ====== Global Navigation ====== | ||

The A* algorithm is a widely used path planning algorithm for autonomous robots. Its importance to the challenge comes in the form of finding the shortest path between two points in the map. It calculates the cost to travel to a given grid and plans the path such that this cost is the least. | |||

First, the start and goal positions are determined. In our case, the start goal at the beginning of the challenge was the point and corresponding grid where the robot starts. The goal was set as one of the grids in the cloud of grids surrounding the first table. In case the robot is unable to reach this grid due to there being an obstacle occupying the grid, another grid was selected. The subsequent start and goal grids were the current grid and a grid surrounding the next table in the sequence respectively. Next the list of all open and traversable grids was created and used to check the next available grid to be traversed. A list of closed grids was also maintained to keep track of visited grids. We then assign initial values to the cost and heuristic function for the start grid and add it to the open list. The cost function thus gives the cost to reach the current grid from the start, and the heuristic function estimates the cost from the current grid to the goal. The A* algorithm uses the sum of these two values, called the "f-value," to determine the priority of grids in the open list. | |||

While the open list is not empty, we select the grid with the lowest f-value and remove it from the open list. Thid grid is then added to the closed list to mark that it hs been visited. The neighboring grids of the current grid are opened and their cost and heuristic values are calculated. For each neighboring grid, we calculated its f-value and update its parent grid if the cost to reach it is lower than the previously recorded cost. If the grid is not in the open list, we added it to the open list. If it is already in the open list, we updated its f-value if the newly calculated value is lower. | |||

<br /> | |||

*Using the final grid map, optimal path was computed using A* path planning algorithm. | *Using the final grid map, optimal path was computed using A* path planning algorithm. | ||

Revision as of 15:05, 7 July 2023

Group members:

| Name | student ID |

|---|---|

| Sarthak Shirke | 1658581 |

| Idan Grady | 1912976 |

| Ram Balaji Ramachandran | 1896067 |

| Anagha Nadig | 1830961 |

| Dharshan Bashkaran Latha | 1868950 |

| Nisha Grace Joy | 1810502 |

Design Presentation Link

Introduction

In an increasingly autonomous world, robotics plays a vital role in performing complex tasks. One such task is enabling a robot to autonomously locate and navigate itself in a restaurant environment to serve customers. While this may seem like a straightforward task for humans, there are various challenges when considering robots. Planning the best routes for the robot to follow in order to navigate is one of the main challenges. Global path planning algorithms like A* or Dijkstra's algorithm, which guarantee effective route planning based on a predefined map, are just two examples of techniques that can be used to address this issue.

Techniques like the artificial potential field method or the dynamic window approach can be used for local navigation to achieve real-time obstacle avoidance and adaptation to dynamic objects, addressing the challenges posed by unknown and dynamic scenes.

Another difficulty is localization, which is required for an autonomous robot to determine its precise position and orientation. Particle filtering and Kalman filtering are two techniques that can be used to combine sensor measurements with a predetermined map, compensating for imperfections and adapting to real-world scenarios. Combining localization and navigation techniques is the final challenge. A robot can identify its location on a map, plan an optimal path, and successfully complete complex tasks by maneuvering precisely to the designated table by developing the necessary software.

This wiki will guide you through the process of attempting to solve the problem of allowing a robot to autonomously locate and navigate itself in a restaurant setting. Our journey will be shared, from designing and constructing the requirements to the decisions made along the way. We will go over what went well and what could have been better, providing a thorough overview of our project.

The challenge and deliverables

The basic map for the challenge contains the tables and their numbers correspondingly, walls and doors. During the challenge, the robot starts at an area of 1x1 meters in an arbitrary orientation. The robot then has to make its way to each of the tables, orient itself and announce that it has arrived at the respective table before moving to the next table in the sequence. To make things more challenging, a few unknown static and dynamic obstacles were also added to the map. The presence of these obstacles is not known to the robot prior to the commencement of the challenge. And it has to safely navigate around the obstacles without bumping into any of them.

With regard to deliverables, we presented an overview of the system, including the construction of requirements, data flow, and state diagrams to the class. Through weekly meetings with our supervisor and usage of the lab, we built towards the final assignment week by week. The culmination of our efforts was a presentation in a semi-real setting led by the teaching assistants.

The code could be found in our GitLab page: https://gitlab.tue.nl/mobile-robot-control/2023/ultron

General System Overview

- Our approach to this challenge involved carefully considering the various requirements that had to be met in order to complete it. These requirements were divided into three categories: Stakeholder requirements, Border requirements, and System Requirements. The stakeholder (or environmental) requirements of the challenge included safety, time, and customer intimation. The robot had to be able to avoid obstacles and humans without bumping into them, take minimal time to complete all deliveries, and announce its arrival at the respective table.

- The border requirements were derived from the stakeholder requirements and encompassed the different strategies and considerations taken by our team to cater to each of the overarching stakeholder requirements. The system requirements/specifications gave the constraints of the robot and the environment in which it was operated. These were taken into account when trying to implement the border requirements.

The first step in tackling the Restaurant Challenge was to build the requirements based on the system specifications. This entailed carefully considering the robot and the environment in which it was used, as well as the overarching stakeholder requirements of safety, time, and customer intimation. We were able to develop a set of requirements that would guide our efforts toward successfully completing the challenge by taking these factors into consideration.

Game plan and approach - Midterm

Requirements

Requirements are a crucial part of the design process as they define the goals and constraints of a project. By establishing clear requirements, a team can ensure that their efforts are focused on achieving the desired outcome and that all necessary considerations are taken into account. In the case of the Restaurant Challenge, we took the system setting from the previous paragraph as well as challenge into account when designing the specifications for our requirements. This allowed us to develop a set of requirements that were tailored to the specific needs of the challenge, ensuring that our efforts were directed towards achieving success.

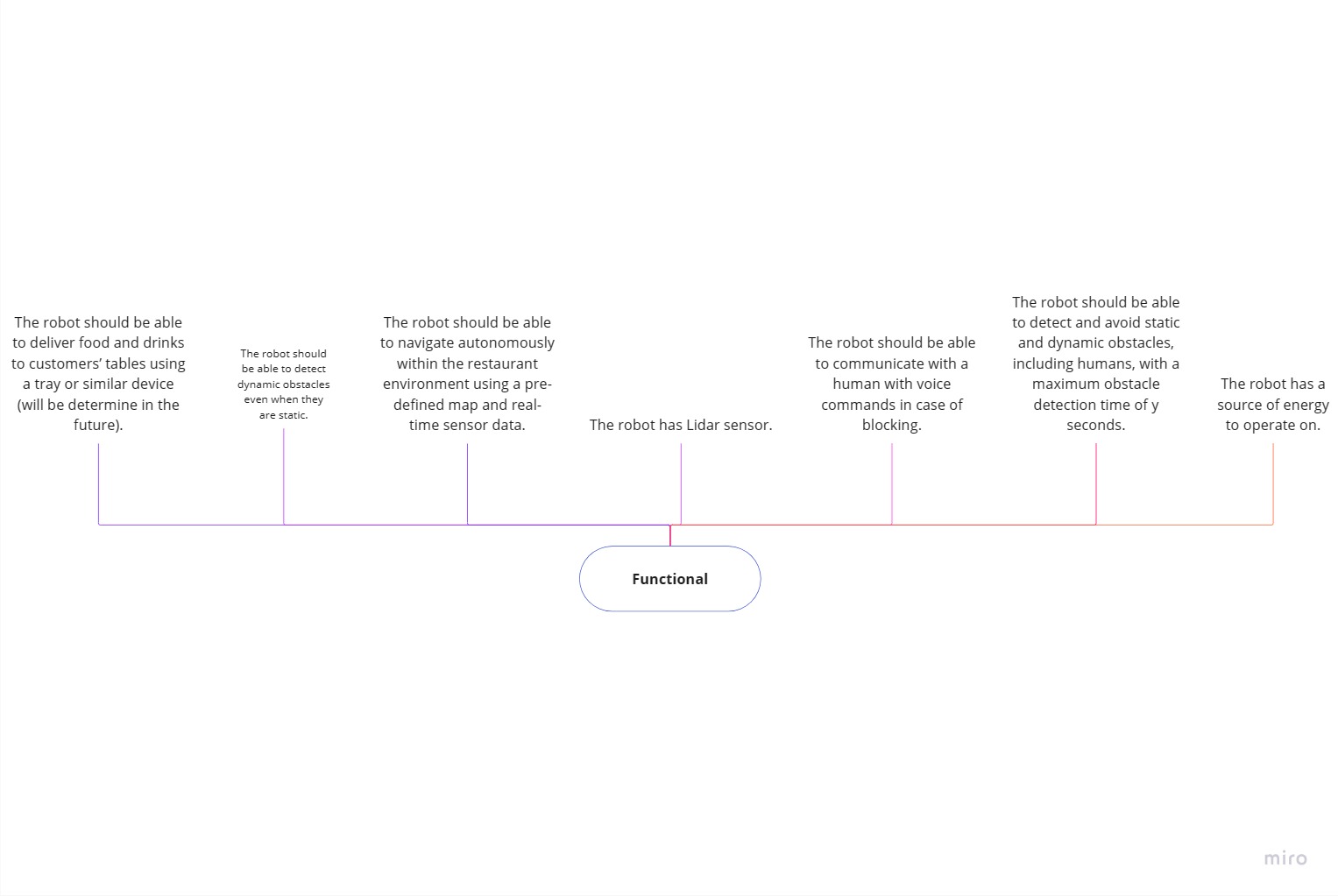

We divided our requirements into two sections: functional and non-functional. This allowed us to clearly define the capabilities and constraints of the robot, as well as the overarching goals of the challenge.

Our functional requirements included the ability for the robot to deliver food and drinks, detect dynamic and static obstacles, navigate autonomously using its sensors and a defined map in real-time, communicate with humans to avoid blocking, and avoid static and dynamic obstacles. The robot was also equipped with a lidar sensor and a source of energy.

Our non-functional requirements were divided into three groups: obstacle detection, safety, and reliability. For obstacle detection, the obstacles should be classified into dynamic and static obstacles. We specified that for every static object/obstacle detected within 0.7m range of the robot, it should plan a local path such that it avoids the obstacle and move along that local path. For every dynamic obstacle detected within the 0.7m range of the robot, the robot should send an audio command asking to move the dynamic obstacle away from the path, wait for some time until the object is moved and continue along the path. If the dynamic obstacle does not move within a certain amount of time, the robot should compute a local path to avoid the obstacle and move along that path. We did not specify the exact time to wait as it was an early process and we could not estimate or know the exact computation overload.

For safety, we specified that the maximum speed of the robot should be undefined for the same reason, and that the minimum distance to static and dynamic obstacles should be different. The frequency of the lidar sensor should be updated at least at a certain frequency, such that it is sufficient to capture both static and dynamic obstacles.

For reliability, we specified that global and local path planners should be restricted by time constraints. The maximum delivery time should not exceed 10 minutes as this was given to us as an overall constraint.

By dividing our requirements into functional and non-functional sections, we were able to clearly define the capabilities and constraints of the robot, ensuring that our efforts were directed towards achieving success in the challenge.

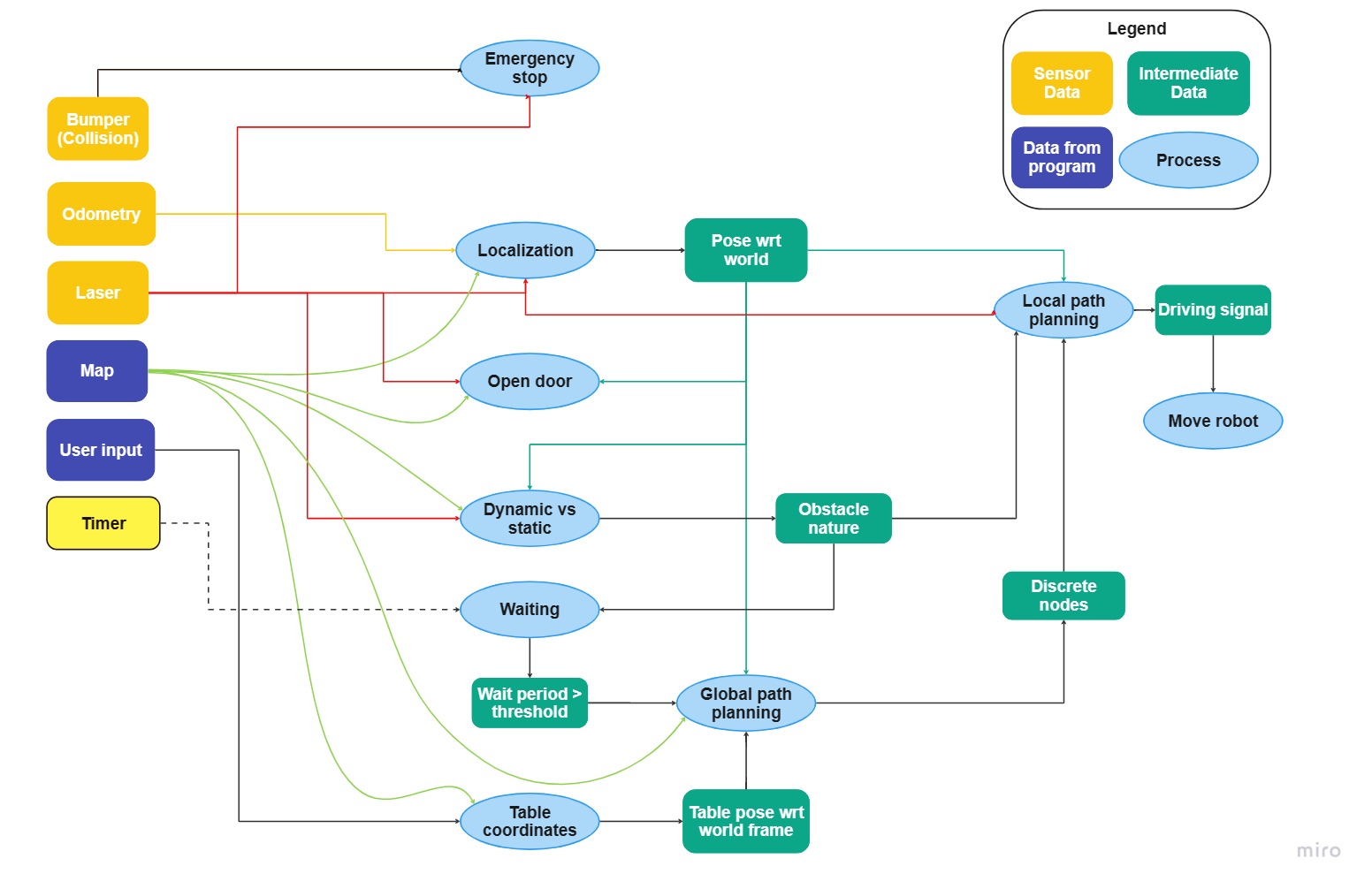

Data Flow

The diagram for data flow was created based on the available data to the robot from its environment through its sensors namely, the bumper data, laser data and the odometry data, as well as the known data from the map and the input table sequence. Each of these inputs were used in different segments of the code to generate intermediate data. The odometry and laser data were used to perform localization which in turn gives the pose (position and orientation) of the robot. The laser data was also used in the process of detecting obstacles and doors. This data is used further down the line in other processes like local and global path planning. Overall, the smooth transfer and interaction of data between each individual part of the code ensures that the robot moves in a predictable manner.

State Diagram

The navigation process for mobile robots are categorized into four parts: environmental mapping, path planning, motion control, and obstacle avoidance.

First and foremost, it becomes imperative to provide the robot with some information about the environment in which it is to operate. This is achieved by creating a map that can be as accurate to the real world as possible. The next section describes the method utilized to creating a map for the robot to plan its path from its starting position to all the necessary tables.

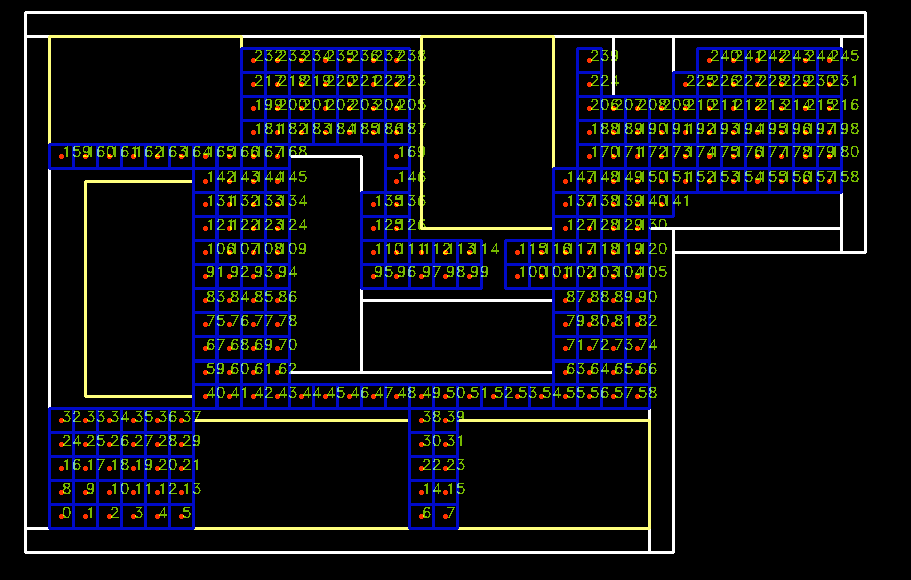

Map Creation

[Just listed the bullet points. Should be written in full sentences]

- A map json file was provided to us which specified the exact location coordinates of the walls, doors , a few static obstacles and tables.

- Discretizing the given map into a grid map with numbered grids. The size of each grid was 0.025m.

- Each grid has specific properties such as 1) doorflag- true when the door is on the grid, 2) logodd - score for the grids that contain objects and a few more...

- The grids not containing the obstacles, tables, walls were added to the final grid map with updated connections between each grid and its neighbours are listed.

- The final grid map is used in A* path planning algorithm.

- Dimensions of few inner walls and table were increased by a small margin so that the grids are not created close to them.

The A* algorithm is a widely used path planning algorithm for autonomous robots. Its importance to the challenge comes in the form of finding the shortest path between two points in the map. It calculates the cost to travel to a given grid and plans the path such that this cost is the least.

First, the start and goal positions are determined. In our case, the start goal at the beginning of the challenge was the point and corresponding grid where the robot starts. The goal was set as one of the grids in the cloud of grids surrounding the first table. In case the robot is unable to reach this grid due to there being an obstacle occupying the grid, another grid was selected. The subsequent start and goal grids were the current grid and a grid surrounding the next table in the sequence respectively. Next the list of all open and traversable grids was created and used to check the next available grid to be traversed. A list of closed grids was also maintained to keep track of visited grids. We then assign initial values to the cost and heuristic function for the start grid and add it to the open list. The cost function thus gives the cost to reach the current grid from the start, and the heuristic function estimates the cost from the current grid to the goal. The A* algorithm uses the sum of these two values, called the "f-value," to determine the priority of grids in the open list.

While the open list is not empty, we select the grid with the lowest f-value and remove it from the open list. Thid grid is then added to the closed list to mark that it hs been visited. The neighboring grids of the current grid are opened and their cost and heuristic values are calculated. For each neighboring grid, we calculated its f-value and update its parent grid if the cost to reach it is lower than the previously recorded cost. If the grid is not in the open list, we added it to the open list. If it is already in the open list, we updated its f-value if the newly calculated value is lower.

- Using the final grid map, optimal path was computed using A* path planning algorithm.

- The robot's current position is translated to the grid number of the start position.

- The final grid can be selected from a list of possible grids around the table. The grid which is at the shortest distance from the current position of the robot is taken as the final grid.

- A* algorithm computes an optimal path from the start position to the final grid. The grid list along the path is stored.

Following the path

- The "first/topmost" grid in the grid list is made as the immediate target grid. When the robot reaches atleast 0.3m(check the value & logic) near the grid, the next grid is given as the new target grid. This process continues until the last grid in the grid is reached where the tolerance is even smaller.

Obstacle Avoidance

- (Add open space approach and Potential field algorithm)

- But, if a grid is occupied by an obstacle which was not there in the map before, the logodd value will be higher or equal to the initial value and this grid is skipped and the next grid in the list is taken. However, if large number of grids are skipped, the robot is stopped, the map is updated based on the logodd values of each grid and this map is used to create a new path.

Opening of doors

- If the next target grid has a door flag true, we will check if the door is open or closed, based on the logadd value.

- If the door is closed, a command "Open the door" is given, wait for 5 seconds. if the door is still not open, give a command "Open the door". Repeat it until it is open.

- If the door is open, follow the regular path.

Reaching the final goal

- If the final grid around the table selected initially is occupied by an obstacle, another non-occupied grid is selected as the final goal randomly.

Localization

Localization is an important aspect of autonomous navigation, as it allows a robot to precisely determine its position and orientation. In our approach to the Restaurant Challenge, we aimed to implement localization using a particle filter. This technique combines sensor measurements with a predetermined map, compensating for imperfections and adapting to real-world scenarios. However, implementing the particle filter proved to be more challenging than we anticipated. Our team was split and overloaded with multiple tasks, which resulted in a delay in finishing the particle filter assignment. Although we did manage to complete it, our attempt to transfer it to the robot at the last minute was not successful. As a result, we decided to focus our efforts elsewhere. For the final assignment, localization was done only with odometry data. While this was not our original plan, it allowed us to successfully complete the challenge and demonstrate the capabilities of our robot.

Integration

Implementation

Occupancy Grid

Initial the object detection was done using the logic that if the laser point hits a object in a scan radius of 0.7m, the corresponding grid is identified and updated as occupies such that local path planner can update it. However, there were few issues as we decreased the grid size, the grids which fall inside the object and not on the periphery of the object were considered as unoccupied grids, which are actually occupied. To over come this we decided to expand go with a score called logodd value for each grid rather than logical flag like occupied or not occupied and also the maximum laser range was used as the scan range. The new algorithm works as follows,

- The laser scans the complete area in its range and for each laser hit point the respective grid is fond. Then we use Bresanham's algorithm to compute all the grids that connect the current grid of the robot to the hit point grid using a straight line. The logodd value for the final hit point grid is increased by 1 and all the intermediate grids are decreased by a factor of 0.5 and this logodd value can be between -100 to +100. The initial value for logodd is 5.

- Once the logodd values are updated for each grid we can identify if the grid is occupied or not.

Milestones and achievements

Occupancy Grid

Global Path Planning -

Implemented A* Path Planning Algorithm successfully. The shortest path and the path node sequence from the start position to the goal positions in the order given was successfully computed.

Obstacle Detection

Local Path Planning

Rerouting -

When the robot deviated from the original global path whenever an obstacle was encountered and avoided, another shortest path was replanned successfully from the current position of the robot to the goal. position.

Map Update

Simulator vs Real world

Discussion and future scope

//On what we saw and why

Conclusion