PRE2020 1 Group1: Difference between revisions


Revision as of 12:28, 3 September 2020

Group members

| Name | Student ID | Department |
|---|---|---|
| Davide | 1255401 | Electrical Engineering |
| Lieke Nijhuis | 0943276 | Innovation Sciences |
| Ikira Wortel | 1334336 | Electrical Engineering |
| Wout Opdam | 1241084 | Mechanical Engineering |

Problem statement and objectives

The project

This project is part of the course 0LAUK0 Robots Everywhere. The project concerns a COVID-19 detection system for choke points and crowded places.

Approach

A literature study will be performed on the feasibility of a COVID-19 detection system for choke points and crowded places. This literature study will include an investigation of possible privacy issues that can arise when such a system is deployed in public places.

A design for the hardware of the system will be made and pseudocode will be written for the software behind the system.

Also, a cost-benefit analysis will be performed to get a view on the financial feasibility of the system.

Deliverables

The deliverables for this project include:

- Literature/feasibility study of a COVID-19 detection system for choke points and crowded places, including a privacy-issue study.

- Design of the hardware of the system.

- Pseudocode of the software of the system.

- Cost-benefit analysis.

- A wiki page.

- A presentation video.

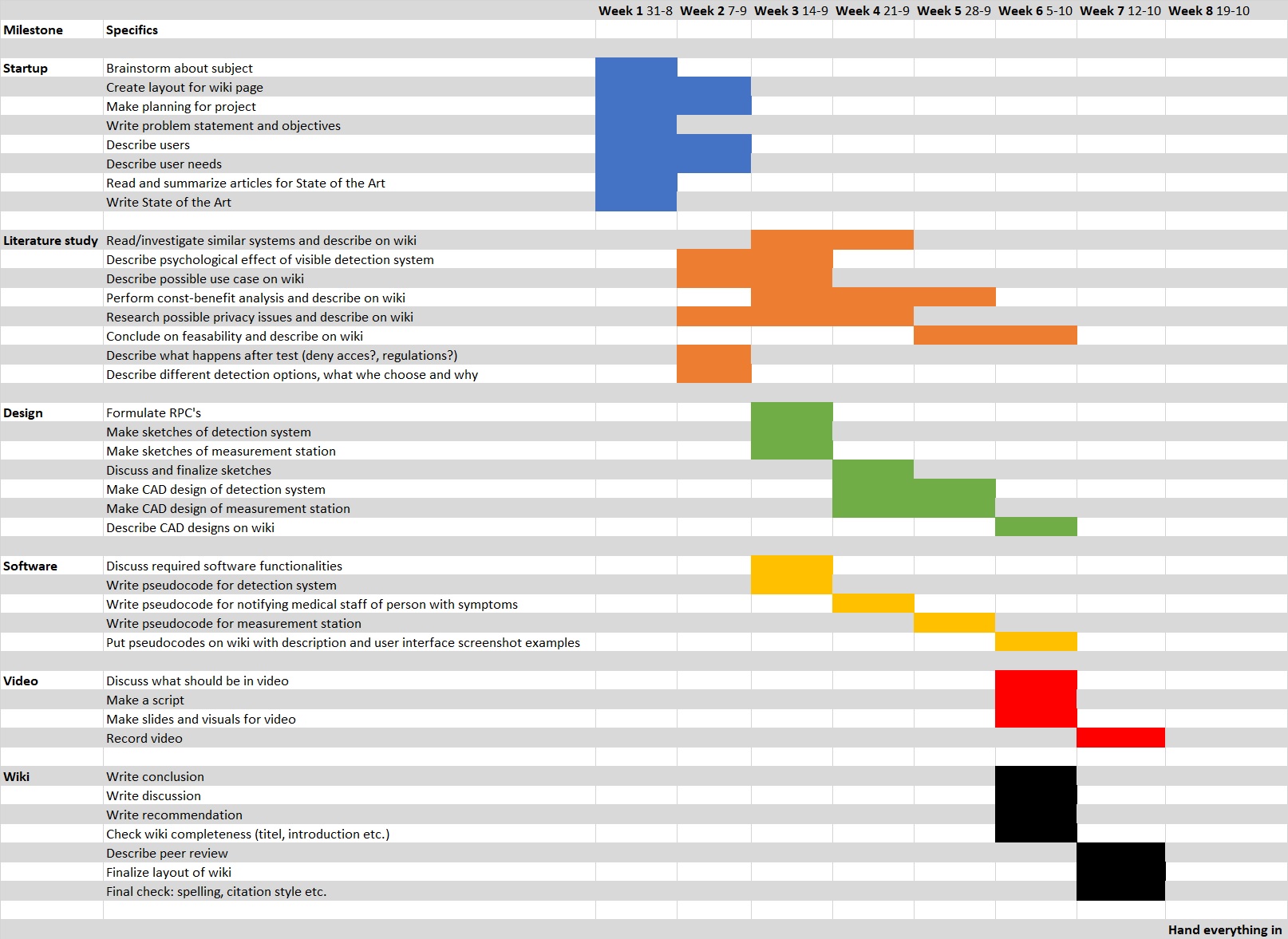

Planning and milestones

The figure gives the project planning. The leftmost column contains the milestones for the project. The column to its right contains all tasks that need to be completed in order to achieve each milestone.

Here, an overview will be given of the tasks that need to be completed per week (corresponding to the figure above), and who is responsible for them:

Week 1

| Task | Person | Completed |
|---|---|---|
| Brainstorm about subject | Everyone | Yes |
| Create layout for wiki page | Wout | Yes |
| Make planning for project | Wout | Yes |
| Write problem statement and objectives | Lieke | |
| Describe users | Ikira | |
| Describe user needs | Wout | |
| Read and summarize articles for State of the Art | Everyone | |
| Write State of the Art | Davide | |

Week 2

| Task | Person | Completed |
|---|---|---|
| Read/investigate similar systems and describe on wiki | | |
| Describe psychological effect of visible detection system | Lieke | |
| Formulate RPCs | Everyone | |
| Make sketches of camera with microphone on pole | Wout | |
| Make sketches of IR measurement station | Wout | |
| Make sketches of notification screen for 1.5m violation | Wout | |
| Discuss required software functionalities | Everyone | |
| Write pseudocode for cough detection via camera and microphone | Ikira | |

Week 3

| Task | Person | Completed |
|---|---|---|
| Read/investigate similar systems and describe on wiki | | |
| Describe psychological effect of visible detection system | Lieke | |
| Describe possible use cases on wiki (questionnaire?) | | |
| Perform cost-benefit analysis and describe on wiki | Lieke | |
| Research possible privacy issues and describe on wiki | Ikira | |
| Discuss and finalize sketches | Everyone, Wout | |
| Write pseudocode for 1.5m violation detection via camera | Ikira | |

Week 4

| Task | Person | Completed |
|---|---|---|
| Describe possible use cases on wiki (questionnaire?) | | |
| Perform cost-benefit analysis and describe on wiki | Lieke | |
| Research possible privacy issues and describe on wiki | Lieke | |
| Make CAD design of camera with microphone pole | Wout | |
| Make CAD design of 1.5m notification screen | Wout | |
| Make CAD design of IR measurement station | Wout | |
| Write pseudocode for notifying medical staff of coughing person | Ikira | |

Week 5

| Task | Person | Completed |
|---|---|---|
| Describe possible use cases on wiki (questionnaire?) | | |
| Perform cost-benefit analysis and describe on wiki | Lieke | |
| Research possible privacy issues and describe on wiki | Lieke | |
| Conclude on feasibility and describe on wiki | Ikira | |
| Make CAD design of camera with microphone pole | Wout | |
| Make CAD design of 1.5m notification screen | Wout | |
| Make CAD design of IR measurement station | Wout | |
| Write pseudocode for IR temperature measurement station | Ikira | |

Week 6

| Task | Person | Completed |
|---|---|---|
| Conclude on feasibility and describe on wiki | Ikira | |
| Describe CAD designs on wiki | Wout | |
| Put pseudocode on wiki with description and user interface screenshot examples | Ikira | |
| Discuss what should be in video | Everyone | |
| Make a script for video | Lieke | |
| Make slides and visuals for video | Lieke | |
| Write conclusion | | |
| Write discussion | | |
| Write recommendation | | |
| Check wiki completeness (title, introduction etc.) | Everyone | |

Week 7

| Task | Person | Completed |
|---|---|---|
| Record video | Lieke | |
| Describe peer review | Ikira | |
| Finalize layout of wiki | Everyone | |
| Final check: spelling, citation style etc. | Everyone | |

Week 8

| Task | Person | Completed |
|---|---|---|
| Hand everything in | Everyone | |

Users

In this section the users and their needs are identified.

Who are the users

User

The users of the robot are the people walking through choke points and crowded places, and the medical staff who are informed about people suspected of having COVID-19.

The people in crowded places will be checked to see if they are infected. If they are not, nothing happens; if they are, medical staff can pick them out and test them. This prevents people who are unaware that they are infected from spreading the virus further, which matters especially in places where it is easy to infect others.

Furthermore, the robot can check whether a distance of 1.5 meters is kept. People do not always notice how close they are standing to others; if they are told, they can keep a greater distance, which also slows the spread.
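As a minimal sketch of the 1.5 meter check, assume a camera tracker already provides each person's position on the ground plane in metres. The `positions` format, the function name `find_violations` and the example coordinates are assumptions made for illustration; they are not part of the project design.

```python
from itertools import combinations
from math import hypot

MIN_DISTANCE_M = 1.5  # distance rule mentioned in the text


def find_violations(positions, min_distance=MIN_DISTANCE_M):
    """Return pairs of person ids standing closer than min_distance.

    positions: dict mapping a person id to (x, y) ground-plane
    coordinates in metres (hypothetical output of a camera tracker).
    """
    violations = []
    # Compare every unordered pair of people exactly once.
    for (id_a, (xa, ya)), (id_b, (xb, yb)) in combinations(sorted(positions.items()), 2):
        if hypot(xa - xb, ya - yb) < min_distance:
            violations.append((id_a, id_b))
    return violations


people = {"A": (0.0, 0.0), "B": (1.0, 0.5), "C": (4.0, 4.0)}
print(find_violations(people))  # A and B are about 1.12 m apart
```

Comparing all pairs is quadratic in the number of people, which is acceptable for the small groups visible at a single choke point.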

Society

The main stakeholders for society are the government and both uninfected and infected people.

The government is a stakeholder since it would need to spend money to place the device and is responsible for the regulations around COVID-19.

The infected people are stakeholders since they can be informed whether or not they have COVID-19, which could change their behavior and limit the spread of COVID-19.

The uninfected people are stakeholders since, with a detection device for COVID-19, fewer people will walk around unaware of being infected, so infected people will take more precautions not to spread it further. Since the device also checks the 1.5 meter distance, people will keep their distance better, which slows the spread.

Enterprise

Since the robot has to be developed, the enterprise stakeholders are the companies developing the product.

What are the users needs

User

Society

Enterprise

State of the art

Can robots handle your healthcare? By Nicola Davies. Summarized by Wout.[1]

The demand for healthcare robots will rise due to an ageing population and a shortage of carers. Japan is a leading country in the field of healthcare robots. Its robots are developed in close coordination with the elderly and patients.

Safety is an important aspect for healthcare robots. They must be absolutely foolproof. In order to operate safely around humans, robots need quick reflexes, communication capabilities and interaction capabilities via video and audio. This translates into a wide range of sensors, cameras and actuators the robot needs to have. Besides a robot’s features, its looks are also important. Robots that try to impersonate actual humans, but still show imperfections, can make users uneasy.

An area in which robots can do a better job than humans is being consistent in the quality of the care that is provided. After a long difficult shift, humans can be prone to errors while robots are not affected by exhaustion or other emotions.

For simple routine healthcare tasks, robots can be a good solution.

How medical robots will change healthcare By Peter B. Nichol. Summarized by Wout.[2]

A possible major field in future healthcare is medical nanotechnology, and more specifically nanorobots. These robots will work inside the patient at the cellular level. The most important stakeholders in robotic healthcare are not the people who develop the technology, but the people who will use it.

There are three main ways in which robotics can be deployed in healthcare: Direct patient care (e.g. robots used in surgeries), Indirect patient care (e.g. robots delivering medicine), Home healthcare (e.g. robots that keep the elderly company).

Acceptance of Healthcare Robots for the Older Population: Review and Future Directions by E. Broadbent, R. Stafford, B. MacDonald. Summarized by Wout.[3]

It is projected that the proportion of people older than the age of 60 will double between 2000 and 2050. This increases the need for healthcare. Alongside this there is also a shortage of people working in the healthcare industry. In western countries, most of the older people want to remain living independently in their own homes for as long as possible. To facilitate this, smart solutions need to be found, for example healthcare robots.

Of great importance is the acceptance of healthcare robots by the people in need of care. There are three requirements for acceptance of a robot to occur: motivation for using the robot, sufficient user-friendliness and a feeling of comfort with the robot.

Healthcare robots need to be able to adapt to individual differences since not all patients are equal.

Basic healthcare robots have been developed that can assist with simple tasks such as assisting in walking, reminding patients of doctor's appointments, carrying objects, lifting patients and providing companionship. Mitsubishi sold the first commercially available mobile robot in 2005. This robot is designed to help in the home.

The older the age, the less willing people are to use robots. Older people are more fearful of new technology. However, they are more willing to accept assistive devices if this helps them maintain their independence.

Studies have shown that people prefer robots that do not have a human-like appearance. For companionship robots an animal-like appearance is preferred. Other examples of desired characteristics of a robot for the elderly are slow moving, safe, reliable, small and easy to use. Older people also appreciate having some form of control over the robot because this reinforces their sense of independence.

Medical Robots: Current Systems and Research Directions by Ryan A. Beasley. Summarized by Wout.[4]

An example of a great success in medical robotics is the surgical robot da Vinci. The biggest impact of medical robots has been improving surgeries that require great precision.

Medical robots can be employed during brain surgeries. By using medical images (e.g. CT-scan) a robotic arm can orient the tools in the correct direction, or the surgeon can specify a target which the robot guides the instrument towards with submillimetre accuracy. Medical robots are also used to assist in spine surgery.

Another application field of medical robots is orthopaedics (e.g. hip or knee replacement). An example of such a robot is Robodoc, which is used for milling automatically according to a surgical plan. Other devices, like the RIO, are designed such that the robot and the surgeon hold the tool simultaneously. The RIO ensures that the surgeon makes the correct movements.

There also exist devices which are controlled by the surgeon (technically not robots). The surgeon uses joysticks to manipulate the tools with great precision. Examples of such machines are the Zeus and the da Vinci. These systems filter out hand tremors and allow the surgeon and the patient to be at different locations.

InnoMotion is a robot arm which can accurately guide a needle using CT or MRI imaging.

Robots exist that can help a surgeon guide a tube through a patient’s blood vessel. The surgeon can steer the tube and force feedback sensors tell the surgeon how much pressure is being applied.

Robotic arms are also used in radiosurgery, in which a specific area inside the patient is targeted with beams of ionizing radiation from multiple angles.

Robots are also used to perform CPR. A band is placed around the patient’s chest which is pulled tight by an actuator to compress the chest. The tightness of the band is adjusted based on the patient’s chest size.

Microprocessor-controlled prosthetics either assist the patient's movement or are controlled by the patient to perform movements that have been lost (e.g. exoskeletons).

Another area of medical robots consists of assistive and rehabilitation systems. These include things like a controllable arm holding a spoon or a grasper which can be attached to a wheelchair.

In the future, medical robots will become smaller and cheaper, and more functionality will be added. Robots will also be used more for medical training. Medical robots need to provide solutions to real problems, otherwise there is a chance that they are displaced by other medical advancements.

Medical robots go soft by Eliza Strickland. Summarized by Wout.[5]

New kinds of medical robots are being developed of which the parts that touch the patient are soft. This is to prevent damage to organs and blood vessels and makes sure the human body does not surround the object with scar tissue which can diminish its effectiveness.

An example of a soft medical robot is a sleeve that surrounds the heart. This sleeve contains rings which can be pneumatically inflated to detect and force a heartbeat at a certain tempo.

Another example is a device which can be implanted in the body to give off small dosages of a drug. This device is made of a soft gel with a mechanism inside which can be activated from outside the body.

A final example is a soft gripper-hand made of hydrogel. The hand can be actuated by forcing water into the hand. This hand can be placed on a robotic arm and used for grabbing organs inside the human body without damaging them.

Robots in Health and Social Care: A Complementary Technology to Home Care and Telehealthcare? By T. Dahl, M. Kamel Boulos. Summarized by Lieke.[6]

This article discusses several specific robotics applications. The robots that were considered were classified in 9 categories: Surgery robots, Robots supporting Human-Robot interaction tasks, Robots providing logistics in care home environments, Telepresence and companion robots, Humanoid robots for entertaining and educating children with special needs, Robots as motivational coaches, Home assistance robots for an ageing society, Human-Robot relationships in medical care and society, and Human-Robot relationships in medical and care situations.

Across these categories, a few ingredients for success can be found. The general ingredients for success include: Adequate level of personalization, Appropriate safety levels, Proper object manipulation and navigation in unstructured environments, Patient/user safety, Reliability and robustness and Sustainability. In addition, robots that serve motivational or social purposes also need to be personable, intelligent and highly interactive.

However, there are also some concerns with using robots extensively. For example, humanoid robots need to follow an established etiquette, like following the eyes of a talking person. What also needs to be kept in mind, especially when using social robots with elderly people, is that the extensive use of robots could lead to a potential reduction of human contact, social isolation, an increasing feeling of objectification and a loss of privacy. Furthermore, cost effectiveness plays a major role in the practical use of robots.

Autonomous Robots Are Helping Kill Coronavirus in Hospitals By E Ackerman. Summarized by Lieke.[7]

UVD Robots makes robots that are mobile arrays of powerful UVC lights that kill microorganisms. UV lights on carts have been used to disinfect areas for some time, but they are dangerous for human skin, and when they are operated by humans the process is prone to error and may skip some places. The newly developed robot can autonomously disinfect a room in 10 to 15 minutes, spending 1 or 2 minutes in each of five or six positions to maximize the surface it disinfects.

Estimated effectiveness of symptom and risk screening to prevent the spread of COVID-19 By Katelyn Gostic, Ana Gomez, Riley Mummah, Adam Kucharski, James Lloyd-Smith. Summarized by Lieke.[8]

This article combines a mathematical model of the spread of emerging pathogens from 2015 with current knowledge of the epidemiological parameters of COVID-19 to estimate the extent to which traveler screening will be useful. Because COVID-19 has a long incubation period, many cases are fundamentally undetectable, so screening will miss more than half of the infected people. The share of detected cases might increase a bit when the epidemic is stable instead of growing, because the times since exposure will be more evenly distributed.

Diagnosing COVID-19: The disease and tools for detection By Buddhisha Udugama et al.. Summarized by Lieke.[9]

To detect COVID-19 in the hospital there are several tests that can be used. Molecular tests are useful because they can target and identify specific pathogens; nucleic acid testing is the primary way of testing for COVID-19. CT scans can be used but have a lower specificity because the imaging features overlap with those of other viral pneumonia.

Emerging diagnostic tests for COVID-19 are protein tests, more developed nucleic acid tests and detection at the point-of-care, such as lateral flow antigen detection and microfluidic devices.

Asymptomatic Transmission, the Achilles’ Heel of Current Strategies to Control Covid-19 By Monica Gandhi, Deborah S. Yokoe, Diane V. Havlir. Summarized by Lieke.[10]

Infection control relies heavily on early detection, but because COVID-19 has a long incubation time, symptom-based detection does not work well. This is why it is important to test continuously for COVID-19, especially among nurses, because the infection can be detected before symptoms start to manifest.

AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app. Summarized by Ikira.[11]

This paper discusses an alternative way of testing for COVID-19, since the current testing:

- Requires people to move through public places, which could spread the virus

- Puts medical staff at risk

- Costs a lot

- Does not reach everyone, since not everyone goes to a medical facility to get tested

The solution they came up with is a smartphone app that does a preliminary test based on your cough to see if you have COVID-19 or not.

The reasons for choosing this method are:

- Prior studies have shown that different respiratory syndromes have distinct features

- Coughing is the main way the virus is spread

- There are fewer medical conditions that cause a cough than a fever

The steps taken to make the smartphone app are: first, to test whether COVID-19 has specific coughing characteristics (results show it does); second, to train an engine that can distinguish a cough from background noise; third, to train an engine that can distinguish between the different types of coughs; and fourth, to check the feasibility of the app. The accuracy of the proposed app is encouraging enough to support large scale testing.
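The two engines described above form a two-stage pipeline, which can be sketched as follows. This is only an illustration of the structure: the function names, the loudness threshold and the returned label are assumptions, and the toy stand-ins below are not the trained models from the paper.

```python
from typing import Callable, Optional, Sequence

Audio = Sequence[float]  # audio samples, assumed normalized to [-1, 1]


def preliminary_screen(
    recording: Audio,
    is_cough: Callable[[Audio], bool],
    classify_cough: Callable[[Audio], str],
) -> Optional[str]:
    """Two-stage screening: reject non-cough audio, then label the cough."""
    if not is_cough(recording):
        return None  # background noise: no result
    return classify_cough(recording)


# Toy stand-ins so the sketch runs; real engines would be trained models.
def toy_is_cough(recording: Audio) -> bool:
    return max(abs(s) for s in recording) > 0.5  # loud enough to count


def toy_classify(recording: Audio) -> str:
    return "needs follow-up test"  # placeholder label, not a diagnosis


print(preliminary_screen([0.01, 0.02, -0.01], toy_is_cough, toy_classify))
print(preliminary_screen([0.1, 0.9, -0.7], toy_is_cough, toy_classify))
```

Passing the two engines in as functions keeps the pipeline independent of how each engine is trained, so either stage can be replaced without changing the other.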

A Smart Home is No Castle: Privacy Vulnerabilities of Encrypted IoT Traffic. Summarized by Ikira.[12]

Smart Home devices collect data not only when you are on the internet, but also about your daily life. There is a privacy concern if a passive network observer can deduce behavior from changes in internet traffic. Some people say that due to encryption no sensitive information can be seen; however, it can be argued that metadata and traffic patterns can reveal sensitive information. This paper gives a strategy to recognize user behavior from the traffic load of Internet of Things devices. Four Smart Home devices are used for this. For all of these devices, a network observer could infer behavioral patterns from observing the traffic load. Thus it would be necessary for devices to mask their true traffic rate. For the research in this paper a few assumptions were made. First, it is assumed there is only a passive network threat. Second, packet contents are not used. Finally, it is assumed that the observer can obtain and study those devices. The way to analyze these traffic rates is to first separate the traffic into separate packet streams, then to find which stream belongs to which device. With this the traffic rates can be examined and the behavior of the consumer can be deduced.
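The analysis steps at the end of this summary can be sketched as below, assuming the packet streams have already been attributed to devices. The tuple layout, the `device_id` labels, the one-second window and the byte threshold are assumptions made for illustration, not values from the paper.

```python
from collections import defaultdict


def traffic_rates(packets, window_s=1.0):
    """Split packets into per-device streams and sum bytes per time window.

    packets: iterable of (timestamp_s, device_id, size_bytes) tuples.
    Returns {device_id: {window_index: total_bytes}}.
    """
    rates = defaultdict(lambda: defaultdict(int))
    for timestamp, device, size in packets:
        rates[device][int(timestamp // window_s)] += size
    return {device: dict(windows) for device, windows in rates.items()}


def active_windows(windows, threshold_bytes):
    """Return the windows whose traffic exceeds the threshold."""
    return sorted(w for w, total in windows.items() if total > threshold_bytes)


# Hypothetical capture: only sizes and timing are used, never packet contents.
capture = [
    (0.2, "camera", 100), (0.7, "camera", 120),
    (1.1, "camera", 5000), (1.4, "camera", 4000),
    (1.5, "sleep_monitor", 80), (2.3, "camera", 90),
]
rates = traffic_rates(capture)
print(active_windows(rates["camera"], threshold_bytes=1000))
```

A burst in one window, such as the camera traffic in the second window here, is the kind of rate change from which an observer could infer user activity even though the payload is encrypted.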

Multimedia Companion for Patients in Minimally Conscious State (MCS)

The idea is to create a multimedia system for patients in MCS. This system would project films, music and videos of family members. This system can communicate with the patient only with binary (yes or no) questions. Communication is used to let the patient navigate the multimedia system (i.e. choosing what to watch or do). The yes or no answers are given by the patient's ability to modulate brain activity. The patient's response is decoded by sensing which areas of the brain intensify in activity.

System: "Do you want to watch the news? If YES imagine playing tennis. If NO imagine walking in the rooms of your house."

Patient: The patient imagines playing tennis.

The motor area of the patient's brain activates.

Activity is recorded by the system.

The system plays the latest bulletin.
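The decoding step in this exchange can be sketched as a comparison between two activity measurements. The normalized activity values, the `margin` parameter and the function name are assumptions made for illustration; a real system would derive these signals from neuroimaging data.

```python
def decode_answer(motor_activity, spatial_activity, margin=0.2):
    """Map imagined-task brain activity to a yes/no answer.

    motor_activity: activation change in the motor area (tennis = YES).
    spatial_activity: activation change in spatial-navigation areas
        (walking through the house = NO).
    margin: hypothetical minimum difference needed to accept an answer.
    Returns "YES", "NO", or None when the signal is too ambiguous.
    """
    difference = motor_activity - spatial_activity
    if difference > margin:
        return "YES"
    if difference < -margin:
        return "NO"
    return None


print(decode_answer(motor_activity=0.9, spatial_activity=0.1))
```

Returning `None` for ambiguous measurements lets the system repeat the question instead of acting on a guess.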

Articles

First discovery using functional Magnetic Resonance Imaging (fMRI) and PET.

The Aware Mind in the Motionless Body (M. M. Monti, A. M. Owen, 2010)[13] & Detecting Awareness in the Vegetative State (A. Owen et al., 2006)[14]

Case Study: Martha

Martha, a young woman not yet 30 years old, is the victim of a car accident. She survives the accident and slips into a coma; her eyes are closed, her body is motionless and she is unable to breathe autonomously. After a week her eyes open, and her body is able to breathe autonomously again and even makes small movements. For example, she yawns, stretches her arms and slightly moves her body in her bed. It seems she is even able to sleep and wake up.

Vegetative State, Comatose state, and sleep

When a patient in a comatose state is able to sleep and wake up again, she/he is said to be in the Vegetative State (VS). A patient in a vegetative state might have his/her eyes open, can breathe autonomously, can move his/her body, can produce urine, and can vocalize sounds. The big question is: are patients in a vegetative state conscious? Consciousness is made of wakefulness and awareness. A healthy person is both awake and aware when not asleep. A comatose patient is neither awake nor aware, while a patient in a vegetative state is awake but not aware. However, around 40% of patients diagnosed as being in the vegetative state seem to be aware. These misdiagnosed patients are said to be in a Minimally Conscious State (MCS). Often, while diagnosing patients, the “Lack of evidence of consciousness is, in this situation, equated to evidence of lack of consciousness” (Monti, Owen, 2008), i.e. a false negative. To distinguish patients in MCS from VS one of three factors must be met:

• Awareness of the environment

• Sustained, reproducible, purposeful or voluntary response to visual or tactile stimuli

• Language comprehension and/or expression

Novel neuroimaging techniques are used to detect markers of consciousness, such as Positron Emission Tomography (PET) and functional Magnetic Resonance Imaging (fMRI).

Experiment 1: brain response to speech of patients in VS

Two patients in VS showed brain activity similar to that of healthy volunteers in response to speech and pictures of faces. The two patients in VS listened to simple and complex (semantically ambiguous) sentences. The complex sentences activated the same brain area in the VS patients as in healthy volunteers. Furthermore, similar experiments were conducted with heavily sedated healthy volunteers, in whom no activation of the same area was recorded. This shows a higher level of awareness (some language comprehension) in the VS patients than in the sedated volunteers. Nevertheless, simple brain responses cannot be taken as evidence of awareness or consciousness. Much of sensory processing is automatic, such as the processing of lines depicting a face or of speech in a familiar language. Therefore, the type of stimuli and the type of brain activity recorded must be chosen carefully.

Experiment 2: voluntary modulation of brain activity in VS patient

A young woman, the victim of a car accident, slips into a coma, then wakes up again, but is diagnosed as being in the Vegetative State. The patient was given spoken instructions. The first instruction was to imagine playing tennis. The second was to imagine walking in her own apartment. When the patient was instructed to imagine playing tennis, intense activity in the motor area of the brain was recorded. When she was asked to imagine walking in her apartment, activity was recorded in the parahippocampal gyrus (memory retrieval area), the posterior parietal cortex (spatial reasoning area) and some motor areas. Healthy control volunteers given the same instructions showed the same brain activity as the VS patient, unless they did not understand the instructions or decided not to comply with them. This experiment therefore discredits the idea of an automatic brain response.

A perturbational approach for evaluating the brain’s capacity for consciousness (M. Massimini et al. 2009)[15]

This technique does not confirm whether a person is conscious or not. Instead, it weighs the capacity of the brain for consciousness.

1. Start from a theory describing how consciousness is created. Integrated Information Theory of Consciousness (IITC) suggests that consciousness is created by a physical system.

2. Measure the brain’s capacity to generate consciousness. Measurement is done by poking (exciting) the concerned physical systems with TMS and recording the response with hd-EEG.

Workload

An overview of who has done what per week of the project.

Week 1

| Name | Student ID | Hours this week | Tasks |
|---|---|---|---|
| Davide | 1255401 | | |
| Lieke Nijhuis | 0943276 | Intro videos + first brainstorm (1.5 hours) + Studied and wrote summaries for papers: [6], [7], [8], [9], [10] (4.5 hours) + second meeting + sending e-mail (45 minutes) | |
| Ikira Wortel | 1334336 | Intro videos + first brainstorm (1.5 hours) + Studied and wrote summaries for papers: [11], [12] (2 hours) + extra meeting discussing chosen subject (30 min) | |
| Wout Opdam | 1241084 | Intro videos + first brainstorm (1.5 hours) + Start with wiki layout (1 hour) + Studied and wrote summaries for papers: [1], [2], [3], [4] and [5] (5 hours) + wrote about approach and deliverables (0.5 hour) + Made planning for project (2.5 hours) + extra meeting discussing chosen subject (0.5 hour) | |

References

1. Can robots handle your healthcare?. Nicola Davies (2016, December 15). Engineering & Technology Volume 11, Issue 9, pp. 58-61. Retrieved August 31, 2020.

2. How medical robots will change healthcare. Peter B. Nichol (2016, March 23). CIO, Framingham. Retrieved August 31, 2020.

3. Acceptance of Healthcare Robots for the Older Population: Review and Future Directions. E. Broadbent, R. Stafford, B. MacDonald (2009, October 3). International Journal of Social Robotics (2009) 1: 319–330. Retrieved August 31, 2020.

4. Medical Robots: Current Systems and Research Directions. Ryan A. Beasley (2012, July 6). Journal of Robotics Volume 2012. Retrieved August 31, 2020.

5. Medical robots go soft. Eliza Strickland (2017, April). IEEE Spectrum, volume 54, issue 4 (2017). Retrieved August 31, 2020.

6. Robots in Health and Social Care: A Complementary Technology to Home Care and Telehealthcare?. Torbjorn S. Dahl and Maged N. Kamel Boulos (2013, November 1). Robotics (3.1). Retrieved September 1, 2020.

7. Autonomous Robots Are Helping Kill Coronavirus in Hospitals. E Ackerman (2020). IEEE Spectrum. Retrieved September 1, 2020.

8. Estimated effectiveness of symptom and risk screening to prevent the spread of COVID-19. Katelyn Gostic, Ana Gomez, Riley Mummah, Adam Kucharski, James Lloyd-Smith (2020). eLife 9. Retrieved September 3, 2020.

9. Diagnosing COVID-19: The disease and tools for detection. Buddhisha Udugama, Pranav Kadhiresan, Hannah N. Kozlowski, Ayden Malekjahani, Matthew Osborne, Vanessa Y. C. Li, Hongmin Chen, Samira Mubareka, Jonathan B. Gubbay, and Warren C. W. Chan (2020, March 30). ACS Nano 2020, 14, 4, 3822-3835. Retrieved September 3, 2020.

10. Asymptomatic Transmission, the Achilles’ Heel of Current Strategies to Control Covid-19. Monica Gandhi, Deborah S. Yokoe, Diane V. Havlir (May 2020). N Engl J Med 2020 382:2158-2160. Retrieved September 3, 2020.

11. AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app. Imran A., Posokhova I., Qureshi H.I., Masood U., Riaz M.S., Ali K., John C.N., Hussain M.I., Nabeel M. (2020). Elsevier. Retrieved September 2, 2020.

12. A Smart Home is No Castle: Privacy Vulnerabilities of Encrypted IoT Traffic. N. Apthorpe, D. Reisman and N. Feamster (2017). Retrieved September 2, 2020.

13. Monti, M. M., & Owen, A. M. (2010). The aware mind in the motionless body. Psychologist, 23(6), 478–481.

14. Owen, A. M., Coleman, M. R., Boly, M., Davis, M. H., Laureys, S., & Pickard, J. D. (2006). Detecting Awareness in the Vegetative State. Science, 313(5792), 1402.

15. Massimini, M., Boly, M., Casali, A., Rosanova, M., & Tononi, G. (2009). A perturbational approach for evaluating the brain’s capacity for consciousness. In Progress in Brain Research (Vol. 177, Issue C, pp. 201–214). Elsevier.