Embedded Motion Control 2014 Group 10

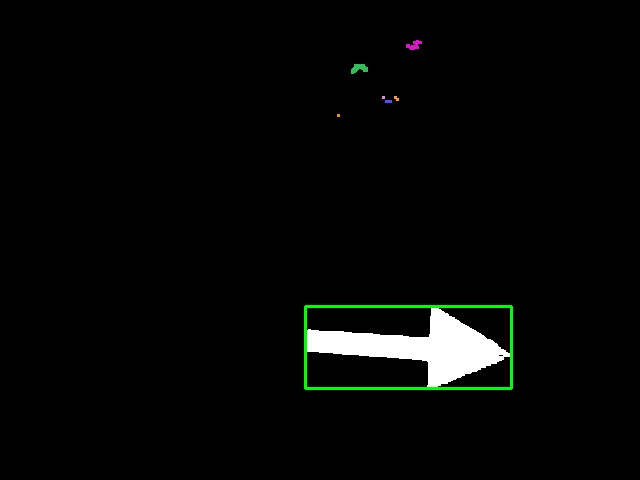

Revision as of 10:33, 12 June 2014

Members of group 10

| Name | Student number |
|---|---|
| Bas Houben | 0651942 |
| Marouschka Majoor | 0660462 |
| Eric de Mooij | 0734166 |
| Nico de Mooij | 0716343 |

Planning

Week 1

- Introduction

- Install Ubuntu

Week 2

- Determine the course goals

- Brainstorm about the robot control architecture

- Start with the tutorials

Week 3

- Continue with the tutorials

- Install ROS/Qt

- Finish robot control architecture

- Brainstorm about collision principle

- Brainstorm about navigation

Week 4

- Meet with the tutor

- Finish installation

- Finish tutorials

- Write collision detection

- Write corridor navigation

- Test the written code on Pico

- Corridor challenge (Recorded performance)

Concepts

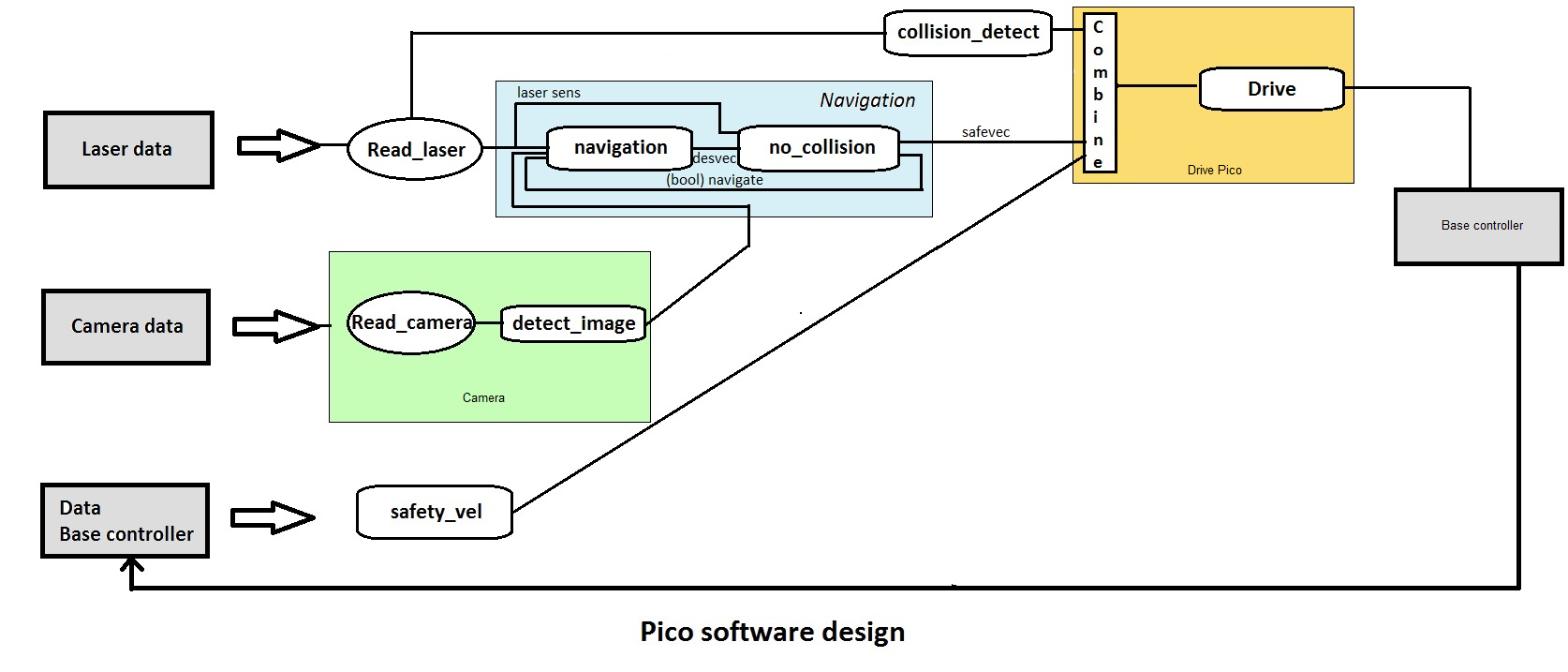

Robot program architecture

The idea of our architecture is to create different process layers. By splitting the incoming signals and combining them later, the tasks can be divided and the different processes can run in parallel. Layers are based on the available sensors as much as possible.

Wall detector

The wall detector determines whether the robot is close to a wall by measuring the unblocked distance on each of its two sides. When either side is too close (within 30 cm), the robot turns parallel to that wall and keeps a distance of 30 cm from it.
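
A minimal Python sketch of the side check is shown below. It assumes a ROS-style laser scan described by `ranges`, `angle_min` and `angle_increment` (the field names of `sensor_msgs/LaserScan`), with 0 rad pointing forward and positive angles to the left. The ±15 degree side sectors and the function names are hypothetical; only the 30 cm threshold comes from the text. The sketch only reports which side is too close; turning parallel to the wall is left to the motion layer.

```python
import math

SAFE_DISTANCE = 0.30  # metres (30 cm, from the text)


def side_distances(ranges, angle_min, angle_increment, half_width=math.radians(15)):
    """Return (left, right): the smallest valid range in sectors centred on +90 and -90 degrees.

    Beam i has angle angle_min + i * angle_increment; 0 rad is straight ahead and
    positive angles are to the left. The sector half-width is a hypothetical choice.
    """
    left = right = math.inf
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip invalid readings
        a = angle_min + i * angle_increment
        if abs(a - math.pi / 2) <= half_width:
            left = min(left, r)
        elif abs(a + math.pi / 2) <= half_width:
            right = min(right, r)
    return left, right


def wall_check(ranges, angle_min, angle_increment):
    """Return 'left' or 'right' if that wall is closer than SAFE_DISTANCE, otherwise None."""
    left, right = side_distances(ranges, angle_min, angle_increment)
    if min(left, right) >= SAFE_DISTANCE:
        return None
    return "left" if left < right else "right"
```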

Intersection detection

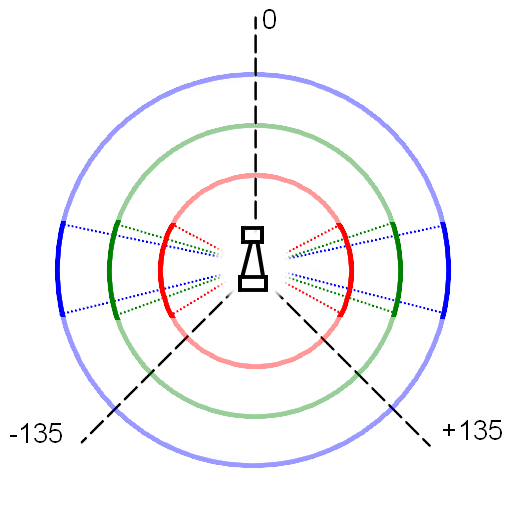

When driving forward, Pico uses the distances measured by the laser at different angles to detect corridors. These distances are visualized in the figure. The red circle sector covers 72 degrees with a radius of 0.5 m, the green circle sector covers 35 degrees with a radius of 1.0 m and the blue circle sector covers 25 degrees with a radius of 1.5 m. The outer tips of these sectors, outside of Pico, lie on two parallel lines 60 cm apart. When none of these sectors contains an obstacle, the direction is considered to be a corridor.

Holes narrower than 0.30 m will never be detected as a corridor, because the narrowest part of the laser bundle outside Pico is 0.30 m wide (just outside the area covered by the red circle sector, inside the blue circle sector). The smallest hole that will always be detected as a corridor is 0.60 m wide, since the laser bundle covers a width of 0.60 m. Holes between 0.30 m and 0.60 m may be detected as a corridor, depending on the length of the exit and Pico's position.
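
The sector test can be sketched in Python as follows, using the same hypothetical scan representation as a ROS `sensor_msgs/LaserScan` (`ranges`, `angle_min`, `angle_increment`). The sector sizes are taken from the text; the function names, and the choice to treat a sector without any valid beam as blocked, are assumptions of this sketch.

```python
import math

# (opening angle in degrees, radius in metres) of the red, green and blue sectors
SECTORS = [(72.0, 0.5), (35.0, 1.0), (25.0, 1.5)]


def sector_free(ranges, angle_min, angle_increment, centre, width_deg, radius):
    """True if no valid beam inside the sector measures less than radius.

    A sector without any valid beam (e.g. outside the scanner's field of view)
    is reported as not free, so unknown space is never mistaken for a corridor.
    """
    half = math.radians(width_deg) / 2.0
    seen = 0
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue  # skip invalid readings
        a = angle_min + i * angle_increment
        if abs(a - centre) <= half:
            seen += 1
            if r < radius:
                return False
    return seen > 0


def corridor_open(ranges, angle_min, angle_increment, centre):
    """A direction (radians, 0 = forward) counts as a corridor when all three sectors are free."""
    return all(
        sector_free(ranges, angle_min, angle_increment, centre, width, radius)
        for width, radius in SECTORS
    )
```

Assuming all sectors start at the laser origin, each tip lies r·sin(θ/2) from the centre line, which gives about 0.29 m, 0.30 m and 0.32 m for the red, green and blue sectors, consistent with the roughly 60 cm wide bundle described above.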

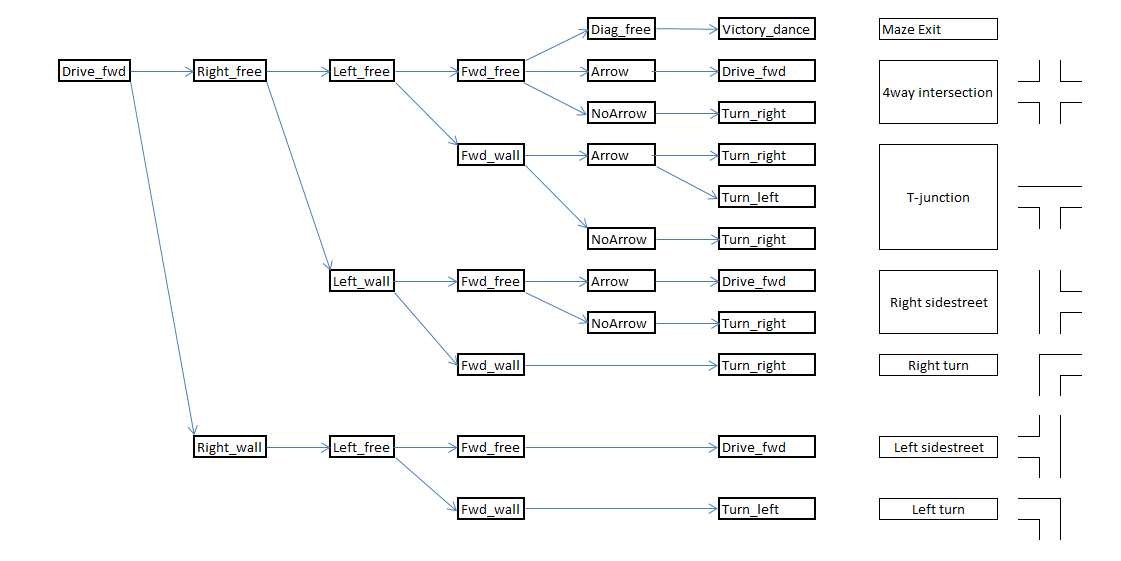

Intersection determination

Based on the results of the intersection detection, Pico determines which situation he is in. In the maze, these situations can be 4-way intersections, corners and T-junctions. The figure shows how each situation is determined from the presence of a wall or corridor in the forward, left and right detectors. At this point, arrows cannot yet be detected, so that part of the protocol is skipped. Once arrow recognition is implemented, the arrows will be used to choose the correct corridor at T-junctions, or to move towards a T-junction at the end of a corridor when an arrow is detected in the distance.
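
One possible way to map the three detector outputs to a situation is sketched below in Python. The mapping, the function name and the extra labels "straight corridor" and "dead end" are hypothetical, and the group's own decision table in the figure may differ. Arrow handling is left out, matching the current state of the protocol.

```python
def classify_situation(front_open, left_open, right_open):
    """Map the forward, left and right detector outputs to a maze situation (hypothetical mapping)."""
    openings = sum((front_open, left_open, right_open))
    if openings == 3:
        return "4-way intersection"
    if openings == 2:
        # Either a classic T (left and right open) or a side T-junction (front plus one side).
        return "T-junction"
    if openings == 1:
        return "straight corridor" if front_open else "corner"
    return "dead end"
```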

Moving through intersections and corners

Once Pico has detected a situation and decided whether to turn, he will rotate +90 or -90 degrees. After the rotation, Pico will drive forward a small distance to position himself fully in the next corridor and will then look around once more.
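
The rotation step needs a target heading that is handled correctly when it crosses ±180 degrees. The Python helpers below are a sketch assuming the yaw is available in radians from odometry with counterclockwise (left) positive, as in the ROS convention; the function names and the 2 degree tolerance are hypothetical.

```python
import math


def normalize_angle(angle):
    """Wrap an angle in radians into the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def turn_target(current_yaw, direction):
    """Target yaw after a quarter turn; direction is +1 for left, -1 for right."""
    return normalize_angle(current_yaw + direction * math.pi / 2)


def turn_done(current_yaw, target_yaw, tolerance=math.radians(2.0)):
    """True once the remaining heading error is within the tolerance."""
    return abs(normalize_angle(target_yaw - current_yaw)) <= tolerance
```

Comparing the wrapped difference, rather than the raw angles, keeps the check correct when the robot's heading jumps from +180 to -180 degrees during the turn.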

Arrow Detection

To recognize whether a given arrow points to the left or to the right, and to let Pico react to it, the following steps are performed while Pico is driving:

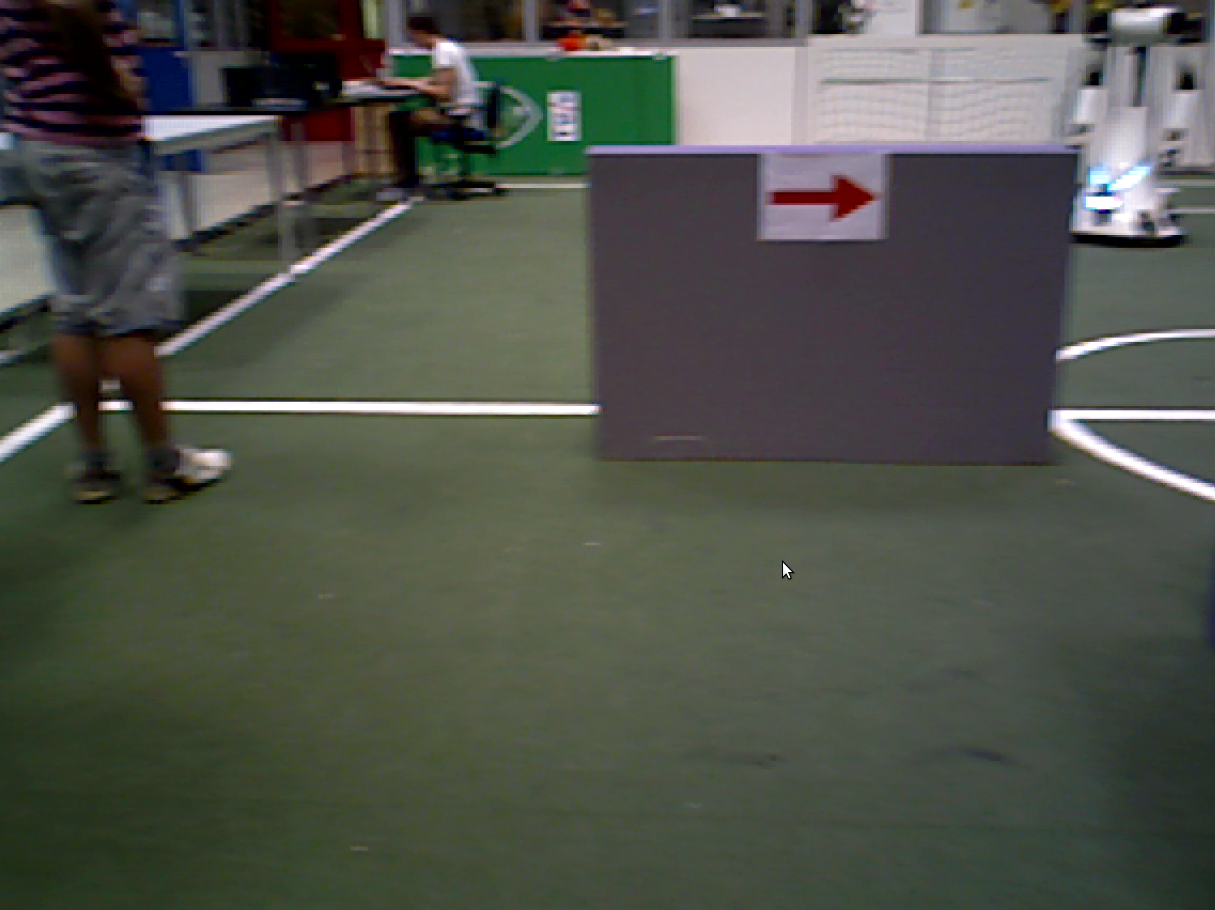

Step 1: The ROS image is converted to an OpenCV image. The picture used as an example:

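Step 2: There are different color spaces to describe pictures. In our case the RGB image is converted to an HSV (hue, saturation, value) image. HSV is commonly used in computer-based graphic design, and it suits this task because the hue describes the color independently of its brightness, which makes red easier to separate under varying lighting. Converting RGB to HSV and vice versa is unambiguous. This results in the following picture: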

Step 3: The red pixels are filtered out; in other words, the HSV image is thresholded, which results in a binary image. The thresholded picture looks as follows:

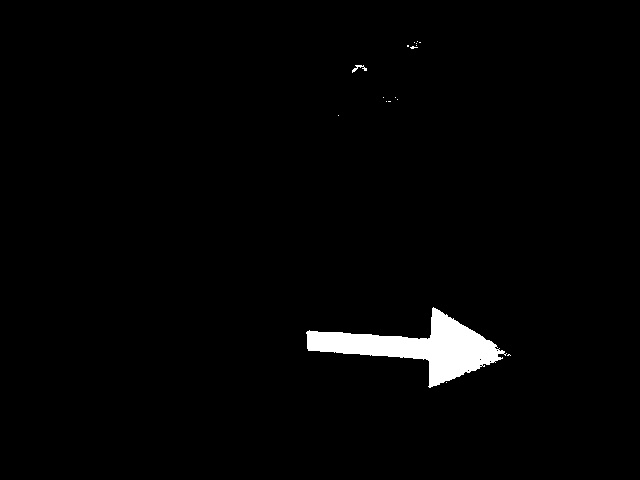
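
Steps 2 and 3 can be illustrated without OpenCV using Python's standard `colorsys` module, which returns hue, saturation and value in the range 0 to 1. The image is assumed to be a list of rows of `(r, g, b)` tuples with values 0 to 255, and the threshold values are hypothetical. Because red lies at both ends of the hue circle, the hue test accepts values near 0 as well as near 1. In OpenCV itself this would typically be `cv::cvtColor` followed by `cv::inRange`, keeping in mind that OpenCV stores color images in BGR order and scales hue to 0 to 179 for 8-bit images.

```python
import colorsys

# Hypothetical thresholds; colorsys returns h, s and v in [0, 1]
HUE_MARGIN = 0.05  # accept hues within about 18 degrees of pure red
MIN_SAT = 0.5
MIN_VAL = 0.3


def is_red(r, g, b):
    """Classify one 8-bit RGB pixel as red using its HSV representation."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Red sits at both ends of the hue circle, so test both sides of 0.
    near_red = h <= HUE_MARGIN or h >= 1.0 - HUE_MARGIN
    return near_red and s >= MIN_SAT and v >= MIN_VAL


def threshold_red(image):
    """Turn a list of rows of (r, g, b) tuples into a binary mask of 0/1 values."""
    return [[1 if is_red(*px) else 0 for px in row] for row in image]
```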

Step 4: In the binary image, the red spots have slightly frayed contours. Therefore the image is blurred: the contours are smoothed and some pixels are filled in to create a solid area. The filling is done with the flood fill algorithm, which works a bit like the Minesweeper game: starting from one pixel, it spreads to all connected neighbouring pixels of the same value. This results in the following picture:
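
The filling part of Step 4 can be sketched with a breadth-first flood fill. A common way to fill holes, assumed here rather than taken from the group's code, is to flood the background from the image border: every background pixel that the fill cannot reach is enclosed by a blob and is therefore set to foreground. The blurring itself is not shown; OpenCV provides `cv::floodFill` and blur functions such as `cv::medianBlur` for those operations. The function name is hypothetical.

```python
from collections import deque


def fill_holes(mask):
    """Fill background regions that are fully enclosed by foreground (4-connected flood fill)."""
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the fill with every background pixel on the image border.
    for y in range(h):
        for x in range(w):
            if (y in (0, h - 1) or x in (0, w - 1)) and mask[y][x] == 0:
                outside[y][x] = True
                queue.append((y, x))
    # Spread through connected background pixels, like revealing an empty area in Minesweeper.
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not outside[ny][nx] and mask[ny][nx] == 0:
                outside[ny][nx] = True
                queue.append((ny, nx))
    # Background pixels the fill never reached are enclosed holes: make them foreground.
    return [[1 if mask[y][x] or not outside[y][x] else 0 for x in range(w)] for y in range(h)]
```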

Step 5: With the OpenCV function findContours, the contours of all red blobs are found. For each contour found, the corresponding blob is coloured differently. This results in the following picture:
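
`findContours` traces blob outlines, which is hard to reproduce briefly, so the Python sketch below substitutes a simpler technique: connected-component labelling with a breadth-first search. It gives every red blob its own label, which has the same effect as colouring each blob differently. OpenCV 3 and later offer this directly as `cv::connectedComponents`. The function name is hypothetical.

```python
from collections import deque


def label_blobs(mask):
    """Give each 4-connected blob of 1-pixels its own label (1, 2, ...); 0 stays background.

    Returns (labels, number_of_blobs).
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    next_label = 0
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] and not labels[sy][sx]:
                next_label += 1  # start a new blob
                labels[sy][sx] = next_label
                queue = deque([(sy, sx)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return labels, next_label
```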

Step 6: Next, the area inside each contour is calculated, and a loop determines the largest one. The largest area is assumed to be the arrow. (This is an important assumption: another red area that is larger than the arrow may make the arrow recognition fail.) Once the largest area is found, the tightest rectangle enclosing it is drawn. This results in the following picture:
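
Given a label image (a grid in which 0 is background and every blob has its own positive label, as produced by a labelling step), Step 6 reduces to counting pixels per label and tracking each label's extreme coordinates. In this hypothetical Python sketch the area is a pixel count rather than the contour area that `cv::contourArea` would return, and the rectangle is the upright bounding box, comparable to `cv::boundingRect`.

```python
def largest_blob(labels):
    """Return (label, area, (x_min, y_min, x_max, y_max)) of the largest blob, or None if there is none."""
    areas = {}
    boxes = {}
    for y, row in enumerate(labels):
        for x, lab in enumerate(row):
            if lab == 0:
                continue  # background
            areas[lab] = areas.get(lab, 0) + 1
            x0, y0, x1, y1 = boxes.get(lab, (x, y, x, y))
            boxes[lab] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    if not areas:
        return None
    best = max(areas, key=areas.get)  # assumption from the text: the largest blob is the arrow
    return best, areas[best], boxes[best]
```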

Step 7: The number of dots (red pixels) in the largest area is counted. A minimum number of dots is required before the area is accepted as a possible arrow.

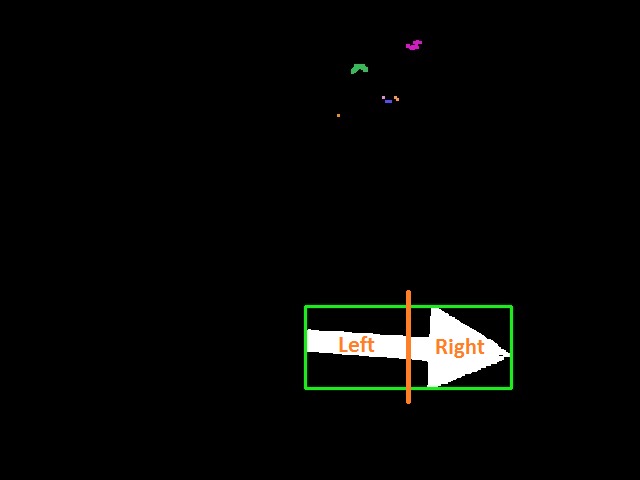

Step 8: The rectangle is now divided into two equal parts, a left and a right part. This is done by creating two ROIs (regions of interest) based on the coordinates of the rectangle. This can be visualised as follows:

Step 9: In this last step, the direction of the arrow is recognized. The number of red dots is counted for the left part as well as for the right part. The arrow points to the side with the most red dots.
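
Steps 7 to 9 can be combined in one function, sketched here in Python. It assumes the binary mask from the thresholding step and the upright bounding box `(x_min, y_min, x_max, y_max)` of the largest blob, both as plain lists and tuples; the function name and the minimum pixel count are hypothetical. When the box has an odd width, the middle column is left out so that both halves are exactly equal in size. As with a plain ROI, pixels of other blobs that fall inside the box are counted too.

```python
MIN_ARROW_PIXELS = 200  # hypothetical minimum number of red pixels (Step 7)


def arrow_direction(mask, box, min_pixels=MIN_ARROW_PIXELS):
    """Return 'left', 'right' or None for the red pixels inside box.

    mask is a list of rows of 0/1 values; box is (x_min, y_min, x_max, y_max), inclusive.
    """
    x_min, y_min, x_max, y_max = box
    rows = range(y_min, y_max + 1)
    total = sum(mask[y][x] for y in rows for x in range(x_min, x_max + 1))
    if total < min_pixels:
        return None  # too few red pixels to be an arrow (Step 7)
    half = (x_max - x_min + 1) // 2  # width of each ROI; an odd middle column is skipped
    left = sum(mask[y][x] for y in rows for x in range(x_min, x_min + half))
    right = sum(mask[y][x] for y in rows for x in range(x_max - half + 1, x_max + 1))
    if left == right:
        return None  # no clear winner
    return "left" if left > right else "right"  # the arrow points to the fuller half (Step 9)
```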