PRE2019 4 Group4


<div style="font-family: 'Arial'; font-size: 16px; line-height: 1.5; max-width: 1300px; word-wrap: break-word; color: #333; font-weight: 400; box-shadow: 0px 25px 35px -5px rgba(0,0,0,0.75); margin-left: auto; margin-right: auto; padding: 70px; background-color: rgb(255, 255, 253); padding-top: 25px; transform: rotate(0deg)">

<font size='6'>Emotion Recognition in Companion Robots for Elderly People</font>

==Presentation==

https://youtu.be/1-yBVy1HmfU

==Group Members==

{|


== Problem Statement ==

As the years pass, everyone gets older and starts to lose some abilities as a natural consequence of human biology. Older people become more vulnerable in terms of health: their body movements slow down, and the communication between the neurons in their brain slows down, which may cause mental problems. As a result, they depend more on other people to maintain their lives properly. In other words, older people may need to be cared for by someone else because of their physical decline.

However, some elderly people are not lucky enough to have someone to support them. For those people, the good news is that technology and artificial intelligence are developing, and one of the great applications is care robots for elderly people. Although care robots are a great idea and are likely to make human life easier, the technology brings some concerns and ambiguities along with it. Since communication with elderly people is essential for caring, the benefit of care robots increases as the communication between the user and the robot becomes clearer and easier. Moreover, emotion is one of the most important tools for communication, which is why we aim to investigate the use of emotion recognition in care robots with the help of artificial intelligence. Our main goal is to make the communication between an elderly person and the robot more powerful by making the robot understand the current emotional state of the user and behave accordingly.

== Users and their needs ==


* Research the opinions of the elderly and their wishes/concerns <br/>

'''Week3:''' <br/>
* Analyze how facial expressions change for different emotions <br/>
* Analyze different aspects of this technology (for example, how the robot should react to different emotions) <br/>
* The use of convolutional neural networks for facial emotion recognition <br/>

'''Week4:''' <br/>
* Find a database of face pictures that is large enough for CNN training <br/>
* Start the implementation of the facial emotion recognition <br/>
* Companionship and human-robot relations <br/>

'''Week5:''' <br/>
* Implementation of the facial emotion recognition <br/>

'''Week6:''' <br/>
* Testing and improving <br/>
* Designing the robot <br/>

'''Week7:''' <br/>
* Software results analysis <br/>
* Designing facial expressions <br/>
* Prepare presentation <br/>

'''Week8:''' <br/>
* Derive conclusions and possible future improvements <br/>
* Finalize presentation. <br/>

'''Week9:''' <br/>
* Make quiz for human vs software accuracy comparison <br/>
* Finalize wiki page. <br/>

== Deliverables: ==


== State of the art ==

===Companion robots that already exist===

====SAM robotic concierge====

Luvozo PBC, which focuses on developing solutions for improving the quality of life of elderly people, started testing its product SAM in a leading senior living community in Washington. SAM is a smiling, human-sized concierge robot whose task is to check on residents in long-term care settings. The robot made patients more satisfied, as they felt that someone was checking on them all the time, and it reduced the cost of care.<br/>

[[File:Samrobot.jpg|center|400px]]

====ROBEAR====

Developed by scientists from RIKEN and Sumitomo Riko Company, ROBEAR is a nursing care robot that provides physical help to elderly patients, such as lifting them from a bed into a wheelchair or supporting patients who are able to stand up but require assistance. It is called ROBEAR because it is shaped like a giant, gentle bear.<br/>

[[File:Robear.jpg|center|400px]]

====PARO====

PARO is an advanced interactive robot intended for the therapy of elderly people in nursing homes. It is shaped like a baby harp seal, has a calming effect on patients and elicits emotional responses from them. It can actively seek out eye contact, respond to touch, cuddle with people, remember faces, and learn actions that generate a favorable reaction. The benefits of PARO for elderly people have been documented.

[[File:paro-robot.jpg |center |400px]]

=== Existing robots that are able to perform NLP ===

==== Sophia ====

Sophia is a social humanoid robot developed by Hanson Robotics. She can follow faces, sustain eye contact, recognize individuals and, most importantly, process human language and speak using pre-written responses. She is also able to simulate more than 60 facial expressions.

[[File:sophia.jpg |center |300px]]

==== Apple's Siri ====

Siri is a voice assistant embedded in Apple devices. The assistant uses voice queries and a natural-language user interface to perform actions such as giving responses and making recommendations. It processes different human languages and responds in the same language. Siri is still in active use at present.

[[File:siri.jpg |center |250px]]

==Elderly wishes and concerns==

The interview was conducted with professionals working in elderly care. Below are the questions, with generalized answers from the caregivers who took part in the interview.

'''Interview Questions and Answers'''

*What common measures do the elderly take against loneliness? <br/>
::The elderly are passive towards their loneliness, or are often physically or mentally not capable of changing it.
*Are the elderly motivated to battle their loneliness? If so, how? <br/>
::A lot of activities are organized.<br/>
::Caregivers think about ways to spend the day and provide companionship as much as possible. <br/>
*Do lonely elderly people have more need of companionship or of an interlocutor? For example, a dog is a companion but not someone to talk to, while a family member who calls frequently is an interlocutor but does not provide companionship. <br/>
::This differs per person. <br/>
::It is hard for them to maintain a conversation because it is difficult to follow or hear. Most are not able to care for a pet. Many insecurities that come with age are reasons to remain at home. <br/>
*Are there, despite installed safety measures, a lot of unnoticed accidents? For example, falling or involuntary defecation. <br/>
::Alcohol addiction. <br/>
::Bad eating habits. <br/>
*If the caregivers could be better warned against one type of accident, which one would it be and why?
*Are there clear signals that point to loneliness or sadness? <br/>
::Physical self-neglect.<br/>
::Sadness and emotional distance.<br/>
*What is your method to cheer up sad and lonely elderly people? <br/>
::Giving them extra personal attention. <br/>
::Supplying options to battle sadness and helping them accomplish this. <br/>

'''Results'''

Loneliness is one of the biggest challenges for caregivers of the elderly. Theoretically, the elderly do not have to be lonely. There are many options for finding companionship and activity, and these are provided or promoted by caregivers. However, old age comes with many disadvantages. Activities become too difficult or intense, the elderly may have difficulty keeping up a conversation in person or by phone, and they are not able to care for companions such as pets.

Common signs of loneliness are visible sadness, confusion, bad eating habits and general self-neglect. According to caregivers, attention is the only real cure. Because the elderly lose the ability to look for companionship themselves, this attention should come from others, without requiring input from the elderly.

'''Conclusions'''

Since the elderly lose the ability to find companionship and hold meaningful conversations, loneliness is very likely, especially when the people close to them die or move out of their direct surroundings. A companion robot that provides some attention may be a useful supplement to the attention that caregivers and family provide.

==RPCs==

===Requirements===

====Emotion recognition software requirements====

*The software shall capture the face of the person
*The software shall recognize when the person is happy
*The software shall recognize when the person is sad
*The software shall recognize when the person is angry
*The software shall be at least as accurate as humans at recognizing emotions from photos

====Companion robot requirements====

*The robot shall respond empathetically to the recognized emotion. This entails:
:*When the person is happy, the robot shall act in a way that reinforces this
:*When the person is sad, the robot shall act in a way that cheers the client up
:*When the person is angry, the robot shall act in a way that defuses the situation
* The robot shall be able to give a hug

===Preferences===

*The software can recognize other emotions as well.

===Constraints===

* The robot can only use camera captures of faces to recognize emotions, not body language or spoken language.
* The robot can only recognize three emotions: sadness, happiness and anger.

==How do facial expressions convey emotions?==

===Introduction===

Emotions are one of the main aspects of non-verbal communication in human life. They reflect what a person thinks and/or feels about a specific experience, past or present. They therefore give clues about how to act towards a person, which eases and strengthens communication between two people. One of the main ways to understand someone’s emotions is through their facial expressions, because humans consciously or unconsciously express emotions through the movements of the muscles in their faces. In our project, we want to use emotions as a tool to strengthen human-robot interaction and make our user feel safe. Although interacting with a robot can never replace interacting with another person, we believe that the effect of this drawback can be minimized by the correct use of emotions in human-robot interaction. Although there are many types of emotions, for the sake of simplicity and to keep the focus on our care robot proposal, we evaluate three main emotional states (happiness, sadness and anger) through the features of the human face.

===Happiness===

[[File:happyy.jpg |right|200px|A happy human face.]]

A smile is the clearest reflection of happiness on a human face. The following changes in the face describe how a person smiles and shows happiness:

*Cheeks are raised
*Teeth are mostly exposed
*Corners of the lips are drawn back and up
*The muscles around the eyes are tightened
* “Crow’s feet” wrinkles occur near the outside of the eyes.

===Sadness===

[[File:sad.jpg | right| 200px | A sad human face.]]

Sadness is the hardest facial expression to identify. The face looks like a frown, and the following are clues for a sad face:

*Lower lip pops out
*Corners of the mouth are pulled down
*Jaw comes up
*Inner corners of the eyebrows are drawn in and up
*Mouth is most likely to be closed

===Anger===

[[File:anger.jpg|right|200px |An angry human face.]]

The facial expression of anger is not hard to identify. The following describe the facial changes when a person is angry:

*Eyebrows are lowered and move closer to each other
*Vertical lines appear between the eyebrows
*Eyelids are tightened
*Cheeks are raised
*The lower jaw juts out
*Mouth may be wide open in an "o" shape (if shouting)
*Lower lip is tensed (if mouth is closed)
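The cue lists above can be turned into a simple rule-based scorer. The following is only a minimal sketch to illustrate the idea; it is not the CNN approach planned for this project. The cue labels (such as <code>cheeks_raised</code>), the function names and the 0.5 threshold are all hypothetical choices made for this example, and it assumes some upstream detector already reports which cues are visible.

```python
from typing import Dict, Set

# Facial cues per emotion, transcribed from the lists above.
# The label names are hypothetical; no real detector is assumed to emit them.
EMOTION_CUES: Dict[str, Set[str]] = {
    "happiness": {
        "cheeks_raised", "teeth_exposed", "lip_corners_up",
        "eye_muscles_tightened", "crows_feet",
    },
    "sadness": {
        "lower_lip_out", "mouth_corners_down", "jaw_up",
        "inner_brows_in_and_up", "mouth_closed",
    },
    "anger": {
        "brows_lowered", "vertical_lines_between_brows", "eyelids_tightened",
        "cheeks_raised", "lower_jaw_out", "mouth_open_o_shape", "lower_lip_tensed",
    },
}


def score_emotions(observed: Set[str]) -> Dict[str, float]:
    """Return, per emotion, the fraction of its listed cues that were observed."""
    return {
        emotion: len(cues & observed) / len(cues)
        for emotion, cues in EMOTION_CUES.items()
    }


def guess_emotion(observed: Set[str], threshold: float = 0.5) -> str:
    """Pick the best-scoring emotion, or "neutral" if no score reaches the threshold."""
    scores = score_emotions(observed)
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else "neutral"
```

Note that "cheeks are raised" appears in both the happiness and the anger list, which is why a single cue is not enough and the sketch compares the share of matching cues instead.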

==Our robot proposal==

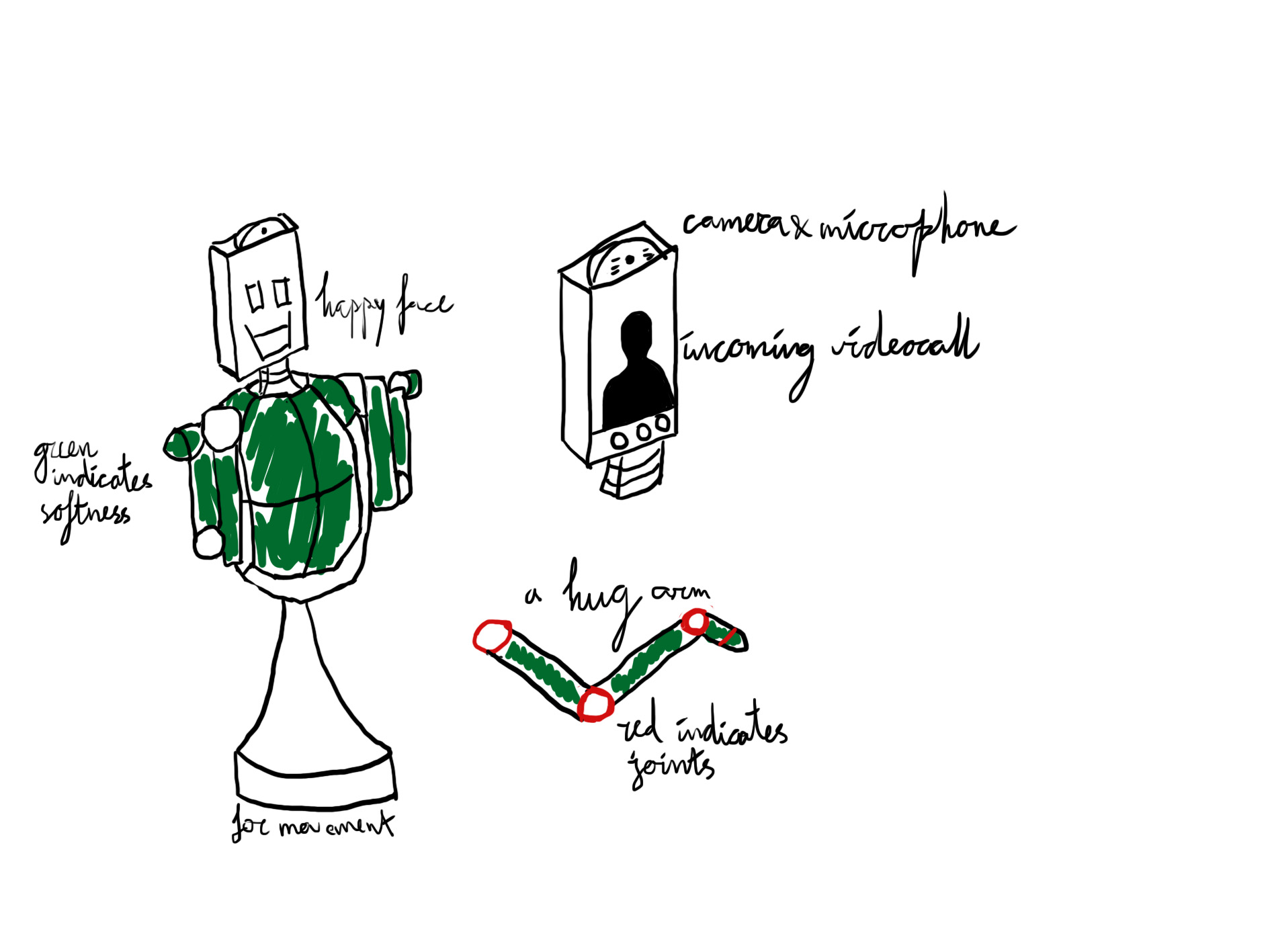

Our robot is a companion robot that will help elderly people live independently. It will have a humanoid shape and a screen attached to it that allows the robot to display pictures and videos to the elderly person. It will also be able to move from one place to another. <br/>

===Our robot's design===

[[File:Sketch2020group4.jpg|thumb|center|1000px|Sketch of the robot design and two prominent features: the face display and the hug arms. Red indicates joints, while green indicates soft padding.]]

<br/>

'''General shape'''<br/>

Our robot will be mostly humanoid except for the bottom half, where we do not need the added complexity of walking on two legs. The awkward movement of bipedal robots would not make the robot more relatable, and the added mobility is not necessary in most elderly homes. Separate legs give a robot advanced mobility, but since elderly homes often do not include stairs due to the reduced mobility of the residents, we do not consider this a relevant gain. Therefore the bottom half will be Roomba-like in shape and mobility. <br/>

<br/>

The head is a screen that displays video calls and robot reactions. The camera and the audio input and output are located on top of this screen. They are placed at the top of the head so that looking and talking towards the face of the robot also gives the highest efficiency in picking up facial expressions and speech. The fact that the robot's speech also comes from the head reinforces this behavior. <br/>

<br/>

Because of the hugging ability, the shape of the arms and torso depends heavily on the research on Huggiebot. There are not many hugging robots, so shapes that are proven to be effective are rare. The torso is human-shaped and made of soft materials. In Huggiebot these soft parts are also heated to body temperature, which could be a possibility for our robot. The arms, also padded with soft material, can move freely like human arms. They are sensitive to haptic feedback to prevent hurting the user. <br/>

<br/>

The robot will be a bit shorter than the average height of the target users, around 1.60 meters, so that it is non-threatening for most users. <br/>

<br/>

[[File:Faces2020group4.jpg|thumb|center|1000px|Sketch of the robot's facial expressions: neutral, happy and sad, respectively.]]

'''Expressions''' <br/>

Our robot will have three expressions: neutral, happy and sad. Its neutral expression will be cheerful and accessible. It has to communicate friendliness and accessibility so that the elderly are not alarmed by it and know it is a social robot. The happy expression speaks for itself and will be used in the happy scenarios. The sad expression will of course be used in the sadness scenarios, but also in the anger scenario. The robot itself will not express anger. Instead, it will express empathy and a willingness to hear the grievances of the client, so a sad face is sufficient. It is also not the intent that the robot gets angry about the things the client is angry about; the robot should de-escalate the situation.
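The mapping from a recognized emotion to a displayed expression described above is small enough to write down as a lookup table. This is a minimal sketch; the enum and function names are hypothetical and chosen only for this illustration.

```python
from enum import Enum


class Emotion(Enum):
    """Emotional states the recognition software can report (plus neutral)."""
    NEUTRAL = "neutral"
    HAPPY = "happy"
    SAD = "sad"
    ANGRY = "angry"


class Expression(Enum):
    """The three faces the robot can show on its display."""
    NEUTRAL = "neutral"
    HAPPY = "happy"
    SAD = "sad"


# The robot never shows anger: an angry client is met with the sad,
# empathetic face, as described in the text above.
DISPLAY_FOR = {
    Emotion.NEUTRAL: Expression.NEUTRAL,
    Emotion.HAPPY: Expression.HAPPY,
    Emotion.SAD: Expression.SAD,
    Emotion.ANGRY: Expression.SAD,
}


def expression_for(emotion: Emotion) -> Expression:
    """Return the expression the robot should display for a recognized emotion."""
    return DISPLAY_FOR[emotion]
```

Keeping the mapping in one table makes the design decision explicit: adding an angry face later would be a one-line change, but the current proposal deliberately leaves it out.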

=== The assumptions on robot's abilities ===

*Facial recognition: The robot has to recognize the face of the elderly person. <br/>
*Speaking: The robot has to communicate verbally with the elderly person. <br/>
*Movement: The robot has to be able to move. <br/>
*Calling: The robot has to be able to connect the elderly person with other people, both by raising an alarm and by creating a direct connection between the client and a relevant person. <br/>

For calling, we assume that communication with caregivers or emergency contacts will be handled with “pre-prepared” texts, similar to speaking with a human. The communication functionality will be provided by systems embedded in the robot, and the robot will be trained to call a contact properly when needed.

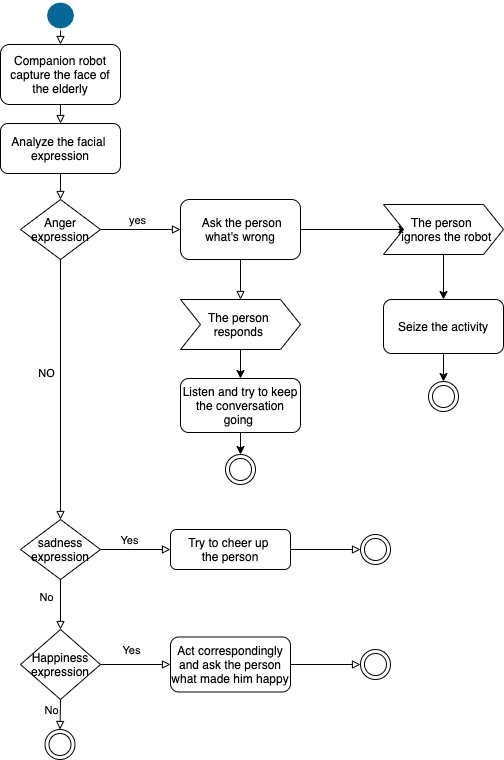

=== Different scenarios for different emotions ===

====Scenarios for anger====

Just as with frustration, people can be angry about their own state, but anger extends to other people and things as well. It might seem that a robot has no business resolving anger, and it may indeed not have any. But airing grievances is healthy as well. Having something to talk to, even if it is not capable of solving the situation, might relieve some of the pressure. Therefore, our robot will try to let the person talk about what has them so fired up. This may not solve the practical problem, but it also helps with building a relationship with the elder. <br/>

<br/>

If the robot recognizes anger through emotion recognition, it will try to open a conversation. The goal is to let the client vent the reason for their anger. Anger is not an easy emotion for a robot to deal with. It is important that the anger does not get directed at the robot. Therefore the robot will not engage further if its conversation starter is ignored or if the client reacts with anger. The natural language processing of the robot should make this decision. <br/>

<br/>

Once a conversation is started, the robot will follow the same basic principles as with sadness. The goal is not to talk but to make the elder feel heard. Therefore the robot will engage on anger only once a day, and more often only if the client engages the robot with a need to vent.

====Scenarios for sadness====

'''Scenario sadness 1'''<br/>

There are many things the robot can do. One thing it can do is start a conversation and listen. When life gets overwhelming, it helps to have someone willing to listen to your problems, so the robot needs to allow the elderly person to air their problems. At this point, the robot needs to take the information given by the person and try to categorize the reasons behind the sadness (losing someone, missing someone, feeling lonely, and so on). This helps the robot decide how to react and makes sure it does not make the situation worse. Another thing it can do is give a hug, as hugging relieves stress and can make a person feel a lot better; hugging is associated with the release of oxytocin, a hormone that acts as a natural stress reliever. Another option, if the robot does not succeed in cheering up the person, is to call someone who can make the elderly person feel better. <br/>

<br/>

If the robot recognizes sadness, it will try to open up a conversation. A "what is wrong" or "how are you" is meant to prompt a reaction from the client. If there is no reaction, the robot will proceed to scenario 2, described below. If the elder does respond, the goal of the robot is not to talk but to make the elder feel heard and not alone. The robot may even ask if the client wants a hug. If the client stops responding but the robot still recognizes sadness, it will go on to scenario 2. If the robot recognizes neutrality or happiness, it will stop. <br/>

<br/>

To avoid becoming intrusive, the robot will then not react to the elder for 4 hours, unless the elder engages the robot.

'''Scenario sadness 2'''<br/>

The world of the elderly can be very small, especially if dementia plays a role. People start to get lost in the world with nothing to hold on to. This results in an apathetic sadness. The robot should recognize this and try to bring the person back. With dementia this is often achieved by evoking memories of the past. Showing pictures of their youth, former homes and family members helps the client come back to the world and relive happy memories. <br/>

<br/>

The robot starts with scenario 1, but if it gets no reaction it will try to activate the person. Playing music that the client likes and showing pictures of days gone by often has a positive effect on elders, especially in cases of dementia. Because our robot is not equipped to recognize activation of the client, it will simply stop when the elder says so or after 30 minutes. The robot will again wait 4 hours before it repeats this program, but will act otherwise if engaged by the client.

====Scenarios for happiness====

When the elderly person is happy, it is not necessary for the robot to take immediate action. Nonetheless, it should try to keep the elderly person happy for as long as possible; in other words, it can try to maximize their happiness. We expect that a person is more likely to stay happy when they find someone to talk to about what made them happy (this is our own assumption and still needs to be backed by studies). So the robot has to start a conversation and try to talk to the elderly person about it. Another thing it can do is to act happy as well, by using a facial expression (e.g., a smiling face) or by using its capability of movement and asking the elderly person to dance with it. <br/>

<br/>

When the robot detects happiness, this can be a chance to deepen the relationship. Friends are there for the good and the bad times, and so will this companion robot be. The robot will start a conversation that points towards the happy expression, for example "What a beautiful day, how are you doing?". As usual, the goal of the robot is to make the elder feel heard and less alone. The goal is not to make a talkative robot. <br/>

<br/>

Movement is important for everyone, but especially for the elderly, who tend to move less and less. The happy state could be capitalized on by asking the client if they want to dance. If so, the robot will play nice music that the elder likes and use its movement capabilities for dancing. It will only do this for a couple of minutes, but this may depend on the physical state of the client. It will only dance once a day but may open a conversation about the happy state every four hours if happiness persists. Of course, when the robot is engaged it will react to the emotion of the elder. <br/>

<br/>

The activity diagram below presents how the robot should react to the different emotions expressed by the elderly person.<br/>

[[File:R_Diagram.png|center]]

<br/>
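The timing rules from the scenarios above (wait 4 hours after reacting to sadness, engage on anger once a day, talk about happiness every four hours, dance once a day, and always react when the client engages the robot) can be sketched as a small policy object. This is a minimal illustration under stated assumptions, not the project's implementation: the class, function and action names are hypothetical, and "once a day" is interpreted here as a 24-hour waiting time.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, Optional

# Waiting times taken from the scenarios; "once a day" is read as 24 hours (assumption).
COOLDOWNS: Dict[str, timedelta] = {
    "sadness": timedelta(hours=4),
    "happiness": timedelta(hours=4),
    "anger": timedelta(days=1),
}
DANCE_COOLDOWN = timedelta(days=1)


@dataclass
class ReactionPolicy:
    """Decides whether the robot should react to a recognized emotion right now."""
    last_reaction: Dict[str, datetime] = field(default_factory=dict)
    last_dance: Optional[datetime] = None

    def decide(self, emotion: str, now: datetime, client_engaged: bool = False) -> str:
        cooldown = COOLDOWNS.get(emotion)
        if cooldown is None:
            # Neutral (or unknown) state: the robot does not intervene.
            return "do_nothing"
        last = self.last_reaction.get(emotion)
        # The waiting time is skipped when the client engages the robot.
        if not client_engaged and last is not None and now - last < cooldown:
            return "do_nothing"
        self.last_reaction[emotion] = now
        if emotion == "happiness" and self._may_dance(now):
            self.last_dance = now
            return "open_conversation_and_offer_dance"
        return "open_conversation"

    def _may_dance(self, now: datetime) -> bool:
        return self.last_dance is None or now - self.last_dance >= DANCE_COOLDOWN


def sadness_follow_up(client_responded: bool) -> str:
    """Scenario 1 (listen) if the client responds, otherwise scenario 2."""
    return "listen" if client_responded else "play_music_and_show_pictures"
```

For example, a policy that reacted to sadness at 9:00 returns <code>do_nothing</code> for sadness at 10:00, unless <code>client_engaged=True</code> is passed.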

===Natural Language Processing & Handling data ===

Artificial Intelligence is developing day by day, and Natural Language Processing (NLP) is one of its major subfields. NLP lets devices understand human language and thus enables communication between humans and devices that use artificial intelligence. Two of the best-known examples are Apple’s Siri and Amazon’s Alexa. Both are voice-activated assistants that can process input given by a human in a human language and produce a corresponding output. In addition to these technologies, there are a few humanoid robots that are able to perform NLP and speak. Sophia is one example. Sophia is able to speak, but her speech is not like that of humans. Sophia recognizes speech, classifies it according to her algorithms, and answers mostly with scripted answers or information from the internet rather than by reasoning. We want our robot to behave similarly to Sophia in that we assume it can recognize human speech. However, rather than selecting its answer from a pool of our “pre-prepared” answers, its algorithms will be designed so that it can apply reasoning according to the different scenarios for emotion recognition.

====Technical details for NLP====

The robot Sophia performs natural language processing in a way that is conceptually similar to the computer program ELIZA. ELIZA is one of the earliest natural language programs, created in the 1960s to establish communication between humans and machines. It simulated a conversation using string or pattern matching and a substitution methodology. The NLP used for Sophia follows a method similar to pattern matching: the software is programmed to give prepared responses to a set of specific questions or phrases. Additionally, the inputs and the responses are processed with blockchain technology, and the information is shared in a cloud network.

Moreover, Ben Goertzel, who built Sophia’s brain, mentions that Sophia does not have the human-like intelligence to construct those witty responses. He adds that Sophia’s software can be viewed as three main parts:

1. Speech-reciting robot: Goertzel said that Sophia is pre-loaded with some of the text that she should speak, and then uses machine learning algorithms to match the pauses and the facial expressions to the text.

2. Robotic chatbot: Sophia sometimes runs a dialogue system in which she looks at and listens to people, picks a pre-loaded response based on what the other person says, and gathers some additional information from the internet.

3. Research platform for AI research: as a research platform, Sophia does not give quick responses but can answer simple questions.
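The pattern matching and substitution approach attributed to ELIZA above can be illustrated with a few regular-expression rules. This is a minimal sketch in the spirit of ELIZA, not ELIZA's original script and not Sophia's software; the rules, the reply templates and the function names are hypothetical examples written for this page.

```python
import re
from typing import List, Tuple

# Each rule pairs a pattern with a reply template; {0} is filled with the
# captured fragment. These rules are illustrative examples only.
RULES: List[Tuple[re.Pattern, str]] = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi miss (.+)", re.IGNORECASE), "Tell me more about {0}."),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]
DEFAULT_REPLY = "Please go on."

# Substitution step: swap first-person words so the fragment reads
# naturally when echoed back to the speaker.
REFLECTIONS = {"my": "your", "i": "you", "me": "you", "am": "are"}


def reflect(fragment: str) -> str:
    """Replace first-person words in the captured fragment with second-person ones."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, or a default prompt."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY
```

With these rules, <code>respond("I miss my daughter.")</code> yields "Tell me more about your daughter." The sketch shows why such a system can appear attentive without any understanding: it only rearranges the user's own words.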

==== Data Storage & Processing ====

Sophia’s brain is powered by OpenCog, a cloud-based AI program that includes a dialog system facilitating Sophia’s conversations with others. The program enables large-scale cloud control of robots. Moreover, Sophia’s brain uses deep-learning data analytics to process the massive amount of social data gathered from the millions of interactions Sophia has. We therefore assume that our robot’s brain is powered by a program similar to OpenCog, so that it can process data, understand human speech and recognize faces.

===== Use of Blockchain Technology =====

SingularityNET is a decentralized marketplace for Artificial Intelligence, built on blockchain, that enables AI systems to barter with each other for many different services. All information exchanged between AI systems is recorded on the blockchain, so that any AI system that is unable to do some task because it lacks the corresponding algorithm may request an algorithm from any other AI system on the blockchain. In this way, the robot's information processing becomes more efficient, and the robot is likely to be able to perform more tasks without running into problems. We therefore assume that our robot is part of such a blockchain, which will make it easier for it to achieve various tasks.

==== Some possible concerns & details about our robot ==== | |||

1) Will the robot need to be able to recognize the elderly person it is attending to (if the robot works with multiple clients either in parallel or over time), or is sufficient to respond to the client regardless of who it is? | |||

*The robot will be trained to recognize its user as well as the other people that will be authorized on the robot’s system such as carer, relatives etc. of the user. With the usage of camera and face recognition technology, our robot will be able to distinguish people and know the primary user of it. In order to increase the accuracy of recognition, initially, authorized people’s biometric profiles will be stored by the robot. This procedure is for once. Our robot’s main focus will be its user and the other authorized people in the system. Therefore, the robot will not respond to any random people due to security reasons. If any unauthorized person needs to interact with the robot, this have to be done via an authorized person. | |||

2) Will the robot keep on learning after deployment? | |||

*One of the main goals of our robot is to give maximum performance with minimum data, which corresponds to data minimization principle. However, since every user’s preferences, daily lives, needs vary; our robot’s adaptation to all these aspects regarding its user will take time. In this time interval, our robot will perform its best and, in the meantime, it will continue to learn for better adaptation and assistance. Especially, for emotion recognition feature, the user’s facial expressions are important for further training in order to increase accuracy. However, visual data is an important aspect for security and privacy; so that we will need the user’s consent in order to use data for further training. If users let us to use their visual data for further training of the robot, we ensure them that their data will be stored on encrypted channels and won’t be shared with third parties. There will be an option that users may let us to store their data either locally or on cloud network. If they let us to store cloud network their data will be used to train other robots on that cloud network, but still won’t be shared by any other people, companies etc. Moreover, we will review the data we have weekly and delete the unused data. | |||

3) Will the robot build up knowledge about the client’s life? | |||

*Our robot will build up knowledge from what it learns through its Natural Language Processing (NLP) feature in order to give better assistance. The user can tell the robot their preferences about a certain thing or event, and the robot will store the corresponding data and behave according to those preferences. For example, if the user doesn’t want the robot to enter the bedroom during a certain time interval, the robot will store that information and won’t enter the bedroom unless it recognizes an emergency situation. The data gained through NLP will be stored locally for privacy reasons. Moreover, if the user wants certain data to be removed from local storage, it will be deleted as soon as the user tells the robot.

4) Suppose the client (or a carer for the client) would like to know why the robot responded in a certain way, would the robot be able to provide that information? | |||

*The robot's algorithms are designed so that the reasons for choosing a certain action can be reconstructed, which also helps to get the most out of the robot in daily tasks. The robot will apply reasoning to its behaviors, interactions and responses, and when an explanation is requested it will do its best to give one in human language.

5) What will happen to personal data in the event of the user's death, or if the user decides to stop using the robot?

*The personal data is stored locally and its only purpose is to assist the user. If assisting the user is no longer possible, the personal data will be permanently deleted from storage.

6) For what purpose will the user's personal information be processed? How will the user be ensured that the personal data won't be used for other purposes? | |||

*Since our robot’s main focus is the health and needs of its user, personal data will be used and processed to provide better assistance. How much the robot knows is up to the client, who may share as much data as he or she wants. The data will be stored in encrypted form so that no one except our company can access it. An extensive agreement will be signed between the client and the company so that the user understands what the data is used for.

7) Can any third party access the robot's camera and monitor the user's home?

*An end-to-end security system will be used for the robot’s camera to ensure the user's privacy and security. It is therefore not possible for a third party to access the robot’s camera and use it for other purposes.

===Ensure safety of the elderly=== | |||

Hugging can provide great comfort to a person and builds a trusting relationship between two people. Therefore we want our robot to be able to give this physical affection. The elderly are often fragile: they bruise easily, their bones are brittle and their joints hurt. Some will not be able to give hugs, but many will be able to give and receive some form of physical affection. However, entrusting these fragile bodies to a robot raises immediate concerns about safety. Therefore we have looked at state-of-the-art systems that are built to give hugs. We look at two systems that provide a non-human hug. The first one is the HaptiHug, a kind of belt that simulates a hug and is used in online spaces like Second Life. It is not a robot per se, but the reported psychological effects are promising and suggest that an artificial hug could go a long way. <br/>

<br/>

Next is HuggieBot, an actual robot that gives hugs and is currently in development. Just as with the HaptiHug, HuggieBot's main goal is to mimic the psychological effect of a hug. It uses a soft chest and arms that are also heated to mimic human warmth. Since this robot uses actual robotic arms, it suggests that a robot hug can be safe for an average human. As mentioned before, our target group is especially vulnerable to physical harm, but the existence of robots with a sufficiently sophisticated haptic feedback system gives us confidence that this can be applied in our robot.

== Companionship and Human-Robot relations == | |||

Relationships are complicated social constructs. Present in some form in almost all forms of life, relationships take many forms and levels of complexity. We are aiming for a human-robot relation whose complexity lies between that of an animal companion and that of a human friend. Even the most superficial form of companionship can combat loneliness, a common and serious problem among the elderly. <br/>

<br/>

Relationships are complex, but they can be generalized into two pillars on which they are built. The first is trust and the second is depth. While creating the behaviour of the robot we can test our solutions by asking two questions: "Is this building or compromising trust?" and "Does this action deepen the relationship?". The answers to these questions will be a guideline towards a companion robot. First, let's talk about trust.<br/>

<br/>

'''Trust'''<br/>

<br/>

Trust can be generalized for human-robot relations and defined as follows: "If the robot reacts and behaves as a human expects it to react and behave, the robot is considered trustworthy". To abide by this, we as designers need to know two things. What is expected of the robot based on first impressions? And which behaviour is considered normal according to these expectations? To answer the first question we would need an extensive design cycle and user tests, something that we are not able to do considering our main users and the times we live in now. Therefore we might need to assume that our robot has a chosen aesthetic that evokes a predetermined set of first impressions and expectations in the user. On that basis we can create a set of expected behaviours. <br/>

<br/>

First impressions are important. The elderly are often uncomfortable with new technology. They should be eased into it with an easy-to-read, trustworthy robot. This discomfort brings another challenge: the elderly might not know what they can expect from a robot. Logical behaviour that we expect to be normal and therefore trustworthy might be unexpected and untrustworthy for an elderly person who does not know the abilities a modern robot has. To gain this knowledge we would again need a user test, which might, again, be impossible with the current pandemic. <br/>

<br/>

However, many things are already known about human-robot relationships. Many tests have been done, especially with children. From these we can already extract some abilities that our robot should have. Emotional responsiveness is a huge building block for trust. This means that a sad story should be met with empathy and a funny story with a joyful response. Facial expression and tone of voice are both important here. Interacting based on memory is also significant, but very complex. Later in this chapter we will talk a bit about memory and how, in its most basic form, it will be implemented. <br/>

<br/>

Facial expressions are incredibly difficult to recreate. Trying to recreate all the subtleties of human facial expression often lands a robot in the notorious Uncanny Valley and often goes hand in hand with a loss of intelligibility. Therefore simplified facial expressions are often preferred. This shows that recreating life does not have to be the goal for a companionship robot. Clarity and clear communication are also vital for a human-robot relation. <br/>

<br/>

To conclude, we need to design a robot that reacts and behaves in the way our user group, the lonely elderly, expects it to behave and react, not only physically but emotionally as well. <br/>

<br/>

'''Depth'''<br/>

<br/>

Relations are not binary but rather build slowly over time. In human relationships people get to know one another. While building trust they also start to share interests and experiences. Of course this is very limited in our robot. Our main goal is to combat loneliness, not to make a fully fledged friend. However, a robot can learn about its human. Emotion recognition will play a big part here. The robot can start to learn when and how it should respond: based on the user's emotion before and after the robot's reaction, it could build a more accurate picture of the needs and wants of the human. It would "get to know" the human. This creates opportunity for more complex behaviour and therefore an evolving relation.<br/>

<br/>

We have described several scenarios where a robot reacts to an emotion. The word threshold has been used a couple of times. So what does this mean? It means that a person needs to be sufficiently frustrated, angry, sad etc. before the robot starts interacting. What this will look like in practice would need a lot of user testing. But even with extensive testing we would still settle on an average, while these thresholds will differ per person. Some people would like the extra attention while others may find the robot intrusive. These positive and negative reactions to the robot's actions can be used to tweak the threshold per emotion to the user's preference, simply through interaction with the robot. <br/>

<br/>

It is important to note that this will not change whether the robot recognizes an emotion. It only changes when and how the robot acts on that recognition.
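
The per-user threshold adjustment described above could be sketched roughly as follows. This is only an illustration: the class name, the starting threshold, the step size and the idea of a numeric emotion intensity between 0 and 1 are all assumptions and are not part of our implementation.

```python
# Hypothetical sketch of per-emotion thresholds tuned by user feedback.
# All names and numeric values below are illustrative assumptions.
DEFAULT_THRESHOLD = 0.6      # assumed starting point (would come from user testing)
STEP = 0.05                  # assumed adjustment per feedback event
MIN_T, MAX_T = 0.2, 0.95     # keep thresholds inside a sensible range


class EmotionThresholds:
    def __init__(self, emotions):
        # every emotion starts from the same average threshold
        self.thresholds = {e: DEFAULT_THRESHOLD for e in emotions}

    def should_act(self, emotion, intensity):
        """Recognition is unchanged; only the decision to act uses the threshold."""
        return intensity >= self.thresholds[emotion]

    def feedback(self, emotion, positive):
        """Lower the threshold after a positive reaction, raise it after a negative one."""
        delta = -STEP if positive else STEP
        new_value = self.thresholds[emotion] + delta
        self.thresholds[emotion] = round(min(MAX_T, max(MIN_T, new_value)), 2)


thresholds = EmotionThresholds(["sad", "angry"])
thresholds.feedback("sad", positive=False)   # the user found the robot intrusive
print(thresholds.thresholds)
```

A user who finds the robot intrusive pushes the threshold for that emotion up, so the robot waits for a stronger signal before acting, while the other emotions keep their own thresholds.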

==Facial emotion recognition software == | |||

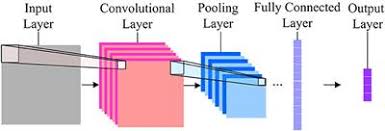

=== The use of convolutional Neural Network: === | |||

In our project, we will create a convolutional neural network (CNN) that allows our software to distinguish between three main emotions (pain, happiness and sadness, and possibly more) by analyzing facial expressions. <br/>

'''What is a convolutional neural network?''' <br/>

Convolutional neural networks are neural networks that are sensitive to spatial information. They are capable of recognizing complex shapes and patterns in an image, and they are one of the main categories of neural networks used for image recognition and image classification. <br/>

'''How does it work?''' <br/>

CNN image classification takes an input image, processes it and classifies it under certain categories (e.g. sad, happy, pain). The computer sees the input image as an array of pixels whose size depends on the image resolution: h x w x d (height, width, depth, where the depth is the number of colour channels).

Technically, a deep learning CNN model is first trained and then tested. Training is done by providing the CNN with the data we want to analyze. Each input image passes through a series of convolution layers with filters, pooling layers and fully connected layers, after which a softmax function is applied to classify the image, giving a probability value for each class. <br/> <br/>

[[File:CNN-Layers.jpeg| center]] | |||

<br\> <br\> | |||

'''Different layers and their purposes:''' <br/>

*'''Convolution layer:''' <br/>

This is the first layer, which extracts features from the input image. It preserves the relationship between pixels by learning image features using small squares of input data. Mathematically, it is an operation that slides a filter (fh x fw x d) over the image matrix (h x w x d), multiplying the overlapping values and summing them, and it outputs a volume of dimension (h – fh + 1) x (w – fw + 1) x 1. <br/>

[[File:Convolution-layer.png | center]] <br/>

Convolving an image with different filters can blur or sharpen it, and perform other operations such as edge enhancement and embossing.
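
As a small worked example of the output size formula above, the following sketch applies one filter to a single-channel image (d = 1) in plain Python. The function name and the toy values are assumptions made for illustration; in practice a library such as Keras performs this step. Like most CNN libraries, the sketch does not flip the filter.

```python
def convolve2d_valid(image, kernel):
    """Slide the kernel over the image without padding ("valid" convolution)."""
    h, w = len(image), len(image[0])
    fh, fw = len(kernel), len(kernel[0])
    output = []
    for i in range(h - fh + 1):          # output height: h - fh + 1
        row = []
        for j in range(w - fw + 1):      # output width: w - fw + 1
            total = 0
            for a in range(fh):
                for b in range(fw):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 1, 1],
          [1, 1, 1],
          [1, 1, 1]]                     # toy 3x3 filter that sums its window

result = convolve2d_valid(image, kernel)
print(len(result), "x", len(result[0]))  # a 4x4 image and a 3x3 filter give 2x2
```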

*'''Pooling layer:''' <br/>

Reduces the number of parameters when the images are too large. Max pooling takes the largest element from each region of the rectified feature map.

*'''Fully connected layer:''' <br/>

We flatten our matrix into a vector and feed it into a fully connected layer, like in a regular neural network. Finally, an activation function is used to classify the outputs.
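
The pooling, flattening and final activation steps can be illustrated with a minimal plain-Python sketch. The 2x2 pooling window with stride 2 is an assumption (the window size is not stated above), and softmax is used as the final activation because that is the function named in the previous section.

```python
import math


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 (assumed window size); odd trailing rows/columns are dropped."""
    return [
        [max(feature_map[i][j], feature_map[i][j + 1],
             feature_map[i + 1][j], feature_map[i + 1][j + 1])
         for j in range(0, len(feature_map[0]) - 1, 2)]
        for i in range(0, len(feature_map) - 1, 2)
    ]


def flatten(feature_map):
    """Turn a 2D feature map into a flat vector for the fully connected layer."""
    return [value for row in feature_map for value in row]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)                      # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


pooled = max_pool_2x2([[1, 3, 2, 0],
                       [4, 2, 1, 5],
                       [0, 1, 9, 8],
                       [2, 2, 7, 6]])
print(pooled)            # each 2x2 block is reduced to its largest value
print(flatten(pooled))
print(softmax([2.0, 1.0, 0.1]))
```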

==About the software== | |||

We will build a CNN which is capable of classifying three different facial emotions (happiness, sadness, anger), and try to maximize its accuracy. <br/> | |||

We’ll use Python 3.6 as the programming language. <br/>

The CNN for detecting facial emotion will be built using Keras.<br/>

To detect the faces on the images, we will use LBP Cascade Classifier for frontal face detection; it’s available in OpenCV.<br/> | |||

===Dataset:===

We used the fer2013 dataset from Kaggle, extended with the CK+ dataset. The data from fer2013 consists of 48x48 pixel grayscale images of faces, while the data from CK+ consists of 100x100 pixel grayscale images of faces, so we merged the two, making sure all the pictures are 100x100 pixels. The faces have been automatically registered so that the face is more or less centered and occupies about the same amount of space in each image. The task is to categorize each face, based on the emotion shown in the facial expression, into one of seven categories (0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral). However, we only use four of them (Angry, Happy, Sad, Neutral). <br/>

In total, we obtained a dataset of 21485 images. <br/> | |||
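
The label selection and the resizing to 100x100 described above could look roughly like the sketch below. It is not the code from dataInterface.py: the function names, the new label order and the use of nearest-neighbour interpolation are assumptions made for illustration (the interpolation method actually used is not stated here).

```python
# fer2013 label numbering as listed above
FER_LABELS = {0: "Angry", 1: "Disgust", 2: "Fear", 3: "Happy",
              4: "Sad", 5: "Surprise", 6: "Neutral"}
KEPT = ["Angry", "Happy", "Sad", "Neutral"]            # emotions we keep
NEW_INDEX = {name: i for i, name in enumerate(KEPT)}   # assumed new numbering 0..3


def filter_and_remap(samples):
    """Keep only the four emotions and renumber their labels.

    samples: iterable of (image, fer_label) pairs.
    """
    kept = []
    for image, label in samples:
        name = FER_LABELS[label]
        if name in NEW_INDEX:
            kept.append((image, NEW_INDEX[name]))
    return kept


def resize_nearest(image, size):
    """Resize a 2D grayscale image to size x size with nearest-neighbour sampling (assumed method)."""
    h, w = len(image), len(image[0])
    return [[image[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


small = [[0] * 48 for _ in range(48)]      # stand-in for a 48x48 fer2013 image
big = resize_nearest(small, 100)
print(len(big), len(big[0]))
```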

===Program Modules:=== | |||

{| border=1 style="border-collapse: collapse;" | |||

|- | |||

| dataInterface.py || process the images from dataset | |||

|- | |||

| faceDetectorInterface.py || detect faces on the images using LBP Cascade Classifier | |||

|- | |||

| emotionRecNetwork.py || create and train the CNN | |||

|- | |||

| cnnInterface || allow applications to use the network | |||

|- | |||

| applicationInterface || emotion recognition using webcam or screen images | |||

|- | |||

| masterControl || user interface | |||

|} | |||

<br/> | |||

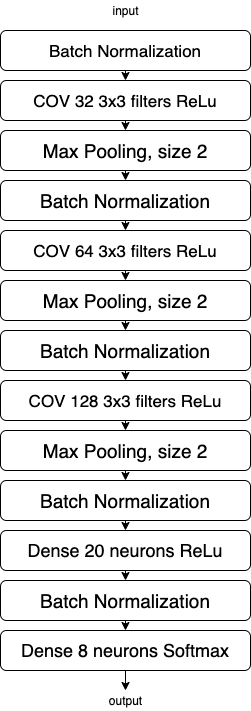

===The Neural Network Architecture:=== | |||

The neural network consists of three convolutional layers: 32 filters in the first layer with ReLU activation, 64 filters in the second layer, and 128 filters in the third layer. To improve the speed of the network, max pooling is applied after every convolutional layer. <br/>

The neural network also contains two dense layers, with 20 neurons in the first layer with ReLU activation and 8 neurons in the second layer with softmax activation. <br/>

Batch normalization is also applied before every layer. <br/>
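
To get a feeling for how the feature maps shrink through the three convolution and pooling stages, the sizes can be traced with a few lines of Python. The 3x3 filters, the absence of padding and the 2x2 pooling windows are assumptions made for this sketch, since the kernel and pooling sizes are not listed above; the 100x100 input follows from the dataset section.

```python
def trace_shapes(size, filters, kernel=3, pool=2):
    """Return the (height, width, channels) after each conv + max-pool stage.

    Assumes square inputs, unpadded ("valid") convolutions and non-overlapping pooling.
    """
    shapes = []
    for n_filters in filters:
        size = size - kernel + 1      # convolution: h - fh + 1
        size = size // pool           # max pooling halves the size (rounded down)
        shapes.append((size, size, n_filters))
    return shapes


shapes = trace_shapes(100, [32, 64, 128])
for stage, shape in enumerate(shapes, start=1):
    print("after stage", stage, shape)

height, width, channels = shapes[-1]
print("flattened vector length:", height * width * channels)
```

Under these assumptions the vector fed into the first dense layer has 10 x 10 x 128 = 12800 entries; other kernel or padding choices would give different numbers.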

[[File:CNN_design.png| center]] | |||

<br/> | |||

<br/> | |||

===Training The Network:=== | |||

Accessing the image files each time during training would be slow and inefficient, because every image access involves system calls. To speed up training, we created two files, 'data.npy' and 'labels.npy': all the pictures from all the directories were merged into data.npy and their corresponding emotion labels were stored in labels.npy. <br/>

The data and labels imported from the two files 'data.npy' and 'labels.npy' were shuffled and split into three sets: a training dataset with 80% of the data, a validation dataset with 10%, and a testing dataset with the remaining 10%. The testing dataset is for testing purposes only and is never used during the training of the network. Therefore, the performance of the network on this data is a good indicator of how well it generalizes. <br/>
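
The shuffle and 80/10/10 split can be sketched as follows. This is a simplified stand-in for the actual code: plain Python lists replace the NumPy arrays loaded from the two files, and the function name and fixed seed are assumptions.

```python
import random


def shuffle_and_split(data, labels, seed=0):
    """Shuffle (data, label) pairs together and split them 80% / 10% / 10%."""
    pairs = list(zip(data, labels))
    random.Random(seed).shuffle(pairs)     # a fixed seed keeps the split reproducible
    n = len(pairs)
    n_train = n * 8 // 10                  # integer arithmetic avoids float rounding
    n_val = n // 10
    train = pairs[:n_train]
    validation = pairs[n_train:n_train + n_val]
    test = pairs[n_train + n_val:]         # the remainder, never used for training
    return train, validation, test


# stand-in data with the same number of samples as our merged dataset
data = list(range(21485))
labels = [i % 4 for i in data]
train, validation, test = shuffle_and_split(data, labels)
print(len(train), len(validation), len(test))
```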

===Results:=== | |||

After training our CNN, we reached a validation accuracy of 89.6% on the 22nd epoch.<br/> <br/>

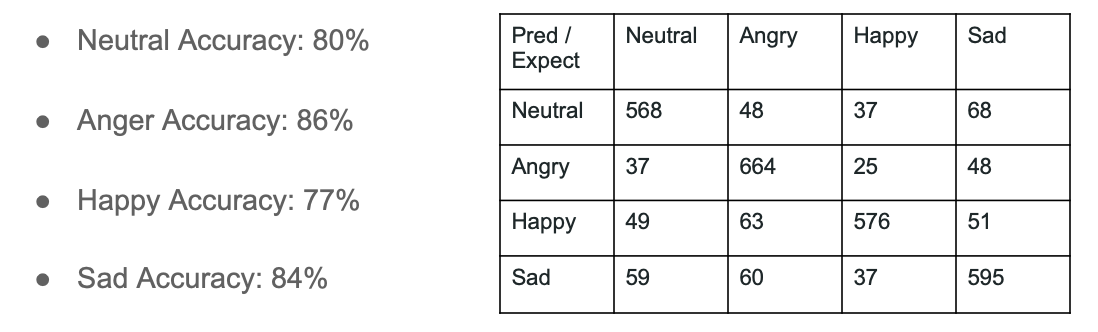

'''testing dataset accuracy:'''<br/> | |||

After that, the trained model was used to predict the emotions of the testing set, with the following results. The confusion matrix shows the expected results in the rows and the predicted results in the columns.<br/>

The resulting accuracy percentage of each emotion for the testing set is shown on the left. <br/>
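
For readers unfamiliar with confusion matrices, the sketch below shows how such a matrix and the per-emotion accuracy can be computed from expected and predicted labels. The labels in the example are made up for illustration and are not our test results; the function names are assumptions.

```python
def confusion_matrix(expected, predicted, labels):
    """Rows are expected labels, columns are predicted labels."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for e, p in zip(expected, predicted):
        matrix[index[e]][index[p]] += 1
    return matrix


def per_class_accuracy(matrix):
    """Fraction of each expected class that was predicted correctly (diagonal / row sum)."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]


labels = ["angry", "happy", "sad"]
expected = ["angry", "angry", "happy", "sad", "sad", "sad"]    # toy data only
predicted = ["angry", "sad", "happy", "sad", "sad", "happy"]

matrix = confusion_matrix(expected, predicted, labels)
for label, row, acc in zip(labels, matrix, per_class_accuracy(matrix)):
    print(label, row, round(acc * 100, 1), "%")
```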

[[File:testing_result.png|center]] <br/> <br/> | |||

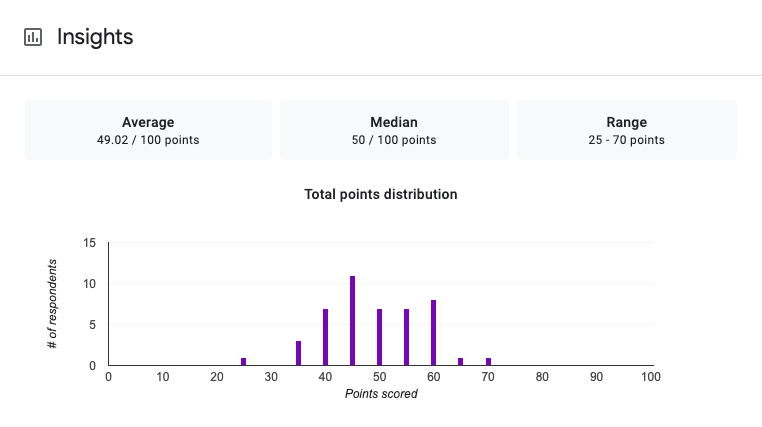

===Human emotion recognition accuracy:===

In order to compare the accuracy of our software with that of humans, we made a quiz containing 5 pictures for each emotion (Neutral, Happiness, Sadness, Anger), selected completely at random from our dataset, in which people had to guess which emotion was expressed. As the pictures were too small, we resized them into bigger, clearer pictures. Here is the link for the quiz:

https://docs.google.com/forms/d/e/1FAIpQLSfbQ8NNHm31mMKqJzeEqYNpYy4jog6lvtupuCI2rFNajArymw/viewform?usp=sf_link . <br> | |||

We gathered responses from 46 people, and got the following results: <br/> | |||

[[File:quiz.png|center]] | |||

The lowest accuracy scored was 25%, while the highest was 70%. The average accuracy over the 46 responses was around 49%. <br/>

===Consequences of making errors:=== | |||

With this accuracy, there is a possibility for the software to make a mistake when predicting an emotion. For every emotion there are two types of mistakes: false positive when the software predicts an emotion that is not expressed, and false negative when the software doesn’t recognize the expressed emotion. Those can have different consequences for each emotion. <br/> | |||
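
The two types of mistakes can be counted per emotion directly from a confusion matrix whose rows are the expected emotions and whose columns are the predicted ones. The matrix below is a made-up toy example, not our test result, and the function name is an assumption.

```python
def false_positives_negatives(matrix, labels):
    """Count false positives and false negatives for every emotion.

    matrix[i][j] = number of samples with expected label i and predicted label j.
    """
    result = {}
    for k, label in enumerate(labels):
        correct = matrix[k][k]
        false_negative = sum(matrix[k]) - correct                 # expressed but not recognized
        false_positive = sum(row[k] for row in matrix) - correct  # predicted but not expressed
        result[label] = {"false_positive": false_positive,
                         "false_negative": false_negative}
    return result


labels = ["angry", "happy", "sad"]
toy_matrix = [[1, 0, 1],     # one angry face was predicted as sad
              [0, 1, 0],
              [0, 1, 2]]     # one sad face was predicted as happy

for emotion, counts in false_positives_negatives(toy_matrix, labels).items():
    print(emotion, counts)
```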

'''Happiness:''' | |||

*False positive: <br/> | |||

The consequence of a false positive for happiness depends on the real emotion expressed by the elderly person. If the elderly person is angry or sad and the robot acts as if he were happy, he might think that the robot doesn’t care about him, and the robot will also fail to take the actions required for anger and sadness.

*False negative: <br/>

The consequence of not recognizing that the elderly person is happy is that the robot will fail to sustain and maximize that happiness as much as possible. <br/> <br/>

'''Sadness:''' | |||

*False positive: <br/> | |||

The consequence of recognizing sadness while the elderly person is happy can be really bad, as the robot acting in response to sadness can reduce the person's happiness, which is the opposite of what the robot is required to do. <br/>

The consequence of recognizing sadness while the elderly person is angry is less severe than when the person is happy, as the robot takes almost the same action for the two.

*False negative:<br/>

The consequence of not recognizing that the elderly person is sad is that he will feel lonelier and feel that the robot doesn’t care about him.<br/> <br/>

'''Anger:''' | |||

*False positive:<br/> | |||

Similarly to sadness, this can lead to reducing the happiness of the elderly and taking some actions/precautions that are not needed. | |||

*False negative: <br/> | |||

The consequence of the robot not recognizing that the elderly person is angry can be very bad, as the robot may allow itself to do things that are not acceptable, which will make the person angrier.<br/> <br/>

Generally, we can say that false negative recognitions of anger can have the worst consequences, as excessive anger can be dangerous. If the robot acts happily, or enters the elderly person's private space while he is angry, this may even result in physical damage to the robot.<br/> <br/>

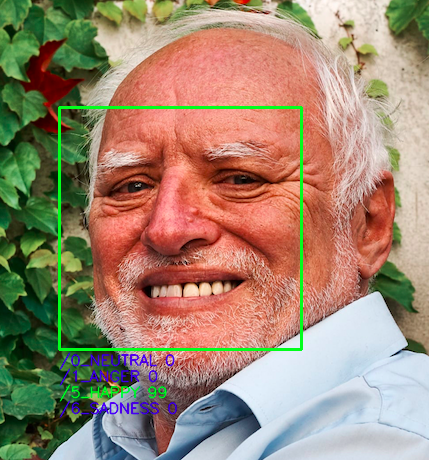

===Application:=== | |||

In order to demonstrate the functionality of the CNN, we built an application that can recognize facial emotion expressions either via a webcam or from images.

First it detects the face in the webcam frame or picture using faceDetectorInterface.py, where the LBP Cascade Classifier (available in OpenCV) is used to quickly detect and extract the face portion of the image. <br/>

After that we use cnnInterface.py to predict the emotion of the resulting face portion. The predicted emotion is then displayed on the screen with a confidence percentage. <br/> <br/>
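
The last step, turning the network output into the text shown on screen, can be illustrated with the small sketch below. It is not the code from cnnInterface.py: the function name, the label order and the example probabilities are assumptions.

```python
EMOTIONS = ["Angry", "Happy", "Sad", "Neutral"]   # assumed order of the output neurons


def describe_prediction(probabilities, labels=EMOTIONS):
    """Pick the most likely emotion and format it with its confidence percentage."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return "{}: {:.1f}%".format(labels[best], probabilities[best] * 100)


# example softmax output for one detected face (made-up values)
print(describe_prediction([0.05, 0.85, 0.07, 0.03]))
```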

===Results of testing the application with random pictures from the internet:===

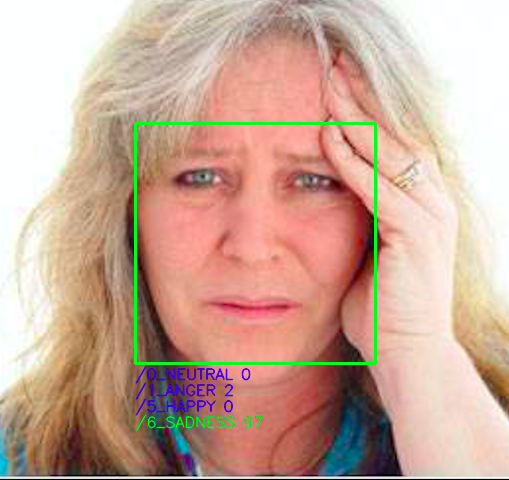

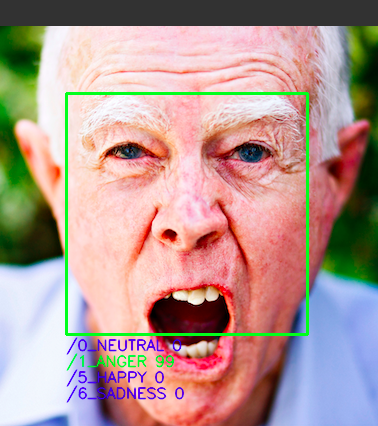

We used the screen picture option of our application on some random pictures from the internet of old people expressing emotions. It worked very well, and we got the following results: <br/> <br/>

[[File:happy_result.png|350px]] | |||

[[File:sad_result.png|350px]] | |||

[[File:angry_result.png|350px]] | |||

<Br/> | |||

===Possible improvements:=== | |||

There are many things that can be done in order to improve our software: | |||

*It can be made to recognize more than the four emotions discussed (neutral, anger, happiness, sadness); recognizing pain or surprise, for example, is also important for the companion robot.

*Technically, there is room for improvement in the accuracy of the software's predictions; one way to achieve this is to enlarge our dataset and retrain our CNN on it.

*To make our emotion recognition more accurate, we can combine other techniques with facial emotion recognition, for example by adding voice emotion recognition.

*Recognizing the degree of each emotion. The robot's reaction will definitely change according to the degree of anger, sadness, happiness, or any other emotion. We were planning to do that in our project, but we couldn’t find datasets with different degrees of each emotion.

===Code on GitHub:===

Link for the code: https://github.com/zakariaameziane/emotion_recognition_project | |||

'''What's done:''' <br/>

'''week 5:''' <br/>

Built an emotion classification network using a VGG architecture. Ready for training. <br/>

Trained the network on the dataset (training took around 8 hours) and reached an accuracy of almost 60%. <br/>

Programmed test.py, which detects a facial expression via the webcam and displays the emotion expressed. It works really well for neutral, happy, angry and sad, and less accurately for pain (because I couldn't find a large training dataset for pain facial expressions). <br/>

'''week 6:''' <br/>

Made the dataset bigger by merging fer2013 and CK+. <br/>

Replaced pain recognition with anger recognition, because the lack of pain datasets led to very low accuracy for pain recognition. <br/>

Made some changes to the model and trained it on the new dataset. Got a great accuracy of 98.2%. <br/>

Changed the application interface so that it can recognize facial expressions either via the webcam or from images that appear on the screen. <br/>

Testing: works perfectly with both the webcam and some random pictures of elderly people from the internet. <br/>

==References== | |||

=== Academic === | |||

*Blechar, Ł., & Zalewska, P. (2019). The role of robots in the improving work of nurses. Pielegniarstwo XXI wieku / Nursing in the 21st Century, 18(3), 174–182. https://doi.org/10.2478/pielxxiw-2019-0026

Nurses cannot be replaced by robots. Their task is more complex than just the routine tasks that they deliver. However due to the enormous shortage of nurses the pressure on nurses also inhibits them to give this human side of care. Therefore robots cannot replace but can relieve the nurses from the routine care and create more time for empathic and more human care.


*Levi, G., & Hassner, T. (2015). Emotion Recognition in the Wild via Convolutional Neural Networks and Mapped Binary Patterns. Proceedings of the 2015 ACM on International Conference on Multimodal Interaction - ICMI 15. doi: 10.1145/2818346.2830587

This paper presents a novel method for classifying emotions from static facial images. The approach leverages the success of Convolutional Neural Networks (CNN) on face recognition problems.

*van Straten, C. L., Peter, J., & Kühne, R. (2019). Child–Robot Relationship Formation: A Narrative Review of Empirical Research. International Journal of Social Robotics, 12(2), 325–344. https://doi.org/10.1007/s12369-019-00569-0 | |||

Abstract: Review about child-robot relations and trust. | |||

*https://hi.is.mpg.de/research_projects/huggiebot-2-0-a-more-huggable-robot (NOT YET APA) | |||

*https://www.researchgate.net/publication/221011940_HaptiHug_A_Novel_Haptic_Display_for_Communication_of_Hug_over_a_Distance (NOT YET APA) | |||

*Fosch-Villaronga, E., & Millard, C. (2019). Cloud robotics law and regulation. Robotics and Autonomous Systems, 119, 77-91. doi:10.1016/j.robot.2019.06.003 | |||

*Villaronga, E. F. (2019). “I Love You,” Said the Robot: Boundaries of the Use of Emotions in Human-Robot Interactions. Human–Computer Interaction Series Emotional Design in Human-Robot Interaction, 93-110. doi:10.1007/978-3-319-96722-6_6 | |||

=== Website === | |||

* Cuncic, A. (2020, June 01). 5 Tips to Better Understand Facial Expressions. Retrieved from https://www.verywellmind.com/understanding-emotions-through-facial-expressions-3024851 | |||

* Van Edwards, V. (2020, June 04). The Definitive Guide to Reading Facial Microexpressions. Retrieved from https://www.scienceofpeople.com/microexpressions/

*What Is SingularityNET (AGI)?: A Guide to the AI Marketplace. (2018, November 30). Retrieved from https://coincentral.com/singularitynet-beginner-guide/ | |||

*Vincent, J. (2017, November 10). Sophia the robot's co-creator says the bot may not be true AI, but it is a work of art. Retrieved from https://www.theverge.com/2017/11/10/16617092/sophia-the-robot-citizen-ai-hanson-robotics-ben-goertzel | |||

*Desmond, J. (2018, July 23). Dr. Ben Goertzel, Creator of Sophia Robot, Hopes for a Benevolent AI Future. Retrieved from https://www.aitrends.com/features/dr-ben-goertzel-creator-of-sophia-robot-hopes-for-a-benevolent-ai-future/ | |||

*All you need to know about world's first robot citizen Sophia. (2018, September 26). Retrieved from https://www.techienest.in/need-know-worlds-first-robot-citizen-sophia/ | |||

*Gershgorn, D. (2017, November 14). Inside the mechanical brain of the world's first robot citizen. Retrieved from https://qz.com/1121547/how-smart-is-the-first-robot-citizen/ | |||

*ELIZA. (2020, May 13). Retrieved from https://en.wikipedia.org/wiki/ELIZA | |||

*Gershgorn, D. (2017, November 14). In the future, algorithms may barter between each other to make better decisions. Retrieved from https://qz.com/1128542/the-makers-of-sophia-the-robot-want-algorithms-to-barter-among-themselves-on-a-blockchain/ | |||

*Cloud robotics. (2020, June 22). Retrieved from https://en.wikipedia.org/wiki/Cloud_robotics | |||

*FAQs. Retrieved from https://www.hansonrobotics.com/faq/ | |||

*The Government of the Hong Kong Special Administrative Region - Innovation and Technology Commission. Retrieved from https://www.itc.gov.hk/enewsletter/180801/en/worlds_first_AI_robot_citizen_sophia.html | |||

*Data protection by design and default. Retrieved from https://ico.org.uk/for-organisations/guide-to-data-protection/guide-to-the-general-data-protection-regulation-gdpr/accountability-and-governance/data-protection-by-design-and-default/ | |||

*Boyd, N. (2019, September 03). Achieving Network Security in Cloud Computing. Retrieved from https://www.sdxcentral.com/cloud/definitions/achieving-network-security-in-cloud-computing/

*Paro (robot). (2019, December 30). Retrieved from https://en.wikipedia.org/wiki/Paro_(robot) | |||

*Sophia (robot). (2020, June 22). Retrieved from https://en.wikipedia.org/wiki/Sophia_(robot) | |||

*Siri. (2020, May 25). Retrieved from https://en.wikipedia.org/wiki/Siri | |||

*Bennett, T. (2014, May 15). Facial Expressions Chart: Learn What People are Thinking. Retrieved from https://blog.udemy.com/facial-expressions-chart/

*Download Close-up Happy Face Boy for free. (2019, June 12). Retrieved from https://www.freepik.com/free-photo/close-up-happy-face-boy_4743622.htm | |||

*Stock Photo. (n.d.). Retrieved from https://www.123rf.com/stock-photo/angry_man_face.html?sti=mxg6jowadig513r84n%7C | |||

== Weekly contribution ==


| Zakaria || Introduction lecture(2h) - brainstorming ideas with the group(2h) - research about the topic(2h)- planning(0.5h) - Users and their needs(0.5h)- study scientific papers (3h) || 10 hours

|-

| Lex || Introduction and brainstorm (4h) - Research (3h) - Approach (1.5h) - planning (0.5h) || 9 hours

|} | |||

=== Week 2 === | |||

{| class="wikitable" | |||

|- | |||

! scope="col" style="width: 50px;" | Name | |||

! scope="col" style="width: 250px;" | Tasks | |||

! scope="col" style="width: 225px;" | Total hours | |||

|- | |||

| Cahitcan || meeting (1.5h) - RPCs (1.5h) - state of the art (2h) - USE analysis (1h) || 6 hours | |||

|- | |||

| Zakaria || meeting(1.5h)- USE analysis(3h)-RPCs(2.5h)- robots that already exist(2h) || 9 hours | |||

|- | |||

| Lex || meeting (1.5h) - writing the interview (3h) - distributing the interview (1h) || 5.5 hours

|} | |||

=== Week 3 === | |||

{| class="wikitable" | |||

|- | |||

! scope="col" style="width: 50px;" | Name | |||

! scope="col" style="width: 250px;" | Tasks | |||

! scope="col" style="width: 225px;" | Total hours | |||

|- | |||

| Cahitcan || meeting (1h) - research on how facial expressions convey emotions (4h) - finding&reading papers(3.5h) || 8.5 hours | |||

|- | |||

| Zakaria || How the robot should react to different emotions (3h)- meeting (1h)- research about CNN(5h)|| 9 hours | |||

|- | |||

| Lex || Meeting (1h) - research on trust and companionship (7h) - processing interview (1h)|| 9 hours | |||

|} | |||

=== Week 4 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || analysis of how facial expressions convey emotions (3.5h), meeting (30 min), reflection on groupmates' work (1.5h), research (1.5h) || 7h
|-
| Zakaria || The use of convolutional neural networks (4h), work on building up the software (download tools, make specifications, start programming, select a dataset) (4.5h), Our Robot part (4h), programming (faceDetectorInterface.py) (3h), meeting (30 min) || 16h
|-
| Lex || Meeting (0.5h), writing about relations and companionship (3h), reflecting current scenarios with prof (2h), editing, writing and checking scenarios with professionals (5h) || 10.5h
|}

=== Week 5 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || meeting (30 min), help Zakaria with software (4h), research robot functionalities (3h), NLP analysis (2.5h) || 10 hours
|-
| Zakaria || meeting (30 min) - programming (built emotion classification CNN) (5h) - trained the CNN (1h) - programmed test.py for facial emotion recognition via the webcam (3h) || 9.5 hours
|-
| Lex || meeting (0.5h) - translated and generalized interview answers (2h) - research robotic hugs (3h) - research calling features (1.5h) || 7 hours
|}

=== Week 6 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || meeting (0.5h), research on NLP, blockchain, cloud storage, robot Sophia (8h), analysis (3h) || 11.5h
|-
| Zakaria || meeting (0.5h) - about the software part (5h) - programming (specific tasks are mentioned in software part week 6) (12h) || 17.5h
|-
| Lex || meeting (0.5h), research relations (6h), research robot designs (3h), writing (2h), drawing (2h) || 13.5h
|}

=== Week 7 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || meeting (0.5h) - reading articles, websites for data concerns (7h) - data analysis (4h) || 11.5h
|-
| Zakaria || meeting (0.5h) - software/application/results/consequences of errors/testing (9h) || 9.5h
|-
| Lex || - || -
|}

=== Week 8 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || meeting (0.5h) - presentation & data concerns (10h) || 10.5h
|-
| Zakaria || meeting (0.5h), presentation (content/recording/editing) (8h), update wiki page (6h) || 15.5h
|-
| Lex || meeting (0.5h) - presentation (6h) - facial expressions (3h) - editing wiki (2h) || 11.5h
|}

=== Week 9 ===

{| class="wikitable"
|-
! scope="col" style="width: 50px;" | Name
! scope="col" style="width: 250px;" | Tasks
! scope="col" style="width: 225px;" | Total hours
|-
| Cahitcan || meeting (0.5h) - updating wiki (5h) || 5.5h
|-
| Zakaria || meeting (0.5h) - make quiz (4h) - finalize wiki page (3h) || 7.5h
|-
| Lex || redefining requirements (3h) - reworking scenarios (4h) || -
|}

Latest revision as of 09:14, 25 June 2020

Emotion Recognition in companion Robots for elderly people

Presentation

Group Members

| Name | Student Number | Study | Email |
|---|---|---|---|
| Cahitcan Uzman | 1284304 | Computer Science | c.uzman@student.tue.nl |
| Zakaria Ameziane | 1005559 | Computer Science | z.ameziane@student.tue.nl |
| Lex van Heugten | 0973789 | Applied Physics | l.m.v.heugten@student.tue.nl |

Problem Statement

As the years pass, everyone gets older and starts to lose some abilities; this is the nature of the human body. Older people become more vulnerable in terms of health: their movements slow down, and communication between neurons in the brain slows, which may contribute to mental problems. As a result, they depend more on other people to maintain their daily lives. In other words, older people may need to be cared for by someone else because of their physical decline. However, some older people are not fortunate enough to have someone who can support them. For those people, the good news is that technology and artificial intelligence are developing, and one promising application is care robots for the elderly. Although care robots are a promising idea that is likely to make life easier, the technology also brings concerns and ambiguities. Since communication with elderly people is essential to caring for them, the benefit of a care robot increases as communication between the user and the robot becomes clearer and easier. Emotion is one of the most important tools for communication, which is why we aim to investigate the use of emotion recognition in care robots with the help of artificial intelligence. Our main goal is to strengthen the communication between an elderly person and the robot by making the robot understand the user's current emotional state and behave accordingly.

Users and their needs

The healthcare service: Healthcare services face a large shortage of human caregivers, which leads to the use of robots to take care of elderly people. Healthcare services want to provide care robots that are as similar as possible to human caregivers.

Elderly people: Elderly people need someone who takes care of them physically and with whom they can share their emotions, so the care robot needs to understand their emotions. That way it can understand what the person needs and act accordingly.

Enterprises who develop the care robot: These enterprises aim to improve care robot technology and make it as effective as a human caregiver by adding features that allow the robot to interact emotionally with people.

USE analysis

Users:

The population of elderly people keeps growing at a high rate. In Europe, citizens aged 65 and over made up 20.3% of the population in 2019 (1), and this share is expected to keep increasing every year.

As people get older, they need not only someone to take care of them physically but also human interaction and a way to share their emotions; otherwise they become vulnerable to loneliness and social isolation. The leader of Britain’s GPs said in 2017: “Being lonely can be as bad for someone’s health as having a long term illness such as diabetes or high blood pressure” (2). The fight against social isolation and loneliness is an essential reason for social companion robots.

Introducing facial emotion recognition in social companion robots for elderly people will help the robot understand the emotions of the person and react accordingly. For example, if the person is sad, the robot may use some techniques to cheer them up, and if the person is happy, the robot can ask them to share what made them happy. By sharing emotions, elderly people will feel less lonely, and by understanding the person's emotions, the robot will know more about what the person really needs.


Society:

This technology can have a big impact on society. In many countries, the healthcare system cannot provide enough caregivers to cover all needs, which makes things difficult for elderly people and especially for their families. This is all the more pressing because the phenomenon of “living alone” at the end of life has grown enormously in many societies in the last decades, even in societies that traditionally have strong family ties (1).

Society will benefit from this technology because it will increase the overall happiness by preventing the social isolation and loneliness of the elderly.


Enterprise:

Over the years, the companies that develop care robots have greatly improved their efficiency. The first robots could only accomplish some basic physical tasks; later robots could communicate with people in human language, and then came robots that can entertain elderly people in different ways. These companies always aim to improve their robots to satisfy the needs of the users. Adding facial emotion recognition to the robot will be a big improvement and is expected to increase the desire of elderly people to have one of these care robots. The companies are likely to benefit from this technology, as the need for care robots is expected to rise in the coming years. Studies have shown that in 2050, people over the age of 65 will represent 28.5% of the population of Europe, so this investment is expected to bring large profits to those companies.

Approach

The subject of face recognition has developed strongly in the past few years. Face recognition and face tracking can be found in daily life, in cameras that automatically focus on the faces in view and, of course, in Snapchat filters. This technology will be a good starting point for this project. However, face recognition is still far from consistent. Factors like skin color, lighting and deformities of the face are certain to upset most face recognition systems every once in a while. The point of deformities is especially important for our target user. As mentioned above, old age comes with many different effects, and the aging of skin is especially relevant for this project. Skin on the face begins to sag, wrinkle and discolor at older age, which might create issues for our face recognition software. Tuning this software to work with the elderly will be the first milestone.

The second milestone will, of course, be the recognition of emotion. Recognizing emotion is complex and needs a large, relevant database to work correctly. Here we will probably run into the same problem, namely working with the elderly as the target group. Our software should be fine-tuned to their facial structure, and the database should be built from relevant data. Lastly, the system should be consistent. It is designed to be part of a care robot, and care robots should take over part of the care given by human professionals, so the system must be consistent enough to actually take some of the work pressure off their hands.

Planning

Week 1:

- Brainstorming ideas

- Decide upon a subject

- Who are the users and their needs

- Study literature on the topic

Week 2:

- USE aspects analysis

- Gather RPCs

- Care robots that already exist

- Research the opinions, wishes and concerns of the elderly

Week 3:

- Analyze how facial expressions change for different emotions

- Analyze different aspects of this technology (for example, how the robot should react to different emotions)

- The use of convolutional neural networks for facial emotion recognition

Week 4:

- Find a database of face pictures that is large enough for CNN training

- Start the implementation of the facial emotion recognition

- Companionship and Human-Robot relations

Week 5:

- Implementation of the facial emotion recognition

Week 6:

- Testing and improving

- Designing the robot

Week 7:

- Software results analysis

- Designing facial expressions

- Prepare presentation

Week 8:

- Derive conclusions and possible future improvements

- Finalize presentation.

Week 9:

- Make quiz for human vs software accuracy comparison

- Finalize wiki page.

Deliverables:

- The wiki page: this will include all the steps we took to make this project, as well as all the analysis we’ve made and results we achieved.

- Software that can recognize facial emotions

- A final presentation of our project

State of the art

Companion robots that already exist

SAM robotic concierge

Luvozo PBC, which focuses on developing solutions for improving the quality of life of elderly people, started testing its product SAM in a leading senior living community in Washington. SAM is a smiling, human-sized concierge robot whose task is to check on residents in long-term care settings. The robot made the patients more satisfied, as they felt that someone was checking on them all the time, and it reduced the cost of care.

ROBEAR

Developed by scientists from RIKEN and Sumitomo Riko Company, ROBEAR is a nursing care robot that provides physical help for elderly patients, such as lifting patients from a bed into a wheelchair or helping patients who are able to stand up but require assistance. It’s called ROBEAR because it is shaped like a giant gentle bear.

PARO

The PARO robot is an advanced interactive robot intended for the therapy of elderly people in nursing homes. It is shaped like a baby harp seal, has a calming effect on patients, and elicits emotional responses from them. It can actively seek out eye contact, respond to touch, cuddle with people, remember faces, and learn actions that generate a favorable reaction. The benefits of the PARO robot for elderly people are documented.

Existing robots that are able to perform NLP

Sophia

Robot Sophia is a social humanoid robot developed by Hanson Robotics. She can follow faces, sustain eye contact, recognize individuals and most importantly process human language and speak by using pre-written responses. She is also able to simulate more than 60 facial expressions.

Apple's Siri