PRE2019 3 Group11



<div style="font-family: 'Calibri'; word-wrap: break-word;font-weight: 420; box-shadow: 0px 20px 30px -2px rgba(0,0,0,0.50); padding: 70px; background-color: rgb(255, 255, 253); padding-top: 35px; font-size: 16px; line-height: 2.0; max-width: 1100px; margin-left: auto;margin-right: auto; "> | <div style="font-family: 'Calibri'; word-wrap: break-word;font-weight: 420; box-shadow: 0px 20px 30px -2px rgba(0,0,0,0.50); padding: 70px; background-color: rgb(255, 255, 253); padding-top: 35px; font-size: 16px; line-height: 2.0; max-width: 1100px; margin-left: auto;margin-right: auto; "> | ||

<font size='10'>Emotional Feedback System</font>


|- | |- | ||

|} | |} | ||

= Introduction =

Nowadays, the global population is growing ever older<ref> United Nations Publications. (2019b). World Population Prospects 2019: Data Booklet. World: United Nations.</ref> and, as a result, a growing number of elderly people are in need of care. One of the issues that the elderly face is increasing feelings of loneliness.<ref> Holwerda, T. J., Beekman, A. T. F., Deeg, D. J. H., Stek, M. L., van Tilburg, T. G., Visser, P. J., … Schoevers, R. A. (2011). Increased risk of mortality associated with social isolation in older men: only when feeling lonely? Results from the Amsterdam Study of the Elderly (AMSTEL). Psychological Medicine, 42(4), 843–853. https://doi.org/10.1017/s0033291711001772</ref> This may be due to the loss of close relationships, an inability to maintain relationships or simply increasing isolation from living in a care home. To address this problem, our group has decided to develop an emotional feedback system that could be used in a social robot that interacts with the elderly to combat loneliness. Our emotional feedback system uses emotion recognition technology to find out how an elderly person is feeling. With this information, the system determines how to react to and interact with the person in a way that imitates human interaction. The goal of this system is to bring human interaction into the daily life of elderly people who otherwise lack it or are completely devoid of it. With our project, we want to improve the social interface of robots interacting with lonely elderly people by using emotion recognition.

== Project ==

=== Problem Statement ===

We need to be able to detect and recognize emotions. We also need to know how a robot should respond to different emotions in different situations, in a way that imitates human interaction as closely as possible.
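
The detect-then-respond loop described in the problem statement can be sketched as follows. This is a minimal illustration under our own assumptions, not the system's actual design: the emotion labels, the response phrases and the 0.6 confidence threshold are hypothetical placeholders. When the recognizer is unsure, the sketch asks a question instead of acting, in line with the observation that the elderly feel more at ease when asked questions and offered choices.

```python
from dataclasses import dataclass

# Hypothetical placeholder responses; the real feedback would have to be
# derived from the survey, the literature and the video analysis.
RESPONSES = {
    "joy": "smile and share in the positive mood",
    "sadness": "show concern and ask whether the person wants to talk",
    "anger": "stay calm and give the person space",
    "fear": "reassure the person and offer to call a carer",
    "neutral": "offer a choice of activities",
}

# Fallback used when the recognizer is not confident enough.
ASK_INSTEAD = "ask the person how they are feeling"


@dataclass
class Recognition:
    """Output of a (hypothetical) emotion recognizer."""
    emotion: str
    confidence: float  # expected to lie in [0, 1]


def choose_feedback(result: Recognition, threshold: float = 0.6) -> str:
    """Map a recognized emotion to a response, asking when uncertain."""
    if not 0.0 <= result.confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    if result.confidence < threshold or result.emotion not in RESPONSES:
        # Unsure or unknown emotion: leave the initiative to the person.
        return ASK_INSTEAD
    return RESPONSES[result.emotion]


if __name__ == "__main__":
    print(choose_feedback(Recognition("sadness", 0.85)))
    print(choose_feedback(Recognition("sadness", 0.40)))
```

Keeping the uncertain case as a question rather than a guess is a deliberate design choice in this sketch: a wrong reaction to a misread emotion is likely to feel less natural than simply asking.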

=== Objectives ===

* Research state-of-the-art social robots, current emotion recognition technologies and the subject in general

* Analyze the ways in which a robot can communicate with the elderly, be it through verbal or non-verbal communication

* Provide scenarios in which our hypothetical robot could be used and the actions that the robot needs to take

* Use the gathered information on human interaction to define the feedback that the emotional feedback system gives

* Develop emotion recognition software that provides appropriate feedback based on our findings

=== Approach ===

To orient ourselves on the topic, we each set ourselves the assignment of finding and reading five relevant papers. These papers, with a short summary of their contents, can be found in the appendices (see Appendix A). We carried out a USE case analysis and researched existing social robots and existing emotion recognition technologies. From this research, we got an idea of what feedback our system can give and which method the system can use to recognize emotions. To research reactions to emotions, we conducted a digital survey and reviewed existing literature. Our original intention was to work with a robot: giving it emotion recognition, designing its facial reactions and testing it in elderly care homes. Unfortunately, this became impossible because elderly care homes and the university closed down during the period of this project. Since we could not implement our emotion recognition system in a robot and test it with people, we could no longer get direct feedback from people, which we had planned to use to further improve the feedback our system gives. To compensate for this, we also analysed videos of human interaction. By looking at how people react to different emotions, we got a better idea of how humans respond to various emotions. We combined the results of the survey, the literature research and the video analysis to reach a conclusion.

= USE Case Analysis =


The world population is growing older each year, with the UN predicting that in 2050, 16% of the people in the world will be over the age of 65.<ref> United Nations Publications. (2019b). World Population Prospects 2019: Data Booklet. World: United Nations.</ref> In the Netherlands alone, the number of people aged 65 and over is predicted to increase by 26% by 2035.<ref> Smits, C. H. M., van den Beld, H. K., Aartsen, M. J., & Schroots, J. J. F. (2013). Aging in The Netherlands: State of the Art and Science. The Gerontologist, 54(3), 335–343. https://doi.org/10.1093/geront/gnt096</ref> This means that our population is growing ever older, and having facilities in place to help and take care of the elderly is therefore becoming a greater necessity. A key issue among the elderly today is a lack of social communication and interaction. In a study performed in the Netherlands on a group of 4001 elderly individuals, 21% reported that they experienced feelings of loneliness.<ref> Holwerda, T. J., Beekman, A. T. F., Deeg, D. J. H., Stek, M. L., van Tilburg, T. G., Visser, P. J., … Schoevers, R. A. (2011). Increased risk of mortality associated with social isolation in older men: only when feeling lonely? Results from the Amsterdam Study of the Elderly (AMSTEL). Psychological Medicine, 42(4), 843–853. https://doi.org/10.1017/s0033291711001772</ref> Since the number of elderly people keeps increasing each year, this problem is becoming more and more relevant.

Many factors affect quality of life in old age. Being healthy is a complex state that includes physical, mental and emotional well-being. The quality of life of the elderly is impacted by feelings of loneliness and social isolation.<ref> Luanaigh, C. Ó., & Lawlor, B. A. (2008). Loneliness and the health of older people. International Journal of Geriatric Psychiatry, 23(12), 1213–1221. https://doi.org/10.1002/gps.2054</ref> The elderly are especially prone to feeling lonely because they often live alone and have fewer and fewer intimate relationships.<ref> Victor, C. R., & Bowling, A. (2012). A Longitudinal Analysis of Loneliness Among Older People in Great Britain. The Journal of Psychology, 146(3), 313–331. https://doi.org/10.1080/00223980.2011.609572</ref>

Loneliness is a state in which people don’t feel understood, lack social interaction and feel psychological stress due to this isolation.<ref> Şar, A. H., Göktürk, G. Y., Tura, G., & Kazaz, N. (2012). Is the Internet Use an Effective Method to Cope With Elderly Loneliness and Decrease Loneliness Symptom? Procedia - Social and Behavioral Sciences, 55, 1053–1059. https://doi.org/10.1016/j.sbspro.2012.09.597</ref> We distinguish two types of loneliness: social isolation and emotional loneliness.<ref> DiTommaso, E., & Spinner, B. (1997). Social and emotional loneliness: A re-examination of Weiss’ typology of loneliness. Personality and Individual Differences, 22(3), 417–427. https://doi.org/10.1016/s0191-8869(96)00204-8</ref> Social isolation is when a person lacks interactions with other individuals.<ref> Gardiner, C., Geldenhuys, G., & Gott, M. (2016). Interventions to reduce social isolation and loneliness among older people: an integrative review. Health & Social Care in the Community, 26(2), 147–157. https://doi.org/10.1111/hsc.12367</ref> Emotional loneliness refers to the state of mind in which one feels alone and out of touch with the rest of the world.<ref> Weiss, R. (1975). Loneliness: The Experience of Emotional and Social Isolation (MIT Press) (New edition). Amsterdam, Netherlands: Amsterdam University Press.</ref> Although they are different concepts, they are two sides of the same coin and there is a clear overlap between the two.<ref> Golden, J., Conroy, R. M., Bruce, I., Denihan, A., Greene, E., Kirby, M., & Lawlor, B. A. (2009). Loneliness, social support networks, mood and wellbeing in community-dwelling elderly. International Journal of Geriatric Psychiatry, 24(7), 694–700. https://doi.org/10.1002/gps.2181</ref> The effects of loneliness in the elderly, ranging from mental to physical health issues, can be quite profound. Research shows that such loneliness can lead to depression, cardiovascular disease, general health issues, loss of cognitive functions and increased risk of mortality.<ref> Courtin, E., & Knapp, M. (2015). Social isolation, loneliness and health in old age: a scoping review. Health & Social Care in the Community, 25(3), 799–812. https://doi.org/10.1111/hsc.12311</ref>

In order to aid the elderly, the Emotional Feedback System (EFS) will have to communicate effectively with them.<ref> Hollinger, L. M. (1986). Communicating With the Elderly. Journal of Gerontological Nursing, 12(3), 8–9. https://doi.org/10.3928/0098-9134-19860301-05</ref> One of the best ways to communicate with the elderly population is to use a mixture of verbal and non-verbal communication. At this age, people tend to have communication issues due to cognitive and sensory problems, so effective communication can be a challenge. To facilitate interaction between the robot and the elderly, we must take the specific needs of this age group into consideration. Older people respond better to carers who show empathy and compassion, take their time to listen, show respect and try to build a rapport with the person to make them feel comfortable. Rather than being forced to do things and ordered around, they feel more at ease when they are asked questions and offered choices. Elderly people like to feel in control of their lives as much as possible.<ref> Ni, P. I. (2014, November 16). How to Communicate Effectively With Older Adults. Retrieved February 19, 2020, from https://www.psychologytoday.com/us/blog/communication-success/201411/how-communicate-effectively-older-adults</ref>

==Society==

With the advent of the 21st century, technology has grown exponentially and in an unpredictable manner. With this growth came progress in all areas of human life. However, a seemingly unavoidable downside to this growth has been increased social isolation. The use of smartphones and social media gives people less incentive to go out and converse or have meaningful social interaction. The elderly already suffer from such social isolation due to the loss of family members and loved ones, and as they get older it becomes harder to maintain social contact. With a rise in the overall age of the population, there will be a growing number of lonely elderly people. Care homes are understaffed and care workers are already overworked, so a new solution inevitably needs to be found. A clear solution is the use of care robots to help keep the elderly occupied and improve their overall living situation. Japan is a leading force in the development of this technology, as the country is already facing a shortage of workers and a growing elderly population. A robot cannot fully replace the need for human-to-human social interaction, but in many ways it can provide benefits that a human cannot. Since a robot never sleeps, it can tend to the elderly person at any time. Furthermore, using neural networks and data gathering technologies, the robot can learn the best ways to interact with the person in order to relieve their loneliness and be a ‘friend’ figure that knows what they need and when they need it. Research has shown that care robots in elderly homes do bring about positive effects on mood and loneliness.<ref> Broekens, J., Heerink, M., & Rosendal, H. (2009). Assistive social robots in elderly care: a review. Gerontechnology, 8(2). https://doi.org/10.4017/gt.2009.08.02.002.00</ref>

A few issues arise when trying to implement such robots into the lives of the elderly. One of the main ones is that the person's autonomy should be preserved. In one study, participants agreed that, out of a few key values that the robot should provide, autonomy was the most important.<ref> Draper, H., & Sorell, T. (2016b). Ethical values and social care robots for older people: an international qualitative study. Ethics and Information Technology, 19(1), 49–68. https://doi.org/10.1007/s10676-016-9413-1</ref> This means that although the EFS is meant to help reduce loneliness in the elderly, it should not impede that person's autonomy. This further raises the case in which the robot imposes things on an elderly person who does not have the mental or physical capacity to agree with what the robot wants to do. To avoid such issues, the EFS should mainly be applied to elderly people who are both physically and mentally healthy. Another issue is privacy. For the EFS to function correctly and optimally aid the person, it needs to gather a reasonable amount of data on them in order to respond in kind. Video footage will be recorded in order to correctly recognize the person's emotions, and robots will have access to sensitive information and private data in order to perform their tasks. This means that strict policies will need to be put in place to make sure their privacy is respected. Furthermore, the elderly should be in control of this information and have full rights to decide what the robot should and should not store. This immense amount of data could have ethical and commercial repercussions. For example, such data could easily be used to profile a person and create targeted advertisements. Thus, there should be a guarantee that the data will not be sold to other companies and that it is stored safely, such that only the elderly person or care home workers have access to it.
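
The requirement that the elderly decide what the robot may store can be made concrete with a consent check placed in front of every write. The sketch below is a hypothetical design of our own, not a specified part of the EFS: category names such as `emotion_label` and `video_frame` are illustrative, data without consent is discarded instead of stored, and revoking consent also deletes what was already stored under that category.

```python
class ConsentGatedStore:
    """Stores data only in categories the person has explicitly allowed."""

    def __init__(self) -> None:
        self._allowed: set[str] = set()
        self._records: list[tuple[str, object]] = []

    def grant(self, category: str) -> None:
        """The person allows the robot to keep data of this category."""
        self._allowed.add(category)

    def revoke(self, category: str) -> None:
        """Withdraw consent and delete everything stored under it."""
        self._allowed.discard(category)
        self._records = [(c, d) for c, d in self._records if c != category]

    def store(self, category: str, data: object) -> bool:
        """Persist data if consent exists; otherwise drop it and return False."""
        if category not in self._allowed:
            return False
        self._records.append((category, data))
        return True

    def records(self) -> list[tuple[str, object]]:
        """Return a copy so callers cannot bypass the consent check."""
        return list(self._records)


if __name__ == "__main__":
    store = ConsentGatedStore()
    print(store.store("video_frame", "frame-001"))  # False: no consent given
    store.grant("emotion_label")
    print(store.store("emotion_label", "joy"))      # True
    store.revoke("emotion_label")
    print(store.records())                          # []
```

Defaulting to "store nothing" means that forgetting to ask for consent fails safely; a real deployment would additionally need encryption and access control for the care home workers mentioned above.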

==Enterprise==

The world is moving towards a more technological age and with it come new and innovative business opportunities. Social robots are becoming more and more common and many companies have been founded to focus on creating such robots.<ref> Lathan, C. G. E. L. (2019, July 1). Social Robots Play Nicely with Others. Retrieved from https://www.scientificamerican.com/article/social-robots-play-nicely-with-others/</ref> There have been quite a lot of advancements related to the EFS through businesses such as Affectiva developing emotion recognition software and many different care robots being made, e.g. Stevie the robot. However, there is a gap in the market for a robot that can read the emotions of the elderly and respond in a way that benefits them. As we have seen, the technology is available, and thus taking this step is not too far-fetched. With the increasing number of elderly people and care homes having high turnover rates due to burnout, the need for such robots is quite high, meaning there will be demand for this technology.<ref> Costello, H., Cooper, C., Marston, L., & Livingston, G. (2019). Burnout in UK care home staff and its effect on staff turnover: MARQUE English national care home longitudinal survey. Age and Ageing, 49(1), 74–81. https://doi.org/10.1093/ageing/afz118</ref> Although some care homes would welcome this technology to alleviate the loss of employees, some may disagree with using a robot to provide social interaction. However, the labor force in care homes is drastically decreasing due to hard working conditions and low pay. This means that it is almost inevitable that some solution will need to be implemented in the near future. Since there is a market for this technology and many companies are already building care robots, investing in the EFS proposal would be very plausible, as there is clearly profit to be made.

Taking care of the elderly requires good communication skills, empathy and concern. The elderly population includes people from various social circles, with different cultures, ages, goals and abilities. To take care of this population in the best way possible, we need a wide variety of techniques and knowledge.

= State-of-the-art = | = State-of-the-art = | ||

== Current emotion recognition technologies ==

Developing an interface that can respond to the emotion of a person starts with choosing a way to read and recognize this emotion. There are many techniques for recognizing emotion; three common ones are facial recognition, measuring physiological signals and speech recognition. Emotion recognition using facial recognition can be extended to analyze the full body of the person (e.g. gestures), and other combinations of techniques are also possible. A 2009 study proposed such a combination of different methods. The proposed method used facial expressions and physiological signals like heart rate and skin conductivity to classify four emotions of users: joy, fear, surprise, and love.<ref>Chang, C.-Y., Tsai, J.-S., Wang, C.-J., & Chung, P.-C. (2009). Emotion recognition with consideration of facial expression and physiological signals. 2009 IEEE Symposium on Computational Intelligence in Bioinformatics and Computational Biology. https://doi.org/10.1109/cibcb.2009.4925739 </ref>
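
One simple way to combine facial and physiological evidence is late fusion: each modality produces a score per emotion, and the two score sets are averaged with a weight. The sketch below assumes that form; it is not necessarily the method used in the 2009 study, and the weight and example scores are made up for illustration.

```python
EMOTIONS = ("joy", "fear", "surprise", "love")


def normalize(scores: dict[str, float]) -> dict[str, float]:
    """Rescale non-negative per-emotion scores so that they sum to 1."""
    total = sum(scores.get(e, 0.0) for e in EMOTIONS)
    if total <= 0:
        raise ValueError("scores must contain a positive value")
    return {e: scores.get(e, 0.0) / total for e in EMOTIONS}


def fuse(face: dict[str, float], physio: dict[str, float],
         face_weight: float = 0.5) -> tuple[str, dict[str, float]]:
    """Weighted late fusion of two modalities; returns (label, fused scores)."""
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must lie in [0, 1]")
    f, p = normalize(face), normalize(physio)
    fused = {e: face_weight * f[e] + (1 - face_weight) * p[e] for e in EMOTIONS}
    return max(fused, key=fused.get), fused


if __name__ == "__main__":
    face = {"joy": 0.7, "fear": 0.1, "surprise": 0.1, "love": 0.1}
    physio = {"joy": 0.2, "fear": 0.1, "surprise": 0.6, "love": 0.1}
    print(fuse(face, physio)[0])                   # joy
    print(fuse(face, physio, face_weight=0.2)[0])  # surprise
```

The weight expresses how much each modality is trusted; since facial expressions are easier to fake than physiological signals, a system could lower `face_weight`, which in the example flips the outcome from joy to surprise.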

The most intuitive way to detect emotion is using facial recognition software<ref>Zhentao Liu, Min Wu, Weihua Cao, Luefeng Chen, Jianping Xu, Ri Zhang, Mengtian Zhou, Junwei Mao. (2017). A Facial Expression Emotion Recognition Based Human-robot Interaction System. IEEE/CAA Journal of Automatica Sinica, 4(4), 668-676</ref>. Emotion recognition based on facial expressions is easy to set up: all that is needed is a camera and software, which makes the technique user-friendly. However, humans can fake emotions, and in this way a person can trick the recognition software. When the technique is extended with full-body analysis, the result can be more accurate, depending on how much body language the person uses. However, body language can also be faked.

There are many papers about using physiological signals to detect emotions<ref>Haag, A., Goronzy, S., Schaich, P., & Williams, J. (2004). Emotion recognition using bio-sensors: First steps towards an automatic system. In Lecture Notes in Artificial Intelligence (Subseries of Lecture Notes in Computer Science) (Vol. 3068, pp. 36–48). Springer Verlag. https://doi.org/10.1007/978-3-540-24842-2_4</ref><ref>Jerritta, S., Murugappan, M., Nagarajan, R., & Wan, K. (2011). Physiological signals based human emotion recognition: A review. In Proceedings - 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, CSPA 2011 (pp. 410–415). https://doi.org/10.1109/CSPA.2011.5759912</ref><ref>Kim, K. H., Bang, S. W., & Kim, S. R. (2004). Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42(3), 419–427. https://doi.org/10.1007/BF02344719</ref><ref>Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., Liao, D., … Yang, X. (2018, July 1). A review of emotion recognition using physiological signals. Sensors (Switzerland). MDPI AG. https://doi.org/10.3390/s18072074</ref><ref>Zong, C., & Chetouani, M. (2009). Hilbert-Huang transform based physiological signals analysis for emotion recognition. 2009 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT). https://doi.org/10.1109/isspit.2009.5407547 </ref>. These papers investigate how physiological signals such as heart rate, skin temperature, skin conductivity, and respiration rate can be used to infer a person’s emotion. To measure these signals, biosensors, electrocardiography (ECG) and electromyography (EMG) are used. Even though these methods give more reliable results than methods like facial recognition, they all require some effort to set up and are not particularly user-friendly.
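
As an illustration of turning a raw physiological signal into features, the sketch below derives two common heart-rate features from the intervals between successive heartbeats (RR intervals): the mean heart rate and RMSSD, a standard heart-rate-variability measure. It assumes the beats have already been detected, for example from an ECG; the cited papers use their own, larger feature sets, which would also cover skin conductance and respiration.

```python
import math
from statistics import mean


def heart_rate_features(rr_intervals_s: list[float]) -> dict[str, float]:
    """Compute mean heart rate (bpm) and RMSSD (ms) from RR intervals in seconds."""
    if len(rr_intervals_s) < 2:
        raise ValueError("need at least two RR intervals")
    if any(rr <= 0 for rr in rr_intervals_s):
        raise ValueError("RR intervals must be positive")
    # 60 seconds divided by the average beat-to-beat time gives beats per minute.
    mean_hr = 60.0 / mean(rr_intervals_s)
    # Successive differences between neighbouring intervals, in milliseconds.
    diffs_ms = [(b - a) * 1000.0 for a, b in zip(rr_intervals_s, rr_intervals_s[1:])]
    # RMSSD: root mean square of those successive differences.
    rmssd = math.sqrt(mean(d * d for d in diffs_ms))
    return {"mean_hr_bpm": mean_hr, "rmssd_ms": rmssd}


if __name__ == "__main__":
    print(heart_rate_features([0.80, 0.82, 0.78, 0.81]))
```

Features like these, rather than the raw signal, are what a classifier would receive as input.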

Lastly, speech recognition can be used to detect emotions<ref>Park, J.-S., Kim, J.-H., & Oh, Y.-H. (2009). Feature vector classification based speech emotion recognition for service robots. IEEE Transactions on Consumer Electronics, 55(3), 1590–1596. https://doi.org/10.1109/tce.2009.5278031 </ref>. Different emotions can be recognized by analyzing the tone and pitch of a person’s voice. Of course, what is normal differs from person to person and from region to region. In addition, the tone of voice for some emotions is very similar, which makes it hard to classify emotions purely based on voice. Like facial expressions, speech can also be faked.
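
Pitch, one of the voice features mentioned above, can be estimated with a basic autocorrelation method: the signal is compared with delayed copies of itself, and the delay with the highest similarity corresponds to one period of the fundamental frequency. The sketch below is a simplified illustration that assumes a clean, voiced audio frame; the 50–500 Hz search range is our own choice, and practical speech systems use more robust estimators.

```python
import math


def estimate_pitch(samples: list[float], sample_rate: float,
                   fmin: float = 50.0, fmax: float = 500.0) -> float:
    """Estimate the fundamental frequency (Hz) of a voiced frame via autocorrelation."""
    n = len(samples)
    # Candidate periods (in samples) corresponding to the allowed pitch range.
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), n - 1)
    if max_lag < min_lag:
        raise ValueError("frame too short for the requested pitch range")
    centre = sum(samples) / n
    x = [s - centre for s in samples]  # remove DC offset
    best_lag, best_corr = min_lag, -math.inf
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the signal and a copy shifted by `lag` samples.
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


if __name__ == "__main__":
    rate = 8000
    tone = [math.sin(2 * math.pi * 200 * i / rate) for i in range(800)]
    print(round(estimate_pitch(tone, rate)))  # 200
```

A single pitch value says little on its own; an emotion classifier would typically look at how pitch varies over an utterance, which also helps with the person-to-person differences noted above.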

Many papers on emotion recognition follow a similar approach to classifying emotions. First, they preprocess existing data sets and extract features from the data. Then they classify the data using a classification method. The papers differ mainly in the methods used to extract features<ref>Zong, C., & Chetouani, M. (2009). Hilbert-Huang transform based physiological signals analysis for emotion recognition. 2009 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT). https://doi.org/10.1109/isspit.2009.5407547</ref> and in the ways they classify the data<ref>Chang, C.-Y., Tsai, J.-S., Wang, C.-J., & Chung, P.-C. (2009). Emotion recognition with consideration of facial expression and physiological signals. 2009 IEEE Symposium on Computational Intelligence in Bioinformatics and Computational Biology. https://doi.org/10.1109/cibcb.2009.4925739</ref><ref>Zhentao Liu, Min Wu, Weihua Cao, Luefeng Chen, Jianping Xu, Ri Zhang, Mengtian Zhou, Junwei Mao. (2017). A Facial Expression Emotion Recognition Based Human-robot Interaction System. IEEE/CAA Journal of Automatica Sinica, 4(4), 668-676.</ref><ref>Park, J.-S., Kim, J.-H., & Oh, Y.-H. (2009). Feature vector classification based speech emotion recognition for service robots. IEEE Transactions on Consumer Electronics, 55(3), 1590–1596. https://doi.org/10.1109/tce.2009.5278031</ref>. Reported classification accuracy ranges from around 90%, using bio-sensors and a neural network classifier<ref>Haag, A., Goronzy, S., Schaich, P., & Williams, J. (2004). Emotion recognition using bio-sensors: First steps towards an automatic system. In Lecture Notes in Artificial Intelligence (Subseries of Lecture Notes in Computer Science) (Vol. 3068, pp. 36–48). Springer Verlag. https://doi.org/10.1007/978-3-540-24842-2_4</ref>, to around 70%, using various physiological signals and a support vector machine classifier<ref>Kim, K. H., Bang, S. W., & Kim, S. R. (2004). Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42(3), 419–427. https://doi.org/10.1007/BF02344719</ref>.
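The preprocess, extract features, classify pipeline shared by these papers can be illustrated with a deliberately small sketch. It assumes a one-dimensional physiological signal split into windows. The moving-average smoothing, the four summary features (mean, standard deviation, minimum, maximum) and the nearest-centroid classifier are simple stand-ins chosen for this illustration. They are not the Hilbert-Huang features, neural networks or support vector machines used in the cited work, and all names here are hypothetical.

```python
from math import dist
from statistics import mean, pstdev


def smooth(signal, k=3):
    """Preprocessing: moving average over k samples to suppress sensor noise."""
    return [mean(signal[i:i + k]) for i in range(len(signal) - k + 1)]


def extract_features(window, k=3):
    """Feature extraction: summarise a smoothed window as (mean, std, min, max)."""
    smoothed = smooth(window, k)
    return (mean(smoothed), pstdev(smoothed), min(smoothed), max(smoothed))


def fit_centroids(windows, labels):
    """Training: average the feature vectors of each class into one centroid."""
    grouped = {}
    for window, label in zip(windows, labels):
        grouped.setdefault(label, []).append(extract_features(window))
    return {
        label: tuple(mean(values) for values in zip(*feats))
        for label, feats in grouped.items()
    }


def classify(window, centroids):
    """Classification: pick the label whose centroid is nearest (Euclidean)."""
    feats = extract_features(window)
    return min(centroids, key=lambda label: dist(feats, centroids[label]))


# Hypothetical heart-rate-like windows, for illustration only.
calm = [[70, 71, 70, 72, 71], [69, 70, 71, 70, 70]]
stressed = [[95, 99, 97, 101, 98], [96, 100, 98, 97, 99]]
centroids = fit_centroids(calm + stressed, ["calm"] * 2 + ["stressed"] * 2)
print(classify([94, 97, 99, 96, 98], centroids))  # stressed
```

Swapping in a different feature extractor or classifier only changes one function, which mirrors how the cited papers differ from each other.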

Since our Emotional Feedback System (EFS) is intended for a service robot, it needs to be user-friendly. Detecting emotion from physiological signals would typically require the user to wear sensors, which makes it a poor choice here. Assuming that users do not fake their emotions, since they gain nothing from doing so, we will use only facial expression recognition in our system. Because of time restrictions, and because our main emphasis is not on the emotion recognition part, we decided not to use speech recognition. This could be added in the future to improve the system.

== Current robots ==

Several robots currently on the market could effectively run our system if the project were applied in a real-life setting.

We looked into a few robots that suit this project, based on their ability to convey facial emotion in a humanlike way and on their practicality for in-home use.

===Haru===

[[File:Haru.png|thumb|300px|alt=Alt text|Figure 1: The Haru robot.<ref>Honda Research Institute. (2018, August 15). Haru side and front views. [Photograph]. Retrieved from https://spectrum.ieee.org/automaton/robotics/home-robots/haru-an-experimental-social-robot-from-honda-research</ref> ]]

Haru<ref>Ackerman, E. (2018, August 15). Haru: An Experimental Social Robot From Honda Research. Retrieved April 1, 2020, from https://spectrum.ieee.org/automaton/robotics/home-robots/haru-an-experimental-social-robot-from-honda-research</ref> is a social robot that can convey facial emotions, using its two screens as eyes and the RGB lights in its base as a mouth.

Pros of the robot, in the context of our project:

*Can convey facial emotion with the eyes and the mouth.

*Small, so the robot does not take up much space in the client's house.


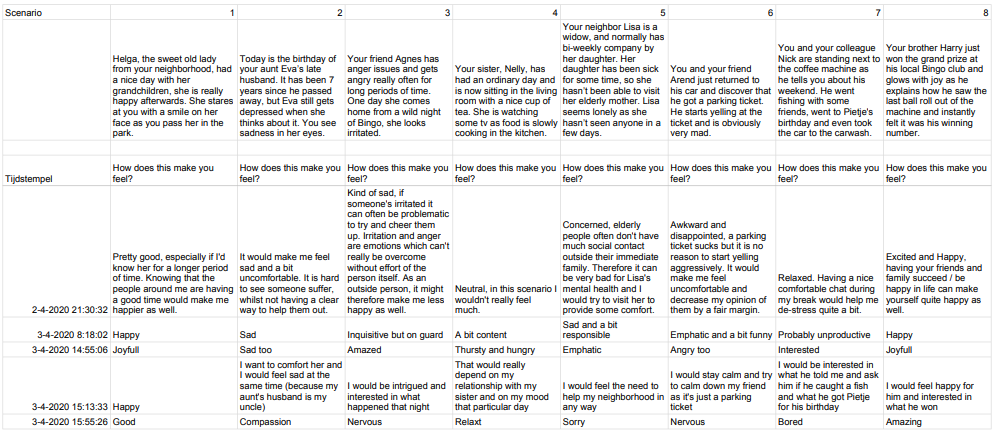

[[File:NAO.png|thumb|300px|alt=Alt text|Figure 2: The NAO humanoid robot. ]]

NAO<ref>Softbank Robotics. (n.d.). NAO the humanoid and programmable robot. Retrieved April 1, 2020, from https://www.softbankrobotics.com/emea/en/nao</ref> is a humanoid robot that can move its entire body. It has been used for a wide range of functions, such as teaching autistic children, as the children found the robot easier to relate to.

====ZORA bot (NAO robot)====

One application of the NAO robot is the ZORA<ref>Zora Robotics. (n.d.). Zora robot voor activering. Retrieved April 1, 2020, from https://www.robotzorg.nl/product/zora-robot-voor-activering/</ref> robot. It runs specific software that turns it into a social robot, so that it can provide entertainment or stimulate physical activity among clients in residential care.

Pros of the robot, in the context of our project:

*Very good at expressing bodily gestures.


===PARO Therapeutic robot===

[[File:PARO.png|thumb|300px|alt=Alt text|Figure 3: The PARO Therapeutic Robot.<ref>The PARO Therapeutic Robot. (2016, November 8). [Photograph]. Retrieved from http://tombattey.com/design/case-study-paro/</ref> ]]

The PARO Therapeutic robot<ref>PARO robots. (n.d.). PARO Therapeutic Robot. Retrieved April 1, 2020, from http://www.parorobots.com/whitepapers.asp</ref> is an animatronic seal that can interact with humans. Its main focus is reducing a patient’s stress and helping the patient become more relaxed and motivated. Guinness World Records has named PARO the world's most therapeutic robot.

Pros of the robot, in the context of our project:

*Can convey emotion through gestures.

*Generally makes the client more relaxed and helps against stress.

Cons of the robot, in the context of our project:


===Pepper===

[[File:Pepper.png|thumb|200px|alt=Alt text|Figure 4: The Pepper robot.<ref>Tokumeigakarinoaoshima. (2014, July 18). The robot Pepper standing in a retail environment [Photograph]. Retrieved from https://en.wikipedia.org/wiki/Pepper_(robot)#/media/File:SoftBank_pepper.JPG</ref> ]]

“Pepper is a robot designed for people. Built to connect with them, assist them, and share knowledge with them – while helping your business in the process. Friendly and engaging, Pepper creates unique experiences and forms real relationships.”<ref>Softbank Robotics. (n.d.-b). Pepper. Retrieved April 1, 2020, from https://www.softbankrobotics.com/us/pepper</ref>

Pepper is a humanoid robot that can hold a normal conversation while using bodily gestures, such as moving its arms and head. It has a screen on its torso that it can use to show content to the person it is talking to.

Pepper has also been used in a project by No1Robotics.<ref>No1Robotics. (n.d.). Current Projects – No1Robotics. Retrieved April 1, 2020, from https://www.no1robotics.com/current-projects/</ref>

Pros of the robot, in the context of our project:

*The screen on the robot can be used in many ways.

*Can make bodily gestures to convey emotions.

Cons of the robot, in the context of our project:

*Designed mainly for businesses and fairly large, so not very suitable for in-home use.

*Limited in conveying emotion through facial expressions.

===Furhat===

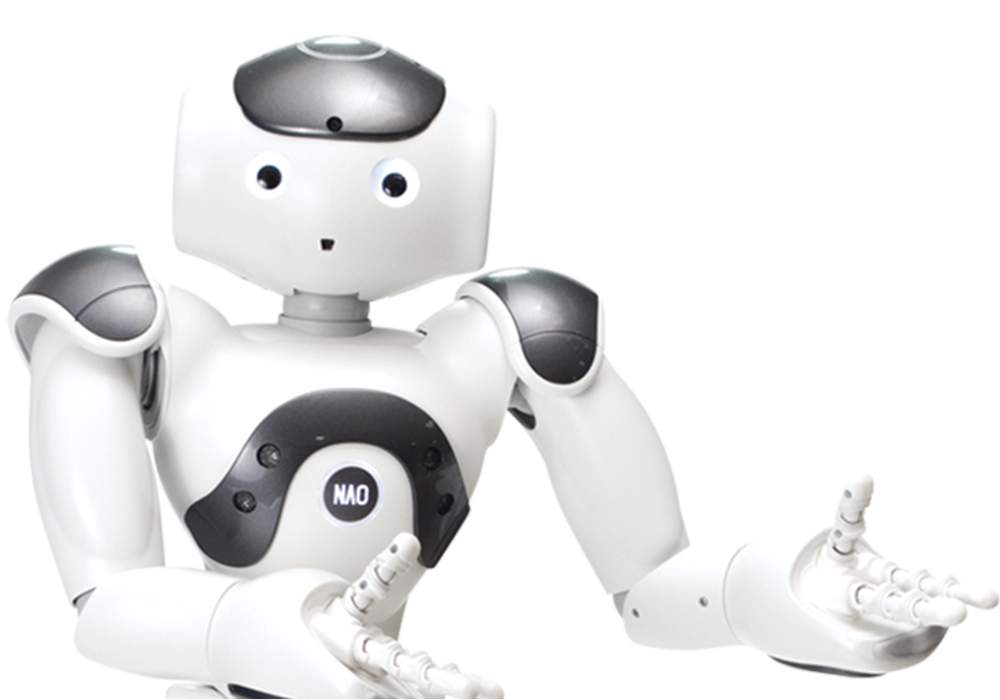

[[File:Furhat.png|thumb|300px|alt=Alt text|Figure 5: The Furhat robot.<ref>Engadget. (2018, November 23). Furhat Robotics [Photograph]. Retrieved from https://www.engadget.com/2018-11-23-furhat-social-robot-impressions.html</ref> ]]

Furhat<ref>Furhat Robotics. (n.d.). Meet the Furhat robot. Retrieved April 1, 2020, from https://www.furhatrobotics.com/furhat/</ref> is a social robot that can talk and can project an animated face that shows emotions and maintains eye contact. The plastic faceplate in front of the screen is interchangeable, and Furhat can move its head freely.

Pros of the robot, in the context of our project:

*Best method of conveying facial expressions among the robots considered.

*Humanlike, so easier to empathize with, which is crucial for this project.

Cons of the robot, in the context of our project:

*On the bigger side for in-home use.

===Socibot===

[[File:Socibot2.jpeg|thumb|300px|alt=Alt text|Figure 6: The Socibot.<ref>Engineered Arts. (2014, April 11). SociBot: the “social robot” that knows how you feel [Photograph]. Retrieved from https://www.theguardian.com/artanddesign/2014/apr/11/socibot-the-social-robot-that-knows-how-you-feel</ref> ]]

Like Furhat, the Socibot<ref>Engineered Arts. (n.d.). SociBot | The Robot that can Wear any Face. Retrieved April 1, 2020, from https://www.engineeredarts.co.uk/socibot/</ref> is a social robot that can project a face onto its head. The projected face can talk and make emotional expressions, and the robot has a movable head, just like Furhat.

The pros and cons are the same as those of Furhat.

= Emotion Recognition =

== Software ==

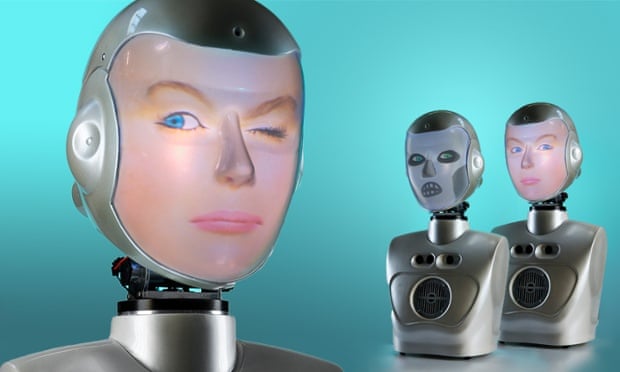

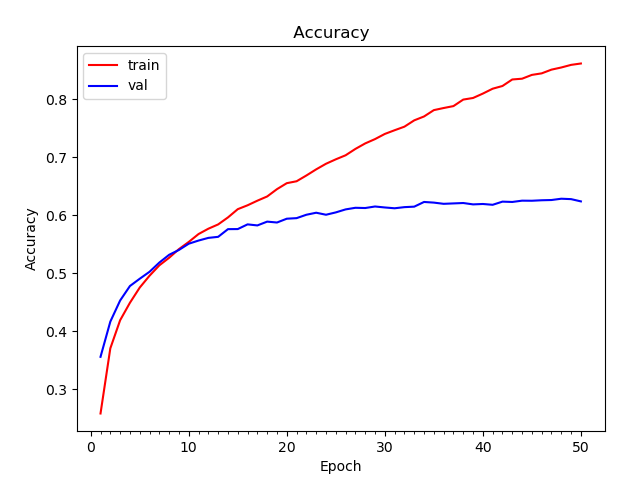

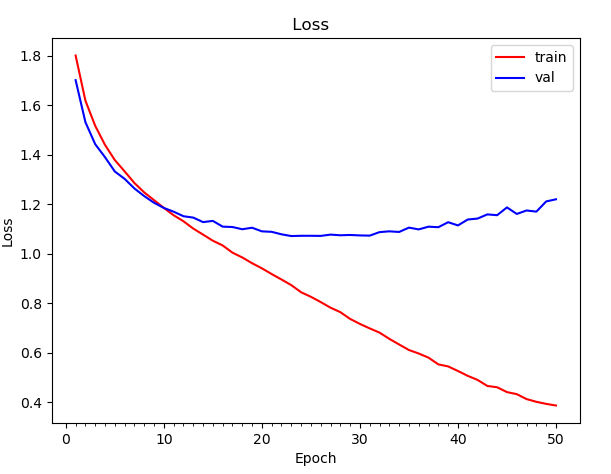

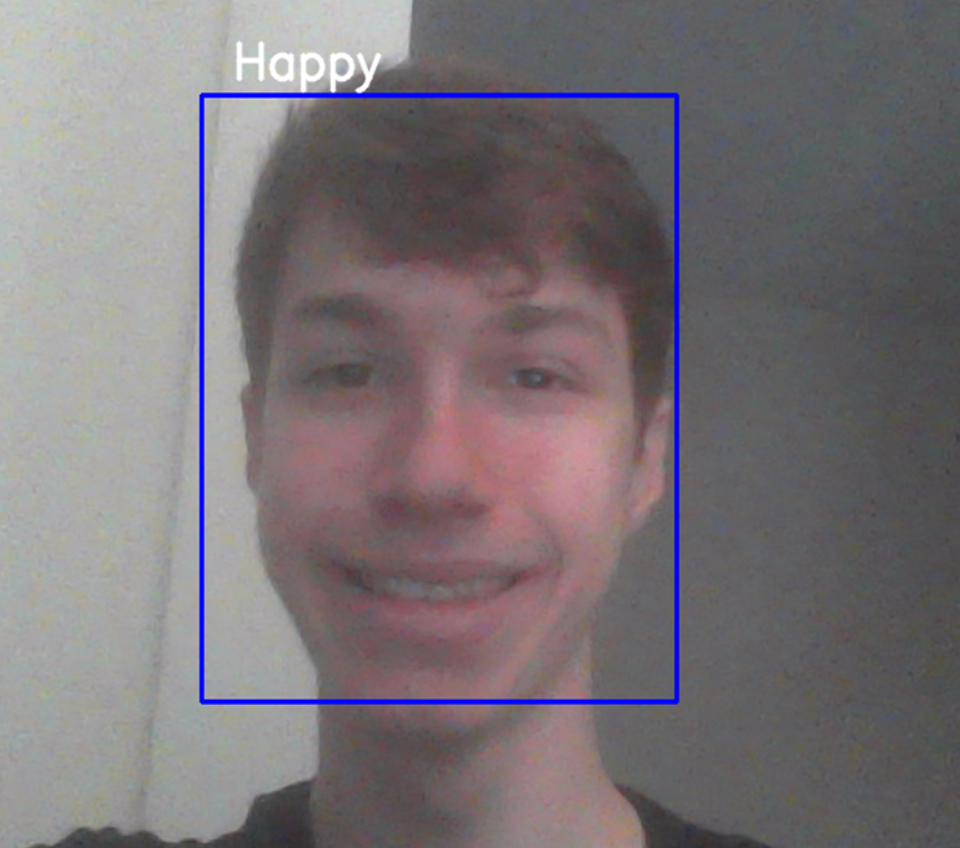

A key requirement for the Emotional Feedback System (EFS) is the ability to read the emotions of the elderly and respond in kind. To achieve this, we use deep convolutional neural networks (CNNs), which excel at image recognition and are therefore well suited to recognizing emotions from facial images. Rather than building this from scratch, we used an existing piece of emotion recognition software by atulapra, which classifies emotions using a CNN.<ref>atulapra. Emotion-detection [Computer software]. Retrieved from https://github.com/atulapra/Emotion-detection</ref> The CNN distinguishes in real time between seven emotions: angry, disgusted, fearful, happy, neutral, sad and surprised. To classify these emotions properly, the model must first be trained on data. We chose the FER-2013 dataset for training and validation.<ref>Pierre-Luc Carrier and Aaron Courville. (16AD). FER-2013 [The Facial Expression Recognition 2013 (FER-2013) Dataset]. Retrieved from https://datarepository.wolframcloud.com/resources/FER-2013</ref> Unlike many other datasets, its images are not of people posing, which makes them more representative of a webcam feed of an elderly person. Furthermore, the dataset contains 35,685 images; although they are of quite low resolution, the quantity makes up for the lack of quality.

With a dataset for training and validation in place, the network itself needs to be programmed. Using TensorFlow and the Keras API, we constructed a 4-layer CNN. These libraries reduce the complexity of the code and give extensive feedback on the accuracy and loss of the model. Once trained, the model can be saved and reused later. Figure x shows the accuracy per epoch, with the validation accuracy reaching around 60% after 50 epochs, and figure y shows the loss per epoch of the model.
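To make the size of such a network concrete, the arithmetic below traces a 48x48 grayscale input through an assumed layer layout: four 3x3 convolutions (32, 64, 128 and 128 filters, no padding, stride 1), three 2x2 max-pooling steps, a 1024-unit dense layer and a 7-way output. This layout is an illustrative assumption, not a confirmed copy of the software's model. In Keras it would correspond to `Conv2D(filters, kernel_size=(3, 3))`, `MaxPooling2D(pool_size=(2, 2))`, `Flatten()` and `Dense` layers; dropout layers add no parameters and are omitted.

```python
def conv_out(size, kernel=3):
    """Spatial size after an unpadded ('valid') convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Spatial size after non-overlapping max pooling."""
    return size // pool


def conv_params(kernel, in_ch, out_ch):
    """Kernel weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


# Assumed layer plan: ("conv", filters) or ("pool", None).
LAYERS = [
    ("conv", 32), ("conv", 64), ("pool", None),
    ("conv", 128), ("pool", None),
    ("conv", 128), ("pool", None),
]


def summarise(input_size=48, in_ch=1, hidden=1024, n_classes=7):
    """Return (final spatial size, flattened length, total trainable parameters)."""
    size, ch, total = input_size, in_ch, 0
    for kind, out_ch in LAYERS:
        if kind == "conv":
            total += conv_params(3, ch, out_ch)
            size, ch = conv_out(size), out_ch
        else:
            size = pool_out(size)
    flat = size * size * ch
    total += dense_params(flat, hidden) + dense_params(hidden, n_classes)
    return size, flat, total


print(summarise())  # (4, 2048, 2345607)
```

Under this assumption almost 90% of the parameters sit in the first dense layer, which is one reason why adding layers (as suggested in the conclusion) changes model size less than widening the dense part.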

[[File:Cnnacc.png|thumb|400px|alt=Alt text|Figure x: A plot of the accuracy per epoch of the model.]]

[[File:Cnnloss.png|thumb|400px|alt=Alt text|Figure y: A plot of the loss per epoch of the model.]]

[[File:Happy.png|thumb|400px|alt=Alt text|Figure 7: The live application of the program. The image shows a face being captured, as evidenced by the blue square. Above it, the current emotion of the user is displayed. ]]

With a reasonably accurate neural network in place, it can be applied to a real-time video feed. Using the OpenCV library, we capture real-time video and use its cascade classifier to perform Haar cascade object detection, which locates faces in each frame. The program scales each detected face to a 48x48 input and feeds it into the neural network. The network's output layer gives the probabilities of the emotions the user is portraying, and the emotion with the highest probability is displayed as white text above the user's face, as can be seen in figure 7. In use, the software performs relatively well, displaying emotions fairly accurately when the user faces the camera directly. However, in poor lighting and at sharp angles, it struggles to detect a face at all, which means it cannot detect emotions either. These are aspects to improve on in the future.
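The steps between face detection and the displayed label can be sketched in plain Python. In the real program, detection is done by OpenCV (`cv2.CascadeClassifier(...).detectMultiScale`) and scaling by `cv2.resize`. Here a nearest-neighbour resize stands in for that call so the example runs without OpenCV. Two further assumptions: pixels are scaled to the 0–1 range, which must match whatever scaling was used during training, and the label order below is alphabetical, as a folder-per-class data loader would produce. Both should be checked against the model actually in use.

```python
# Assumed label order (alphabetical); verify against the trained model.
EMOTIONS = ["angry", "disgusted", "fearful", "happy", "neutral", "sad", "surprised"]


def resize_nearest(img, out_h=48, out_w=48):
    """Nearest-neighbour resize of a 2-D grayscale image given as a list of rows."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(face_crop):
    """Scale a face crop to 48x48 and map 0-255 pixel values to 0-1."""
    return [[px / 255.0 for px in row] for row in resize_nearest(face_crop)]


def top_emotion(probabilities):
    """Return the label whose output probability is highest."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return EMOTIONS[best]


# Example: a synthetic 96x96 crop and a made-up output vector.
crop = [[(r * 3 + c) % 256 for c in range(96)] for r in range(96)]
model_input = preprocess(crop)
print(len(model_input), len(model_input[0]))  # 48 48
print(top_emotion([0.05, 0.01, 0.04, 0.70, 0.10, 0.05, 0.05]))  # happy
```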

Building on this, we created a basic response program as a prototype so that it could be tested. It contains responses to two of the seven emotions: happy and sad. If the software detects that the user has been portraying a happy emotion for an extended period of time, it uses text-to-speech to say “How was your day?” and shows an uplifting image to reinforce the person's positive attitude. If it detects that the user has been portraying a sad emotion for an extended period of time, it uses text-to-speech to say “Let’s watch some videos!” and plays some funny YouTube clips to cheer the person up.
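One way to implement "for an extended period of time" is a rolling window over the per-frame predictions that fires when a single emotion dominates it. The sketch below is a hypothetical design, not the prototype's actual code: the class name, the window length of 30 frames and the 80% threshold are illustrative assumptions. The returned text would be handed to a text-to-speech engine alongside the image or video.

```python
from collections import Counter, deque


class SustainedEmotionTrigger:
    """Fires a response when one emotion dominates the most recent frames.

    window and threshold are hypothetical tuning values.
    """

    def __init__(self, responses, window=30, threshold=0.8):
        self.responses = responses          # emotion label -> response text
        self.recent = deque(maxlen=window)  # rolling buffer of recent labels
        self.threshold = threshold          # required share of the window

    def update(self, emotion):
        """Record one frame's label; return a response text or None."""
        self.recent.append(emotion)
        if len(self.recent) < self.recent.maxlen:
            return None  # not enough history yet
        label, count = Counter(self.recent).most_common(1)[0]
        if label in self.responses and count / len(self.recent) >= self.threshold:
            self.recent.clear()  # avoid firing again on the very next frame
            return self.responses[label]
        return None


trigger = SustainedEmotionTrigger(
    {"happy": "How was your day?", "sad": "Let's watch some videos!"},
    window=5,
    threshold=0.8,
)
for frame in ["sad", "neutral", "sad", "sad", "sad"]:
    reply = trigger.update(frame)
print(reply)  # Let's watch some videos!
```

Clearing the buffer after a response acts as a simple cooldown; a longer window trades responsiveness for fewer false triggers from brief expressions.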

== Methods ==

The testing was split into two parts: testing the emotion recognition and testing the response system. Due to unforeseen circumstances, we were not able to test the software with the elderly or even with a large number of people, so both tests were done with two siblings. Because COVID-19 limited the number of participants in both tests, the results should be seen only as preliminary, giving a basic idea of how well the software works.

First, we tested the emotion recognition by running the software in the background while the participant watched funny or scary videos intended to evoke a reaction. We recorded the footage and watched it back to check whether the emotion displayed by the software matched the participant's facial expression.
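When reviewing such footage, the comparison between the participant's visible expression and the label the software displayed can be summarised per emotion. The sketch below assumes each reviewed moment is noted down as an (observed expression, displayed label) pair. The function name and the example pairs are hypothetical and are not data from our test.

```python
from collections import defaultdict


def per_emotion_agreement(pairs):
    """Fraction of reviewed moments, per observed expression, where the
    software displayed the same label.

    pairs: iterable of (observed_expression, displayed_label) tuples.
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for observed, displayed in pairs:
        totals[observed] += 1
        hits[observed] += int(observed == displayed)
    return {emotion: hits[emotion] / totals[emotion] for emotion in totals}


# Hypothetical annotations, for illustration only.
reviewed = [
    ("happy", "happy"),
    ("happy", "happy"),
    ("neutral", "surprised"),
    ("neutral", "neutral"),
    ("sad", "fearful"),
]
print(per_emotion_agreement(reviewed))
```

Keeping the mismatched pairs as well (for example, neutral shown as surprised) would also reveal which emotions the model confuses with each other.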

In the second test, the software was installed on the participants' laptops so that it would trigger at an unexpected moment. With their consent, the software was set up so that if it detected one particular emotion much more often than the others, it would trigger a response to reinforce that emotion or to comfort the participant. The test focused on two emotions, happy and sad: one laptop had the response for happy installed, and the other the response for sad. The responses are the ones described in the Software section. After the test, both participants were asked for feedback.

== Results ==

Since the testing was so brief, we cannot draw conclusive results. However, it does show whether the software works as intended and points us in the right direction for improving it.

The first test showed whether the neural network works as intended in a real-life application. From this test alone, happy and surprised appear to be the two best-defined expressions: the displayed emotion matched the facial expression almost every time. This carried over into a problem with neutral facial expressions, as the software tended to display surprised instead of neutral. Sad and fearful also seemed to be displayed fairly accurately, although the software sometimes displayed sad and fearful interchangeably even when the participant was clearly showing one or the other. Lastly, the software struggled with disgust and anger, with these two emotions rarely coming up.

The second test provided feedback on the current response system that we can use to improve it in the future. For this test, we asked the participants to summarize their overall experience in a few sentences, which gives a rough idea of where the system works as intended and where it needs to change.

Participant 1 (Happy):

“Although it startled me at first, I was quite content that someone asked how my day was going and this gave me the motivation to keep a positive attitude for the rest of the day. It did overall uplift my spirit and I honestly thought it was helpful. I would personally use this software again.”

Participant 2 (Sad):

“To be honest, my day wasn’t going well at all. And when the program triggered and shoved a Youtube video in my face I really didn’t think it was going to make it any better. But it actually did end up helping me feel a bit better. It took my mind off of all the extraneous things I was worrying about and I just spent a good few minutes having a laugh. I could see this being pretty useful if it had varying responses, maybe some different videos!”

== Conclusion ==

In conclusion, the first test, although brief, shows that the software works quite well at detecting certain emotions in real-life scenarios. Some emotions were displayed too often and others not often enough, which is caused by the limited accuracy of the neural network. A future improvement would therefore be to work on the model, in particular by using a different training set and adding more layers.

Although the results are highly qualitative, as we lacked people to test the software on, they are promising. Both participants were very positive about the software, but with so few results it is hard to rule out bias. However, if we take the results at face value, we can conclude that the response system is fairly successful and could work well if implemented in a more subtle manner. For example, integrating it into a robot, so that the response is more seamless and the user knows what is going to happen next, would go a long way towards removing the startling element. Since the response felt quite random to the participants, a method needs to be developed to let the user know that a response is about to happen.

Overall, both tests helped to identify the strengths and weaknesses of the current state of the software. The testing provided anecdotal evidence rather than a proper scientific test; however, it does indicate what works well in the software and what can be improved. With some tweaks to the responses and further research into improving the accuracy of the neural network, it is quite possible that this technology could be in use within a few years.

= Emotional feedback =

== Communication with the Elderly: Verbal vs Non-Verbal ==

A central aspect of the Emotional Feedback System (EFS) is its ability to communicate effectively with the elderly. Ideally, the EFS should be human-like enough that having a conversation with it is almost indistinguishable from having a conversation with a human. Communication theory states that communication consists of around six basic elements: the source, the sender, the message, the channel, the receiver and the response.<ref>Shannon, C. E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal, 27(3), 379–423. https://doi.org/10.1002/j.1538-7305.1948.tb01338.x</ref> This shows that although communication appears simple, it is actually a very complex interaction, and breaking it down into simple steps for the EFS to follow is unlikely to succeed. Instead, we can observe existing human-human interaction and use it as a model for giving the EFS lifelike communication. Communication in our case can be split into two categories: verbal and non-verbal. Verbal communication conveys a message with language, whereas non-verbal communication conveys a message without the use of verbal language.<ref>Caris‐Verhallen, W. M. C. M., Kerkstra, A., & Bensing, J. M. (1997). The role of communications in nursing care for elderly people: a review of the literature. Journal of Advanced Nursing, 25(5), 915–933. https://doi.org/10.1046/j.1365-2648.1997.1997025915.x</ref>

A key source for deciding how the EFS should communicate is nurse-elderly interaction, as nurses tend to be highly competent at comforting an elderly patient and easing them into a conversation. Communication with older people has its own characteristics: elderly people might have sensory deficits, nurses and patients might have different goals, and the generation gap might hinder communication. A robot may be able to sidestep some of these difficulties and focus on the relational aspect. Nurses need to develop different, partly overlapping abilities: they must fulfil objectives in terms of physical care while at the same time establishing a good relationship with the patient. These two aspects call for two types of communication: instrumental communication, which is task-related behaviour, and affective communication, which is socio-emotional behaviour. Research based on Peplau's theory shows that, depending on the action a nurse performs, communication ranges from purely instrumental to fully emotional, with a mix of both in many situations. In our case, the EFS would focus on the emotional aspect of communication to try to build a relationship with the elderly.<ref>Hagerty, T. A., Samuels, W., Norcini-Pala, A., & Gigliotti, E. (2017). Peplau’s Theory of Interpersonal Relations. Nursing Science Quarterly, 30(2), 160–167. https://doi.org/10.1177/0894318417693286</ref>

Nurses tend to use affective behaviour in which they express support, concern and empathy.<ref>Caris-Verhallen, W. M. C. M., Kerkstra, A., van der Heijden, P. G. M., & Bensing, J. M. (1998). Nurse-elderly patient communication in home care and institutional care: an explorative study. International Journal of Nursing Studies, 35(1–2), 95–108. https://doi.org/10.1016/s0020-7489(97)00039-4</ref> Furthermore, socio-emotional communication such as jokes and personal conversation is often used to establish a close relationship. Although conversations involve both verbal and non-verbal communication, studies show that non-verbal behaviour is key to creating a solid relationship with a patient: eye gaze, head nodding and smiling are all important in communication with the elderly.<ref>Caris‐Verhallen, W. M. C. M., Kerkstra, A., & Bensing, J. M. (1999). Non‐verbal behaviour in nurse–elderly patient communication. Journal of Advanced Nursing, 29(4), 808–818. https://doi.org/10.1046/j.1365-2648.1999.00965.x</ref> Another important aspect of communication is touch, which serves both as a tool to communicate and as a way to show affection. One study found that touch significantly increased non-verbal responses from patients, suggesting that it helps trigger a communicative response.<ref>Langland, R. M., & Panicucci, C. L. (1982). Effects of Touch on Communication with Elderly Confused Clients. Journal of Gerontological Nursing, 8(3), 152–155. https://doi.org/10.3928/0098-9134-19820301-09</ref> Overall, for successful communication with the elderly, the EFS will have to use a mix of verbal and non-verbal communication, with a particular focus on specific non-verbal behaviours such as head nodding and smiling.

== Emotion selection ==

This study focuses on facial expressions as a reaction to someone else’s emotion. According to Ekman, there are six basic emotions: anger, disgust, fear, happiness, sadness and surprise.<ref>Ekman, P. (1992). Are there basic emotions? Psychological Review, 99(3), 550–553. https://doi.org/10.1037/0033-295x.99.3.550</ref> Based on these six basic emotions, we chose to research four distinct emotions: happiness, anger, sadness and neutrality. The choice was based on how prominent each emotion is and how important it is to react to it. We added neutrality because we needed a baseline for when none of the other emotions is present. We left out disgust and surprise because, in our opinion, people often experience these emotions only briefly, they are less prominent in everyday life than happiness, anger and sadness, and they are less important to react to. We left out fear because, although it is important to notice and act upon, it is so uncommon in everyday life that it is still less important than happiness, anger and sadness.
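Since the recognition software outputs seven labels while the EFS reacts to only four emotions, a mapping between the two is needed. A minimal sketch follows, assuming the label spellings used by the software described in the Software section. The names are hypothetical, and treating the dropped labels as "no reaction" is one possible design choice among several.

```python
# Labels produced by the seven-class facial expression model.
MODEL_LABELS = {"angry", "disgusted", "fearful", "happy", "neutral", "sad", "surprised"}

# The four emotions the EFS reacts to, keyed by model label.
# Disgusted, fearful and surprised are deliberately left out.
REACTED_TO = {
    "happy": "happiness",
    "angry": "anger",
    "sad": "sadness",
    "neutral": "neutrality",
}


def emotion_to_react_to(label):
    """Return the EFS emotion for a model label, or None if it is ignored."""
    if label not in MODEL_LABELS:
        raise ValueError(f"unknown label: {label!r}")
    return REACTED_TO.get(label)


print(emotion_to_react_to("sad"))        # sadness
print(emotion_to_react_to("surprised"))  # None
```

An alternative would be to map the ignored labels to neutrality, so that the baseline also covers brief expressions the system does not respond to.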

==Russell's circumplex model of affect==

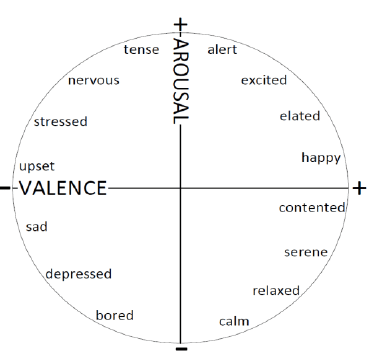

[[File:Russell.png|thumb|600px|alt=Alt text|Figure 8: Russell's circumplex model of affect. ]]

To produce a fitting reaction, the system needs a way to set a goal for each situation. Russell’s circumplex model of affect can be used for this.

Russell’s model<ref>Russell, J. A. (1980). A circumplex model of affect. Retrieved April 3, 2020, from https://psycnet.apa.org/record/1981-25062-001</ref> suggests that emotions can be mapped onto an approximate circle spanned by two axes: valence and arousal. Positive valence means being at ease and feeling pleasant, while negative valence means feeling unpleasant. Positive arousal means being very active, whereas negative arousal means being inactive.

Within this project, the model serves to elevate the emotional state of the client, which means the client's valence should increase. Negative arousal is not a big problem, as long as valence is positive. The set goal would be positive valence combined with positive arousal; as a fallback, the system could try only to increase the client's valence.
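The goal logic above can be expressed on the circumplex with a short sketch. The (valence, arousal) coordinates below are hypothetical placements on a -1 to 1 scale, chosen only to put each emotion in its usual quadrant; they are not measured values. The angle is measured counter-clockwise from the positive valence axis. Neutrality sits at the origin, so its angle carries no meaning.

```python
import math

# Hypothetical (valence, arousal) placements on a -1..1 scale.
CIRCUMPLEX = {
    "happiness": (0.8, 0.5),
    "anger": (-0.6, 0.8),
    "sadness": (-0.7, -0.5),
    "neutrality": (0.0, 0.0),  # origin: the baseline, no meaningful angle
}


def angle_degrees(emotion):
    """Angle on the circumplex in degrees, counter-clockwise from positive valence."""
    valence, arousal = CIRCUMPLEX[emotion]
    return math.degrees(math.atan2(arousal, valence)) % 360


def goal_for(emotion):
    """Goal following the text: positive valence is enough to maintain;
    otherwise aim for positive valence and arousal, falling back to valence."""
    valence, _arousal = CIRCUMPLEX[emotion]
    if valence > 0:
        return "maintain"  # low arousal is acceptable while valence is positive
    return "raise valence and arousal (fallback: raise valence)"


for name in ("happiness", "anger", "sadness"):
    print(name, round(angle_degrees(name)), goal_for(name))
```

Working with coordinates rather than labels would also let the system judge progress, for example whether a reaction moved the client's estimated valence upwards.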

This model was chosen as a guideline for this project because it maps distinct emotions to two different values depicted on the axes. This seemed like a good method, as this system would have a way of knowing what the goal should be for a situation. This system, however, should only be a guideline to how the overall reactions should be to certain situations, as the video analysis and the survey results would be the main influences on the system's feedback. The main decision on this is because the goal of the project is to make the interaction with a robot that uses our EFS as human as possible, not to make it the most optimal way to comfort a human, regarding how a robot following the guidelines should react. | |||

== Reaction analysis == | |||

Investigating the four emotions, happiness, anger, sadness, and neutrality is done in two ways. An analysis of video fragments, literature, and a questionnaire. The separate researches are combined because the video analysis gathers qualitative data, but is very time-consuming, meaning that little data is collected. The questionnaire can gather a lot of data, while less in quality. These results can then be combined with literature to form a conclusion of relative certain truth. | |||

The video analysis excludes fragments from movies, series, and other acted-out clips, because the reactions must be genuine and as true to real life as possible to be useful for this study. The analysis involved finding situations in which a certain emotion was displayed in a conversation and observing how others reacted to it. Such situations were most easily found in talk shows, as these cover a wide variety of topics, contain spontaneous, real-life conversations, and often switch between camera angles (which ensures that reactions are caught on film). The reactions found are listed in the Results section, each with a short description of the circumstances in which it took place. They were captured and analyzed to find trends, and these findings were compared with findings from literature research to explain trends and possible outliers.

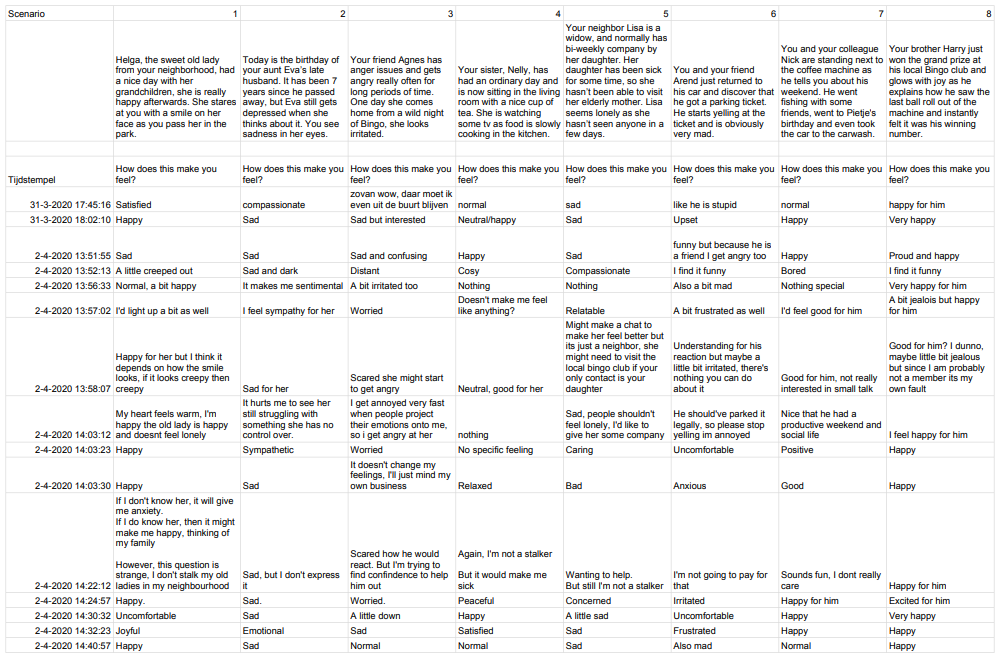

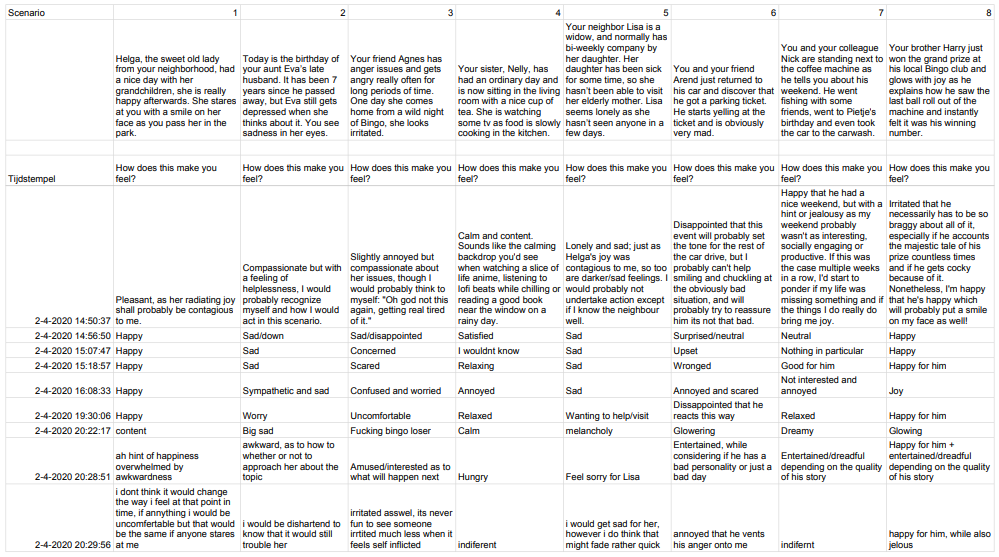

In the questionnaire (see Appendix B), participants were asked how they would feel in certain scenarios. Each scenario represents one of the basic emotions described above: happiness, anger, sadness, and neutrality. The questionnaire consists of eight scenarios, two for each emotion, to maximize the amount of useful data that can be gathered.

Each scenario is only two or three sentences long, to keep the scenarios general and to prevent participants from losing attention. Reading long backstories increases the time participants have to spend on the questionnaire, which can lower the quality of the data or cause participants not to complete the questionnaire at all <ref> Galesic, M., & Bosnjak, M. (2009). Effects of Questionnaire Length on Participation and Indicators of Response Quality in a Web Survey. Public Opinion Quarterly, 73(2), 349–360. https://doi.org/10.1093/poq/nfp031 </ref>. This is also why we chose two scenarios per emotion; three or more would make the questionnaire too long.

Multiple-choice questions were considered but rejected, as open questions give participants more freedom to express themselves. The answers were then grouped manually based on similarity. Asking participants about their facial expression in hypothetical scenarios was considered too difficult a question to answer. Therefore, we settled on the question “How does this make you feel?”, as its answers can still be compared with the results of the video analysis.

= Results =

== Video analysis ==

=== Happiness ===

Reactions to an excited woman who had the opportunity to talk with some of her idols (figures 8 and 9)<ref> The Graham Norton Show. (2020, March 25). The Best Feel Good Moments On The Graham Norton Show | Part One [Video file]. Retrieved from https://www.youtube.com/watch?v=o42-l8P-0dM </ref>.

[[File: Graham Northon Happy 1.png|bottom-text|100px|Figure 8: Reaction to happiness 1.1.<ref> The Graham Norton Show. (2020, March 25). The Best Feel Good Moments On The Graham Norton Show | Part One [Video file]. Retrieved from https://www.youtube.com/watch?v=o42-l8P-0dM </ref>]] [[File: Graham Northon Happy 2.png|bottom-text|100px|Figure 9: Reaction to happiness 1.2.]]

A man's reaction after being praised for his efforts in obtaining residence permits for children (figure 10)<ref> DWDD. (2020, March 25). Blij in bange dagen: de BOOS AntiCoronaDepressiePodcast [Video file]. Retrieved from https://www.youtube.com/watch?v=02d2oaN_sVc </ref>.

[[File: DWDD Happy 1.png|bottom-text|100px|Figure 10: Reaction to happiness 2.]]

After the person on the left told a joke (figure 11), the host burst out laughing. This made the person on the left laugh as well (figure 12) <ref> DrSalvadoctopus. (2016, November 19). The Best Interview In The History Of Television [Robin Williams] [Video file]. Retrieved from https://youtu.be/lvPxRyIWWX8 </ref>.

[[File: Robin Happy 1.png|bottom-text|200px|Figure 11: Reaction to happiness 3.1]] [[File: Robin Happy 2.png|bottom-text|200px|Figure 12: Reaction to happiness 3.2]]

The host mentioned that he knew how some of the tricks of the illusionist he was talking with worked (clearly proud of this fact), after which the illusionist laughed (figure 13) <ref> DWDD. (2019, November 22). Victor Mids legt Matthijs eindelijk illusie uit! [Video file]. Retrieved from https://www.youtube.com/watch?v=FGpMw3prEMk </ref>.

[[File: DWDD Happy 2.png|bottom-text|100px|Figure 13: Reaction to happiness 4]]

People walked the streets of New York smiling at strangers, and many of the strangers smiled back in reaction (figure 14) <ref> Iris. (2015, December 17). The Dolan Twins Try to Make 101 People Smile | Iris [Video file]. Retrieved from https://www.youtube.com/watch?v=zBJAzedrEic </ref>.

[[File: People Happy 1.png|bottom-text|400px|Figure 14: Reaction to happiness 5]]

=== Sadness ===

The guest talks about his daughter’s illness (figure 15) <ref> The Graham Norton Show. (2015, November 27). Johnny Depp Gets Emotional Talking About His Daughter's Illness - The Graham Norton Show [Video file]. Retrieved from https://www.youtube.com/watch?v=Rh0kQpmz0ww </ref>.

[[File: Graham Northon Sad.png|100px|Figure 15: Reaction to sadness 1 ]]

The person first reacts by mirroring the other’s sadness, but later tries to cheer the other up (figure 16) <ref> Videojug. (2011, November 21). How To Cheer Someone Up Who Is Feeling Low [Video file]. Retrieved from https://www.youtube.com/watch?v=FoHYwuYGufg </ref>.

[[File: Person Sad 1.png|300px|Figure 16: Reaction to sadness 2 ]]

A woman talks about the loss of her baby and how this affected her emotionally. Figure 17 shows two reactions to this conversation <ref> RTL Late Night met Twan Huys. (2018, October 4). Sanne Vogel emotioneel over verlies baby - RTL LATE NIGHT MET TWAN HUYS [Video file]. Retrieved from https://www.youtube.com/watch?v=pc16fHVl1BI </ref>.

[[File: RTLZ Sad.png|200px|Figure 17: Reaction to sadness 3 ]]

A father responds to his child's mental breakdown. He looks sad, worried, and empathetic (figure 18) <ref> Channel 4. (2019, August 18). 16-Year-Old Says Goodbye to Family Before Brain Surgery - Heart-Wrenching Moment | 24 Hours in A&E [Video file]. Retrieved from https://youtu.be/LyRRoP-PlUY </ref>.

[[File: Father Sad.png|100px|Figure 18: Reaction to sadness 4 ]]

=== Anger ===

A guest explains (slightly jokingly) how he was fired and then sent to another, much less enjoyable company as a sort of punishment. It was clear from his wording that he disliked this (figure 19) <ref> The Graham Norton Show. (2020, March 9). Lee Mack's Joke Leaves John Cleese In Near Tears | The Graham Norton Show [Video file]. Retrieved from https://www.youtube.com/watch?v=dmbpagijVkk </ref>.

[[File: Graham Northon Angry.png|300px|Figure 19: Reaction to anger 1 ]]

Reaction to someone who was angry about being called a slur (figure 20) <ref> RTL Late Night met Twan Huys. (2016, September 15). Rapper Boef: "Ik ben geen treitervlogger" - RTL LATE NIGHT [Video file]. Retrieved from https://www.youtube.com/watch?v=5lM-DDGA8G0 </ref>.

[[File: Person Angry 1.png|100px|Figure 20: Reaction to anger 2 ]]

A reaction to racist acts (figure 21) <ref> DWDD. (2020, February 11). Coronavirus leidt tot racisme in Nederland [Video file]. Retrieved from https://www.youtube.com/watch?v=Abdiasgxvp8 </ref>.

[[File: DWDD Angry.png|100px|Figure 21: Reaction to anger 3 ]]

One person got slightly frustrated, so the other person kept a neutral face and tried to calm him down (figure 22)<ref> Good Mythical Morning. (2017, November 6). Will It Omelette? Taste Test [Video file]. Retrieved from https://youtu.be/noESoLOqxgo </ref>.

[[File: GMM Angry.png|150px|Figure 22: Reaction to anger 4 ]]

=== Neutrality ===

General questions were asked about what a movie was about; figure 23 shows reactions to the answers <ref> Sd Tv. (2020, February 1). FULL Graham Norton Show 31/1/2020 Margot Robbie, Daniel Kaluuya, Jodie Turner-Smith, Jim Carrey [Video file]. Retrieved from https://www.youtube.com/watch?v=2Diorr1SlSw </ref>.


[[File: Graham Northon Neutral.png|250px|Figure 23: Reaction to neutrality 1]]

The host politely smiled at the person in the picture, who then smiled in response (figure 24) <ref> DrSalvadoctopus. (2016, November 19). The Best Interview In The History Of Television [Robin Williams] [Video file]. Retrieved from https://youtu.be/lvPxRyIWWX8 </ref>.

[[File: Robin Neutral.png|150px|Figure 24: Reaction to neutrality 2]]

The host talks to someone in a normal, conversational manner (figure 25) <ref> DWDD. (2019, November 22). Victor Mids legt Matthijs eindelijk illusie uit! [Video file]. Retrieved from https://www.youtube.com/watch?v=FGpMw3prEMk </ref>.

[[File: DWDD Neutral .png|100px|Figure 25: Reaction to neutrality 3]]

Reaction to a neutral comment during a conversation (figure 26) <ref> fun. (2015, October 6). SCHRIKKEN! - MILKSHAKEVLOG! #117 - FUN [Video file]. Retrieved from https://www.youtube.com/watch?v=P_uxEpQxloA </ref>.

[[File: Youtuber Neutral.png|100px|Figure 26: Reaction to neutrality 4]]

== Questionnaire ==

A total of 29 participants filled out the questionnaire.

The full answers can be found in Appendix B.

For each scenario, the answers were grouped based on similarity. Responses that did not fit with the others are categorized under “Others”. These results can be found in Appendix C.

= Conclusions =

== Happiness ==

The most common reaction to someone else expressing joy is to mimic this expression. In social interaction between humans, laughter occurs in a variety of contexts with diverse meanings and connotations <ref> Becker-Asano, C., & Ishiguro, H. (2009). Laughter in Social Robotics – no laughing matter. Retrieved from: https://www.researchgate.net/publication/228699540_Laughter_in_Social_Robotics-no_laughing_matter </ref>. Above all, laughter occurs most often in social situations <ref> Provine, R. R., & Fischer, K. R. (1989). Laughing, Smiling, and Talking: Relation to Sleeping and Social Context in Humans. Ethology, 83(4), 295–305. https://doi.org/10.1111/j.1439-0310.1989.tb00536.x </ref>. It has even been described as “a decidedly social signal, not an egocentric expression of emotion.” <ref> Provine, R. (1996). Laughter. American Scientist, 84(1), 38-45. Retrieved March 28, 2020, from www.jstor.org/stable/29775596 </ref> As might be expected, most people react to someone else’s joy with an obvious smile or laughter.

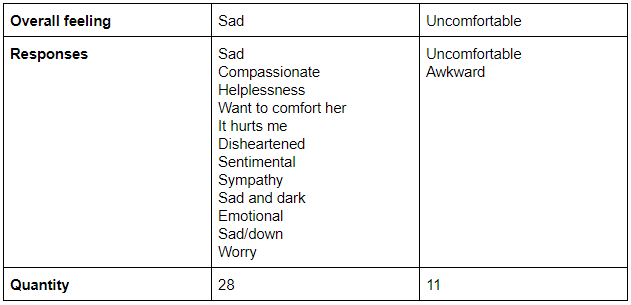

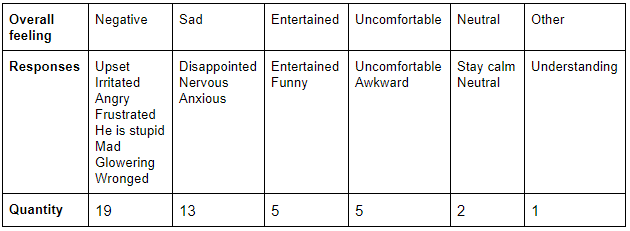

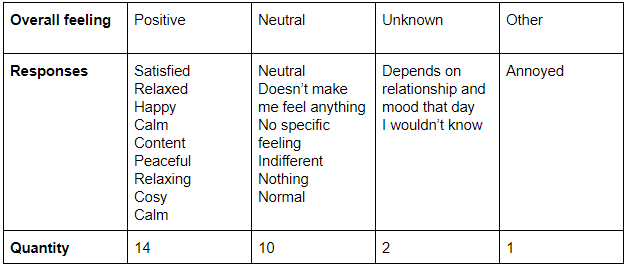

In the questionnaire, the two scenarios representing happiness, scenario 1 (Table 1) and scenario 8 (Table 2), show similar results. Almost all participants indicate a positive feeling in response to the scenario, although the responses to scenario 8 are more extravagant than those to scenario 1. This can be explained by the amount of happiness the person in the scenario shows: Helga in scenario 1 only shows a pleasant smile, whereas Harry in scenario 8 glows with joy and enthusiasm. Due to the phrasing of the first scenario, some participants also felt a bit creeped out. Some participants also mentioned feelings of jealousy for scenario 8, as they would have liked to win a prize as well. Overall, however, someone's happiness is returned with a roughly equal amount of happiness.

== Sadness ==

Research <ref> Burleson, B. R. (1983). Social cognition, empathic motivation, and adults’ comforting strategies. Human Communication Research, 10(2), 295–304. https://doi.org/10.1111/j.1468-2958.1983.tb00019.x </ref> shows that a person’s competence to comfort a distressed person in a sensitive way depends on both their social-cognitive abilities and their motivation to comfort the other. Being competent does not always imply that a highly sensitive comforting strategy will be produced.