PRE2019 3 Group2: Difference between revisions


<font size = '6'>Research on Idle Movements for Robots</font>

== Group Members ==

|}

== '''Abstract''' ==

It is likely that robots will become part of society in the future. This study focuses on improving human-robot interaction by researching idle movements. First, the idle movements of humans were determined through a literature search and an experiment, in three categories: ‘casual conversation’, ‘waiting / in rest’ and ‘serious conversation’. Then, the idle movements found for the different categories were tested using a NAO robot. The participants filled in the Godspeed questionnaire before and after a conversation with a NAO robot that was performing idle movements. Furthermore, a conversation with the NAO robot, in which it performed different kinds of idle movements, was put online. The participants of this online test filled in the Godspeed questionnaire before and after watching the conversation.

Results showed that the different types of conversations had different idle movements, resulting in different combinations for the conversation experiments. The two experiments with the NAO robot show two completely different outcomes. In the experiment in which the participants had a conversation with the NAO robot, the combination of a ''casual conversation with serious movements'' and the combination of a ''serious conversation with casual movements'' scored best in most of the categories of the Godspeed questionnaire. The online experiment showed the expected result, with ''casual conversation with casual movements'' peaking together with ''serious conversation with serious movements''. Overall, the use of movements positively stimulates the participants (and, thus, speakers). The best set of movements seems to depend on whether the speaker is directly in front of the robot or sees the robot on a screen. When people see the robot in real life, the best combinations are the casual conversation with serious movements and the serious conversation with casual movements. When people see the robot on a screen, the best combinations are the casual conversation with casual movements and the serious conversation with serious movements.

== '''Introduction''' ==

=== '''Problem statement''' ===

The future will contain robots that interact with humans in a very socially intelligent way. [[File:Nao_robot.png|right|thumb|300px|Figure 1: Nao Robot]] Robots will then demonstrate humanlike social intelligence, and non-experts will no longer be able to distinguish robots from human agents. To accomplish this, robots need to develop a lot further: not only their social intelligence needs to increase, but their movement needs to improve as well. Nowadays, robots do not move the way humans do. For instance, when moving an arm to grab something, humans tend to overshoot a bit<ref>Yamauchi, M., Imanaka, K., Nakayama, M., & Nishizawa, S. (2004). Lateral difference and interhemispheric transfer on arm-positioning movement between right and left-handers. Perceptual and motor skills, 98(3_suppl), 1199-1209.</ref>. A robot specifies the target and moves towards the object in the shortest way possible. Humans try to take the path of least resistance. This means they also use their surroundings to reach their target, for instance by leaning on a table to counteract gravity. Furthermore, humans use their joints more than robots do.

This lack of movement is a big problem when it comes to idle movements. An idle movement is a movement made during rest or waiting (e.g. touching your face). For humans and every other living creature in the world, it is physically impossible to stand precisely still. Robots, however, stand completely lifeless when not in action, which creates a static (and, therefore, unnatural) environment during the interaction between the robot and the person. It is then unclear whether the robot is turned on and can respond to the human, and this can also create an uncomfortable scenario for the human<ref>Homes, S., Nugent, B. C., & Augusto, J. C. (2006). Human-robot user studies in eldercare: Lessons learned. Smart homes and beyond: Icost, 2006, 4th.</ref>. The latter paper suggests that social ability (which also includes movements) in a robotic interface contributes to people feeling comfortable talking to it. In addition, humans are always doing something or holding something while having a conversation.

To improve the interaction between humans and robots, the questions that have to be answered are: "Which idle movements are considered the most appropriate in human-human interaction?" and "Which idle movements are considered the most appropriate in human-robot interaction?". This research addresses these questions by observing human idle movements, testing robot idle movements, and investigating which movements participants prefer.

=== '''Objectives''' ===

It is still very hard to make a robot appear natural and humanlike, as robots tend to be static (whether they move or not). As a more natural human-robot interaction is wanted, the behavior of social robots needs to be improved on different levels. In this project, the main focus is on the movements that make a robot appear more natural/lifelike during idling, which are called 'idle movements'. The objective of the research is to find out which set of idle movements makes a robot appear more natural. The research builds on previous research on this subject (explained in the chapter 'State of the art'). More information is gathered by observing people in videos; these videos differ per category, as explained later in the chapter 'Approach'. The videos lead to a conclusion on what the best idle movements are for certain conversations.

Next to this, experiments will be carried out with the NAO robot, which can be seen in figure 1. This robot has many human characteristics, such as human proportions and facial features. The only big difference is the height, as the NAO robot is just 57 centimeters tall. The NAO robot will perform different idle movements and future users will give their responses to these movements. The acquired data is meant to lead to a conclusion on what the best idle movements are and will hopefully make the robot appear more lifelike. Because humanoid robots will also improve in the future, expectations on the most important idle movements will be given as well. Altogether, it is hoped that the information gives greater insight into the use of idle movements on humanoid robots, to be used in future research and projects on this subject.

== '''USE''' ==

When looking at the stakeholders of social robots, many groups can be taken into account, because it is uncertain to what extent robots will be used in the future. Focusing on all possible users would be impossible for this project, and 'designing for everyone is designing for no-one'. Therefore, a selection has been made of users who will likely be the first to benefit from, or have to deal with, social robots. This selection is based on research collected from other papers<ref name=Torta>Torta, E. (2014). Approaching independent living with robots. https://pure.tue.nl/ws/portalfiles/portal/3924729/766648.pdf</ref>, in which these groups were highlighted and asked for their opinion on robot behavior.

Research papers on social robots mainly focus on future applications in elderly care, and therefore elderly people are the main subject. Another group of users who will reap the benefits of social robots are physically or mentally disabled people.

=== '''Users''' ===

The primary users are:

*'''elderly'''

It is possible that socially assistive robots will in the future be implemented in care homes to provide care and companionship to elderly people. The elderly are the primary users, as they will have the most contact with the robots. For people in need of care, it is essential that these social robots are as human-like as possible. This will help them to better accept the presence of a social robot in their private environment. One key element of making a robot appear lifelike is the presence of idle motions, the subject of this study.

The nurses and doctors who care for these people are secondary users of socially assistive robots. They can feel threatened by social robots, as the robots might take over their jobs, but they can also be relieved that the robot will assist them<ref>Rantanen, T., Lehto, P., Vuorinen, P., & Coco, K. (2018). The adoption of care robots in home care—A survey on the attitudes of Finnish home care personnel. Journal of clinical nursing, 27(9-10), 1846-1859.</ref>.

*'''physically disabled persons'''

Another field of application of socially active robots is helping physically disabled persons. Post-stroke rehabilitation is a possible application, since stroke is a major cause of severe disability in the growing aging society. 'Motivation is recognized as the most significant challenge in physical rehabilitation and training' <ref name=Tapus>Tapus, A. (2013). The Grand Challenges in Socially Assistive Robotics.https://hal.archives-ouvertes.fr/hal-00770113/document</ref>. The challenge is 'aiming toward establishing a common ground between the human user and the robot that permits a natural, nuanced, and engaging interaction', in which idle motions could play a big role.

*'''children'''

Humanoid robots are used in various contexts in child-robot interaction, for example in autism therapy. In order to be accepted, the robot should be perceived as socially intelligent. To improve its human-like abilities and social expression, idle movements should be implemented.

A feedback loop with the users has been carried out; it is addressed at the end of this research (chapter 'Feedback loop on the users').

=== '''Society''' ===

The main interest of society is the implementation of socially assistive robots in elderly care. The population of the world is getting older. It is estimated that in the year 2050, there will be three times more people over the age of 85 than there are today, while a shortage of space and nursing staff is already a problem today<ref name=Tapus>Tapus, A. (2013). The Grand Challenges in Socially Assistive Robotics.https://hal.archives-ouvertes.fr/hal-00770113/document</ref>. The implementation of socially assistive robots could help to overcome this problem, as these robots may assist caregivers during their day at work, reducing their stress and work pressure.

=== '''Enterprise''' ===

Companies and manufacturers of socially assistive robots will benefit from this research by implementing it in their products. They want to offer their customers the best social robot they are able to produce, which in turn has to be as human-like as possible. Thus, it is key to include idle movements in their design, as this would increase their sales, resulting in more profit over time. There are already some companies selling social robots, for example the PARO robot. PARO is an advanced interactive robot made by AIST, a Japanese industrial pioneer. It is described as a form of animal therapy for patients in elderly care, and results show that it reduces patient stress and caregiver stress and improves the socialization of the patients.

== '''Approach, Milestones and Deliverables''' ==

=== Approach ===

To gain knowledge about idle movements, humans have to be observed. However, the idle movements differ between categories (see experiment A), which requires research beforehand. This research is done via the state of the art. Papers such as those by R. Cuijpers and/or E. Torta and by Hyunsoo Song, Min Joong Kim, Sang-Hoon Jeong, Hyeon-Jeong Suk, and Dong-Soo Kwon contain a lot of information on the idle movements that are considered important. Therefore, it is important to read the state-of-the-art papers carefully. The state of the art is explained in the chapter ‘State of the art’, which is positioned at the bottom of this document due to its size. After this research, observations can be done. The ideal observation method depends on the category. Examples of such methods are observing people walking (or standing) around in public spaces, such as the university campus or the train. For this research, however, videos or live streams will be watched on (e.g.) YouTube. The observed idle movements are listed in a table and tallied. The most tallied idle movement is considered the best for that specific category. However, that does not mean it will work for a robot, as users might respond negatively to it. Experiment B will clarify this.

The best idle movement per category will be used in experiment B, which is done using the NAO robot. The experiment makes use of a large number of participants (who would optimally consist of the primary users, see chapter 'Users'). The NAO robot will start a conversation with the participant for a given amount of time; this is done twice. At first, the plan was to let the robot use different idle movements per conversation: ''the NAO robot will talk without using any idle movements and, then, using different idle movements for different types of conversations. The used idle movements will be based on the research as listed above and are in the same order for every participant.'' However, as explained in chapter 'Experiment B, Setting up experiment B', the setup has changed: the NAO robot has three different motion sets: casual, serious, and none. The NAO robot holds two conversations with each participant while using one of the motion sets (the combinations of conversations and motion sets are fixed).
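The pairing of conversation types and motion sets can be enumerated in a few lines. The following is a minimal sketch, assuming Python; the names `CONVERSATIONS`, `MOTION_SETS` and `all_combinations` are illustrative and do not come from the experiment software. It lists every possible pair; which pairs a participant actually saw was fixed by the experiment design and is not reproduced here.

```python
from itertools import product

# Labels taken from the text; the identifiers themselves are hypothetical.
CONVERSATIONS = ("casual", "serious")
MOTION_SETS = ("casual", "serious", "none")


def all_combinations():
    """Return every (conversation, motion set) pair that could be tested."""
    return list(product(CONVERSATIONS, MOTION_SETS))


if __name__ == "__main__":
    for conversation, motions in all_combinations():
        print(f"{conversation} conversation with {motions} movements")
```

Two conversation types and three motion sets give six possible pairs, which makes it easy to check that every pair of interest is covered by the fixed schedule.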

The participants will have to fill in a Godspeed questionnaire<ref name=godspeed>Bartneck, C., Kulić, D., Croft, E. and Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics 1(1), 71–81.</ref> before the conversation, after the first conversation, and after the last conversation. This makes it possible to see how people's thoughts change in relation to the robot's behavior. The Godspeed questionnaire covers several categories, such as animacy and anthropomorphism. The questions are answered on a scale from 1 to 5. Using the data of this experiment, a diagram can be made of the responses of the participants to the various idle movements. From this, the best idle movement can be decided for each category. The result may also be that a combination of various idle movements is the best.
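How questionnaire answers could be turned into a before/after comparison can be sketched as follows. This is only an illustration under stated assumptions: the answers are made up, the number of items per category is arbitrary, and the function names `category_means` and `score_change` are hypothetical rather than part of the Godspeed instrument or of the analysis used in this study.

```python
from statistics import mean

# Hypothetical answers of one participant on the 1-5 scale, grouped by
# Godspeed category. The numbers are made up for illustration only.
before = {"anthropomorphism": [2, 3, 2], "animacy": [3, 3, 2]}
after = {"anthropomorphism": [3, 4, 3], "animacy": [4, 3, 3]}


def category_means(answers):
    """Average the 1-5 item scores within each category."""
    for category, scores in answers.items():
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"score outside 1-5 in {category}")
    return {category: mean(scores) for category, scores in answers.items()}


def score_change(before_answers, after_answers):
    """Difference (after - before) of the category means."""
    b = category_means(before_answers)
    a = category_means(after_answers)
    return {category: a[category] - b[category] for category in b}


if __name__ == "__main__":
    for category, delta in score_change(before, after).items():
        print(f"{category}: {delta:+.2f}")
```

A positive difference means the category was rated higher after the conversation than before it.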

=== Milestones and Deliverables ===

The milestones and deliverables can be found in the planning.

== '''Experiment A''' ==

The first experiment is a human-only experiment. To better understand the meaning of robot idle movements, humans have to be observed. People are observed in multiple settings, as mentioned in the Approach, and show different movements in each. Below, it is stated which type of video has been used (e.g. YouTube videos or live streams). The videos differ because the idle movements most likely differ between categories. For instance, when people are having a conversation with a friend, a possible idle movement is shaking their leg and/or feet or biting their nails. During a job interview, however, people tend to be a lot more serious and try to focus on acting 'normal'. The different categories of idle movements, with multiple examples to implement on the robot, are listed below. These examples are based on the eleven motions suggested by this paper<ref name=japanpaper>Song, H., Min Joong, K., Jeong, S.-H., Hyen-Jeong, S., Dong-Soo K.: (2009). Design of Idle motions for service robot via video ethnography. In: Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2009), pp. 195–99 https://ieeexplore.ieee.org/abstract/document/5326062</ref>: eye stretching, mouth movement, coughing, touching the face, touching hair, looking around, neck movement, arm movement, hand movement, lean on something and body stretching. In this research, the results for speaking will not be used further. However, it is good to see that the motions differ per action (listening or speaking) and that the eleven motions can be applied to both.

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="3"

!style="text-align:left;"| Category

!style="text-align:left"| Examples of idle movements

|-

| Casual conversation || nodding, briefly look away, scratch head, putting arms in the side, scratch shoulder, change head position.

|-

| Waiting / in rest || lightly shake leg/feet up and down or to the side, put fingers against head and scratch, blink eyes (LED on/off), breathe.

|-

| Serious conversation || nodding, folding arms, hand gestures, lift eyebrows slightly, touch the face, touch/hold arm.

|-

|}

The eleven motions listed above are used for tallying the idle movements, so that a full comparison can be made between the categories. The type of video used depends on the category. For each category, it is explained which type of video has been used and why. Furthermore, the analysis of the videos has been done twice, as that was one of the main problems raised during the presentation.
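The tallying itself is simple to express in code. The sketch below is an assumption about how such a tally could be kept, not a record of how it was done in this study; the function name `tally` and the example notes are hypothetical, while the motion names are the eleven motions listed above.

```python
from collections import Counter

# The eleven motions named in the text.
MOTIONS = (
    "eye stretching", "mouth movement", "coughing", "touching the face",
    "touching hair", "looking around", "neck movement", "arm movement",
    "hand movement", "lean on something", "body stretching",
)


def tally(observations):
    """Count how often each of the eleven motions was observed."""
    unknown = set(observations) - set(MOTIONS)
    if unknown:
        raise ValueError(f"not one of the eleven motions: {sorted(unknown)}")
    counts = Counter(observations)
    # Include motions that were never seen, so categories stay comparable.
    return {motion: counts.get(motion, 0) for motion in MOTIONS}


if __name__ == "__main__":
    # Hypothetical notes from watching one video; not data from the study.
    notes = ["neck movement", "mouth movement", "neck movement", "arm movement"]
    counts = tally(notes)
    print(max(counts, key=counts.get))
```

Keeping a zero entry for motions that never occurred means the bar charts of different categories share the same eleven bars.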

=== Casual conversation ===

Casual conversation is talking about non-serious matters, which can occur between two strangers but also between two relatives. The topics of such conversations are usually not very serious, resulting in laughter or just calm listening. Just as in a serious conversation, attention should be paid to the speaker. During conversations, listeners show this attention, generally by making eye contact. Furthermore, lifelike behavior is important, as it results in a feeling of trust towards the listener. Therefore, it is important for a robot to be able to establish such trust via idle movements.

Eye contact for the NAO robot has already been researched and it is established that it is important<ref>Andrist, S., Tan, X. Z., Gleicher, M., & Mutlu, B. (2014, March). Conversational gaze aversion for humanlike robots. In 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 25-32). IEEE.</ref>. The use of idle movements to gain trust is also considered important. Examples of such idle movements are nodding, briefly looking away, scratching the head, putting the arms in the side, i.e. hands on the hips (and changing their position), scratching the shoulder and changing the head position. These idle movements correspond with what has been found in the idle movement research<ref name=japanpaper />. In casual conversations, nodding can confirm the presence of the listener and ensure that attention is being paid. Briefly looking away can mimic thinking, as people tend to look away and think while talking. Scratching the head might convey the same idea, as it is associated with thinking. Moreover, scratching the head might also give the speaker a feeling of confidence, because it suggests liveliness. Putting the arms in the side is generally a gesture of confidence and also of relaxation. Varying the number of arms in the side and the position of the legs gives the speaker the idea that the robot is relaxed, which gives the speaker a feeling of confidence. Scratching the shoulder is a general gesture that makes the robot look alive and confident. Changing the head position has the same purpose: it is a movement that generally occurs when thinking and when questioning what has been said. Both reasons give the speaker the idea that the robot is more lifelike. All of these examples of idle movements go back to the research already discussed, which resulted in the eleven motions (see the first paragraph of 'Experiment A').

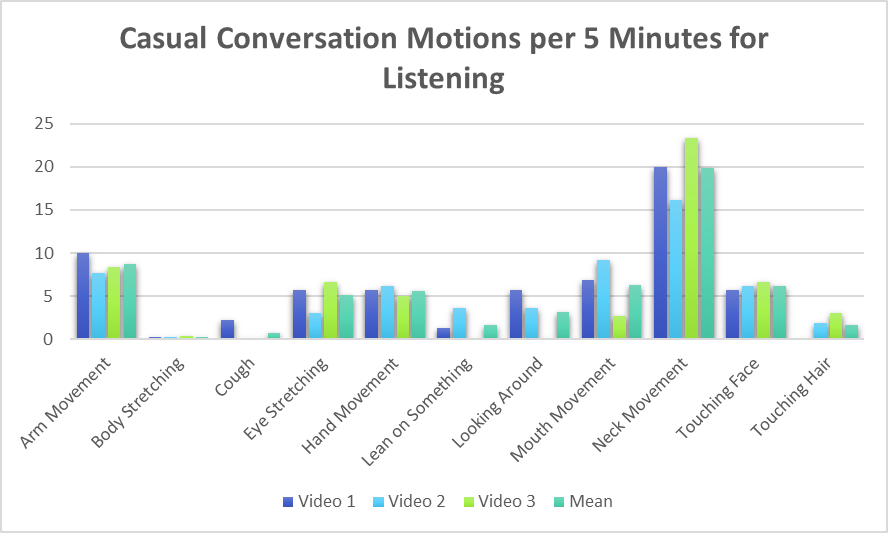

Once it was known which idle movements to look for, it was important to investigate ways to tally the motions. Since there are many different ways to casually talk to someone, three different videos were taken (all from YouTube). One of them is a podcast between four women <ref> Magical and Millennial Episode on Friends Like Us Podcast, Marina Franklin, https://www.youtube.com/watch?v=Vw9M4bVZCSk</ref>, another one is a conversation between a Dutch politician and a Dutch presenter <ref>Een gesprek tussen Johnny en Jesse, GroenLinks, https://www.youtube.com/watch?v=v6dk6eI1qFQ</ref> and the last one is from an American talk show<ref>Joaquin Phoenix and Jimmy Fallon Trade Places, The Tonight Show Starring Jimmy Fallon, https://www.youtube.com/watch?v=_plgHxLyCt4</ref>. The three videos were all watched in full and the number of occurrences of each motion was noted. The tallying was done for the listener and for the speaker, so that the differences between them can also be spotted. The data of both can be seen in the bar graphs in Figures 2 and 3.

[[File:image007.png|left|thumb|550px|Figure 2: Bar chart of the idle motions for listening during a casual conversation]][[File:image005.png|center|thumb|550px|Figure 3: Bar chart of the idle motions for speaking during a casual conversation]]

In figure 2 it can be seen that neck movements are the most frequent motion, followed by mouth movements, arm movements and touching the face (in that order). This can be explained by the fact that people tend to nod a lot as a way to show acceptance of and agreement with what has been said. However, head shaking is also counted as a neck movement. The mouth movement is people wetting their lips or just saying "yes", "okay" or "mhm", again with the underlying meaning being acceptance and agreement. Both motions are also a way of showing that attention is being paid. The arm movement can be explained by the fact that people scratch or just feel bored. Nonetheless, this movement shows lifelike characteristics. Touching the face follows the same principle as the arm movement, as it can happen due to boredom, an itch or something else. Apart from these four, no motions stood out.

In figure 3, the arm movement, eye stretching, hand movement, neck movement, and looking around stood out. The arm and hand movements can be explained by the fact that people tend to explain things via gestures, which are made with the arms and hands. Eye stretching is used to express emotion (e.g. being in shock or being surprised). The neck movement is a result of approval or disapproval of the things that are being told. Looking around is related to thinking: when people look away, they are thinking about what they remember of a scenario or about the best way to tell something. Apart from these, nothing really stood out.

To check whether the results are viable, a chi-squared analysis was performed for both situations using the statistics program SPSS. The analysis serves to assure that the outcomes are not accidental but can actually be verified. A significance above 0.05 is taken as confirmation of this, as it means that the test detects no significant deviation between the observed and the expected counts.

*The significance for the motions of the listener in a casual conversation is 0.218, which is higher than 0.05, so the number of movements per 5 minutes is no coincidence.

*The significance for the motions of the speaker in a casual conversation is 0.339, which is higher than 0.05, so the number of movements per 5 minutes is no coincidence.
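The kind of check described above can be illustrated with a small goodness-of-fit example. This is a sketch under explicit assumptions and not a reproduction of the SPSS analysis: the text does not state which counts were compared, so the example assumes the counts of a single motion in three videos are tested against equal expected counts. The counts and the function names `chi_squared_statistic` and `p_value_three_groups` are hypothetical.

```python
import math


def chi_squared_statistic(observed):
    """Goodness-of-fit statistic against equal expected counts."""
    expected = sum(observed) / len(observed)
    return sum((o - expected) ** 2 / expected for o in observed)


def p_value_three_groups(statistic):
    """p-value for 2 degrees of freedom (three groups).

    For 2 degrees of freedom the chi-squared survival function has the
    closed form exp(-x / 2), so no statistics library is needed.
    """
    return math.exp(-statistic / 2)


if __name__ == "__main__":
    # Hypothetical counts of one motion in three videos (not study data).
    counts = [12, 15, 9]
    statistic = chi_squared_statistic(counts)
    print(statistic, p_value_three_groups(statistic))
```

In this reading, a p-value above 0.05 means the three videos do not differ significantly in how often the motion occurs, which is what makes pooling their tallies into one bar chart defensible.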

=== Waiting / in rest ===

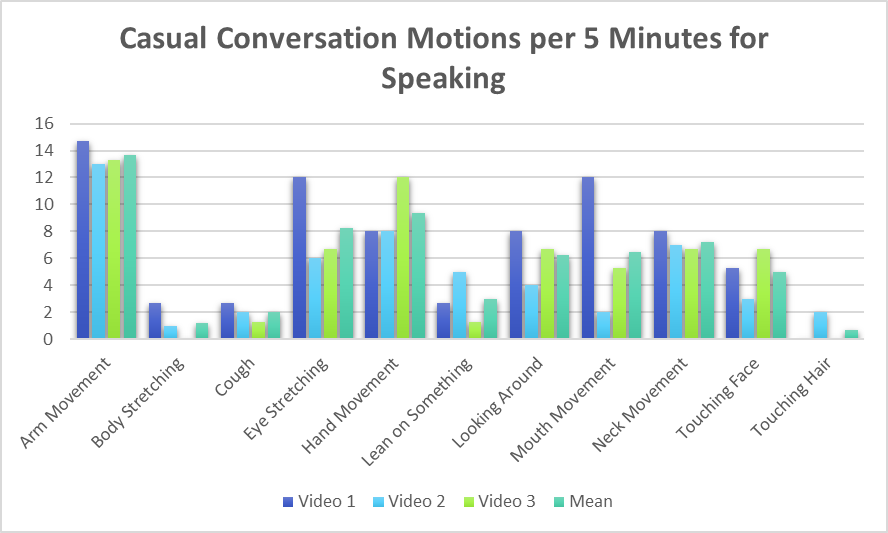

People often find themselves waiting or in a rest position. They normally think that they are really doing nothing in those situations. However, everybody makes some small movements when doing nothing. This is one of the things that makes a person or animal look alive. Many different things can be described as idle movements, but this research only looks into a number of them. When taking a close look at people who are doing nothing, one realizes that they make these movements more often than expected. In this part of the experiment, three different videos were studied in which people are doing nothing or are in rest. All occurrences of the movements listed above were tallied in the videos <ref> Doing nothing for 8 hours straight, Rens Cools, https://www.youtube.com/watch?v=sqD4f5MlPTc&t=3061s</ref> <ref>Sitting in a Chair for One Hour Doing Nothing, Robin Sealark, https://www.youtube.com/watch?v=jEjBz9FwUpE&t=1221sk </ref> <ref>Sitting in a Chair for One Hour Doing Nothing, Povilas Ramanauskas, https://www.youtube.com/watch?v=90lI-mU2o-I</ref>. All the results were then put together and added to a bar chart. In this way, it can be found out which idle movements are performed most when waiting or in rest. Figure 4 shows the bar chart of the results.

[[File:image003.png|center|thumb|550px|Figure 4: Bar chart for the idle motions during waiting/in rest]]

When looking at figure 4, it can be concluded that neck movements are made the most. This can be explained by the fact that people move their heads while looking at their mobile phones or nod to people walking by. The second most frequent idle movements are looking around and mouth movement. Looking around is normal during waiting, as people want to know what is happening around them, and this movement makes them seem more alive and involved in the environment. The mouth movement is mainly people licking their lips or smiling at people walking by. Lip-licking is a preparation for a conversation that might happen in the near future, and smiling shows other people that you are present. The other movement that stood out is the hand movement, which can be explained by the fact that people are nervous or anxious when alone.

The movements that are performed most often should probably be implemented in a robot when it is in rest/waiting, to give the robot a more human-like appearance.
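One way to let a robot favor the most frequently observed movements, without repeating a single movement endlessly, is to pick movements at random with a probability proportional to their tally. The sketch below only illustrates that idea; the tallies are made up, the name `pick_idle_movement` is hypothetical, and nothing here calls the NAO robot's own software.

```python
import random

# Hypothetical tallies for the waiting / in rest category (not study data).
TALLIES = {
    "neck movement": 30,
    "looking around": 20,
    "mouth movement": 20,
    "hand movement": 10,
}


def pick_idle_movement(tallies, rng=random):
    """Pick one movement with probability proportional to its tally."""
    movements = list(tallies)
    weights = [tallies[m] for m in movements]
    return rng.choices(movements, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    print([pick_idle_movement(TALLIES, rng) for _ in range(5)])
```

With these example weights, neck movements would be chosen about three times as often as hand movements, while a movement with a tally of zero would never be chosen.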

To check whether the results are viable, a chi-squared analysis was performed for this situation using the statistics program SPSS. The analysis serves to assure that the outcomes are not accidental but can actually be verified. A significance above 0.05 is taken as confirmation of this, as it means that the test detects no significant deviation between the observed and the expected counts.

*The significance for the motions of a person waiting or in rest is 0.166, which is higher than 0.05, so the number of movements per 5 minutes is no coincidence.

=== Serious conversation ===

The human idle movements in a serious or formal environment have been studied by observing conversations like job interviews <ref> Job Interview Good Example copy (2016), Katherine Johnson, https://www.youtube.com/watch?v=OVAMb6Kui6A</ref>, TED talks <ref>How motivation can fix public systems | Abhishek Gopalka (2020), TED, https://www.youtube.com/watch?v=IGJt7QmtUOk</ref> and other relatively formal interactions like a debate <ref> Climate Change Debate | Kriti Joshi | Opposition, OxfordUnion, https://www.youtube.com/watch?v=Lq0iua0r0KQ</ref> or a parent-teacher conference. Compared with other categories, such as the friendly conversation, a big difference can be seen. In a serious conversation, people try to come across as smart or serious, whereas in a friendly conversation, people do not think about their idle movements as much. The best example of this behavior is the tendency of humans to touch or scratch their face, nose, ear or any other part of the head. These idle movements are more common in friendly conversations, because in a formal interaction people try to avoid coming across as childish or impatient. The idle movements that are special to this category are moves like hand gestures that follow the rhythm of speech. When talking, another common idle movement is raising the eyebrows when trying to come across as convincing. When listening, however, a common idle movement is folding the hands, which comes across as smart or expresses an understanding of the subject. The last type of very common idle movements, such as touching the arm or blinking, is also used in friendly conversations. Previous research<ref name=japanpaper /> studied idle movements for any type of conversation; here, the same is done for these categories by counting all the idle motions in the videos mentioned, including the idle motions mentioned earlier, which stood out even without counting. Two bar charts have been made from the counts of all the idle motions in the videos mentioned at the top of this paragraph.
However, in two of the three videos, only one person is relevant for counting the idle motions. In the video of the job interview, only the interviewer is considered, because this corresponds better with the other videos and because it makes more sense for the robot to be the employer rather than the applicant. However, the employer is listening most of the time, while in the other two videos the main characters are speaking. So in a serious conversation, a distinction has to be made between speaking and listening. The two bar charts are shown below:

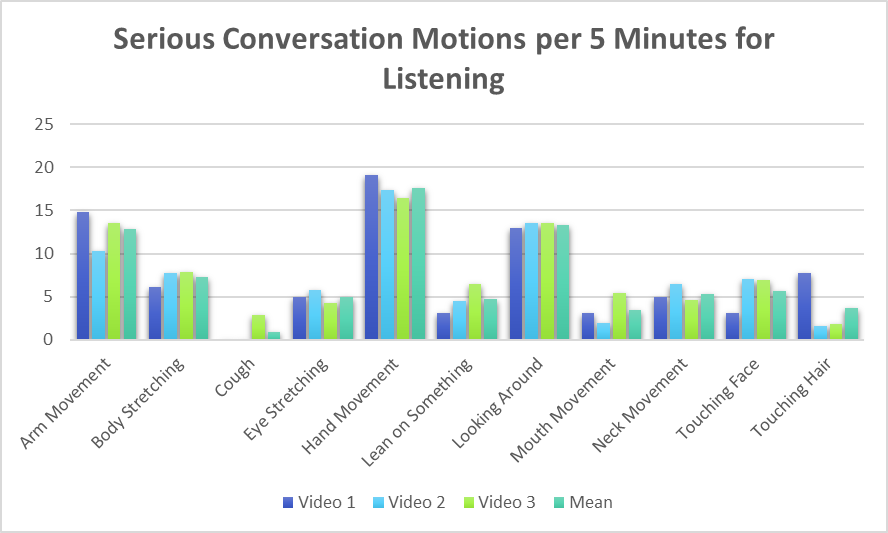

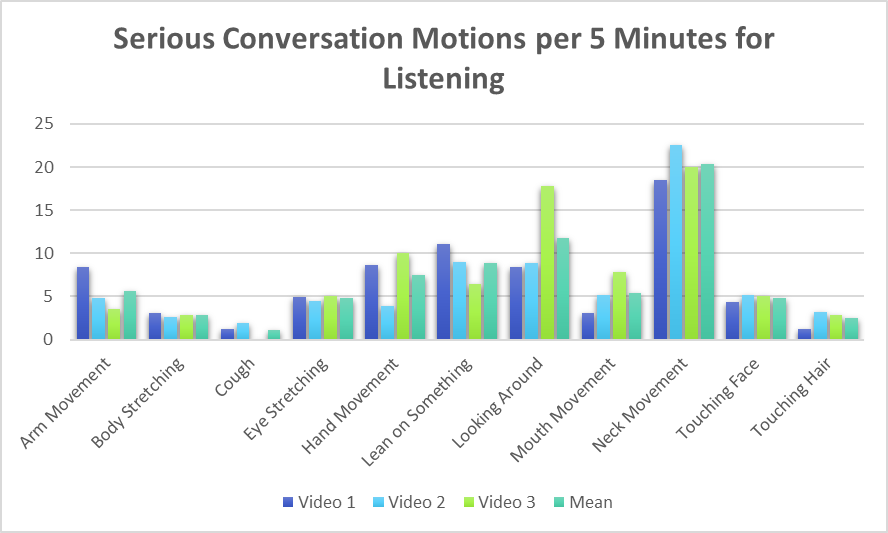

[[File:image009.png|left|thumb|550px|Figure 5: Bar chart of the idle motions for listening during a serious conversation]][[File:image100.png|center|thumb|550px|Figure 6: Bar chart of the idle motions for speaking during a serious conversation]]

Figure 5 shows that neck movements really stand out. This is caused by the fact that people tend to nod a lot during a serious conversation; nodding makes the person come across as understanding or smart. Furthermore, looking around and leaning on something stand out. Looking around can be a sign of confidence and calmness during a serious conversation, showing that the person is comfortable being there. Leaning on something mostly involves the table in the job interview and makes the listener seem present and fully concentrated on the conversation (which is really helpful in, for example, a job interview). Furthermore, hand movement is an important factor. As already mentioned, the hands are folded on the table to show presence as well.

Figure 6 shows a high frequency of hand movement. This is caused by the fact that people want to come across as smart or interesting, which is achieved by using gestures while talking. That also explains the peak for arm movements, as they are also important in gestures. Looking around is caused by people thinking. While thinking during a serious conversation, people look away to appear concentrated. Furthermore, looking at someone while thinking might make that person uncomfortable. Lastly, body stretching has a relatively high frequency. Body stretching generally gives the impression that someone is comfortable in a conversation; it also shows that the person is present (if not done excessively).

To check that the results are reliable, a chi-squared analysis was performed for both situations using the statistics program SPSS. The purpose of this analysis was to verify that the outcomes are not accidental. For this, the significance needs to be higher than 0.05.

*The significance for the motions of the listener in a serious conversation is 0.712, which is higher than 0.05, so the number of movements per 5 minutes is no coincidence.

*The significance for the motions of the speaker in a serious conversation is 0.577, which is higher than 0.05, so the number of movements per 5 minutes is no coincidence.
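The text does not state which variant of the chi-squared test was run in SPSS. As a minimal sketch, assuming a goodness-of-fit test of the observed movement counts against a uniform expected distribution, the statistic and its significance could be computed as follows. The counts are hypothetical placeholders and are not the data of this experiment; the function names are illustrative as well. Under this assumed setup, a significance above 0.05 means that the observed counts do not differ significantly from the expected ones.

```python
import math

def chi_square_statistic(observed, expected):
    """Pearson chi-squared statistic: sum of (O - E)^2 / E over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def chi_square_p_value(statistic, dof):
    """Upper-tail probability of the chi-squared distribution.

    Uses the closed form exp(-x/2) * sum_{i<dof/2} (x/2)^i / i!,
    which is only valid for a positive, even number of degrees of freedom.
    """
    if dof <= 0 or dof % 2 != 0:
        raise ValueError("closed form needs a positive, even number of degrees of freedom")
    half = statistic / 2.0
    term, total = 1.0, 0.0
    for i in range(dof // 2):
        total += term
        term *= half / (i + 1)
    return math.exp(-half) * total

# Hypothetical counts per 5 minutes for the eleven idle-movement categories
# (placeholders only, NOT the measured values of experiment A).
observed = [4, 6, 2, 5, 3, 9, 12, 7, 10, 5, 3]
# Assumption: every category is expected equally often.
expected = [sum(observed) / len(observed)] * len(observed)

statistic = chi_square_statistic(observed, expected)
p_value = chi_square_p_value(statistic, dof=len(observed) - 1)
print(f"chi2 = {statistic:.3f}, p = {p_value:.3f}")
```

With eleven categories there are ten degrees of freedom, which is why the closed form for an even number of degrees of freedom is sufficient here; for an odd number a statistics library would be needed.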

== '''Experiment B1''' == | |||

Experiment B1 is divided into different parts. These are: | |||

*The NAO robot | |||

*Determining (NAO compatible) idle movements from literature and Experiment A

*The participants | |||

*Setting up experiment B1 | |||

*The script | |||

*The questionnaire | |||

*The results | |||

*Limitations | |||

=== The NAO robot === | |||

The NAO robot (see figure 1) is an autonomous, humanoid robot developed by SoftBank Robotics. It is widely used in education, research, and healthcare; a good example is the research of Elena Torta. The paper by Torta<ref name=Torta />, from which the idea for this project originates, uses the NAO robot as well. In one of her studies, the NAO robot is used with the elderly, a group that is also part of our users.

The NAO robot is small compared to a human being. With a height of approximately 57 centimeters, it is about one-third the height of a human. The robot has 25 degrees of freedom, eleven of which are positioned in the lower part (legs and pelvis) and fourteen in the upper part (trunk, arms, and head)<ref>N.A. How many degrees of freedom does my robot has? SoftBank Robotics. Retrieved from: https://community.ald.softbankrobotics.com/en/resources/faq/using-robot/how-many-degrees-freedom-does-my-robot-has</ref>. This high number of degrees of freedom made it possible to make the robot look more lifelike, despite its small size. The robot is packed with sensors, for example cameras that can detect a face, position sensors, velocity sensors, acceleration sensors, motor torque sensors, and range sensors<ref> NAO - Technical overview. http://doc.aldebaran.com/2-1/family/robots/index_robots.html</ref>. It can speak multiple languages, including Dutch. As discussed later, our participants were Dutch. Therefore, the experiment is conducted in Dutch to get the best results, without a language barrier influencing the quality of the conversation.

=== Determining (NAO compatible) idle movements from literature and Experiment A === | |||

Unfortunately, the NAO robot is not able to perform every motion a human can, due to its limited number of links and joints, as already mentioned. The human idle movements found in experiment A and the literature are:

*Eye stretching | |||

*Mouth movement | |||

*Cough | |||

*Touching face | |||

*Touching hair | |||

*Looking around | |||

*Neck movement | |||

*Arm movement | |||

*Hand movement | |||

*Lean on something | |||

*Body stretching | |||

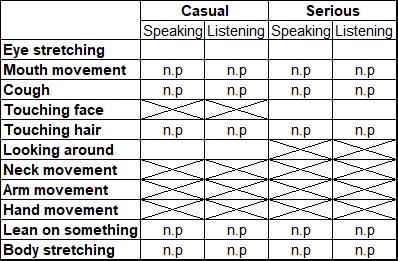

All human idle movements that are relevant for the research were made in Choregraphe 2.1.4<ref> Choregraphe software. https://community.ald.softbankrobotics.com/en/resources/software/language/en-gb</ref>, so that the robot could perform them during the experiment. The idle movements were chosen based on experiment A: a movement was selected when it had a high frequency in the corresponding graph. On top of that, some movements were impossible, as the robot is not able to hold on to objects or to lean on something. The following table lists the motions, their usage for a casual or serious conversation, and the action (listening or speaking). 'n.p' stands for 'not possible' and means that the robot was not able to perform the idle movement.

[[File:Graph_idle.jpg|center|400px|]] | |||

A lot of human idle movements could not be tested, either due to the limitations of the NAO robot or because they were not relevant enough for the conversation with the NAO robot. It is important to note that the casual and serious sets differ in one idle movement. This is because the seriousness of the conversation is different: during a serious conversation, people think by looking away. Furthermore, touching the face is a form of comforting for the speaker and shows anthropomorphism.

=== The participants === | |||

As explained in chapter 'Users', there are a lot of users that can be taken into account. Due to limitations, it was decided to take one group of participants for experiment B. A total of 18 participants, ranging from 18 to 22 years old, took part in the experiment. To make sure that no problems would occur, all participants filled in a consent form, giving their consent for their data to be used for this experiment and for this experiment only. As all of the participants were volunteers, no compensation was given to them. Furthermore, it was noted that all of their information could be deleted if they indicated (within 24 hours) that they did not want to be a part of the experiment anymore.

=== Setting up experiment B1 ===

The experiment will be done in the robotics lab at the TU/e in week 6 and will be performed as follows:

*1. The participant needs to fill in a Godspeed questionnaire.

*2. The NAO robot will start a conversation, this includes the robot asking open questions. The participant will have to give an elaborate answer to these questions.

*3. During the answer, an idle movement is performed by the NAO robot.

*4. After the answer is given, the NAO robot responds and will ask another question.

*5. During the answer, the same idle movement is performed by the NAO robot.

*6. After the conversation, the participant has to fill in another Godspeed questionnaire.

At first, our plan was to pick the three most performed idle movements per category and test them all separately on the different conversations. However, repeating the same movement over and over during a conversation seemed odd and unnatural; humans do not do this either, and with a robot it looks even weirder. To make the conversations more natural, the experiment was changed as follows. Pick the four most used idle movements per category (serious and casual) for speaking and listening. Some idle movements were not an option due to the overall limitations (see 'Determining (NAO compatible) Idle movements from literature and Experiment A'). A Choregraphe file was then made for the serious movements and one for the casual movements. A total of six movements per category (three speaking and three listening) were placed randomly in the Choregraphe file to simulate human behavior. Another file was made with no movements at all, so in total there are three movement files (serious movements, casual movements, and no movements).
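The random placement of the movements was done by hand in Choregraphe. Purely as an illustration of the idea, and not as the procedure that was actually used, the ordering of the three listening and three speaking movements of one category could be randomized like this. The function name and the movement names are hypothetical placeholders; the real movements are those in the table above.

```python
import random

def build_idle_schedule(listening, speaking, seed=None):
    """Return a randomized order of idle movements per action.

    listening, speaking: lists of movement names (placeholders here).
    seed: optional seed so that a schedule can be reproduced.
    """
    rng = random.Random(seed)
    schedule = {}
    for action, movements in (("listening", listening), ("speaking", speaking)):
        order = list(movements)  # copy, so the input lists stay untouched
        rng.shuffle(order)
        schedule[action] = order
    return schedule

# Placeholder names: three listening and three speaking movements for one category.
serious_schedule = build_idle_schedule(
    listening=["listen_move_1", "listen_move_2", "listen_move_3"],
    speaking=["speak_move_1", "speak_move_2", "speak_move_3"],
    seed=6,
)
print(serious_schedule)
```

Fixing the seed keeps the order identical for every participant who receives the same movement file, which matches the use of one fixed Choregraphe file per category.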

The goal of this research is to see if idle movements make a difference in a conversation and if different idle movements in different types of conversation make a difference. For this, two different conversations were made for a new setup. Each conversation lasts about two minutes, with around eight questions asked by the robot, during which one of the three idle movement programs runs. Before and after each conversation, the participant fills in a questionnaire. The order is always the same and, as every participant has two conversations, there are three different versions that a participant can receive:

*'''Casual''' conversation with '''casual''' movements and '''serious''' conversation with '''serious''' movements | |||

*'''Casual''' conversation with '''no''' movements and '''serious''' conversation with '''casual''' movements | |||

*'''Casual''' conversation with '''serious''' movements and '''serious''' conversation with '''no''' movements | |||

The participant will have two conversations with the NAO robot. The first is the casual conversation, in our case a chat about the weather and plans for the summer. The second is a serious conversation, in our case a job interview. The three idle movement files from Choregraphe are linked to the conversations, giving six different conversations in total (as can be seen above). The serious conversation has one variant with the serious idle movements, one with the casual movements, and one with no movements at all. The casual conversation has the same three options. To have as much data as possible for every conversation, a total of 18 participants were used.

The setup also changed as the setup listed above does not include the various movements and versions: | |||

*1. The participant needs to fill in a Godspeed questionnaire. | |||

*2. The NAO robot will start a casual conversation, in which the robot asks open questions. The participant will have to give an elaborate answer to these questions. While listening, the robot will perform a type of idle movement or no movement (depending on which version the participant has).

*3. After the conversation, the participant will have to fill in another Godspeed questionnaire. | |||

*4. The participant has to have another conversation with the NAO robot, but this time a serious conversation. The robot will perform a set of idle movements or no movement (depending on which version the participant has).

*5. After the conversation, another Godspeed questionnaire has to be filled in. | |||

With 18 participants, this means that every combination of conversation type and movement type has been done six times and that 54 questionnaires have been filled in.
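The numbers above follow directly from the three versions. A minimal sketch of the bookkeeping, assuming the 18 participants were spread evenly over the three versions in round-robin order (the actual assignment order is not stated here, and the function names are illustrative):

```python
from collections import Counter

# The three versions listed above, as (conversation type, movement type) pairs.
VERSIONS = [
    [("casual", "casual"), ("serious", "serious")],
    [("casual", "no"), ("serious", "casual")],
    [("casual", "serious"), ("serious", "no")],
]

def assign_versions(n_participants):
    """Assumed round-robin assignment of participants to the three versions."""
    return [VERSIONS[i % len(VERSIONS)] for i in range(n_participants)]

def count_combinations(assignments):
    """Count how often each (conversation, movement) combination is performed."""
    return Counter(combo for version in assignments for combo in version)

assignments = assign_versions(18)
combination_counts = count_combinations(assignments)

# One questionnaire before the experiment plus one after each conversation.
questionnaires = sum(1 + len(version) for version in assignments)

for combo, count in sorted(combination_counts.items()):
    print(combo, count)
print("questionnaires:", questionnaires)
```

Each participant fills in one questionnaire beforehand and one after each of the two conversations, so 18 participants give 18 × 3 = 54 questionnaires, and 36 conversations spread over six combinations give six per combination.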

=== The script (Dutch and English) === | |||

Down below, the two scripts for the casual and serious conversations can be seen. The experiments were performed in Dutch, but it has been translated for non-Dutch people. | |||

'''Casual conversation''' | |||

'''Robot''': | |||

Hoi goed om je weer te zien. Hoe gaat het met je? | |||

''Hi, good to see you again. How are you?'' | |||

'''u''': | |||

Het gaat lekker. | |||

''It is going well.'' | |||

'''Robot''': | |||

Dat is goed om te horen. Alleen het weer is wat minder vandaag helaas. | |||

''That's good to hear. Only the weather is bad today, unfortunately.'' | |||

'''u''': | |||

Ja klopt. ik heb zin in de zomer. | |||

''Yes. I'm looking forward to the summer.'' | |||

'''Robot''': | |||

Inderdaad. Ik kan niet wachten om aan het strand te liggen. en jij? | |||

''Indeed. I can't wait to lie on the beach. and you?'' | |||

'''u''': | |||

Ik ga liever barbecuen/terassen | |||

''I prefer to barbecue / go to a terrace'' | |||

'''Robot''': | |||

Dat lijkt me inderdaad ook erg leuk om te doen. | |||

''I think it would be fun to do.'' | |||

'''u''': | |||

Met vrienden samen iets doen is altijd gezellig. | |||

''Doing something with friends is always fun.'' | |||

'''Robot''': | |||

Jazeker, ik ga met mijn maatjes een rondreis maken tijdens de vakantie, heb jij nog plannen? | |||

''Yes, I'm going on a tour with my buddies during the holidays, do you have any plans?'' | |||

'''u''': | |||

Ik ga denk ik vaak zwemmen. | |||

''I think I will go swimming often.'' | |||

'''Robot''': | |||

Wat leuk. dan heb je iets om naar uit te kijken. | |||

''How nice. then you have something to look forward to.'' | |||

'''u''': | |||

Inderdaad heb er veel zin in. | |||

''Indeed I am looking forward to it.'' | |||

'''Robot''': | |||

Ik moet er weer vandoor. Leuk je weer gesproken te hebben. | |||

''I have to run again. Nice to have talked to you again.'' | |||

'''u''': | |||

Oke, tot de volgende keer! | |||

''Okay, until next time!'' | |||

'''Robot''': | |||

Doei! | |||

''Bye!'' | |||

'''Job interview''' | |||

'''Robot''': | |||

Hallo, welkom bij dit sollicitatiegesprek. Wat zijn uw beste vaardigheden? | |||

''Hello, welcome to this interview. What are your best skills?'' | |||

'''u''': | |||

Ik ben goed in samenwerken. | |||

''I am good at collaborating'' | |||

'''Robot''': | |||

Dat is goed om te horen. Welke opleiding heeft u gedaan? | |||

''That's good to hear. What study did you do?'' | |||

'''u''': | |||

(kies je eigen opleiding) | |||

''(choose your own education)'' | |||

'''Robot''': | |||

Dat komt goed uit. Zulke mensen kunnen we goed gebruiken in ons bedrijf. Bent u op zoek naar een volledige werkweek of een halve werkweek? | |||

''That is convenient. We can put such people to good use in our company. Are you looking for a full working week or a half working week?'' | |||

'''u''': | |||

(kies uit een volledige werkweek of een halve werkweek) | |||

''(choose from a full working week or a half working week)'' | |||

'''Robot''': | |||

Oke, daarvoor hebben we nog een plek beschikbaar. Binnenkort kunt u een mail verwachten met het contractvoorstel. | |||

''Okay, we still have a place available for that. You can expect an email with the contract proposal soon.'' | |||

'''u''': | |||

Daar kijk ik naar uit bedankt. | |||

''I am looking forward to that, thank you.''

'''Robot''': | |||

Tot ziens! | |||

''Bye!'' | |||

=== The questionnaire === | |||

To determine the user's perception of the NAO robot, the Godspeed questionnaire<ref name=godspeed/> is used. The Godspeed questionnaire uses a 5-point scale and five categories: Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety. As noted in the questionnaire as well, there is an overlap between Anthropomorphism and Animacy. The actual questionnaire can be found here: https://www.overleaf.com/7569336777bwmwdmhvmmbc. The different categories are used to represent the importance of idle movements. Ideally, all of the categories would score better with idle movements; this is discussed further in the chapter 'The results' below. Despite its size, the questionnaire has its flaws and limitations. These are discussed in the chapter 'Limitations'.

=== The results === | |||

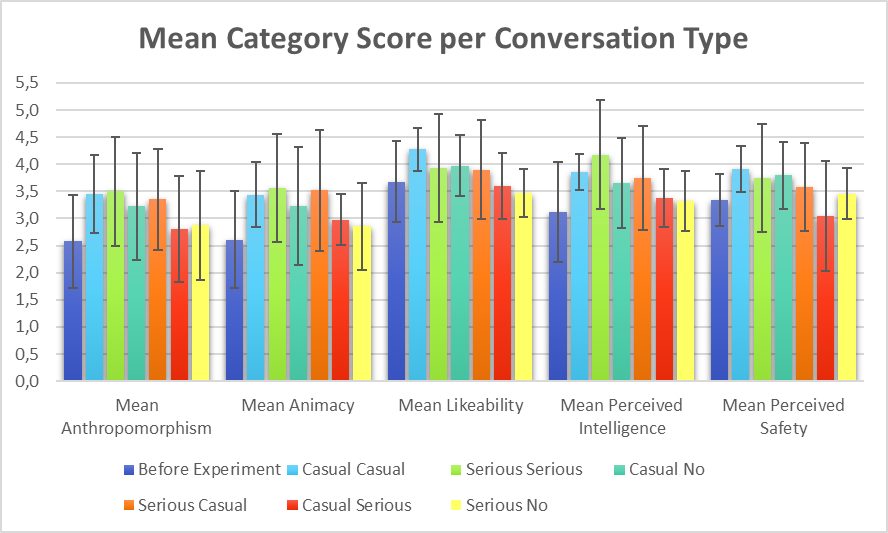

The data acquired in experiment B1 was analyzed using SPSS. Because we used the Godspeed questionnaire, the data was acquired as scores on a five-point Likert scale. The results were analyzed by taking the mean score of each of the five categories per conversation type. In total, the chart covers seven conditions: the measurement before the experiment and the six conversations explained in previous chapters. The following bar chart shows the mean score of every category per conversation type.

[[File:image77.png|center|thumb|550px|Figure 7: Bar chart for the mean scores of the godspeed questionnaire]] | |||

To give a small explanation of the graph: the y-axis shows the mean scores on the five-point Likert scale. The x-axis shows the five categories of the Godspeed questionnaire. Each category is subdivided into bars, each of which represents a conversation type.

*Before experiment: Before the experiment started we let the participants fill in the godspeed questionnaire to set a standard to compare the results to. | |||

*Casual Casual: The casual conversation script mentioned previously, supplemented with the casual idle movements. | |||

*Serious Serious: The serious conversation script mentioned previously, supplemented with the serious idle movements. | |||

*Casual No: The casual conversation script mentioned previously, using no movements at all. | |||

*Serious Casual: The serious conversation script mentioned previously, supplemented with the casual idle movements. | |||

*Casual Serious: The casual conversation script mentioned previously, supplemented with the serious idle movements. | |||

*Serious No: The serious conversation script mentioned previously, using no movements at all. | |||
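The analysis itself was done in SPSS. As a rough sketch of the same computation, the mean score per category and per conversation type could be obtained as follows. The response tuples are made-up placeholders, not the collected data, and the function name is an assumption for illustration.

```python
from collections import defaultdict
from statistics import mean

def mean_scores(responses):
    """Mean Likert score per (conversation type, category).

    responses: iterable of (conversation_type, category, score) tuples,
    where score is a value on the five-point scale (1 to 5).
    """
    grouped = defaultdict(list)
    for conversation_type, category, score in responses:
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} is outside the five-point scale")
        grouped[(conversation_type, category)].append(score)
    return {key: mean(values) for key, values in grouped.items()}

# Hypothetical example responses (placeholders only).
responses = [
    ("Before experiment", "Animacy", 3),
    ("Before experiment", "Animacy", 2),
    ("Serious Casual", "Animacy", 4),
    ("Serious Casual", "Animacy", 3),
    ("Serious No", "Animacy", 2),
    ("Serious No", "Animacy", 2),
]

for (conversation_type, category), score in sorted(mean_scores(responses).items()):
    print(f"{category:10s} {conversation_type:18s} {score:.2f}")
```

Each resulting value corresponds to the height of one bar in the chart: one bar per conversation type within each of the five categories.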

===='''Chart analysis'''==== | |||

'''Anthropomorphism''' | |||

Contrary to expectation, the ''serious conversation with casual movements'' and the ''casual conversation with serious movements'' peak, with the serious conversation being the highest. They are followed by the ''serious conversation with serious movements'' and the ''casual conversation with casual movements'' (not taking the no-movement combinations into account). The only big difference is that the ''casual conversation with casual movements'' has a lower mean than the mean before the experiment. This would suggest that the movements for the casual and serious conversation should be swapped to obtain a higher mean value for anthropomorphism. What is remarkable about this result is that the no-movement combinations score lower than every other bar except the ''casual conversation with casual movements''. This is in complete contradiction with the expectation, as that combination was expected to be one of the highest. However, even though the mean values differ between the combinations, the differences are not significant.

'''Animacy''' | |||

The bar chart for animacy has one big peak compared to the mean ''before experiment'', which is the ''serious conversation with casual movements'' with an increase of about 0.5 points on the 1-5 scale. It is followed by the ''serious conversation with serious movements'' and the ''casual conversation with casual movements''. All three increased compared to the mean ''before experiment'' (although the increase is small for ''serious serious'' and ''casual casual''). The opposite happened for the ''casual conversation with serious movements'', the ''casual conversation with no-movements'' and the ''serious conversation with no-movements'', which decreased compared to the measurement before the experiment. The only really big increase and decrease are those of the ''serious conversation with casual movements'' and the ''serious conversation with no-movements'' respectively. The other four combinations do not change significantly.

'''Likeability''' | |||

Here, only one combination increased relative to the beginning mean value: the bar of the ''serious conversation with serious movements'' is higher than the beginning mean value (by just a tiny bit). The other five bars score lower than the premeasured mean value. The ''casual conversation with casual movements'' scores second best, followed by the ''casual conversation with serious movements'', the ''casual conversation with no movements'' and the ''serious conversation with casual movements''. Again, the ''serious conversation with no movements'' scores the lowest. This corresponds with the expectation, as the two 'right' combinations are the top two bars. However, as before, the increases and decreases are so small that it is uncertain whether they can be trusted and whether a definite conclusion can be drawn from them.

'''Perceived Intelligence''' | |||

The two bars that peak above the premeasured mean both belong to the ''serious conversation'', with the casual movements peaking higher than the serious movements. The others decreased relative to the premeasured value. The ''casual conversation with casual movements'' is just a little lower, followed by the ''casual conversation with serious movements''. The two combinations with no movements are again the lowest. Except for the ''serious conversation with casual movements'' and the ''no-movements conversations'', the increases and decreases are relatively small, so no direct conclusion can be drawn from them. On top of that, the result also contradicts the expectation.

'''Perceived Safety''' | |||

The only increase relative to the premeasured mean is for the ''serious conversation with serious movements''. The ''casual conversation with casual movements'' shows a small decrease in the mean. The ''serious conversation with casual movements'' comes next after the latter, followed by three almost equal bars: the two no-movements combinations and the ''casual conversation with serious movements''. This result corresponds with the expectation and the difference is rather big. This result can, thus, be used to draw conclusions.

===Conclusion === | |||

Overall, it can be said that the conversations without movements give worse results in all five categories than the conversations with movements. This is most likely the result of the robot looking very static and fake when it does not move during a conversation. This can be seen from the fact that each of these bars is lower than the mean before the experiment. Especially the ''serious conversation without movements'' scores low compared to the others. It can be concluded that serious conversations are hugely impacted by the use of movements, more so than casual conversations. As discussed later in this paragraph, the serious conversations score the best overall in these bar charts.

Of all the conversations with movements, one combination peaks in three of the five graphs. This is the ''serious conversation with casual movements'' (orange bar), which peaks in anthropomorphism, animacy and perceived intelligence. On top of that, the combination that peaks in each bar chart is always a serious conversation (with either serious or casual movements). This suggests that serious conversations are in general perceived as better than casual conversations, which raises the question whether robots are perhaps best suited for serious conversations only. In addition, the runner-up is usually the same type of conversation but with the other movement type. For example, if the best result is given by the ''serious conversation with casual movements'', the second-best is the ''serious conversation with serious movements'' (see animacy and perceived intelligence). The opposite combination occurs for likeability and perceived safety. The runner-up for anthropomorphism is the ''casual conversation with serious movements'', but after that comes the ''serious conversation with serious movements'', suggesting almost the same.

However, as the differences between the bars are not large, this cannot be said for certain. What can be said, as a result of this experiment, is that no movements during listening are a no-go, that the robot should always perform a movement during listening, and that serious conversations seem to be the better type of conversation for the NAO robot to perform.

=== Limitations === | |||

''' The participants ''' | |||

In the ideal case, more participants would be used, so that the user groups would be better represented. The data could then show differences between the user groups. Unfortunately, with the resources, limitations, and duration of the project, this is not possible. On top of that, since the robot does not know what it is saying, the participants might still find the robot static.

''' The Godspeed questionnaire ''' | |||

The interpretation of the results of the Godspeed questionnaire<ref name=godspeed/> has limitations, as mentioned above. These are:

*It is extremely difficult to determine the ground truth. As all scores are relative, it is hard to find out what people really think.

*Many factors influence the measurements (e.g. cultural background and prior experience with robots), which can create outliers. If there are too many outliers, the data becomes unusable.

Because of these limitations, the results of the measurements cannot be used as absolute values, but only to see which option of idling is better.

== '''Experiment B2''' == | |||

Experiment B2 is divided into different parts. These are:

*Setting up experiment B2 | |||

*The participants | |||

*The questionnaire | |||

*Results | |||

*Chart analysis | |||

*Limitations | |||

=== Setting up experiment B2 === | |||

During experiment B1, conversations between a team member and the NAO robot were filmed (these can be seen in the questionnaires mentioned below). Two different conversations, serious and casual, were filmed with different idle movements:

*Serious conversation with serious idle movements | |||

*Serious conversation with casual idle movements | |||

*Serious conversation with no idle movements | |||

*Casual conversation with serious idle movements | |||

*Casual conversation with casual idle movements | |||

*Casual conversation with no idle movements | |||

First, the participant filled in a questionnaire after seeing a picture of a NAO robot. Then, the participant twice watched a conversation followed by a questionnaire. The six different conversations were divided between the participants so that the online survey would not take too much time. This resulted in three questionnaires, which can be found below:

1: https://forms.gle/zx7HH38f8Tjqqwee6 | |||

2: https://forms.gle/9zhfTbCHRCNMgR1b9 | |||

3: https://forms.gle/ZjX54tzKW89LntgH9 | |||

The first questionnaire has a casual conversation with the matching idle movements as well as a serious conversation with the matching idle movements.

The second questionnaire has a casual conversation without idle movements and a serious conversation with the idle movements intended for the casual conversation. | |||

The third questionnaire has a casual conversation with the idle movements intended for a serious conversation and a serious conversation without idle movements. | |||

=== The participants ===

A total of 24 participants took part in this online experiment. The range of age was between 17 and 60 years. | |||

=== The questionnaire === | |||

The same questionnaire as in B1, the Godspeed questionnaire, was used. | |||

=== The results === | |||

The data acquired in experiment B2 was analyzed using SPSS. Because we used the Godspeed questionnaire, the data was acquired as scores on a five-point Likert scale. The results were analyzed by taking the mean score of each of the five categories per conversation type. In total, the chart covers seven conditions: the measurement before the experiment and the six conversations explained in previous chapters. The following bar chart shows the mean score of every category per conversation type.

[[File:imageJohnny.png|center|thumb|550px|Figure 8: Bar chart for the mean scores of the godspeed questionnaire]] | |||

To give a small explanation of the graph: the y-axis shows the mean scores on the five-point Likert scale. The x-axis shows the five categories of the Godspeed questionnaire. Each category is subdivided into bars, each of which represents a conversation type.

*Before experiment: Before the experiment started we let the participants fill in the godspeed questionnaire to set a standard to compare the results to. | |||

*Casual Casual: The casual conversation script mentioned previously, supplemented with the casual idle movements. | |||

*Serious Serious: The serious conversation script mentioned previously, supplemented with the serious idle movements. | |||

*Casual No: The casual conversation script mentioned previously, using no movements at all. | |||

*Serious Casual: The serious conversation script mentioned previously, supplemented with the casual idle movements. | |||

*Casual Serious: The casual conversation script mentioned previously, supplemented with the serious idle movements. | |||

*Serious No: The serious conversation script mentioned previously, using no movements at all. | |||

===='''Chart analysis B2'''==== | |||

'''Anthropomorphism''' | |||

In line with expectation, the ''serious conversation with serious movements'' and the ''casual conversation with casual movements'' peak, with the serious conversation being the highest. They are followed by the ''serious conversation with casual movements''. What is remarkable about this result is that the no-movement combinations score lower than every other bar except the ''casual conversation with serious movements''. This is in contradiction with what was expected. Furthermore, the means of all combinations lie higher than before the experiment.

'''Animacy''' | |||

The bar chart for animacy has three peaks which lie quite close to each other: the ''casual conversation with casual movements'', the ''serious conversation with serious movements'' and the ''serious conversation with casual movements''. After that comes the ''casual conversation with no movements'', and below that come the ''casual conversation with serious movements'' and the ''serious conversation with no movements''. The means of all combinations are higher than the mean before the experiment.

'''Likeability''' | |||

The peak is with the ''casual conversation with casual movements''. The means of the ''casual conversation with serious movements'' and the ''serious conversation with no movements'' lie lower than the mean before the experiment. The means of the ''serious conversation with serious movements'', the ''casual conversation with no movements'' and the ''serious conversation with casual movements'' are a bit higher than before the experiment. What stands out is that the ''casual conversation with no movements'' has a large mean score, which was not expected.

'''Perceived Intelligence''' | |||

It can be noted that the mean of the ''serious conversation with serious movements'' is the highest, followed by the ''casual conversation with casual movements'' and then the ''serious conversation with casual movements''. What stands out is that the means of all combinations are higher than the mean before the experiment, meaning that seeing the robot in a conversation positively influences its perceived intelligence.

'''Perceived Safety''' | |||

The combination that stands out is the ''casual conversation with serious movements'': its mean is lower than before the experiment, which was not expected. The means of the other combinations are a bit higher than before the experiment, with the ''casual conversation with casual movements'' having the highest mean. What stands out as well is that the ''casual conversation with no movements'' is the second-highest. A possible cause is that no movements may give the person watching the idea that the robot is harmless, as it cannot do precision movements anyway.

=== Conclusion === | |||

Overall, it can be said that the right combination (''casual conversation with casual movements'' or ''serious conversation with serious movements'') gives the expected result, namely that it is received better overall. The ''casual conversation with casual movements'' peaks in two of the five categories and the ''serious conversation with serious movements'' peaks in the remaining three. It is remarkable that the range of the means is overall smaller for the ''casual conversation with casual movements'' than for the ''serious conversation with serious movements'', meaning that the results of the latter can be trusted less than those of the ''casual conversation with casual movements''.

Furthermore, it is remarkable that the mean increases for all combinations in three categories (relative to the premeasured mean). In a fourth category all but one combination are higher than the premeasured mean, and in the last one two bars are lower than the premeasured mean. This means that, regardless of the combination of conversation and movement, the view of the NAO robot is positively influenced by seeing the robot on video, although this is less certain for likeability and perceived safety.

Contrary to what was expected, the ''casual conversation with no-movements'' has a high mean with an average range (in comparison), while the ''serious conversation with no-movements'' is perceived as the worst overall. On top of that, the ''casual conversation with no-movements'' scores better in all categories than the ''casual conversation with serious movements''. In the opposite case, the ''serious conversation with no-movements'' scores worse than the ''serious conversation with casual movements'', suggesting that the wrong type of movement strongly influences only the casual conversation.

Last but not least, there does not seem to be a large difference between the two types of conversation (serious and casual). This means that, over the internet, neither conversation type is perceived as clearly better than the other.

Since the increases between the different types of movements are significant, it can be said that using the right movements for each conversation contributes to how the robot is perceived in a video.
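The comparison used in this conclusion (the mean per combination, its shift relative to the premeasured mean, and the range) can be sketched as follows. This is a minimal illustration, not the analysis script used in the project: the function name <code>summarize</code> and all scores are hypothetical placeholders, and the range is assumed here to be the maximum minus the minimum of the individual scores.

```python
from statistics import mean


def summarize(pre_scores, post_scores):
    """Return the post mean, its shift versus the premeasured mean, and the range."""
    post_mean = mean(post_scores)
    return {
        "post_mean": post_mean,
        # Positive shift: the robot was rated higher after watching the video.
        "shift": post_mean - mean(pre_scores),
        # Assumption: range = highest minus lowest individual score.
        "range": max(post_scores) - min(post_scores),
    }


# Hypothetical 1-5 Godspeed ratings for a single category (placeholder numbers,
# not data from experiment B2).
pre = [3, 3, 4, 2]
post_by_combination = {
    "casual conversation, casual movements": [4, 4, 5, 4],
    "casual conversation, no movements": [3, 4, 4, 3],
}

for combination, scores in post_by_combination.items():
    print(combination, summarize(pre, scores))
```

A combination with a large positive shift and a small range would correspond to a result that is both better received and more consistent across participants.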

=== Limitations ===

Conducting an online questionnaire always brings limitations to an experiment, especially when the participants are asked what they think about a social robot. Although the participants neither interact with the robot nor see it in person, we still wanted to conduct the online questionnaire, because it would give a better representation of the user base, which was one of the limitations of experiment B1.

== '''Feedback loop on the users''' ==

In the first two experiments, the primary focus was to find out whether the robot would appear more 'natural' when performing the right idle motions. This was determined without telling the participants what type of movements accompanied their conversation, which was a good way to try to understand the human perception of robots. Another way to involve the users was simply to ask them: 'Do you think these idle motions make the robot better?'

=== '''Primary users''' ===

First, the primary users were consulted. In elderly care, a casual conversation is the most relevant, since the elderly want to be comfortable and have fun with the robot. Several elderly people were shown the videos of the casual conversations, and the differences between the movements were explained to them. Their reaction was very positive. What stood out in comparison with the other user groups was that the elderly were simply very impressed by the robot. They tend to anthropomorphize a bit more: they explained how smart the robots were and liked all the functions of the robot. Nevertheless, they agreed with the idea of implementing casual motions rather than no or serious movements, because in the future the elderly will have more experience with robots and this reaction will change.

=== '''Secondary users''' ===

The secondary users were asked the same questions as the primary users. The secondary users available for questioning within this short time and under the corona restrictions were a general practitioner, a nurse, and a salesman. They reacted more soberly to the videos, rather than with 'wow, this is cool', and they better understood the reason for researching different idle motions. Most of the secondary users fall in the 'serious' category because, for instance, in a business setting or when visiting the doctor, people do not know the other person very well. These users advised using serious movements when social robots are deployed in their professions in the future, as it could make a difference.

=== '''Final takeaway''' ===

As described above, the reactions of the users were relatively positive, especially in the first group. The explanation of the project made sense to all users. However, after seeing the video of the NAO robot and its limited degrees of freedom, some users were slightly less positive. They explained that with current robots the movements could look weird, so that the distinction between serious and casual movements would not really be noticed, only the weird movements the robot made. With better, more human-like robots in the future, they would use these idle motions for robots working in their industry, but for now this is still too complicated for the robot's motions.

== '''Conclusion and Discussion''' ==

=== '''Conclusion''' ===

'''Which idle movements are considered the most appropriate in human-human interaction?'''

By using two different types of conversation (casual and serious) and a neutral position (waiting/in rest), it was determined which idle movements are most appropriate in human-human interaction. The starting point for finding the idle movements was a Japanese paper suggesting eleven movements that humans perform often<ref name=japanpaper/>. By tallying the number of repetitions of each movement per category, it was found that (for listening) largely the same movements peaked above the others. For the casual conversation, neck movement peaked the highest, followed by arm movement, mouth movement, touching face, and hand movement. For the serious conversation, hand movement peaked the highest, followed by looking around, arm movement, body stretching, touching face, and neck movement. The big difference between the two is that the frequency differences between the peaks were much larger for the serious conversation. The difference in idle movements between the two conversation types is therefore clearly visible, as the serious conversation shows less variation across the eleven movement types.
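The tallying step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure actually used in experiment A: the function names <code>tally_movements</code> and <code>ranked</code> and the observation log are hypothetical, and the entries are placeholders rather than measured data.

```python
from collections import Counter

# Hypothetical observation log: (conversation type, observed idle movement).
# These entries are illustrative placeholders, not data from experiment A.
observations = [
    ("casual", "neck movement"),
    ("casual", "neck movement"),
    ("casual", "arm movement"),
    ("serious", "hand movement"),
    ("serious", "hand movement"),
    ("serious", "hand movement"),
    ("serious", "looking around"),
]


def tally_movements(observations):
    """Count how often each movement occurs per conversation type."""
    tallies = {}
    for conversation, movement in observations:
        tallies.setdefault(conversation, Counter())[movement] += 1
    return tallies


def ranked(tallies, conversation):
    """Return the movements of one conversation type, most frequent first."""
    return tallies[conversation].most_common()


tallies = tally_movements(observations)
for conversation in tallies:
    print(conversation, ranked(tallies, conversation))
```

Ranking the tallies per conversation type makes the peaks, and the size of the gaps between them, directly comparable between the casual and the serious conversation.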

For waiting, the peaks were found for neck movement, looking around, and mouth movement. These waiting movements were not surprising and were not used further in the research.

'''Which idle movements are considered the most appropriate in human-robot interaction?'''

In the following experiment, combination sets were created from the different types of conversation and the different types of movement. As described above, the different types of conversation had different movements. The best four movements were chosen for the robot, with the added requirement that the robot had to be able to perform them. As a result, some movements could not be used, such as mouth movement and body stretching (the robot tended to perform the latter too slowly, or to collapse when performing it faster). This resulted (see table 1) in neck, arm, and hand movement being chosen for both conversations, with touching face added for the casual conversation and looking around for the serious conversation.

This setup was followed by the execution of experiment B.1, which consisted of the aforementioned movements and a conversation. Because the conversation type differed, a different topic was needed for each type: the casual conversation was about upcoming plans for the summer, and the serious conversation was a job interview. A movement was performed after each question or sentence of the NAO robot. Participants (18 in total) talked to the robot and filled in Godspeed questionnaires (three per participant), covering anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety.

Experiment B.1 was done in real life, whereas a second experiment (B.2) was done via the internet. Participants (24 in total) were shown a video and filled in the same Godspeed questionnaires. The video showed a recorded conversation involving one of our own group members and consisted of exactly the same combinations as experiment B.1.

This resulted in two completely different outcomes.

Experiment B.1 showed the opposite of what was expected: the combination of a casual conversation with serious movements, and vice versa, turned out to be the best in most of the categories of the Godspeed questionnaire. This would suggest either that experiment A is wrong or that movements are perceived differently in human-robot interaction.

On top of that, it was concluded that the serious conversation was perceived as the best in all categories when the type of movements performed is disregarded. This suggests that, in real life, serious conversations are perceived better by humans in terms of the five categories. The usage of movements did improve the result overall. On the other hand, the large range of the means and the small differences between the means suggest that the data cannot be trusted completely.

Experiment B.2 showed the expected result: the casual conversation with casual movements peaks, together with the serious conversation with serious movements. This is the result in nearly all of the categories. On top of that, the range of the means is fairly small for the casual conversation with casual movements, suggesting that this type is perceived as much better. The serious conversation with serious movements has a large range but is often the highest peak. Moreover, the peaks of these two combinations are significantly higher than the others, suggesting that conclusions can be drawn from this data. On the downside, there is a peak for the casual conversation with no-movement, which seems off. This will be explained in the discussion.

Overall, the usage of movements positively stimulates the participants (and, thus, speakers). The best set of movements seems to depend on whether the speaker is directly in front of the robot or sees the robot on a screen. When people see the robot in real life, the best combinations would be the casual conversation with serious movements and the serious conversation with casual movements. When people see the robot on a screen, the best combinations would be the casual conversation with casual movements and the serious conversation with serious movements. However, since the differences between the means were small for the categories in experiment B.1, it would not matter if casual movements were used with the casual conversation and serious movements with the serious conversation in real life as well. This would result in software that is easier to program.

=== '''Discussion''' ===

'''Experiment A'''

The most important result from experiment A is that people tend to use idle movements as a means to show acceptance of, or agreement with, what has been said. This is important to our research, which aims to make robots more human-like: robots should use idle movements in conversations to show their understanding of what has been said. It can also be noted that people always move, from scratching their faces to moving their arms around. These results were implemented in experiment B.

Unfortunately, the NAO robot has a limited range of motion. Eight specific idle movements were chosen with the help of literature research and eventually determined by experiment A, but the NAO robot was only able to perform six of these eight idle movements.

In experiment A, it was difficult to determine in which category an idle movement would fall. All of the videos used in experiment A were taken from YouTube. This could have affected the results of experiment A, as people might unconsciously behave differently when they know they are being recorded, i.e. they might use fewer idle movements, or use their hands more to show social affection. To try to counter this, the experiment was carried out by two people. On top of that, the significance was tested to see whether the results were a coincidence, which they were not.

'''Experiment B1'''

Conversations without movements clearly score lower than conversations with movements, whether the movements were intended for the casual or the serious conversation. This is likely because the robot is completely static, which, as expected, makes it look lifeless. This tells us that idle movements are key to making a robot look human-like in a conversation. However, because the movements were not tested individually, the difference between the casual movements and the serious movements cannot be seen clearly in the results.

Ideally, the idle movements would be tested individually to obtain a clear result on which idle movements work best, especially with robots that have a limited range of motion, like the NAO robot. However, testing this thoroughly would have required many more participants.

Experiment B1 would have benefited from more participants. The user groups would then have been better represented, especially if those participants worked in healthcare, and the data could have shown differences between user groups. This would have benefited the feedback loop for the primary users. Unfortunately, given the resources, limitations, and duration of the project, this was not possible.

'''Experiment B2'''

In contrast to experiment B1, the right combination of movements did show the expected results. This shows that the view of the NAO robot is positively influenced by seeing it in a video. However, the results of experiment B2 cannot be compared to a real-life conversation with a robot.

Experiment B2 would have benefited from more participants. Because this experiment was conducted online, participants who work in healthcare were asked to participate, in order to counteract the limitation of experiment B1. However, not enough primary users were reached to draw a conclusion from this data. To stay consistent with the set-up used in experiment B1, it was decided not to change the set-up of experiment B2.

Although experiment B2 is an extension of experiment B1, its data cannot be used on its own, because the social interaction with the NAO robot is replaced by an online video.

== '''Follow up study''' ==

For studies that want to dig deeper into this subject, the following advice is given: